The original ELIZA application was 200-odd lines of code. The Python NLTK implementation is similarly short. An excerpt is provided from NLTK's website (http://www.nltk.org/_modules/nltk/chat/eliza.html):

As you can see from the code, input text was parsed and then matched against a series of regular expressions. Once the input was matched, a randomized response (that sometimes echoed back a portion of the input) was returned. So, something such as, I need a taco would trigger a response of, Would it really help you to get a taco? Obviously, the answer is yes, and, fortunately, we have advanced to the point that technology can provide you one (bless you, TacoBot), but this was early days still. Shockingly, some people actually believed ELIZA was a real human.

But what about more advanced bots? How are they built?

Surprisingly, most chatbots you're likely to encounter aren't even using machine learning (ML); they're what's known as retrieval-based models. This means responses are predefined according to the question and the context. The most common architecture for these bots is something called Artificial Intelligence Markup Language (AIML). AIML is an XML-based schema for representing how the bot should interact given the user's input. It's really just a more advanced version of how ELIZA works.

Let's take a look at how responses are generated using AIML. First, all input are preprocessed to normalize them. This means when you input Waaazzup??? it's mapped to WHAT IS UP. This preprocessing step funnels down the myriad ways of saying the same thing into one input that can run against a single rule. Punctuation and other extraneous input are removed as well at this point. Once that's complete, the input is matched against the appropriate rule. The following is a sample template:

<category> <pattern>WHAT IS UP</pattern> <template>The sky, duh. Pfft. Humans...</template> </category>

That is the basic setup, but you can also layer in wildcards, randomization, and prioritization schemes. For example, the following pattern uses wildcard matching:

<category> <pattern>* FOR ME<pattern> <template>I'm a bot. I don't <star/>. Ever.</template> </category>

Here, the * wildcard matches one or more words before FOR ME and then repeats those back in the output template. If the user were to type in Dance for me!, the response would be I'm a bot. I don't dance. Ever.

As you can see, these rules don't make for anything that approximates any type of real intelligence, but there are a few tricks that strengthen the illusion. One of the better ones is the ability to generate responses conditioned on a topic.

For example, here's a rule that invokes a topic:

<category> <pattern>I LIKE TURTLES</pattern> <template>I feel like this whole <set name="topic">turtle</set> thing could be a problem. What do you like about them? </template> </category>

Once the topic is set, the rules specific to that context can be matched:

<topic name="turtles"> <category> <pattern>* SHELL IS *</pattern> <template>I dislike turtles primarily because of their shells. What other creepy things do you like about turtles? </template> </category> <category> <pattern>* HIDE *</pattern> <template>I wish, like a turtle, that I could hide from this conversation. </template> </category> </topic>

Let's see what this interaction might look like:

>I like turtles!

>I feel like this whole turtle thing could be a problem. What do you like about them?

>I like how they hide in their shell.

>I wish, like a turtle, I could hide from this conversation.

You can see that the continuity across the conversation adds a measure of realism.

You're probably thinking that this can't be state of the art in this age of deep learning, and you're right. While most bots are rule-based, the next generation of chatbots are emerging, and they're based on neural networks.

In 2015, Oriol Vinyas and Quoc Le of Google published a paper, http://arxiv.org/pdf/1506.05869v1.pdf, that described the construction of a neural network based on sequence-to-sequence models. This type of model maps an input sequence, such as ABC, to and output sequence, such as XYZ. These inputs and outputs might be translations from one language to another, for example. In the case of their work here, the training data was not language translation, but rather tech support transcripts and movie dialogues. While the results from both models are interesting, it was the interactions based on the movie model that stole the headlines.

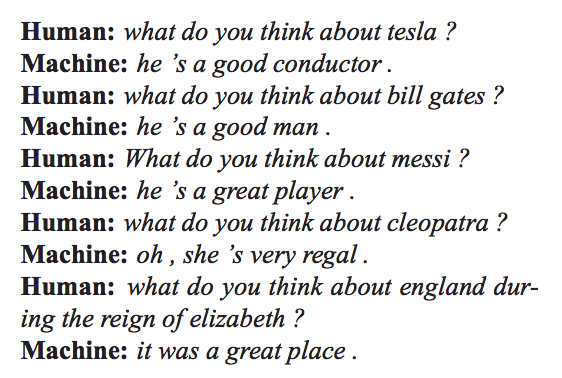

The following are sample interactions taken from the paper:

None of this was explicitly encoded by humans or present in the training set as asked, and, yet, looking at this, it's frighteningly like speaking with a human. But let's see more:

Notice that the model is responding with what appears to be knowledge of gender (he, she), place (England), and career (player). Even questions of meaning, ethics, and morality are fair game:

If that transcript doesn't give you a slight chill, there's a chance you might already be some sort of AI.

I wholeheartedly recommend reading the entire paper. It isn't overly technical, and it will definitely give you a glimpse of where the technology is headed.

We've talked a lot about the history, types, and design of chatbots, but let's now move on to building our own. We'll take two approaches to this. This first will use a technique we saw in previously, cosine similarity, and the second will leverage sequence-to-sequence learning.