Sound effects (SFX) can be classified as anything sonic that is not speech or music. They are essential to storytelling, bringing realism and added dimension to a production. It is worth noting that the art of sound-effect design is about not only creating something big or bizarre but also crafting subtle, low-key moments. Good effects are about conveying a compelling illusion. In general, sound effects can be contextual or narrative. These functions are not mutually exclusive; they allow for a range of possibilities in the sonic continuum between what we listen for in dialogue—namely, sound to convey meaning—and what we experience more viscerally and emotionally in music. With film and video, sound effects are indispensable to overall production value. In early “talking pictures,” it was not uncommon to have no more than a handful of sound effects, whereas WALL-E included a palette of 2,600 sound effects.

Contextual sound emanates from and duplicates a sound source as it is. It is often illustrative and is also referred to as diegetic sound. The term derives from the Greek diegesis, meaning to tell or recount, and in the context of film it has come to describe that which comes from within the story space. (Extra-sound, or nondiegetic sound, comes from outside the story space; music underscoring is an example of nondiegetic sound.) If a gun fires, a car drives by, or leaves rustle in the wind, what you see is what you hear; the sound is naturalistic in structure and perspective. In other words, contextual sound is like direct narration.

Narrative sound adds more to a scene than what is apparent and so performs an informational function. It can be descriptive or commentative.

As the term suggests, descriptive sound describes sonic aspects of a scene, usually those not directly connected with the main action. The sounds of a conversation in a hot room with a ceiling fan slowly turning are contextual sound. Descriptive sound would be the buzzing about of insects, oxcarts lumbering by outside, and an indistinguishable hubbub, or walla, of human activity. A young man leaves his apartment and walks out onto a city street to a bus stop. You hear descriptive sounds of the neighborhood: the rumble of passing cars, a horn, a siren, one neighbor calling to another, a car idling at an intersection, and the hissing boom of air brakes from an approaching bus.

Commentative sound also describes, but it makes an additional statement, one that usually has something to do with the story line. For example: An aging veteran wanders through the uniform rows of white crosses in a cemetery. A wind comes up as he pauses before individual markers. The wind is infused with the faint sound of battle, snippets of conversations, laughter, and music as he remembers his fallen comrades. Or behind the scene of a misty farm meadow at dawn, the thrum of morning rush hour traffic is heard—a comment on encroaching urban sprawl.

Sound effects have specific functions within the general contextual and narrative categories. They break the screen plane, define space, focus attention, establish locale, create environment, emphasize and intensify action, depict identity, set pace, provide counterpoint, create humor, symbolize meaning, create metaphor, and unify transitions. Paradoxical as it may seem, silence is also a functional sound effect.

A film or video without sounds, natural or produced, detaches an audience from the on-screen action. The audience becomes an observer, looking at the scene from outside rather than being made to feel a part of the action—at the center of an acoustic space. In some documentary approaches, for example, detaching the audience in this way has been a relatively common technique for conveying that a subject is being treated objectively, with less potential for distraction. The presence of sound changes the audience's relationship to what is happening on-screen; in a sense, the audience becomes part of the action. It becomes a participant in that there is no longer a separation between the listener-viewer and the screen plane. Therefore it can also be said that sound effects add realism to the picture.

Sound defines space by establishing distance, direction of movement, position, openness, and dimension. Distance—how close to or far from you a sound seems to be—is created mainly by relative loudness, or sound perspective. The louder a sound, the closer to the listener-viewer it seems. A person speaking from several feet away will not sound as loud as someone speaking right next to you. Thunder at a low sound level tells you that a storm is some distance away; as the storm moves closer, the thunder grows louder.

By varying sound level, it is also possible to indicate direction of movement. As a person leaves a room, sound will gradually change from loud to soft; conversely, as a sound source gets closer, level changes from soft to loud.
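As a rough starting point for the loudness cue, a point source in open air (free field) drops about 6 dB each time its distance doubles, following the inverse-square law. The sketch below, assuming free-field conditions and a 1-meter reference distance, converts a distance into a gain and builds a gain envelope for a source moving away or approaching. The function names are hypothetical; in a real mix, perspective is set by ear, and rooms, ambience, and high-frequency loss all change the result.

```python
import math


def distance_gain_db(distance_m: float, ref_distance_m: float = 1.0) -> float:
    """Level change in dB for a point source at distance_m, relative to ref_distance_m.

    Assumes free-field inverse-square spreading (about -6 dB per doubling of distance).
    """
    if distance_m <= 0 or ref_distance_m <= 0:
        raise ValueError("distances must be positive")
    return -20.0 * math.log10(distance_m / ref_distance_m)


def db_to_amplitude(db: float) -> float:
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


def movement_envelope(start_m: float, end_m: float, steps: int) -> list[float]:
    """Amplitude multipliers for a source moving in a straight line from start_m to end_m.

    Spread the steps evenly across the clip (interpolating between them): levels
    fall as the source leaves (start_m < end_m) and rise as it approaches.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    envelope = []
    for i in range(steps):
        d = start_m + (end_m - start_m) * i / (steps - 1)
        envelope.append(db_to_amplitude(distance_gain_db(d)))
    return envelope


# A person walking from 1 m to 8 m away: three doublings, roughly an 18 dB drop.
leaving = movement_envelope(1.0, 8.0, steps=8)
```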

With moving objects, frequency also helps establish distance and direction of movement. As a moving sound source such as a train, car, or siren approaches, its pitch sounds higher than it would if the source were at rest; as it passes and recedes, its pitch drops and sounds lower. This phenomenon is known as the Doppler effect (named for its discoverer, Christian J. Doppler, a nineteenth-century Austrian physicist).
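The size of the Doppler shift follows from a standard formula. For a source moving directly toward or away from a stationary listener, the heard frequency is the source frequency multiplied by c / (c - v) while approaching and c / (c + v) while receding, where c is the speed of sound (about 343 m/s in air at 20 °C) and v is the source's speed. The sketch below assumes straight-line motion along the line to the listener and a still listener; in a real pass-by the angle changes continuously, so the pitch glides rather than jumping.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 °C


def doppler_hz(source_hz: float, source_speed_m_s: float, approaching: bool,
               c: float = SPEED_OF_SOUND_M_S) -> float:
    """Frequency heard by a stationary listener from a source moving straight toward
    (approaching=True) or away from (approaching=False) the listener."""
    if not 0 <= source_speed_m_s < c:
        raise ValueError("source speed must be between 0 and the speed of sound")
    if approaching:
        return source_hz * c / (c - source_speed_m_s)
    return source_hz * c / (c + source_speed_m_s)


def semitones_between(f_high: float, f_low: float) -> float:
    """Musical interval between two frequencies, in semitones."""
    return 12.0 * math.log2(f_high / f_low)


# A hypothetical 440 Hz siren passing at 30 m/s (about 108 km/h).
near = doppler_hz(440.0, 30.0, approaching=True)
away = doppler_hz(440.0, 30.0, approaching=False)
drop = semitones_between(near, away)
```

With these assumed numbers the pitch falls by roughly three semitones as the siren goes by, so a pitch bend of a few semitones across the pass is one way to suggest a pass-by from a stationary recording.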

Sound defines relative position—the location of two or more sound sources in relation to one another, whether close, distant, or side-to-side (in stereo) or to the rear-side or behind (in surround sound). Relative position is established mainly through relative loudness and ambience. If two people are speaking and a car horn sounds, the loudness and the ambience of the three sound sources tell you their proximity to one another. Assuming monaural sound, if person A is louder and has less ambience than person B, and person B in turn is louder and has less ambience than the car horn, person A will sound closest, person B farther away, and the horn farthest away. If the aural image is in stereo, loudness will influence relative position as the sources are panned left to right, assuming they are on the same plane. Once lateral perspective is established, a further change in loudness and ambience moves the sound closer or farther away.
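Panning a mono source between the left and right channels is one way to set its lateral position before loudness and ambience adjust its depth. A widely used approach is the constant-power (sine/cosine) pan law, which keeps combined power steady so a source does not seem to move closer or farther as it is panned. The sketch below assumes a pan value from -1 (hard left) to +1 (hard right); other pan laws exist, and workstations differ in which one they use.

```python
import math


def constant_power_pan(pan: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for pan in [-1, 1].

    -1 is hard left, 0 is center (each channel about -3 dB), +1 is hard right.
    left**2 + right**2 == 1 at every position, so total power stays constant.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1 and 1")
    theta = (pan + 1.0) * math.pi / 4.0  # maps -1..1 onto 0..pi/2
    return math.cos(theta), math.sin(theta)


def pan_mono(samples: list[float], pan: float) -> tuple[list[float], list[float]]:
    """Split a mono clip into left and right channels at the given pan position."""
    left_gain, right_gain = constant_power_pan(pan)
    return [s * left_gain for s in samples], [s * right_gain for s in samples]


# Hypothetical example: person A placed slightly left of center.
person_a_left, person_a_right = pan_mono([0.2, 0.4, 0.1], pan=-0.3)
```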

Openness of outdoor space can be established in a number of ways—for example, thunder that rumbles for a longer-than-normal period of time and then rolls to quiet; wind that sounds thin; echo that has a longer-than-normal time between repeats; background sound that is extremely quiet—all these effects tend to enhance the size of outdoor space. Dimension of indoor space is usually established by means of reverberation (see Chapters 2 and 8). The more reverb there is, the larger the space is perceived to be. For example, Grand Central Station or the main hall of a castle would have a great deal of reverb, whereas a closet or an automobile would have little.
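Two textbook relationships sit behind these cues. An echo returns after the sound travels to a reflecting surface and back, so its delay is 2d / c; a more distant surface means a longer gap between repeats. For reverberation, Sabine's formula estimates reverberation time (RT60, the time for sound to decay by 60 dB) as 0.161 V / A in metric units, where V is the room volume in cubic meters and A is the total absorption (surface area times average absorption coefficient). The room dimensions and absorption coefficients below are hypothetical, and Sabine's formula is only a rough estimate, least reliable in very small or very absorptive rooms.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 °C


def echo_delay_s(distance_to_surface_m: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Time between a sound and its echo off a surface distance_to_surface_m away."""
    return 2.0 * distance_to_surface_m / c


def sabine_rt60_s(length_m: float, width_m: float, height_m: float,
                  avg_absorption: float) -> float:
    """Sabine estimate of reverberation time for a rectangular room (metric units).

    avg_absorption is the average absorption coefficient of all surfaces (0..1).
    """
    if not 0.0 < avg_absorption <= 1.0:
        raise ValueError("avg_absorption must be in (0, 1]")
    volume = length_m * width_m * height_m
    surface = 2.0 * (length_m * width_m + length_m * height_m + width_m * height_m)
    return 0.161 * volume / (surface * avg_absorption)


# Hypothetical spaces: a cluttered closet versus a large stone hall.
closet = sabine_rt60_s(1.0, 1.0, 2.5, avg_absorption=0.3)
hall = sabine_rt60_s(60.0, 30.0, 20.0, avg_absorption=0.1)
canyon_echo = echo_delay_s(343.0)  # wall 343 m away -> 2 s between sound and echo
```

With these assumed values the hall rings for several seconds while the closet dies away in a small fraction of a second, in line with the Grand Central Station and closet comparison above.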

In shots other than close-ups, in which a number of elements are seen at the same time, how do you know where to focus attention? Of course directors compose shots to direct the eye, but the eye can wander. Sound, however, draws attention and provides the viewer with a focus. In a shot of a large room filled with people, the eye takes it all in, but if a person shouts or begins choking, the sound directs the eye to that individual.

Sounds can establish locale. A cawing seagull places you at the ocean; the almost derisive, mocking cackle of a bird of paradise places you in the jungle; honking car horns and screeching brakes place you in city traffic; the whir and the clank of machinery place you in a factory; the squeak of athletic shoes, the rhythmic slap of a bouncing ball, and the roar of a crowd place you at a basketball game.

Establishing locale begins to create an environment, but more brush strokes are often needed to complete the picture. Honky-tonk saloon music may establish the Old West, but sounds of a blacksmith hammering, horses whinnying, wagon wheels rolling, and six-guns firing create environment. A prison locale can be established in several ways, but add the sounds of loudspeakers blaring orders, picks and shovels hacking and digging, grunts of effort, dogs barking, and whips cracking and you have created a brutal prison work camp. To convey a sense of alienation and dehumanization in a high-tech office, the scene can be orchestrated using the sounds of ringing telephones with futuristic tonalities, whisper-jet spurts of laser printers, the buzz of fluorescent lights, machines humming in monotonous tempos, and synthesized Muzak in the background.

Sounds can emphasize or highlight action. A person falling down a flight of stairs tumbles all the harder if each bump is accented. A car crash becomes a shattering collision by emphasizing the impact and the sonic aftermath—including silence. Creaking floorboards underscore someone slowly and methodically sneaking up to an objective. A saw grinding harshly through wood, rather than cutting smoothly, emphasizes the effort involved and the bite of the teeth.

Whereas emphasizing action highlights or calls attention to something important, intensifying action increases or heightens dramatic impact. As a wave forms and then crashes, the roar of the buildup and the loudness of the crash intensify the wave’s size and power. The slow, measured sound of an airplane engine becoming increasingly raspy and sputtery heightens the anticipation of its stalling and the plane’s crashing. A car’s twisted metal settling in the aftermath of a collision emits an agonized groaning sound. In animation, sound (and music) intensifies the extent of a character’s running, falling, crashing, skidding, chomping, and chasing.

Depicting identity is perhaps one of the most obvious uses of sound. Barking identifies a dog, slurred speech identifies a drunk, and so on. But on a more informational level, sound can also give a character or an object its own distinctive sound signature: the rattle of a rattlesnake to identify a slippery villain with a venomous intent; thin, clear, hard sounds to convey a cold character devoid of compassion; labored, asthmatic breathing to identify a character’s constant struggle in dealing with life; the sound of a dinosaur infused into the sound of a mechanical crane to give the crane an organic character; or the sound of a “monster” with the conventional low tonal grunting, breathing, roaring, and heavily percussive clomping infused with a whimpering effect to create sympathy or affection for the “beast.”

Sounds, or the lack of them, help set pace. The incessant, even rhythm of a machine creates a steady pace to underscore monotony. The controlled professionalism of two detectives discussing crucial evidence becomes more vital if the activity around them includes such sonic elements as footsteps moving quickly, telephones ringing, papers being shuffled, and a general walla of voices. Car-chase scenes get most of their pace from the sounds of screeching tires, gunning engines, and shifting gears. Sounds can also be orchestrated to produce a rhythmic effect to enhance a scene. In a gun battle, bursts can vary from ratta tat tat to ratta tat, then a few pauses, followed by budadadada. Additionally, sounds of the bullets hitting or ricocheting off different objects can add to not only the scene’s rhythm but also its sonic variety.

Sounds provide counterpoint when they are different from what is expected, thereby making an additional comment on the action. A judge bangs a gavel to the accompanying sound of dollar bills being peeled off, counterpointing the ideal of justice and the reality of corruption; or a smiling leader is cheered by a crowd, but instead of cheers, tortured screams are heard, belying the crowd scene.

Sounds can be funny. Think of boings, boinks, and plops; the swirling swoops of a pennywhistle; the chuga-chugaburp-cough-chuga of a steam engine trying to get started; and the exaggerated clinks, clanks, and clunks of a hapless robot trying to follow commands. Comic sounds are indispensable in cartoons in highlighting the shenanigans of their characters.

Sound can be used symbolically. The sound of a faucet dripping is heard when the body of a murder victim killed in a dispute over water rights is found. An elegantly decorated, but excessively reverberant, living room symbolizes the remoteness or coldness of the occupants. An inept ball team named the Bulls gathers in a parking lot for a road game; as the bus revs its engine, the sound is infused with the sorry bellow of a dispirited bull, comedically symbolizing the team’s incompetence. A condemned prisoner walks through a steel gate that squeaks when it slams shut. Instead of just any squeak, the sound is mixed with an agonized human groan to convey his anguish.

Sound can create a metaphorical relationship between what is heard and what is seen. A wife leaving her husband after enduring the last straw in a deeply troubled marriage walks out of their house, slamming the door. The sound of the slam has the palpable impact of finality, and with it there is a slowly decaying reverberation that reflects a married life that has faded into nothingness. Or a person in a state of delirium hears the constant rat tat tat of a bouncing basketball from a nearby driveway. The repeated rhythm of the sound infiltrates his consciousness and takes him back to a time when he was a musician and that rhythm was part of a song he had made famous.

Sounds provide transitions and continuity between scenes, linking them by overlapping, leading in, segueing, and leading out.

Overlapping occurs when the sound used at the end of one scene continues, without pause, into the next. A speech being delivered by a candidate’s spokesperson ends one scene with the words “a candidate who stands for...”; the next scene begins with the candidate at another location, saying, “equal rights for all under law.” Or the sound of a cheering crowd responding to a score in an athletic contest at the end of one scene overlaps into the next scene as the captain of the victorious team accepts the championship trophy.

A lead-in occurs when the audio to the next scene is heard before the scene actually begins and establishes a relationship to it. As a character thinks about a forthcoming gala event, the music and other sounds of the party are heard before the scene changes to the event itself. While a character gazes at a peaceful countryside, an unseen fighter plane in action is heard, anticipating and leading into the following scene, in which the same character is a jet pilot in an aerial dogfight.

A segue—cutting from one effect (or recording) to another with nothing in between—links scenes by abruptly changing from a sound that ends one scene to a similar sound that begins the next. As a character screams at the discovery of a dead body, the scream segues to the shriek of a train whistle; a wave crashing on a beach segues to an explosion; the sound of ripping cloth segues to the sound of a jet roar at takeoff.

Lead-out sound is audio to an outgoing scene that carries through to the beginning of the following scene but with no visual scene-to-scene relationship. A cop-and-robber car chase ends with the robber’s car crashing into a gasoline pump and exploding, the repercussions of which carry over into the next scene and fade out as the cop walks up the driveway to his home. Wistful, almost muted sounds of the countryside play under a scene in which boy meets girl, with love at first sight, and fade out under the next shot, showing them in a split screen sitting in their cubicles in different office buildings, staring into space.

A producer was once asked why he chose to show the explosion of the first atomic bomb test with no sound. He replied, “Silence was the most awesome sound that I could find.” Silence is not generally thought of as sound per se—that seems like a contradiction in terms. But it is the pauses or silences between words, sounds, and musical notes that help create rhythm, contrast, and power—elements important to sonic communication.

In situations where we anticipate sound, silence is a particularly powerful element. Thieves break into and rob a bank with barely a sound. As the burglary progresses, silence heightens suspense to an oppressive level as we anticipate an alarm going off, a tool clattering to the floor, or a guard suddenly shouting, “Freeze!” A horrifying sight compels a scream—but with the mouth wide open there is only silence, suggesting a horror that is unspeakable. Birds congregate on telephone wires, rooftops, and TV aerials and wait silently; the absence of sound makes the scene eerie and unnatural. A lone figure waits for an undercover rendezvous in a forest clearing. Using silence can enlarge the space and create a sense of isolation, unease, and imminent danger.

The silence preceding sound is equally effective. Out of perfect stillness comes a wrenching scream. In the silence before dawn, there is the anticipation of the new day’s sounds to come.

Silence is also effective following sound. An explosion that will destroy the enemy is set to go off. The ticking of the bomb reaches detonation time. Then, silence. Or the heightening sounds of passion build to resolution. Then, silence.

There are two sources of sound effects: prerecorded and produced. Prerecorded sound effects are distributed on digital disc or can be downloaded from the Internet (see Chapter 18). Produced SFX are obtained in four ways: they can be created and synchronized to picture in post-production in a studio often specially designed for that purpose, recorded on the set during shooting, collected in the field throughout production or between productions, and generated electronically using a synthesizer or computer. Sound effects are also built using any one or a combination of these approaches. In the interest of clarity, each approach is considered separately here. Regardless of how you produce sound effects, an overriding concern is to maintain sonic consistency.

Sound-effect libraries are collections of recorded sounds that can number from several hundred to many thousand, depending on the distributor. The distributor has produced and recorded the original sounds, collected them from other sources and obtained the rights to sell them, or both.

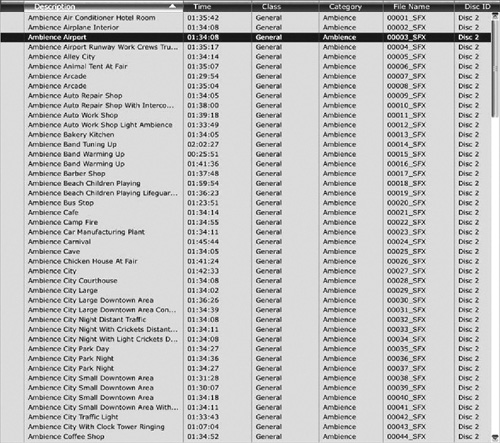

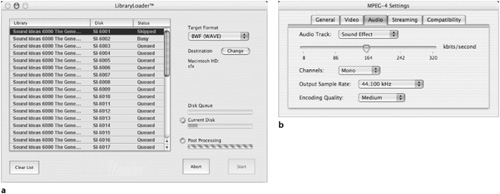

A major advantage of sound-effect libraries is that for one buyout fee you get many different sounds for relatively little cost compared with the time and the expense it would take to produce them yourself (see 15-1). Buyout means that you own the library and have unlimited, copyright-cleared use of it. The Internet provides dozens of sources that make available copyright-cleared sound effects for downloading either free or for a modest charge.[1]

15-1 Sound-effect libraries. (a) Examples of sound effects in one recorded sound-effect library. (b) Example of a detailed list of sound effects by category from another library. Copyright © 2000 Ljudproduktion AB, Stockholm, Sweden. All rights reserved.

(a)

| CD Number | Track/In | Description | Time |
|---|---|---|---|
| Digi-X-01 | 05-01 | Town square—people and birds—environment—hum of voices | 1:42 |
| Digi-X-01 | 06-01 | Road junction—squealing brakes | 1:30 |
| Digi-X-01 | 07-01 | Rain—heavy—railway station | 1:04 |
| Digi-X-01 | 08-01 | Vacuum cleaner—on/off | :55 |
| Digi-X-01 | 09-01 | Washing dishes by hand—kitchen | 1:05 |
| Digi-X-01 | 10-01 | Door—creaking—large—small | :31 |
| Digi-X-01 | 11-01 | Door bell—ding-dong | :07 |
| Digi-X-01 | 12-01 | Knocking on door—repeated twice | :06 |
| Digi-X-01 | 13-01 | Shower—person showering—bathroom | :36 |
| Digi-X-01 | 14-01 | Fire—in open fireplace | :46 |
| Digi-X-01 | 15-01 | Roller blind—down/up—flapping round | :14 |
| Digi-X-01 | 16-01 | Wall clock—ticking—strikes 12—fly against window—insect | :55 |
| Digi-X-01 | 17-01 | Toilet—flushing—old—gurgling—bathroom | :21 |
| Digi-X-01 | 18-01 | Tap running—in sink—kitchen | :18 |
| Digi-X-01 | 19-01 | Ticking alarm clock—mechanical | :17 |
| Digi-X-01 | 20-01 | Lambs—two—outdoors—flies—birds—mammal—insect | 1:00 |
| Digi-X-01 | 21-01 | Creek—rushing—water | :58 |
| Digi-X-01 | 22-01 | Dogs barking—two—indoors—mammal | :15 |
| Digi-X-01 | 23-01 | Milking cow by hand—metal bucket—farm | 1:02 |
| Digi-X-01 | 24-01 | Cows in barn—restless—mammal | 1:39 |

(b) Boats—Section 6

| Catalog Number | Subject | Description | Time |
|---|---|---|---|
| 6-046 | Ship’s deck | Large type—anchor chain down quickly | :05 |
| 6-047 | Coast Guard cutter | Operating steadily—with water sounds | :41 |
| 6-048 | Water taxi | Start up—steady run—slow down | :47 |
| 6-049 | Water taxi | Steady run—slow down—rev ups—with water sounds | 1:25 |
| 6-050 | Fishing boat | Diesel type—slow speed—steady operation | :36 |
| 6-051 | Tugboat | Diesel type—slow speed—steady operation | :38 |
| 6-052 | Tugboat | Diesel type—medium speed—steady operation | :38 |
| 6-053 | Outboard | Fast run-by | :32 |
| 6-054 | Naval landing craft | Medium speed—steady operation | :50 |

Most broadcast stations and many production houses use libraries because they have neither the personnel nor the budget to assign staff to produce sound effects. Think of what it would involve to produce, for example, the real sounds of a jet fly-by, a forest fire, a torpedo exploding into a ship, or stampeding cattle. Moreover, SFX are available in mono, stereo, and surround sound, depending on the library.

Sound quality, or the absence thereof, was a serious problem with prerecorded sound effects before the days of digital audio, when they were distributed on tape and long-playing (LP) records. The audio was analog, dynamic range was limited, tape was noisy, vinyl LPs got scratched, and too many effects sounded lifeless. Frequently, it was easy to tell that the SFX came from a recording; they sounded “canned.” Sound effects on digital disc, at least those that were digitally recorded or that were transferred from analog to digital with widened dynamic range and without noise, have greatly improved fidelity and dynamic range. Be wary, however, of analog SFX transferred to digital disc without noise processing. Two other benefits of sound effects on digital disc are faster access to tracks and the ability to see a sound’s waveform on-screen.

The great advantage of libraries is the convenience of having thousands of digital-quality sound effects at your fingertips for relatively little cost. There are, however, disadvantages.

Sound-effect libraries have three conspicuous disadvantages: you give up control over the dynamics and the timing of an effect; ambiences vary, so in editing effects together they may not match one another or those you require in your production; and the effect may not be long enough for your needs. Other disadvantages are imprecise titles and, with some downloadable material, mediocre sound and production quality.

One reason why sound editors prefer to create their own effects rather than use libraries is to have control over the dynamics of a sound, particularly effects generated by humans. For example, thousands of different footstep sounds are available in libraries, but you might need a specific footfall that requires a certain-sized woman walking at a particular gait on a solidly packed road of dirt and stone granules. Maybe no library can quite match this sound.

Timing is another reason why many sound editors prefer to create their own effects. To continue our example, if the pace of the footfall has to match action on the screen, trying to synchronize each step in the prerecorded sound effect to each step in the picture could be difficult.
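One way to see the timing problem is to work out where each footstep has to land. If the picture runs at 24 frames per second and the audio at 48 kHz (both assumptions here), each frame lasts exactly 2,000 samples, so a footfall spotted at frame 36 must start at sample 72,000. The sketch below places a single short hit, such as one recorded footstep, at a list of hypothetical spotted frames, the kind of per-step control a continuous library recording does not offer. The function names are illustrative only.

```python
def frame_to_sample(frame: int, fps: float = 24.0, sample_rate: int = 48_000) -> int:
    """Sample position at which a given picture frame begins."""
    return round(frame * sample_rate / fps)


def place_hits(hit: list[float], hit_frames: list[int], total_samples: int,
               fps: float = 24.0, sample_rate: int = 48_000) -> list[float]:
    """Mix one copy of `hit` into silence at each spotted frame (hits may overlap)."""
    out = [0.0] * total_samples
    for frame in hit_frames:
        start = frame_to_sample(frame, fps, sample_rate)
        for i, sample in enumerate(hit):
            if start + i >= total_samples:
                break  # hit runs past the end of the track
            out[start + i] += sample
    return out


# Hypothetical footfalls spotted at frames 0, 14, and 29 of a two-second shot.
footstep = [0.8, 0.5, 0.2, 0.05]
track = place_hits(footstep, [0, 14, 29], total_samples=2 * 48_000)
```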

Matching ambiences could present another problem with prerecorded sound effects. Suppose in a radio commercial you need footsteps walking down a hall, followed by a knock on a door that enters into that hallway. If you cut together two different sounds to achieve the overall effect, take care that the sound of the footsteps walking toward the door has the same ambience as the knock on the door. If the ambiences are different, the audience will perceive the two actions as taking place in different locales. Even if the audience could see the action, the different ambiences would be disconcerting.

To offset this problem, some sound-effect libraries provide different ambiences for specific applications: footsteps on a city street, with reverb to put you in town among buildings and without reverb to put you in a more open area; or gunshots with different qualities of ricochet to cover the various environments in which a shot may be fired. Some libraries provide collections of ambient environments, allowing you to mix the appropriate background sound with a given effect (see 15-2). In so doing, be sure that the sound effect used has little or no ambient coloration of its own. Otherwise the backgrounds will be additive, may clash, or both.
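The warning that backgrounds are additive can be made concrete. When two uncorrelated sounds, such as an ambience baked into an effect and a separate background bed, play together, their powers add, so two equal-level backgrounds combine about 3 dB louder than either alone. The helper below is a sketch of that power sum; it assumes the sources are uncorrelated, which is usually true of different noise-like ambiences but not of identical copies of the same recording, which can add up to 6 dB.

```python
import math


def combined_level_db(levels_db: list[float]) -> float:
    """Combined level of uncorrelated sources, each given in dB (same reference)."""
    if not levels_db:
        raise ValueError("need at least one level")
    total_power = sum(10.0 ** (level / 10.0) for level in levels_db)
    return 10.0 * math.log10(total_power)


# An effect's built-in ambience at -30 dB plus a library bed at -30 dB.
stacked = combined_level_db([-30.0, -30.0])  # about -27 dB
```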

Library SFX have fixed lengths. You need a sound to last 15 seconds, but the effect you want to use is only seven seconds long. What to do?

If the effect is continuous (crowd sound or wind, for example), it can be looped (see “Looping” later in this section). If it is not continuous, such as a dog barking and then growling, you are stuck unless you can find two separate effects of the same-sounding dog barking and growling. If it is possible to edit them together to meet your timing needs, be careful that the edit transition from bark to growl sounds natural.

Titles in sound-effect libraries are often not precise enough to be used as guides for selecting material. For example, what does “laughter,” “washing machine,” “window opening,” or “polite applause” actually tell you about the sound effect besides its general subject and application? Most good libraries provide short descriptions of each effect to give an idea of its content (see 15-1b). Even so, always listen before you choose because nuance in sound is difficult to describe in words.

Although many Web sites provide easy access to thousands of downloadable sound effects, many free of charge, care must be taken to ensure that high-quality sound and production values have been maintained. Be wary of SFX that are compressed or that have low resolutions and sampling frequencies—anything below 16-bit resolution or a 44.1 kHz sampling rate. Make sure that the sound effects are clean and well produced and do not carry any sonic baggage such as low-level noise, unwanted ambience, brittle high frequencies, muddy bass, or confusing perspective.
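A quick header check can catch downloads that fall below the 16-bit, 44.1 kHz floor before you audition them. The sketch below uses Python's standard `wave` module, which reads only uncompressed PCM WAV files, so a file it cannot open is itself a hint that the effect is in some other, possibly compressed, format. The thresholds are the ones suggested above, and the function name is hypothetical. Listening is still required, since a file can meet the specification and still carry noise or a muddy low end.

```python
import wave

MIN_BITS = 16
MIN_RATE_HZ = 44_100


def meets_minimum_spec(source, min_bits: int = MIN_BITS,
                       min_rate_hz: int = MIN_RATE_HZ) -> tuple[bool, int, int]:
    """Check a PCM WAV file (path or binary file object) against a minimum
    bit depth and sampling rate. Returns (passes, bits, rate_hz).

    Files the wave module cannot parse typically raise wave.Error.
    """
    with wave.open(source, "rb") as wav:
        bits = wav.getsampwidth() * 8  # sample width is reported in bytes
        rate_hz = wav.getframerate()
    return bits >= min_bits and rate_hz >= min_rate_hz, bits, rate_hz


# Usage (hypothetical file name):
# passes, bits, rate = meets_minimum_spec("door_creak.wav")
```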

Once a sound effect has been recorded, whether it’s originally produced material or from a prerecorded library, any number of things can be done to alter its sonic characteristics and thereby extend the usefulness of a sound-effect collection.

Because changing a recording’s playing speed alters its pitch and its duration together, it is possible to change the character of any recording by varying its playing speed. This technique will not work with all sounds; some will sound obviously unnatural—either too drawn out and guttural or too fast and falsetto. But for sounds with sonic characteristics that do lend themselves to changes in pitch and duration, the effects can be remarkable.

For example, the sound effect of a slow, low-pitched, mournful wind can be changed to the ferocious howl of a hurricane by increasing its playing speed. The beeps of a car horn followed by a slight ambient reverb can be slowed to become a small ship in a small harbor and slowed yet more to become a large ship in a large harbor. Hundreds of droning bees in flight can be turned into a squadron of World War II bombers by decreasing the playing speed of the effect or turned into a horde of mosquitoes by increasing the playing speed.

Decreasing the playing speed of cawing jungle birds will take you from the rain forest to an eerie world of science fiction. A gorilla can be turned into King Kong by slowing the recording’s playing speed. The speeded-up sound of chicken clucks can be turned into convincing bat vocalizations. The possibilities are endless, and the only way to discover how flexible sounds can be is to experiment.
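Changing playing speed in software amounts to reading the samples faster or slower than they were recorded. The sketch below, a simple linear-interpolation resampler written for clarity rather than quality, plays a clip at `speed` times normal: 2.0 halves the length and raises the pitch an octave (12 semitones), and 0.5 doubles the length and lowers it an octave. It assumes mono samples in a plain list and omits the anti-aliasing filter a production resampler would apply when speeding up; in practice a workstation's varispeed or sample-rate conversion would do this job.

```python
import math


def varispeed(samples: list[float], speed: float) -> list[float]:
    """Play `samples` at `speed` times normal rate, changing pitch and length together.

    speed > 1 shortens the clip and raises its pitch; speed < 1 lengthens and lowers it.
    Uses linear interpolation between neighboring samples (no anti-aliasing filter).
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    last = len(samples) - 1
    out = []
    n = 0
    while n * speed <= last:
        pos = n * speed  # read position in the original clip
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i < last else samples[i]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
        n += 1
    return out


def pitch_change_semitones(speed: float) -> float:
    """Pitch change, in semitones, caused by a given speed factor."""
    return 12.0 * math.log2(speed)


# Slowing a recording to 0.7x lowers it by about 6 semitones and makes it ~43% longer.
```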

Another way to alter the characteristics of a sound is to play it backward. Digital audio workstations usually have a reverse command that rewrites the selected region in reverse, resulting in a backward audio effect. This is a common technique in action films and many film trailers. The resulting reverse bloom, a slow attack followed by a sudden decay and release, provides moments of sonic punctuation and emphasis for on-screen action.
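A reverse command simply rewrites the selected samples in the opposite order. The sketch below reverses a region of a sample list and applies it to a hypothetical percussive hit, modeled here only as an exponentially decaying envelope: the sharp attack and long tail become a slow swell that stops abruptly at full level, the reverse bloom described above.

```python
import math


def reverse_region(samples: list[float], start: int, end: int) -> list[float]:
    """Return a copy of `samples` with samples[start:end] played backward."""
    return samples[:start] + samples[start:end][::-1] + samples[end:]


def decaying_hit(length: int, decay: float = 0.01) -> list[float]:
    """Hypothetical stand-in for a percussive hit: instant attack, exponential decay."""
    return [math.exp(-decay * i) for i in range(length)]


hit = decaying_hit(500)
bloom = reverse_region(hit, 0, len(hit))  # swells up, then stops at full level
```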

Sometimes you may need a continuous effect that has to last several seconds, such as an idling car engine, a drum roll, or a steady beat. When the sound effect is shorter than the required time, you can use looping to repeat it continuously. In hard-disk systems, the looping command will continuously repeat the selected region.

Make sure that the edit points are made so that the effect sounds continuous rather than repetitious. Care must be taken to remove any clicks, pops, or other artifacts that may draw attention to the loop and distract from the illusion. Cross-fading loops can help ameliorate some problems when it is difficult to render a seamless loop. That said, even well-edited loops run the risk of sounding repetitious if they are played for too long.
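Looping with cross-fades can be sketched in a few lines. Each repeat overlaps the end of the previous pass by a short region, fading the old audio out while the new pass fades in, so there is no hard edit point to click. The sketch assumes mono samples in a list and uses an equal-power (sine/cosine) fade, which suits uncorrelated, noise-like material such as wind or crowd; for closely matching material an equal-gain (linear) fade avoids the slight level bump equal-power fades produce. The function name and parameters are hypothetical, not any particular workstation's loop command.

```python
import math


def loop_with_crossfade(samples: list[float], target_len: int,
                        xfade_len: int) -> list[float]:
    """Repeat `samples` until `target_len` samples long, overlapping each repeat with
    the previous pass by `xfade_len` samples using an equal-power cross-fade."""
    if not 0 < xfade_len < len(samples):
        raise ValueError("xfade_len must be at least 1 and shorter than the clip")
    # Fade curves sampled at segment midpoints; fade_out**2 + fade_in**2 == 1.
    fade_out = [math.cos(0.5 * math.pi * (i + 0.5) / xfade_len) for i in range(xfade_len)]
    fade_in = [math.sin(0.5 * math.pi * (i + 0.5) / xfade_len) for i in range(xfade_len)]
    out = list(samples)
    while len(out) < target_len:
        seam = len(out) - xfade_len
        for i in range(xfade_len):
            # Blend the tail of what we have with the head of the next pass.
            out[seam + i] = out[seam + i] * fade_out[i] + samples[i] * fade_in[i]
        out.extend(samples[xfade_len:])  # remainder of the next pass
    return out[:target_len]
```

Each added pass contributes the clip length minus the cross-fade. For the earlier example of a 7-second effect that must last 15 seconds, half-second cross-fades give 7, then 13.5, then 20 seconds, so three passes are enough and the result is trimmed to length.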

Another looping technique that can sometimes be used to lengthen an effect is to reverse-loop similar sounds against themselves. When done correctly, this technique also has the advantage of sounding natural.
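One reading of reverse-looping, often called a ping-pong loop, plays a segment forward and then backward over and over. Because each turnaround joins a sample to its own neighbor, the seams are continuous by construction, although material with an obvious direction, such as a sharp attack, will give itself away when reversed. The sketch below illustrates that interpretation only; the function name is hypothetical.

```python
def ping_pong_loop(segment: list[float], cycles: int) -> list[float]:
    """Forward-then-backward loop of `segment`, repeated `cycles` times.

    The endpoints are not duplicated at the turnarounds, so playback runs
    a, b, c, d, c, b, a, b, c, d, ... without repeating a sample.
    """
    if len(segment) < 2:
        return list(segment) * cycles
    forward = list(segment)
    backward = forward[-2:0:-1]  # reversed, minus the last and first samples
    return (forward + backward) * cycles
```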

Loops can also be designed to create a sound effect. For example, taking the start-up sound of a motorcycle before it cranks over and putting it into a half-second repeat can be used as the basis for the rhythmic sound of a small, enclosed machine area.

By signal-processing a sound effect using reverb, delay, flanging, pitch shifting, and so on, it is possible to create a virtually infinite number of effects. For example, flanging a cat’s meow can turn it into an unearthly cry, adding low frequencies to a crackling fire can make it a raging inferno, and chorusing a bird chirp can create a boisterous flock.
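Flanging and chorusing are both built from a short, slowly varying delay mixed back with the original. In a flanger the delay sweeps over roughly 1 to 10 milliseconds, producing moving comb-filter notches; chorusing uses longer delays, on the order of 15 to 30 milliseconds, and often several voices, so the copies read as separate sources, which is how one chirp can suggest a flock. The sketch below is a minimal single-voice flanger under those assumptions; the parameter names and defaults are illustrative, not taken from any particular plug-in.

```python
import math


def flanger(samples: list[float], sample_rate: int = 48_000,
            min_delay_ms: float = 1.0, max_delay_ms: float = 8.0,
            sweep_hz: float = 0.25, mix: float = 0.5) -> list[float]:
    """Single-voice flanger: mix the input with a copy whose delay sweeps sinusoidally
    between min_delay_ms and max_delay_ms at sweep_hz."""
    center_ms = (min_delay_ms + max_delay_ms) / 2.0
    depth_ms = (max_delay_ms - min_delay_ms) / 2.0
    out = []
    for n, dry in enumerate(samples):
        delay_ms = center_ms + depth_ms * math.sin(2.0 * math.pi * sweep_hz * n / sample_rate)
        pos = n - delay_ms * sample_rate / 1000.0  # read position of the delayed copy
        if pos < 0:
            wet = 0.0  # the delayed copy has not started yet
        else:
            i = int(pos)
            frac = pos - i
            nxt = samples[i + 1] if i + 1 < len(samples) else samples[i]
            wet = samples[i] * (1.0 - frac) + nxt * frac  # linear interpolation
        out.append((1.0 - mix) * dry + mix * wet)
    return out
```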

Signal processing also helps enhance a sound effect without actually changing its identity—for example, adding low frequencies to increase its weight and impact or beefing it up by pitch-shifting the effect lower and then compressing it (bearing in mind that too much bottom muddies the overall sound and masks the higher frequencies). Other signal-processing techniques are discussed in the following section.
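The “pitch down, then compress” recipe relies on a compressor's gain curve: below a threshold the level is untouched, and above it the output rises only 1 dB for every `ratio` dB of input, evening out peaks so the lowered effect can sit louder in the mix. The sketch below computes only that static, hard-knee curve; real compressors add attack and release times and often a soft knee, and the threshold and ratio values here are arbitrary examples.

```python
def compressor_gain_db(input_db: float, threshold_db: float = -20.0,
                       ratio: float = 4.0) -> float:
    """Gain change (dB, zero or negative) from a static hard-knee compressor curve."""
    if ratio < 1.0:
        raise ValueError("ratio must be at least 1")
    if input_db <= threshold_db:
        return 0.0  # below threshold: no gain reduction
    output_db = threshold_db + (input_db - threshold_db) / ratio
    return output_db - input_db


# A peak 12 dB over a -20 dB threshold comes out only 3 dB over it (9 dB of reduction).
reduction = compressor_gain_db(-8.0)
```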

Although prerecorded sound libraries are readily available—some are quite good—most audio designers, particularly for theatrical film and television, prefer to produce their own effects because doing so allows complete control in shaping a sound to meet a precise need. In addition, if the effect is taken from the actual source, it has the advantage of being authentic. Then, too, sound designers are sometimes called on to create effects that do not already exist, and they prefer to build these from live sources.

Live sound effects are collected in three ways: by creating them in a studio, by recording them on the set during shooting, and by recording them directly from the actual source, usually in the field, sometimes during production.

Creating sound effects in the studio goes back to the days before television, when drama was so much a part of radio programming, and many ingenious ways were devised to create them. Sound effects were produced vocally by performers and also by using the tools of the trade: bells, buzzers, cutlery, cellophane, doorframes, telephone ringers, water tubs, car fenders, coconut shells, panes of glass—virtually anything that made a sound the script called for. These effects were produced live and were usually done in the studio with the performers. (Effects that were difficult to produce were taken from recordings.) These techniques are still useful and can be further enhanced when combined with the infinite variety of signal-processing options available today.

Many types of sound effects can be produced vocally. Some performers have the ability to control their breathing, manipulate their vocal cords and palate, and change the size and the shape of their mouth to produce a variety of effects, such as animal sounds, pops, clicks, and honks.

There are many notable voice artists. A few of the better known are Joe Siracusa, Frank Welker, and Fred Newman. Joe Siracusa was the voice of Gerald McBoing Boing in the 1951 Academy Award-winning cartoon. The story, by Dr. Seuss, relates the adventures of a six-year-old boy who communicates through sound effects instead of words. In the hundreds of films to his credit, Frank Welker has provided the sounds of the dragon, parrot, and raven in The Pagemaster, the snake in Anaconda, the gopher in Caddyshack II, the gorilla in Congo, Godzilla and the junior Godzillas in Godzilla, the insects in Honey, I Shrunk the Kids, and the Martian vocals in Mars Attacks! Fred Newman is probably best known as the sound-effect artist on A Prairie Home Companion. His vocal effects not only can be heard on the program but also are cataloged, with instructions for producing them, in his book Mouth Sounds: How to Whistle, Pop, Boing, and Honk for All Occasions and Then Some (see Bibliography).

Two keys to producing effective vocal sound effects are making sure they do not sound as if they come from a human voice and infusing the sound with the sonic identity, or personality, that creates the “character.”

The term now applied to manually producing sound effects in the studio is Foley recording, named for Jack Foley, a sound editor with Universal Pictures for many years. Given the history of radio drama and the fact that other Hollywood sound editors had been producing effects in the studio for some time, clearly Jack Foley did not invent the technique. His name became identified with it in the early 1950s when he had to produce the sound of paddling a small survival raft in the ocean. Instead of producing the effect separately and then having it synced to picture later, which had been the typical procedure, he produced the effect in sync with picture at the same time. The inventiveness and efficiency he showed in simultaneously producing and syncing sound effects and picture—saving the sound editor considerable post-production time—impressed enough people that Foley’s name has become associated with the technique ever since.

Today the primary purposes of Foley recording are to produce sound effects that are difficult to record during production, such as for far-flung action scenes or large-scale movement of people and materiel; to create sounds that do not exist naturally; and to produce controlled sound effects in a regulated studio environment.

This is not to suggest that “Foleying” and sonic authenticity are mutually exclusive. Producing realistic Foley effects has come a long way since the days of radio drama and involves much more than pointing a microphone at a sound source on a surface that is not a real surface in an acoustic space that has no natural environment. A door sound cannot be just any door. Does the scene call for a closet door, a bathroom door, or a front door? A closet door has little air, a bathroom door sounds more open, and a front door sounds solid, with a latch to consider. In a sword fight, simply banging together two pieces of metal adds little to the dynamics of the scene. Using metal that produces strong harmonic rings, however, creates the impression of swords with formidable strength. A basketball game recorded in a schoolyard will have a more open sound, with rim noises, ball handling, and different types of ball bounces on asphalt, than will a basketball game played in an indoor gymnasium, which will differ still from the sound of a game played in an indoor arena.

Generally, Foley effects are produced in a specially designed sound studio known as a Foley stage (see 15-3). It is acoustically dry not only because, as with dialogue rerecording, ambiences are usually added later but also because reflections from loud sounds must be kept to a minimum. That said, Foley recording is sometimes done on the actual production set to take advantage of its more realistic acoustic environment.

15-3 A Foley stage. The two Foley walkers are using the various pits and equipment to perform, in sync, the sounds that should be heard with the picture.

Foley effects are usually produced in sync with the visuals. The picture is shown on a screen in the studio, and when a foot falls, a door opens, a paper rustles, a floor squeaks, a man sits, a girl coughs, a punch lands, or a body splashes, a Foley artist generates the effect at the precise time it occurs on-screen. Such a person is sometimes called a Foley walker because Foley work often requires performing many different types of footsteps. In fact, many Foley artists are dancers.

Important to successful Foleying is having a feel for the material—to become, in a sense, the on-screen actor by recognizing body movements and the sounds they generate. John Wayne, for example, did not have a typical walk; he sort of strode and rolled. Other actors may clomp, shuffle, or glide as they walk.

Also important to creating a sound effect is studying its sonic characteristics and then finding a sound source that contains similar qualities. Take the sound of a growing rock. There is no such sound, of course, but if there were, what would it be like? Its obvious characteristics would be stretching and sharp cracking sounds. One solution is to inflate a balloon and scratch it with your fingers to create a high-pitched scraping sound. If the rock is huge and requires a throatier sound, slow down the playing speed. For the cracking sound, shellac an inflated rubber tube. Once the shellac hardens, sonically isolate the valve to eliminate the sound of air escaping and let the air out of the tube. As the air escapes, the shellac will crack. Combine both sounds to get the desired effect.
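Slowing the playing speed, as suggested for the huge rock, lowers pitch and lengthens the sound by the same factor, as on a variable-speed tape machine. Half speed drops the sound one octave, or 12 semitones. The Python sketch below illustrates this varispeed approach using simple linear interpolation. The function names are hypothetical, and a production tool would use a higher-quality resampling filter.

```python
import math

def varispeed(samples, speed):
    """Resample as if played back at `speed` times normal (0.5 = half speed).
    Slowing down lowers the pitch and lengthens the sound by the same factor."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    out_len = int(len(samples) / speed)
    out = []
    for n in range(out_len):
        pos = n * speed                     # read position in the original
        i = int(pos)
        frac = pos - i
        a = samples[i]
        b = samples[i + 1] if i + 1 < len(samples) else a
        out.append(a + frac * (b - a))      # linear interpolation
    return out

def semitone_shift(speed):
    """Pitch change, in semitones, produced by a playback-speed ratio."""
    return 12.0 * math.log2(speed)
```

For example, `semitone_shift(0.5)` gives -12.0, one octave down. The resampled list is twice as long, so the scraping sound also stretches out, which suits a slow, massive rock.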

There are myriad examples of Foley-created effects, many carryovers from the heyday of radio drama. This section presents just a sampling of the range of possibilities.

Door sounds have been a fundamental aspect of Foley recording since its beginnings. The principal sound heard on a door close or open comes from the lock and the jamb. There are numerous ways to achieve this effect for different types of doors:

Squeaky door—twist a leather belt or billfold, turn snug wooden pegs in holes drilled in a block of wood, or twist a cork in the mouth of a bottle

Elevator door—run a roller skate over a long, flat piece of metal

Jail door—the characteristic sound of an iron door is the noise when it clangs shut: clang two flat pieces of metal together, then let one slide along the other for a moment, signifying the bar sliding into place

Other iron door—draw an iron skate over an iron plate (for the hollow clang of iron doors opening); rattling a heavy chain and a key in a lock adds to the effect

Screen door—the distinctive sound comes from the spring and the rattle of the screen on the slam: secure an old spring, slap it against a piece of wood, then rattle a window screen

Swinging doors—swing a real door back and forth between the hands, letting the free edge strike the heels of the hands

Punches, slaps, and blows to the head and body are common Foley effects, as are the sounds of footsteps, falling, and breaking bones. These sounds have broad applications in different genres, from action-adventure to drama and comedy. As effects, they can be created using an imaginative variety of sources and techniques:

Creating blows on the head by striking a pumpkin with a bat, striking a large melon with a wooden mallet or a short length of garden hose—add crackers for additional texture—or striking a baseball glove with a short piece of garden hose

Creating blows on the chin by lightly dampening a large powder puff and slapping it on the wrist close to the mic, holding a piece of sponge rubber in one hand and striking it with the fist, slipping on a thin leather glove and striking the bare hand with the gloved fist, or hanging a slab of meat and punching it with a closed fist

Cracking a whip to heighten the impact of a punch

Pulling a fist in and out of a watermelon to produce the sound of a monster taking the heart from a living person

Creating various footsteps: on concrete—use hard-heeled shoes on composition stone; on gravel—fill a long shallow box with gravel and have someone actually walk on it; on leaves—stir cornflakes in a small cardboard box with fingers; on mud—in a large dishpan containing a little water, place several crumpled and shredded newspapers or paper towels and simulate walking by using the palm of the hand for footsteps; on snow—squeeze a box of cornstarch with the fingers in the proper rhythm or squeeze cornstarch in a gloved hand; on stairs—use just the ball of the foot, not the heel, in a forward sliding motion on a bare floor

Dropping a squash to provide the dull thud of a falling body hitting the ground (to add more weight to the body, drop a sack of sand on the studio floor)

Crushing chicken bones together with a Styrofoam cup for bone breaks

Breaking bamboo, frozen carrots, or cabbage for more bone crunching

Using string to suspend wooden sticks from a board and manipulating the board so that the sticks clack together to create the effect of bones rattling

Creating the effect of breaking bones by chewing hard candy close to the mic, twisting and crunching a celery stalk, or snapping small-diameter dowels wrapped in soft paper

Inserting a mini-mic up the nose to create the perspective of hearing breathing from inside the head

Twisting stalks of celery to simulate the sound of tendons being stretched

Bending a plastic straw to simulate the sound of a moving joint

The sounds of guns and other weaponry can be made from a wide range of sources when the real thing is not available:

Snapping open a pair of pliers, a door latch, or a briefcase catch to cock a pistol

Closing a book smartly; striking a padded leather cushion with a thin, flat stick or a whip; or pricking a balloon with a pin to create a pistol shot

Striking a book with the flat side of a knife handle for a bullet hitting a wall

For the whiz-by ricochet of a passing bullet, using a slingshot with large coins, washers, screws—anything that produces an edge tone—and recording it close-miked

Whipping a thin, flexible stick through the air and overdubbing it several times to create the sound of whooshing arrows

Piling a collection of tin and metal scraps into a large tub and then dumping it to simulate a metallic crash (for a sustained crash, shake and rattle the tub until the cue for the payoff crash)

Dropping debris or slamming a door for an explosion

Using a lion’s roar without the attack and some flanging to add to an explosion

Recording light motorcycle drive-bys, then pitch-shifting them and reshaping their sound envelopes to use as building material for an explosion

Flushing a toilet, slowed down with reverb, to simulate a depth charge

Banging on a metal canoe in a swimming pool and dropping the canoe into the water above an underwater mic to produce the sounds of explosions beating on the hull of a submarine

Using the venting and exhaust sounds from a cylinder of nitrogen as the basis for a freeze gun

While nothing will substitute for a high-quality ambience recording, the following are ways to both enhance and approximate sounds of the elements and of various creatures:

Folding two sections of newspaper in half, then cutting each section into parallel strips and gently swaying them close to the mic to create the effect of a breeze

Using a mallet against a piece of sheet metal or ductwork to sound like thunder

Letting salt or fine sand slip through fingers or a funnel onto cellophane, or pouring water from a sprinkling can into a tub with some water already in it, for simulating rain

Blowing air through a straw into a glass of water for the effect of a babbling brook (experiment by using varying amounts of water and by blowing at different speeds)

Blowing into a glass of water through a soda straw for a flood

Using the slurpy, sucking sound of a bathtub drain as the basis for a whirlpool or someone sinking into quicksand

Discharging compressed air in a lot of mud to create the dense bubbling sound of a molten pit

Crumpling stiff paper or cellophane near the mic or pouring salt from a shaker onto stiff paper to create the sound of fire; blowing lightly through a soda straw into liquid at the mic for the roar of flames; breaking the stems of broom straws or crushing wrapping paper for the effects of a crackling fire

Crumpling Styrofoam near the mic for the effect of crackling ice

Twisting an inflated balloon for the effect of a creaking ice jam

Scraping dry ice on metal to create the sound of a moving glacier

Clapping a pair of coconut shells together in proper rhythm to create the clippety-clop sound of hoofbeats on a hard road

Running roller skates over fine gravel sprinkled on a blotter for a horse and wagon

Pushing a plunger in a sandbox to simulate horses walking on sand

Using a feather duster in the spokes of a spinning bicycle wheel to create the sound of gently beating wings—or flapping a towel or sheet to generate a more aggressive wing sound

Taking the rubber ball from a computer mouse and dragging it across the face of a computer monitor to create the sound of a bat

Wiping dry glass to use as the basis for a squeak from a large mouse

Lightly tapping safety pins on linoleum to simulate a mouse’s footsteps

Often it takes two, three, or more sounds plus signal processing to come up with the appropriate sound effect. When a sound effect is built in this way, numerous strategies and techniques are employed to achieve the desired impact (see Chapter 20). Every decision informs the whole: selecting source material; layering and combining sounds; editing; (re)shaping sound envelopes; speeding up or slowing down a sound; reversing and looping; and applying reverb, delay, flanging, chorusing, or other processing. The following examples illustrate the range of approaches:

The helicopter sound at the beginning of Apocalypse Now—the blade thwarp, the turbine whine, and the gear sound—was created by synthesizing the sounds of a real helicopter.

The sound of the insects coming out of the heat duct in Arachnophobia was produced by recording Brazilian roaches walking on aluminum. The rasping sound a tarantula makes rubbing its back legs on the bulblike back half of its body was created from the sound of grease frying mixed with high-pressure air bursts.

In Back to the Future Part III, the basic sound of galloping horses was created using plungers on different types of surfaces. The sound was then rerecorded and processed in various ways to match the screen action.

In WALL-E the howling wind of Earth at the beginning of the film was made from a recording of Niagara Falls. The sound was processed using reverb, slight feedback in the upper frequency range, and some equalization.

The sound of the blaster weapons in Star Wars was made by recording the sound of tapping on a radio tower’s high-tension guy wire. The characteristic, sweeping, “pass-by feeling” of the sound envelope is a result of higher frequencies traveling along the wire faster than lower frequencies. Similarly, the sound of Eve’s laser in WALL-E was made from striking an extended Slinky.

The sounds of the spaceship Millennium Falcon in Star Wars included tiny fan motors, a flight of World War II torpedo planes, a pair of low-frequency sine waves beating against each other, variably phased white noise with frequency nulls, a blimp, a phased atom bomb explosion, a distorted thunderclap, an F-86 jet firing afterburners, a Phantom jet idling, a 747 takeoff, an F-105 jet taxiing, and a slowed-down P-51 prop plane pass-by. Various textures were taken from each of these sounds and blended together to create the engine noises, takeoffs, and fly-bys.

In Jurassic Park III the sounds of velociraptors were created from a variety of sources, including the hissing of a house cat, snarls and cackles from vultures, ecstatic calls from emperor and Adélie penguins, and the sounds of marine mammals and larger cats. Building on the repertoire of the first two films, the tyrannosaurus sounds were composed of the trumpeting sound of a baby elephant, an alligator growl, and the growls of 15 other creatures, mostly lions and tigers. The inhale of T. Rex’s breathing included the sounds of lions, seals, and dolphins; the exhale included the sound of a whale’s blowhole. The smashing, crashing sound of trees being cut down, particularly sequoias, was used to create the footfalls of the larger dinosaurs. The sounds of the reptilian dinosaurs were built from the sounds of lizards, iguanas, bull snakes, a rattlesnake (flanged), and horselike snorts. A Synclavier was used to process many of these sounds, including some of the dinosaur footsteps and eating effects.[2]

In the film The Pagemaster, the dragon’s sound was produced vocally and reinforced with tiger growls to take advantage of the animal’s bigger and more resonant chest cavity.

The spinning and moving sounds of the main character going from place to place in The Mask were created by twirling garden hoses and power cables to get moving-through-air sounds, layering them in a Synclavier, routing them through a loudspeaker, waving a mic in front of the loudspeaker to get a Doppler effect, and then adding jets, fire whooshes, and wind gusts.

The multilayered sound of the Batmobile in Batman & Robin comprised the sounds of an Atlas rocket engine blast, the turbocharger whine of an 800-horsepower Buick Grand National, a Ducati motorcycle, and a Porsche 917 12-cylinder racer.

In St. Elmo’s Fire, the effect of St. Elmo’s fire, a phenomenon caused when static electricity leaps from metal to metal, was emulated by signal-processing the sound of picking at and snapping carpet padding.

For the final electrocution scene in The Green Mile, the sounds of metal scraping, human screams, barking dogs, squealing pigs, and other animals in pain were combined, with the idea of making the electricity’s effect sound like fingernails on a chalkboard.

In Star Trek: Insurrection, there are little flying drones, basically robots with wings. Their sound was created by running vinyl-covered cable down a slope, tying a boom box to it on a pulley, and putting a microphone in the middle to get the Doppler effect. A series of different-quality steady-state tones was played back from the boom box’s cassette to create the basic effect. All the sounds were then processed in a Synclavier.

Many different processed sounds went into creating the effects for the hovercraft Nebuchadnezzar in The Matrix. To help create the low metal resonances heard inside the ship, the sound of a giant metal gate that produced singing resonances was pitch-shifted down many octaves.

In Master and Commander, to simulate the sound of a nineteenth-century British frigate in a storm at sea, a large wooden frame was built and put in the bed of a pickup truck. A thousand feet of rope was strung tightly around the frame. The truck was then driven at high speed into a formidable headwind in the Mojave Desert. Acoustic blankets deadened the motor and truck sounds. The sound of the air meeting the lines of rope approximated the shriek of the wind in the frigate’s rigging. A group of artillery collectors provided authentic cannons and cannon shot for the ship-to-ship cannonading. Chain shot (two cannonballs connected by a 2-foot piece of chain, used to take out rigging and sails) and grapeshot (canisters with a number of smaller balls inside, used to kill the opposition) were recorded at a firing range.[3]
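The building strategies listed earlier (layering, reversing, looping, and reshaping sound envelopes) can be sketched as simple operations on lists of samples. This Python sketch is a hypothetical illustration, not any editor’s or workstation’s API. `fade_in` shows one way to remove an attack, as with the lion’s roar used in an explosion. `layer` mixes tracks at sample offsets, each with its own gain.

```python
def reverse(sound):
    """Play a sound backward."""
    return sound[::-1]

def loop(sound, times):
    """Repeat a sound end to end."""
    return list(sound) * times

def fade_in(sound, length):
    """Reshape the envelope by ramping the first `length` samples up from
    silence, which softens or removes the attack."""
    out = list(sound)
    for n in range(min(length, len(out))):
        out[n] *= n / length
    return out

def layer(*tracks):
    """Mix (offset, samples, gain) tuples into one buffer, summing overlaps."""
    end = max(offset + len(samples) for offset, samples, _ in tracks)
    mix = [0.0] * end
    for offset, samples, gain in tracks:
        for n, x in enumerate(samples):
            mix[offset + n] += gain * x
    return mix
```

An explosion might then be assembled as `layer((0, debris, 1.0), (0, fade_in(roar, 2000), 0.6))`. Here `debris` and `roar` stand for hypothetical recorded sample lists, and the gains and fade length are arbitrary starting points for experimentation.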
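Two of the examples above relied on the Doppler effect. A sound moving toward a microphone is heard higher in pitch, and one moving away is heard lower. For a source traveling in a straight line past a stationary mic, the standard moving-source formula gives the frequency heard at each position. The sketch below assumes a speed of sound of 343 m/s (air at about 20 °C). For simplicity, it ignores propagation delay and wind. The function name is hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at about 20 degrees C

def doppler_pass_by(f_source, speed, distance, x):
    """Frequency heard by a stationary mic `distance` meters (> 0) from a
    straight path when a source moving at `speed` m/s is at position `x`
    meters along that path (negative x = approaching, 0 = closest point).
    Simplified: ignores propagation delay and wind."""
    r = math.hypot(x, distance)              # source-to-mic distance
    v_toward = -speed * x / r                # velocity component toward the mic
    return f_source * SPEED_OF_SOUND / (SPEED_OF_SOUND - v_toward)
```

Evaluating this for a steady tone at a series of positions traces the familiar rise-then-fall of a pass-by. The pitch is above the source frequency while the source approaches, equal to it at the closest point, and below it as the source recedes.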

Being ingenious in creating a sound effect is not enough. You also have to know the components of the effect and the context in which the effect will be placed. Let us use two examples as illustrations: a car crash and footsteps.

The sound of a car crash generally has six components: the skid, a slight pause just before impact, the impact, the grinding sound of metal, the shattering of glass just after impact, and silence.

The sound of footsteps consists of three basic elements: the impact of the heel, which is a fairly crisp sound; the brush of the sole, which is a relatively soft sound; and, sometimes, heel or sole scrapes.

But before producing these sounds, you need to know the context in which they will be placed. For the car crash, the make, model, and year of the car will dictate the sounds of the engine, the skidding, and, after impact, the metal and the glass. The type of tires and the road surface will govern the pitch and the density of the riding sound; the car’s speed is another factor that affects pitch. The location of the road—for example, in the city or the country—helps determine the quality of the sound’s ambience and reverb. A swerving car will require tire squeals. Of course, what the car crashes into will also affect the sound’s design.

As for the footsteps, the height, weight, gender, and age of the person will influence the sound’s density and the heaviness of the footfall. Another influence on the footfall sound is the footwear—shoes with leather heels and soles, pumps, spiked heels, sneakers, sandals, and so on. The choices here may relate to the hardness or softness of the footfall and the attack of the heel sound or lack thereof. Perhaps you would want a suction-type or squeaky-type sound with sneakers; maybe you would use a flexing sound of leather for the sandals. The gait of the walk—fast, slow, ambling, steady, or accented—will direct the tempo of the footfalls. The type of walking surface—concrete, stone, wood, linoleum, marble, or dirt—and whether it is wet, snowy, slushy, polished, or muddy will be sonically defined by the sound’s timbre and envelope. The amount of reverberation added to the effect depends on where the footfalls occur.

The capacitor microphone is most frequently used in recording Foley effects because of its ability to pick up subtleties and capture transients—fast bursts of sound, such as door slams, gunshots, breaking glass, and footsteps—that constitute so much of Foley work. Tube-type capacitors help smooth harsh transients and warm digitally recorded effects.

Directional capacitors—super-, hyper-, and ultracardioid—are preferred because they pick up concentrated sound with little ambience. These pickup patterns also provide more flexibility in changing perspectives. Moving a highly directional microphone a few inches can sound as if it were moved several feet.

Wide dynamic range and a low noise floor call for a good microphone preamp. Recording loud bangs or crashes, or the soft nuances of paper folding or pins dropping, requires a preamp that can handle a wide variety of dynamics and supply considerable gain with almost no added noise. Many standard in-console preamps are not up to the task. Limiting and compression are sometimes used in Foley recording, but apart from noise reduction, processing is usually done during the final stages of post-production.

Microphone placement is critical in Foleying because the on-screen environment must be replicated as closely as possible on the Foley stage. In relation to mic-to-source distance, the closer the microphone is to a sound source, the more detailed, intimate, and drier the sound. When the mic is farther from a sound source, the converse is true, but only to a certain extent on a Foley stage because the studio itself has such a short reverberation time. To help create perspective, openness, or both in that case, multiple miking is often used. If a shot calls for a close environment, baffles can be used to decrease the room size; if the screen environment is more open, the object is to operate in as open a space as possible. Remember, do not confuse perspective with loudness: it is hearing more or less of the surroundings that helps create perspective.
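A simplified model makes the point about perspective concrete. In a free field, direct sound falls about 6 dB each time the mic-to-source distance doubles (the inverse-square law). In an idealized diffuse room, the reverberant level stays roughly constant wherever the mic is placed. Moving the mic back therefore lowers the direct-to-reverberant ratio, which the ear hears as more of the surroundings. The Python sketch below assumes those idealized conditions, and the function names are hypothetical. Real rooms deviate from this model, and a dry Foley stage in particular has a low reverberant level.

```python
import math

def direct_level_db(level_at_ref_db, ref_distance, distance):
    """Inverse-square law: the direct sound drops 20*log10(distance ratio) dB,
    about 6 dB per doubling of distance."""
    return level_at_ref_db - 20.0 * math.log10(distance / ref_distance)

def direct_to_reverberant_db(level_at_ref_db, ref_distance, distance,
                             reverberant_level_db):
    """Direct-to-reverberant ratio, assuming a diffuse reverberant field
    whose level does not change with mic position."""
    return (direct_level_db(level_at_ref_db, ref_distance, distance)
            - reverberant_level_db)
```

Take a source measuring 80 dB at 1 m in a room with a 60 dB reverberant level. The ratio is 20 dB at 1 m, about 14 dB at 2 m, and 0 dB at 10 m. The sound grows more distant mainly because the room takes over, not simply because it is quieter.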

It is important to know how to record sound at a distance. Equalization and reverb can only simulate distance and room size. Nothing is as authentic as recording in the appropriately sized room with the microphones matching the camera-to-action distance.

A critical aspect of Foley recording cannot be overstated: make sure the effects sound consistent and integrated and do not seem to be outside or on top of the sound track. When a sound is recorded at too close a mic-to-source distance, in poor acoustics, or with the wrong equipment, it is unlikely that any signal processing can save it.

Some directors prefer to capture authentic sounds as they occur on the set during production, by recording them separately in the field, or both. These directors are willing to sacrifice, within limits, the controlled environment of the Foley stage for the greater sonic realism and authenticity of actual sound sources in their natural surroundings. Or they may wish to use field sound as a reference for Foley recording, or to take advantage of a sound’s authenticity and use it as the basis for further processing. Still, a number of factors must be taken into consideration to obtain usable sounds.

If sounds are recorded with the dialogue as part of the action—a table thump, a match strike, a toaster pop—getting the dialogue is always more important than getting the sound effect; the mic must be positioned for optimal pickup of the performers.

When a director wants sound recorded with dialogue, the safest course of action is to record it with a different mic than the one being used for the performers. If the dialogue mic is a boom, use a plant (stationary) mic for the sound effect; if the dialogue is recorded using body mics, use a boom or plant mic for the sound. This facilitates recording sound and dialogue on separate tracks and therefore provides flexibility in post-production editing and mixing.

If for some reason only one mic is used, a boom would be best. Because its mic-to-source position changes with the shot, there is a better chance of capturing and maintaining perspectives with it than with a body or plant mic. Moreover, in single-camera production a scene is shot at least a few times from different perspectives, so there is more than one opportunity to record a usable sound/dialogue relationship.

This point should be emphasized: it is standard in single-camera production to shoot and reshoot a scene. For each shot, time is taken to reposition camera, lights, and crew; to redo makeup; to primp wardrobe; and so on. During this time the sound crew also should be repositioning mics. Take advantage of every opportunity to record audio on the set. The more takes of dialogue and sound effects you record, the greater the flexibility in editing and mixing. Even if dialogue or effects are unusable because of mediocre audio quality, it is still wise to preserve the production recording because it is often useful in dialogue rerecording and Foleying as a reference for timing, accent, and expressiveness.

You never want to mask an actor’s line with a sound effect, or any other sound for that matter, or to have a sound effect call attention to itself (unless that is the intent). If sound effects are recorded on the set before or after shooting rather than with the action, try to do it just before shooting. Everyone is usually in place on the set, so the acoustics will match the acoustics of the take. If an effect must be generated by the actor, it can be. And all sounds should be generated from the sound source’s location in the scene.

When recording the effect, there must be absolute quiet; nothing else can be audible—a dog barking far in the distance, an airplane flying overhead, wind gently wafting through trees, crockery vibrating, lights buzzing, crew shuffling, actors primping. The sound effect must be recorded “in the clear” so that it carries no sonic baggage into post-production.

This brings us back to the question: If all these added concerns go with recording sound effects on the set, why bother? Why not save time, effort, and expense and simply Foley them? Some directors do not care for the controlled acoustics of the Foley stage or for artificially created sound. They want to capture the flavor, or “air,” of a particular location, preserve the unique characteristic of a sound an actor generates, and fuse the authenticity of and interaction among sounds as they occur naturally.

This poses considerable challenges in moving-action scenes, such as chases, that take place over some distance. Such scenes are usually covered by multiple cameras; multiple microphones, though not necessarily positioned with the cameras, likewise help capture the sounds of far-flung action. The mics can be situated at strategic spots along the chase route, with separate recorder/mixer units at each location to control the oncoming, passing, and fading sounds. In addition, a mic mounted on the means of conveyance in the chase can be of great help in post-production editing and mixing. For example, on a getaway car, mics can be mounted to the front fender, level with the hubcaps, and aimed at a 45-degree angle toward where the rubber meets the road; or a fish-pole boom can be rigged through the rear window and rested on a door for support. In a horse chase, a mic can be mounted outside the rider’s clothing or fastened to the lower saddle area and pointed toward the front hooves.

Another advantage of multiple-miking sound effects is the ability to capture various perspectives of the same sound. To record an explosion, for example, positioning mics at, say, 25, 50, 100, and 500 feet provides a number of sonic possibilities when editing and mixing. Another reason for recording extremely loud sound from more than one location is that the sound can overmodulate a recorder to the point that much of it may not record. With digital recording, remember that there is no headroom above 0 dBFS (full scale); anything louder is clipped. That is why, for example, the sound of loud weaponry is compressed: to create a more percussive sound that otherwise might be distorted or sound thinner and weaker than the actual sound.
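Because digital audio has no headroom above 0 dBFS, two checks are worth understanding when recording loud effects: the peak level of a recording and the gain curve of a compressor. The sketch below shows only the static input/output curve of a downward compressor. Real compressors add attack and release timing, knee shaping, and makeup gain. The threshold and ratio defaults and the function names are assumptions for illustration.

```python
import math

def peak_dbfs(samples):
    """Peak level relative to digital full scale (1.0 = 0 dBFS).
    Anything above 0 dBFS cannot be represented and will clip."""
    peak = max(abs(x) for x in samples)
    return float("-inf") if peak == 0 else 20.0 * math.log10(peak)

def compressor_gain_db(input_db, threshold_db=-20.0, ratio=4.0):
    """Static gain curve: below threshold, no change; above it, the output
    rises only 1 dB for every `ratio` dB of input. Negative = reduction."""
    if input_db <= threshold_db:
        return 0.0
    over = input_db - threshold_db
    return over / ratio - over
```

With these settings, an input 12 dB over the threshold is reduced by 9 dB, so the output ends up only 3 dB over. Taming peaks this way is what lets a gunshot sit loud in the track without clipping.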

The obvious microphone to use in many of these situations may seem to be the wireless because of its mobility. As good as wireless systems can be, however, they are still subject to interference from radio frequencies, telephone lines, and passing cars, which causes spitting and buzzing sounds. A directional capacitor microphone connected to a good mic preamp is the most dependable approach to take here. In these applications use very strong shock mounts and tough windscreens. And do not overlook the importance of wearing ear protection when working with loud sounds. Do not monitor these sounds directly: use your eyes and peak meter instead of your ears.

Live sound recorded away from the set for a specific production—or between productions to build sound-effect libraries—is usually collected in the field. As with most field recording, take the utmost care to record the effect in perspective, with no unwanted sound, especially wind and handling noise. It is helpful to use a sound level meter to measure ambient noise levels on location. Like a cinematographer’s light meter, it can inform a range of decisions, from mic selection to placement. It cannot be overemphasized that any recording done away from the studio also requires first-rate circumaural headphones for reliable monitoring.
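A sound level meter reports sound pressure level (SPL) in decibels relative to 20 micropascals, the nominal threshold of hearing. The arithmetic behind an unweighted reading is sketched below in Python. It assumes the input is a nonempty list of calibrated pressure samples in pascals. Actual meters also apply frequency weighting (A or C) and time weighting (fast or slow), which this sketch omits.

```python
import math

P_REF = 20e-6  # reference pressure for dB SPL: 20 micropascals

def spl_db(pressures_pa):
    """Unweighted sound pressure level of calibrated pressure samples
    (in pascals): 20*log10(rms / 20 uPa)."""
    rms = math.sqrt(sum(p * p for p in pressures_pa) / len(pressures_pa))
    return 20.0 * math.log10(rms / P_REF)
```

An RMS pressure of 1 Pa corresponds to about 94 dB SPL, the level many microphone calibrators produce.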

As with most sound-effect miking, the directional capacitor is preferred for reasons already noted. That said, the parabolic microphone system, using a capacitor mic, is also employed for recording sounds in the field for a long-reach, highly concentrated pickup. This is particularly important when recording weaker sounds, such as insects, certain types of birdcalls, and rustling leaves. Parabolic mics are essentially midrange instruments, so it is probably unwise to use them on sound sources with significant defining low or high frequencies.

For stereo, middle-side (M-S) and stereo mics are most common because they are easier to set up than coincident or spaced pairs of microphones and are more accurate to aim at a sound source. In fact, spaced stereo mics should be avoided because on playback the sound image tends to jump from one loudspeaker to the other as the effect passes. M-S mics are particularly useful because environmental sounds are often difficult to control. With an M-S mic, the environmental sound is kept separate from the principal sound, so in the mix you can either leave it out or combine the two sounds.
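M-S decoding is a simple sum-and-difference matrix: left is mid plus side, and right is mid minus side. The sketch below adds a width control that scales the side component, which is how the environmental sound can be reduced, removed, or emphasized in the mix. Scaling conventions vary (some decoders multiply the outputs by 0.5 or by 1/√2 to keep levels consistent), so treat the gain structure here as an assumption.

```python
def ms_decode(mid: list[float], side: list[float], width: float = 1.0):
    """Decode mid-side samples to left/right channels.

    L = M + width * S,  R = M - width * S
    width = 0.0 keeps only the mid (mono, principal sound);
    width = 1.0 keeps the recorded balance; values above 1.0 widen the image.
    """
    if len(mid) != len(side):
        raise ValueError("mid and side must be the same length")
    left = [m + width * s for m, s in zip(mid, side)]
    right = [m - width * s for m, s in zip(mid, side)]
    return left, right

# Drop the ambience entirely: both channels carry only the mid signal.
left, right = ms_decode([0.5, -0.25], [0.1, 0.3], width=0.0)
```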

When recording moving stereo sound effects, be careful with a sound source that passes the mic in a straight line. As the source moves toward the center of the pickup, it can become disproportionately louder than in its left and right imaging, particularly if the mic array is close to the path of the sound source. Also, the closer the stereo mic-to-source distance, the faster the image passes the center point. Keep in mind that accurately monitoring stereo requires stereo headphones.
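The geometry behind both cautions can be sketched for a source moving at constant speed along a straight path past the mic. At closest approach the image sweeps across the center at an angular speed equal to the source speed divided by the closest distance, so halving the distance doubles the sweep rate; and a source 45 degrees off center is farther away, and therefore about 3 dB quieter, than the same source dead center. The sketch assumes free-field inverse-square spreading and ignores Doppler shift; the car speed and distances are illustrative.

```python
import math

def pass_by(t_s: float, speed_mps: float, closest_m: float) -> tuple[float, float]:
    """Azimuth (degrees off center) and level (dB re. closest approach) of a
    source moving in a straight line past the mic, t_s seconds after the
    moment of closest approach (negative t_s means before it)."""
    x = speed_mps * t_s                         # distance travelled along the path
    azimuth_deg = math.degrees(math.atan2(x, closest_m))
    distance_m = math.hypot(x, closest_m)
    level_db = -20.0 * math.log10(distance_m / closest_m)
    return azimuth_deg, level_db

def center_sweep_deg_per_s(speed_mps: float, closest_m: float) -> float:
    """Angular speed of the image at dead center: v / d radians per second."""
    return math.degrees(speed_mps / closest_m)

# A car at 20 m/s (about 45 mph) passing 5 m versus 20 m from the array.
for d in (5.0, 20.0):
    print(f"{d:>4} m: crosses center at {center_sweep_deg_per_s(20.0, d):.0f} deg/s")
```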

With any directional microphone, it is worth remembering that changing the mic-to-source angle only a few degrees will change the character of the sound being recorded. This can be advantageous or disadvantageous, depending on the effect you are trying to achieve.

Also important in field-recording sound effects are excellent microphone preamps and the right recording equipment. Digital recorders vary in robustness and in their ability to handle subtle sounds, percussive sounds, and wide dynamic range, particularly if they encode data-compressed audio.
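One yardstick for dynamic range is the theoretical ceiling set by bit depth: for linear PCM it is roughly 6.02 dB per bit plus 1.76 dB, the signal-to-noise ratio of a full-scale sine wave against quantization noise. The sketch below computes that ceiling; real recorders fall short of it because of preamp and converter noise, which is one reason they differ in how well they handle subtle sounds.

```python
def pcm_dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range of linear PCM: SNR of a full-scale sine
    against quantization noise, about 6.02 dB per bit plus 1.76 dB."""
    return 6.02 * bits + 1.76

for bits in (16, 24):
    print(f"{bits}-bit: about {pcm_dynamic_range_db(bits):.0f} dB in theory")
```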

A key to collecting successful live effects in the field is making sure that the recorded effect sounds like what it is supposed to be, assuming that is your intention. Placing a mic too close to a babbling brook could make it sound like boiling water or liquid bubbling in a beaker. Miking it from too far away, on the other hand, might destroy its liquidity. A flowing stream miked too closely could sound like a river; miking it from too far away could reduce its size and density. A recording of the inside of a submarine could sound too cavernous. Applause might sound like rain or something frying. A spraying fountain might sound like rain or applause. In fact, a spraying fountain often lacks the identifying liquid sound. To get the desired audio, it might be necessary to mix in a watery-type sound, such as a river or a flowing, rather than babbling, brook. A yellow jacket flying around a box could sound like a deeper, fuller-sounding bumblebee if miked too closely or like a mosquito if miked from too far away. A gunshot recorded outdoors in open surroundings can sound like a firecracker; some types of revolvers sound like popguns.

Keep four other things in mind when recording sounds in the field: be patient, be persistent, be inventive, and, if you really want authenticity, be ready to go anywhere and to almost any length to obtain an effect.

For the film Hindenburg, the sound designer spent six months researching, collecting, and producing the sounds of a dirigible, including sonic perspectives from a variety of locations, from inside the ship to the ground, where someone would hear a dirigible passing overhead.

In the film Glory, the sound designer wanted the effects of Civil War weaponry to be as close to authentic as possible, even to the extent of capturing the sound of real Civil War cannonballs and bullets whizzing through the air. Specially made iron cannonballs were shot from cannons forged for the film, and the flybys were recorded 250 yards and 0.25 mile (440 yards) downrange. These positions were far enough from the initial explosion that its sound would not contaminate the sound of the projectiles going by. The two distances provided a choice of whiz-by sounds and also the potential for mixing them, if necessary. Because the cannons could not be aimed very well at such distances, however, not one acceptable whiz-by was recorded during an entire day of taping. For the bullet whizzes, modern rifles would not have worked because their bullets travel too fast to produce an audible whiz-by through the air. Using real Civil War bullets, a recordist sat in a gully 400 yards downrange and taped the whiz-bys as the live rounds flew overhead. In the end the cannonball whiz-bys had to be created by playing the bullet whiz-bys in reverse and slowed down.
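Reversing a clip and slowing it down can be sketched as follows. This illustrates the general technique, not the Glory sound team's actual tools: the clip is reversed, then resampled tape-style, so it becomes longer and lower in pitch by the same factor. Linear interpolation is used for brevity; professional resamplers use higher-quality interpolation.

```python
def reverse_and_slow(samples: list[float], factor: float) -> list[float]:
    """Reverse a clip, then slow it down by `factor` (tape-style varispeed).

    factor = 2.0 doubles the duration and drops the pitch one octave,
    like playing a tape at half speed.
    """
    if not samples or factor <= 0:
        raise ValueError("need samples and a positive factor")
    rev = samples[::-1]
    n_out = int((len(rev) - 1) * factor) + 1
    out = []
    for i in range(n_out):
        pos = i / factor                      # read position in the reversed clip
        j = int(pos)
        frac = pos - j
        nxt = rev[j + 1] if j + 1 < len(rev) else rev[j]
        out.append(rev[j] * (1.0 - frac) + nxt * frac)  # linear interpolation
    return out
```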

Finally, when collecting sounds in the field, do not necessarily think literally about what it is you want to capture. Consider a sound’s potential to be built into another effect. For example, the sound of the giant boulder careening toward the frantically running Indiana Jones in Raiders of the Lost Ark was the processed audio of a car rolling down a gravel road, in neutral, with the engine off. The sound was pitch-shifted down and slowed with the lower frequencies boosted in level. In the “Well of Souls” scene, the sounds of seething and writhing in the snake pit were made from recordings of sponges and a cheese casserole. A toilet seat was used for the sound of the opening of the “Ark of the Covenant,” and marine mammals and synthesized sounds evoked the voices of the souls within. Other examples of transforming sound effects are cited throughout this chapter.

High-quality sound effects can be generated electronically with synthesizers and computers and by employing MIDI. This approach is also referred to as electronic Foley. Its advantages are cost-efficiency, convenience, and the unlimited number of sounds that can be created. Its main disadvantage is that electronically generated sound effects sometimes lack the “reality” that directors desire.

A synthesizer is an audio instrument that uses sound generators to create waveforms. Combining these waveforms in various ways can synthesize a vast array of sonic characteristics that are similar to, but not exactly like, existing sounds and musical instruments. The waveforms can also be combined to generate a completely original sound. Because the pitch, amplitude, timbre, and envelope of each tone generator can be controlled, it is possible to build synthesized sounds from scratch.
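The parameters named above map onto a few lines of code. The sketch below is a minimal additive synthesizer, not a model of any particular instrument: the fundamental sets the pitch, the relative amplitudes of the harmonics set the timbre, and a linear ADSR (attack, decay, sustain, release) envelope shapes amplitude over time. All parameter values are arbitrary, and the note is assumed to be at least as long as attack plus decay plus release.

```python
import math

def adsr_gain(t: float, dur: float, a: float, d: float, s: float, r: float) -> float:
    """Linear ADSR envelope value at time t for a note lasting dur seconds.
    The release occupies the final r seconds of the note."""
    if t < a:
        return t / a                                  # attack: 0 -> 1
    if t < a + d:
        return 1.0 - (1.0 - s) * (t - a) / d          # decay: 1 -> sustain
    if t < dur - r:
        return s                                      # sustain level
    return max(0.0, s * (dur - t) / r)                # release: sustain -> 0

def synth_tone(freq: float, dur: float, sample_rate: int = 48000,
               harmonics=(1.0, 0.5, 0.25),
               a=0.01, d=0.1, s=0.6, r=0.2) -> list[float]:
    """Additive tone: harmonic k+1 has amplitude harmonics[k]. Output stays in -1..1."""
    norm = sum(harmonics)
    out = []
    for i in range(int(dur * sample_rate)):
        t = i / sample_rate
        wave = sum(h * math.sin(2 * math.pi * freq * (k + 1) * t)
                   for k, h in enumerate(harmonics))
        out.append(adsr_gain(t, dur, a, d, s, r) * wave / norm)
    return out

tone = synth_tone(220.0, 0.5, sample_rate=8000)
```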

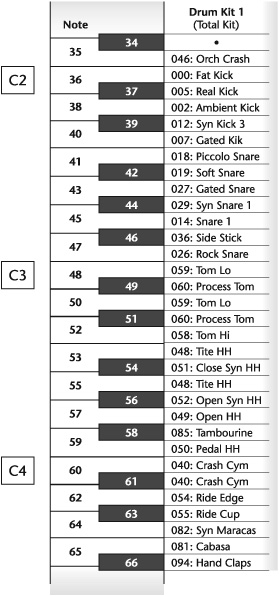

In addition to synthesized effects, preprogrammed sound from libraries designed for specific synthesizer brands and models is available. These collections may be grouped by type of sound effect—such as punches, screams, and thunder—or by instrument category, such as drum, string, brass, and keyboard sounds (see 15-4). These libraries come in various formats, such as hard disk, compact disc, plug-in memory cartridge, and computer files available as separate software or via downloading.

One complaint about synthesized sound has been its readily identifiable electronic quality. With considerably improved synthesizer designs and software, this has become much less of an issue. But take care that the synthesizer and the sound library you use produce professional-quality sound; many do not, particularly those available through downloading, because of the adverse effects of data compression.

The operational aspects of synthesizers and their programs are beyond the purview of this book. Even if they were not, it is extremely difficult to even begin to describe the sonic qualities of the infinite effects that synthesizers can generate. Suffice it to say that almost anything is possible. The same is true of computer-generated sound effects.

A principal difference between the synthesizer and the computer is that the synthesizer is specifically designed to create and produce sounds; the computer is not. The computer is completely software-dependent. With the appropriate software, the computer, like the synthesizer, can also store preprogrammed sounds, create them from scratch, or both. Most computer sound programs make it possible not only to call up a particular effect but also to see and manipulate its waveform and therefore its sonic characteristics (see Chapter 20).

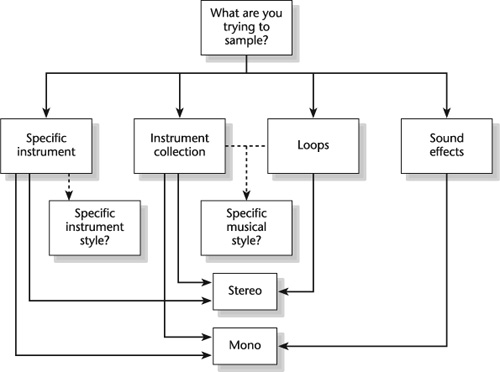

An important sound-shaping capability of many electronic keyboards and computer software programs is sampling, a process whereby digital audio data representing a sonic event, acoustic or electroacoustic, is stored on disk or in electronic memory. The acoustic sounds can be anything a microphone can record: a shout, splash, motor sputter, song lyric, or trombone solo. Electroacoustic sounds are anything electronically generated, such as the sounds a synthesizer produces. The samples can be of any length, from as brief as a drumbeat to as long as the available memory allows.
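How long a sample can be is simple arithmetic for uncompressed PCM: sampling rate times bytes per sample times channels times duration. The sketch below ignores file headers and metadata, and its defaults (24-bit, 48 kHz, stereo) are illustrative.

```python
def sample_bytes(seconds: float, sample_rate: int = 48_000,
                 bit_depth: int = 24, channels: int = 2) -> int:
    """Bytes needed to hold uncompressed PCM audio (headers not included)."""
    bytes_per_sample = bit_depth // 8
    return round(seconds * sample_rate * bytes_per_sample * channels)

def max_seconds(memory_bytes: int, sample_rate: int = 48_000,
                bit_depth: int = 24, channels: int = 2) -> float:
    """Longest uncompressed sample that fits in a given amount of memory."""
    return memory_bytes / (sample_rate * (bit_depth // 8) * channels)

# One minute of 24-bit/48 kHz stereo is 17,280,000 bytes (about 17.3 MB).
print(sample_bytes(60))
```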

A sampler, basically a specialized digital record and playback device, may be keyboard-based, using a musical keyboard controller to trigger and articulate sampled audio; or it may be a rack-mountable unit without a keyboard that is controlled by an external keyboard or a sequencer. A sequencer is an electronic device that stores messages, maintains the time relationships among them, and transmits them to connected devices when they are called for. The sampler may also be computer-based.
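The sequencer's job as defined above (store messages, keep their timing, send them when due) can be sketched with a priority queue. The message strings are hypothetical stand-ins; a real MIDI sequencer sends MIDI messages such as note-on and note-off to connected devices and runs against a clock rather than being polled by hand as it is here.

```python
import heapq
from dataclasses import dataclass, field

@dataclass(order=True)
class Event:
    time_s: float
    seq: int                                   # tie-breaker preserves insertion order
    message: str = field(compare=False)

class Sequencer:
    """Stores timestamped messages and releases them in time order."""

    def __init__(self) -> None:
        self._queue: list[Event] = []
        self._count = 0

    def add(self, time_s: float, message: str) -> None:
        heapq.heappush(self._queue, Event(time_s, self._count, message))
        self._count += 1

    def due(self, now_s: float) -> list[str]:
        """Remove and return every message whose time has arrived."""
        out = []
        while self._queue and self._queue[0].time_s <= now_s:
            out.append(heapq.heappop(self._queue).message)
        return out

s = Sequencer()
s.add(1.0, "trigger sample: thunder")
s.add(0.5, "trigger sample: door slam")
s.add(1.0, "trigger sample: rain loop")
```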

With most sampling systems, it is possible to produce an almost infinite variety of sound effects by shortening, lengthening, rearranging, looping, and signal-processing the stored samples. A trombone can be made to sound like a saxophone, an English horn, or a marching band of 76 trombones. One drumbeat can be processed into a thunderclap or built into a chorus of jungle drums. A single cricket chirp can be manipulated and repeated to orchestrate a tropical evening ambience. The crack of a baseball bat can be shaped into the rip that just precedes an explosion. The squeal of a pig can be transformed into a human scream. A metal trashcan being hit with a hammer can provide a formidable backbeat for a rhythm track.
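The cricket example can be sketched as repeated, randomly placed copies of a single sample. This is one simple approach under stated assumptions, not a standard algorithm: each copy gets a random start time and a random gain (quieter copies read as farther away), copies are mixed by summation, and the bed is scaled down if overlaps push it past full scale. A real designer would typically also vary pitch and filtering per copy.

```python
import random

def scatter(chirp: list[float], length: int, count: int, seed: int = 1) -> list[float]:
    """Mix `count` copies of one short sample into a bed `length` samples long,
    each at a random start time and random gain."""
    if len(chirp) > length:
        raise ValueError("bed must be at least as long as the sample")
    rng = random.Random(seed)                  # fixed seed: repeatable result
    bed = [0.0] * length
    for _ in range(count):
        start = rng.randrange(length - len(chirp) + 1)
        gain = rng.uniform(0.2, 1.0)           # quieter copies suggest distance
        for i, x in enumerate(chirp):
            bed[start + i] += gain * x
    peak = max((abs(v) for v in bed), default=0.0)
    if peak > 1.0:                             # overlapping copies can exceed full scale
        bed = [v / peak for v in bed]
    return bed

bed = scatter([0.5] * 10, length=1000, count=20)
```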

Here sound effects become additional instruments in the underscoring, which in turn becomes a form of electroacoustic musique concrète. Consider the music for the film Atonement. The central character is an author, and at the beginning of the story we are introduced to her as a child, writing on a manual typewriter. The clacking punches of the keys, the thud of the spacebar, and the zip of the carriage return create a leitmotiv that establishes her identity and brings us into her immediate soundscape, both literally (her bedroom) and figuratively (the internal space of the writer’s mind). Gradually, these sounds become part of the rhythmic and textural fabric of the underscoring. Another example of sound effects treated as musical material is found in Henry V. In the film, the pitched percussive clashes of swords in the epic battle scene were tuned to the minor key of Patrick Doyle’s score, so the sonic texture of the battle provides a tonal complement to the music.
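Tuning a pitched effect to a score amounts to finding the nearest note of the key and pitch-shifting by the corresponding ratio, 2 raised to the power (semitones / 12). The sketch below assumes the effect's perceived pitch has already been measured and uses E natural minor purely as a hypothetical key; the text does not say which minor key the Henry V score uses. Clashing metal is only partly pitched, so in practice the result would be judged by ear.

```python
import math

A4_HZ = 440.0
NATURAL_MINOR = (0, 2, 3, 5, 7, 8, 10)   # semitone steps above the tonic

def retune_ratio(freq_hz: float, tonic_midi: int, scale=NATURAL_MINOR) -> float:
    """Pitch-shift ratio that moves freq_hz to the nearest note of the scale."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    midi = 69 + 12 * math.log2(freq_hz / A4_HZ)    # fractional MIDI note number
    octave = math.floor(midi) // 12
    candidates = [12 * o + tonic_midi % 12 + step   # scale notes in nearby octaves
                  for o in range(octave - 1, octave + 2)
                  for step in scale]
    target = min(candidates, key=lambda n: abs(n - midi))
    return 2 ** ((target - midi) / 12)

# Hypothetical: a clash measured at 455 Hz, tuned to E minor (tonic E4 = MIDI 64).
ratio = retune_ratio(455.0, tonic_midi=64)
```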

In solid-state memory systems, such as ROM (read-only memory) and RAM (random-access memory), it is possible to instantly access all or part of the sample and reproduce it any number of times. The difference between ROM- and RAM-based samplers is that ROM-based samplers are factory-programmed and reproduce only; RAM-based samplers allow you to both reproduce and record sound samples. The number of samples you can load into a system is limited only by the capacity of the system’s storage memory.

After a sample is recorded or produced, it is saved to a storage medium, usually a hard disk, compact disc, memory card, or DVD. The stored sound data is referred to as a samplefile. Once stored, of course, a samplefile can be easily distributed. As a result, samplefiles of sounds are available to production facilities in the same way that sound-effect libraries are available. Other storage media are also used for samplefile distribution, such as CD-ROM, erasable and write-once optical discs, and Web sites.

Sample libraries abound, with samples of virtually any sound that is needed. Here is a short list of available samples from various libraries: drum and bass samples including loops, effects pads, and bass-synth sounds; Celtic instrument riffs; arpeggiated synth loops and beats; nontraditional beats and phrases from ethnic folk instruments; violin section phrases and runs; a cappella solo, duet, and choral performances from India, Africa, and the Middle East; otherworldly atmospheres; assortments of tortured, gurgling beats; reggae and hip-hop beats; electronic sound effects and ambiences; multisampled single notes on solo and section brasses, saxophones, and French horns; and acoustic bass performances in funky and straight-eighth styles at various beats per minute.

You should take two precautions before investing in a samplefile library. First, make sure that the samplefiles are in a format that is compatible with your sampler and with the standards and protocols of the post-production editing environment you plan to work in. Samplefile formats for computer-based systems have become increasingly standardized, with two common types being Waveform Audio File Format (WAV) and Audio Interchange File Format (AIFF). Second, make sure that the sound quality of the samples is up to professional standards; too many are not, particularly those obtained online. For most audio applications, mono or stereo sound files recorded at 16-bit depth and a 44.1 kHz sampling rate are standard; 24-bit at 48 kHz is preferred for film and video.
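Checking a library's format before committing to it can be partly automated. The sketch below uses Python's standard-library wave module, which reads uncompressed PCM WAV headers only; it does not read AIFF, and Python versions before 3.12 may reject WAV files that use the extensible header, so treat it as a first-pass check. The 16-bit/44.1 kHz thresholds follow the baseline given above.

```python
import wave

def check_wav(path: str, min_bits: int = 16, min_rate: int = 44100) -> list[str]:
    """Return a list of format problems with a WAV file (empty if none)."""
    with wave.open(path, "rb") as w:
        bits = w.getsampwidth() * 8
        rate = w.getframerate()
        channels = w.getnchannels()
    problems = []
    if bits < min_bits:
        problems.append(f"{bits}-bit depth is below {min_bits}-bit")
    if rate < min_rate:
        problems.append(f"{rate} Hz sampling rate is below {min_rate} Hz")
    if channels not in (1, 2):
        problems.append(f"{channels} channels: expected mono or stereo")
    return problems
```

Running it over every file in a library and printing any non-empty result gives a quick list of samples that need a closer listen or conversion.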

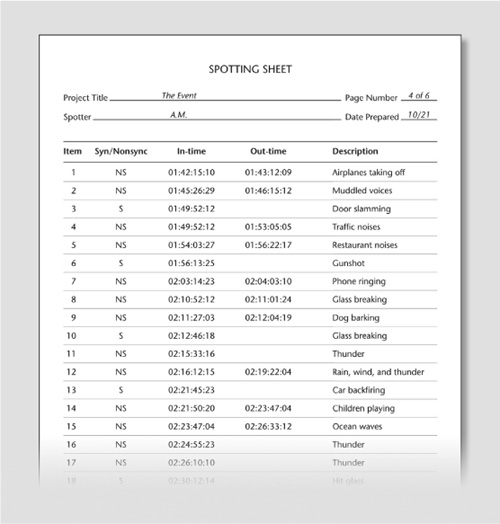

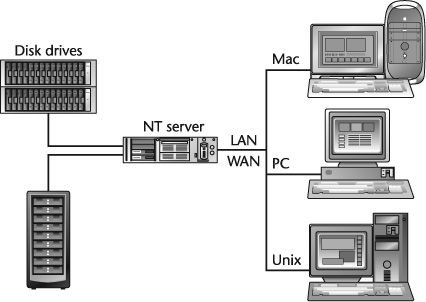

Before recording a sample, plan ahead (see 15-5). It saves time and energy and reduces frustration. When recording samples from whatever source—acoustic or electronic—it is vital that the sound and the reproduction be of good quality. Because the sample may be used many times and in a variety of situations, it is disconcerting (to say the least) to hear a bad sample again and again. Moreover, if the sample has to be rerecorded, it might be difficult to reconstruct the same sonic and performance conditions.

The same advice that applies to miking for digital recording (see Chapter 19) applies to miking for sampling. Only the highest-quality microphones should be used, which usually means capacitors with a wide, flat response; high sensitivity; and an excellent self-noise rating. If very loud sound recorded at close mic-to-source distances requires a moving-coil mic, it should be a large-diaphragm, top-quality model with as low a self-noise rating as possible.
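Spec sheets often quote a capacitor mic's signal-to-noise ratio as 94 dB SPL (a 1-pascal reference) minus its A-weighted self-noise. The sketch below computes that figure and a rough margin between a quiet source and the mic's noise floor; the self-noise and source levels are hypothetical, and comparing an unweighted source level with A-weighted self-noise is only an approximation.

```python
REF_SPL_DB = 94.0  # 1 pascal, the usual reference for mic SNR specs

def mic_snr_db(self_noise_dba: float) -> float:
    """Spec-sheet signal-to-noise ratio: 94 dB SPL minus self-noise."""
    return REF_SPL_DB - self_noise_dba

def noise_margin_db(source_spl_db: float, self_noise_dba: float) -> float:
    """Rough gap between a source's level at the mic and the mic's self-noise."""
    return source_spl_db - self_noise_dba

# Hypothetical comparison: a quiet 30 dB SPL source on two different mics.
for noise in (7.0, 20.0):
    print(f"self-noise {noise} dB-A: SNR {mic_snr_db(noise)} dB, "
          f"margin {noise_margin_db(30.0, noise)} dB")
```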