Chapter 7. IP Services Plane Security

In this chapter, you learn about the following:

• How services plane traffic differs from data, control, and management plane traffic in terms of packet processing and forwarding

• How services plane traffic can be protected by direct packet classification and policy enforcement mechanisms

• How additional services plane security techniques that use indirect mechanisms can be used to protect signaling and other protocol-specific service support components

Chapter 1, “Internet Protocol Operations Fundamentals,” reviewed the IP traffic planes and provided an introductory explanation of how these traffic planes were processed by various hardware and software architectures. As you learned, the services plane and data plane are both defined as carrying user traffic—that is, traffic that is sourced by and destined to end stations, servers, and other nonrouting infrastructure devices. What distinguishes services plane traffic from data plane traffic is the way in which routers and other network devices must handle these packets.

For example, data plane traffic typically receives very basic processing that mainly involves best-effort, destination-based forwarding. Services plane traffic, on the other hand, typically requires additional, specialized processing above and beyond basic forwarding. In addition, it often requires end-to-end handling across the network. Examples of services plane traffic include VPN tunneling (MPLS, IPsec, SSL, GRE, and so on), private-to-public interfacing (IPv6-to-IPv4, NAT, firewall, and IDS/IPS), QoS, voice and video services, and many others.

Services Plane Overview

The services plane refers to user traffic that requires specialized packet handling by network elements above and beyond the standard forwarding logic that is typically applied. That is, services plane traffic includes customer traffic that would normally be part of the data plane and that would normally appear as transit traffic to the routers without specialized handling in the normal forwarding path. However, because specialized services are applied, routers and other forwarding devices must treat these packets in a more complex manner. In some cases, packets must be punted to the slow path for CPU handling. In other cases, dedicated hardware may be required to handle services plane traffic. For example, IPsec and SSL VPNs require high-speed encryption and decryption services, which are often performed in dedicated hardware optimized for this purpose. This is just one example of how services plane traffic differs from data plane traffic. Others are covered in this chapter.

Many aspects of the services plane are heavily dependent upon unique factors such as hardware and software performance, service functions applied, and network architecture and topology. This limits the ability to provide a full range of specific security recommendations. As such, this chapter is organized in a manner that is somewhat different from Chapters 4, 5, and 6, in which you learned about specific mechanisms dedicated exclusively to the protection of their respective IP traffic planes. What you will find for the services plane is that although some mechanisms are designed to protect a specific service, these are rarely the only protections required. Instead, protection mechanisms used in securing the data plane and control plane must also be configured to provide protection for the services plane functions.

To illustrate how services plane traffic is handled in this regard, this chapter takes a detailed look at several example services plane applications and the special requirements that must be employed to secure these services. From these examples, you will see that several overarching and consistent themes are evident that lead to a set of general processes that you can use to assess and secure other services that you may find in your unique environment. In preview, these overarching themes for services plane traffic include the following:

• The IP services plane often requires specialized packet handling to implement the defined service. For example, the application of QoS markings and policing may require processing support that cannot be provided by hardware within the CEF fast path and results in forwarding performance impacts. (This is very platform dependent.) Whenever performance impacts occur, this should be an indication that protections must be deployed.

• The IP services plane often requires the use of service-specific control plane mechanisms to support the underlying service. For example, IPsec uses the IKE protocol suite for control plane support. Whenever control planes are created and maintained, this should be an indication that protections must be deployed.

• The IP services plane often involves the creation and management of state to establish and maintain the defined service. For example, firewalls create and manage state for TCP sessions passing through them. The creation and management of state always enables attack vectors that would not otherwise exist. Whenever state creation and management is required, this should be an indication that protections must be deployed.

IP services are deployed to provide specialized treatment of user packets in some way. When a service is deployed, it requires capital and operational expenses to roll out the service. Because of this, the service is built and deployed to support a defined capacity within well-defined service-level agreements (SLA). As this is the case, there are several key reasons why the IP services plane must be protected and several goals for selecting appropriate protection mechanisms. These include the following:

• Because each deployed service has finite resources to draw upon, you must ensure the integrity of the services plane such that only legitimate traffic is allowed to take advantage of a specific service type. Services plane traffic generates higher revenues than non-services traffic (as in the case of SPs) or costs more money to deploy (as in the case of enterprises). If unauthorized traffic can use these finite resources, either maliciously or unintentionally, then these resources may not be available for the intended legitimate traffic. This leads to lost revenues or the need to spend additional capital to increase capacity unnecessarily. Thus, the protection requirements here are to permit only authorized traffic to use the service and deny unauthorized traffic from using the service.

• Because services plane traffic often consumes more general-purpose shared resources (memory, CPU interrupt cycles, and so on) that are required to support all traffic planes, you must ensure both that normal data plane traffic does not consume resources to the point where the deployed services plane lacks sufficient resources to function properly, and that when multiple services are deployed, one service type does not impact any other service type. Sufficient network resources must be available during all operating conditions—normal loads, flash-crowd conditions, failover conditions, and so on—so that higher-priority services can receive proper handling, as required. In some cases, this may even be done at the expense of lower-priority traffic. Thus, the requirements here are to protect the network and router resources to support services, but also to prevent services or non-services traffic from jeopardizing the entire network infrastructure.

Note

It is important to distinguish between a secure service and securing a service. The former is something provided by MPLS or IPsec, for example, where aspects of separation, confidentiality (encryption), authentication, and data integrity are applied to specific traffic that belongs to the service. Securing a service, which is the subject of this chapter, is different: it comprises the steps taken to prevent unauthorized traffic from using a service (theft) and to prevent the service from being rendered unavailable (DoS) to legitimate traffic. IPsec and MPLS are both examples of secure services. However, both of these secure services must themselves be secured for the reasons mentioned in the preceding list.

Finally, it is worth noting that this chapter is not intended to be a primer on the deployment of various services. In most cases, services deployment strategies and options involve complexities that in and of themselves are often the subject of entire books. Instead, this chapter provides, through examples, an illustration of how services operate within the network environment and a method by which to secure them. To accomplish this, three services are used as illustrations: QoS, MPLS VPNs, and IPsec VPNs. Important techniques are identified here, and pointers to additional references are provided for completeness.

As outlined in Chapter 3, “IP Network Traffic Plane Security Concepts,” no single technology (or technique) makes an effective security solution. In addition, not every technology is appropriate in every case, as some may only increase complexity and may actually detract from overall network security. Developing a defense in depth and breadth strategy provides an effective approach for deploying complementary techniques to mitigate the risk of security attacks when appropriate security layers are considered. The optimal techniques will vary by organization and depend on network topology, product mix, traffic behavior, operational complexity, and service requirements. The examples in this chapter illustrate the points outlined in this section and give you the background necessary to evaluate these same or any other services plane applications deployed in your network.

Quality of Service

The term quality of service (QoS) covers a wide range of mechanisms that are applied at the network edge and sometimes within the core to provide differentiated and predictable packet experiences through the network for a variety of reasons. QoS is often described as providing priority processing and access through a congested IP core network. For most modern networks, the core is typically not where congestion occurs, but rather congestion events more commonly happen at the edge. Typical service provider (SP) core networks today are built on OC192 backbones (10 Gbps), and many are scaling to OC768 (40 Gbps) core designs. The edge is typically the more interesting place when considering QoS services.

Although QoS can be applied as a service in and of itself, it is not often deployed in this manner. QoS is most frequently combined with other service offerings such as VPNs (MPLS and IPsec) to prioritize the usage of limited resources (for example, network bandwidth or encryption capacity) and to minimize delay and jitter for voice, video, and other delay-sensitive traffic. For example, a corporation may prioritize voice traffic over other traffic types across its MPLS VPN to prevent lower-priority traffic from disrupting delay-sensitive VoIP traffic. In this case, SPs must deploy appropriate QoS mechanisms within the MPLS VPN network to give priority and provide bandwidth guarantees to voice traffic. In Chapter 4, “Data Plane Security,” and Chapter 5, “Control Plane Security,” you already learned about several other practical applications for QoS as data plane and control plane enforcement techniques.

To deploy QoS, you must be capable of identifying the traffic type(s) that you want prioritized, and be willing to sacrifice some lower-priority traffic in favor of the higher-priority traffic under congestion conditions. Currently, the scope of this QoS control is limited to a single administrative domain. An enterprise, for example, can control what happens within its network, but it cannot control, by itself, what happens to its traffic as it traverses external networks. In the case of an MPLS VPN, the SP network for all intents becomes an extension of the enterprise network. But because it is administratively part of the SP's domain, SLAs are often negotiated between enterprises and SPs to formally define the level of service that will be delivered.

Two distinct IP QoS models are defined by the IETF: Integrated Services and Differentiated Services. These two models augment the traditional IP best-effort service model. Integrated Services (IntServ), defined in RFC 1633, is a dynamic resource reservation model based upon RSVP (RFC 2205) signaling. Differentiated Services (DiffServ), defined in RFC 2475, removes the per-flow reservations associated with RSVP and instead uses a simplified (passive) signaling mechanism of classifying individual packets based on a well-defined packet classifier (for example, IP precedence). Only the DiffServ QoS model (using IP precedence) is discussed here.

Note

RSVP is essentially the control plane for the IntServ QoS model. RSVP-based signaling uses the ROUTER-ALERT IPv4 header option to signal end-to-end QoS requirements along a path. A detailed discussion of the RSVP-based QoS model is outside the scope of this book. However, issues and protection mechanisms related to packets with IPv4 header options have been discussed at length in previous chapters. In addition, routing protocol and other control plane protections previously described also apply to RSVP. Without protections, attacks on the RSVP signaling system could result in QoS routing malfunctions, interference with resource reservation, or even failure of QoS provisioning.

Regardless of the model implementation, without suitable protections, QoS is vulnerable to both theft of service and denial of service, which inhibits the delivery of both high- and low-priority services as well as network availability as described in Chapter 4.

This chapter is not intended to be a primer on QoS methods, deployments, and mechanisms, but rather is intended to briefly introduce the methods used to protect a QoS service. To accomplish this, a brief overview is provided that describes the important mechanisms used by the DiffServ QoS model to implement its many functions. In this way, it will become evident that design and implementation considerations must be made when deploying QoS services. Additional details on QoS implementations may be found in the Cisco Press book QoS for IP/MPLS Networks (see the “Further Reading” section), which covers QoS methods and deployment topics in thorough detail.

QoS Mechanisms

Cisco IOS uses an idealized QoS configuration model to provide consistent behavior across platforms. Even though the underlying hardware and software may differ, the end result is intended to be identical, regardless of which device is configured. Understanding exactly how QoS is implemented and how QoS policies are translated within the router will help you understand how to protect this service.

In Cisco IOS, QoS is implemented through the Modular Quality of Service CLI (MQC) command set. You first learned about MQC in Chapters 4 and 5, because it also is the basis for implementing several data and control plane security techniques. MQC itself is discussed in more detail later in this section to help you understand how to secure a QoS implementation. Prior to reviewing MQC, however, some of the basic principles of QoS must be described.

There are four main functional components required for a QoS implementation: classification, marking, policing, and queuing. Referring to Figure 7-1, which you were first introduced to in Chapter 3, you can see that the first three of these four QoS mechanisms apply to ingress and egress processing, but queuing applies only to the egress path. (Note that the Cisco 12000 family is one exception in that it implements ingress queuing in addition to egress queuing.) Functionally, each component performs its job based on seeing an individual packet, comparing it to a policy, and taking some action. Packet statistics (counters) are maintained, primarily so that rate values can be calculated, and this represents the only state involved in the QoS process. Recognizing where state is required and maintained always provides clues as to where protection is required for any service. The concept of state is most often associated with devices like stateful firewalls, which maintain significantly more state, such as per-flow inter-packet relationships for TCP sessions. In the case of QoS, packet counters are the only state maintained. But as you will see, because QoS uses these counters to compute the rates on which it bases its actions, protecting these counters from manipulation is one of the most important goals in protecting the QoS service.

Figure 7-1. Cisco IOS Feature Order of Operations

Within QoS, even though each of the four components provides its own functionality, there exists an order to their operation and some interdependency from one component to the next. Not every component is required to be deployed, but if they are not all deployed, certain trusts and assumptions must be made about the traffic. These trust relationships and interdependencies are the main focus for securing QoS services. Let’s review each of the four components.

Classification

Classification is the first step in the QoS process and involves identifying packets and comparing each against the configured DiffServ QoS policies to find matches. There is an implied assumption, of course, that some engineering effort has occurred to define policies. The process of classification affects every other step in the QoS process and thus is critical for ensuring correct QoS performance. Classification can be based on any number of items within each packet, as described in Chapter 4, such as source and destination IP address, protocol type and port, IP header precedence/DSCP setting, ingress interface, or even payload contents. The desired outcome of the packet classification process is to end up associating every packet with a particular class of service based on configured explicit or implicit policies that define the packet match criteria.

It is important to note that the classification process only accounts for packets. Within Cisco IOS, counters associated with a defined class of service are incremented as packets that match the traffic class are seen. Cisco IOS also maintains an internal header associated with every packet that is forwarded by the router. This header keeps track of applied features and helps accelerate processing, and it is adjusted to reflect the outcome of the classification process. (As noted in Figure 7-1, the classification process occurs at Step 5, whereas the application of the desired service does not occur until Step 14.)

From a services plane security perspective, you should recognize that all packets must be classified as belonging to some group. That is, no packet should be left unclassified. To facilitate this task, there is a simple mechanism within IOS MQC that allows everything that has not been classified to end up in a catch-all default class that has its own associated policy (that is, class-default).
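The catch-all behavior described above can be sketched as follows. This is a minimal illustration, not a recommended policy: the class name customer-voice, the policy name edge-ingress, and access-list 161 (assumed to describe the authorized voice sources) are hypothetical.

```text
! Hypothetical names; ACL 161 is assumed to exist and to
! describe the traffic that is allowed to keep a voice marking
class-map match-all customer-voice
 match access-group 161
!
policy-map edge-ingress
 class customer-voice
  ! precedence 5 = critical
  set ip precedence 5
 class class-default
  ! everything not matched above is still classified and
  ! receives an explicit action (precedence 0 = routine)
  set ip precedence 0
```

With an explicit class-default action, no packet leaves the policy without a defined treatment, which is the property this section asks for.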

Note that when QoS is combined with other services such as MPLS or IPsec VPNs, IOS provides a classification mechanism that allows traffic to be either pre- or post- classified with respect to encapsulation within or de-encapsulation from the tunnel. This enables several different versions of QoS transparency, as described in Chapter 4. These are defined within the RFC 3270 MPLS Diffserv Tunneling specification.
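For GRE and IPsec tunnels, one way this pre-classification is commonly enabled in IOS is the qos pre-classify command. The sketch below assumes a hypothetical tunnel interface (Tunnel0), a hypothetical physical egress interface (Serial0/0), and a hypothetical, previously defined policy-map named wan-egress. It does not cover the MPLS DiffServ tunneling modes.

```text
interface Tunnel0
 ! let the egress policy classify on the original (inner)
 ! IP header rather than on the tunnel header
 qos pre-classify
!
interface Serial0/0
 ! wan-egress is a hypothetical policy-map defined elsewhere
 service-policy output wan-egress
```

Without pre-classification, an egress policy on the physical interface sees only the outer tunnel header, so match criteria such as inner addresses or ports would not apply.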

Marking

Once classification has identified particular packets, the optional second step of marking can be taken for each packet. Although the classification process sets certain IOS internal flags and increments counters, the marking process (ingress Step 13 or egress Step 11 in Figure 7-1) actually modifies each packet's IP header in the specified manner. This optional process may be critical for the implementation of an end-to-end QoS policy, network wide. For example, the marking process can set the precedence or DSCP field within the IP header to a particular value. Packets might enter the router with one marking but exit with a different marking based upon the classification and marking policies. This process is often referred to as packet recoloring, as described in Chapter 4. Other marking options are possible, including manipulations of various Layer 2 and MPLS header fields.

From a services plane security perspective, marking can be critical for enforcing policies elsewhere in the network. Whereas the classification process sets the packet’s internal header (whose scope is effective only within the router), marking modifies the real packet header, which allows for actions to be taken downstream. As an example, an SP may mark (recolor) all packets ingressing its network from untrusted domains in one particular way, and mark its own internal (trusted) traffic in a different way. This gives the SP an additional mechanism to use when securing its control and management plane traffic. To prevent leakage, however, 100 percent coverage must be guaranteed, as described in Chapter 4.

Note

One interesting side note in this example is that because classification and marking of untrusted traffic is done based on ingress interface, there is no concern for spoofed internal IP addresses. Packets arriving via an external interface cannot possibly have originated from inside the network. Positively marking the packet reinforces its origin to other devices within the network and, hence, helps to mitigate spoofing attacks.
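A minimal sketch of interface-based marking follows, assuming an external interface pos1/1 and hypothetical class and policy names (all-ingress, mark-untrusted). Because the class matches every packet, the marking depends only on where the packet arrived, not on any address it carries.

```text
class-map match-all all-ingress
 ! no ACL: the source address plays no part in the decision
 match any
!
policy-map mark-untrusted
 class all-ingress
  ! precedence 0 = routine
  set ip precedence 0
!
interface pos1/1
 service-policy input mark-untrusted
```

The value chosen for untrusted traffic is a local design decision; 0 is used here only as an example.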

Policing

Traffic policing is the third step in the QoS process, and is configured to restrict the rate of traffic as dictated by a particular policy. Policing (ingress Step 14 or egress Step 12 in Figure 7-1) is an optional process and is dependent on classification, but it is unrelated to marking unless the policer is explicitly configured to re-mark traffic, as described below. Policing provides the functional mechanisms to enforce the rate thresholds per class, and drop (or re-mark) traffic when it exceeds the thresholds. It is most useful when applied at the network edge to enforce some agreed traffic rate from or to a network user. As you learned in Chapter 4, policing can also augment interface ACLs by rate limiting traffic up to a configured maximum rate. From a services plane security perspective, policing is applied to traffic matching some classification policy. This is why it is critical to classify all packets accurately. For further information, refer to Chapter 4.
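The two policer outcomes mentioned above, dropping and re-marking, can be sketched as follows. The class and policy names are hypothetical, and the rates and burst size are placeholders chosen for illustration rather than recommendations.

```text
class-map match-all customer-voice
 match ip precedence 5
!
policy-map police-customer
 class customer-voice
  ! within the agreed rate: forward unchanged
  ! above the agreed rate: re-mark to precedence 0 and forward
  police cir 512000 bc 16000 conform-action transmit exceed-action set-prec-transmit 0
 class class-default
  ! above the agreed rate: drop
  police cir 1024000 conform-action transmit exceed-action drop
```

Re-marking rather than dropping keeps excess traffic flowing when capacity allows, while removing its claim to priority treatment downstream.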

Queuing

Queuing is an optional egress-only function (Step 18 in Figure 7-1) that provides bandwidth management during periods of egress link congestion. Note that some IP router platforms, such as the Cisco 12000 series, also support ingress queuing. In some cases, the Cisco 12000 uses this ingress queuing, as triggered by a backpressure mechanism signaled through the backplane, to manage egress link congestion. In other cases, the Cisco 12000 uses input queuing to manage internal switch fabric or router backplane congestion. Whether ingress or egress queuing is configured, queuing is not a factor when congestion is not occurring. Queuing can be implemented either to support congestion avoidance, as in the case where Weighted Random Early Detection (WRED) is deployed, or to support congestion management, as in the case where Low-Latency Queuing (LLQ), Class-Based Weighted Fair Queuing (CBWFQ), or Modified Deficit Round Robin (MDRR) is deployed.
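As a rough illustration of how congestion management and congestion avoidance can be combined within one class, the following sketch gives a hypothetical bulk-data class a CBWFQ bandwidth share and enables WRED on it. The names and the percentage are assumptions, and platform support varies.

```text
class-map match-all bulk-data
 match ip precedence 1
!
policy-map wan-egress
 class bulk-data
  ! congestion management: CBWFQ bandwidth share
  bandwidth percent 20
  ! congestion avoidance: WRED with default thresholds
  random-detect
```

Neither command has any effect while the egress link is uncongested, consistent with the behavior described above.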

MQC

As mentioned earlier in this section, Cisco IOS implements QoS via the MQC mechanisms. MQC uses three types of constructs to implement QoS:

• class-map: MQC uses the class-map construct as the method within which classification descriptors are defined for a traffic class. Class maps implement the classification function described earlier in this section. The class-map construct includes one or more match statements to define the traffic descriptor rules for the class. These match commands allow a wide range of criteria for packet classification, including many Layer 2, 3, 4, and, in some cases, certain Layer 7 attributes. Typically, multiple class-map statements are defined, each representing a distinct traffic class and each containing one or more match statements describing the match criteria for the associated traffic class. When multiple match statements are included, these can be considered as logical AND or logical OR operations using the match-all or match-any keywords, respectively. Class map names are case sensitive. Note that Cisco IOS predefines one class map, class-default (lowercase), as a catch-all class, which simplifies the task of classifying all packets that do not match other class maps defined within a policy map.

• policy-map: MQC uses the policy-map construct to tie together one or more class maps into an overall QoS policy. The policy-map defines the specific QoS actions to be applied for each traffic class. Hence, within the policy-map construct, previously defined class maps are referenced, and then corresponding MQC actions are specified per class-map. QoS actions may include but are not limited to marking using MQC set, policing using MQC police, and queuing using MQC bandwidth commands. Policy maps are processed top-down, and a packet may match one and only one traffic class. Once a packet is classified into a defined traffic class, all subsequent classes are ignored. Only the MQC actions associated with the matched traffic class are applied. Packets that do not satisfy any match criteria for any referenced classes become part of the implicit class-default class. As with class-map names, policy-map names are also case sensitive.

• service-policy: MQC uses the service-policy construct to associate a policy-map with an interface and to specify the direction of applicability. The input or output keyword is used to specify the direction in which the defined actions are taken.

Service policies can be attached to physical interfaces and logical interfaces, such as VLANs and tunnels, and to control plane (receive) interfaces (see the description of CoPP in Chapter 5).

The separation of the classification definitions, policy definitions, and service policy deployment provides flexibility during the creation phase and simplifies the overall QoS configuration process because it allows you to specify a traffic class independently of QoS policy actions. Class maps are created to identify specific traffic types and may be used in one or more policy maps. Each policy map may be applied to one or more interfaces concurrently. Policy statistics and counters are maintained on a per-interface basis.
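The difference between the match-any and match-all keywords described for the class-map construct can be sketched as follows. The class names and access-list 162 (assumed to describe a partner's address range) are hypothetical.

```text
! Logical OR: a packet with precedence 4 or precedence 5 matches
class-map match-any voice-or-video
 match ip precedence 4
 match ip precedence 5
!
! Logical AND: a packet must be permitted by ACL 162 and also
! carry precedence 5 to match
class-map match-all partner-voice
 match access-group 162
 match ip precedence 5
```

Either class map can then be referenced by name from any number of policy maps, which is the reuse that the separation of constructs makes possible.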

When creating a QoS policy using MQC, the typical construction chronology is as follows:

1. Create classification ACLs (if needed) for use in the class-map statements as traffic descriptors.

2. Create traffic classes using class-map and match statements, referencing the previously created ACLs as needed.

3. Create policy-map statements to combine the previously defined class-map statements with appropriate QoS actions.

4. Apply each policy-map statement to the appropriate interface(s) using the service-policy statement.

The following examples will help illustrate the use of MQC for QoS.

Packet Recoloring Example

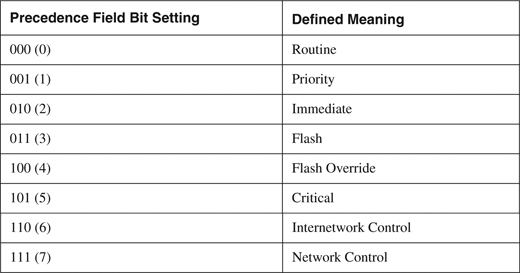

As previously mentioned, recoloring is the term used to describe the process of changing the precedence setting in the IP header of packets as they ingress your network. The IP header Precedence field (see Appendix B, Figure B-1) is used to indicate the level of importance for the packet. The defined values and their meanings are listed in Table 7-1.

Table 7-1. IP Header Precedence Field Settings

As an example, most routing protocols set their own traffic with a precedence value of 6 (Internetwork Control). Cisco IOS uses this precedence value for some internal functions as well, such as Selective Packet Discard (SPD), to prioritize these packets within the IOS process-level queues that feed the route processor, as described in Chapter 5. Many QoS deployments take advantage of precedence marking as well. Some Internet sites have been known to purposely set their traffic with IP header Precedence values of 5, 6, or 7 in hopes that their content is provided higher-priority service. Attackers have also been known to set the precedence value in attack packets in hopes of giving their attack higher priority. The general guidance then is to reset (recolor) the IP header Precedence field to a value of 0 for all packets that ingress an external interface, or to whatever value is appropriate for your network and service. Example 7-1 accomplishes this using MQC match access-group constructs: it defines an ACL that describes the IP header Precedence values (Step 1), configures a class-map to match on this ACL (Step 2), configures a policy-map to recolor packets matching this class-map (Step 3), and then applies this policy to the desired interface in the ingress (input) direction using a service-policy (Step 4).

Example 7-1. MQC-Based Recoloring Implementation

! Step 1 - Create ACLs to match IP header Precedence (color)
access-list 160 permit ip any any precedence priority
access-list 160 permit ip any any precedence immediate
access-list 160 permit ip any any precedence flash
access-list 160 permit ip any any precedence flash-override
access-list 160 permit ip any any precedence critical
access-list 160 permit ip any any precedence internet
access-list 160 permit ip any any precedence network
!
! Step 2 - Create a class-map to match ACLs in Step 1
class-map match-color
 match access-group 160
!
! Step 3 - Create a policy-map to apply policy (recolor to routine)
policy-map re-color
 class match-color
  set ip precedence routine
!
! Step 4 - Apply service-policy to interface
interface pos1/1
 encapsulation ppp
 ip address 209.165.200.225 255.255.255.224
 service-policy input re-color
!

Notice in Example 7-1 that access-list 160 only matches on IP header Precedence values of 1 through 7, but no explicit test is done for packets with a precedence value of 0. Because tests look for 1 through 7, which represents all other possible values, it is not necessary to test explicitly for 0 to ensure that all packets are being classified. Zero is the default value, and most packets should be set to this value. Of course, it is possible to define another ACL entry to match on 0 (routine), but strictly speaking it is not required. One reason to do so would be to provide statistics via ACL counters, as described next.
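If you do want a counter for routine traffic, one additional ACL entry would suffice. A minimal sketch, assuming it is appended to access-list 160 from Example 7-1:

```text
! Optional: count packets that already carry precedence 0
access-list 160 permit ip any any precedence routine
```

With this entry in place, the match-color class matches every IP packet. The recoloring result is unchanged, because packets already marked routine are simply set to routine again, but the ACL now reports a match count for all eight precedence values.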

Tracking how packets are initially marked can be accomplished most easily by using the show access-list command to display the counters for ACL lines matching different precedence levels of incoming packets. Example 7-2 illustrates this concept.

Example 7-2. Monitoring Recoloring Access List Counters

router-a# show access-list 160
Extended IP access list 160
    permit ip any any precedence priority (5637629 matches)
    permit ip any any precedence immediate (3916144 matches)
    permit ip any any precedence flash (1967437 matches)
    permit ip any any precedence flash-override (4034766 matches)
    permit ip any any precedence critical (2306059 matches)
    permit ip any any precedence internet (8024235 matches)
    permit ip any any precedence network (919538 matches)

Monitoring these values over time may give you some indication of impending attacks or even misconfigurations within the network. EEM or custom scripts, as described in Chapter 6, “Management Plane Security,” can be used to provide this type of information.
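For a quick manual comparison between two points in time, the counters can be reset and then sampled again later. The following is only a sketch of that workflow for access-list 160; the filter expression is one possible choice, and no output is shown because it depends entirely on the traffic seen.

```text
router-a# clear access-list counters 160
router-a# show access-list 160 | include critical|internet|network
```

Restricting the display to the three highest precedence values keeps attention on the markings most likely to be abused by untrusted sources.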

Traffic Management Example

The following example illustrates the use of QoS and MQC in a traffic management role. In Example 7-3, traffic egressing the PE heading toward the CE is prioritized by IP precedence. This of course assumes that IP precedence values are properly set and can be trusted to reflect the nature of the traffic. In this case, several class-map statements are configured to match on IP precedence directly (no ACL is required), and a policy-map is used to allocate bandwidth via LLQ. LLQ allocates the assigned bandwidth to the priority queue, if configured, using the priority percent keyword. The remaining bandwidth is then allocated to each of the other configured traffic classes belonging to the policy map by using the bandwidth percent keyword. In this case, traffic matching precedence 5 is associated with the priority queue and given 35 percent of the bandwidth, perhaps to accommodate real-time voice traffic. Traffic matching precedence 4 is given 25 percent of the bandwidth, and traffic matching precedence 3 is given 15 percent.

Example 7-3. QoS-Based Traffic Management Implementation

! Step 1 - Create class-map statements to classify traffic based on IP precedence

class-map match-any precedence3

match ip precedence 3

class-map match-any precedence4

match ip precedence 4

class-map match-any precedence5

match ip precedence 5

!

! Step 2 - Create policy-map to allocate bandwidth by class from Step 1

policy-map TrafficMgmt

class precedence5

priority percent 35

class precedence4

bandwidth percent 25

class precedence3

bandwidth percent 15

!

! Step 3 - Apply service-policy to interface

interface Serial1/0/0/2:0

description Circuit-123, Customer ABC-10

bandwidth 1536

ip vrf forwarding ABC

ip address 10.0.1.13 255.255.255.252

no ip directed-broadcast

no ip proxy-arp

no fair-queue

no cdp enable

service-policy output TrafficMgmt

!

Note

In Example 7-3, explicit class-map configurations are only used to match IP precedence 3, 4, and 5 because these classes have explicit bandwidth assignments. The remainder of the traffic (IP precedence values 0, 1, 2, 6, and 7) is handled within the class-default traffic class, which is implicitly defined and controlled. Additional details on this behavior are covered in the Cisco Press book QoS for IP/MPLS Networks (listed in the “Further Reading” section).
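
The class-default behavior can also be made explicit. The following is a minimal sketch, not part of Example 7-3, which assumes that flow-based fair queueing is the desired treatment for unclassified traffic and that the platform supports the fair-queue command within class-default (support varies by platform and IOS release).

```
! Sketch only: extends policy-map TrafficMgmt from Example 7-3
policy-map TrafficMgmt
 class class-default
  ! Assumption: fair queueing is wanted for precedence 0, 1, 2, 6, and 7 traffic
  fair-queue
```

Stating the class explicitly does not change which packets fall into it. It only documents, in the configuration itself, how the remaining traffic is intended to be handled.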

The show policy-map command is the primary tool for verifying the operation and configuration of QoS policies within MQC. When used with the interface keyword, the output displays counters and rates for the configured actions on each class-map within the policy-map, as well as the always-defined class-default policy. The clear counters command resets all interface counters, including MQC counters, which is useful when comparative measurements are required for troubleshooting or traffic analysis. Example 7-4 illustrates the output of the show policy-map interface command for the policy defined in Example 7-3. There are no debug commands, however, because the MQC mechanisms are applied in the CEF fast path and the performance impact of debugging would be too great.

Example 7-4. Sample Output from the show policy-map Command

router-a# show policy-map interface Serial 0/0

Serial0/0

Service-policy output: TrafficMgmt

Class-map: precedence5 (match-any)

0 packets, 0 bytes

5 minute offered rate 0 bps, drop rate 0 bps

Match: ip precedence 5

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

Strict Priority

Output Queue: Conversation 264

Bandwidth 35 (%)

Bandwidth 540 (kbps) Burst 13500 (Bytes)

(pkts matched/bytes matched) 0/0

(total drops/bytes drops) 0/0

Class-map: precedence4 (match-any)

0 packets, 0 bytes

5 minute offered rate 0 bps, drop rate 0 bps

Match: ip precedence 4

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

Output Queue: Conversation 265

Bandwidth 25 (%)

Bandwidth 386 (kbps) Max Threshold 64 (packets)

(pkts matched/bytes matched) 0/0

(depth/total drops/no-buffer drops) 0/0/0

Class-map: precedence3 (match-any)

0 packets, 0 bytes

5 minute offered rate 0 bps, drop rate 0 bps

Match: ip precedence 3

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

Output Queue: Conversation 266

Bandwidth 15 (%)

Bandwidth 231 (kbps) Max Threshold 64 (packets)

(pkts matched/bytes matched) 0/0

(depth/total drops/no-buffer drops) 0/0/0

Class-map: class-default (match-any)

3 packets, 72 bytes

5 minute offered rate 0 bps, drop rate 0 bps

Match: any

router-a#

Now that the basic mechanisms of MQC and QoS have been described, it is possible to discuss the main aspects of QoS services plane security.

Securing QoS Services

To deploy QoS and, implicitly, to secure the QoS service, you must take several considerations into account:

• You must expend the engineering effort to adequately define the traffic classes that make up your differentiated services architecture. Ensure that all packets entering or exiting the system can be classified, and that appropriate QoS policies can be applied to each class of traffic. No traffic should be unclassified and uncontrolled. This requires a complete understanding of the network topology, and the traffic flows within this topology.

• You must be able to accurately identify all points in the network where traffic classification can be accomplished. All traffic crossing defined points in the network (for example, the ingress link, egress link, and tunnel interface) must be classified and in many cases marked so that QoS mechanisms can be applied to the traffic either at that point or elsewhere within the network. QoS mechanisms (rates and percentages) assume that all traffic is accounted for. Thus, all traffic should be properly classified and marked because exceptions can disrupt these QoS mechanisms. This high-value service should be protected from theft or abuse by purposeful (malicious) mismarking. Therefore, you must deploy positive classification and marking schemes across all traffic types and boundaries to account for all traffic. From an IP traffic plane security perspective, where the QoS components are deployed is important. In theory, any network element can provide QoS services, assuming the platform is capable of implementing the appropriate mechanisms and performing the required actions. However, as highlighted in prior chapters, there are specific points in the network where implementing certain services makes more sense. In the case of DiffServ QoS, this is primarily (but not exclusively) at the edge of the network, and often in both the ingress and egress directions. External interfaces offer the most logical implementation point for ingress classification and marking. As described in Chapter 3, using the network edge as a reference point allows certain assumptions to be made about ingress packets that cannot be made elsewhere in the network. Recoloring at the edge enables you to perform other QoS and security functions deeper within the network by signaling QoS classification information that indicates the origin of the packets.

• You must be able to apply policies (actions) on all traffic to accomplish the desired goals of the QoS service without impacting overall network operations. If this is a new service, you must ensure that the hardware is capable of adequately supporting the deployment of the service without undue stress. Older platforms may be incapable of deploying certain QoS features at line rate, or may experience a significant increase in CPU utilization, for example. When this is the case, it opens a potential vulnerability by exposing other traffic planes, most notably the control plane, to stress and potential instability. The deployment of QoS services cannot jeopardize the operations of the network. This can be assured through the use of appropriately scaled hardware and by allowing only applicable traffic to use the higher-priority QoS services. For the Cisco 12000, for example, legacy Engine 0–based line cards not only have limited MQC and QoS support (minimal match support, no marking support, and limited congestion management support), but they also suffer a significant performance degradation of approximately 50 percent when QoS is enabled. Modern Cisco 12000 line cards, such as those based on Engine 3 and Engine 5 (ISE) technologies, are designed as edge services cards and therefore support ingress and egress QoS and MQC (and other services) at line rate. Most CPU-based IOS devices, on the other hand, experience some performance degradation when QoS is enabled, although all MQC functions should be supported. For example, Cisco ISR routers may experience a 10 percent increase in CPU utilization with QoS functions enabled. Designers must also budget for QoS performance impacts and alternate solutions if routers are already stressed (high CPU). When deploying QoS, you should always consult the hardware release notes for your specific platforms to ensure that you understand the implications that enabling these features may have on system performance. In addition, it is always useful to perform laboratory tests under conditions simulating your production environment (including attack conditions) if feasible.

• You should apply defense in depth and breadth principles such as transit ACLs and uRPF to prevent unauthorized traffic from impacting the QoS service. DoS attacks are always more difficult to deal with when they target features that require special processing or that add extra processor burdens.
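
The ingress recoloring recommended in the preceding list can be sketched with MQC as follows. This is a minimal illustration, not a complete edge policy. The policy-map name EdgeRecolor is hypothetical, the interface is borrowed from Example 7-3, and the sketch assumes that no precedence markings received from this customer are trusted and that the line card supports MQC marking.

```
! Sketch only: the policy-map name is hypothetical
policy-map EdgeRecolor
 class class-default
  ! Assumption: all customer markings are untrusted, so reset them to 0
  set ip precedence 0
!
interface Serial1/0/0/2:0
 ! Recolor on ingress, before any downstream QoS decision is made
 service-policy input EdgeRecolor
```

Where some customer markings are trusted, additional classes would be defined ahead of class-default to preserve or remap those values, so that every packet still matches exactly one class and no traffic is left unclassified.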

The preceding list represents recommendations based on generalized QoS and MQC deployments. Obviously, it is not possible to cover every scenario and situation in this chapter. Many other recommendations that are specific to topology and QoS service deployment should be considered based on your particular environment. The intention of these discussions and guidelines is that you be able to recognize, within your specific deployment scheme, where potential vulnerabilities exist and how QoS must be protected. For more information on available QoS techniques to mitigate attacks within the IP data plane, refer to Chapter 4.

MPLS VPN Services

Multiprotocol Label Switching (MPLS) Virtual Private Networks (VPN) provide traffic isolation and differentiation to create virtual networks across a shared IP network infrastructure. MPLS-based Layer 3 VPNs combine Multiprotocol BGP (M-BGP) using extended community attributes and VPN address families, LDP (RFC 3036) or RSVP-TE (RFC 3209) for label distribution, and router support for Virtual Routing and Forwarding (VRF) instances to create these virtual IP networks. These operate based on the Internet Engineering Task Force (IETF) RFC 4364 specification (which obsoletes RFC 2547).

Threats to MPLS VPNs were discussed extensively in Chapter 2, “Threat Models for IP Networks.” The purpose of this section is to review techniques available to protect MPLS VPN services from the threats described in Chapter 2. This section is not intended to provide detailed MPLS VPN design and implementation guidelines. A short overview of some of the components used in creating MPLS VPNs and some of the more common deployment aspects are covered in review, however. Some level of understanding of MPLS VPN architectures and their operational concepts is assumed. For additional information on deploying and securing MPLS VPNs, refer to the Cisco Press book entitled MPLS VPN Security (listed in the “Further Reading” section), which provides details on their architecture, deployment models, and security.

MPLS VPN Overview

As described in previous chapters, MPLS VPNs provide a site-to-site IP VPN service and are rapidly replacing legacy Frame Relay and ATM networks. SPs offer MPLS VPN services across a shared IP infrastructure. The SP IP network is not only shared among MPLS VPN customers but may also be shared by SP customers of other services, including, for example, Internet transit, IPv6 VPNs (otherwise known as 6VPE), and Layer 2 VPNs (or pseudowires). Although the SP IP network is shared, addressing and routing separation, as well as privacy, are assured between customer VPNs, and between VPNs and the SP global IP routing table. This is inherently achieved through the use of the following mechanisms, as defined by RFC 4364 and described in Chapter 2:

• VPN-IPv4 addressing, to ensure unique addressing and routing separation between VPNs

• VRFs, to associate VPNs to physical (or logical) interfaces on provider edge (PE) routers

• Multiprotocol BGP (M-BGP), to exchange VPN routing information between PE routers

RFC 4364 also categorizes the different roles of IP routers within the MPLS VPN architecture, including customer edge (CE), provider edge (PE), provider core (P), and autonomous system boundary routers (ASBR), also described in Chapter 2 and illustrated in Figure 2-15. Unlike an Internet service, an MPLS VPN service is considered trusted; hence, often few or no security measures are applied. The following sections review techniques available to protect each of these different MPLS VPN router types (or categories) from the threats outlined in Chapter 2.

Customer Edge Security

Given the IP addressing and routing separation provided by MPLS VPNs, the CE router is reachable only from within its assigned VPN. Therefore, by default, the CE router is only susceptible to attacks sourced from inside the VPN. Only if the VPN has Internet or extranet connectivity configured (excluding the secure Management VPN per Chapter 6) is it susceptible to external attacks, as was described in Chapter 2. Keep in mind that the CE router is an IP router and is not enabled for any MPLS functionality (with the exception of the Carrier Supporting Carrier [CsC] model, which is described in the “Inter-Provider Edge Security” section later in the chapter). Hence, to mitigate the risk of attacks against the CE router, the data, control, and management plane security techniques described in Chapters 4 through 6 may be applied, including:

• Data plane security

— Interface ACLs

— Unicast RPF

— Flexible Packet Matching (FPM)

— QoS

— IP Options handling techniques

— ICMP data plane techniques

— Disabling IP directed broadcasts

— IP transport and application layer techniques

• Control plane security

— Disabling unnecessary services

— ICMP control plane techniques

— Selective Packet Discard

— IP Receive ACLs (rACLs)

— Control Plane Policing (CoPP)

— Neighbor authentication (MD-5)

— Protocol specific ACL filters

— BGP security techniques (GTSM, prefix filtering, etc.)

• Management plane security

— SNMP techniques

— Disabling unused management plane services

— Disabling idle user sessions

— System banners

— AutoSecure

— SSH

— AAA/TACACS+/RADIUS

— Syslog

— NTP

— NetFlow

— Management VPN (specifically designed for managed MPLS VPN CE routers)

The preceding techniques would be deployed in the same manner as was described in Chapters 4 through 6; hence, they will not be repeated here.

Note

Note, however, that the CE router is deployed within a private IP (MPLS) VPN rather than being reachable from the wider Internet. Therefore, you may consider deploying only those security techniques that mitigate the risk of significant threats. Spoofing attacks, for example, may not be considered a significant threat within MPLS VPNs. The optimal techniques that provide an effective security solution will vary by organization and depend on network topology, product mix, traffic behavior, operational complexity, and organizational mission.

Provider Edge Security

As described in Chapter 2, PE routers associate physical (or logical) interfaces to customer VPNs using VRFs. VRFs are statically assigned to interfaces and cannot be modified without PE router reconfiguration. Using a static VRF configuration provides complete separation between VPNs, and between VPNs and the SP global IP routing table. VPN customer packets cannot travel outside of the assigned VPN unless the SP VPN policies specifically allow for it. Conversely, external packets cannot be injected inside the VPN unless specifically allowed by policy. That is, only a misconfiguration or software vulnerability would allow unauthorized packets to leak into or out of a customer VPN.

Although the PE provides routing and addressing separation between VPNs, it is also IP reachable within each configured VPN. This makes it susceptible to internal IP attacks sourced from within a VPN. Internal attacks sourced from within a private VPN may be considered low risk. However, given that a PE router aggregates many customers and VPNs, an attack against the PE from within one VPN may adversely affect other VPN customers because the PE router shares its resources, including CPU, memory, and (uplink) interface bandwidth, among the different customer VPNs. Hence, although an MPLS VPN assures routing and addressing separation between VPNs and between VPNs and the global IP routing table, collateral damage remains a valid threat.

The PE router appears as a native IP router to VPN customers (excluding CsC customers, as described in the “Inter-Provider Edge Security” section later in the chapter). A single VPN customer site generally has IP reachability to all of the PEs configured for the associated customer VPN. Hence, to mitigate the risk of VPN customer attacks against PE routers, many of the data, control, and management plane security techniques described in Chapters 4 through 6 may be applied. Note that all of these techniques are generally supported for MPLS VPNs and VRF interfaces; however, specific platform restrictions may apply. Further, these techniques would generally be deployed in the same manner as was described in Chapters 4 through 6, so their application will not be repeated here. However, some additional considerations for MPLS VPN PE routers are described next, including resource management per VPN to limit the risk of collateral damage.

Infrastructure ACL

VPN customers and CE routers require minimal, if any, protocol access to the PE routers. Most MPLS VPN deployments only require dynamic routing (for example, eBGP or EIGRP) between the PE and the directly connected CE. Infrastructure ACLs are specifically designed to prevent IP packets from reaching destination addresses that make up the SP core network, including the PE external interface addresses themselves. Thus, iACLs may be applied on the PE router, inbound on each CE-facing interface, to filter all traffic destined to the PE except routing protocol traffic from the directly connected CE router. Note that if the iACLs filter traffic from the directly connected CE router only and not from CE routers associated with other sites within the VPN, additional protection steps may be required. To protect PEs from remote attacks sourced from other sites within the VPN, you could simply not carry the IP prefixes associated with the PE-CE links within the VRF routing table. Or, if CE reachability is required in support of VoIP gateway, firewall, or IPsec services, for example, you may use any one of the three techniques outlined in the “Edge Router External Link Protection” section of Chapter 4. If static routing is used on the PE-CE link, the infrastructure ACL should simply deny all traffic destined to the PE external interfaces. IP rACLs and/or CoPP should also be applied as a second layer of defense in the event that the infrastructure ACL and external link protection policies are bypassed. IP rACLs and CoPP in the context of MPLS VPNs are discussed next.
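
A minimal sketch of such an infrastructure ACL follows, assuming eBGP is the PE-CE routing protocol. The ACL name is hypothetical. The PE address 10.0.1.13 and the interface are taken from Example 7-3, and the CE address 10.0.1.14 is an assumption (the other host address on that /30 link).

```
! Sketch only: ACL name and CE address 10.0.1.14 are assumptions
ip access-list extended iACL-ABC-IN
 ! Permit eBGP in both directions between the directly connected CE and the PE
 permit tcp host 10.0.1.14 host 10.0.1.13 eq bgp
 permit tcp host 10.0.1.14 eq bgp host 10.0.1.13
 ! Deny all other traffic destined to the PE external interface address
 deny ip any host 10.0.1.13
 ! Permit transit VPN traffic
 permit ip any any
!
interface Serial1/0/0/2:0
 ip access-group iACL-ABC-IN in
```

This sketch protects only the local PE-CE link address. Addresses of other PE-CE links within the same VPN would need matching deny entries, or one of the other protection approaches described in the preceding paragraph.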

IP Receive ACL

As described in Chapter 5, IP rACL policies apply to all CEF receive adjacency traffic. However, IP rACLs are not VRF-aware, and thus policies applied on PE routers are unable to distinguish between receive adjacency traffic that is associated with each customer VRF or the global table when filtering solely based upon IP source addresses. Given that the PE supports a distinct VRF table for each customer VPN, and that each customer VPN, as well as the PE itself, may use overlapping IP addressing, this leaves open the possibility for ambiguities within IP rACL policies and potentially allows unauthorized traffic to incorrectly be permitted by the IP rACL. For example, if the IP rACL policy permits all BGP traffic from the 209.165.200.224/27 subnet, then traffic sourced from any 209.165.200.224/27 address within any VPN configured on the PE, or any traffic sourced from a 209.165.200.224/27 address within the global table, will be permitted by the IP rACL. This situation can be resolved by also configuring infrastructure ACLs as needed, to fully rationalize each traffic source. It should be noted that IP rACL filtering based upon IP destination address information is not exposed to the same issues because any permitted destination address must be that of a CEF receive adjacency.
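
The ambiguity can be seen in a fragment such as the following sketch. The ACL number 101 is an assumption, only the BGP entries are shown, and the ip receive access-list command is available only on platforms that support IP rACLs.

```
! Sketch only: ACL number is an assumption; other rACL entries are omitted
! These entries match 209.165.200.224/27 sources in ANY VRF and in the global table
access-list 101 permit tcp 209.165.200.224 0.0.0.31 any eq bgp
access-list 101 permit tcp 209.165.200.224 0.0.0.31 eq bgp any
!
ip receive access-list 101
```

Because nothing in these entries identifies the ingress VRF, a host within any customer VPN that uses an address in this range would match the same permit statements as the intended peers.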

Control Plane Policing

CoPP policies that use IP source address information will suffer from the same issues just described for IP rACLs. That is, CoPP is not VRF-aware at this time, and thus does not consider the ingress VRF. This is conceivably less of an issue for CoPP than for IP rACLs in that CoPP typically is provisioned to rate limit traffic types to infrastructure destination IP addresses.

VRF Prefix Limits

Although BGP neighbor prefix limits may be applied as described in Chapter 4 per BGP peer, you may also configure a maximum prefix limit for each VRF table defined within the PE routers. This allows you to limit the maximum number of routes in a VRF table to prevent a PE router from importing too many routes, and to monitor the PE routing table resources used per VPN/VRF. The VPN prefix limit is protocol independent as well as independent of the number of CE peers or sites within a VPN. By default, IOS does not limit the maximum number of prefixes per VRF table. To specify a limit, use the maximum routes <limit> {warn-threshold | warn-only} command in IOS VRF configuration mode. Routes are rejected when the maximum number set by the limit argument is reached. A percentage of this maximum number of permitted routes can also be defined by specifying the warn-threshold argument. When configured, IOS generates a Syslog warning message every time a route is added to a VRF when the VRF route count is above the warning threshold. IOS also generates a route rejection Syslog notification when the maximum threshold limit is reached and every time a route is rejected after the limit is reached. To generate a warning message only instead of imposing a hard VRF prefix limit, use the warn-only keyword within the maximum routes command.
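
As a brief sketch of the two forms of the command, the following fragment uses the VRF name ABC from Example 7-3. The limit of 1000 routes and the 80 percent warning threshold are illustrative assumptions, not recommended values.

```
! Sketch only: limit and threshold values are assumptions
ip vrf ABC
 ! Hard limit of 1000 routes; warnings begin once the table exceeds 80 percent (800 routes)
 maximum routes 1000 80
 !
 ! Alternative (use instead of the line above): warn at 1000 routes, never reject
 ! maximum routes 1000 warn-only
```

The current route count for the VRF can then be compared against the configured limit using the show ip route vrf ABC summary command.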

IP Fragmentation and Reassembly

As described in Chapter 2, MPLS VPN PE routers impose an 8-byte MPLS shim for all unicast traffic received from connected CE routers and destined to remote VPN sites across the MPLS core. The addition of the 8-byte MPLS shim may result in IP fragmentation of customer VPN traffic. If IP fragmentation occurs, a flood of VPN traffic may adversely affect the ingress PE router because this traffic must be handled in the slow path. For unicast VPN traffic, any IP packets fragmented by the PE will be reassembled by the destination host specified within the customer VPN packets. Hence, only the ingress PE router is affected. Conversely, for multicast VPN (MVPN) traffic (which is encapsulated within a 24-byte GRE point-to-multipoint tunnel header and not an MPLS header, per IETF draft-rosen-vpn-mcast-08.txt), the egress PE may be required to reassemble the fragmented MVPN (GRE) packets because the GRE tunnel endpoint (or destination address) is the egress PE itself.

As outlined in Chapter 2, IP routers have a limited number of IP fragment reassembly buffers. Further, fragment reassembly is handled at the IOS process level. If PE routers are required to fragment VPN traffic and/or reassemble fragmented MVPN traffic, they are potentially susceptible to DoS attacks crafted with large packets. Given the different tunnel header encapsulations used for unicast and MVPN traffic (in other words, 8 versus 24 bytes), avoiding unicast fragmentation does not necessarily mitigate the risk associated with MVPN fragmentation and reassembly. Multicast fragmentation must be considered if MVPN services are offered. Additionally, it is also possible for large packets to be used for an ICMP attack. In this scenario, the attacker simply sets the Don’t Fragment (DF) bit of the oversized packets. If the PE router cannot fragment the packet due to the DF bit being set, it sends an ICMP Destination Unreachable message (Type 3, Code 4, Fragmentation Needed and DF Set) back to the source. An excessive volume of these crafted packets can trigger a DoS condition on the router.

The only technique available to mitigate the risks associated with IP fragmentation and reassembly is to engineer the network to simply avoid it. This can be achieved only by ensuring that the MPLS core network from ingress PE to egress PE supports an MTU that is greater than that of all IP access and aggregation networks (in other words, PE-CE links) and large enough to accommodate the additional MPLS and/or GRE encapsulations imposed. In this way, any VPN packets received at the edge are guaranteed not to be fragmented when transiting the core. Further, the MTU setting should be universal across the network edge. Otherwise, fragmentation may occur depending upon the entry or exit points at the network edge.

When fragmentation cannot be eliminated through network design, every effort must be made to mitigate the impacts of any fragmentation and reassembly that may still occur. There are a number of strategies for resolving fragmentation within the context of MPLS VPNs, and the best approach depends on your particular environment and on how much work you are willing to do to prevent fragmentation. There is no panacea, however, and some engineering effort must be expended to determine the best approach.

To avoid fragmentation (and possibly reassembly) on the PEs, the MPLS core network must support an MTU greater than that of the PE-CE links. The best-case scenario, then, is to set the egress interface MTU value of every CE router to a suitable value that guarantees there will be no fragmentation within the MPLS core. For example, assume that the interface MTU is 1500 bytes everywhere within the MPLS core network. The CE egress interface MTU must be reduced, then, by an amount equal to or greater than the maximum combination of tunnel headers imposed across the MPLS core. This includes either the 8-byte label stack imposed for unicast VPN traffic or the 24-byte GRE header imposed for MVPN traffic. If other encapsulations are also used within the MPLS core, their overhead must be accommodated as well. For example, MPLS TE tunnels between MPLS core P routers can also influence the maximum packet size to avoid fragmentation. In the preceding example, because all interfaces have an MTU of 1500 bytes, all unicast traffic greater than 1492 bytes would be fragmented by the ingress PE. Similarly, all MVPN traffic greater than 1476 bytes would be fragmented at the ingress PE and then require reassembly at the egress PE.

The main approaches to modifying the interface MTU of the CE links include the following:

• Modify the Interface Layer 2 MTU: By making modifications to the CE egress interface Layer 2 MTU, fragmentation may be avoided on the PE. The interface command mtu <value> is used to set the maximum transmission unit (MTU), that is, the maximum packet size for outbound packets at Layer 2. Thus, any Layer 3 protocols will be subjected to this value (for example, IP). The IOS default MTU setting depends on the interface medium. Table 7-2 lists IOS default MTU values according to media type.

Table 7-2. Cisco IOS Default MTU Values

• Modify the Interface Layer 3 (IP) MTU: To modify the CE egress interface Layer 3 MTU value, use the ip mtu <value> interface configuration command. Note that the Layer 3 interface MTU is protocol-specific. Namely, this Layer 3 interface MTU command applies only to IP packets, whereas the Layer 2 interface MTU command applies to any upper-layer protocols that are transmitted on the interface. With the proper interface or IP MTU setting on the CE, the CE will then perform the IP fragmentation when necessary, and not the ingress PE.
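
The Layer 3 approach can be sketched as follows for the 1500-byte core described earlier. The interface name is hypothetical, and the value assumes that both unicast (8-byte) and MVPN (24-byte) encapsulations are in use, so the larger overhead determines the setting: 1500 minus 24 equals 1476 bytes.

```
! Sketch only: applied on the CE; interface name is hypothetical
interface Serial0/0
 description Uplink to PE
 ! Assumption: 1500-byte core MTU and a 24-byte worst-case tunnel header
 ip mtu 1476
```

If only unicast VPN services are carried, the same reasoning gives an IP MTU of 1492 bytes (1500 minus the 8-byte label stack).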

When the CE router is not managed by the SP, the SP cannot rely on each of its customers to set the MTU accordingly on the CE router. Hence, instead of reducing the MTU on the CE router, ideally the SP should increase the MTU within the core of its network to accommodate the maximum PE-CE MTU size plus sufficient overhead for any possible MPLS label stack. Given wide deployment of POS interfaces within MPLS VPN core networks as well as Gigabit Ethernet interfaces enabled for jumbo frames, MTUs of 4470 bytes or 9000 bytes, respectively, are commonly supported. This allows SPs to eliminate the likelihood of fragmentation and reassembly within their MPLS core, assuming the MTU of the PE-CE link is 1500 bytes.
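
The core-side alternative might look like the following sketch. The interface names are hypothetical, and the maximum configurable MTU depends on the platform, line card, and IOS release, so these values should be verified against the relevant hardware documentation before use.

```
! Sketch only: interface names are hypothetical; supported MTU values vary by platform
interface GigabitEthernet2/0
 description Core-facing link (PE to P)
 mtu 9000
!
interface POS3/0
 description Core-facing link (PE to P)
 mtu 4470
```

Every link along each PE-to-PE path needs a consistent setting, because a single core link left at 1500 bytes reintroduces the fragmentation risk for traffic that crosses it.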

Note

Changing default MTU settings may cause the router to recarve system packet buffers to accommodate the new MTU applied. This may disrupt packet-forwarding operations during the period of time it takes to complete the buffer recarve operations.

Provider Core Security

Excluding the PE router, the SP infrastructure is inherently hidden from MPLS VPN customers, given VPN routing separation. Consequently, it is not possible for a VPN customer to launch direct attacks against core (P) routers due to the absence of IP reachability. Further, MPLS core (P) routers do not carry VPN customer prefixes; hence, the IP rACL, CoPP, and VRF prefix limit issues outlined for PE routers do not apply to core (P) routers. Nevertheless, core (P) routers remain susceptible to transit attacks, as described in Chapter 2. Hence, to mitigate the risk of attacks from VPN customers against the core (P) routers, the following techniques may be applied.

Disable IP TTL to MPLS TTL Propagation at the Network Edge

By default, when IP packets are MPLS encapsulated, the IP TTL is copied down into the TTL fields of the imposed MPLS labels. Not only does this allow VPN customer packets to expire within the MPLS core network, but it also provides VPN customers with visibility of the core network using IP traceroute. Both of these conditions represent potential security risks. RFC 3032 and IETF draft-ietf-mpls-icmp-07 specify the interaction between MPLS and ICMP, and allow for ICMP messages generated by the core (P) routers to be sent to a source host within a customer VPN as required, including ICMP Time Exceeded (Type 11) messages. To mitigate the risk of VPN customer packets expiring on core (P) routers, the no mpls ip propagate-ttl forwarded command must be applied on all PE and ASBR routers within IOS global configuration mode. This command disables the propagation of the IP TTL into the MPLS label stack. Instead, the MPLS TTL values are set to 255 (the maximum available value per RFC 3032), preventing VPN customer packets from expiring within the MPLS core network (unless of course a routing loop exists, in which case TTL expiration is desired). Note that disabling IP TTL to MPLS TTL propagation in this way does not break VPN customer IP traceroute. It simply prevents the core (P) routers from being reported when the VPN customer performs IP traceroute. The VPN customer will see only the CE routers and ingress PE router, and not the core (P) routers. In this way, the MPLS core network remains hidden and appears as a single hop. The egress PE router is optionally reported depending upon the MPLS tunneling model applied per RFC 3443. As stated, the no mpls ip propagate-ttl forwarded command must be applied on all edge routers (PEs and ASBRs), because this is where the MPLS encapsulation of VPN customer packets takes place. Further, disabling IP TTL to MPLS TTL propagation does not affect the SP’s ability to use IP traceroute across the internal infrastructure either. For more information on TTL processing in MPLS networks, refer to RFC 3443.
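
For reference, the command described above is entered as follows. The hostname in the prompt is illustrative only.

```
! Apply in global configuration mode on every PE and ASBR
router-a(config)# no mpls ip propagate-ttl forwarded
```

The forwarded keyword restricts the change to packets that are forwarded through the router, such as VPN customer traffic. Packets originated locally by the router continue to propagate the IP TTL, which is why the SP's own traceroute across the core still reports each hop.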

IP Fragmentation

As described in the previous section for PE routers, without proper MTU support across the MPLS core, P routers are also susceptible to fragmentation and/or ICMP attacks resulting from large packets. Excessive fragmentation may trigger a DoS condition in core (P) routers. This can be mitigated with a proper Layer 2 or Layer 3 interface MTU setting at the network edge, as described for PE security techniques in the “Provider Edge Security” section, or by ensuring that the MTU within the core of the network is sufficiently large to accommodate the maximum PE-CE MTU size plus sufficient overhead for any possible MPLS label stack.

Router Alert Label

As described in Chapters 2 and 4, VPN customer traffic both with and without IP header options is always MPLS-encapsulated at the ingress PE and forwarded downstream across the MPLS core. There are exceptions, however. VPN packets with IP Source Route options will be MPLS label switched only if the IP addresses specified in the Source Route option are valid addresses within the associated VRF. If not, these packets will be discarded. Once packets are MPLS-encapsulated, however, core (P) routers forward them based upon the MPLS label stack and do not consider the IP header options of VPN customer packets (because the IP header is beneath the labels and not seen by core (P) routers). RFC 3032 defines an MPLS Router Alert Label, which is analogous to the IP header Router Alert option. When applied, MPLS packets tagged with the Router Alert Label will be punted to the IOS process level for packet handling. At the time of this writing, there is no industry or IETF standard for IP header option processing in MPLS networks to specify when the MPLS Router Alert Label should (or should not) be imposed. Consequently, each MPLS VPN PE router platform may potentially behave differently in this regard. MPLS PE router platforms that impose the Router Alert Label (at the top of the label stack) may make downstream core (P) routers susceptible to security attacks, given that such packets will be handled at the IOS process level. A sustained attack that sends crafted IP packets having the IP header Router Alert option, for example, to an MPLS VPN PE that imposes the MPLS Router Alert Label may trigger a DoS condition within the MPLS core. At the time of this writing, Cisco IOS MPLS VPN PE routers do not impose the MPLS Router Alert Label for VPN customer packets. But, again, because there is no industry standard, non-IOS MPLS VPN PE routers may behave differently.
If your MPLS VPN PE router imposes the Router Alert Label for VPN packets that have an IP header Router Alert option, you should consider filtering these packets at the PE to mitigate the risk they present to the core. Techniques to filter IP options packets on IOS routers are described in Chapter 4.
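To make the Router Alert Label concrete, the sketch below decodes a 32-bit MPLS label stack entry using the field layout from RFC 3032 (20-bit label, 3-bit EXP, 1-bit bottom-of-stack flag, 8-bit TTL) and checks for the reserved Router Alert label value of 1. It is a minimal illustration only; the helper names are hypothetical and do not correspond to any router implementation.

```python
ROUTER_ALERT_LABEL = 1  # reserved label value defined in RFC 3032


def build_label_stack_entry(label, exp, bottom_of_stack, ttl):
    """Pack the four fields into one 32-bit label stack entry."""
    return ((label & 0xFFFFF) << 12) | ((exp & 0x7) << 9) \
        | (int(bool(bottom_of_stack)) << 8) | (ttl & 0xFF)


def parse_label_stack_entry(word):
    """Split a 32-bit label stack entry into its RFC 3032 fields."""
    return {
        "label": (word >> 12) & 0xFFFFF,             # 20-bit label value
        "exp": (word >> 9) & 0x7,                    # 3-bit EXP field
        "bottom_of_stack": bool((word >> 8) & 0x1),  # S bit
        "ttl": word & 0xFF,                          # 8-bit TTL
    }


def is_router_alert(word):
    """True if the entry carries the reserved Router Alert label."""
    return parse_label_stack_entry(word)["label"] == ROUTER_ALERT_LABEL
```

A packet whose top label stack entry satisfies `is_router_alert` is the kind of packet that the text above describes as being punted for process-level handling.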

Network SLAs

Similar to IP TTL handling described at the beginning of this list, when IP packets are MPLS encapsulated, the IP precedence value, by default, is copied down into the EXP fields of the imposed MPLS labels. Hence, without proper QoS policies at the PE, VPN customers may craft their low-priority traffic as high-priority in an attempt to either steal high-priority MPLS core bandwidth from other high-priority services or to launch attacks against high-priority traffic classes, including control plane protocols. Both of these scenarios assume that a DiffServ QoS architecture is implemented within the MPLS core. To mitigate this risk, packet recoloring and policing should be applied uniformly across the network edge, as described in the “Securing QoS Services” section above, and earlier in Chapter 4.
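The default precedence-to-EXP copy, and the effect of recoloring at the edge, can be modeled as follows. This Python sketch is an illustration under simplifying assumptions: the `recolor_to` parameter is a hypothetical stand-in for an edge QoS policy that overrides the customer marking, and policing is not modeled.

```python
def ip_precedence(tos_byte):
    """IP precedence is the three most significant bits of the ToS byte."""
    return (tos_byte >> 5) & 0x7


def imposed_exp(tos_byte, recolor_to=None):
    """EXP value written into the imposed labels at the ingress PE.

    By default the IP precedence is copied. When recolor_to is given,
    it models an edge policy that overrides the customer's marking.
    """
    if recolor_to is not None:
        return recolor_to & 0x7
    return ip_precedence(tos_byte)
```

With no edge policy, a customer packet marked with precedence 6 enters the core with EXP 6; with `recolor_to=0`, the same packet is carried as best effort regardless of how the customer marked it.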

The preceding techniques outline specific steps you may take to protect core P routers in the context of MPLS VPN–based attacks. Only a PE router misconfiguration or software vulnerability would provide IP reachability between a VPN customer and the MPLS core (P) routers. Further, if the SP network also provides other services such as Internet transit, the core P routers may be susceptible to other threats, as described in Chapter 2. Hence, you should also consider deploying the applicable data, control, and management plane security techniques described in Chapters 4 through 6 to mitigate the risks associated with these other threats. Note that IOS also supports MD5 authentication for MPLS LDP, which is the most widely deployed label distribution protocol for MPLS VPN services. Chapter 9, “Service Provider Network Case Study,” illustrates the combination of techniques and defense in depth and breadth principles that SPs should consider to protect their infrastructure and services, including MPLS VPNs.

Inter-Provider Edge Security

As described in Chapter 2, there are two primary components of the inter-provider MPLS VPN architecture: Carrier Supporting Carrier (CsC) and Inter-AS VPNs. CsC is a hierarchical VPN model that enables downstream service providers (DSP), or customer carriers, to interconnect geographically diverse IP or MPLS networks over an MPLS VPN backbone. This eliminates the need for customer carriers to build and maintain their own private IP or MPLS backbone.

Inter-AS is a peer-to-peer model that enables customer VPNs to be extended through multiple SP or multi-domain networks. Using Inter-AS VPN techniques, SPs peer with one another and offer end-to-end VPN connectivity over extended geographical locations for those VPN customers who may be out of reach for a single SP. Both CsC and Inter-AS VPNs maintain segmentation between distinct customer VPNs.

Carrier Supporting Carrier Security

From a security perspective, the CsC-PE router is subject to the same threats as the native MPLS VPN PE router. Similarly, the CsC-CE router is subject to the same threats as the native MPLS VPN CE router. Further, because the customer carrier is itself an SP, the CsC-CE is also a core (P) router from the perspective of DSP customers. The potential threats against the CsC-CE as a customer carrier core router depend upon whether the DSP offers Internet transit or MPLS VPN services, or both. Each of these types of threats was detailed in Chapter 2.

The primary difference from a security perspective between native MPLS VPNs and the CsC model is that data plane packets exchanged between the CsC-CE and CsC-PE routers are MPLS encapsulated. This makes some IP data plane security techniques such as IP ACLs ineffective (as described in the list below). Again, however, this only applies to MPLS labeled data plane packets, not native IP packets. Despite the use of MPLS labeled data plane packets, the CsC-CE router is reachable only from within the associated customer carrier VPN and is not susceptible to external attacks through the CsC provider. This is strictly enforced at the CsC-PE through an automatic MPLS label spoofing avoidance mechanism that prevents the CsC-CE from using spoofed MPLS labels to transmit unauthorized packets into another customer VPN. MPLS packets with spoofed labels are automatically discarded upon ingress of the CsC-PE. This is possible because, within IOS, the labels distributed from the CsC-PE to the CsC-CE using either LDP or RFC 3107 (BGP plus labels) are VRF-aware. Hence, CsC provides addressing and routing separation between VPNs equivalent to native MPLS VPNs. Therefore, the security techniques outlined above in the “Customer Edge Security,” “Provider Edge Security,” and “Provider Core Security” sections also apply to CsC services. Additionally, the following security considerations also apply to CsC services:

• Interface IP ACLs: Interface ACLs are IP-based and hence do not apply to MPLS labeled packets. Although IP ACLs may be ineffective against MPLS labeled data plane packets, unlabeled control plane traffic between the CsC-CE and CsC-PE may be filtered using infrastructure IP ACLs.

• CoPP: As with the IP ACLs outlined directly above, labeled data plane exception traffic, such as MPLS packets with the Router Alert Label, is always classified into the class-default traffic class of a CoPP policy. This is because (at the time of this writing) MPLS packets are considered Layer 2 and will not match any IP ACL MQC match criteria configured within the CoPP policy.

• IP TTL propagation: The no mpls ip propagate-ttl forwarded command (outlined earlier in the “Provider Core Security” section), which was used to protect the MPLS core (P) routers from TTL expiry attacks, does not apply to CsC-PE routers (or PE interfaces enabled for CsC). This command only applies to ingress IP packets being encapsulated into MPLS. It does not apply to ingress MPLS packets being MPLS label switched because no IP TTL to MPLS TTL propagation operation is performed. Given that the CsC-PE (or PE interface enabled for CsC) receives MPLS labeled packets from the CsC-CE, this command does not apply to CsC services. Hence, in the CsC model, unless this command is applied upstream in the CsC customer’s network, it is possible for CsC customer packets to TTL-expire within the MPLS core of the SP providing the CsC service (in other words, between the ingress and egress CsC-PEs).

• Label distribution: Label distribution between the CsC-CE and CsC-PE may be done using either MPLS LDP or BGP (RFC 3107). Using only BGP, the control plane between the CsC-CE and CsC-PE routers operates similarly to native MPLS VPNs and the different BGP security techniques reviewed in Chapter 5 may be applied. Conversely, MPLS LDP supports MD5 authentication as well as inbound and outbound filtering of label advertisements. Each of these MPLS LDP security techniques was also described in Chapter 5.
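The label spoofing avoidance check described for the CsC-PE can be pictured with a small conceptual model. The Python sketch below is not a description of IOS internals, which are not documented here. It only assumes, as the text states, that labels advertised to a CsC-CE are VRF-aware, so the CsC-PE can discard any labeled packet whose top label was never advertised for the VRF of the receiving interface. All class, method, and VRF names are hypothetical.

```python
class CscPe:
    """Toy CsC-PE that tracks which labels it advertised per VRF."""

    def __init__(self):
        self._labels_by_vrf = {}  # VRF name -> set of advertised labels

    def advertise(self, vrf, label):
        """Record that a label was distributed to the CsC-CE in this VRF."""
        self._labels_by_vrf.setdefault(vrf, set()).add(label)

    def accept(self, ingress_vrf, top_label):
        """Accept a labeled packet only if its top label belongs to the
        VRF of the interface it arrived on; otherwise it is discarded."""
        return top_label in self._labels_by_vrf.get(ingress_vrf, set())


pe = CscPe()
pe.advertise("carrier-a", 100)
pe.advertise("carrier-b", 200)
```

In this model, `pe.accept("carrier-a", 100)` succeeds, while a packet arriving from carrier A with label 200 (a label that belongs to carrier B) is rejected, which mirrors the separation between customer carrier VPNs that the text describes.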

Inter-AS VPN Security

Inter-AS VPNs are intended to expand the reach of customer VPNs through multiple SP networks. This is meant to overcome issues where the primary SP footprint may not match the required footprint of the VPN customer, most notably in multinational deployments. RFC 4364 Section 10 outlines three techniques to achieve this, which are widely known within the industry as options (a), (b), and (c). Each has trade-offs in terms of scalability, security, and service awareness. Chapter 2 presented the security threats associated with each option under the condition that the interconnect between each distinct MPLS VPN network is under the control of different SPs (that is, is untrusted). The security of each Inter-AS VPN option is briefly described here:

• Option (a): Within option (a), the ASBR router of each SP network effectively operates as a PE router. Further, each ASBR sees its peer ASBR as a CE router. Hence, all of the security techniques previously outlined for native MPLS VPN PE routers apply equally to ASBR routers configured for Inter-AS VPN option (a). As such, this is the only Inter-AS VPN interconnect model that provides for resource management on a per-VPN basis. That is, option (a) maintains separate data plane and control plane instances per VPN (for example, VRF prefix limits, eBGP peering, IP interface per VRF, and so on), unlike options (b) and (c). For this reason, option (a) is the only IOS Inter-AS VPN interconnect model known at the time of this writing to be deployed in production between two (2) distinct SPs. This technique is illustrated in Figure 2-17.

• Option (b): Within option (b), the ASBR routers use a single Multiprotocol eBGP session to exchange all Inter-AS VPN customer prefixes over a single native IP (non-VRF) interface between SPs. Although this improves ASBR scaling since only a single interconnect and eBGP session are required, it prevents ASBR resource management and security policies on a per-VPN basis. That is, all of the per-VPN techniques such as VRF prefix limits, eBGP peering, IP interface per VRF, and so forth, cannot be applied because option (b) carries all Inter-AS VPNs using a shared interconnect and eBGP peering session for each SP peer. In fact, an option (b) ASBR does not need to be configured with any VRF instances. Because there is no VPN isolation, option (b) Inter-AS VPNs share a common fate whereby one Inter-AS VPN may adversely impact connectivity of another, given the shared data and control plane within the ASBR. Also, because no VRF interface configurations are applied on the ASBR, MPLS label spoofing avoidance checks similar to CsC cannot be enforced on the interconnect, which may allow unauthorized access to a customer VPN. Further, the data plane security techniques described in Chapter 4 do not apply to MPLS label switched packets. Hence, Inter-AS VPN option (b) is susceptible to a variety of security risks that cannot be properly mitigated. Because of these weaknesses, option (b) is not known at the time of this writing to be deployed in production between two (2) distinct SPs for Inter-AS VPN connectivity using IOS. Conversely, for multi-domain (or multi-AS) SP networks, option (b) may be considered since the different domains are managed by the same single SP. (This technique is illustrated in Figure 2-18.)

• Option (c): Within option (c), the ASBR routers exchange only PE /32 loopback addresses and associated label information using either MPLS LDP or BGP + Labels (RFC 3107). VPN customer prefixes are then exchanged between route reflectors (RRs) within each SP network (AS) using multihop Multiprotocol eBGP. (This technique is illustrated in Figure 2-19.) Because this option requires external IP reachability between each SP’s (internal) M-BGP route reflector (RR), not only are the RRs exposed to attack, but the MPLS core network of each SP is also now exposed. Similar to option (b), there is no way to verify the integrity of the MPLS label stack, making VPN label spoofing possible. Hence, Inter-AS VPN option (c) also suffers from a variety of security risks, and at the time of this writing, is not known to be deployed in production using IOS because this model is deemed insecure for Inter-AS VPN connectivity between different SPs. Conversely, for multi-domain (or multi-AS) SP networks, option (c) may be considered since the different domains are managed by the same single SP.