![]()

In any effective ETL system, there lies an unseen but valuable system of event and error logging designed to aid in troubleshooting problems, heading off potential issues, and tracking historical trends in data movement and transformation.

In this chapter, we’re going to discuss logging patterns. If you are a lumberjack and are looking for career tips, you can stop reading now—this is a different type of logging.

Let’s be honest—event and error logging probably isn’t at the top of the list when the average ETL professional thinks of his or her favorite things. Consider the types of things we brag about: “I moved 100 million rows in 19 minutes;” “I cleaned up this ugly mess of data without a single line of code;” “I integrated twelve different sources of data into this fact table.” These are the things that, in our geeky culture, would result in a pat on the back or at least an “Attaboy.” For people like us, this is the home run in the bottom of the ninth, the last-second winning field goal, or the goal from center ice.

Event and error logging, on the other hand, rarely evokes such emotion. I doubt if anyone has ever given a high-five over an effective logging strategy. In the board room or the sales pitch, logging gets about as much attention as the color of ink that will be used to sign the contract.

However, when it comes to tasks that can make or break a successful ETL implementation, having an effective and understandable logging strategy is near the top of the list. While not an outwardly visible element of the project deliverables, error and event logging provides a means with which ETL professionals can measure the health of the data pipeline. Capturing the necessary information during ETL execution will allow easier analysis of the fidelity of the downstream data stores.

Why Logging?

Before diving into the discussion of logging patterns, it’s essential to understand why error and event logging is so critical in an ETL project.

It tells you what has happened in the past

Consider for a moment that you fire up SQL Server Management Studio on Monday morning and discover your central SSIS package ran for 23 minutes yesterday, processing a total of ten million records. On their own, those numbers sound very reasonable. But what if this process typically runs for 30 seconds? What if the typical run processes 100 million rows? It’s important to monitor when packages fail, but in the space between package success and failure is the stuff you really need to worry about. An SSIS package that fails on execution instantly tells me that something bad has happened. However, packages that complete without any errors may still present issues that need to be investigated, and only properly logging key metrics will provide the information necessary to track down these latent clues.

Capturing and storing logging information allows you to evaluate over time the expected runtimes, row counts, and anomalies. This is important not only as a troubleshooting mechanism but for proper capacity planning. Capturing data volume patterns over time is essential for ensuring that your organization is proactive about addressing disk space and other capacity constraints.

It tells you what’s happening now

Although the greatest value of logging resides in the ability to analyze execution data over time, another benefit of an effective logging strategy is that it tells you what’s happening right now in your ETL domain. Consider the case of a package that is running for much longer than expected. What tasks are currently running right now? Have any tasks failed? How much data have I processed so far? Without a good system of information logging, it’s going to be difficult to answer these questions while the ETL process is still running.

Properly logged, however, your in-progress SSIS packages and other ETL elements will no longer be a mystery. Good logging practices ensure that you won’t have to wait until the package actually fails before you know something is wrong.

Elements of Logging

Logging consists of the following elements:

- Error logging . The most common and visible element of logging is the capturing of information about any errors that occur during process execution. Sadly, this is sometimes the only logging done. Accurate error logging should ideally contain not just a declaration of failure but an accurate description of the cause of the failure.

- Event logging . Event logging takes error logging to the next level. Rather than simply capturing when something generates a failure in the ETL, a well-designed event logging system will store enough information about the ETL flow that its execution can be evaluated, not just on a Boolean succeed/fail state but on its overall health.

- Start and ending information (for the entire process as well as elements therein).

- Amount of information processed, generally described in terms of row counts. Note that this can be especially useful to log at the data flow or component level, particularly if your packages have allowances for dropping or generating rows during transformation.

- Notifications. Though not purely a function of the logging system, having a means by which system administrators or other responsible individuals can be notified of sentinel events is crucial to ensuring timely response to ETL anomalies. Notifications are typically associated with error events; when a package fails, someone gets notified. However, in mission-critical systems, other scenarios may also need to generate a notification to the ETL administrator. Consider a long-running ETL process that threatens to impact contracted service level agreements (SLAs): even though it may not generate a failure, such a delay would probably need to be dealt with immediately rather than allowing that SLA to fall into breach. With a system of appropriate notifications, support personnel can quickly respond to potential issues before they get out of hand.

As with other tedious tasks, SQL Server Integration Services has integrated facilities to eliminate some of the time and guesswork involved with error and event logging. Starting with the first version of SSIS in 2005, package-level logging was provided as a way to associate executable objects with events, allowing the logging of those intersections to database or file destinations. Starting with SQL Server 2012, the SSIS catalog was introduced, bringing along with it a brand new way to log information. Using the SSIS catalog to log information adds more to the logging footprint.

In addition to these native logging platforms, many ETL developers choose to craft their own homegrown logging solutions to either replace or, more frequently, supplement the information provided by the built-in SSIS logging tools.

A question that comes up frequently about logging is whether or not the native SSIS logging elements are sufficient for capturing all of the information necessary to properly track the inner workings of the ETL operations. In prior versions of SQL Server, the logging capabilities built into SSIS provided some of the information required by ETL logging systems but failed to accommodate the direct logging of other information (most notably, the row counts for various data flow operations). As a result, for capturing logging information, many enterprising SSIS professionals built their own frameworks that blended native SSIS logging with custom logging processes.

For those working with a SQL Server 2012 ETL environment, the integrated logging facilities are greatly improved. In addition to the replacement of package-level logging with server-wide logging, ETL professionals can now enjoy row count logging among the improvements for the current version.

The bottom line is to find the right combination of logging tools to suit the needs of your organization. For shops using older versions of Integration Services or that have highly specialized logging requirements, native logging supplemented by a custom solution may be the best bet. In most cases, though, the logging facilities built into SQL Server 2012 will suffice for common logging needs.

Any conversation about event and error logging in SSIS should begin with server-based logging through the SSIS catalog. Introduced with SQL Server 2012, this vehicle helps to automate and make consistent the process of logging information and events during package execution. With logging in the SSIS catalog, keeping track of the ETL goings-on no longer requires logic embedded in the package.

When packages stored in the catalog are executed, event and error logging information is written to special tables in the SSIS catalog database (SSISDB). Information stored in those tables can then be accessed through a series of views created specifically for reporting from the SSISDB event log tables. Among the key views in this scenario:

- [catalog].[executions]: This view exposes the high-level details of each executable that runs during the ETL operation. The information stored here includes the start time and end time of the execution, the current status of the execution, and metadata about the executable itself.

- [catalog].[event_messages]: This view shows the logged messages associated with each execution. Depending on the size of the SSIS package and the specified logging level (more on the latter momentarily), the number of entries for each execution could be sizeable.

- [catalog].[execution_data_statistics]: This view shows an entirely new segment of native logging capabilities: intrapackage row counts. The data presented through this view includes the names of the source and destination components, the name of the data path itself, and the number of rows transferred.

As you explore the catalog views in the SSISDB database, you’ll find that these are just a few of many such views. Because these are simply views in a user database, you can consume the information logged here just as you would any other data in the system. You can use the SSIS catalog views to build SSRS reports, expose the information through Excel, generate alerts, etc.

First introduced in SQL Server 2012, the concept of the logging levels eliminates much of the guesswork from selecting which events to log. These predefined levels allow the ETL professional to specify at a broad level how much information should be written to the logging tables in the SSIS catalog.

Before the SSIS catalog was introduced, native logging involved the selection of specific tasks and events for which information would be logged. While this does allow a lot of flexibility, it also lends itself to an all-or-nothing logging approach (especially for beginners—spoken from experience!). Unless one chooses to log all events for all components—which can be expensive in terms of performance overhead and storage—it takes some experience and tweaking to get all of the settings right.

With the introduction of logging levels, ETL professionals can now choose from one of four settings to specify how much information will be captured and stored during execution.

- None. As the name implies, no detailed logging will be performed. It’s important to note that this does not mean that there will be no logging for that execution; a record will still be added to the [catalog].[executions] table to track the package execution, but the details (specifically those tracked in the [catalog].[event_messages] table) are not stored when the logging level is set to None.

- Basic. This is the default logging level, providing a reasonable starting point for SSIS catalog logging.

- Performance. This logging level resides between None and Basic in terms of the logging detail.

- Verbose. This gets you everything and the kitchen sink. Verbose logging is a great way to capture everything you might possibly need regarding the execution of SSIS packages. The downsides are that this level of logging can hurt performance and that it requires more attention to the amount of space required to store those logs. It’s important to note that the Verbose setting is the only level that captures row count information.

![]() Note: For an excellent walk-through the new features of SSIS catalog logging, I recommend a blog post by Jamie Thompson—it’s an older post based on a CTP version of SQL Server, but it provides a great overview of the internals of this platform. You can find that post here: http://sqlblog.com/blogs/jamie_thomson/archive/2011/07/16/ssis-logging-in-denali.aspx.

Note: For an excellent walk-through the new features of SSIS catalog logging, I recommend a blog post by Jamie Thompson—it’s an older post based on a CTP version of SQL Server, but it provides a great overview of the internals of this platform. You can find that post here: http://sqlblog.com/blogs/jamie_thomson/archive/2011/07/16/ssis-logging-in-denali.aspx.

Although having the flexibility to craft your own custom ETL process reports is great, I suspect that many such custom reports look very much alike: Package Name, Start Time, End Time, Execution Status. Maybe even a Duration column for those who can’t do date math in their heads. Fortunately, SQL Server Management Studio comes equipped with canned reports that display a common set of execution data for packages stored in the SSIS catalog.

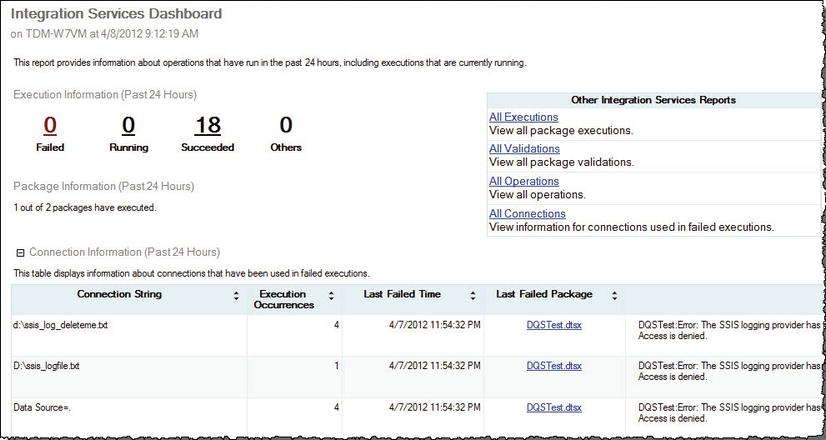

Figure 12-1. Integration Services Dashboard

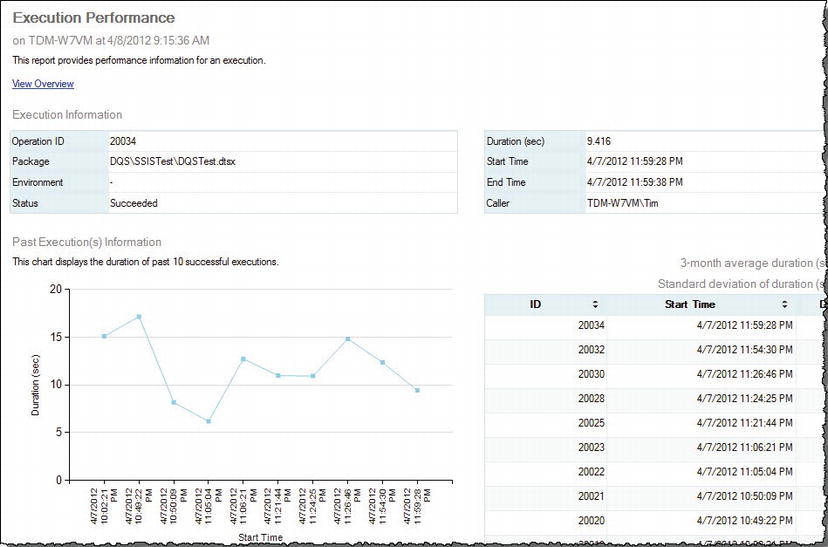

As shown in Figure 12-1, SSMS offers a built-in dashboard report that provides a high-level snapshot of the current status and recent history. Other reports include execution detail report, failed connections report, and one of my favorites, the execution history report shown in Figure 12-2. This report outlines the recent history on a given package, including the most recent execution stats (complete with a data flow component analysis), and a chart showing the execution duration over time.

Figure 12-2. Package Execution Performance report

If there is a downside to be found in these integrated reports, it’s that they are designed purely for consumption within SQL Server Management Studio—there’s no path to push the reports to a standard format (for example, an .rdl file for use in Reporting Services). Furthermore, the information in these reports cannot be directly exported, though it is possible to print the report to a format such as PDF with the right client software.

On the plus side, however, these built-in reports will likely eliminate much of the need to craft custom reports around the SSIS logging subsystem. Almost every ETL professional who has gone through the exercise of adding logging information to an ETL system has also performed the simple but tedious task of creating reports to access said information. Couple these standard reports with the catalog logging, and there’s the potential for significant time savings on each ETL project. Having built from scratch a number of reports very similar to these, I can attest that the built-in execution reports in SSMS are one of the most convenient features of this version of the product.

In addition to the server-based SSIS catalog event logging in SQL Server 2012, ETL developers also have the option of logging events and errors at the package level. Although designed with a similar purpose, the package-level logging in SSIS is a completely separate beast from the catalog logging mentioned earlier.

Package logging has been around since SSIS was first introduced, and is an effective way to track basic information about executions in SSIS. It has been, and remains for many legacy packages, the default mechanism for logging when custom event and error logging is not required.

Although very mature and heavily-used, this type of logging does come with a few downsides:

- The responsibility of logging package events rests on the shoulders of the ETL developers. This is a process that must be repeated for every package, and although the logic can be wrapped into a reusable template, almost any change to the logging specification would require the modification of every package.

- There’s no easy way to add or change logging at runtime. Changing the logging properties on a per-execution basis is technically possible, but to do this, the ETL developer must have set up a logging connection in the package.

- There is no server-wide repository for logging. Unlike logging to the SSIS catalog, which logs to the SSISDB databaseon the SQL Server instance on which the package is executed, package-level logging requires the specification of a destination provider. On the plus side, package logging affords more flexibility than logging to the SSIS catalog. While the latter stores information only in SSISDB, the former allows you to specify both the destination type (SQL Server database, text file, XML file, etc.) and the location (local or remote file or database). To that end, package logging provides a standout benefit not offered through the SSIS catalog: centralized enterprise logging.

- There is no row count logging in the data flow. Given that row count logging is one of the most significant elements of effective ETL data collection, this is a significant downside.

For those working with SSIS versions earlier than 2012, package logging is the only native option available to you; the SSIS catalog first appears in SQL Server 2012.

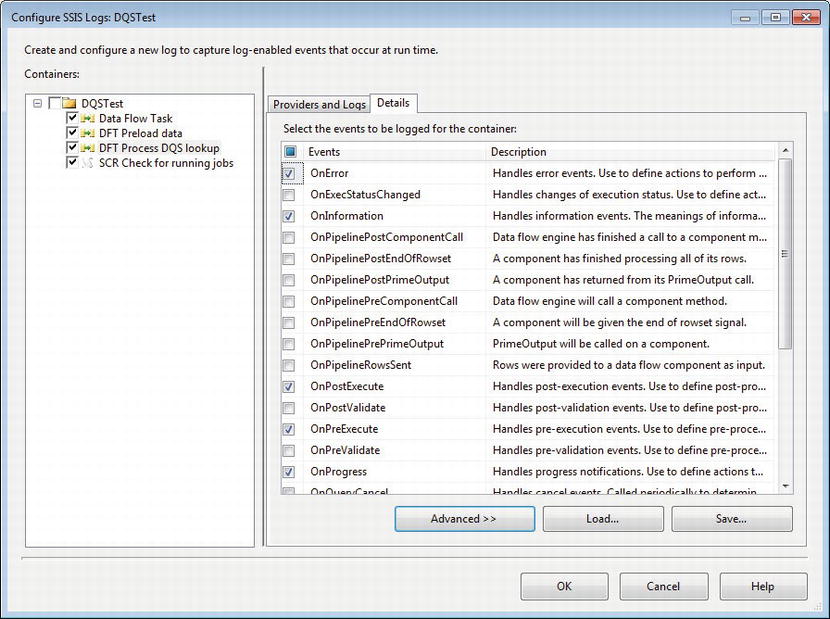

Package logging is largely unchanged since the first version of SSIS in 2005. To engage logging at the package level, the ETL developer will specify the logging provider, either a file or a SQL Server database, and the events that will be logged to said provider. As shown in Figure 12-3, this provides a great deal of flexibility in terms of which ETL elements should be logged and to what degree.

Figure 12-3. Configure package-level logging

So let’s assume that you’ve got an existing ETL infrastructure using package-level logging. You’re happy with what it gives you, but you’re wondering if it’s worth leaning on server logging in the SSIS catalog instead. What’s the tipping point at which you should upgrade?

Based on what I’ve seen, I’m inclined to recommend the SSIS catalog logging in most cases over native package logging. Admittedly you lose some measure of flexibility, but catalog logging is more straightforward, easier to configure at runtime, and provides more coverage. However, if you’re dependent on having task-level configuration for logging events, consider sticking with the package-level logging.

Also worth noting is that there is no restriction against using both SSIS catalog logging in addition to package-level logging. However, if you blend two different logging methods, make sure you take some time to evaluate what you’re capturing to avoid any unnecessary duplication.

There comes a time in every ETL developer’s life when he or she must drive off the paved road. In terms of logging, creating your own custom logging elements is the equivalent of leaving the pavement. Even though creating these customized structures represents a departure from the “proper” native logging components in SSIS, it’s actually quite common, particularly in shops with a large number of SSIS packages.

Almost every custom logging scenario has its own purpose, its own nuances, and its own dedicated resources. However, after observing many different custom SSIS logging setups, I find that there is a common component fingerprint found in many of them:

- Parameterized, reusable stored procedures that write logging information are created in the database to which the logging information will be written.

- Execute SQL Tasks designed to log informational messages are created in SSIS packages. These are either added inline in the control flow of the package, or added to an event handler (usually the OnPostExecute event).

- Separately, additional Execute SQL Tasks are added to log errors or anomalies in the package. These are most often added to OnError event handlers for tasks or the package itself, though they are sometimes used at the business end of a failure constraint in the control flow.

- Occasionally, highly customized logging requirements may introduce the need for a script task or script component to assist with meeting those requirements.

Custom logging is the most difficult to create and maintain because you’re essentially on your own in terms of metadata. However, because there is no real framework (apart from whatever native SSIS tasks you use), you have very few constraints on what you can and can’t do. It certainly takes more elbow grease to get it done, but custom logging allows for flexibility not found in native logging facilities.

Although custom logging has been a part of SSIS since it was SSIS (and even earlier, as DTS), I suspect that the need for custom logging design patterns will diminish as improvements are made in the native logging capabilities of SSIS. Now that one of the principal deficiencies in prior versions regarding logging—the inability to capture row counts—has been addressed in SQL Server 2012, it could be expected that native logging will take the place of custom structures as the go-to mechanism for package execution information.

The quintessential question: Now that I’ve decided to log information in my SSIS packages, what information should I capture? This question is central to every ETL project. Gather too much information and you waste processing time and storage space. Capture too little data and you risk not getting enough to properly troubleshoot errors or head off potential problems.

Every scenario will have different requirements, but the answer to the question “How much information should I log?” will always be the same: Capture the information required. No more, no less.

I realize this isn’t a silver-bullet answer, but it does tell you that the decision of what to capture in your logging strategy isn’t quick or easy. You need to get all of the data you’ll need to figure out what happened in the event of a package failure, analyze patterns to discover data anomalies or other irregularities, and abide by governmental regulations and/or company policies. However, every additional logging element adds processing time and storage to your system, so it’s usually not practical to take a “log everything just in case” approach, particularly on high-volume systems.

To find the right strategy for you, think about the following:

- What do you hope to accomplish by logging? Do you just want to know where and when the ETL process failed, or do you expect to consume the logging information in a proactive system intended to head off problems before they occur?

- How much horsepower do you have? If you have state of the art ETL hardware and network, you can be more liberal in your logging expectations. However, if your SSIS server is a virtual machine on a shared set of disks, you need to be much more careful.

- Are you in a highly-regulated environment? Healthcare and financial institutions are under particular scrutiny, but other shops may have similar reporting and/or auditing requirements. As a rule, I’m a big fan of doing things that keep me from going to jail, so I’m happy to recommend that you design your ETL logging in such a way that it fully complies with federal law. If you need to make shortcuts in logging, this isn’t something you want to play around with.

Creating an effective logging strategy that balances the need for information with the need to maintain a stable and well-performing system isn’t always done on the first swipe. Know that designing a proper logging pattern, like the core ETL process itself, tends to be an iterative process.

Logging Systems

We discussed three different logging platforms—SSIS catalog logging, package logging, and custom logging—earlier in the chapter. Early in the process of designing your logging system, you’ll need to pick which of these will be used to capture logging information from your packages.

There are no hard and fast rules for selecting which logging system should be used in a given scenario. However, the following tips may help point you in the right direction:

- Remember that you’re not limited to a single logging mechanism. You can add custom logging to either package logging or SSIS catalog logging, or even mix all three of them together if you’re daring. When using multiple logging vehicles, take care that you don’t log the same information in multiple ways.

- If you are in a SQL Server 2012 environment, seriously consider using the SSIS catalog for event and error logging. The improvements over prior versions are significant and can address many of the scenarios previously left for custom logging.

- If you need to centralize logging across multiple SSIS servers, you’ll need to lean on package logging or custom logging.

- Consider using custom logging to supplement another logging platform, not as a single means through which to log all required data. As mentioned, custom logging takes significantly more effort than the native facilities, so don’t reinvent the wheel if you don’t have to.

Summary

In this chapter, we’ve discussed design patterns around logging in SSIS, including an exploration of the various high-level approaches to error and event logging. Even though event and error logging isn’t one of the more exciting aspects of data movement, it’s still a critical piece of the puzzle. Effective logging works as an insurance policy, a troubleshooting tool, and even a capacity planning guide. The time invested in building proper logging is rarely wasted.