Chapter 17

![]()

SQL Server Optimization Checklist

If you have read through the previous 16 chapters of this book, then you understand the major aspects involved in performance optimization. You also understand that it is a challenging and ongoing activity.

What I hope to do in this chapter is to provide a performance-monitoring checklist that can serve as a quick reference for database developers and DBAs when in the field. The idea is similar to the notion of tear-off cards of best practices. This chapter does not cover everything, but it does summarize, in one place, some of the major tuning activities that can have a quick and demonstrable impact on the performance of your SQL Server systems. I have categorized these checklist items into the following sections:

- Database design

- Query design

- Configuration settings

- Database administration

- Database backup

Each section contains a number of optimization recommendations and techniques. Where appropriate, each section also cross-references specific chapters in this book that provide more detailed information.

Database design is a broad topic, and it can’t be given due justice in a small section in this query tuning book; nevertheless, I advise you to keep an eye on the following design aspects to ensure that you pay attention to database performance from an early stage:

- Balancing under- and overnormalization

- Benefiting from using entity-integrity constraints

- Benefiting from using domain and referential integrity constraints

- Adopting index-design best practices

- Avoiding the use of the sp_ prefix for stored procedure names

- Minimizing the use of triggers

Balancing Under- and Overnormalization

When designing a database, you have the following two extreme options:

- Save the complete data in a single, flat table with little to no normalization.

- Save the data in fine-grained tables by exploding every attribute into its own table and thus allowing every attribute to save an unlimited number of multiple values.

Reasonable normalization enhances database performance. The presence of wide tables with a large number of columns is usually a characteristic of an undernormalized database. Undernormalization causes excessive repetition of data, which can lead to improper results and often hurts query performance. For example, in an ordering system, you can keep a customer’s profile and all the orders placed by the customer in a single table, as shown in Table 17-1.

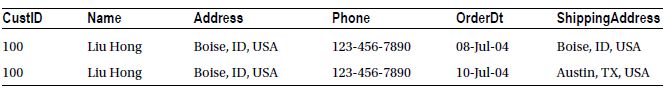

Table 17-1. Original Customers Table

Keeping the customer profile and the order information together in a single table will repeat the customer profile in every order placed by the customer, making the rows in the table very wide. Consequently, fewer customer profiles can be saved in one data page. For a query interested in a range of customer profiles (not their order information), more pages have to be read compared to a design in which customer profiles are kept in a separate table. Also, with every bit of data in one large table, you’re going to see a lot more locking and concurrency issues since more people are going to access the same data out of the same page or row much more frequently. To avoid the performance impact of undernormalization, you must normalize the two logical entities (e.g., customer profile and orders), which have a one-to-many type of relationship, into separate tables, as shown in Tables 17-2 and 17-3.

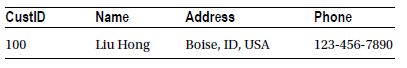

Table 17-2. New Customers Table

Table 17-3. Orders Table

| CustID | OrderDt | ShippingAddress |

| 100 | 08-Jul-04 | Boise, ID, USA |

| 100 | 10-Jul-04 | Austin, TX, USA |

Yes, there are further normalization opportunities possible with these tables; however, that’s up to you, working with your business, to determine if they’re needed.

Similarly, overnormalization is also not good for query performance. Overnormalization causes excessive joins across too many narrow tables. Misestimations on cardinality in one table can seriously impact a large number of others as they get joined. Although a 20-table join can perform perfectly fine and a 2-table join can be a problem, a good rule of thumb is to more closely examine a query when it exceeds 8 to 12 tables in the join criteria. That is not to say that anything below that number is good and anything above that is bad; however, this number of joins should act as a flag for evaluation. To fetch any useful content from the database, a database developer has to join a large number of tables in the SQL queries. For example, if you create separate tables for a customer name, address, and phone number, then you will have to join three tables to retrieve the customer information. If the data (e.g., the customer name and address) has a one-to-one type of relationship and is usually accessed together by the queries, then normalizing the data into separate tables can hurt query performance.

Benefiting from Entity-Integrity Constraints

Data integrity is essential to ensuring the quality of data in the database. An essential component of data integrity is entity integrity, which defines a row as a unique entity for a particular table; that is, every row in a table must be uniquely identifiable. The column or columns serving as the unique row identifier for a table must be represented as the primary key of the table.

Sometimes, a table may contain an additional column (or columns) that also can be used to uniquely identify a row in the table. For example, an Employee table may have the EmployeeID and SocialSecurityNumber columns. The EmployeeID column serves as the unique row identifier, and it can be defined as the primary key. Similarly, the SocialSecurityNumber column can be defined as the alternate key. In SQL Server, alternate keys can be defined using unique constraints, which are essentially the younger siblings to primary keys. In fact, both the unique constraint and the primary key constraint use unique indexes behind the scenes.

It’s worth noting that there is honest disagreement regarding the use of a natural key (e.g., the SocialSecurityNumber column in the previous example) or an artificial key (e.g., the EmployeeID column). I’ve seen both designs succeed, but each approach has strengths and weaknesses. Rather than suggest one over the other, I’ll provide you with a couple of reasons to use both and some of the costs associated with each. An identity column is usually an INT or a BIGINT, which makes it narrow and easy to index, improving performance. Also, separating the value of the primary key from any business knowledge is considered good design in some circles. One of the drawbacks of this approach is that the numbers sometimes acquire business meaning, which should never happen. Another thing to keep in mind: You have to create a unique constraint for the alternate keys to prevent the creation of multiple rows where none should exist. Natural keys provide a clear, human-readable, primary key that has true business meaning. They tend to be wider fields—sometimes very wide—making them less efficient inside indexes. Also, sometimes the data may change, which has a profound trickle-down effect within your database and your enterprise.

Let me just reiterate that either approach can work well and that each provides plenty of opportunities for tuning. Either approach, properly applied and maintained, will protect the integrity of your data.

Besides maintaining data integrity, unique indexes—the primary vehicle for entity-integrity constraints—help the optimizer generate efficient execution plans. SQL Server can often search through a unique index faster than it can search through a nonunique index. This is because each row in a unique index is unique; and, once a row is found, SQL Server does not have to look any further for other matching rows (the optimizer is aware of this fact). If a column is used in sort (or GROUP BY or DISTINCT) operations, consider defining a unique constraint on the column (using a unique index) because columns with a unique constraint generally sort faster than ones with no unique constraint.

To understand the performance benefit of entity-integrity or unique constraints, consider this example. Assume you want to modify the existing unique index on the Production.Product table:

CREATE NONCLUSTERED INDEX [AK_Product_Name]

ON [Production].[Product] ([Name] ASC) WITH (DROP_EXISTING = ON)

ON [PRIMARY] ;

GO

The nonclustered index does not include the UNIQUE constraint. Therefore, although the [Name] column contains unique values, the absence of the UNIQUE constraint from the nonclustered index does not provide this information to the optimizer in advance. Now, let’s consider the performance impact of the UNIQUE constraint (or a missing UNIQUE constraint) on the following SELECT statement:

SELECT DISTINCT

(p.[Name])

FROM Production.Product AS p ;

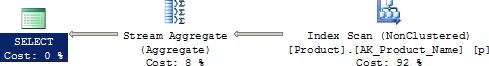

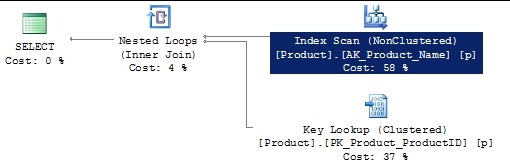

Figure 17-1 shows the execution plan of this SELECT statement.

Figure 17-1 An execution plan with no UNIQUE constraint on the [Name] column

From the execution plan, you can see that the nonclustered AK_ProductName index is used to retrieve the data, and then a Stream Aggregate operation is performed on the data to group the data on the [Name] column, so that the duplicate [Name] values can be removed from the final result set. Note that the Stream Aggregate operation would not have been required if the optimizer had been told in advance about the uniqueness of the [Name] column. You can accomplish this by defining the nonclustered index with a UNIQUE constraint, as follows:

CREATE UNIQUE NONCLUSTERED INDEX [AK_Product_Name]

ON [Production].[Product]([Name] ASC)

WITH (DROP_EXISTING = ON)

ON [PRIMARY] ;

GO

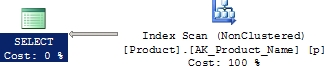

Figure 17-2 shows the new execution plan of the SELECT statement.

Figure 17-2 An execution plan with a UNIQUE constraint on the [Name] column

In general, the entity-integrity constraints (i.e., primary keys and unique constraints) provide useful information to the optimizer about the expected results, assisting the optimizer in generating efficient execution plans.

Benefiting from Domain and Referential Integrity Constraints

The other two important components of data integrity are domain integrity and referential integrity. Domain integrity for a column can be enforced by restricting the data type of the column, defining the format of the input data, and limiting the range of acceptable values for the column. SQL Server provides the following features to implement the domain integrity: data types, FOREIGN KEY constraints, CHECK constraints, DEFAULT definitions, and NOT NULL definitions. If an application requires that the values for a data column be restricted to a range of values, then this business rule can be implemented either in the application code or in the database schema. Implementing such a business rule in the database using domain constraints (such as the CHECK constraint) usually helps the optimizer generate efficient execution plans.

To understand the performance benefit of domain integrity, consider this example:

--Create two test tables

IF (SELECT OBJECT_ID('dbo.Test1')

) IS NOT NULL

DROP TABLE dbo.Test1 ;

GO

CREATE TABLE dbo.Test1 (

C1 INT,

C2 INT CHECK (C2 BETWEEN 10 AND 20)

) ;

INSERT INTO dbo.Test1

VALUES (11, 12) ;

GO

IF (SELECT OBJECT_ID('dbo.Test2')

) IS NOT NULL

DROP TABLE dbo.Test2 ;

GO

CREATE TABLE dbo.Test2 (C1 INT, C2 INT) ;

INSERT INTO dbo.Test2

VALUES (101, 102) ;

Now execute the following two SELECT statements:

SELECT T1.C1,

T1.C2,

T2.C2

FROM dbo.Test1 AS T1

JOIN dbo.Test2 AS T2

ON T1.C1 = T2.C2 AND

T1.C2 = 20 ;

GO

SELECT T1.C1,

T1.C2,

T2.C2

FROM dbo.Test1 AS T1

JOIN dbo.Test2 AS T2

ON T1.C1 = T2.C2 AND

T1.C2 = 30 ;

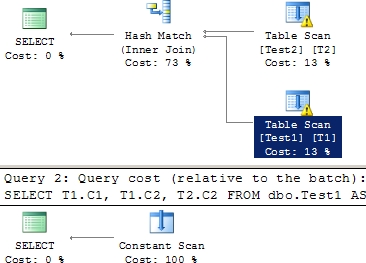

The two SELECT statements appear to be the same, except for the predicate values (20 in the first statement and 30 in the second). Although the two SELECT statements have exactly the same form, the optimizer treats them differently because of the CHECK constraint on the Tl.C2 column, as shown in the execution plan in Figure 17-3.

Figure 17-3 Execution plans with predicate values within and outside the CHECK constraint boundaries

From the execution plan, you can see that, for the first query (with T1.C2 =20), the optimizer accesses the data from both tables. For the second query (with Tl.C2 = 30), the optimizer understands from the correspondingCHECK constraint on the column Tl.C2 that the column can’t contain any value outside the range of 10 to 20. Thus, the optimizer doesn’t even access the data from the tables. Consequently, the relative estimated cost of the second query is 0 percent.

I explained the performance advantage of referential integrity in detail in the “Declarative Referential Integrity” section of Chapter 11.

Therefore, you should use domain and referential constraints, not only to implement data integrity, but also to facilitate the optimizer in generating efficient query plans. To understand other performance benefits of domain and referential integrity, please refer to the “Using Domain and Referential Integrity” section of Chapter 11.

Adopting Index-Design Best Practices

The most common optimization recommendation—and usually the biggest contributor to good performance—is to implement the correct indexes for the database workload. Indexes are unlike tables, which are used to store data and can be designed even without knowing the queries thoroughly (as long as the tables properly represent the business entities). Instead, indexes must be designed by reviewing the database queries thoroughly. Except in common and obvious cases, such as primary keys and unique indexes, please don’t fall into the trap of designing indexes without knowing the queries. Even for primary keys and unique indexes, I advise you to validate the applicability of those indexes as you start designing the database queries. Considering the importance of indexes for database performance, you must be very careful when designing indexes.

Although the performance aspect of indexes is explained in detail in Chapters 4, 6, and 7, I’ll reiterate a short list of recommendations for easy reference:

- Choose narrow columns for indexes.

- Ensure that the selectivity of the data in the candidate column is very high (i.e., the column must have a large number of unique values).

- Prefer columns with the integer data type (or variants of the integer data type). Also, avoid indexes on columns with string data types such as VARCHAR.

- Consider listing columns having higher selectivity first in a multi-column index. .

- Use the INCLUDE list in an index as a way to make an index cover the index key structure without changing that structure. Do this by adding columns to the key, which enables you to avoid expensive lookup operations.

- When deciding which columns to index, pay extra attention to the queries’ WHERE clauses and JOIN criteria columns. These can serve as the entry points into the tables, especially if a WHERE clause criterion on a column filters the data on a highly selective value or constant. Such a clause can make the column a prime candidate for an index.

- When choosing the type of an index (clustered or nonclustered), keep in mind the advantages and disadvantages of clustered and nonclustered index types.

Be extra careful when designing a clustered index because every nonclustered index on the table depends on the clustered index. Therefore, follow these recommendations when designing and implementing clustered indexes:

- Keep the clustered indexes as narrow as possible. You don’t want to widen all your nonclustered indexes by having a wide clustered index.

- Create the clustered index first, and then create the nonclustered indexes on the table.

- If required, rebuild a clustered index in a single step using the DROPEXISTING keyword in the CREATE INDEX command. You don’t want to rebuild all the nonclustered indexes on the table twice: once when the clustered index is dropped and again when the clustered index is re-created.

- Do not create a clustered index on a frequently updated column. If you do so, the nonclustered indexes on the table will create additional load by remaining in sync with the clustered index key values.

- Where applicable, such as when you need aggregations, consider using columnstore indexes.

To keep track of the indexes you’ve created and determine others that you need to create, you should take advantage of the dynamic management views that SQL Server 2012 makes available to you. By checking the data in sys.dm_db_index_usage_stats on a regular basis—say once a week or so—you can determine which of your indexes are actually being used and which are redundant. Indexes that are not contributing to your queries to help you improve performance are just a drain on the system. They require both more disk space and additional I/O to maintain the data inside the index as the data in the table changes. On the other hand, querying

sys.dm_db_missing_indexes_details will show potential indexes deemed missing by the system and even suggest INCLUDE columns. You can access the DMV sys.dm_db_missing_indexes_groups_ stats to see aggregate information about the number of times queries are called that could have benefited from a particular group of indexes. Just remember to test these suggestions thoroughly and don’t assume that they will be correct. All these suggestions are just that: suggestions. All these tips can be combined to give you an optimal method for maintaining the indexes in your system over the long term.

Avoiding the Use of the sp_ Prefix for Stored Procedure Names

As a rule, don’t use the sp_ prefix for user stored procedures since SQL Server assumes that stored procedures with the sp_ prefix are system stored procedures, and these are supposed to be in the master database. Using sp or usp as the prefix for user stored procedures is quite common. This is neither a major performance hit nor a major problem, but why court trouble? The performance hit of the sp_ prefix is explained in detail in the “Be Careful Naming Stored Procedures” section of Chapter 11.

Minimizing the Use of Triggers

Triggers provide a very attractive method for automating behavior within the database. Since they fire as data is manipulated by other processes (regardless of the processes), triggers can be used to ensure certain functions are run as the data changes. That same functionality makes them dangerous since they are not immediately visible to the developer or DBA working on a system. They must be taken into account when designing queries and when troubleshooting performance problems. Because they carry a somewhat hidden cost, triggers should be considered very carefully. Before using a trigger, make sure that the only way to solve the problem presented is with a trigger. If you do use a trigger, document that fact in as many places as you can to ensure that the existence of the trigger is taken into account by other developers and DBAs.

Query Design

Here’s a list of the performance-related best practices you should follow when designing the database queries:

- Use the command SET N0C0UNT ON.

- Explicitly define the owner of an object.

- Avoid nonsargable search conditions.

- Avoid arithmetic operators and functions on WHERE clause columns.

- Avoid optimizer hints.

- Stay away from nesting views.

- Ensure there are no implicit data type conversions.

- Minimize logging overhead.

- Adopt best practices for reusing execution plans.

- Adopt best practices for database transactions.

- Eliminate or reduce the overhead of database cursors.

I further detail each best practice in the following sections.

Use the Command SET NOCOUNT ON

As a rule, always use the command SET NOCOUNT ON as the first statement in stored procedures, triggers, and other batch queries. This enables you to avoid the network overhead associated with the return of the number of rows affected after every execution of a SQL statement. The command SET NOCOUNT is explained in detail in the “Use SET NOCOUNT” section of Chapter 11.

Explicitly Define the Owner of an Object

As a performance best practice, always qualify a database object with its owner to avoid the runtime cost required to verify the owner of the object. The performance benefit of explicitly qualifying the owner of a database object is explained in detail in the “Do Not Allow Implicit Resolution of Objects in Queries” section of Chapter 9.

Avoid Nonsargable Search Conditions

Be vigilant when defining the search conditions in your query. If the search condition on a column used in the WHERE clause prevents the optimizer from effectively using the index on that column, then the execution cost for the query will be high in spite of the presence of the correct index. The performance impact of nonsargable search conditions is explained in detail in the corresponding section of Chapter 11.

Additionally, please be careful when defining your application features. If you define an application feature such as “retrieve all products with product name ending in caps,” then you will have queries scanning the complete table (or the clustered index). As you know, scanning a multimillion-row table will hurt your database performance. Unless you use an index hint, you won’t be able to benefit from the index on that column. However, using an index hint overrides the decisions of the query optimizer, so it’s generally not recommended that you use index hints either (see Chapter 11 for more information). To understand the performance impact of such a business rule, consider the following SELECT statement:

SELECT p.*

FROM Production.Product AS p

WHERE p.[Name] LIKE '%Caps' ;

In Figure 17-4, you can see that the execution plan used the index on the [Name] column, but it had to perform a scan instead of a seek. Since an index on a column with character data types (such as CHAR and VARCHAR) sorts the data values for the column on the leading-end characters, using a leading % in the LIKE condition doesn’t allow a seek operation into the index. The matching rows may be distributed throughout the index rows, making the index ineffective for the search condition and thereby hurting the performance of the query.

Figure 17-4 An execution plan showing a clustered index scan caused by a nonsargable LIKE clause

Avoid Arithmetic Expressions on the WHERE Clause Column

Always try to avoid using arithmetic operators and functions on columns in the WHERE and JOIN clauses. Using operators and functions on columns prevents the use of indexes on those columns. The performance impact of using arithmetic operators on WHERE clause columns is explained in detail in the “Avoid Arithmetic Operators on the WHERE Clause Column” section of Chapter 11, and the impact of using functions is explained in detail in the “Avoid Functions on the WHERE Clause Column” section of the same chapter.

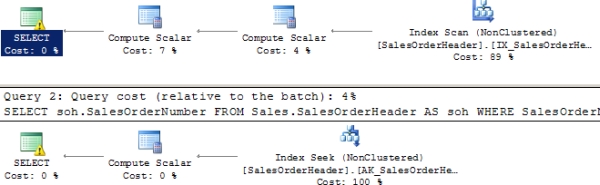

To see this in action, consider the following queries:

SELECT soh.SalesOrderNumber

FROM Sales.SalesOrderHeader AS soh

WHERE 'S05' = LEFT(SalesOrderNumber, 3) ;

SELECT soh.SalesOrderNumber

FROM Sales.SalesOrderHeader AS soh

WHERE SalesOrderNumber LIKE 'S05%' ;

These queries basically implement the same logic: they check SalesOrderNumber to see whether it is equal to S05. However, the first query performs a function on the SalesOrderNumber column, while the second uses a LIKE clause to check for the same data. Figure 17-5 shows the resulting execution plans.

Figure 17-5 Execution plans showing a function that prevents index use

As you can see in Figure 17-5, the first query forces an Index Scan operation, while the second is able to perform a nice, clean Index Seek. These examples demonstrate clearly why you should avoid functions and operators on WHERE clause columns.

Avoid Optimizer Hints

As a rule, avoid the use of optimizer hints, such as index hints and join hints, because they overrule the decision-making process of the optimizer. In most cases, the optimizer is smart enough to generate efficient execution plans, and it works the best without any optimizer hint imposed on it. The same applies to plan guides. Forcing a plan will help in rare circumstances, but it’s usually better to rely on the optimizer to make good choices. For a detailed understanding of the performance impact of optimizer hints, please refer to the “Avoiding Optimizer Hints” section of Chapter 11.

Stay Away from Nesting Views

A nested view exists when one view calls another view, which calls more views, and so on. This can lead to very confusing code for two reasons. First, the views are masking the operations being performed. Second, the query may be very simple, but the execution plan and subsequent operations by the SQL engine can be very complex and expensive. This occurs because the optimizer doesn’t have time to simplify the query, eliminating tables and columns it doesn’t need; instead, the optimizer assumes that all tables and columns are needed. The same rule applies to nesting user-defined functions.

Ensure No Implicit Data Type Conversions

When you create variables in a query, be sure those variables are of the same data type as the columns that they will be used to compare against. Even though SQL Server can and will convert, for example, a VARCHAR to a DATE, that implicit conversion will prevent indexes from being used. You have to be just as careful in situations like table joins, so that the primary key data type of one table matches the foreign key of the table being joined. You may occasionally see a warning in the execution plan to help you with this, but you can’t count on this.

Minimize Logging Overhead

SQL Server maintains the old and new states of every atomic action (or transaction) in the transaction log to ensure database consistency and durability. This can place tremendous pressure on the log disk, often making the log disk a point of contention. Therefore, to improve database performance, you must try to optimize the transaction log overhead. In addition to the hardware solutions discussed later in the chapter, you should adopt the following query-design best practices:

- Choose table variables over temporary tables for small result sets, where possible. Remember: If the result set is not small, you can encounter very serious issues. The performance benefit of table variables is explained in detail in the “Using Table Variables” section of Chapter 10.

- Batch a number of action queries in a single transaction. You must be careful when using this option because, if too many rows are affected within a single transaction, the corresponding database objects will be locked for a long time, blocking all other users trying to access the objects.

- Reduce the amount of logging of certain operations by using the Bulk Logged recovery model. This rule applies primarily when dealing with large-scale data manipulation. You also will use minimal logging when Bulk Logged is enabled, and you use the WRITE clause of the UPDATE statement or drop or create indexes.

Adopt Best Practices for Reusing Execution Plans

The best practices for optimizing the cost of plan generation can be broadly classified into two categories:

- Caching execution plans effectively

- Minimizing recompilation of execution plans

Caching execution plans effectively

You must ensure that the execution plans for your queries are not only cached, but reused often. Do so by adopting the following best practices:

- Avoid executing queries as nonparameterized, ad hoc queries. Instead, parameterize the variable parts of a query and submit the parameterized query using a stored procedure or the spexecutesql system stored procedure.

- If you must use lots of ad hoc queries, enable the “Optimize for Ad Hoc Workload” option, which will create a plan stub instead of a full plan the first time a query is called. This radically reduces the amount of procedure cache used.

- Use the same environment settings (such as ANSI NULLS) in every connection that executes the same parameterized queries. This is important because the execution plan for a query is dependent on the environment settings of the connection.

- As explained earlier in the “Explicitly Define the Owner of an Object” section, explicitly qualify the owner of the objects when accessing them in your queries.

The preceding aspects of plan caching are explained in detail in Chapter 9.

Minimizing Recompilation of Execution Plans

To minimize the cost of generating execution plans for queries, you must ensure that the plans in the cache are not invalidated or recompiled for reasons that are under your control. The following recommended best practices minimize the recompilation of stored procedure plans:

- Do not interleave DDL and DML statements in your stored procedures. You should put all the DDL statements at the top of the stored procedures.

- In a stored procedure, avoid using temporary tables that are created outside the stored procedure.

- Avoid recompilation caused by statistics changes on temporary tables by using the KEEPFIXED PLAN option.

- Prefer table variables over temporary tables for very small data sets.

- Do not change the ANSI SET options within a stored procedure.

- If you really can’t avoid a recompilation, then identify the stored procedure statement that is causing the recompilation, and execute it through the sp_execute_sql system stored procedure.

The causes of stored procedure recompilation and the recommended solutions are explained in detail in Chapter 10.

Adopt Best Practices for Database Transactions

The more effectively you design your queries for concurrency, the faster the queries will be able to complete without blocking one another. Consider the following recommendations while designing the transactions in your queries:

- Keep the scope of the transactions as short as possible. In a transaction, include only the statements that must be committed together for data consistency.

- Prevent the possibility of transactions being left open because of poor error-handling routines or application logic. Do so using the following techniques:

- Use SET XACTABORT ON to ensure that a transaction is aborted or rolled back on an error condition within the transaction.

- After executing a stored procedure or a batch of queries containing a transaction from a client code, always check for an open transaction, and then roll back any open transactions using the following SQL statement:

IF @@TRANC0UNT > 0 ROLLBACK

- Use the lowest level of transaction isolation required to maintain data consistency as determined by your application requirements. The amount of isolation provided by the Read Committed isolation level, the default isolation level, is sufficient most of the time. If excessive locking is occurring, consider using the Read Committed Snapshot isolation level.

The impact of transactions on database performance is explained in detail in Chapter 12.

Eliminate or Reduce the Overhead of Database Cursors

Since SQL Server is designed to work with sets of data, processing multiple rows using DML statements is generally much faster than processing the rows one by one using database cursors. If you find yourself using lots of cursors, reexamine the logic to see whether there are ways you can eliminate the cursors. If you must use a database cursor, then use the database cursor with the least overhead: the FASTFORWARD cursor type (generally referred to as the fast-forward-only cursor). You can also use the equivalent DataReader object in ADO.NET.

The performance overhead of database cursors is explained in detail in Chapter 14.

Here’s a checklist of the server and database configurations settings that have a big impact on database performance:

- Affinity mask

- Memory configuration options

- Cost threshold for parallelism

- Max degree of parallelism

- Optimize for ad hoc workloads

- Query governor cost limit

- Fill factor (%)

- Blocked process threshold

- Database file layout

- Database compression

I cover these settings in more detail in the sections that follow.

As explained in the “Parallel Plan Optimization” section of Chapter 9, the Affinity Mask setting is a special configuration setting at the server level that you can use to restrict the specific CPUs available to SQL Server. It is recommended that you keep this setting at its default value of 0, which allows SQL Server to use all the CPUs of the machine.

Memory Configuration Options

As explained in the “SQL Server Memory Management” section of Chapter 2, it is strongly recommended that the max server memory setting be configured to a nondefault value determined by the system configuration. These memory configurations of SQL Server are explained in detail in the “Memory Bottleneck Analysis” and “Memory Bottleneck Resolutions” sections of Chapter 2.

Cost Threshold for Parallelism

On systems with multiple processors, the parallel execution of queries is possible. The default value for parallelism is 5. This represents a cost estimate by the optimizer of a five-second execution on the query. In most circumstances, I’ve found this value to be too low; in other words, a higher threshold for parallelism results in better performance. Testing on your system will help you determine the appropriate value.

When a system has multiple processors available, by default SQL Server will use all of them during parallel executions. To better control the load on the machine, you may find it useful to limit the number of processors used by parallel executions. Further, you may need to set the affinity so that certain processors are reserved for the operating system and other services running alongside SQL Server. OLTP systems may receive a benefit from disabling parallelism entirely. Try increasing the cost threshold for parallelism first because, even in OLTP systems, there are queries that will benefit from parallel execution. You may also explore the possibility of using the Resource Governor to control some workloads.

Optimize for Ad Hoc Workloads

If the primary calls being made to your system come in as ad hoc or dynamic SQL instead of through well-defined stored procedures or parameterized queries, such as you might find in some of the implementation of object relational mapping (ORM) software, then turning on the optimize for ad hoc workloads setting will reduce the consumption of procedure cache because plan stubs are created for initial query calls, instead of full execution plans. This is covered in detail in Chapter 10.

To reduce contention and prevent a few processes from taking over the machine, you can set query governor cost limit so that any given query execution has an upper time limit in seconds. This value is based on the estimated cost as determined by the optimizer, so it prevents queries from running if they exceed the cost limit set here. Setting the query governor provides another reason to maintain the index statistics; doing so enables you to get good execution plan estimates.

Fill Factor (%)

When creating a new index or rebuilding an existing one, you can use the fill factor setting to specify a default fill factor (i.e., it specifies the amount of free space to be left on a page). Choosing an appropriate value for your system requires testing and knowledge of the use of the system. Fill factor was discussed in detail in Chapter 4.

The blocked process threshold setting defines in seconds when a blocked process report is fired. When a query runs and exceeds the threshold, the report is fired. An alert, which can be used to send an email or a text message, is also fired. Testing an individual system determines what value to set this to. You can monitor for this using events within traces defined by SQL Profiler.

For easy reference, I’ll list the best practices you should consider when laying out database files:

- Place the data and transaction log files of a user database on different disks. This allows the transaction log disk head to progress sequentially without being moved randomly by the nonsequential I/Os commonly used for the data files.

- Placing the transaction log on a dedicated disk also enhances data protection. If a database disk fails, you will be able to save the completed transactions until the point of failure by performing a backup of the transaction log. By using this last transaction log backup during the recovery process, you will be able to recover the database up to the point of failure. This is known as point-in-time recovery.

- Avoid RAID 5 for transaction logs because, for every write request, RAID 5 disk arrays incur twice the number of disk I/Os compared to RAID 1 or 10.

- You may choose RAID 5 for data files, since even in a heavy OLTP system, the number of read requests is usually seven to eight times the number of write requests. Also, for read requests the performance of RAID 5 is similar to that of RAID 1 and RAID 10 with an equal number of total disks.

- Look into moving to a more modern disk subsystem like SSD or FusionIO.

For a detailed understanding of database file layout and RAID subsystems, please refer to the “Disk Bottleneck Resolutions” section of Chapter 2.

Database Compression

SQL Server 2012 supplies data compression with the Enterprise and Developer Editions of the product. This can provide a great benefit in space used and in performance as more data gets stored on a page. These benefits come at the cost of added overhead in the CPU and memory of the system; however, the benefits usually far outweigh the costs. Take this into account as you implement compression.

For your reference, here is a short list of the performance-related database administrative activities that you should perform on a regular basis as part of the process of managing your database server:

- Keep the statistics up-to-date.

- Maintain a minimum amount of index defragmentation.

- Cycle the SQL error log file.

- Avoid automatic database functions such as AUTOCL0SE or AUTOSHRINK.

- Minimize the overhead of SQL tracing.

In the following sections, I cover the preceding activities in more detail.

![]() Note For a detailed explanation of SQL Server 2012 administration needs and methods, please refer to the Microsoft SQL Server Books Online article, “Administration: How To Topics” ( http://msdn.microsoft.com/en-us/library/bb522544.aspx).

Note For a detailed explanation of SQL Server 2012 administration needs and methods, please refer to the Microsoft SQL Server Books Online article, “Administration: How To Topics” ( http://msdn.microsoft.com/en-us/library/bb522544.aspx).

Keep the Statistics Up-to-Date

The performance impact of database statistics is explained in detail in Chapter 7; however, this short list will serve as a quick and easy reference for keeping your statistics up-to-date:

- Allow SQL Server to automatically maintain the statistics of the data distribution in the tables by using the default settings for the configuration parameters AUT0_CREATE_ STATISTICS and AUTO_UPDATE_STATISTICS.

- As a proactive measure, you can programmatically update the statistics of every database object on a regular basis as you determine it is needed and supported within your system. This practice partly protects your database from having outdated statistics in case the auto update statistics feature fails to provide a satisfactory result. In Chapter 7, I illustrate how to set up a SQL Server job to programmatically update the statistics on a regular basis.

- Remember that you also have the ability to update the statistics in an asynchronous fashion. This reduces the contention on stats as they’re being updated; thus, if you have a system with fairly constant access, you can use this method to update the statistics more frequently.

![]() Note Please ensure that the statistics update job is scheduled before the completion of the index

Note Please ensure that the statistics update job is scheduled before the completion of the index

defragmentation job, as explained later in this chapter.

Maintain a Minimum Amount of Index Defragmentation

The following best practices will help you maintain a minimum amount of index defragmentation:

- Defragment a database on a regular basis during nonpeak hours.

- On a regular basis, determine the level of fragmentation on your indexes; and then, based on that fragmentation, either rebuild the index or defrag the index by executing the defragmentation queries outlined in Chapter 4.

- Remember that very small tables don’t need to be defragmented at all.

Cycle the SQL Error Log File

By default, the SQL Server error log file keeps growing until SQL Server is restarted. Every time SQL Server is restarted, the current error log file is closed and renamed errorlog.1. The old errorlog.1 is renamed errorlog.2, and so on. Subsequently, a new error log file is created. Therefore, if SQL Server is not restarted for a long time, as expected for a production server, the error log file may grow to a very large size, making it not only difficult to view the file in an editor, but also very memory unfriendly when the file is opened.

SQL Server provides a system stored procedure, sp_cycle_errorlog, that you can use to cycle the error log file without restarting SQL Server. To keep control over the size of the error log file, you must cycle the error log file periodically by executing sp_cycle_errorlog, as follows:

EXEC master.dbo.sp_cycle_errorlog

Use a SQL Server job to schedule running this query on a regular basis.

Avoid Database Functions Such As AUTO_CLOSE or AUTO_SHRINK

AUTO_CLOSE cleanly shuts down a database and frees all its resources when the last user connection is closed. This means all data and queries in the cache are automatically flushed. When the next connection comes in, not only does the database have to restart, but all the data has to be reloaded into the cache. Also, stored procedures and the other queries have to be recompiled. That’s an extremely expensive operation for most database systems. Leave AUT0_CL0SE set to the default of OFF.

AUTO_SHRINK periodically shrinks the size of the database. It can shrink the data files and, when in Simple Recovery mode, the log files. While doing this, it can block other processes, seriously slowing down your system. More often than not, file growth is also set to occur automatically on systems with AUTO_SHRINK enabled, so your system will be slowed down yet again when the data or log files have to grow. Set your database sizes to an appropriate size, and monitor them for growth needs. If you must grow them automatically, do so by physical increments, not by percentages.

Database backup is a broad topic and can’t be given due justice in this query optimization book. Nevertheless, I suggest that, when it comes to database performance, you be attentive to the following aspects of your database backup process:

- Differential and transaction log backup frequency

- Backup distribution

- Backup compression

The next sections go into more detail on these suggestions.

Incremental and Transaction Log Backup Frequency

For an OLTP database, it is mandatory that the database be backed up regularly so that, in case of a failure, the database can be restored on a different server. For large databases, the full database backup usually takes a very long time, so full backups cannot be performed often. Consequently, full backups are performed at widespread time intervals, with incremental backups and transaction log backups scheduled more frequently between two consecutive full backups. With the frequent incremental and transaction log backups set in place, if a database fails completely, the database can be restored up to a point in time.

Differential backups can be used to reduce the overhead of a full backup by backing up only the data that has changed since the last full backup. Because this is potentially much faster, it will cause less of a slowdown on the production system. Each situation is unique, so you need to find the method that works best for you. As a general rule, I recommend taking a weekly full backup and then daily differential backups. From there, you can determine the needs of your transaction log backups.

Frequently backing up of the transaction log adds a small amount of overhead to the server, especially during peak hours.

For most businesses, the acceptable amount of data loss (in terms of time) usually takes precedence over conserving the log-disk space or providing ideal database performance. Therefore, you must take into account the acceptable amount of data loss when scheduling the transaction log backup, as opposed to randomly setting the backup schedule to a low-time interval.

When multiple databases need to be backed up, you must ensure that all full backups are not scheduled at the same time, so that the hardware resources are not hit at the same time. If the backup process involves backing up the databases to a central SAN disk array, then the full backups from all the database servers must be distributed across the backup time window, so that the central backup infrastructure doesn’t get slammed by too many backup requests at the same time. Flooding the central infrastructure with a great deal of backup requests at the same time forces the components of the infrastructure to spend a significant part of their resources just managing the excessive number of requests. This mismanaged use of the resources increases the backup durations significantly, causing the full backups to continue during peak hours, and thus affecting the performance of the user requests.

To minimize the impact of the full backup process on database performance, you must first determine the nonpeak hours when full backups can be scheduled, and then distribute the full backups across the nonpeak time window, as follows:

1. Identify the number of databases that must be backed up.

2. Prioritize the databases in order of their importance to the business.

3. Determine the nonpeak hours when the full database backups can be scheduled.

4. Calculate the time interval between two consecutive full backups as follows: Time interval = (Total backup time window) / (Number of full backups)

5. Schedule the full backups in order of the database priorities, with the first backup starting at the start time of the backup window and subsequent backups spread uniformly at the time intervals calculated in the preceding equation.

This uniform distribution of the full backups will ensure that the backup infrastructure is not flooded with too many backup requests at the same time, thereby reducing the impact of the full backups on the database performance.

For relatively large databases, the backup durations and backup file sizes usually become an issue. Long backup durations make it difficult to complete the backups within the administrative time windows and thus start affecting the end user’s experience. The large size of the backup files makes space management for the backup files quite challenging, and it increases the pressure on the network when the backups are performed across the network to a central backup infrastructure. Compression also acts to speed up the backup process since fewer writes to the disk are needed.

The recommended way to optimize the backup duration, the backup file size, and the resultant network pressure is to use backup compression. SQL Server 2012 allows for backup compression for the Standard Edition and better.

Summary

Performance optimization is an ongoing process. It requires continual attention to database and query characteristics that affect performance. The goal of this chapter was to provide you with a checklist of these characteristics to serve as a quick and easy reference during the development and maintenance phase of your database applications.