![]()

Transactions, Locking, Blocking, and Deadlocking

In this chapter, I’ll review recipes for handling transactions, lock monitoring, blocking, and deadlocking. I’ll review the SQL Server table option that allows you to either disable lock escalation or enable it for a partitioned table. I’ll demonstrate the snapshot isolation level, as well as Dynamic Management Views (DMVs), which are used to monitor and troubleshoot blocking and locking.

Transactions are an integral part of a relational database system, and they help define a single unit of work. This unit of work can include one or more Transact-SQL statements, which are either committed or rolled back as a group. This all-or-none functionality helps prevent partial updates or inconsistent data states. A partial update occurs when one part of an interrelated process is rolled back or cancelled without rolling back or reversing all of the other parts of the interrelated processes.

A transaction is bound by the four properties of the ACID test. ACID stands for Atomicity, Consistency, Isolation (or Independence), and Durability.

- Atomicity means that the transactions are an all-or-nothing entity—carrying out all the steps or none at all.

- Consistency ensures that the data is valid both before and after the transaction. Data integrity must be maintained (foreign key references, for example), and internal data structures need to be in a valid state.

- Isolation is a requirement that transactions not be dependent on other transactions that may be taking place concurrently (either at the same time or overlapping). One transaction can’t see another transaction’s data that is in an intermediate state, but instead sees the data as it was either before the transaction began or after the transaction completes.

- Durability means that the transaction’s effects are fixed after the transaction has committed, and any changes will be recoverable after system failures.

In this chapter, I’ll demonstrate and review the SQL Server functionality and mechanisms that are used to ensure ACID test compliance, namely: locking and transactions.

There are three possible transaction types in SQL Server: autocommit, explicit, or implicit.

Autocommit is the default behavior for SQL Server, where each separate Transact-SQL statement you execute is automatically committed after it is finished. For example, it is possible for you to have two INSERT statements, with the first one failing and the second one succeeding; the second change is maintained, because each INSERT is automatically contained in its own transaction. Although this mode frees the developer from having to worry about explicit transactions, depending on this mode for transactional activity can be a mistake. For example, if you have two transactions, one that credits an account and another that debits it, and the first transaction failed, you’ll have a debit without the credit. This may make the bank happy, but not necessarily the customer, who had his account debited. Autocommit is even a bit dangerous for ad hoc administrative changes; for example, if you accidentally delete all rows from a table, you don’t have the option of rolling back the transaction after you’ve realized the mistake.

Implicit transactions occur when the SQL Server session automatically opens a new transaction when one of the following statements is first executed: ALTER TABLE, FETCH, REVOKE, CREATE, GRANT, SELECT, DELETE, INSERT, TRUNCATE TABLE, DROP, OPEN, and UPDATE.

A new transaction is automatically created (opened) once any of the aforementioned statements are executed and remains open until either a ROLLBACK or COMMIT statement is issued. The initiating command is included in the open transaction. Implicit mode is activated by executing the following command in your query session:

SET IMPLICIT_TRANSACTIONS ON;

To turn this off (back to explicit mode), execute the following:

SET IMPLICIT_TRANSACTIONS OFF;

Implicit mode can be very troublesome in a production environment, because application designers and end users could forget to commit transactions, leaving them open to block other connections (more on blocking later in this chapter).

Explicit transactions are those you define yourself. This is by far the recommended mode of operation when performing data modifications for your database application. This is because you explicitly control which modifications belong to a single transaction, as well as the actions that are performed if an error occurs. Modifications that must be grouped together are done using your own instruction.

Explicit transactions use the Transact-SQL commands and keywords described in Table 12-1.

Table 12-1. Explicit Transaction Commands

|

Command |

Description |

|---|---|

|

BEGIN TRANSACTION |

Sets the starting point of an explicit transaction. |

|

ROLLBACK TRANSACTION |

Restores original data modified by a transaction and brings data back to the state it was in at the start of the transaction. Resources held by the transaction are freed. |

|

COMMIT TRANSACTION |

Ends the transaction if no errors were encountered and makes changes permanent. Resources held by the transaction are freed. |

|

BEGIN DISTRIBUTED TRANSACTION |

Allows you to define the beginning of a distributed transaction to be managed by Microsoft Distributed Transaction Coordinator (MSDTC). MSDTC must be running both locally and remotely. |

|

SAVE TRANSACTION |

Issues a savepoint within a transaction, which allows you to define a location to which a transaction can return if part of the transaction is cancelled. A transaction must be rolled back or committed immediately after being rolled back to a savepoint. |

|

@@TRANCOUNT |

Returns the number of active transactions for the connection. BEGIN TRANSACTION increments @@TRANCOUNT by 1, while ROLLBACK TRANSACTION resets @@TRANCOUNT to 0 while COMMIT TRANSACTION decrements @@TRANCOUNT by 1. ROLLBACK TRANSACTION to a savepoint has no impact. |

12-1. Using Explicit Transactions

Problem

You are attempting to implement explicit transactions within your code and need to be able to commit the data changes only upon meeting certain criteria; otherwise, the data changes should not occur.

Solution

You can use explicit transactions to COMMIT or ROLLBACK a data modification depending on the return of an error in a batch of statements. See the following:

USE AdventureWorks2014;

GO

/* -- Before count */

SELECT BeforeCount = COUNT(*)

FROM HumanResources.Department;

/* -- Variable to hold the latest error integer value */

DECLARE @Error int;

BEGIN TRANSACTION

INSERT INTO HumanResources.Department (Name, GroupName)

VALUES ('Accounts Payable', 'Accounting'),

SET @Error = @@ERROR;

IF (@Error<> 0)

GOTO Error_Handler;

INSERT INTO HumanResources.Department (Name, GroupName)

VALUES ('Engineering', 'Research and Development'),

SET @Error = @@ERROR;

IF (@Error <> 0)

GOTO Error_Handler;

COMMIT TRANSACTION

Error_Handler:

IF @Error <> 0

BEGIN

ROLLBACK TRANSACTION;

END

/* -- After count */

SELECT AfterCount = COUNT(*)

FROM HumanResources.Department;

GO

This query returns the following:

BeforeCount 16

(1 row(s) affected)

(1 row(s) affected)

Msg 2601, Level 14, State 1, Line 14

Cannot insert duplicate key row in object 'HumanResources.Department'

with unique index 'AK_Department_Name'.

The duplicate key value is (Engineering).

The statement has been terminated.

AfterCount 16

(1 row(s) affected)

How It Works

The first statement in this example validated the count of rows in the HumanResources.Department table, returning 16 rows:

-- Before count

SELECT BeforeCount = COUNT(*)

FROM HumanResources.Department;

A local variable was created to hold the value of the @@ERROR function (which captures the latest error state of a SQL statement):

-- Variable to hold the latest error integer value

DECLARE @Error int

Next, an explicit transaction was started:

BEGIN TRANSACTION

The next statement attempted an INSERT into the HumanResources.Department table. There was a unique key on the department name, but because the department name didn’t already exist in the table, the insert succeeded. See here:

INSERT INTO HumanResources.Department (Name, GroupName)

VALUES ('Accounts Payable', 'Accounting'),

Next was an error handler for the INSERT:

SET @Error = @@ERROR

IF (@Error <> 0) GOTO Error_Handler

This line of code evaluates the @@ERROR function. The @@ERROR system function returns the last error number value for the last-executed statement within the scope of the current connection. The IF statement says if an error occurs, the code should jump to the Error_Handler section of the code (using GOTO).

GOTO is a keyword that helps you control the flow of statement execution. The identifier after GOTO, Error_Handler, is a user-defined code section.

Next, another insert is attempted, this time for a department that already exists in the table. Because the table has a unique constraint on the Name column, this insert will fail:

INSERT INTO HumanResources.Department (Name, GroupName)

VALUES ('Engineering', 'Research and Development'),

The failure will cause the @@ERR0R following this INSERT to be set to a nonzero value. The IF statement will then evaluate to TRUE, which will invoke the GOTO, thus skipping over the COMMIT TRAN to the Error_Handler section:

SET @Error = @@ERROR;

IF (@Error <> 0)

GOTO Error_Handler;

COMMIT TRAN

Following the Error_Handler section is a ROLLBACK TRANSACTION.

Error_Handler:

IF @Error <> 0

BEGIN

ROLLBACK TRANSACTION;

END

Another count is performed after the rollback, and again, there are only 16 rows in the database. This is because both INSERTs were in the same transaction and one of the INSERTs failed. Since a transaction is all-or-nothing, no rows were inserted. See here:

/* -- After count */

SELECT AfterCount = COUNT(*)

FROM HumanResources.Department;

The following are some thoughts and recommendations regarding how to handle transactions in your Transact-SQL code or through your application:

- Keep transaction time as short as possible for the business process at hand. Transactions that remain open can hold locks on resources for an extended period of time, which can block other users from performing work or reading data.

- Minimize resources locked by the transaction. For example, update only tables that are related to the transaction at hand. If the data modifications are logically dependent on each other, they belong in the same transaction. If not, the unrelated updates belong in their own transactions.

- Add only relevant Transact-SQL statements to a transaction. Don’t add extra lookups or updates that are not germane to the specific transaction. Executing a SELECT statement within a transaction can create locks on the referenced tables, which can in turn block other users or sessions from performing work or reading data.

- Do not open new transactions that require user or external feedback within the transaction. Open transactions can hold locks on resources, and user feedback can take an indefinite amount of time to receive. Instead, gather user feedback before issuing an explicit transaction.

12-2. Displaying the Oldest Active Transaction

Problem

Your transaction log is growing, and a backup of the log is not alleviating the issue. You fear an uncommitted transaction may be the cause of the transaction-log growth.

Solution

Use the DBCC OPENTRAN command to identify the oldest active transactions in a database. If a transaction remains open in the database, intentionally or not, this transaction can block other processes from performing activities against the modified data. Also, backups of the transaction log can only truncate the inactive portion of a transaction log, so open transactions can cause the log to grow (or reach the physical limit) until that transaction is committed or rolled back.

This example demonstrates using DBCC OPENTRAN to identify the oldest active transaction in the database:

USE AdventureWorks2014;

GO

BEGIN TRANSACTION

DELETE Production.ProductProductPhoto

WHERE ProductID = 317;

DBCC OPENTRAN('AdventureWorks2014'),

ROLLBACK TRANSACTION;

GO

This query returns the following:

(1 row(s) affected)

Transaction information for database 'AdventureWorks2014'.

Oldest active transaction:

SPID (server process ID): 54

UID (user ID) : -1

Name : user_transaction

LSN : (41:1021:39)

Start time : Dec 24 2014 12:45:53:780AM

SID : 0x010500000000000515000000a065cf7e784b9b5fe77c8770375a2900

DBCC execution completed. If DBCC printed error messages,

contact your system administrator.

How It Works

The recipe started by opening a new transaction and then deleting a specific row from the Production.ProductProductPhoto table. Next, the DBCC OPENTRAN was executed, with the database name in parentheses:

DBCC OPENTRAN('AdventureWorks2014'),

These results showed information regarding the oldest active transaction, including the server process ID, user ID, and start time of the transaction. The key pieces of information from the results are the server process ID (SPID) and start time.

Once you have this information, you can validate the Transact-SQL being executed using DMVs, figure out how long the process has been running, and, if necessary, shut down the process. DBCC OPENTRAN is useful for troubleshooting orphaned connections (connections still open in the database but disconnected from the application or client) and for identifying transactions missing a COMMIT or ROLLBACK statement.

This command also returns the oldest distributed and undistributed replicated transactions, if any exist within the database. If there are no active transactions, no session-level data will be returned.

12-3. Querying Transaction Information by Session

Problem

There is an active transaction that you want to investigate because of reported timeouts.

Solution

This recipe demonstrates how to find out more information about an active transaction by querying thesys.dm_tran_session_transactions DMV. To demonstrate, I’ll describe a common scenario: Your application is encountering a significant number of blocks with a high duration. You’ve been told that this application always opens an explicit transaction prior to each query.

To illustrate this scenario, I’ll execute the following SQL (representing the application code that is causing the concurrency issue):

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

GO

USE AdventureWorks2014;

GO

BEGIN TRAN

SELECT *

FROM HumanResources.Department

INSERT INTO HumanResources.Department (Name, GroupName)

VALUES ('Test', 'QA'),

In a new SQL Server Management Studio query window, I would like to identify all open transactions by querying the sys.dm_tran_session_transactions DMV:

SELECT session_id, transaction_id, is_user_transaction, is_local

FROM sys.dm_tran_session_transactions

WHERE is_user_transaction = 1;

GO

This results in the following (your actual session IDs and transaction IDs will vary):

Now that I have a session ID to work with (again, the session_id you receive may be different), I can dig into the details about the most recent query executed by querying sys.dm_exec_connections and sys.dm_exec_sql_text:

SELECT s.text

FROM sys.dm_exec_connections c

CROSS APPLY sys.dm_exec_sql_text(c.most_recent_sql_handle) s

WHERE c.most_recent_session_id = 51;--use the session_id returned by the previous query

GO

This returns the last statement executed. (I could have also used the sys.dm_exec_requests DMV for an ongoing and active session; however, nothing was currently executing for my example transaction, so no data would have been returned.) See here:

text

-----------------------------------------------------------

BEGIN TRAN

SELECT *

FROM HumanResources.Department

INSERT INTO HumanResources.Department (Name, GroupName)

VALUES ('Test', 'QA'),

Since I also have the transaction ID from the first query against sys.dm_tran_session_transactions, I can use sys.dm_tran_active_transactions to learn more about the transaction itself:

SELECT transaction_begin_time

,tran_type = CASE transaction_type

WHEN 1 THEN 'Read/write transaction'

WHEN 2 THEN 'Read-only transaction'

WHEN 3 THEN 'System transaction'

WHEN 4 THEN 'Distributed transaction'

END

,tran_state = CASE transaction_state

WHEN 0 THEN 'not been completely initialized yet'

WHEN 1 THEN 'initialized but has not started'

WHEN 2 THEN 'active'

WHEN 3 THEN 'ended (read-only transaction)'

WHEN 4 THEN 'commit initiated for distributed transaction'

WHEN 5 THEN 'transaction prepared and waiting resolution'

WHEN 6 THEN 'committed'

WHEN 7 THEN 'being rolled back'

WHEN 8 THEN 'been rolled back'

END

FROM sys.dm_tran_active_transactions

WHERE transaction_id = 12969598; -- change this value to the transaction_id returned in the first

--query of this recipe

GO

This returns information about the transaction start time, the type of transaction, and the state of the transaction:

|

transaction_begin_time |

tran_type |

tran_state |

|---|---|---|

|

2014-12-07 10:03:26.520 |

Read/write transaction |

active |

How It Works

This recipe demonstrated how to use various DMVs to troubleshoot and investigate a long-running, active transaction. The columns you decide to use depend on the issue you are trying to troubleshoot. In this scenario, I used the following troubleshooting path:

- I queried sys.dm_tran_session_transactions in order to display a mapping between the session ID and the transaction ID (identifier of the individual transaction).

- I queried sys.dm_exec_connections and sys.dm_exec_sql_text in order to find the latest command executed by the session (referencing the most_recent_sql_handle column).

- Lastly, I queried sys.dm_tran_active_transactions in order to determine how long the transaction was open, the type of transaction, and the state of the transaction.

Using this troubleshooting technique allows you to go back to the application and pinpoint query calls for abandoned transactions (opened but never committed) and transactions that are inappropriate because they run too long or are unnecessary from the perspective of the application. Before proceeding, you should revisit the first query window and issue the following command to ensure the transaction is no longer running:

ROLLBACK TRANSACTION;

Locking is a normal and necessary part of a relational database system, ensuring the integrity of the data by not allowing concurrent updates to the same data or the viewing of data that is in the middle of being updated. SQL Server manages locking dynamically; however, it is still important to understand how Transact-SQL queries impact locking in SQL Server. Before proceeding to the recipes, I’ll briefly describe SQL Server locking fundamentals.

Locks help prevent concurrency problems from occurring. Concurrency problems (discussed in detail in the next section, “Transaction, Locking, and Concurrency”) can happen when one user attempts to read data that another is modifying, to modify data that another is reading, or to modify data that another transaction is trying to modify.

Locks are placed against SQL Server resources. How a resource is locked is called its lock mode. Table 12-2 reviews the main lock modes that SQL Server has at its disposal.

Table 12-2. SQL Server Lock Modes

|

Name |

Description |

|---|---|

|

Shared lock |

Shared locks are issued during read-only, nonmodifying queries. They allow data to be read but not updated by other processes while being held. |

|

Intent lock |

Intent locks effectively create a lock queue, designating the order of connections and their associated right to update or read resources. SQL Server uses intent locks to show future intention of acquiring locks on a specific resource. |

|

Update lock |

Update locks are acquired prior to modifying the data. When the row is modified, this lock is escalated to an exclusive lock. If not modified, it is downgraded to a shared lock. This lock type prevents deadlocks (discussed later in this chapter) if two connections hold a shared lock on a resource and attempt to convert to an exclusive lock but cannot because they are each waiting for the other transaction to release the shared lock. |

|

Exclusive lock |

This type of lock issues a lock on the resource that bars any kind of access (reads or writes). It is issued during INSERT, UPDATE, and DELETE statements. |

|

Schema modification |

This type of lock is issued when a DDL statement is executed. |

|

Schema stability |

This type of lock is issued when a query is being compiled. It keeps DDL operations from being performed on the table. |

|

Bulk update |

This type of lock is issued during a bulk-copy operation. Performance is increased for the bulk copy operation, but table concurrency is reduced. |

|

Key-range |

Key-range locks protect a range of rows (based on the index key)—for example, protecting rows in an UPDATE statement with a range of dates from 1/1/2014 to 12/31/2014. Protecting the range of data prevents row inserts into the date range that would be missed by the current data modification. |

You can lock all manner of resources in SQL Server, from a single row in a database to a table to the database itself. Lockable resources vary in granularity, from small (at the row level) to large (the entire database). Small-grain locks allow for greater database concurrency, because users can execute queries against specified unlocked rows. Each lock placed by SQL Server requires memory, however, so thousands of individual row locks can also affect SQL Server performance. Larger-grained locks reduce concurrency but take up fewer resources. Table 12-3 details the resources SQL Server can apply locks to.

Table 12-3. SQL Server Lock Resources

|

Resource Name |

Description |

|---|---|

|

Allocation unit |

A set of related pages grouped by data type; for example, data rows, index rows, and large object data rows |

|

Application |

An application-specified resource |

|

Database |

An entire database lock |

|

Extent |

Allocation unit of eight contiguous 8 KB data or index pages |

|

File |

The database file |

|

HOBT |

A heap (table without a clustered index) or B-tree |

|

Metadata |

System metadata |

|

Key |

Index-row lock, helping prevent phantom reads. Also called a key-range lock, this lock type uses both a range and a row component. The range represents the range of index keys between two consecutive index keys. The row component represents the lock type on the index entry |

|

Object |

A database object; for example, a table, view, stored procedure, or function |

|

Page |

An 8 KB data or index page |

|

RID |

Row identifier, designating a single table row |

|

Table |

A resource that locks entire table, data, and indexes |

Not all lock types are compatible with each other. For example, no other locks can be placed on a resource that has already been locked by an exclusive lock. The other transaction must wait, or time out, until the exclusive lock is released. A resource locked by an update lock can have a shared lock placed on it only by another transaction. A resource locked by a shared lock can have other shared or update locks placed on it.

Locks are allocated and escalated automatically by SQL Server. Escalation means that finer-grain locks (row or page locks) are converted into coarse-grain table locks. SQL Server will attempt to initialize escalation when a single Transact-SQL statement has more than 5,000 locks on a single table or index or if the number of locks on the SQL Server instance exceeds the available memory threshold. Locks take up system memory, so converting many locks into one larger lock can free up memory resources. The drawback to freeing up the memory resources, however, is reduced concurrency.

![]() Note SQL Server has a table option that allows you to disable lock escalation or enable lock escalation at the partition (instead of table) scope. I’ll demonstrate this in Recipe 12-5.

Note SQL Server has a table option that allows you to disable lock escalation or enable lock escalation at the partition (instead of table) scope. I’ll demonstrate this in Recipe 12-5.

12-4. Viewing Lock Activity

Problem

You want to check the current locking activity in SQL Server.

Solution

This recipe shows you how to monitor locking activity in the database using the SQL Server sys.dm_tran_locks DMV. The example query being monitored by this DMV will use a table locking hint.

In the first part of this recipe, a new query editor window is opened, and the following command is executed:

USE AdventureWorks2014;

BEGIN TRAN

SELECT ProductID, ModifiedDate

FROM Production.ProductDocument WITH (TABLOCKX);

In a second query editor window, the following query is executed:

SELECT sessionid = request_session_id ,

ResType = resource_type ,

ResDBID = resource_database_id ,

ObjectName = OBJECT_NAME(resource_associated_entity_id, resource_database_id) ,

RMode = request_mode ,

RStatus = request_status

FROM sys.dm_tran_locks

WHERE resource_type IN ('DATABASE', 'OBJECT'),

GO

![]() Tip This recipe narrows down the result set to two SQL Server resource types of DATABASE and OBJECT for clarity. Typically, you’ll monitor several types of resources. The resource type determines the meaning of the resource_associated_entity_id column, as I’ll explain in the “How It Works” section of this recipe.

Tip This recipe narrows down the result set to two SQL Server resource types of DATABASE and OBJECT for clarity. Typically, you’ll monitor several types of resources. The resource type determines the meaning of the resource_associated_entity_id column, as I’ll explain in the “How It Works” section of this recipe.

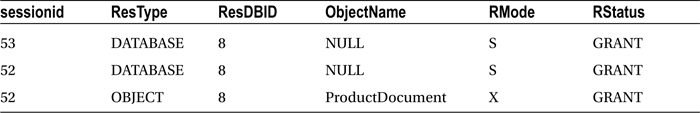

The query returned information about the locking session identifier (server process ID, or SPID), the resource being locked, the database, the object, the resource mode, and the lock status:

How It Works

The example began by starting a new transaction and executing a query against the Production.ProductDocument table using a TABLOCKX locking hint (this hint places an exclusive lock on the table). To monitor which locks were open for the current SQL Server instance, the sys.dm_tran_locks DMV was queried. It returned a list of active locks found in the AdventureWorks2014 database. The exclusive lock on the ProductDocument table could be seen in the last row of the results.

The first three columns define the session lock, resource type, and database ID:

SELECT sessionid = request_session_id ,

ResType = resource_type ,

ResDBID = resource_database_id ,

The next column uses the OBJECT_NAME function. Notice that it uses two parameters (object ID and database ID) in order to specify which name to access:

ObjectName = OBJECT_NAME(resource_associated_entity_id, resource_database_id) ,

I also query the locking request mode and status:

RMode = request_mode ,

RStatus = request_status

Lastly, the FROM clause references the DMV, and the WHERE clause designates two resource types:

FROM sys.dm_tran_locks

WHERE resource_type IN ('DATABASE', 'OBJECT'),

The resource_type column designates what the locked resource represents (for example, DATABASE, OBJECT, FILE, PAGE, KEY, RID, EXTENT, METADATA, APPLICATION, ALLOCATION_UNIT, or HOBT type). The resource_associated_entity_id depends on the resource type, determining whether the ID is an object ID, allocation unit ID, or HOBT ID:

- If the resource_associated_entity_id column contains an object ID (for a resource type of OBJECT)), you can translate the name using the sys.objects catalog view.

- If the resource_associated_entity_id column contains an allocation unit ID (for a resource type of ALLOCATION_UNIT), you can reference sys.allocation_units and reference the container_id. Container_id can then be joined to sys.partitions where you can then determine the object ID.

- If the resource_associated_entity_id column contains a HOBT ID (for a resource type of KEY, PAGE, ROW, or HOBT), you can directly reference sys.partitions and look up the associated object ID.

- For resource types such as DATABASE, EXTENT, APPLICATION, or METADATA, the resource_associated_entity_id column will be 0.

Use sys.dm_tran_locks to troubleshoot unexpected concurrency issues, such as a query session that may be holding locks longer than desired or be issuing a lock resource granularity or lock mode that you hadn’t expected (perhaps a table lock instead of a finer-grained row or page lock). Understanding what is happening at the locking level can help you troubleshoot query concurrency more effectively.

12-5. Controlling a Table’s Lock-Escalation Behavior

Problem

You want to alter how SQL Server behaves with regard to lock escalation.

Solution

Each lock that is created in SQL Server consumes memory resources. When the number of locks increases, memory decreases. If the percentage of memory being used for locks exceeds a certain threshold, SQL Server can convert fine-grained locks (page or row) into coarse-grained locks (table locks). This process is called lock escalation. Lock escalation reduces the overall number of locks being held on the SQL Server instance, thus reducing lock memory usage.

While finer-grained locks do consume more memory, they also can improve concurrency, because multiple queries can access unlocked rows. Introducing table locks may reduce memory consumption, but can also introduce blocking, because a single query holds an entire table. Depending on the application using the database, this behavior may not be desired, and you may want to exert more control over when SQL Server performs lock escalations.

SQL Server has the ability to control lock escalation at the table level using the ALTER TABLE command. You are now able to choose from the following three settings:

- TABLE, which is the default behavior used in SQL Server. When configured, lock escalation is enabled at the table level for both partitioned and nonpartitioned tables.

- AUTO enables lock escalation at the partition level (heap or B-tree) if the table is partitioned. If it is not partitioned, escalation will occur at the table level.

- DISABLE removes lock escalation at the table level. Note that you still may see table locks because of TABLOCK hints or for queries against heaps using a serializable isolation level.

This recipe demonstrates how to modify a table so as to use the AUTO and DISABLE settings:

USE AdventureWorks2014;

GO

ALTER TABLE Person.Address

SET ( LOCK_ESCALATION = AUTO );

SELECT lock_escalation,lock_escalation_desc

FROM sys.tables WHERE name='Address';

GO

This query returns the following:

|

lock_escalation |

lock_escalation_desc |

|---|---|

|

2 |

AUTO |

Next, I’ll disable escalation:

USE AdventureWorks2014;

GO

ALTER TABLE Person.Address

SET ( LOCK_ESCALATION = DISABLE);

SELECT lock_escalation,lock_escalation_desc

FROM sys.tables WHERE name='Address';

GO

This query returns the following:

|

lock_escalation |

lock_escalation_desc |

|---|---|

|

1 |

DISABLE |

How It Works

This recipe demonstrated enabling two SQL Server table options that control locking escalation. The command began with a standard ALTER TABLE statement designating the table name to modify:

ALTER TABLE Person.Address

The second line designated the SET command along with the LOCK_ESCALATION configuration to be used:

SET ( LOCK_ESCALATION = AUTO )

After changing the configuration, I was able to validate the option by querying the lock_escalation_desc column from the sys.tables catalog view:

Once the AUTO option is enabled, if the table is partitioned, lock escalation will occur at the partitioned level, which improves concurrency if there are multiple sessions acting against separate partitions.

![]() Note For further information on partitioning, see Chapter 16.

Note For further information on partitioning, see Chapter 16.

If the table is not partitioned, table-level escalation will occur as usual. If you designate the DISABLE option, table-level lock escalation will not occur. This can help improve concurrency but could result in increased memory consumption if your requests are accessing a large number of rows or pages.

Transaction, Locking, and Concurrency

One of the ACID properties is Isolation. Transaction isolation refers to the extent to which changes made by one transaction can be seen by other transactions occurring in the database (in other words, under conditions of concurrent database access). At the highest possible degree of isolation, each transaction occurs as if it were the only transaction taking place at that time. No changes made by other transactions are visible to it. At the lowest level, anything done in one transaction, whether committed or not, is visible by another transaction.

The ANSI/ISO SQL standard defines four types of interactions between concurrent transactions.

- Dirty reads: These occur while a transaction is updating a row, and a second transaction reads the row before the first transaction is committed. If the original update rolls back, the uncommitted changes will be read by the second transaction, even though they are never committed to the database. This is the definition of a dirty read.

- Nonrepeatable reads: These occur when one transaction is updating data and a second is reading the same data while the update is in progress. The data retrieved before the update will not match the data retrieved after the update.

- Phantom reads: These occur when a transaction issues two reads, and between the two reads, the underlying data is updated with data being inserted or deleted. This causes the results of each query to differ. Rows returned in one query that do not appear in the other are called phantom rows.

- Lost updates: This occurs when two transactions update a row’s value and the transaction to last update the row “wins.” Thus, the first update is lost.

SQL Server uses locking mechanisms to control the competing activity of simultaneous transactions. To avoid concurrency issues such as dirty reads, nonrepeatable reads, and so on, it implements locking to control access to database resources and to impose a certain level of transaction isolation. Table 12-4 describes the available isolation levels in SQL Server.

Table 12-4. SQL Server Isolation Levels

|

ISOLATION LEVEL |

DESCRIPTION |

|---|---|

|

READ COMMITTED (this is the default behavior of SQL Server) |

While READ COMMITTED is used, uncommitted data modifications can’t be read. Shared locks are used during a query, and data cannot be modified by other processes while the query is retrieving the data. Data inserts and modifications to the same table are allowed by other transactions, so long as the rows involved are not locked by the first transaction. |

|

READ UNCOMMITTED |

This is the least restrictive isolation level, issuing no locks on the data selected by the transaction. This provides the highest concurrency but the lowest amount of data integrity, because the data you read can be changed while you read it (as mentioned previously, these reads are known as dirty reads), or new data can be added or removed that would change your original query results. This option allows you to read data without blocking others, but with the danger of reading data “in flux” that could be modified during the read itself (including reading data changes from a transaction that ends up getting rolled back). For relatively static and unchanging data, this isolation level can potentially improve performance by instructing SQL Server not to issue unnecessary locking on the accessed resources. |

|

REPEATABLE READ |

When enabled, dirty and nonrepeatable reads are not allowed. This is achieved by placing shared locks on all read resources. New rows that may fall into the range of data returned by your query can, however, still be inserted by other transactions. |

|

SERIALIZABLE |

When enabled, this is the most restrictive setting. Range locks are placed on the data based on the search criteria used to produce the result set. This ensures that actions such as the insertion of new rows, the modification of values, or the deletion of existing rows that would have been returned within the original query and search criteria are not allowed. |

|

SNAPSHOT |

This isolation level allows you to read a transactionally consistent version of the data as it existed at the beginning of a transaction. Data reads do not block data modifications. However, the SNAPSHOT session will not detect changes being made. |

Transactions and locking go hand in hand. Depending on your application design, your transactions can significantly impact database concurrency and performance. Concurrency refers to how many people can query and modify the database and database objects at the same time. For example, the READ UNCOMMITTED isolation level allows the greatest amount of concurrency, since it issues no locks—with the drawback that you can encounter a host of data-isolation anomalies (dirty reads, for example). The SERIALIZABLE mode, however, offers very little concurrency with other processes when querying a larger range of data.

12-6. Configuring a Session’s Transaction-Locking Behavior

Problem

You want to change the default transaction-locking behavior for Transact-SQL statements used in a connection.

Solution

Use the SET TRANSACTION ISOLATION LEVEL command to set the default transaction-locking behavior for Transact-SQL statements used in a connection. You can have only one isolation level set at a time, and the isolation level does not change unless explicitly set. SET TRANSACTION ISOLATION LEVEL allows you to change the locking behavior for a specific database connection. The syntax for this command is as follows:

SET TRANSACTION ISOLATION LEVEL { READ UNCOMMITTED | READ COMMITTED

REPEATABLE READ

SNAPSHOT | SERIALIZABLE }

In this first example, SERIALIZABLE isolation is used to query the contents of a table. In the first query editor window, the following code is executed:

USE AdventureWorks2014;

GO

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

GO

BEGIN TRANSACTION

SELECT AddressTypeID, Name

FROM Person.AddressType

WHERE AddressTypeID BETWEEN 1 AND 6;

GO

This query returns the following results (while still leaving a transaction open for the query session):

AddressTypeID Name

1 Billing

2 Home

3 Main Office

4 Primary

5 Shipping

6 Archive

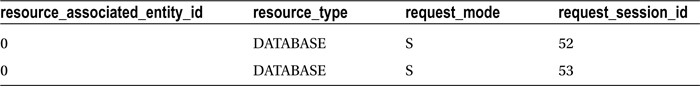

In a second query editor, the following query is executed to view the kinds of locks generated by the SERIALIZABLE isolation level:

SELECT resource_associated_entity_id, resource_type,

request_mode, request_session_id

FROM sys.dm_tran_locks;

GO

This shows several key locks being held for request_session_id 52 (which is the other session’s ID):

Back in the first query editor window, execute the following code to end the transaction and remove the locks:

COMMIT TRANSACTION;

In contrast, the same query is executed again in the first query editor window, this time using the READ UNCOMMITTED isolation level to read the range of rows:

USE AdventureWorks2014;

GO

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;

GO

BEGIN TRANSACTION

SELECT AddressTypeID, Name

FROM Person.AddressType

WHERE AddressTypeID BETWEEN 1 AND 6;

GO

In a second query editor, the following query is executed to view the kinds of locks generated by the READ UNCOMMITTED isolation level:

SELECT resource_associated_entity_id, resource_type,

request_mode, request_session_id

FROM sys.dm_tran_locks;

GO

This returns the following (abridged) results:

Unlike SERIALIZABLE, the READ UNCOMMITTED isolation level creates no additional locks on the keys of the Person.AddressType table.

Returning to the first query editor with the READ UNCOMMITTED query, the transaction is ended for cleanup purposes:

COMMIT TRANSACTION;

I’ll demonstrate the SNAPSHOT isolation level next. In the first query editor window, the following code is executed:

ALTER DATABASE AdventureWorks2014

SET ALLOW_SNAPSHOT_ISOLATION ON;

GO

USE AdventureWorks2014;

GO

SET TRANSACTION ISOLATION LEVEL SNAPSHOT;

GO

BEGIN TRANSACTION

SELECT CurrencyRateID,EndOfDayRate

FROM Sales.CurrencyRate

WHERE CurrencyRateID = 8317;

This query returns the following:

|

CurrencyRateID |

EndOfDayRate |

|---|---|

|

8317 |

0.6862 |

In a second query editor window, the following query is executed:

USE AdventureWorks2014;

GO

UPDATE Sales.CurrencyRate

SET EndOfDayRate = 1.00

WHERE CurrencyRateID = 8317;

GO

Now back to the first query editor; the following query is executed once more:

SELECT CurrencyRateID,EndOfDayRate

FROM Sales.CurrencyRate

WHERE CurrencyRateID = 8317;

GO

This query returns the following:

|

CurrencyRateID |

EndOfDayRate |

|---|---|

|

8317 |

0.6862 |

The same results are returned as before, even though the row was updated by the second query editor query. The SELECT was not blocked from reading the row, nor was the UPDATE blocked from making the modification.

Now, return to the first query window to commit the transaction and reissue the query:

COMMIT TRANSACTION;

SELECT CurrencyRateID,EndOfDayRate

FROM Sales.CurrencyRate

WHERE CurrencyRateID = 8317;

GO

This returns the updated value:

|

CurrencyRateID |

EndOfDayRate |

|---|---|

|

8317 |

1.00 |

How It Works

In this recipe, I demonstrated how to change the locking isolation level of a query session by using SET TRANSACTION ISOLATION LEVEL. Executing this command isn’t necessary if you want to use the default SQL Server isolation level, which is READ COMMITTED. Otherwise, once you set an isolation level, it remains in effect for the connection until explicitly changed again.

The first example in the recipe demonstrated using the SERIALIZABLE isolation level:

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

GO

An explicit transaction was then started, and a query was executed against the Person.AddressType table for all rows that fell within a specific range of AddressTypeID values:

BEGIN TRANSACTION

SELECT AddressTypeID, Name

FROM Person.AddressType

WHERE AddressTypeID BETWEEN 1 AND 6;

In a separate connection, a query was then executed against the sys.dm_tran_locks DMV, which returned information about active locks being held for the SQL Server instance. In this case, we saw a number of key range locks, which served the purpose of prohibiting other connections from inserting, updating, or deleting data that would cause different results in the query’s search condition (WHERE AddressTypeID BETWEEN 1 AND 6).

In the second example, the isolation level was set to READ UNCOMMITTED:

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;

GO

Querying sys.dm_tran_locks again, we saw that this time no row, key, or page locks were held at all on the table, allowing other transactions to potentially modify the queried rows while the original transaction remained open. With this isolation level, the query performs dirty reads, meaning that the query could read data with in-progress modifications, whether or not the actual modification is committed or rolled back later.

In the third example from the recipe, the database setting ALLOW_SNAPSHOT_ISOLATION was enabled for the database:

ALTER DATABASE AdventureWorks2014

SET ALLOW_SNAPSHOT_ISOLATION ON;

GO

This option had to be ON in order to start a SNAPSHOT transaction. In the next line of code, the database context was changed, and SET TRANSACTION ISOLATION LEVEL was set to SNAPSHOT:

USE AdventureWorks2014;

GO

SET TRANSACTION ISOLATION LEVEL SNAPSHOT;

GO

A transaction was then opened, and a query against Sales.CurrencyRate was performed:

BEGIN TRANSACTION

SELECT CurrencyRateID,EndOfDayRate

FROM Sales.CurrencyRate

WHERE CurrencyRateID = 8317;

In the second query editor session, the same Sales.CurrencyRate row being selected in the first session query was modified:

USE AdventureWorks2014;

GO

UPDATE Sales.CurrencyRate

SET EndOfDayRate = 1.00

WHERE CurrencyRateID = 8317;

GO

Back at the first query editor session, although the EndOfDayRate was changed to 1.0 in the second session, executing the query again in the SNAPSHOT isolation level showed that the value of that column was still 0.6862. This new isolation level provided a consistent view of the data as of the beginning of the transaction. After committing the transaction, reissuing the query against Sales.CurrencyRate revealed the latest value.

What if you decide to UPDATE a row in the SNAPSHOT session that was updated in a separate session? Had the SNAPSHOT session attempted an UPDATE against CurrencyRateID 8317 instead of a SELECT, an error would have been raised, warning you that an update was made against the original row while in SNAPSHOT isolation mode.

Msg 3960, Level 16, State 1, Line 2

Cannot use snapshot isolation to access table 'Sales.CurrencyRate'

directly or indirectly in database 'AdventureWorks2014'.

Snapshot transaction aborted due to update conflict.

Retry transaction.

Blocking

Blocking occurs when one transaction in a database session is locking resources that one or more other session transactions want to read or modify. Short-term blocking is usually okay and is expected for busy applications. However, poorly designed applications can cause long-term blocking, unnecessarily keeping locks on resources and blocking other sessions from reading or updating them.

In SQL Server, a blocked process remains blocked indefinitely or until it times out (based on SET LOCK_TIMEOUT), the server goes down, the process is killed, the connection finishes its updates, or something happens to the original transaction to cause it to release its locks on the resource.

Here are some reasons why long-term blocking can happen:

- Excessive row locks on a table without an index can cause SQL Server to acquire a table lock, blocking out other transactions.

- Applications open a transaction and then request user feedback or interaction while the transaction stays open. This is usually when an end user is allowed to enter data in a GUI while a transaction remains open. While open, any resources referenced by the transaction may be held with locks.

- Transactions BEGIN and then look up data that could have been referenced prior to the transaction starting.

- Queries use locking hints inappropriately; for example, if the application needs only a few rows but uses a table-lock hint instead.

- The application uses long-running transactions that update many rows or many tables within one transaction (chunking large updates into smaller update transactions can help improve concurrency).

12-7. Identifying and Resolving Blocking Issues

Problem

You need to identify any blocking processes, and associated TSQL being executed, within your database.

Solution

In this recipe, I’ll demonstrate how to identify a blocking process, view the Transact-SQL being executed by the process, and then forcibly shut down the active session’s connection (thus rolling back any open work not yet committed by the blocking session). First, however, let’s look at a quick background of the commands used in this example.

This recipe demonstrates how to identify blocking processes with the SQL Server DMV sys.dm_os_waiting_tasks. This view is intended to be used in lieu of the sp_who2 system-stored procedure, which was used in previous versions of SQL Server.

After identifying the blocking process, this recipe will then use the sys.dm_exec_sql_text dynamic management function and sys.dm_exec_connections DMV used earlier in the chapter to identify the SQL text of the query that is being executed—and then, as a last resort, forcefully end the process.

To forcefully shut down a wayward active query session, the KILL command is used. KILL should be used only if other methods are not available, including waiting for the process to stop on its own or shutting down or canceling the operation via the calling application. The syntax for KILL is as follows:

KILL {SPID | UOW} [WITH STATUSONLY]

Table 12-5 describes the arguments for this command.

Table 12-5. KILL Command Arguments

|

Argument |

Description |

|---|---|

|

SPID |

This indicates the session ID associated with the active database connection to be shut down. |

|

UOW |

This is the unit-of-work identifier for a distributed transaction, which is the unique identifier of a specific distributed transaction process. |

|

WITH STATUSONLY |

Some KILL statements take longer to roll back a transaction than others (depending on the scope of updates being performed by the session). To check the status of a rollback, you can use WITH STATUSONLY to get an estimate of rollback time. |

Beginning the example, the following query is executed in the first query editor session in order to set up a blocking process:

USE AdventureWorks2014;

GO

BEGIN TRAN

UPDATE Production.ProductInventory

SET Quantity = 400

WHERE ProductID = 1 AND LocationID = 1;

Next, in a second query editor window, the following query is executed:

USE AdventureWorks2014;

GO

BEGIN TRAN

UPDATE Production.ProductInventory

SET Quantity = 406

WHERE ProductID = 1 AND LocationID = 1;

Now, in a third query editor window, this next query is executed:

SELECT blocking_session_id, wait_duration_ms, session_id

FROM sys.dm_os_waiting_tasks

WHERE blocking_session_id IS NOT NULL;

GO

This query returns the following (your results will vary):

|

blocking_session_id |

wait_duration_ms |

session_id |

|---|---|---|

|

53 |

27371 |

52 |

This query identified that session 53 is blocking session 52.

To see what session 53 is doing, execute the following query in the same window as the previous query:

SELECT t.text

FROM sys.dm_exec_connections c

CROSS APPLY sys.dm_exec_sql_text (c.most_recent_sql_handle) t

WHERE c.session_id = 53; --use the blocking_session_id from the previous query

GO

This query returns the following:

text

BEGIN TRAN

UPDATE Production.ProductInventory

SET Quantity = 400

WHERE ProductID = 1 AND LocationID = 1;

Next, to forcibly shut down the session, execute this query:

KILL 53;

This results in the following:

Command(s) completed successfully.

The second session’s UPDATE is then allowed to proceed once the first session’s connection is removed.

How It Works

The recipe demonstrated blocking by executing an UPDATE against the Production.Productlnventory table that had a transaction that had been opened but not committed. In a different session, a similar query was executed against the same table and the same row. Because the other connection’s transaction never committed, the second connection must wait in line indefinitely before it has a chance to update the record.

In a third query editor window, the sys.dm_os_waiting_tasks DMV was queried, returning information on the session being blocked by another session.

When troubleshooting blocks, you’ll want to see exactly what the blocking session_id is doing. To view this, the recipe used a query against sys.dm_exec_connections and sys.dm_exec_sql_text. The sys.dm_exec_connections DMV was used to retrieve the most_recent_sql_handle column for session_id 53. This is a pointer to the SQL text in memory and was used as an input parameter for the sys.dm_exec_sql_text dynamic management function. The text column is returned from sys.dm_exec_sql_text, displaying the SQL text of the blocking process.

![]() Note Often blocks chain, and you must work your way through each blocked process up to the original blocking process using the blocking_session_id and session_id columns.

Note Often blocks chain, and you must work your way through each blocked process up to the original blocking process using the blocking_session_id and session_id columns.

KILL was then used to forcibly end the blocking process, but in a production scenario, you’ll want to see whether the process is valid and, if so, whether it should be allowed to complete or whether it can be shut down or cancelled using the application (by the application end user, for example). Prior to stopping the process, be sure you are not stopping a long-running transaction that is critical to the business, like a payroll update, for example. If there is no way to stop the transaction (for example, the application that spawned it cannot commit the transaction), you can use the KILL command (followed by the SPID to terminate).

12-8. Configuring How Long a Statement Will Wait for a Lock to Be Released

Problem

You need to extend how long a transaction can wait if it is blocked by another transaction.

Solution

When a transaction or statement is being blocked, it is waiting for a lock on a resource to be released. This recipe demonstrates the SET LOCK_TIMEOUT option, which specifies how long the blocked statement should wait for a lock to be released before returning an error.

The syntax is as follows:

SET LOCK_TIMEOUT timeout_period

The timeout period is the number of milliseconds before a locking error will be returned. To set up this recipe’s demonstration, I will execute the following batch:

USE AdventureWorks2014;

GO

BEGIN TRAN

UPDATE Production.ProductInventory

SET Quantity = 400

WHERE ProductID = 1 AND LocationID = 1;

In a second query window, I will execute the following code, which demonstrates setting up a lock timeout period of one second (1,000 milliseconds):

USE AdventureWorks2014;

GO

SET LOCK_TIMEOUT 1000;

UPDATE Production.ProductInventory

SET Quantity = 406

WHERE ProductID = 1 AND LocationID = 1;

After one second (1,000 milliseconds), I will receive the following error message:

Msg 1222, Level 16, State 51, Line 4

Lock request time out period exceeded.

The statement has been terminated.

How It Works

In this recipe, the lock timeout is set to 1000 milliseconds (1 second). This setting doesn’t impact how long a resource can be held by a process, only how long it has to wait for another process to release access to the resource. Before proceeding, you should revisit the first query window and issue the following command to ensure the transaction is no longer running:

ROLLBACK TRANSACTION;

Deadlocking occurs when one user session (let’s call it Session 1) has locks on a resource that another user session (let’s call it Session 2) wants to modify, and Session 2 has locks on resources that Session 1 needs to modify. Neither Session 1 nor Session 2 can continue until the other releases its respective locks, so SQL Server chooses one of the sessions in the deadlock as the deadlock victim.

![]() Note A deadlock victim has its session killed, and its transactions are rolled back.

Note A deadlock victim has its session killed, and its transactions are rolled back.

Here are some reasons why deadlocks can happen:

- The application accesses tables in a different order in each session. For example, Session 1 updates Customers and then Orders, whereas Session 2 updates Orders and then Customers. This increases the chance of two processes deadlocking, rather than accessing and updating a table in a serialized (in order) fashion.

- The application uses long-running transactions, updating many rows or many tables within one transaction. This increases the surface area of rows that can cause deadlock conflicts.

- In some situations, SQL Server issues several row locks, which it later decides must be escalated to a table lock. If these rows exist on the same data pages, and two sessions are both trying to escalate the lock granularity on the same page, a deadlock can occur.

12-9. Identifying Deadlocks with a Trace Flag

Problem

You are experiencing a high volume of deadlocks within your database. You need to find out what is causing the deadlocks.

Solution

If you are having deadlock trouble in your SQL Server instance, you can use this recipe to make sure deadlocks are logged to the SQL Server log appropriately using the DBCC TRACEON, DBCC TRACEOFF, and DBCC TRACESTATUS commands. These functions enable, disable, and check the status of trace flags.

![]() Tip There are other methods in SQL Server for troubleshooting deadlocks, such as using SQL Profiler, but since this book is Transact-SQL focused, I will be focusing on Transact-SQL based options.

Tip There are other methods in SQL Server for troubleshooting deadlocks, such as using SQL Profiler, but since this book is Transact-SQL focused, I will be focusing on Transact-SQL based options.

Trace flags are used within SQL Server to enable or disable specific behaviors for the SQL Server instance. By default, SQL Server doesn’t return significant logging when a deadlock event occurs. Using trace flag 1222, information about locked resources and types participating in a deadlock are returned in an XML format, helping you troubleshoot the event.

The DBCC TRACEON command enables trace flags. The syntax is as follows:

DBCC TRACEON ( trace# [ ,...n ][ ,-1 ] ) [ WITH NO_INFOMSGS ]

Table 12-6 describes the arguments for this command.

Table 12-6. DBCC TRACEON Command Arguments

|

Argument |

Description |

|---|---|

|

trace# |

This specifies one or more trace flag numbers to enable. |

|

-1 |

When -1 is designated, the specified trace flags are enabled globally. |

|

WITH NO_INFOMSGS |

When included in the command, WITH NO_INFOMSGS suppresses informational messages from the DBCC output. |

The DBCC TRACESTATUS command is used to check on the status (enabled or disabled) for a specific flag or flags. The syntax is as follows:

DBCC TRACESTATUS ( [ [ trace# [,...n ]][,][ -1 ]]) [ WITH NO_INFOMSGS ]

Table 12-7 describes the arguments for this command.

Table 12-7. DBCC TRACESTATUS Command Arguments

|

Argument |

Description |

|---|---|

|

trace# [,...n ]] |

This specifies one or more trace flag numbers to check the status of. |

|

-1 |

This shows globally enabled flags. |

|

WITH NO_INFOMSGS |

When included in the command, WITH NO_INFOMSGS suppresses informational messages from the DBCC output. |

The DBCC TRACEOFF command disables trace flags. The syntax is as follows:

DBCC TRACEOFF ( trace# [ ,.. .n ] [ , -1 ] ) [ WITH NO_INFOMSGS ]

Table 12-8 describes the arguments for this command.

Table 12-8. DBCC TRACEOFF Command Arguments

|

Argument |

Description |

|---|---|

|

trace# |

This indicates one or more trace flag numbers to disable. |

|

-1 |

This disables the globally set flags. |

|

WITH NO_INFOMSGS |

When included in the command, WITH NO_INFOMSGS suppresses informational messages from the DBCC output. |

To demonstrate this recipe, a deadlock will be simulated. In a new query editor window, the following query is executed:

USE AdventureWorks2014;

GO

SET NOCOUNT ON;

WHILE 1=1

BEGIN

BEGIN TRANSACTION

UPDATE Purchasing.Vendor

SET CreditRating = 1

WHERE BusinessEntityID = 1494;

UPDATE Purchasing.Vendor

SET CreditRating = 2

WHERE BusinessEntityID = 1492;

COMMIT TRANSACTION

END

In a second query editor window, the following query is executed:

USE AdventureWorks2014;

GO

SET NOCOUNT ON;

WHILE 1=1

BEGIN

BEGIN TRANSACTION

UPDATE Purchasing.Vendor

SET CreditRating = 2

WHERE BusinessEntityID = 1492;

UPDATE Purchasing.Vendor

SET CreditRating = 1

WHERE BusinessEntityID = 1494;

COMMIT TRANSACTION

END

After a few seconds, check each query editor window until the following error message appears on one of the query editors:

Msg 1205, Level 13, State 51, Line 9

Transaction (Process ID 52) was deadlocked on lock resources with another process and has been chosen as the deadlock victim. Rerun the transaction.

Looking at the SQL log found in SQL Server Management Studio, the deadlock event was not logged. I’ll now open a third query editor window and execute the following command:

DBCC TRACEON (1222, -1)

GO

DBCC TRACESTATUS

DBCC TRACESTATUS shows the active traces running for both the local session and globally:

TraceFlag Status Global Session

1222 110 1 1

To simulate another deadlock, I’ll restart the “winning” connection query (the one that wasn’t killed in the deadlock), and then the deadlock “losing” session, causing another deadlock after a few seconds.

After the deadlock has occurred, I will stop the other executing query. Now the SQL log in SQL Server Management Studio contains a detailed error message from the deadlock event, including the database and object involved, the lock mode, and the Transact-SQL statements involved in the deadlock.

When deadlocks occur, you’ll want to find out the queries that are involved so you can troubleshoot them accordingly. The following excerpt from the log shows a deadlocked query:

05/08/2012 20:20:00,spidl6s,Unknown,

UPDATE [Purchasing].[Vendor] set [CreditRating] = @1

WHERE [BusinessEntityID]=@2

From this we can tell which query was involved in the deadlocking, which is often enough to get started with a solution. Other important information you can retrieve by using trace 1222 includes the login name of the deadlocked process, the client application used to submit the query, and the isolation level used for its connection (letting you know whether that connection is using an isolation level that doesn’t allow for much concurrency). See the following log:

... clientapp=Microsoft SOL Server Management Studio - Query hostname=LesRois hostpid=2388 loginname=LesRoisAdministrator isolationlevel=serializable (4) xactid=1147351 currentdb=8 lockTimeout=4294967295 clientoption1=673187936 clientoption2=390200

After examining the SQL log, disable the trace flag in the query editor:

DBCC TRACEOFF (1222, -1)

GO

DBCC TRACESTATUS

Before proceeding, you should now revisit the first query window and issue the following command to ensure the transaction is no longer running:

ROLLBACK TRANSACTION;

How It Works

In this recipe, I simulated a deadlock using two separate queries that updated the same rows repeatedly, but in the opposite order. When a deadlock occurred, the error message was returned to the query editor window, but nothing was written to the SQL log.

To enable deadlock logging to the SQL log, the recipe enabled trace flag 1222. Trace 1222 returns detailed deadlock information to the SQL log. The -1 flag indicated that trace flag 1222 should be enabled globally for all SQL Server connections. To turn on a trace flag, DBCC TRACEON was used, with the 1222 flag in parentheses:

DBCC TRACEON (1222, -1)

To verify that the flag was enabled, DBCC TRACESTATUS was executed:

DBCC TRACESTATUS

After encountering another deadlock, the deadlock information was logged in the SQL log. The flag was then disabled using DBCC TRACEOFF:

DBCC TRACEOFF (1222, -1)

12-10. Identifying Deadlocks with Extended Events

Problem

You are experiencing a high volume of deadlocks within your database. You need to find out the causes of the deadlocks.

Solution

If you are having deadlock trouble in your SQL Server instance, follow this recipe to make sure deadlocks are logged to a file on the filesystem for later review by the DBA team.

To demonstrate this recipe, a deadlock will be simulated. In a new query editor window, I will reuse the code from the previous section (relisted here) to cause a deadlock:

USE AdventureWorks2014;

GO

SET NOCOUNT ON;

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

WHILE 1=1

BEGIN

BEGIN TRAN

UPDATE Purchasing.Vendor

SET CreditRating = 1

WHERE BusinessEntityID = 1494;

UPDATE Purchasing.Vendor

SET CreditRating = 2

WHERE BusinessEntityID = 1492;

COMMIT TRAN

END

In a second query editor window, the following query is executed:

USE AdventureWorks2014;

GO

SET NOCOUNT ON;

WHILE 1=1

BEGIN

BEGIN TRANSACTION

UPDATE Purchasing.Vendor

SET CreditRating = 2

WHERE BusinessEntityID = 1492;

UPDATE Purchasing.Vendor

SET CreditRating = 1

WHERE BusinessEntityID = 1494;

COMMIT TRANSACTION

END

In a third query editor window, the following query is executed to create the extended event session so as to trap the deadlock information. This session should be created prior to running the deadlock scenario that I just relisted from Recipe 12-9. See the following:

CREATE EVENT SESSION [Deadlock] ON SERVER

ADD EVENT sqlserver.lock_deadlock(

ACTION(sqlserver.database_name,sqlserver.plan_handle,sqlserver.sql_text)),

ADD EVENT sqlserver.xml_deadlock_report

ADD TARGET package0.event_file(SET filename=N'C:DatabaseXEDeadlock.xel')

--Ensure the file path exists and permissions are set or change the path.

WITH (STARTUP_STATE=ON)

GO

ALTER EVENT SESSION Deadlock

ON SERVER

STATE = START;

With this Extended Event (XE or XEvent) session created, when the deadlock scripts are executed the deadlock graph with pertinent information from the winning and losing sessions involved in the deadlock will be trapped to the output file at C:DatabaseXEDeadlock.xel. Having encountered a deadlock, I will now run the next script to see what has been captured for the deadlock:

/* read the captured data */

SELECT TargetData AS DeadlockGraph

FROM

(SELECT CAST(event_data AS xml) AS TargetData

FROM sys.fn_xe_file_target_read_file('C:DatabaseXEDeadlock*.xel',NULL,NULL, NULL)

)AS Data

WHERE TargetData.value('(event/@name)[1]', 'varchar(50)') = 'xml_deadlock_report';

This will output the deadlock graph in a basic XML format.

How It Works

In this recipe, I simulated a deadlock using two separate queries that updated the same rows repeatedly, but updating two rows in the opposite order. When a deadlock occurred, the error message was logged to the query editor window, but nothing was written to the SQL log.

To enable deadlock logging to a file on the file system, the recipe enabled an XE session to trap the deadlock graph. This session will trap the SQL statements for each of the sessions involved in the deadlock, along with the associated plan handle and database source.

With the flexibility of Extended Events, this session could be altered to include more or less data while still offering a lightweight means to capture the deadlock for effective troubleshooting.

12-11. Setting Deadlock Priority

Problem

While trying to resolve deadlock issues, you have determined that certain query sessions are less critical, and you want to increase the chance of those sessions being chosen as the deadlock victim.

Solution

You can increase a query session’s chance of being chosen as a deadlock victim by using the SET DEADLOCK_PRIORITY command. The syntax for this command is as follows:

SET DEADLOCK_PRIORITY { LOW | NORMAL | HIGH | <numeric-priority> }

Table 12-9 describes the arguments for this command.

Table 12-9. SET DEADLOCK_PRIORITY Command Arguments

|

Argument |

Description |

|---|---|

|

LOW |

LOW makes the current connection the likely deadlock victim. |

|

NORMAL |

NORMAL lets SQL Server decide based on which connection seems least expensive to roll back. |

|

HIGH |

HIGH lessens the chances of the connection being chosen as the victim, unless the other connection is also HIGH or has a numeric priority greater than 5. |

|

<numeric-priority> |

The numeric priority allows you to use a range of values from -10 to 10, where -10 is the most likely deadlock victim, up to 10 being the least likely to be chosen as a victim. The higher number between two participants in a deadlock wins. |

For example, had the first query from the previous recipe used the following deadlock priority command, it would almost certainly have been chosen as the victim (normally, the default deadlock victim is the connection SQL Server deems least expensive to cancel and roll back):

USE AdventureWorks2014;

GO

SET NOCOUNT ON;

SET DEADLOCK_PRIORITY LOW;

WHILE 1=1

BEGIN

BEGIN TRANSACTION

UPDATE Purchasing.Vendor

SET CreditRating = 1

WHERE BusinessEntityID = 1492;

UPDATE Purchasing.Vendor

SET CreditRating = 2

WHERE BusinessEntityID = 1494;

COMMIT TRANSACTION

END

GO

How It Works

You can also set the deadlock priority to HIGH and NORMAL. HIGH means that unless the other session is of the same priority, it will not be chosen as the victim. NORMAL is the default behavior and will be chosen if the other session is HIGH, but will not be chosen if the other session is LOW. If both sessions have the same priority, the least expensive transaction to roll back will be chosen.