Chapter 7

Domain 5: Cryptography

As a Systems Security Certified Practitioner (SSCP), you may be called upon to be a member of a team and take an active part in securing data in transit and at rest. Security practitioners use cryptographic solutions to fulfill several fundamental roles, including confidentiality, integrity, and authentication. (Confidentiality and integrity, together with availability, make up the familiar CIA triad.) The practitioner must be aware of, and use, the cryptographic systems designed for the purpose at hand.

Confidentiality includes all of the methods that ensure that information remains private. Data at rest, or data in storage, is information that resides in a permanent location. The data might be stored on a local hard disk drive, on a USB drive, on a network (such as a storage area network or network attached storage), or even in the cloud. It may also include long-term archival data stored on tape or other backup media that must be maintained in storage for a number of years based on various regulations.

Data in transit is the data that is moving from one network to another, within a private network, or even between applications. Perhaps the most widely required goal of cryptosystems is the facilitation of secret communications between individuals and groups. While in transit, data must be kept confidential. This type of data is generally encrypted or encapsulated by an encryption algorithm. Data in transit might be traveling on a private corporate network, on a public network such as the Internet, or on a wireless network, such as when a user is connected to a Wi-Fi access point. Data in transit and data at rest require different types of confidentiality protection.

Data integrity ensures that a message has not been altered while in transit. Various mechanisms are used to ensure that the message received is identical to the message that was sent. Message integrity is enforced through the use of message digests created upon transmission of a message. The recipient of the message rehashes the message and compares the message digest to ensure the message did not change in transit. Digital signing of messages is also used to provide, for example, proof of origin. Integrity can be enforced by both public and secret key cryptosystems.
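As a minimal sketch of the digest-and-compare procedure just described, the Python code below uses the standard `hashlib` module and assumes SHA-256 as the hashing algorithm. The helper names `make_digest` and `verify` are illustrative, not part of any standard API.

```python
import hashlib
import hmac

def make_digest(message: bytes) -> str:
    """Sender side: compute a SHA-256 message digest (assumed algorithm)."""
    return hashlib.sha256(message).hexdigest()

def verify(message: bytes, received_digest: str) -> bool:
    """Receiver side: rehash the message and compare with the digest that arrived."""
    # compare_digest compares the two strings in constant time
    return hmac.compare_digest(make_digest(message), received_digest)

original = b"Transfer $100 to account 12345"
digest = make_digest(original)
print(verify(original, digest))                           # True
print(verify(b"Transfer $900 to account 12345", digest))  # False
```

A bare digest only proves the message is unchanged if the digest itself arrives intact; when an attacker could replace both the message and its digest, a keyed hash or a digital signature is needed.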

Authentication is a major function of cryptosystems. A shared secret key may be used to authenticate the other party, because only the parties to the exchange hold the same key. Another authentication method uses the private key in an asymmetric cryptographic system. Only the owner holds the private key, so only the owner can decrypt messages encrypted with the matching public key; doing so demonstrates possession of the private key and authenticates the owner. Conversely, when the owner digitally signs a message with the private key, anyone holding the public key can verify that the message came from the owner, who cannot later deny having sent it. This property is referred to as nonrepudiation.
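To make the private-key/public-key relationship concrete, the following Python sketch signs and verifies a message with deliberately tiny textbook RSA. The primes 61 and 53, the exponent 17, and the helper names are illustrative assumptions chosen so the numbers stay readable; real keys are thousands of bits long and are used with a padding scheme, so treat this strictly as a toy.

```python
import hashlib

# TOY textbook-RSA key pair built from tiny primes (illustrative values only)
P, Q = 61, 53
N = P * Q                            # public modulus, 3233
E = 17                               # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent, 2753 (Python 3.8+)

def digest_int(message: bytes) -> int:
    """Hash the message and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def sign(message: bytes) -> int:
    """Only the private-key holder (who knows D) can produce this value."""
    return pow(digest_int(message), D, N)

def verify(message: bytes, signature: int) -> bool:
    """Anyone with the public key (N, E) can check the signature."""
    return pow(signature, E, N) == digest_int(message)

msg = b"I authorize this purchase order"
sig = sign(msg)
print(verify(msg, sig))              # True
print(verify(msg, (sig + 1) % N))    # False: altered signature
```

Because only the holder of `D` could have produced a value that verifies under the public key, a valid signature supports the nonrepudiation claim described above.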

In this chapter we will explore the techniques used in modern cryptographic systems.

Concepts and Requirements of Cryptography

The use of cryptography is thousands of years old. Ancient leaders and monarchs needed to send messages to distant armies while keeping the content of those messages confidential. Some early messages were written on leather straps wound around rods of a particular size. The strap was then sent to the receiver, who wound it around a rod of the same size, which enabled the receiver to read the message. Other encryption methods used a circular device: an inner ring bearing the alphabet could be rotated against an outer ring bearing another alphabet, and depending on how the rings were rotated, different characters lined up. Known as the Caesar cipher, this was one of the earliest substitution ciphers. The term ROT-3 indicated that the inner ring was rotated three characters; the corresponding letter was then read from the outer ring. Cipher devices like this remained in use through the American Civil War.
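The ROT-3 rotation described above is simple enough to express in a few lines of Python. This is an illustrative sketch (the function name `caesar` is hypothetical) that treats the uppercase alphabet as the inner ring and wraps around from Z back to A.

```python
import string

ALPHABET = string.ascii_uppercase

def caesar(text: str, shift: int) -> str:
    """Rotate each letter `shift` places; non-letters pass through unchanged."""
    result = []
    for ch in text.upper():
        if ch in ALPHABET:
            # Rotate the letter's position and wrap around the 26-letter ring
            result.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            result.append(ch)
    return "".join(result)

ciphertext = caesar("ATTACK AT DAWN", 3)   # ROT-3
print(ciphertext)                          # DWWDFN DW GDZQ
print(caesar(ciphertext, -3))              # ATTACK AT DAWN
```

Decryption is the same rotation in the opposite direction, which is why anyone who learns the shift value can read every message.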

Beginning in the Industrial Revolution, various machines were designed to encrypt and decrypt messages, particularly messages sent in wartime. The machine age of cryptography ushered in automatic encryption machines such as the German Enigma machine and the Japanese Red and Purple machines. These mechanisms created cryptographic messages that were very difficult to decipher.

Today, computers both encrypt and decrypt messages at ever-increasing speeds, and that same growth in computing speed challenges organizations because it also benefits attackers. Careful consideration must be given to cryptographic systems used for very long-term data storage. According to Moore's law, as it is commonly stated, processing power roughly doubles every 18 months. This implies that messages encrypted to today's standards may become far easier to break within the years or decades that the data must remain protected.
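One rough way to quantify this concern: if attackers' computing speed doubles every 18 months, each doubling halves the time needed for a brute-force search, which is equivalent to losing one bit of key length. The Python sketch below applies that simple model; the function names are hypothetical, and the model ignores algorithmic breakthroughs and cost, so treat the numbers as an illustration only.

```python
def doublings(years: float, months_per_doubling: float = 18) -> float:
    """Number of times computing power doubles over the given period."""
    return years * 12 / months_per_doubling

def effective_key_bits(key_bits: int, years: float, months_per_doubling: float = 18) -> float:
    """Each doubling of attacker speed halves brute-force time, i.e. costs one bit."""
    return key_bits - doublings(years, months_per_doubling)

# A record that must stay protected for 15 years
print(doublings(15))                # 10.0
print(effective_key_bits(56, 15))   # 46.0
print(effective_key_bits(128, 15))  # 118.0
```

A 56-bit key that is already marginal today loses ten more bits of margin over 15 years under this model, while a 128-bit key still retains a very large work factor.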

Terms and Concepts Used in Cryptography

As a practitioner, you must understand the terms and techniques used in the area of cryptography. Cryptographic methods are used to ensure the confidentiality as well as the integrity of messages. Confidentiality ensures the message cannot be read if intercepted, while integrity ensures that the message remains unchanged during transmission.

As with any science, you must be familiar with certain terminology before you study cryptography. Let's take a look at a few of the key terms used to describe codes and ciphers.

- Key Space A key space is the number of keys that can be created based upon the key length in bits. For instance, if the key is 128 bits in length, the total number of possible keys is $2^{128}$, roughly $3.40282367 \times 10^{38}$.

- Algorithm An algorithm is a mathematical function that produces a binary output based on an input of either plaintext or ciphertext. A cryptographic algorithm produces ciphertext, or encrypted text, from a plaintext message and a cryptographic key. A hashing algorithm produces a message digest of a fixed length from a plaintext message of any size.

- One-Way Algorithm A one-way algorithm is a mathematical calculation that takes a plaintext message as input and produces an output from which it is mathematically infeasible to determine the original plaintext message. One-way algorithms are primarily used in hashing, for verifying the integrity of a message. Plaintext messages are hashed to create a message digest, which is always the same length for a given hashing algorithm.

- Encryption Encryption is the process of creating ciphertext by processing a plaintext message through an encryption algorithm using an encryption key and, possibly, an initialization vector.

- Decryption Decryption is the opposite of encryption. Ciphertext is processed through the algorithm's reverse (decryption) operation, using the appropriate key, which results in plaintext.

- Two-Way Algorithm A two-way algorithm is a mathematical function that can both encrypt and decrypt a message.

- Work Factor The work factor is the time and effort it would take to break a specific encrypted text. For example, the longer the password, the longer it takes to discover it by brute force. The work factor deters a would-be cryptanalyst when breaking the encryption would cost more time, resources, and assets than the encrypted information is worth.

- Initialization Vector An initialization vector (IV) is an unencrypted random number used to add complexity during the encryption process. It works by seeding the encryption algorithm to enhance the effect of the key. Many encryption modes use an IV, and the number of bits in the IV is usually equal to the block size of the symmetric encryption algorithm. Depending on the mode, the IV may be required to be random or merely nonrepeating, and in most cases it need not be encrypted.

- Cryptosystem The cryptosystem involves everything in the cryptographic process, including the unencrypted message, the key, the initialization vector, the encryption algorithm, the cipher mode, the key origination, and the distribution and key management system as well as the decryption methodology.

- Cryptanalysis Cryptanalysis is the study of the techniques used to determine methods to decrypt encrypted messages, including the study of how to defeat encryption algorithms, discover keys, and break passwords.

- Cryptology Cryptology is a science that deals with the encryption and decryption of plaintext messages using various techniques such as hiding, encryption, disguising, diffusion, and confusion.

- Encoding Encoding is the action of changing a message from one format to another using a coding method. It differs from encryption because encoding only changes how characters are represented, using a publicly known scheme and no secret key. For instance, the alphabet can be represented as a series of ones and zeros using ASCII code, or transmitted as dots and dashes using Morse code. Entire messages can be encoded using specific colored flags, the positions of flags, or flashing lights between ships at sea.

- Decoding Decoding is the reverse of encoding: converting an encoded message back into its original form, for example by reading the dots and dashes produced by an electromagnetic Morse code receiver or by visually identifying flag signals or flashing signal lights.

- Key Clustering Key clustering is when two different cryptographic keys generate the same ciphertext from the same plaintext. This indicates a flaw in the algorithm.

- Collusion Collusion occurs when two or more individuals or companies conspire to commit fraud.

- Collision A collision occurs when two different plaintext documents produce the same output hash value. If collisions can be found or produced in practice, this indicates a flaw in the hashing algorithm.

- Ciphertext or Cryptogram The text produced by a cryptographic algorithm through the use of a key or other method. The ciphertext cannot be read and must be decrypted prior to use.

- Plaintext Plaintext, or clear text, is a message in readable format. Plaintext may also be represented in other code formats, such as binary, Unicode, and ASCII.

- Hash Function A one-way mathematical algorithm that produces a fixed-size output called a hash value or message digest. The output is always the size specified by the hash function, regardless of the size of the original data file. It is computationally infeasible to determine the original message from the hash value. Changing any character in the original data file changes the hash value completely. A hash function is used to verify integrity in three steps:

- The sender creates a hash value of the original message and sends the message and the hash value to the receiver.

- Upon receipt, the receiver creates another hash value from the received message.

- The receiver then compares the original received hash value with the derived hash value created upon receipt.

- If the hash values match, the receiver can be assured that the message did not change in transit.

- Key The key, or cryptovariable, is the input required by a cryptographic algorithm. Different cryptographic algorithms require keys of different lengths. Generally, the longer the key, the stronger the resulting ciphertext. A symmetric key must be kept secret at all times. Asymmetric cryptography uses a public key and a private key, and the private key must be kept secret at all times. Key lengths are expressed in bits, for instance, 56 bits, 256 bits, or 512 bits. When each character is stored in 8 bits, as in ASCII text, a 128-bit key is only 16 characters long. A 16-character, 128-bit alphabetic key might be represented as “HIHOWAREYOUTODAY.”

- Symmetric Key A symmetric key is a key used with a symmetric encryption algorithm that must be kept secret. Each party is required to have the same key, which causes key distribution to be difficult with symmetric keys.

- Asymmetric Keys In asymmetric key cryptography, two different but mathematically related keys are used. Each user has both a public key and a private key. The private key can be used to mathematically generate the public key. This is a one-way function. It is mathematically infeasible to determine the private key based only upon the possession of the public key. On many occasions, both keys are referred to as a key pair. It is important for the owner to keep the private key secret.

- Symmetric Algorithm A symmetric algorithm uses a symmetric key and operates at extreme speeds. When using a symmetric algorithm, both the sender and the recipient require the same secret key. This can create a disadvantage in key distribution and key exchange.

- Asymmetric Algorithm An asymmetric algorithm uses two keys: a public key and a private key. In algorithms such as RSA, either key can be used to encrypt or decrypt a message, so it is important to note the relationship of the keys: a message encrypted with the user's public key can be decrypted only by the user's private key, and vice versa. Asymmetric algorithms are, by design, far slower than symmetric algorithms.

- Digital Certificate The ownership of a public key must be verified. In a public key infrastructure, a certificate is issued by a trusted authority. The certificate contains the public key and other identifying information about its owner. It is digitally signed by a recognized, trusted certificate authority, and that signature vouches that the public key contained in the certificate belongs to that owner. For instance, when you log on to purchase something from Amazon.com, the Amazon.com certificate contains Amazon's public key. That public key is used to begin establishing the secure session. As soon as possible, a secret symmetric key is exchanged, which results in a high-speed secure link between the user and Amazon.com for purchasing purposes.

- Certificate Authority A certificate authority is a trusted entity that obtains and maintains information about the owner of a public key. The certificate authority issues, manages, and revokes digital certificates. The topmost certificate authority is referred to as the root certificate authority. Subordinate certificate authorities, such as intermediate authorities, are certified by the root and issue certificates on its behalf.

- Registration Authority The registration authority performs data acquisition and validation services of public key owners on behalf of the certificate authority.

- Rounds Rounds are the number of times an encryption process is repeated inside an algorithm. For instance, the AES symmetric algorithm performs 10, 12, or 14 rounds for 128-, 192-, or 256-bit keys, respectively.

- Exclusive Or (XOR) The exclusive OR, or XOR, is a binary function that combines ones and zeros from two different sources in a specific pattern to produce a predictable one or zero. It can be thought of as binary addition without a carry (addition modulo 2). Table 7.1 is a truth table that illustrates A XOR B: when the two input bits are the same (0 and 0, or 1 and 1), the output is always 0. If the two input bits are different (0 and 1, or 1 and 0), the output is always 1. XOR is a backbone function used in most cryptographic algorithms. In drawings it is depicted as a circle with crosshairs (⊕).

Table 7.1 The XOR truth table

| Input A | Input B | Output (XOR Result) |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

- Nonrepudiation Nonrepudiation is a method of asserting that the sender of a message cannot deny having sent it. Nonrepudiation may be created by encrypting a message with the sender's private key. It may also be created by hashing the message to obtain a hash value and then signing the message by using the sender's private key to encrypt that hash value. Nonrepudiation may also be referred to as providing “proof of origin” or “validating origin.” The point of origin is proven by the encryption of the message or hash value with something that exists only at the point of origin, such as the sender's private key in asymmetric cryptography. A shared secret key in symmetric cryptography cannot provide this proof, because both parties hold the same key and either could have produced the message.

Nonrepudiation may also be imposed upon the receiver of a message. This concept requires that the receiver be authenticated with the receiving system and system logging must be initiated. Thus, the receiver, if audited, cannot deny receiving the message.

- Transposition Transposition rearranges the positions of plaintext letters and characters without changing them. One method places the plaintext horizontally into a grid and then reads the grid vertically.

- Session Keys Session keys are encryption keys used for a single communication session. At termination of the communication session, the key is discarded.

- One-Time Pad A one-time pad is a foundational concept in modern cryptography. The concept is that a real or virtual paper pad contains codes or keys on each page that are random and do not repeat. Each page of the pad is used once for a single operation and then discarded, never to be valid or reused again. When the pad is truly random, at least as long as the message, kept secret, and never reused, the one-time pad is theoretically unbreakable. The principle of using a key only once carries into modern systems: Secure Sockets Layer (SSL) and IPsec generate fresh session keys, and dynamic one-time password tokens produce passwords that are valid only once.

- Pseudorandom Number Deterministic algorithms running on a computer cannot create truly random numbers; a pseudorandom number generator eventually begins to repeat its sequence. Users of cryptographic systems must therefore be very careful about the source used to seed the random number generator. Some generators are seeded from keystroke timing, and others from random traffic on a network or cosmic radiation noise. The worst random number generators are based on anything predictable, such as the time and date of the system clock.

- Key Stretching Key stretching is used to make a weak key or password more secure. The technique used in key stretching is to perform a large number of hashing calculations on the original key or password in an effort to increase the workload required to crack or break the key through brute force.

Key stretching also lengthens the original key. For example, a typical password used in business is between 8 and 12 characters long, which represents only 64 to 96 total bits, whereas symmetric algorithms normally require a key length of at least 128 bits for reasonable security. To stretch a password, it is processed through a hash function, usually together with a salt, thousands of times or more, and the final output is a key of the required length, such as 128, 192, or 256 bits. Because every guess must repeat all of those hashing iterations, any attempt to crack the key by brute force requires a tremendous work factor, and the attacker may give up long before succeeding.

- In-Band vs. Out-of-Band Key Exchange Keys must be distributed or exchanged between users. In-band key exchange uses communication channels that normally would be used for regular communication including the transmission of encrypted data. This is usually determined to be less secure due to man-in-the-middle attacks or eavesdropping. Out-of-band key exchange utilizes a secondary channel to exchange keys, such as mail, courier, hand delivery, or a special security exchange technique that is less likely to be monitored.

- Substitution Substitution is the process of replacing each letter with another. For instance, when using the Caesar cipher disk, the inner disk is rotated three places (ROT-3), and the corresponding letter is used as the substitute in the encrypted text.

- Confusion Confusion makes the relationship between the key and the ciphertext as complex as possible, so that each part of the ciphertext depends on the key in a complicated way. This increases the work factor required in cryptanalysis.

- Diffusion Diffusion spreads the influence of each plaintext character across the encrypted message, so that a very small change to the input, even a single bit, produces major changes in the ciphertext. Diffusion is also used in hash algorithms, so that modifying a single character of the original message changes the entire hash output.

- Salt A salt is a value, usually random, added to a cleartext key or password before it is hashed. Salting extends the length of the password and, once the password is hashed, makes attacks against the hashes much more complicated and computationally intensive. Rainbow tables are commonly used in attacks against hashed passwords, and salting passwords before hashing usually makes a rainbow table attack infeasible. A large salt value prevents precomputation attacks and ensures that each user's password is hashed uniquely.

- Transport Encryption Information must be kept confidential when sent between two endpoints. Transport encryption refers to the encryption of data in transit. IPsec is a very popular set of transport encryption protocols.

- Key Pairs The term key pair refers to a set of related cryptographic keys: the public key and private key used in public key infrastructure (PKI) and in an asymmetric cryptosystem.

- Block Cipher (Block Algorithm) An algorithm that works on a fixed-size block of data. Block algorithms use standard block sizes, such as 64, 128, 192, 256, or 512 bits, although other block sizes are possible. If the data remaining for the last block is shorter than the block length of the encryption algorithm, the unused space may be padded with null characters.

- Stream Cipher (Stream Algorithm) This is an algorithm that performs encryption on a continuous bit-by-bit basis. Stream ciphers are used when encryption of voice, music, or video is required. Stream-based ciphers are very fast.

Stream ciphers are used whenever information such as voice or video data is flowing from one place to another. A stream cipher performs its encryption on a bit-by-bit basis, as opposed to a block at a time as with a block cipher. Through the exclusive OR (XOR) operation, the original ones and zeros are merged with an encryption keystream. The keystream is generated from the key, which controls both the encryption and decryption processes.

Stream-based ciphers have a set of criteria that should be met for the cipher to be viable:

- The keystream should be unpredictable. An observer should not be able to determine the next bit if given several bits of the keystream.

- If an observer is given the keystream, they should not be able to determine the original encryption key.

- The keystream should be complex and should be derived from most or all of the encryption key bits.

- The keystream should contain a balanced mix of ones and zeros and should run for long periods without repetition.

- The weakest area for any encryption algorithm is repetition. Keystreams should be constructed in such a way as to go for very long periods of time without repetition. Most keystreams will repeat at some point. This point in time should be highly unpredictable.

- Rainbow Tables Rainbow tables are precomputed hash values intended to provide a reverse lookup method for hash values. Passwords are typically stored on a computer system as hash values. Brute-force attacks and dictionary attacks are typically used in password breaking or cracking schemes, and these techniques require significant computing resources and possibly an extended period of time. A rainbow table does much of that work in advance by pairing precomputed hashes with the plaintext passwords that produced them. By comparing stolen password hashes with the rainbow table hashes, the attacker hopes to uncover the original plaintext passwords.
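The salt, key-stretching, and rainbow-table entries above can be illustrated together with Python's standard `hashlib`. This sketch assumes PBKDF2-HMAC-SHA256 as the stretching function, a 16-byte random salt, and 100,000 iterations; these parameters and the helper names are illustrative choices, not requirements stated in this chapter.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 100_000  # illustrative work factor; tune to your hardware

def unsalted_hash(password: str) -> str:
    """What a rainbow table targets: the same password always gives the same hash."""
    return hashlib.sha256(password.encode()).hexdigest()

def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Salt and stretch a password with PBKDF2-HMAC-SHA256; returns (salt, key)."""
    if salt is None:
        salt = os.urandom(16)  # random, per-user 128-bit salt
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS, dklen=32)
    return salt, key

def check_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Recompute with the stored salt and compare in constant time."""
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, stored_key)

# Two users who happen to choose the same password
print(unsalted_hash("Summer2024") == unsalted_hash("Summer2024"))  # True
salt_a, key_a = hash_password("Summer2024")
salt_b, key_b = hash_password("Summer2024")
print(key_a == key_b)                                # False: different salts
print(check_password("Summer2024", salt_a, key_a))   # True
print(len(key_a) * 8)                                # 256
```

The unsalted hashes match, so one precomputed table entry would crack both accounts; the salted, stretched keys differ, and every guess costs the attacker 100,000 hash iterations.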

Cryptographic Systems and Technology

It is important for an SSCP to have a thorough understanding of cryptographic systems and the technology associated with the systems. This includes the various types of ciphers and the appropriate use of ciphers and concepts, including nonrepudiation and integrity. As a security practitioner, you may be involved in various aspects of key management, beginning with key generation, storing keys in key escrow storage, and eventually key wiping or clearing, which is key destruction.

Block Ciphers

Block ciphers operate on blocks, or “chunks,” of data and apply the encryption algorithm to an entire block at one time. As a plaintext document is fed into a block-based algorithm, it is divided into blocks of a preset size. There may be 2 blocks or 4,000 or more, depending on the size of the original data. If the data is smaller than one block, or if there is not enough data to fill the final block, null characters are placed in the block to pad it to the end. Different algorithms have different block sizes, usually multiples of 64 bits, such as 64 bits for DES and 128 bits for AES; larger sizes such as 256 or 512 bits are also possible.
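The splitting and null padding described in this paragraph can be sketched in Python as follows. The 16-byte (128-bit) block size and the function name `to_blocks` are assumptions made for illustration.

```python
from typing import List

BLOCK_SIZE = 16  # bytes (128 bits), the AES block size

def to_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> List[bytes]:
    """Split data into fixed-size blocks, null-padding the final block."""
    remainder = len(data) % block_size
    if remainder:
        data += b"\x00" * (block_size - remainder)
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]

blocks = to_blocks(b"Meet me at the north gate at noon")  # 33 bytes
print(len(blocks))                          # 3
print(blocks[-1] == b"n" + b"\x00" * 15)    # True: 1 data byte + 15 nulls
```

One design caveat: if the original data can itself end in null bytes, null padding is ambiguous when it is removed, which is why many systems use padding schemes that record the pad length instead.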

Block Cipher Modes

There are many challenges facing the administrator of a cryptographic system. Among them is the fact that messages may be of any length; they can be as short as one sentence or as long as the text of an encyclopedia and then some. The second challenge is selecting an algorithm with an appropriate key length. One problem with block cipher algorithms is that encrypting the same plaintext with the same key always produces the same output ciphertext. As you can see, it would be impractical to change the key between every block to be encrypted. Therefore, systems have been devised to increase the complexity of the cipher output utilizing the exact same key. Most of these techniques use an additional input called an initialization vector (IV). The initialization vector provides a set of random or nonrepeating bits that may be used during the cipher computation.
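To see why identical plaintext blocks are a problem, and how an IV helps, here is a small Python comparison of ECB-style and CBC-style encryption. Because the standard library has no DES or AES, the sketch assumes a toy four-round Feistel cipher (with an HMAC-SHA256 round function) as a stand-in block cipher; it illustrates the mode logic only and must not be used to protect real data.

```python
import hashlib
import hmac
import os
from typing import List

BLOCK = 16  # bytes

def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def toy_block_encrypt(key: bytes, block: bytes) -> bytes:
    """TOY stand-in for DES/AES: a 4-round Feistel network whose round
    function is HMAC-SHA256 truncated to half a block. Not for real use."""
    left, right = block[:BLOCK // 2], block[BLOCK // 2:]
    for rnd in range(4):
        f = hmac.new(key, bytes([rnd]) + right, hashlib.sha256).digest()[:BLOCK // 2]
        left, right = right, xor(left, f)
    return left + right

def ecb_encrypt(key: bytes, blocks: List[bytes]) -> List[bytes]:
    # Each block is encrypted independently with the same key
    return [toy_block_encrypt(key, b) for b in blocks]

def cbc_encrypt(key: bytes, iv: bytes, blocks: List[bytes]) -> List[bytes]:
    out, previous = [], iv
    for b in blocks:
        # XOR with the IV (first block) or the previous ciphertext block, then encrypt
        previous = toy_block_encrypt(key, xor(b, previous))
        out.append(previous)
    return out

key = os.urandom(16)
plaintext_blocks = [b"SAME 16B MESSAGE"] * 3   # three identical 16-byte blocks
ecb = ecb_encrypt(key, plaintext_blocks)
cbc = cbc_encrypt(key, os.urandom(BLOCK), plaintext_blocks)
print(len(set(ecb)))   # 1: identical ciphertext blocks reveal the repetition
print(len(set(cbc)))   # 3: chaining hides the repetition
```

The key never changes between blocks; only the value mixed in before encryption (nothing in ECB, the IV or previous ciphertext in CBC) differs.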

Over the years, various cipher modes have been devised to encrypt blocks of text using several methods or combinations of a key and an initialization vector and in one case just a counter. These methods may look complex at the beginning. But, as we will see, it is just a means of making the keystream more complex and ensuring that all of the blocks of cipher text are equally encrypted in a secure manner.

There are five basic block cipher modes that the security practitioner must be aware of. Each of these modes offers a different method of utilizing a key and an initialization vector. You'll notice that there is a primary binary mathematical function utilized in all of the modes. This is the binary function XOR, or exclusive OR. In each of the five modes, the XOR function combines the ones and zeros coming from one direction with the ones and zeros coming from another to output a unique set of ones and zeros based upon the combination of the two sources. In some cases, the streams of ones and zeros will be an input into another part of the cipher mode to be used to encrypt the next block.
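Because XOR is its own inverse (applying the same bits twice returns the original value), the same operation both encrypts and decrypts. The Python sketch below prints the XOR truth table from Table 7.1 and then combines a message with a random keystream of equal length, the principle behind the one-time pad; the function name `xor_bytes` is illustrative.

```python
import os

def xor_bytes(data: bytes, keystream: bytes) -> bytes:
    """Combine two equal-length byte strings bit by bit with exclusive OR."""
    if len(data) != len(keystream):
        raise ValueError("inputs must be the same length")
    return bytes(d ^ k for d, k in zip(data, keystream))

# Single-bit truth table (compare Table 7.1)
for a in (0, 1):
    for b in (0, 1):
        print(a, b, a ^ b)

message = b"HIHOWAREYOUTODAY"
keystream = os.urandom(len(message))            # random bits, as long as the message
ciphertext = xor_bytes(message, keystream)      # encrypt: plaintext XOR keystream
recovered = xor_bytes(ciphertext, keystream)    # decrypt: XOR with the same keystream
print(recovered == message)                     # True
```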

In each of the diagrams, there is a block cipher encryption algorithm. Originally this encryption algorithm was DES. In 2001, the National Institute of Standards and Technology (NIST) standardized AES as the successor to DES and approved these modes of operation for use with it.

The five basic block cipher modes are as follows:

- Electronic Codebook Mode The electronic codebook (ECB) mode is the most basic block cipher mode. Using the 64-bit DES block as an example, each 64-bit block is input into the algorithm using a single symmetric key. If the message is longer than 64 bits, the second, third, and subsequent 64-bit blocks are encrypted in the same manner with the same key. Whenever two 64-bit blocks contain the same text and the same key is used, their ciphertext blocks are identical, which reveals patterns in the message. For this reason, electronic codebook mode should be used only for very short messages, no longer than a single 64-bit block. Figure 7.1 illustrates that each plaintext block is processed by the algorithm using a symmetric key; if the key is the same and the block is the same, the process produces identical ciphertext. The diagram shows three blocks being processed, but there may be as many blocks as required, and each block is encrypted separately.

Figure 7.1 Electronic codebook (ECB) mode

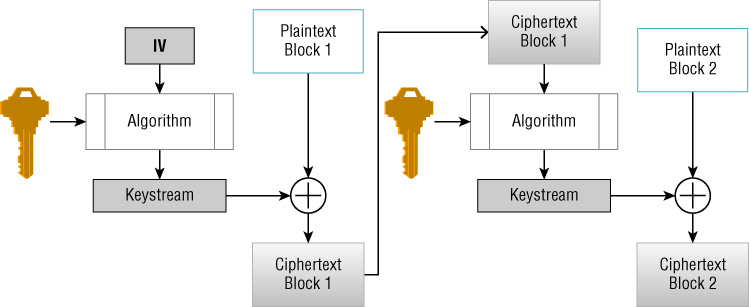

- Cipher Block Chaining Mode Cipher block chaining (CBC) mode is one of the most commonly used block cipher modes. The first plaintext block is combined, or XORed, with the initialization vector and then encrypted into the first ciphertext block. Instead of reusing the initialization vector, the system then uses the previous ciphertext block in its place: each subsequent plaintext block is XORed with the preceding ciphertext block before it is encrypted. This continues until the entire message is encrypted. In Figure 7.2, you will notice that the first plaintext block is XORed with the initialization vector and then encrypted. The resulting ciphertext block is then XORed (in place of the initialization vector) with the second plaintext block, and so forth, until the entire message is encrypted.

Figure 7.2 Cipher block chaining (CBC) mode

- Cipher Feedback Mode Cipher feedback (CFB) mode is the first of three stream cipher modes. Although both DES and AES are block ciphers, both cipher feedback mode and output feedback (OFB) mode use them in a streaming cipher methodology. In cipher feedback mode, a number of different segment sizes can be used. Typically, an 8-bit segment is selected because that is the size of a common character, so the purpose of cipher feedback mode is to encrypt one character at a time. This is accomplished by loading an initialization vector into a shift register and encrypting the register's contents with the block cipher.

The leftmost bits of that output (8 bits in this example) are XORed with the next 8 bits of plaintext to produce ciphertext. Figure 7.3 illustrates that the XOR output of the encrypted initialization vector and the plaintext is then fed back into the second block cipher encryption, where it is encrypted and again XORed with the second plaintext block. This continues until the entire message is encrypted.

Figure 7.3 Cipher feedback (CFB) mode

- Output Feedback Mode Output feedback (OFB) mode is the second of the stream cipher modes. Its operation is very similar to cipher feedback mode, except that it uses the encrypted initialization vector itself, rather than the ciphertext, as the input to the second block cipher encryption. This allows the keystream to be prepared and stored in advance of the encryption operation. Figure 7.4 illustrates that the initialization vector is encrypted and then fed to the next block cipher, encrypted once again, and fed to the next block cipher.

Figure 7.4 Output feedback (OFB) mode

- Counter Mode Counter (CTR) mode is the third of the stream cipher modes that turn a block cipher into a stream cipher. It is similar in operation to output feedback mode: a keystream is generated by encrypting successive values through a block cipher algorithm. In this case, rather than an initialization vector, a counter is used, initialized with a number used only once, or nonce. The counter increments by one for each block that is encrypted. As in output feedback mode, the encrypted counter is XORed with the plaintext on a bit-by-bit basis. Because this mode has no feedback, the keystream is independent of the data, which makes it possible to encrypt several blocks in parallel. Figure 7.5 illustrates how each block is encrypted separately from the others; the only link between blocks is the counter, which is incremented by one for each block.

Figure 7.5 Counter (CTR) mode
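Counter mode can be sketched in Python as follows. Because the standard library does not include AES, this sketch assumes HMAC-SHA256 (truncated to 16 bytes) as a stand-in for encrypting each counter block, and it assumes an 8-byte nonce followed by an 8-byte counter; both are illustrative choices, and the function names are hypothetical.

```python
import hashlib
import hmac
import os

BLOCK = 16  # bytes per counter block

def keystream_block(key: bytes, nonce: bytes, counter: int) -> bytes:
    """Stand-in for 'encrypt the counter block with the block cipher'.
    ASSUMPTION: HMAC-SHA256 truncated to 16 bytes replaces AES here."""
    counter_block = nonce + counter.to_bytes(8, "big")   # nonce || counter
    return hmac.new(key, counter_block, hashlib.sha256).digest()[:BLOCK]

def ctr_crypt(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR each chunk of data with its own keystream block."""
    out = bytearray()
    for i in range(0, len(data), BLOCK):
        chunk = data[i:i + BLOCK]
        ks = keystream_block(key, nonce, i // BLOCK)     # counter increments by one per block
        out += bytes(c ^ k for c, k in zip(chunk, ks))   # last chunk may be short
    return bytes(out)

key, nonce = os.urandom(16), os.urandom(8)
secret = b"Counter mode needs no padding and no feedback."
ct = ctr_crypt(key, nonce, secret)
print(len(ct) == len(secret))               # True: no padding required
print(ctr_crypt(key, nonce, ct) == secret)  # True: the same function decrypts
```

Each keystream block depends only on the key, the nonce, and that block's counter value, so the loop iterations are independent and could run in parallel. The same nonce must never be reused with the same key, because that would repeat the keystream.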

Symmetric Cryptography

Symmetric cryptography, sometimes called private key or secret key cryptography, uses a single shared encryption key to both encrypt and decrypt data, which is why it is also referred to as two-way cryptography. Symmetric cryptography can be used to encrypt both data at rest and data in transit. When encrypting data at rest on a hard disk, the user possesses a single secret encryption key. Figure 7.6 illustrates that when data in transit is encrypted, both the receiver and the sender require access to the same symmetric key.

Figure 7.6 Symmetric cryptography using one shared key

Symmetric cryptography provides very strong encryption protection as long as the symmetric key has been kept secret and has not been compromised. Key exchange and distribution, however, have proven to be a common problem in symmetric cryptography, because each sender and receiver must possess the identical symmetric key. If many senders and receivers communicate using the same key, a problem surfaces whenever any member leaves the group: new keys must be exchanged. Alternatively, every person in the group may share a unique key with every other person in the group. In a large group, this requires a very large number of keys. The total number of keys required is given by:

$$N = \frac{n(n-1)}{2}$$

where N is the total number of keys and n is the number of individuals in the group.

For instance, if there are 10 individuals in a workgroup and each pair requires its own symmetric key to communicate within the workgroup, the calculation is 10 * 9 / 2 = 45 keys. The key management problem grows quickly: if two additional individuals join the workgroup, 12 * 11 / 2 = 66 keys are required. Adding just two individuals requires 21 additional keys.
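The key-count formula n(n - 1)/2 behind these figures can be checked with a few lines of Python; `symmetric_keys_needed` is a hypothetical helper name used only for illustration.

```python
def symmetric_keys_needed(n: int) -> int:
    # Each unique pair of people shares one key: n choose 2 = n(n - 1) / 2.
    return n * (n - 1) // 2


for people in (10, 12, 100):
    print(people, "people need", symmetric_keys_needed(people), "keys")
```

The count grows roughly with the square of the group size: by the same formula, 100 individuals would need 4,950 keys.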

Symmetric keys may be exchanged either in-band or out-of-band. An in-band exchange uses the same communication channel to exchange both the symmetric key and the encrypted data, which exposes the key to man-in-the-middle and eavesdropping attacks. Out-of-band exchange is favored because it delivers the symmetric key through a separate channel, such as mail, email, printed messages, or another method of exchange. Asymmetric cryptography, typically supported by a public key infrastructure (PKI), offers another way to exchange symmetric keys: asymmetric cryptography is used first to establish the communication link, the symmetric key is then exchanged over that link, and both the sender and the receiver switch to symmetric cryptography to gain speed.

Symmetric cryptography is orders of magnitude faster than asymmetric cryptography because asymmetric algorithms rely on computationally intensive mathematical operations.

Various types of symmetric key algorithms exist. Some are less popular than others, and some have risen to the top ranks of standards and are used to encrypt U.S. government top-secret information. The following is a listing of various symmetric key algorithms:

- Data Encryption Standard The Data Encryption Standard (DES) was the premier symmetric key encryption algorithm, adopted in the mid-1970s by the National Bureau of Standards (now NIST). DES uses a 56-bit key, which is very small by today's standards, and the algorithm was publicly broken by brute force in 1999. Also in 1999, Triple DES was prescribed as a potential alternative to DES. By 2002, DES had been replaced by the Advanced Encryption Standard (AES). The block cipher modes mentioned previously were originally designed with DES as the central symmetric algorithm.

- Triple Data Encryption Algorithm Triple DES, or 3DES, is a symmetric algorithm that applies DES three times to each data block. 3DES uses up to three keys, one for each operation, which can be combined into three different keying options:

- All three keys are unique.

- Key 1 and key 2 are unique; key 1 and key 3 are the same.

- All three keys are identical.

3DES features several modes of operation using two keys or three keys. Each mode works on a single block at a time. The four most popular modes of operation are as follows:

- Using two keys:

- Encrypting using key 1, encrypting using key 2, then reencrypting using key 1. This is referred to as EEE2 mode.

- Encrypting using key 1, decrypting using key 2, then reencrypting using key 1. This is referred to as EDE2 mode. (In some cases the final key is referred to as key 3, but it is identical to key 1.)

- Using three keys:

- Encrypting using key 1, encrypting using key 2, then encrypting using key 3. This is referred to as EEE3 mode.

- Encrypting using key 1, decrypting using key 2, then encrypting using key 3. This is referred to as EDE3 mode.

- Advanced Encryption Standard The Rijndael cipher was selected as the successor to both DES and 3DES during the AES selection process. It was included as a standard by NIST in 2001.

AES uses a 128-bit block size with three different key lengths: 128, 192, and 256 bits. The U.S. government stated that all three key sizes are adequate to protect classified information up to the secret level and that the 192-bit and 256-bit key sizes offer adequate strength for top secret classified information.

- International Data Encryption Algorithm The International Data Encryption Algorithm (IDEA) was proposed as a possible replacement for DES. It uses a 128-bit key on 64-bit blocks. During encryption, IDEA performs eight rounds of calculations. Its patents have since expired, and it is now free for public use.

- CAST CAST-256, named using the initials of its creators, Carlisle Adams and Stafford Tavares, was submitted unsuccessfully to the AES competition. CAST is in the public domain and is available for royalty-free use. CAST-256 is based on the earlier CAST-128, which uses a smaller 64-bit block and keys of 40 to 128 bits; CAST-256 uses 128-bit blocks and key lengths of 128, 160, 192, 224, and 256 bits.

- Blowfish Blowfish is a strong symmetric algorithm known as a fast block cipher because of its speed when implemented in software. Developed in 1993 by Bruce Schneier, Blowfish is still in use today. It provides good encryption rates, and no effective cryptanalysis of it has been published. It is included in a number of encryption products.

Blowfish works on a 64-bit plaintext block and uses a key of 32 to 448 bits. The algorithm is slow at changing keys because each new key requires its subkeys and S-boxes to be precomputed and stored, a process roughly equivalent to encrypting about 4 KB of text. This makes Blowfish unsuitable for applications that change keys frequently.

- Twofish Twofish is a symmetric algorithm that was also a finalist in the AES competition. A team of cryptographers led by Bruce Schneier developed Twofish as an improved extension of Blowfish. The algorithm uses 128-bit blocks with a key structure similar to that of AES: 128, 192, or 256 bits. It is also in the public domain but is less popular than Blowfish.

- RC4 RC4 is a very popular software stream cipher that has been used in a number of implementations. Originally designed by Ron Rivest in 1987, the algorithm was the backbone of several encryption protocols, including SSL/TLS, Wired Equivalent Privacy (WEP), and Wi-Fi Protected Access (WPA). The algorithm has known weaknesses, however: RC4 cipher suites have since been prohibited in TLS (RFC 7465), and major organizations such as Microsoft have recommended disabling RC4 wherever possible.

- RC5 RC5 is a simple symmetric key block cipher. The algorithm is somewhat unique in that it has a variable block size of 32, 64, or 128 bits and a variable key length from 0 to 2040 bits.
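The 3DES keying options and the EDE sequence described above can be sketched in Python. This is an illustration only: `toy_encrypt` and `toy_decrypt` are a hypothetical 64-bit Feistel cipher built from HMAC-SHA256, standing in for DES (which Python's standard library does not provide), and the key order E(K1), D(K2), E(K3) follows the common convention for EDE3.

```python
import hashlib
import hmac

ROUNDS = 8  # arbitrary round count for this toy cipher


def _round_function(key: bytes, round_no: int, half: bytes) -> bytes:
    # Keyed pseudorandom round function (a stand-in for DES's f-function).
    return hmac.new(key, bytes([round_no]) + half, hashlib.sha256).digest()[:4]


def toy_encrypt(key: bytes, block: bytes) -> bytes:
    """Toy 64-bit Feistel cipher standing in for DES (NOT secure, NOT DES)."""
    left, right = block[:4], block[4:]
    for r in range(ROUNDS):
        f = _round_function(key, r, right)
        left, right = right, bytes(a ^ b for a, b in zip(left, f))
    return left + right


def toy_decrypt(key: bytes, block: bytes) -> bytes:
    # Run the Feistel rounds backward to undo toy_encrypt.
    left, right = block[:4], block[4:]
    for r in reversed(range(ROUNDS)):
        f = _round_function(key, r, left)
        left, right = bytes(a ^ b for a, b in zip(right, f)), left
    return left + right


def tdes_ede_encrypt(k1: bytes, k2: bytes, k3: bytes, block: bytes) -> bytes:
    # EDE: encrypt with K1, decrypt with K2, encrypt with K3.
    return toy_encrypt(k3, toy_decrypt(k2, toy_encrypt(k1, block)))


def tdes_ede_decrypt(k1: bytes, k2: bytes, k3: bytes, block: bytes) -> bytes:
    # Reverse order: decrypt with K3, encrypt with K2, decrypt with K1.
    return toy_decrypt(k1, toy_encrypt(k2, toy_decrypt(k3, block)))


block = b"8bytes!!"                               # one 64-bit block
k1, k2 = b"first key", b"second key"
two_key = tdes_ede_encrypt(k1, k2, k1, block)     # keying option 2: K3 = K1
single = tdes_ede_encrypt(k1, k1, k1, block)      # keying option 3: all identical
assert single == toy_encrypt(k1, block)           # collapses to one encryption
```

The final check shows why the all-identical-keys option exists: with K1 = K2 = K3, the decryption step undoes the first encryption, so EDE collapses to a single encryption, which keeps 3DES backward compatible with single DES.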

Table 7.2 compares block cipher algorithms and lists the typical block sizes and key sizes.

Table 7.2 Block cipher algorithms

| Algorithm | Block size (bits) | Key size (bits) |
| --- | --- | --- |
| Advanced Encryption Standard (AES) | 128 | 128, 192, 256 |
| Data Encryption Standard (DES) | 64 | 56 |
| Triple Data Encryption Standard (3DES) | 64 | 168 |
| International Data Encryption Algorithm (IDEA) | 64 | 128 |
| Blowfish | 64 | 32–448 |
| Twofish | 128 | 128, 192, 256 |
| RC5 | 32, 64, 128 | 0–2040 |
| CAST | 64 | 40–128 |

Asymmetric Cryptography

Asymmetric cryptography, also called public key cryptography, makes use of asymmetric cryptographic algorithms. Of the two approaches, asymmetric cryptography is by far the more versatile. It uses a key pair for each user, consisting of a public key and a private key. The user can easily generate both keys as required.

The public and private keys are mathematically related. The private key is generated first and is then used to generate the public key. This relies on a one-way function: although the public key is derived from the private key through a mathematical computation, it is computationally infeasible for anyone who has only the public key to determine the private key.

It is important for the security practitioner to understand the relationship between the public key and the private key in an asymmetric cryptosystem. A message encrypted with one key of a key pair can be decrypted only with the other key of the same pair. It is important to visualize this relationship because it is the foundation of asymmetric cryptography. In Figure 7.7, Bob and Alice each possess a public key and a private key. Each individual must keep their private key secret so that they are the only person who knows it. This concept is the foundation of proof of origin and nonrepudiation. As illustrated, the key relationship exists only between the two keys in the pair possessed by each individual. In other words, either key may be used to encrypt a plaintext message, and only the opposite key of that pair, either public or private, may be used to decrypt the message.

Figure 7.7 The relationships of public and private keys in an asymmetric cryptographic system

Asymmetric cryptography is much more scalable than symmetric cryptography because the public key may be freely and openly distributed. In Figure 7.8, Bob encrypts his message with his private key, which is secret and known only to him. He then transmits the message to Alice, who decrypts it using Bob's public key.

Figure 7.8 Proof of origin encrypted message with a private asymmetric key

Although in this illustration Bob has encrypted the message using his private key, the message can be decrypted by anyone who has access to Bob's public key. This type of message transmission is therefore not secure: although the message is encrypted, anyone who intercepts it, such as a man-in-the-middle attacker, can easily decrypt it. The purpose of this technique is to provide proof of origin. By encrypting a message with his private key, Bob proves that he is the only person who could have sent it. If Bob's public key can successfully decrypt the message, only Bob, using his private key, could have encrypted it. Therefore, only Bob could have sent the message.

In most cases, both proof of origin and confidentiality of the message are required. To accomplish this, the message is encrypted twice. To send Alice a confidential message that also proves its origin, Bob would perform the following steps:

- Encrypt his plaintext message using his private key. This would provide proof of origin because he is the only person who has access to his private key.

- He would then encrypt the same message using Alice's public key. This would provide confidentiality because Alice is the only person who could decrypt this message with the use of her private key.

Upon receiving the message, Alice must perform two steps:

- Alice would first decrypt the entire message using her private key. Since the message was encrypted with her public key, she is the only person able to do this.

- She would then decrypt the message once again using Bob's public key. Since Bob encrypted the message using his private key, only his public key can decrypt it. This proves that Bob sent this message, provided his private key has not been compromised, and so provides proof of origin.
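The two-step exchange above can be traced with textbook RSA arithmetic in Python. This is a toy sketch, not a usable implementation: the key pairs are built from well-known Mersenne primes, there is no padding, and `make_keypair` and `apply_key` are hypothetical helpers. Bob's modulus is deliberately smaller than Alice's so that the output of his private-key step always fits inside Alice's key.

```python
# Textbook RSA with toy key pairs built from known Mersenne primes.
# NOT secure: real RSA uses large random primes and a padding scheme.
E = 65537


def make_keypair(p: int, q: int):
    n = p * q
    d = pow(E, -1, (p - 1) * (q - 1))  # modular inverse (Python 3.8+)
    return (n, E), (n, d)              # (public key, private key)


def apply_key(key, value: int) -> int:
    # RSA "encrypt" and "decrypt" are the same operation with different keys.
    n, exponent = key
    return pow(value, exponent, n)


bob_public, bob_private = make_keypair(2**31 - 1, 2**61 - 1)       # ~92-bit n
alice_public, alice_private = make_keypair(2**89 - 1, 2**107 - 1)  # ~196-bit n

message = int.from_bytes(b"Hi Alice", "big")  # must be smaller than Bob's n

# Bob: his private key (proof of origin), then Alice's public key (confidentiality).
ciphertext = apply_key(alice_public, apply_key(bob_private, message))

# Alice: her private key first, then Bob's public key.
recovered = apply_key(bob_public, apply_key(alice_private, ciphertext))
print(recovered.to_bytes(8, "big"))  # b'Hi Alice'
```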

Digital signatures are widely used to sign messages. A digital signature provides both proof of origin (and therefore nonrepudiation) and message integrity. Message integrity is the assurance that the message did not change between the source and the receiver. It is accomplished by passing the original plaintext message through a hashing algorithm to obtain a message digest, or hash value. This message digest is then encrypted using the sender's private key. By encrypting the digest with the private key, the sender proves that they are in fact the sender. Figure 7.9 illustrates the use of a private key to encrypt a hash value to create a digital signature. The steps Bob would take to digitally sign the message are as follows:

- Bob would hash his plaintext message to arrive at a hash value or message digest.

- Bob would then encrypt the hash value or message digest with his private key.

Figure 7.9 The creation of a digital signature by encrypting a hash of a message

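To verify the signature, Alice decrypts it using Bob's public key and compares the result with her own hash of the received message; if the two values match, the message came from Bob and did not change in transit. It is important to note that a digital signature does not provide confidentiality; it provides only proof of origin, nonrepudiation, and message integrity. Should confidentiality be required, Bob would also encrypt the entire message using Alice's public key as illustrated in Figure 7.9.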
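Hash-then-sign and the matching verification (decrypting the signature with the public key and comparing it with a fresh hash) can be sketched with textbook RSA arithmetic. The key is a toy built from known Mersenne primes with no padding scheme, SHA-256 is an assumed choice of hash function, and `sign` and `verify` are hypothetical helper names; reducing the digest modulo N is a shortcut that real signature schemes replace with a padding scheme such as PSS.

```python
import hashlib

# Toy signing key: textbook RSA built from known Mersenne primes (NOT secure).
E = 65537
P, Q = 2**107 - 1, 2**127 - 1
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))  # Bob's private exponent


def digest_as_int(message: bytes) -> int:
    # Hash the message, then reduce mod N so it fits the toy key
    # (real schemes use a padding scheme such as PSS instead).
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    # Bob encrypts the digest with his private key.
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    # Alice decrypts the signature with Bob's public key and compares it
    # with her own hash of the message she received.
    return pow(signature, E, N) == digest_as_int(message)


signature = sign(b"Pay Alice 100")
print(verify(b"Pay Alice 100", signature))  # True
print(verify(b"Pay Alice 900", signature))  # False (message was altered)
```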

Asymmetric cryptography is much slower than symmetric cryptography because of its intensive calculations and high processor overhead, so it is generally not suited to encrypting large amounts of data. It is often used to initiate an encrypted session between a sender and a receiver so that a symmetric key can then be exchanged. Once this happens, both the sender and the receiver switch to a symmetric cryptosystem, which speeds up communication by orders of magnitude.

The most widely used asymmetric cryptography solutions are as follows:

- Rivest, Shamir, and Adleman (RSA)

- Diffie-Hellman

- ElGamal

- Elliptic curve cryptography (ECC)

The most famous public key cryptosystem, RSA, is named after its creators Ronald Rivest, Adi Shamir, and Leonard Adleman. In 1977 they proposed the RSA public key algorithm that is still in mainstream use and is the backbone of a large number of well-known security infrastructures produced by companies like Microsoft, Nokia, and Cisco.

The RSA algorithm is based on the computational difficulty of factoring a large number that is the product of two large prime numbers. Each user of the RSA cryptosystem generates a key pair of public and private keys using a complex one-way algorithm.
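The relationship between the primes, the public key, and the private key can be seen with the small numbers often used to teach RSA (p = 61, q = 53). The sketch also shows why factoring is the weak point: once n is factored, the private exponent can be recomputed. `recover_private_exponent` is a hypothetical helper, and trial division works here only because n is tiny.

```python
from math import gcd

# The small textbook RSA example; real keys use primes hundreds of digits long.
p, q = 61, 53
n = p * q                  # 3233: public modulus
phi = (p - 1) * (q - 1)    # 3120
e = 17                     # public exponent, must share no factor with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)        # 2753: private exponent (Python 3.8+)

ciphertext = pow(65, e, n)         # encrypt 65 with the public key (n, e)
plaintext = pow(ciphertext, d, n)  # decrypt with the private key (n, d)


def recover_private_exponent(n: int, e: int) -> int:
    # An attacker who can factor n can rebuild the private key.
    # Trial division is instant here only because n is tiny.
    factor = 2
    while n % factor:
        factor += 1
    return pow(e, -1, (factor - 1) * (n // factor - 1))


print(ciphertext, plaintext, recover_private_exponent(n, e))  # 2790 65 2753
```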

Non-Key-Based Asymmetric Cryptographic Systems

Key distribution has continued to be a problem in cryptosystems. For example, the receiver and the sender of a message might need to communicate with each other, but they have no physical means to exchange key material and no public key infrastructure in place to facilitate the exchange of secret keys. In such cases, key exchange algorithms like the Diffie-Hellman key exchange algorithm prove to be useful in the initial phases of key exchange.

In 1976, Whitfield Diffie and Martin Hellman published a work based on concepts originally developed by Ralph Merkle. The Diffie-Hellman algorithm establishes a shared secret key between two parties through a series of mathematical computations. In essence, both parties agree on public parameters (a large prime modulus and a generator), and each party has a secret integer and a public integer computed from it. Each party sends its public integer to the other party. Each then combines the other party's public integer with its own secret integer, and both arrive at the same integer, which can then be used as a shared secret key.
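The exchange can be sketched in Python with deliberately tiny public parameters (p = 23, g = 5) so the numbers stay readable; real deployments use far larger groups. `dh_keypair` is a hypothetical helper name.

```python
import secrets

# Public parameters agreed by both parties (toy sizes for readability).
p = 23  # prime modulus
g = 5   # generator


def dh_keypair():
    secret = secrets.randbelow(p - 2) + 1  # private integer in [1, p - 2]
    public = pow(g, secret, p)             # public integer g^secret mod p
    return secret, public


alice_secret, alice_public = dh_keypair()
bob_secret, bob_public = dh_keypair()

# Only the public integers cross the network. Each side raises the other's
# public integer to its own secret, and both reach g^(ab) mod p.
alice_shared = pow(bob_public, alice_secret, p)
bob_shared = pow(alice_public, bob_secret, p)
assert alice_shared == bob_shared
```

With fixed secrets of 6 and 15, for example, the public values are 8 and 19, and both parties arrive at the shared secret 2.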

In 1985, Dr. Taher Elgamal published an article describing how the mathematical principles behind the Diffie-Hellman key exchange algorithm could be extended to support an entire public key cryptosystem for encrypting and decrypting messages. The same article described the ElGamal signature algorithm, a digital signature scheme. Like Diffie-Hellman, both rely on the difficulty of computing discrete logarithms in the multiplicative group of integers modulo a large prime.

The ElGamal signature algorithm is still in use today. When it was released, one of the major advantages of the ElGamal algorithm over the RSA algorithm was that RSA was patented and Dr. Elgamal released his algorithm freely and openly to the public.

In practice, ElGamal is usually used as part of a hybrid cryptosystem: the message itself is encrypted using a symmetric algorithm, and ElGamal is then used to encrypt the symmetric key. This allows very large messages to be encrypted and decrypted with the faster symmetric algorithm, while the asymmetric algorithm is used only for the symmetric key exchange.

The disadvantage of ElGamal is that it doubles the length of any message that it encrypts, which is a significant drawback when a very large message must be sent over a low-bandwidth link or decrypted by a low-power device.

Elliptic Curve and Quantum Cryptography

In 1985, two mathematicians, Neal Koblitz of the University of Washington and Victor Miller of International Business Machines (IBM), independently proposed applying elliptic curve theory to develop secure cryptographic systems, known as elliptic curve cryptography (ECC).

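RSA and other asymmetric algorithms are successful because of the difficulty of certain mathematical problems: RSA relies on the difficulty of factoring large integers that are the product of two large primes, while Diffie-Hellman and ElGamal rely on the difficulty of computing discrete logarithms. The drawback is that keeping these problems hard requires large keys, and therefore significant overhead and processing time.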

The mathematical concepts behind ECC are quite complex. It is important that parts of a cryptographic system operate as a one-way function: a value is easy to compute in the forward direction, but it is computationally infeasible to work backward from the result to the starting point. For instance, in asymmetric cryptography, the private key and public key are mathematically linked. The private key can be used to generate the public key, but possession of the public key does not make it feasible to determine the private key.

The security of an elliptic curve protocol rests on a publicly known base point on the curve and a second point obtained by multiplying the base point by a secret integer. Computing that second point from the secret integer (point multiplication) is efficient, but recovering the secret integer when both points are known is not. In summary, it is assumed that finding this discrete logarithm of an elliptic curve element would require an infeasible amount of work.
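Point multiplication, and the difficulty of reversing it, can be illustrated on a tiny textbook curve, y^2 = x^3 + 2x + 2 over the integers modulo 17, with base point G = (5, 1). This is a teaching sketch only: real curves use moduli of hundreds of bits, and the helper names are hypothetical.

```python
# Toy curve y^2 = x^3 + 2x + 2 over the integers mod 17, a common textbook
# example. Real curves use primes of 256 bits or more.
P_MOD, A, B = 17, 2, 2
G = (5, 1)       # public base point
INFINITY = None  # the "point at infinity" (group identity)


def on_curve(pt) -> bool:
    if pt is INFINITY:
        return True
    x, y = pt
    return (y * y - (x ** 3 + A * x + B)) % P_MOD == 0


def point_add(p1, p2):
    if p1 is INFINITY:
        return p2
    if p2 is INFINITY:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return INFINITY                       # P + (-P) = infinity
    if p1 == p2:                              # doubling: slope of the tangent
        lam = (3 * x1 * x1 + A) * pow(2 * y1, -1, P_MOD) % P_MOD
    else:                                     # addition: slope of the chord
        lam = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD) % P_MOD
    x3 = (lam * lam - x1 - x2) % P_MOD
    y3 = (lam * (x1 - x3) - y1) % P_MOD
    return (x3, y3)


def scalar_mult(k: int, pt):
    """Compute k * pt with double-and-add: fast even for huge k."""
    result, addend = INFINITY, pt
    while k:
        if k & 1:
            result = point_add(result, addend)
        addend = point_add(addend, addend)
        k >>= 1
    return result


def brute_force_log(q):
    """Recover k from Q = kG by trying every k; feasible only on toy curves."""
    current, k = G, 1
    while current != q:
        current = point_add(current, G)
        k += 1
    return k


private_k = 7
public_q = scalar_mult(private_k, G)
```

Here `scalar_mult` computes Q = kG in a handful of steps, while `brute_force_log` has to try every multiple of G. This curve has only 19 points, so the search is instant; on a 256-bit curve the same search is the infeasible problem that ECC relies on.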

Basically, elliptic curve cryptography applies discrete logarithm mathematics to elliptic curves in order to obtain strong encryption from shorter keys. For example, a 160-bit ECC key provides roughly the same protection as a 1,024-bit RSA key.

Quantum cryptography is an advanced concept that offers great promise to the world of cryptography. It takes advantage of the dual nature of light at the quantum level, where light behaves both as a wave and as a particle, and in particular of the polarization of individual photons. Successful experiments with quantum cryptography have involved quantum key distribution, in which two parties establish a shared key that can then be used by traditional symmetric algorithms. What makes quantum cryptography unique is that any attempt to eavesdrop on the communication disturbs the quantum message stream and can be detected by both parties.

Quantum cryptography technologies are currently in development or in limited use. Some predict that once the technology matures, quantum cryptography will replace many existing cryptographic systems, and that quantum computing will be able to break widely used existing cryptography.

Hybrid Cryptography

Hybrid cryptography makes use of both asymmetric and symmetric cryptographic communications. This type of cryptography takes advantage of the best attributes of both asymmetric and symmetric cryptography.

The goal is to use symmetric encryption because it is fast and can encrypt large amounts of data; its drawback is the difficulty of key exchange. Asymmetric encryption allows easy key exchange, yet it is very slow and does not encrypt large amounts of data well.

To use hybrid cryptography, you would establish a communication session using asymmetric encryption. Once the session is established, a very large data file would be encrypted with the symmetric key and sent to the receiver. The symmetric key used to encrypt the large data file would then be encrypted with the receiver's public key and sent to the receiver. The receiver decrypts the symmetric key using their private key and then decrypts the large data file using the symmetric key.

This method is used extensively when initiating secure communications using asymmetric cryptography and then switching to a much faster method of encryption using symmetric cryptography.
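The hybrid workflow can be sketched end to end in Python. Several assumptions keep it short and limited to the standard library: the bulk cipher is an HMAC-SHA256 counter-mode keystream standing in for a real symmetric cipher such as AES, the receiver's key pair is textbook RSA built from known Mersenne primes without padding, and all names are hypothetical. Real systems use vetted libraries and padded key wrapping.

```python
import hashlib
import hmac
import secrets

# Receiver's toy RSA key pair: textbook RSA from known Mersenne primes.
# NOT secure (public primes, no padding); it only shows the key-wrapping step.
E = 65537
P, Q = 2**89 - 1, 2**107 - 1
N = P * Q                          # ~196-bit modulus, larger than a 128-bit key
D = pow(E, -1, (P - 1) * (Q - 1))  # receiver's private exponent


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Stand-in symmetric cipher: HMAC-SHA256 over nonce + counter (CTR style).
    blocks = (hmac.new(key, nonce + i.to_bytes(8, "big"), hashlib.sha256).digest()
              for i in range((length + 31) // 32))
    return b"".join(blocks)[:length]


def sym_crypt(key: bytes, nonce: bytes, data: bytes) -> bytes:
    # The same call encrypts and decrypts (XOR with the keystream).
    return bytes(a ^ b for a, b in zip(data, keystream(key, nonce, len(data))))


# Sender: encrypt the bulk data with a fresh symmetric key (fast) ...
large_file = b"quarterly report " * 10_000
session_key = secrets.token_bytes(16)
nonce = secrets.token_bytes(8)
encrypted_file = sym_crypt(session_key, nonce, large_file)
# ... then encrypt only the 16-byte key with the receiver's public key (slow).
wrapped_key = pow(int.from_bytes(session_key, "big"), E, N)

# Receiver: recover the symmetric key with the private key, then the file.
unwrapped_key = pow(wrapped_key, D, N).to_bytes(16, "big")
decrypted_file = sym_crypt(unwrapped_key, nonce, encrypted_file)
```

Only the 16-byte session key passes through the slow asymmetric operation; the 170,000-byte file is handled entirely by the symmetric step.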

Steganography

Steganography is simply hiding one message inside another. Known as hiding in plain sight, steganography may be used to hide a text message inside a photograph, an audio recording, or a video recording. It is most commonly used to hide a text message inside an image file such as a JPG or GIF. A common steganography technique encodes the text message by modifying the least significant bit of various pixels within the image.

Using this technique, the text message is embedded within the picture file but is undetectable to the naked eye. The existence of a hidden message may be proven by hashing the original photograph and comparing the result with a hash of the photograph that contains the message; the two hash values will not be equal. Steganography is fairly simple and fast to achieve using a number of free tools. In some cases, the message may be encrypted before it is embedded within a photograph. Figure 7.10 illustrates a JPEG before and after the text has been embedded. Note that the pictures look remarkably the same to the naked eye.

Figure 7.10 The process of steganography
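Least-significant-bit embedding can be sketched in Python. The sketch assumes raw, uncompressed 8-bit pixel values (a hypothetical 8 x 8 grayscale image), and `embed` and `extract` are hypothetical helper names; real tools also have to handle image file formats and compression.

```python
import hashlib


def embed(pixels: bytes, message: bytes) -> bytearray:
    """Hide message bits in the least significant bit of each pixel byte."""
    bits = [(byte >> (7 - i)) & 1 for byte in message for i in range(8)]
    if len(bits) > len(pixels):
        raise ValueError("cover image too small for message")
    stego = bytearray(pixels)
    for idx, bit in enumerate(bits):
        stego[idx] = (stego[idx] & 0xFE) | bit  # clear the LSB, then set it
    return stego


def extract(pixels: bytes, length: int) -> bytes:
    """Read `length` bytes back out of the pixel LSBs."""
    out = bytearray()
    for start in range(0, length * 8, 8):
        byte = 0
        for pixel in pixels[start:start + 8]:
            byte = (byte << 1) | (pixel & 1)
        out.append(byte)
    return bytes(out)


cover = bytes([200] * 64)  # hypothetical 8 x 8 grayscale image
stego = embed(cover, b"Hi")
print(extract(stego, 2))  # b'Hi'
print(hashlib.sha256(cover).hexdigest() == hashlib.sha256(stego).hexdigest())  # False
```

No pixel value changes by more than 1, which is why the picture looks the same, yet the hashes of the cover image and the stego image differ, which is the detection approach described above.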

Digital Watermark

A digital watermark is identification data that is covertly embedded in image, audio, or video data. Digital watermarks may be used to verify the authenticity or integrity of an object file or to indicate the identity of its owner, and they are used to identify the copyright or ownership of an object file. A digital watermark may maintain its integrity even when the underlying media is significantly altered. This is important in technologies such as Digital Rights Management (DRM), where ownership of the media and content must be provable. As with steganography, an application is required to both embed and read the watermark.

Unlike metadata, which may be listed with the file and include the author, date of creation, and other information, a watermark is invisible to the naked eye. Most watermarks are designed to survive data compression and manipulation of the original, such as cropping a photograph. A digital watermark is purely a passive marking of information and does not prevent access or media duplication. Digital watermarks have been used extensively in the music and motion picture industries to trace the source of illegally copied media.

Hashing

Hashing is a type of cryptography that does not use an encryption algorithm. Instead, a hash function produces a practically unique identifier, usually referred to as a hash, hash value, message digest, or fingerprint. The hash function, or hashing algorithm, is a one-way function, meaning that the hash can be derived from a document but the hash value cannot be reversed to derive the original document.

Hash functions always produce a fixed-length output regardless of the size of the original document. Hash functions also exhibit the avalanche effect: changing a single character in the original document changes the entire hash value. For instance, hashing a document creates a unique hash value. Typing the letter a anywhere in the document completely changes the hash value, and typing another a changes it completely again. This ensures that an attacker cannot determine which character was typed by studying the hash value. Even if the same character is typed twice in a row, the resulting hash values reveal nothing about those characters. Figure 7.11 illustrates a number of different hashing algorithms and their output values. Notice the difference between hash values when just one number is changed at the end of the sentence.

Figure 7.11 Comparison of hash values
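The avalanche effect and the fixed-length output can be observed with Python's standard `hashlib` module. The two input sentences are arbitrary examples that differ only in their final digit, and SHA-256 is an assumed choice of algorithm.

```python
import hashlib

a = b"The quick brown fox jumps over the lazy dog 1"
b = b"The quick brown fox jumps over the lazy dog 2"

digest_a = hashlib.sha256(a).digest()
digest_b = hashlib.sha256(b).digest()
print(digest_a.hex())
print(digest_b.hex())

# Count how many of the 256 output bits differ between the two digests.
differing_bits = sum(bin(x ^ y).count("1") for x, y in zip(digest_a, digest_b))
print(differing_bits, "of 256 bits differ")

# The output length is fixed no matter how large the input is.
print(len(hashlib.sha256(b"x" * 1_000_000).digest()) * 8, "bits")
```

For a well-behaved hash function, roughly half of the output bits, about 128 here, are expected to change.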

A hash is used to detect whether a document has changed. To verify the integrity of a document, the document is hashed by the sender, and the hash value is included with the message. Upon receipt, the receiver hashes the message once again, and the hash values are compared. If the hash values are the same, the message did not change in transit.
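The sender and receiver steps can be sketched with `hashlib`; the message and helper names are hypothetical. Note that a bare hash detects changes only if the hash itself reaches the receiver unaltered, which is why the digital signatures and HMACs described in this chapter protect the hash with a key.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def unchanged(received: bytes, expected_hash: str) -> bool:
    # Receiver rehashes what arrived and compares (constant-time compare).
    return hmac.compare_digest(fingerprint(received), expected_hash)


# Sender: hash the document and send the hash along with it.
document = b"Wire 500 to account 1234"
sent_hash = fingerprint(document)

print(unchanged(document, sent_hash))                     # True
print(unchanged(b"Wire 900 to account 1234", sent_hash))  # False
```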

Hashing algorithms take a variable-length input and produce a fixed-length output. For example, Message Digest 5 (MD5) produces a 128-bit hash. Whether a simple sentence or an entire encyclopedia is put through the hashing algorithm, the output is always 128 bits. The Secure Hash Algorithm 2 (SHA-2) family of hash functions is implemented in widely used security applications and protocols, including TLS and SSL, PGP, SSH, S/MIME, and IPsec.

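The hashing algorithm must be collision resistant. This means that it must be computationally infeasible to find two different messages that result in the same hash value. Because the output has a fixed length, collisions must exist in theory; if one can be found in practice, as has happened with MD5 and SHA-1, the hashing algorithm is considered flawed.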

Table 7.3 illustrates the various hashing functions and the resulting length of their hash value.

Table 7.3 Hashing functions and their hash value lengths

| Algorithm | Hash length (bits) |
| --- | --- |
| Secure Hash Algorithm (SHA-1) | 160 |
| SHA-224 | 224 |
| SHA-256 | 256 |
| SHA-384 | 384 |
| SHA-512 | 512 |
| SHA-512/224 | 224 |
| SHA-512/256 | 256 |
| SHA3-224 | 224 |
| SHA3-256 | 256 |
| SHA3-384 | 384 |
| SHA3-512 | 512 |
| Message Digest 5 (MD5) | 128 |
| RIPEMD-160 | 160 |
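Most of the lengths in Table 7.3 can be checked against Python's `hashlib`, which reports each algorithm's `digest_size` in bytes. This sketch covers only the algorithms that `hashlib` guarantees on every platform; SHA-512/224, SHA-512/256, and RIPEMD-160 depend on the local OpenSSL build, so they are left out.

```python
import hashlib

# Output lengths from Table 7.3 for algorithms that hashlib always provides.
expected_bits = {
    "sha1": 160, "sha224": 224, "sha256": 256, "sha384": 384, "sha512": 512,
    "sha3_224": 224, "sha3_256": 256, "sha3_384": 384, "sha3_512": 512,
    "md5": 128,
}

for name, bits in expected_bits.items():
    actual = hashlib.new(name).digest_size * 8  # digest_size is in bytes
    print(f"{name:<9} {actual:>4} bits")
    assert actual == bits
```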

Message Authentication Code

A Message Authentication Code (MAC) is a message authentication and integrity verification mechanism similar to a hash code or message digest.

When it's in use, the sender computes a small block of data, typically 64 bits in length, from the message using a shared secret key. This block of data is appended to the message and sent to the receiver. The receiver, who holds the same key, recomputes the block over the received message and compares it with the appended block; if they match, the data has not changed in transit.

Authentication is provided to the receiver by the knowledge that the sender is the only other person who possesses the same secret symmetric key.

A MAC integrity block can take the form of the final block of ciphertext generated by the DES encryption algorithm in CBC mode. Because each block in CBC mode is chained to the one before it, the final block depends on every block of the message. The receiver uses the same key to compute the final CBC block over the received message and compares the two blocks. If they match, the receiver can be assured that nothing has changed. The system works because CBC mode propagates any change in the message through to the final block. The disadvantage of this method of providing both message integrity and sender authentication is that it is a very slow process.
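CBC-MAC chaining can be sketched in Python. Because Python's standard library has no DES, `toy_block_encrypt` is a hypothetical stand-in (HMAC-SHA256 truncated to 64 bits); CBC-MAC uses only the encrypt direction, so a keyed pseudorandom function is enough to show the chaining. Zero padding is a simplification.

```python
import hashlib
import hmac

BLOCK = 8  # 64-bit blocks, matching the DES-based MAC described above


def toy_block_encrypt(key: bytes, block: bytes) -> bytes:
    # Stand-in for DES encryption (NOT DES): a keyed PRF truncated to 64 bits.
    return hmac.new(key, block, hashlib.sha256).digest()[:BLOCK]


def cbc_mac(key: bytes, message: bytes) -> bytes:
    # Zero-pad to a whole number of blocks (a simplification).
    padded = message + b"\x00" * (-len(message) % BLOCK)
    state = bytes(BLOCK)  # all-zero initialization vector
    for i in range(0, len(padded), BLOCK):
        chunk = padded[i:i + BLOCK]
        # CBC chaining: XOR the previous output into the next block first.
        state = toy_block_encrypt(key, bytes(s ^ c for s, c in zip(state, chunk)))
    return state  # the final block is the MAC


key = b"shared secret"
tag = cbc_mac(key, b"Transfer 100 units to Bob")
```

Plain CBC-MAC is secure only for fixed-length messages; standardized variants such as CMAC add the extra processing needed for variable-length messages.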

HMAC

A keyed-hash message authentication code, or HMAC, combines a shared secret key with the message before hashing to prove message authenticity. Message authentication codes are used between two parties that share a secret key in order to authenticate information transmitted between them, while the hash function provides the normal message integrity check.

In use, the sender combines the original message with the shared secret key and hashes the result to create an HMAC value. The standard HMAC construction does this in two passes: it hashes the message together with an inner key-derived value, then hashes that result together with an outer key-derived value. The original message, without the secret key, is sent to the receiver along with the HMAC value. The receiver combines the received message with their own copy of the secret key in the same way and hashes it, creating their own HMAC. If the HMAC received from the sender and the HMAC computed by the receiver are the same, the message did not change in transit, proving integrity, and the sender is authenticated by the fact that they possess the shared secret key.
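Python's standard `hmac` module computes HMACs directly. The sketch below also writes out the RFC 2104 construction by hand to show how the key and message are combined (an inner hash over one key-derived block and the message, then an outer hash over a second key-derived block and that result); SHA-256 and the sample key and message are assumptions.

```python
import hashlib
import hmac

BLOCK_SIZE = 64  # SHA-256 processes 64-byte blocks


def hmac_sha256_by_hand(key: bytes, message: bytes) -> str:
    """HMAC as defined in RFC 2104, written out to show the nested hashing."""
    if len(key) > BLOCK_SIZE:
        key = hashlib.sha256(key).digest()
    key = key.ljust(BLOCK_SIZE, b"\x00")
    inner_key = bytes(b ^ 0x36 for b in key)  # ipad
    outer_key = bytes(b ^ 0x5C for b in key)  # opad
    inner = hashlib.sha256(inner_key + message).digest()
    return hashlib.sha256(outer_key + inner).hexdigest()


shared_key = b"shared secret"
message = b"Meet at the usual place at 10"

# Sender computes the HMAC and sends message + HMAC (never the key).
sent_mac = hmac.new(shared_key, message, hashlib.sha256).hexdigest()

# Receiver recomputes with its own copy of the key; constant-time comparison.
expected = hmac.new(shared_key, message, hashlib.sha256).hexdigest()
received_ok = hmac.compare_digest(sent_mac, expected)
```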

Key Length

One of the most important security parameters that can be chosen by the security administrator in any cryptographic system is key length. The length of the key has a direct impact on the amount of processing time required to defeat the cryptosystem. It may also have a direct impact on the processing power required and amount of time required to process each block of data.

According to U.S. government and industry standards, the more critical your data, the stronger the key you should use to protect it. The National Security Agency (NSA) has stated that AES key lengths of 128, 192, and 256 bits are all sufficient to protect government information classified up to the secret level. It has also stated that information classified as top secret must be encrypted by AES using either 192-bit or 256-bit keys.

The obvious exception to the rule is elliptic curve cryptography. Because of the calculations used within the algorithm, a shorter ECC key can be equivalent to a much longer key in other algorithms. For instance, a 256-bit elliptic curve key is reported to be equivalent to a 3,072-bit RSA public key.

It is important to consider how long the data must be stored when choosing the key length. Moore's law states that processing power (more precisely, the number of transistors that can be packed onto an integrated circuit chip) doubles about every 18 months. If this holds and it currently takes an existing computer one year of processing time to break a cryptosystem key, it will take only 3 months with the technology available 3 years from now, because 3 years allows two doublings. If records must be retained under a regulatory environment for 3, 7, or 10 years, select a key length that keeps the data protected for the entire retention period.
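The arithmetic in this paragraph can be sketched in Python. It assumes, as the paragraph does, that computing power doubles every 18 months and that brute force is the best available attack; the helper names are hypothetical. Because each added key bit doubles the keyspace, one extra bit offsets one doubling period.

```python
import math

DOUBLING_PERIOD_YEARS = 1.5  # Moore's law as stated above: every 18 months


def time_to_break(today_years: float, years_from_now: float) -> float:
    """Brute-force time if the attack is attempted later with faster hardware."""
    return today_years / 2 ** (years_from_now / DOUBLING_PERIOD_YEARS)


def extra_key_bits(retention_years: float) -> int:
    """Key bits to add so that hardware gains over the retention period are offset."""
    return math.ceil(retention_years / DOUBLING_PERIOD_YEARS)


print(time_to_break(1.0, 3) * 12, "months")  # 3.0 months
for years in (3, 7, 10):
    print(years, "years of retention -> about", extra_key_bits(years), "extra bits")
```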

Non-repudiation

Non-repudiation prevents the sender of a message from being able to deny having sent it. In asymmetric cryptography, non-repudiation is invoked when a sender uses their private key to encrypt a message. Any recipient of the message may then use the sender's public key to decrypt it. Because the sender was the only person with access to and knowledge of the private key, they cannot deny that they encrypted the message and therefore sent it. The only exception to this rule is when the sender's private key has been compromised, in which case both the private key and the public key should be discarded.

Non-repudiation is also dependent upon proper authentication. The sender must be properly authenticated to the system and therefore have proper access to the private key. Of course, should an unauthenticated intruder access the individual's private key, non-repudiation would be invalid.

Authentication verifies the authenticity of the sender as well as the recipient of the message. Non-repudiation may also be proved by the receiver provided that the receiver is strongly authenticated into their system and that all of their activities and actions are being actively logged. Therefore, the receipt of the message may be traced to the appropriate receiver.

Non-repudiation is enacted in asymmetric cryptography by the sender encrypting the message with their private key, since the only person with access to the private key is the sender. Non-repudiation may also be enacted in symmetric cryptography through the use of a symmetric key that only the receiver and the sender possess. The symmetric key is a shared secret. Should either party receive a message from the other encrypted with the symmetric key, authentication is provided by the fact that the sender must possess the secret symmetric key and is therefore who they purport to be. A threat to this is the interception of a private key or secret symmetric key by a third-party eavesdropper.

This type of non-repudiation is utilized in the single sign-on methodology of Kerberos. The ticket-granting server maintains shared secret symmetric keys, and each resource in the Kerberos realm possesses a unique secret key that it shares with the ticket-granting server. When a ticket issued by the ticket-granting server is presented, the resource decrypts it with its own secret key. Because only the resource and the ticket-granting server know that key, a ticket that decrypts correctly must be valid and authentic. In this case, non-repudiation may also be used as authentication.

It should be noted that if more than two individuals possess a shared secret symmetric key, such as the members of a workgroup, non-repudiation cannot be achieved. In such an arrangement, it is impossible to determine who actually used the symmetric key to encrypt the message.
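To make the shared-key case concrete, the following Python sketch uses HMAC-SHA256, a keyed hash chosen here as an illustrative assumption in place of symmetric encryption, with a hypothetical key shared by two parties. It shows why a shared key authenticates the other party but cannot prove to an outsider which of the two produced a message: every key holder computes an identical tag.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret shared only by Alice and Bob.
shared_key = secrets.token_bytes(32)


def make_tag(key: bytes, message: bytes) -> bytes:
    """HMAC-SHA256 tag: only someone holding `key` can compute it."""
    return hmac.new(key, message, hashlib.sha256).digest()


def tag_is_valid(key: bytes, message: bytes, tag: bytes) -> bool:
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(make_tag(key, message), tag)


message = b"Meet at 10:00"
alice_tag = make_tag(shared_key, message)  # Alice sends this with the message
bob_tag = make_tag(shared_key, message)    # Bob could have produced the very same tag

# Bob knows the message came from a key holder, and since he did not send it,
# it must be Alice. A third party cannot tell the two tags apart.
print(tag_is_valid(shared_key, message, alice_tag), alice_tag == bob_tag)
```

With a third key holder added, even the two original parties could no longer tell who produced a given tag, which is the workgroup problem described above.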

Key Escrow

Key escrow is the process in which keys required to decrypt encrypted data are held in a secure environment in the event that access is required to one or more of the keys. Although users and systems have access to various keys, circumstances may dictate that other individuals within the organization must gain access to those keys.

In a symmetric system all entities in possession of a shared secret key must protect the privacy and secrecy of that key. If it is compromised, lost, or stolen, the entire solution, meaning all entities using that key, is compromised.

In an asymmetric system, each user uses their private key to decrypt messages that have been encrypted with their public key. If a user loses access to their private key, any message encrypted with their public key can no longer be decrypted and is effectively lost.

An escrow system is a storage process in which copies of private keys or secret keys are maintained by a centralized management device or system. The system should securely store the encryption keys as a means of insurance or for recovery if necessary. Keys may be recovered by a key escrow agent and can be used to recover any data encrypted with the damaged or lost key.

Key recovery can be performed only by a key recovery agent or group of agents acting under specific guidance and authority. In some cases, recovery agents may be acting on behalf of a court under the authority of a court order.

A key recovery agent or group of agents should be trusted individuals. Many corporate, government, and banking situations require more than one trusted key recovery agent to be involved, as in a two-man (two-person) policy or dual control. In such cases, a mechanism known as M of N control can be implemented. M of N control is a technique in which there are multiple key recovery agents (N), and a predetermined minimum number of these agents (M) must be present and working in tandem in order to extract keys from the escrow database. The use of M of N control ensures accountability among the key recovery agents and prevents any one individual from having complete control over or access to a cryptographic solution.
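One well-known way to enforce M of N control cryptographically is Shamir's secret sharing, in which an escrowed key is split into N shares so that any M of them reconstruct it while fewer reveal nothing about it. The chapter does not prescribe this scheme; the Python sketch below is an illustration under that assumption, with hypothetical function names and a prime field chosen only to be larger than a 256-bit key.

```python
import secrets
from typing import List, Tuple

# All arithmetic is done modulo this prime (the Mersenne prime 2**521 - 1),
# which is comfortably larger than any 256-bit key being split.
PRIME = 2**521 - 1
Share = Tuple[int, int]


def split_secret(secret: int, m: int, n: int) -> List[Share]:
    """Split `secret` into n shares such that any m of them can rebuild it."""
    if not 1 <= m <= n:
        raise ValueError("need 1 <= m <= n")
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be smaller than PRIME")
    # Random polynomial of degree m-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(m - 1)]
    shares = []
    for x in range(1, n + 1):          # one share per recovery agent
        y = 0
        for c in reversed(coeffs):     # evaluate the polynomial with Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: List[Share]) -> int:
    """Rebuild the secret by Lagrange interpolation of the shares at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

For example, calling `split_secret(int.from_bytes(key, "big"), m=3, n=5)` lets any three of five recovery agents jointly rebuild a 256-bit key, while any two of them learn nothing about it.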

Keys can be stored in either hardware solutions or software solutions. Both offer unique benefits and shortcomings. Hardware key storage solutions are not very flexible. However, as a key storage device, hardware is more reliable and more secure than a software solution. Hardware solutions are usually more expensive and are subject to physical theft. Some common examples of hardware key storage solutions are smart cards and flash memory drives.

Software key storage solutions generally offer customizable and flexible storage techniques. However, anything stored electronically is vulnerable to electronic attacks. Software storage techniques rely on the host operating system, and if sufficient controls aren't in place, keys may be stolen, deleted, or destroyed.

Centralized key management is a system in which all or most generated keys are stored in escrow. Therefore, nothing encrypted by an end user is completely private. In many cases this is unacceptable to a public or open user community because it gives users no control over the privacy, confidentiality, or integrity of their own data.

Decentralized key management allows users to generate their own keys, submit some keys to a centralized key management authority, and maintain their personal keys in a decentralized fashion as they see fit. This limits the number of private keys stored by the centralized key management authority, but it also increases the risk that something will be encrypted with a secret key that is not accessible to a recovery agent.

Key Management

As an SSCP, you should be aware of the various mechanisms, techniques, and processes used to protect, use, distribute, store, and control cryptographic keys. All of these mechanisms and techniques fall under the category of key management solutions.

A key management solution should follow these basic rules:

- The key should be long enough to provide the necessary level of protection.

- The shorter the key length or bit length of the algorithm, the shorter the lifetime of the key.

- Keys should be stored in a secure manner and transmitted encrypted.

- Keys should be truly random and should use the full spectrum of the keyspace.

- Key sequences should never repeat, and initialization vectors (IVs) should never be reused with the same key.

- The lifetime of a key should correspond to the sensitivity of the data it's protecting.

- If a key is used frequently, its lifetime should be shorter.

- Keys should be backed up or escrowed in case of emergency.

- Keys should be destroyed through the use of secure processes at the end of their lifetime.

In centralized key management, complete control of cryptographic keys is given to the organization and key control is taken away from the end users. Under a centralized key management policy, copies of all or most cryptographic keys are often stored in escrow. This allows administrators to recover keys in the event that a user loses their key, but it also allows management to access encrypted data whenever it chooses. A centralized key management solution requires a significant investment in infrastructure, processing capabilities, administrative oversight, and policy and procedural communication.

Cipher Suites

A cipher suite is a standardized collection of algorithms, including an authentication method, an encryption algorithm, a message authentication code, and a key exchange algorithm, that together define the security parameters for network communication between two parties. The term cipher suite is most often used in relation to SSL/TLS connections, and each suite is referred to by a specific name.

An official TLS Cipher Suite Registry is maintained by IANA (Internet Assigned Numbers Authority) at the following location:

www.iana.org/assignments/tls-parameters/tls-parameters.xhtml

A cipher suite is made up of and named by four elements. For example, CipherSuite “TLS_DHE_DSS_WITH_DES_CBC_SHA” consists of the following elements:

- A key exchange mechanism (DHE; the TLS_ prefix identifies the protocol)

- An authentication mechanism (DSS)

- A cipher (DES_CBC)

- A hashing or message authentication code (MAC) mechanism (SHA)
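The naming convention can be illustrated with a small parser. This Python sketch assumes the TLS 1.2-style pattern `TLS_<key exchange>_<authentication>_WITH_<cipher>_<hash>`; the `parse_suite` function is hypothetical. Two caveats apply: when only one algorithm appears before `_WITH_` (as in `TLS_RSA_WITH_AES_128_CBC_SHA`), it serves for both key exchange and authentication, and for AEAD suites such as AES-GCM the final field names the hash used for key derivation (the PRF) rather than a separate MAC. TLS 1.3 names such as `TLS_AES_128_GCM_SHA256` omit key exchange and authentication entirely, so the sketch rejects them.

```python
from typing import NamedTuple


class SuiteParts(NamedTuple):
    key_exchange: str
    authentication: str
    cipher: str
    mac: str


def parse_suite(name: str) -> SuiteParts:
    """Split a TLS 1.2-style suite name such as TLS_DHE_DSS_WITH_DES_CBC_SHA."""
    if not name.startswith("TLS_") or "_WITH_" not in name:
        raise ValueError(f"unrecognized suite name: {name}")
    kx_auth, bulk = name[len("TLS_"):].split("_WITH_", 1)
    # A single name such as "RSA" covers both key exchange and authentication.
    kx, _, auth = kx_auth.partition("_")
    auth = auth or kx
    # The last field names the hash; everything before it names the cipher.
    cipher, _, mac = bulk.rpartition("_")
    return SuiteParts(kx, auth, cipher, mac)


print(parse_suite("TLS_DHE_DSS_WITH_DES_CBC_SHA"))
```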

In practice, a client requesting a TLS session sends a preference-ordered list of the cipher suites it supports as part of the initial handshake. The server replies with a cipher suite selected from those the two parties have in common, typically the client's highest-preference suite that the server also supports (many servers can instead be configured to apply their own preference order).
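The selection step can be sketched in a few lines of Python, assuming the server honors the client's order. The `negotiate` function is hypothetical; real TLS libraries perform this selection internally.

```python
from typing import Iterable, Optional, Sequence


def negotiate(client_prefs: Sequence[str], server_supported: Iterable[str]) -> Optional[str]:
    """Pick the client's most-preferred suite that the server also supports."""
    supported = set(server_supported)
    for suite in client_prefs:  # ordered from most to least preferred
        if suite in supported:
            return suite
    return None  # nothing in common: the handshake fails


client = [
    "TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384",
    "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
    "TLS_RSA_WITH_AES_128_CBC_SHA",
]
server = {"TLS_RSA_WITH_AES_128_CBC_SHA", "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256"}
chosen = negotiate(client, server)
print(chosen)
```

A server-preference policy would simply iterate over the server's ordered list and check membership in the client's list instead.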

Ephemeral Key

An ephemeral key is a one-time key generated at the time of need for a specific use or for use in a short, temporary time frame. An example is a key that is used only once for a communications session and then discarded. Ephemeral keys stand in contrast to static or fixed keys, which remain in use over a long period. Ephemeral keys are used uniquely and exclusively by the endpoints of a single transaction or session.

Perfect Forward Secrecy

Perfect forward secrecy is a property that states that a session key won't be compromised if one of the long-term keys used to generate it is compromised in the future. In essence, perfect forward secrecy is a means of ensuring that no session keys will be exposed if a long-term secret key is exposed.

Perfect forward secrecy is implemented by using short-term, one-time-use ephemeral keys for each and every session. These keys are generated for a one-time use and discarded at the end of each session or period of time. Session keys may also be discarded and reissued based on the volume of data being transmitted.

Perfect forward secrecy also ensures that if a single session key is compromised, only the part of the conversation encrypted with that key is exposed. Likewise, if the long-term asymmetric keys are later obtained or disclosed, they cannot be used to unlock any prior sessions captured by an eavesdropper or man-in-the-middle attacker.
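Ephemeral keys and perfect forward secrecy can be sketched with an ephemeral Diffie-Hellman exchange, the mechanism behind the DHE and ECDHE key exchanges named in TLS cipher suites. The Python sketch below uses a toy group (the Mersenne prime 2**127 - 1 with base 3), chosen only so the arithmetic is easy to follow; it is not secure, and it omits the signatures a real protocol uses to authenticate the ephemeral public values with long-term keys. The function names are hypothetical.

```python
import hashlib
import secrets
from typing import Tuple

# Toy Diffie-Hellman parameters: the Mersenne prime 2**127 - 1 and base 3.
# Chosen for readability only; NOT a secure group. Real systems use standardized
# groups or elliptic curves (ECDHE).
P = 2**127 - 1
G = 3


def ephemeral_keypair() -> Tuple[int, int]:
    """Create a fresh private exponent and matching public value for one session."""
    private = secrets.randbelow(P - 3) + 2  # uniform in [2, P - 2]
    return private, pow(G, private, P)


def session_key(my_private: int, peer_public: int) -> bytes:
    """Derive a 256-bit session key by hashing the shared Diffie-Hellman value."""
    shared = pow(peer_public, my_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def run_session() -> Tuple[bytes, bytes]:
    """Simulate one handshake; each side ends up with the same session key."""
    client_priv, client_pub = ephemeral_keypair()
    server_priv, server_pub = ephemeral_keypair()
    client_key = session_key(client_priv, server_pub)
    server_key = session_key(server_priv, client_pub)
    # The ephemeral private values are never stored, so nothing kept long term
    # can later be used to recompute this session key.
    return client_key, server_key
```

Each call to `run_session` produces new private exponents and therefore an independent session key, so recording traffic and later stealing a long-term key does not let an attacker recompute past session keys.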

Cryptanalytic Attacks

Any cryptographic system is subject to attack. In some cases this may benefit the cryptographic community by exposing weaknesses in popular cryptographic algorithms. Cryptographic algorithms are often made public precisely so that they can be subjected to such attacks to test their strength. In the real world, attacks against cryptographic systems happen every day. The following list explains various common attacks against cryptographic systems:

- Brute-Force Attack In a brute-force attack, all possible keys are tried until one is found that decrypts the ciphertext. It stands to reason that the longer the key, the harder it is to conduct a brute-force attack. Depending on key length and the resources used, a brute-force attack can take minutes, hours, or even centuries to conduct.

- Rainbow Table Attack A rainbow table is a precomputed set of hash values along with the plaintext values that produced them. (Strictly speaking, a rainbow table stores chains of hashes to save space, but the effect is the same: a hash can be mapped back to its plaintext.) For instance, the password “grandma” would be contained in the rainbow table along with its hash value. Rainbow tables are therefore used to deconstruct, or reverse-engineer, a hash value. Since passwords are stored on systems as hash values, an attacker who obtains the list of hashed passwords can process them against a rainbow table to recover the original passwords. Rainbow tables are built for specific hash algorithms and hash lengths.

- Known Plaintext Attack In a known plaintext attack, the attacker has access to both the plaintext and the ciphertext. The goal of the attacker is to determine the original key used to encrypt the ciphertext. In some cases, they may be analyzing the algorithm used to create the ciphertext.

- Chosen Ciphertext Attack In a chosen ciphertext attack, the attacker can choose ciphertexts and obtain their decryptions, for example through access to the decryption mechanism, and analyzes the resulting plaintext in an attempt to determine the key or details of the algorithm.

- Chosen Plaintext Attack In a chosen plaintext attack, the attacker has access to the algorithm or even the machine used to encrypt messages, but not to the key. The attacker processes chosen plaintext through the cryptosystem and studies the resulting ciphertext in an attempt to determine the key.

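- Differential Cryptanalysis Differential cryptanalysis is the study of how differences in input information affect the resulting differences in output as the information is processed through a cryptographic system. This method uses the statistical patterns of these changes as they propagate through the system. Differential cryptanalysis has been used against several cryptosystems; against DES, it yields an attack that is theoretically faster than brute force, although it requires an impractically large number of chosen plaintexts.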

- Ciphertext-Only Attack The ciphertext-only attack is the most difficult attack to carry out. The attacker has little or no information other than the ciphertext and attempts to use character frequencies, statistical data, trends, and any other available information to assist in deciphering the ciphertext.

- Frequency Analysis Frequency analysis is the study of how often various characters and character combinations appear in a language. For instance, in English a single-letter word is most often I or A, while common two-character combinations include qu, to, be, and others. Such patterns assist the cryptanalyst in breaking the encryption.

- Birthday Attack A birthday attack is based on a statistical fact, referred to as the birthday paradox: the probability that two persons in a room share the same birthday depends on the number of persons in the room and rises much faster than intuition suggests. For instance, if there are 23 persons in a room, there is about a 50% chance that two of them share a birthday. This increases to better than a 99.9% chance with 75 persons in the room. This type of statistical attack is used primarily against hash values, because it is far easier and faster to find two plaintext messages that produce the same hash value (a collision) than it is to find the original plaintext for a given hash value. The birthday attack relies on the statistical probability that some pair among many random values will match, which makes it much faster than exhausting every possibility with brute force.

- Dictionary Attack A dictionary attack is a common form of brute-force attack against passwords. A dictionary attack may try every word in a word list when nothing is known about the target, or it may be filtered down to suspected plaintext words, such as a mother's maiden name or the names of pets. These candidate words are then entered into a specific input space, such as a password field.

Other dictionary attacks hash various dictionary values and compare them against the values in a hashed password file. Dictionary attacks may be successful when some information is known about the individual, such as pet names, birth dates, mother's maiden name, names of children, and any words associated with the person's working environment (such as the medical or legal field).
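For the brute-force attack, the cost grows with the size of the keyspace, 2^n for an n-bit key, and on average the correct key is found after searching half of it. This Python sketch estimates the time required; the guess rate is an assumption supplied by the caller, and `brute_force_years` is a hypothetical helper.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_years(key_bits: int, keys_per_second: float, average: bool = True) -> float:
    """Estimate years needed to brute-force an n-bit key at a given guess rate.

    With average=True, assume the key is found after searching half the keyspace;
    with average=False, return the worst case (every key tried).
    """
    keyspace = 2 ** key_bits
    guesses = keyspace / 2 if average else keyspace
    return guesses / keys_per_second / SECONDS_PER_YEAR


for bits in (40, 56, 128):
    # 10**12 guesses per second is a hypothetical attacker capability.
    print(f"{bits}-bit key: ~{brute_force_years(bits, 1e12):.3g} years on average")
```

At that assumed rate, a 56-bit key falls in about 10 hours on average, while a 128-bit key would take roughly 5 × 10^18 years; each added bit doubles the work.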
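A known plaintext attack is easiest to see against a weak cipher. The Python sketch below uses a toy repeating-key XOR cipher (an assumption for illustration; no real system should use it) and assumes the attacker knows or has guessed the key length. Because ciphertext = plaintext XOR key, XORing a known plaintext with its ciphertext exposes the key.

```python
from itertools import cycle


def xor_cipher(data: bytes, key: bytes) -> bytes:
    """Toy repeating-key XOR cipher; encrypting and decrypting are the same operation."""
    return bytes(d ^ k for d, k in zip(data, cycle(key)))


def recover_key(known_plaintext: bytes, ciphertext: bytes, key_len: int) -> bytes:
    """Known plaintext attack: plaintext XOR ciphertext = key for the aligned bytes."""
    return bytes(p ^ c for p, c in zip(known_plaintext[:key_len], ciphertext[:key_len]))


secret_key = b"K3y!"                       # unknown to the attacker
known = b"Invoice #1001: payment due"      # plaintext the attacker has obtained
intercepted = xor_cipher(known, secret_key)

key_guess = recover_key(known, intercepted, key_len=4)
# The recovered key now decrypts other traffic protected by the same key.
other = xor_cipher(b"Wire funds to account 7781", secret_key)
print(xor_cipher(other, key_guess))
```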
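Frequency analysis can be demonstrated against a simple substitution cipher. This Python sketch assumes a Caesar (shift) cipher on lowercase English text and uses only the single most common letter, assumed to stand for e; the function names are hypothetical. Real cryptanalysis compares full letter and letter-pair distributions, and very short messages may not follow typical frequencies.

```python
import string
from collections import Counter


def caesar(text: str, shift: int) -> str:
    """Shift lowercase letters by `shift` positions; leave other characters alone."""
    out = []
    for ch in text:
        if ch in string.ascii_lowercase:
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        else:
            out.append(ch)
    return "".join(out)


def guess_shift(ciphertext: str, assumed_top: str = "e") -> int:
    """Assume the most frequent ciphertext letter stands for `assumed_top`."""
    counts = Counter(ch for ch in ciphertext if ch in string.ascii_lowercase)
    top, _ = counts.most_common(1)[0]
    return (ord(top) - ord(assumed_top)) % 26


ciphertext = caesar("meet me here at seven", 3)
shift = guess_shift(ciphertext)
print(shift, caesar(ciphertext, -shift))
```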
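The birthday figures can be checked directly, and the same effect can be seen against a hash. This Python sketch computes the probability of a shared birthday and then searches for a collision in SHA-256 deliberately truncated to a small number of bits (a hypothetical weakened hash, used so the search finishes quickly). A collision typically appears after roughly 2^(bits/2) attempts, far fewer than the roughly 2^bits attempts needed to match one specific hash value.

```python
import hashlib
import math


def shared_birthday_probability(people: int, days: int = 365) -> float:
    """Probability that at least two of `people` share a birthday (uniform, no leap days)."""
    if people > days:
        return 1.0
    p_all_distinct = math.prod((days - i) / days for i in range(people))
    return 1.0 - p_all_distinct


def find_truncated_collision(bits: int = 24):
    """Hash counter messages until two distinct ones share the first `bits` bits of SHA-256."""
    seen = {}
    i = 0
    while True:
        msg = f"message-{i}".encode()
        prefix = int.from_bytes(hashlib.sha256(msg).digest(), "big") >> (256 - bits)
        if prefix in seen:
            return seen[prefix], msg, i + 1  # colliding pair and number of hashes tried
        seen[prefix] = msg
        i += 1


print(round(shared_birthday_probability(23), 3))
m1, m2, tries = find_truncated_collision(24)
print(m1, m2, tries)
```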
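Rainbow table and dictionary attacks both come down to matching hashes of candidate passwords against stolen hashes. This Python sketch assumes a password file of unsalted SHA-256 hashes and uses a plain precomputed hash-to-plaintext dictionary instead of true rainbow-table hash chains, which store the same information more compactly. The candidate generator applies a few hypothetical variations (capitalization and common suffixes) to words drawn from what the attacker knows about the target.

```python
import hashlib
from typing import Dict, Iterable, Iterator


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def candidates(words: Iterable[str], suffixes=("", "1", "123", "!")) -> Iterator[str]:
    """Yield each word plus simple variations an attacker might try."""
    for word in words:
        for base in (word.lower(), word.capitalize()):
            for suffix in suffixes:
                yield base + suffix


def build_table(guesses: Iterable[str]) -> Dict[str, str]:
    """Precompute hash -> plaintext once; the table can be reused against many files."""
    return {sha256_hex(g): g for g in guesses}


def crack(password_file: Dict[str, str], table: Dict[str, str]) -> Dict[str, str]:
    """Return {user: plaintext} for every stored hash found in the table."""
    return {user: table[h] for user, h in password_file.items() if h in table}


# Hypothetical stolen password file (user -> unsalted SHA-256 hash).
password_file = {
    "alice": sha256_hex("Rex123"),    # pet's name plus a common suffix
    "bob": sha256_hex("x9!Tq#2v"),    # random password, not in any word list
}
personal_words = ["rex", "smith", "grandma"]  # pet name, surname, common word
table = build_table(candidates(personal_words))
print(crack(password_file, table))
```

Only the account whose password is derived from a known word is recovered; the randomly generated password never appears among the candidates.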

Data Classification and Regulatory Requirements