Chapter 6. The Type System

- Structural types

- Using type constraints

- Type parameters and higher-kinded types

- Existential types

The type system is an important component of the Scala language. It enables lots of rich optimizations and constraints to be used during compilation, which helps runtime speed and prevents programming errors. The type system allows us to create all sorts of interesting walls around ourselves, known as types. These walls help prevent us from accidentally writing improper code. This is done through the compiler tracking information about variables, methods, and classes. The more you know about Scala’s type system, the more information you can give the compiler, and the type walls become less restrictive while still providing the same protection.

When using a type system, it’s best to think of it as an overprotective father. It will constantly warn you of problems or prevent you from doing things altogether. The better you communicate with the type system, the less restrictive it becomes. But if you attempt to do something deemed inappropriate, the compiler will warn you. The compiler can be a great means of detecting errors if you give it enough information.

In his book Imperfect C++, Matthew Wilson uses an analogy of comparing the compiler to a batman. This batman isn’t a caped crusader but is instead a good friend who offers advice and supports the programmer. In this chapter, you’ll learn the basics of the type system so you can begin to rely on it to catch common programming errors. The next chapter will cover more advanced type system concepts, as well as utilizing implicits with the type system.

This chapter will cover the basics of the type system, touching on definitions and theory. The next chapter covers more practical applications of the type system and the best practices to use when defining constraints. Feel free to skip this information if you’re already comfortable with Scala’s type system.

Understanding Scala’s type system begins with understanding what a type is and how to create one.

6.1. Types

A type is a set of information the compiler knows. This could be anything from “what class was used to instantiate this variable” to “what collection of methods are known to exist on this variable.” The user can explicitly provide this information, or the compiler can infer it through inspection of other code. When passing or manipulating variables, this information can be expanded or reduced, depending on how you’ve written your methods. To begin, let’s look at how types are defined in Scala.

What is a Type?

A good example is the String type. This type includes a method substring, among other methods. If the user calls substring on a variable of type String, the compiler allows the call, because it knows that the call will succeed at runtime.

In Scala, types can be defined in two ways:

- Defining a class, trait or object.

- Directly defining a type using the type keyword.

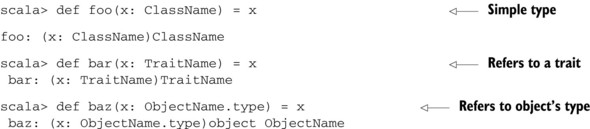

Defining a class, trait, or object automatically creates an associated type for the class, trait, or object. This type can be referred to using the same name as the class or trait. For objects we refer to the type slightly differently due to the potential of classes or traits having the same name as an object. Let’s look at defining a few types and referring to them in method arguments:

Listing 6.1. Defining types from class, trait, or object keywords

As seen in the example, class and trait names can be referenced directly when annotating types within Scala. When referring to an object’s type, you need to use the type member of the object. This syntax isn’t normally seen in Scala, because if you know an object’s type, you can just as easily access the object directly, rather than ask for it in a parameter.
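The listing itself isn’t reproduced in this text, so here is a minimal sketch of what such definitions could look like. The names ClassName, TraitName, ObjectName, and the describe methods are placeholders chosen for illustration; they aren’t taken from the original listing.

```scala
object TypeNamesDemo {
  class ClassName
  trait TraitName
  object ObjectName

  // Classes and traits are referenced directly by name.
  def describeClass(x: ClassName): String = "class instance"
  def describeTrait(x: TraitName): String = "trait instance"

  // An object's type is reached through its `type` member.
  def describeObject(x: ObjectName.type): String = "the singleton object"

  def main(args: Array[String]): Unit = {
    println(describeClass(new ClassName))
    println(describeTrait(new TraitName {}))
    println(describeObject(ObjectName))
  }
}
```

Only ObjectName itself satisfies the parameter type ObjectName.type, which is why this form is rarely needed outside of DSL-style code.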

Using objects as parameters can greatly help when defining domain-specific languages, because you can embed words as objects that become parameters. For example, we could define a simulation DSL as follows:

object Now

object simulate {

def once(behavior : () => Unit) = new {

def right(now : Now.type) : Unit = ...

}

}

simulate once { () => someAction() } right Now

6.1.1. Types and paths

Types within Scala are referenced relative to a binding or path. As discussed in chapter 5, a binding is the name used to refer to an entity. This name could be imported from another scope. A path isn’t a type; it’s a location of sorts where the compiler can find types. A path could be one of the following:

- An empty path. When a type name is used directly, there’s an implicit empty path preceding it.

- The path C.this where C refers to a class. Using the this keyword directly in a class C is shorthand for the full path C.this. This path type is useful for referring to identifiers defined on outer classes.

- The path p.x where p is a path and x is a stable member of p. A stable member is one that the compiler knows for certain will always be accessible from the path p. For example, the path scala.Option refers to the Option singleton defined on the package scala. It’s always known to exist. Formally, stable members are packages, objects, or value definitions introduced on nonvolatile types. A volatile type is a type where the compiler can’t be certain its members won’t change. An example would be an abstract type definition on an abstract class. The type definition could change depending on the subclass, and the compiler doesn’t have enough information to compute a stable identifier from this volatile type.

- The path C.super or C.super[P] where C refers to a class and P refers to a parent type of class C. Using the super keyword directly is shorthand for C.super. Use this path to disambiguate between identifiers defined on a class and a parent class.

Types within Scala are referred to via two mechanisms: the hash (#) and dot (.) operators. The dot operator can be thought of as doing the same for types as it does for members of an object. It refers to a type found on a specific object instance. This is known as a path-dependent type. When a method is defined using the dot operator to a particular type, that type is bound to a specific instance of the object. This means that you can’t use a type from a different object, of the same class, to satisfy any type constraints made using the dot operator. The best way to think of this is that there’s a path of specific object instances connected by the dot operator. For a variable to match your type, it must follow the same object instance path. You can see an example of this later.

The hash operator (#) is a looser restriction than the dot operator. It’s known as a type projection, which is a means of referring to a nested type without requiring a path of object instances. This means that you can reference a nested type as if it weren’t nested. You can see an example usage later.

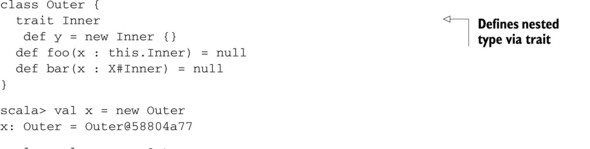

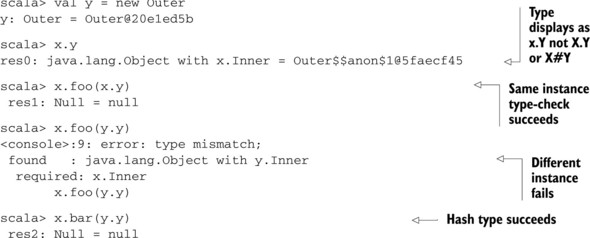

Listing 6.2. Path-dependent types and type projection examples

In the preceding example, the Outer class defines a nested trait Inner along with two methods that use the Inner type. Method foo uses a path-dependent type and method bar uses a type projection. Variables x and y are constructed as two different instances of the Outer class. When we evaluate the y member of the x instance in the REPL, its type is displayed as java.lang.Object with x.Inner. The type is displayed relative to the variable holding the Outer instance, which is x. This is what we meant earlier by a path. To access the correct type Inner, you must travel the path through the x variable. If we call the foo method on x using the Inner instance from the same x variable, the call is successful. But using the Inner instance from the y variable causes the compiler to complain with a type error. The type error explicitly states that it’s expecting the Inner type to come from the same instance as the method call, the x instance.

The bar method was defined using a type projection. The instance restriction isn’t in place as it was for the foo method. When calling the bar method on the x instance using the inner type from the y instance, the call succeeds. This shows that although path-dependent types (foo.Bar) require the Bar instances to be generated from the same foo instance, type projections (Foo#Bar) match any Bar instances generated from any Foo instances. Both path-dependent and type projection rules apply to all nested types, including those created using the type keyword.
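Because the listing isn’t available in this text, the following is a sketch reconstructed from the description above, assuming Scala 2.13. The member y, which creates a new Inner, and the string results are assumptions made so the example prints something.

```scala
object PathDemo {
  class Outer {
    trait Inner
    def y: Inner = new Inner {}

    // Path-dependent: only accepts Inner values created by this same instance.
    def foo(x: this.Inner): String = "foo ok"

    // Type projection: accepts Inner values created by any Outer instance.
    def bar(x: Outer#Inner): String = "bar ok"
  }

  def main(args: Array[String]): Unit = {
    val x = new Outer
    val y = new Outer
    println(x.foo(x.y)) // same instance path: compiles
    // x.foo(y.y)       // rejected by the compiler: y.Inner is not x.Inner
    println(x.bar(y.y)) // projection ignores the instance path
  }
}
```

Uncommenting the middle call is a quick way to see the type mismatch that the compiler reports for path-dependent types.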

Path-Dependent Types Versus Type Projections

All path-dependent types are type projections. A path-dependent type foo.Bar is rewritten as foo.type#Bar by the compiler. The expression foo.type refers to the singleton type of foo. This singleton type can only be satisfied by the entity referenced by the name foo. The path-dependent type (foo.Bar) requires the Bar instances to be generated from the same foo instance, while a type projection Foo#Bar would match any Bar instances generated from any Foo instances, not necessarily the entity referred to by the name foo.

In Scala, all type references can be written as projections against named entities. The type scala.String is shorthand for scala.type#String where the name scala refers to the package scala and the type String is defined by the String class on the scala package.

There can be some confusion when using path-dependent types for classes that have companion objects. For example, if the trait bar.Foo has a companion object bar.Foo, then the type bar.Foo (bar.type#Foo) would refer to the trait’s type and the type bar.Foo.type would refer to the companion object’s type.

6.1.2. The type keyword

Scala also allows types to be constructed using the type keyword. This can be used to create both concrete and abstract types. Concrete types are created by referring to existing types, or through structural types, which we’ll discuss later. Abstract types are created as placeholders that you can later refine in a subclass. This allows a significant level of abstraction and type safety within programs. We’ll discuss this more later, but for now let’s create our own types.

The type keyword can only define types within some sort of context, specifically within a class, trait, or object, or within a subcontext of one of these. The syntax of the type keyword is simple. It consists of the keyword itself, an identifier, and, optionally, a definition or constraint for the type. If a definition is provided, the type is concrete. If no definition is provided, the type is abstract, whether or not it carries constraints. We’ll get into type constraints a little later; for now let’s look at the syntax for the type keyword:

type AbstractType

type ConcreteType = SomeFooType

type ConcreteType2 = SomeFooType with SomeBarType

Notice that concrete types can be defined through combining other types. This new type is referred to as a compound type. The new type is satisfied only if an instance meets all the requirements of both original types. The compiler will ensure that these types are compatible before allowing the combination.

As an analogy, think of the initial two types as buckets of toys. Each toy in a given bucket is equivalent to a member on the original type. When you create a compound type of two types using the with keyword, you’re taking two buckets, from two of your friends, and placing all their toys into one larger, compound bucket. When you’re combining the buckets, you notice that one friend may have a cooler version of a particular toy, such as the latest Ninja Turtle action figure, while the other friend, not as wealthy, has a Ninja Turtle that’s bright yellow and has teeth marks. In this case, you pick the coolest toy and leave it in the bucket. Given a sufficient definition of cool, this is how compound types work in Scala. For Scala, cool refers to type refinement. A type is more refined if Scala knows more about it. You may also have situations where you discover that both friends have broken or incomplete toys. In this case, you would take pieces from each toy and attempt to construct the full toy. For the most part, this analogy holds for compound types. A compound type is a simple combination of all the members from the original types, with various override rules. Compound types are even easier to understand when looking at them through the lens of structural types.
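To make the bucket analogy concrete, here is a small sketch of a compound type built with the with keyword. The traits Named and Aged and the alias Person are hypothetical names introduced only for this illustration.

```scala
object CompoundDemo {
  trait Named { def name: String }
  trait Aged { def age: Int }

  // A compound type: a value must provide the members of both traits.
  type Person = Named with Aged

  def describe(p: Person): String = p.name + " is " + p.age

  def main(args: Array[String]): Unit = {
    val p = new Named with Aged {
      val name = "Ada"
      val age = 36
    }
    println(describe(p)) // prints "Ada is 36"
    // describe(new Named { val name = "Bob" }) // rejected: Aged is missing
  }
}
```

A value that fills only one of the two buckets doesn’t satisfy the compound type, as the commented-out call indicates.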

6.1.3. Structural types

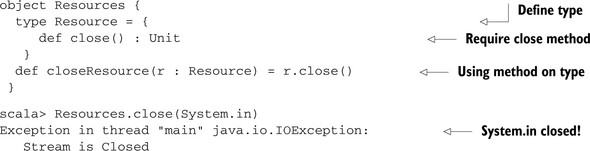

In Scala, a structural type is created using the type keyword and defining what method signatures and variable signatures you expect on the desired type. This allows a developer to define an abstract interface without requiring users to extend some trait or class to meet this interface. One common usage of structural typing is in the use of resource management code.

Structural types are usually implemented with reflection. Reflection isn’t available on every platform, and it can lead to performance issues. In the general case, it’s best to provide named interfaces rather than use structural types. For situations that aren’t performance-sensitive, however, structural types can be very useful.

Some of the most annoying bugs, in my experience, are resource-related. We must always ensure that something acquired is released, and something created is eventually destroyed. As such, there’s a lot of boilerplate code common when using resources. I would love to avoid boilerplate code in Scala, so let’s see if structural types can come to the rescue. Let’s define a simple function that will ensure that a resource is closed after some block of code is executed. There’s no formal definition for what a resource is, so we’ll try to define it as anything that has a close method.

Listing 6.3. Resource handling utility

The first thing we do is define a structural type for resources. We define a type of the name Resource and assign it to an anonymous, or structural, resource definition. The resource definition is a block that encloses a bunch of abstract methods or members. In this case, we define the Resource type to have one member, named close. Finally, in the closeResource method, you can see that we can accept a method parameter using the structural type and call the close member we declared in the definition. Then we attempt to use our method against System.in, which has a close method. You can tell the call succeeds by the exception that’s thrown. In general, you shouldn’t close the master input or output streams when running inside the interpreter! But it does show that structural types have the nice feature of working against any object. This is nice for dealing with libraries or classes we don’t directly control.
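The listing isn’t reproduced in this text, so the sketch below rebuilds it from the description, assuming Scala 2.13, where structural member calls need the scala.language.reflectiveCalls import to compile without a feature warning. The FakeConnection class is a hypothetical stand-in used in place of System.in, so that nothing important gets closed.

```scala
import scala.language.reflectiveCalls

object ResourceDemo {
  // Structural type: anything that has a no-argument close method.
  type Resource = { def close(): Unit }

  // The call to close is dispatched reflectively at runtime.
  def closeResource(r: Resource): Unit = r.close()

  // Hypothetical class; it extends no trait related to Resource.
  class FakeConnection {
    var closed = false
    def close(): Unit = { closed = true }
  }

  def main(args: Array[String]): Unit = {
    val c = new FakeConnection
    closeResource(c)
    println(c.closed) // prints "true"

    // Library classes we don't control work too, as long as they have close().
    closeResource(new java.io.StringReader("data"))
  }
}
```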

Structural typing also works within nested types and with nested types. We can nest types within the anonymous structural block. Let’s try implementing a simple nested abstract type and see if we can create a method that uses this type.

Listing 6.4. Nested structural typing

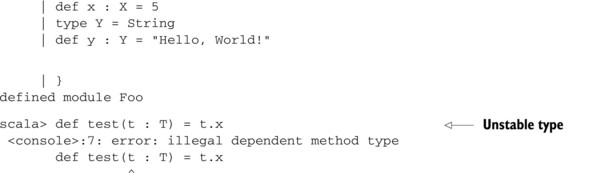

We start by declaring a structural type T. This type contains two nested types: X and Y. X is defined to be equivalent to Int, while Y is left abstract. We then implement a real object Foo that meets this structural type. Now we try to create a test method that should return the result of calling the x method on an instance of type T. We expect this to return an integer, as the x method on T returns the X type, and this is aliased to Int. But the definition of the call fails. Why? Scala doesn’t allow a method to be defined such that the types used are path-dependent on other arguments to the method. In this case, the return value of test would be dependent on the argument to test. We can prove this by writing out the expected return type explicitly:

scala> def test(t : T) : t.X = t.x

<console>:7: error: illegal dependent

Therefore, the compiler inferred the path-dependent type here. If we want a type projection against X instead of a path-dependent type, we can modify our code. The compiler won’t automatically infer this for us, because the inference engine tries to find the most specific type it can. In this case, t.X is inferred, which is illegal. T#X, on the other hand, is valid in this context, and it’s also known to be an Int by the compiler. Let’s see what signature the compiler creates for something returning the type T#X.

scala> def test(t : T) : T#X = t.x

test: (t: T)Int

scala> test(Foo)

res2: Int = 5

As you can see, the method is defined to return an Int, and works correctly against our Foo object. What does this code look like if we have it use the abstract type Y instead? The compiler can make no assumptions about the type Y, so it only allows you to treat it as the absolute minimum type, or Any. Let’s create a method that returns a T#Y type to see what it looks like:

scala> def test2(t :T) : T#Y = t.y

test2: (t: T)AnyRef{

type X = Int;

def x: this.X;

type Y;

def y: this.Y}#Y

The return type of the test2 method is AnyRef{type X = Int; def x: this.X; type Y; def y: this.Y}#Y. The rather verbose signature shows you how far the compiler goes in enforcing the type of the return value. Because T#Y isn’t easily equivalent to another type, the compiler must drag all the information about T around with the type T#Y. Because a type projection isn’t tied to a particular instance, the compiler can be sure that two type projections are compatible. As a quick aside, notice the types of the x and y methods.

The x and y methods have return values that are path-dependent on this. When we defined the x method, we specified only a type of X, and yet the compiler turned the type into this.X. Because the X type is defined within the structural type T, you can refer to it via the identifier X; it refers to the path-dependent type this.X. Understanding when you’ve created a path-dependent type and when it’s acceptable to refer to these types is important.

When you reference one type defined inside another, you have a path-dependent type. Using a path-dependent type inside a block of code is perfectly acceptable. The compiler can ensure that the nested types refer to the exact object instance through examining the code. But to escape a path-dependent type outside this original scope, the compiler needs some way of ensuring the path is the same instance. This can sometimes boil down to using objects and vals instead of classes and defs.

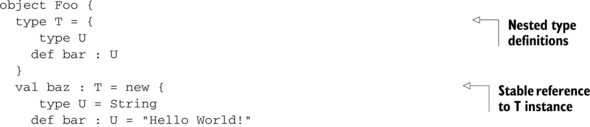

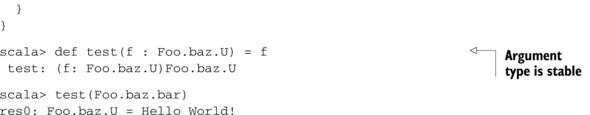

Listing 6.5. Path-dependent and structural types

First we set up the nested types T and U. These are nested on the singleton Foo. We then create an instance of type Foo.T labeled baz. Because baz is a val member, the compiler knows that this instance is unchanging throughout the lifetime of the program and is therefore stable. Finally, we create a method that takes the type Foo.baz.U as an argument. The compiler accepts this because the path-dependent type U is defined on a path known to be stable: Foo.baz. When running into path-dependent type issues, we can fix things by finding a way for the compiler to know that a type is stable, that is, that the type will always be well defined. This can usually be accomplished by utilizing some stable reference path.
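The idea of a stable path can be sketched as follows. This is a variant of the setup described above rather than the original listing: T is written as a named trait instead of a structural type, an assumption made so that the example needs no reflection. The bar member and the string value are also illustrative.

```scala
object StablePathDemo {
  object Foo {
    // Named trait standing in for the structural type T (assumption).
    trait T {
      type U
      def bar: U
    }

    // A val on an object: the compiler treats the path Foo.baz as stable.
    val baz: T = new T {
      type U = String
      def bar: U = "Hello World!"
    }

    // A def is not stable: each call could return a different instance.
    def make: T = baz
  }

  // Legal because U is selected from the stable path Foo.baz.
  def test(f: Foo.baz.U): Foo.baz.U = f

  // def broken(f: Foo.make.U) = f // rejected: Foo.make is not a stable path

  def main(args: Array[String]): Unit =
    println(test(Foo.baz.bar)) // prints "Hello World!"
}
```

Switching baz from a val to a def is enough to make test fail to compile, which matches the advice to prefer objects and vals when a path-dependent type has to escape its original scope.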

Let’s look at a more in-depth example of path-dependent types by designing an Observable trait that you can use as a generic mechanism to watch for changes or notify others of a change. The Observable trait should provide two public methods: one that allows observers to subscribe and another that unsubscribes an observer. Observers should be able to subscribe to an Observable instance by providing a simple function callback. The subscription method should return a handle so that an observer can unsubscribe from change events on the Observable at a future date. With path-dependent types, we can enforce that this handle is valid only with the originating Observable instance. Let’s look at the public interface on Observable:

trait Observable {

type Handle

def observe(callback: this.type => Unit): Handle = {

val handle = createHandle(callback)

callbacks += (handle -> callback)

handle

}

def unobserve(handle: Handle) : Unit = {

callbacks -= handle

}

protected def createHandle(callback: this.type => Unit): Handle

protected def notifyListeners() : Unit =

for(callback <- callbacks.values) callback(this)

}

The first thing to notice is the abstract Handle type. We’ll use this type to refer to registered observer callback functions. The observe method is defined to take a function of type this.type => Unit and return a handle. Let’s look at the callback type. The callback is a function that takes something of this.type and returns a Unit. The type this.type is a mechanism in Scala to refer to the type of the current object. This is similar to calling Foo.type for a Scala object with one major difference. Unlike directly referencing the current type of the object, this.type changes with inheritance. In a later example, we’ll show how a subclass of Observable will require callbacks to take their specific type as their parameter.

The unobserve function takes in a handle that was previously assigned to a callback and removes that observer. This handle type is path-dependent and must come from the current object. This means even if the same callback is registered to different Observable instances, their handles can’t be interchanged.

The next thing to notice is that we use a function here that isn’t yet defined: createHandle. This method should be able to construct handles to callbacks when they’re registered in an observe method. I’ve purposely left this abstract so that implementers of the observable pattern can determine their own mechanism for differentiating callbacks with handles. Let’s try to implement a default implementation for handles.

trait DefaultHandles extends Observable {

type Handle = (this.type => Unit)

protected def createHandle(callback: this.type => Unit): Handle =

callback

}

The DefaultHandles trait extends Observable and provides a simple implementation of Handle: it defines the Handle type to be the same type as the callbacks. This means that whatever equality and hashing are defined on the callback objects themselves will be used in the Observable trait to store and look up observers. In the case of Scala’s Function objects, equality and hash code are instance-based, as is the default for any user-defined object. Now that there’s an implementation for the handles, let’s define an observable object.

Let’s create an IntStore class that will hold an integer. The IntStore will notify observers every time its internal value changes. The IntStore class should also provide a mechanism to get the currently held integer and to set the integer:

class IntStore(private var value: Int)

extends Observable with DefaultHandles {

def get : Int = value

def set(newValue : Int) : Unit = {

value = newValue

notifyListeners()

}

override def toString : String = "IntStore(" + value + ")"

}

The IntStore class extends the Observable trait from the previous lines of code and mixes in the DefaultHandles implementation for handles. The get method returns the value stored in the IntStore. The set method assigns the new value and then notifies observers of the change. The toString method has also been overridden to provide a nicer printed form. Let’s take a look at this class in action:

scala> val x = new IntStore(5)

x: IntStore = IntStore(5)

scala> val handle = x.observe(println)

handle: (x.type) => Unit = <function1>

scala> x.set(2)

IntStore(2)

scala> x.unobserve(handle)

scala> x.set(4)

The x variable is constructed as an IntStore with an initial value of 5. Next an observer is registered that will print the IntStore to the console on changes. The handle to this observer is saved in the handle val. Notice that the type of the handle uses a path-dependent x.type. Next, the value stored in x is changed to 2. The observer is notified and IntStore(2) is printed on the console. Next the handle variable is used to remove the observer. Now, when the value stored in x is changed to 4, the new value isn’t printed to the console. The observer functionality is working as desired.

What happens if we construct multiple IntStore instances and attempt to register the same callback to both? If it’s the same callback, using the DefaultHandles trait means that the two handles should be equal. Let’s try to do this in the REPL:

scala> val x = new IntStore(5)

x: IntStore = IntStore(5)

scala> val y = new IntStore(2)

y: IntStore = IntStore(2)

First we create two separate instances of IntStore, x and y. Next we need to create a callback we can use on both observers. Let’s use the same println method as before:

scala> val callback = println(_ : Any)

callback: (Any) => Unit = <function1>

Now let’s register the callback on both the x and y variable instances and check to see if the handles are equal:

scala> val handle1 = x.observe(callback)

handle1: (x.type) => Unit = <function1>

scala> val handle2 = y.observe(callback)

handle2: (y.type) => Unit = <function1>

scala> handle1 == handle2

res3: Boolean = true

The result is that the handle objects are exactly the same. Note that the == method does a runtime check of equality and works on any two types. This means that theoretically the handle from x could be used to remove the observer on y. Let’s look at what happens when attempting this on the REPL:

scala> y.unobserve(handle1)

<console>:10: error: type mismatch;

found : (x.type) => Unit

required: (y.type) => Unit

y.unobserve(handle1)

^

The compiler won’t allow this usage. Path-dependent typing ties each handle to the Observable instance that generated it. Even though the handles are equal at runtime, the type system has prevented us from using the wrong handle to unregister an observer. This is important because the type of the Handle could change in the future. If we implemented the Handle type differently in the future, then code that relied on handles being interchangeable between IntStores would be broken. Luckily the compiler enforces the correct behavior here.
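For readers who want to try the whole example outside the REPL, the pieces can be assembled into one compilable sketch, assuming Scala 2.13. The callbacks field isn’t shown in the excerpts above, so its definition as a mutable map is an assumption, as is the demo method, which records observed values in a buffer instead of printing them.

```scala
import scala.collection.mutable

object ObservableDemo {
  trait Observable {
    type Handle

    // Assumption: the excerpt never shows this field; a mutable map is one option.
    protected val callbacks: mutable.Map[Handle, this.type => Unit] =
      mutable.Map.empty

    def observe(callback: this.type => Unit): Handle = {
      val handle = createHandle(callback)
      callbacks += (handle -> callback)
      handle
    }

    def unobserve(handle: Handle): Unit = {
      callbacks -= handle
    }

    protected def createHandle(callback: this.type => Unit): Handle

    protected def notifyListeners(): Unit =
      for (callback <- callbacks.values) callback(this)
  }

  trait DefaultHandles extends Observable {
    // The callback itself doubles as its handle.
    type Handle = this.type => Unit
    protected def createHandle(callback: this.type => Unit): Handle = callback
  }

  class IntStore(private var value: Int)
      extends Observable with DefaultHandles {
    def get: Int = value
    def set(newValue: Int): Unit = {
      value = newValue
      notifyListeners()
    }
    override def toString: String = "IntStore(" + value + ")"
  }

  // Returns the values seen by the observer while it was registered.
  def demo(): List[Int] = {
    val x = new IntStore(5)
    val seen = mutable.ListBuffer.empty[Int]
    val handle = x.observe(store => seen += store.get)
    x.set(2)            // observer records 2
    x.unobserve(handle)
    x.set(4)            // no observer is registered any more
    // val y = new IntStore(2)
    // y.unobserve(handle) // rejected: the handle belongs to x, not y
    seen.toList
  }

  def main(args: Array[String]): Unit =
    println(demo()) // prints "List(2)"
}
```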

Path-dependent types have other uses, but this should give you a good idea of their use and utility. What if, in the Observable example, we had wanted to include some kind of restriction on the Handle type? This is where we can use type constraints.

6.2. Type constraints

Type constraints are rules associated with a type that must be met for a variable to match the given type. A type can be defined with multiple constraints at once. Each of these constraints must be satisfied when the compiler is type checking expressions. Type constraints take the following two forms:

- Lower bounds (the selected type must be equal to or a supertype of the bound)

- Upper bounds (the selected type must be equal to or a subtype of the bound; also known as conformance relations)

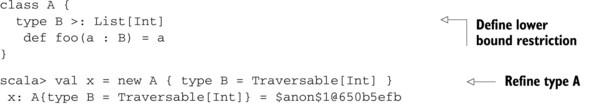

Lower bound restrictions can be thought of as super-restrictions. This is where the type selected must be equal to or a supertype of the lower bound restriction. Let’s look at an example using Scala’s collection hierarchy.

Listing 6.6. Lower bounds on types

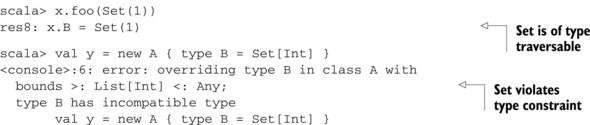

The first thing we do is define type B inside class A to have a lower bound of List[Int]. Then we instantiate a variable x as an anonymous subclass of A, such that type B is stabilized at Traversable[Int]. This doesn’t issue any warning, because Traversable is a supertype of List. The interesting piece here is that we can call our foo method with a Set. A Set isn’t a supertype of the List class; it’s a subtype of Traversable! Just because the type restriction on type B requires it to be a supertype of List doesn’t mean that arguments matching against type B need to be within List’s hierarchy. They only need to match against the concrete form of type B, which is Traversable. What we can’t do is create a subclass of A where the type B is assigned as Set[Int]. Set[Int] isn’t a supertype of List[Int], even though a Set can be referred to polymorphically as an Iterable or Traversable.

Because Scala is a polymorphic object-oriented language, it’s important to understand the difference between compile-time type constraints and runtime type constraints. In this instance, we are enforcing that type B’s compile-time type information must come from a supertype of List or List itself. Polymorphism means that an object of class Set, which subclasses Traversable, can be used when the compile-time type requires a Traversable. When doing so, we aren’t throwing away any behavior of the object; we’re merely dropping some of our compile-time knowledge of the type. It’s important to remember this when using lower bound constraints.
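The lower bound behavior described above can be sketched as follows, assuming Scala 2.13. Iterable[Int] is used in place of Traversable[Int], because Traversable is only a deprecated alias for Iterable in that version; the result type on foo is also an assumption.

```scala
object LowerBoundDemo {
  class A {
    // B must be List[Int] or one of its supertypes.
    type B >: List[Int]
    def foo(a: B): B = a
  }

  // Iterable[Int] is a supertype of List[Int], so this refinement is allowed.
  val x = new A { type B = Iterable[Int] }

  // val bad = new A { type B = Set[Int] } // rejected: Set[Int] is not a supertype of List[Int]

  def main(args: Array[String]): Unit = {
    // A Set is accepted because the argument only has to conform to Iterable[Int].
    println(x.foo(Set(1)))     // prints "Set(1)"
    println(x.foo(List(1, 2))) // prints "List(1, 2)"
  }
}
```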

Upper bound restrictions are far more common in Scala. An upper bound restriction states that any type selected must be equal to or lower than the upper bound type. In the case of a class or trait, this means that any selected type must subclass the class or trait used as the upper bound. In the case of structural types, it means that whatever type is selected must meet the structural type, but can have more information. Let’s define an upper bound restriction.

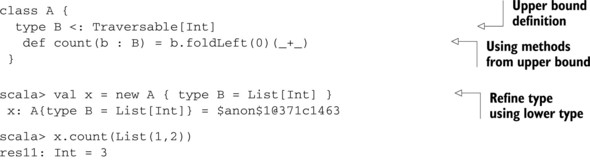

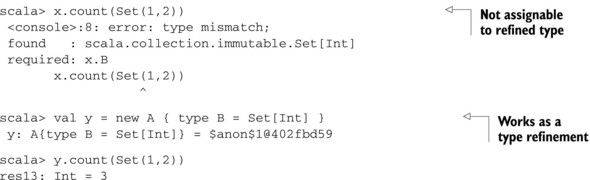

Listing 6.7. Upper bounds on types

First we create a type B that has an upper bound of Traversable[Int]. When we use an unrefined type B, we can use any method defined in Traversable[Int] because we know that any type satisfying B’s type restriction needs to extend Traversable[Int]. Later we can refine type B to be a List[Int], and everything works great. Once refined, we can’t pass other subtypes of Traversable[Int], such as a Set[Int]. The parameters to the count method must satisfy the refined type B. We can also create another refinement of type B that’s a Set[Int], and this will accept Set[Int] arguments. As you can see, upper bounds work the opposite way from lower bounds. Another nice aspect of upper bounds is that you can utilize methods on the upper bound without knowing the full type refinement.
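A sketch of the upper bound example, reconstructed from the description and assuming Scala 2.13, looks like this. As before, Iterable[Int] stands in for Traversable[Int]; the body of count is an assumption.

```scala
object UpperBoundDemo {
  class A {
    // B must be Iterable[Int] or one of its subtypes.
    type B <: Iterable[Int]

    // Methods of the upper bound are usable before B is refined.
    def count(b: B): Int = b.foldLeft(0)(_ + _)
  }

  val x = new A { type B = List[Int] }
  val y = new A { type B = Set[Int] }

  def main(args: Array[String]): Unit = {
    println(x.count(List(1, 2))) // prints "3"
    // x.count(Set(1, 2))        // rejected: x.B is List[Int]
    println(y.count(Set(1, 2)))  // prints "3"
  }
}
```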

Maximum Upper And Lower Bounds

In Scala, all types have a maximum upper bound of Any and a lower bound of Nothing. This is because all types in Scala descend from Any, while Nothing is a subtype of every type. If the compiler ever warns about incompatible type signatures that include Nothing or Any, without your code referring to them, it’s a good bet that you have an unbounded type somewhere that the compiler is trying to infer. The usual cause is an attempt to combine incompatible types, or a missing upper or lower bound on a generic type.

In Scala, expressions are polymorphic. If a method accepts an argument of type Any, it can be passed an expression of type Int. When enforcing type constraints on method parameters, it may not be necessary to use a type constraint at all; the method can declare the parameter with the bound type itself and rely on subtyping. For example:

def sum[T <: List[Int]](t: T) = t.foldLeft(0)(_+_)

The sum method has a redundant type constraint. Because the type T doesn’t occur in the resulting value, the method could be written as

def sum(t: List[Int]) = t.foldLeft(0)(_+_)

without any changes to the meaning.

Bounded types are immensely useful in Scala. They help us define generic methods that can retain whatever specialized types they’re called with. They help design generic classes that can interoperate with all sorts of code. The standard collection library uses them extensively to enable all sorts of powerful combinations of methods. The collections, and other higher-kinded types, benefit greatly from using both upper and lower bounds in code. To understand how and when to use them, we must first delve into type parameters and higher-kinded types.
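As a contrast to the redundant constraint on sum, here is a sketch of a method where the bound earns its keep because the type parameter also appears in the result. The method names checked and checkedWide are hypothetical, introduced only for this comparison.

```scala
object BoundedResultDemo {
  // T appears in the result, so callers get back the specific type they passed in.
  def checked[T <: Iterable[Int]](t: T): T = {
    require(t.forall(_ >= 0), "negative element")
    t
  }

  // Without the type parameter, the static result type widens to Iterable[Int].
  def checkedWide(t: Iterable[Int]): Iterable[Int] = {
    require(t.forall(_ >= 0), "negative element")
    t
  }

  def main(args: Array[String]): Unit = {
    val xs: List[Int] = checked(List(1, 2, 3)) // still a List[Int]
    println(xs.head)                           // prints "1"
    // val ys: List[Int] = checkedWide(List(1, 2, 3)) // rejected: result is Iterable[Int]
  }
}
```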

6.3. Type parameters and higher-kinded types

Type parameters and higher-kinded types are the bread and butter of the type system. A type parameter is a type definition that’s taken in as a parameter when calling a method, constructing a type, or extending a type. Higher-kinded types are those that accept other types and construct a new type. Just as parameters are key to constructing and combining methods, type parameters are the key to constructing and combining types.

6.3.1. Type parameter constraints

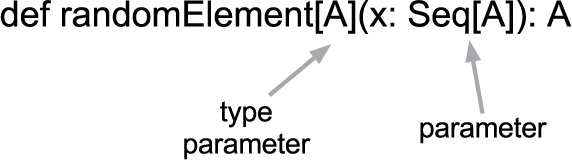

Type parameters are defined within brackets ([]) before any normal parameters are defined. Normal parameters can then use the types named as parameters. Let’s look at a simple method defined using type parameters in figure 6.1:

Figure 6.1. Defining type parameters on a method

This is the definition of a randomElement method. The method takes a type parameter A. This type parameter is then used in the method parameter list. The randomElement method takes a List of some element type, named A, and returns an instance of that element type. When calling the method, we can specify the type parameter as we wish:

scala> randomElement[Int](List(1,2,3))

res0: Int = 3

scala> randomElement[Int](List("1", "2", "3"))

<console>:7: error: type mismatch;

found : java.lang.String("1")

required: Int

randomElement[Int](List("1", "2", "3"))

^

scala> randomElement[String](List("1", "2", "3"))

res1: String = 2

You can see that when we specify Int for the type parameter, the method will accept lists of integers but not lists of strings. But we can specify String as a type parameter and allow lists of strings but not lists of integers. In the case of methods, we can even leave off the type parameter, and the compiler will infer one for us if it can:

scala> randomElement(List("1", "2", "3"))

res1: String = 2
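Because the definition of randomElement appears only in a figure, here is a sketch of what such a method might look like. The body is an assumption (a uniformly random index into a non-empty list), and the object name RandomElementSketch is illustrative.

```scala
import scala.util.Random

object RandomElementSketch {
  // Assumed body: pick a random index into a non-empty list.
  def randomElement[A](xs: List[A]): A = xs(Random.nextInt(xs.length))

  val ints = List(1, 2, 3)
  val strings = List("1", "2", "3")

  // Type parameter given explicitly.
  val explicit: Int = randomElement[Int](ints)

  // Type parameter left off; the compiler infers A = String from the argument.
  val inferred: String = randomElement(strings)
}
```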

This inference is pretty powerful. If there are several arguments to a function, the compiler will attempt to infer a type parameter that matches all the arguments. Scala’s List.apply is a parameterized method. Let’s look at this type inference in action.

scala> List(1.0, "String")

res7: List[Any] = List(1.0, String)

scala> List("String", Seq(1))

res8: List[java.lang.Object] = List(String, List(1))

When passed a Double and a String, the type parameter of List is inferred as Any. This is the lowest possible type that both arguments conform to. Any also happens to be the top type, the one that all values conform to. If we choose different arguments, say a String and a Seq, the compiler infers a lower type, java.lang.Object, otherwise known in Scala as AnyRef.

The compiler’s goal, and yours as well, is to preserve as much type information as possible. This is easier to accomplish using type constraints on type parameters.

It’s possible to specify constraints in line with type parameters. These constraints ensure that any type used to satisfy the parameter abides by the constraints. Specifying an upper bound constraint also allows you to use the members defined on the upper bound. Lower bound constraints don’t imply what members might be on a type but are useful when combining several parameterized types.
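The following sketch shows one bound of each kind on a method’s type parameters. The names BoundsSketch, larger, and prepend are hypothetical and chosen only for illustration.

```scala
object BoundsSketch {
  // Upper bound: C must be a subtype of Iterable[Int], so size is available,
  // and the caller gets back the same specialized type it passed in.
  def larger[C <: Iterable[Int]](a: C, b: C): C =
    if (a.size >= b.size) a else b

  // Lower bound: B must be a supertype of A. Nothing is known about B's members,
  // but the bound lets two differently parameterized types be combined.
  def prepend[A, B >: A](head: B, tail: List[A]): List[B] = head :: tail

  val biggest: List[Int] = larger(List(1, 2), List(3))
  val widened: List[Any] = prepend("zero", List(1, 2))
}
```

Note that larger returns a List[Int] here rather than an Iterable[Int]; the type parameter preserves the caller’s more specific type.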

Type parameters are like method parameters except that they parameterize things at compilation time. It’s important to remember that all type programming is enforced during compilation and all type information must be known at compile time to be useful.

Type parameters also make possible the creation of higher-kinded types.

6.3.2. Higher-kinded types

Higher-kinded types are those that use other types to construct a new type. This is similar to how higher-order functions are those that take other functions as parameters. A higher-kinded type can have one or more other types as parameters. In Scala, you can do this using the type keyword. Here’s an example of a higher-kinded type.

type Callback[T] = Function1[T, Unit]

The type definition declares a higher-kinded type called Callback. The Callback type takes a type parameter and constructs a new Function1 type. The type Callback isn’t a complete type until it’s parameterized.

Type Constructors

Higher-kinded types are also called type constructors because they’re used to construct types. Higher-kinded types can be used to make a complex type, for example M[N[T, X], Y], look like a simpler type, such as F[X].

The Callback type can be used to simplify the signature for functions that take a single parameter and return no value. Let’s look at an example:

scala> val x : Callback[Int] = y => println(y + 2)

x: (Int) => Unit = <function1>

scala> x(1)

3

This first statement constructs a Callback[Int] named x that takes an integer, adds 2 to it, and prints the result. The type Callback[Int] is converted by the compiler into the full type (Int) => Unit. The next statement calls the function defined by x with the value 1.

Higher-kinded types are used to simplify type signatures for complex types. They can also be used to make complex types fit the simpler type signature on a method. Here’s an example:

scala> def foo[M[_]](f : M[Int]) = f

foo: [M[_]](f: M[Int])M[Int]

scala> foo[Callback](x)

res4: Function1[Int, Unit] = <function1>

The foo method is defined as taking a type M that’s parameterized by an unknown type. The _ is used as a placeholder for M’s unnamed type parameter; the same symbol is also used to write existential types, which are covered in more detail in section 6.5. The next statement calls the method foo with a type parameter of Callback and an argument of x, defined in the earlier example. This would not work with the Function1 type directly.

scala> foo[Function1](x)

<console>:9: error: Function1 takes two type parameters, expected: one

foo[Function1](x)

The foo method can’t be called directly with the Function1 type because Function1 takes two type parameters and the foo method expects a type with only one type parameter.
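The REPL steps above can be collected into one compilable sketch. The wrapper object CallbackSketch and the received buffer are assumptions added so that the callback’s effect can be inspected instead of printed.

```scala
import scala.collection.mutable.ListBuffer

object CallbackSketch {
  type Callback[T] = Function1[T, Unit]

  // M is a type constructor taking exactly one type parameter.
  def foo[M[_]](f: M[Int]): M[Int] = f

  // Records results instead of printing them.
  val received = ListBuffer.empty[Int]
  val x: Callback[Int] = y => { received += (y + 2); () }

  // Callback has one type parameter, so it fits M[_].
  val same: Callback[Int] = foo[Callback](x)

  // foo[Function1](x)  // does not compile: Function1 takes two type parameters
}
```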

Higher-kinded types are used to simplify type definitions or to make complex types conform to simple type parameters. Variance is an additional complication to parameterized types and higher-kinded types.

Scala supports a limited version of type lambdas. A type lambda, similar to a function lambda, is a notation where you can define a higher-kinded type directly within the parameter of a function. For the foo method, we can use a type lambda rather than define the Callback type. Here’s an example:

scala> foo[({type X[Y] = Function1[Y, Unit]})#X]( (x : Int) => println(x) )

res7: (Int) => Unit = <function1>

The type lambda is the expression ({type X[Y] = Function1[Y, Unit]})#X. The type X is defined inside parentheses and braces. This constructs an anonymous path containing the type X. It then uses type projection (#) to access the type from the anonymous path. The type X remains hidden behind the anonymous path, and the expression is a valid type parameter, as it refers to a type.
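As a compilable sketch of the same idea, assuming a Scala 2 compiler (the object name TypeLambdaSketch and the value names are illustrative):

```scala
object TypeLambdaSketch {
  def foo[M[_]](f: M[Int]): M[Int] = f

  val printer: Int => Unit = (x: Int) => println(x)

  // The structural type { type X[Y] = ... } is anonymous; #X projects the
  // one-parameter type constructor out of it.
  val viaLambda: Int => Unit =
    foo[({ type X[Y] = Function1[Y, Unit] })#X](printer)
}
```

The call returns the function it was given, so viaLambda and printer refer to the same object; the type lambda only changes how the compiler views the type.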

Type lambdas were discovered and popularized by Jason Zaugg, one of the core contributors to the Scalaz framework.

6.4. Variance

Variance refers to the ability of type parameters to change or vary on higher-kinded types, like T[A]. Variance is a way of declaring how type parameters can be changed to create conformant types. A higher-kinded type T[A] is said to conform to T[B] if you can assign a value of type T[A] to a reference of type T[B] without causing any errors. The rules of variance govern the type conformance of types with parameters. Variance takes three forms: invariance, covariance, and contravariance.

Mutable classes must be invariant. It’s impossible, and unsafe, to define them otherwise. If you want to make use of covariance or contravariance, stick to immutable classes, or expose your mutable class through an immutable interface.

Invariance refers to the unchanging nature of a higher-kinded type parameter. A higher-kinded type that’s invariant implies that for any types T, A, and B if T[A] conforms to T[B] then A must be the equivalent type of B. You can’t change the type parameter of T. Invariance is the default for any higher-kinded type parameter.
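A small sketch of invariance, and of exposing a mutable class through a read-only interface, might look like the following. Cell, ReadOnly, and MutableCell are hypothetical names introduced only for this illustration.

```scala
object InvarianceSketch {
  // Invariant (the default): Cell[String] and Cell[Any] are unrelated types.
  class Cell[A](var value: A)

  // A read-only view never accepts an A, so it can safely be covariant.
  trait ReadOnly[+A] { def value: A }
  class MutableCell[A](var value: A) extends ReadOnly[A]

  val strings = new MutableCell[String]("hello")

  // Allowed: the covariant interface lets a String cell be read as Any.
  val view: ReadOnly[Any] = strings

  // val bad: Cell[Any] = new Cell[String]("hello")  // does not compile: Cell is invariant
}
```

If the commented-out assignment were allowed, code holding the Cell[Any] could store an Int into a cell that other code still treats as a Cell[String].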

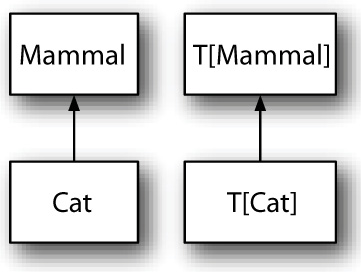

Covariance refers to the ability to substitute a type parameter with its parent type: For any types T, A and B if T[A] conforms to T[B] then A <: B. Figure 6.2 demonstrates a covariant relationship. The arrows in the diagram represent type conformance. The Mammal and Cat relationship is such that the Cat type conforms to the Mammal type; if a method requires something of type Mammal, a value of type Cat could be used. If a type T were defined as covariant, then the type T[Cat] would conform to the type T[Mammal]: A method requiring a T[Mammal] would accept a value of type T[Cat].

Figure 6.2. Covariance

Notice that the direction of conformance arrows is the same. The conformance of T is the same (co-) as the conformance of its type parameters.

The easiest example of this is a list, which is higher-kinded on the type of its elements. You could have a list of strings or a list of integers. Because Any is a supertype of String, we can use a list of strings where a list of Any is expected.

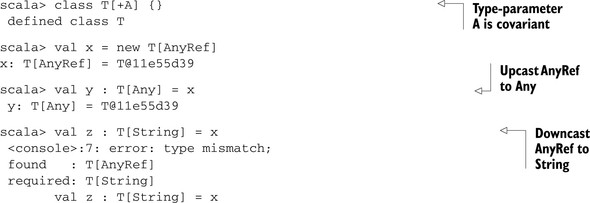

Creating a covariant parameter is as easy as adding a + symbol before the type parameter. Let’s create a covariant type in the REPL and try out the type conformance it creates.

Listing 6.8. Covariance example

First we construct a higher-kinded class T that takes a covariant parameter A. Next we create a new value with the type parameter bound to AnyRef. Now, if we try to assign our T[AnyRef] to a variable of type T[Any], the call succeeds. This is because Any is the parent type of AnyRef, and our covariant constraint is satisfied. But when we attempt to assign a value of type T[AnyRef] to a variable of type T[String], the assignment will fail.
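The steps just described can be sketched as ordinary compiled code. The wrapper object CovarianceSketch and the value names are assumptions; the failing assignment is left as a comment because it’s rejected at compile time.

```scala
object CovarianceSketch {
  class T[+A]

  val x: T[AnyRef] = new T[AnyRef]

  // Compiles: AnyRef <: Any, so T[AnyRef] conforms to T[Any].
  val y: T[Any] = x

  // val z: T[String] = x   // does not compile: AnyRef is not a subtype of String
}
```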

The compiler has checks in place to ensure that a covariant annotation doesn’t violate a few key rules. In particular, the compiler tracks every usage of a type parameter and ensures that if it’s covariant, it occurs only in covariant positions. The same is true for contravariance. We’ll cover the rules for determining variance positions soon, but for now, we’ll look at what happens if we violate one of our variance positions:

scala> trait T[+A] {

| def thisWillNotWork(a : A) = a

| }

<console>:6: error: covariant type A occurs in

contravariant position in type A of value a

def thisWillNotWork(a : A) = a

As you can see, the compiler gives us a nice message that we’ve used our type parameter A in a position that’s contravariant, when the type parameter is covariant. We’ll cover the rules shortly, but for now it’s important to know that full knowledge of the rules isn’t needed to utilize variance correctly if you have a basic understanding of the concept. You can reason in your head whether a type should be covariant or contravariant and then let the compiler tell you when you’ve misplaced your types. You can use tricks to avoid placing types in contravariant positions when you require covariance, but first let’s look at contravariance.

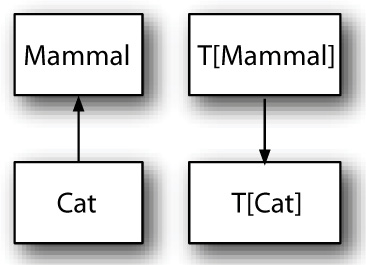

Contravariance is the opposite of covariance. For any types T, A and B, if T[A] conforms to T[B] then A >: B. Figure 6.3 shows the same conformance relationship between Mammal and Cat types. If the type T is defined as contravariant, then a method expecting a type of T[Cat] would accept a value of type T[Mammal]. Notice that the direction of the conformance relationship is opposite (contra-) that of the Mammal–Cat relationship.

Figure 6.3. Contravariance

Contravariance can be harder to reason through but makes sense in the context of a Function object. A Function object is covariant on the return type and contravariant on the argument type. Intuitively this makes sense. You can take the return value of a function and cast it to any supertype of that return value. As for arguments, you can pass any subtype of the argument type. You should be able to take a function of Any => String and cast it to a String => Any but not vice versa. Let’s look at performing this cast using raw methods:

Listing 6.9. Implicit variance of methods

scala> def foo(x : Any) : String = "Hello, I received a " + x

foo: (x: Any)String

scala> def bar(x : String) : Any = foo(x)

bar: (x: String)Any

scala> bar("test")

res0: Any = Hello, I received a test

scala> foo("test")

res1: String = Hello, I received a test

First we create a foo method of type Any => String. Next we define bar, a method of type String => Any. As you can see, we can implement bar in terms of foo, and calls to bar and foo with strings return the same value, as the functions are implemented the same. They do require differing types. We can’t pass an Int variable to the bar method, as it will fail to compile, but we can pass that Int variable to the foo method. Now if we want to construct an object that represents a function, we’d like this same behavior; that is, we’d like to be able to cast the function object as flexibly as possible. Let’s begin by defining our function object.

Listing 6.10. First attempt at defining a function object

scala> trait Function[Arg,Return]

defined trait Function

scala> val x = new Function[Any,String] {}

x: java.lang.Object with Function[Any,String] = $anon$1@39fba2af

scala> val y : Function[String,Any] = x

<console>:7: error: type mismatch;

found : java.lang.Object with Function[Any,String]

required: Function[String,Any]

val y : Function[String,Any] = x

^

scala> val y : Function[Any,Any] = x

<console>:7: error: type mismatch;

found : java.lang.Object with Function[Any,String]

required: Function[Any,Any]

val y : Function[Any,Any] = x

First we create our Function trait. The first type parameter is for the argument to the function and the second is for the return type. We then construct a new value with an argument of type Any and a return value of type String. If we attempt to cast this to a Function[String,Any] (a function that takes a String and returns an Any), the call fails. This happens because we haven’t defined any variance annotations. Let’s first declare our return value as covariant. Return values are actual values, and we know that we can always take a variable and cast it to a supertype.

Listing 6.11. Function object with only covariance

scala> trait Function[Arg,+Return]

defined trait Function

scala> val x = new Function[Any,String] {}

x: java.lang.Object with Function[Any,String] = $anon$1@3c56b64c

scala> val y : Function[String,Any] = x

<console>:7: error: type mismatch;

found : java.lang.Object with Function[Any,String]

required: Function[String,Any]

val y : Function[String,Any] = x

^

scala> val y : Function[Any,Any] = x

y: Function[Any,Any] = $anon$1@3c56b64c

Once again, we declare our Function trait; but this time we declare the return value type covariant. We construct a new value of the Function trait, and again attempt to cast it. The cast still fails due to type mismatch. But we’re able to cast our return value type from String to Any. Let’s use contravariance on the argument value:

Listing 6.12. Function with covariance and contravariance

scala> trait Function[-Arg,+Return]

defined trait Function

scala> val x = new Function[Any,String] {}

x: java.lang.Object with Function[Any,String] = $anon$1@69adff28

scala> val y : Function[String,Any] = x

y: Function[String,Any] = $anon$1@69adff28

Once again we construct a Function trait, only this time the argument type is contravariant and the return type is covariant. We create an instance of the trait and attempt our cast, which succeeds! Let’s extend our trait to include a real implementation and ensure things work appropriately:

Listing 6.13. Complete function example

scala> trait Function[-Arg,+Return] {

| def apply(arg : Arg) : Return

| }

defined trait Function

scala> val foo = new Function[Any,String] {

| override def apply(arg : Any) : String =

| "Hello, I received a " + arg

| }

foo: java.lang.Object with Function[Any,String] = $anon$1@38f0b51d

scala> val bar : Function[String,Any] = foo

bar: Function[String,Any] = $anon$1@38f0b51d

scala> bar("test")

res2: Any = Hello, I received a test

We create our Function trait, but this time it has an abstract apply method that will hold the logic of the function object. Now we construct a new function object foo with the same logic as the foo method we had earlier. We attempt to construct a bar Function object using the foo object directly. Notice that no new object is created; we’re merely assigning one type to another similar to polymorphically assigning a value of a child class to a reference of the parent class. Now we can call our bar function and receive the expected output.
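Outside the REPL, the same example can be written as a single compilable unit. In this sketch the trait is renamed MyFunction purely to avoid confusion with scala.Function; the last two values are an added illustration showing that the standard Function1 has the same variance.

```scala
object FunctionVarianceSketch {
  // Contravariant in the argument type, covariant in the return type.
  trait MyFunction[-Arg, +Return] {
    def apply(arg: Arg): Return
  }

  val foo = new MyFunction[Any, String] {
    def apply(arg: Any): String = "Hello, I received a " + arg
  }

  // Same object, viewed through a narrower argument type and a wider return type.
  val bar: MyFunction[String, Any] = foo

  // The standard library's Function1[-T1, +R] behaves the same way.
  val f: Any => String = a => "got " + a
  val g: String => Any = f
}
```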

Congratulations! You’re now an initiate of variance annotations. But there are some situations you may encounter when you need to tweak your code for appropriate variance annotations.

6.4.1. Advanced variance annotations

When designing a higher-kinded type, at some point you’ll wish it to have a particular variance, and the compiler won’t let you do this. When the compiler restricts variance but you know it shouldn’t, there’s usually a simple transform that can fix your code to compile and keep the type system happy. The easiest example of this is in the collections library.

The Scala collections library provides a mechanism for combining two collections types. It does this through a method called ++. In the actual collections library, the method signature is pretty complicated due to the library’s advanced features, so for this example we’ll use a simplified version of the ++ signature. Let’s attempt to define an abstract List type that can be combined with other lists. We’d like to be able to convert, for example, a list of strings to a list of Any, so we’re going to annotate the ItemType parameter as covariant. We’ll define our ++ method such that it takes another list of the same ItemType and returns a new list that’s the combination of the two lists. Let’s take a look at what happens:

Listing 6.14. First attempt at a list interface

scala> trait List[+ItemType] {

| def ++(other : List[ItemType]): List[ItemType]

| }

<console>:6: error: covariant type ItemType occurs in

contravariant position in type List[ItemType] of value other

def ++(other : List[ItemType]): List[ItemType]

The compiler is complaining that we’re using the ItemType parameter in a contravariant position! This statement is true, but we know that it should be safe to combine two lists of the same type and still be able to cast them up the ItemType hierarchy. Is the compiler too restrictive when it comes to variance? Perhaps, but let’s see if we can work around this.

We’re going to make the ++ method take a type parameter. We can use this new type parameter in the argument to avoid having ItemType in a contravariant position. The new type parameter should capture the ItemType of the other List. Let’s naively use another type parameter:

Listing 6.15. Naive attempt to work around variance

scala> trait List[+ItemType] {

| def ++[OtherItemType](other: List[OtherItemType]): List[ItemType]

| }

defined trait List

scala> class EmptyList[ItemType] extends List[ItemType] {

| def ++[OtherItemType](other: List[OtherItemType]) = other

| }

<console>:7: error: type mismatch;

found : List[OtherItemType]

required: List[ItemType]

def ++[OtherItemType](other: List[OtherItemType]) = other

Adding the OtherItemType lets the creation of the List trait succeed. Great! Let’s see if we can use it. We implement an EmptyList class that’s an efficient implementation of a List of no elements. The combination method, ++, should return whatever is passed to it, because it’s empty. When we define the method, we get a type mismatch. The issue is that OtherItemType and ItemType aren’t compatible types! We’ve enforced nothing about OtherItemType in our ++ method and therefore made it impossible to implement. Well, we know that we need to enforce some kind of type constraint on OtherItemType and that we’re combining two lists. We’d like OtherItemType to be some type that combines well with our list. Because ItemType is covariant, we know that we can cast our current list up the ItemType hierarchy. As such, let’s use ItemType as the lower bound constraint on OtherItemType. We’ll also need to change the return type of the ++ method to return the OtherItemType, as OtherItemType may be higher up the hierarchy than ItemType is. Let’s take a look:

Listing 6.16. Appropriately working with variance

scala> trait List[+ItemType] {

| def ++[OtherItemType >: ItemType](

| other: List[OtherItemType]): List[OtherItemType]

| }

defined trait List

scala> class EmptyList[ItemType] extends List[ItemType] {

| def ++[OtherItemType >: ItemType](

| other: List[OtherItemType]) = other

| }

defined class EmptyList

Our new definition of empty list succeeds. Let’s take it for a test drive through the REPL and ensure that combining empty lists of various types returns the types we desire.

Listing 6.17. Ensuring the correct type changes

scala> val strings = new EmptyList[String]

strings: EmptyList[String] = EmptyList@2cfa930d

scala> val ints = new EmptyList[Int]

ints: EmptyList[Int] = EmptyList@58e5ebd

scala> val anys = new EmptyList[Any]

anys: EmptyList[Any] = EmptyList@65685e30

scala> val anyrefs = new EmptyList[AnyRef]

anyrefs: EmptyList[AnyRef] = EmptyList@1d8806f7

scala> strings ++ ints

res3: List[Any] = EmptyList@58e5ebd

scala> strings ++ anys

res4: List[Any] = EmptyList@65685e30

scala> strings ++ anyrefs

res5: List[AnyRef] = EmptyList@1d8806f7

scala> strings ++ strings

res6: List[String] = EmptyList@2cfa930d

First we declare our variables. These are lists of String, Int, Any, and AnyRef. Now let’s combine lists and see what happens. First we try to combine our list of Strings and Ints. You can see that the compiler infers Any as a common superclass to String and Int and gives you a list of Any. This is exactly what we wanted! Next we combine our list of strings with a list of anys and we get another list of anys. Once again, this is as we desired. If we combine the list of strings with a list of AnyRefs, the compiler infers AnyRef as the lowest possible type, and we retain a small amount of type information. If we combine the list of strings with another list of strings, we’ll retain the type of a List[String]. We now have a list interface that’s very powerful and type-safe.
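For readers working outside the REPL, here is the same interface as a compilable sketch. The trait is renamed MyList here only so that it doesn’t shadow scala.List; the explicit type ascriptions stand in for the types the REPL printed.

```scala
object VarianceListSketch {
  trait MyList[+ItemType] {
    // The lower bound keeps ItemType out of a contravariant position.
    def ++[OtherItemType >: ItemType](
      other: MyList[OtherItemType]): MyList[OtherItemType]
  }

  class EmptyList[ItemType] extends MyList[ItemType] {
    // An empty list combined with anything is just the other list.
    def ++[OtherItemType >: ItemType](
      other: MyList[OtherItemType]): MyList[OtherItemType] = other
  }

  val strings = new EmptyList[String]
  val ints = new EmptyList[Int]
  val anyrefs = new EmptyList[AnyRef]

  val mixed: MyList[Any] = strings ++ ints        // common supertype is Any
  val refs: MyList[AnyRef] = strings ++ anyrefs   // common supertype is AnyRef
  val same: MyList[String] = strings ++ strings   // element type is retained
}
```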

Variance is Hard

In Scala, the choice of making something variant or not is important. The variance annotation can affect a lot of the mechanics in Scala, including type inference. The safest bet when working with variance is to start with everything invariant and mark variance as needed.

In general, when running into covariance or contravariance issues in class methods, the fix is usually to introduce a new type parameter and use it in the signature of the method. In fact, the final ++ method definition is far more flexible, and still type-safe; therefore, when you face issues with variance annotations, step back and try to introduce a few new type parameters.

6.5. Existential types

Existential types are a means of constructing types where portions of the type signature are existential, where existential means that although some real type meets that portion of a type signature, we don’t care about the specific type. Existential types were introduced into Scala as a means to interoperate with Java’s generic types, so we’ll start by looking at a common idiom found in Java programs.

In Java, generic types were added to the language later, with backward compatibility in mind. As such, the Java collections API was enhanced to utilize generic types but still supports code that’s written without generic types. This was done using a combination of erasure and a subdued form of existential types. Scala, seeking to interoperate as closely as possible with Java, also supports existential types for the same reason. Scala’s existential types are far more powerful and expressive than Java’s, as illustrated next.

Let’s look at Java’s List interface:

interface List<E> extends Collection<E> {

E get(int idx);

...

}

The interface has a type parameter E which is used to specify the type of elements in the list. The get method is defined using this type parameter for its return value. This setup should be familiar from the earlier discussion of type parameters. The strangeness begins when we look at the backward compatibility. The older List interface in Java was designed without generic types. Code written for this old interface is still compatible with Java Generics. For example:

List foo = ...

System.out.println(foo.get(0));

The generic parameter for the List interface is never specified. Although an experienced Java developer would know that the type returned by the get method is java.lang.Object, they might not fully understand what’s going on. In this example, Java is using existential types when type parameters aren’t specified. This means that the only information known about the missing type parameter is that it must be a subtype of or equivalent to java.lang.Object because all type parameters in Java are a subtype of or equivalent to java.lang.Object. This allows the older code, where List had no generic type parameter and the get method returned the type Object, to compile directly against the new collections library.

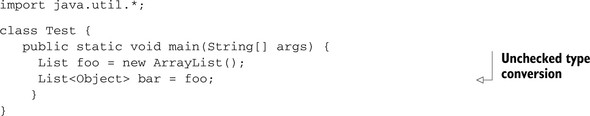

Is creating a list without any type parameters equivalent to creating a List<Object>? The answer is no; they have a subtle difference. When compiling the following code, you’ll see unchecked warnings from the compiler:

When passing the -Xlint flag to javac, the following warning is displayed:

es.java:7: warning: [unchecked] unchecked conversion

found : java.util.List

required: java.util.List<java.lang.Object>

List<Object> bar = foo;

^

1 warning

Java doesn’t consider List and List<Object> the same type, but it will automatically convert between the two. This is done because the practical difference between these two types, in Java, is minimal. In Scala, existential types take on a slightly different flavor. Let’s create an existential type in a Java class and see what it looks like in the Scala REPL. First, the Java class:

public class Test {

public static List makeList() {

return new ArrayList();

}

}

The Test class provides a single makeList method that returns a List with existential type signature. Let’s start up the Scala REPL and call this method:

scala> Test.makeList()

res0: java.util.List[_] = []

The type returned in the REPL is java.util.List[_]. Scala provides a convenience syntax for creating existential types that uses the underscore in the place of a type parameter. We’ll cover the full syntax shortly, but this shorthand is more commonly found in production code. This _ can be considered a placeholder for a single valid type. The _ is different from closure syntax because it isn’t a placeholder for a type argument, but rather is a hole in the type. The compiler isn’t sure what the specific type parameter is, but it knows that there is one. You can’t substitute a type parameter later; the hole remains.

Deviation from Java

We can’t add things to a List[_] unless the compiler can determine that they’re of the same type as the list. This means we can’t add new values to the list without some form of casting. In Java, this operation would compile and an Unchecked warning would be issued.

Existential types can also have upper and lower bounds in Scala. This can be done by treating the _ as if it were a type parameter. Let’s take a look:

scala> def foo(x : List[_ >: Int]) = x

foo: (x: List[_ >: Int])List[Any]

The foo method is defined to accept a List of some parameter that has a lower bound of Int. This parameter could have any type; the compiler doesn’t care which type, as long as it’s Int or one of its supertypes. We can call this method with a list of strings, because Int and String share a parent, Any. Let’s look.

scala> foo(List("Hi"))

res9: List[Any] = List(Hi)

When calling the foo method with a List("Hi") the call succeeds, as expected.
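A compilable sketch of bounded and unbounded existential holes follows. The object name BoundedExistentialSketch and the value names are illustrative; the java.util.List value is an added example of the restriction on adding elements described earlier.

```scala
object BoundedExistentialSketch {
  // Accepts a List whose element type is Int or some supertype of Int.
  def foo(x: List[_ >: Int]): List[Any] = x

  val fromInts: List[Any] = foo(List(1, 2))

  // List[String] conforms to List[Any] by covariance, and Any >: Int.
  val fromStrings: List[Any] = foo(List("Hi"))

  // An unbounded hole: the list can be inspected, but nothing can be added.
  val unknown: java.util.List[_] = new java.util.ArrayList[String]()
  val size: Int = unknown.size()

  // unknown.add("x")  // does not compile: "x" is not known to match the hidden type
}
```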

6.5.1. The formal syntax of existential types

Scala’s formal syntax for existential types uses the forSome keyword. Here’s the excerpt explaining the syntax from the Scala Language Specification:

Scala Language Specification—Excerpt from Section 3.2.10

An existential type has the form T forSome {Q} where Q is a sequence of type declarations.

In the preceding definition, the Q block is a set of type declarations. Type declarations, in the Scala Language Specification, can be abstract type statements or abstract val statements. The declarations in the Q block are existential. The compiler knows that there’s some type that meets these definitions, but doesn’t remember what that type is specifically. The type declared in the T section can then use these existential identifiers directly. This is easiest to see by converting the convenient syntax into the formal syntax. Let’s take a look:

scala> val y: List[_] = List()

y: List[_] = List()

scala> val x: List[X forSome { type X }] = y

x: List[X forSome { type X }] = List()

The y value is constructed with the type List[_]. The x value is constructed with the type List[X forSome { type X }]. In the type of x, the type X is existential and acts the same as the _ in the type of y. A more complicated scenario occurs when the existential type has a lower or upper bound restriction. In that case, the entire lower bound or upper bound is translated into the forSome block:

scala> val y: List[_ <: AnyRef] = List()

y: List[_ <: AnyRef] = List()

scala> val x: List[X forSome { type X <: AnyRef }] = y

x: List[X forSome { type X <: AnyRef }] = List()

The first value, y, has the type List[_ <: AnyRef]. The existential _ <: AnyRef is translated to type X <: AnyRef in the forSome section. Remember that all type declarations in the forSome block are treated as existential and can be used in the type on the left-hand side. In this case, the left-hand side type is X. The forSome block could be used for any kind of type declaration, including values or other existential types.
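The following sketch, which assumes a Scala 2 compiler (the forSome keyword isn’t available in Scala 3), places the shorthand next to two formal spellings. The object and value names are illustrative.

```scala
import scala.language.existentials

object ForSomeSketch {
  val y: List[_ <: AnyRef] = List("a", "b")

  // What the wildcard expands to: the quantifier wraps the whole applied type.
  val expanded: List[X] forSome { type X <: AnyRef } = y

  // The form used in the text, with the quantifier inside the type argument.
  // Because List is covariant, the same value is accepted here too.
  val inner: List[X forSome { type X <: AnyRef }] = y
}
```

Strictly speaking, the language specification defines the wildcard as shorthand for the first formal spelling, with forSome outside the brackets. For a covariant type such as List the two placements accept the same values, which is why the REPL session above succeeds.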

And now it’s time for a more complex example involving existential types. Remember the Observable trait from the dependent type section? Let’s take another look at the interface for the Observable trait:

trait Observable {

type Handle

def observe(callback: this.type => Unit) : Handle = ...

def unobserve(handle: Handle) : Unit = ...

...

}

Imagine we wanted some generic way to interact with the Handle types that are returned from this object. You can declare a type that can represent any Handle using existential types. Let’s look at this type:

type Ref = x.Handle forSome { val x: Observable }

The type is declared with the name Ref. The forSome block contains a val definition. This means that the value x is existential: the compiler doesn’t care which Observable value, only that there is one. On the left-hand side of the type declaration is the path-dependent type x.Handle. Notice that it isn’t possible to create this existential type using the convenience syntax, as the _ can only stand in for a type declaration.

Let’s create a trait that will track all the handles from Observables using the Ref type. We’d like this trait to maintain a list of handles such that we can appropriately clean up the class when needed. This cleanup involves unregistering all observers. Let’s look at the trait:

trait Dependencies {

type Ref = x.Handle forSome { val x : Observable }

var handles = List[Ref]()

def addHandle(handle: Ref) : Unit = {

handles :+= handle

}

def removeDependencies() : Unit = {

for(h <- handles) h.remove()

handles = List()

}

protected def observe[T <: Observable](

obj : T)(handler : T => Unit) : Ref = {

val ref = obj.observe(handler)

addHandle(ref)

ref

}

}

The trait Dependencies redefines the type Ref as before. The handles member is defined as a List of Ref types. An addHandle method is defined, which takes a handle, the Ref type, and adds it to the list of handles. The removeDependencies method loops over all registered handles and calls their remove method. Finally, the observe method is defined such that it registers a handler with an observable and then registers the Handle returned using the addHandle method.

You may be wondering how we’re able to call remove on the Handle type. This is invalid code using the Observable trait as defined earlier. The code will work with this minor addition to the Observable trait:

trait Observable {

type Handle <: {

def remove() : Unit

}

...

}

The Observable trait is now defined such that the Handle type requires a method remove() : Unit. Now you can use the Dependencies trait to track handlers registered on observables. Let’s look at a REPL session demonstrating this:

scala> val x = new VariableStore(12)

x: VariableStore[Int] = VariableStore(12)

scala> val d = new Dependencies {}

d: java.lang.Object with Dependencies = $anon$1@153e6f83

scala> val t = x.observe(println)

t: x.Handle = DefaultHandles$HandleClass@662fe032

scala> d.addHandle(t)

scala> x.set(1)

VariableStore(1)

scala> d.removeDependencies()

scala> x.set(2)

The first line creates a new VariableStore, which is a subclass of Observable. The next statement constructs a Dependencies object, d, to track registered observable handles. The next two lines register the function println with the Observable x and add the handle to the Dependencies object d. Next, the value inside the VariableStore is changed. This invokes the observers with the current value, and the registered observer println prints VariableStore(1) on the console. After this, the removeDependencies method is called on the object d to remove all tracked observers. The next line again changes the value in the VariableStore, and nothing is output on the console.
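The session above depends on the VariableStore class from earlier in the chapter. For readers who want to run the whole flow in one piece, here is a self-contained sketch under a few assumptions: it targets Scala 2, IntStore is a hypothetical stand-in for VariableStore, the demo method is invented for illustration, and the Dependencies methods are left public so they can be called from outside the trait. It isn't the chapter's implementation, only one way the pieces could fit together:

```scala
import scala.language.existentials
import scala.language.reflectiveCalls

object DependenciesSketch {
  trait Observable {
    // Every Handle must offer remove(); this is a structural bound.
    type Handle <: { def remove(): Unit }
    def observe(callback: this.type => Unit): Handle
  }

  // Hypothetical stand-in for the chapter's VariableStore.
  class IntStore(private var value: Int) extends Observable {
    class HandleClass {
      // Removing a handle unregisters the callback stored under it.
      def remove(): Unit = callbacks = callbacks - this
    }
    type Handle = HandleClass
    private var callbacks: Map[HandleClass, this.type => Unit] = Map.empty

    def observe(callback: this.type => Unit): Handle = {
      val handle = new HandleClass
      callbacks = callbacks + (handle -> callback)
      handle
    }
    def get: Int = value
    def set(newValue: Int): Unit = {
      value = newValue
      callbacks.values.foreach(callback => callback(this))
    }
  }

  trait Dependencies {
    type Ref = x.Handle forSome { val x: Observable }
    private var handles = List.empty[Ref]

    // Public in this sketch (an assumption) so demo() can call them.
    def addHandle(handle: Ref): Unit = handles :+= handle

    def removeDependencies(): Unit = {
      // remove() is reached through the structural bound, so it is a reflective call.
      for (h <- handles) h.remove()
      handles = Nil
    }

    def observe[T <: Observable](obj: T)(handler: T => Unit): Ref = {
      val ref = obj.observe(handler)
      addHandle(ref)
      ref
    }
  }

  // Records every value the observer sees instead of printing it.
  def demo(): List[Int] = {
    val store = new IntStore(12)
    val deps = new Dependencies {}
    var seen = List.empty[Int]
    deps.observe(store)(s => seen :+= s.get)
    store.set(1)              // observer is registered: 1 is recorded
    deps.removeDependencies() // unregisters the observer
    store.set(2)              // no observer left: nothing is recorded
    seen
  }
}
```

Calling DependenciesSketch.demo() mirrors the REPL session: the observer fires for the first set call and not for the second. Collecting values into a list rather than printing them is a choice made here only to make the behavior easy to check.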

Existential types provide a convenient syntax to represent these abstract types and interact with them. The formal forSome syntax isn’t used often, and abstracting over path-dependent types, as we did with Handle, is the case where it’s most likely to be needed. It’s a great tool to understand and reach for when running into nested types that seem inexpressible, but it shouldn’t be needed in most situations.

6.6. Summary

In this chapter, you learned the basic rules governing Scala’s type system. We learned how to define types and combine them. We looked at structural typing and how you can use it to emulate the duck typing of dynamic languages. We learned how to create generic types using type parameters and how to enforce upper and lower bounds on types. We looked at higher-kinded types and type lambdas and how you can use them to simplify complex types. We also looked into variance and how to create flexible parameterized classes. Finally, we explored existential types and how to create truly abstract methods. These basic building blocks of the Scala type system are used to construct advanced models of behavior and interactions within a program. In the next chapter, we use these fundamentals to implement implicit resolution and advanced type system features.