Chapter 17. Engineering for Data Durability

SREs live and breathe reliability, but to many engineers the word reliability is synonymous with availability: “How do we keep the site up?” Reliability is a multifaceted concern, however, and an extremely important part of this is durability: “How do we avoid losing or corrupting our data?”

Engineering for durability is of paramount importance for any company that stores user data. Most companies can survive a period of downtime, but few can survive losing a significant fraction of user data. Building expertise in durable systems is particularly challenging, however; most companies improve availability over time as they grow and as their systems mature, but a single durability mistake can be a company-ending event. It’s therefore important to invest effort ahead of time to understand real-world durability threats and how to engineer against them.

Replication Is Table Stakes

If you don’t want to lose your data, you should store multiple copies of it. You probably didn’t need a book to tell you this. We’ll breeze through most of this pretty quickly because these are really just the basic requirements when it comes to durability.

Backups

Back up your data. The great thing about backups is that they’re logically and physically disjoint from your primary data store: an operational error that results in loss or corruption of database state probably won’t impact your backups. Ideally, backups should be stored both local to your infrastructure, for fast access, and off-site, to safeguard against local physical disasters.

Backups have some major limitations, however, particularly with regard to recovery time and data freshness. Both of these can conspire to leave you vulnerable to more data loss or downtime than you might expect.

Restoration

Restoring state from backups can take a surprisingly long time, especially if you haven’t practiced recovering from backup recently. In fact, if you haven’t tested your backups recently, it’s possible they don’t even work at all!

In the early days of Dropbox, we were dismayed to discover that it was going to take eight hours just to restart a production database after a catastrophic failure. Even though all of the data was intact, we had set the innodb_max_dirty_pages_pct MySQL parameter too high, resulting in MySQL taking eight hours just to scan the redo log during crash recovery.1 Fortunately, this database didn’t store critical data, and we were able to bring dropbox.com back online in a couple of hours by bypassing the database altogether, but this was certainly a wake-up call. Our operational sophistication has improved dramatically since then, which I outline later in this chapter.

Freshness

Backups represent a previous snapshot in time and will usually result in loss of recent data when you restore from them. You typically want to store both full-snapshot backups for fast recovery and for historical versioning, along with incremental backups of more recent state to minimize staleness. Any stronger guarantees than this, however, require an actual replication protocol.

Replication

Database replication techniques range from asynchronous replication to semi-synchronous replication to full quorum or consensus protocols. The choice of replication strategy will be informed by how much inconsistency you can tolerate and, to some extent, the performance requirements of the database. Although replication is often essential for durability, it can also be a source of inconsistency. You must take care with asynchronous replication schemes to ensure that stale data isn’t erroneously read from a replica database. Promoting a replica that is lagging behind the primary can also cause permanent data loss.

Typically, a company will run one primary database and two replicas, and then just assume that the durability problem has been solved. More elaborate storage systems will often require more elaborate replication mechanisms, particularly when storage overhead is a serious concern. For a company like Dropbox that stores exabytes of data, there are more effective ways of providing durability than basic replication. Techniques like erasure coding are adopted instead, which store coded redundant chunks of data across many disks and achieve higher durability with lower storage overhead. Dropbox utilizes variants on erasure coding that are designed to distribute data across multiple geographical regions while minimizing the cross-region network bandwidth required to recover from routine disk failures.

Estimating durability

Regardless of the choice of replication technique, an obvious question you might have is, “How much durability do I actually have?” Do you need one database replica? Two? More?

One convenient way of estimating durability is to plug some numbers into a Markov model. Let’s jump into some (simplified) math here, so take a deep breath—or just skip to the end of this section.

Let’s assume that each disk has a given mean time to failure (MTTF) measured in hours and that we have operational processes in place to replace and re-replicate a failed disk with a given mean time to recovery (MTTR) in hours. We’ll represent these as failure and recovery rates of $\lambda = 1/\mathrm{MTTF}$ and $\mu = 1/\mathrm{MTTR}$, respectively. This means that we assume that each disk fails at a rate of λ failures per hour on average, and that each failed disk is replaced at a rate of μ recoveries per hour.

For a given replication scheme, let’s say that we have n disks in our replication group, and that we lose data if we lose more than m disks; for example, for three-way database replication we have n = 3 and m = 2; for RS(9,6) erasure coding, we have n = 9 and m = 3.
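To make the (n, m) notation concrete, here is a minimal Python sketch comparing the two schemes mentioned above. The `Scheme` class and its field names are illustrative assumptions, and the overhead calculation assumes that the n − m non-redundant disks hold user data, which is true of plain replication and of Reed-Solomon codes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scheme:
    """Hypothetical description of a replication group."""
    name: str
    n: int  # total disks in the replication group
    m: int  # disk failures the group survives (data is lost at m + 1)

    @property
    def data_disks(self) -> int:
        # Disks' worth of user data actually stored in the group.
        return self.n - self.m

    @property
    def overhead(self) -> float:
        # Raw bytes stored per byte of user data.
        return self.n / self.data_disks


three_way = Scheme("three-way replication", n=3, m=2)
rs_9_6 = Scheme("RS(9,6)", n=9, m=3)

for s in (three_way, rs_9_6):
    print(f"{s.name}: survives {s.m} failures at {s.overhead:.1f}x storage")
```

Under these assumptions the erasure-coded group survives one more failure than three-way replication while storing half as many raw bytes per byte of user data.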

We can take these variables and model a Markov chain, shown in Figure 17-1, in which each state represents a given number of failures in a group and the transitions between states indicate the rate of disks failing or being recovered.

Figure 17-1. Durability Markov model

In this model, the flow from the first to second state is equal to nλ because there are n disks remaining that each fail at a rate of λ failures per hour. The flow from the second state back to the first is equal to μ because we have only one failed disk, which is recovered at a rate of μ recoveries per hour, and so on.

The rate of data loss in this model is equivalent to the rate of moving from the second-last state to the data-loss state. This flow can be computed as

$$R(\text{loss}) = n\lambda \prod_{i=1}^{m} \frac{(n-i)\lambda}{i\mu}$$

for a data loss rate of R(loss) replication groups per hour.

This simplified failure model allows us to plug in some numbers and estimate how likely we are to lose data.

Let’s say our disks have an Annualized Failure Rate (AFR) of 3%. We can compute MTTF from AFR by using $\mathrm{MTTF} = -8766 / \ln(1 - \mathrm{AFR})$ (this is approximately $8766 / \mathrm{AFR}$); in this case MTTF = 287,795 hours. Let’s say we have some reasonably good operational tooling that can replace and re-replicate a disk within 24 hours after failure, so MTTR = 24. If we adopt three-way data replication we have n = 3, m = 2, $\lambda = 1/287{,}795$, and $\mu = 1/24$.

Plugging these into the equation earlier, we get $R(\text{loss}) = 7.25 \times 10^{-14}$ data loss incidents per hour or $6.35 \times 10^{-10}$ incidents per year. This means that a given replication group is safe in a given year with probability 0.9999999994.
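The arithmetic can be checked with a short Python sketch. It assumes the loss rate takes the product form R(loss) = nλ · ∏ (n − i)λ / (iμ) for i = 1..m, and that a year is 8,766 hours (365.25 days), which is the value that reproduces the MTTF figure quoted above. The function names are illustrative.

```python
import math

HOURS_PER_YEAR = 8766  # 365.25 days; assumed, chosen to match the quoted MTTF


def mttf_from_afr(afr: float) -> float:
    """Mean time to failure in hours for a given annualized failure rate."""
    return -HOURS_PER_YEAR / math.log(1.0 - afr)


def loss_rate_per_hour(n: int, m: int, mttf: float, mttr: float) -> float:
    """Approximate data-loss rate of one replication group, per hour."""
    lam = 1.0 / mttf  # failures per disk per hour
    mu = 1.0 / mttr   # recoveries per failed disk per hour
    rate = n * lam
    for i in range(1, m + 1):
        rate *= (n - i) * lam / (i * mu)
    return rate


mttf = mttf_from_afr(0.03)
per_hour = loss_rate_per_hour(n=3, m=2, mttf=mttf, mttr=24.0)
per_year = per_hour * HOURS_PER_YEAR

print(f"MTTF    = {mttf:,.0f} hours")
print(f"R(loss) = {per_hour:.2e} per hour, {per_year:.2e} per year")
```

Changing `mttr`, `n`, or `m` in the call shows how sensitive the estimate is to recovery time and replication factor.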

Wait, that’s pretty safe, right?

Well, nine 9s of durability is pretty decent. If you have a huge number of replication groups, however, failure becomes more likely than this, because each individual group has nine 9s of durability, but in aggregate it’s more likely that one will fail. If you’re a big consumer storage company, you’ll likely want to push durability further than this. If you reduce recovery delay or buy better disks or increase replication factor, it’s pretty easy to push durability numbers much higher. Dropbox achieves theoretical durability numbers well beyond twenty-four 9s, aided by some fancy erasure coding and automatic disk recovery systems. According to these models, it’s practically impossible to lose data. Does that mean we get to pat ourselves on the back and go home?
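The aggregate effect is easy to quantify if we assume that replication groups fail independently: the chance that at least one of N groups loses data in a year is 1 − (1 − p)^N. The sketch below uses the per-group figure from above; the fleet sizes are hypothetical and chosen only for illustration.

```python
import math


def fleet_loss_probability(p_group: float, groups: int) -> float:
    """P(at least one group loses data in a year), assuming independence."""
    # 1 - (1 - p)^N, computed with log1p/expm1 to stay accurate for tiny p.
    return -math.expm1(groups * math.log1p(-p_group))


p = 6.35e-10  # annual loss probability of a single group, from the text

for groups in (1, 1_000_000, 1_000_000_000):  # hypothetical fleet sizes
    prob = fleet_loss_probability(p, groups)
    print(f"{groups:>13,} groups: {prob:.3g}")
```

With a billion groups, the same nine 9s per group leaves close to an even chance of at least one loss per year, which is why large storage providers push per-group durability much further.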

Unfortunately not…

Your durability estimate is only an upper bound!

It’s fairly easy to design a system with astronomically high durability numbers. Twenty-four 9s is an MTTF of 1,000,000,000,000,000,000,000,000 years. When your MTTF dwarfs the age of the universe, it might be time to reevaluate your priorities.

Should we trust these numbers, though? Of course not, because the secret truth is that fixating on theoretical durability estimates misses the point. They tell you how likely you are to lose data due to routine disk failure, but routine disk failure is easy to model and protect against. If you lose data due to routine disk failure, you’re probably doing something wrong.

What’s the most likely way you’re going to lose data? Is it losing a specific set of half a dozen different disks across multiple geographical regions within a narrow window of time? Or, is it an operator accidentally running a script that deletes all your files, or a firmware bug that causes half your storage nodes to fail simultaneously, or a software bug that silently corrupts 1% of your objects? Protecting against these threats is where the real SRE work comes in.

Real-World Durability

In the real world, the incidents that affect you worst are the ones that you don’t see coming: the “unknown unknowns.” If you saw something coming and didn’t protect against it, you weren’t doing your job anyway! Because we never know when a truck will plow into a data center or when someone will let an intern loose on production infrastructure, we need to devote our efforts to not only reducing the scope of bad things that can happen, but also being able to recover from the things that do. Bad things will happen. Reliable companies are the ones that are able to respond and recover before a user is impacted.

So, where do we start when guarding against the unknown? We’re going to devote the rest of this chapter to durability strategies that span the four pillars of durability engineering:

Isolation

Protection

Verification

Automation

Isolation

Strong isolation is the key to failure independence.

Durability on the basis of replication hinges entirely on these replicas failing independently. Even though this might seem pretty obvious, there is usually a large correlation between failure of individual components in a system. A data center fire might destroy on-site backups; a bad batch of hard drives might fail within a short timeframe; a software bug might corrupt all your replicas simultaneously. In these situations, you’ve suffered from a lack of isolation.

Physical isolation is an obvious concern, but perhaps even more important are investments in logical and operational isolation.

Physical isolation

To put it succinctly, store your stuff on different stuff.

There’s a wide spectrum of physical failure domains that spans from disks to machines, to racks, to rows, to power feeds, to network clusters, to data centers, to regions or even countries. Isolation improves as you go up the stack, but usually comes with a significant cost, complexity, or performance penalty. Storing state across multiple geographic regions often comes with high network and hardware costs, and often entails a big increase in latency. At Dropbox, we design data placement algorithms taking into consideration the power distribution schematics in the data center to minimize the impact of a power outage. For many companies this is clearly overkill.

Every company needs to pick an isolation level that matches its desired guarantees and technical feasibility, but after a level is chosen, it’s important to own it. “If this data center burns down, we’re going to lose all of our data and the company is over” is not necessarily an irresponsible statement, but it is irresponsible to be caught by surprise when you’re unsure of what isolation guarantees you actually have.

There are other dimensions to physical isolation, like dual-sourcing your hardware or operating multiple hardware revisions of disks and storage devices. Although this is outside the scope of many companies, large infrastructure providers will usually buy hardware components from multiple suppliers to minimize the impact of a production shortage or a hardware bug. You’d likely be surprised how often bugs show up in components like disk drivers, routing firmware, and RAID cards. Judicious adoption of hardware diversity can reduce the impact of catastrophic failures and provide a backup option if a new hardware class doesn’t pass production validation testing.

Logical isolation

Failures tend to cascade and bugs tend to propagate. If one system goes down, it will often hose everything else in the process. If one bad node begins writing corrupt data to another node, that data can propagate through the system and corrupt an increasingly large share of your storage. These failures happen because distributed systems often have strong logical dependencies between components.

The key to logical isolation is to build loosely coupled systems.

Logical isolation is difficult to achieve. Database replicas will happily store corrupt data issued to them by the primary database. A quorum-based consensus system like ZooKeeper will suffer the same fate. These systems are not only tightly coupled from a durability perspective, they’re tightly coupled from an availability perspective: if a load spike leads to a failure of one component, there is usually an even greater load applied to the other components, which subsequently also fail.

Strong logical isolation usually needs to be designed into the underlying architecture of a system.

One example at Dropbox is region isolation within the storage system. File objects at Dropbox are replicated extensively within a single geographical region, but also duplicated in a completely separate geographical region on the other side of the country. The replication protocols within a region are quite complicated and designed for high storage efficiency, but the API between the two regions is extremely simple: primarily just put and get, as illustrated in Figure 17-2.

Figure 17-2. Isolated multiregion storage architecture

Strong isolation between regions is a nice way to use modularity to hide complexity, but it comes at a significant cost in terms of replication overhead. So why would a company spend more than it needs to for storage?

The abstraction boundary between storage regions makes it extremely hard for cascading issues to propagate across regions. The loose logical coupling and simple API makes it difficult for a bug or inconsistency in one region to impact the other. The loose coupling also makes it possible to take an entire region down in an emergency without impacting our end users; in fact, we take a region down every week as a test exercise. This architecture results in a system that is extremely reliable from an availability and durability perspective, without imposing a significant operational burden on the engineers who run the system.

Operational isolation

The most important dimension to isolation is operational isolation, yet it is one that is often overlooked. You can build one of the world’s most sophisticated distributed storage systems, with extensive replication and physical and logical isolation, but then let someone run a fleet-wide firmware upgrade that causes disk writes to fail, or accidentally reboot all your machines. These aren’t academic examples; we’ve seen both happen in the early days of Dropbox.

Typically, the most dangerous component of a system is the people who operate it. Mature SRE organizations recognize this and build systems to protect against themselves. I elaborate more on protections in the next section, but one critical set of protections is isolation across release process, tooling, and access controls. This means implementing restrictions to prevent potentially dangerous batch processes from running across multiple isolation zones simultaneously.

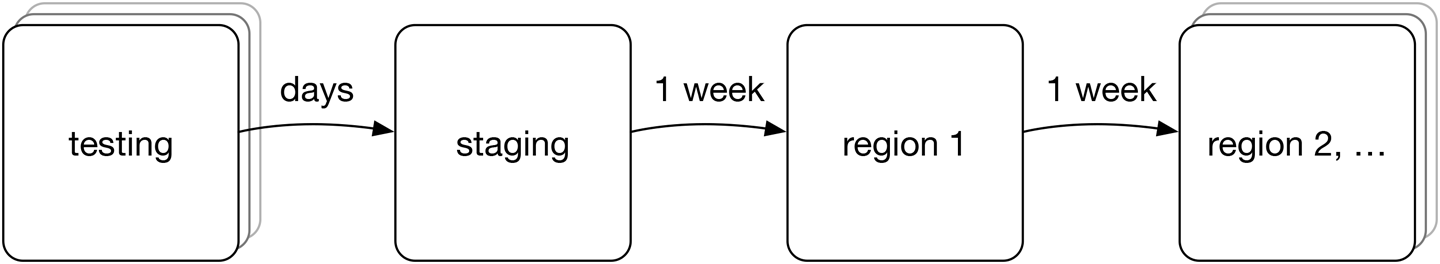

Operational isolation is enforced at many layers of the Dropbox stack, but one particular example is isolation in the code release process for the storage system. Some parts of the Dropbox code base are pushed to production every day, in use cases for which correctness or durability aren’t at stake, but for the underlying storage system, we adopt a multiweek release process that ensures that at least one fully replicated copy of data is stored on a thoroughly vetted version of the code at all times, as depicted in Figure 17-3.

Figure 17-3. Storage system code release process

All new versions of storage code go through an extensive unit testing and integration testing process to catch any trivial errors, followed by overnight end-to-end durability testing on a randomized synthetic workload. We also run a set of long-running version-skew tests during which different code versions are run simultaneously on different nodes in the system; code pushes aren’t atomic, so we need to ensure that old and new versions of code are compatible with each other. After these tests have all passed, the release is automatically marked as “green” and is ready to be pushed to the staging cluster.

The staging cluster is an actual production deployment of the storage system that stores a mirror of a subset of production data. This is a relatively large cluster storing many tens of petabytes across multiple geographical regions. The code runs on this cluster for a full week and is subjected to the same workloads, monitoring, and verification as the rest of the storage system. After a week in staging the individual “DRIs” (directly responsible individuals—the engineers who “own” each subsystem) sign off that each software component is operating correctly and without any significant performance degradation. At this point, the software is ready to be pushed to the first real production region.

Code is pushed to the first production region and runs for another week before it can be safely pushed to the remaining regions. Recall that the multiregion architecture of the storage system ensures that all data in one region is replicated in at least one other region, avoiding any risk of data loss from a single code push. Internal verification systems are tuned to detect any anomalies within this one-week timeframe, but in practice, any potential durability issues are caught well before making it this far. The multiregion deployment model is tremendously valuable, however, at catching any performance or availability issues that arise only at large scale.

The release process is pipelined so that there are always multiple versions of code rolling out to production. The process itself is also automated to a large extent to avoid any operator errors. For practical reasons, we also support a “break-glass” procedure to allow sign-off on any emergency changes that need to be fast-tracked to production, along with extensive logging.
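The gating logic of a staged release like this can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the actual Dropbox tooling: the stage names, the `Release` class, and `can_promote` are all hypothetical, and only the one-week soak periods and the DRI sign-off after staging come from the description above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical stage names, in promotion order.
STAGES = ["green", "staging", "first_region", "remaining_regions"]
# Minimum time a release must run in a stage before moving on.
SOAK = {"staging": timedelta(days=7), "first_region": timedelta(days=7)}


@dataclass
class Release:
    version: str
    stage: str
    entered_at: datetime
    signoffs: set = field(default_factory=set)


def can_promote(release, now, required_signoffs):
    """Return (allowed, reason) for moving a release to the next stage."""
    if release.stage == STAGES[-1]:
        return False, "already fully deployed"
    soak = SOAK.get(release.stage, timedelta(0))
    if now - release.entered_at < soak:
        return False, "soak period not finished"
    # Leaving staging additionally requires every DRI to have signed off.
    if release.stage == "staging" and not required_signoffs <= release.signoffs:
        return False, "missing DRI sign-off"
    return True, "ok"


release = Release("v42", "staging", datetime(2024, 1, 1), {"metadata"})
print(can_promote(release, datetime(2024, 1, 5), {"metadata", "disk"}))
print(can_promote(release, datetime(2024, 1, 9), {"metadata", "disk"}))
```

Encoding the gates in code rather than in a checklist is what lets the pipeline run mostly unattended; a break-glass path would bypass `can_promote` explicitly and log that it did so.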

The thoroughness in this release process allows us to provide an extremely high level of durability, but it’s clearly overkill for the vast majority of use cases. Operational isolation comes at a cost, which is usually measured in inconvenience or engineer frustration. At a small company, it can be incredibly convenient to run a batch job across all machines simultaneously, or for engineers to be able to SSH into any server in the fleet. As a company grows, though, investments in operational isolation not only guard against major disasters, but also allow a team to move faster by providing guardrails within which to iterate and develop quickly.

Protection

Your biggest durability threat is yourself.

In an ideal world, we would always catch bad things before they happen. This is an unrealistic goal, of course, but still worth investing significant effort in attempting to achieve. In addition to safeguards to prevent disasters, you need a well-thought-out set of recovery mechanisms to mitigate any issues that slip through the cracks.

Testing

The first protection most engineers think of is simply testing. Everyone knows that you need good tests to produce reliable software. Companies often invest significant effort in unit testing to catch basic logic errors but underinvest in comprehensive end-to-end integration testing. Mocking out parts of the software stack allows fine-grained testing but can hide complex race conditions or cross-system interactions that occur in a real production deployment. In any distributed system, there is no substitute for running the full software stack in a test environment and validating correctness on long-running workloads. In more advanced use cases, you can use fault-injection to trigger failures of system components during this integration testing. In our experience, codepaths that handle failures and corner cases are far more error-prone than more common flows.

Safeguards

As mentioned previously, the biggest durability risk in a system is often yourself. So how do you guard against your own fallibility? Let’s begin with an example of one of the worst production issues in the history of Dropbox.

Many years ago, an operator accidentally left out a set of quotation marks when running a distributed shell operation across a set of databases. The operator intended to run

dsh --group "hwclass=database lifecycle=reinstall" reimage.sh

but instead typed the following:

dsh --group hwclass=database lifecycle=reinstall reimage.sh

This is an easy mistake to make, but the end result was disastrous. Without the quotation marks, the command lifecycle=reinstall reimage.sh attempted to run on every database in the fleet instead of just running reimage.sh on databases that were slated for reinstall, taking down a large fraction of our production databases. The immediate impact of this was a widely publicized two-day outage during which a number of Dropbox services were unavailable, but the long-term impact was a process of significant investment to prevent any such events from ever happening again.
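The difference between the two command lines comes down to how a POSIX-style shell splits words. The following sketch uses Python’s standard `shlex` module to show the argument lists that a shell would hand to the tool; how dsh itself interprets those arguments is taken from the description above rather than shown here.

```python
import shlex

intended = 'dsh --group "hwclass=database lifecycle=reinstall" reimage.sh'
typed = "dsh --group hwclass=database lifecycle=reinstall reimage.sh"

# With quotes, the whole selector is a single argument to --group.
print(shlex.split(intended))

# Without quotes, the selector is split in two, and the second half
# becomes the start of the command to run on every matching host.
print(shlex.split(typed))
```

The quoted form yields four arguments and the unquoted form yields five, so the group selector silently shrinks to `hwclass=database`.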

The immediate point of reflection following an outage such as this is that the operator is not to blame. If it’s possible for a simple mistake to cause a large-scale outage, that’s a process failure, not a personnel failure. Don’t hate the player, hate the game.

There are many obvious safeguards to implement in a situation like this. We added access controls that prevent rebooting a live database host, a very basic protection but one that’s often overlooked. We changed the syntax of the distributed shell command to make it less vulnerable to typos. We added isolation-based restrictions within our tooling that reject any distributed command that is run simultaneously across multiple isolation domains. Most important, however, we invested significantly in automation so that an operator never needs to run a script like this, and instead relied on systems more reliable than a human at a keyboard.
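An isolation-based restriction of this kind can be very small. The sketch below is a hypothetical guard, not the actual Dropbox tooling: `check_single_zone`, `MultiZoneError`, and the host-to-zone mapping are assumptions made for illustration.

```python
class MultiZoneError(Exception):
    """Raised when a batch command would span several isolation zones."""


def check_single_zone(hosts, zone_of):
    """Return the common zone of all hosts, or raise if there are several.

    zone_of maps a host name to the isolation zone it lives in.
    """
    zones = {zone_of[host] for host in hosts}
    if len(zones) > 1:
        raise MultiZoneError(f"refusing to run across zones: {sorted(zones)}")
    return zones.pop() if zones else None


zone_of = {"db1": "zone-a", "db2": "zone-a", "db3": "zone-b"}

print(check_single_zone(["db1", "db2"], zone_of))  # allowed: one zone
try:
    check_single_zone(["db1", "db3"], zone_of)
except MultiZoneError as err:
    print(err)
```

A distributed shell wrapper would call a check like this before dispatching anything, so a mistyped selector fails fast instead of reaching every zone at once.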

The need for protections must be maintained as an engineering principle so that safeguards are developed alongside initial deployment of the systems they protect.

Recovery

Failures will happen. The mark of a strong operational team is the ability to recover from disasters quickly and without long-term impact on customers.

An important design consideration is to attempt to always have an undo button—that is, to design systems such that there is sufficient state to reverse an unintended operation or to recover from an unexpected corruption. Logs, backups, and historical versions can greatly aid in recoverability following an incident, particularly when accompanied by a well-rehearsed procedure for actually performing the recovery.

One technique for mitigating the impact of dangerous transformations is to buffer the underlying mutation for sufficient time to run verification mechanisms on the new state. The file deletion life cycle at Dropbox is designed to prevent live-deleting any object in the system, and instead wraps these transformations with comprehensive safeguards, as demonstrated in Figure 17-4.

Figure 17-4. Object deletion flow

Application-level file deletion at Dropbox is a relatively complex process involving user-facing restoration features, version tracking, data retention policies, and reference counting. This process has been extensively tested and hardened over many years, but the inherent complexity poses a risk from a recovery perspective. As a final line of defense, we protect against deletions on the storage disks themselves, to guard against any potential issues in these higher layers.

When a delete is issued to a physical storage node, the object isn’t immediately unlinked. Instead, it is moved to a temporary “trash” location on the disk for safe-keeping while we perform further verification. A system called the Trash Inspector iterates over all volumes in trash and ensures that all objects have either been legitimately deleted or safely moved to other storage nodes (in the course of internal filesystem operations). Only after trash inspection has passed and a given safety period has elapsed is the volume eligible for unlinking from disk via an asynchronous process that can be disabled during an emergency.
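The deferred-deletion flow can be sketched with an in-memory model. Everything named here (`StorageNode`, `mark_inspected`, `purge`, the `purge_enabled` switch) is a hypothetical stand-in for the on-disk trash, the Trash Inspector, and the asynchronous unlinking process described above, and it tracks individual objects rather than volumes for brevity.

```python
class StorageNode:
    """Toy model of a storage node with a trash area (illustrative only)."""

    def __init__(self, safety_period_s):
        self.live = {}              # key -> data
        self.trash = {}             # key -> (data, time it was trashed)
        self.inspected = set()      # keys the inspector has approved
        self.safety_period_s = safety_period_s
        self.purge_enabled = True   # emergency kill switch

    def delete(self, key, now):
        # A delete only moves the object to trash; nothing is destroyed yet.
        self.trash[key] = (self.live.pop(key), now)

    def mark_inspected(self, key):
        # Called once the inspector has confirmed the delete was legitimate.
        if key in self.trash:
            self.inspected.add(key)

    def restore(self, key):
        # The undo button: bring a trashed object back.
        data, _ = self.trash.pop(key)
        self.inspected.discard(key)
        self.live[key] = data

    def purge(self, now):
        # Permanently remove objects that passed inspection AND have aged out.
        if not self.purge_enabled:
            return []
        purged = []
        for key, (_, trashed_at) in list(self.trash.items()):
            aged_out = now - trashed_at >= self.safety_period_s
            if key in self.inspected and aged_out:
                del self.trash[key]
                self.inspected.discard(key)
                purged.append(key)
        return purged
```

The point of the design is that the only irreversible step, `purge`, requires two independent conditions and can be switched off entirely during an incident.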

Recovery mechanisms are often expensive to implement and maintain, especially in storage use cases in which they impose additional storage overhead and higher hardware utilization. It’s thus important to analyze where irrevocable transformations occur in the stack and to make informed decisions about where recovery mechanisms need to be inserted to provide comprehensive risk mitigation.

Verification

You will mess up; prioritize failure detection.

In a large, complex system the best protection is often the ability to detect anomalies and recover from them as soon as they occur. This is clearly true when you are monitoring availability of a production system or when tracking performance characteristics, but it is also particularly relevant when you’re engineering for durability.

The Power of Zero

Is your storage system correct? Is it really, really correct? Can you say with 100% confidence that the data in your system is consistent and none of it is missing? In many storage systems this is certainly not the case. Maybe there was a bug five years ago that left some dangling foreign key relations in your database. Maybe Bob ran a bad database split a while ago that resulted in some rows on the wrong shards. Maybe there’s a low background level of mysterious 404s from the storage system that you just keep an eye on and make sure the rate doesn’t go up. Issues like this exist in systems everywhere, but they come at a huge operational cost. They obscure any new errors that occur, they complicate monitoring and development, and they can compound over time to further compromise the integrity of your data.

Knowing with high certainty that a system has zero errors is a tremendously empowering concept. If a system has an error today but didn’t yesterday, something bad happened between then and now. It allows teams to set tight alert thresholds and respond rapidly to issues. It allows developers to build new features with the confidence that any bugs will be caught quickly. It minimizes the mental overhead of having to reason about corner cases regarding data consistency. Most important, however, it allows a team to sleep well at night knowing that they are worthy of their users’ trust.

The first step toward having zero errors is knowing whether you have any errors to begin with. This insight often requires significant technical investment but pays heavy dividends.

Verification Coverage

At Dropbox, we built a highly reliable geographically distributed storage system that stores multiple exabytes of data. It’s a completely custom software stack from the ground up, all the way from the disk scheduler to the frontend nodes servicing external traffic. Although developing this system in-house was a huge technical undertaking, the largest part of the project wasn’t actually writing the storage system itself. Significantly more time and effort were devoted to developing verification systems than the underlying system they are verifying! This might seem surprising outside the context of a system that is required to be absolutely correct at all times.

We operate a deep stack of verification systems at Dropbox. In aggregate, these systems generate almost as much system load as the production traffic generated by our users! Some of these verifiers cover individual critical system components and some provide end-to-end coverage, with the full stack designed to provide immediate detection of any serious data correctness issues.

Disk Scrubber

The Disk Scrubber runs on every storage node and continually reads each block on disk, validating the contents against our application-level checksums. Internal disk checksums and S.M.A.R.T. status reporting provide the illusion of a reliable storage medium, but corruptions routinely slip through these checks, especially in storage clusters numbering hundreds of thousands or millions of disks. Blocks on disk become silently corrupted or go missing, disks fail without notice, and fsyncs don’t always fsync.

The Disk Scrubber finds errors on disks every single day at Dropbox. These errors trigger an automated mechanism that re-replicates the data and remediates the disk failure. Rapid detection and recovery from disk corruption is crucial to achieving durability guarantees. Recall that the durability formula for R(loss) given earlier in this chapter depends heavily on MTTR from failure. If MTTR is estimated at 24 hours but it takes a month to notice a silent disk corruption, you will have missed your durability targets by several orders of magnitude.
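The core loop of a scrubber is simple to sketch. The version below is a minimal illustration, assuming SHA-256 as the application-level checksum and modelling the disk as a dictionary; the real checksum algorithm and on-disk layout are not specified in the text, and `scrub` is a hypothetical name.

```python
import hashlib


def checksum(data: bytes) -> str:
    # Assumed application-level checksum; the real algorithm is not stated.
    return hashlib.sha256(data).hexdigest()


def scrub(blocks, expected):
    """Yield (block_id, problem) for every block that fails verification.

    blocks:   block_id -> bytes currently readable from disk
    expected: block_id -> checksum recorded when the block was written
    """
    for block_id, want in expected.items():
        data = blocks.get(block_id)
        if data is None:
            yield block_id, "missing"
        elif checksum(data) != want:
            yield block_id, "corrupt"


expected = {"a": checksum(b"hello"), "b": checksum(b"world"), "c": checksum(b"!")}
on_disk = {"a": b"hello", "b": b"w0rld"}  # "b" is corrupted, "c" has vanished

for block_id, problem in scrub(on_disk, expected):
    print(block_id, problem)
```

In a real system each reported problem would feed the automated re-replication path rather than a print statement, since detection only helps durability if it shortens the time to repair.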

Index Scanner

The Index Scanner continually iterates over the top-level storage indices, looks up the set of storage nodes that are meant to be storing a given block, and then checks each of these nodes to make sure the block is there. The scanner provides an end-to-end check within the storage system to ensure that the storage metadata is consistent with the blocks stored by the disks themselves. The scanner itself is a reasonably large system totaling many hundreds of processes, generating over a million checks per second.
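The check the scanner performs can be sketched as a comparison between two views of the system. The data structures below are assumptions for illustration: the index is modelled as a mapping from block to the nodes that should hold it, and each node as the set of blocks it actually reports.

```python
def scan_index(index, nodes):
    """Yield (block_id, node) for every replica the index expects but the node lacks.

    index: block_id -> list of node names that should store the block
    nodes: node name -> set of block ids the node actually has
    """
    for block_id, holders in index.items():
        for node in holders:
            if block_id not in nodes.get(node, set()):
                yield block_id, node


index = {"blk1": ["n1", "n2"], "blk2": ["n2", "n3"]}
nodes = {"n1": {"blk1"}, "n2": {"blk1"}, "n3": {"blk2"}}

print(list(scan_index(index, nodes)))  # blk2 is missing from n2
```

At steady state a scan like this should yield nothing at all, which is what makes any non-empty result immediately actionable.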

Storage Watcher

One challenge we faced when building verifiers was that if the same engineer who wrote a component also implements the corresponding verifier, they can end up baking any of their broken assumptions into the verifier itself. We wanted to ensure that we had true end-to-end black-box coverage, even if an engineer misunderstood an API contract or an invariant.

The Storage Watcher was written by an SRE who wasn’t involved in building the storage system itself. It samples 1% of all blocks written to the system and attempts to fetch them back from storage after a minute, an hour, a day, a week, and a month. This provides full-stack verification coverage if all else fails and alerts us to any potential issues that might arise late in the lifetime of a block.
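A black-box watcher along these lines can be sketched as a sampler plus a schedule of delayed reads. The hash-based sampling, the 30-day month, and the `Watcher` class are assumptions made for illustration; only the 1% sample rate and the five check intervals come from the text.

```python
import hashlib
import heapq

# A minute, an hour, a day, a week, and a month (assumed to be 30 days).
DELAYS_S = [60, 3600, 86400, 7 * 86400, 30 * 86400]


def is_sampled(block_id: str, percent: int = 1) -> bool:
    # Deterministic sampling: hash the id and keep roughly `percent`% of blocks.
    digest = hashlib.sha256(block_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100 < percent


class Watcher:
    def __init__(self):
        self.queue = []  # min-heap of (due_time, block_id, expected_checksum)

    def on_write(self, block_id, data, now):
        if not is_sampled(block_id):
            return
        want = hashlib.sha256(data).hexdigest()
        for delay in DELAYS_S:
            heapq.heappush(self.queue, (now + delay, block_id, want))

    def run_due(self, fetch, now):
        """Re-fetch every block whose check is due; return ids that failed."""
        failures = []
        while self.queue and self.queue[0][0] <= now:
            _, block_id, want = heapq.heappop(self.queue)
            data = fetch(block_id)  # fetch goes through the public read path
            if data is None or hashlib.sha256(data).hexdigest() != want:
                failures.append(block_id)
        return failures
```

Because `fetch` is just the public read path, the watcher needs no knowledge of the storage internals, which keeps its assumptions independent of those baked into the system it checks.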

Watching the Watchers

There’s nothing more comforting than an error graph that is literally a zero line, but how do you know that the verifier is even running? It might sound silly, but a verification system that never reports any errors looks a lot like a verifier system that isn’t working at all.

At steady state, the Index Scanner mentioned earlier doesn’t actually report any errors, because there aren’t any errors to report. At one point early in the development of the system the scanner actually stopped working, and it took us a few days to notice! Clearly this is a problem. Untested protections don’t protect anything.
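One inexpensive guard against a silently stalled verifier is to alert on lack of progress rather than on errors. The sketch below assumes each verifier records a timestamp whenever it completes a unit of work; the function name, the verifier names, and the threshold are hypothetical.

```python
def stale_verifiers(last_progress, now, max_silence_s):
    """Return verifiers that have reported no progress within max_silence_s.

    last_progress: verifier name -> timestamp of its last completed check
    """
    return sorted(
        name for name, ts in last_progress.items() if now - ts > max_silence_s
    )


last_progress = {"disk_scrubber": 990, "index_scanner": 100, "storage_watcher": 950}

# With a 5-minute threshold at t=1000, only the index scanner has gone quiet.
print(stale_verifiers(last_progress, now=1000, max_silence_s=300))
```

A heartbeat check distinguishes "zero errors found" from "zero checks performed", which look identical on an error graph.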

You should use a verification stack in conjunction with disaster recovery training to ensure that durability issues are quickly detected, appropriate alerts fire, and failover mechanisms provide continual service to customers. In our production clusters, these tests involve nondestructive changes like shutting down storage nodes, failing databases, and black-holing traffic from entire clusters. In our staging clusters, this involves much more invasive tests like manually corrupting blocks on disk or forcing recovery of metadata from backup state on the storage nodes themselves. These tests not only demonstrate that the verification systems are working, but allow operators to train in the use of recovery mechanisms.

Automation

You don’t have time to babysit.

One of the highest-leverage activities an SRE can engage in is investing in strong automation. It’s important not to automate too fast: after all, you need to understand the problem before attempting to solve it. But days or weeks of automation work can save many months of time down the track. Automation isn’t just an efficiency initiative, however; it has important implications for correctness and durability.

Window of Vulnerability

As discussed previously, MTTR has a significant impact on durability because it defines the window of vulnerability within which subsequent failures might cause a catastrophic incident. There is a limit to how tight you can set pager thresholds, though, and to how many incidents an operator can respond to immediately. Automation is needed to achieve sufficiently low response times.

One illustrative example is to compare a traditional Redundant Array of Independent Disks (RAID) to a distributed storage system that automatically re-replicates data to other nodes if a disk fails. In the case of a RAID array, it might take a few days for an operator to show up on-site and replace a specific disk, whereas in an automated system, this data might be re-replicated in a small number of hours. The same comparison applies to primary-backup database replication: smaller companies will often require an operator to manually trigger a database promotion if the primary fails, whereas in larger infrastructure footprints, automatic tooling is used to ensure that the availability and durability impact from a database failure is kept to a minimum.
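To make the comparison concrete, the following back-of-the-envelope sketch estimates the chance that one remaining device fails during the repair window. It assumes independent failures at a constant rate (an exponential model), and the 2% annual failure rate, the 72-hour window, and the 3-hour window are illustrative numbers rather than figures from any real fleet. Real failures are often correlated, so treat the result as a rough lower bound.

```python
import math

HOURS_PER_YEAR = 24 * 365


def prob_failure_within(window_hours: float, annual_failure_rate: float) -> float:
    """Probability that one device fails within the window.

    Assumes a constant failure rate, so lifetimes are exponentially
    distributed and the rate is derived from the annual failure rate.
    """
    rate_per_hour = -math.log(1.0 - annual_failure_rate) / HOURS_PER_YEAR
    return 1.0 - math.exp(-rate_per_hour * window_hours)


ASSUMED_AFR = 0.02  # hypothetical 2% annual failure rate

manual_window = prob_failure_within(72, ASSUMED_AFR)    # operator replaces disk in ~3 days
automated_window = prob_failure_within(3, ASSUMED_AFR)  # automatic re-replication in ~3 hours

print(f"manual: {manual_window:.2e}, automated: {automated_window:.2e}")
print(f"ratio: {manual_window / automated_window:.1f}x")
```

Under these assumptions the exposure scales almost linearly with the length of the window, so shrinking recovery from three days to three hours reduces the chance of a second failure during recovery by roughly a factor of 24.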

Operator Fatigue

Operator fatigue is a real problem, and not just because your team will eventually all quit if they keep getting paged all day. Excessive alerting can cause alert blindness, in which operators end up overlooking legitimate issues. We’ve observed situations in which an operator has been tempted just to pipe the Unix yes command into a command-line tool that asked hundreds of times whether various maintenance operations were authorized, which defeated the purpose of the checks to begin with.

Rules and training can go only so far. Good automation is ultimately a necessary ingredient to allow operators to focus on high-leverage work and on critical interrupts rather than become bogged down in error-prone busy-work.

As a system scales in size, the operational processes that accompany it also need to scale. It would be impossible for a team running many thousands of nodes to manually intervene any time there was a minor hardware issue or a configuration problem. Management of core systems at Dropbox is almost entirely automated by a system that supports plug-ins both for detecting problems within the fleet and for safely resolving them, with extensive logging for analysis of persistent issues.

Systems that have the ability to automatically remediate alerts need to be implemented extremely carefully, given that they have the power to accidentally sabotage the infrastructure that they’re designed to protect. Automatic remediation systems at Dropbox are subject to the same isolation protections discussed earlier in this chapter, along with stringent rate limits to ensure that no runaway changes are performed before an operator can intervene and recover. It is also important to implement significant monitoring for any trends in production issues—it is very easy for a system such as this to hide issues by automatically fixing them, obscuring any emergent negative trends in hardware or software reliability.
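The rate-limiting idea can be sketched as a sliding-window cap on automatic actions, with anything over the cap handed to a human. This is a minimal illustration under assumed names (`RateLimitedRemediator`, `try_remediate`) and is not a description of the Dropbox tooling. The `escalations` counter stands in for whatever paging or ticketing a real system would use.

```python
import time
from collections import deque
from typing import Callable, Deque, Optional


class RateLimitedRemediator:
    """Runs remediation actions, but never more than max_actions per window."""

    def __init__(self, max_actions: int, window_seconds: float):
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self._recent: Deque[float] = deque()  # timestamps of recent actions
        self.escalations = 0  # how many times an operator was needed

    def try_remediate(self, action: Callable[[], None],
                      now: Optional[float] = None) -> bool:
        """Run the action if under the limit; otherwise escalate and skip it."""
        now = time.monotonic() if now is None else now
        # Forget actions that have aged out of the window.
        while self._recent and now - self._recent[0] >= self.window_seconds:
            self._recent.popleft()
        if len(self._recent) >= self.max_actions:
            self.escalations += 1  # leave this one for a human to review
            return False
        self._recent.append(now)
        action()
        return True
```

Tracking how often `try_remediate` succeeds, not just how often it escalates, also gives the trend data needed to notice when automatic fixes are masking a growing problem.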

Reliability

The primary motivation for automation is simply reliability; you are not as reliable as a shell script. You can test, audit, and run automation systems continuously in ways that you can’t with a human operator.

One example of a critical automation system at Dropbox is the disk remediation workflow, shown in Figure 17-5, which is designed to safely manage disk failures without operator intervention.

Figure 17-5. Automatic disk-remediation process

When a minor disk issue such as a corrupt block or a bad sector is detected, the data is recovered from other copies in the system and re-replicated elsewhere, and then the storage node is just allowed to continue running. Issues like this are sufficiently routine that they don’t justify further action unless disk failure rates begin shifting over time. After a certain number of errors, however, the system determines that there are serious problems with the disk or filesystem, recovers and re-replicates data that was on the disk, and then reprovisions the disk with a new clean filesystem. If this cycle repeats multiple times, the disk is marked as bad, the data is recovered and re-replicated, and a ticket is filed for data center operators to physically replace the disk. Because the data is immediately re-replicated, the replacement of the physical disk can happen any time down the track, and usually happens in infrequent batches. After a disk is removed from the machine, it is kept for a safe-keeping period before eventually being physically shredded on-site.

This entire process is completely automatic and involves no human intervention up until the disk is physically pulled from the machine. The process also maintains the invariant that no operator is ever allowed to touch a disk that holds critical production data. Disks stay in the system until all the data that they held has been reconstructed from other copies and re-replicated elsewhere. Investing in development of this automation system allows seamless operation of an enormous storage system with only a handful of engineers.
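The escalation logic of this workflow can be sketched as a small state machine. The thresholds below are hypothetical, since the text only says "a certain number of errors" and "multiple times", and the action names are invented for the example. In every branch, the affected data is assumed to be recovered and re-replicated before the action is taken.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
ERRORS_BEFORE_REPROVISION = 3
MAX_REPROVISIONS = 2


@dataclass
class Disk:
    disk_id: str
    error_count: int = 0
    reprovision_count: int = 0
    marked_bad: bool = False


def handle_disk_error(disk: Disk) -> str:
    """Process one detected disk error and return the action taken."""
    if disk.marked_bad:
        # Data has already been re-replicated; wait for physical replacement.
        return "awaiting_replacement"

    disk.error_count += 1
    if disk.error_count < ERRORS_BEFORE_REPROVISION:
        # Routine issue: repair the affected data and keep the disk running.
        return "repair_block"

    # Too many errors: move all data off the disk before touching it.
    disk.error_count = 0
    if disk.reprovision_count >= MAX_REPROVISIONS:
        # The reprovision cycle has repeated; give up on this disk.
        disk.marked_bad = True
        return "file_replacement_ticket"

    disk.reprovision_count += 1
    return "reprovision"
```

Because a disk is only marked bad after its data has been moved elsewhere, the replacement ticket carries no urgency, which is what allows physical swaps to be batched.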

Conclusion

This chapter proposed a lot of techniques, and not every company needs to go as far as geo-distributed replication or complete alert autoremediation. The important point to remember is that the issues that will bite you aren’t the disk failure or database outage you expect, but the black swan event that no one saw coming. Replication is only one part of the story and needs to be coupled with isolation, protection, recovery, and automation mechanisms. Designing systems with failure in mind means that you’ll be ahead of the game when the next unknown-unknown threatens to become the next unforeseen catastrophe.

Contributor Bio

James Cowling is a principal engineer at Dropbox and served as technical lead on the project to build and deploy the multi-exabyte geo-distributed storage system that stores Dropbox file data. James has also served as team lead for the Filesystem and Metadata storage teams. Before joining the industry, James received his PhD at MIT specializing in large-scale distributed transaction processing and consensus protocols.

1 We were running MySQL 5.1 at the time; this is much less of a problem with versions 5.5 and above.