If your software doesn’t work, you won’t sell it. Worse, you’ll be embarrassed by it. This is why I apply the High School Embarrassment Test (HSET™) to any product I want to ship. The HSET works because high school did deep psychological damage to most of us and left behind hormone-based scars that industries like Hollywood have mined to great effect. You can leverage these scars as well. All you need to do is ask yourself: am I sure I won’t be embarrassed when an old high school friend sees my product? That’s all there is to the HSET.

The HSET helps ensure that your team is happy. Tom DeMarco and Timothy Lister point out in their book Peopleware: Productive Projects and Teams (Dorset House) that one of the best ways to destroy your team is to ask them to ship something they aren’t proud of. Remember, your engineering team members have old high school buddies too. You need to ensure that your team isn’t embarrassed.

So, how do you ensure that the software you ship does not embarrass you? There are eight major steps you can take that will have a substantial impact on the quality of your shipping product:

Insist on test-driven development.

Build a testing team around a great test lead.

Review your test plan and test cases personally.

Automate testing.

Dogfood religiously.

Have a big bug bash.

Triage your bugs diligently.

Establish trusted testers as a last line of defense.

If you do these eight things, you’ll be well on your way to shipping a great product. Let’s dig into how to do them.

There’s an expression routinely vouchsafed in advertisements posted on Google’s restroom walls that reads “Debugging sucks. Testing rocks.” This mantra is powerful. Debugging requires you to deconstruct and disassemble your software until you get to the point where you find the problem. That’s effectively moving backward. Moving backward is the opposite of shipping. In addition to helping you feel confident that you’re doing the right thing, test-driven development helps your team survive in complicated systems environments, because as soon as another engineer or team breaks an interface you depend on, the tests will fail.

Test-driven development is covered extensively in other references (see Appendix C), but here’s an overview of the process:

Eddie Engineer breaks the work down into pieces that perform simple operations. These are called units. For example, countToTen() is a software unit.

Before Eddie writes the countToTen method, he writes a test, known as a unit test. This basically says, “If countToTen() is equal to 10, then pass; else, fail.”

Now that Eddie has the unit test written, he can write the countToTen method. If Eddie’s index is off on the loop and countToTen() actually outputs 9, the test will fail.

When the software builds, all the unit tests are run automatically.
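Eddie’s steps can be sketched in a few lines. This is only an illustration, written with Python’s built-in unittest module rather than JUnit; the name count_to_ten is the Python spelling of countToTen, and the loop body is an assumption about what such a unit might look like.

```python
import unittest


def count_to_ten():
    """Count upward from 1 and return the last number reached."""
    last = 0
    # Writing range(1, 10) here would stop at 9: the off-by-one bug
    # that the unit test below is meant to catch.
    for i in range(1, 11):
        last = i
    return last


class CountToTenTest(unittest.TestCase):
    def test_reaches_ten(self):
        # Pass if count_to_ten() equals 10; otherwise fail.
        self.assertEqual(count_to_ten(), 10)
```

If this were saved in a file, running `python -m unittest` from the same directory as part of each build would discover and run the test automatically.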

Pretty straightforward, right? It is. It takes some discipline, though. What’s extra-great about test-driven development is that regressions are easier to spot because each build runs tests automatically. Look into a unit testing framework like JUnit (for Java-based unit testing), and have your build run its tests automatically so that verification happens on every build.

No matter how good your engineering team is and regardless of how many unit tests they have written, you will have bugs. Your best plan of action to find these bugs is to hire or appoint someone to be the test guru. This test lead will be the primary owner of release quality and a critical partner to product management, engineering, and marketing leads. Test leads are responsible for making sure that the test cases are well written, cover the right areas, and are well executed. A great test lead will continuously train less experienced testers and help design great test automation architecture. If you have a really strong test lead, that individual will be sharp enough to push the engineering team to build more and better unit tests.

Another key reason why you want to start with a great test lead is that the test team culture is frequently unlike the engineering team culture. Your test team is trying to discover problems all day long. In a typical but poorly run engineering team, the test team generates complaints daily, and that can be hard to take. The test team’s processes, disciplines, and standards are a bit different from those of typical engineering teams, so having a solid test lead who can help manage the test team will help you immensely, even if you’ve done a great job of embedding testing with engineering.

Your test lead will also help you solve the unique problem of hiring testers. Brilliant test engineers are hard to come by because most folks who can write great software want to write their own software, not test the software your engineering team wrote. There are two ways to extract yourself from this dilemma: maintain a lower hiring bar and hire managers, or maintain a high hiring bar and hire contractors. As I’ll describe next, there are advantages to both approaches, but I favor hiring contractors.

I’m not a fan of hiring managers to hire test engineers who are not rock stars. I believe the practice establishes the wrong incentives. It builds in hierarchy, and your talented test manager has to spend a substantial portion of his or her time managing nonstellar performers. However, the opportunity to manage people is a strong incentive for some individuals.

What’s even worse about maintaining a lower hiring bar is that eventually you’re going to have to promote some of these folks. After five years, they’re going to feel entitled to a promotion and you’ll be in a situation where you’ve got B-level people in management. Unfortunately, the expression “As hire As, Bs hire Cs” is definitely true in the software industry. When you hit this stage of corporate maturity, your productivity and quality will sharply decline as a result of your C-grade players.

There are some disadvantages to working with contracted testers. These disadvantages include:

You need a development lead to own the relationship with the testers. For this reason, embedding contractors within a team adds overhead to your engineering team.

Training costs are sunk and irretrievable.

Your ramped-up test talents’ contracts can expire, and they might take their knowledge elsewhere.

In my opinion, the advantages of contracted testers outweigh the disadvantages. Some of the advantages include:

Contractors cost the organization less in terms of people management than full-time hires do.

It is easier to engage with an agency than it is to hire, so you can ramp up faster. And you can maintain a consistent quality bar.

You don’t promote the C-grade players.

Regardless of how you build your test team, you still need someone to write a test plan and you need to ensure the quality of the test cases, which means you need to review and approve the test plan.

A test plan is composed of many test cases and is derived from your product requirements document. It is therefore reasonable to expect that if your product requirements document stinks, your testing team is set up for failure. But if you did your job well, then your test lead can do a great job too.

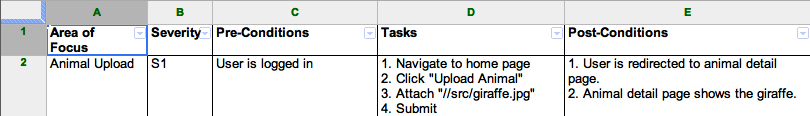

The test plan is generally created in a spreadsheet so you can organize the test cases well. Check to see that your test cases have the following descriptive elements:

- Area of focus

This column describes what part of the user experience will be tested, so you can group similar tests together.

- Severity

The severity defines what level of bug you should file if this test fails, generally on a scale of 1–4.

- Preconditions

Preconditions establish what the tester must do before starting the test. For example, if you were writing a test for a shopping cart credit card verification process, the preconditions might require that the user be logged in, have added an item to the cart, and have entered a zip code. Now the test can start.

- Tasks to perform

The tasks are the meat of the test, described as a series of steps. If any step fails, the test will fail.

- Post-conditions

The post-conditions describe the final state of the application. To continue the example, the post-conditions might be that the user sees a confirmation page with a confirmation number, and the credit card is charged the correct amount in less than 10 seconds.

Figure 5-1 shows an example test case.

Because you’ve included the “severity” of each test, you can create quicker but less complete test passes by running only the high-severity tests. Test passes like these are good for verifying small changes. You can test only the small change and the high-severity tests in much less time than running all the tests. It’s important to still run the high-severity tests, even if you think the small change is well isolated from other features. You want to make sure that some fundamental feature wasn’t accidentally turned off. In a complicated software system with weak unit testing, it is easy to break major features, and the HSET preaches a “better safe than very sorry” approach.
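To make the structure concrete, here is a minimal sketch of a test plan held as data, with a quick pass selected by severity. The class name PlannedTest, the function quick_pass, and the sample rows are hypothetical, and the sketch assumes that 1 is the most severe level on the 1–4 scale, which your own plan may define differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlannedTest:
    """One row of the test plan spreadsheet."""
    area: str                  # area of focus, used to group similar tests
    severity: int              # 1-4; assumed here that 1 is the most severe
    preconditions: List[str]   # what must be true before the test starts
    tasks: List[str]           # the steps; if any step fails, the test fails
    postconditions: List[str]  # the expected final state of the application


def quick_pass(plan, max_severity=1):
    """Select only the tests at or above the given severity level."""
    return [test for test in plan if test.severity <= max_severity]


plan = [
    PlannedTest(
        area="Checkout",
        severity=1,
        preconditions=["User is logged in", "Item is in the cart",
                       "Zip code entered"],
        tasks=["Enter credit card details", "Submit the order"],
        postconditions=["Confirmation page shows a confirmation number",
                        "Card is charged the correct amount in under 10 seconds"],
    ),
    PlannedTest(
        area="Help pages",
        severity=4,
        preconditions=["None"],
        tasks=["Open the help page"],
        postconditions=["Help text renders without spelling mistakes"],
    ),
]

for test in quick_pass(plan):
    print(test.area)
```

Raising max_severity widens the pass, so the same plan can serve both a fast check after a small change and a full pass before a release.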

The output of a full test pass is bugs and sometimes a sense of surprise. This is a great moment for you, as the team lead, to reinforce a “bad news is good news” mantra and greet the number of bugs found by the test team with loud applause. Think about it: you need the test team motivated to find failures. If your team ends up demoralized each time there’s a test pass, or if the relationship between test and development becomes acrimonious, you’re going to end up with fewer bugs found and, wait for it, embarrassment!

Reviewing test cases can be incredibly boring. You need to do it nevertheless, if only to empathize with your test team. Here’s a trick: instead of slogging through all of your test cases, which is ideal and will get you a round of applause from anyone who notices, focus on the following three things:

- User experience

Make sure that there are cases that cover vital parts of the user experience, especially the “getting started” workflows and error cases.

- Security and privacy

Tests should actively try to break your website, for example by submitting malformed input or by trying to reach another user’s data.

- Dependencies

If you rely on a database, third-party service, or software you didn’t build in-house, make sure those dependencies are tested rigorously. They’re likely to break or change without notice.

If your test plan covers these major areas, you’re starting from a good place.

Remember how hard it is to hire a great test lead? One of the best work-arounds for this challenge is to find a test lead who’s willing to write test automation. If your test lead is able to craft testing systems that work independently from your production code, you’ve created a great project for a test engineer. What’s more, that software can run constantly and do the work of dozens of people.

You may be thinking, “Wait—that’s a lot of extra software to write!” Luckily, most test automation can be written in scripting languages, doesn’t need to scale particularly well, and can use established, preexisting frameworks. Test development can therefore be more efficient.

As a team lead you probably don’t need to own test automation, but you do need to make sure that it’s being built, because while you’ll never be able to afford enough testers, you will be able to afford enough computers on which to run the automation.

Microsoft pioneered the notion of “eating your own dogfood,” which means you should use the software you intend to ship within your company. Put another way, don’t feed your team peoplefood but give your customers dogfood. Forcing you and your team to suffer through customer pain is a great way to instill a sense of urgency, understand customer problems, and find defects. Amazon and Google dogfood religiously.

Dogfooding can be challenging when there’s a good alternative, such as the prior, less buggy version of your software. For example, Google wanted employees to dogfood Google Docs, and the best way to force that issue was to stop installing Microsoft Office on corporate computers by default. In addition to driving dogfood of Google Docs, the practice saved money!

In some cases, making it easier to taste the dogfood also works. For example, Amazon wanted employees to dogfood Amazon Prime, so they made it available to employees at a discount. I’ve seen teams offer awards (from t-shirts to iPads) for the most unique bugs reported. I even saw one engineering director offer a $5,000 bounty for a successful series of steps to reproduce a “heisenbug.” A heisenbug is a bug that spuriously appears and disappears, following the Heisenberg uncertainty principle;[3] they’re a pain in the ass. However you do it, make dogfooding a key part of your team culture even if the other teams you work with don’t do it.

One fun part of the dogfood experience is that you’ll find your CEO always has some kind of awful experience that nobody else has seen. The first time this happens to you, you’ll be chagrined. This was precisely the person you wanted to impress, and you botched it! Forgive yourself now, because soon you’ll understand that every team lead has the same experience. Jeff Bezos always finds bizarre bugs. Things always break on Larry Page’s computer. There are many examples of these failures, and one pretty common reason for them: these execs are awesome dogfooders.

In Larry’s case, he has more alpha software on his computer and more experiments running on his account than you could possibly imagine, because team leads like you are desperate to convince Larry that they are making progress. It’s no surprise that there are bizarre interactions. Your best defense in Larry’s case is to figure out what else could interact with your product and plan for it.

In Jeff’s case, he brings a completely fresh perspective to your product because he’s never seen it before and has no idea what he’s supposed to do, so he breaks it. The best thing you can do in Jeff’s case is to try to think like Jeff. Put on your giant-alien-brain mask, get some coffee, clear your browser cache, reformat your hard drive, and try to forget everything you ever knew about your product. Then use it.

If you’ve decided to dogfood religiously, you’ll want to follow some best practices to get the most mileage from your dogfood experience:

- Plan a “dogfood release”

The dogfood release is when you give your software to your colleagues within the company. It’s a key milestone immediately after feature complete and before code complete. The dogfood release gives you a milestone where you show real progress. Also, soliciting your team’s peers for kudos and feedback helps build the team’s morale and ensure your product is on track.

- Make it easy for others to send you bug reports

Establishing a mailing list for dogfood bugs is a great way to monitor incoming defect volume. If you don’t have a fast way for all of your dogfooders to enter bugs in your bug tracking system, you can easily create an online form in Google Docs that will organize and report on bugs. You can ask your test lead to write bugs based on these incoming email messages.

- Continue to dogfood after you ship

Amazon and Google both maintain experimental frameworks that enable dogfooders to see specific features. These frameworks allow the software to run on production infrastructure but only be seen by internal users. It’s a wise investment to build similar systems for your teams, because making your systems production ready can take significant time, and bugs take a while to emerge in dogfood. Having a framework that enables internal users to run on production systems allows you to collect feedback and complete production work in parallel.

- Make dogfooding a core corporate value

It’s pretty common to find that your colleagues don’t dogfood. Or, if they do dogfood, they’re too busy to file bugs. Shame on them! But whining won’t get them to be better dogfooders. The best you can do is follow the previous suggestions and remind your colleagues to dogfood. If dogfooding isn’t working, work to understand why and fix it. In the meantime, you can rely on trusted testers (more on them later).
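The experimental frameworks mentioned above, which show features on production infrastructure only to internal users, can be approximated with a simple feature gate. The following is a rough sketch under stated assumptions, not a description of how Amazon or Google built theirs: the domain, feature names, and allowlist are all hypothetical.

```python
INTERNAL_DOMAIN = "example.com"  # hypothetical company email domain

PUBLIC_FEATURES = {"search"}          # launched to everyone
DOGFOOD_FEATURES = {"new_checkout"}   # in production, but gated

# Hypothetical allowlist of external trusted testers under NDA.
TRUSTED_TESTERS = {"reviewer@partner.example.org"}


def is_enabled(feature, user_email):
    """Return True if this user should see the feature in production."""
    if feature in PUBLIC_FEATURES:
        return True
    if feature not in DOGFOOD_FEATURES:
        return False  # unknown features stay off
    email = user_email.lower()
    is_internal = email.endswith("@" + INTERNAL_DOMAIN)
    return is_internal or email in TRUSTED_TESTERS
```

With a gate like this, launching a feature amounts to moving its name from DOGFOOD_FEATURES to PUBLIC_FEATURES, so production work and dogfood feedback can proceed in parallel.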

A bug bash is an event where your team, or your whole company, takes a dedicated period of time—typically an hour—to find as many bugs in your dogfood product as possible. A good bug bash will almost certainly find a bunch of bugs that you’ll want to fix. You’ll want to do four things to encourage a good bug bash:

Incentivize people to bug bash. Offer an award. T-shirts are shockingly effective.

Make the bug bash a key milestone in your project plan. Schedule the bug bash so your entire extended team knows when it will happen and can get involved.

Build bug bashes into your development and testing schedule.

Say thank you for every bug. Remember, bad news is good news. Every bad bug is good news.

I frequently ask product management candidates, “How do you triage bugs?” when I perform phone screens. I’m always amazed at how incomplete the answers are! I think bugs are as simple to triage as 1, 2, 3!

Grade bugs based on frequency, severity, and cost to fix.

Meet daily to review your new bugs with your dev lead and test lead.

Continually make it harder to accept new bugs as launch blockers. If you don’t, you’ll never hit zero bug bounce (ZBB), which means no launch-blocking bugs are reintroduced. If you never hit ZBB, you’ll never ship.

The first of these three steps is bug grading. Your goal is to figure out which bugs you should fix, and that’s not as simple as fixing only the really bad ones, because some bugs are ugly and very easy to fix. So you need to look at three dimensions when you grade a bug:

- Frequency

Frequency is your measure of how often the bug occurs. One time out of 10? Does it appear only when servers restart? Or maybe it happens every time a user logs in? The more frequently a bug occurs, the more important it is that you fix it.

- Severity

You want to assess how damaging to the user experience the bug is. If the bug is a big security or privacy hole, it’s a high-severity bug. If there’s a spelling mistake, that’s a low-severity bug, even if it is moderately embarrassing.

- Cost to fix

That spelling mistake is really cheap to fix. A bug where you can’t shard a user session across multiple servers, on the other hand, is going to be very expensive to fix, and you’ll likely have to trade some features for that change.
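One way to see how the three dimensions interact is to fold them into a single number for sorting. The formula below is purely an assumption made for illustration, as are the sample bugs and their estimates; it is a conversation starter for triage, not a replacement for judgment (a security hole, for instance, may deserve attention regardless of its score).

```python
from dataclasses import dataclass


@dataclass
class Bug:
    title: str
    frequency: float  # fraction of sessions that hit the bug, 0.0 to 1.0
    severity: int     # 1-4; assumed here that 1 is the most severe
    cost_days: float  # rough estimate of engineering days to fix


def grade(bug):
    """Higher scores suggest fixing sooner: frequent, severe, cheap bugs win."""
    impact = bug.frequency * (5 - bug.severity)
    # Floor the cost so that trivially cheap fixes do not divide by zero.
    return impact / max(bug.cost_days, 0.25)


bugs = [
    Bug("Cannot shard a user session across servers", 0.1, 2, 20),
    Bug("Spelling mistake on the home page", 1.0, 4, 0.5),
    Bug("Login fails every time", 1.0, 1, 1),
]

for bug in sorted(bugs, key=grade, reverse=True):
    print(bug.title)
```

Note how the cheap spelling fix outranks the expensive sharding problem even though it is less severe, which is the point of grading on cost as well as severity.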

After you and the team understand how you’re going to grade the bugs, you enter step 2, in which you have a daily bug triage meeting to decide which bugs you will fix. The PM, the dev lead, and the test lead should get together and go through the bugs. The gotcha in this process is that it can take forever if the three of you try to figure out what is going on in each bug. And bug triage can be really boring. You want to try to move through your triage meeting as fast as possible. In triage, try to do the following:

- Establish a general bug bar

For me, this bar starts at: “Would I be embarrassed if my high school buddies encountered this? And how many of them would encounter it? And would it do them any lasting damage if they hit the bug?” You, your dev lead, and your test lead may all have a different point of view on these dimensions, but you’ll converge pretty quickly.

- Move through the bugs from most severe to least severe

Your test team will provide an initial rating for bugs so that you get the worst bugs addressed quickly.

- Allocate a specific amount of time for your triage

If you run out of time, continue the next day. This process will help you manage your energy.

- Only talk about frequency, severity, and cost

One of the reasons you have a test lead in the triage meeting is so that he or she can comment on the cost of fixing a bug and also identify innocuous bugs that expose deeper, scarier flaws. Be vigilant and avoid deep dives into finding the root cause of every issue at this stage! If you find a bug that may be more severe than you thought, boost the severity rating and move on.

- Spend less than one minute per bug

If you don’t know what’s going on with a bug, reassign to the reporter for clarification. If you need to investigate the bug further before you can triage, add that to a special list of “investigation bugs.” The one-minute rule helps eliminate excessively detailed discussions. I’ve found that once you’ve conditioned the team to this pace, everyone wants to keep it going, because nobody likes bug triage.

After some time, you’ll find that even though your bug count is going down nicely, new bugs keep popping up. This is the third step of triage: you have to keep moving the bug bar up, making it harder to declare that a bug is a launch blocker. As general guidance, this principle may seem counterintuitive. After all, you don’t want to be embarrassed, right? The reality is that you’re constantly writing new software, and that means you’re introducing new bugs. If you want to hit ZBB, you have to stop adding bugs to the list of launch blockers. Progressively, and carefully, raise the bug bar as you get closer to launch.
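Raising the bug bar can be written down as an explicit rule so that nobody is surprised by it late in the cycle. The thresholds below are invented for illustration, and the sketch again assumes that severity 1 is the most severe; pick cutoffs that fit your own schedule.

```python
def blocking_severities(days_to_launch):
    """Return the severities that still count as launch blockers."""
    if days_to_launch > 30:
        return {1, 2, 3}   # early on, most bugs can block
    if days_to_launch > 7:
        return {1, 2}      # closer in, only serious bugs block
    return {1}             # final week: only the worst bugs block


def is_launch_blocker(severity, days_to_launch):
    """Apply the current bug bar to a newly triaged bug."""
    return severity in blocking_severities(days_to_launch)
```

A severity 3 bug filed two months before launch would block under this rule, while the same bug filed in the final week would be deferred to a later release.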

Trusted testers are users under NDA (nondisclosure agreement) who use the dogfood version of your product before it ships. They are using different computers than your team, have different expectations, and are generally much less technical than you. As a result, their feedback is immensely valuable.

At Amazon, I had a group of trusted Customer Reviews writers who could give us great feedback. I gave them my direct email address—they frequently found production issues faster than my engineering team. They also didn’t hesitate to email Jeff Bezos, and when they did, I got Jeff Mail. When you’re a team lead and you get Jeff Mail, you drop everything and address it!

At Google, we had hundreds of businesses in the trusted tester program for Google Talk. We turned on the same experiments we used internally and asked them to send us bugs. They gave us great feedback and helped us pinpoint quality issues.

To make the trusted tester system work—which in the case of Talk meant we had ~15% active participation—I followed these best practices:

- Have the businesses sign an NDA and provide the correct contact information

The NDA for a trusted tester may need to be different than your hiring or business development NDAs because you want to protect your right to use any improvements that your customers suggest. Ask your lawyer for advice on what your NDA should include.

- Create rough “getting started” documentation, including a list of known issues

A Google Site is a nice way to aggregate these artifacts, because it’s easy to share with arbitrary email accounts and can be updated very quickly and easily.

- Create an email alias that delivers to the whole engineering team and from which you can email

If you configure your email this way, replies will go to the whole team, not just to you. I’m a firm believer in bringing customers as close to the engineering team as possible. It helps make the software real, and that’s motivating for an engineering team. It also may reduce your workload because your engineering team can help answer questions from their users.

- Add these customers to the same dogfood experience that the engineering team uses

In some situations you may have a daily build that your engineering team uses, and you don’t want your trusted testers on that. Daily builds are too unstable because they are not tested and change too quickly.

- Survey your trusted testers

You can use Google Spreadsheets forms, or SurveyMonkey, to get a general impression of product quality. This survey is also a nice opportunity to get a sense of price sensitivity, since the users are actually experiencing the product.

- Update your trusted testers on changes

With each email update you send, you’ll find a little bounce in usage. Ideally, you can time your updates with software updates so you get some external test coverage.

It seems to me that it’s always the little things that get you. If you’re doing a good job dogfooding, you’re not going to be embarrassed by the majority of your product. But some of the most complicated parts of your product form the out-of-the-box experience (meaning, “I just opened the box; what’s inside?” not “Hmm, let’s think outside the box now!”). Specific things to look for are how you create an account and populate that account with data. As a dogfooder, you probably performed those tasks only once, and that was four months ago!

Pinterest.com is a great example of how to create a brilliant out-of-the-box experience. It’s incredibly easy to sign up (you use your Facebook account), and your landing page is great because Pinterest suggests people for you to follow and fills your page with lovely images that you care about.

Here’s a tip to help ensure you experience what new users experience: when you hit feature complete and again when you hit code complete, make sure you delete all your data and accounts and start from scratch.