Advanced optical network architecture for the next generation internet access

Abstract

Optical networks provide fast, efficient, and reliable access to clusters of end users and can at the same time guarantee quality of service. A novel optical access architecture is presented that takes advantage of the latest developments in access networks, utilizes high bandwidth, and scales with the number of customers it serves. Penetration, scalability, geographical, and population studies are conducted to verify that it fulfills its purpose. Traffic prediction and aggregation are studied alongside the proposed architecture to make fuller use of its capabilities. Finally, a new bandwidth allocation method is presented that is tuned for performance under this network architecture.

Keywords

1. Introduction

1.1. Convergence to the digital communication age

1.2. Introducing the PANDA architecture

1.3. Increasing performance with traffic aggregation and prediction

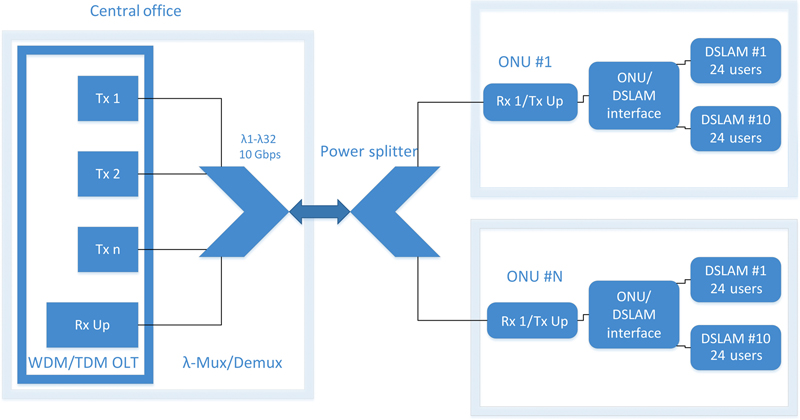

2. PANDA architecture

2.1. VDSL end-user access

2.2. Multiwavelength passive optical network

2.3. The MAC layer

2.4. Line rates and population coverage

Table 11.1

Attainable Rates and Total Users

| D/S per ONU (Gbps) | Wavelengths | Users per DSLAM | U/S per ONU (Gbps) | DSLAM Uplink (Gbps) | DSLAMs per ONU | Users | D/S per User (Mbps) | U/S per User (Mbps) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 10 | 16 | 24 | 10 | 1 | 10 | 3840 | 42 | 2.60 |
| 10 | 16 | 48 | 10 | 1 | 10 | 7680 | 21 | 1.30 |
| 10 | 16 | 24 | 10 | 2 | 5 | 1920 | 83 | 5.21 |
| 10 | 16 | 48 | 10 | 2 | 5 | 3840 | 42 | 2.60 |
| 10 | 32 | 24 | 10 | 1 | 10 | 7680 | 42 | 1.30 |
| 10 | 32 | 48 | 10 | 1 | 10 | 15360 | 21 | 0.65 |
| 10 | 32 | 24 | 10 | 2 | 5 | 3840 | 83 | 2.60 |
| 10 | 32 | 48 | 10 | 2 | 5 | 7680 | 42 | 1.30 |
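The figures in Table 11.1 appear to follow from simple capacity division. The sketch below reproduces them under assumptions inferred from the tabulated values rather than stated in the text: one ONU per wavelength, the number of DSLAMs per ONU equal to the downstream ONU rate divided by the DSLAM uplink rate, downstream capacity split among the users of one ONU, and the 10 Gbps upstream capacity split among all users. The names `Config` and `attainable_rates` are hypothetical and used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    wavelengths: int               # assumed: one ONU per wavelength
    users_per_dslam: int
    dslam_uplink_gbps: float
    ds_per_onu_gbps: float = 10.0  # downstream rate per ONU, as in Table 11.1
    us_shared_gbps: float = 10.0   # assumed: upstream shared by all users


def attainable_rates(cfg: Config) -> dict:
    """Derive user count and per-user rates for one table row."""
    # Each DSLAM consumes one uplink's worth of the ONU downstream rate.
    dslams_per_onu = int(cfg.ds_per_onu_gbps // cfg.dslam_uplink_gbps)
    users_per_onu = dslams_per_onu * cfg.users_per_dslam
    users = cfg.wavelengths * users_per_onu
    return {
        "dslams_per_onu": dslams_per_onu,
        "users": users,
        # Downstream is divided among the users behind a single ONU.
        "ds_per_user_mbps": cfg.ds_per_onu_gbps * 1000 / users_per_onu,
        # Upstream is divided among every user in the network (assumption).
        "us_per_user_mbps": cfg.us_shared_gbps * 1000 / users,
    }


if __name__ == "__main__":
    for wl in (16, 32):
        for uplink in (1, 2):
            for upd in (24, 48):
                print(wl, uplink, upd, attainable_rates(Config(wl, upd, uplink)))
```

For the first row (16 wavelengths, 24 users per DSLAM, 1 Gbps uplink) this yields 3840 users, roughly 41.7 Mbps downstream and 2.60 Mbps upstream per user, which the table rounds to 42 and 2.60.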

2.5. Extensions

Table 11.2

Line Rate and Population Coverage

| Wavelengths | Users per ONU | Users | D/S per User (Mbps) | U/S per User (Mbps) |
| --- | --- | --- | --- | --- |
| 16 | 50 | 800 | 200 | 12.5 |
| 16 | 25 | 400 | 400 | 25.0 |
| 16 | 17 | 267 | 600 | 37.5 |
| 16 | 13 | 200 | 800 | 50.0 |
| 16 | 10 | 160 | 1000 | 62.5 |
| 32 | 50 | 1600 | 200 | 6.3 |
| 32 | 25 | 800 | 400 | 12.5 |
| 32 | 17 | 533 | 600 | 18.8 |
| 32 | 13 | 400 | 800 | 25.0 |
| 32 | 10 | 320 | 1000 | 31.3 |
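The rows of Table 11.2 are consistent with a fixed capacity per wavelength being divided by a target per-user downstream rate. The following sketch makes that arithmetic explicit. It assumes 10 Gbps per wavelength, one ONU per wavelength, and a 10 Gbps upstream capacity shared among all users; these assumptions are inferred from the tabulated numbers, not taken from the text, and `coverage_row` is a hypothetical helper name.

```python
def coverage_row(wavelengths: int, ds_per_user_mbps: float,
                 capacity_mbps: float = 10_000.0) -> dict:
    """Users served and upstream share for a target downstream rate.

    capacity_mbps is the assumed per-wavelength downstream capacity and
    also the assumed total upstream capacity shared by all users.
    """
    # Unrounded number of users one ONU can serve at the target rate.
    users_per_onu = capacity_mbps / ds_per_user_mbps
    users = wavelengths * users_per_onu
    return {
        "users_per_onu": users_per_onu,
        "users": users,
        "us_per_user_mbps": capacity_mbps / users,
    }


if __name__ == "__main__":
    for wl in (16, 32):
        for rate in (200, 400, 600, 800, 1000):
            row = coverage_row(wl, rate)
            print(wl, rate, round(row["users_per_onu"]), round(row["users"]),
                  row["us_per_user_mbps"])
```

Working with unrounded intermediate values explains entries such as 267 users for 16 wavelengths at 600 Mbps: about 16.7 users per ONU times 16 wavelengths, rather than 17 times 16.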

2.6. Scalability study

2.7. Geographical study

Table 11.3

Geographical Coverage for Different Data Rates

| Nominal Rate (Mbps) | Convergence Distance (m) | Dense Urban (%) | Urban (%) | Suburban (%) | Rural (%) | Total Coverage (%) |
| --- | --- | --- | --- | --- | --- | --- |
| 100 | 150 | 98.4 | 86.0 | 19.1 | 37.7 | 80.20 |
| >80 | 500 | 100 | 100 | 96.1 | 85.1 | 99.30 |
| >50 | 900 | 100 | 100 | 100 | 87.9 | 99.88 |
| >30 | 1200 | 100 | 100 | 100 | 88.9 | 99.89 |

3. Aggregation and prediction of traffic

3.1. Introduction

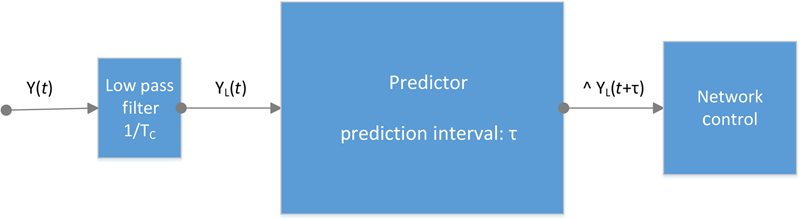

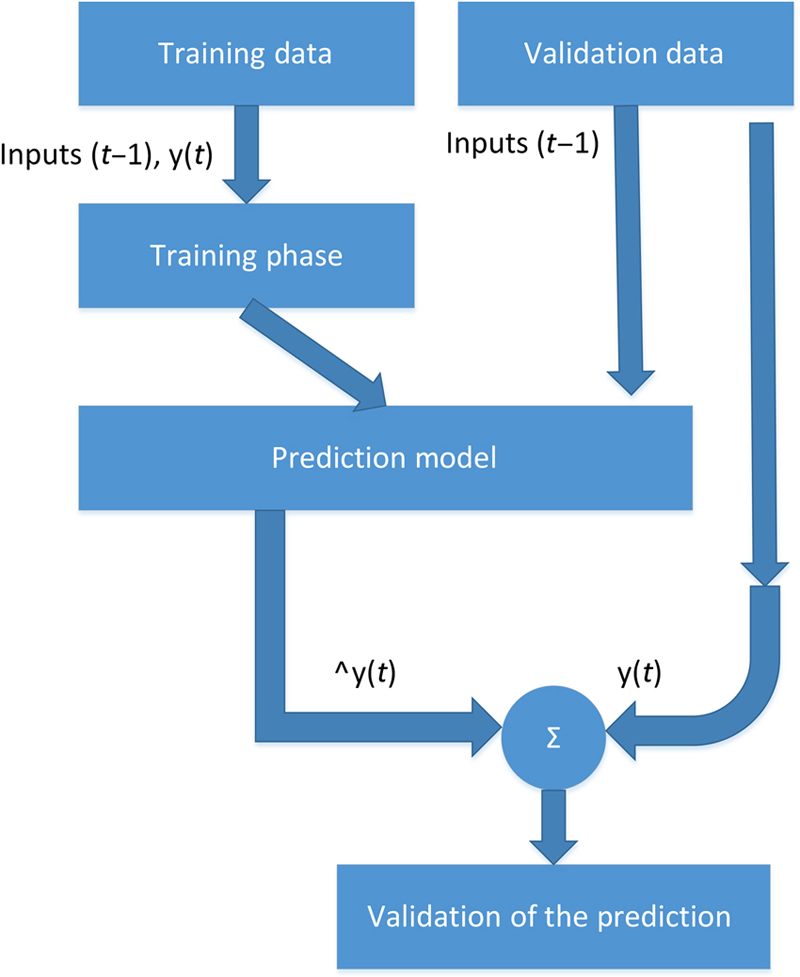

3.2. Traffic analysis and prediction

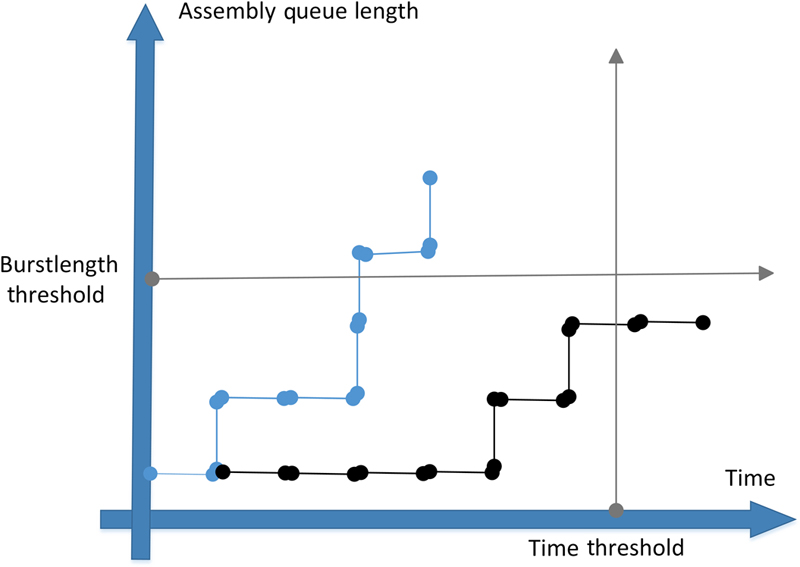

3.3. Traffic aggregation strategies

4. DBA simulation and results

4.1. PANDA DBA structure

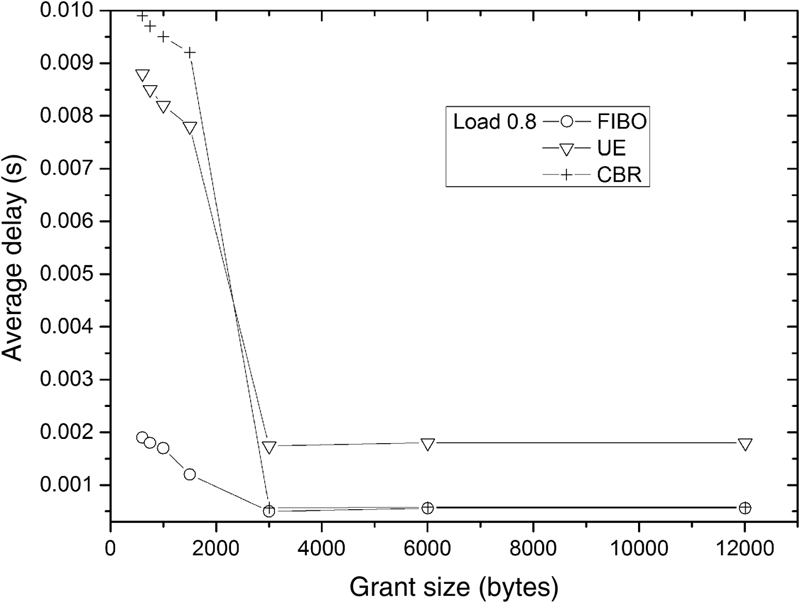

4.2. Proposed FIBO DBA algorithm