Smart transportation systems (STSs) in critical conditions

Abstract

In smart transportation systems (STSs) for smart cities, applications that can help in critical conditions play a key role. Critical conditions include natural disasters such as earthquakes, hurricanes, and floods, and man-made events such as terrorist attacks and toxic waste spills. Disasters often destroy the local telecommunication infrastructure, where one exists, which seriously hampers rescue operations.

The quick deployment of a telecommunication infrastructure is essential for emergency and safety operations, as are rapid network reconfigurability, the availability of open-source software, efficient interoperability, and the scalability of the technological solutions. Many research groups are actively working on these issues. Consequently, the deployment of a smart network is fundamental. Such a network must support both applications that can tolerate delays and applications that require dedicated resources for real-time services, such as traffic alert messages and public safety messages. Guaranteeing quality of service (QoS) for such applications is a key requirement.

In this chapter we analyze the principal networking issues and propose a solution based mainly on software-defined networking (SDN). We evaluate the benefits of this paradigm in the context described above, focusing on its incremental deployment in existing metropolitan networks, and we design a “QoS App” that manages quality of service on top of the SDN controller.

Keywords

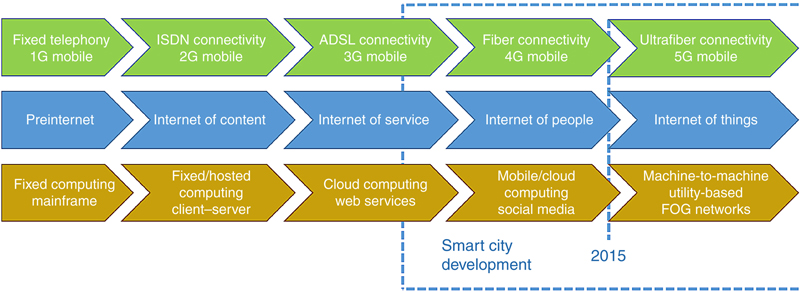

1. Introduction

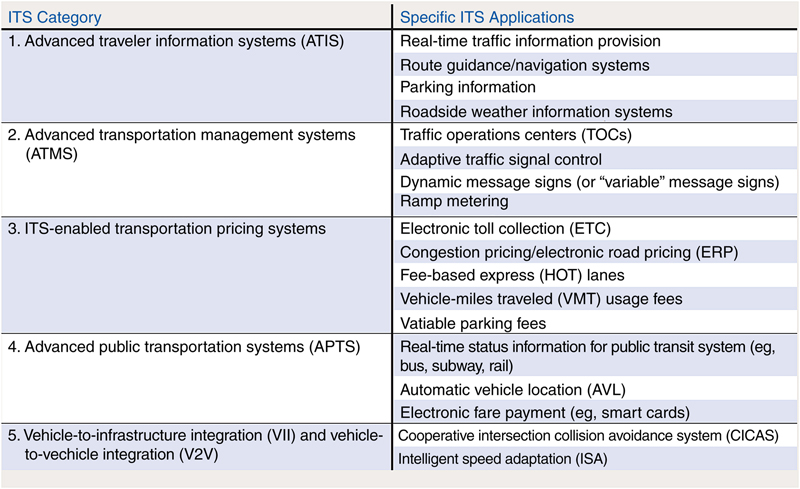

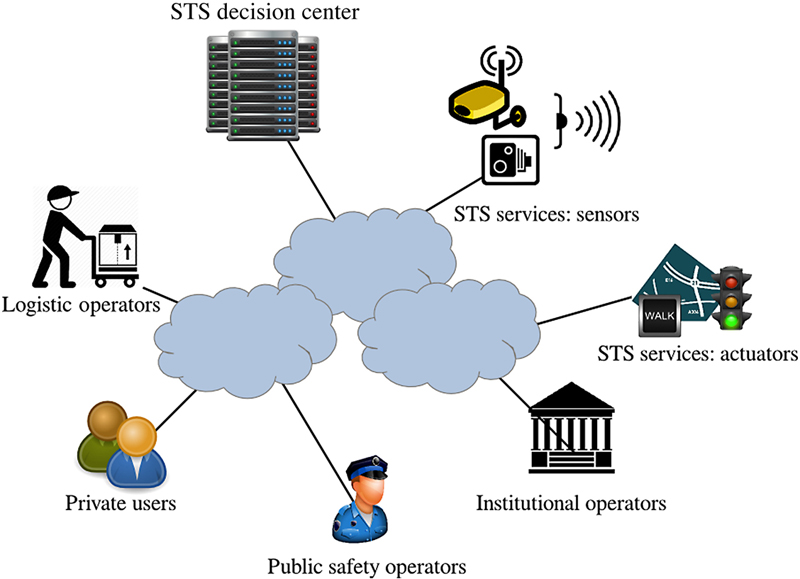

2. Smart transportation systems

Table 14.1

Principal Actors in an STS

| Actor | Description |
| --- | --- |
| Sensors | Sensors and networks of sensors. They can be fixed (e.g., traffic sensors, cameras) or mobile (e.g., vehicles) |
| Decision center | One or more control and decision centers, where all the data are collected, algorithms are executed, and decisions are taken |
| Actuators | A network of actuators (e.g., traffic lights, mobile barriers) to remotely control traffic |
| Information systems | Information systems to send messages to citizens/vehicles and public safety users (e.g., variable message panels, SMS services) |
| Users | Citizens; police or other institutions that interact with the STS to provide information or to request data; STS services that control all the sensors and actuators, gathering information or sending specific commands; other actors that use STS services |
| Networks | Networks that interconnect all the elements mentioned previously and guarantee a certain level of quality of service |

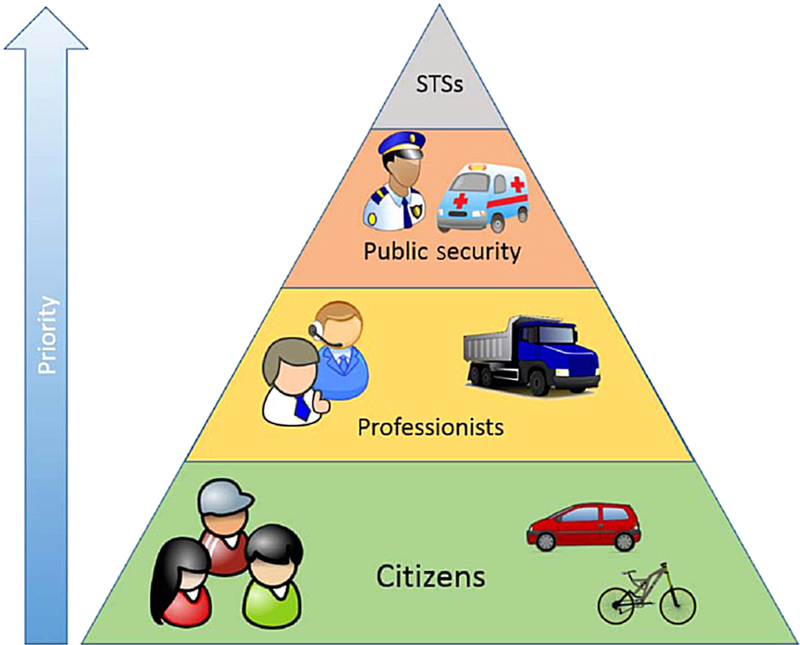

2.1. Users and main applications in an STS

2.2. STS in critical conditions

2.3. Security aspects in STS

3. Network design for smart transportation systems in critical conditions

3.1. The limits of current network technologies to support STS

Table 14.2

Performance Requirements of Some STS Users (notes a, b)

| Scenario | Low/Medium Criticality | High Criticality |
| --- | --- | --- |
| Private user who requests a path from A to B through an application on his/her smartphone | Availability: 99%; completion time: 5–15 s; information loss: 0 | Availability: ∼90%; completion time: 25–40 s; information loss: 0 |
| Logistics operator who has to make some deliveries and requests the optimum path | Availability: 99.5%; completion time: 2–5 s; information loss: 0 | |
| STS that sends alert messages to the smartphones of citizens/professionals to warn about a critical situation in the city | Availability: ∼99.9%; completion time: 10 s; information loss: 0 | Availability: ∼99.999%; completion time: 5 s; information loss: 0 |
| Policeman who interacts with the STS to resolve a critical situation in the city | Availability: ∼99.99%; completion time: 2–5 s; information loss: 0 | Availability: ∼99.999%; completion time: 1–2 s; information loss: 0 |
| Institutional operators who want to send video/images to the STS to document a particular situation; institutional operators who want to view video/images to follow a particular situation | Availability: ∼99.99%; video completion time: 2 s; video information loss: ∼10⁻²; image completion time: 1–2 s; image information loss: 0 | Availability: ∼99.999%; video completion time: 1 s; video information loss: ∼10⁻³; image completion time: 1–2 s; image information loss: 0 |

a ITU-T G.1010, End-user multimedia QoS categories, 11/2001.

b Optionally, an indication of the user/service priority can also be included. This priority is intended as a parameter for call admission control (CAC). For example, during a network outage caused by a catastrophic event, the STS could serve requests from public safety users before those of private users because of their higher priority.
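The priority-based admission idea in note b can be illustrated with a minimal sketch. It is not the chapter's CAC algorithm: the user classes, their priority ordering, the `Request` type, and the single capacity figure are all assumptions introduced here for illustration. Requests are considered in priority order and admitted while residual capacity remains.

```python
from dataclasses import dataclass

# Hypothetical priority levels (assumption): a lower number is served first.
PRIORITY = {"public_safety": 0, "institutional": 1, "logistics": 2, "private": 3}


@dataclass
class Request:
    user_class: str      # one of the keys of PRIORITY
    demand_kbps: float   # bandwidth requested by the user


def admit(requests, capacity_kbps):
    """Admit requests in priority order until the capacity is exhausted."""
    admitted, rejected = [], []
    remaining = capacity_kbps
    # sorted() is stable, so requests with equal priority keep arrival order.
    for req in sorted(requests, key=lambda r: PRIORITY[r.user_class]):
        if req.demand_kbps <= remaining:
            admitted.append(req)
            remaining -= req.demand_kbps
        else:
            rejected.append(req)
    return admitted, rejected


if __name__ == "__main__":
    pending = [
        Request("private", 600),
        Request("public_safety", 500),
        Request("private", 300),
    ]
    ok, ko = admit(pending, capacity_kbps=1000)
    print("admitted:", ok)
    print("rejected:", ko)
```

With a degraded capacity of 1000 kbit/s, the public safety request is served first even though it arrived second, and the larger private request is the one rejected.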

3.2. Toward a new network architecture

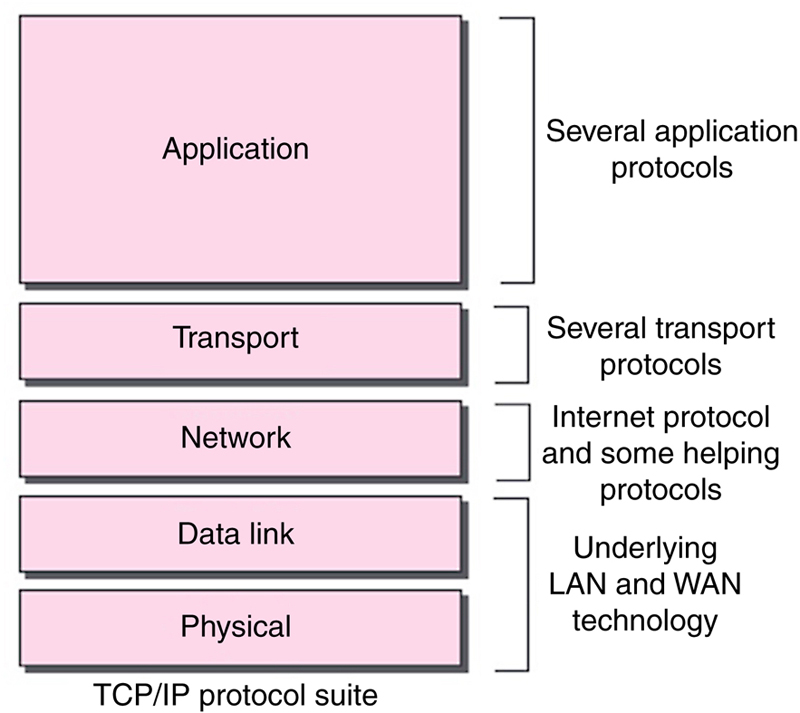

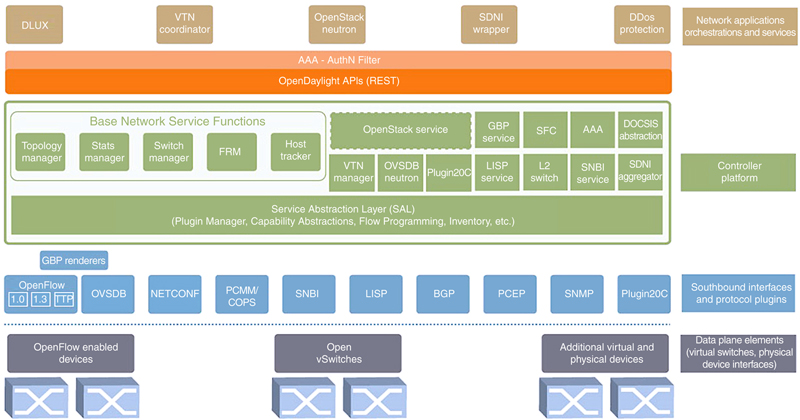

4. Software-defined networking

4.1. Brief history

4.1.1. Active networking

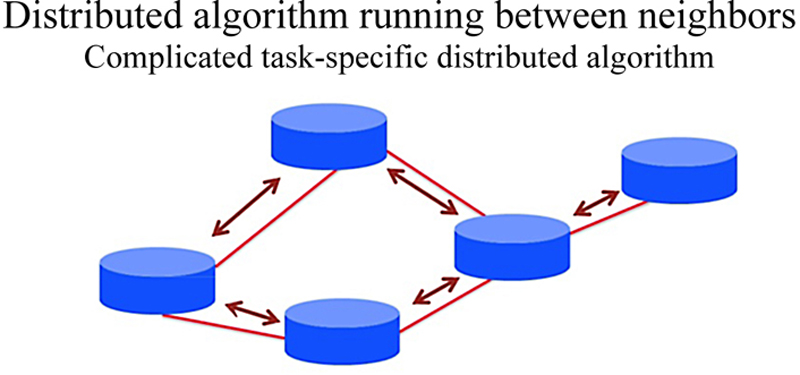

4.1.2. Separating control and data planes

4.2. Why did it not work out?

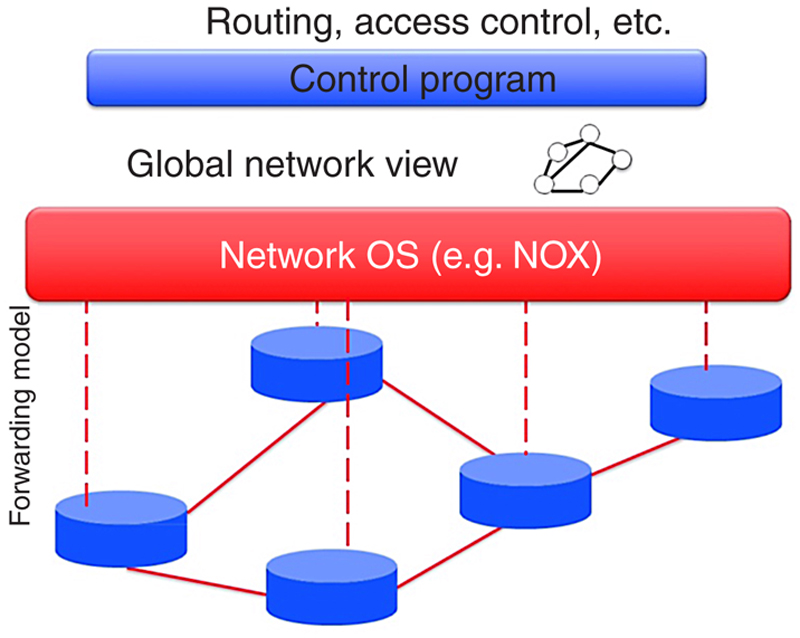

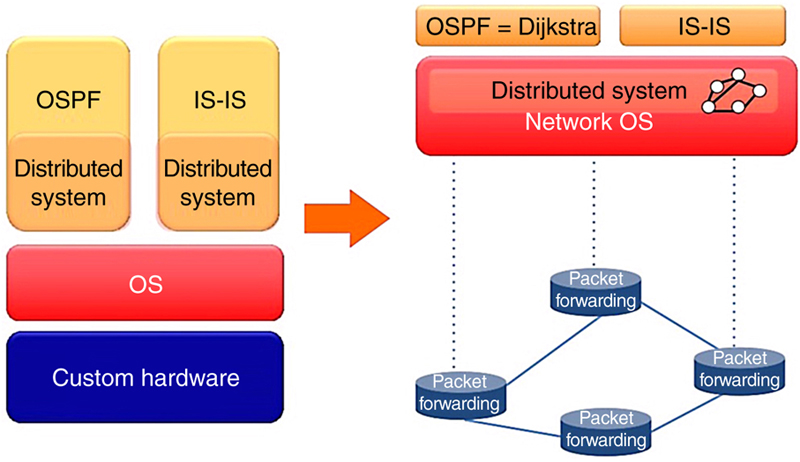

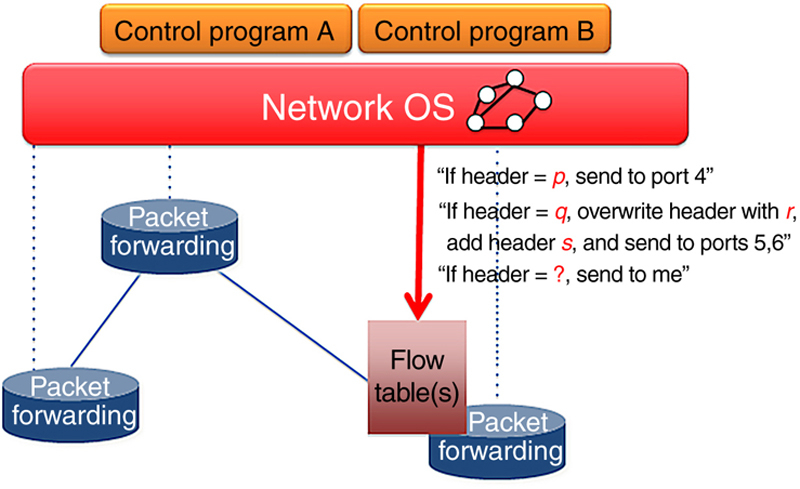

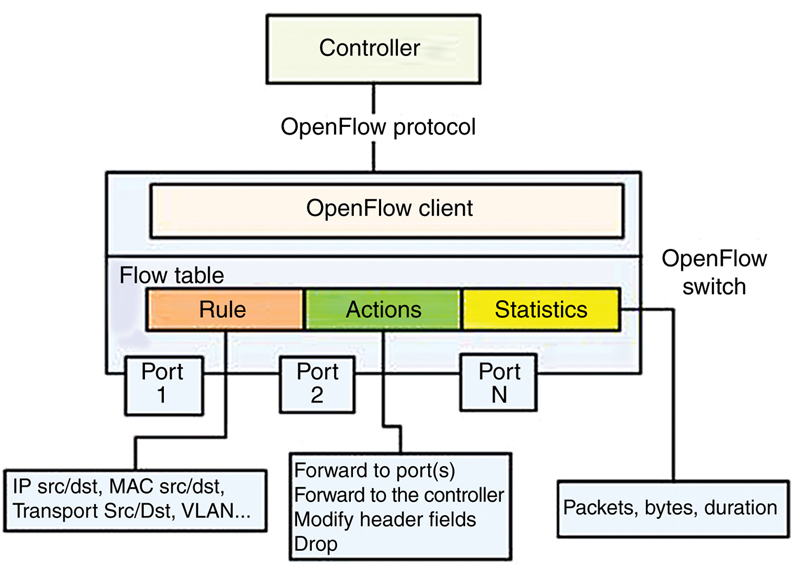

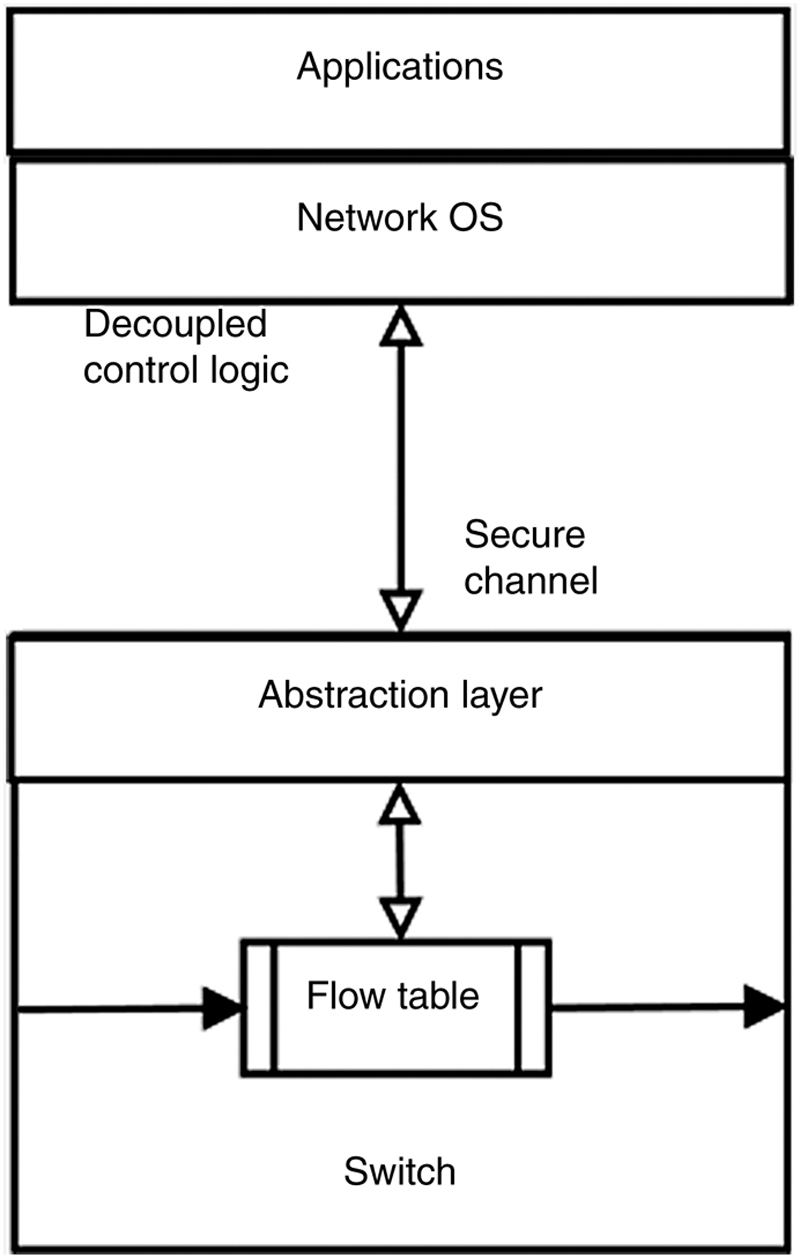

4.3. Theoretical aspects

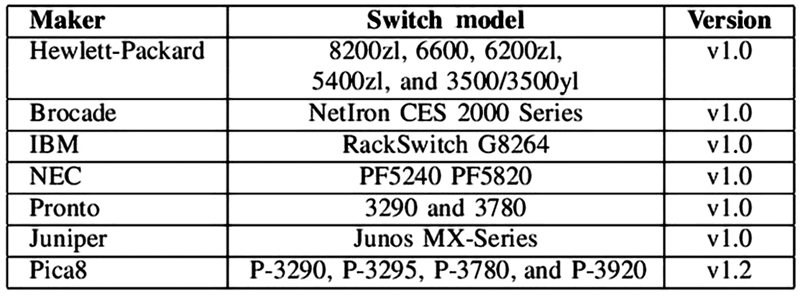

4.4. SDN in practice

4.4.1. Implementation aspects: forwarding model

4.4.2. Implementation aspects: controller

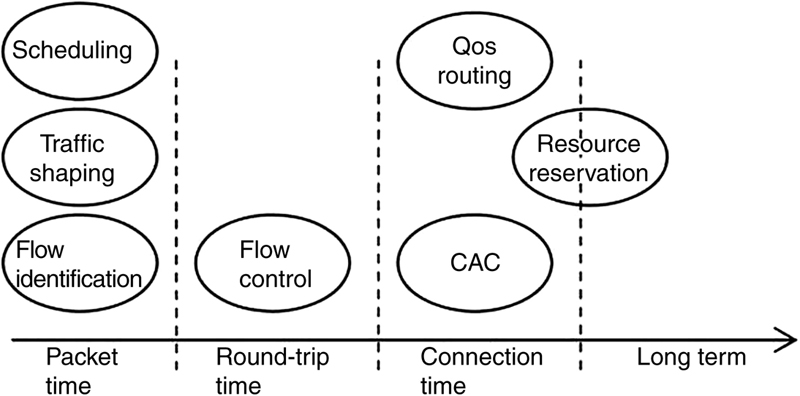

5. QoS applications for STS

5.1. STS scenario

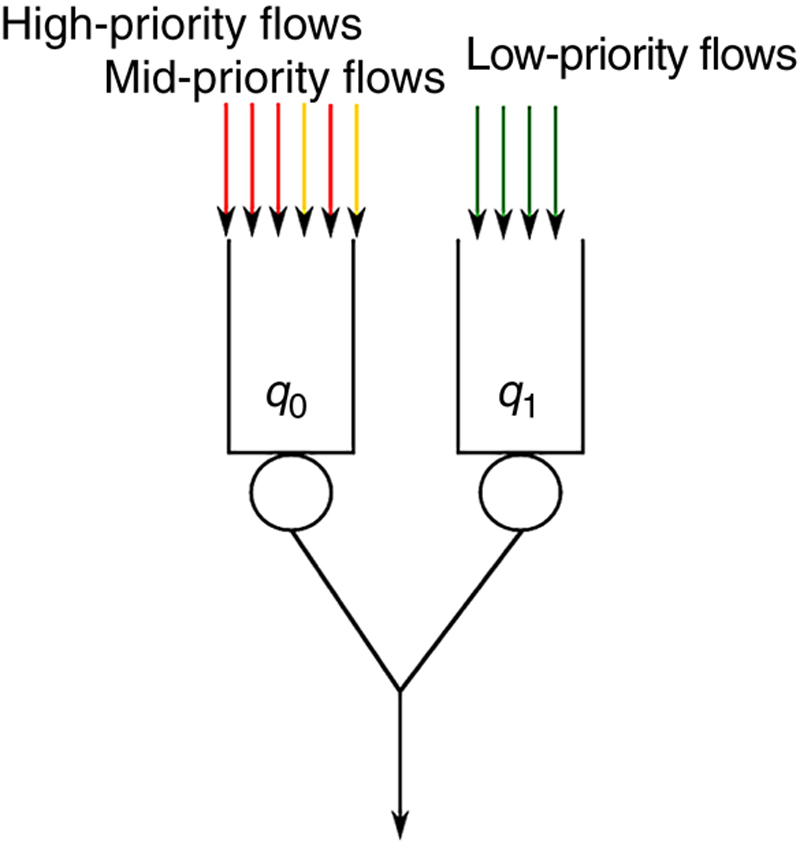

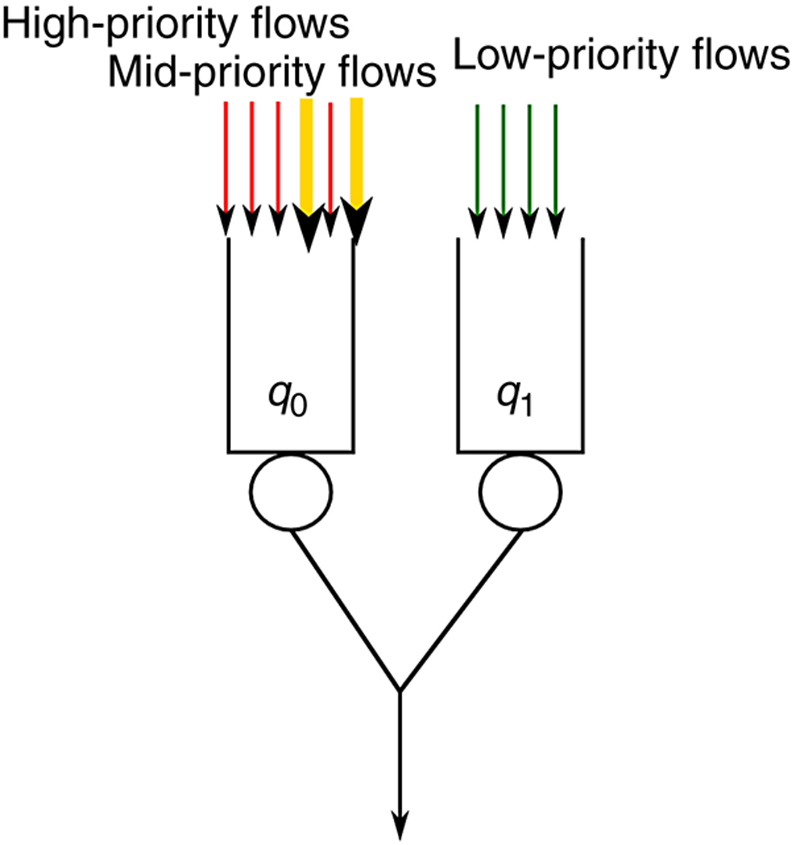

Table 14.3

BeaQoS Statistics

| Statistics Available in OpenFlow 1.0 | | Statistics Computed by BeaQoS |
| --- | --- | --- |
| Tx bytes per flow | → | Estimated rate per flow |
| Tx bytes per port | → | Estimated rate per port |
| Tx bytes per queue | → | Estimated rate per queue |
| Flow match, flow actions, queue ID | → | Flows per queue |
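How the derived statistics in Table 14.3 could be obtained from the raw OpenFlow counters can be sketched as follows. This is an illustration under assumptions, not the BeaQoS implementation: the function names, the dictionary layout of a flow entry, and the `"ENQUEUE"` action label (standing in for the OpenFlow 1.0 enqueue action) are hypothetical. A rate is estimated from two successive readings of a transmitted-bytes counter, and the flows per queue are counted from the queue ID found in each flow's actions.

```python
from collections import Counter


def estimate_rate_bps(prev_bytes, curr_bytes, interval_s):
    """Average rate in bit/s between two readings of a Tx-bytes counter."""
    if interval_s <= 0:
        raise ValueError("polling interval must be positive")
    delta = curr_bytes - prev_bytes
    if delta < 0:
        # Counter reset or wrap-around: this sample is unusable, report 0.
        return 0.0
    return delta * 8 / interval_s


def flows_per_queue(flow_entries):
    """Count the flows whose actions enqueue packets on each queue ID."""
    counts = Counter()
    for entry in flow_entries:
        for action in entry["actions"]:
            if action.get("type") == "ENQUEUE":
                counts[action["queue_id"]] += 1
    return dict(counts)


if __name__ == "__main__":
    # Two polls of the same counter, taken 2 s apart.
    print(estimate_rate_bps(1_000_000, 1_500_000, 2.0))
    flows = [
        {"match": "flow-1", "actions": [{"type": "ENQUEUE", "port": 1, "queue_id": 0}]},
        {"match": "flow-2", "actions": [{"type": "ENQUEUE", "port": 1, "queue_id": 1}]},
        {"match": "flow-3", "actions": [{"type": "ENQUEUE", "port": 1, "queue_id": 1}]},
    ]
    print(flows_per_queue(flows))
```

The same rate function applies to per-flow, per-port, and per-queue counters, since each is a monotonically increasing byte count sampled at a known interval.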

Table 14.4

Queues Configuration and Traffic Characteristics

| Queue ID | Service Rate | Buffer Size |
| --- | --- | --- |
| 0 | 4 Mbit/s | 1000 packets |
| 1 | 16 Mbit/s | 1000 packets |

| Traffic Descriptor | Rate | Percentage |
| --- | --- | --- |
| HP/MP | 40–60 kbit/s | 40% |
| MP non-conformant | 200–800 kbit/s | 20% |
| LP | 200–800 kbit/s | 40% |
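The traffic mix in Table 14.4 can be turned into a small flow generator for experimentation. The sketch below is only one possible reading of the table: it assumes that the percentages are the shares of flows per traffic descriptor and that each flow's rate is drawn uniformly from the listed range. Neither assumption is stated in the table, and the names `TRAFFIC_MIX`, `sample_flows`, and `mean_rate_kbps` are hypothetical.

```python
import random

# Queue configuration as listed in Table 14.4.
QUEUES = {
    0: {"rate_mbps": 4, "buffer_pkts": 1000},
    1: {"rate_mbps": 16, "buffer_pkts": 1000},
}

# (descriptor, (min kbit/s, max kbit/s), share of flows)
TRAFFIC_MIX = [
    ("HP/MP", (40, 60), 0.40),
    ("MP non-conformant", (200, 800), 0.20),
    ("LP", (200, 800), 0.40),
]


def sample_flows(n, seed=None):
    """Draw n flows; rates are uniform within each range (assumption)."""
    rng = random.Random(seed)
    weights = [share for _, _, share in TRAFFIC_MIX]
    flows = []
    for _ in range(n):
        name, (low, high), _ = rng.choices(TRAFFIC_MIX, weights=weights, k=1)[0]
        flows.append((name, rng.uniform(low, high)))
    return flows


def mean_rate_kbps():
    """Expected rate of one flow under the uniform-rate assumption."""
    return sum(share * (low + high) / 2 for _, (low, high), share in TRAFFIC_MIX)


if __name__ == "__main__":
    flows = sample_flows(100, seed=1)
    offered_kbps = sum(rate for _, rate in flows)
    total_service_kbps = sum(q["rate_mbps"] for q in QUEUES.values()) * 1000
    print(f"mean rate per flow: {mean_rate_kbps():.0f} kbit/s")
    print(f"offered load: {offered_kbps:.0f} kbit/s of {total_service_kbps} kbit/s")
```

Under these assumptions the expected rate of a single flow is 0.4 × 50 + 0.2 × 500 + 0.4 × 500 = 320 kbit/s, which gives a rough feel for how many concurrent flows the two queues can carry before congestion sets in.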