CHAPTER 14

Unit Testing

More than the act of testing, the act of designing tests is one of the best bug preventers known. The thinking that must be done to create a useful test can discover and eliminate bugs before they are coded — indeed, test-design thinking can discover and eliminate bugs at every stage in the creation of software, from conception to specification, to design, coding and the rest.

—Boris Beizer

You can see a lot by just looking.

—Yogi Berra

As was emphasized in the last chapter, nobody's perfect, including software developers. In Chapter 13 we talked about different things to look for when you know there are errors in your code. Now we're going to talk about how to find those errors. Of the three types of errors in your code, the compiler will find the syntax errors and the occasional semantic error. In some language environments, the run-time system will find others (to your users' chagrin). The rest of the errors are found in two different ways – testing, and code reviews and inspections. In this chapter, we'll discuss testing, when to do it, what it is, how to do it, what your tests should cover, and the limitations of testing. In the next chapter we'll talk about code reviews and inspections.

There are three levels of testing in a typical software development project: unit testing, integration testing, and system testing. Unit testing is typically done by you, the developer. With unit testing, you're testing individual methods and classes, but you're generally not testing larger configurations of the program. You're also not usually testing interfaces or library interactions – except those that your method might actually be using. Because you are doing unit testing, you know how all the methods are written, what the data is supposed to look like, what the method signatures are, and what the return values and types should be. This is known as white-box testing. It should really be called transparent-box testing, because the assumption is you can see all the details of the code being tested.

Integration testing is normally done by a separate testing organization. This is the testing of a collection of classes or modules that interact with each other; its purpose is to test interfaces between modules or classes and the interactions between the modules. Testers write their tests with knowledge of the interfaces but not with information about how each module has been implemented. From that perspective the testers are users of the interfaces. Because of this, integration testing is sometimes called gray-box testing. Integration testing is done after unit tested code is integrated into the source code base. A partial or complete version of the product is built and tested, to find any errors in how the new module interacts with the existing code. This type of testing is also done when errors in a module are fixed and the module is re-integrated into the code base.

System testing is normally done by a separate testing organization. This is the testing of the entire program (the system). System testing is done on both internal baselines of the software product and on the final baseline that is proposed for release to customers. The separate testing organization uses the requirements and writes their own tests without knowing anything about how the program is designed or written. This is known as black-box testing because the program is opaque to the tester except for the inputs it takes and the outputs it produces. The job of the testers at this level is to make sure that the program implements all the requirements. Black box testing can also include stress testing, usability testing, and acceptance testing. End users may be involved in this type of testing.

The Problem with Testing

So, if we can use testing to find errors in our programs, why don't we find all of them? After all, we wrote the program, or at least the fix or new feature we just added, so we must understand what we just wrote. We also wrote the tests. So why do so many errors escape into the next phase of testing or even into users' hands?

Well, there are two reasons we don't find all the errors in our code. First, we're not perfect. This seems to be a theme here. But we're not. If we made mistakes when we wrote the code, why should we assume we won't make some mistakes when we read it or try to test and fix it? This happens for even small programs, but it's particularly true for larger programs. If you have a 50,000 line program, that's a lot to read and understand and you're bound to miss something. Also, static reading of programs won't help you find those dynamic interactions between modules and interfaces. So we need to test more intelligently and combine both static (code reading) and dynamic (testing) techniques to find and fix errors in programs.

The second reason that errors escape from one testing phase to another and ultimately to the user is that software, more than any other product that humans manufacture, is very complex. Even small programs have many pathways through the code and many different types of data errors that can occur. This large number of pathways through a program is called a combinatorial explosion. Every time you add an if statement to your program, you can double the number of possible paths through the program. Think about it; you have one path through the code if the conditional expression in the if statement is true, and a different path if the conditional expression is false. Every time you add a new input value you increase complexity and increase the number of possible errors. This means that, for large programs, you can't possibly test every possible path through the program with every possible input value. The number of code path/data value combinations grows exponentially, so there are far too many to test every one.
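To make the doubling concrete, here is a minimal sketch that counts the paths through a run of if statements. The class and method names are hypothetical, and the count assumes the if statements are independent and sequential, with no nesting and no early exits.

```java
// Hypothetical illustration, not from the text: counts the paths through
// n independent if statements that follow one another in sequence.
final class PathCount {

    // Each if statement offers two choices (true or false), so n of them
    // in sequence give 2 * 2 * ... * 2 = 2^n distinct paths.
    static long countPaths(int ifStatements) {
        long paths = 1;                       // straight-line code has one path
        for (int i = 0; i < ifStatements; i++) {
            paths *= 2;                       // one more if doubles the count
        }
        return paths;
    }

    public static void main(String[] args) {
        for (int n : new int[] {1, 10, 20, 30}) {
            System.out.printf("%d if statements -> %d paths%n", n, countPaths(n));
        }
    }
}
```

With only 30 such statements the count already passes one billion, and that is before you consider any variation in the input data.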

So what to do? Well, if brute force won't work, then you need a better plan. That plan is to identify those use cases that are the most probable and test those. You need to identify the likely input data values and the boundary conditions for data, and figure out what the likely code paths will be and test those. That, it turns out, will get you most of the errors. Steve McConnell says in Code Complete that a combination of good testing and code reviews can uncover more than 95% of errors in a good-sized program.1 That's what we need to shoot for.

______________

1 McConnell, S. Code Complete 2: A Practical Handbook of Software Construction. (Redmond, WA: Microsoft Press, 2004.)

That Testing Mindset

There's actually another problem with testing – you. Well, actually, you, the developer. You see, developers and testers have two different, one might say adversarial, roles to play in code construction. Developers are there to take a set of requirements and produce a design that reflects the requirements and write the code that implements the design. Your job as a developer is to get code to work.

A tester's job, on the other hand, is to take those same requirements and your code and get the code to break. Testers are supposed to do unspeakable, horrible, wrenching things to your code in an effort to get the errors in it to expose themselves to the light of day. Their job is to break stuff. You the developer then get to fix it. This is why being a tester can be a very cool job.

You can see where this might be an adversarial relationship. You can also see where developers might make pretty bad testers. If your job is to make the code work, you're not focused on breaking it. So your test cases may not be the nasty, mean test cases that someone whose job it is to break your code may come up with. In short, because they're trying to build something beautiful, developers make lousy testers. Developers tend to write tests using typical, clean data. They tend to have an overly optimistic view of how much of their code a test will exercise. They tend to write tests assuming that the code will work; after all it's their code, right?

This is why most software development organizations have a separate testing team, particularly for integration and system testing. The testers write their own test code, create their own frameworks, do the testing of all new baselines and the final release code, and report all the errors back to the developers who then must fix them. The one thing testers normally do not do is unit testing. Unit testing is the developer's responsibility, so you're not off the hook here. You do need to think about testing, learn how to write tests, how to run them, and how to analyze the results. You need to learn to be mean to your code. And you still need to fix the errors.

When to Test?

Before I get around to discussing just how to do unit testing and what things to test, let's talk about when to test. Current thinking falls into two areas: the more traditional approach is to write your code, get it to compile, so you've eliminated the syntax errors, and then write your tests and do your unit testing after you feel the code for a function or a module is finished. This has the advantage that you've understood the requirements and written the code and while you were writing the code you had the opportunity to think about test cases. Then you can write clear test cases. In this strategy testing and debugging go hand in hand and occur pretty much simultaneously. It allows you to find an error, fix it, and then re-run the failed test right away.

A newer approach that flows out of the agile methodologies, especially out of Extreme Programming, is called test-driven development (TDD). With TDD, you write your unit tests before you write any code. Clearly if you write your unit tests first, they will all fail – at most you'll have the stub of a method to call in your test. But that's a good thing because in TDD your goal when you write code is to get all the tests to pass. So if you've written a bunch of tests, you then write just enough code to make all the tests pass and then you know you're done! This has the advantage of helping you keep your code lean, which implies simpler and easier to debug. You can write some new code, test it; if it fails write some more code, if it passes, stop. It also gives you, right up front, a set of tests you can run whenever you make a change to your code. If the tests all still pass, then you haven't broken anything by making the changes. It also allows you to find an error, fix it, and then re-run the failed test right away.

So which way is better? Well, the answer is another of those “it depends” things. Generally, writing your tests first gets you in the testing mind-set earlier and gives you definite goals for implementing the code. On the other hand, until you do it a lot and it becomes second nature, writing tests first can be hard because you have to visualize what you're testing. It forces you to come to terms with the requirements and the module or class design early as well. That means that design/coding/testing all pretty much happen at once. This can make the whole code construction process more difficult. TDD works well for small to medium sized projects (as do agile techniques in general), but it may be more difficult for very large programs. TDD also works quite well when you are pair programming. In pair programming, the driver is writing the code while the navigator is watching for errors and thinking about testing. With TDD, the driver is writing a test while the navigator is thinking of more tests to write and thinking ahead to the code. This process tends to make writing the tests easier and then flows naturally into writing the code.

Give testing a shot both before and after and then you can decide which is best.

What to Test?

Now that we've talked about different phases of testing and when you should do your unit testing, it's time to discuss just what to test. What you're testing falls into two general categories: code coverage and data coverage.

- Code coverage has the goal of executing every line of code in your program at least once with representative data so you can be sure that all the code functions correctly. Sounds easy? Well, remember that combinatorial explosion problem for that 50,000 line program.

- Data coverage has the goal of testing representative samples of good and bad data, both input data and data generated by your program, with the objective of making sure the program handles data and particularly data errors correctly.

Of course there is overlap between code coverage and data coverage; sometimes in order to get a particular part of your program to execute you have to feed it bad data, for example. We'll separate these as best we can and come together when we talk about writing actual tests.

Code Coverage: Test Every Statement

Your objective in code coverage is to test every statement in your program. In order to do that, you need to keep several things in mind about your code. Your program is made up of a number of different types of code, each of which you need to test.

First, there's straight line code. Straight line code illuminates a single path through your function or method. Normally this will require one test – per different data type (see below for data coverage).

Next there is branch coverage. With branch coverage you want to test everywhere your program can change directions. That means you need to look at control structures here. Take a look at every if and switch statement, and every complex conditional expression – those that contain AND and OR operators in them. For every if statement you'll need two tests – one for when the conditional expression is true and one for when it's false. For every switch statement in your method you'll need a separate test for each case clause in the switch, including the default clause (all your switch statements have a default clause, right?). The logical and (&&) and or (||) operators add complexity to your conditional expressions, so you'll need extra test cases for those.

Ideally, you'll need four test cases for each (F-F, F-T, T-F, T-T), but if the language you are using uses short-cut evaluation for logical operators, as do C/C++ and Java, then you can reduce the number of test cases. For the or operator you'll still need two cases if the first sub-expression is false, but you can just use a single test case if the first sub-expression evaluates to true (the entire expression will always be true). For the and operator, you'll only need a single test if the first sub-expression evaluates to false (the result will always be false) but you need both tests if the first sub-expression evaluates to true.
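As a rough sketch of the reduced set of cases under short-cut evaluation, consider two small methods, one built on && and one on ||. The class and method names here are hypothetical; the point is only that each operator needs three test cases rather than four.

```java
// Hypothetical example: two conditions that rely on short-cut evaluation.
final class ShortCircuitCases {

    // True only for a non-null, non-empty string. Because && short-circuits,
    // s.isEmpty() is never evaluated when s is null.
    static boolean hasText(String s) {
        return s != null && !s.isEmpty();
    }

    // True for a null or empty string. Because || short-circuits,
    // s.isEmpty() is never evaluated when s is null.
    static boolean isNullOrEmpty(String s) {
        return s == null || s.isEmpty();
    }

    public static void main(String[] args) {
        // && : one case with the first operand false, two with it true.
        System.out.println(hasText(null));        // first operand false
        System.out.println(hasText(""));          // first true, second false
        System.out.println(hasText("abc"));       // both true

        // || : one case with the first operand true, two with it false.
        System.out.println(isNullOrEmpty(null));  // first operand true
        System.out.println(isNullOrEmpty(""));    // first false, second true
        System.out.println(isNullOrEmpty("abc")); // both false
    }
}
```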

Then there is loop coverage. This is similar to branch coverage above. The difference here is that in for, while, or do-while loops you have the best likelihood of introducing an off-by-one error and you need to test for that explicitly. You'll also need a test for a “normal” run through the loop, but you'll need to test for a couple of other things too. First will be the possibility for the pre-test loops that you never enter the loop body – the loop conditional expression fails the very first time. Then you'll need to test for an infinite loop – the conditional expression never becomes false. This is most likely because you don't change the loop control variable in the loop body, or you do change it, but the conditional expression is wrong from the get-go. For loops that read files, you normally need to test for the end-of-file marker (EOF). This is another place where errors could occur either because of a premature end-of-file or because (in the case of using standard input) end-of-file is never indicated.
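Here is a minimal sketch of the loop cases described above, using a hypothetical method that sums the first few elements of an array. It covers the zero-pass, one-pass, typical, and upper-boundary cases; it does not try to detect an infinite loop, which usually takes a timeout in the test harness.

```java
// Hypothetical example: loop coverage for a simple pre-test loop.
final class LoopCases {

    // Sums the first count elements of values.
    static int sumFirst(int[] values, int count) {
        int sum = 0;
        for (int i = 0; i < count; i++) {   // writing i <= count here would be off by one
            sum += values[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        int[] data = {1, 2, 3, 4};
        System.out.println(sumFirst(data, 0));  // loop body is never entered
        System.out.println(sumFirst(data, 1));  // exactly one pass
        System.out.println(sumFirst(data, 3));  // a "normal" run
        System.out.println(sumFirst(data, 4));  // upper boundary: touches the last element
    }
}
```

The last call is the one that would expose an off-by-one error at the top of the range, because an extra pass would index past the end of the array.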

Finally, there are return values. In many languages, standard library functions and operating system calls all return values. For example, in C, the fprintf and fscanf functions return the number of characters printed to an output stream and the number of input elements assigned from an input stream, respectively. But hardly anyone ever checks these return values.2 You should!

Note that Java is a bit different than C or C++. In Java many of the similarly offending routines will have return values declared void rather than int as in C or C++. So the above problem occurs much less frequently in Java than in other languages. It's not completely gone however. While the System.out.print() and System.out.println() methods in Java are both declared to return void, the System.out.printf() method returns a PrintStream object that is almost universally ignored. In addition, it's perfectly legal in Java to call a Scanner's next() or nextInt() methods or any of the methods that read data and not save the return value in a variable. Be careful out there.
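As one illustration in Java, the delete() method of java.io.File returns a boolean that is easy to ignore. The sketch below checks it; the class and helper names are hypothetical, and the temporary file is only there to give the example something to delete.

```java
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical example: checking a return value that is often ignored.
final class ReturnValueCheck {

    // Deletes the file and reports whether the deletion actually happened.
    static boolean deleteChecked(File f) {
        boolean deleted = f.delete();   // File.delete() returns false on failure
        if (!deleted) {
            System.out.println("Could not delete " + f.getName());
        }
        return deleted;
    }

    // Creates a temporary file, then tries to delete it twice.
    static boolean[] deleteTwice() {
        try {
            File temp = File.createTempFile("contacts", ".txt");
            boolean first = deleteChecked(temp);   // the file exists at this point
            boolean second = deleteChecked(temp);  // the file is already gone
            return new boolean[] {first, second};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        boolean[] results = deleteTwice();
        System.out.println("first delete:  " + results[0]);
        System.out.println("second delete: " + results[1]);
    }
}
```

A unit test for code like this should cover both outcomes: the call that succeeds and the call that reports failure.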

Data Coverage: Bad Data Is Your Friend?

Remember in the chapter on Code Construction we talked about defensive programming, and that the key to defending your program was watching out for bad data, detecting and handling it so that your program can recover from bad data or at least fail gracefully. Well, this is where we see if your defenses are worthy. Data coverage should examine two types of data, good data and bad data. Good data is the typical data your method is supposed to handle. These tests will test data that is the correct type and within the correct ranges. They are just to see if your program is working normally. This doesn't mean you're completely off the hook here. There are still a few cases to test. Here's the short list:

- Test boundary conditions. This means to test data near the edges of the range of your valid data. For example, if your program is computing average grades for a course, then the range of values is between 0 and 100 inclusive. So you should test grades at, for example, 0, 1, 99, and 100. Those are all valid grades. But you should also test at -1, and 101. Both of these are invalid values, but are close to the range. In addition, if you are assigning letter grades, you need to check at the upper and lower boundaries of each letter grade value. So if an F is any grade below a 60, you need to check 59, 60, and 61. If you're going to have an off-by-one error, that's where to check.

- Test typical data values. These are valid data fields that you might normally expect to get. For the grading example above, you might check 35, 50, 67, 75, 88, 93, and so on. If these don't work you've got other problems.

- Test pre- and post-conditions. Whenever you enter a control structure – a loop or a selection statement, or make a function call, you're making certain assumptions about data values and the state of your computations. These are pre-conditions. And when you exit that control structure, you're making assumptions about what those values are now. These are post-conditions. You should write tests that make sure that your assumptions are correct by testing the pre- and post-conditions. In languages that have assertions (including C, C++, and Java), this is a great place to use them.
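For the pre- and post-conditions in the last item, a minimal sketch using Java's assert statement might look like the following. The class and method are hypothetical, built on the grade-averaging example above. Keep in mind that Java only checks assertions when the program is run with the -ea option.

```java
// Hypothetical example: assertions guarding pre- and post-conditions.
// Run with "java -ea AverageGrade" so the assert statements are checked.
final class AverageGrade {

    // Computes the average of grades that are each expected to be in 0..100.
    static double average(int[] grades) {
        // Pre-condition: there is at least one grade to average.
        assert grades != null && grades.length > 0 : "no grades supplied";

        int sum = 0;
        for (int g : grades) {
            // Pre-condition on each value: the grade is in range.
            assert g >= 0 && g <= 100 : "grade out of range: " + g;
            sum += g;
        }
        double avg = (double) sum / grades.length;

        // Post-condition: the average of in-range grades is itself in range.
        assert avg >= 0.0 && avg <= 100.0 : "average out of range: " + avg;
        return avg;
    }

    public static void main(String[] args) {
        System.out.println(average(new int[] {70, 80, 90}));
    }
}
```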

____________

2 Kernighan, B. W. and R. Pike. The Practice of Programming. (Boston, MA: Addison-Wesley, 1999.)

Testing valid data and boundary conditions is one thing, but you also need to test bad data.

- Illegal data values. You should test data that is blatantly illegal to make sure that your data validation code is working. We already mentioned testing illegal data near the boundaries of your data ranges. You should also test some that are blatantly out of the range.

- No data. Yup, test the case where you are expecting data and you get nothing. This is the case where you've prompted a user for input and instead of typing a value and hitting the return key, they just hit return. Or the file you've just opened is empty. Or you're expecting three files on the command line and you get none. You've got to test all of these cases.

- Too little or too much data. You have to test the cases where you ask for three pieces of data and only get two. Also the cases where you ask for three and you get ten pieces of data.

- Uninitialized variables. Most language systems these days will provide default initialization values for any variable that you declare. But you should still test to make sure that these variables are initialized correctly. (Really, you should not depend on the system to initialize your data anyway; you should always initialize it yourself.)
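The boundary and illegal-value cases from the grading example can be sketched as follows. The two-letter scale ('F' below 60, 'P' otherwise) and the choice to reject bad grades with an IllegalArgumentException are assumptions made for this illustration, as are all the names.

```java
// Hypothetical example: boundary values and bad data for a grade check.
final class LetterGrade {

    // Returns 'F' for grades below 60 and 'P' otherwise (a simplified scale).
    // Grades outside 0..100 are rejected with an exception.
    static char letterFor(int grade) {
        if (grade < 0 || grade > 100) {
            throw new IllegalArgumentException("grade out of range: " + grade);
        }
        return grade < 60 ? 'F' : 'P';
    }

    // Helper for tests: true if letterFor refuses the given grade.
    static boolean rejects(int grade) {
        try {
            letterFor(grade);
            return false;
        } catch (IllegalArgumentException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        // Valid values at the edges of the range and around the F boundary.
        for (int g : new int[] {0, 1, 59, 60, 61, 99, 100}) {
            System.out.println(g + " -> " + letterFor(g));
        }
        // Invalid values: just outside the range, then blatantly outside it.
        for (int g : new int[] {-1, 101, -5000, 5000}) {
            System.out.println(g + " rejected: " + rejects(g));
        }
    }
}
```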

Characteristics of Tests

Robert Martin, in his book Clean Code, describes a set of characteristics that all unit tests should have using the acronym F.I.R.S.T.:3

Fast. Tests should be fast. If your tests take a long time to run, you're liable to run them less frequently. So make your tests small, simple, and fast.

Independent. Tests should not depend on each other. In particular, one test shouldn't set up data or create objects that another test depends on. For example, the JUnit testing framework for Java has separate set-up and tear-down methods that make the tests independent. We'll examine JUnit in more detail later on.

Repeatable. You should be able to run your tests any time you want, in any order you want, including after you've added more code to the module.

Self-Validating. The tests should either just pass or fail; in other words, their output should just be boolean. You shouldn't have to read pages and pages of a log file to see if the test passed or not.

Timely. This means you should write the tests when you need them, so that they're available when you want to run them. For agile methodologies that use TDD, this means write the unit tests first, just before you write the code that they will test.

_____________

3 Martin, R. C. Clean Code: A Handbook of Agile Software Craftsmanship. (Upper Saddle River, NJ: Prentice-Hall, 2009.)

Finally, it's important that just like your functions, your tests should only test one thing; there should be a single concept for each test. This is very important for your debugging work because if each test only tests a single concept in your code, a test failure will point you like a laser at the place in your code where your error is likely to be.
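A minimal sketch of a self-validating, single-concept test written by hand is shown here. The method under test and all the names are hypothetical; the idea is simply that the test reports pass or fail on its own, so nobody has to read its output to judge the result.

```java
// Hypothetical example: a hand-written test that validates itself.
final class SelfValidatingTest {

    // Code under test: formats a name as "Last, First".
    static String displayName(String first, String last) {
        return last + ", " + first;
    }

    // Tests one concept only, the display format, and returns true on a pass.
    static boolean testDisplayNameFormat() {
        String expected = "Flintstone, Fred";
        String actual = displayName("Fred", "Flintstone");
        return expected.equals(actual);
    }

    public static void main(String[] args) {
        System.out.println(testDisplayNameFormat() ? "PASS" : "FAIL");
    }
}
```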

How to Write a Test

Before we go any further, let's look a bit deeper into how to write a unit test. We will do this by hand now to get the feel for writing tests and we'll examine how a testing framework helps us when we talk about JUnit in the next section. We'll imagine that we are writing a part of an application and go from there. We'll do this in the form of a user story, as it might be done in an Extreme Programming (XP) environment.4

In XP the developers and the customer get together to talk about what the customer wants. This is called exploration. During exploration the customer writes a series of stories that describe features that they want in the program. These stories are taken by the developers and broken up into implementation tasks and estimated. Pairs of programmers take individual tasks and implement them using TDD. We'll present a story, break it up into a few tasks, and implement some tests for the tasks just to give you an idea of the unit testing process.

The Story

We want to take as input a flat file of phone contacts and we want to sort the file alphabetically and produce an output table that can be printed.

Really, that's all. Stories in XP projects are typically very short – the suggestion is that they be written on 3 × 5 index cards.

So we can break this story up into a set of tasks. By the way, this will look suspiciously like a design exercise; it is.

The Tasks

We need a class that represents a phone contact.

We need to create a phone contact.

We need to read a data file and create a list of phone contacts. (This may look like two things, but it's really just one thing – converting a file into a list of phone contacts.)

We need to sort the phone contacts alphabetically by last name.

We need to print the sorted list.

______________

4 Newkirk, J. and R. C. Martin. Extreme Programming in Practice. (Boston, MA: Addison-Wesley, 2001.)

The Tests

First of all, we'll collapse the first two tasks above into a single test. It makes sense once we've created a phone contact class to make sure we can correctly instantiate an object; in effect we're testing the class' constructors. So let's create a test.

In our first test we'll create an instance of our phone contact object and print out the instance variables to prove it was created correctly. We have to do a little design work first. We have to figure out what the phone contact class will be called and what instance variables it will have.

A reasonable name for the class is PhoneContact, and as long as it's alright with our customer, the instance variables will be firstName, lastName, phoneNumber, and emailAddr. Oh, and they can all be String variables. It's a simple contact list. For this class we can have two constructors: a default constructor that just initializes the instance variables to empty strings, and a constructor that takes all four values as input arguments and assigns them. That's probably all we need at the moment. Here's what the test may look like:

    public class TestPhoneContact
    {
        /**
         * Default constructor for test class TestPhoneContact
         */
        public TestPhoneContact() {
        }

        public void testPhoneContactCreation() {
            String fname = "Fred";
            String lname = "Flintstone";
            String phone = "800-555-1212";
            String email = "[email protected]";

            PhoneContact t1 = new PhoneContact();
            System.out.printf("Phone Contact reference is %H\n", t1);

            PhoneContact t2 = new PhoneContact(fname, lname, phone, email);
            System.out.printf("Phone Contact:\nName = %s\nPhone = %s\nEmail = %s\n",
                              t2.getName(), t2.getPhoneNum(),
                              t2.getEmailAddr());
        }
    }

Now this test will fail to begin with because we've not created the PhoneContact class yet. That's okay. So let's do that now. The PhoneContact class will be simple, just the instance variables, the two constructors, and getter and setter methods for the variables. A few minutes later we have:

    public class PhoneContact {
        /**
         * instance variables
         */
        private String lastName;
        private String firstName;
        private String phoneNumber;
        private String emailAddr;

        /**
         * Constructors for objects of class PhoneContact
         */
        public PhoneContact() {
            lastName = "";
            firstName = "";
            phoneNumber = "";
            emailAddr = "";
        }

        public PhoneContact(String firstName, String lastName,
                            String phoneNumber, String emailAddr) {
            this.lastName = lastName;
            this.firstName = firstName;
            this.phoneNumber = phoneNumber;
            this.emailAddr = emailAddr;
        }

        /**
         * Getter and Setter methods for each of the instance variables
         */
        public String getName() {
            return this.lastName + ", " + this.firstName;
        }

        public String getLastName() {
            return this.lastName;
        }

        public String getFirstName() {
            return this.firstName;
        }

        public String getPhoneNum() {
            return this.phoneNumber;
        }

        public String getEmailAddr() {
            return this.emailAddr;
        }

        public void setLastName(String lastName) {
            this.lastName = lastName;
        }

        public void setFirstName(String firstName) {
            this.firstName = firstName;
        }

        public void setPhoneNum(String phoneNumber) {
            this.phoneNumber = phoneNumber;
        }

        public void setEmailAddr(String emailAddr) {
            this.emailAddr = emailAddr;
        }
    }

The last thing we need is a driver for the test we've just created. This will complete the scaffolding for this test environment.

    public class TestDriver
    {
        public static void main(String[] args)
        {
            TestPhoneContact t1 = new TestPhoneContact();
            t1.testPhoneContactCreation();
        }
    }

Now, when we compile and execute the TestDriver we'll get displayed on the output console something like this:

    Phone Contact reference is 3D7DC1CB
    Phone Contact:
    Name = Flintstone, Fred
    Phone = 800-555-1212
    Email = [email protected]

The next task is to read a data file and create a phone contact list. Here, before we figure out the test or the code we need to decide on some data structures.

Since the story says “flat file of phone contacts” we can just assume we're dealing with a text file where each line contains phone contact information. Say the format mirrors the PhoneContact class and is “first_name last_name phone_number email_addr” one entry per line.
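One way to turn a line of that format into its four fields is sketched below. The parser class is hypothetical, the sample data is made up (the address is a placeholder), and the sketch assumes that no field contains embedded white space.

```java
// Hypothetical example: parsing one line of the assumed flat-file format
// "first_name last_name phone_number email_addr".
final class ContactLineParser {

    // Splits a line into its four fields.
    // Returns null when the line does not contain exactly four fields.
    static String[] parseLine(String line) {
        if (line == null) {
            return null;
        }
        String[] fields = line.trim().split("\\s+");
        return fields.length == 4 ? fields : null;
    }

    public static void main(String[] args) {
        // Placeholder data for illustration only.
        String[] f = parseLine("Fred Flintstone 800-555-1212 fred@example.com");
        System.out.println(f[1] + ", " + f[0]);

        System.out.println(parseLine("Fred Flintstone") == null);  // too little data
        System.out.println(parseLine("") == null);                 // no data
    }
}
```

Returning null for a malformed line is just one design choice; throwing an exception or skipping the line with a logged warning would work as well, and each choice needs its own test.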

Next we need a list of phone contacts that we can sort later and print out. Because we want to keep the list alphabetically by last name, we can use a TreeMap Java Collections type to store all the phone contacts. Then we don't even need to sort the list because the TreeMap class keeps the list sorted for us. It also looks like we'll need another class to bring the PhoneContact objects and the list operations together. So what's the test look like?
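A short sketch shows the sorting behavior we're relying on. The class and method names are hypothetical, and plain strings stand in for PhoneContact objects so that the example stays self-contained.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical example: a TreeMap iterates over its keys in sorted order.
final class SortedContactsDemo {

    // Inserts the names in the order given and returns them in key order.
    static List<String> sortedLastNames(String... lastNames) {
        TreeMap<String, String> byLastName = new TreeMap<>();
        for (String name : lastNames) {
            byLastName.put(name, name);   // the value stands in for a PhoneContact
        }
        return new ArrayList<>(byLastName.keySet());
    }

    public static void main(String[] args) {
        // Prints [Flintstone, Rubble, Slate]
        System.out.println(sortedLastNames("Rubble", "Flintstone", "Slate"));
    }
}
```

One caveat with this design: a map holds a single value per key, so two contacts with the same last name would collide and the later one would replace the earlier one. That's worth a test case of its own.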

Well, in the interest of keeping our tests small and to adhere to the “a test does just one thing” maxim, it seems like we could use two tests after all, one to confirm that the file is there and can be opened, and one to confirm that we can create the PhoneContact list data structure. For the file opening test, it looks like we'll need a new class that represents the phone contact list. We can just stub that class out for now, creating a simple constructor and a stub of the one method that we'll need to test. That way we can write the test (which will fail because we don't have a real method yet). The file opening test looks like

    public void testFileOpen() {
        String fileName = "phoneList.txt";
        PhoneContactList pc = new PhoneContactList();
        boolean fileOK = pc.fileOpen(fileName);

        if (!fileOK) {
            System.out.println("Open Failed");
            System.exit(1);
        }
    }

which we add to the testing class we created before. In the TestDriver class above we just add the line

t1.testFileOpen();

to the main() method. Once this test fails, you can then implement the new class, and fill in the stubs that we created above. The new PhoneContactList class then looks like

    import java.util.*;
    import java.io.*;

    public class PhoneContactList
    {
        private TreeMap<String, PhoneContact> phoneList;
        private Scanner phoneFile;

        /**
         * Constructors for objects of class PhoneContactList
         */
        public PhoneContactList() {
            // start with an empty list so later additions don't hit a null reference
            phoneList = new TreeMap<String, PhoneContact>();
        }

        public PhoneContactList(PhoneContact pc)
        {
            phoneList = new TreeMap<String, PhoneContact>();
            phoneList.put(pc.getLastName(), pc);
        }

        public boolean fileOpen(String name)
        {
            try {
                phoneFile = new Scanner(new File(name));
                return true;
            } catch (FileNotFoundException e) {
                System.out.println(e.getMessage());
                return false;
            }
        }
    }

So this is how your test-design-develop process will work. Try creating the rest of the tests we listed above and finish implementing the PhoneContactList class code. Good luck.

JUnit: A Testing Framework

In the previous section, we created our own test scaffolding, and hooked our tests into it. Many development environments have the facilities to do this for you. One of the most popular for Java is the JUnit testing framework that was created by Erich Gamma and Kent Beck (see www.junit.org).

JUnit is a framework for developing unit tests for Java classes. It provides a base class called TestCase that you extend to create a series of tests for the class you are creating. JUnit contains a number of other classes, including an assertion library used for evaluating the results of individual tests, and several applications that run the tests you create. A very good FAQ for JUnit is at http://junit.sourceforge.net/doc/faq/faq.htm#overview_1.

To write a test in JUnit, you must import the framework classes and then extend the TestCase base class. A very simple test would look like this:

import junit.framework.TestCase;

public class SimpleTest extends TestCase {

public SimpleTest(String name) {

super(name);

}

public void testSimpleTest() {

LifeUniverse lu = new LifeUniverse();

int answer = lu.ultimateQuestion();

assertEquals(42, answer);

}

}

Note that the single-argument constructor is required. The assertEquals() method is one of the assertion library (junit.framework.Assert) methods which, of course, tests to see if the expected answer (the first parameter) is equal to the actual answer (the second parameter). There are many other assert*() methods. The complete list is at http://junit.sourceforge.net/javadoc/.

Because JUnit is packaged in a Java jar file, you either need to add the location of the jar file to your Java CLASSPATH environment variable, or add it to the command line when you compile the test case. For example, to compile our simple test case we would use this:

% javac -classpath $JUNIT_HOME/junit.jar SimpleTest.java

where $JUNIT_HOME is the directory where you installed the junit.jar file.

Executing a test from the command line is just as easy as compiling. There are two ways to do it. The first is to use one of the JUnit pre-packaged runner classes, which takes as its argument the name of the test class:

java -cp .:./junit.jar junit.textui.TestRunner SimpleTest

which results in

where there is a dot for every test that is run, the time the entire test suite required, and the results of the tests.

You can also execute the JUnitCore class directly, again passing the name of the test class as an argument:

java -cp .:./junit.jar org.junit.runner.JUnitCore SimpleTest

which results in

JUnit version 4.8.2

.

Time: 0.004

OK (1 test)
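The dot-per-test output comes from the runner finding each test method and invoking it in turn. JUnit 3 style runners locate public methods whose names begin with test by reflection; the sketch below imitates that idea using only the standard library. The names DotRunner, SampleTests, and run are hypothetical, and the real runners do considerably more (fixtures, timing, failure details).

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;

// Hypothetical test class: two passing tests and one failing test.
class SampleTests {
    public void testAddition() { check(2 + 2 == 4); }
    public void testConcat()   { check("ab".equals("a" + "b")); }
    public void testBroken()   { check(1 == 2); }

    private static void check(boolean ok) {
        if (!ok) throw new AssertionError("check failed");
    }
}

class DotRunner {

    // Runs every public no-argument method whose name starts with "test",
    // printing a dot per test and an F per failure. Returns the failure count.
    static int run(Class<?> testClass) {
        int runCount = 0;
        int failures = 0;
        for (Method m : testClass.getMethods()) {
            if (!m.getName().startsWith("test") || m.getParameterCount() != 0) {
                continue;   // not a test method
            }
            System.out.print(".");
            runCount++;
            try {
                // A fresh object per test keeps tests from sharing state.
                Object instance = testClass.getDeclaredConstructor().newInstance();
                m.invoke(instance);
            } catch (InvocationTargetException e) {
                // The constructor or the test method itself threw.
                System.out.print("F");
                failures++;
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("cannot run " + m.getName(), e);
            }
        }
        System.out.println();
        System.out.println(failures == 0
            ? "OK (" + runCount + " tests)"
            : "Tests run: " + runCount + ", Failures: " + failures);
        return failures;
    }

    public static void main(String[] args) {
        run(SampleTests.class);
    }
}
```

The order in which reflection returns the methods is not specified, so the position of the F in the output can vary from run to run; the counts do not.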

JUnit is included in many standard integrated development environments (IDEs). BlueJ, NetBeans, and Eclipse all have JUnit plug-ins, making the creation and running of unit test cases nearly effortless.

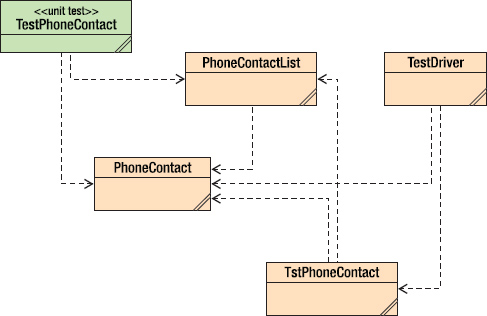

For example, using BlueJ with the code above, we can create a new Unit Test class and use it to test our PhoneContact and PhoneContactList classes. See Figure 14-1.

Figure 14-1. The PhoneContact Test UML diagrams

Our test class, TestPhoneContact, now looks like this:

public class TestPhoneContact extends junit.framework.TestCase

{

/**

* Constructor for test class TestPhoneContact; takes the test name

*/

public TestPhoneContact(String name) {

super(name);

}

/**

* Sets up the test fixture.

* Called before every test case method.

*/

protected void setUp()

{

}

/**

* Tears down the test fixture.

* Called after every test case method.

*/

protected void tearDown()

{

}

public void testPhoneContactCreation() {

String fname = "Fred";

String lname = "Flintstone";

String phone = "800-555-1212";

String email = "[email protected]";

PhoneContact pc = new PhoneContact(fname, lname, phone, email);

assertEquals(lname, pc.getLastName());

assertEquals(fname, pc.getFirstName());

assertEquals(phone, pc.getPhoneNum());

assertEquals(email, pc.getEmailAddr());

}

public void testFileOpen() {

String fileName = "phoneList.txt";

PhoneContactList pcl = new PhoneContactList();

// assertTrue() throws on failure, so no separate check or System.exit() is needed;

// the message is reported by the framework if the file cannot be opened

assertTrue("Open Failed, File Not Found", pcl.fileOpen(fileName));

}

}

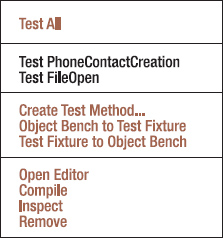

To run this set of tests in BlueJ, we select Test All from the drop-down menu shown in Figure 14-2.

Figure 14-2. The JUnit Menu - Select Test All to run the tests

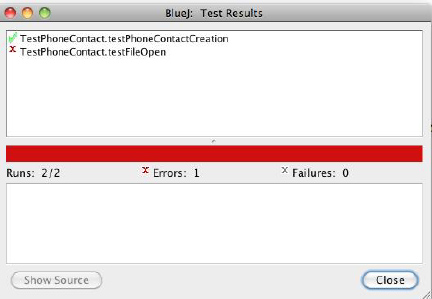

Because we don't have a phoneList.txt file created yet, we get the output shown in Figure 14-3.

Figure 14-3. JUnit Testing output

Here, we note that the testFileOpen() test has failed.
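The failure comes from the test depending on a file that happens not to exist. One common way to remove that dependency is to have the fixture create the file before the test and delete it afterward. The sketch below shows the idea without JUnit so that it runs on its own: FileFixtureSketch, its fileOpen method (a simplified stand-in for PhoneContactList.fileOpen), and the explicit calls in runTest are all hypothetical. In a JUnit TestCase, the framework would call setUp() and tearDown() around each test method for you.

```java
import java.io.File;
import java.io.FileNotFoundException;
import java.io.IOException;
import java.util.Scanner;

class FileFixtureSketch {

    private File tempFile;   // created fresh for each test

    // Stand-in for PhoneContactList.fileOpen(): true if the file can be opened.
    static boolean fileOpen(String name) {
        try (Scanner in = new Scanner(new File(name))) {
            return true;
        } catch (FileNotFoundException e) {
            return false;
        }
    }

    // Fixture setup: make sure the file the test needs really exists.
    void setUp() throws IOException {
        tempFile = File.createTempFile("phoneList", ".txt");
    }

    // Fixture teardown: remove the file so tests leave nothing behind.
    void tearDown() {
        if (tempFile != null) {
            tempFile.delete();
        }
    }

    // Mimics the setUp / test / tearDown order a framework would follow.
    static boolean runTest() {
        FileFixtureSketch fixture = new FileFixtureSketch();
        try {
            fixture.setUp();
            return fileOpen(fixture.tempFile.getPath());
        } catch (IOException e) {
            return false;   // could not even create the fixture file
        } finally {
            fixture.tearDown();   // runs whether the test passed or not
        }
    }

    public static void main(String[] args) {
        System.out.println("existing file opens: " + runTest());
        System.out.println("missing file opens:  " + fileOpen("no-such-phoneList.txt"));
    }
}
```

With the fixture in charge of the file, the test result reflects the behavior of fileOpen rather than whatever happens to be on disk when the tests run.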

Every time we make a change to our program, we can add another test to the TestPhoneContact class and re-run all the tests with a single menu selection. The testing framework makes it much easier to create individual tests and whole suites of tests that can be run after every change. This tells us right away whether a change has broken something. Very cool.

Testing Is Good

At the end of the day, unit testing is a critical part of your development process. Done carefully and correctly, it can help you remove the vast majority of your errors even before you integrate your code into the larger program. TDD, where you write tests first and then write the code that makes the tests succeed, is an effective way to catch errors in both low-level design and coding. It also lets you quickly build a regression test suite that you can use for every integration and every baseline of your program.

Conclusion

From your point of view as the developer, unit testing is the most important class of testing your program will undergo. It's the most fundamental type of testing, making sure your code meets the requirements of the design at the lowest level. Even though developers are more concerned with making sure their program works than with breaking it, developing a good unit testing mindset is critical to your development as a mature, effective programmer. Testing frameworks make this job much easier than it was in the past, so learning how your local testing framework operates and learning to write good tests are skills you should work hard at. Better that you find your own bugs than that the customer finds them.

References

Kernighan, B. W. and R. Pike. The Practice of Programming. (Boston, MA: Addison-Wesley, 1999.)

Martin, R. C. Clean Code: A Handbook of Agile Software Craftsmanship. (Upper Saddle River, NJ: Prentice-Hall, 2009.)

McConnell, S. Code Complete 2: A Practical Handbook of Software Construction. (Redmond, WA: Microsoft Press, 2004.)

Newkirk, J. and R. C. Martin. Extreme Programming in Practice. (Boston, MA: Addison-Wesley, 2001.)