7 Assessing and Improving the Development and Test Processes

This chapter describes different assessment and improvement models that relate either generally to the improvement of processes, products, or services (TQM, Kaizen, Six Sigma) or specifically to the software development or test process. It also explains four appraisal or assessment models: CMMI (Capability Maturity Model Integration), ISO/IEC 15504 (the “SPICE” model for software development), TMM (Testing Maturity Model), and TPI (Test Process Improvement); the latter two are designed specifically for the test process.

A whole range of studies and statistics have shown that many software development projects do not achieve their required objectives at all or achieve them only partly. One of the well-known publications is the Standish Group Chaos Report ([URL: Standish Group]). Over the past years, the percentage of successful projects—i.e., projects that have stayed within the estimated budget and schedule and have delivered the required functionality—has leveled at around 33%. Approximately one fifth of the projects are a total failure. The remaining and largest proportion of projects deliver their software systems too late, at considerably higher costs, and with a functionality unsatisfactory to the customer.

Chaos Report

According to the Chaos Report, the proportion of successful projects has remained at this low level over the past years. There is a large variety of different approaches intended to raise the percentage of successful projects. Some of them, for instance Total Quality Management (TQM), relate to the management of projects or the production of goods in a more general way, whereas others concentrate specifically on the improvement of software development processes. Examples are SPICE and Test Process Improvement (TPI). TPI focuses exclusively on the test process.

What all of the approaches have in common is that they are concerned with the solution of the problems exposed in the Chaos Report. As such, they aim at the following:

Improvement objectives

- Realistic project planning and successful implementation of the plans
- Transparency of project status and project progress
- Enhancing the quality of released (software) products
- Lowering of costs during development (and maintenance)
- Reducing time to market

Defect prevention and early defect detection may help to achieve or support these goals. Testing makes a substantial contribution here in that it appraises quality and enables controlling activities in case of poor quality. Early defect detection and removal lead to reduced costs and shorter development times because defects detected later in the process are far more expensive and time-consuming to remove.

Defect prevention and early defect detection

The following sections look at the different improvement approaches in some more detail.

7.1 General Techniques and Approaches

These approaches to process assessment and improvement can be applied not only to software development processes but also more generally to the development and manufacturing of products. They can also be applied in the service industry.

The following sections introduce a small selection of these approaches—namely “TQM”, “Kaizen”, and “Six Sigma”—together with their associated practices and possible application in the software development process.

7.1.1 Total Quality Management (TQM)

The International Organization for Standardization (ISO) describes Total Quality Management as “a management approach for an organization, centered on quality, based on the participation of all its members and aiming at long-term success through customer satisfaction, and benefits to all members of the organization and to society”.

As the term “total” implies, TQM seeks to realize a really comprehensive concept in which all members at all hierarchical levels in an organization are involved. TQM considers the interests of the customer, of everyone in the organization, and of the suppliers. In short, all are working in concert.

Total

Quality takes center stage, especially when it comes to customer satisfaction. Moreover, behavioral changes in the organization are supposed to improve employee satisfaction, increase productivity, lower costs, and shorten development and production cycles.

Quality

Such a concept requires active planning, development, and maintenance; in other words, it needs to be “managed”.

Management

TQM does not provide a plan, nor does it propose detailed practices; instead, it imparts a basic attitude toward quality and economic action. However, TQM does provide a number of relevant principles, which are briefly listed here:

No instructions—it’s an attitude.

- Customer orientation: Do not produce what is technically feasible but what the customer requests and desires.
- Process orientation: Software systems are developed based on a defined process that is reproducible and that can be improved. The causes of poor quality lie in an insufficient process. Quality is not a random product but the result of a planned and repeatable process.
- Primacy of quality: Quality has absolute priority. Ambiguity or inaccuracies that may lead to defects must be corrected or removed immediately before the development process is continued. “We’ll solve the problem later!” should never be heard.
- All employees accept accountability or ownership: Each employee is responsible for quality and considers it an integral part of his daily work.
- Internal customer-supplier relationship: Formal acceptance and delivery of interim products are planned during software development and not just at final delivery to the customer. This way employees develop an awareness for their responsibility toward the quality of the intermediate products. In the end, this helps to enhance the quality of the final product.
- Continuous improvement: We do not need to make revolutionary changes; small step-by-step improvements collectively implemented will bring about the desired results.
- Stabilizing improvements: During the rollout phase of changes, appropriate measures must be taken to ensure that these changes will not be forgotten again in the daily routine. This is the only way to ensure that they will have a long-term effect.
- Rational decisions: Decisions and changes must be rationalized explicitly and based on facts. As a prerequisite, this requires a concept containing continuous data collection.

A good overview of and introduction to TQM can be found in [Goetsch 02]. The rules and principles provided by TQM can easily be applied in the test process. In TQM, test managers will find a lot of useful ideas for their daily work.

Test management and TQM

Example: Applying TQM principles in VSR testing

- Customer orientation: The customer agrees with the test schedule. This involves a customer review of the test schedule as a result of which he provides suggestions stating which test topics, in his view, address important or less important aspects.
- Process orientation: Testing follows the test process described in chapter 2. The process has been adapted in part to the specific requirements of the project and is documented in a system that is easily accessible via an intranet. Each team member assumes a defined role (e.g., test manager, test designer). Role-related instruction and training plans are in place.
- Primacy of quality: Testing stops as soon as the defined test exit criteria have been met, never before. All detected flaws and defects are documented. There is no unclassified or nonassigned incident. The Change Control Board (CCB) decides on correction measures.
- All employees accept accountability or ownership: Each test team member is responsible for the quality of his own work products, from the test plan and the test schedule down to the incident report. These test work products, too, are subject to (peer) reviews and corrected, if necessary.
- Internal customer-supplier relationship: Development delivers its product to component testing. The lower test level delivers the product to the next level higher up. Deliveries are performed in a formal way and accompanied by release, delivery, and acceptance tests.
- Continuous improvement: After completion of each test cycle, the test team meets to perform a postmortem analysis. Weak points that can be improved in the test process, in the application of test methods, or in the test schedule are documented. The most beneficial improvement actions are identified and implemented in the next test cycle (if required, in several steps).
- Stabilizing improvements: Implemented changes are subject to particular monitoring. It needs to be verified whether changes have the intended results and if people abide by them; i.e., whether everybody concerned has changed their working methods accordingly.
- Rational decisions: The test management tool provides an abundance of statistics and metrics. A small number of well-understood metrics (e.g., requirements coverage new/changed/stable, number of tests passed/failed/blocked, number of defects low/medium/high) are analyzed after each test cycle. Decisions on test completion, product release, or test schedule changes are made based on continuous data capture during each cycle and after actual data has been compared with the test exit criteria.
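The comparison of actual cycle data with the test exit criteria can be illustrated with a small sketch. The class `CycleMetrics`, the function `unmet_exit_criteria`, and all threshold values are hypothetical and chosen only for illustration; a real project would take its criteria from the test plan and its figures from the test management tool.

```python
from dataclasses import dataclass


@dataclass
class CycleMetrics:
    """Figures captured by the test management tool for one test cycle."""
    requirements_total: int
    requirements_covered: int
    tests_passed: int
    tests_failed: int
    tests_blocked: int
    open_high_defects: int


# Hypothetical exit criteria; real thresholds come from the project's test plan.
MIN_REQUIREMENTS_COVERAGE = 0.95
MIN_PASS_RATE = 0.90
MAX_OPEN_HIGH_DEFECTS = 0


def unmet_exit_criteria(m: CycleMetrics) -> list[str]:
    """Return the names of all exit criteria that the cycle has not met."""
    planned = m.tests_passed + m.tests_failed + m.tests_blocked
    coverage = (m.requirements_covered / m.requirements_total
                if m.requirements_total else 0.0)
    pass_rate = m.tests_passed / planned if planned else 0.0

    unmet = []
    if coverage < MIN_REQUIREMENTS_COVERAGE:
        unmet.append("requirements coverage")
    if pass_rate < MIN_PASS_RATE:
        unmet.append("pass rate")
    if m.open_high_defects > MAX_OPEN_HIGH_DEFECTS:
        unmet.append("open high-severity defects")
    return unmet


cycle = CycleMetrics(requirements_total=200, requirements_covered=194,
                     tests_passed=460, tests_failed=25, tests_blocked=15,
                     open_high_defects=2)
print(unmet_exit_criteria(cycle))  # an empty list would mean testing may stop
```

Keeping the thresholds in one place makes the decision traceable: anyone can see which criterion blocked test completion in a given cycle.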

7.1.2 Kaizen

Kaizen is a Japanese concept and a compound of Kai (change) and Zen (for the better). Kaizen means “continuous improvement”. The basic idea is this: As soon as a system or process has been implemented, it begins to degenerate if it is not permanently maintained and improved.

Continuous improvement

As in TQM, Kaizen involves all employees. Improvements are implemented through gradual, stepwise perfection and optimization. The goal of both management approaches is to have employees increasingly identify with the organization and to give it a continuous competitive edge. The “Continuous Improvement Process” (CIP) may be considered an essential concept of Kaizen¹.

Everyone is involved.

The foundations of Kaizen are as follows:

Foundations of Kaizen

- Intensive use and optimization of the employee suggestion system²
- Appreciation and regard for employees’ striving for improvement
- Established, small group discussion circles addressing defects and improvement suggestions
- “Just in time” production to eliminate wasted effort (overproduction or excess inventory)
- 5 Ss process for the improvement of workplaces (see below)
- “Total Productive Maintenance” (TPM) for maintenance and servicing of all means of production

The 5 Ss process serves as a basis for a clean, safe, and standardized working environment in the organization. The 5 Ss stand for the following five Japanese words:

5 S’s process

- Seiri – tidiness; old and useless items have no place at the workplace.
- Seiton – orderliness; “everything” has its place for quick retrieval and storage.
- Seiso – cleanliness; keep the workplace nice and tidy at all times.
- Seiketsu – standardization of all practices and processes.
- Shitsuke – discipline; all activities are performed in a disciplined way.

The following organizational key principles are based on Kaizen:

Principles

1. Every day, some improvement must be made somewhere in the organization.

2. The improvement strategy depends on requirements and customer satisfaction.

3. Quality is always more important than profit.

4. Coworkers are encouraged to point out problems and to make suggestions for their removal.

5. Problems are solved systematically and collaboratively in groups made up of people coming from different functional areas.

6. Process-oriented thinking is a prerequisite for continuous improvement.

These principles and concepts are very similar to TQM but show a strong Japanese influence, which might make it difficult to apply them fully outside Japan or Asia. Kaizen focuses more on the manufacturing of goods than on software development; nevertheless, it offers enough ideas and suggestions that are useful to the test manager, such as the suggestion system or the employee appreciation program. More details on Kaizen can be found in [Imai 86].

Test management and Kaizen

7.1.3 Six Sigma

Six Sigma is another approach or framework for process improvement, using data and statistical analysis to identify problems and improvement opportunities. The objective is to produce faultless products with faultless processes.

Statistical analysis

The term “Six Sigma” is derived from statistics and refers to the standard deviation of a statistical distribution. The function of the Gaussian distribution is specified by two parameters: the mean and the standard deviation. A 3×Sigma standard deviation, for instance, means that the process in question is approximately 93.3% defect free; in other words, we get around 66,800 defects out of 1 million opportunities. The 6×Sigma value is only 3.4 defects per 1 million units, which means almost zero defects. Six Sigma thus signifies a systematic reduction of deviations until the process reaches the “ideal” state of being almost defect free.

σ (Greek sigma) stands for “standard deviation”
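The defect rates quoted for 3×Sigma and 6×Sigma can be reproduced with a few lines of Python. The sketch below is not taken from the text; it assumes the conventional Six Sigma long-term shift of 1.5 standard deviations, which is what the figures of roughly 66,800 and 3.4 defects per million correspond to. The function name `defects_per_million` is chosen for illustration only.

```python
import math

# Assumption: the customary long-term process drift of 1.5 standard deviations.
SIGMA_SHIFT = 1.5


def defects_per_million(sigma_level: float, shift: float = SIGMA_SHIFT) -> float:
    """Defects per million opportunities for a given sigma level.

    Computes the one-sided tail of the standard normal distribution
    beyond (sigma_level - shift) and scales it to one million opportunities.
    """
    z = sigma_level - shift
    # 0.5 * erfc(z / sqrt(2)) is the upper tail probability P(Z > z).
    tail = 0.5 * math.erfc(z / math.sqrt(2))
    return tail * 1_000_000


for level in (3, 4, 5, 6):
    dpmo = defects_per_million(level)
    print(f"{level} sigma: {dpmo:10.1f} defects per million, "
          f"{100 - dpmo / 10_000:.5f}% defect free")
```

With the shift set to 0, the same function gives the plain one-sided tail of the normal distribution, which yields far smaller defect rates than the ones commonly quoted for Six Sigma.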

The Six Sigma key statements and concepts are as follows:

Basic concepts

- Quality decides: Customer wishes and customer satisfaction are of paramount importance.
- Avoid flaws: No product release that does not fulfill customer expectations.
- Ensure process maturity: High-grade goods can only be produced with high-quality processes.
- Keep variation small: Deliver constant quality to the customer. What the customer “sees and feels” is important.
- Continuous workflow: A consistent and predictable process guarantees customer product satisfaction.
- Orientation toward Six Sigma: Customer needs are satisfied and process performance is improved.

Six Sigma practices are based on methods such as DMAIC, DMADV, and DFSS. DMAIC stands for “define, measure, analyze, improve, control” and is used for existing processes, describing a cycle in which the individual steps are to be performed. DMADV is an acronym for “define, measure, analyze, design, verify” and is more or less the equivalent of DMAIC but is used to create new product designs or process designs, as in software development. In essence, DMADV uses Quality Function Deployment (QFD), which systematically maps customer requirements into the terminology and (implementation) possibilities of developers, testers, and (quality) managers. In doing so, the method looks at one or more possible solutions to determine and quantify their suitability. DFSS is short for “design for Six Sigma” and is supposed to ensure that new processes satisfy Six Sigma requirements right from the beginning.

DMAIC, DMADV, and DFSS

Performing Six Sigma projects in an organization requires training. The training levels are usually described through the Japanese martial arts belt system:

Six Sigma training levels

- Master Black Belt: responsible for project consulting and training
- Black Belt: project leader of large and complex projects
- Green Belt: project leader of smaller projects
- Yellow Belt: has basic Six Sigma knowledge

Six Sigma shows many parallels with TQM and Kaizen, too. The main difference is freedom from defects, proof of which is provided by statistical data collections. Certainly, one of the test manager’s goals is to achieve freedom from defects in the systems he tests. To get there he has to aim high. However, in no other area of software development is there so much sound data available for statistical analysis as in software testing, which makes statistical analysis and Six Sigma definitely an approach well worth implementing. On the other hand, we need comprehensive and above all comparable data to be able to apply Six Sigma statistical evaluation. In most cases, direct comparison of data from different projects is not that easy; the basic Six Sigma principles, however, can be applied to the test process. Detailed information relating to Six Sigma and corresponding tool support can be found in [John 06].

Test management and Six Sigma

7.2 Improving the Software Development Process

The following sections address process assessment, improvement methods, and techniques specific to software development.

Chapter 3 introduced different software development processes. Each of the different process models defines a specific approach to software development that can be implemented in projects in many different ways. How well the approaches are implemented can be assessed; the result is referred to as the maturity level of the (“practiced”) process.

The maturity level refers to the activities or processes defined in the development model compared to so-called “best practices” and their practical implementation. Best practices refer to activities that have, over many years, proven to be successful. The more accurately processes are defined in the development model and the higher the degree of completeness and accuracy of their implementation, the higher is their maturity level.

Best practices

A process’s level of maturity depends on the accuracy with which it is described in the development model and the degree of completeness and accuracy with which it is implemented and performed. The degree of maturity is often specified in maturity levels defining a logical sequence of improvement steps.

We do not wish to place any particular emphasis here on the fact that maturity evaluations are often performed simply to prove maturity “to the outside world” for marketing purposes; instead, we would like the concept to be seen as serving the purpose of “improving the inner processes and procedures” that carry a project through its life cycle.

Higher maturity levels comprise lower ones; i.e., it is useful to adopt a step-by-step approach. For example, level 2 is reached if the software development process is described in more detail compared to level 1 and if it has been implemented accordingly. Process-related planning, monitoring, and control are important factors in the assessment of process maturity. Increasing maturity levels mean that plan dates and the achievement of cost and quality goals can be predicted with increasing accuracy.

Maturity level

To determine maturity levels, “assessments”³ are performed. During an assessment, actual processes are compared with the requirements of the assessment models⁴, improvement potentials are identified, and an appropriate maturity level rating is assigned. The assessment uncovers strengths and weaknesses in the software development process. Together with the identified improvement potentials and the comparison of the assessment results with the target maturity profile, they form the basis for the planning and implementation of the process improvement actions.

Assessments

Assessment and maturity models propose some concrete measures for this purpose. In recent years, several such models for the evaluation of software development processes have been published. All of them provide guidelines or practices by which an organization or, to be more precise, the processes and procedures of projects in an organization can be investigated, evaluated, and subsequently improved. Thus, these models are management tools for process optimization in an organization.

The following sections describe two well-known assessment models currently adopted by many industrial organizations: CMMI and ISO/IEC 15504 (SPICE).

7.2.1 Capability Maturity Model Integration (CMMI)

Capability Maturity Model Integration (CMMI) Version 1.1 [URL: CMMI] was developed by the Software Engineering Institute (SEI) of Carnegie Mellon University in Pittsburgh and released in 2002, superseding the Capability Maturity Model (CMM). CMM had been developed by the SEI in 1993⁵ and had gone through several updates.

The basic idea of the CMMI model and any other assessment model is that improvements in software development processes will inevitably improve the quality of the developed system, lead to more accurate schedule and resource planning, and make for better implementation of the plans.

In CMMI⁶, four disciplines are defined:

Disciplines

- Systems Engineering (CMMI-SE)
- Software Development (CMMI-SW)
- Integrated Process and Product Development (CMMI-IPPD)
- Supplier Sourcing (CMMI-SS)

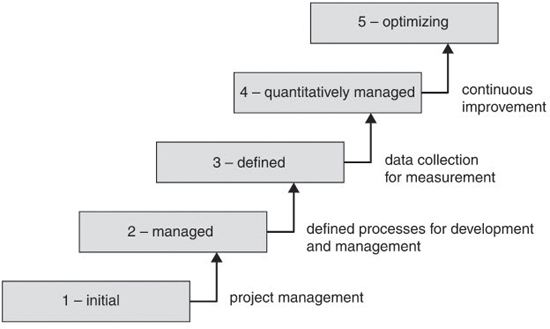

CMMI models have two different representations: staged and continuous [URL: CMMI-TR]. The staged representation consists of five maturity levels, whereas in the continuous representation, capability levels are assigned to individual process areas (see the following list). Each maturity level characterizes the whole of the organization. The following list briefly describes the five maturity levels, which belong to a staged representation (see also figure 7-1):

Staged and continuous representation

Five maturity levels

- Level 1: Initial – Processes are not defined or insufficiently defined. Development processes are ad hoc and chaotic.
- Level 2: Managed – Essential management processes are established and applied in projects, though in different ways or degrees.
- Level 3: Defined – Standard processes are introduced throughout the organization.
- Level 4: Quantitatively managed – Decisions on improvements are made based on quantitative measures. Performance measures are used intensively throughout the organization.
- Level 5: Optimizing – This level is characterized by systematic and continuous process improvement. Assessment of success or failure is based on quantitative statistics.

Figure 7–1 CMMI maturity levels

Another important structural element in CMMI, besides the maturity level components, consists of the process areas that cover or combine all requirements relating to one particular area. For each area, a set of goals has been defined, distinguishing between specific goals for each individual area and generic goals describing all the activities that need to be performed in order to achieve long-term, efficient implementation of the specific goals (process institutionalization).

Specific and generic goals

In CMMI, the process areas in table 7-1 are assigned to the four higher maturity levels ([URL: CMMI V1.2 Model], page 44).

Process areas

Table 7–1 Maturity levels and process areas

| Level | Process areas |
| --- | --- |
| 2 – Managed | Requirements Management |
| | Project Planning |
| | Project Monitoring and Control |
| | Supplier Agreement Management |
| | Measurement and Analysis |
| | Process and Product Quality Assurance |
| | Configuration Management |
| 3 – Defined | Requirements Development |
| | Technical Solution |
| | Product Integration |
| | Verification |
| | Validation |
| | Organizational Process Focus |
| | Organizational Process Definition |
| | Organizational Training |
| | Integrated Project Management |
| | Risk Management |
| | Decision Analysis and Resolution |
| 4 – Quantitatively Managed | Organizational Process Performance |
| | Quantitative Project Management |
| 5 – Optimizing | Organizational Innovation and Deployment |
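The way the staged representation builds on table 7-1 can be sketched in code. The example below is a deliberate simplification: it treats each process area as simply “satisfied” or not, which is an assumption made for illustration, whereas a real appraisal rates the specific and generic goals of each area. The function name `maturity_level` is hypothetical.

```python
# Process areas per maturity level, transcribed from table 7-1.
PROCESS_AREAS = {
    2: ["Requirements Management", "Project Planning",
        "Project Monitoring and Control", "Supplier Agreement Management",
        "Measurement and Analysis", "Process and Product Quality Assurance",
        "Configuration Management"],
    3: ["Requirements Development", "Technical Solution", "Product Integration",
        "Verification", "Validation", "Organizational Process Focus",
        "Organizational Process Definition", "Organizational Training",
        "Integrated Project Management", "Risk Management",
        "Decision Analysis and Resolution"],
    4: ["Organizational Process Performance", "Quantitative Project Management"],
    5: ["Organizational Innovation and Deployment"],
}


def maturity_level(satisfied: set[str]) -> int:
    """Highest level whose process areas, and those of all lower levels, are satisfied."""
    level = 1  # level 1 (initial) has no process areas assigned
    for candidate in sorted(PROCESS_AREAS):
        if not all(area in satisfied for area in PROCESS_AREAS[candidate]):
            break  # higher levels comprise lower ones, so stop at the first gap
        level = candidate
    return level


# Hypothetical organization: everything on levels 2 to 4 except Risk Management.
satisfied = set(PROCESS_AREAS[2] + PROCESS_AREAS[3] + PROCESS_AREAS[4])
satisfied.discard("Risk Management")
print(maturity_level(satisfied))  # level 3 is not reached, so level 4 cannot count
```

The early `break` mirrors the step-by-step character of the staged model: a single unsatisfied process area on level 3 keeps the organization on level 2, no matter how well the level 4 areas are implemented.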

CMMI comes with two types of representation: staged and continuous. Staged representation was already used in CMM (see figure 7-1); continuous representation assigns the process areas listed in table 7-1 to the following four categories:

Continuous representation

- Process management
- Project management
- Engineering
- Support

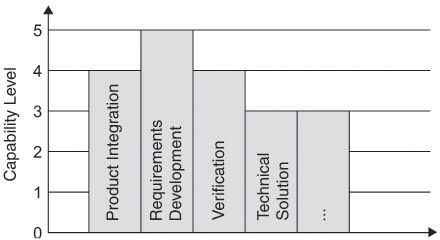

The continuous representation specifies five generic goals indicating the degree of institutionalization of a particular process. The path to complete implementation is divided into six capability levels (0 = incomplete, 1 = performed, 2 = managed, 3 = defined, 4 = quantitatively managed, and 5 = optimizing). The capability level refers to one process area only. This is in contrast to the maturity level in the staged model, which covers a level-specific set of process areas representing the overall level of maturity of the organizational unit. Continuous representation allows a much more specific description and evaluation of the respective process or process area (see, for example, figure 7-2).

Generic goals and capability levels

Figure 7–2 Capability grade of individual process areas
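In the continuous representation, an assessment result is a profile of capability levels, one per process area, which can be compared with a target profile to find improvement potential. The following sketch illustrates this comparison; the function `capability_gaps` and the sample profiles are hypothetical and not part of CMMI itself.

```python
# Capability levels of the continuous representation, as listed in the text.
CAPABILITY_LEVELS = {
    0: "incomplete", 1: "performed", 2: "managed",
    3: "defined", 4: "quantitatively managed", 5: "optimizing",
}


def capability_gaps(assessed: dict[str, int], target: dict[str, int]) -> dict[str, int]:
    """Return, per process area, the number of capability levels still missing."""
    gaps = {}
    for area, wanted in target.items():
        actual = assessed.get(area, 0)  # areas not assessed count as incomplete
        if actual < wanted:
            gaps[area] = wanted - actual
    return gaps


# Hypothetical assessment result and target profile.
assessed = {"Requirements Management": 3, "Verification": 2, "Validation": 1}
target = {"Requirements Management": 3, "Verification": 3, "Validation": 3}

for area, gap in capability_gaps(assessed, target).items():
    level = assessed.get(area, 0)
    print(f"{area}: level {level} ({CAPABILITY_LEVELS[level]}), {gap} below target")
```

Unlike a single maturity level, such a profile shows directly which process areas need attention, which is the kind of specific evaluation the continuous representation is meant to allow.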

Testing in CMMI

The process areas verification and validation are of utmost importance to the test manager. In the staged model, both areas belong to maturity level 3 (defined), whereas in the continuous model, they are part of the Engineering category. Both process areas will be described in the following sections. Further information on CMMI is provided by [Chrissis 06] and Internet sources (e.g., [URL: CMMI], [URL: CMMI V1.2 Model]).

Process areas: verification and validation

Verification

“CMMI requirements regarding verification are of a relatively general nature, requiring verification to be prepared, that work products are selected for verification, peer reviews are conducted (...) and, generally, verification be executed” (translation from the German book [Kneuper 06], page 56).

To verify the implementation of specifications, the following methods are mentioned: (peer) review, test, and (static) analysis.⁷

Validation

Validation is the process of verifying if customer requirements have been implemented and must be seen in close connection with the requirements development process area (maturity level 3, Engineering discipline).

“The task is to verify constantly and repeatedly if the defined results and requirements actually achieve the intended benefit. For this reason validation is a step within the requirements development process (...).

This also holds true vice versa: If requirements development and verification have gone well, there will not be much left to do for validation except for the user to accept the system” (translation from the German book [Kneuper 06], page 58/59).

CMMI requires that validation be prepared and performed; however, it does not specify any concrete and applicable (checking or verification) methods.

Test manager and CMMI

The test manager will not find any more detailed descriptions or support than what he already knows about the fundamental test process (see chapter 2). For instance, he is told what activities are necessary to set up a test environment.⁸ What becomes clear, however, is that, being a part of the software development process, the test process also profits if the development process has a high maturity level.

7.2.2 ISO/IEC 15504 (SPICE)

In 1993, the Software Process Improvement and Capability dEtermination (SPICE [URL: SPICE]) project was launched to unify available evaluation methods such as CMM, Trillium⁹, Software Technology Diagnostic¹⁰, and Bootstrap¹¹ and to define an international standard.

Besides other models, the SPICE project group used CMM as a basis to develop a fairly similar approach to the one used in CMMI. The project led to the ISO/IEC 15504 standard, which was published as a technical report in 1998. Currently, parts 1 to 5 are published and further parts are under preparation (see [URL: ISO]).

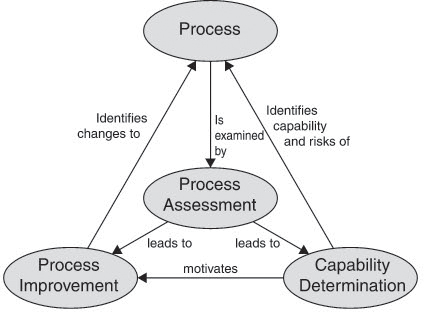

In contrast to CMMI, the SPICE model consists of continuous representation only and identifies individual process capability levels. To make it possible to assess the different processes used in different business sectors, variants such as “Automotive SPICE”, “SPICE4SPACE”, “MEDISPICE”, etc. have been developed. The standard forms the framework for a consistent performance evaluation of the “practiced” processes applied in an organizational unit, comprising process evaluation, process improvement, and performance evaluation (see figure 7-3).

Figure 7–3 Correlation between process, process evaluation, process improvement, and performance evaluation (Figure from http://www.sqi.gu.edu.au/spice/)

The model defines six capability levels:

ISO/IEC 15504 Capability level

The process is

- incomplete (level 0),
- performed (level 1),
- managed (level 2),
- established (level 3),
- predictable (level 4), or
- optimizing (level 5).

One of the criticisms of the CMM maturity level model was that the step from level 1 (initial) to level 2 (managed) was considered too big. However, the maturity levels in CMM relate to all the process areas of that maturity level. This criticism was taken up in SPICE and CMMI in the continuous representation, and an additional lower level was defined. The upper four capability levels are comparable to those in CMM and the continuous representation in CMMI. The two lower levels are briefly characterized here:

One additional level

- “Level 0; Not-Performed: There is general failure to perform the base practices in the process. There are no easily identifiable work products or outputs of the process.
- Level 1; Performed-Informally: Base practices of the process are generally performed. The performance of these base practices may not be rigorously planned and tracked. Performance depends on individual knowledge and effort. Work products of the process testify to the performance. Individuals within the organization recognize that an action should be performed, and there is general agreement that this action is performed as and when required. There are identifiable work products for the process.” ([URL: SPICE doc] Part 2)

Processes are categorized into process categories and organized into nine process groups in [ISO 15504] to gain more clarity within the reference model:

Five process areas

- Primary (PLC)
  - Acquisition (ACQ)
  - Supply (SPL)
  - Engineering (ENG)
  - Operation (OPE)
- Organization & Management (OLC)
  - Management (MAN)
  - Process Improvement (PIM)
  - Resource and Infrastructure (RIN)
  - Reuse (REU)
- Support (SLC)
  - Support (SUP)

Each of the process groups is divided even further, amounting to several hundred base processes or base practices. Similarly to CMMI, there are also generic practices that are generally applicable and not assigned to individual processes. Examples are the planning of a process or the training of staff members; such practices are applicable to all processes.

Base processes

Software construction (ENG.6), Software testing (ENG.8), and System testing (ENG.10) belong to the Engineering (ENG) process category and are of particular interest¹² to the test manager. The same applies to Verification (SUP.2) and Validation (SUP.3), which belong to the Support (SUP) category.

The standard specifies that testing should be performed by persons or teams that are independent from developers. Planning and test preparation are to be started in parallel with the analysis and design phase. SPICE in particular requires full traceability of requirements to test cases already at level 1. Both test processes, ENG.8 and ENG.10, are discussed in the following sections. Internet sources (e.g., [URL: SPICE] and [URL: ISO]) provide further information on ISO/IEC 15504.

Software Test (ENG.8)

For software testing, the model lists the following activities (practices):

- Develop tests for integrated software product
- Test integrated software product
- Regression test integrated software

Each activity is accompanied by a brief explanation. During test specification for the integrated software system, the different processes (requirements specification, design specification, implementation) are stated and a test specification must be created in parallel.

System Test (ENG.10)

The same activities are listed as in software test (ENG.8), with the addition of

- Confirm system readiness.

Similar to software test, each activity is accompanied by a brief explanation. ENG.10, too, explicitly points out that preparatory tasks are to be performed in parallel to development.

Test Manager and SPICE

The SPICE standard provides test managers with relevant task descriptions and lists and explains all the documents to be developed by them or their test teams. The explanations are not very detailed, but it becomes clear that a structured development process benefits the test process.

7.2.3 Comparing CMMI with SPICE

With the replacement of CMM by CMMI, the differences from ISO/IEC 15504 have become smaller. This is not surprising, as it was one of the SPICE project’s objectives to harmonize the different evaluation models. The close correlation between the two models is emphasized by the fact that CMMI can be used as a reference model in an ISO/IEC 15504 assessment. Nevertheless, both models contain issues that are not treated in the other model.

From the test manager’s point of view, particulars relating to the test process are too general and too imprecise to provide him with concrete assistance in his daily work.

7.3 Evaluation of Test Processes

Since CMMI and ISO/IEC 15504 do not cover the test process sufficiently, evaluation and improvement models have been developed that focus exclusively on the test process. Two such models are introduced in the following section, the “Testing Maturity Model” (TMM) and the “Test Process Improvement” (TPI) model.

7.3.1 Testing Maturity Model (TMM)™

The Testing Maturity Model was developed by the Illinois Institute of Technology in Chicago in 1996 ([Burnstein 96]), using as one of its bases the Capability Maturity Model (CMM). In analogy to CMM, TMM uses the concept of maturity models for process evaluation and improvement.

CMM as basis

TMM puts particular focus on testing as a process to be evaluated and improved. Improvement of the process is supposed to achieve the following objectives (according to [Burnstein 96], page 9):

- smarter testers
- higher quality software
- the ability to meet budget and scheduling goals
- improved planning
- the ability to meet quantifiable testing goals

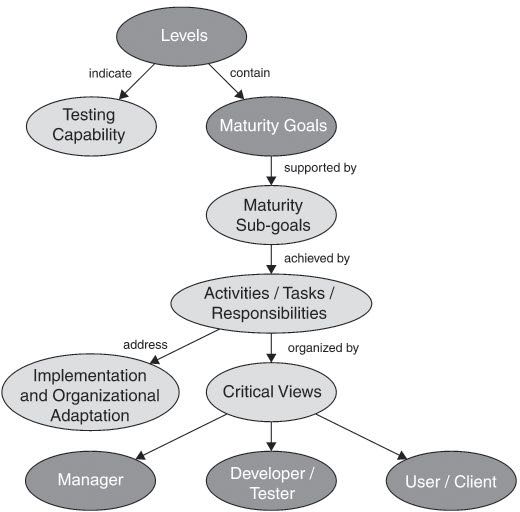

Oriented toward the CMM concept, TMM consists of five identically structured maturity levels containing the following parts (see figure 7-4):

- Maturity goals are defined for each level except level 1.
- Maturity sub-goals are concretely specified and provide information relating to the scope, range, constraints, and performance of process evaluation and improvement activities and tasks.
- Activities/tasks and responsibilities are described in detail and must be performed to reach the respective maturity level. Three groups of people are involved: managers, developers and testers, and users and customers.

Figure 7–4 Internal TMM level structure

Table 7-2 shows the structure and processes of the five TMM maturity levels.

Table 7–2 TMM maturity levels and process areas

| Levels | Process Areas |
|---|---|
| 1 – Initial | No processes identified |
| 2 – Phase Definition | Test Policy and Goals |
| 3 – Integration | Test Organization |
| 4 – Management and Measurement | Peer Reviews |
| 5 – Optimization | Defect Prevention |

Following [van Veenendaal 02], the individual levels are described in the following paragraphs:

At level 1, testing is a chaotic, undefined process and considered to be part of debugging. The objective of testing at this level is to show that no serious anomalies occur during software execution. Software quality evaluation and defect-related risk assessment do not take place, and software products are released with poor quality. Within the test process, there is a lack of resources, support tools, and qualified testers.

1-Initial

At level 2, the test process is seen as a defined process clearly separated from debugging. Test plans are established and contain a definition of the test strategy. Test techniques are applied to derive and select test cases from requirements specifications. However, test activities still start relatively late—e.g., parallel to the component specification or coding phase. The main objective of testing is to verify that the specified requirements are satisfied.

2-Phase definition

At level 3, the test process is fully integrated in the software development life cycle. Test planning is performed at an early stage. Test strategy is based on fully documented requirements and determined by risk considerations. Testing focuses on invalid inputs and failure situations. Reviews are performed, although not yet in a consistent or formal way and not yet throughout the entire development life cycle.

3-Integration

A test organization exists, as well as a specific test training program. Testers are perceived as an independent, professional group.

At level 4, testing is a comprehensively defined and measurable process. Individual test activities are well founded. Document reviews are systematically performed throughout the entire software development life cycle using agreed-upon selection criteria. Reviews are considered to be an important supplement to testing. Software products are evaluated using quality criteria such as reliability, usability, and maintainability. Test cases are gathered, stored, and managed in a central database for later reuse and regression testing. Test measurements provide information regarding test process and software product quality. Testing is perceived as evaluation across the entire development process and includes reviews.

4-Management and measurement

At the highest level, level 5, testing is now a completely defined process. Costs and test effectiveness are controllable. Test techniques are optimized and test process improvement is continuously pursued. Defect prevention and quality control are part of the test process.

5-Optimization, defect prevention, and quality control

A documented procedure is in place for the selection and evaluation of test tools. All test activities are as much tool supported as possible. Early defect prevention is the main objective of the test process. Testing has a positive impact on the entire development life cycle.

Maturity Goals and Maturity Sub-goals

To convey a more detailed idea of maturity goals and sub-goals, a sample selection is described here.

The organization has set up a group responsible for the development of the test policy and test objectives. It enjoys full management support and has been provided with sufficient funds. The committee defines and documents goals for testing and debugging and communicates them to all project managers and developers. The test objectives are reflected in the test schedule.

Level 2 test policy and test objectives

A group for test planning has been established. A test schedule template has been developed and distributed to all project managers and developers. Basic planning tools have been evaluated, recommended, and purchased.

Level 2 test planning

A company-wide test technology group has been set up to develop, evaluate, and recommend a set of basic test techniques and methods (e.g., individual black box and white box test techniques and requirements tracing as well as making a distinction between the different test levels: module test, integration test, system test, and acceptance test). The issue of adequate tool support has also been addressed by the group.

Level 2 test techniques and test methods

A company-wide test group has been built up with the necessary management support. Test process roles and duties are defined. Well-trained and motivated members of staff have been recruited to join the test group. The communication channels used by the test group allow direct involvement of users and customers in the test process. User wishes, worries, and requirements are gathered, documented, and incorporated in the test process.

Level 3 test organization

Management has set up a training program, taking into consideration training objectives and plans. A training group has been established in the organization, equipped with all necessary tools, premises, and materials.

Level 3 test training program

The organization has defined mechanisms and objectives for test process control and monitoring. Principal process-related measurements are defined and documented. In case of significant deviations from the test plan, correction measures and contingency plans have been developed and documented.

Level 3 monitoring and control

Maturity levels in CMM and TMM

TMM is intended to complement CMM (or CMMI). The maturity levels of both models correspond to a large extent. Since the test process is considered to constitute part of the software development process, TMM process areas (maturity goals) closely correlate to the CMM¹³ process areas (key process areas).

An overview of the corresponding levels of the two evaluation models is shown in table 7-3 ([van Veenendaal 02]).

Table 7–3 Process areas and maturity levels in TMM and CMM

| TMM | CMM | Process Areas |
|---|---|---|
| 2 | 2 | Requirements Management |
| 3 | 2 | Software Project Tracking and Oversight |
| 3 | 3 | Organizational Process Focus |
| 4 | 3 | Intergroup Coordination |
| 4 | 4 | Quantitative Process Management |
| 5 | 5 | Defect Prevention |

TMM Assessment Model (TMM-AM)

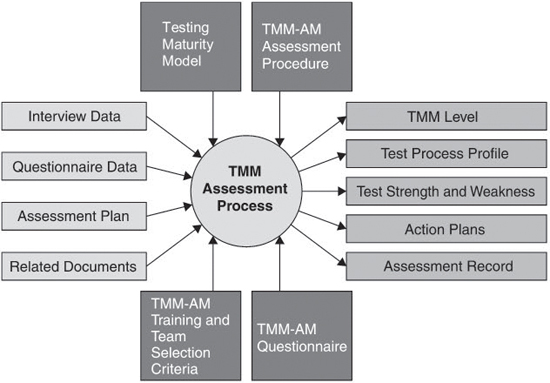

TMM-AM is intended to help organizations evaluate and improve their testing practices. Several input documents are required as an evaluation basis in a TMM assessment. Apart from the testing maturity model, the following additional elements are used in the assessment:

- Assessment procedure
- Team training and selection criteria

Figure 7–5 TMM-AM: Inputs, components and results

After the assessment, five outputs or results are available (maturity level, test process profile, test strengths and weaknesses, action plan, and assessment report, see figure 7-5). A more detailed description of the Testing Maturity Model can be found in [Burnstein 03].

7.3.2 Test Process Improvement® (TPI)

The Test Process Improvement model is based on Sogeti Netherlands B.V.’s¹⁴ many years of experience in the field of testing ([URL: Sogeti]).

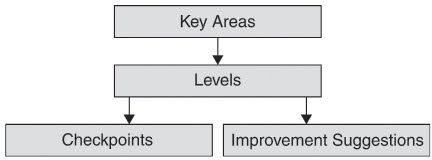

The model serves to assess the maturity of the test processes within an organization and supports stepwise process improvement (see figure 7-6).

Since testing comprises a whole range of tasks and activities that need to be evaluated, TPI has defined 20 key areas. One key area, for example, addresses important aspects related to the use of tools.

Twenty key areas and corresponding level

Figure 7–6 Main elements of the TPI model

To evaluate the test process, each of the 20 key areas is assigned a particular level. On average there are three levels per key area. So-called checkpoints are used to determine the strengths and weaknesses of a test process. Levels are defined in such a way that improvements from a lower to a higher level have a measurable impact on testing time, required budget, and/or resulting quality. At each level, improvement suggestions are given to help reach the next higher level. Figure 7-6 illustrates the relationship between the main elements of the TPI model.

Similar to SPICE, the TPI has been adapted to meet the needs of different business sectors. “TPI Automotive” covers 21 key areas with a slight bias toward application in the automotive industry. This model variant is suitable in cases where there is a close relationship between a customer and several suppliers, requiring the customer to be the central integrator of different hardware and software components coming from different sources.

Key Areas, Levels, Checkpoints

This section lists the model’s key areas and describes three of them in more detail: “Test strategy,” “Life cycle model,” “Moment of involvement,” “Estimating and planning,” “Test specification techniques,” “Static test techniques,” “Metrics,” “Test tools,” “Test environment,” “Office environment,” “Commitment and motivation,” “Test functions and training,” “Scope of methodology,” “Communication,” “Reporting,” “Defect management,” “Testware management,” “Test process management,” “Evaluation,” and “Low-level tests” (TPI Automotive covers the additional key area “Integration testing”).

TPI key areas

“The test strategy has to be focused on detecting the most important defects as early and as cheaply as possible. It defines which requirements and (quality) risks are covered by what tests. The better each test level defines its own strategy and the more the different test level strategies are adjusted to each other, the higher the quality of the overall test strategy” ([Koomen 99], page 35). In order to reach level A, level A must also be achieved in the key areas “Test specification techniques” (informal techniques) and “Commitment and motivation” (assignment of budget and time). Otherwise, the Test strategy will not be able to distinguish between low-level and high-level tests and resource distribution will be insufficient.

Key area Test strategy

“Although the actual execution of the test normally begins after realization of the software, the test process must and can start much earlier. An earlier involvement of testing in the system development path helps to find defects as soon and as easily as possible and even to prevent errors. A better adjustment between the different tests can be done and the period of time that testing is on the critical path of the project can be kept as short as possible” ([Koomen 99], page 36). Here, a dependency exists on the key area “Life-cycle model”. The phases planning and preparation are to be differentiated.

Key area Moment of involvement

“The test staff need rooms, desks, chairs, PCs, word processing facilities, printers, telephones, and so on. A good and timely organization of the Office environment has a positive influence on the motivation of the test staff, on communication inside and outside of the team, and on the efficiency of the work” ([Koomen 99], page 38).

Key area Office environment

The 20 key areas are organized in levels. Checkpoints are used for the evaluation and assignment of key areas to a particular level.

Levels and checkpoints

For the key area “Test strategy”, four levels (A, B, C, and D) have been defined. At the lowest level (A), four checkpoints must be fulfilled:

Key area Test strategy, lowest-level Checkpoints

- A1. “A motivated consideration of the product risks takes place for which knowledge of the system, its use, and its operational management is required.
- A2. There is a differentiation in test depth, depending on the risks and, if present, depending on the acceptance criteria: not all subsystems and not every quality characteristic is tested equally thoroughly.
- A3. One or more specification techniques are used, suited to the required depth of a test.
- A4. For retests also, a (simple) strategy determination takes place, in which a motivated choice of variations between ‘test solutions only’ and ‘full retest’ is made” ([Koomen 99], page 85-86).

Checkpoints are defined in the same way for the upper three levels of the “Test strategy” key area.

For the key area “Moment of involvement,” there are levels with corresponding checkpoints:

Key area Moment of involvement, levels and checkpoints

- “Level A – Completion of test basis:
  - A1. The activity ‘testing’ starts simultaneously with or earlier than the completion of the test basis for a restricted part of the system that is to be tested separately. (The system can be divided into several parts that are finished, built, and tested separately. The testing of the first subsystem has to start at the same time or earlier than the completion of the test basis of that particular subsystem.)
- Level B – Start of test basis:
  - B1. The activity ‘testing’ starts simultaneously with or earlier than the phase in which the test basis (often the functional specifications) is defined.
- Level C – Start of requirements definition:
  - C1. The activity ‘testing’ starts simultaneously with or earlier than the phase in which the requirements are defined.
- Level D – Project initiation:
  - D1. When the project is initiated, the activity ‘testing’ is also started” ([Koomen 99], page 98-101).

If all the respective checkpoints are fulfilled, the level of the key area is considered achieved.
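How checkpoints translate into a level can be sketched in a few lines of Python. This is a minimal illustration, not part of the TPI model itself: the function name `achieved_level` and the sample answers are hypothetical, and the sketch assumes that a level only counts as achieved when the checkpoints of all lower levels of the same key area are fulfilled as well.

```python
# Checkpoint identifiers per level for the key area "Moment of involvement",
# as listed in the text above.
MOMENT_OF_INVOLVEMENT = {
    "A": ["A1"],
    "B": ["B1"],
    "C": ["C1"],
    "D": ["D1"],
}

# Level A of the key area "Test strategy" has four checkpoints.
TEST_STRATEGY_LEVEL_A = {"A": ["A1", "A2", "A3", "A4"]}


def achieved_level(checkpoints_by_level, fulfilled):
    """Return the highest level whose checkpoints are all fulfilled.

    Levels are checked in ascending order (A, B, C, ...); evaluation stops at
    the first level with an unfulfilled checkpoint. Returns None if even the
    lowest level is not achieved.
    """
    achieved = None
    for level in sorted(checkpoints_by_level):
        if all(cp in fulfilled for cp in checkpoints_by_level[level]):
            achieved = level
        else:
            break
    return achieved


# Hypothetical assessment answers: D1 is fulfilled, but C1 is not,
# so the key area remains at level B.
current = achieved_level(MOMENT_OF_INVOLVEMENT, {"A1", "B1", "D1"})
```

A single missing checkpoint is enough to hold a key area back, which is why three of the four level A checkpoints for “Test strategy” do not yet yield level A in this sketch.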

TPI matrix

All key areas with their maturity levels are combined in the test maturity matrix (TPI matrix) to show the dependencies between the different key areas and maturity levels. The matrix distributes the different maturity levels of each key area across the 13 scales or development levels (see figure 7-7).

|  | Key area/Scale | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Test strategy |  | A |  |  |  |  | B |  |  |  | C |  | D |  |
| 2 | Life-cycle model |  | A |  |  | B |  |  |  |  |  |  |  |  |  |
| 3 | Moment of involvement |  |  | A |  |  |  | B |  |  |  | C |  | D |  |
| 4 | Estimating and planning |  |  |  | A |  |  |  |  |  |  | B |  |  |  |
| 5 | Test specification techniques |  | A |  | B |  |  |  |  |  |  |  |  |  |  |
| 6 | Static test techniques |  |  |  |  | A |  | B |  |  |  |  |  |  |  |
| 7 | Metrics |  |  |  |  |  | A |  |  | B |  |  | C |  | D |
| 8 | Test tools |  |  |  |  | A |  |  | B |  |  | C |  |  |  |
| 9 | Test environment |  |  |  | A |  |  |  | B |  |  |  |  |  | C |
| 10 | Office environment |  |  |  | A |  |  |  |  |  |  |  |  |  |  |
| 11 | Commitment and motivation |  | A |  |  |  | B |  |  |  |  |  | C |  |  |
| 12 | Test functions and training |  |  |  | A |  |  | B |  |  |  | C |  |  |  |
| 13 | Scope of methodology |  |  |  |  | A |  |  |  |  |  | B |  |  | C |
| 14 | Communication |  |  | A |  | B |  |  |  |  |  |  | C |  |  |
| 15 | Reporting |  | A |  |  | B |  | C |  |  |  |  | D |  |  |
| 16 | Defect management |  | A |  |  |  | B |  | C |  |  |  |  |  |  |
| 17 | Testware management |  |  | A |  |  | B |  |  |  | C |  |  |  | D |
| 18 | Test process management |  | A |  | B |  |  |  |  |  |  |  | C |  |  |
| 19 | Evaluation |  |  |  |  |  |  | A |  |  | B |  |  |  |  |
| 20 | Low-level testing |  |  |  |  | A |  | B |  | C |  |  |  |  |  |

The matrix makes it easy to see which key area has reached which level; i.e., at which level of the scale it is situated. Anomalies—either positive or negative—become visible. The goal is to achieve continuous improvement without leaving lower-level key areas behind. The 13 maturity or development scales can be grouped into three categories:

- “Controlled: Scales 1 to 5 are primarily aimed at the control of the test process. The purpose of the levels is to provide a controlled test process that provides a sufficient amount of insight into the quality of the tested object. (...)
- Efficient: The levels in scales 6 to 10 focus more on the efficiency of the test process. This efficiency is achieved, for example, by automating the test process, by better integration between the mutual test processes and with the other parties within system development, and by consolidating the working method of the test process in the organization.
- Optimizing: (...) The levels of the last three scales are characterized by increasing optimization of the test process and are aimed at ensuring that continuous improvement of the generic test process will be part of the regular working method of the organization” (excerpt from [Koomen 99], page 46-47).
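The lookup from a key area level to its scale and category can be sketched as follows. The fragment is only an illustration: it contains an excerpt of four key areas with the scale positions shown in the TPI matrix, the names `scale_of` and `category_of` are hypothetical, and treating a key area that has not reached level A as scale 0 is an assumption of this sketch.

```python
# Excerpt of the TPI matrix: scale at which each level of a key area sits.
TPI_MATRIX = {
    "Test strategy": {"A": 1, "B": 6, "C": 10, "D": 12},
    "Life-cycle model": {"A": 1, "B": 4},
    "Moment of involvement": {"A": 2, "B": 6, "C": 10, "D": 12},
    "Metrics": {"A": 5, "B": 8, "C": 11, "D": 13},
}


def scale_of(key_area, level):
    """Scale of a key area at the given level (None means level A not reached)."""
    if level is None:
        return 0  # assumption: below level A is shown as scale 0
    return TPI_MATRIX[key_area][level]


def category_of(scale):
    """Map a scale to the three categories named in the text."""
    if 1 <= scale <= 5:
        return "controlled"
    if 6 <= scale <= 10:
        return "efficient"
    if 11 <= scale <= 13:
        return "optimizing"
    return "not yet controlled"  # scale 0 lies below the first category


# Hypothetical assessment result for the four key areas of the excerpt.
levels = {
    "Test strategy": "B",
    "Life-cycle model": "A",
    "Moment of involvement": None,
    "Metrics": "C",
}
profile = {area: category_of(scale_of(area, lvl)) for area, lvl in levels.items()}
```

A profile like this makes anomalies visible at a glance, for example a key area that is already in the optimizing range while another has not yet reached level A.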

TPI Assessment

A TPI assessment evaluates the test process, using the checkpoints to determine the level of achievement for the 20 key areas. The TPI matrix is also used to determine the next improvement actions and the target situation (see figure 7-8).

Figure 7–8 Current situation...

Current situation

|  | Key area/Scale | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Test strategy |  | A |  |  |  |  | B |  |  |  | C |  | D |  |
| 2 | Life-cycle model |  | A |  |  | B |  |  |  |  |  |  |  |  |  |
| 3 | Moment of involvement |  |  | A |  |  |  | B |  |  |  | C |  | D |  |
| 4 | Estimating and planning |  |  |  | A |  |  |  |  |  |  | B |  |  |  |
| 5 | Test specification techniques |  | A |  | B |  |  |  |  |  |  |  |  |  |  |
| 6 | Static test techniques |  |  |  |  | A |  | B |  |  |  |  |  |  |  |
| ... |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

...and required situation

Required situation

|  | Key area/Scale | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Test strategy |  | A |  |  |  |  | B |  |  |  | C |  | D |  |
| 2 | Life-cycle model |  | A |  |  | B |  |  |  |  |  |  |  |  |  |
| 3 | Moment of involvement |  |  | A |  |  |  | B |  |  |  | C |  | D |  |
| 4 | Estimating and planning |  |  |  | A |  |  |  |  |  |  | B |  |  |  |
| 5 | Test specification techniques |  | A |  | B |  |  |  |  |  |  |  |  |  |  |
| 6 | Static test techniques |  |  |  |  | A |  | B |  |  |  |  |  |  |  |
| ... |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

For a test process to achieve a higher level, the checkpoints already provide concrete starting points. Further ideas on how to improve the test process are given in the improvement suggestions. For each transition to a higher level, the TPI model offers a variety of suggestions. These are meant as hints and tips and are, in contrast to the checkpoint criteria, not mandatory. For a comprehensive list of suggestions see [Koomen 99].

Improvement suggestions

Improvement Techniques

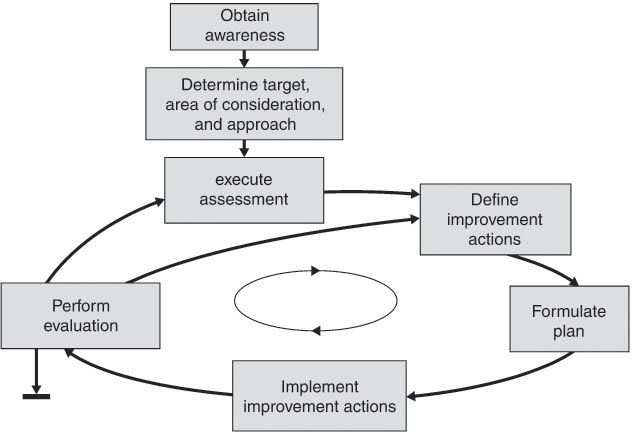

The methods of test process improvement are very similar to any other improvement technique (see figure 7-9). Once an awareness has been created in an organization for the necessity for change, improvement goals and the ways in which they are to be achieved can be defined.

Figure 7–9 Improvement process

A TPI assessment evaluates the current situation. The use of the TPI model is an important part of this activity since it provides a frame of reference for identifying the strengths and weaknesses of the test process. Individual key areas are evaluated based on interviews and documentation and by using checkpoints, determining which of the questions can be answered positively and which cannot, or only partly. The test maturity matrix is used to give a complete status overview of the test process in the organization.

Improvement actions are determined in such a way as to make step-by-step improvement possible. The TPI matrix helps in selecting the key areas in need of improvement. For each of the key areas, it is possible to move to the next level or, in special cases, to an even higher level (see figure 7-8).
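Deriving step-by-step improvement actions from a current and a required situation can be sketched as follows. The fragment is a hedged illustration under stated assumptions: the matrix excerpt, the sample situations, and the function name `improvement_actions` are hypothetical, and ordering the actions by the scale of the next level is one possible way to avoid leaving lower-level key areas behind, not a rule prescribed by TPI.

```python
# Excerpt of the TPI matrix: scale at which each level of a key area sits.
MATRIX = {
    "Test strategy": {"A": 1, "B": 6, "C": 10, "D": 12},
    "Life-cycle model": {"A": 1, "B": 4},
    "Moment of involvement": {"A": 2, "B": 6, "C": 10, "D": 12},
}


def improvement_actions(current, required, matrix=MATRIX):
    """List the next level to reach per key area, lowest scale first.

    `current` maps key areas to their achieved level (missing or None means
    that level A has not been reached); `required` maps key areas to the
    target level. Only one step per key area is proposed.
    """
    actions = []
    for area, target in required.items():
        levels = sorted(matrix[area])  # e.g. ["A", "B", "C", "D"]
        achieved = current.get(area)
        achieved_idx = levels.index(achieved) if achieved is not None else -1
        if levels.index(target) > achieved_idx:
            next_level = levels[achieved_idx + 1]
            actions.append((matrix[area][next_level], area, next_level))
    return sorted(actions)


# Hypothetical current and required situations.
current_situation = {"Test strategy": "A", "Moment of involvement": "A"}
required_situation = {
    "Test strategy": "B",
    "Life-cycle model": "A",
    "Moment of involvement": "A",
}
plan = improvement_actions(current_situation, required_situation)
```

In this sketch, each tuple in `plan` holds the scale, the key area, and the next level to be reached; key areas that already meet their required level produce no action.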

Improvement actions

A plan is drawn up to implement the short-term improvement actions; the plan contains the targets and indicators as to which improvements need to be implemented at what time in order to reach them.

Planning

In the next step, the plan is implemented. A vital part of the implementation phase is consolidation, which prevents improvement actions from remaining one-off affairs.

Implementing the improvement actions

During evaluation, the test manager needs to determine the extent to which improvement actions have been successfully performed and the extent to which original targets have been achieved. This forms the basis for the decision on the continuation and possible adjustment of the change process. If the intended overall target has been reached, the improvement process is considered completed.

Evaluation
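The evaluation step can be illustrated with a small sketch that compares planned against achieved values and reports which targets are still open. The `Target` class, the indicator names, and the rule that larger values are better are assumptions made for this example only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Target:
    name: str      # hypothetical indicator, e.g. the level of a key area
    planned: int   # value the improvement plan aimed for
    achieved: int  # value found during evaluation

    @property
    def met(self) -> bool:
        # Assumption: larger values are better for every indicator.
        return self.achieved >= self.planned


def evaluate(targets: List[Target]) -> dict:
    """Summarize the evaluation: which targets are still open and whether
    the improvement process can be considered completed."""
    open_targets = [t.name for t in targets if not t.met]
    return {"completed": not open_targets, "open_targets": open_targets}


targets = [
    Target("Test strategy level", planned=2, achieved=2),
    Target("Static test techniques level", planned=1, achieved=0),
]
result = evaluate(targets)
print(result)
```

The list of open targets is the input for deciding whether to continue the change process unchanged or to adjust it.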

7.3.3 Comparing TMM with TPI

Both TMM and TPI are exclusively focused on the test process. TMM development was based on CMM and leads to an assessment of the entire test process in terms of maturity levels. For a comprehensive evaluation of the software development and test processes, both types of evaluations should be performed.

TPI evaluates 20 (or 21) test-process-related key areas separately, thus resembling the CMMI continuous representation or ISO/IEC 15504, where individual process areas are also assessed in terms of capability levels.

Both evaluation models are very important to test managers, as they help them analyze and evaluate the test process and initiate relevant improvement activities.

TPI & TMM for the test manager

Both models provide useful information and advice regarding the test process. Which of the two is better suited depends on the particular situation in an organization. If a company is going for a staged CMMI appraisal, TMM may feel more familiar since it is similarly structured. Both TPI and TMM, however, are equally suited as complements to all the other assessment models. The TPI description is more detailed, and the model appears to be more widely used. The results of a worldwide survey on the application of TPI are presented on Sogeti’s website ([URL: TPI] Survey).

Detailed descriptions can be found in Practical Software Testing [Burnstein 03] for TMM and in Test Process Improvement: A Practical Step-by-Step Guide to Structured Testing [Koomen 99] for TPI.

The “Expert Level” program of the ISTQB Certified Tester Qualification scheme will include a training module exclusively dedicated to test process improvement.

Certified Tester-Expert Level

7.4 Audits and Assessments

An audit is defined as a systematic and independent examination to evaluate software products and development processes and determine compliance with standards, guidelines, specifications, and/or processes. Audits are based on objective criteria and documents that accomplish the following:

Systematic and independent examinations

- Determine the design or content of a product solution
- Describe the product’s development process
- Specify the methods and techniques used to prove or measure compliance or noncompliance with standards and guidelines

Besides checking compliance with standards and guidelines, audits can also check on the effectiveness of an implementation and evaluate an artifact.

Types of audit

There are three types of audits:

- The system audit examines the quality management system or selected parts of it with regard to structure and workflow.
- The process audit analyzes processes with regard to human resource allocation, process monitoring, and the order of individual process steps.
- The product audit evaluates products or parts of products with regard to their compliance with the specification and their adherence to standards and guidelines.

Audits usually last one to two days and the audit team is typically made up of two to four people. All audits aim at revealing weaknesses, documenting them, and proposing concrete and lasting improvements. Useful steps are as follows:

Audits

1. Determine the need for an audit (e.g., recognized necessity for internal process improvement or external customer requirement).

2. Plan the audits (content, team, formal basis, resources, dates).

3. Perform the audits in accordance with the plan and determine the achieved results.

4. Discuss strengths and weaknesses and decide whether or not improvement actions are necessary.

5. Implement the improvement suggestions and ensure appropriate reporting and archiving.

Assessments are similar to larger audits; they last several days and involve teams that are typically made up of three to five people.

Assessments

An assessment checks the operational processes and practices against the requirements of an assessment model, analyzing, in interviews, not only the direct artifacts (guidelines, work instructions, process models) and indirect artifacts (protocols, reports, filled-in templates) but also the actually “practiced” processes used in a project. Sometimes the assessment team may even “look over an expert’s shoulder” at their workplace to get a clearer idea of applied work practices.

CMMI and SPICE are typical examples of assessment (or appraisal) models. An assessment determines the maturity or capability levels of organizations or individual processes. It points out strengths, weaknesses, and improvement potential, and it identifies opportunities for synergies and lead competencies (especially where several organizational units or projects are compared).

Of the five parts of the ISO/IEC 15504 (SPICE) standard, three deal directly with assessments: “Part 2 – Performing an Assessment”, “Part 3 – Guidance on Performing an Assessment”, and “Part 5 – An Assessment Model and Indicator Guidance”.

The purpose of these detailed descriptions is to ensure that different assessment results can be compared and that different assessors (i.e., the persons actually conducting the assessment) diverge as little as possible in their evaluation results.
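How far two assessors diverge can be made concrete with a simple agreement ratio. The sketch below uses the four-point rating scale of ISO/IEC 15504 (N, P, L, F for not, partially, largely, and fully achieved). The agreement measure itself and the sample ratings are assumptions chosen for illustration and are not prescribed by the standard.

```python
from typing import Sequence

# ISO/IEC 15504 rating scale: Not, Partially, Largely, Fully achieved.
SCALE = ("N", "P", "L", "F")


def agreement(ratings_a: Sequence[str], ratings_b: Sequence[str]) -> float:
    """Fraction of rated items on which two assessors gave the same rating."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    for rating in (*ratings_a, *ratings_b):
        if rating not in SCALE:
            raise ValueError(f"unknown rating: {rating!r}")
    same = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return same / len(ratings_a)


# Hypothetical ratings of the same four process attributes.
assessor_1 = ["F", "L", "L", "P"]
assessor_2 = ["F", "L", "P", "P"]
print(agreement(assessor_1, assessor_2))  # 0.75
```

A plain agreement ratio ignores how far apart two differing ratings are; it is meant only to show that divergence between assessors can be measured at all.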

TMM and TPI assessments were briefly described in the previous sections (7.3.1 and 7.3.2). More details on how to conduct an assessment can be found in the literature cited there.

7.4.1 Performing an Internal Audit or Assessment

All of the evaluation models mentioned here contain guidelines on how to conduct assessments. The different steps and activities required during the preparation of an assessment (irrespective of whether external or internal) are more or less the same in all models. The following list of the “assessment input” is based on ISO/IEC 15504 part 2:

- Identify the sponsor. The sponsor provides budget and time for the assessment and is the person in the enterprise who has a prime interest in it. It is most important to find out about the sponsor’s expectations and to obtain the sponsor’s commitment to perform the assessment with the effort estimated in the plan. This is especially true for an internal assessment, which may otherwise run the risk of being dismissed as a seemingly meaningless activity because it does not yield any immediate profit.

Sponsor

- Identify the purpose of the assessment. Further planning steps, for example the selection of the processes and organizational units to be assessed, depend on the (business) purpose and concern. The sponsor is the primary contact for this issue, but there may be other stakeholders who should also be consulted.

Purpose

- Determine the scope. The organizational units, the processes, and the maximum maturity grades to be considered are derived based on the purpose of the assessment and on the actual situation within the company. Background information (such as an organization’s size, business sector, and complexity; the type of products or services it provides; and in particular, current problems it may have in projects or with particular products) plays an important role in determining the scope.

Scope

- Select assessment approach and model. Depending on the purpose and scope of the assessment, different models can be chosen. The test processes of a manufacturer of medical equipment may, for instance, be assessed using either the “pure” TPI model or the automotive TPI variant, the latter putting special focus on integration testing, a fact that certainly makes sense in a company producing integrated hardware and software solutions.