As you have read in earlier chapters of this book, Operations Manager (OpsMgr) 2007 is definitely a new product compared to Microsoft Operations Manager (MOM) 2005. However, one thing that has not changed is the requirement for effectively planning your deployment before rushing the product into production.

Proper planning of your OpsMgr deployment is key to its success. Just like implementing any other application or technical product, the time spent preparing for your installation is often more important than the time spent actually deploying the product! The majority of technical product deployments that fail do so due to ineffective planning.

Too often, the thought process in Information Technology (IT) organizations is to deploy new products as quickly as possible, but this approach frequently means not gaining the full benefit of those tools. We refer to this as the RSA approach: Ready, Shoot, Aim. Using RSA results in technology deployments that are not architected correctly and that then require changes, or potentially a complete redeployment, to resolve issues identified after the fact. Our recommended technique is RAS:

Ready: Are you ready to deploy Operations Manager? Assess your environment to better understand it and where OpsMgr is required.

Aim: What is the target you are trying to hit? Based on your assessment, create a design and execute both a proof of concept and a pilot project.

Shoot: Implement the solution you designed!

Creating a single high-level planning document is essential because Operations Manager affects all IT operations throughout the enterprise. Many projects fail due to missed expectations, finger pointing, or the “not invented here” syndrome, which occurs when some IT staff members have stakes in preexisting or competitive solutions. You can avoid these types of problems ahead of time by developing a comprehensive plan and getting the backing from the appropriate sponsors within your organization.

A properly planned environment helps answer questions such as the number of OpsMgr management servers you will have, how many management groups to use, the management packs you will deploy, and so forth.

Proper technical deployments require a disciplined approach to implementing the solution. Microsoft’s recommended approach for IT deployments is the Microsoft Solutions Framework (MSF). MSF consists of four stages:

Envisioning: Development of the vision and scope information.

Planning: Development of the design specification and master project plan.

Developing: Development and optimization of the solution.

Deployment & Stabilization: Product rollout and completion.

Additional information on MSF is available at http://www.microsoft.com/technet/solutionaccelerators/msf. For purposes of deploying Operations Manager 2007, we have adapted MSF to a slightly different format. The stages of deployment we recommend and discuss in this chapter include the following:

Assessment: Similar to the Envisioning stage.

Design & Planning: Roughly correlates to the Planning & Developing stages of MSF.

Proof of Concept, Pilot, Implementation, Maintenance: These stages follow steps similar to the Deployment & Stabilization stage.

The specific stages we will discuss in this chapter include Assessment, Design, Planning, Proof of Concept, Pilot, Implementation, and Maintenance.

Note: Using Both MSF and MOF

Chapter 1, “Operations Management Basics,” discussed Operations Manager 2007’s alignment with the Microsoft Operations Framework (MOF) as an operational framework. MOF is an approach to monitoring and operations; it is independent of the deployment methodology, such as MSF, that you use when planning an OpsMgr deployment.

The first step in designing and deploying an Operations Manager 2007 solution is to understand the current environment. A detailed assessment gathers information from a variety of sources, resulting in a document that you can easily review and update. This process ensures there is a complete understanding of the existing environment. Although the concept of an assessment document is very common within consulting organizations as part of the process of implementing new technologies, we recommend also using this approach for projects internal to an organization.

The principle underlying the importance of assessments is summed up well by Stephen R. Covey’s Habit #5, “Seek first to understand, then to be understood,” in The 7 Habits of Highly Effective People (Simon & Schuster, 1989). From the perspective of an Operations Manager assessment, this means you should fully understand the environment before designing a solution to monitor it.

A variety of sources is used to gather information for an assessment document, including the following:

Current monitoring solutions: These products may include server, network, or hardware monitoring products (including earlier versions of Operations Manager such as MOM 2005 and MOM 2000). You will want to gather information regarding the following areas:

What products are monitoring the production environment

What servers the product is running on

What devices and applications are being monitored

Who the users of the product are

What the product is doing well

What the product is currently not doing well

For each existing monitoring solution, the possible outcomes are integration with Operations Manager, replacement by Operations Manager, or no impact from Operations Manager.

Understanding any current monitoring solutions and what functionality they provide is critical to developing a solid understanding of the environment itself.
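If you keep the assessment inventory in a script or a spreadsheet export, each existing monitoring product maps naturally onto a small record holding the fields listed above. The following Python sketch is illustrative only: the class, field, server, and product names are hypothetical, and the three dispositions simply mirror the integration, replacement, and no-impact outcomes.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Disposition(Enum):
    """Outcome chosen for an existing monitoring product."""
    INTEGRATE = "integrate with Operations Manager"
    REPLACE = "replace with Operations Manager"
    NO_IMPACT = "no impact from Operations Manager"


@dataclass
class MonitoringSolution:
    """One row of a hypothetical assessment inventory."""
    product: str            # what product is monitoring production
    servers: list[str]      # what servers it runs on
    monitored: list[str]    # devices and applications it monitors
    users: list[str]        # who uses it
    strengths: list[str] = field(default_factory=list)   # what it does well
    weaknesses: list[str] = field(default_factory=list)  # what it does not do well
    disposition: Optional[Disposition] = None             # decided during design


def undecided(inventory: list[MonitoringSolution]) -> list[str]:
    """Products that still need an integrate/replace/no-impact decision."""
    return [s.product for s in inventory if s.disposition is None]


inventory = [
    MonitoringSolution("MOM 2005", ["MOM01"], ["Windows servers"], ["Server team"]),
    MonitoringSolution("Network monitor", ["NET01"], ["Routers", "Switches"],
                       ["Network team"], disposition=Disposition.NO_IMPACT),
]
print(undecided(inventory))  # ['MOM 2005']
```

Leaving the disposition empty until the design stage keeps the assessment factual; the design decisions are recorded later against the same records.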

MOM 2005 upgrade/replacement: This applies to environments currently using MOM 2005. An in-depth analysis of what functionality MOM 2005 provides is critical to presenting an Operations Manager 2007 design that can effectively replace and enhance the existing functionality.

Current Service Level Agreements: A Service Level Agreement (SLA) is a formal written agreement that defines the level of support expected (in this case, support provided by the IT organization to the business itself). For example, the SLA for web servers may be 99.9% uptime during business hours. Some organizations have official SLAs, whereas others have unofficial SLAs.

Unofficial SLAs are actually the most common type in the industry. An example of an unofficial SLA might be that email cannot go offline at all during business hours. As part of your assessment, document which SLAs exist, official or unofficial, and where none exist, so you can take full advantage of Operations Manager’s capability to increase system uptime.
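Turning an availability target into a downtime budget makes an SLA concrete enough to monitor against. The sketch below assumes, purely for illustration, that "business hours" means 10 hours a day, 5 days a week, 52 weeks a year; substitute the window your own SLA defines.

```python
def allowed_downtime_minutes(availability_pct: float, covered_hours: float) -> float:
    """Downtime (in minutes) permitted within the covered window at a given availability."""
    return covered_hours * 60 * (1 - availability_pct / 100)


# Hypothetical business-hours window: 10 hours/day, 5 days/week, 52 weeks/year.
HOURS_PER_WEEK = 10 * 5
HOURS_PER_YEAR = HOURS_PER_WEEK * 52

print(round(allowed_downtime_minutes(99.9, HOURS_PER_WEEK), 1))  # 3.0 (minutes per week)
print(round(allowed_downtime_minutes(99.9, HOURS_PER_YEAR), 1))  # 156.0 (minutes per year)
```

A budget of roughly 3 minutes per week shows how little room a 99.9% target leaves, which in turn shapes how quickly Operations Manager must detect and report an outage.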

Administrative model: Organizations are centralized, decentralized, or a combination of the two. The current administrative model, along with any planned changes to it, helps determine where OpsMgr server components are best located within the organization.

Integration: You can integrate Operations Manager 2007 with a variety of solutions, including help desk/problem management solutions and existing monitoring solutions. Some of the available connectors provide integration from OpsMgr to products such as BMC Remedy, HP OpenView, and Tivoli TEC. Chapter 22, “Interoperability,” discusses the various connectors that are available for Operations Manager 2007.

If OpsMgr needs to integrate with other existing solutions, you should gather details including the name, version, and type of integration required.

Service dependencies: New functionality provided within Operations Manager 2007 makes it even more important to be aware of any services on which OpsMgr may have dependencies. These include but are not limited to local area network (LAN)/wide area network (WAN) connections and speeds, routers, switches, firewalls, Domain Name System (DNS), Active Directory, instant messaging, and Exchange. A solid understanding of these services and the ability to document them will improve the design and planning for your Operations Manager 2007 deployment.

Functionality requirements: You will also use the assessment to gather information specific to the functionality required by an OpsMgr environment. You will want to determine what servers Operations Manager will monitor (what domain or workgroup they are in), what applications on these servers need to be monitored, and how long to retain alerts.

While gathering information for your functionality requirements, concentrate on the applications OpsMgr will be managing. As we discussed in Chapter 2, “What’s New,” OpsMgr’s focus centers on modeling applications and identifying their dependencies. Identifying these applications and mapping out their dependencies will provide information important to your Operations Manager 2007 design.

Business and technical requirements: What technical and financial benefits does OpsMgr need to bring to the organization? The business and technical requirements you gather are critical because they will determine the design you create. For example, if high server availability is a central requirement, this will significantly impact your Operations Manager design (which we discuss in Chapter 5, “Planning Complex Configurations”). It is also important to determine what optional components are required for your Operations Manager monitoring, audit collection, and reporting environments. Identify, prioritize, and document your requirements; you can then discuss and revise them until you finalize your requirements.

Gather the information you collect into a single document, called an assessment document. This document should be reviewed and discussed by the most appropriate personnel within your organization who are capable of validating that all the information contained is correct and comprehensive. These reviews often result in, and generally should result in, revisions to the document; do not expect that a centrally written document will get everything right from the get-go. Examine the content of the document, particularly the business and technical requirements, to validate they are correct and properly prioritized. After reaching agreement on the document content, move the project to the next step: designing your OpsMgr solution.

The assessment document you created in the previous stage now provides the required information to design your new Operations Manager 2007 environment. As with other technology projects, a best practice approach is to keep the design simple and as straightforward as possible.

Do not add complexity just because the solution is cool; instead, add complexity to the design only to meet an important business requirement! For example, it is best not to create a SQL cluster for OpsMgr reporting functionality unless it is determined that there is a business requirement for high availability with OpsMgr Reporting. Business requirements are critical because they drive your Operations Manager design. Rely on your business requirements to determine the correct answer whenever there is a question as to how you should be designing your environment.

The starting point for designing an Operations Manager environment is the management group.

As introduced earlier in this book, an Operations Manager management group consists of the Operations database, Root Management Server (RMS), Operations Manager consoles (Operations, Web, Authoring), optional components (additional management servers, reporting servers, data warehouse servers, Audit Collection Services, database servers, gateway servers), and up to 5000 managed computers. Start with one management group and add more only if more than one is necessary. For most cases, a single management group is the simplest configuration to implement, support, and maintain.

Note: The OpsMgr Authoring Console

The Authoring console will be available with Operations Manager 2007 Service Pack (SP) 1. We will discuss it in more detail in Chapter 23, “Developing Management Packs and Reports.”

One reason to add an additional management group is if you need to monitor more than the 5000 managed computers supported in a single management group. The type of servers monitored directly affects the 5000 managed computers limit; there is nothing magical or hard-coded about this number. For example, monitoring many Exchange backend servers has a far more dramatic impact on a management server’s performance than if you were monitoring the same number of Windows XP or Vista workstations.

If the load on the management servers is excessive (servers are reporting excessive OpsMgr queue errors or high CPU, memory, disk, or network utilization), consider adding another management group to split the load.

Another common reason for establishing multiple management groups is separating control of computer systems between multiple support teams. In MOM 2005, this was often the rationale used to split the security monitoring functionality from the application/operating system monitoring functionality. For most organizations, the new Audit Collection Services (ACS) functionality introduced in Chapter 2 should remove the need to split out security events into a separate management group.

However, let’s look at an example where the Application support team is responsible for all application servers, and the Web Technologies team is responsible for all web servers, and each group configures the management packs that apply to the servers it supports.

With a single Operations Manager 2007 management group, both support teams may be configuring the same management packs. In our scenario, the Application support team and the Web Technologies team are both responsible for supporting Internet Information Services (IIS) web servers. If these servers are in the same management group, the rules in the management packs apply to both support teams. If either team changes the rules within the IIS management pack, the change may affect functionality the other team requires.

Although there are ways to minimize this impact using techniques such as overrides, in some situations you will want to implement multiple management groups. This typically occurs when multiple support groups are supporting the same management packs. In a multiple management group solution, each set of servers has its own management group and can have the rules customized as required for the particular support organization.

Historically, multiple management groups were required due to limitations with the MOM security model. MOM 2005 provided very limited granularity in what level of security was available to users who had permission to work within the Administrator console. If a user had access to update rules in the Administrator console, she could not be restricted to specific rule groups or specific functions. OpsMgr 2007’s role-based user security removes the need to create multiple management groups to provide this level of security.

A role in Operations Manager 2007 consists of a profile and a scope. A profile, such as the Operations Manager Administrator or Operations Manager Operator, defines the actions one can perform; the scope defines the objects against which one can perform those actions. In other words, roles limit the access users have.

Although previous versions of Operations Manager often required multiple management groups to separate different groups from performing actions outside their area of responsibility, using roles limits who is able to monitor and respond to alerts generated by different management packs—and can eliminate the need to partition management groups for security purposes. (We discuss security in more detail in Chapter 11, “Securing Operations Manager 2007.”)

As an example, a MOM 2005 organization with one support group for operating systems and a second support group for applications would require multiple management groups for each group to maintain and customize its own rules and not affect the other support group. This is not necessary in OpsMgr 2007 because you can define different user roles for the personnel within each organization.
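The profile-plus-scope idea can be modeled as a simple two-part check: an action is allowed only if the profile grants it and the target falls inside the scope. This is a conceptual Python sketch, not the OpsMgr security API; the role names, action names, and group names are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class UserRole:
    """Hypothetical model of an OpsMgr 2007 user role: a profile plus a scope."""
    name: str
    profile: frozenset[str]  # actions the role may perform
    scope: frozenset[str]    # objects (here, groups) those actions may target

    def allows(self, action: str, target: str) -> bool:
        # Permitted only when the profile grants the action AND the target is in scope.
        return action in self.profile and target in self.scope


os_operators = UserRole("OS Operators",
                        frozenset({"resolve_alert", "run_task"}),
                        frozenset({"Windows Server Computers"}))
app_operators = UserRole("Application Operators",
                         frozenset({"resolve_alert"}),
                         frozenset({"Line-of-Business Applications"}))

print(os_operators.allows("resolve_alert", "Windows Server Computers"))       # True
print(os_operators.allows("resolve_alert", "Line-of-Business Applications"))  # False
print(app_operators.allows("run_task", "Line-of-Business Applications"))      # False
```

Because both teams live in one management group and differ only in scope, neither can act on the other's objects, which is the separation MOM 2005 needed two management groups to achieve.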

Although ACS does not require a separate management group to function, splitting this functionality into its own management group may be required to meet your company’s security requirements. Splitting into a separate management group may be required if your company mandate states that the ACS functionality be administered by a separate group of individuals. For more information on ACS, see Chapter 15, “Monitoring Audit Collection Services.”

We recommend creating a separate test environment for Operations Manager so you can test and tune management packs before deploying them into the production environment. This approach minimizes changes to production systems and allows extensive testing without affecting the functionality of your production systems.

Physical location is also a factor when considering multiple management groups. If many servers exist at a single location with localized management personnel, it may be practical to create a management group at that location. Let’s look at a situation where a company—Odyssey—based in Plano has 500 servers that will be monitored by Operations Manager; an additional location in Carrollton has 250 servers that also will be monitored. Each location has local IT personnel responsible for managing its own systems. In this situation, maintaining separate management groups at each location is a good approach.

Note: Management Group Naming Conventions

When you are naming your management groups, the following characters cannot be used:

( ) ^ ~ : ; . ! ? " , ' ` @ # % / * + = $ | & [ ] < > { }

Management groups also cannot have a leading or trailing space in their name. To avoid confusion, we recommend creating management group names that are unique within your organization. Remember that the management group name is case sensitive.

If you have a server location with minimal bandwidth or an unstable network connection, consider adding a management group locally to reduce WAN traffic. The data transmitted between the agent and a management server (or gateway server) is both encrypted and compressed, with a compression ratio ranging from approximately 4:1 to 6:1. We have found that an average agent uses 3Kbps of network traffic between itself and the management server. We typically recommend installing a management server at network sites that have between 30 and 100 local systems. Operations Manager can support approximately 30 agent-managed computers on a 128Kbps network connection (agent-managed systems use less bandwidth than agentless-managed systems).
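The figures above support a quick back-of-the-envelope check for a remote site. This Python sketch assumes the 3Kbps average is the compressed, on-the-wire figure and that agent traffic is steady; it ignores bursts, agentless monitoring, and console traffic, so treat the result as a rough estimate only.

```python
from __future__ import annotations

AVG_AGENT_KBPS = 3.0         # average per-agent traffic cited in this chapter (on the wire)
COMPRESSION_RATIOS = (4, 6)  # approximate 4:1 to 6:1 compression range


def agent_traffic_kbps(agents: int, per_agent_kbps: float = AVG_AGENT_KBPS) -> float:
    """Estimated steady-state traffic from a site's agents to its management server."""
    return agents * per_agent_kbps


def link_utilization(agents: int, link_kbps: float,
                     per_agent_kbps: float = AVG_AGENT_KBPS) -> float:
    """Fraction of the WAN link consumed by agent traffic (1.0 means saturated)."""
    return agent_traffic_kbps(agents, per_agent_kbps) / link_kbps


def uncompressed_range_kbps(per_agent_kbps: float = AVG_AGENT_KBPS) -> tuple[float, float]:
    """Rough equivalent per-agent traffic before compression."""
    low, high = COMPRESSION_RATIOS
    return per_agent_kbps * low, per_agent_kbps * high


print(agent_traffic_kbps(30))              # 90.0
print(f"{link_utilization(30, 128):.0%}")  # 70%
print(uncompressed_range_kbps())           # (12.0, 18.0)
```

Thirty agents consume about 70% of a 128Kbps link, which is consistent with treating that link as full at roughly 30 agents while leaving headroom for other traffic.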

Table 4.1 lists the minimum network connectivity speeds between the various OpsMgr components.

Table 4.1. Network Connectivity Requirements

| OpsMgr Component 1 | OpsMgr Component 2 | Minimum Network Connectivity |
|---|---|---|
| RMS/Management Server | Agent | 64Kbps |
| RMS/Management Server | Agentless | 1024Kbps |
| RMS/Management Server | Operations database | 256Kbps |
| RMS | Operations Console | 768Kbps |
| RMS | Management Server | 64Kbps |
| RMS/Management Server | Data Warehouse | 768Kbps |
| RMS | Reporting Server | 256Kbps |
| Management Server | Gateway Server | 64Kbps |
| Web Console Server | Web Console | 128Kbps |
| Reporting Data Warehouse | Reporting Server | 1024Kbps |
| Console | Reporting Server | 768Kbps |
| Audit Collector | Audit Database | 768Kbps |
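When you have measured or planned link speeds for a design, Table 4.1 can be applied mechanically. The Python sketch below transcribes the table into a lookup and reports any link that falls short; the measured values in the example are hypothetical.

```python
# Minimum link speeds (Kbps) between OpsMgr components, transcribed from Table 4.1.
MIN_LINK_KBPS = {
    ("RMS/Management Server", "Agent"): 64,
    ("RMS/Management Server", "Agentless"): 1024,
    ("RMS/Management Server", "Operations database"): 256,
    ("RMS", "Operations Console"): 768,
    ("RMS", "Management Server"): 64,
    ("RMS/Management Server", "Data Warehouse"): 768,
    ("RMS", "Reporting Server"): 256,
    ("Management Server", "Gateway Server"): 64,
    ("Web Console Server", "Web Console"): 128,
    ("Reporting Data Warehouse", "Reporting Server"): 1024,
    ("Console", "Reporting Server"): 768,
    ("Audit Collector", "Audit Database"): 768,
}


def links_below_minimum(measured_kbps):
    """Return (pair, measured, required) for each measured link slower than Table 4.1 allows.

    Raises KeyError for a component pair that the table does not list.
    """
    shortfalls = []
    for pair, speed in measured_kbps.items():
        required = MIN_LINK_KBPS[pair]
        if speed < required:
            shortfalls.append((pair, speed, required))
    return shortfalls


# Hypothetical measurements for a branch office design.
measured = {
    ("RMS/Management Server", "Agent"): 128,
    ("RMS", "Operations Console"): 512,
}
print(links_below_minimum(measured))
# [(('RMS', 'Operations Console'), 512, 768)]
```

Raising an error for unlisted pairs is a deliberate choice: a typo in a component name should surface immediately rather than silently pass the check.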

Every server in your management group that provides Operations Manager components must be installed using the same language. As an example, you cannot install the RMS using the English version of Operations Manager 2007 and deploy the Operations console in a different language, such as Spanish or German. If these components must be available in different languages, you must deploy additional management groups to provide this functionality.

Implementing multiple management groups introduces two architectures for management groups:

Connected management groups: If you are familiar with MOM 2005, you will recall that a multitiered architecture exists when there are multiple management groups, with one or more management groups reporting information to another management group. OpsMgr 2007 replaces this functionality with the connected management group, which provides the capability for the user interface in one management group to query data from another management group. The major difference between a multitiered environment and a connected management group is that data exists in only one location; connected management groups do not forward alert information between them.

For example, let’s take our Odyssey company, which has locations in Plano and Carrollton. For Odyssey, the administrators in the Carrollton location need the autonomy to manage their own systems and Operations Manager environment. The Plano location needs to manage its own servers and also needs to be aware of the alerts occurring within the Carrollton location. In this situation (illustrated in Figure 4.1), we can configure the Carrollton management group as a connected management group to the Plano management group.

Multihomed architectures: A multihomed architecture exists when a system belongs to multiple management groups, reporting information to each management group.

For example, Eclipse (Odyssey’s sister company) has a single location based in Frisco. Eclipse also has multiple support teams organized by function. The Operating Systems management team is responsible for monitoring the health of all server operating systems within Eclipse, and the Application management team oversees the business-critical applications. Each team has its own OpsMgr management group for monitoring servers. In this scenario, a single server running Windows Server 2003 and IIS is configured as “multihomed” and reports information to both the Operating Systems management group and the Application management group (see Figure 4.2 for details).

Another example of where multihoming architectures are useful is testing or prototyping Operations Manager management groups. Using multihomed architectures, you can continue to report to your production MOM management group while also reporting to a new or preproduction management group. This allows testing of the new management group without affecting your existing production monitoring.

The number of management groups you have directly impacts the number and location of your OpsMgr servers. The server components in a management group include at a minimum a Root Management Server (RMS), an Operations Database Server, and Operations consoles. Various additional server components are also available within Operations Manager 2007 depending on your business requirements.

Optional server components within a management group include additional Management Servers, Reporting Servers, Data Warehouse Servers, ACS Database Servers, and Gateway Servers. In this section, we will discuss the following components and the hardware and software required for those components:

Root Management Server

Management Servers

Gateway Servers

Operations Database Servers

Reporting Servers

Data Warehouse Servers

ACS Database Servers

ACS Collector

ACS Forwarder

Operations console

Web Console Servers

Operations Manager agents

These server components are all part of a management group as discussed in Chapter 3, “Looking Inside OpsMgr.”

In a small environment, you can install the required server components on a single server with sufficient hardware resources. As with management groups, it is best to keep things simple by keeping the number of Operations Manager servers as low as possible while still meeting your business requirements.

With the new components in Operations Manager 2007, the hardware requirements vary depending on the role each server component provides. We will break down each of the different server components and discuss both their minimum and large-environment hardware requirements.

The Root Management Server (RMS) Component is the first management server installed. This server provides specific functionality (such as communication with the gateway servers, connected management groups, and password key storage) that we discussed in Chapter 3. It also serves as a management server; as a reminder, management servers provide communication between the OpsMgr agents and the Operations database.

The RMS can run on a single server, or it can run on a cluster. Table 4.2 describes minimum hardware requirements for the RMS; Table 4.3 shows the recommended requirements for larger environments. We recommend clustering if your business requirements for OpsMgr include high-availability and redundancy capabilities. See Chapter 5 for details on supported redundancy options for each OpsMgr component.

Additional management servers (MS) within the management group can provide increased scalability and redundancy for the Operations Manager solution. The minimum hardware requirements and large-scale hardware recommendations match those of the RMS.

The number of management servers required depends on the business requirements identified during the Assessment stage:

If redundancy is a requirement, you will need at least two management servers per management group. Each management server can handle up to 2000 agent-managed computers. With MOM 2005, there was a limit of 10 agentless managed systems per management server. With Operations Manager 2007, there is no documented limit of agentless monitored systems per management server, but each additional agentless monitored system increases the processing overhead on the management server. For details on agentless monitoring, see Chapter 9, “Installing and Configuring Agents.”

If a management group in your organization needs to monitor more than 2000 computers, install multiple management servers in the management group. If you need to monitor agentless systems, start out with a maximum of 10 agentless managed systems and then track the performance of the system as you increase the number of agentless systems monitored (as an example, from 10 to 20). In addition, a good practice is to split the load of the agentless monitoring between management servers in the environment.
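The "start at 10, measure, then raise" approach for agentless systems can be expressed as a round-robin assignment with a cap per management server. This Python sketch is hypothetical planning logic, not an OpsMgr feature; the host and server names are invented, and the cap is the starting value suggested above.

```python
from __future__ import annotations


def distribute_agentless(systems: list[str], management_servers: list[str],
                         cap_per_server: int = 10) -> tuple[dict[str, list[str]], list[str]]:
    """Round-robin agentless systems across management servers, holding back any
    system that would push its server past the current cap."""
    assignment: dict[str, list[str]] = {ms: [] for ms in management_servers}
    held_back: list[str] = []
    for i, system in enumerate(systems):
        ms = management_servers[i % len(management_servers)]
        if len(assignment[ms]) < cap_per_server:
            assignment[ms].append(system)
        else:
            held_back.append(system)
    return assignment, held_back


hosts = [f"host{n:02d}" for n in range(25)]  # hypothetical agentless systems
servers = ["MS01", "MS02"]                   # hypothetical management servers

assignment, held_back = distribute_agentless(hosts, servers)
print({ms: len(v) for ms, v in assignment.items()})  # {'MS01': 10, 'MS02': 10}
print(held_back)  # ['host20', 'host21', 'host22', 'host23', 'host24']
```

After tracking management server performance at the initial cap, you might rerun the plan with a higher cap (for example, 20), which in this example places all 25 systems.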

Each management group must have at least one management server (the RMS) in that management group.

Using a sample design of 1000 monitored computers for the purposes of our discussion, we would plan for multiple management servers to provide redundancy. Each management server needs to support one-half the load during normal operation and the full load during failover situations. Although the management server does not store any major amounts of data, it does rely on the processor, memory, and network throughput of the system to perform effectively.

When you design for redundancy, plan for each server to have the capacity to handle not only the agents it is responsible for but also the agents it will take over in a failover situation. For example, in a configuration with two management servers, if either management server fails, the other needs sufficient resources to handle the entire agent load.
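The sizing rules above (up to 2000 agents per management server, at least two servers when redundancy is required, and enough spare capacity to absorb a failed server) can be combined into a small calculation. This Python sketch interprets redundancy as N+1, tolerating one server failure, assumes agents are spread evenly, and treats the RMS like any other management server for agent load; all of these are simplifying assumptions.

```python
import math

AGENTS_PER_MANAGEMENT_SERVER = 2000  # per-server figure given in this chapter


def management_servers_needed(agents: int, redundant: bool = True,
                              per_server: int = AGENTS_PER_MANAGEMENT_SERVER) -> int:
    """Servers needed so all agents fit; with redundancy, add one spare (N+1)."""
    base = max(1, math.ceil(agents / per_server))
    return base + 1 if redundant else base


def agents_per_surviving_server(agents: int, servers: int, failed: int = 0) -> int:
    """Agents each remaining server must carry if the load is spread evenly."""
    survivors = servers - failed
    if survivors < 1:
        raise ValueError("no management servers left to carry the load")
    return math.ceil(agents / survivors)


# The chapter's 1000-computer example with redundancy.
n = management_servers_needed(1000)
print(n)                                               # 2
print(agents_per_surviving_server(1000, n))            # 500 (normal operation)
print(agents_per_surviving_server(1000, n, failed=1))  # 1000 (one server down)
```

The failover figure, not the normal-operation figure, is the one to size each server's processor, memory, and network capacity against.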

The location of your management servers also depends on the business requirements identified during the Assessment stage:

Management servers should be separate from the Operations database in anything but a single-server OpsMgr management group configuration. In a single-server configuration, one server encompasses all the applicable Operations Manager components, including the RMS and the Operations database.

Management servers should be on the same network segments as large concentrations of the systems Operations Manager will monitor.

If the RMS and Operations database are on the same server, we recommend monitoring no more than 200 agents on that server, because a larger agent load can degrade the performance of your OpsMgr solution. If you will be monitoring more than 200 agents, you should split these components onto multiple servers, or use an existing SQL Server (even a SQL cluster!) for the Operations database, thus lowering your hardware cost and increasing scalability. We discuss clustering in Chapter 5.

Tip: Using Existing SQL Systems for the OpsMgr Databases

The question often arises whether to leverage an existing SQL Server database server for the OpsMgr databases. Using existing hardware can lower hardware costs and increase scalability.

As a rule, we do not recommend this because there are high processing requirements on both the Operations and Data Warehouse databases, and they perform better if they have their own server. However, in small environments or if you want to add a significant amount of memory to an existing system (or if you have a lot of available processing and memory resources), you may consider installing the OpsMgr databases on an existing SQL system.

Install gateway servers in untrusted domains or workgroups to provide a point of communication for the servers located there. Gateway servers enable systems not in a trusted domain to communicate with a management server. The large-environment hardware recommendations (shown in Table 4.4) differ from those of management servers in the number of processors, the required amount of memory, and the operating system recommendations. Redundancy is available by deploying multiple gateway servers, the same approach used with management servers.

Table 4.4. Suggested Gateway Server Hardware Requirements for Large Environments

| Hardware | Requirement |
|---|---|
| Processor | One Dual-Core processor |
| RAM | 2GB to 4GB |
| Disk | 10GB space |
| CD-ROM | Needed |
| Display adapter | Super VGA |
| Monitor | 800×600 resolution |
| Mouse | Needed |
| Network | 100Mbps |
| Operating system | Windows Server 2003 SP1, 32-bit or 64-bit |

The Operations Database Component stores all the configuration data for the management group and all data generated by the systems in the management group. We previously discussed this server component and all other components in Chapter 3.

Place the Operations database on either a single server or a cluster; we recommend clustering if your business requirements for OpsMgr include high-availability and redundancy capabilities. Each management group must have an Operations database. The Operations database (by default named OperationsManager) is critical to the operation of your management group—if it is not running, the entire management group has very limited functionality. When the Operations database is down, agents are still monitoring issues, but the database cannot receive that information. In addition, consoles do not function and eventually the queues on the agent and the management server will completely fill.

As a rule of thumb, the more memory you give SQL Server, the better it performs. Using an Active/Passive clustering configuration provides redundancy for this server component; the Operations database does not currently support Active/Active clustering. See Chapter 10, “Complex Configurations,” for details on supported cluster configurations.

We recommend that you not install the Operations database with the RMS in anything but a single-server Operations Manager configuration. Although this server component can coexist with other database server components, this is not a recommended configuration because it may also cause contention for resources, resulting in a negative impact on your OpsMgr environment.

Disk configuration and file placement strongly affect database server performance. Configuring all the OpsMgr database server components with the fastest disks available will significantly improve the performance of your management group. We provide additional information on this topic, including recommended Redundant Arrays of Inexpensive Disks (RAID) configurations, in Chapter 10.

Tables 4.5 and 4.6 discuss database hardware requirements.

Table 4.6. Suggested Operations Database Hardware Requirements for Large Environments

| Hardware | Requirement |
|---|---|
| Processor | Two dual-core processors |
| RAM | 8GB |
| Disk | 100GB space, spread across dedicated drives for the operating system, data, and transaction logs |
| CD-ROM | Needed |
| Display adapter | Super VGA |
| Monitor | 800×600 resolution |
| Mouse | Needed |
| Network | 100Mbps |
| Operating system | Windows Server 2003 SP1 64-bit |

Note: Measuring Database Server Performance

The time it takes Operations Manager to detect a problem and notify an administrator is a key performance metric; this makes alert latency the best measure of performance of the OpsMgr system. If there is a delay in receiving this information, the problem could go undetected. Because of the criticality of this measure, OpsMgr SLAs tend to focus on alert latency.
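To make the alert-latency measure concrete, the following Python sketch computes latency as the gap between when a problem is detected and when the notification goes out, then checks the results against an SLA target. The sample timestamps, the 120-second threshold, and the fraction of alerts that must meet it are hypothetical; OpsMgr does not hand you data in exactly this form, so treat the function names and inputs as illustrative assumptions.

```python
from datetime import datetime, timedelta
from statistics import median

def alert_latencies(events):
    """Seconds between detection and notification for each (detected, notified) pair."""
    return [(notified - detected).total_seconds() for detected, notified in events]

def sla_met(events, threshold_seconds, required_fraction=0.95):
    """True if at least `required_fraction` of alerts were delivered within the threshold.

    The 95% default is an illustrative assumption, not an OpsMgr setting.
    """
    latencies = alert_latencies(events)
    if not latencies:
        return True  # no alerts, so none were late
    on_time = sum(1 for s in latencies if s <= threshold_seconds)
    return on_time / len(latencies) >= required_fraction

# Hypothetical sample: three alerts raised at 09:00 and delivered at different times.
base = datetime(2007, 6, 1, 9, 0, 0)
events = [
    (base, base + timedelta(seconds=45)),
    (base, base + timedelta(seconds=90)),
    (base, base + timedelta(seconds=300)),
]
print(median(alert_latencies(events)))                                # 90.0
print(sla_met(events, threshold_seconds=120, required_fraction=0.6))  # True (2 of 3 on time)
```

Tracking a percentile or fraction of on-time alerts, rather than a single average, keeps one slow outlier from hiding or dominating the picture.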

The Reporting Server Component uses SQL Server 2005 Reporting Services to provide web-based reports for Operations Manager. This component typically runs on a single server. Because that server runs SQL Server 2005 with Reporting Services, its hardware requirements match those discussed previously for the Operations Database Server Component. You can provide redundancy for the Reporting servers by using web farms or Network Load Balancing (NLB), but keeping the reports synchronized across servers is an issue. Chapter 5 includes information on redundant configurations.

OpsMgr agent data passes through the management server and is written to the data warehouse, which provides long-term data storage for Operations Manager. This data supports both daily performance reporting and longer-term trending reports.

The Data Warehouse server hosts the Data Warehouse database (by default named OperationsManagerDW) that OpsMgr uses for reporting. The Data Warehouse Component typically runs on a single server but can run on a cluster if high availability and redundancy are required for reports.

For the same reasons given for the Operations database, the Data Warehouse should not reside on the same system as the RMS.

The hardware requirements for Data Warehouse database servers match those of Operations database servers, with the added requirement of additional storage for the database and log files.

Audit Collection Services provides a method to collect records generated by an audit policy and to store them in a database. This centralizes the information, which you can filter and analyze using the reporting tools in Microsoft SQL Server 2005. The ACS Database Server Component provides the repository where the audit information is stored. The hardware requirements for ACS database servers match those of the Operations database servers, with a requirement of additional storage for the database and log files on the server.

The ACS Forwarder Component uses the Operations Manager Audit Forwarding service, which is not enabled by default. After the service is enabled, the forwarder captures all security events (which are also saved to the local Windows NT Security Event log). Because this component is included within the OpsMgr agent, its hardware requirements mirror those of the agent. Chapter 6, “Installing Operations Manager 2007,” discusses hardware requirements for OpsMgr.

The forwarders send information to the ACS Collector Component. The collector receives the events, processes them, and sends them to the ACS database. The hardware requirements for the collector mirror those of the RMS. Currently there are no options available to provide a high-availability solution for the ACS Collector Component.

As we discussed in Chapter 2, the Operations console provides a single user interface for Operations Manager. This console should be installed on all management servers, including the RMS. You can also install it on desktop systems running Windows XP or Vista. Running the console on another system removes some of the load from the management server, and desktop access to the console also simplifies day-to-day administration.

The number of consoles a management group supports is an important design specification. As the number of consoles active in a management group grows, the database load also grows. This load accelerates as the number of managed computers increases because consoles, either operator or web-based, increase the number of database queries on both the Operations Database Server and the Data Warehouse Server Components. From a performance perspective, it is best to run the Operations consoles from administrator workstations rather than from servers running the OpsMgr components. Running the Operations console on the RMS increases memory and processor utilization, which in turn will slow down OpsMgr.

The hardware requirements for the Operations console component match those of the Gateway Server Component.

The Web Console Server Component runs IIS and provides a web-based version of the Operations console. The hardware requirements for this server match those of the Gateway Server Component. You can configure redundancy for the Web Console Server Component by installing multiple Web Console Servers and leveraging Network Load Balancing (NLB) or other load-balancing solutions.

There are no Operations Manager–specific hardware requirements for systems running the OpsMgr agent; the requirements are simply the minimum hardware requirements of the operating system itself.

Operations Manager 2007 supports a variety of operating systems and requires additional software as installation prerequisites. We discuss these in the following sections.

Operating system support varies by OpsMgr component. Tables 4.7 through 4.10 list the supported operating systems for each component: Table 4.7 covers the management server components, Table 4.8 the database server components, Table 4.9 the ACS-related server components, and Table 4.10 the console and agent components.

Table 4.7. Management Server Component Operating System Support

| Operating System | Root Management Server | Management Server | Gateway Server | MS with Agentless Exception Monitoring File Share |
|---|---|---|---|---|
| Microsoft Windows Server 2003, Standard Edition SP1 X86 and X64 | X | X | X | X |
| Microsoft Windows Server 2003, Enterprise Edition SP1 X86 and X64 | X | X | X | X |
| Microsoft Windows Server 2003, Datacenter Edition SP1 X86 and X64 | X | X | | |
| Microsoft Windows XP Professional X86 and X64 | | | | |
| Vista Ultimate X86 and X64 | | | | |
| Vista Business X86 and X64 | | | | |
| Vista Enterprise X86 and X64 | | | | |

Table 4.8. Database Server Component Operating System Support

| Operating System | Operations Database | Reporting Server | Data Warehouse |
|---|---|---|---|
| Microsoft Windows Server 2003, Standard Edition SP1 X86, X64, and IA64 | X | X | X |
| Microsoft Windows Server 2003, Enterprise Edition SP1 X86, X64, and IA64 | X | X | X |
| Microsoft Windows Server 2003, Datacenter Edition SP1 X86, X64, and IA64 | X | X | X |
| Microsoft Windows XP Professional X86 and X64 | | | |
| Vista Ultimate X86 and X64 | | | |
| Vista Business X86 and X64 | | | |
| Vista Enterprise X86 and X64 | | | |

Table 4.9. Audit Collection Server Component Operating System Support

| Operating System | ACS Database Server | ACS Collector |
|---|---|---|
| Microsoft Windows Server 2003, Standard Edition SP1 X86 and X64 | X | X |
| Microsoft Windows Server 2003, Enterprise Edition SP1 X86 and X64 | X | X |
| Microsoft Windows Server 2003, Datacenter Edition SP1 X86 and X64 | X | |
| Microsoft Windows XP Professional X86 and X64 | | |
| Vista Ultimate X86 and X64 | | |
| Vista Business X86 and X64 | | |
| Vista Enterprise X86 and X64 | | |

Table 4.10. Console and Agent Operating System Support

Operating System | Operations Console | Web Console | Agent |

|---|---|---|---|

Microsoft Windows Server 2003, Standard Edition SP1 X86 and X64 | X | X | X |

Microsoft Windows Server 2003, Enterprise Edition SP1 X86 and X64 | X | X | X |

Microsoft Windows Server 2003, Datacenter Edition SP1 X86 and X64 | X | X | |

Microsoft Windows Server 2003, Professional Edition SP2 X86 and X64 | X | ||

Microsoft Windows Server 2003, Standard Edition SP1 IA64 | X | ||

Microsoft Windows Server 2003, Enterprise Edition SP1 IA64 | X | ||

Microsoft Windows Server 2003, Datacenter Edition SP1 IA64 | X | ||

Microsoft Windows Server 2003, Professional Edition SP2 IA64 | X | ||

Microsoft Windows XP Professional X86 and X64 | X | X | |

Vista Ultimate X86 and X64 | X | X | |

Vista Business X86 and X64 | X | X | |

Vista Enterprise X86 and X64 | X | X | |

Microsoft Windows 2000 Server SP4 | X | ||

Microsoft Windows 2000 Professional SP4 | X |

The edition of the operating system chosen for each server component should correspond with the hardware available for that component. As an example, Windows Server 2003 Standard Edition supports up to four processors, and its 32-bit version supports up to 4GB of memory. If your hardware has more than four processors, use Windows Server 2003 Enterprise Edition instead; if only the memory exceeds 4GB, you can use either Enterprise Edition or the X64 version of Windows Server 2003 Standard Edition.
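The edition guidance for Windows Server 2003 can be written as a small helper, shown here as a hypothetical Python sketch. It models only the four-processor and 4GB limits the text cites for Standard Edition, and it assumes (our reading) that the 4GB memory limit is the constraint the X64 version of Standard Edition lifts; the memory ceilings of the X64 and Enterprise editions are not modeled.

```python
def suggest_windows_2003_edition(processors: int, memory_gb: float) -> str:
    """Suggest a Windows Server 2003 edition for planned server hardware.

    Hypothetical helper encoding only the limits cited in the text:
    Standard Edition handles up to four processors, and 32-bit Standard
    Edition up to 4GB of memory.
    """
    STANDARD_MAX_PROCESSORS = 4
    STANDARD_X86_MAX_MEMORY_GB = 4

    if processors > STANDARD_MAX_PROCESSORS:
        return "Enterprise Edition"
    if memory_gb > STANDARD_X86_MAX_MEMORY_GB:
        # Assumption: X64 Standard Edition removes the 4GB memory limit.
        return "Enterprise Edition or Standard Edition X64"
    return "Standard Edition"

print(suggest_windows_2003_edition(processors=4, memory_gb=8))
```

For the 8GB Operations database server in Table 4.6, for example, the helper points to Enterprise Edition or the X64 version of Standard Edition.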

Part of your decision regarding server placement should include evaluating licensing options for Operations Manager 2007. Each device managed by the server software requires an Operations Management License (OML), whether you are monitoring it directly or indirectly. (An example of indirect monitoring would be a network device that provides workload services, such as a firewall or load balancer; see the “Licensing for SNMP Devices” tip later in this section.) There are two types of OMLs:

Client OML—. Devices running client operating systems require client license OMLs.

Server OML—. Devices running server operating systems require server OMLs.

To determine licensing costs, it is important to understand that there are also two different levels of OMLs available:

Standard OML—. Provides monitoring for basic operating system workloads

Enterprise OML—. Provides monitoring for all operating system utilities, service workloads, and any other applications on that particular device

If you plan to monitor servers that run on virtual server technology, an OML is only required for the physical server. This must be an enterprise OML.

Table 4.11 lists the products associated with the standard and enterprise OML levels. (This list is complete as of mid-2007.)

Table 4.11. Levels of Management Licenses and Products

| License Type | Monitoring Capability |
|---|---|
| Standard OML | System Resource Manager |
| | Password Change Notification |
| | Baseline Security Analyzer |
| | Reliability and Availability Services |
| | Print Server |
| | Distributed File System (DFS) |
| | File Replication Service (FRS) |
| | Network File System (NFS) |
| | File Transfer Protocol (FTP) |
| | Windows SharePoint Services |
| | Domain Name System (DNS) |
| | Dynamic Host Configuration Protocol (DHCP) |
| | Windows Internet Naming Service (WINS) |
| | Windows Server 2000/2003 Operating System Management Pack |
| Enterprise OML | BizTalk Server 2006 |
| | Internet Security and Acceleration (ISA) Server 2006 |
| | Microsoft Exchange Server 2003/2007 Management Pack |
| | Microsoft SharePoint Portal Server (SPS) 2003 Management Pack |
| | Microsoft SQL Server 2000/2005 Management Pack |
| | Microsoft Windows Server 2000/2003 Active Directory Management Pack |
| | Microsoft Windows Server Internet Information Services (IIS) 2000/2003 Management Pack |
| | Operations Manager 2007 Servers (other than the server OML required for each management server) |
| | Office SharePoint Server 2007 |
| | Virtual Server 2005 |
| | Windows Group Policy 2003 Management Pack |

You need to acquire an OpsMgr 2007 license for each Operations Manager server (RMS, management servers, and gateway servers; OpsMgr 2007 licenses for the Operations database, Reporting database, or other components are not required) as well as OMLs for managed devices as discussed earlier in this section.

When placing the Database Server Component separate from the Management Server Component, consider the following licensing aspects:

No Client Access Licenses (CALs) are required when you are licensing SQL Server on a per-processor basis.

CALs are required for each managed device when you are licensing SQL Server per user.

If the SQL Server is licensed using the System Center Operations Manager 2007 with the SQL 2005 Technologies license, no CALs are required. The SQL license in this case is restricted to supporting only the OpsMgr application and databases. No other applications or databases can use that instance of SQL Server.

Tip: Licensing for SNMP Devices

An OML is only required to manage network infrastructure devices if the device provides firewall, load balancing, or other workload services. OMLs are not required to manage network infrastructure devices such as switches, hubs, routers, bridges, or modems.

There has been a lot of confusion concerning the licensing required to use the AEM functionality of Operations Manager. Using AEM requires a client OML for each system from which AEM will collect crash information. So if you have 2000 workstations on which you want to use AEM, you need 2000 client OMLs. The exceptions to this license model include the following:

When the client device is also licensed for the Microsoft Desktop Optimization Pack for Software Assurance (MDOP for short), an OML is not required to use AEM on the device. The MDOP offering we are concerned with here is System Center Desktop Error Monitoring, or DEM for short. Either an OML or MDOP allows the device to use AEM.

Corporate Error Reporting (CER) was previously a Software Assurance (SA) benefit that was part of DEM. Customers with Software Assurance on Windows Client, Office, or Server products were entitled to use CER. Customers with Software Assurance on preexisting agreements can use DEM for the remainder of their agreement. After the current agreement expires, they will need to purchase either an OML or MDOP (DEM) to continue using AEM.

You do not need to purchase a client OML for a device that already has a standard or enterprise OML. For example, if you have a Windows server licensed with a standard OML, you do not need a client OML to monitor it with AEM.
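The AEM rule and its exceptions reduce to a simple count, sketched below in Python. The `Device` record and its field names are hypothetical planning fields, not part of any Microsoft tool, and the sketch simplifies the entitlements; confirm your actual rights against your license agreement.

```python
from dataclasses import dataclass

@dataclass
class Device:
    """A device on which you want to use AEM (hypothetical planning record)."""
    name: str
    has_mdop_dem: bool = False      # licensed for MDOP (System Center DEM)
    has_server_oml: bool = False    # already has a standard or enterprise OML
    sa_grandfathered: bool = False  # preexisting SA agreement still in force

def client_omls_for_aem(devices):
    """Count the devices that still need a client OML to use AEM."""
    return sum(
        1
        for d in devices
        if not (d.has_mdop_dem or d.has_server_oml or d.sa_grandfathered)
    )

# The 2000-workstation example from the text: no exceptions apply.
workstations = [Device(f"ws{i:04d}") for i in range(2000)]
print(client_omls_for_aem(workstations))  # 2000
```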

As an example of how this would work, let’s look at how we would license Operations Manager 2007 for the Eclipse Company. Eclipse is a 1000-user corporation with one major office. The company has decided to deploy one management group with a single management server. Eclipse is interested in monitoring 100 servers, which includes 30 servers requiring monitoring with domain controller, Exchange, SharePoint, or SQL functionality.

From a licensing perspective, Eclipse will be purchasing the following:

One Operations Manager Server 2007 license (one for each OpsMgr management server)

Thirty enterprise OMLs (one for each Active Directory, Exchange, SharePoint, or SQL Server system)

Seventy standard OMLs (for the remaining servers not covered by enterprise OMLs)

To help estimate costs, Microsoft has provided a pricing guide for Operations Manager 2007, available at http://www.microsoft.com/systemcenter/opsmgr/howtobuy/default.mspx. This guide lists the following prices for the components we just discussed:

Operations Manager Server 2007: $573 U.S.

Operations Manager Server 2007 with SQL Server Technology: $1,307 U.S.

Enterprise OML: $426 U.S.

Standard OML: $155 U.S.

Client OML: $32 U.S.

For our licensing example, our estimate would be:

Operations Manager Server 2007: 1 × $573 = $573

Enterprise OML: 30 × $426 = $12,780

Standard OML: 70 × $155 = $10,850

The total estimate for Operations Manager licensing in this configuration is $24,203. Note, however, that this figure is a ballpark estimate only. Your specific licensing costs may be higher or lower based on the license agreement your organization has as well as your specific server configuration.
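The estimate above is simple arithmetic, so a short Python sketch makes it easy to rerun with your own counts. The prices are the list prices quoted earlier in this section; the dictionary keys and the `estimate` helper are our own illustrative names, and real pricing depends on your agreement.

```python
# List prices (U.S. dollars) quoted earlier in this section.
PRICES_USD = {
    "Operations Manager Server 2007": 573,
    "Enterprise OML": 426,
    "Standard OML": 155,
}

def estimate(quantities):
    """Return per-item costs and the total for an {item: quantity} mapping."""
    costs = {item: qty * PRICES_USD[item] for item, qty in quantities.items()}
    return costs, sum(costs.values())

monitored_servers = 100
enterprise_servers = 30  # AD, Exchange, SharePoint, or SQL Server systems
eclipse = {
    "Operations Manager Server 2007": 1,  # one management server
    "Enterprise OML": enterprise_servers,
    "Standard OML": monitored_servers - enterprise_servers,
}

costs, total = estimate(eclipse)
for item, cost in costs.items():
    print(f"{item}: ${cost:,}")
print(f"Total: ${total:,}")  # Total: $24,203
```

Deriving the standard OML count from the total number of monitored servers keeps the two license levels consistent when the server inventory changes.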

For more details on Operations Manager 2007 licensing, see the Microsoft Volume Licensing Brief, which is available for download at http://go.microsoft.com/fwlink/?LinkId=87480. Updates to the list of basic workloads are available at http://www.microsoft.com/systemcenter/opsmgr/howtobuy/opsmgrstdoml.mspx.

Table 4.12 shows the additional software requirements for each of the Operations Manager components.

Table 4.12. Additional Software Requirements

Server Component | RMS, MS, Gateway Server | Operations Database, Reporting Data Warehouse | Audit Collection Database | Reporting Server | Ops Console | Web Server Console |

|---|---|---|---|---|---|---|

Microsoft SQL Server 2005 SP1 Standard | X | |||||

Microsoft SQL Server 2005 Enterprise | X | X | ||||

Microsoft SQL Server Reporting Services with SP1 | X | |||||

.NET Framework 2.0 | X | X | X | |||

.NET Framework 3.0 | X | X | X | X | ||

Microsoft Core XML Services (MSXML) 6.0 | X | |||||

Windows PowerShell | X | |||||

Office Word 2003 (for .NET programmability support) | X | |||||

Internet Information Services 6.0 | X | |||||

ASP.NET 2.0 | X |

You have a variety of other factors to consider when designing your operations management environment. These include the servers monitored by OpsMgr, distributed applications, management packs, security, user notifications, and agent deployment considerations. We discuss these in the following sections.

As part of the assessment phase, you should have collected a list of servers to monitor with Operations Manager. As part of the design, you have identified each server and, based on its location, determined the management server it will use. You can now use your management group design to match the servers and their applications with an appropriate management group and management server.

As we discussed in Chapter 2, distributed applications have taken on a much more important role within OpsMgr. Categorize the applications identified in the Assessment phase to determine whether you can model them as distributed applications. You should also compare this list with the available management packs on the System Center Pack Catalog website (http://go.microsoft.com/fwlink/?linkid=71124).

Your design should also include the management packs you plan to deploy, based on the applications and servers identified during the assessment phase. As you are identifying management packs for deployment, it is important to remember that certain management packs have logical dependencies on others. As an example, the Exchange management pack is logically dependent on the Active Directory management pack, which in turn is logically dependent on the DNS management pack. To monitor Exchange, you should plan to deploy each of those management packs.
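Working out the full set of management packs and a safe import order is a topological sort over the dependency graph. The Python sketch below uses the standard library's `graphlib` module (Python 3.9 or later) with a hypothetical dependency map built from the Exchange, Active Directory, and DNS example in the text. In practice, you would take the dependencies from the management packs' own documentation rather than a hand-written map.

```python
from graphlib import TopologicalSorter

# Hypothetical map from the text's example: pack -> packs it logically depends on.
DEPENDENCIES = {
    "Exchange": {"Active Directory"},
    "Active Directory": {"DNS"},
    "DNS": set(),
}

def deployment_order(wanted, deps):
    """Return every pack needed for `wanted`, with dependencies listed first."""
    needed, stack = set(), list(wanted)
    while stack:  # collect the transitive closure of dependencies
        pack = stack.pop()
        if pack not in needed:
            needed.add(pack)
            stack.extend(deps.get(pack, ()))
    # TopologicalSorter expects node -> predecessors; restrict to needed packs.
    graph = {pack: set(deps.get(pack, ())) & needed for pack in needed}
    return list(TopologicalSorter(graph).static_order())

print(deployment_order(["Exchange"], DEPENDENCIES))
# ['DNS', 'Active Directory', 'Exchange']
```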

You can import MOM 2005 management packs into Operations Manager 2007 after converting them to the new OpsMgr 2007 format. Converted management packs do not have the full functionality of management packs designed for Operations Manager 2007; they do not become model-based as part of the conversion process. Converted management packs also do not include reports because Microsoft rewrote the reporting structure in OpsMgr 2007.

Operations Manager 2007 uses multiple service accounts, each with lower privileges, to help increase security. Chapter 11 discusses how to secure your OpsMgr environment. Identifying these accounts and their required permissions is necessary for an effective Operations Manager design. OpsMgr uses several accounts that are typically Domain User accounts:

SDK and Config Service account—. Provides a data access layer between the agents, consoles, and database. This account distributes configuration information to agents

Management Server Action account—. Gathers operational data from providers, runs responses, and performs actions such as installing and uninstalling agents on managed computers

Agent Action account—. Gathers information and runs responses on the managed computer

Agent Installation account—. Used when prompted for an account with administrator privileges to install agents on the managed computer(s)

Notification Action account—. Creates and sends notifications

Data Reader account—. Executes queries against the OpsMgr Data Warehouse database

Data Warehouse Write account—. Assigned permissions to write to the Data Warehouse database and read from the Operations database

Operations Manager’s functionality includes the ability to notify users or groups as issues arise within your environment. For design purposes, document who needs to receive notifications and under what circumstances. OpsMgr can notify via email, instant messaging, Short Message Service (SMS), or command scripts, and notifications go to recipients configured within Operations Manager. As an example, if your organization has a team that supports email, that team will most likely want notifications when issues occur within the Exchange environment. (Because this team supports email itself, it is advisable to provide multiple Exchange servers that can send the notification, or to use other notification methods, in case Exchange is down.)
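A notification plan for the design document can be captured as data and checked before you configure recipients in the Operations console. The Python sketch below is a hypothetical worksheet, not the OpsMgr notification API: the team names, the severity scale, and the channel labels are assumptions. It shows the fallback the text recommends when the email system itself is the thing that failed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class NotificationRule:
    """One row of a hypothetical notification plan."""
    team: str
    source: str          # what the team cares about, e.g. "Exchange"
    min_severity: int    # assumed scale: 0 = information, 1 = warning, 2 = critical
    channels: tuple      # delivery channels in order of preference

PLAN = [
    NotificationRule("Messaging team", "Exchange", 2, ("email", "sms")),
    NotificationRule("Directory team", "Active Directory", 1, ("email", "instant message")),
]

def recipients(source, severity, unavailable=frozenset()):
    """Return (team, channel) pairs, skipping channels that are currently down."""
    result = []
    for rule in PLAN:
        if rule.source == source and severity >= rule.min_severity:
            usable = [c for c in rule.channels if c not in unavailable]
            if usable:
                result.append((rule.team, usable[0]))
    return result

# With Exchange down, email cannot be delivered, so the plan falls back to SMS.
print(recipients("Exchange", 2, unavailable={"email"}))  # [('Messaging team', 'sms')]
```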

Operations Manager 2007 includes a robust reporting solution. With the reporting components installed, OpsMgr writes relevant information directly from the management server to the Data Warehouse database. This is a significant change from MOM 2005, which used a DTS package to transfer data from the OnePoint database into the SystemCenterReporting database. Writing data directly into the reporting database enables near real-time reporting. It also decreases the space required within the database, because only specific information is written, rather than all information older than a certain age.

Monitored systems will have agents deployed to them. Deployment can be manual, automatic from the Operations console, or through automated distribution methods such as Active Directory, Microsoft Systems Management Server, and the recently released System Center Configuration Manager. For small to mid-sized organizations, we recommend deploying agents from the Operations console because it is the quickest and most supportable approach. For larger organizations, we recommend Systems Management Server/Configuration Manager or Active Directory deployment. Manual agent deployment is most often required when the system is behind a firewall, when there is a need to limit bandwidth available on the connection to the management server, or if a highly secure server configuration is required. Chapter 9 provides more detail on this topic. For the purposes of the Design stage, it is important to identify the specific approach you plan for distributing the agent.
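The deployment guidance above can be summarized as a decision helper. This Python sketch is hypothetical: the organization-size labels are our own, and the text does not define numeric boundaries for small, mid-sized, or large organizations. System-specific conditions (firewall, bandwidth, security) take precedence because they force manual installation regardless of organization size.

```python
def agent_deployment_method(org_size, behind_firewall=False,
                            limited_bandwidth=False, high_security=False):
    """Suggest an agent deployment approach following the guidance in the text.

    org_size is a hypothetical label: "small", "mid", or "large".
    """
    if org_size not in ("small", "mid", "large"):
        raise ValueError(f"unknown organization size: {org_size!r}")
    if behind_firewall or limited_bandwidth or high_security:
        return "manual installation"
    if org_size in ("small", "mid"):
        return "push from the Operations console"
    return "Systems Management Server/Configuration Manager or Active Directory"

print(agent_deployment_method("small", behind_firewall=True))  # manual installation
```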

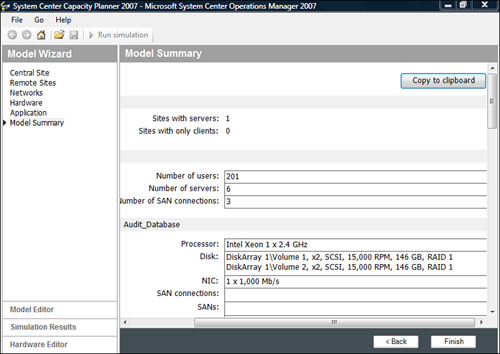

Microsoft’s System Center Capacity Planner (SCCP), shown in Figure 4.3, can provide the answers you need to design your OpsMgr environment. Designed for architecting Operations Manager solutions, the SCCP lets you perform “what-if” analyses, such as “What if I add another 100 servers for monitoring by OpsMgr?”

You can use the System Center Capacity Planner as a starting point for your OpsMgr 2007 design. Based on discussions during the SCCP beta, we expect database-sizing estimates to be included in the released version of the SCCP. Once that capability is available, we recommend using the SCCP’s database-sizing estimates as the final authority for sizing.

In the next few sections, we will provide sample designs for a single-server configuration, dual-server configuration, and finally a mid-to-enterprise-sized configuration.

You can create single-server configurations using either Microsoft System Center Essentials or System Center Operations Manager 2007, depending on the business requirements identified for your organization.

Microsoft System Center Essentials (or just Essentials) is designed to provide core operations management capabilities for small to medium-sized businesses. System Center Essentials combines the monitoring functionality of Operations Manager 2007 with the patch management functionality of Windows Server Update Services (WSUS) 3.0. However, Essentials is a different product with specific restrictions on its functionality, including the following:

Although System Center Essentials can run in a multiserver configuration (with the RMS and database server on separate computers), all monitored systems must be in the same Active Directory forest.

There is no fault tolerance support (clustering) for the database server or management server.

Essentials does not include a data warehouse or fixed grooming schedule, and report authoring is not available.

No role-based security.

No Web console, support for ACS, or PowerShell integration.

Essentials can only monitor a total of 30 servers (31 including itself), and up to 500 non-server operating systems. Essentials can monitor an unlimited number of network devices.

Because the Essentials components typically are on a single server, the design process is greatly simplified. Figure 4.4 shows an example of this configuration. System Center Essentials is always a single–management server implementation; the only supported alternative option for Essentials puts the SQL database component on a second server when managing between 250 and 531 computers (remember, only 30 of those can be running a server operating system!).
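The Essentials limits and the second-server option can be checked with a short Python sketch. The constant and function names are hypothetical; the numbers come from the text (30 servers plus Essentials itself, 500 non-server operating systems, and a separate SQL server as an option from 250 to 531 computers). Network devices are left out because Essentials monitors an unlimited number of them.

```python
ESSENTIALS_MAX_SERVERS = 30       # monitored servers, not counting Essentials itself
ESSENTIALS_MAX_NON_SERVERS = 500  # non-server (client) operating systems
ESSENTIALS_SPLIT_SQL_FROM = 250   # total computers at which a separate SQL server is an option

def essentials_layout(servers, non_servers):
    """Return a suggested Essentials layout, or None if Essentials cannot cover it."""
    if servers > ESSENTIALS_MAX_SERVERS or non_servers > ESSENTIALS_MAX_NON_SERVERS:
        return None
    total = servers + non_servers + 1  # +1 for the Essentials server itself
    if total >= ESSENTIALS_SPLIT_SQL_FROM:
        return "single server, or SQL database on a second server"
    return "single server"

print(essentials_layout(30, 500))  # 531 computers: second SQL server is an option
print(essentials_layout(40, 100))  # None: too many servers for Essentials
```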

A single-server configuration is the simplest design. Assuming an average level of activity, your server should have two dual-core processors or better and 4GB of memory to achieve optimal results (shown in Table 4.13). The goal is to avoid taxing your processor with a sustained CPU utilization rate of more than 75%. With the proper hardware, a configuration of fewer than 30 managed nodes should not exceed this threshold. Make sure the tests you perform include any management packs you might add to Essentials, because management packs can easily add 25% to your server CPU utilization.
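A quick headroom check helps when testing a candidate server against the 75% sustained-CPU target. This Python sketch assumes the text's "25%" management pack overhead means percentage points added to the measured baseline rather than a 25% relative increase; the function names are illustrative.

```python
SUSTAINED_CPU_CEILING = 75.0  # percent, from the text

def projected_cpu(baseline_pct, mp_overhead_pct=25.0):
    """Projected sustained CPU after adding management packs.

    Assumption: the overhead is read as percentage points added to the
    measured baseline, not as a relative increase.
    """
    return baseline_pct + mp_overhead_pct

def has_headroom(baseline_pct, mp_overhead_pct=25.0):
    return projected_cpu(baseline_pct, mp_overhead_pct) <= SUSTAINED_CPU_CEILING

print(has_headroom(45.0))  # True  (45 + 25 = 70)
print(has_headroom(55.0))  # False (55 + 25 = 80)
```

The same check applies to the two-server configuration discussed later in this chapter, which uses the same 75% target.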

Table 4.13. System Center Essentials Hardware Recommendations

| Hardware | Requirement |
|---|---|
| Processor | Two dual-core processors |
| RAM | 4GB |
| Disk | 250GB space spread across dedicated drives for the operating system, data, and transaction logs |
| CD-ROM | Needed |
| Display adapter | Super VGA |
| Monitor | 800×600 resolution |
| Mouse | Needed |
| Network | 100Mbps |
| Operating system | Windows Server 2003 SP1 64-bit |

To provide sufficient server storage, we recommend approximately 250GB of disk storage available on the Essentials Server. Table 4.14 lists various methods for configuring drive space.

We recommend always placing the database on a disk array separate from the operating system; combining them can cause I/O saturation. Essentials also works best on a 100Mbps (or 1Gbps) network, connected to a switch (preferably on the backbone with other production servers).

You can also design Operations Manager to run in a single-server configuration. This is a good option when you need to monitor more than 30 but fewer than 100 servers, or when you need to monitor more than 500 non-server operating systems.

As with many other technical systems, using the simplest configuration meeting your needs works the best and causes the fewest problems. This is also true of OpsMgr; many smaller-sized organizations can design a solution composed of a single Operations Manager server that encompasses all required components. Certain conditions must exist in your infrastructure to make optimal use of a single-server OpsMgr installation. If the following conditions are present, this type of design scenario may be right for your organization:

Monitoring more than 30 but fewer than 100 servers (for fewer than 30 servers, see the “System Center Essentials” section of this chapter).

The number and type of management packs directly affect the hardware requirements for the OpsMgr solution. A general recommendation for a single-server configuration is not to deploy more than a dozen management packs and to make sure that they are fully tuned.

Maintaining one year or less of stored data.

Generally, a single Operations Manager server configuration works for smaller to medium-sized organizations and particularly those who prefer to deploy Operations Manager to smaller, phased groups of servers. Another advantage of Operations Manager’s architecture is its flexibility concerning changes in design scope. You can add more component servers to a configuration later without the worry of major reconfiguration.

Figure 4.5 shows the single-server configuration with all the components on one server.

Sometimes the scalability Microsoft builds into its products makes it challenging to determine how to deploy a multiple-server solution. We will cut through that and show you where you can deploy a relatively simple Operations Manager solution.

Operations Manager can scale from the smallest office to the largest, worldwide enterprises. Consequently, you must make decisions as to the size and placement of the OpsMgr servers. As part of the design process, an organization must decide between implementing a single server or a deployment using multiple servers. Understanding the criteria defining each design scenario aids in selecting a suitable match.

Figure 4.6 shows the two-server configuration with the OpsMgr components split between two servers (one to provide management server functionality, one to provide database functionality).

Using the SCCP to design an Operations Manager solution for 200 monitored servers, we can identify the recommended hardware for the environment, as shown in Figure 4.7, which illustrates a two-server configuration splitting the OpsMgr components.

Assuming an average level of activity, your servers should have two dual-core processors or better and 4GB of memory to achieve optimal results (as shown in Table 4.15). The goal is to avoid taxing your processor with a sustained CPU utilization rate of more than 75%. With the proper hardware, a configuration of fewer than 100 managed nodes should not exceed this threshold. Remember to test with any management packs you might add to OpsMgr, because management packs can easily add 25% to your server CPU utilization.

Table 4.15. Operations Manager Two-Server Hardware Recommendations

| Hardware | Requirement |
|---|---|
| Processor | Two dual-core processors |
| RAM | 4GB |
| Disk | Management server: 10GB space |
| | Database server: 150GB spread across dedicated drives for the operating system, data, and transaction logs |
| CD-ROM | Needed |
| Display adapter | Super VGA |
| Monitor | 800×600 resolution |
| Mouse | Needed |
| Network | 100Mbps |
| Operating system | Windows Server 2003 SP1 64-bit |

Now let’s apply some numbers to this. We will use a standard growth rate of 5MB per managed computer per day for the Operations database (discussed in the “Operations Database Sizing” section) and 3MB per managed computer per day for the Data Warehouse database (see the “Data Warehouse Sizing” section of this chapter). The total space for a year of stored data is 100 agents × 3MB/day × 365 days = 109,500MB, or a little over 100GB. The Operations database stores data for 7 days by default, which translates to 100 agents × 5MB/day × 7 days = 3500MB, or a little under 4GB. The Operations database no longer has the 30GB sizing restriction that existed with MOM 2005, but it is still prudent to allocate adequate space for it to grow to that size or greater. Approximating once again, we arrive at 250GB total space required. Table 4.16 lists a variety of drive sizes, the RAID configuration, and the usable space. This table assumes that the number of drives includes the parity drive in the RAID, but not an online hot spare.
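The sizing arithmetic for 100 agents can be reproduced with a few lines of Python, which makes it easy to rerun with your own agent count, growth rates, and retention. The helper name is illustrative, the growth rates are the ones quoted in the text, and the conversion uses 1GB = 1024MB.

```python
def db_growth_mb(agents, mb_per_agent_per_day, days_retained):
    """Estimated database size in MB for a linear per-agent daily growth rate."""
    return agents * mb_per_agent_per_day * days_retained

AGENTS = 100
dw_mb = db_growth_mb(AGENTS, 3, 365)  # Data Warehouse: one year of data
ops_mb = db_growth_mb(AGENTS, 5, 7)   # Operations database: 7-day default retention

# Convert with 1GB = 1024MB.
print(dw_mb, round(dw_mb / 1024, 1))    # 109500 106.9
print(ops_mb, round(ops_mb / 1024, 1))  # 3500 3.4
```

The linear model ignores grooming and aggregation effects, so treat the result as a planning floor and leave headroom on top of it.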

You should always place the database on a disk array separate from the operating system, because combining them can cause I/O saturation. OpsMgr also works best on a 100Mbps (or 1Gbps) network connected to a switch (preferably on the backbone with other production servers).

In the two-server configuration, the two servers split the components of the typical Operations Manager installation, based on function. The first server provides management server functionality, and the second provides all database functionality. See Chapter 6 for the specific steps for installing a two-server configuration.

Although it is often simpler to install a single Operations Manager management server to manage your server infrastructure, it may become necessary to deploy multiple management servers if you require the following functionality:

Monitoring more than 200 servers.

Collecting and reporting on more than a month or two of data.

Adding redundancy to your monitoring environment.

Monitoring computers across a WAN or firewall using the Gateway Server Component.

Providing centralized auditing capabilities for your environment using the Audit Collection Services functionality.

Segmenting the operational database to another server. (We discuss this in the “Designing a Two-Server Monitoring Configuration” section of this chapter.)

In multiple-server configurations, you split the OpsMgr components across different physical servers. These components were introduced earlier in the “Design” section of this chapter.

You can have more than one server configured for each of these server roles, depending on your particular requirements.

It is generally a good practice to include high levels of redundancy with any mission-critical server, such as the RMS, Operations consoles, and database servers. We recommend you include as many redundant components as feasible into your server hardware design and choose enterprise-level servers whenever possible.

Disk space for the Operations database server is always a consideration. As with any database, the Operations database can consume vast quantities of drive space if left unchecked. Although Microsoft has removed the historical 30GB limit on the Operations database, we still recommend that you limit its size to maximize performance and minimize backup times. The drive storing the Operations database should have free space of slightly more than twice the size of the database, to support operations such as backups and restores and to absorb emergency growth of the database. In addition, regular full backups of the database are a must. We discuss backups in detail in Chapter 12, “Backup and Recovery.”
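The free-space rule of thumb can be expressed as a quick check. This is a sketch only: the text says "slightly more than" twice the database size, and the 10% margin used here to represent "slightly more" is an assumption, not a documented figure. The function names are hypothetical.

```python
# Free-space check for the drive holding the Operations database.
# Rule from the text: keep slightly more than 2x the database size free.
# Assumption: "slightly more" is modeled as a 10% margin.


def required_free_gb(db_size_gb: float, margin: float = 0.10) -> float:
    """Free space to keep available for backups, restores, and emergency growth."""
    return 2 * db_size_gb * (1 + margin)


def has_enough_free_space(db_size_gb: float, free_gb: float, margin: float = 0.10) -> bool:
    """True if the drive's free space meets the rule of thumb."""
    return free_gb >= required_free_gb(db_size_gb, margin)


if __name__ == "__main__":
    # A 30GB Operations database needs roughly 66GB free under these assumptions.
    print(f"{required_free_gb(30):.0f}GB")
    print(has_enough_free_space(30, free_gb=50))
```

Adjust `margin` to match your own tolerance; the point is that free space must scale with the database, not stay fixed.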

You can configure the Data Warehouse server in a manner similar to the Operations database server, but the Data Warehouse database requires a much larger storage capacity. Whereas the Operations database typically stores data for a period of days, the Data Warehouse database typically stores the data for a year. This data can grow rapidly; we discuss this in the “Designing OpsMgr Sizing and Capacity” section later in this chapter.

For optimal performance, place management servers close to their database servers or to the agents they manage. However, several key factors can play a role in determining where the servers will reside:

Maximum bandwidth between components—. OpsMgr servers should have fast communication between all components in the same management group to maximize the performance of the system. The management server needs to be able to upload data quickly to the Operations database. This usually means T1 speed or better, depending on the number of agents the management server is supporting.

Redundancy—. Adding management servers increases the failover capability of OpsMgr and helps to maintain a specific level of uptime. Depending on your organization’s needs, you can build additional servers into your design as appropriate.

Scalability—. If a need exists to expand the OpsMgr environment significantly or increase the number of monitored servers with short notice, you can establish additional OpsMgr management servers to take up the slack.

In most cases, you can centralize the management servers close to the Operations database and let the agents communicate with the management servers over any slow links that might exist.

As a rule, for multiple-server OpsMgr configurations, you should have at least two management servers for redundancy.

As defined in Chapter 3, Operations Manager management groups are composed of a single SQL Server operational database, a unique name, the RMS, and optional components such as additional management servers. Each management group uses its own Operations database and maintains its own separate configuration. As an example, two management groups can have the same management pack (MP) installed but different overrides configured within the MP. OpsMgr 2007 allows multiple management groups to write to a single Data Warehouse database (unlike MOM 2005, where the reporting environment could only have one management group report directly to the Reporting database).

It is important to note that you can configure agents to report to multiple management groups and connect management groups to each other. This increases the flexibility of the system and allows for the creation of multiple management groups based on your organization’s needs. There are five major reasons for dividing your organization into separate management groups:

Geographic or bandwidth-limited regions—. Similar in approach to Windows 2003 sites or Exchange 2003 routing groups, you can establish Operations Manager management groups in segregated network subnets to reduce network bandwidth consumption. We do not recommend spanning management groups across slow WAN links because this can lead to link saturation (and really upset your company’s network team). Aligning management groups on geographic or bandwidth criteria is always a good idea.

The size of the remote office has to justify creating a new management group. As an example, a site with fewer than 10 servers typically does not warrant its own management group. The downside to this is a potential delay in notification of critical events.

Functional or application-level control—. This is a useful feature of Operations Manager, enabling management of a single agent by multiple management groups. The agent keeps the rules it gets from each of the management groups completely separated and can even communicate over separate ports to the different management groups.

Political or business function divisions—. Although not as common a solution as bandwidth-based management groups, OpsMgr management group boundaries can be aligned with political boundaries. This would normally only occur if there was a particular need to segregate specific monitored servers to separate zones of influence. For example, the Finance group can monitor its servers in its own management group to lessen the security exposure those servers receive.

Very large numbers of agents—. In a nutshell, if your management group membership approaches the maximum number of monitored servers, it is wise to split the agents across multiple management groups to improve network performance. This is appropriate when the number of managed computers approaches 5000, when the database grows to a size that degrades performance, or when the database can no longer be backed up and restored effectively. In MOM 2005, the maximum supported database size was 30GB (including 40% free space for indexing). In OpsMgr 2007, there is no supported size limit, but the best-practice approach is to keep it under 40GB, or even under 30GB if you are able to do so. We have heard of transient issues when the Operations database is greater than 40GB.

Preparing for mergers—. Organizations expecting company mergers or with a history of mergers can use multiple management groups. Using multiple management groups enables quick integration of multiple OpsMgr environments.

Let’s illustrate this point using the bandwidth-limited criterion. If your organization is composed of multiple locations separated by slow WAN links, it is best to place each location that is not connected by a high-speed link in its own management group and set up connected management groups.

Another example of using multiple management groups is when IT support operations are functionally divided into multiple support groups. Take an example where there is a platform group (managing the Windows operating system) and a messaging group (managing the Exchange application). In this instance, two management groups might be deployed (a platform management group and a messaging management group). The separate management groups would allow both the platform group and the messaging group to have complete administrative control over their own management infrastructures. The two groups would jointly operate the agent on the monitored computers.
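The numeric criteria discussed in this section (agent counts approaching 5000, an Operations database above 40GB or the preferred 30GB, and remote sites with fewer than 10 servers) can be collected into a rough planning check. The sketch below is hypothetical: the class and function names are illustrative, and the 90% threshold used to decide when a value "approaches" a limit is an assumption. It does not cover the political, functional, or merger-related reasons, which are judgment calls.

```python
# Hypothetical planning check for the numeric management-group criteria in the text.
# Assumption: a value "approaches" a limit once it reaches 90% of it.

from dataclasses import dataclass

AGENT_LIMIT = 5000          # agents per management group (from the text)
OPS_DB_LIMIT_GB = 40        # best-practice ceiling for the Operations database
OPS_DB_PREFERRED_GB = 30    # preferred ceiling, if achievable
MIN_SITE_SERVERS = 10       # smaller sites typically don't warrant their own group


@dataclass
class ManagementGroupStats:
    agents: int
    ops_db_gb: float


def split_warnings(stats: ManagementGroupStats, approach: float = 0.9) -> list[str]:
    """Return reasons to consider splitting agents across more management groups."""
    reasons = []
    if stats.agents >= approach * AGENT_LIMIT:
        reasons.append(f"{stats.agents} agents is approaching the {AGENT_LIMIT}-agent guideline")
    if stats.ops_db_gb > OPS_DB_LIMIT_GB:
        reasons.append(f"Operations database at {stats.ops_db_gb}GB exceeds {OPS_DB_LIMIT_GB}GB")
    elif stats.ops_db_gb > OPS_DB_PREFERRED_GB:
        reasons.append(f"Operations database at {stats.ops_db_gb}GB exceeds the preferred {OPS_DB_PREFERRED_GB}GB")
    return reasons


def site_may_warrant_own_group(server_count: int) -> bool:
    """Sites with fewer than 10 servers typically do not warrant their own management group."""
    return server_count >= MIN_SITE_SERVERS


if __name__ == "__main__":
    print(split_warnings(ManagementGroupStats(agents=4600, ops_db_gb=35.0)))
    print(site_may_warrant_own_group(8))
```

A non-empty result is a prompt to revisit the design, not a hard rule; bandwidth and organizational boundaries often matter more than raw counts.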

Unlike management servers, of which a management group can have several, each management group has only one Operations database. Consequently, keep the following factors in mind when placing and configuring each SQL Server installation:

Network bandwidth—. As with the other OpsMgr components, it is essential to place the Operations database on a well-connected, resilient network that can communicate freely with the management server. Slow network performance can significantly affect the capability of OpsMgr to respond to network conditions.

Hardware redundancy—. Most enterprise server hardware contains contingencies for hardware failures. Redundant fans, RAID mirror sets, dual power supplies, and the like will all help to ensure the availability and integrity of the system. SQL Server databases need this level of protection because critical online systems require immediate failover for accessibility.

If you have high-availability requirements, you should cluster and/or replicate the database to provide redundancy and failover capabilities.

Backups—. Although some people consider high availability to be a replacement for backups, it is not one, and setting up your OpsMgr environment for hardware redundancy can be an expensive proposition. You should establish a backup schedule for all databases used by Operations Manager as well as the system databases used by SQL Server. We discuss backups in Chapter 12.

SQL Server licensing—. Because SQL Server is a separate licensing component from Microsoft, each computer that accesses the database must have its own Client Access License (CAL), unless you are using per-processor licensing for the SQL Server. As we discussed in the “Planning for Licensing” section of this chapter, if you use System Center Operations Manager 2007 with the SQL 2005 Technologies license, no CALs are required. However, remember that no other databases or applications can use that instance of SQL Server.

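Licensing can be an important cost factor. Database clustering with more than two nodes requires SQL Server Enterprise Edition; SQL Server 2005 Standard Edition supports only two-node failover clusters.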

With MOM 2005, the Operations database server transferred data to the Reporting database once a day using a DTS package. In OpsMgr 2007, the management server writes directly to the Data Warehouse database instead of relying on a daily scheduled DTS package. Consequently, solid network connectivity should exist between the management servers, the Operations database server, and the Data Warehouse database server.

Although Operations Manager 2007 contains multiple mechanisms that allow it to scale to large environments, design limitations may apply depending on your specific environment. The number of agents you deploy and the amount of data you collect directly affect capacity limits and the size of your database. Understanding exactly what OpsMgr’s limitations are helps you decide which design to use.

Data flow in OpsMgr 2007 is an important design consideration. A typical Operations Manager environment has a large quantity of data flowing from a relatively small percentage of sources within the IT environment. The data flows are latency sensitive because alerts and notifications must be delivered within a short timeframe (measured in seconds).

We have found that traffic from an agent to its management server varies with the management packs you deploy, ranging from about 1.2Kbps to 3Kbps per agent. For our calculations, we use the upper end of that range in the following formula:

3Kbps * <number of agents> = total traffic (in Kbps)

The data flowing from each agent is modest when considered individually. However, in aggregate across a large number of servers, the load can be significant, as illustrated in Table 4.17, which shows the estimated minimum bandwidth for just the Windows operating system base management pack. Using Table 4.17, you can see that multiple 100Mbps or Gigabit network cards become important above the 500-agent mark.
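The bandwidth formula can be applied to a range of agent counts as a quick starting point. This sketch assumes the 3Kbps per-agent figure from the formula (the upper end of the observed 1.2Kbps to 3Kbps range) and decimal units (1Mbps = 1000Kbps). It estimates steady-state average traffic only and is not a reproduction of Table 4.17; the function name is hypothetical.

```python
# Aggregate agent-to-management-server bandwidth:
#   3Kbps * <number of agents> = total traffic (in Kbps)
# Assumption: 1Mbps = 1000Kbps; figures are steady-state averages.


def agent_traffic_kbps(agents: int, kbps_per_agent: float = 3.0) -> float:
    """Estimated total traffic from all agents, in Kbps."""
    return kbps_per_agent * agents


if __name__ == "__main__":
    for n in (100, 500, 1000, 5000):
        kbps = agent_traffic_kbps(n)
        print(f"{n:>5} agents: {kbps:>8,.0f}Kbps ({kbps / 1000:.1f}Mbps)")
```

Pass a lower `kbps_per_agent` (for example, 1.2) to model a lighter management pack load.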

You can adjust the data flow in two ways:

How often the agents upload their data

How much data you upload

When considering how to adjust the settings for low-bandwidth agents, you can tune the heartbeat and data upload intervals. However, this will not reduce the overall volume of information: you will upload larger batches less frequently, but the total quantity of data stays the same. To reduce the data volume, you need to adjust the rules to collect less data. As an example, changing the sample interval of a performance counter from 15 minutes to 30 minutes halves the volume of data that counter generates.
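The difference between the two adjustments can be shown with simple counting. In this sketch, doubling a performance counter's sample interval halves the samples collected per day, while changing how often data is uploaded only regroups the same samples into different batch sizes. The even-batching model in `upload_batches` is a hypothetical illustration, not how the OpsMgr agent actually schedules uploads.

```python
# Sample-interval changes alter data volume; upload-frequency changes do not.

MINUTES_PER_DAY = 24 * 60


def samples_per_day(interval_minutes: int) -> int:
    """Samples one performance-counter rule collects per agent per day."""
    return MINUTES_PER_DAY // interval_minutes


def upload_batches(daily_samples: int, uploads_per_day: int) -> list[int]:
    """Split a day's samples into near-equal upload batches (illustrative model only)."""
    base, extra = divmod(daily_samples, uploads_per_day)
    return [base + (1 if i < extra else 0) for i in range(uploads_per_day)]


if __name__ == "__main__":
    at_15 = samples_per_day(15)
    at_30 = samples_per_day(30)
    print(at_15, at_30)                       # 96 48: doubling the interval halves the volume
    print(upload_batches(at_15, 4))           # fewer uploads mean bigger batches...
    print(sum(upload_batches(at_15, 24)))     # ...but the daily total is unchanged
```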