Chapter Seven. Design Thinking

The unique ability of human beings to create images of things that do not exist is what design thinking is all about. Everything around us has been designed; without design capability we would not have a human civilization. Design, science, and art form the three dimensions of the incredible human cognitive ability. In addition, design thinking has a unique characteristic that makes it possible for it to be “universalized.” This means that design could be used as a vehicle to communicate across disciplines, putting it at the core of systems methodology.

To design is to choose rather than predict the future. Design is the vehicle by which choice is manifested, and this choice includes elements of desirability. Since performance of any system is essentially design-driven, an order-of-magnitude improvement requires a redesign. Design also develops innate abilities in dealing with real-world, ill-defined, ill-structured, or “wicked” problems. To design is to create an integrated whole from differentiated parts.

Keywords: Cognitive ability; Conjecture; Cultural transformation; Design for participation; Desirability; Differentiation; Dynamic structure; Human intelligence; Idealization; Integration; Interactive design; Iteration; Learning and adaptation; Mental image; Mess; Modular design; Modularity; Operational viability; Platform; Purposeful; Realization; Second-order machine; Self-evolving; Self-organizing; Social calculus; Social change; Successive approximation; Systems methodology; Technological feasibility; Universalized

During the 1960s, I had the privilege of being an IBMer. It was a period when IBM was probably experiencing some of its best and most exciting times. One of my notable assignments was to learn Operations Research (OR) to help our clients interested in its application. That is how I became familiar with the name R.L. Ackoff. However, my fascination with OR only lasted for a few years, and after implementing a few projects with a group of clients, I learned that decision makers — despite their willingness to pay handsomely for the work — were not interested in the optimum solution. They were only interested in confirming the choices they had already made. This is when I came to realize that the world is not run by those who are right; it is run by those who can convince others that they are right.

After this eye-opening experience, I became preoccupied with the question of why people do what they do, which led me to the fascinating concept of choice. Despite the overwhelming evidence that understanding choice is a requisite to understanding human systems, the dominant analytical culture with a scientific tag had no interest in disturbing its well-groomed analytical approach to include the messy notion of choice.

The critical question was how the notion of choice enters into the equation of interdependency, self-organizing tendencies, and the dynamics of social change. Ironically, it was Russell Ackoff who came to my rescue. When we met in 1974, I had already read his Herculean work, On Purposeful Systems (Ackoff, 1972). After a whole day of intense discussions he gave me the clue and insight that has since become the centerpiece of my professional life. He taught me why design is the vehicle through which choice is manifested and how design thinking can effectively deal with the three concerns of interdependency, self-organization, and choice all at the same time.

After 35 years of friendship, business partnership, and close collaboration with Ackoff, I wonder, in the following formulation, where does he end and where do I actually begin?

7.1. Design thinking, as the systems methodology

In his classic work, The Sciences of the Artificial, H.A. Simon (1996) makes two profound statements. The first is the observation that “the natural sciences are concerned with how things are; design, on the other hand, is concerned with how things ought to be.” The second observation is the assertion that design thinking has a unique characteristic that makes it possible for it to be “universalized.” This means that design could be used as a vehicle to communicate across disciplines.

Any professional whose task is to create, to dissolve problems, to choose, and to synthesize is involved with design thinking. Many beautiful designs have been produced in a variety of fields ranging from physical environments and artifacts to music (composition), philosophy (design of inquiring systems), and political and economic arrangements. Some great thinkers have even taken whole societies as systems to be redesigned.

Nigel Cross (2007), in his beautiful book Designerly Ways of Knowing, makes the following indisputable observation:

Everything we have around us has been designed.

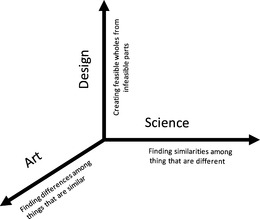

Design ability is, in fact, one of the three fundamental dimensions of human intelligence. Design, science, and art form an “and,” not an “or,” relationship to create the incredible human cognitive ability (Figure 7.1):

• Science — finding similarities among things that are different

• Art — finding differences among things that are similar

• Design — creating feasible wholes from infeasible parts

The unique ability of human beings to create images is what design thinking is all about. In this context the distinct advantage of design thinking is to produce new alternatives. It goes beyond default solutions by looking for new exciting possibilities. It is not about selecting the “best” from the existing set of alternatives. The choices in the existing set usually share one or more properties based on an explicit or implicit set of assumptions or constraints produced by the actors' previous experiences with similar situations. The conventional practice of using advanced analytical tools to help select the best alternative will only result in repeating the same known pattern of behavior, since the underlying assumptions governing the generation of alternatives remain unchallenged.

Design thinking, on the other hand, involves challenging assumptions. It represents a qualitative change that includes the notion of beauty and desirability. In this way design results in identifying new sets of alternatives and objectives, looking for more desirable possibilities for the future. Einstein put it so beautifully when he said, “We cannot solve our problems with the same thinking we used when we created them.”

To design anything requires a core concept and basic understanding of the subject to be designed. To design a car one needs to have a concept of a car and be knowledgeable about the state of the art and the relevant technologies. To design a social system a practical knowledge about the nature of sociocultural systems is required. In this context nothing is more practical than a good operational theory. Without an explicit theory about the nature of the beast and the reasons why it does what it does, one would be condemned to keep repeating the same non-solutions all over again. All actions are preceded by some mental image, or theory, about the reality. Nobody, except perhaps a newborn child, is without a theory, no matter how crude and implicit. Those who claim that they are without one are either expecting others to accept their opinions without question or are simply unaware that they have one by default. Avoiding a theoretical maze does not mean falling into the other extreme of mindless action. As long as the assumptions underlying the action are explicit, there exists the chance to learn from experience and improve the quality of our practice. Part Two of this book covered in some detail the critical assumptions about the nature of sociocultural systems; it is therefore an integral part of our theory of design.

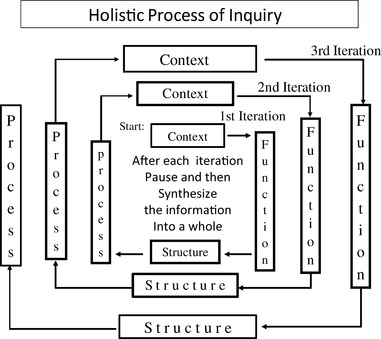

At the core of the design process is the iterative process of holistic thinking. To design is to create structure, functions, and processes in a given context. The context defined by direct user feedback provides the selection criteria for the design process. The point to emphasize is that we cannot deal with context, function, structure, and process independent from one another. We need to use an iterative process to keep the relationships among them interactive and meaningful.

To start, designers must stay at higher levels of abstraction, not letting the tendency to be lost in detail distract them from the ability to be playful in thinking about the whole.

With the first iteration designers will concentrate on developing the desired specifications of the system to replace the existing order. Start by specifying the function — the outputs or the desired impact designers would like the system to have on the larger system of which it is a part. This means understanding the context, especially the behavior of the stakeholders. Who are they and what is their interest? Which variables do they control and which ones do they influence? Designers then will try to understand and define the interdependencies among the many and often diverse desired specifications. They will find out which initial specifications are compatible and reinforcing, which ones are complementary and counterbalancing, and which ones are in conflict. They will also try to reconceptualize any conflicting requirements to dissolve the differences. In the second iteration, designers will let their imaginations take over to create abstract mental images of possible structures and processes that potentially would produce the desired outputs. Then they need to pause and synthesize the selected images into a conjecture (rough approximation of a cohesive whole that only defines primary functions), basic structure, and the outline of the throughput process.

Please recall that the same iterative holistic process that guided the process of inquiry to define problems is also used to design solutions (Figure 7.2).

In the third iteration, they will make a symbolic model of the design to be used to communicate with the design itself and with the stakeholders to achieve consensus that satisfies all concerns. The next iteration will convert this initial rough design into the desired next generation of the system. In successive iterations, after testing for operational viability, more detail and specificity are incorporated into the design. No design will be viable without a thorough understanding of its dynamic behavior. The need to understand the unintended consequences of our design, and to map the behavior of positive and negative feedback loops so as to capture the control system, makes operational thinking a critical component of our design theory.

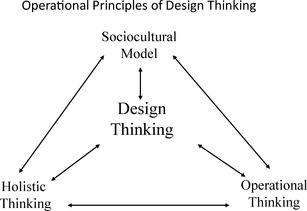

Therefore, holistic thinking, operational thinking, and sociocultural thinking together with design thinking form an interactive set (Figure 7.3). They complement each other to create a competent and exciting methodology that goes a long way in dealing with the emerging challenges of what may seem to be overwhelmingly complex and chaotic social systems.

7.2. Operating principles of design thinking

Design is an intuitive process of creating exciting feasible wholes from messy infeasible parts. It is about selecting a desired future and inventing the ways to bring it about. Design develops innate abilities in dealing with real-world, ill-defined, ill-structured, or “wicked” problems.

Here are ten operating principles of design thinking:

1. No problem or solution is valid free of context.

2. Performance of a system is essentially design-driven. An order-of-magnitude improvement requires a redesign.

3. To design is to choose rather than predict the future. The choice includes elements of desirability.

4. A redesign should always deal with both implicit and explicit functions. Ignoring implicit functions would result in a situation where the operation was successful but the patient died.

5. Design is an emergent outcome: its ultimate boundary, functions, structure, and processes evolve interactively. Designers must develop the confidence to define, redefine, and change the problem in light of the situation that emerges as the design activity evolves.

6. Design thinking involves conceptual abstraction and active experimentation. It is as much an art as a science. A system designer should have the capacity for abstraction and sensitivity to be moved by the power of an idea.

7. Design culture relies not so much on verbal, numerical, and literary modes of thinking and communicating, but on nonverbal modes. Sketches are a means of producing “reflective conjecture” and thus a dialog with the design subject.

8. To design a social system is to produce a clear and explicit image of the desired outcome. It should remove the fear of the unknown. Motherhood statements will not do it.

9. Design is the instrument of innovation. Innovation starts by questioning the sacred assumptions and denying the commonly accepted constraints with playful reflections on technology and market opportunities.

10. Finally, design thinking is the ability to differentiate and integrate at the same time. Design is the most effective tool of integration: to design is to create an integrated whole from differentiated parts.

It would be timely here to recall the principle of multidimensionality and the discussion that integration and differentiation form a complementary pair. Their interdependency is such that for any given level of differentiation there is a corresponding level of integration; otherwise, the system will fall into a state of chaotic complexity.

Unfortunately, subspecialization in every discipline has reached such a level that we have produced an aggregate of subcultures that are not even capable of communicating within the same discipline, let alone across disciplines. As a modern scientific culture, we have differentiated without paying any attention to the need for integration. It is no wonder we have lost the ability to connect the dots in a host of arenas. Despite all claims to the contrary, most contemporary organizations are merely collections of silos of differentiated functional departments, each with very high levels of expertise, without any real means of integration among them. We have naively assumed that coordination is the same as integration. We have erroneously reasoned that if several functions report to a coordinating boss he/she will be able to integrate them into the emerging whole and properly connect the dots. The recent financial crisis, the housing bubble, and the obvious messes we are facing in education, immigration, and health-care systems, just to name a few, should provide enough evidence to convince us that our integrative efforts have been a failure.

The failure to integrate specialized parts of the organization makes the following discussion of modular design especially important. Modularity is at once a great vehicle for designing complex systems, a perfect mechanism for functional integration at operational levels, and an effective format for creating dynamic structure at the architectural levels of social systems.

7.3. Modular design

IBM introduced its formidable architecture for the design of its 360 line of computers in the mid-1960s. This was the turning point that led the way for the creation of the present monumental state of information technology. The design contained a revolutionary conception known as modularity, which transformed the field of complex system design to an unbelievable state of possibilities and potentiality.

Being at the right place and at the right time, I was lucky to be among the first few in the IBM World Trade Corporation who got the call to learn “360 architecture” and the magic of its modular design. The experience had such a profound influence on my professional life that many years later it became the basis for my attempt to develop a systems theory of organization (Gharajedaghi, 1985) and to bring the beauty and immense potentiality of the multidimensional modular design into the social context, more specifically, design of business architecture.

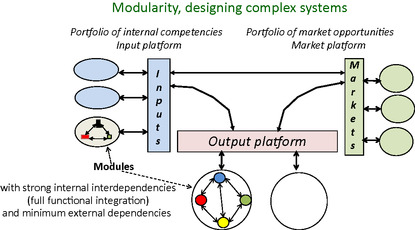

A module is an element in the architecture that is designed in such a way that it has strong internal interdependency (full functional integration) and weak or minimal external dependencies and interactions. This fully integrated module is then connected to a specific platform designed to simplify the communications or interactions between the module, its host platform, and the rest of the system. A module, therefore, is an integrative vehicle to facilitate and manage all of the internal interactions among the differentiated functions to produce an emergent whole (a signal or an outcome) to be transmitted or transferred to external elements of the system of which the module is a part.

A platform is a predefined host for a given class of modules. Each module of this class might have its own unique functionality, but all would share one common characteristic that defines the nature of their relationships with the rest of the system's platforms and modules. The platform, in essence, is a predesigned interface with the critical function of simplifying and managing all of the interactions of its class of modules with the rest of the architecture.

Multidimensional modular design is the architecture of a dynamic structure. It is an integrated system of differentiated platforms (each with its own class of modules) with a dynamic but simplified, predefined relationship. In this architecture combinations of different modules from different platforms transform the system into a different design as necessary. For example, we find a telephone, a music box, a GPS, a camera, or hundreds of amazing applications in Steve Jobs' incredible iPhone.

Figure 7.4 is the representation of a multidimensional modular design with the required minimum of three dimensions of inputs, outputs, and markets platforms. The input platform is the host of the class of modules that represents internal resources and competencies of the system such as a knowledge bank, manufacturing unit, and shared services. Input modules are also responsible for continuously refurbishing internal resources and competencies by continuous re-education and research. In addition to mastering their functional expertise, members of the knowledge bank will be required to learn design methodology. On the other side we have a market platform that includes the class of modules with the capability to access different markets and ability to assess their emerging needs and opportunities. Finally, the outputs platform hosts a group of dynamic modules that have the ultimate authority and responsibility for designing products, programs, and projects by continuously matching external opportunities with internal competencies. The products, programs, and projects have varying life spans that range from days to months or even years if necessary. Although output modules control the flow of money they do not own any resources (all capital assets belong to input modules); therefore, resistance to change is minimized.
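The relationship among modules, platforms, and output modules described above can be illustrated with a minimal Python sketch. It is only one possible reading of the architecture, not a model taken from the text: the class names `Module` and `Platform`, the function `assemble_project`, and the capability labels are all hypothetical, and the matching rule (pick the first provider in alphabetical order) is an assumption made to keep the example small.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """A unit that is integrated internally and exposes one simple external face."""
    name: str
    capabilities: frozenset  # hypothetical labels: what it offers or what it needs


@dataclass
class Platform:
    """A predefined host for one class of modules; all access goes through it."""
    kind: str
    modules: dict = field(default_factory=dict)

    def plug_in(self, module: Module) -> None:
        self.modules[module.name] = module

    def find(self, capability: str) -> list:
        """Names of hosted modules offering the capability, sorted alphabetically."""
        return sorted(n for n, m in self.modules.items() if capability in m.capabilities)


def assemble_project(needs, inputs: Platform):
    """Output-module role: match market needs with internal competencies.

    Returns {need: input module name}, or None when some need has no provider.
    The output module only borrows competencies; it owns no resources itself.
    """
    team = {}
    for need in sorted(needs):
        providers = inputs.find(need)
        if not providers:
            return None
        team[need] = providers[0]  # assumption: first provider is good enough
    return team


inputs = Platform("input")
inputs.plug_in(Module("knowledge_bank", frozenset({"chemical", "process"})))
inputs.plug_in(Module("manufacturing", frozenset({"fabrication"})))

markets = Platform("market")
markets.plug_in(Module("electronics", frozenset({"chemical", "fabrication"})))

opportunity = markets.modules["electronics"].capabilities
project = assemble_project(opportunity, inputs)
print(project)  # {'chemical': 'knowledge_bank', 'fabrication': 'manufacturing'}
```

Because modules reach one another only through a platform, a new input or market module can be plugged in without changing `assemble_project`, which is the sense in which the structure stays dynamic.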

The following example should help explain the intricacies of modular design and answer questions regarding its setup. A client of mine was in the business of designing custom-made separation units to purify water for customers in the electronics industry. The company's core was its engineering division, which was headed by the chief engineer. Reporting to the chief engineer were the mechanical engineering, electrical engineering, chemical engineering, and process engineering departments. Each of the four engineering departments in turn had its own chief engineer as well as a deputy chief, a senior engineer, and a few junior engineers who actually did the work. The chief engineer was the first to receive a client order secured by the company's sales department, and once the chief approved the order, the design process began. The process involved all four departments but in a successive order. It began by transferring the order to the chief chemical engineer, who then forwarded it to the deputy chief chemical engineer, and then down to the senior engineer who finally selected one of the junior chemical engineers to initiate the design. The junior chemical engineer, upon completing his part of the design, began the ascending order of forwarding his work back up the chain to the senior engineer, followed by the deputy chief, and finally the chief chemical engineer, who then transferred the approved design to the chief process engineer. The whole pattern was then repeated in the process, electrical, and mechanical engineering departments. When the final design was completed, it was then returned to the accounting and sales group for pricing and cost calculations. Once the final budget was priced and shared with the client, the back and forth process of price negotiations between the client and the company's sales and engineering teams began.

This process usually took about six to eight months to complete at an estimated cost of around $500,000. Over time, both the timetable and the associated costs surrounding each bid increased at an annual rate of 5% and this was becoming a major source of friction between the company and its customers.

After reviewing the situation it was obvious that we needed a modular design. But the traditional culture with its strong functional structure was not ready for the kind of change that modular design requires. To reduce resistance to change, we proposed a simple experiment to see if there were alternative processes the company could consider to reduce cost and improve timing. The experiment was to create a learning cell involving four junior engineers, one from each of the engineering departments, in addition to a cost accountant and a sales engineer from the sales department. Each member of the learning cell was asked to teach the remaining members of the team the essence and critical aspects of the functions he/she performed, and more specifically how each one of the defining variables impacted the final result. The learning cell was to manage this task on their own time in an effort to prevent this exercise from affecting their present work. As an incentive, each member of the team was promised a $10,000 salary increase if all of the members of the learning cell demonstrated that they had learned all of the functions and critical concerns of this design process from one another and successfully finished an assigned project.

After three months, the group was ready to accept its first project. When the project arrived, all six members of the module met with the client together to learn about its operations, major concerns, objectives, and requirements firsthand and as a team.

It took the modular design team only four weeks after this visit to produce the first iteration of their design. This design, with small modifications, was approved by the client and finalized in another week. Total cost was $120,000 and total time was six weeks. This experiment was duplicated twice with similar results, and as such paved the way for the entire organization to convert to a modular design.
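The size of the improvement can be checked with simple arithmetic on the figures quoted in this example (about $500,000 and six to eight months before; $120,000 and six weeks after; six team members each promised a $10,000 raise). The short sketch below does that calculation. The conversion of months to weeks (52/12 weeks per month) is an assumption, and the variable names are illustrative only.

```python
WEEKS_PER_MONTH = 52 / 12  # assumption: average month length in weeks


def reduction(before: float, after: float) -> float:
    """Fractional reduction when going from `before` to `after`."""
    return 1 - after / before


# Figures quoted in the water-purification example
cost_cut = reduction(500_000, 120_000)
time_cut_low = reduction(6 * WEEKS_PER_MONTH, 6)   # against a six-month bid
time_cut_high = reduction(8 * WEEKS_PER_MONTH, 6)  # against an eight-month bid

saving_per_project = 500_000 - 120_000
incentive_total = 6 * 10_000  # six cell members, $10,000 raise each

print(f"cost reduced by {cost_cut:.0%}")                                # 76%
print(f"time reduced by {time_cut_low:.0%} to {time_cut_high:.0%}")    # 77% to 83%
print(f"saving on one project: ${saving_per_project:,}")               # $380,000
print(f"annual cost of the incentive: ${incentive_total:,}")           # $60,000
```

On these numbers, the saving on a single project is several times the annual cost of the salary incentive offered to the learning cell.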

7.4. Design and process of social change

We have said that self-organizing, purposeful, sociocultural systems are self-evolving. They do not simply adapt to their environments but co-evolve with them. They can change the rules of interaction as they evolve over time. However, like all living systems, they exhibit a tendency toward a predefined order. Their behavior is guided by an implicit, shared image. They tend to approximate and reproduce a given pattern of existence very closely. To change this pattern of behavior the implicit shared image (the organizing attractor) needs to be changed.

The shared image, itself a complex design, stands at the center of the process of change. Once formed it acts as a filter so that success of any action intended to produce change invariably depends on the degree it penetrates and modifies this shared image.

Ackoff's systems methodology aims at the core of this conception. Its ultimate objective is to replace the distorted “shared image” responsible for regenerating a pattern of malfunctioning order with a shared image of a more desirable future. Working together for decades in INTERACT, the Institute for Interactive Management, we have used the interactive design process to redesign distorted shared images and to produce a lasting change in the behavioral pattern of many social systems.

Designers replace the existing order by operationalizing their most exciting image of the future — the design of the next generation of the system. An explicit and exciting design/image of the future is a powerful instrument of change. The desired future is then realized by successive approximation (inventing the ways to close the gap between actual and desired states).

This pretentious and daring optimism, however, is based on the following assumptions:

• Penetrating the shared image is more a question of excitement than logic. An exciting image of the future coupled with the instinctive human desire to share is a powerful instrument of change. This is why active participation of members (stakeholders) in producing a design is the fundamental, uncompromising operating principle of interactive design.

• Challenging the established relationships among people is not an easy proposition. However, people are more likely to accept a change if they had a hand in shaping it.

• The best way to learn and understand a system is to redesign it.

7.5. Interactive design

Interactive design is identified with Russell Ackoff. It is the core of his famous purposeful systems methodology. The ultimate aim of interactive design is to define problems and design solutions in an environment characterized by chaos and complexity. According to Ackoff (1981), “We fail more often not because we fail to solve the problems we face but because we fail to face the right problem.” We have routinely been taught how to solve problems, but rarely how to define one.

Traditionally, there are three ways to define a problem. The most common approach defines problems as deviations from the norm. The major shortcoming of this approach, besides the difficulty in defining the “norm” in a sociocultural system, is to reinforce the existing order. This is usually done despite strong suspicion that the existing order might be the source of the problem. A simple example is the “back to basics” movement in education.

A lack of resources is the next popular way to define a problem. It seems that somehow we cannot get enough information or money, and most certainly we do not have enough time to deal with most situations. This should hardly be a surprise, since time, information, and money are universal constraints. We will never have enough money. We will never have enough time. We will never have enough information. The more we know, the more we know that we do not know. A minister of economy in my native country once asked me to help him assess the impact of a certain decision on three important factors he was concerned with. I told him it would take me a month to develop the proper model. He replied, “The decision is going to be made without you. If you want to have any influence on this one, be in my office with your model at 7:00 a.m. Monday morning. Otherwise, get the hell out of the way.”

The third, and perhaps the most obstructive, way to define a problem is the tendency to define it in terms of the solution we already have. Existing solutions conveniently shield us from seeing the reality, so we accept the problem at face value. Not surprisingly, an operations researcher may see a situation as an allocation problem, while an accountant may consider it a cash flow problem.

Those of us trained as professionals — engineers, doctors, and lawyers — come with a tool bag. In each area we have been exposed to a series of classic cases, which supposedly resemble the problem set we are expected to encounter in our professional lives. We learn the solutions to these problems at the professional schools and store them in our tool bag for future use. What we have to do in real-life situations is find similarities between the situation we face and one of those cases, and then simply apply the proposed solution in the case to the problem at hand. This approach is so ingrained in the way we do things that we are not even willing to entertain a question if we do not already have the answer. A valued client once protested, “Why are you pressuring me to face this problem when you know very well that I don't have a solution for it?” It was not easy to convince him that today's problems no longer yield ready-made solutions and that his job had changed from a tool user to a tool maker. I had to remind him that he was paying me quite handsomely to help him do so. Unfortunately, even if we have a potent and innovative solution at hand, most of the time we lack the confidence to use it. We need to know who has done it before. It is as if, despite all claims to the contrary, we do not dare to be the first. Stafford Beer (1967) has expressed this phenomenon elegantly: “Acceptable ideas are competent no more, but competent ideas are not yet acceptable. This is a dilemma of our time.”

We have said that no problems or solutions are valid free of context. A phenomenon that can be a problem in one context may not be one in another. Likewise, a solution that may prove effective in a given context may not work in another. However, the tendency to define the problem in terms of the solution, and a strong preference for the context-free solution that is tried and true, create a closed loop. The process keeps on regenerating the same pattern of behavior all over again.

In a chaotic and complex environment we are not confronted with a single problem but a system of interdependent problems, or what Ackoff calls a mess. A mess is neither an aberration nor a prediction. It is the future implicit in the present operations, pointing to the potential seed of its destruction.

To map the mess is to capture the iterative nature and the workings of the multiple feedback loops that form critical interdependencies and define the second-order machine responsible for regenerating the problematic patterns repeatedly. Perhaps the best example of mess formulation is Das Kapital. Ironically, the most important contribution of Karl Marx was not the solution he proposed but the problem he defined.

A good formulation of the mess makes a convincing case for fundamental change and sets the stage for effective redesign. A detailed discussion of how to formulate a mess will come in Chapter 8. The rest of this chapter deals with designing solutions, which consists of two distinct phases, idealization and realization.

7.5.1. Idealization

The basic idea of idealization is the notion of backward planning. It starts with the assumption that the system has been destroyed overnight and that the designers have been given the opportunity to re-create the next generation of the system from a clean slate. The new design is subject to only three constraints: (1) technological feasibility, (2) operational viability, and (3) learning and adaptation.

7.5.1.1. Technological feasibility

Although we are idealizing, we are not dealing with science fiction. Our design can only utilize technologies that are currently available. Nevertheless, in addition to knowing the state of the art, interactive designers do not shy away from active experimentation. A good design would use a platform that not only permits experimentation but can also incorporate technologies with new functionality without requiring an additional interface or a costly patching process.

7.5.1.2. Operational viability

If our next-generation design comes into existence, in addition to being self-sustaining in the existing environment, it must also dissolve the current mess. The design must generate enough cash to meet its cash flow requirements. Moreover, a true viability test requires simulation of a dynamic model. Chapter 6 is a good introduction to dynamic modeling.

7.5.1.3. Learning and adaptation

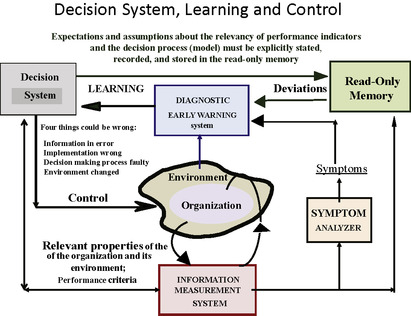

Our design is made to operate in the existing environment. We do not have a crystal ball and are not forecasting the future. Therefore our idealized design will have to have sufficient sources of variety to learn and adapt to possible emerging environments. Remember that our mental vision, which is the basis of our design, is at best an abstraction of reality. To be effective our design has to be equipped with a learning and adaptive system to validate our original design assumptions and adapt to changing environments. In this context and in my experience, Ackoff's (1981) management system provides one of the best frameworks for designing a learning system. Figure 7.5 is a simplified version of this rich and elaborate scheme. It has been modified to fit my intention to relate learning to control and underline the importance of “social calculus.”

Learning results from being surprised by detecting a mismatch between what was expected to happen and what actually did happen. If one understands why the mismatch occurred (diagnosis) and is able to do things in a way that avoids a mismatch in the future (prescription), one has learned.

To detect a mismatch in the first place, a formal process is needed to record expected outcomes. This system will be used only for major decisions and will record the following information:

• The assumptions on which the decision was made

• Performance indicators

• The expected outcome(s)

Although reasons for mismatches are infinite, they fall into one of the following four categories:

1. Wrong data

2. Wrong implementation

3. Wrong decision

4. Changed environment

Therefore, during a diagnosis, all four categories must be checked: the data, the decision, the implementation, and the environmental condition.

A learning system will be most effective if it includes an early warning system that calls for corrective action before the problem has occurred. Such a system will continuously monitor the validity of the assumptions on which the decision was made, as well as the implementation process and intermediate results.
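The recording and diagnosis steps described above can be sketched in a few lines of Python. This is only an illustration, not Ackoff's management system itself: the class and field names, the 10% tolerance, and the sample figures are all assumptions introduced here.

```python
from dataclasses import dataclass
from enum import Enum


class MismatchCause(Enum):
    """The four categories of mismatch named in the text."""
    WRONG_DATA = "wrong data"
    WRONG_DECISION = "wrong decision"
    WRONG_IMPLEMENTATION = "wrong implementation"
    CHANGED_ENVIRONMENT = "changed environment"


@dataclass
class DecisionRecord:
    """Formal record kept for a major decision (hypothetical structure)."""
    name: str
    assumptions: list          # assumptions on which the decision was made
    expected: dict             # performance indicator -> expected outcome
    tolerance: float = 0.10    # assumed: relative deviation that counts as a surprise

    def mismatches(self, actual: dict) -> dict:
        """Return indicator -> relative deviation for indicators outside tolerance."""
        surprises = {}
        for indicator, expected_value in self.expected.items():
            if indicator not in actual:
                continue  # nothing observed yet for this indicator
            scale = abs(expected_value) or 1.0  # avoid dividing by zero
            deviation = (actual[indicator] - expected_value) / scale
            if abs(deviation) > self.tolerance:
                surprises[indicator] = deviation
        return surprises


def diagnosis_checklist() -> list:
    """Every diagnosis checks all four categories, not just the likeliest one."""
    return list(MismatchCause)


# Hypothetical example: sales fell well short, unit cost stayed within tolerance.
record = DecisionRecord(
    name="open regional office",
    assumptions=["demand in the region is stable"],
    expected={"monthly_sales": 100.0, "unit_cost": 20.0},
)
surprises = record.mismatches({"monthly_sales": 80.0, "unit_cost": 21.0})
causes_to_check = diagnosis_checklist()
```

Running the same comparison on intermediate results, rather than waiting for final outcomes, is one simple way to approximate the early warning function.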

The interactive design process has three steps: selecting the purpose, specifying desired properties of the system, and designing the ideal-seeking system.

Purpose identifies the reason for the system's existence. It deals with the why question. The statement of purpose should also specify what effects the system wants to have on each class of its stakeholders. An organization is a purposeful system with purposeful parts and is itself contained in a larger purposeful system. Its main function, in addition to serving its own purpose, is to serve the purposes of both its members and its environment.

Specification deals with the what question, specifying the desired characteristics of the system to be designed:

1. Functions: What are we creating and for whom? What are the desired characteristics of the outputs from the user point of view? Good designers are capable of transforming themselves into an imaginary user so they can better understand the product/market relationships.

2. Critical processes: What are the desired specifications for throughput and organizational processes?

3. Structure: What are the desired specifications of the organizational structure?

Design deals with the how question. By using the iterative process, design creates structure, functions, and processes in the given context to realize the desired specifications. The output of this process is neither utopian nor ideal because it is subject to improvement. It is the best ideal-seeking system that its designers can conceptualize now, but not necessarily later.

7.5.1.4. Design for participation

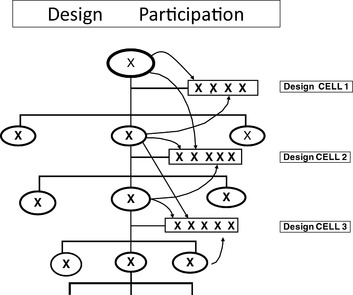

We have said that the ultimate objective of interactive design is to replace the distorted “shared image” responsible for regenerating a pattern of malfunctioning order with a shared image of a more desirable future. That is why participation of the members in the design process is an uncompromising principle of interactive design. Figure 7.6 is a duplication of a graph (Figure 5.9) we introduced earlier in Chapter 5 when discussing duplication of power. It represents nested design cells that are used as main organizational vehicles for participative design activities. Each design cell would consider the design of its superior design cell as its environment and try to redesign its activities within this context. You can recall how these nested design cells provide a mechanism for horizontal and vertical integration.

7.5.2. Realization — Successive Approximation

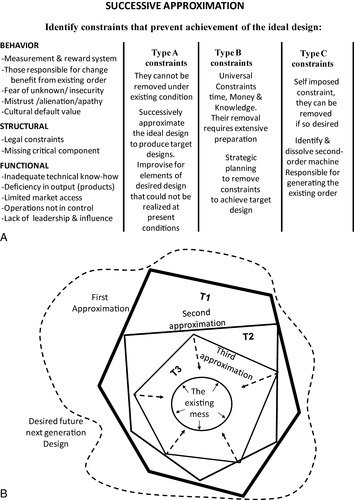

Just as the idealization phase of interactive design is iterative, so is the realization phase. Successive approximation is at the core of realizing an ideal design.

The realization of the design has to take place in a real-world environment. When we wake up from the beautiful dream we will find the monster very alive and staring right in our face. However, the image of the next generation of the system is just too exciting to let go. Therefore, we must find a way to implement as much of our design as we possibly can. To do so we need to identify all of the constraints that are going to prevent us from getting our desired design implemented.

It is crucial to the success of the whole redesign effort that all involved demonstrate the highest degree of candor in identifying any reservations they may have, subjective or otherwise, about the successful realization of the design at this time. If there is one juncture in the entire process of idealized design where nothing should be spared, this is it. Anything likely to inhibit the implementation is strongly encouraged to be put on the table, shared, and dealt with right then and there. These constraints usually fall into the following three distinct categories (see Figure 7.7A and B).

7.5.2.1. Type I constraints

Type I constraints cannot be removed within the existing framework. Such constraints would require revisions and improvisations of the design to create a target design capable of being implemented. Target I would be the first approximation of the unconstrained design. If necessary, subsequent approximations will identify target II and target III generations of the desired design. It is critical that type I constraints be continuously monitored so that the target design can further approximate the idealized design as soon as these constraints are removed. Clearly, the realization effort will not be a one-time proposition. Successive approximations of the desired state make up the evolutionary process by which the transformation from current reality to desired future is effected. It may take a number of attempts before the desired design is implemented.

7.5.2.2. Type II constraints

Type II constraints are essentially concerned with universal constraints whose removal will require extensive preparations. They consist of activities that consume time and resources and require knowledge and management talent. These interrelated activities together define the strategic plan that aims at converting the existing system to the target design. The strategic plan usually is the most time-consuming and resource-intensive part of the change effort. For control purposes, all critical assumptions and expectations about the selected course of actions must be explicitly recorded and continuously monitored.

7.5.2.3. Type III constraints

Type III constraints are essentially behavioral in nature. Selling the idea, removing resistance to change, ensuring acceptance, cultivating support, and expanding ownership are among the efforts targeted at constraints that are self-imposed. Without a prior foundation of trust and commitment, the system would simply refuse to undergo the planned transformation. These reinforcing constraints, taken together, represent the cultural default of the organization that has been reproducing the current mess all along. When confronting type III constraints, dissolving the “second-order machine” is the most critical phase of realizing the design.

Redesigning social systems, if done with active participation of critical actors, is an irreversible process of redesigning mental images. Its impact is long term and far exceeds the value of immediate implementation of the design document. One of the important outcomes of an idealized design is to see the light at the end of the tunnel. This light acts as a guide and defines the direction for future interventions as soon as opportunities arise. However, if even a design with no constraints cannot produce a desired outcome then, most probably, problems lie in the environment and the focus of change should be directed outward.

7.5.3. Dissolving the Second-Order Machine

The most critical phase of realizing the ideal design and moving from the existing system to the target design is to dissolve the current mess, or the second-order machine responsible for generating undesirable patterns of behavior. Dissolving the second-order machine consists of two separate yet interrelated processes of self-discovery and self-improvement. They involve, first, identifying what is relevant and supportive to our shared vision of a desirable future, and second, diagnosing what turns out to be part of the “mess” and therefore obstructive to our renewal and progress. We want to keep the first and dispose of the second.

Accordingly, successful cultural transformation will involve (1) making the underlying assumptions about corporate life explicit through public discourse and dialog and (2) gaining, after critical examination, a shared understanding of what can happen when defaults that are outmoded, misguided, and/or downright fallacious are left unchanged. The process is a high-level social learning and unlearning. Only by the very act of discovering and interpreting our deep-seated assumptions can we see ourselves in a new way. The experience is liberating because it empowers us to reassess the purpose and the course of our lives and, through that, be able to exercise informed choice over our preferred future.

For example, in a design experience with a health-care system, I found that a dominant set of simple organizing assumptions (such as nurses report only to nurses, doctors report only to doctors, or integration is synonymous with uniformity) was at the core of the system's mess. A candid, open, and in-depth group discussion of the relevance and the consequences of these assumptions for the behavior of the health-care system was the first step toward dismantling the second-order machine and implementing the target design.

7.6. Critical design elements

7.6.1. Measurement and Reward System (A Social Calculus)

No design has any chance of success without a reasonable and effective measurement and reward system. Winning is fun; to win we have to keep score, and the way we keep the score defines the game. To the extent that membership in a group is desired, the criteria by which the group evaluates the behavior of its members have a profound effect on the manner in which individuals conduct themselves.

We all know too well how to play a zero-sum game. We know how one can win here at the expense of there, or succeed today at the cost of tomorrow. In the context of biological thinking, we all have learned how to exploit our environment by undermining our collective viability.

Controlling the behavior of purposeful individuals in a multi-minded system by using supervision is no longer feasible or even desirable. To manage a multi-minded system with self-controlling members, we need a new social calculus. This calculus should provide a new framework for creating vertical, horizontal, and temporal compatibility among the members of an organization.

Vertical compatibility deals with the extent of compatibility between members at different levels; horizontal is concerned with compatibility of members at the same levels; and temporal is concerned with the compatibility of the interests among past, present, and future members in the system.

7.6.2. Vertical Compatibility

We have argued that effective integration of multilevel purposeful systems cannot be achieved without performance criteria that make fulfillment of a member's needs and desires dependent on fulfillment of the larger system's needs, and vice versa.

In other words, individuals' efforts for their own gain should be enhanced by the degree of contribution they make toward the needs of the higher system, of which they are willing members. This would change the measure of success and relative advantage of various activities for the actors in favor of those activities that satisfy the requirement of both levels.

Consider the following simple exchange system. A productive unit consumes the scarce resources of its environment; in return, it produces outputs (goods or services) that partially fulfill the needs of that environment. The assumption is that the unit will survive as long as the total value of the outputs produced is greater than or equal to the total value of the inputs it consumes. The pricing system determined by “dollar votes” is supposed to be a reliable and sufficient criterion for determining production and distribution priorities.

This supposition might be tenable if (1) dollar votes were distributed more equitably and (2) end prices were not manipulated. However, factors such as price control or government protection make the actual cost of service much higher than perceived. In other words, inputs are purchased from the environment at a lower price, and outputs (measured by the classical accounting method) are made to look more valuable than they really are.

Furthermore, even though creating a productive employment opportunity for all members of a social system is an effective means of simultaneous production and distribution of wealth, existing social calculus considers employment only as a cost and, not surprisingly, tries to minimize it. To remedy the situation we need a new framework, one that will use employment on both sides of the equation, as input as well as output. We also need performance criteria that, in addition to efficient production of wealth, explicitly consider its proper distribution as a social service to be adequately rewarded. Without a proper concern about production, a sole obsession with distribution will result in nothing but equitable distribution of poverty.

The following scheme is a simplified version of an attempt to measure the actual costs and benefits of each major economic activity as perceived on the national level. It complements the productive strength of a market economy by enhancing its allocation function. The model registers the needs of those members who lack the dollar vote to register their needs. It also explicitly values the distribution of wealth (salaries paid) as social service.

For simplicity, let us limit inputs to the two categories of (1) raw materials and (2) human resources, and the outputs to the two corresponding categories of (1) finished goods produced and (2) employment opportunity created. Assigning a “scarcity coefficient” to each set of inputs obtained from the environment and a “need coefficient” to each set of outputs (goods/services) yielded to the environment, we can compute the relative contribution of each major economic activity using Table 7.1.
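One plausible reading of this calculation is sketched below in Python. Table 7.1 is not reproduced here, so the formula is an assumption: the contribution ratio is taken to be the need-weighted value of outputs divided by the scarcity-weighted value of inputs. The function name, the coefficients, and the quantities are all hypothetical and chosen only to make the arithmetic easy to follow.

```python
def contribution_ratio(inputs, scarcity, outputs, need):
    """Assumed formula: sum(need * output) / sum(scarcity * input).

    inputs, outputs: category -> value consumed from / yielded to the environment
    scarcity, need:  category -> coefficient assigned to that category
    """
    weighted_inputs = sum(scarcity[k] * v for k, v in inputs.items())
    weighted_outputs = sum(need[k] * v for k, v in outputs.items())
    if weighted_inputs == 0:
        raise ValueError("an activity must consume some inputs")
    return weighted_outputs / weighted_inputs


# Hypothetical figures: both units consume the same inputs and create the
# same employment, but the goods they produce meet needs of different urgency.
inputs = {"raw_materials": 100.0, "human_resources": 50.0}
scarcity = {"raw_materials": 1.0, "human_resources": 1.0}
outputs = {"finished_goods": 100.0, "employment": 50.0}

bread = contribution_ratio(
    inputs, scarcity, outputs, need={"finished_goods": 2.0, "employment": 2.0}
)
yoyos = contribution_ratio(
    inputs, scarcity, outputs, need={"finished_goods": 0.5, "employment": 2.0}
)
```

Note that employment appears on both sides, as human resources consumed and as employment opportunity created, which is the point of the framework described above.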

Once the contribution ratio is calculated, the idea of a new social calculus would be to reward activities with higher social contributions. Suppose a certain productive unit produces bread with a contribution ratio of 2, but with a low rate of return on investment of 8% (because of the weak purchasing power of the consuming class). On the other hand, suppose another unit produces yo-yos with a contribution ratio of 1, but with a rate of return on investment of 18%. Accordingly, our incentive system ought to be able to change the relative rates of return on investment in favor of bread. The problem can be overcome with an integrated and coordinated application of well-known tools such as a differentiated loan structure, a differentiated interest rate structure, and a differentiated tax structure. Depending on the contribution ratios (computed from the previous table), a different loan equity ratio, a different interest rate, and a different tax rate can be assigned to each major economic activity. The method of determining scarcity and need coefficients is based on successive approximation. The initial raw coefficients, however, are revised and updated regularly to reflect further learning.

As demonstrated by the example in Table 7.2, this scheme will increase the rate of return on investment for bread to 18% and decrease that of yo-yos to 12.6%. Such a scheme has the advantage of minimizing the bureaucratic dangers associated with centralized planning, while enhancing the market economy's strength by promoting a more equitable allocation and distribution system.

7.6.3. Horizontal Compatibility

Major organizational theories have implicitly assumed that perfectly rational micro-decisions would automatically produce perfectly rational macro-conditions. This might have been acceptable if a whole range of incompatible performance criteria did not exist, such as a cost center, a revenue center, and an overhead center, producing structural conflicts among peer units in the organization.

Consider, for example, a typical setup within a corporation. The performance criterion for manufacturing units is the minimization of cost of production for a specified output. The performance criterion of the marketing units is to maximize sales revenue. (These units are often referred to as cost centers and revenue centers.) Intuitively, we would expect the interaction between the two centers to be complementary and result in maximum efficiency. Unfortunately, this is not the case. In most organizations the relationship between marketing and production is one of constant friction.

The reason for this is simple: this design violates a basic systemic principle. Suboptimization of each part of an interdependent set of variables in isolation will not lead to optimization of the system as a whole. The two objectives of cost minimization and revenue maximization, taken independently, lead to a basic contradiction within the system. To maximize revenue, sales would prefer to increase the variety of products, add customized features, change delivery schedules on short notice, and so on. Minimization of cost of production, on the other hand, can be achieved more easily by standardizing the production process. This means reducing the number of products and making long-run production schedules. Thus, the basic contradiction emerges: the best answer for marketing comes at the expense of manufacturing, and vice versa.

Ironically, the only reason this setup works at all in today's organizations is that the performance criteria are not taken seriously. (This is the major advantage systems with purposeful parts have over mechanical and organic systems. Such incompatibility could never be tolerated in a mechanical system.)

The usual solution that most corporations adopt for this problem is to compromise. The higher level authority over both centers determines which set of criteria should dominate the other at any particular time. The possible gain in one area might be more than offset by the loss in the other.

A totally different approach to this problem is to aim for compatibility of performance criteria rather than seek a compromise between incompatible sets. One way is to change the performance criteria for marketing and for manufacturing so that they both try to maximize the difference between cost and revenue. This means that both complementary units can be a profit or a performance center where the relationship between marketing and manufacturing is based on exchange, much like that of a customer and a supplier. Both units are now expected to be value-adding operations.

Consider the difference in how the two designs handle flexibility of delivery schedules. Flexibility is a value to some users who are willing to pay a certain premium. For a cost center, this premium has no value whatsoever. The only thing important to the cost center is that a change in production schedules will increase the cost. Since it is not concerned with revenues, the cost center will resist flexibility even when it results in positive net value to the corporation as a whole. Furthermore, since transfer of costs is based on average cost rather than marginal cost, there can be no distinction between users who demand flexibility (or various degrees of it) and those who do not; average cost is the same for both.

A profit center, on the other hand, examines each opportunity on a marginal cost versus marginal revenue basis. The price that the customer is willing to pay for the additional service is balanced against the marginal cost that the supplier will have to incur.

While a cost center is instinctively resistant to any change of operations requested by marketing, a profit center looks forward to opportunities to increase its net contribution to the system.
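The contrast between the two decision rules can be made concrete with a small Python sketch. The function names and the dollar figures are assumptions used only for illustration; the rules themselves follow the text: a cost center sees only the added cost, while a profit center weighs the premium against the marginal cost.

```python
def cost_center_accepts(marginal_cost: float) -> bool:
    """A cost center is judged on cost alone, so any cost increase is resisted."""
    return marginal_cost <= 0


def profit_center_accepts(marginal_revenue: float, marginal_cost: float) -> bool:
    """A profit center accepts a request when the premium exceeds the added cost."""
    return marginal_revenue > marginal_cost


# Hypothetical request: a customer offers a 500 premium for a rush delivery
# that adds 300 to production cost.
premium, extra_cost = 500.0, 300.0

cost_center_decision = cost_center_accepts(extra_cost)
profit_center_decision = profit_center_accepts(premium, extra_cost)
net_value_to_corporation = premium - extra_cost
```

With these figures the cost center refuses a request worth a net 200 to the corporation, while the profit center takes it.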

Note that in a profit-center design it may still be possible for one unit to benefit at the cost of another. But the critical difference is that a win/win situation is now a possibility. Both production and marketing can benefit from meeting customer demands, and, more significantly, the performance criteria are compatible. Note that uniformity of performance criteria, although desirable, is not necessary; the important point is compatibility. In other words, the performance criteria must be designed such that the success of one part does not imply failure of another.

7.6.4. Temporal Compatibility

Concern for compatibility over time in a social system is concern for its continuity and sustainability. Among the stakeholders of an organization are those who were members in the past and those who will be its members in the future. The argument for compatibility between the interests of past, present, and future members, especially on ethical grounds, is so rich that it is beyond the scope of this book; our concern here is essentially pragmatic.

It is not difficult to appreciate that a social system can succeed today at the expense of its future, or suffer today for the creation of a better future. It can also be demonstrated that past members of a social system can have a profound (negative or positive) influence in shaping its present. The need for compatibility between the interests of present and future members is more or less appreciated in the notion that decisions made today should not limit the options available to future members. However, the same recognition is not extended to the need for compatibility between the interests of present and past members.

In some cultures, the interests of past members continue to dominate the present, while in other cultures there is no concern for the interests of those who are gone — out of sight, out of mind. Nevertheless, rejecting the interests of past members is as undesirable as accepting their dominance. The effectiveness of an organization as a voluntary association of purposeful members depends on the degree of their commitment and sense of belonging. In this context, alienation is a serious obstruction to an organization's development. Incompatibility between the interests of past, present, and future members is a main source of its present members' alienation. This occurs because a constant threat to the organization's long-term viability is a continuous coproducer of anxiety and insecurity among members who identify with the future.

But members identify themselves with the past as well. They can see the image of their own future in the fate of those who had once been effective members of the system and served it well. An undesirable and unfortunate image is a serious source of insecurity that is at the core of alienation, corruption, and lust for power. This is why concern for the interests of past members, minimally in the form of an acceptable retirement system, is essential. In this respect the notion of gradual retirement, with all of its ramifications, should be considered more seriously than it has been.

In summary, to manage a multi-minded system, we need to

• Align the interests of purposeful members and generate excitement and commitment to the purpose of the whole, and vice versa.

• Let empowerment happen by duplicating power, not abdicating it.

• Separate control from service and convert it to a learning function.

• Prevent a win/lose struggle and dissolve paralyzing conflicts.

7.6.5. Target Costing

A final consideration in any design is the concept of target costing. Since a significant part of an operation's cost is design driven, the designers of a system must have cost responsibility. Without a target cost, every fool is a designer. Incorporation of target costing and variable budgeting in operating a throughput system is often the difference between success and failure.

Pricing, in the conventional cost-accounting system, is based on a cost plus formula. The assumption is that costs are uncontrollable and that the producers are entitled to recover their costs plus a reasonable margin for profit. Therefore, the prices are set by the cost of the least efficient producer who still manages to remain in the competitive game. Higher profits are achieved by targeting higher price levels if one commands a dominant share of the market. Thus, in this model, price is a controllable variable.

By contrast, with target costing prices are assumed to be set by the market on a competitive basis. In a market economy, values are defined by the users. As we move closer toward a global economy, prices increasingly become uncontrollable variables, while costs, because of technological advances, increasingly become controllable variables. This is a whole new ball game.

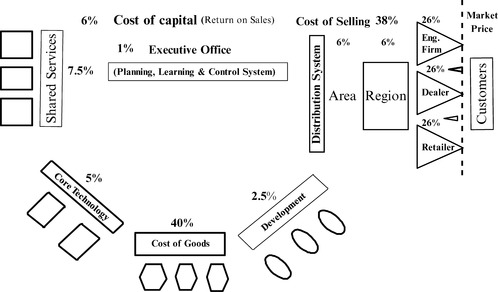

After deciding to enter the luxury-car market and compete head-on with Mercedes-Benz, Toyota had to use target costing in designing and producing the Lexus. Toyota had discovered that $20,000 of a Mercedes' $65,000 price tag is snob value. Customers, at the time, were willing to pay $20,000 more just for the name Mercedes-Benz. This meant that Lexus had to be, at a minimum, a car of the same quality but one that sold for less than $45,000. Toyota targeted the car to be priced at $40,000. But Lexus was a new entry, requiring a whole new distribution channel. The cost of selling was estimated to be nearly 25%, or about $10,000 per car. With a 10% return on sales also put aside, the design team was given a $26,000 target cost to produce the car. The charge was, “produce it within the target cost or get the hell out of the way.”
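The arithmetic behind the Lexus target can be retraced in Python. The figures come from the example above; the function and variable names are illustrative, and whole-number percentages are used so the results are exact.

```python
def target_cost(price: int, selling_pct: int, return_on_sales_pct: int) -> int:
    """Work backward from a market-set price to the cost the design must meet."""
    selling_cost = price * selling_pct // 100      # cost of the distribution channel
    margin = price * return_on_sales_pct // 100    # return on sales set aside
    return price - selling_cost - margin


# Price ceiling: the Mercedes price minus the premium paid for the name alone.
mercedes_price = 65_000
snob_value = 20_000
price_ceiling = mercedes_price - snob_value        # 45,000

# Toyota priced below the ceiling and allowed 25% for selling, 10% return on sales.
lexus_price = 40_000
lexus_target = target_cost(lexus_price, selling_pct=25, return_on_sales_pct=10)
```

Here `lexus_target` comes to 26,000: the 40,000 price less 10,000 for selling and 4,000 for return on sales. Cost is the output of the calculation and price is the input, which reverses the cost-plus formula.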

Target costing requires a variable budgeting scheme (Figure 7.8). With variable budgeting, every active element in the process becomes a performance center. None will get a fixed budget, but everyone will have a working capital and a monthly income. This income will be a percentage of monthly throughput, which in turn will determine the level of the expenditure of the activity.
