Chapter 9

The Monetization of Climate Science

9.1 Introduction

The Internet era dawned on us with the hope of infinite transparency, great efficiency, and unprecedented prosperity. Many of the predictions have come true, but in the wrong direction. Clearly, the Information Age has monetized everything, and every bit of indecency and every act of immorality has been cashed in for great reward. YouTubers will eat worms, hot peppers, rotted fish, roadkill, live octopus, you name it – all to maximize views that translate directly into financial gains. While these YouTubers gain notoriety (which they do not mind because any publicity is good publicity), few notice that Jeff Bezos (Amazon), Mark Zuckerberg (Facebook), Larry Page and Sergey Brin (Google) are only the polished version of YouTubers. Even fewer realize that every scientist or researcher is focused on maximizing monetary gain. Knowledge is nothing unless it fetches financial gains. Similarly, as long as a concept is monetized, there is no concern about it being right or wrong.

Ever since the oil embargo of 1973, the world has been gripped by the fear of an ‘energy crisis’. U.S. President Jimmy Carter, in 1978, told the world in a televised speech that the world was in fact running out of oil at a rapid pace – a popular Peak Oil theory of the time – and that the US had to wean itself off the commodity. Since the day of that speech, worldwide oil output has actually increased by more than 30%, and known available reserves are higher than they were at that time. This hysteria has survived the era of Reaganomics, President Clinton’s Cold War dividend, and President G.W. Bush’s post-9/11 era of ‘fearing everything but petroleum’. Today, even the most ardent supporters of the petroleum industry have been convinced that an energy crisis is looming and that it is only a matter of time before we are forced to switch to non-petroleum energy sources.

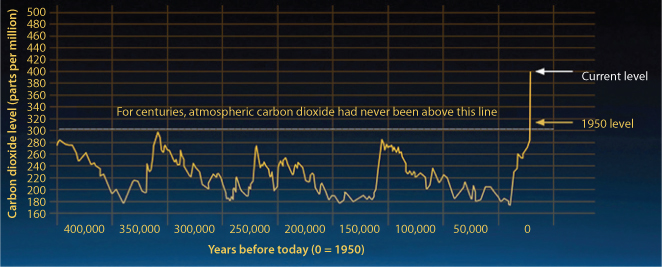

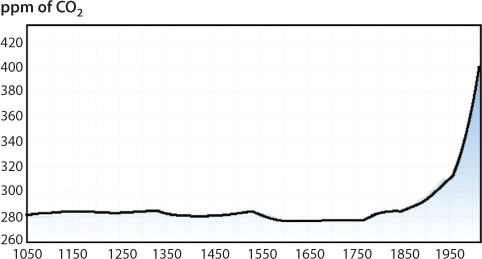

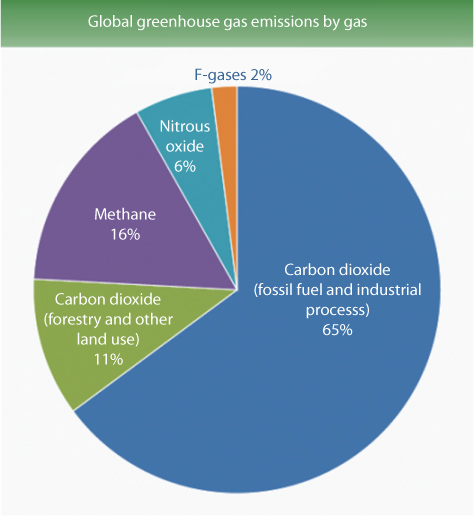

Almost simultaneously with the energy crisis hysteria, global warming has been a subject of discussion. It is thought that the accumulation of carbon dioxide in the atmosphere causes global warming, resulting in irreversible climate change. Even though carbon dioxide has been blamed as the sole cause of global warming, there is no scientific evidence that all carbon dioxide is responsible for global warming. This is despite the claim of the vast majority of scientists that global warming is all about carbon (Crooks, 2018). As we have seen in previous chapters, scientists have either started off from false premises or have ignored crucial flaws of New Science to justify their assertion that climate change is about carbon dioxide.

The IPCC 5th Assessment Report (AR5) assessed carbon budgets for various levels of warming in billions of tonnes of carbon (GtC) or of carbon dioxide (GtCO2) based on projections of global near-surface air temperature change, referred to as ‘global-tas’, from complex Earth System Models (ESMs). In general, climate modelling studies use global-tas, whereas observational records typically combine non-global coverage of near-surface air temperature over land with sea-surface temperature (SST) over oceans. Richardson et al. (2018) avoid using the term global average temperature with ‘carbon budget’. This usage of ‘carbon budget’ essentially expresses temperature in terms of a quantifiable action item. Built into this analysis is the outcome that a carbon tax can solve problems that are perceived to be due to greenhouse gas effects. Richardson et al. (2018) list three main factors that contribute to differences in ‘global average temperature’ change between global-tas and observational records. First, there are regions with missing data that may not warm at the global mean rate. As an example, they cite the case of the Arctic, which is now rapidly becoming warmer and wetter (Boisvert and Stroeve, 2017) but the fate of which could not be identified with temperature data that are sparse at best (Cowtan and Way, 2014). Secondly, under CO2-driven global warming, modelled near-surface air temperatures warm more than sea-surface temperatures (SSTs), as pointed out by Richter and Xie (2008). Thirdly, data providers must decide how to account for changes in sea ice. There may be a change from reporting estimated near-surface air temperatures to SSTs where ice has retreated. In the HadCRUT4 dataset (Morice et al., 2012), this approach probably results in an artificially low reported warming compared with the air warming due to features of the normalisation procedure.

Clearly, the lack of data cannot be replaced by a desired outcome without sacrificing the scientific validity of the model. Richardson et al. (2018) called the first factor ‘masking’, and the other two factors together ‘blending’, specifically ‘air-sea blending’ and ‘sea-ice blending’. One early study accounted for the masking and air-sea blending issues (Santer, 2000), and some studies have accounted for masking, but this is not universal, and few scientists have raised the issue of misrepresentation. Recently, it was shown that from 1861–1880 to 2000–2009, modelled global-tas increased 24% more than a HadCRUT4-like blended-masked estimate (Richardson et al., 2016). Instead of attributing this discrepancy to the misrepresentation of the science underlying the climate change phenomena, Richardson et al. (2016) concluded that current observed temperature records should exceed 2 °C later than global-tas, implying a larger carbon budget if compliance were assessed using one of them. Richardson et al. (2018) simply extended this work by

- reporting results to 2099,

- calculating carbon budgets using IPCC techniques,

- accounting for realistic potential future data coverage and

- applying blending and masking to a low-emission scenario.

The focus has been on a scenario that lowers emissions through various policy impositions. Because no new data were added and because no further improvement in scientific description was available, blending-masking biases continue to exist without any means to determine what caused the discrepancy. For instance, the blending-masking bias under transient warming with 2000–2009 data coverage was estimated to be 15% instead of 24% (Richardson et al., 2016). Furthermore, with strong mitigation, sea-ice cover would be expected to stabilise before 2100, suppressing the future sea-ice blending bias (Swart et al., 2015). In addition, the long-term warming pattern may differ from the historical pattern, leading to a different effect of coverage bias (Armour et al., 2013; Andrews et al., 2015; Held et al., 2010).
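To make the quoted percentages concrete, the following minimal sketch converts a blending-masking bias into the warming each measure would show when the other reaches a target. It assumes, purely for illustration, that the ratio between modelled global-tas warming and blended-masked warming stays constant over time, which the passage above notes may not hold. The function names are hypothetical and are not taken from Richardson et al.

```python
def global_tas_when_blended_hits(target_c, bias_fraction):
    """Global-tas warming at the moment a blended-masked record shows target_c.

    Assumes global-tas warming = (1 + bias_fraction) * blended-masked warming,
    with a constant ratio (an illustrative simplification).
    """
    return target_c * (1.0 + bias_fraction)


def blended_when_global_tas_hits(target_c, bias_fraction):
    """Blended-masked warming at the moment global-tas shows target_c."""
    return target_c / (1.0 + bias_fraction)


# The two bias estimates quoted in the text: 24% and 15%.
for bias in (0.24, 0.15):
    tas = global_tas_when_blended_hits(2.0, bias)
    blended = blended_when_global_tas_hits(2.0, bias)
    print(f"bias={bias:.0%}: global-tas={tas:.2f} C when blended=2.00 C; "
          f"blended={blended:.2f} C when global-tas=2.00 C")
```

Under this constant-ratio assumption, the choice of temperature measure alone shifts the point at which a 2 °C threshold is said to be crossed, which is why the two measures imply different carbon budgets.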

A very large amount of pseudo-science is already afoot on all aspects of the issue of climate change. Much of it is used to divide – if not aimed at dividing in the first place – public opinion over whether nature or humanity is the chief culprit. The crying need for a serious scientific approach to be taken has never been greater. On this note, paraphrasing Albert Einstein, it can truly be said that the system that got us into the problem is not going to get us out. Absent a comprehensive characterization of CO2 and all its possible roles and forms, any attempt to analyze the symptoms of global warming or design a solution must collapse under the weight of incoherence if it is based on univariate correlations, or even correlations of multiple variables, and assumes that the effects of each variable can be superposed linearly and still mean anything. The absurdity is so well known that one popular graph on the Internet depicts a strictly proportional increase in incidences of piracy in all the world’s oceans as a function of increasing global temperature.

In general, scientists have become so fixated on the conclusion that might follow their cognition that, for the vast majority of them, the historical data mean nothing more than a smorgasbord that they can pick and choose from. For instance, the global climate change proponents very rarely (if at all) state that the Earth is in an interglacial period when temperatures are expected to (and do, in fact) increase, but they are only too ready and willing to assign any global temperature increase to anthropogenic reasons, i.e., the use of fossil fuels by human populations. No doubt, anthropogenic fossil fuel use does play a role in the temperature increase, but the extent of the increase is not, and cannot be, accurately determined and, furthermore, the contributory factors cannot be accurately determined (Speight and Islam, 2016). Indeed, serious questions about the origin of the data supporting climate change have arisen, but the idea persists that the Earth is doomed just as the cracked egg in the frying pan (skillet) or the egg being hard-boiled is changed irreversibly (Pittock, 2009; Bell, 2011; Speight and Foote, 2011).

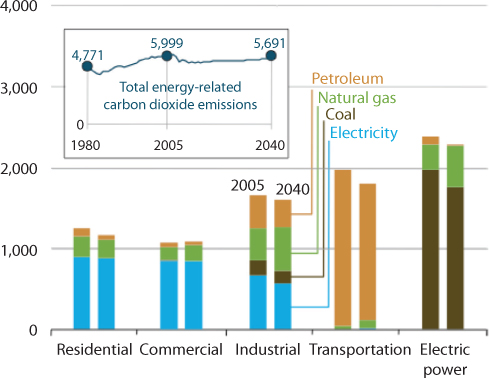

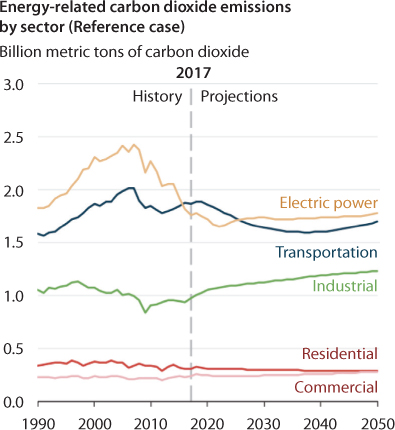

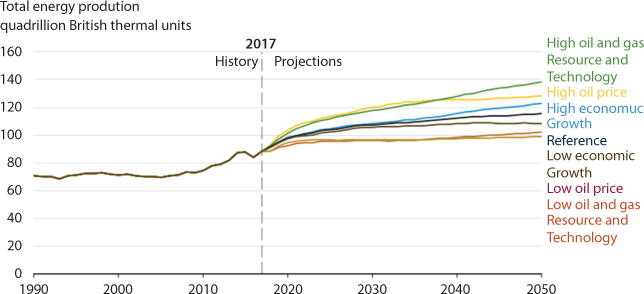

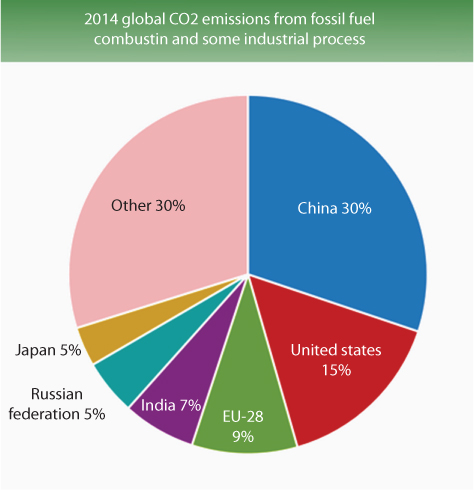

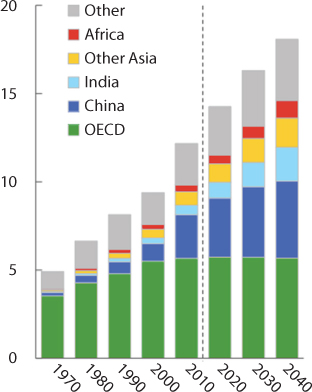

In this chapter, the current status of greenhouse gas emissions due to industrial activities, automobile emissions, and biogenic and natural sources is systematically presented. A scientific analysis is performed in order to show which history of CO2 causes a rise in greenhouse gas concentration in the atmosphere. The history of economic development is analyzed to reconstruct the monetization scheme regarding global warming and the climate change hysteria.

9.2 The Nobel Laureate Economist’s Claim

For some time now, climate change and global warming have been rendered into profitable business models. Of course, there are politicians, such as Al Gore, and even entertainers, such as Michael Moore, who have glamourized the business model, but when it comes to obscuring the real science in favour of economic opportunities, the most important work has been done by the Nobel laureate economist William Nordhaus, often referred to as the ‘father of climate change economics’. He won the Nobel Prize in 2018, along with Paul Romer, a former chief economist of the World Bank. They were recognized “for integrating technological innovations into long-run macroeconomic analysis” (in the case of Romer) and “for integrating climate change into long-run macroeconomic analysis” (in the case of Nordhaus). The combined recognition of these two marks a breakthrough in monetizing ignorance, packaged as science. Nordhaus is well known for his influence over IPCC reports.

Today, the role of anthropogenic CO2 in causing global warming has become a matter of 97–99% consensus among scientists (Skuce et al., 2017). What we called in Chapter 2 a ‘debate’ has become comical pontification, in which all dissent is deemed cringeworthy.

Starting with warning signs from the 1990s, the IPCC continues to up the ante on climate change hysteria. The 5th Assessment Report of the Intergovernmental Panel on Climate Change (IPCC AR5) stated that ‘warming of the climate system is unequivocal’ and ‘It is extremely [95%–100%] likely that human influence has been the dominant cause of the observed warming since the mid-20th century’ (IPCC, 2013). The science behind such claims goes back to simplistic models that belonged to the works of Nordhaus (Cook et al., 2013; Cook et al., 2016). Such scientific findings can inform policy responses in concert with other factors such as risk aversion, discounting of the future and assessments of the severity of future climate impacts, and as such there is a need to package them as ‘scientific’. The Paris Agreement of the United Nations Framework Convention on Climate Change (UNFCCC) Article 2.1(a) expresses a long-term goal of: ‘Holding the increase in the global average temperature to well below 2 °C above pre-industrial levels and pursuing efforts to limit the temperature increase to 1.5 °C above pre-industrial levels, recognizing that this would significantly reduce the risks and impacts of climate change’. Meanwhile, even the phrase ‘global average temperature’ is not precisely defined, and achievement of the Agreement’s goal may depend on possible different definitions and available measurement techniques. A related concept is that of a carbon budget, the allowable cumulative carbon dioxide (CO2) emissions consistent with a specified level of peak warming with a particular probability (Meinshausen et al., 2009; Allen et al., 2009; Matthews and Caldeira, 2008). Until now, the unexpressed intention of charging a universal carbon tax has been kept hidden. It all changed during the Nobel Prize declaration cycle of 2018.

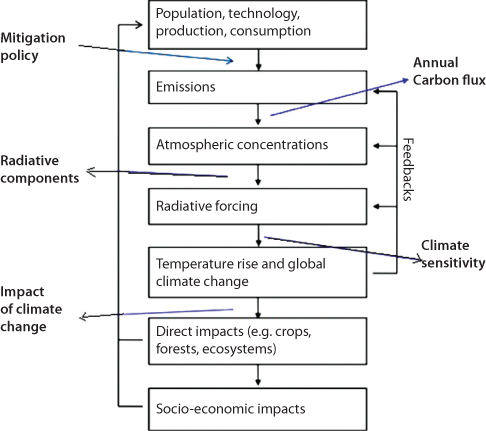

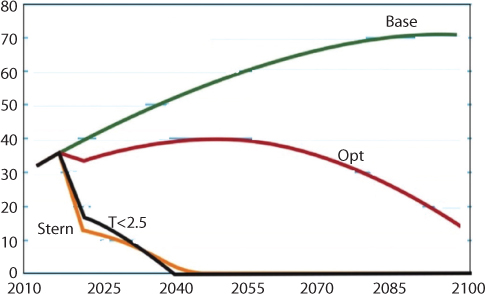

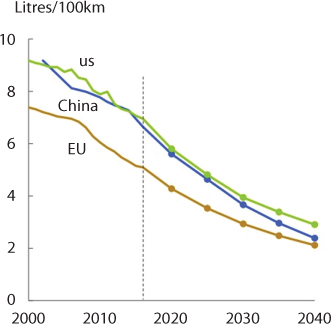

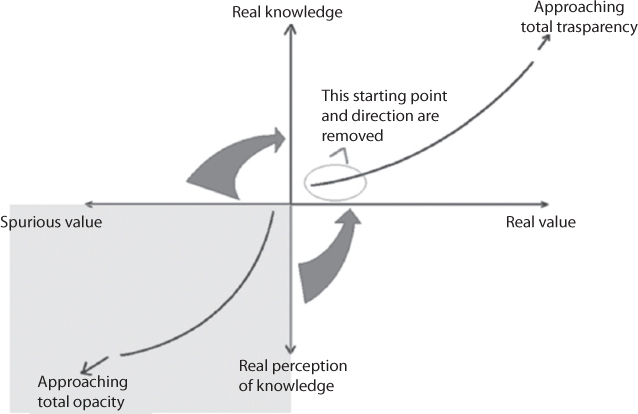

The most important problem to tackle has been the development of integrated-assessment economic models that analyze the problem of global warming from an economic point of view. Numerous modeling groups around the world have been engaged in developing tools of economics, mathematical modeling, decision theory, and related disciplines. The scenario that they all wish to depict is shown in Figure 9.1.

Figure 9.1 A policy that changes one of the above aspects changes all aspects and how they develop and influence each other over time.

As shown in this figure, the primary interest is to create policy guidelines that would mitigate the impact of emissions, primarily CO2.

Nordhaus is the developer of the DICE and RICE models, integrated assessment models of the interplay between economics, energy use, and climate change. In his books on climate change, he started off with the assumption that greenhouse gases are positively responsible for climate change. From that point onward, he never checked the validity of that assumption despite the numerous historical data available to him (Nordhaus, 2008).

As early as 1993, Nordhaus (1993) wrote:

“Mankind is playing dice with the natural environment through a multitude of interventions – injecting into the atmosphere trace gases like the greenhouse gases or ozone-depleting chemicals, engineering massive land-use changes such as deforestation, depleting multitudes of species in their natural habitats even while creating transgenic ones in the laboratory, and accumulating sufficient nuclear weapons to destroy human civilizations” (p. 11).

In this paper, he puts greenhouse gases in the same line with ozone-depleting chemicals (that would be CFCs). He attacks massive land-use changes, including deforestation, and laments the depletion of certain species in the process. He even criticizes genetic alterations as well as nuclear weapons. He makes no comment on pesticides, chemical fertilizers and, more importantly, the chemical industry that is in control of oil refining and gas processing. He also makes no comment about the possibility of greenhouse gases being natural, thus harmless to the ecosystem.

His projection numbers are shown in Figure 9.2. In this figure, the IPCC projections (dashed lines) are from the Intergovernmental Panel on Climate Change (1990) and are compared with those of the DICE model, which was developed by Nordhaus. We have discussed the shortcomings of these models in Chapter 2, but what is important here is the claim that this projection has scientific merit, although it has practically no relevance to the real problem and has nil data that could be cited for fine-tuning the models. Also, note that this was a prediction made some 25 years ago, during the time Al Gore was fully involved in creating the climate change hysteria.

Figure 9.2 Projection of global temperature change as per Nordhaus (1993).

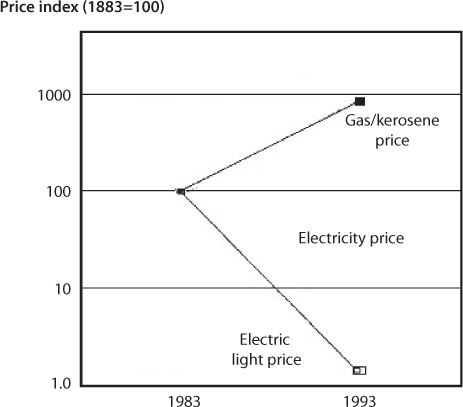

Figure 9.3 Labour price of light (After Nordhaus, 1998).

In 1998, Nordhaus authored a seminal report, in which he argued that technological progress has delivered astonishing changes in the availability of artificial light, from the sesame oil lamps used in Babylon in 2000 BCE, to the first gas lamps used in the 1790s, to the LEDs we use today. Without regard to the hidden cost of artificial light, he proceeded to equate progress with a staggering drop in the cost of light, as measured in the number of hours one would have to work to buy a given quantity of light. With that argument, he showed a steep decline since the Industrial Revolution. In the Middle Ages, an average person might have had to work for 10 hours to afford a thousand lumen-hours of light. By 2000, that was down to working for about a third of a second.
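As a quick check on the scale of the claimed decline, the two labour prices quoted above (10 hours and about a third of a second per thousand lumen-hours) can be put on the same unit. This is only arithmetic on the figures in the text; the variable names are illustrative.

```python
from fractions import Fraction

SECONDS_PER_HOUR = 3600

# Labour time per thousand lumen-hours, as quoted in the text.
medieval_seconds = Fraction(10 * SECONDS_PER_HOUR)  # 10 hours of work
modern_seconds = Fraction(1, 3)                     # about a third of a second

# Factor by which the labour price of light is claimed to have fallen.
decline_factor = medieval_seconds / modern_seconds
print(f"Claimed decline in labour price of light: {int(decline_factor):,}x")
```

The ratio counts only the labour time of the purchaser; it says nothing about the costs that the text describes as hidden.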

He also introduced the concept of a ‘true price index’. He took the period of 1883 (when Edison first introduced electric light) to 1993 (time of publication) and lumped gas and kerosene lights together because they were about the same price in that era. Figure 9.4 shows that the price of the fuel rose by a factor of 10 for kerosene and fell by a factor of 3 for electricity. He argued that if an ideal traditional (frontier) price index were constructed, it would use late weights (following electricity prices), since electricity is the frontier technology. Hence, the ideal traditional (frontier) price index using the price of inputs would show a fall in the price of light by a factor of 3 over the last century. On the other hand, if the price index were incorrectly constructed, say by using 1883 consumption weights and tracking gas/kerosene prices, it would show a substantial increase by a factor of 10. A true (frontier) price index of output or illumination, according to Nordhaus, would track the lowest solid line (Figure 9.4), which shows a decline by a factor of 75 over the century of concern. In summary, it shows a much steeper decline in price relative to the price of electricity because of the improvements in the efficiency of electric lighting. How can such an extraordinary efficiency appear? Chhetri and Islam (2008) pointed out that such a number can be concocted by including only one portion of the energy picture. Similar to what has become ubiquitous today in terms of the high efficiency claimed for electric or hybrid cars, all because the background of the electricity is not included, assigning such a high value amounts to selective bias in favour of electric energy. It is no small irony that Nordhaus calls the conventional procedure ‘biased’.

Figure 9.4 Energy pricing as seen by Nordhaus.
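The gap between the input-based frontier index (a fall by a factor of 3) and the ‘true’ index of illumination (a fall by a factor of 75) can be read as an implied efficiency gain. The sketch below assumes, for illustration only, that the price of light equals the input price divided by the light delivered per unit of input; that relation is an assumption made here, not a formula quoted from Nordhaus.

```python
from fractions import Fraction

# Factors quoted in the text for the century considered.
input_price_fall = Fraction(3)   # frontier (electricity) input price index
true_price_fall = Fraction(75)   # 'true' price index of illumination


def implied_efficiency_gain(true_fall, input_fall):
    """Efficiency multiplier needed to reconcile the two indices.

    Assumes price_of_light = input_price / light_per_unit_input, so the
    fall in the light price is the input price fall times the efficiency gain.
    """
    return true_fall / input_fall


gain = implied_efficiency_gain(true_price_fall, input_price_fall)
print(f"Implied gain in light output per unit of input: {gain}x")
```

Under that assumption, most of the claimed decline comes from the efficiency term rather than from input prices, which is the term the criticism cited from Chhetri and Islam (2008) is directed at.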

Nordhaus (1993) introduced his DICE model in the realm of science. However, the principal conclusion of this paper was that there should be a carbon tax. Without any scientific evidence, the paper started off with the conclusion that “a modest carbon tax would be an efficient approach to slow global warming, while rigid emissions-stabilization approaches would impose significant net economic costs.” This so-called ‘Dynamic Integrated Climate-Economy model’ claimed to have included dynamic policies as well as dynamic data. This was an improvement over the past model, which was steady state. The following assumptions were made:

- The economy is endowed with an initial stock of capital, labor, and technology, and all industries behave competitively;

- Each country maximizes an intertemporal objective function, identical in each region, which is the sum of discounted utilities of per capita consumption times population;

- Output is produced by a Cobb-Douglas production function in capital, labor, and technology;

- Population growth and technological change are exogenous, while capital accumulation is determined by optimizing the flow of consumption over time.
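The Cobb-Douglas assumption in the list above can be sketched numerically. The block below uses the generic textbook form Q = A·K^γ·L^(1−γ) and checks two of its well-known properties: diminishing marginal returns to capital and a constant share of output going to capital. The parameter values are hypothetical and are not taken from the DICE calibration.

```python
def cobb_douglas(A, K, L, gamma):
    """Output Q = A * K**gamma * L**(1 - gamma)."""
    return A * K**gamma * L**(1.0 - gamma)


def marginal_product_of_capital(A, K, L, gamma):
    """Partial derivative of Q with respect to K."""
    return gamma * A * K**(gamma - 1.0) * L**(1.0 - gamma)


def capital_share(A, K, L, gamma):
    """Share of output paid to capital if capital earns its marginal product."""
    mpk = marginal_product_of_capital(A, K, L, gamma)
    return mpk * K / cobb_douglas(A, K, L, gamma)


# Hypothetical values chosen only for illustration.
A, L, gamma = 1.0, 100.0, 0.25
for K in (50.0, 100.0, 200.0):
    mpk = marginal_product_of_capital(A, K, L, gamma)
    share = capital_share(A, K, L, gamma)
    print(f"K={K:6.1f}  MPK={mpk:.4f}  capital share={share:.4f}")
```

The marginal product falls as K grows while the share stays at γ regardless of K; these are mathematical conveniences of the functional form and do not depend on any engineering description of production.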

On the technological side, in modeling GHG emissions, Nordhaus assumes that the ratio of uncontrolled GHG emissions to gross output is a slowly moving parameter represented by σ(t). The fundamental assumption of his work is that GHG emissions can be reduced through a wide range of policies. In this analysis, an ‘emissions control factor’, μ(t), is introduced so that the amount of CO2 can be reduced and its impact studied. This term is the fractional reduction of emissions relative to the uncontrolled level. Scientifically, this emission controlling factor has no meaning other than for a parametric study. It assumes that somehow emissions can be reduced and, as a consequence, he wants to study what happens to the rest of the climate. So, Nordhaus is merely looking at how to optimize the trajectory of emissions control. The emissions equation is given as:

$$E(t) = [1 - \mu(t)]\,\sigma(t)\,Q(t) \tag{9.1}$$

where E(t) is GHG emissions, Q(t) is the gross output, σ(t) is determined from historical data, and it is assumed that GHG emissions were uncontrolled through 1990, meaning there was no reduction, μ(t), effectuated before that date. In addition to previous assumptions, this equation further assumes that the Cobb–Douglas function of capital, labor, and technology applies to climate change. The most egregious aspect of this assumption is the conflation of anthropogenic CO2 with natural CO2 and the application of a mechanical relationship to nature, for which nothing of modern engineering applies. Of course, the Cobb–Douglas production function was not developed on the basis of any knowledge of engineering, technology, or even management of the production process. It was instead developed because it had attractive mathematical characteristics, such as diminishing marginal returns to either factor of production and the property that expenditure on any given input is a constant fraction of total cost. Equation 9.1 serves as the basis for the entire DICE model and this represents the non-scientific nature of this model. Lindzen (2018) also called out the absurdity of using anthropogenic CO2 as the controlling parameter for global warming, although it represents a minuscule portion of the global CO2 output.
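Reading σ(t) as uncontrolled emissions per unit of gross output and μ(t) as the fractional reduction relative to the uncontrolled level, as defined above, emissions in a period follow as the product (1 − μ)·σ·Q. The sketch below encodes that reading; the function name and the numerical values are hypothetical and chosen only to show how the control factor acts as a simple multiplier.

```python
def emissions(mu, sigma, gross_output):
    """Emissions E = (1 - mu) * sigma * Q for a single period.

    mu           -- fractional reduction of emissions, between 0 and 1
    sigma        -- uncontrolled emissions per unit of gross output
    gross_output -- gross output Q
    """
    if not 0.0 <= mu <= 1.0:
        raise ValueError("mu must lie between 0 and 1")
    return (1.0 - mu) * sigma * gross_output


# Hypothetical inputs: sigma and Q are illustrative, not calibrated values.
sigma, Q = 0.5, 20.0
for mu in (0.0, 0.25, 1.0):
    print(f"mu={mu:.2f} -> E={emissions(mu, sigma, Q):.2f}")
```

With μ = 0 the expression reduces to the uncontrolled level σ·Q, which is the situation assumed through 1990; any other value of μ rescales emissions without describing how the reduction is physically achieved.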

The next part of the model introduces a number of relationships that attempt to capture the major forces affecting climate change. It is assumed that there is instant mixing of various gases. The following equation is assumed to govern the CO2 accumulation:

$$M(t) = \beta E(t) + (1 - \delta_M)\,M(t-1) \tag{9.2}$$

where M(t) is the change in concentrations from pre-industrial times, β is the marginal atmospheric retention ratio, and δM is the rate of transfer from the quickly mixing reservoirs to the deep ocean. Similar to Eq. 9.1, Eq. 9.2 is the GHG analog of the capital accumulation equation. Atmospheric concentrations in a period are determined by the last period’s concentrations [M(t−1)] times (1−δM), where δM is the rate of removal of GHGs. This approximation essentially assumes that none of the CO2 emitted is absorbable within the ecosystem. With this formulation, another series of linear relationships between CO2 accumulation and climate change is invoked. The climate system is represented by a multilayer system, namely three layers – the atmosphere, the mixed layer of the oceans, and the deep oceans – each of which is assumed to be well mixed. This is the crudest version of the 2-layer atmospheric model, as discussed in Chapter 2. It also assumes instant mixing and an adiabatic wall – both illogical in a natural setting. The accumulation of GHGs is assumed to warm the atmospheric layer, which then warms the mixed ocean, which in turn diffuses into the deep oceans. The lags in the system are primarily due to the thermal inertia of the three layers. This model is written as:

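$$
\begin{aligned}
T_1(t) &= T_1(t-1) + \frac{1}{R_1}\left\{F(t) - \lambda T_1(t-1) - \frac{R_2}{\tau_2}\left[T_1(t-1) - T_2(t-1)\right]\right\} \\
T_2(t) &= T_2(t-1) + \frac{1}{R_2}\left\{\frac{R_2}{\tau_2}\left[T_1(t-1) - T_2(t-1)\right]\right\}
\end{aligned}
\tag{9.3}
$$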

where Ti(t) = temperature of layer i in period t (relative to the pre-industrial period); i = 1 for the atmosphere and upper oceans (rapidly mixed layer) and i = 2 for the deep oceans; F(t) = radiative forcing in the atmosphere (relative to the pre-industrial period); Ri = the thermal capacity of the different layers; τ2 = the transfer rate from the upper layer to the lower layer; and λ = the transfer rate f

The above expression essentially treats the atmosphere like a laboratory flask and presumes that the data available to calibrate the model are as abundantly available as a laboratory test.
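The accumulation rule described earlier (last period’s concentration times (1 − δM), plus the retained fraction β of new emissions) is a one-line recurrence and can be sketched as follows. The sketch assumes emissions enter as β·E(t) in the same period; all parameter values are hypothetical and serve only to show the behaviour of the recurrence, not a calibration.

```python
def step_concentration(M_prev, E, beta, delta_M):
    """One period of M(t) = beta * E(t) + (1 - delta_M) * M(t-1)."""
    return beta * E + (1.0 - delta_M) * M_prev


def simulate(M0, emissions_path, beta, delta_M):
    """Concentration path starting from M0 for a given emissions sequence."""
    path = [M0]
    for E in emissions_path:
        path.append(step_concentration(path[-1], E, beta, delta_M))
    return path


# Hypothetical parameters and a constant emissions path.
beta, delta_M, E_const = 0.5, 0.1, 10.0
path = simulate(0.0, [E_const] * 200, beta, delta_M)

# For constant emissions the recurrence has the fixed point beta * E / delta_M.
fixed_point = beta * E_const / delta_M
print(f"after 200 periods: {path[-1]:.4f}; fixed point: {fixed_point:.4f}")
```

Because the rule is linear with constant coefficients, the whole carbon cycle is summarized by two numbers, β and δM, which is the sense in which the formulation treats the system like a single well-mixed vessel.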

Ignoring the fact that the above formulation has no scientific basis, Nordhaus proclaims that the most important shortcoming is that the damage function, particularly the response of developing countries and natural ecosystems to climate change, is poorly understood at present. This apparent admission of a shortcoming diverts attention from the fact that the formulation has no scientific basis and that the fundamental assumptions involved are fatally flawed and illogical. What it does in addition is to create hysteria. Note the following disclaimer:

“… the potential for catastrophic climatic change, for which precise mechanisms and probabilities have not been determined, cannot currently be ruled out. Furthermore, the calculations omit other potential market failures, such as ozone depletion, air pollution, and R&D, which might reinforce the logic behind greenhouse gas reduction or carbon taxes. Issues of sensitivity analysis with respect to either parameters or components of the model have not been addressed in this study, although an examination of these issues is underway, as discussed above. And finally, this study abstracts from issues of uncertainty, in which risk aversion and the possibility of learning may modify the stringency and timing of control strategies.”

However, this formulation would set the stage for a universal carbon tax decades later. To the credit of Nordhaus, other scientists have been duped into taking his conclusions as facts, and the entire debate has revolved around peripheral issues, without focus on the core lack of science in the DICE formulation (see, for instance, Kelly and Kolstad, 2001). Economists have served as the drumbeaters of the climate change proponents. Consider the following quote from Fatih Birol, executive director of the International Energy Agency, explaining his warning that oil prices may be entering the “red zone”.

“It seems like expensive energy is back, and back at the wrong time for the global economy … Global economic growth is losing momentum, there are major currency issues in emerging countries, and trade tensions among major players” (Quoted by Financial Times, 2018).

Subsequent versions of DICE have been used by policymakers to investigate alternative approaches to slowing climate change, namely through the application of carbon taxes. This line of cognition dominates Nordhaus’ take on the ‘economics of climate change’. He was not challenged and he continued to make his mark on energy politics. He co-edited a National Research Council (NRC) report that concluded that the current federal tax provisions have minimal net effect on greenhouse gas emissions. The report found that several existing tax subsidies have unexpected effects, and others yield little reduction in greenhouse gas emissions per dollar of revenue loss. This report was the result of a Congressional request to evaluate the most important tax provisions that affect carbon dioxide and other greenhouse gas emissions and to estimate the magnitude of the effects. The report considered both energy-related provisions – such as transportation fuel taxes, oil and gas depletion allowances, subsidies for ethanol, and tax credits for renewable energy – as well as broad-based provisions that may have indirect effects on emissions, such as those for employer-provided health insurance, owner-occupied housing, and incentives for investment in machinery.

Using energy economic models based on the 2011 U.S. tax code, the committee found that the combined effect of energy-related tax subsidies on greenhouse gas emissions is minimal and could be negative or positive. It noted that estimating the precise impact of the provisions is difficult because of the complexities of the tax code and regulatory environment. However, it found that these provisions achieve very little greenhouse gas reduction at substantial cost; the U.S. Department of the Treasury estimates that the combined federal revenue losses from energy-sector tax subsidies in 2011 and 2012 totalled $48 billion. The report concluded that while few of these provisions were created solely to reduce greenhouse gas emissions, they are a poor tool for doing so. In this process, no one questioned the source of the greenhouse gases that they were trying to reduce. As we will see in a later section of this chapter, greenhouse gases from manmade activities are minuscule compared to what is emitted naturally.

Not surprisingly, the models indicated that the provisions subsidizing renewable electricity reduce greenhouse gas emissions, while those for ethanol and other biofuels may have slightly increased greenhouse gas emissions. However, the debate delved into national output. They suggested that broad-based provisions such as tax incentives to increase investment in machinery affect emissions primarily through their effect on national economic output. In other words, when a broad-based tax provision is removed, the percent change in emissions is likely to be close to the percent change in national output.

It was quite stunning that the committee came up with the recommendation that tax provisions and climate change policy can make a substantial contribution to meeting the nation’s climate change objectives, although they themselves determined that the current approaches were ineffective in making any dent in greenhouse gas emissions. Without any evidence, and in fact with evidence to the contrary, they recommended that carbon taxes or tradable emissions allowances would be the most effective and efficient ways of reducing greenhouse gases. Their science had no logic and there was a total disconnection between what was observed and what was recommended.

Ever since the development of the DICE and RICE models, no science has been added to those strictly empirical models, yet they have been assigned the name ‘science-based’ and the entire debate has been over how much emission reduction must be imposed and what carbon tax must be levied.

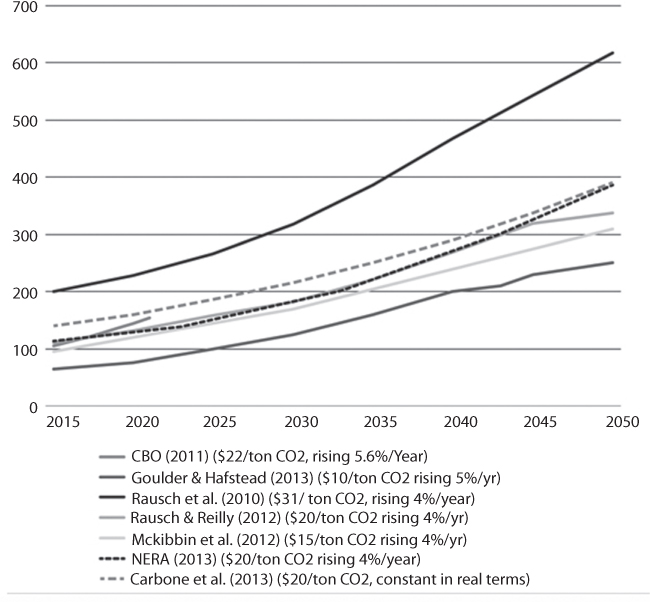

Then came the presentation of the Nobel Prize in Economic Sciences, and the latest model, DICE-2016R2, was instantly sanctified; its predictions under four different scenarios, each applying a different carbon tax amount, were gilded as the epitome of science-based policy making. The Nobel Committee tweeted,

“Laureate William Nordhaus’ research shows that the most efficient remedy for problems caused by greenhouse gas emissions is a global scheme of carbon taxes uniformly imposed on all countries. The diagram shows CO2 emissions for four climate policies according to his simulations.”

The Base case involves no new climate change policy beyond 2015 policies. The Opt option involves carbon taxes that maximize global welfare, using conventional economic assumptions about the importance of the welfare of future generations. The Stern option involves carbon taxes that maximize global welfare, with substantially more emphasis on the welfare of future generations than in scenario two, as suggested in The Economics of Climate Change: The Stern Review, from 2007. The T < 2.5 option involves carbon taxes high enough to keep global warming from ever exceeding 2.5 °C, implemented at minimum global welfare cost. If one had any doubt that this is the epitome of knowledge, the following picture was plastered as a reminder that civilization has arrived and we are about to embark on the ultimate enlightenment, as depicted in Picture 9.1.

Picture 9.1 New Science has introduced a version of science that includes fundamental false assumptions that are in line with the desired outcome.

Figure 9.5 Predicted CO2 emissions tweeted by the Royal Swedish Academy of Sciences (credit to Johan Jarnestad/The Royal Swedish Academy of Sciences, 2018).

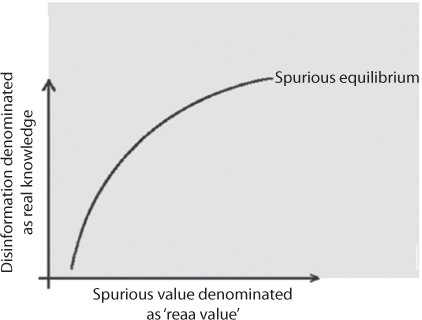

What we have here is a correlation and a discussion around that correlation without first establishing or even stating the theory. Of course, correlation does not mean causation, so science does not accept everything that correlates. Before correlation comes the link to causation. Repeatedly, scientists have fallen into this ‘correlation means causation’ argument. Yet, the science used has been analogous to the pirate vs. global warming correlation (Figure 9.6). This figure, available on the Internet and previously used by Islam et al. (2010a) to show the spurious nature of modern-day probability theories, presents a case in which an absurd correlation between the number of pirates and natural disasters is sought. The conclusion derived from this plotting is that global warming, earthquakes, hurricanes, and other natural disasters are a direct effect of the shrinking number of pirates since the 1800s. At this point, the debate becomes how accurate the correlation is and what can be done to bring up the number of pirates without invoking too much disturbance on the high seas. This metaphor helps in understanding the problem associated with the modern-day connection between global warming, CO2 concentration and carbon tax.

Figure 9.6 Illustration of how an absurd conclusion cannot be avoided unless true science is introduced.
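The pirate metaphor can be reproduced numerically. The following is a minimal sketch, not taken from any cited study: every number in it (the pirate counts, the temperatures, the noise levels, the 200-year span) is a hypothetical value chosen only for illustration. Two series are generated independently of each other, one trending down and one trending up. Their levels correlate almost perfectly, while their year-to-year changes show essentially no relationship.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def first_differences(xs):
    """Year-to-year changes of a series."""
    return [b - a for a, b in zip(xs, xs[1:])]


# Hypothetical data: two series that share nothing except a trend in time.
rng = random.Random(0)  # fixed seed so the run is repeatable
years = range(200)
pirates = [1000.0 - 4.0 * t + rng.gauss(0, 20) for t in years]      # declining count
temperature = [14.0 + 0.01 * t + rng.gauss(0, 0.1) for t in years]  # rising value

# Correlation of the raw levels is dominated by the two trends.
r_levels = pearson(pirates, temperature)

# Correlation of the changes removes the trends and leaves only the noise.
r_changes = pearson(first_differences(pirates), first_differences(temperature))

print(f"correlation of levels:  {r_levels:.2f}")
print(f"correlation of changes: {r_changes:.2f}")
```

The strong correlation of the levels says nothing about pirates and temperature. It only reflects the fact that both series change steadily with time, which is why a correlation cannot stand in for a stated causal theory.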

Without any scientific basis, the decades-old empirical model has now assumed the place of the most scientifically accurate predictive tool, and its forecasts are treated as exact and final. For instance, it is claimed that if carbon taxes were 6 to 8 times higher than today’s levels, drastic emission cuts could be achieved over a 25-year period (and maintained for much longer). This one-sided research purports to show how economic activity and policymaking (the creation and application of taxes) can interact with basic chemistry and physics (the carbon emissions) to slow climate change. For example, if the highest-level taxes were applied, global warming could be kept from exceeding 2.5 C. Note how closely the value 2.5 hovers around the almost century-old 3 C that was picked out of thin air and flaunted as the ultimate number for climate change.

Yet, anytime this ‘science’ is challenged, insults are doled out. Within minutes of the Nobel Committee flaunting carbon tax as the only solution to save humanity, insulting tweets were directed at a tweet that attempted to talk logic (Screenshot 9.1).

Screenshot 9.1 Twitter feeds in response to the announcement of Universal carbon tax.

9.3 Historical Development

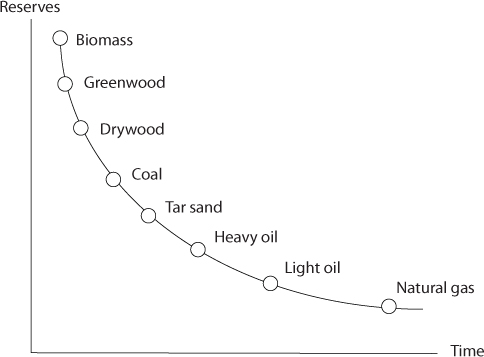

Progress in humanity is synonymous with how we manage our energy needs. Notwithstanding what is routinely put out in popular science and mainstream media, the energy crisis that we face today is not a result of continuous progress in society, let alone the product of human evolutionary traits. The current civilization is unique in the sense that no other epoch has been fixated on fear and greed the way this one has been. In today’s culture of fear and greed, in which every fear is perpetuated in order to profit from the scared population, many tactics are in place. The most popular one is the claim that every war we are in is about oil. Then the scientific community, which is yet another sellout to the grand scheme, rings another warning bell: we are soon to run out of oil, and another resource must be put in place at an extra cost. The moment we make progress toward increasing the oil supply through new extraction techniques and expansion of the resource base, there comes another debilitating fear: global warming. It used to be the so-called peak oil theories that popped out from all corners. It is the same hype that was concocted in the last century about the world running out of coal. Then comes another round of apoplectic messiahs who warn us about global warming and vilify carbon – of all things – as the enemy of life on earth. Before anyone can stop gasping, the economists come out and ring another warning bell: all of this has to be remedied for a fee, and we simply do not have enough to go around.

In this section, the history of technological development from the pre-industrial age to the petroleum era is reviewed. There is a colloquial expression to the effect that exact change plus faith in the Almighty will always get you downtown on the public transit service. On the one hand, with or without faith, all kinds of things could happen with the public transit service before the matter of exact fare even enters the picture. On the other hand, with or without exact fare, other developments could intervene to alter the availability of the service and even cancel it. This helps isolate one of the key difficulties in uncovering and elaborating the actual science of increased carbon dioxide concentrations in the atmosphere. All kinds of activities can increase CO2 output into the atmosphere; but precisely which activities can be held responsible for consequent global warming or other deleterious impacts? Both the activity and its CO2 output are necessary, but neither by itself is sufficient, for establishing what the impact may be and whether it is deleterious. The delinearized history has to involve different epochs: the pre-industrial age, the industrial age, and then the petroleum era. Of course, the CO2 emission history uses the pre-industrial period as the benchmark. However, when it comes to causation, the petroleum industry is held accountable for the rise in CO2 level. Therefore, we have to study the petroleum period separately.

9.3.1 Pre-Industrial

One commonly encountered argument attempts to frame the historical dimension of the problem, more or less, as follows: once upon a time, the scale of humanity’s efforts at securing a livelihood was insufficient to affect overall atmospheric levels of CO2. The implication is that, with the passage of time and the development of more extensive technological intervention in the natural-physical environment, everything just got worse. However, from prehistoric times onward, there have been important periods of climate change in which the causes could not have had anything to do with human intervention in the environment, especially not at the level we see today that is widely blamed for “global warming.”

Nevertheless, these had consequences that were extremely significant and even devastating for wide swaths of subsequent human life on this planet. One of the best-known climate changes was the period of almost two centuries of cooling in the northern hemisphere during the 13th and 14th centuries CE, in which Greenland is said to have acquired much of its most recent ice cover. This definitively brought to an end any further attempts at colonizing the north and northwest Atlantic by Scandinavian tribes (descended from the Vikings), creating the opening for later commercial fisheries to expand into the northwest Atlantic by using Basque, Spanish, Portuguese and eventually French and British fishermen and fishing enterprises – the starting-point of European colonization of the North American continent.

9.3.2 Industrial Age

The Industrial Age will be remembered as the epoch that founded the culture of Dogma justified with the hubris of science. The critical review of all major theories (scientific as well as social) demonstrates that the most fundamental and fatal shortcoming of these theories is that their fundamental premises are spurious or unnatural (Islam et al., 2018a). In fact, every diagnosis process starts off with a spurious first premise, making the entire process aphenomenal. Not surprisingly, every publication comes up with a different explanation for the same set of data. The problem is further convoluted by the fact that there is no standard theory that is free from inherent spurious premises (Islam et al., 2014). Yet, there is no shortage of hubris from both scientists and social scientists. Wilhelm Ostwald (1853–1932, recipient of the 1909 Nobel Prize in Chemistry for his work on catalysis) was credited with expanding explicitly “the second law of energetics to all and any action and in particular to the totality of human actions…. All energies are not ready for this transformation, only certain forms which have been therefore given the name of the free energies…. Free energy is therefore the capital consumed by all creatures of all kinds and by its conversion everything is done” (Ostwald, 1912; quoted by Smil, 2017). Ostwald is usually credited with inventing the Ostwald process (patent 1902), used in the manufacture of nitric acid, although the basic chemistry had been patented some 64 years earlier by Kuhlmann. The science that Ostwald introduced would pave the way to developing the chemical fertilizers that triggered the ‘green revolution’ around the world in the 1960s. This ‘revolution’ was completed with another ‘miraculous’ technology in the name of DDT, which fetched a Nobel Prize for Paul Hermann Müller in 1948, only to be banned in 1972. We have seen in the previous chapter how consequential these technological breakthroughs have been to the environment, in particular to the carbon culture.

It is the same story in social science and economics. The term “social science” first appeared in the 1824 book An Inquiry into the Principles of the Distribution of Wealth Most Conducive to Human Happiness; applied to the Newly Proposed System of Voluntary Equality of Wealth by William Thompson (1775–1833). The concern for social equality was at the core of social thinking but did not have a definite form, let alone concrete theories. Auguste Comte (1797–1857) argued that ideas pass through three rising stages: theological, philosophical and scientific. He defined the difference as the first being rooted in assumption, the second in critical thinking, and the third in positive observation. This framework, still rejected by many, encapsulates the thinking that was to push economic study from being a descriptive discipline to a mathematically based one. Karl Marx was one of the first writers to claim that his methods of research represented a scientific view of history in this model. In the late 19th century, attempts to apply equations to statements about human behavior became increasingly common. Among the first were the “Laws” of philology, which attempted to map the change over time of sounds

After Newton, all modern European social theories are premised on considering humans as ‘just another species’, disconnecting human conscience or consciousness from human behavior. Malthus (1766–1834), who inspired the likes of Charles Darwin, Paul R. Ehrlich, Francis Place, Raynold Kaufgetz, Garrett Hardin, John Maynard Keynes, Pierre François Verhulst, Alfred Russel Wallace, William Thompson, Karl Marx, Mao Zedong, introduced this false premise.

Blumer (1954) dared question social theories advanced in the enlightened world, albeit limited to empirical science. He wrote:

“Now, it should be evident that concepts in social theory are distressingly vague. Representative terms like mores, social institutions, attitudes, social class, value, cultural norm, personality, reference group, social structure, primary group, social process, social system, urbanization, accommodation, differential discrimination and social control do not discriminate cleanly their empirical instances. At best they allow only rough identification, and in what is so roughly identified they do not permit a determination of what is covered by the concept and what is not.”

All modern European social theories are premised on considering humans as ‘just another species’, disconnecting human conscience or consciousness from human behavior (Islam et al., 2013). Here, we will discuss one of the most widely accepted (consciously or otherwise) theories, which is based on the premise that humans are liabilities. This theory is at the core of all other theories, including peak oil theory. Reverend Thomas Robert Malthus, a British scholar, advanced the theory of rent. In his publications from 1798 through 1826, he identified various factors that would affect human population. For him, the population gets controlled by disease or famine. His predecessors believed that human civilization could be improved without limitation. Malthus, on the other hand, thought that “the power of population is indefinitely greater than the power in the earth to produce subsistence for man”. He added his religious fervor to this doctrine, considered it divine, and wrote:

“Must it not be acknowledged by an attentive examiner of the histories of mankind, that in every age and in every State in which man has existed, or does now exist, that the increase of population is necessarily limited by the means of subsistence, that population does invariably increase when the means of subsistence increase, and that the superior power of population is repressed, and the actual population kept equal to the means of subsistence, by misery and vice?”

He supported the Corn Laws and opposed the Poor Laws. The Poor Laws had been in place for centuries to deal with the ‘nuisance’ of beggars and the ‘impotent poor’. The Corn Laws were supposed to protect local farmers from less expensive imports of wheat and other food grains. The Corn Laws need some elaboration. In 1815, a volcanic eruption in the Indonesian archipelago had tremendous consequences. It spewed an enormous volume of dust into the atmosphere that travelled around the globe in the jet stream and led to the “year with no summer” in Europe and the northern half of North America. In 1817, grain crops on the continent of Europe failed. In industrial Great Britain, where the factory owners and their politicians boasted how the country’s highly advanced industrial economy (relative to the rest of the world) had overcome the “capriciousness of Nature,” hunger and famine actually stalked the English countryside for the first time in more than a century and a half. The famine conditions were blamed on the difficulties attending the import of extra supplies of food from the European continent and led directly to tremendous and unprecedented pressure to eliminate the Corn Laws – the system of high tariffs protecting English farmers and landlords from the competition of cheaper foodstuffs from Europe or the Americas. Politically, the industry lobby condemned the Corn Laws as the main obstacle to cheap food, winning broad public sympathy and support. Economically, the Corn Laws actually operated to keep hundreds of thousands employed in the countryside on thousands of small agricultural plots, at a time when the demands of expanding industry required uprooting the rural population and forcing it to work as factory labor. Increasing the industrial reserve army would enable British industry to reduce wages. Capturing command of that new source of cheaper labor was, in fact, the industrialists’ underlying aim.

Without the famine of “the year with no summer,” it seems unlikely that British industry would have targeted the Corn Laws for elimination, therefore blasting its way into dominating world markets. Even then, because of the still prominent involvement of the anti-industrial lobby of aristocratic landlords who dominated the House of Lords, it would take British industry nearly another 30 years. Between 1846 and 1848 Parliament eliminated the Corn Laws, industry captured access to a desperate workforce fleeing the ruin brought to the countryside, and overall industrial wages were driven sharply downwards. On this train of economic development, the greatly increased profitability of British industry took the form of a vastly whetted appetite for new markets at home and abroad, including the export of important industrial infrastructure investments in “British North America,” i.e., Canada, Latin America, and India. Extracting minerals and other valuable raw materials for processing into new commodities in this manner brought an unpredictable level of further acceleration to the industrialization of the globe in regions where industrial capital had not accumulated significantly, either because traditional development blocked its role or because European settlement remained sparse.

Malthus’ theories were later rejected based on empirical observations that confirmed that famine and natural disasters are not the primary cause of population control. Many economists, including Nobel Laureate Amartya Sen (1998), confirmed that man-made factors play a greater role in controlling the human population. However, Malthus’ theory lives on in every aspect of European social science and hard science. Malthus’ most notable follower was Charles Darwin, whose theory of natural selection is eerily similar to Malthusian theory. They are similar in two ways: 1) they both assume that humans are just another species, thus disconnecting human conscience from the human being; 2) they both use natural causes as the sole factor in deciding human population, thus implying that they knew the underlying program of nature. Of more significance is the fact that Darwin was considered to be a ‘hard scientist’ whereas Malthus was considered to be a social scientist and an economist. This aspect needs some elaboration. Darwin said that the emergence of a species distinct in definite ways from its immediate predecessor and new to the surrounding natural environment generally marked the final change in the sequence of steps in an evolutionary process. The essence of his argument concerned the non-linearity of the final step, the leap from what was formerly one species to distinctly another species. Darwin was silent on the length of time that may have passed between the point at which the immediate predecessor emerged and the last observed change in the species-line. That interval, the characteristic time of the predecessor species, was the period in which all the changes so significant for later on were prepared. It could be eons, spanning perhaps several geological eras. This idea of tNATURAL as characteristic time is missing from every European theorist. This is not unexpected. Ever since the work of Thomas Aquinas, European scientists simply repeated the dogmatic adherence to tangible timelines while distancing themselves from doctrinal philosophy. However, as Islam et al. (2018a) have recently pointed out, they did not employ the scientific methodology of Averroes, even while accepting him as the father of secular philosophy in Europe and claiming to be secular themselves. This claim was not genuine. A second, but equally telling, source of pressure on social scientists to mathematize their research methodology was a sense that their work would not be taken seriously as scientific without some such mathematical rigor. As the models and mathematics from the so-called “exact” sciences would hardly be appropriate or seem credible in any field of study focusing on human beings and their incredible variety of needs, wants and impulses, another kind of mathematics would have to do. This fascination comes from another trail of cognition that was popularized when the term social science was introduced. Questions of history and historical phenomena were also a convenient target because of the lack of any means to describe them with any meaningful, non-trivial mathematical model.

In terms of economics and purely social theory, John Maynard Keynes (Lord Keynes) was probably the biggest supporter of Malthus. Similar to Malthus, Keynes also believed that historical time had nothing to do with establishing the truth or falsehood of economic doctrine. “In the long run, we are all dead,” he wrote. He tied this to a stance that attacked any unquestioning acceptance of the underlying assumptions propping up all forms of orthodoxy. Accordingly, this retort was taken as the sign of a fresh and rebellious spirit. However, in his own theoretical work he was frequently at pains to differentiate what happens to individuals who are driven by short-term considerations from what happens at the societal level, at which he was theorising about broad, historically sweeping movements of economic cause and effect (Keynes, 1936).

Keynes would emerge completely unscathed. No one challenged his theories that were accepted at face value with doctrinal fervor. Until now, every Nobel Laureate in Economics derives his/her inspiration from Keynes. One of them is Stiglitz, who was deconstructed by Zatzman and Islam (2007) as well as Zatzman (2012, 2013).

Even though it is not explicitly recognized, for obvious reasons, Karl Marx also derived his inspiration from Malthus and did little to change the premise on which Malthus built his theories. For Karl Marx, however, human beings did have a connection to conscience, but that conscience was solely dedicated to “survival”. This ‘conscience’ is no different from what has been known as ‘instinct’ – something that every animal has. This survival, in Marx’s belief, was the reason for dialectical materialism and class struggle. Similar to what has been pointed out in the discourse on human civilization (Islam et al., 2013, 2014a, 2014b, 2014c), the only possibility that Karl Marx did not investigate is the existence of a higher conscience that makes a human unselfish and considerate of long-term consequences. Such deviation from the long-term approach is strictly Eurocentric. Such addiction to the short-term approach was non-existent prior to Thomas Aquinas and the adoption of doctrinal philosophy.

What made Karl Marx popular is his empathy for the poor and the oppressed. His notion of capitalism being the “dictatorship of the bourgeoisie”, a notion that is itself based on the same premise of ‘the human being is an evil animal’, struck a sympathetic chord with a wide range of followers. Similar to what Malthus predicted in terms of population control by famine and natural disasters, Karl Marx predicted that capitalism would be subject to internal conflicts and would implode, being replaced with socialism. This in turn would lead to the replacement of the “dictatorship of the bourgeoisie” with the “dictatorship of the proletariat”. His theory was so convincing that the Soviet Union was formed in 1922, leading the way for many countries that formally adopted Marxism as their political system. In 1949, the People’s Republic of China became communist, immersing nearly half of the world population in a political system that can best be described as the dream application of Karl Marx’s political theory. Marx is recognized as one of the most influential persons of all time (Hart, 2000). Yet, the prediction of Karl Marx that capitalism would be replaced with socialism and eventually give rise to a stateless, classless society has utterly failed. Instead of a stateless society ruled by “workers”, socialism created the biggest and most repressive government regimes in human history. Many celebrate the fact that every promise capitalism has made in terms of a free market economy has been broken and that monopoly has become the modus operandi of the biggest corporations of the ‘free market’ economy, but few point out that such is also the demise of Marxist predictions in societies that did everything to uphold Marx’s ideals.


9.3.3 Age of Petroleum

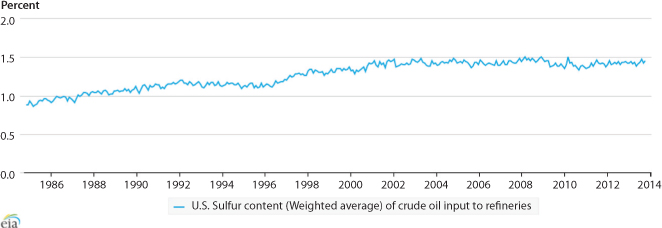

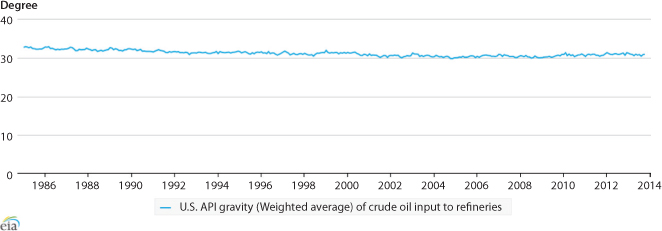

The modern era of oil production and the ensuing age of petro-politics began on August 27, 1859, when Edwin L. Drake drilled the first successful oil well, 69 feet deep, near Titusville in northwestern Pennsylvania. Just five years earlier, the invention of the kerosene lamp had ignited intense demand for oil. By drilling an oil well, Drake had hoped to meet the growing demand for oil for lighting and industrial lubrication. Drake’s success inspired hundreds of small companies to explore for oil. In 1860, world oil production reached 500,000 barrels; by the 1870s production had increased phenomenally to 20 million barrels annually. In 1879, the first oil well was drilled in California, and in 1887 an oil well was drilled in Texas. But as production boomed, prices fell and oil industry profits declined. In 1882, however, John D. Rockefeller devised a solution to the problem of competition in the oil fields: the Standard Oil Trust. The Trust brought together many of the leading refiners in the United States; by controlling crude oil refining, it was able to control the price of oil.

The world economy entered the Age of Petroleum with the rise of an industrial-financial monopoly in one sector of production after another in both Europe and America before and after World War I. Corresponding to this has been the widest possible extension of chemical engineering – especially the chemistry of hydrocarbon combination, hydrocarbon catalysis, hydrocarbon manipulation and rebonding – on which the refining and processing of crude oil into fuel and myriad byproducts, such as plastics and other synthetic materials, crucially depend. As a result, no activity in any society today, be it production or consumption, that is tied to the production and distribution of such output can avoid or significantly mitigate adding to the CO2 burden in the atmosphere. In these developments, carbon and CO2 are, in fact, vectors carrying many other toxic compounds and byproducts of these chemically engineered processes. Atmospheric absorption of carbon and CO2 from human activities or other natural non-industrial activities would normally be continuous. However, what occurs when hydrocarbon complexes combine with inorganic and other substances in ways that occur nowhere in nature is much less predictable, and – on the available evidence – not benign, either. From a certain standpoint, there is logic in attempting to estimate the effects of these other phenomena by taking carbon and CO2 levels as vectors. However, there has never been any justification to assume that the CO2 level itself is the malign element. Such a notion is a non-starter in science in any event, which raises the question: Just what is the role of science?

Today, there is no large petrochemical company or syndicate that has not funded a study or group interested in CO2 levels as a global warming index – whether to discredit or to affirm such a connection. It is difficult to avoid the obvious inference that these very large enterprises, fiercely competing to retain their market shares against rivals, have a significant stake in engineering a large and permanent split in public opinion based on confusing their intoxication of the atmosphere with rising CO2 levels. Whether the consideration is refining for automobile fuels, processing synthetic plastics, or concocting synthetic crude, behind a great deal of the propaganda regarding “global warming” stands a huge battle among oligopolies, cartels, and monopolies over market share. The science of “global warming” is the only means that can separate the key question, “What is necessary to produce goods and services that are nature-friendly?” from the toxification of the environment as a byproduct of the anti-nature bias of chemical engineering in the clutches of the oil barons.

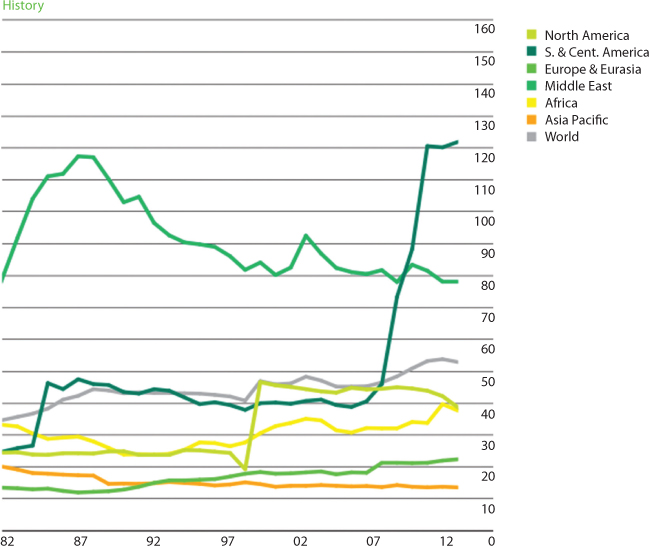

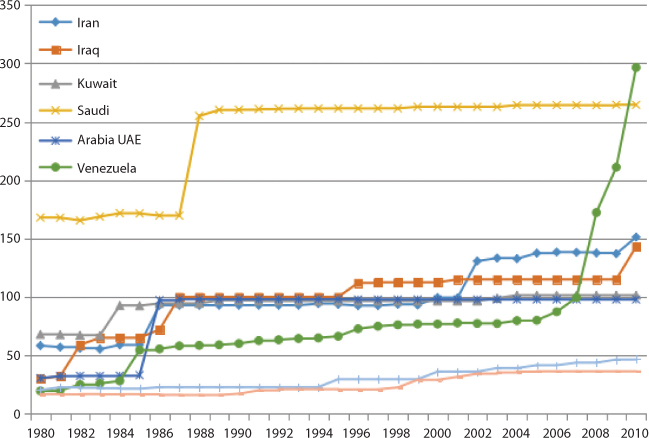

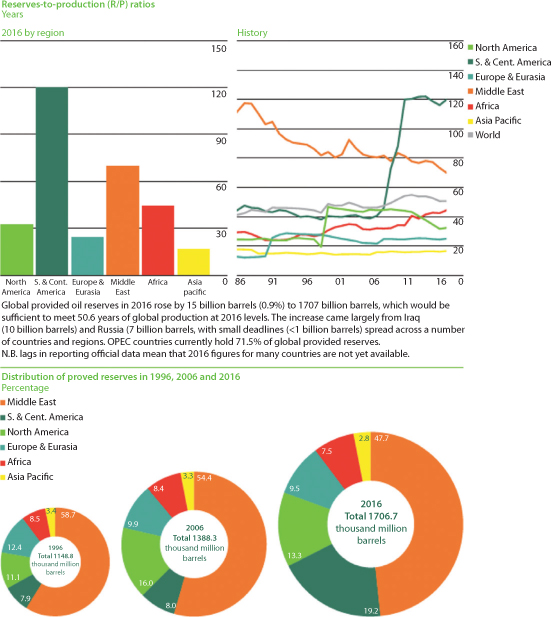

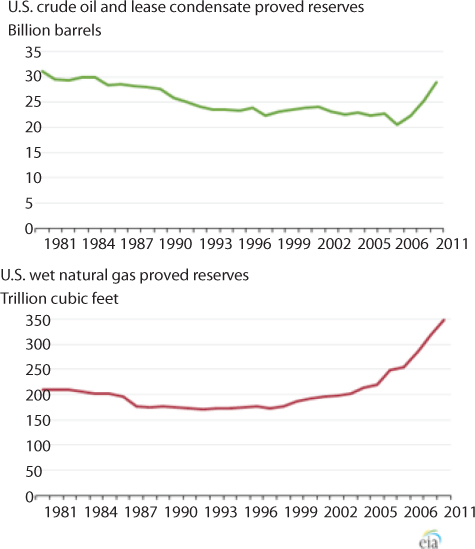

Up until the commencement of World War I in 1914, the United States produced between 60 and 70 percent of the worldwide oil supply. By 1920, oil production reached 450 million barrels – prompting fear that the United States was about to deplete the available reserves and, hence, run out of oil. In fact, Government officials predicted that oil reserves in the United States would last only ten more years. As fears grew that the oil reserves of the United States were seriously depleted, the search for oil turned to a worldwide basis. As a result, crude oil was discovered in Mexico at the beginning of the 20th Century, in Iran in 1908, in Venezuela during World War I, and in Iraq in 1927. Because of the politics of the time and the still-existing empires and/or colonies, many of the new discoveries occurred in areas dominated by Britain and the Netherlands: such as in the Dutch East Indies (now, Indonesia), Iran, and various British mandates in the Middle East. By 1919, Britain controlled 50% v/v of the proven world reserves of crude oil.

However, after World War I, a struggle for the control of world oil reserves erupted. The British, Dutch, and French excluded companies based in and originating in the United States from owning oil fields in territories under their sphere of control. Not surprisingly, the Congress of the United States retaliated in 1920 by adopting the Mineral Leasing Act, which denied access to American oil reserves to any foreign country that restricted American access to its reserves. The dispute was ultimately resolved during the 1920s, when American-based and American-owned oil companies were finally allowed to drill in the then British Middle East and also in the Dutch East Indies.

The fear that oil reserves in the United States were depleted to the point of near exhaustion ended abruptly in 1924, with the discovery of extensive crude oil fields in Texas, Oklahoma, and California. These discoveries, along with production of crude oil from fields in Mexico, the Soviet Union, and Venezuela, combined to significantly lower the price of crude oil. By 1931, with crude oil selling for 10 cents a barrel (equivalent to approximately $1.54 per barrel in current terms, far below the current variable price of $50 to $100 per barrel), domestic oil producers in the United States demanded restrictions on production in order to raise prices. In fact, the major producers of crude oil – Texas and Oklahoma – passed state laws and stationed militia units at oil fields to enforce these laws and prevent drillers from exceeding production quotas. However, despite these measures, the price of crude oil continued to fall. In a final bid to solve the problem of overproduction, the federal government – under the National Recovery Administration (NRA) – imposed production restraints, import restrictions, and price controls. After the Supreme Court of the United States declared the actions of the National Recovery Administration (i.e., the federal government) to be unconstitutional, the federal government took an additional step and imposed a tariff on foreign oil. During World War II, the oil surpluses of the 1930s quickly disappeared – six billion barrels (6 × 10^9 bbls) of the seven billion barrels (7 × 10^9 bbls) of petroleum used by the Allies during the war came from the United States. Again, there was concern that the United States was running out of oil.

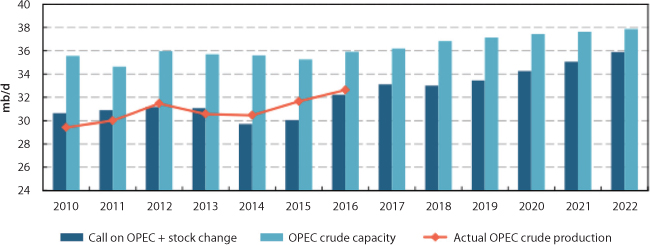

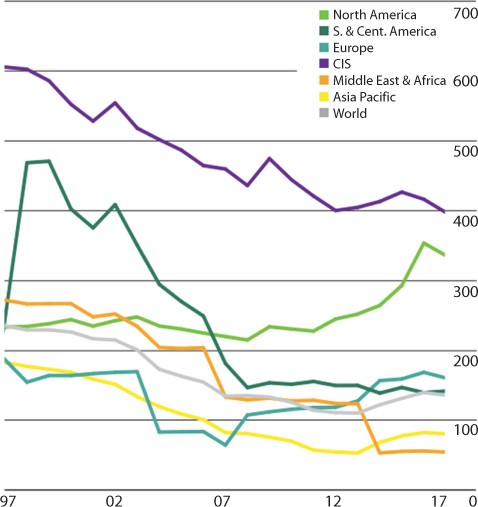

On the other hand, world oil prices were at such low levels that in 1960 Iran, Venezuela, and oil producers in the Middle Eastern countries formed an alliance (often referred to as a cartel) that became known as the Organization of Petroleum Exporting Countries (OPEC) to negotiate oil prices, for the most part seeking higher prices for crude oil. This price-fixing came to a head in the early 1970s when the United States, which depended on the Middle East for a third of its oil, realized that foreign (non-domestic) oil producers were in a position to control and raise oil prices. The oil embargo of 1973 and 1974, during which oil prices quadrupled, and the oil crisis of 1978 and 1979, when oil prices doubled, emphasized the vulnerability of the United States to foreign producers (Yergin, 1991; Speight, 2011b; Yergin, 2011). However, the oil crises of the 1970s had an unanticipated side-effect: higher oil prices stimulated conservation and exploration for new oil sources. As a result of increasing supplies and declining demand, oil prices fell from $35 a barrel in 1981 to $9 a barrel in 1986. The sharp slide in world oil prices was one of the factors that led Iraq to invade neighboring Kuwait in 1990 in a bid to gain control over a substantial portion (in excess of 40% v/v) of Middle Eastern oil reserves. On the other hand, there were oil-producing countries that existed and operated outside of the OPEC cartel which were responsible for producing 60% v/v of the world’s oil, but they faced increasing production hurdles. Many of these non-OPEC producers had older, less productive wells and rising costs for new projects, and in some cases rising domestic demand cut into the export totals, leading to increases in unconventional oil production (NPC, 2007).

Seven of the world’s fifteen largest oil producers are outside of OPEC: Russia, the United States, China, Mexico, Canada, Norway, and Brazil. Some major producers, such as the United States, Mexico, and Norway, have experienced a decline in production in recent years. On the other hand, non-OPEC production, although declining, has been bolstered by significant increases in production from Brazil, Canada, Russia, and other former Soviet states (BP, 2008), as well as by hitherto unavailable oil production from tight formations and from shale formations through the expansion of hydraulic fracturing projects (Speight, 2015).

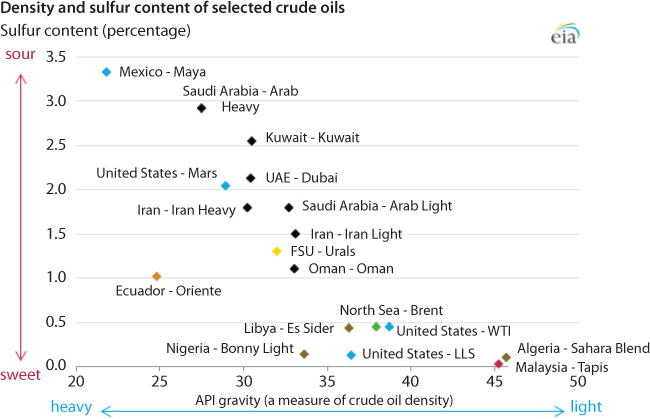

9.3.3.1 High-Acid Crude Oils and Opportunity Crudes

Within the petroleum family are two different types of crude oils based on price: (1) opportunity crude oils and (2) high acid crude oils. Opportunity crude oils are often dirty and need cleaning before refining by removal of undesirable constituents such as high-sulfur, high-nitrogen, and high-aromatics (such as polynuclear aromatic) components. A controlled visbreaking treatment would clean up such crude oils by removing these undesirable constituents (which, if not removed, would cause problems further down the refinery sequence) as coke or sediment. There is also the need for a refinery to be configured to accommodate opportunity crude oils and/or high acid crude oils which, for many purposes, are often included with heavy feedstocks.

High acid crude oils are crude oils that contain considerable proportions of naphthenic acids, a term which, as commonly used in the petroleum industry, refers collectively to all of the organic acids present in the crude oil (Shalaby, 2005; Rikka, 2007). By the original definition, a naphthenic acid is a monobasic carboxyl group attached to a saturated cycloaliphatic structure. However, it has been a convention accepted in the oil industry that all organic acids in crude oil are called naphthenic acids. Naphthenic acids in crude oils are now known to be mixtures of low to high molecular weight acids, and the naphthenic acid fraction also contains other acidic species.

Naphthenic acids can be very water-soluble to oil-soluble depending on their molecular weight, process temperatures, salinity of waters, and fluid pressures. In the water phase, naphthenic acids can cause stable reverse emulsions (oil droplets in a continuous water phase). In the oil phase with residual water, these acids have the potential to react with a host of minerals, which are capable of neutralizing the acids. The main reaction product found in practice is the calcium naphthenate soap (the calcium salt of naphthenic acids). The total acid matrix is therefore complex and it is unlikely that a simple titration, such as the traditional methods for measurement of the total acid number, can give meaningful results to use in predictions of problems. An alternative way of defining the relative organic acid fraction of crude oils is therefore a real need in the oil industry, both upstream and downstream.

High acid crude oils cause corrosion in the refinery. This corrosion is predominant at temperatures in excess of 180 °C (356 °F) (Kane and Cayard, 2002; Ghoshal and Sainik, 2013) and occurs particularly in the atmospheric distillation unit (the first point of entry of the high-acid crude oil) and also in the vacuum distillation units. In addition, overhead corrosion is caused by the mineral salts (magnesium, calcium, and sodium chlorides), which are hydrolyzed to produce volatile hydrochloric acid, causing a highly corrosive condition in the overhead exchangers. These salts therefore represent a significant contaminant in opportunity crude oils. Other contaminants in opportunity crude oils which have been shown to accelerate the hydrolysis reactions are inorganic clays and organic acids.

In addition to taking preventative measures so that the refinery can process these feedstocks without serious deleterious effects on the equipment, refiners will need to develop programs for detailed and immediate feedstock evaluation. Such programs allow the qualities of a crude oil to be understood very quickly, so that the crude oil can be valued appropriately and its processing can be planned meticulously.

9.3.3.2 Oil From Tight Formations and From Shale Formations

In addition, oil from tight sandstone and from shale formations (tight oil) is another type of crude oil (Speight, 2014, 2015). Typically, tight oil is conventional oil that occurs in low-permeability reservoirs. The oil contained in such reservoirs will not flow to the wellbore without assistance from advanced drilling (such as horizontal drilling) and fracturing (hydraulic fracturing) techniques. There has been a tendency to refer to this oil as shale oil. This terminology is confusing and its use should be discouraged, since shale oil has been (for decades) the name given to the distillate produced from oil shale by thermal decomposition (Lee, 1990; Scouten, 1990; Speight, 2012, 2014, 2015).

Tight sandstone formations and shale formations are heterogeneous and vary widely over relatively short distances. Thus, even in a single horizontal drill hole, the amount recovered may vary, as may recovery within a field or even between adjacent wells. This makes evaluation of plays and decisions regarding the profitability of wells on a particular lease difficult. Production of oil from tight formations requires at least 15 to 20 percent natural gas in the reservoir pore space to drive the oil toward the borehole; tight reservoirs which contain only oil cannot be economically produced (EIA, 2013).

The challenges associated with the production of oil from shale formations are a function of the compositional complexity of the oils and the varied geological formations where they are found. These oils are light, but they are very waxy and reside in oil-wet formations. These properties create some of the main difficulties associated with oil extraction from the shale. Such problems include scale formation, salt deposition, paraffin wax deposits, destabilized asphaltene constituents, corrosion, and bacterial growth. Multi-component chemical additives are added to the stimulation fluid to control these problems.

Oil from tight shale formations is characterized by low asphaltene content, low sulfur content, and a significant molecular weight distribution of the paraffinic wax content. Paraffin carbon chains of C10 to C60 have been found, with some shale oils containing carbon chains up to C72. To control deposition and plugging in formations due to paraffins, dispersants are commonly used. In upstream applications, these paraffin dispersants are applied as part of multifunctional additive packages in which asphaltene stability and corrosion control are addressed simultaneously.

Scale deposits of calcite, carbonates and silicates must be controlled during production or plugging problems arise. A wide range of scale additives is available. These additives can be highly effective when selected appropriately. Depending on the nature of the well and the operational conditions, a specific chemistry is recommended or blends of products are used to address scale deposition.

Another challenge encountered with oil from tight shale formations is the transportation infrastructure. Rapid distribution of shale oils to the refineries is necessary to maintain consistent plant throughput. Some pipelines are in use, and additional pipelines are being constructed to provide consistent supply. During the interim, barges and railcars are being used, along with a significant expansion in trucking, to bring these oils to the refineries. Eagle Ford production is estimated to increase by a factor of nearly 6: from 350,000 bpd to approximately 2,000,000 bpd by 2017. Thus, a more reliable infrastructure is needed to distribute this oil to multiple locations. Similar expansion in oil production is estimated for Bakken and other identified (and perhaps as yet unidentified) tight shale formations.
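The size of the Eagle Ford estimate can be checked with simple arithmetic. The short Python sketch below is illustrative only: the function and constant names are hypothetical, and the two production figures are the ones quoted above.

```python
# Illustrative check of the Eagle Ford production estimate quoted in the text.
# All names here are hypothetical; only the two bpd figures come from the text.

EAGLE_FORD_INITIAL_BPD = 350_000      # starting production, barrels per day
EAGLE_FORD_PROJECTED_BPD = 2_000_000  # estimated production by 2017, barrels per day


def growth_factor(initial_bpd: float, projected_bpd: float) -> float:
    """Return the ratio of projected to initial production."""
    if initial_bpd <= 0:
        raise ValueError("initial production must be positive")
    return projected_bpd / initial_bpd


factor = growth_factor(EAGLE_FORD_INITIAL_BPD, EAGLE_FORD_PROJECTED_BPD)
added_bpd = EAGLE_FORD_PROJECTED_BPD - EAGLE_FORD_INITIAL_BPD

print(f"growth factor: {factor:.2f}")                  # growth factor: 5.71
print(f"additional volume to move: {added_bpd:,} bpd")  # additional volume to move: 1,650,000 bpd
```

The ratio is about 5.7, and the additional 1,650,000 bpd is the volume that pipelines, barges, railcars, and trucks would have to absorb.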

9.3.3.3 Natural Gas

The generic term natural gas applies to gas commonly associated with petroliferous (petroleum-producing, petroleum-containing) geologic formations. Natural gas generally contains high proportions of methane (a single carbon hydrocarbon compound, CH4); higher molecular weight paraffins (CnH2n+2), generally containing up to eight carbon atoms, may also be present in small quantities (Mokhatab et al., 2006; Speight, 2007, 2014). The hydrocarbon constituents of natural gas are combustible, but nonflammable non-hydrocarbon components such as carbon dioxide, nitrogen, and helium are also present as minor constituents and are regarded as contaminants.

In addition to the natural gas which exists in petroleum reservoirs, there are also those reservoirs in which natural gas may be the sole occupant. The principal constituent of natural gas is methane, but other hydrocarbons, such as ethane, propane, and butane, may also be present. Carbon dioxide is also a common constituent of natural gas, and trace amounts of rare gases, such as helium, may also occur; certain natural gas reservoirs are a source of these rare gases. Just as petroleum varies in composition, natural gas also has varied composition depending upon the reservoir from which it is produced. Furthermore, differences in natural gas composition occur not only between different reservoirs: wells in the same field may also produce natural gases that are different in composition (Speight, 1990, 2007; Mokhatab et al., 2006; Speight, 2014). Thus, there is no single composition of components which might be termed typical natural gas.

Like petroleum, natural gas has been known for many centuries, but its initial use was probably more for religious purposes rather than as a fuel. For example, gas wells were an important aspect of religious life in ancient Persia (modern-day Iran) because of the importance of fire in the religion of that region. In classical times these wells were often flared and must have been, to say the least, awe inspiring (Forbes, 1964). However, the use of petroleum has been relatively well documented (more so than natural gas) because of its use in warfare and as mastic for walls and roads (Henry, 1873; Abraham, 1945; Forbes, 1958a, 1958b, 1959; James and Thorpe, 1994).

There are several general definitions that have been applied to natural gas that require explanation. For example, associated or dissolved natural gas occurs either as free gas or as gas in solution in the petroleum. Gas that occurs as a solution in the petroleum is dissolved gas whereas the gas that exists in contact with the petroleum (gas cap) is associated gas. In addition, lean gas is gas in which methane is the major constituent and wet gas contains considerable amounts of the higher molecular weight hydrocarbons. Sour gas contains hydrogen sulfide whereas sweet gas contains very little, if any, hydrogen sulfide. In direct contrast to the terminology of the petroleum industry where the residue (residuum, resid) is the high boiling material left after distillation (Speight, 2014), residue gas is natural gas from which the higher molecular weight (higher boiling) hydrocarbons have been extracted and so consists of the lowest boiling hydrocarbons in natural gas. Finally, casing head gas (casinghead gas) is derived from petroleum but is separated at the separation facility at the well-head.

To further define the terms dry and wet in quantitative measures, the term dry natural gas indicates that there is less than 0.1 gallon (one US gallon = 0.003785 m³; 264.2 US gallons = 1 m³) of gasoline vapor (higher molecular weight paraffins) per 1000 ft³ (one ft³ = 0.028 m³). The term wet natural gas indicates that such paraffins are present in the gas in amounts greater than 0.1 gal/1000 ft³.
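The dry/wet distinction and the associated unit conversions can be sketched in a few lines of Python. This is a minimal illustration, not an industry procedure: the function names are hypothetical, and because the text does not say how a gas at exactly 0.1 gal/1000 ft³ should be classed, the sketch assumes it counts as wet.

```python
# Minimal sketch of the dry/wet natural gas criterion described in the text.
# Function and constant names are hypothetical. Assumption: a gas at exactly
# the 0.1 gal/1000 ft3 threshold is treated as wet (the text leaves this open).

US_GALLON_IN_M3 = 0.003785411784   # one US liquid gallon in cubic metres
CUBIC_FOOT_IN_M3 = 0.028316846592  # one cubic foot in cubic metres
DRY_GAS_LIMIT = 0.1                # gallons of condensable paraffins per 1000 ft3


def classify_gas(gal_per_1000_ft3: float) -> str:
    """Return 'dry' below the 0.1 gal/1000 ft3 threshold, otherwise 'wet'."""
    if gal_per_1000_ft3 < 0:
        raise ValueError("liquid content cannot be negative")
    return "dry" if gal_per_1000_ft3 < DRY_GAS_LIMIT else "wet"


def gal_per_1000_ft3_to_litres_per_1000_m3(gal_per_1000_ft3: float) -> float:
    """Convert a liquid content from gal/1000 ft3 to litres/1000 m3."""
    litres = gal_per_1000_ft3 * US_GALLON_IN_M3 * 1000.0  # liquid volume, litres
    gas_m3 = 1000.0 * CUBIC_FOOT_IN_M3                    # gas volume, m3
    return litres / gas_m3 * 1000.0


print(classify_gas(0.05))  # dry
print(classify_gas(0.30))  # wet
print(round(gal_per_1000_ft3_to_litres_per_1000_m3(DRY_GAS_LIMIT), 2))  # 13.37
```

Expressed in metric units, the threshold corresponds to roughly 13.4 litres of condensable liquid per 1000 m³ of gas.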

Just as oil can be produced from tight shale formations (formations having less than 10% v/v porosity and less than 0.1 millidarcy permeability), natural gas is also produced from such formations. Such gas (also called shale gas) is natural gas that has accumulated in, and is locked within, tiny bubble-like pockets in layered sedimentary rock such as shale and tight sandstone formations (Speight, 2015). Tight gas describes natural gas that is dispersed within low-porosity silt or sand areas that create a tight-fitting environment for the gas. In general, the same drilling and completion technology that is effective with shale gas can also be used to access and extract tight gas, even though the selection criteria for a given operation are dependent on many factors (Islam, 2014).
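The two numerical criteria quoted for a tight formation can be written as a simple screening test. The sketch below is an illustration under stated assumptions: the function name is hypothetical, both criteria are assumed to be required together, and real reservoir classification involves many more factors than these two numbers.

```python
# Illustrative screening test using the two criteria quoted in the text:
# porosity below 10% v/v and permeability below 0.1 millidarcy.
# The function name is hypothetical; requiring both criteria is an assumption.

MAX_TIGHT_POROSITY_PERCENT = 10.0  # % v/v
MAX_TIGHT_PERMEABILITY_MD = 0.1    # millidarcy


def is_tight_formation(porosity_percent: float, permeability_md: float) -> bool:
    """Return True when both quoted tight-formation criteria are met."""
    if porosity_percent < 0 or permeability_md < 0:
        raise ValueError("porosity and permeability cannot be negative")
    return (porosity_percent < MAX_TIGHT_POROSITY_PERCENT
            and permeability_md < MAX_TIGHT_PERMEABILITY_MD)


print(is_tight_formation(8.0, 0.05))   # True
print(is_tight_formation(20.0, 50.0))  # False
```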

9.3.3.4 Heavy Oil

Heavy oil is a type of petroleum that differs from conventional petroleum insofar as it is much more difficult to recover from the subsurface reservoir. Heavy oil has a much higher viscosity (and lower API gravity) than conventional petroleum, and its recovery usually requires thermal stimulation of the reservoir (Speight, 2008, 2009, 2014).