Chapter 10

The Science of Global Warming

10.1 Introduction

The global hydrological cycle is integral to the climate system. Even though it has become customary to present climate change as a distinct crisis, the overall climate change pattern depends on the entire ecosystem. The focus is on global temperature, which shows a consistent upward slope in the modern era, coinciding with the introduction of modern data collection techniques. Ever since the creation of the Intergovernmental Panel on Climate Change (IPCC) in 1988, numerous projects have been dedicated to collecting data as well as to making projections with various models (e.g., Figure 10.1). As discussed in previous chapters, these predictive models are rudimentary and do not have a scientific basis.

Figure 10.1 IPCC FAR BAU global surface temperature projection adjusted to reflect observed GHG radiative forcings 1990–2011 vs. observed surface temperature changes (average of NASA GISS, NOAA NCDC, and HadCRUT4) for 1990 through 2012 (Houghton et al., 2001).

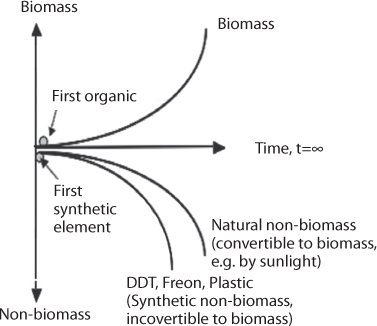

However, the lack of scientific foundation did not stop scientists from making claims. Scores of publications have claimed to know the exact temperature for centuries (even millennia) of history, or to make predictions for centuries to come. They have often added contributions of human activities, meaning activities related to fossil fuel production and utilization (Figure 10.2). For these as well, there has been no scientific rigor. For instance, none considered the long-term impact of the use of pesticides, chemical fertilizers, and genetic alteration on the quality of atmospheric health.

Figure 10.2 Evolution of global mean surface temperature (GMST) over the period of instrumental observations. Grey line shows monthly mean GMST in the HadCRUT4, NOAA, GISTEMP and Cowtan-Way datasets, expressed as departures from 1850–1900, with line thickness indicating inter-dataset range. All observational datasets shown represent GMST as a weighted average of near-surface air temperature over land and sea surface temperature over oceans. Human-induced (yellow) and total (human- and naturally-forced, orange) contributions to these GMST changes (Houghton et al., 2001).

Numerous stations have been installed, and they have generated volumes of data. In general, bias in data collection has been attributed to the time of data collection and the locations of the stations (Williams et al., 2012). These two biases have been the focus of subsequent studies (Muller et al., 2012), and few, if any, have ventured into commenting on the applicability of the data, let alone the scientific merit of the data collected. It is true that considerable bias exists in the stations’ predominant coverage of developed nations and very poor coverage of polar regions (Cowtan and Way, 2014). What is lacking, however, is a scientific study that actually questions the parameters being monitored.

In this chapter, a scientific investigation is conducted in order to answer questions that have eluded previous researchers. The discussion identifies the real source of global warming and unravels the mysteries surrounding climate change issues.

10.2 Current Status of Greenhouse Gases

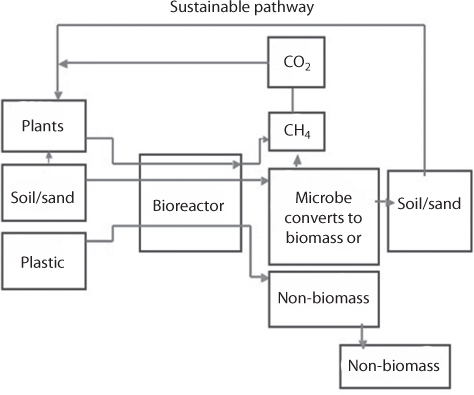

There is almost 100% consensus that there is a climate change crisis caused by anthropogenic CO2 and manifested through a rise in global temperature (Trivedi et al., 2013). In previous chapters, we presented numerous graphs depicting millennia of data, all purporting to ‘prove’ that we are facing a great crisis that challenges the existence of planet Earth. CO2 is a suspect because, although the ocean acts as a sink for a virtually unlimited amount of CO2, its atmospheric concentration has been rising. In this analysis, CO2 has been lumped with other greenhouse gases, even though, unlike them, CO2 is readily used by plants to synthesize carbohydrates, quickly renewing the life cycle. Compare that with, for instance, aerosols of the synthetic kind. Such aerosols are not absorbed by the environment and are instantly rejected by the ecosystem. Even methane and other greenhouse gases are subject to oxidation (at any temperature, because low-temperature oxidation is continuous). In addition, methane oxidation is a microbial metabolic process for energy generation and carbon assimilation carried out by specific groups of bacteria, the methanotrophs. Methane (CH4) is oxidized with molecular oxygen to carbon dioxide, once again being converted into useful gases. One can analyse each of the following greenhouse gases (listed in order of abundance) and show that there is no reason for them to be rejected by the ecosystem, except the last two (CFCs, and HCFCs and HFCs), which are not natural.

- Water vapour (H2O)

- Carbon dioxide (CO2)

- Methane (CH4)

- Nitrous oxide (N2O)

- Ozone (O3)

- Chlorofluorocarbons (CFCs)

- Hydrochlorofluorocarbons and hydrofluorocarbons (HCFCs and HFCs)

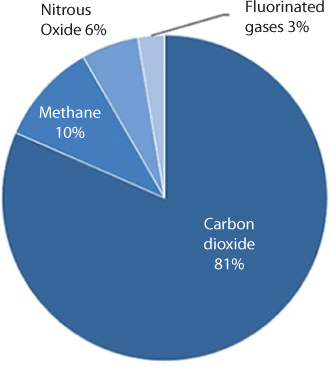

As shown in Figure 10.3, synthetic chemicals account for the smallest amount released. Naturally, this depiction draws more attention to CO2.

Figure 10.3 Greenhouse gas emissions in 2016 (EPA, 2018).

Of course, in the bigger picture, CO2 is one of the trace gases, whose combined concentration is less than 0.1%. The permanent gases, whose percentages do not change from day to day, are nitrogen, oxygen and argon. Nitrogen accounts for 78% of the atmosphere, oxygen 21% and argon 0.9%. Trace gases include carbon dioxide, nitrous oxide, methane, and ozone. Of course, the entire atmosphere is embedded in water, the vapour form of which has a concentration ranging from 0–4%, depending on location and time of day. Within the trace gases, CO2 is the most abundant (93.497%), followed by neon (4.675%), helium (1.299%), methane (0.442%), nitrous oxide (0.078%), and ozone (0.010%). Note that each of these components can be of natural or artificial origin. For instance, N2O can occur in nature and as such would not pose a threat to the environment. However, when N2O is emitted from a catalytic converter, it contains tiny fragments of the catalysts and other materials that did not exist in nature before. This makes such N2O distinctly different from the naturally occurring kind. Because New Science has no means of tracking the source of material in the process of material characterization, pathway analysis of artificial chemicals, as opposed to natural chemicals, has eluded all modern scientists. Had there been a characterization based on natural and artificial sources of matter, a new, scientifically accurate picture would emerge.
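As a quick consistency check on the trace-gas figures above, the sketch below re-enters the quoted shares, confirms that they add up to about 100%, and converts the 0.1% ceiling into parts per million using the standard relation 1% by volume = 10,000 ppm. The helper names are hypothetical, and the script checks only the internal arithmetic of the quoted numbers, not their accuracy.

```python
# Shares of each gas within the trace-gas fraction, in percent, as quoted in the text
TRACE_GAS_SHARES = {
    "CO2": 93.497,
    "Ne": 4.675,
    "He": 1.299,
    "CH4": 0.442,
    "N2O": 0.078,
    "O3": 0.010,
}


def percent_to_ppm(percent: float) -> float:
    """Convert a percentage by volume to parts per million (1% = 10,000 ppm)."""
    return percent * 10_000.0


def relative_to_co2(shares: dict) -> dict:
    """Express each gas's share as a multiple of the CO2 share."""
    co2 = shares["CO2"]
    return {gas: share / co2 for gas, share in shares.items()}


if __name__ == "__main__":
    # The quoted shares are rounded to 3 decimals, so the sum is only ~100%
    print(f"Sum of shares: {sum(TRACE_GAS_SHARES.values()):.3f}%")
    # Upper bound the text gives for all trace gases combined
    print(f"0.1% by volume = {percent_to_ppm(0.1):.0f} ppm")
    for gas, ratio in relative_to_co2(TRACE_GAS_SHARES).items():
        print(f"{gas}: {ratio:.5f} x CO2")
```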

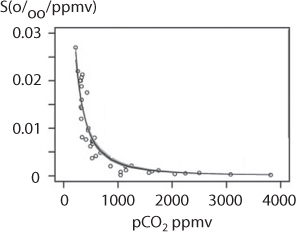

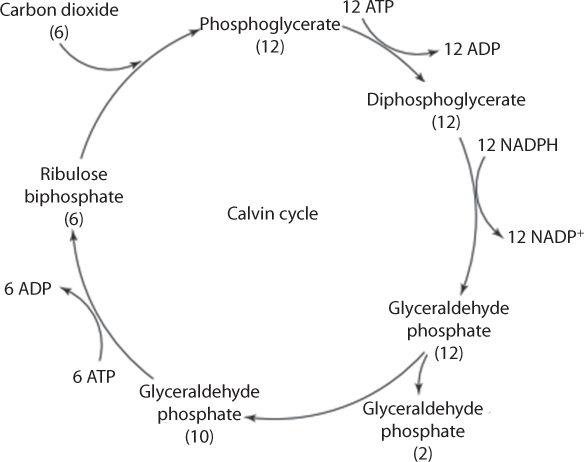

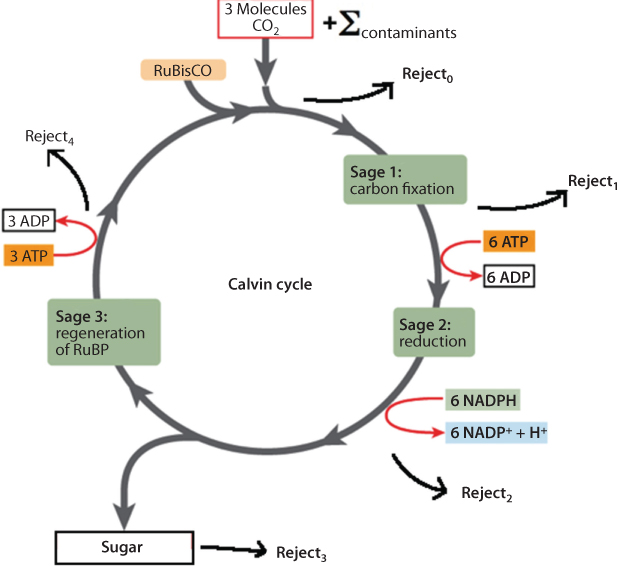

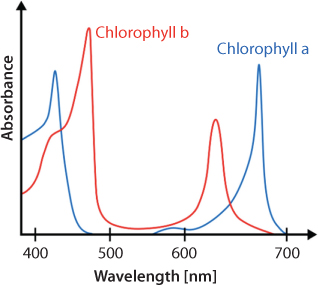

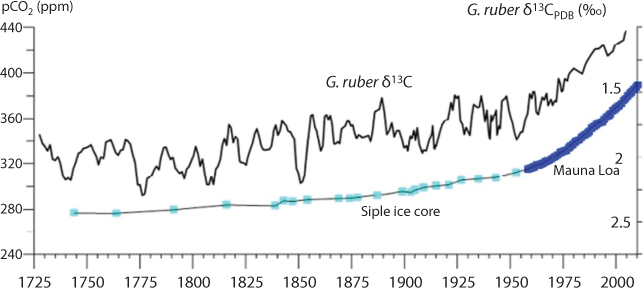

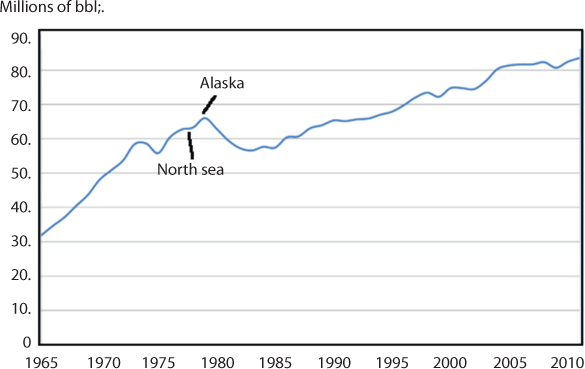

As has been stated numerous times in previous chapters, in addition to geological-scale variation, CO2 concentrations show seasonal variations (annual cycles) that vary according to global location and altitude. Several processes contribute to carbon dioxide annual cycles: for example, uptake and release of carbon dioxide by terrestrial plants and the oceans, and the transport of carbon dioxide around the globe from source regions (the Northern Hemisphere is a net source of carbon dioxide, the Southern Hemisphere a net sink). In addition to the obvious seasonal variations and long-term trends in CO2 concentrations, there are also more subtle variations, which have been shown to correlate significantly with the El Niño-Southern Oscillation (ENSO) phenomenon and with major volcanic eruptions. These variations in carbon dioxide are small compared to the regular annual cycle, but can make a difference to the observed year-by-year increase in CO2. These are much more significant events than the burning of some 100 million barrels of oil. Carbon dioxide enters the atmosphere through the burning of fossil fuels (coal, natural gas, and oil), solid waste, trees and wood products, and also as a result of certain chemical reactions (e.g., the manufacture of cement). On the other hand, CO2 is removed from the atmosphere when it is absorbed by plants as part of the biological carbon cycle. There would be perfect balance were it not for a certain fraction of CO2 that becomes contaminated and is therefore rejected by plants as part of the carbon cycle. Because carbon dioxide is the most important component of photosynthesis, estimating this lost CO2 is the greatest challenge in describing the global warming phenomenon.

It is noted that CO2 concentrations in the air were reasonably stable (typically quoted as 278 ppm) before industrialization. Since industrialization (typically measured from the mid-18th century), CO2 concentrations have increased by about 30 per cent, thus prompting the current hysteria against carbon. However, it is not clear why petroleum products are targeted, because the petroleum era is much more recent than the industrial era.

Similar to CO2, methane concentrations in the air were reasonably stable before industrialization, typically quoted as 700 ppb. Since industrialization, methane concentrations have increased by more than 150 per cent to present-day values (~1790 ppb in 2009). In contrast with CO2, the rise in methane concentration is attributed to a variety of sources: agricultural practices such as rice and cattle farming; the mining and transportation of coal; the mining and reticulation of natural gas and oil; the decay of organic waste in municipal solid waste landfills; and wetlands responding to global temperature increases. Methane concentrations show seasonal variations (annual cycles) that vary according to global location and altitude. The major processes that contribute to methane annual cycles are:

- release from wetlands, dependent on temperature and rainfall;

- destruction in the atmosphere by hydroxyl radicals;

- transport of methane around the globe from source regions (the Northern Hemisphere is a net source of methane, the Southern Hemisphere a net sink).

As with CO2, there are more subtle inter-annual variations in methane, which have been shown to correlate with the ENSO phenomenon and with major volcanic eruptions. These variations in methane are small compared to the regular annual cycle.

The variation in methane concentration is, therefore, not considered to be the cause of global warming. It is also true that the bulk of methane is not inherently toxic to the environment. Unlike CO2, most of the methane in the atmosphere is the result of direct discharge rather than a product of chemical or thermal reactions in the presence of artificial chemicals or energy sources.

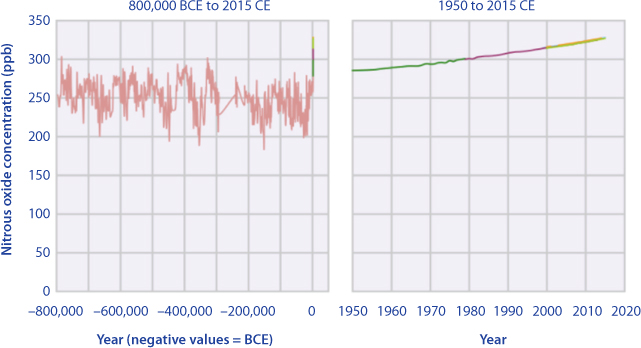

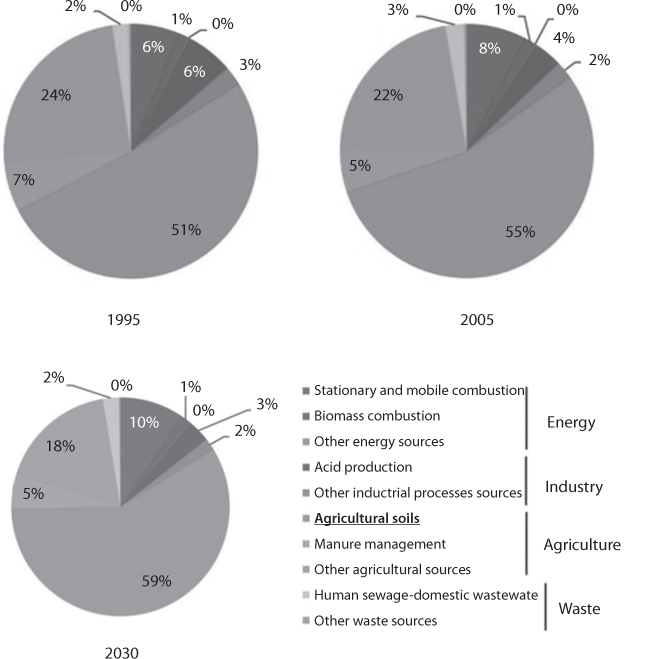

Nitrous oxide concentrations in the air were reasonably stable before industrialisation (in the timeframe of human existence), typically quoted as 270 ppb (parts per billion molar). Since industrialization, nitrous oxide concentrations have increased by about 20 per cent to present day values (~330 ppb in 2017). Figure 10.4 shows concentrations of nitrous oxide in the atmosphere from hundreds of thousands of years ago through 2015. The data come from a variety of historical ice core studies and recent air monitoring sites around the world. Each line represents a different data source as reported by EPA.

Figure 10.4 Nitrous oxide in the atmosphere (from EPA, 2018).
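The percentage changes quoted for CO2, methane and nitrous oxide since industrialization can be checked with simple arithmetic. A minimal sketch, using only the concentrations given in the text; since no present-day CO2 value is stated, the script computes only the value implied by a 30% rise from 278 ppm. Function names are illustrative.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


def apply_increase(before: float, percent: float) -> float:
    """Value reached after a given percentage increase."""
    return before * (1.0 + percent / 100.0)


# Concentrations quoted in the text; units cancel within each ratio
ch4_rise = percent_increase(700.0, 1790.0)   # ppb, pre-industrial -> ~2009
n2o_rise = percent_increase(270.0, 330.0)    # ppb, pre-industrial -> ~2017
co2_implied = apply_increase(278.0, 30.0)    # ppm implied by "about 30 per cent"

if __name__ == "__main__":
    print(f"CH4: +{ch4_rise:.1f}%")
    print(f"N2O: +{n2o_rise:.1f}%")
    print(f"CO2 after +30% from 278 ppm: {co2_implied:.1f} ppm")
```

With these inputs, methane works out to roughly +156% (consistent with "more than 150 per cent"), nitrous oxide to roughly +22% (the text's "about 20 per cent"), and a 30% rise from 278 ppm corresponds to about 361 ppm.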

Nitrous oxide is a potent GHG with ~300-fold greater warming potential than CO2 on a per-molecule basis, and it is involved in the destruction of the stratospheric ozone layer (Ravishankara et al., 2009). Globally, soil ecosystems constitute the largest source of N2O emissions (estimated at 6.8 Tg N2O-N/year), comprising approximately 65% of the total N2O emitted into the atmosphere, with 4.2 Tg N2O-N/year derived from nitrogen fertilization and indirect emissions, 2.1 Tg N2O-N/year arising from manure management and 0.5 Tg N2O-N/year introduced through biomass burning (IPCC, 2007). Other important N2O sources include oceans, estuaries, freshwater habitats and wastewater treatment plants (Schreiber et al., 2012). In recent years, the excessive use of nitrogen-based fertilizers (ca. 140 Tg N/year) has greatly contributed to the conspicuous elevation of atmospheric N2O concentrations above the pre-industrial level of 270 ppbv. Generally, for every 1000 kg of applied nitrogen fertilizer, an estimated 10–50 kg of nitrogen is lost as N2O from soil, and N2O emissions increase exponentially with increasing nitrogen inputs (Shcherbak et al., 2014). Industrial activities, as well as the combustion of fossil fuels and solid waste, are believed to cause the monotonic increase in nitrous oxide.
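The soil N2O budget above can be checked in a few lines: the three components should add up to the 6.8 Tg N2O-N/year stated, and if that is about 65% of the total, the implied global figure is roughly 10.5 Tg N2O-N/year (a derived number, not one given in the text). The "10–50 kg per 1000 kg" range corresponds to emission factors of 1% and 5%. This is a minimal sketch; the molar masses are standard values, not from the text, and the constant emission factor is an assumption that the text itself notes breaks down at high nitrogen inputs.

```python
# Soil N2O sources quoted in the text, in Tg N2O-N per year (IPCC, 2007)
SOIL_SOURCES_TG_N = {
    "fertilization_and_indirect": 4.2,
    "manure_management": 2.1,
    "biomass_burning": 0.5,
}
SOIL_SHARE_OF_TOTAL = 0.65  # soils ~65% of all N2O emitted (from the text)

# Standard molar masses (g/mol), not from the text: N2O = 44.013, N = 14.007
N2O_PER_N2O_N = 44.013 / (2 * 14.007)


def n2o_n_to_n2o(mass_n2o_n: float) -> float:
    """Convert a mass expressed as N2O-N (nitrogen only) to mass of N2O."""
    return mass_n2o_n * N2O_PER_N2O_N


def n_lost_as_n2o(applied_n_kg: float, emission_factor: float) -> float:
    """Nitrogen lost as N2O, assuming a constant (linear) emission factor.

    The text notes emissions grow faster than linearly at high N inputs,
    so a constant factor is only a first approximation.
    """
    return applied_n_kg * emission_factor


soil_total = sum(SOIL_SOURCES_TG_N.values())       # Tg N2O-N/yr
implied_global = soil_total / SOIL_SHARE_OF_TOTAL  # Tg N2O-N/yr

if __name__ == "__main__":
    print(f"Soil sources: {soil_total:.1f} Tg N2O-N/yr "
          f"(~{n2o_n_to_n2o(soil_total):.1f} Tg N2O/yr)")
    print(f"Implied global total: ~{implied_global:.1f} Tg N2O-N/yr")
    # "10-50 kg per 1000 kg applied" corresponds to factors of 1% and 5%
    for ef in (0.01, 0.05):
        print(f"EF {ef:.0%}: {n_lost_as_n2o(1000.0, ef):.0f} kg N lost as N2O")
```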

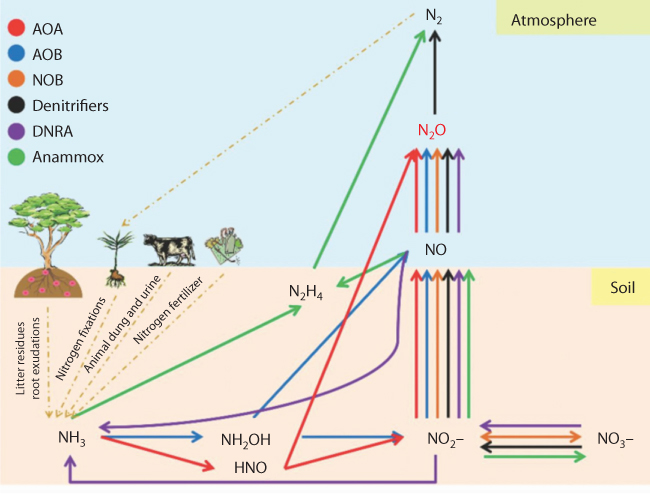

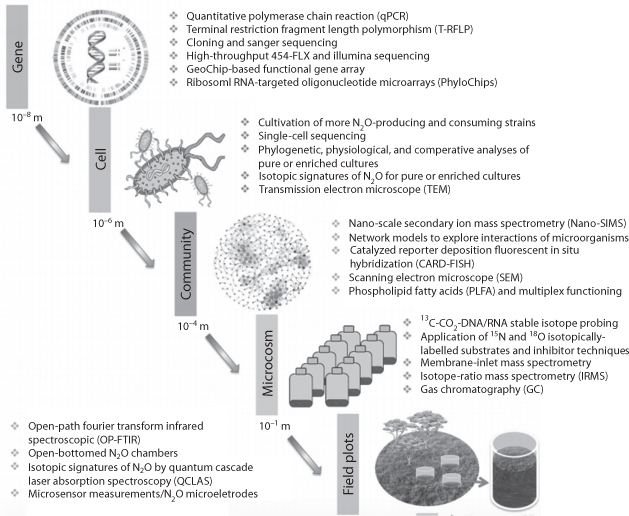

Figure 10.5 shows different pathways that can generate N2O. The quality of N2O will depend on the pathway travelled by its various components. Hu et al. (2015) presented details of various techniques that can track these pathways. Even though this work can shed light on a truly scientific characterization of matter, it does not identify a technique that would separate natural origin from artificial (synthetic) origin. For instance, their analysis does not differentiate between chemical fertilizer and organic fertilizer. With the advent of new analytical tools, it is becoming possible to characterize matter with proper signatures (Wiederhold, 2015). The most recent developments will be discussed in a later section.

Figure 10.5 Simplified schematic representations of the major microbial pathways and microbes for global N2O production and nitrogen cycling in soil ecosystems (from Hu et al., 2015). AOA: ammonia-oxidizing archaea; AOB: ammonia-oxidizing bacteria; NOB: nitrite-oxidizing bacteria; DNRA: dissimilatory nitrate reduction to ammonium; Anammox: anaerobic ammonium oxidation.

Hydrofluorocarbons, perfluorocarbons, sulfur hexafluoride, and nitrogen trifluoride are synthetic, powerful greenhouse gases that are emitted from a variety of industrial processes. Fluorinated gases are sometimes used as substitutes for stratospheric ozone-depleting substances (e.g., chlorofluorocarbons, hydrochlorofluorocarbons, and halons). These gases are typically emitted in smaller quantities, but because they are potent greenhouse gases, they are sometimes referred to as High Global Warming Potential (GWP) gases. GWP was developed to allow comparison of various greenhouse gases through their impact on global warming, with CO2 as the base against which other gases are measured. It is a measure of how much energy the emission of 1 ton of a gas will absorb over a given period of time, relative to the emission of 1 ton of carbon dioxide (CO2). The larger the GWP, the more a given gas warms the Earth compared to CO2 over that time period. The time period usually used for GWPs is 100 years. By definition, CO2 has a GWP of 1 regardless of the time period used. Methane (CH4) is estimated to have a GWP of 28–36 over 100 years. Methane emitted today lasts about a decade on average, which is much less time than CO2. However, methane also absorbs much more energy than CO2. The net effect of the shorter lifetime and higher energy absorption is reflected in the GWP. Nitrous oxide (N2O) has a GWP of 265–298 for a 100-year timescale. N2O emitted today remains in the atmosphere for more than 100 years, on average. Chlorofluorocarbons (CFCs), hydrofluorocarbons (HFCs), hydrochlorofluorocarbons (HCFCs), perfluorocarbons (PFCs), and sulfur hexafluoride (SF6) are sometimes called high-GWP gases because, for a given amount of mass, they trap substantially more heat than CO2. This characterization is useful but not entirely scientific, as it does not distinguish the composition of artificial chemicals within a gaseous system.
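The CO2-equivalent bookkeeping described above amounts to multiplying each mass by its GWP and summing. A minimal sketch, using the 100-year GWP ranges quoted in the text and carrying the low and high ends through separately; the function names and the example inventory are hypothetical.

```python
from typing import Dict, Tuple

# 100-year GWP ranges quoted in the text; CO2 is 1 by definition
GWP100_RANGE: Dict[str, Tuple[float, float]] = {
    "CO2": (1.0, 1.0),
    "CH4": (28.0, 36.0),
    "N2O": (265.0, 298.0),
}


def co2e_range(gas: str, mass_tonnes: float) -> Tuple[float, float]:
    """(low, high) CO2-equivalent in tonnes: CO2e = mass x GWP."""
    low, high = GWP100_RANGE[gas]
    return mass_tonnes * low, mass_tonnes * high


def inventory_co2e(masses: Dict[str, float]) -> Tuple[float, float]:
    """Sum the CO2e of several gases, carrying the GWP range through."""
    pairs = [co2e_range(gas, mass) for gas, mass in masses.items()]
    return sum(p[0] for p in pairs), sum(p[1] for p in pairs)


if __name__ == "__main__":
    # Hypothetical inventory in tonnes, for illustration only
    example = {"CO2": 100.0, "CH4": 2.0, "N2O": 0.1}
    low, high = inventory_co2e(example)
    print(f"Inventory: {low:.1f}-{high:.1f} t CO2e (100-year)")
```

The single CO2e figure illustrates the text's objection: the weights depend only on the gas species, not on whether a given tonne is of natural or artificial origin.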

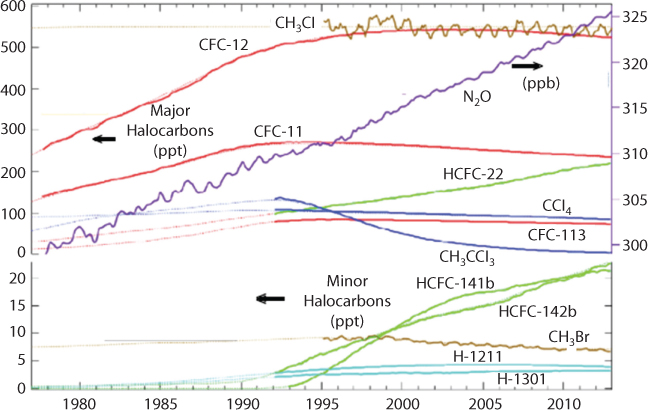

Although not considered before, clues to answering key climate change questions lie in monitoring the concentration of chlorofluorocarbons (CFCs) in the atmosphere. These chemicals all had zero concentration before the onset of the so-called plastic era, which saw mass production of artificial chemicals for ubiquitous applications. Figure 10.6 shows CFC concentrations as monitored by NOAA (2018). The dashed marks are estimated values. NOAA monitors atmospheric concentrations of these chemicals and other important halocarbons at twelve sampling sites using either continuous instruments or discrete flask samples.

Figure 10.6 CFC concentration in the atmosphere as monitored by NOAA (2018).

Refrigerators in the late 1800s and early 1900s used the toxic gases ammonia (NH3), methyl chloride (CH3Cl), or sulfur dioxide (SO2) as refrigerants. After a series of fatal accidents in the 1920s, when methyl chloride leaked out of refrigerators, a search for a less toxic replacement began as a collaborative effort of three American corporations: Frigidaire, General Motors, and Du Pont. CFCs were first synthesized in 1928 by Thomas Midgley, Jr. of General Motors as safer chemicals for refrigerators used in large commercial applications. In 1932, the Carrier Engineering Corporation used Freon™-11 (CFC-11) in the world’s first self-contained home air-conditioning unit, called the “Atmospheric Cabinet”. During the late 1950s and early 1960s, CFCs made possible an inexpensive solution for air conditioning in many automobiles (CFC-12), homes, and office buildings. Later, CFC use took off worldwide, with peak annual sales of about a billion dollars (U.S.) and more than one million metric tons of CFCs produced. During the same period (1930), Einstein patented a refrigeration technology that used ammonia and butane, a remarkable feat considering that it used no electricity, had no moving parts, and could operate without any synthetic chemical (Khan and Islam, 2012). While this technology is making a comeback (Zyga, 2008), the science behind it, and its merit in enabling an engineering design without resorting to synthetic chemicals, did not appeal to anyone for almost a century.

Instead, CFCs were promoted as ‘safe’ to use in most applications and ‘inert’ in the lower atmosphere. In 1974, two University of California chemists, Professor F. Sherwood Rowland and Dr. Mario Molina, showed that CFCs could be a major source of inorganic chlorine in the stratosphere following their photolytic decomposition there by ultraviolet (UV) radiation. Some of the released chlorine would become active in destroying ozone in the stratosphere. Ozone is a trace gas located primarily in the stratosphere. Although this damage to the environment was recognized when “Ozone Holes” were discovered in the 1980s, and the synergistic reactions of chlorine and bromine and their roles were recognized, the solution offered was to reduce the production of these chemicals. For instance, in the Montreal Protocol (September 16, 1987), 27 nations signed a global environmental treaty on Substances that Deplete the Ozone Layer, which had a provision to reduce production levels of these compounds by 50% relative to 1986 before the year 2000. This international agreement included restrictions on production of CFC-11, -12, -113, -114, -115, and the Halons (chemicals used as fire-extinguishing agents). An amendment approved in London in 1990 was more forceful and called for the elimination of CFC production by the year 2000. The chlorinated solvents methyl chloroform (CH3CCl3) and carbon tetrachloride (CCl4) were added to the London Amendment as controlled substances in 1992. Subsequent amendments added methyl bromide (with exemptions for specific uses), hydrobromofluorocarbons, and bromochloromethane. This explains the peaks reached in various CFC graphs in Figure 10.6. So, how was the reduction of these chemicals compensated? Instead of finding chemicals that would not cause the kind of harm CFCs were causing, other chemicals were produced that would delay the response of the environment, although their long-term effects were no less harmful than those of CFCs.
For instance, two classes of halocarbons were substituted for CFCs: the hydrochlorofluorocarbons (HCFCs) and the hydrofluorocarbons (HFCs). The HCFCs include hydrogen atoms in addition to chlorine, fluorine, and carbon atoms. The advantage of using HCFCs is that the hydrogen reacts with tropospheric hydroxyl (OH), resulting in a shorter atmospheric lifetime. HCFC-22 (CHClF2) has an atmospheric lifetime of about 12 years, compared to 100 years for CFC-12. This, however, says nothing about the fact that HCFC-22 is not compatible with the ecosystem. In fact, the US EPA lists it as a candidate for high-temperature oxidation as a mitigation measure (EPA, 2014). Therein lies another layer of disinformation: it is assumed that HCFC-22, a synthetic chemical that never existed in nature, can be combusted to generate CO2, a natural chemical that is the essence of life on Earth.

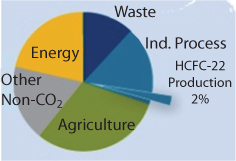

EPA (2014) reports that the global abatement potential in the HCFC-22 production sector is 228 million metric tons of carbon dioxide equivalent (MtCO2e) in 2020 and 255 MtCO2e in 2030, which equates to a 90% reduction from projected baseline emissions (Figure 10.7). It then states that thermal oxidation is the only abatement option considered for the HCFC-22 production sector, at a price tag of $1 per tCO2. Then comes the solution, with cleanup tagged at increasing prices (Figure 10.8). We will see in a later section of this chapter that New Science is incapable of properly analyzing the real damage caused by these synthetic chemicals.

Figure 10.7 Fluorinated Greenhouse Gas Emissions from HCFC-22 Production (from EPA, 2014).

Figure 10.8 It would be cost-effective to reduce emissions by 0%, compared to the baseline, in 2030. An additional 89% reduction is available using technologies with increasingly higher costs (From EPA, 2014).
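The EPA figures quoted above imply a baseline that the text does not state directly: if 228 and 255 MtCO2e represent a 90% reduction, the baselines are roughly 253 and 283 MtCO2e. Because the 90% is presumably rounded, the sketch below yields only approximate values; they are derived here, not figures reported by EPA (2014), and the function name is hypothetical.

```python
def implied_baseline(abated: float, reduction_fraction: float) -> float:
    """Baseline emissions implied by an abatement amount and its fractional reduction."""
    if not 0.0 < reduction_fraction <= 1.0:
        raise ValueError("reduction_fraction must be in (0, 1]")
    return abated / reduction_fraction


# Abatement potentials quoted from EPA (2014), in MtCO2e, with the stated ~90% reduction
ABATEMENT = {2020: 228.0, 2030: 255.0}

if __name__ == "__main__":
    for year, abated in ABATEMENT.items():
        baseline = implied_baseline(abated, 0.90)
        print(f"{year}: baseline ~{baseline:.0f} MtCO2e, "
              f"residual ~{baseline - abated:.0f} MtCO2e after abatement")
```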

Curiously, New Science did not stop at ignoring artificial versus natural sourcing; it used the presence of artificial sources to explain the paradoxical nature of the CO2 cause-and-effect narrative. For instance, the warming of the 20th century occurred mostly between 1910 and 1940, when the carbon dioxide concentration grew slowly from 293 to 300 ppm. Meanwhile, the temperature remained steady between 1940 and 1980, while the carbon dioxide concentration increased from 300 to 335 ppm. These seemingly paradoxical behaviors are explained by the presence of atmospheric aerosols of the artificial variety. Aerosols are emitted by industrial processes, transport, etc., and their increased concentration offset the simultaneous warming due to increasing greenhouse gases. However, the warming overtook the cooling by the mid-1970s (Keeling et al., 1996).
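The paradox described above can be quantified from the quoted concentrations alone. The sketch computes the mean CO2 growth rate in each period and the ratio ln(C_end/C_start), which is proportional to the change in CO2 forcing if that forcing is logarithmic in concentration, as the text states for CO2 in its discussion of radiative forcing. The proportionality constant is deliberately omitted; function names are illustrative.

```python
import math


def mean_growth_rate(c_start: float, c_end: float, y_start: int, y_end: int) -> float:
    """Average CO2 growth in ppm per year between two years."""
    return (c_end - c_start) / (y_end - y_start)


def log_forcing_index(c_start: float, c_end: float) -> float:
    """ln(C_end / C_start): proportional to the change in CO2 forcing if that
    forcing is logarithmic in concentration (the constant is omitted)."""
    return math.log(c_end / c_start)


# Concentrations and periods quoted in the text (Keeling et al., 1996)
PERIODS = {
    "1910-1940 (warming)": (293.0, 300.0, 1910, 1940),
    "1940-1980 (steady temperature)": (300.0, 335.0, 1940, 1980),
}

if __name__ == "__main__":
    for label, (c0, c1, y0, y1) in PERIODS.items():
        print(f"{label}: {mean_growth_rate(c0, c1, y0, y1):.2f} ppm/yr, "
              f"ln ratio {log_forcing_index(c0, c1):.3f}")
```

On these numbers, CO2 rose almost four times faster per year in 1940–1980 than in 1910–1940, and the logarithmic index is roughly 4.7 times larger: the mismatch with the temperature record that the aerosol explanation is invoked to resolve.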

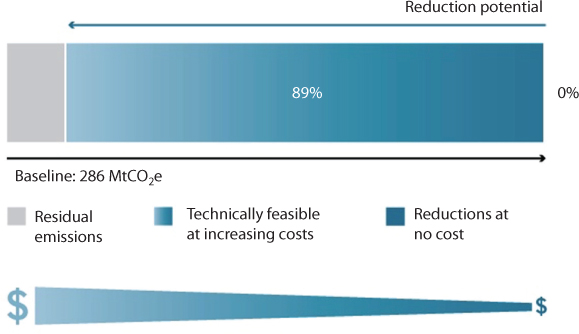

Figure 10.9 shows the latest details of greenhouse gas emissions in the USA. Note that only nitrous oxide and fluorinated gases show a continuous increase, whereas carbon dioxide and methane fluctuate over time.

Figure 10.9 Nitrous oxide in the atmosphere (from EPA, 2018).

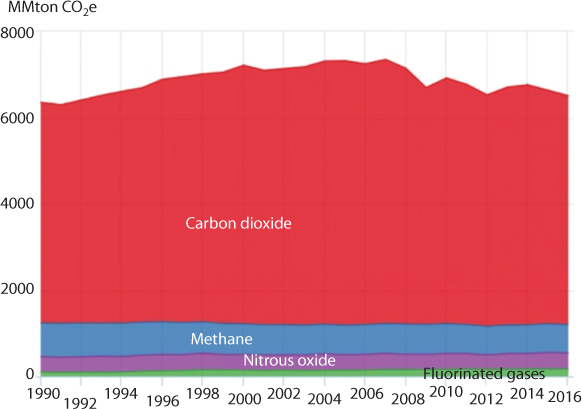

Figure 10.10 shows the distribution of greenhouse gas emissions among various sectors. As we will see in later sections, CO2 generated from transportation, electricity generation, and industry is 100% contaminated and cannot be absorbed by the ecosystem. When it comes to agriculture, the production of contaminated CO2 depends on the practices of the region. For instance, in the West, beef is the most CO2-intensive operation. Gerber et al. (2013) give complete details of greenhouse gases emitted by livestock. For this sector, the production, processing and transport of feed account for about 45 percent of global emissions, and within this, fertilization of feed crops and deposition of manure on pastures generate substantial amounts of N2O emissions, together representing about half of feed emissions (i.e., one-quarter of the sector’s overall emissions). About one-quarter of feed emissions (less than 10 percent of sector emissions) are related to land-use change.

Figure 10.10 Distribution of greenhouse gas emissions among various sectors in the USA (EPA, 2017).

Among feed materials, grass and other fresh roughages account for about half of the emissions, mostly from manure deposition on pasture and land-use change. Crops produced for feed account for an additional quarter of emissions, and all other feed materials (crop by-products, crop residues, fish meal and supplements) for the remaining quarter. Enteric fermentation is the second largest source of emissions, contributing about 40 percent of total emissions. Cattle emit 77% of enteric CH4, buffalo 13%, and small ruminants about 10%. Methane and N2O emissions from manure storage and processing represent about 10 percent of this sector’s emissions. When energy consumption is tallied up, it accounts for about 20% of total emissions. This is the part that depends heavily on the region where the livestock is raised.
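Combining two of the shares above shows how much of the livestock sector’s total comes from the digestion of a single species: about 0.77 × 40% ≈ 31% for cattle alone. A minimal sketch using only the rounded shares quoted in the text; the function name is illustrative.

```python
# Livestock-sector shares quoted in the text (Gerber et al., 2013)
ENTERIC_SHARE_OF_SECTOR = 0.40  # "about 40 percent"
ENTERIC_CH4_SPLIT = {"cattle": 0.77, "buffalo": 0.13, "small ruminants": 0.10}


def enteric_share_of_sector(species: str) -> float:
    """Fraction of total livestock-sector emissions from one species' enteric CH4."""
    return ENTERIC_SHARE_OF_SECTOR * ENTERIC_CH4_SPLIT[species]


if __name__ == "__main__":
    for species in ENTERIC_CH4_SPLIT:
        print(f"{species}: {enteric_share_of_sector(species):.1%} of sector emissions")
```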

Today, this entire analysis does not distinguish between organic and mechanical processes. For instance, methane emitted from enteric fermentation is lumped together with industrial methane from oil and gas activities, both being branded as ‘anthropogenic methane’, even though enteric methane alone accounts for as much as 30% of global anthropogenic methane emissions.

The problem is very similar for N2O emissions. In natural processes (including bacterial activities), N2O is an integral part of the vital functioning of NO (Wang et al., 2004). By contrast, N2O emitted from industrial processes is inherently toxic to the environment and is rejected by the ecosystem.

10.3 The Current Focus

At present, all theories are geared toward targeting carbon as the source of global warming. In this process, CO2 is the benchmark, and other gases are expressed as CO2 equivalents. No distinction is made between organic CO2 (either naturally occurring or generated through biological processes) and mechanical CO2 (generated through modern engineering).

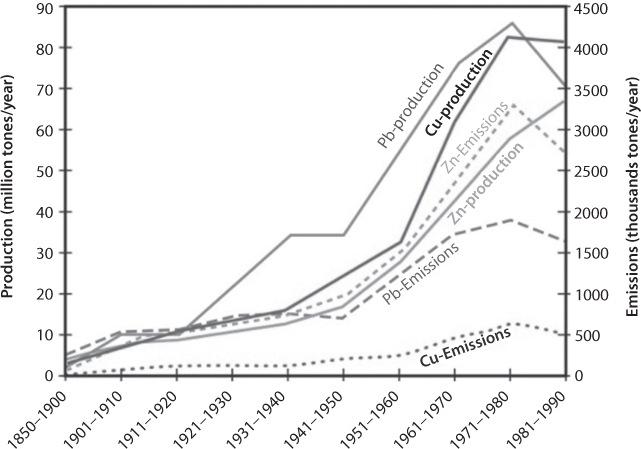

10.3.1 Effect of Metals

Dupuy and Douay (2001) noted that studying the behavior of heavy metals in soils requires knowledge of the complexation between soil constituents and metals, information that is not available from conventional analytical techniques such as atomic absorption. Since metals do not absorb mid-infrared radiation, they characterized the metals through their interaction with the organic matter of soils. Chemometric treatment of the spectroscopic data demonstrated, first, that the interaction between soil constituents and metals takes place preferentially via organic matter and, second, that lead and zinc differ markedly in their complexation with organic matter. The infrared spectra show that two bands, at 1670–1690 and 1710 cm−1, vary with the concentration of lead, which appears to be preferentially complexed by the salicylate functionality.

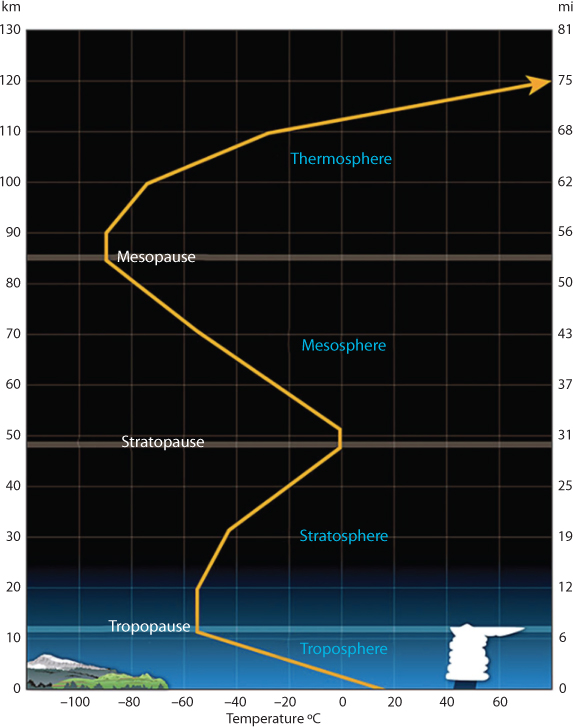

Five distinct layers have been identified based on the following features (NOAA, 2018):

- thermal characteristics (temperature changes);

- chemical composition;

- movement; and

- density.

Each layer is bounded by “pauses”, where the greatest changes in thermal characteristics, chemical composition, movement, and density occur. The five basic layers of the atmosphere (Figure 10.11) are:

Figure 10.11 Various atmospheric layers and their principal features (from NOAA, 2018a).

Exosphere: This is the outermost layer of the atmosphere and is external to our atmospheric system. It extends from the top of the thermosphere to 10,000 km above the Earth. In this layer, matter escapes into space, and satellites orbit the Earth. At the bottom of the exosphere is the thermopause, located around 375 miles (600 km) above the Earth.

Thermosphere: This layer lies between 85 km and 600 km above the surface of the Earth and is known as the upper atmosphere. The density of gases is very low but increases drastically toward the lower layers. In this layer, high-energy ultraviolet and X-ray radiation from the sun begins to be absorbed by molecules, causing the temperature to rise. Because of this absorption, the temperature increases with height, from as low as –120 °C at the bottom of the layer to as high as 2,000 °C near the top. However, even at such high temperatures, the actual heat retention is not large because of the low density of matter in that layer.

Mesosphere: This layer extends from around 50 km to 85 km above the Earth’s surface. Moving down through this layer, the density of gases increases drastically, accompanied by a rise in ambient temperature. This is the layer that is dense enough to slow down meteors hurtling into the atmosphere, where they burn up, leaving fiery trails in the night sky. The transition boundary that separates the mesosphere from the stratosphere (the next layer) is called the stratopause.

Stratosphere: The stratosphere extends from around 50 km down to anywhere from 6 to 20 km above the surface of the Earth. This layer holds 19 percent of the atmosphere’s gases but very little water vapor. In this region, the temperature increases with height. Heat is produced in the process of ozone formation, and this heat is responsible for the temperature increase from an average of –51 °C at the tropopause to a maximum of about –15 °C at the top of the stratosphere. As such, warmer air lies above cooler air, minimizing convection within the layer.

Troposphere: The troposphere begins at the surface of the Earth and extends to between 6 and 20 km high. The height of the troposphere varies from the equator to the poles: at the equator it is around 18–20 km high, at 50°N and 50°S about 8.5 km, and at the poles just under 6.5 km. The sun’s rays provide both light and heat to Earth, and regions that receive greater exposure warm to a greater extent. This is particularly true of the tropics, which experience less seasonal variation in incident sunlight. The density of the gases in this layer decreases with height, so the air becomes thinner, and the temperature in the troposphere also decreases with height in response. Climbing higher, the temperature drops from an average of around 17 °C to –51 °C at the tropopause.
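The layer boundaries described above can be encoded as a small lookup. A minimal sketch, assuming the boundaries quoted in the text (tropopause within the 6–20 km range, stratopause ~50 km, mesopause ~85 km, thermopause ~600 km, exosphere to 10,000 km); the 11 km default tropopause is a hypothetical mid-latitude value, and the mean lapse rate simply divides the quoted 17 °C to –51 °C drop by that height.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Layer:
    name: str
    top_km: float  # upper boundary, km above the surface


def layers(tropopause_km: float = 11.0) -> List[Layer]:
    """Atmospheric layers in ascending order, using the boundaries in the text.

    The tropopause height varies with latitude (6-20 km), so it is a
    parameter; the 11 km default is a hypothetical mid-latitude value.
    """
    if not 6.0 <= tropopause_km <= 20.0:
        raise ValueError("tropopause height outside the quoted 6-20 km range")
    return [
        Layer("troposphere", tropopause_km),
        Layer("stratosphere", 50.0),
        Layer("mesosphere", 85.0),
        Layer("thermosphere", 600.0),
        Layer("exosphere", 10_000.0),
    ]


def layer_at(altitude_km: float, tropopause_km: float = 11.0) -> str:
    """Name of the layer containing `altitude_km` (a boundary counts as the lower layer)."""
    if altitude_km < 0:
        raise ValueError("altitude must be non-negative")
    for layer in layers(tropopause_km):
        if altitude_km <= layer.top_km:
            return layer.name
    return "beyond the exosphere"


def mean_lapse_rate(tropopause_km: float, surface_c: float = 17.0,
                    tropopause_c: float = -51.0) -> float:
    """Average tropospheric temperature drop (degC per km) for the quoted end points."""
    return (surface_c - tropopause_c) / tropopause_km


if __name__ == "__main__":
    for alt in (5, 30, 70, 300, 1000):
        print(f"{alt} km: {layer_at(alt)}")
    print(f"Mean lapse rate, 11 km tropopause: {mean_lapse_rate(11.0):.1f} degC/km")
```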

Scientists have spent a great amount of time and energy explaining how greenhouse gases have created global warming. At present, it is theorized that many molecules in the atmosphere possess pure-rotation or vibration-rotation spectra that allow them to emit and absorb thermal infrared (IR) radiation (4–100 μm); such gases include water vapor, carbon dioxide and ozone (but not the main constituents of the atmosphere, oxygen and nitrogen). It is this property that gives greenhouse gases their special status. Because this absorption of IR prevents heat from escaping the Earth, the greenhouse effect kicks in. It is customary to consider convection the dominant mechanism below the tropopause, and instantaneous mixing up to that interface is therefore often assumed. Overall, thermal balance is maintained through the exchange of IR back and forth with the Earth’s surface. Consequently, the change in the radiative flux at the tropopause, which marks the interface between the convective layer and a stable layer, becomes the most crucial zone for overall climate control (Ramanathan et al., 1987). Mechanically, Earth’s continuous rotation creates three belts of circulation in each hemisphere (Stevens, 2011). Air circulates from the tropics to regions at approximately 30° north and south latitude, where the air masses sink. This belt of air circulation is referred to as a Hadley cell, after George Hadley, who first described it (Holton, 2004). Two additional belts of circulating air exist in the temperate latitudes (between 30° and 60° latitude) and near the poles (between 60° and 90° latitude). A further consideration is the spectroscopic strength of the molecules’ bands, which dictates the strength of infrared absorption. Molecules such as the halocarbons have bands with intensities about an order of magnitude or greater, on a molecule-per-molecule basis, than the 15 μm band of carbon dioxide.
This is further impacted by the presence of any fraction of artificial chemicals that often have heavy metals. The actual absorbance by a band is, however, a complicated function of both absorber amount and spectroscopic strength so that these factors cannot be considered entirely in isolation.
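The text quotes the thermal IR range in micrometres (4–100 μm) while Figure 10.12 and the CF4 data below use wavenumbers in cm⁻¹. Since 1 cm = 10⁴ μm, the two are reciprocal; a minimal conversion sketch:

```python
def um_to_wavenumber(wavelength_um: float) -> float:
    """Wavelength in micrometres -> wavenumber in cm^-1 (1 cm = 10 000 µm)."""
    return 1.0e4 / wavelength_um


def wavenumber_to_um(wavenumber_cm: float) -> float:
    """Wavenumber in cm^-1 -> wavelength in micrometres."""
    return 1.0e4 / wavenumber_cm


# Edges of the thermal-IR range and the CO2 band mentioned in the text.
for wl in (4.0, 15.0, 100.0):
    print(f"{wl:6.1f} µm  ->  {um_to_wavenumber(wl):7.1f} cm^-1")
```

So the 4–100 μm range spans 2500–100 cm⁻¹, and the 15 μm CO2 band sits near 667 cm⁻¹.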

Other factors involve radiative forcing and, in particular, the spectral absorption of the molecule in relation to the spectral distribution of radiation emitted by matter. The distribution of emitted radiation with wavelength is shown by the dashed curves for a range of atmospheric temperatures in Figure 10.12. Unless a molecule possesses strong absorption bands in the wavelength region of significant emission, it can have little effect on the net radiation. The solid line shows the net flux at the tropopause (W m⁻²) in each 10 cm⁻¹ interval, computed with a standard narrow-band radiation scheme and a clear-sky mid-latitude summer atmosphere with a surface temperature of 294 K (Shine et al., 1990). In this scenario, the position of a molecule’s absorption bands relative to the emission spectrum is therefore critical.

Figure 10.12 Spectrum of various emissions from a black body (W m⁻² per 10 cm⁻¹ spectral interval) across the thermal infrared for temperatures of 294 K, 244 K and 194 K (from Shine et al., 1990).

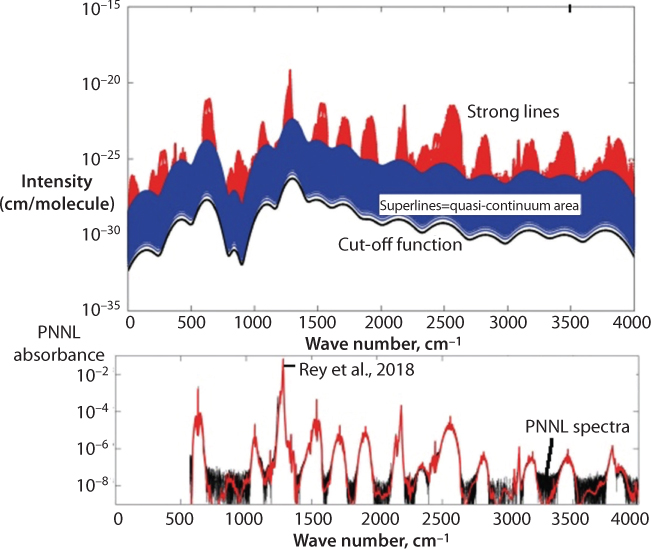
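The black-body curves in Figure 10.12 can be regenerated from the Planck function written per unit wavenumber. The sketch below computes the hemispheric flux (π times the radiance) in 10 cm⁻¹ bins for the three temperatures in the caption. It is a textbook Planck calculation, not the narrow-band scheme of Shine et al. (1990), and the 10–3000 cm⁻¹ grid is an assumption chosen to cover essentially all of the emission.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m s^-1
KB = 1.380649e-23    # Boltzmann constant, J K^-1


def flux_per_interval(wavenumber_cm: float, temperature_k: float, interval_cm: float = 10.0) -> float:
    """Black-body flux (W m^-2) emitted into a hemisphere within a band of width
    interval_cm centred on wavenumber_cm: pi * Planck radiance * band width."""
    nu = wavenumber_cm * 100.0                           # cm^-1 -> m^-1
    x = H * C * nu / (KB * temperature_k)
    radiance = 2.0 * H * C**2 * nu**3 / math.expm1(x)    # W m^-2 sr^-1 per m^-1
    return math.pi * radiance * interval_cm * 100.0      # band width in m^-1


def spectrum(temperature_k: float, start: float = 10.0, stop: float = 3000.0, step: float = 10.0):
    """(wavenumber, flux) pairs on a regular grid, one entry per `step` cm^-1."""
    count = int(round((stop - start) / step)) + 1
    return [(start + i * step, flux_per_interval(start + i * step, temperature_k, step))
            for i in range(count)]


for t in (294.0, 244.0, 194.0):
    spec = spectrum(t)
    peak_wavenumber, _ = max(spec, key=lambda pair: pair[1])
    total = sum(flux for _, flux in spec)
    print(f"{t:.0f} K: peak near {peak_wavenumber:.0f} cm^-1, total {total:.0f} W m^-2")
```

Summing the bins recovers the Stefan–Boltzmann total σT⁴, and the 294 K curve peaks a little below the 667 cm⁻¹ CO2 band, which is why that band sits in a region of strong emission.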

There has been a perfect balance in the concentration of naturally occurring gases in the atmosphere and in all the strata described above. These concentrations dictate the level of absorption that takes place below the tropopause. For gases that did not exist in nature before, such as halocarbons, the forcing is understood to be linearly proportional to the concentration. For gases such as methane and nitrous oxide, on the other hand, the forcing is considered to be proportional to the square root of the concentration (Shine et al., 1990). For CO2, the spectrum is already so opaque that additional molecules of carbon dioxide are considered to have comparatively little impact, which makes the forcing logarithmic in concentration. Molecules such as the halocarbons have bands with intensities about an order of magnitude or even greater, on a molecule-per-molecule basis, than the 15 μm band of carbon dioxide. The actual absorptance by a band is, however, a complicated function of both absorber amount and spectroscopic strength, so these factors cannot be considered entirely in isolation (Rey et al., 2018). Initial work by Rey et al. (2018) indicates that for these artificial chemicals (CF4 in this particular case), “hot bands” corresponding to transitions among excited rovibrational states contribute significantly to the opacity in the infrared even at room temperature. Such molecules have a particularly long atmospheric lifetime (scientifically, they never become assimilated with the ecosystem), and their estimated global warming potentials are very large. Rey et al. report a line list for CF4 in the range 0–4000 cm⁻¹ containing the rovibrational bands that are most active in absorption. The list is partitioned into strong and medium-intensity transitions and a quasi-continuum of individually indistinguishable weak lines. The weak lines are compressed into “super-line” (SL) databases for efficient modelling of absorption cross-sections (Figure 10.13).
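The three concentration dependences described above (linear for halocarbons, square root for CH4 and N2O, logarithmic for CO2) can be compared side by side. The text gives no fitted coefficients, so the scale factor `k` below is a placeholder set to 1 and the units are arbitrary; only the functional forms are meant to be illustrative.

```python
import math


def forcing_linear(c: float, c0: float, k: float = 1.0) -> float:
    """Halocarbon-type law: forcing grows in proportion to the added concentration."""
    return k * (c - c0)


def forcing_sqrt(c: float, c0: float, k: float = 1.0) -> float:
    """CH4/N2O-type law: forcing grows with the square root of concentration."""
    return k * (math.sqrt(c) - math.sqrt(c0))


def forcing_log(c: float, c0: float, k: float = 1.0) -> float:
    """CO2-type law: forcing grows with the logarithm of the concentration ratio."""
    return k * math.log(c / c0)


# Extra forcing from each successive doubling, starting from an arbitrary baseline of 1.
for law in (forcing_linear, forcing_sqrt, forcing_log):
    steps = [law(2.0 ** (i + 1), 2.0 ** i) for i in range(4)]
    print(law.__name__, [round(s, 3) for s in steps])
```

Under the logarithmic law every doubling adds the same increment, under the square-root law each doubling adds √2 times the previous one, and under the linear law each doubling adds twice as much as the last.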

Figure 10.13 Top panel: Strong line intensities (in red) and schematic representation of the quasi-continuum area (QC, in blue, treated using super-lines) of tetrafluoromethane at 296 K. A plot of the cutoff function is also given (black line). Bottom panel: Plot of the PNNL absorbance and comparison with TheoReTS (From Rey et al., 2018).
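The super-line idea can be sketched as follows: lines above an intensity threshold are kept individually, while weak lines are summed into cells of a fixed wavenumber grid, each cell becoming one “super-line”. This is a simplified illustration under stated assumptions (fixed threshold, fixed grid, intensities at a single temperature); the actual scheme of Rey et al. (2018) is more elaborate, and the line list below is hypothetical, not real CF4 data.

```python
from collections import defaultdict


def compress_lines(lines, threshold, bin_width):
    """Keep strong lines individually; sum weak ones into 'super-lines'.

    lines      -- iterable of (wavenumber in cm^-1, intensity) pairs
    threshold  -- lines with intensity >= threshold are kept as-is
    bin_width  -- width (cm^-1) of the grid cells that collect weak lines
    Returns (strong, super_lines), each sorted by wavenumber; each super-line
    sits at the centre of its grid cell and carries the summed intensity.
    """
    strong = []
    cells = defaultdict(float)
    for wavenumber, intensity in lines:
        if intensity >= threshold:
            strong.append((wavenumber, intensity))
        else:
            cells[int(wavenumber // bin_width)] += intensity
    super_lines = [((i + 0.5) * bin_width, total) for i, total in sorted(cells.items())]
    return sorted(strong), super_lines


# Hypothetical line list (intensities in arbitrary units), not real CF4 data.
demo = [(1280.01, 1e-18), (1280.02, 1e-24), (1280.03, 2e-24), (1283.5, 5e-25)]
strong, supers = compress_lines(demo, threshold=1e-21, bin_width=1.0)
print("strong:", strong)
print("super-lines:", supers)
```

Total intensity is conserved while the number of weak entries shrinks to at most one per grid cell, which is what makes cross-section modelling efficient.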

This behavior is strongly affected by the presence of heavy metals (Vernooij et al., 2016). Table 10.1 gives atomic data for selected metals: the ground-state configuration, the ionization energy (in cm⁻¹ and eV), and the intensity and wavelength of a characteristic line.

Table 10.1 Atomic absorption data for certain elements (From Sansonetti and Martin, 2005).

| Element | Ground state | Ionization energy | Intensity / wavelength in air (Å) |
|---|---|---|---|
| Pb I | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s²4p⁶4d¹⁰4f¹⁴5s²5p⁶5d¹⁰6s²6p² (1/2,1/2)₀ | 59819.2 cm⁻¹ (7.411663 eV) | 90 / 2663.154 |
| Pb II | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s²4p⁶4d¹⁰4f¹⁴5s²5p⁶5d¹⁰6s²6p ²P°₁/₂ | 121245.14 cm⁻¹ (15.03248 eV) | 50 / 3713.982 |
| Hg I | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s²4p⁶4d¹⁰4f¹⁴5s²5p⁶5d¹⁰6s² ¹S₀ | 84184.1 cm⁻¹ (10.4375 eV) | 80 / 3131.839 |
| Hg II | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s²4p⁶4d¹⁰4f¹⁴5s²5p⁶5d¹⁰6s ²S₁/₂ | 151284.4 cm⁻¹ (18.7568 eV) | 60 / 2260.294 |
| Pt I | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s²4p⁶4d¹⁰4f¹⁴5s²5p⁶5d⁹6s ³D₃ | 72257.3 cm⁻¹ (8.9588 eV) | 30 / 1971.5374 |
| Pt II | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s²4p⁶4d¹⁰4f¹⁴5s²5p⁶5d⁹ ²D₅/₂ | 149723 cm⁻¹ (18.563 eV) | 30 / 1954.7436 |
| Cd I | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s²4p⁶4d¹⁰5s² ¹S₀ | 136374.74 cm⁻¹ (16.90831 eV) | 150 / 3252.524 |
| Cd II | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s²4p⁶4d¹⁰5s ²S₁/₂ | 136374.74 cm⁻¹ (16.90831 eV) | 200 / 2321.074 |
| Cu I | 1s²2s²2p⁶3s²3p⁶3d¹⁰4s ²S₁/₂ | 62317.44 cm⁻¹ (7.72638 eV) | 150P / 2178.94 |
| Cu II | 1s²2s²2p⁶3s²3p⁶3d¹⁰ ¹S₀ | 163669.2 cm⁻¹ (20.2924 eV) | 150P / 1999.698 |
| Fe I | 1s²2s²2p⁶3s²3p⁶3d⁶4s² ⁵D₄ | 63737 cm⁻¹ (7.9024 eV) | 40 / 2373.6245 |
| Fe II | 1s²2s²2p⁶3s²3p⁶3d⁶4s ⁶D₉/₂ | 63737 cm⁻¹ (7.9024 eV) | 40 / 2363.8612 |
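Table 10.1 gives each ionization energy both as a wavenumber and in electronvolts. The two are related by a fixed factor (1 eV corresponds to about 8065.544 cm⁻¹, the CODATA 2018 value), so the columns can be cross-checked with a few lines:

```python
CM_PER_EV = 8065.543937  # 1 eV expressed as a wavenumber, in cm^-1 (CODATA 2018)


def wavenumber_to_ev(wavenumber_cm: float) -> float:
    """Convert an energy given as a wavenumber (cm^-1) to electronvolts."""
    return wavenumber_cm / CM_PER_EV


def ev_to_wavenumber(energy_ev: float) -> float:
    """Convert an energy in electronvolts to a wavenumber (cm^-1)."""
    return energy_ev * CM_PER_EV


# Ionization energies as listed in Table 10.1 (cm^-1).
ionization_cm = {"Hg I": 84184.1, "Cu I": 62317.44, "Cu II": 163669.2, "Fe I": 63737.0}
for species, sigma in ionization_cm.items():
    print(f"{species:6s} {sigma:10.2f} cm^-1 = {wavenumber_to_ev(sigma):.4f} eV")
```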

10.3.2 Indirect Effects

In addition to their direct radiative effects, many of the greenhouse gases also have indirect radiative effects on climate through their interactions with atmospheric chemical processes. Note that these processes are continuous and take place at any temperature and pressure, albeit at different rates. In addition, the presence of contaminants alters the nature of these reactions, as will be shown in a later section of this chapter. Some of these interactions are listed in Table 10.2.

Table 10.2 Direct radiative effects and indirect trace gas chemical-climate interactions (Shine et al., 1990).

| Gas | Greenhouse gas? | Tropospheric concentration affected by chemistry? | Effects on tropospheric chemistry? | Effects on stratospheric chemistry? |
|---|---|---|---|---|
| CO2 | Yes | No | No | Yes, affects O3 |
| CH4 | Yes | Yes, reacts with OH | Yes, affects OH, O3 and CO2 | Yes, affects O3 and H2O |
| CO | Yes, but weak | Yes, reacts with OH | Yes, affects OH, O3 and CO2 | Not significantly |
| N2O | Yes | No | No | Yes, affects O3 |
| NOx | Yes | Yes, reacts with OH | Yes, affects OH and O3 | Yes, affects O3 |
| CFC-11 | Yes | No | No | Yes, affects O3 |
| CFC-12 | Yes | No | No | Yes, affects O3 |
| CFC-113 | Yes | No | No | Yes, affects O3 |
| HCFC-22 | Yes | Yes, reacts with OH | No | Yes, affects O3 |
| CH3CCl3 | Yes | Yes, reacts with OH | No | Yes, affects O3 |
| CF2ClBr | Yes | Yes, reacts with OH | No | Yes, affects O3 |
| CF3Br | Yes | No | No | Yes, affects O3 |
| SO2 | Yes, but weak | Yes, reacts with OH | Yes, increases aerosols | Yes, increases aerosols |
| (CH3)2S | Yes, but weak | Yes, reacts with OH | Source of SO2 | Not significantly |
| CS2 | | Yes, reacts with OH | Source of COS | Yes, increases aerosols |
| COS | Yes, but weak | Yes, reacts with OH | Not significantly | Yes, increases aerosols |
| O3 | Yes | Yes | Yes | Yes |

Many of the interactions in Table 10.2 act through ozone, which plays an important dual role in affecting the climate. The climatic effect of ozone depends on its vertical distribution through the troposphere and stratosphere, in addition to its overall concentration, and that concentration is dynamic: unlike other greenhouse gases such as CO2, ozone is not chemically stable. Ozone is also a primary absorber of solar radiation in the stratosphere, and thus contributes to the increase of temperature with altitude there. The greenhouse effect is directly proportional to the temperature contrast between the level of emission and the levels at which radiation is absorbed. This contrast is greatest near the tropopause, where temperatures are at a minimum compared to the surface. Above about 30 km, added ozone causes a decrease in surface temperature because it absorbs extra solar radiation, effectively depriving the troposphere of direct solar energy that would otherwise warm the surface (Lacis et al., 1990). Lacis et al. (1990) found a cooling of the surface temperature at northern mid-latitudes during the 1970s equal in magnitude to about half the warming predicted for CO2 over the same period. However, the uncertainty of the observed trends is large, with the best estimate for mid-latitude cooling being –0.05 ± 0.05 °C. The surface cooling was attributed to ozone decreases in the lower stratosphere, which outweigh the warming effects of ozone increases in the troposphere. These results differ from predictions based on one-dimensional photochemical model simulations of ozone trends for the 1970s, which suggest a warming of the surface temperature equal to ~20% of the warming contributed by CO2. Also, the ozone decreases observed in the lower stratosphere during the 1970s produce atmospheric cooling by several tenths of a degree in the 12–20 km altitude region over the northern mid-latitudes. This temperature decrease is larger than the cooling due to CO2 and thus may obscure the expected stratospheric CO2 greenhouse signature.

The presence of metals affects IR absorption. This principle has been used for material characterization in a technique called surface-enhanced IR absorption (SEIRA). It is established that the IR absorption of molecules adsorbed on metal nanoparticles is significantly stronger than would be expected in normal measurements without the metal (Miki et al., 2002). SEIRA spectroscopy (SEIRAS) has been applied to in situ studies of electrochemical interfaces. Theoretical calculations predict that the SEIRA effect can be observed on most metals if the size and shape of the particles and their proximity to each other are well tuned. It has been observed for Au, Ag, Cu, and Pt. From a comparison with the spectra of CO adsorbed on smooth Pt surfaces, the absorption has been reported to be enhanced 10–20 times on Pt nanoparticles (Miki et al., 2002).

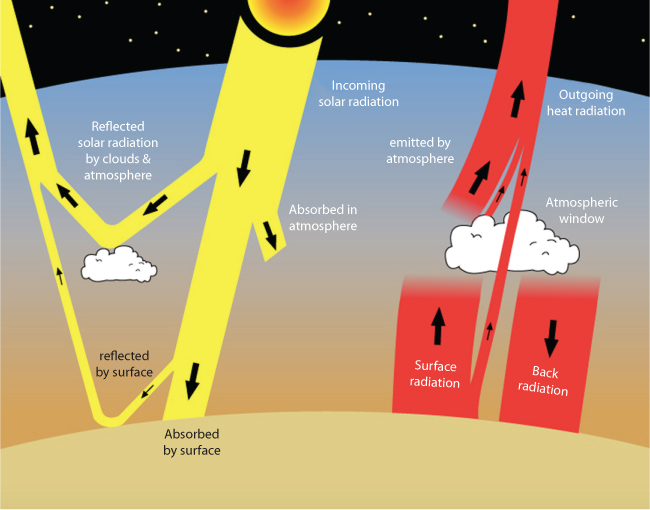

Figure 10.14 shows the fluxes of energy in and out of Earth’s surface. The Sun provides most of the incoming energy, shown in yellow. Most of this energy is absorbed at the surface with the exceptions of:

- energy reflected by clouds or the ground; and

- energy absorbed by the atmosphere.

Figure 10.14 Overall energy balance of the Earth (From NASA, as reported in Rosen and Egger, 2016).

Most of the outgoing energy is emitted by Earth’s surface as long-wavelength radiation, shown in red in Figure 10.14. It is this radiation that is mostly absorbed by greenhouse gases in the atmosphere. The atmosphere re-emits some of this energy up to space and some of it back down to Earth. The red arrow labeled “back radiation” represents the greenhouse effect, which can create an imbalance if there is a perturbation in the thermochemical features of the atmosphere.

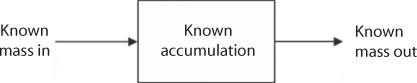

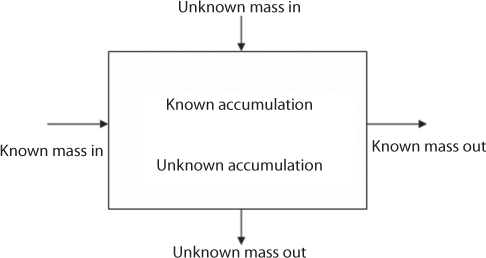

Arrhenius set out to account for all the energy coming into and leaving the Earth system, a kind of energy budget (Arrhenius, 1896). That required tallying up all the sources of energy, the ways energy could be lost (known as energy sinks) and the ways energy could be transferred (known as energy fluxes). Arrhenius did not include an illustration in his 1896 paper, but his budget essentially captured what is shown in Figure 10.14: incoming solar energy (yellow) mostly absorbed at the surface, outgoing long-wavelength radiation (red) mostly absorbed by greenhouse gases, and “back radiation” from the atmosphere representing the greenhouse effect. Thin black arrows in Figure 10.14 represent solar radiation in Arrhenius’ equation. Because long-wave infrared radiation is absorbed by greenhouse gases, these gases were known to have a role in global temperature.
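The budgeting idea can be illustrated with the simplest possible case: a planet with no absorbing atmosphere, where absorbed sunlight balances emitted infrared, S(1 − a)/4 = σT⁴. The factor 4 is the ratio of Earth’s surface area to the disc that intercepts sunlight. The solar constant of 1361 W m⁻² and albedo of 0.30 are assumed values, not taken from the text or from Figure 10.14.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def bare_planet_temperature(solar_constant: float, albedo: float) -> float:
    """Temperature (K) at which a planet with no absorbing atmosphere emits as much
    infrared as it absorbs sunlight: S (1 - a) / 4 = sigma T^4."""
    absorbed = solar_constant * (1.0 - albedo) / 4.0   # averaged over the whole sphere
    return (absorbed / SIGMA) ** 0.25


S0 = 1361.0      # ASSUMPTION: solar constant, W m^-2
ALBEDO = 0.30    # ASSUMPTION: planetary albedo
print(f"bare-planet temperature: {bare_planet_temperature(S0, ALBEDO):.1f} K")
```

The result, roughly 255 K, is well below observed surface temperatures; the gap is what the atmospheric layer in the next step is meant to explain.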

Arrhenius reasoned that if the atmosphere was absorbing infrared radiation, it too was heating up. Thus, he added another level of complexity to his model: an atmosphere that could absorb and radiate heat just like Earth’s surface. For simplicity, he treated the whole atmosphere as one layer. The atmosphere absorbed outgoing radiation emitted by the surface (thick red arrow), and then emitted its own radiation both up to space and back down to Earth (thin red arrows). This was the beginning of the so-called 2-layer model.

This was an important realization, because it showed that the atmosphere didn’t block outgoing radiation as Fourier had proposed. It absorbed it. Then, like the hotbox, it heated up and emitted infrared energy. The atmosphere emits this energy in all directions, including back toward the earth. This flux of energy from the atmosphere to the surface represents another important source of heat to Earth’s surface, and it explains the real mechanism behind the greenhouse effect.
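The single absorbing layer described above (the 2-layer model, counting the surface as one layer) can be written as two balance equations and solved in closed form. The sketch assumes the layer is transparent to sunlight, absorbs a fraction ε of the surface’s infrared, and by Kirchhoff’s law emits with the same emissivity, half upward and half downward. The solar constant, albedo and the intermediate emissivity of 0.78 are illustrative assumptions.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def one_layer_temperatures(solar_constant: float, albedo: float, emissivity: float):
    """Surface and atmosphere temperatures (K) for a surface plus one gray layer.

      top of atmosphere: S(1-a)/4 = (1-e) sigma Ts^4 + e sigma Ta^4
      atmosphere:        e sigma Ts^4 = 2 e sigma Ta^4
    which together give Ts^4 = Te^4 * 2 / (2 - e) and Ta^4 = Ts^4 / 2.
    (For e = 0 the layer is decoupled and Ta has no physical meaning.)
    """
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    t_effective = (absorbed / SIGMA) ** 0.25
    t_surface = t_effective * (2.0 / (2.0 - emissivity)) ** 0.25
    t_atmosphere = t_surface / 2.0 ** 0.25
    return t_surface, t_atmosphere


for e in (0.0, 0.78, 1.0):
    ts, ta = one_layer_temperatures(1361.0, 0.30, e)   # ASSUMED S0 and albedo
    print(f"emissivity {e:.2f}: surface {ts:.1f} K, atmosphere {ta:.1f} K")
```

With no absorption the surface stays near 255 K; a fully absorbing layer raises it to about 303 K, and an emissivity of 0.78 gives about 288 K. The back radiation term e·σ·Ta⁴ in the surface balance is what produces the warming.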

10.4 Scientific Characterization of Greenhouse Gases

It has been known for some time that biogeochemical cycling of anthropogenic metals can attach an isotopic signature during biological processing in the natural environment. This signature is often accompanied by stable isotope fractionation, which can now be measured owing to recent analytical advances (Wiederhold, 2015). A new research field involves complementing the traditional stable isotope systems (H, C, O, N, S) with many more elements across the periodic table (Li, B, Mg, Si, Cl, Ca, Ti, V, Cr, Fe, Ni, Cu, Zn, Ge, Se, Br, Sr, Mo, Ag, Cd, Sn, Sb, Te, Ba, W, Pt, Hg, Tl, U), which can potentially be applied as novel geochemical tracers. The same technique can also shed light on the use of metal stable isotopes as source and process tracers in environmental studies. Wiederhold (2015) introduced the most important concepts of mass-dependent and mass-independent metal stable isotope fractionation and their effects on natural isotopic variations (redox transformations, complexation, sorption, precipitation, dissolution, evaporation, diffusion, biological cycling, etc.).

It is known that stable isotope ratios of chemical elements in environmental samples contain valuable information on the sources of those elements and the processes they were exposed to, in essence carrying the signature of the pathway all the way back to the source. For decades, there has been a steady flow of new techniques for isotope analysis of light elements (H, C, O, N, S) and of their applications to environmental geochemistry (Hoefs, 2009; Fry, 2006). These stable isotope systems encompass only elements that can be converted into a gaseous form and are thus amenable to gas-source mass spectrometry (de Groot, 2008). For decades, the main difficulty was the lack of a technique suitable for discerning natural variations in the stable isotope composition of heavier elements, especially metals. This capability has been greatly enhanced over the last few years, during which high-precision stable isotope analysis has been expanded to almost the entire periodic table. This expansion concerns primarily the present-day cycling of metals and metalloids in the environment, related to their important roles as:

- integral components of earth surface processes (e.g., weathering, pedogenesis);

- nutrients for organisms (e.g., plants, microorganisms); and

- pollutants affecting natural ecosystems as a result of anthropogenic activities (e.g., emissions from industrial or mining sources).
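Isotope variations of this kind are usually reported in delta notation, the per-mil deviation of a sample ratio from a standard, δ = (R_sample/R_standard − 1) × 1000. A mass-independent anomaly (often written Δ) is then the departure of one δ value from what mass-dependent fractionation predicts from another. The sketch uses mercury as an example, with approximate isotope masses, a kinetic (exponential) mass-dependent law, and the linear form Δ = δᵢ − β·δⱼ; these are common conventions assumed for illustration, not the specific scheme of Wiederhold (2015).

```python
import math

# Approximate atomic masses (u) of three mercury isotopes, used for illustration.
M198, M199, M202 = 197.96677, 198.96828, 201.97064


def delta_permil(r_sample: float, r_standard: float) -> float:
    """Delta notation: per-mil deviation of an isotope ratio from a reference standard."""
    return (r_sample / r_standard - 1.0) * 1000.0


def kinetic_beta(m_ref: float, m_i: float, m_j: float) -> float:
    """Scaling factor between delta_i and delta_j under the kinetic mass-dependent law."""
    return math.log(m_i / m_ref) / math.log(m_j / m_ref)


def cap_delta(d_i: float, d_j: float, beta: float) -> float:
    """Mass-independent anomaly: how far delta_i departs from beta * delta_j."""
    return d_i - beta * d_j


beta = kinetic_beta(M198, M199, M202)
# Hypothetical sample fractionated purely mass-dependently by +2 per mil in 202/198.
d202 = 2.0
d199 = ((1 + d202 / 1000) ** beta - 1) * 1000
print(f"beta = {beta:.4f}, d199 = {d199:.4f}, D199 = {cap_delta(d199, d202, beta):.4f}")
# The same sample with an extra (mass-independent) +0.3 per mil on 199/198.
print(f"D199 with anomaly = {cap_delta(d199 + 0.3, d202, beta):.4f}")
```

A purely mass-dependent sample gives Δ close to zero, while an added non-mass-dependent shift shows up almost unchanged in Δ; that separation is what makes Δ useful as a source tracer.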

10.4.1 Connection to Subatomic Energy

Islam (2014) presented a comprehensive mass-energy balance equation that erases the artificial boundary between mass and energy. Every chemical reaction involves mass transfer, and every mass transfer involves energy transfer. Starting with Newton, mass and energy have been disconnected in all analyses of New Science. Only recently has it been recognized that energy exchange is inherent to chemical reactions¹. The problem, however, persists because there is currently no mechanism to discern between organic/natural mass and synthetic/artificial mass, which are the sources of organic energy and artificial energy, respectively. The use of quantum chemistry has made the situation worse because, instead of seeking scientific reasoning, it has made it easier to invoke dogmatic and often paradoxical concepts. Even so, the latest discoveries are useful in gaining insight into physico-chemical reactions. In that regard, Rahm and Hoffmann (2015) introduced new ways of understanding the origins of energy in chemical reactions. Starting with the premise that all of the interactions between the molecules, atoms, and electrons that bind atoms together can collectively be understood in terms of energy, they proposed a new energy decomposition analysis in which the total energy change of any chemical reaction can be broken down into three components:

- nuclear-nuclear repulsion (the repulsive energy between the positively charged nuclei of different atoms);

- the average electron binding energy (the average energy required to remove one electron from an atom); and

- electron-electron interactions (the repulsive energy between negatively charged electrons).

The first component (repulsion) arises when two atoms are brought together and the distance between their nuclei decreases. This repulsion leads to an accumulation of electrons that fills in the space created by the repulsion. In the presence of the two nuclei, the average binding energy of the electrons changes because of differences in electron–nuclear attraction. As the electrons move closer together, they also begin to interact more strongly with each other. At this point, quantum chemistry focuses on quantifying these electron–electron interactions under the assumption that all electrons are alike, irrespective of the element they belong to. We argued in previous chapters that not only are they different from element to element, they also differ in their spin depending on the source of the element: a natural source would have them spin in a different direction from that of artificial sources. Not surprisingly, Rahm and Hoffmann (2015) focused on the electron interactions and estimated them from experimental data, relying on quantum analysis. Conventionally, a wave function is used to obtain solutions to the Schrödinger equation, which assumes the simultaneous existence of wave–particle duality. They correlated experimental data with solutions of quantum equations to justify their applicability. Similarly, electronegativity, an old term used to describe the propensity of an atom to attract a bonding pair of electrons, was redefined. When the Nobel laureate chemist Linus Pauling first defined this term in 1932, he referred to it as “the power of an atom to attract electrons to itself.” An alternative definition was proposed by Allen (1989), who introduced electronegativity as the third dimension of the periodic table. In essence, Allen legitimizes the Schrödinger equation by calling it an intimate property of the periodic table.
This opened the door to experimentally validating electronegativity values, which had not been explicitly treated as energies until Allen’s paper. Allen expressed electronegativity on a per-electron (average one-electron energy) basis as:

$$\chi_{\text{spec}} = \frac{m\,\epsilon_p + n\,\epsilon_s}{m + n}$$

where m and n are the numbers of p and s valence electrons, respectively. The corresponding one-electron energies, ϵp and ϵs, are the multiplet-averaged total energy differences between a ground-state neutral atom and a singly ionized atom. Rahm and Hoffmann (2015) used the same concept but included all of the electrons, not just the valence ones, in their definition of electronegativity. They showed that traditional electronegativity values (such as Allen’s) and average all-electron binding energies often give the same general trends over a reaction, while retaining the lower-lying (core) electrons makes the description more complete.
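Allen’s formula, (m·ϵp + n·ϵs)/(m + n), is simply the average one-electron energy of the valence electrons, so it is the valence-only special case of the Rahm–Hoffmann average binding energy. The sketch makes this explicit; the one-electron energies are hypothetical round numbers, not values from any table, and binding energies are entered as positive numbers in eV.

```python
def allen_electronegativity(m: int, n: int, eps_p: float, eps_s: float) -> float:
    """Allen's configuration energy: (m*eps_p + n*eps_s) / (m + n), where m and n
    are the numbers of p and s valence electrons and eps_p, eps_s are their
    multiplet-averaged one-electron energies (eV)."""
    return (m * eps_p + n * eps_s) / (m + n)


def average_binding_energy(energies) -> float:
    """Mean one-electron binding energy over whichever electrons are listed."""
    energies = list(energies)
    return sum(energies) / len(energies)


# HYPOTHETICAL one-electron energies (eV) for an atom with a 1s^2 core and an
# s^2 p^2 valence shell; chosen for round numbers, not taken from any table.
EPS_CORE, EPS_S, EPS_P = 300.0, 19.0, 11.0
valence = [EPS_S] * 2 + [EPS_P] * 2
all_electrons = [EPS_CORE] * 2 + valence

print(allen_electronegativity(2, 2, EPS_P, EPS_S))  # 15.0
print(average_binding_energy(valence))              # 15.0, the valence-only case
print(average_binding_energy(all_electrons))        # 110.0, dominated by the core
```

The all-electron average is dominated by the tightly bound core, which is why all-electron values grow for heavier atoms even when valence behaviour is similar.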

With this information, Rahm and Hoffmann (2015) explain that all chemical reactions and physical transformations can be classified into eight types based on whether the reaction is energy-consuming or energy-releasing, and on whether it is favoured or resisted by the nuclear, multielectron, and/or binding energy components. This classification in turn relates to the nature of a chemical bond. They also showed that, in four of the eight classes of reactions, knowledge of the binding energy alone is sufficient to predict whether or not the reaction is likely to take place. Although their focus was on measuring absolute energy, this work laid the groundwork for characterizing energy in terms of its sustainability.

In the experiment, the baseline is created with a one-electron system (such as C⁵⁺, a carbon atom with all but one of its electrons removed). The absolute energy of this system is easy to determine because, with only one electron, there is no electron–electron repulsion. The absolute energy of the carbon atom, and the electron–electron interactions within it, can then be determined as electrons are added back one by one, because the average electron binding energy can in principle be measured experimentally at each step.
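The reason a one-electron ion makes a convenient baseline is that its energy follows in closed form from the nuclear charge alone, E = −Ry·Z²/n². The sketch below uses this non-relativistic, infinite-nuclear-mass result; measured values differ slightly because of relativistic and reduced-mass corrections, which are ignored here.

```python
RYDBERG_EV = 13.605693  # Rydberg energy in eV (infinite nuclear mass)


def hydrogen_like_energy(z: int, n: int = 1) -> float:
    """Non-relativistic energy (eV) of a one-electron ion with nuclear charge z
    in shell n. With a single electron there is no electron-electron term."""
    return -RYDBERG_EV * z**2 / n**2


for z, label in [(1, "H"), (2, "He+"), (6, "C5+")]:
    print(f"{label:4s} ground state: {hydrogen_like_energy(z):9.3f} eV")
```

For C⁵⁺ this gives about −489.8 eV; every electron added back on top of that baseline brings in the electron–electron terms that the experiment is designed to isolate.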

The following are the major consequences of Rahm and Hoffmann’s work:

- connects electronegativity to total energy;

- allows quantification of electron-electron interactions in governing chemical reactions from experimental data;

- gives an avenue to track energy sources through experiment and subsequent refinement through computational models.

The average binding energy of a collection of electrons, χ̄, is a defined property of any assembly of electrons in atoms, molecules, or extended materials (Rahm and Hoffmann, 2015):

$$\bar{\chi} = \frac{1}{n}\sum_{i=1}^{n} \varepsilon_i \tag{10.2}$$

where εi is the energy corresponding to the vertical (Franck–Condon) emission of one electron i into vacuum, with zero kinetic energy, and n is the total number of electrons. For extended structures in one, two, or three dimensions, χ̄ can be obtained from the density of states (DOS), g(ε), as

$$\bar{\chi} = \frac{\int_{-\infty}^{\varepsilon_F} \varepsilon\, g(\varepsilon)\, d\varepsilon}{\int_{-\infty}^{\varepsilon_F} g(\varepsilon)\, d\varepsilon} \tag{10.3}$$

where εF is the Fermi energy². A related expression for the partial DOS has been found useful for determining the average position of diverse valence states in extended solids and for estimating the covalence of chemical bonds. With the definitions of Equations 10.2 and 10.3, one can choose to estimate χ̄ for any subset of electrons, such as “valence-only” (as Allen (1989) did).

Do the average electron binding energies correlate with time-honoured measures of electronegativity, for instance Pauling’s values? Figure 10.15 shows the relation for the first four periods. The correlation is clearly there, but with one important difference. By the χ̄ definition, heavier elements naturally attain larger absolute values of χ̄, simply because the definition includes the cores, i.e., the binding of a larger number of electrons. χ̄ values correlate linearly with normal atomic electronegativity scales (Pauling χ in Figure 10.15, but similar correlations are obtained with other scales) only along each period, not down the periodic table. Rahm and Hoffmann note, however, that the trend of increasing χ̄ down the periodic table, where each atom of necessity attracts more electrons, is from a certain perspective in accord with Pauling’s original definition quoted above. It is Δχ̄, the change in average electron binding energy, that matters in this analysis, not absolute values of χ̄. To maintain a connection to more traditional electronegativity values, one can estimate Δχ̄ using a valence-only approach, i.e., the Allen scale of electronegativity, which correlates linearly with Pauling’s values. For CH4, Rahm and Hoffmann found that such valence-only values of Δχ̄ are similar to Δχ̄ estimated with an all-electron approach and, in their experience, lead to the same general conclusions. Because the energies of lower levels can, in fact, shift in a reaction, they nevertheless recommend the all-electron χ̄ for a more rigorous connection to the total energy. They acknowledge that all-electron electronegativities are unfamiliar and seem to run counter to chemistry’s fruitful concentration on the valence electrons, but argue that the definition has real utility.

Figure 10.15 Comparison of χ̄ (from LC-DFT) with Pauling electronegativity for the first four periods. Values for He, Ne, Ar, and Kr are taken from a separate source in the original study. The χ̄ values for H and He are, of course, not zero; they just appear small on the χ̄ scale. The lines are linear regression lines for the elements in a period, with a separate line for Zn–Kr (adapted from Rahm and Hoffmann, 2015).
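For extended solids, the DOS form (10.3) of χ̄ is the DOS-weighted mean energy of all states below the Fermi level. The sketch evaluates that ratio of integrals with the trapezoidal rule for a model DOS built from Gaussian bands. The band positions, widths and Fermi level are hypothetical; energies here are level energies relative to vacuum (negative numbers), so the corresponding binding energies are their magnitudes.

```python
import math


def average_energy_from_dos(dos, e_min: float, fermi_energy: float, steps: int = 20000) -> float:
    """DOS-weighted mean energy of the states between e_min and the Fermi level,
    i.e. integral(e * g(e)) / integral(g(e)), evaluated with the trapezoidal rule.
    The grid spacing cancels in the ratio, so it is omitted from both sums."""
    h = (fermi_energy - e_min) / steps
    numerator = denominator = 0.0
    for i in range(steps + 1):
        e = e_min + i * h
        weight = 0.5 if i in (0, steps) else 1.0   # trapezoid end-point weights
        g = dos(e)
        numerator += weight * e * g
        denominator += weight * g
    return numerator / denominator


def gaussian_band(center: float, width: float):
    """Return a Gaussian-shaped model band g(e) with arbitrary normalisation."""
    return lambda e: math.exp(-0.5 * ((e - center) / width) ** 2)


# HYPOTHETICAL model DOS (eV): a narrow deep band plus a broader upper band
# that is cut off by the Fermi level at -5 eV.
deep, upper = gaussian_band(-20.0, 1.0), gaussian_band(-6.0, 1.5)


def model_dos(e: float) -> float:
    return deep(e) + 2.0 * upper(e)


print(f"average energy of occupied states: {average_energy_from_dos(model_dos, -40.0, -5.0):.3f} eV")
```

Lowering the Fermi level removes the highest occupied states and so makes the average more negative (larger binding energy), which is the kind of change Δχ̄ tracks in a reaction.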

The designation of total energy as the sum of three primary contributions, namely the average electron binding energy, the nuclear–nuclear repulsion, and multielectron interactions, can be adjusted by assigning electrons a phenomenal configuration, that is, a collection of particles that continues to include smaller particles in a yin-yang pairing fashion. This can help amalgamate the galaxy model with the model proposed by Rahm and Hoffmann (2015). If the premise holds that natural material spins with a different orientation than artificial material, each material can carry a signature. The following sequence of events occurs with artificial materials:

Characteristic time is changed → Natural frequency and orientation is changed → bond energies change → becomes a ‘cancer’ to natural materials.

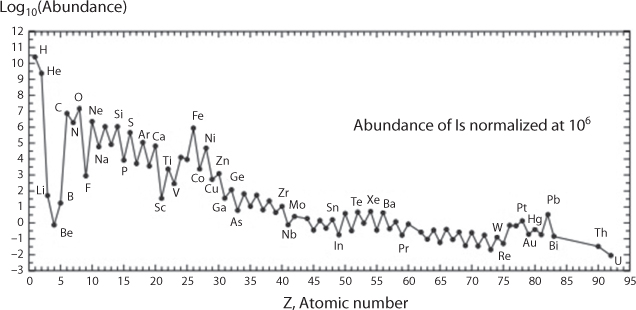

New Science observed such behaviour of artificial materials and exploited these features to develop a new line of products. New Science also attempted to explain such behaviour, but with dogmatic assertions, some of which have been presented earlier. Much previous material characterization focused on nuclear stability. As we saw in the previous section, there is a general understanding that subatomic features relate to both energy and mass characteristics. To begin with, there is a correlation between abundance and atomic number, Z. Figure 10.16 shows that elements with an even number of protons, reflected by an even atomic number Z, are more abundant in nature than those with an odd number. The most abundant elements are also the most stable and the most difficult to denature. While every element is useful in its natural state, a denatured element is inherently harmful (Khan and Islam, 2016).

Figure 10.16 Natural relative abundance of the elements as a function of their atomic number (From Vanhaecke and Kyser, 2010).

10.4.2 Isotopes and Their Relation to Greenhouse Gases

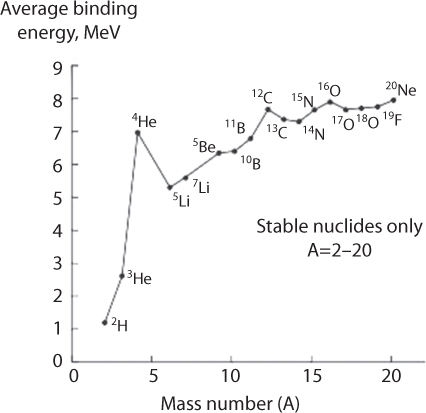

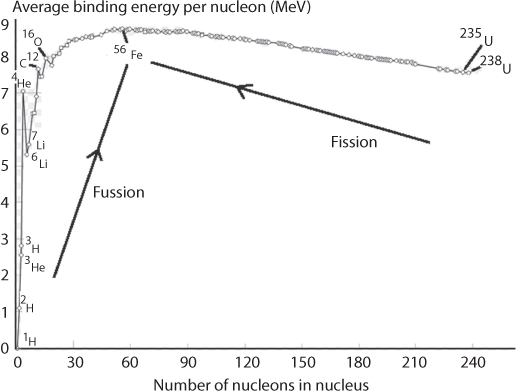

Figure 10.17 shows the binding energy per nucleon for nuclides with a mass number greater than 20. Using this graph, scientists conclude that fission of a heavy nucleus into lighter nuclei, or fusion of two H atoms into He, is exo-energetic because the process yields nuclei characterized by a substantially higher binding energy per nucleon. Figure 10.18 shows the average binding energy for nuclides with a mass number lower than 20. This figure shows that nuclei with even numbers of protons and neutrons have a higher binding energy per nucleon and thus higher stability (compare, e.g., the binding energies for ⁴He and ³He, ¹²C and ¹³C, and ¹⁶O and ¹⁷O).

Figure 10.17 Average binding energy per nucleon as a function of mass number for nuclides with a mass number from 20 to 238 (From Vanhaecke and Kyser, 2010).

Figure 10.18 Average binding energy per nucleon as a function of mass number for nuclides with a mass number from 1 to 20 (From Vanhaecke and Kyser, 2010).

Around a mass number of 60, there is an optimum in average binding energy (Figure 10.19). Fewell (1995) indicated that the most tightly bound of the nuclei is ⁶²Ni. This contradicts the earlier assertion that ⁵⁶Fe is the most strongly bound nucleus: Fewell demonstrated that both ⁵⁸Fe and ⁶²Ni are more strongly bound than ⁵⁶Fe. Table 10.3 tabulates the binding energy B divided by the mass number A, i.e., the average binding energy per nucleon. It is interesting to note that ⁵⁶Fe has a higher value than ⁵²Cr and ⁵⁴Cr, as well as ⁶⁰Ni. It is also true that ⁵⁶Fe has the lowest mass per nucleon of any nuclide³. The optimum is explained by the fact that the more nucleons there are, the stronger the total strong force in the nucleus. However, as nucleons are added, the nucleus gets bigger, so the nucleons near its outside are not as tightly bound as those near the middle. Further data are shown in Tables 10.3 and 10.4. Because of this variation of the strong force with distance, the binding energy per nucleon increases until the nucleus gets too big, after which it starts decreasing again. The binding energy per nucleon reaches a maximum around A = 56, and the only stable isotope with that mass number is ⁵⁶Fe. Figure 10.19 shows the nuclear binding energy per nucleon of the seven “key” elements denoted in the graph by their abbreviations (four with more than one isotope referenced). An increase in binding energy can be thought of as the energy released when a collection of nuclei is rearranged into another collection for which the sum of nuclear binding energies is higher. As can be seen in this figure, light elements such as hydrogen release large amounts of energy (a big increase in binding energy) when combined to form heavier nuclei. This is the process of fusion. Beyond iron, heavy elements release energy when converted to lighter nuclei, through alpha decay and nuclear fission.
Figure 10.19 shows that the curve rises rapidly at low A, reaches a broad maximum for mass numbers of 50 to 60 (corresponding to nuclei in the neighbourhood of iron in the periodic table, which are the most strongly bound nuclei), and then gradually declines for nuclei with higher values of A. In this figure, iron's ranking as the optimum carries significance in terms of sustainability. It turns out that iron stands out among toxic elements, as do silver and gold, which also represent optimum properties. When it comes to organic applications, these optima are paramount (Bjørklund et al., 2017).

Figure 10.19 Optimum around iron.

Table 10.3 B/A Ratio for Optimum Elements (From Wapstra and Bo, 1985).

| Nuclide | B/A (keV per nucleon) |
|---|---|
| 62Ni | 8794.60 ± 0.03 |
| 58Fe | 8792.23 ± 0.03 |
| 56Fe | 8790.36 ± 0.03 |
| 60Ni | 8780.79 ± 0.03 |
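The B/A values in Table 10.3 follow from the mass defect, B = [Z·M(¹H) + N·m_n − M(A, Z)]·c², divided by A. The sketch below assumes atomic-mass values recalled from standard atomic mass evaluations (they are not given in this chapter) and neglects electron binding energies; with these inputs it should reproduce the tabulated values to within a fraction of a keV and the same ranking (62Ni > 58Fe > 56Fe > 60Ni).

```python
# Sketch: binding energy per nucleon (B/A) from atomic masses.
# ASSUMPTION: the atomic masses below are approximate literature values,
# not taken from Table 10.3; electron binding energies are neglected.

M_H1 = 1.00782503223      # atomic mass of 1H (proton + electron), u
M_N = 1.00866491595       # neutron mass, u
U_TO_MEV = 931.49410242   # energy equivalent of 1 u, MeV

# nuclide: (Z, A, atomic mass in u), assumed values
NUCLIDES = {
    "56Fe": (26, 56, 55.934936326),
    "58Fe": (26, 58, 57.933274431),
    "60Ni": (28, 60, 59.930785885),
    "62Ni": (28, 62, 61.928345365),
}


def binding_energy_per_nucleon_kev(z: int, a: int, atomic_mass_u: float) -> float:
    """Return B/A in keV per nucleon.

    Using the 1H atomic mass (rather than the bare proton mass) makes the
    Z electron masses cancel against those included in the atomic mass.
    """
    n = a - z
    mass_defect_u = z * M_H1 + n * M_N - atomic_mass_u
    return mass_defect_u * U_TO_MEV * 1000.0 / a


if __name__ == "__main__":
    ranked = sorted(
        ((name, binding_energy_per_nucleon_kev(*v)) for name, v in NUCLIDES.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    for name, ba in ranked:
        print(f"{name}: {ba:.2f} keV/nucleon")
```

The small residual differences from Table 10.3 reflect the newer mass values assumed here.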

Table 10.4 Most Abundant Metals and Their Concentration.

| Metal | Concentration | Ranking |
|---|---|---|
| Aluminum | 8.1% | 1 |
| Iron | 5% | 2 |
| Calcium | 3.6% | 3 |
| Sodium | 2.8% | 4 |
| Potassium | 2.6% | 5 |
| Magnesium | 2.1% | 6 |
| Others | 0.8% | 7 |

In practical terms, this means that iron is harder to denature than any other element.

This variation in binding energy per nucleon also has a pronounced effect on the isotopic composition of the elements, especially the light elements. “Even–even” isotopes of elements such as C and O (12C and 16O) are much more abundant than their counterparts with an odd number of neutrons (13C and 17O). Although the binding energy per nucleon varies only slightly with mass number for the heavier elements, it can vary substantially among the isotopes of a single element, leading to a preferred occurrence of even–even isotopes. This is illustrated by the relative isotopic abundances of elements such as Cd and Sn, shown in Table 10.6. In both the lower (106Cd through 110Cd) and the higher (114Cd through 116Cd) mass ranges, only Cd isotopes with an even mass number occur. In addition, the natural relative isotopic abundances of 113Cd and, to a lesser extent, 111Cd are low in comparison with those of the neighboring Cd isotopes with an even mass number. Similarly, for Sn, 7 of whose 10 isotopes have an even mass number, the isotopes with an odd mass number have a lower natural relative abundance than their neighbors. This trend continues for silicon, nitrogen, oxygen, and sulphur. The only exception is hydrogen, for which the isotope with an odd mass number (1H) is the predominant stable form. Protium (1H) has no neutron, deuterium has one, and tritium has two. The isotopes of hydrogen therefore have mass numbers of one, two, and three, respectively, and their nuclear symbols are 1H, 2H, and 3H. Each of these atoms has one electron to balance the charge of its single proton.

Electrons Are Fermions of Half-Integer Spin. Particles with Integer Spin Are Bosons. Fermions and Bosons Avoid Each Other

Figure 10.20 indicates, for each element, the number of stable isotopes (upper right corner), the mass of the isotope commonly used in the delta value or, alternatively, the most abundant isotope (upper left corner), and the potential influence of radiogenic (RAD) and cosmogenic (COS) processes as well as mass-independent fractionation (MIF) on the stable isotope system (below the element symbol). In most cases, MIF due to nuclear volume or magnetic isotope effects has only been observed in laboratory-scale studies and has not yet been detected in natural samples (except for O, S, and Hg, marked in bold). The “traditional” stable isotope systems are marked with a red border. Elements for which high-precision stable isotope methods have been developed are marked with a bold symbol. For insight into isotopic fractionation, Hg offers an excellent case because it has seven stable isotopes. Table 10.7 lists the atomic mass and natural relative abundance of the Hg isotopes.

Figure 10.20 Periodic table with selected elemental properties relevant for stable isotope research (from Wiederhold, 2015).

Table 10.6 Isotopic Composition of Selected Elements (From Vanhaecke and Kyser, 2010, and Lide, 2002).

| Element | Atomic number, Z | Isotope | Natural relative abundance (%) |
|---|---|---|---|
| Cd | 48 | 106Cd | 1.25 |
| | | 108Cd | 0.89 |
| | | 110Cd | 12.49 |
| | | 111Cd | 12.80 |
| | | 112Cd | 24.13 |
| | | 113Cd | 12.22 |
| | | 114Cd | 28.73 |
| | | 116Cd | 7.49 |
| Sn | 50 | 112Sn | 0.97 |
| | | 114Sn | 0.66 |
| | | 115Sn | 0.34 |
| | | 116Sn | 14.54 |
| | | 117Sn | 7.68 |
| | | 118Sn | 24.22 |
| | | 119Sn | 8.59 |
| | | 120Sn | 32.58 |
| | | 122Sn | 4.63 |
| | | 124Sn | 5.79 |
| H | 1 | 1H | 99.985 |
| | | 2H | 0.015 |
| C | 6 | 12C | 98.89 |
| | | 13C | 1.11 |
| N | 7 | 14N | 99.64 |
| | | 15N | 0.36 |
| O | 8 | 16O | 99.76 |
| | | 17O | 0.04 |
| | | 18O | 0.2 |
| Si | 14 | 28Si | 92.23 |
| | | 29Si | 4.67 |
| | | 30Si | 3.10 |
| S | 16 | 32S | 95.0 |
| | | 33S | 0.76 |
| | | 34S | 4.22 |
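The preference for even–even isotopes described above can be quantified by summing abundances by mass-number parity. The sketch below uses only the Cd abundances listed in the table; because Z = 48 is even, an even mass number implies an even neutron number, so the even-A isotopes are even–even nuclei. The function name is illustrative.

```python
# Natural relative abundances (%) of Cd isotopes (Z = 48), as listed in the table above.
CD_ABUNDANCE = {
    106: 1.25, 108: 0.89, 110: 12.49, 111: 12.80,
    112: 24.13, 113: 12.22, 114: 28.73, 116: 7.49,
}


def even_odd_split(abundances: dict[int, float]) -> tuple[float, float]:
    """Return (total % in even-mass isotopes, total % in odd-mass isotopes)."""
    even = sum(p for a, p in abundances.items() if a % 2 == 0)
    odd = sum(p for a, p in abundances.items() if a % 2 == 1)
    return even, odd


if __name__ == "__main__":
    even, odd = even_odd_split(CD_ABUNDANCE)
    print(f"Cd: even-A {even:.2f}%, odd-A {odd:.2f}%")
```

For Cd, the six even-mass isotopes together account for roughly three quarters of the element, and the two odd-mass isotopes for the remaining quarter.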

Figure 10.21 illustrates the influence of different fractionation mechanisms on Hg isotopes. In this figure, the arrows qualitatively indicate the influence of the mass difference effect (MDE), the nuclear volume effect (NVE), and the magnetic isotope effect (MIE) on the seven stable Hg isotopes. Mass-independent fractionation (MIF), defined as a measured anomaly relative to the trend of the MDE, is observed mainly for the two odd-mass isotopes 199Hg and 201Hg and can be caused either by the NVE, owing to their nonlinear increase in nuclear charge radii, or by the MIE, owing to their nuclear spin and magnetic moment. As can be seen from Table 10.7, the natural abundances of these two isotopes are markedly lower than those of their ‘even–even’ counterparts, 200Hg and 202Hg, respectively. MIF by the NVE and by the MIE can be differentiated by the relative extent of MIF on 199Hg and 201Hg. Elements for which MIF has been detected in natural samples (only O, S, Hg) or observed in laboratory studies are marked in Figure 10.20. The relative extent of the nuclear charge radius anomalies (x/y = 1.6) produces the characteristic slope in a Δ199Hg/Δ201Hg plot for the NVE, compared with the slopes observed for Hg(II) photoreduction (1.0) and methyl-Hg photodemethylation (~1.36) due to the MIE. The magnitude of MIF due to the NVE is generally much smaller than that of MIF by the MIE. The MIE occurs only during kinetically controlled processes (in natural systems probably always related to photochemical reactions), whereas the NVE and the MDE occur during both kinetic and equilibrium processes. The relative importance of the MDE and the NVE in the overall fractionation can vary depending on the reacting species.
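The slope-based discrimination described above can be sketched in two steps: compute the anomalies Δ199Hg and Δ201Hg from measured δ values, then estimate the Δ199Hg/Δ201Hg slope and compare it with the reference slopes quoted in the text (1.6 for the NVE, 1.0 for Hg(II) photoreduction, ~1.36 for methyl-Hg photodemethylation). This is a minimal sketch under stated assumptions: the anomaly is taken as the linear approximation Δ = δ − β·δ202Hg with the widely used kinetic mass-dependent factors β199 = 0.2520 and β201 = 0.7520, the slope is fitted through the origin, and the sample values are hypothetical. A real study would fit with an intercept and propagate analytical uncertainties.

```python
# ASSUMPTION: kinetic mass-dependent scaling factors (beta) relative to 202Hg.
BETA = {199: 0.2520, 201: 0.7520}

# Reference Delta199Hg/Delta201Hg slopes quoted in the text.
REFERENCE_SLOPES = {
    "nuclear volume effect": 1.6,
    "MIE: Hg(II) photoreduction": 1.0,
    "MIE: methyl-Hg photodemethylation": 1.36,
}


def cap_delta(delta_xxx: float, delta_202: float, mass: int) -> float:
    """Mass-independent anomaly (per mil) of isotope `mass`, linear approximation:
    Delta = delta_xxx - beta * delta_202."""
    return delta_xxx - BETA[mass] * delta_202


def slope_through_origin(x: list[float], y: list[float]) -> float:
    """Least-squares slope m of the model y = m * x (no intercept)."""
    sxx = sum(v * v for v in x)
    if sxx == 0:
        raise ValueError("x values must not all be zero")
    return sum(a * b for a, b in zip(x, y)) / sxx


def closest_mechanism(slope: float) -> str:
    """Name of the reference slope nearest to `slope` (a crude screen only)."""
    return min(REFERENCE_SLOPES, key=lambda k: abs(REFERENCE_SLOPES[k] - slope))


if __name__ == "__main__":
    # Hypothetical measurements, per mil: (delta199, delta201, delta202)
    samples = [(-0.10, -0.25, 0.40), (-0.35, -0.60, 0.20), (-0.60, -0.90, 0.10)]
    d199 = [cap_delta(s[0], s[2], 199) for s in samples]
    d201 = [cap_delta(s[1], s[2], 201) for s in samples]
    m = slope_through_origin(d201, d199)
    print(f"Delta199/Delta201 slope = {m:.2f}, closest to: {closest_mechanism(m)}")
```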

Figure 10.21 Schematic illustration of fractionation mechanisms for the Hg isotope system (From Wiederhold, 2015).

Table 10.7 Mercury Isotopes.

| Isotope | Atomic mass (u) | Natural abundance (%) |
|---|---|---|
| 196Hg | 195.965807 | 0.15 |
| 198Hg | 197.966743 | 9.97 |
| 199Hg | 198.968254 | 16.87 |
| 200Hg | 199.968300 | 23.10 |
| 201Hg | 200.970277 | 13.18 |
| 202Hg | 201.970617 | 29.86 |
| 204Hg | 203.973467 | 6.87 |
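As a quick consistency check on Table 10.7, the abundance-weighted mean of the isotopic masses should land close to the standard atomic weight of mercury (about 200.59). The sketch below copies the table values directly; the function name is illustrative.

```python
# (mass number, atomic mass in u, natural abundance in %) from Table 10.7
HG_ISOTOPES = [
    (196, 195.965807, 0.15),
    (198, 197.966743, 9.97),
    (199, 198.968254, 16.87),
    (200, 199.968300, 23.10),
    (201, 200.970277, 13.18),
    (202, 201.970617, 29.86),
    (204, 203.973467, 6.87),
]


def mean_atomic_mass(isotopes: list[tuple[int, float, float]]) -> float:
    """Abundance-weighted mean atomic mass, normalized by the total abundance."""
    total = sum(abundance for _, _, abundance in isotopes)
    return sum(mass * abundance for _, mass, abundance in isotopes) / total


if __name__ == "__main__":
    print(f"mean atomic mass of Hg: {mean_atomic_mass(HG_ISOTOPES):.3f} u")
```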

In assessing greenhouse gas pollution, metal concentrations are paramount. Modern refining and material processing rely heavily on catalysts that contain metals, including heavy metals. For tracking these contaminants, isotope signatures can be used in different ways to deduce information about the composition and history of environmental samples. The most important applications are source and process tracing. If the isotopic compositions of the involved end members are known and sufficiently distinct, the contributions of different source materials to a sample can be quantified by mixing calculations. Figure 10.22 illustrates the mass balance between two metal pools with opposite isotope signatures: panel (a) depicts two pools of equal size, panel (b) shows the effect of different pool sizes (pool A = 4 × pool B), panel (c) shows the combined effect of different pool sizes and isotope signatures, and panel (d) illustrates a schematic example of a natural river system for which the relative fractions of natural and anthropogenic metal sources can be quantified by metal isotope signatures. The delta (δ) value of a sample can be expressed as the sum of the δ values of the mixing end members multiplied by their relative fractions of the total amount present (Equation 10.3):

$$\delta_{\text{sample}} = f_{A}\,\delta_{A} + f_{B}\,\delta_{B} \tag{10.3}$$

where $f_A$ and $f_B$ are the relative fractions of the involved pools A and B ($f_A + f_B = 1$, as required by the material balance).

Figure 10.22 Schematic illustration of the principles of mixing models used for source tracing with metal stable isotope signatures (From Wiederhold, 2015).

Rearranging the equation allows the fraction of one end member to be determined (Equation 10.4):

$$f_{A} = \frac{\delta_{\text{sample}} - \delta_{B}}{\delta_{A} - \delta_{B}} \tag{10.4}$$

For example, if the geogenic (naturally occurring) background in a soil has an isotopic composition of +0.5‰ relative to the reference standard and the soil has been polluted by an anthropogenic source with a δ value of –1.5‰, a δ value of –1.0‰ would indicate an anthropogenic contribution of 75%. This, of course, assumes that a linear mixing rule applies. As we will see in later sections, real-life contamination carries far greater consequences from anthropogenic materials. Chen et al. (2008) presented a case study involving Zn isotopes in the Seine River, France. This is depicted in. Chen et al. (2008) used Zn isotope ratios as a tracer of anthropogenic contamination, drawing on an extensive collection of river water samples from the Seine River basin collected between 2004 and 2007. The 66Zn/64Zn ratios (expressed as δ66Zn) of dissolved Zn were measured by MC-ICP-MS after chemical separation of Zn. Significant isotopic variations (0.07–0.58‰) occurred along a transect from pristine areas of the Seine basin to the estuary, and over time in Paris, and were found to be coherent with the Zn enrichment factor. Dissolved Zn in the Seine River displays conservative behavior, making Zn isotopes a good tracer of the different sources of contamination. Dissolved Zn in the Seine River is essentially of anthropogenic origin (>90%) compared to natural sources (<7%). Roof leaching from the Paris conurbation was a major source of Zn, characterized by low δ66Zn values that are distinct from other natural and anthropogenic sources of Zn. Their study highlights the absence of distinctive δ66Zn signatures of fertilizer, compost, or rain in river waters of rural areas, and therefore suggests strong retention of Zn in the soils of the basin. They demonstrated that Zn isotope ratios can be a powerful tool for tracing pathways of anthropogenic Zn in the environment.
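The soil example above can be reproduced with two small functions: the δ notation, δ = (R_sample/R_standard − 1) × 1000 in ‰, and the two-end-member mass balance of Equation 10.4, f_A = (δ_sample − δ_B)/(δ_A − δ_B). This is a minimal sketch assuming strictly linear mixing, as the text does; the function names and the 66Zn/64Zn ratios in the usage lines are hypothetical, used only to illustrate the δ definition.

```python
def delta_per_mil(r_sample: float, r_standard: float) -> float:
    """Delta value in per mil: (R_sample / R_standard - 1) * 1000."""
    return (r_sample / r_standard - 1.0) * 1000.0


def fraction_of_a(delta_sample: float, delta_a: float, delta_b: float) -> float:
    """Fraction of end member A in a binary mixture, assuming linear mixing:
    f_A = (delta_sample - delta_B) / (delta_A - delta_B)."""
    if delta_a == delta_b:
        raise ValueError("end members must have distinct delta values")
    return (delta_sample - delta_b) / (delta_a - delta_b)


if __name__ == "__main__":
    # Soil example from the text: geogenic background +0.5 per mil,
    # anthropogenic source -1.5 per mil, measured sample -1.0 per mil.
    f_anthro = fraction_of_a(delta_sample=-1.0, delta_a=-1.5, delta_b=0.5)
    print(f"anthropogenic fraction: {f_anthro:.0%}")  # prints: anthropogenic fraction: 75%
    # Hypothetical 66Zn/64Zn ratios, only to illustrate the delta definition.
    print(f"delta66Zn = {delta_per_mil(0.5701, 0.5700):.2f} per mil")
```

The guard against equal end-member values reflects the condition stated in the text: mixing calculations only work when the end members are sufficiently distinct.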

Estrade et al. (2010) also used several stable isotopes of Hg for source tracing. They investigated Hg in lichens across a 900 km² territory in northeastern France over a period of nine years (2001–2009). The study area was divided into four geographical zones: a rural area, a suburban area, an urban area, and an industrial area. In addition, lichens were sampled directly at the bottom of chimneys within the industrial area. While mercury concentrations in lichens did not correlate with the sampling area, mercury isotope compositions revealed both mass-dependent and mass-independent fractionation (MIF) characteristic of each geographical area. Odd-isotope deficits measured in lichens were smallest in samples close to industries, with Δ199Hg of –0.15 ± 0.03‰, where Hg is thought to originate mainly from direct anthropogenic inputs. Samples from the rural area displayed the largest anomalies, with Δ199Hg of –0.50 ± 0.03‰. Samples from the two other areas had intermediate Δ199Hg values. Mercury isotopic anomalies in lichens were interpreted as resulting from mixing between the atmospheric reservoir and direct anthropogenic sources. Furthermore, the combination of mass-dependent and mass-independent fractionation was used to characterize the different geographical areas and to discriminate the end members (industrial, urban, and local/regional atmospheric pool) involved in the mixing of mercury sources. Figure 10.23 shows the results for the Rural Area (RA), Suburban Area (SA), Urban Area (UA), Industrial Valley (IV), and Industrial sites (I). In these cases, total Hg concentrations varied little between zones. However, the average δ202Hg value of lichens from the IV zone was significantly lighter (–2.07‰) than those measured for the three nonindustrial areas. Furthermore, lichens sampled at the two industrial sites (I) within IV displayed similar δ202Hg values (–1.90‰).
This suggests that the Hg taken up by the lichens in the industrial area came from different emission sources than the Hg found in the urban, suburban, and rural areas. Furthermore, the average Δ199Hg values measured in IV, UA, SA, and RA were significantly different from one area to another, suggesting contributions from different mercury sources. Odd-isotope deficits in lichens are believed to be representative of atmospheric Hg, which is the complementary reservoir of aquatic Hg in terms of isotope fractionation (Carignan et al., 2009). Redox reactions are known to govern mercury concentrations in the atmosphere because fluxes (emissions and deposition) and residence times are largely controlled by Hg speciation. Recent work on aquatic Hg photoreduction suggested that this reaction produces MIF, or non-mass-dependent fractionation (NMF), and that residual aquatic Hg(II) is characterized by positive Δ199Hg and Δ201Hg anomalies. Carignan et al. (2009) showed that atmospheric Hg accumulated in lichens is characterized by NMF with negative Δ199Hg and Δ201Hg values (–0.3 to –1‰), making the atmosphere and the aquatic environment complementary reservoirs with respect to photoreduction and NMF of Hg isotopes. Because few reactions other than aquatic Hg photoreduction induce NMF, photochemical reduction appears to be a key pathway in the global Hg cycle. Carignan et al. (2009) also observed isotopic anomalies in several polluted soils and sediments, suggesting that an important part of the Hg in these samples had been affected by photoreactions and had cycled through the atmosphere before being stored in the geological environment. Thus, mercury isotopic anomalies measured in environmental samples may be used to trace and quantify the contribution of source emissions.
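Estrade et al. combined mass-dependent (δ202Hg) and mass-independent (Δ199Hg) signatures to separate three end members. One common way to formalize such a three-end-member problem, shown here as a minimal sketch and not as the authors' actual procedure, is to solve three linear equations: the fractions sum to one, and the fraction-weighted δ202Hg and Δ199Hg of the end members reproduce the sample values. Linear mixing is assumed, the solver uses Cramer's rule, and the end-member values in the usage section are hypothetical placeholders; only the industrial δ202Hg (–1.90‰) and Δ199Hg (–0.15‰) and the rural Δ199Hg (–0.50‰, treated here as the atmospheric end member) echo values quoted above.

```python
def det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def unmix_three(end_members: list[tuple[float, float]],
                d202_sample: float, cap199_sample: float) -> tuple[float, ...]:
    """Fractions of three end members, each given as (delta202Hg, Delta199Hg) in per mil.

    Solves: sum(f) = 1, sum(f * delta202) = sample delta202,
    sum(f * Delta199) = sample Delta199, using Cramer's rule.
    Fractions outside [0, 1] mean the sample lies outside the mixing triangle.
    """
    a = [[1.0, 1.0, 1.0],
         [em[0] for em in end_members],
         [em[1] for em in end_members]]
    b = [1.0, d202_sample, cap199_sample]
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("end members are collinear; fractions are not identifiable")
    fractions = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column with the sample vector
        fractions.append(det3(m) / d)
    return tuple(fractions)


if __name__ == "__main__":
    # HYPOTHETICAL end members (delta202Hg, Delta199Hg), per mil.
    end_members = {
        "industrial": (-1.90, -0.15),
        "urban": (-1.20, -0.30),        # both values hypothetical
        "atmospheric": (-0.80, -0.50),  # delta202Hg hypothetical
    }
    fracs = unmix_three(list(end_members.values()), d202_sample=-1.14, cap199_sample=-0.37)
    for name, f in zip(end_members, fracs):
        print(f"{name}: {f:.2f}")
```

Two tracers plus the mass balance give exactly three equations, which is why this approach is limited to three end members; adding a fourth source would require another independent tracer.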