Chapter 9. Why Engineering Practices are Important in Scrum

Scrum does not talk about engineering practices. Because of that, you might assume that, like a Jedi master, you are expected to use the Force to start the sprint, commit to the work, and then magically make it appear. You’re not. But Scrum does expect that as the team begins to work in an agile manner, it will soon realize that good engineering practices are essential to becoming a high-performing Scrum team. Engineering practices are the magic, the Force, the pixie dust needed to get things out the door. I do not attempt to define all the engineering practices that you need to implement on your Scrum projects; other books cover that. What I do try to convey in this chapter is why you need engineering practices and how they can help development teams overcome some typical obstacles.

I start with a story about Patrick, a developer by trade who moved into project management at a new company and got a little less than he bargained for.

The Story

When Patrick accepted his new job at Helix, he was excited. Helix had a great reputation and a fabulous product history, and it had long been Patrick’s dream company. Patrick could hardly wait to get started in his new role as ScrumMaster. True, he’d be inheriting a team, but he had been told the team was using Scrum, a framework Patrick knew well: he had used both Scrum and Extreme Programming (XP) as a manager and as a developer. And because the team members had been together for some time, he was sure they’d evolved into a fairly high-performing team.

Patrick was in for a rude awakening.

On his first full day on the job, Patrick sat down with the team members individually to get to know them and see how their processes worked. His first stop was Niall, reputed to be one of the best coders on the team.

“So, Niall,” said Patrick. “I’ve been watching you work for a while. You’re as good a coder as everyone says. I’m surprised, though, that you don’t seem to use test-driven development. Why not?”

“Too much up-front work and too much throwaway code,” Niall responded offhandedly. “It’s kind of a waste of time. We’ve only got six months to get all this out the door,” he said, pointing to the wall full of index cards.

“Sure. TDD might take some extra time. And there is usually extra code, but the design that emerges is much cleaner, and when you encounter issues and errors—” said Patrick before Niall interrupted him.

“We’ve got a debugger and a cross-functional team that includes testers,” said Niall. “We add any bugs we find to the next sprint and solve them as we find them. As for design, we had a lot of design meetings up front, so that’s a nonissue for us.”

“So your issue with TDD is extra time, correct, Niall?” asked Patrick.

“Yes, I don’t have enough time to do it. We need to crank on the code!” exclaimed Niall.

“What about when you are dealing with bugs? How do you know where to look?” asked Patrick.

“I use the debugger to find the issues,” stated Niall.

“What do you consider all the time you spend in the debugger? Isn’t that extra time, too?” challenged Patrick.

“Well, I guess. I’ve never thought about it that way,” Niall admitted.

Patrick nodded. “One more question. I noticed that here you wrote some code to create drop-down boxes. What are the acceptance criteria you’re trying to fulfill with that?”

Niall smiled conspiratorially. “No one has asked for it yet. But once they see how it works, I think they’re really going to like it. I like to give the customers a little something extra. It gets them excited about the final product.”

“Your heart’s in the right place, but it’s the wrong thing to do,” said Patrick. “We have to write just enough code to satisfy the story. Nothing more. Nothing less. We’ve got too much to do to spend time gold-plating the software. And just think of the time it would free up to do that TDD we were talking about. Think about it. We’ll talk more later.”

Patrick moved on to Claire, an experienced team member with a reputation for finishing her work quickly.

“This morning in the daily scrum, you mentioned that Story 1822 was done, right?”

“Right,” Claire replied.

“So what if I asked you to prove it to me? What would you do?” asked Patrick.

“Well, it works. See?” answered Claire, giving Patrick a quick demo.

“Right. But what about all the things I can’t see? Is it checked in yet?”

“No. I’m planning to do that later. I’m demoing it on my own machine.”

“How often do you check in, Claire?”

“I try to check in once a day, but it’s more like two to three times per week.”

“If you check in that infrequently, how do you know that your code isn’t breaking anything else?”

“Ummm. I guess I’ll find out at check-in and deal with it then. It usually isn’t a problem.”

“Do other team members check in two or three times per week also?”

“Yes,” said Claire.

“So right now, and maybe even when we check in (if all the others’ code isn’t checked in yet), we don’t really know if a story is done, do we?” asked Patrick.

“We know it’s written. We know it’s working,” responded Claire.

“I’ve also noticed there is no continuous integration server, and it appears that the team members don’t share a lot of code with each other,” said Patrick.

“We keep meaning to set up a CI server, but we don’t have time or funding for it,” said Claire. “I know we need to set it up, and we need to follow more rigorous engineering practices, but we’re under so much time pressure. With management breathing down our necks, doing the right thing is almost impossible!”

A bit worried by the attitude of the senior team members toward what he considered basic engineering practices like TDD, static code analysis, shared code ownership, and continuous integration, Patrick spent the next three days looking at the backlog and burndown charts and digging around in the code to see what other trends he could find. He looked at the acceptance test framework that the team had implemented and saw that it had not been updated at all during the sprint. He looked at the size of the check-ins, often 1,000 lines of code or more. He noticed that the team tended to leave one or two stories unfinished almost every sprint. He also saw from the burndown that they often had a lot of work in progress until quite late in the sprint. What’s worse, he found nothing related to good coding practices—various parts of the code were written in different styles, there were few comments in the code, and overall it just had a poor structure.

Patrick was shocked by how few basic engineering practices the team had implemented and by the number of bugs that continued to pop up in every sprint. His big concern was how they would release a quality product, on time, without making some serious changes. He thought back to a couple of projects some colleagues had worked on at his last company, projects that had slipped by months because large architectural issues were identified late. He needed the team to understand why these practices were worth the effort. After thinking for some time, he came up with a plan.

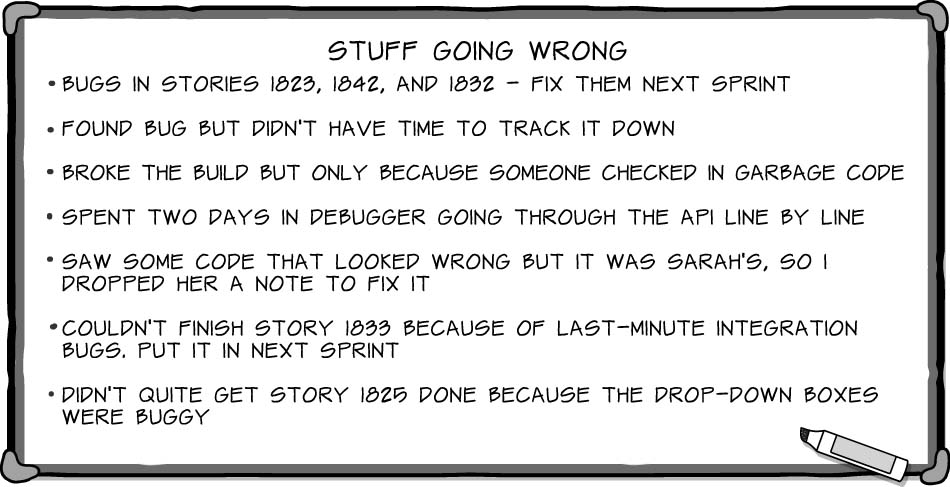

The sprint ended, and after the demo the team gathered in the conference room for the sprint retrospective. While they were filtering in, Patrick quietly taped two big sheets of paper up around the room. The retrospective started. Patrick noticed that the accepted format seemed to be for everyone to take turns talking about what had gone well and what could go better. Perfect, he thought. As they started talking, he got up and began writing down some of what the team was saying on one of the sheets, which he labeled “Stuff Going Wrong.”

When the team had finished talking, Patrick moved to the next sheet of paper. He labeled it “Stuff We Can Do to Fix It.”

“So, team. I’ve talked to each of you individually. I’ve looked through the code. I imagine you have problems like these every sprint, right?”

“Sure,” said Niall. “But we’re going to have problems. There’s no blame here.”

“Not blaming,” said Patrick. “Just wondering. Is there anything we can do about it?”

“Not if we want to keep up this pace,” said Claire.

“That’s interesting. Let’s talk about pace. To me, all these problems,” Patrick paused to point to the sheet full of issues, then continued, “these issues that keep recurring—they are slowing you down the most: bugs, gold-plating, finding poor code but leaving it to fester until ‘someone, someday’ fixes it. We have a deadline to meet and right now, let me tell you, we’re not going to get there. We’ve got to invest some time in getting better so that we can go faster.”

He let the team soak that in for a few seconds.

“So what are we going to invest in?” asked Patrick. Then he sat down and let the silence grow uncomfortable, waiting for the team to respond.

“I see stuff every day I wish I had time to fix,” said one of the team members. “So one thing we could do would be to include some refactoring tasks in each sprint.”

“Good,” said Patrick, standing up to write it on the paper. “What else?”

“Test-driven development,” said Niall, in a low, resigned voice. “I realize it’s the right thing to do, but it’ll slow me down.”

Patrick nodded but wrote it anyway. “Maybe at first. But we’ll more than make up for that by creating fewer bugs and spending less time finding and tracking the ones that do slip through.”

“Continuous integration,” said Claire. “And more frequent check-ins will both really help. That doesn’t take that much time once it’s set up. It’s just not a habit, and we don’t have the tools right now.”

Patrick wrote it. “Yep. Tools are easy when compared to habits. It’s changing the habit that’ll be hard.”

The team went on to mention pair programming.

“And pair programming and TDD will help ensure that we build only what is necessary, which will ultimately increase our velocity as well,” said Patrick. “Clearly, you know what you should be doing. So why aren’t you doing it?”

The team went on to list several obstacles to adopting engineering practices, including lack of knowledge and fear of the unknown. They discussed each objection, with Patrick providing the rationale for at least giving it a try. In the end, they agreed to a new definition of done, which would necessitate immediately implementing certain engineering practices.

The team decided to implement other new practices one at a time in subsequent sprints. In turn, Patrick agreed to discuss their plan with management and explain to them why the team’s velocity might drop for a sprint or two while they invest in practices that would ultimately help them go faster.

“We’ve got a long way to go to get from here to there,” finished Patrick. “But I promise once we start working this way, you won’t want to go back. The first thing I want us all to do is start challenging each other, every day, to prove it. Prove it’s tested, prove it’s not going to break the build, prove it’s done. That’ll get us a long way toward where we want to go.”

The Practices

The challenges in this chapter’s story are not uncommon with teams new to Scrum. It’s easy to get focused on doing Scrum right and never get around to implementing the engineering practices necessary to become a truly high-performing Scrum team. Patrick’s solution was to work with the team members to make visible where they were falling short, to show them how it was slowing them down, and to convince them that investing in better practices would both solve their issues and increase their velocity. Patrick and the team agreed to implement the following key engineering practices:

- Implementing test-driven development (TDD)
- Refactoring
- Continuous integration and more frequent check-ins
- Pair programming
- Automated integration and acceptance tests

All these engineering practices mean more work up front. You will recover the time later through efficiency, improved quality, and code stability. What is often forgotten, however, is that these are not silver bullets. Doing one or doing them all will not guarantee you success, but they will get you farther away from failure if they are done with diligence and discipline.

Implementing Test-Driven Development

Contrary to what the name implies, TDD is not a testing process. It’s a software design technique in which code is developed in short cycles. You can think of the TDD process as Red, Green, Refactor. The first step is to write a new unit test before writing the code that would make it pass. Obviously, when you run that test, it will fail: This is the red state. The next step is to write new code, just enough to make the unit test pass, or turn green. When the test passes, the code is working. The final step, which can occur multiple times, is to refactor. The next section talks more about refactoring, but in TDD it specifically refers to removing any “code smells,” elements that might not be optimized, well designed, or easy to maintain. Design changes can occur during this phase as well, which is why TDD is sometimes called test-driven design. During refactoring, the code is changed, but all the while the tests must stay green, or continue to pass. The cycle then repeats, with new tests and new code, until the story is complete.
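To make the cycle concrete, here is a minimal sketch in Python using the standard `unittest` module. The `slugify` function and the rules its tests encode are hypothetical, chosen only to illustrate the three steps: the tests come first (red), then the simplest code that passes them (green), then a refactoring that keeps every test green.

```python
import unittest


# Step 1 (red): write the tests first. Before slugify exists, running
# them fails. The requirements they encode are hypothetical.
class SlugifyTest(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_drops_punctuation(self):
        self.assertEqual(slugify("Scrum, Done Right!"), "scrum-done-right")

    def test_collapses_repeated_separators(self):
        self.assertEqual(slugify("  many   spaces  "), "many-spaces")


# Step 2 (green): the simplest code that passes. It was first written
# as slugify; it is kept here under another name for comparison.
def slugify_first_pass(text):
    cleaned = ""
    for ch in text.lower():
        if ch.isalnum():
            cleaned += ch
        else:
            cleaned += " "  # any other character becomes a separator
    return "-".join(cleaned.split())


# Step 3 (refactor): same behavior, less code. The tests above must
# still pass after this change.
def slugify(text):
    separated = "".join(ch if ch.isalnum() else " " for ch in text.lower())
    return "-".join(separated.split())
```

Saved as `test_slugify.py`, the file runs with `python -m unittest test_slugify`. The point is not the string handling; it is that the refactoring in step 3 was safe to make only because the tests from step 1 were already in place.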

I’m not going to attempt to teach you TDD; other books do a good job of that, and I’ve listed them in the references with this chapter. What I want you to know is why TDD helps a Scrum team and how you can help your own team reach the conclusion that TDD is the best choice for meeting sprint goals at a high velocity.

Implementing TDD is akin to weaving a net. Each automated test builds on the last, forming a safety net of automated unit tests. Consider the high-wire act at the circus. The person hundreds of feet above the ground, trying to balance on a thin wire, can take the risks he does because he knows that if he falls, the net will save him. A team might choose to walk the high wire without a safety net, but if the team falls, it could take days, maybe weeks, to recover. Yes, more code is written with automated unit tests, and, yes, sometimes there is more throwaway code. In many cases, though, the reduced debugging time means that the total implementation time is actually shorter.

In 2008, researchers from Microsoft, IBM, and North Carolina State University published a paper [NAGGAPAN], looking at the benefit of TDD in teams. They looked at three teams from Microsoft (one of which I happened to be working on at the time) and one team from IBM. They found that all teams showed “significant” drops in defect density¹: 40 percent for the IBM team and between 60 percent and 90 percent for the Microsoft teams. The increased time taken to follow TDD on these projects ranged from 15 percent to 35 percent. This initial decrease in velocity is compensated for by the increased stability and maintainability of the code.

1. Defined in the published [NAGGAPAN] paper as “When software is being developed, a person makes an error that results in a physical fault (or defect) in a software element. When this element is executed, traversal of the fault or defect may put the element (or system) into an erroneous state. When this erroneous state results in an externally visible anomaly, we say that a failure has occurred.”

By following TDD, you will be less reluctant to implement a change that is required by a key stakeholder or customer, even late in the project. Without TDD, these kinds of eleventh-hour changes are considered too risky because the team can’t predict the ramifications of the change (coupled code), and there isn’t time to test all the possible side effects. With a TDD safety net in place, the risk is substantially lessened, so teams feel confident that they can make required changes, no matter when they occur in the project. Good version control practices also help to increase confidence. If a change or a refactoring goes horribly awry or breaks too many tests, you can easily roll back to a known good point.

Anecdotally, in the projects that I’ve run and on teams that I’ve coached, I have found that TDD significantly streamlines the writing of code, helps prevent analysis paralysis, minimizes impediments (like spending lots of time in the debugger), and gives teams the priceless gift of peace of mind. For these reasons, TDD is essential for teams that want to grow to be high-performing Scrum teams. And, remember, the compiler only tells you if your code obeys the rules of the language; your tests prove that your code executes correctly.

Refactoring

Refactoring is the act of enhancing or improving the design of existing code without changing its intent or behavior. The external behavior remains the same, while under the hood, things are more streamlined.
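A small before-and-after sketch shows what “same external behavior, cleaner internals” means in practice. The shipping-cost function, its rates (in cents), and the names below are all hypothetical. The loop at the end plays the role that a team’s unit tests would play in a real codebase: it confirms that the refactored version returns exactly what the original did.

```python
# Before: two near-identical branches (a "duplicated code" smell).
# Rates are in cents and are hypothetical.
def shipping_cost_before(weight_kg, express):
    if express:
        if weight_kg <= 1:
            return 1500
        else:
            return 1500 + (weight_kg - 1) * 400
    else:
        if weight_kg <= 1:
            return 500
        else:
            return 500 + (weight_kg - 1) * 200


# After: the rate data is separated from the calculation, so a new
# service level means a new table entry, not another copied branch.
RATES = {
    True: (1500, 400),   # express: (base cost, cost per extra kg)
    False: (500, 200),   # standard
}


def shipping_cost(weight_kg, express):
    base, per_extra_kg = RATES[express]
    extra_kg = max(weight_kg - 1, 0)
    return base + extra_kg * per_extra_kg


# Behavior check: the refactored function must agree with the original
# on every input we care about before the old version is deleted.
for weight in range(0, 21):
    for express in (True, False):
        assert shipping_cost(weight, express) == shipping_cost_before(weight, express)
```

Nothing a caller can observe has changed; only the structure has. That is the line between refactoring and rewriting.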

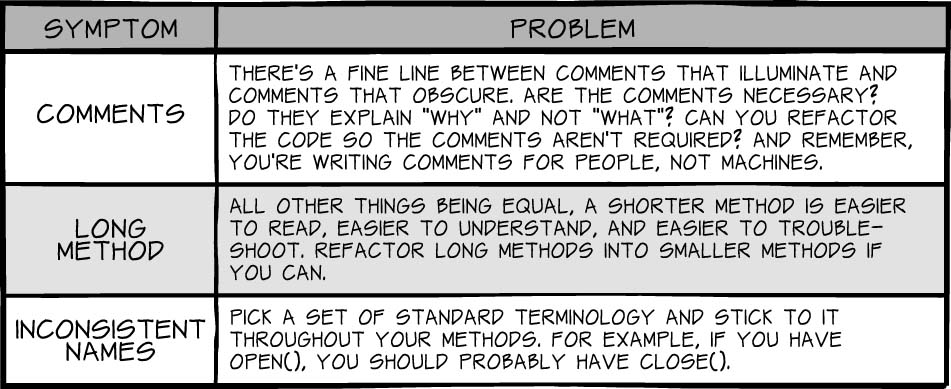

How do you know when you need to refactor your code? When it begins to smell. It’s best to follow the rule of Grandma Beck: “If it stinks, change it” [FOWLER 01]. Her comment was in relation to diapers, but it applies equally well to code. A code smell is a seemingly small problem that is symptomatic of a deeper issue. Jeff Atwood lists some common smells in a 2006 blog post, breaking them out into smells within classes and smells between classes [ATWOOD].

Table 9-1 presents a few of my favorites from Atwood’s list.

These might seem like basic coding standards, yet they are too often left undone.

When should you refactor? Whenever someone notices a smell. Refactoring can be performed at any point in a system’s life cycle: when someone is working on legacy code, sees a piece of code that could be improved, or notices the system acting sluggishly, or when bugs start popping up in a particular section of the code.

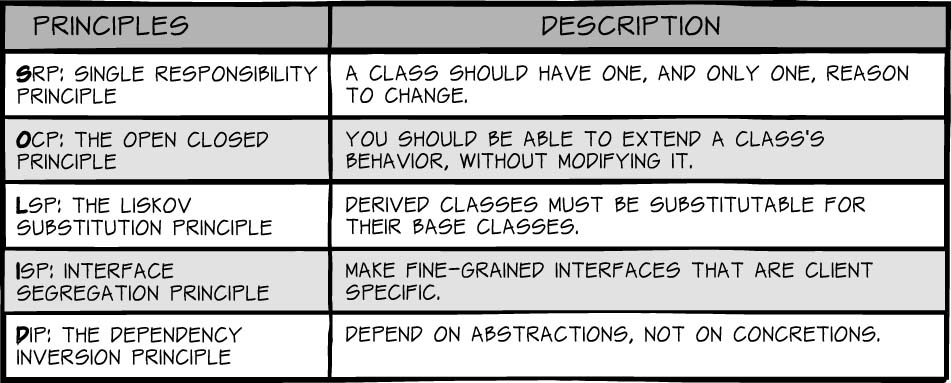

Remember that refactoring code does not mean rewriting the code. Instead, to refactor is to improve the code without changing its intent. Robert Martin [MARTIN] groups the first five principles of class design under the acronym SOLID (see Table 9-2).
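Table 9-2 lists all five principles; the Python sketch below illustrates only one of them, the Dependency Inversion Principle, which is often the target of a refactoring. The class names (`Storage`, `InMemoryStorage`, `ReportService`) are hypothetical. The high-level class depends on an abstraction rather than on a concrete storage detail, so the detail can be swapped (or faked in a unit test) without touching the policy.

```python
from __future__ import annotations

from typing import Protocol


class Storage(Protocol):
    """The abstraction that high-level code depends on."""

    def save(self, name: str, content: str) -> None: ...


class InMemoryStorage:
    """One concrete detail; a file- or cloud-backed class could replace it."""

    def __init__(self) -> None:
        self.files: dict[str, str] = {}

    def save(self, name: str, content: str) -> None:
        self.files[name] = content


class ReportService:
    """High-level policy: knows what to save, not where it ends up."""

    def __init__(self, storage: Storage) -> None:
        self._storage = storage  # injected, never constructed here

    def publish(self, sprint: int, done_stories: list[str]) -> str:
        name = f"sprint-{sprint}.txt"
        self._storage.save(name, "\n".join(done_stories))
        return name
```

For example, `ReportService(InMemoryStorage()).publish(7, ["Story 1822"])` returns `"sprint-7.txt"` and touches no disk, which is also what makes `ReportService` easy to cover with fast unit tests.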

Why should you refactor? Simply put, it’s an investment in your code. An analogy might be doing little things every day to help keep your house clean. If you walk in the house with dirty shoes on your feet on a regular basis, your house will get dirtier faster. If you throw your discarded wrappers on the counter rather than in the garbage can because you’re in a hurry, in a few days you’re going to look up and find a counter full of wrappers that you’ll have to clean. Refactoring is like cleaning up after yourself a little bit each day—sometimes you’ll clean more, sometimes less, but the important thing is that you’re cleaning on a regular basis and not waiting for it to pile up or for someone else to do it.

Continuous Integration and More Frequent Check-Ins

With discipline, continuous integration can take a Scrum team from somewhat good to just plain awesome. Martin Fowler defines continuous integration best [FOWLER 02]:

Continuous Integration is a software development practice where members of a team integrate their work frequently, usually each person integrates at least daily—leading to multiple integrations per day. Each integration is verified by an automated build (including test) to detect integration errors as quickly as possible.

Continuous integration enables us to have quicker feedback loops on the code. Instead of checking in a thousand or more lines at one time, each person or pair might check in a hundred or so lines of code at once. They also create a local build before committing changes to the shared branch. Both the local builds and the main build should be fully automated, meaning the person submitting the code can “click and forget” while the build machine runs a suite of automated acceptance and unit tests. These builds might also run static analysis checks, verify desired unit test coverage, and so forth. Once the change is committed to the branch, the continuous integration server builds the integrated code and runs a larger suite of tests. Because each check-in is small, the feedback loop is short, so it is much easier to see what broke the build and why.
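The local build described above can be as simple as a script that runs each check in order and stops at the first failure. The Python sketch below assumes a project whose tests can be found by `python -m unittest discover`; the step list is hypothetical, and `compileall` (which only catches syntax errors) stands in for a real static-analysis tool. Substitute your team’s own build, test, and analysis commands.

```python
import subprocess
import sys
from typing import List, Optional, Sequence, Tuple

# Hypothetical step list: substitute your team's real build, test, and
# static-analysis commands. compileall only catches syntax errors; it is a
# stand-in for a real static-analysis tool.
DEFAULT_STEPS: List[Tuple[str, List[str]]] = [
    ("unit tests", [sys.executable, "-m", "unittest", "discover"]),
    ("syntax check", [sys.executable, "-m", "compileall", "-q", "."]),
]


def run_gate(steps: Sequence[Tuple[str, Sequence[str]]]) -> Optional[str]:
    """Run each step in order and stop at the first failure.

    Returns the name of the failing step, or None if every step passed.
    """
    for name, command in steps:
        result = subprocess.run(list(command))
        if result.returncode != 0:
            return name
    return None


def main() -> int:
    failed = run_gate(DEFAULT_STEPS)
    if failed is not None:
        print(f"Local build failed at step '{failed}'; fix it before committing.")
        return 1
    print("Local build is green; safe to commit.")
    return 0
```

One way to make this a habit rather than a choice is a Git pre-commit hook that calls `sys.exit(main())`: Git aborts the commit when that hook exits with a non-zero status, so a red local build never reaches the shared branch.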

Why should you care about any of this? Let me associate it with real life.

- Headlights: Why do drivers use headlights at night? Headlights let drivers see where they’re going and let others see them, which reduces the risk of an accident. Continuous integration provides the same benefit: it shines a light down the path of code development so that you can see whether you’re about to hit something. A continuous integration build warns you almost instantly if something is blocking your way.
- Washing machines: What makes them great? They eliminate the repetitive, labor-intensive process of washing clothes by hand. Similarly, continuous integration eliminates all (or most) manual build and test steps. Not only does this free up time and resources, it also helps improve quality. When people do things manually, there is more room for variation; automation ensures that the same test runs the exact same way, every time. Having automation in place lets team members focus on what matters: the system under development.
- GPS: A GPS in your vehicle tells you where you are and where you are going at all times. Continuous integration does the same for the system under development: it shows where the system is now and where it is heading next. This enables the team and the product owner to react in real time. Further, if teams add quality gates or checks such as unit test coverage, they can spot patterns or trends in the code and make corrections in time to alter the system’s course.
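A quality gate of the kind mentioned in the GPS analogy can check both where the code is and which way it is heading. The Python sketch below assumes the coverage percentages come from whatever coverage tool the team already uses; the 80 percent floor and the one-point maximum drop are hypothetical team policies, not standard values.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GateResult:
    passed: bool
    reasons: List[str]


def coverage_gate(current: float, previous: Optional[float],
                  floor: float = 80.0, max_drop: float = 1.0) -> GateResult:
    """Check line coverage (in percent) against a floor and against the last build.

    floor and max_drop are hypothetical team policies, not standard values.
    previous is None for the first build, when there is no trend yet.
    """
    reasons = []
    if current < floor:
        reasons.append(f"coverage {current:.1f}% is below the {floor:.1f}% floor")
    if previous is not None and previous - current > max_drop:
        reasons.append(
            f"coverage dropped {previous - current:.1f} points since the last build"
        )
    return GateResult(passed=not reasons, reasons=reasons)
```

Checking the trend as well as the floor is the design choice that matters here: a build at 82 percent passes the floor, but if the previous build was at 85 percent the gate still flags the slide, giving the team time to correct course before the floor is crossed.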

Scrum maintains that at the end of the sprint, all code should be potentially shippable, yet many teams struggle with what that really means. Continuous integration enables teams to build and release at any time. What does this look like in the real world? It means going into a customer review meeting and pulling the latest bits off the build server and installing them. No manual testing, no human validation, just pure trust in the system. And where does this trust come from? As teams develop good engineering practices, their confidence in their systems grows. TDD gives them a safety net and shows them where their errors are, while their continuous integration strategy allows them to fully understand the impacts of code changes. It is important to keep the build in a green state and not go home with a broken build. When breaks do occur, it is better to roll back any changes at the end of the day, go home in a green state, and fix any issues in the morning.

One of my favorite books on continuous integration is Continuous Integration: Improving Software Quality and Reducing Risk by Paul M. Duvall. As stated before, this is not a book on software design or hardcore engineering; buy Paul’s book and learn just about everything you need to know about how to start doing continuous integration. Alternatively, a paper presented at Agile 2008 by Ade Miller, “One Hundred Days of Continuous Integration,” is also a great read.

Pair Programming

Too often as people shift in and out of projects, the information shifts in and out with them—information ranging from how stories were implemented to why one design path was chosen over another. There is one technique that ensures that everyone on the team knows what the code actually does: pair programming.

Pair programming drives collective code ownership and increases knowledge sharing among team members. At its core, pair programming is two team members working together to accomplish a single task. One person is the driver and the other is the navigator (for more advanced pairing techniques, see Chapter 19, “Keeping People Engaged with Pair Programming”). When the pairs switch on a frequent basis, information passes among all team members, ensuring that everyone is familiar with the entire code base. Pair programming uses conversation to produce code, causing each person in the pair to continually question what the other is doing. Pairs also reinforce the team’s coding standards.

It is important to understand that practicing pair programming does not necessarily mean work takes twice as long. While at the University of Utah, Laurie Williams found that pair programming was 15 percent slower than two independent developers but produced 15 percent fewer bugs [WILLIAMS]. This finding reemphasizes that teams making the investment in more disciplined engineering practices up front go a little slower at first but have a better payback down the road through reduced risk, better visibility, and lower defect rates.

Another benefit of pair programming is the reduction of noise. I define noise to mean phone calls, email, instant messages, unnecessary meetings, and other distractions. It would be rude to be working on a project with someone and check your email or be on a phone call, right? When you’re pairing with someone, you focus on what you are working on with the other person, just because it’s good manners. The side benefit of that focus is that you get more done.

I like to think of pair programming as “real-time code reviews,” meaning the code is reviewed, updated, and managed as it is written. A good pair is constantly reflecting on the work at hand. As a result, there is no need for separate pre-check-in meetings to review what individual team members did. And because fewer bugs are created, fewer bug triage meetings are needed. All this noise reduction optimizes the team’s time.

Pairing in a distributed environment is challenging but possible. Sharing a screen, using webcams, and having a direct line to the person you are pairing with are all requirements. It is obviously easier to pair when you are in the same time zone, so if your team must be distributed, spread it north/south rather than east/west.

To do pair programming well, you’ve got to start with education and ground rules. Let people know that, as with trying anything new, pairing will be uncomfortable and a bit awkward at first. Remind them to keep an open mind and to have courage, knowing that the entire team is going through this together. Encourage them to be willing to try: to give it a real effort and then evaluate the results objectively. If needed, invest in training, and always be sure to establish coding standards.

There are some great papers and books on pair programming. Start with an older book, Pair Programming Illuminated, by Laurie Williams and Robert Kessler. Another good reference is The Art of Agile Development by James Shore. To understand the cost benefits of pairing, read “The Economics of Software Development by Pair Programmers” by Hakan Erdogmus and Laurie Williams.

Automated Integration and Acceptance Tests

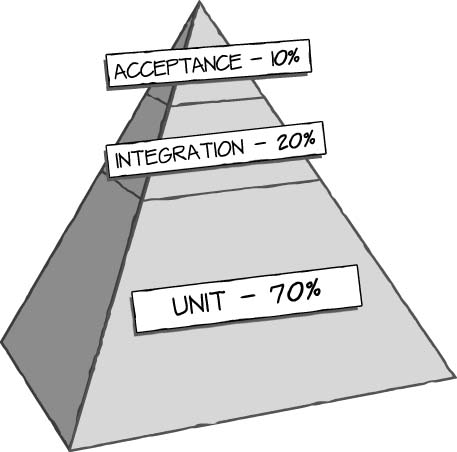

Like unit tests, integration and acceptance tests are a form of automated tests. They fill out the top of the test automation pyramid, with unit tests making up the base layer (see Figure 9-1).

Integration tests are designed to test various integration points in your system. They are designed to ensure that APIs, data formats, and interfaces are all working as expected while the team is developing the code. While automated integration tests are generally easier to build than automated acceptance tests, they still have a high maintenance cost, especially in systems with hundreds or thousands of people working together in a single codebase.
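As an illustration, the Python sketch below tests one common integration point, the boundary between application code and its database, using a real (in-memory) SQLite engine from the standard `sqlite3` module instead of a mock. The `StoryRepository` class and its schema are hypothetical. Because real SQL runs against a real engine, mistakes in the queries or the schema surface here rather than in production.

```python
import sqlite3
import unittest


class StoryRepository:
    """Hypothetical data-access class: the integration point under test."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS stories ("
            " id INTEGER PRIMARY KEY,"
            " title TEXT NOT NULL,"
            " points INTEGER NOT NULL,"
            " done INTEGER NOT NULL DEFAULT 0)"
        )

    def add(self, title: str, points: int) -> int:
        cur = self._conn.execute(
            "INSERT INTO stories (title, points) VALUES (?, ?)", (title, points)
        )
        return cur.lastrowid

    def mark_done(self, story_id: int) -> None:
        self._conn.execute("UPDATE stories SET done = 1 WHERE id = ?", (story_id,))

    def done_points(self) -> int:
        row = self._conn.execute(
            "SELECT COALESCE(SUM(points), 0) FROM stories WHERE done = 1"
        ).fetchone()
        return row[0]


class StoryRepositoryIntegrationTest(unittest.TestCase):
    def setUp(self) -> None:
        # A real SQLite engine, not a mock, so SQL and schema errors surface here.
        self.conn = sqlite3.connect(":memory:")
        self.repo = StoryRepository(self.conn)

    def tearDown(self) -> None:
        self.conn.close()

    def test_only_done_stories_count_toward_points(self) -> None:
        first = self.repo.add("Login page", 5)
        self.repo.add("Drop-down boxes", 3)
        self.repo.mark_done(first)
        self.assertEqual(self.repo.done_points(), 5)

    def test_no_done_stories_means_zero_points(self) -> None:
        self.repo.add("Search", 8)
        self.assertEqual(self.repo.done_points(), 0)
```

An in-memory database keeps the test fast enough to run on every build. Tests against the production database engine or an external service follow the same shape but cost more to maintain, which is the trade-off the paragraph above describes.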

Acceptance tests are designed to emulate the behavior of the user. If you are building a system with a UI, these tests will be scripted to click through it the way a user would. These tests break easily as interfaces change and as customers change their minds. They are also prone to providing false-positive readings, which makes their maintenance cost high, though still lower than the cost of dedicating a team member to checking the same behavior by hand. A variety of tools are designed to let stakeholders and customers write tests and easily interface with the system to ensure the system is working from an end-user perspective.
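Many customer-facing test tools share one idea: stakeholders write examples in a readable table, and the team wires that table to the system. The Python sketch below imitates that style without any third-party tool. The pricing rule, the table format, and `checkout_total` are hypothetical, and the examples drive the system through its public entry point rather than through a UI.

```python
from decimal import Decimal
from typing import List

# Examples a product owner might write, one per row. Hypothetical rule:
# orders of $100 or more get 10% off; members get free $5.00 shipping.
EXAMPLES = """
| subtotal | member | expected total |
| 50.00    | no     | 55.00          |
| 50.00    | yes    | 50.00          |
| 100.00   | no     | 95.00          |
| 120.00   | yes    | 108.00         |
"""

SHIPPING = Decimal("5.00")


def checkout_total(subtotal: Decimal, member: bool) -> Decimal:
    """Hypothetical system entry point that the acceptance table exercises."""
    if subtotal >= Decimal("100"):
        subtotal = subtotal * Decimal("0.90")
    shipping = Decimal("0") if member else SHIPPING
    return (subtotal + shipping).quantize(Decimal("0.01"))


def run_examples(table: str) -> List[str]:
    """Run every data row; return failure messages (an empty list means all passed)."""
    rows = [line.strip().strip("|").split("|") for line in table.strip().splitlines()]
    failures = []
    for cells in rows[1:]:  # rows[0] is the header
        subtotal, member, expected = (cell.strip() for cell in cells)
        actual = checkout_total(Decimal(subtotal), member == "yes")
        if actual != Decimal(expected):
            failures.append(
                f"{subtotal}, member={member}: expected {expected}, got {actual}"
            )
    return failures
```

`Decimal` is used instead of floats so that money comparisons are exact. When a stakeholder adds a row, the test suite grows without anyone writing new test code, which is much of the appeal of this style.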

Even with the added work and maintenance cost, though, automated integration and acceptance tests are one of the best ways to ensure that a Scrum team is building the right thing. First, each allows the team to have a continuous feedback loop, similar to that provided by continuous integration and unit tests, which helps prevent long testing phases at the end of each sprint and each release.

Second, it’s cheaper to fix issues when they occur than it is to fix them a few sprints down the road. Plus, fixing bugs early helps keep the overall codebase clean. While it might initially slow the team down with what can seem to be constant bug fixing, the end result will be a cleaner, more refined codebase over time.

Third, having automated integration tests helps ensure that the external integration points the system interfaces with are working, and continue working, as expected. I often ask teams, “What are your computers doing after you go home at night?” They should be working for the team, identifying weaknesses in the system so that they can be corrected the next morning.

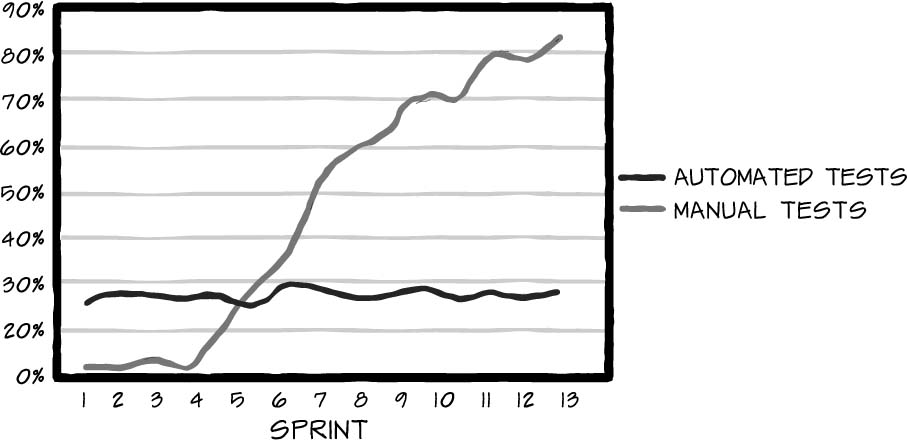

Finally, having automated acceptance tests helps ensure that the UI/interface behaviors of the system work as expected. Acceptance tests should be fired off at every build if possible and should be continually built out over time, just as the unit test “safety net” is built out over time. This means that acceptance tests need to be written before the sprint starts, so that when the product owner goes into the sprint planning meeting, the team knows what it needs to “prove” to the customers and stakeholders from an interface point of view. When you don’t invest in automation, the test debt builds each sprint, and the amount of time spent each sprint on manual testing rises to nearly unmanageable levels, as illustrated in Figure 9-2.
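The arithmetic behind that rising curve can be sketched with a toy model. The assumptions are deliberately simple and hypothetical: each sprint adds features that need a fixed number of hours of regression testing, and everything built so far must be re-tested every sprint unless automated tests cover it.

```python
from typing import List


def manual_test_hours(sprints: int, hours_added_per_sprint: float,
                      automated_fraction: float = 0.0) -> List[float]:
    """Manual regression hours needed in each sprint under a toy model.

    Hypothetical assumptions: every sprint adds features that need
    `hours_added_per_sprint` hours of regression testing, and all features
    built so far are re-tested each sprint except the share covered by
    automation (`automated_fraction`, between 0.0 and 1.0).
    """
    hours = []
    for sprint in range(1, sprints + 1):
        accumulated = sprint * hours_added_per_sprint  # all features to date
        hours.append(accumulated * (1.0 - automated_fraction))
    return hours
```

With no automation, `manual_test_hours(6, 8.0)` gives 8, 16, 24, 32, 40, and 48 hours: the manual burden grows every sprint even though the pace of new work is constant. With 75 percent of the suite automated, the same call with `automated_fraction=0.75` gives 2, 4, 6, 8, 10, and 12 hours. Real test debt rarely grows this neatly, but the direction of the trend is the point.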

Keys to Success

Yes, you can do Scrum without implementing even one of the engineering practices listed in this chapter. And yes, you will find some success. Eventually, however, you will find yourself asking, “Why are we not getting significantly better?” The answer lies in how you actually build your software. If you do Scrum but refuse to change your engineering practices and your mindset, you’ll struggle.

All I am asking is that you think about things differently and understand why you do what you do. Yes, you may have to do some additional work up front (TDD, pairing, setting up continuous integration, creating an automated testing framework) and make a calculated bet that it will pay off later in less rework, simply because you will have fewer bugs and integration issues.

Not a Silver Bullet

I cannot stress this enough: Using Scrum or any of the practices listed in this chapter will not magically enable you to deliver higher-quality software more frequently, nor will it guarantee that what you build is what your customers really want. I can, however, predict that choosing not to use these techniques will likely result in failure. Think of it this way: riding a high-quality road bike won’t guarantee a win at the Tour de France; riding a tricycle, however, will virtually guarantee a loss.

The practices in this chapter are not silver bullets. Instead, they are signposts that help guide you toward your destination: a high-performing team able to respond quickly, and with high quality, to customer requests.

Starting Out

Getting started with engineering practices can seem overwhelming, but it doesn’t have to be. I have seen teams take two different approaches: They start slowly, adding one new practice at a time, or they go full throttle and embrace everything at once. Whichever approach you take, realize that you are going to stumble as you revamp your engineering practices. This is okay; just remember to stay disciplined and focused, and seek help when you need it.

Get the Team to Buy In

In the story, Patrick started with the why right out of the gate. Sure, he could have said, “Do it my way or hit the highway,” but in my experience it works much better when team members understand why they are being asked to change. Patrick helped the team see his point of view, helped them understand what he wanted, and gave them guidance on how to get there and why it mattered. This is essential, because a team member who does not support the effort will sabotage it. Avoid command-and-control; focus on the why.

Definition of Done

In Chapter 7, “How Do You Know You’re Done?,” you will find an exercise on building a done list and working with your team to define what done means. At the end of this chapter’s story, Patrick did a similar exercise with his team. Knowing what it means to be done is like having a set of blueprints; the practices in this chapter are the tools that let the team build. Without understanding what done is, you will be lost.

Build Engineering into Product Backlog

For the team to get traction on the practices listed in this chapter, the product owner must understand the payoff and be willing to make a long-term investment in the team. One way to secure that commitment, and to make the team’s effort to improve its engineering practices visible, is to include engineering-related stories or tasks in the product backlog.

Get Training/Coaching

Don’t expect people to “just do it.” Teach them how. You can do this by hiring a coach, finding someone in your company who has this experience, buying books, or sending people to training classes. If money is an issue, remember that the benefit modern engineering practices bring to the company will far outweigh the up-front financial investment.

Putting It Together

Good engineering practices break work into small pieces, from unit tests to check-ins, so that the time between introducing an issue and fixing it is minimal. Further, the ability to change direction with small batches increases customer confidence. As a result, risk is reduced across the board. The automated unit test suite that the team builds over the life of the project alerts team members when there is a problem and tells them where it is; no more spending “days in the debugger.” Team members also gain better visibility into the code through pairing, as the “I own the code” mentality gives way to collective code ownership. Together, these practices lower defect rates and raise overall quality, giving customers more trust in the team and the team more confidence in its ability to deliver working software every sprint.

Please understand that these practices are not easy. They require dedication, focus, and perseverance. You will naturally be tempted to drop them because of the time each one demands. With TDD, you will likely need training, and you will slow down at first. The same is true of continuous integration: although you can have a server up and running in under an hour, configuring it to suit your needs and the needs of the business takes time. Refactoring and pair programming also take time to get used to. Overall, I find it takes three to six months to go from a traditional way of building software to fully understanding and implementing the engineering practices listed in this chapter.

At the end of the day, the investment you make now will pay huge dividends in the future, giving you stability, quality, and the consistent releases your customers desire.

References

[ATWOOD] Atwood, Jeff. “Code Smells.” Coding Horror. http://www.codinghorror.com/blog/2006/05/code-smells.html (accessed 01/11/2009).

[FOWLER 01] Fowler, Martin, Kent Beck, et al. 1999. Refactoring: Improving the Design of Existing Code. Reading, MA: Addison-Wesley Professional, p. 75.

[FOWLER 02] Fowler, Martin. “Continuous Integration.” martinfowler.com. http://martinfowler.com/articles/continuousIntegration.html (accessed 03/15/2010).

[MARTIN] Martin, Robert C. “The Principles of OOD.” http://butunclebob.com/ArticleS.UncleBob.PrinciplesOfOod (accessed 07/11/2011).

[NAGGAPAN] Nagappan, Nachiappan, E. Michael Maximilien, Thirumalesh Bhat, and Laurie Williams. “Realizing Quality Improvement through Test Driven Development: Results and Experiences of Four Industrial Teams.” Microsoft Research. http://research.microsoft.com/en-us/groups/ese/nagappan_tdd.pdf (accessed 07/11/2011).

[WILLIAMS] Williams, Laurie. “The Collaborative Software Process.” The University of Utah. http://www.cs.utah.edu/~lwilliam/Papers/dissertation.pdf (accessed 01/11/2011).

Works Consulted

Astels, David. 2004. Test-Driven Development: A Practical Guide. Upper Saddle River, NJ: Prentice Hall.

Erdogmus, Hakan, and Laurie Williams. 2003. “The Economics of Software Development by Pair Programmers.” The Engineering Economist 48(4): 283–319.

Shore, James, and Shane Warden. 2008. The Art of Agile Development. Sebastopol, CA: O’Reilly Media.

Williams, Laurie, and Robert R. Kessler. 2003. Pair Programming Illuminated. Boston: Addison-Wesley.