12.

WON’T THE IDEAS SOON RUN OUT?

In 1953, NASA held some planning meetings to select projects for the coming years. To prepare, they asked the Air Force Office of Scientific Research to plot the top speeds of vehicles throughout history, since this could give them an idea of what would be expected of them in the future.

The resulting graph had a logarithmic vertical axis showing fractions of the speed of light, and its horizontal axis ran from 1750 to 2050. It also marked the speed required for a satellite to reach orbit and the speed required to leave the Earth entirely; the latter was 40,320 km/h (about 25,000 mph). The graph started with the Pony Express of 1750, followed by trains, cars, aircraft and rockets. The speeds rose faster and faster, and a curve fitted to the exponentially growing top speeds extended the trend into the future.

When the planning team saw the shape of this curve and how it projected forward, they got a big surprise, because it suggested that mankind was only four years away from being able to send a rocket into orbit. Four years! But NASA had no such plans at all. As it later turned out, however, the Russians did, and four years later, on October 4th, 1957, they put the satellite Sputnik 1 into orbit.

Horror! NASA was behind!

The US now launched a crash programme to catch up. On 6 December of that same year, it attempted to put a satellite into orbit, but the rocket blew up two seconds after ignition. The following year, the Soviet Union made five new attempts to put satellites into orbit, of which one succeeded, while the Americans made 23 attempts and put five satellites into orbit. The US was now taking the lead in the space race, and it put the first people on the Moon in 1969. Two years later, our friend Alan Shepard arrived there too where, to the surprise of many, he pulled out a golf club and three golf balls. His first swing didn’t go well; he buried the ball. The second moved the ball only a metre or so, prompting Mission Control in Houston to comment: “It looks like a slice to me, Al.” But his final shot was clean and, thanks to the low gravity, the ball flew for what was probably more than a minute. The Americans were back on top, and they definitely enjoyed it.

The space race had enormous implications for mankind, as it soon led to the development of communications satellites, which today help us with satellite TV, weather forecasts, telephone communications, GPS navigation, surveillance and much more.

In 1959, something happened on the technology front that was not about millions of kilometres or giant rocket engines, but about technological adventure on a microscopic scale. On December 29, 1959, the future Nobel laureate Richard Feynman gave a talk at the California Institute of Technology, “There’s Plenty of Room at the Bottom”, in which he astonished his audience with wild fantasies about how much information one could, in principle, compress onto a single computing device.169

Considering only the limits set by physics, he said, it might one day be possible to make a physical representation of a unit of data (a “bit”) using as few as 100 atoms. If you did that, he continued, it would also be possible to store the full text of all the books ever published (which he estimated at 24 million titles) on a single computer chip with a diameter of 100,000 nanometres. To put that in perspective, a hair is approximately 50,000 nanometres wide, so what he was really claiming was that you could, in principle, store the contents of all the world’s books in an almost invisible speck of dust only twice as wide as a hair!

At the end of his speech, he offered two prizes of $1,000 each: one for building an electric motor no larger than 0.4 mm in any dimension, and another for shrinking text by a factor of 25,000, the scale at which the entire Encyclopaedia Britannica could be written on the head of a pin.

These tasks were supposed to be very difficult, but the very next year (1960), an electrical engineer claimed the first prize. The second prize, however, took all of 26 years to claim. It finally went to Tom Newman, a student at Stanford University, who used an ingenious electron-beam machine to write the first page of Charles Dickens’ A Tale of Two Cities on the head of a pin.

This was impressive, but how do you read it afterwards? Newman himself struggled with this, as he had great difficulty finding his own text under the microscope; the letters were tiny compared to the pin head. No, it is far more practical to make compact data storage by using transistors.

What is a transistor? In a sense, transistors are akin to the cogwheels in a clock. In a complicated watch, large and small cogwheels mesh with each other, and each pairing embodies a small mathematical rule, such as 60 seconds to the minute or 24 hours to the day. When you write basic software code (“machine code”), you also use simple rules, and the most basic is a conditional statement that says “if ... then ... otherwise ...”. Let’s say you are driving your car and reach a traffic light: if the light is green, then we continue; otherwise we stop. It’s that sort of thing.
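The traffic-light rule can be written as a tiny conditional. This is a minimal Python sketch for illustration only; the function name `at_traffic_light` is hypothetical, and treating every colour other than green as “stop” is a simplifying assumption.

```python
def at_traffic_light(light: str) -> str:
    """Apply the rule: if the light is green, then continue; otherwise stop."""
    if light == "green":
        return "continue"
    else:
        return "stop"  # any other colour (red, amber, ...) means stop in this sketch


for colour in ("green", "red", "amber"):
    print(colour, "->", at_traffic_light(colour))
```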

The transistor concept was first patented in Canada in 1925, but its technical breakthrough came in 1947, when engineers at Bell Labs learned to make transistors by sending electric current through germanium. This was smart because, without an applied electric field, this crystal was a very poor conductor of electricity, but when you switched on such a field, it suddenly became a good conductor. It was, in other words, a “semi-conductor”.

The transistors in a computer chip can nowadays be switched on and off billions of times per second, and the signals within them move at speeds corresponding to travelling around the Equator 7.5 times in a single second. So we have built ultra-tiny machines that run unbelievably fast, and if you interconnect a myriad of such transistors, you have endless creative possibilities. Since this invention was developed, the industry has beaten one record after another. For example, transistor production reached close to 1.4 billion per capita worldwide in 2010.
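The “7.5 times around the Equator” figure can be checked with simple arithmetic. A minimal sketch, assuming the speed meant is the speed of light in a vacuum (about 299,792 km/s) and an equatorial circumference of about 40,075 km; real signals inside chips travel somewhat slower than this, so treat it as an upper bound.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in a vacuum
EQUATOR_KM = 40_075.0                  # approximate equatorial circumference

# How many times light could circle the Equator in one second
laps_per_second = SPEED_OF_LIGHT_KM_PER_S / EQUATOR_KM
print(f"{laps_per_second:.2f} laps of the Equator per second")
```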

The stories about the increasing maximum speeds of human travel and about performance improvements in IT are both stories of exponential growth. In 1965, Gordon Moore, who would later co-found Intel, described how the number of transistors on computer chips had doubled roughly every year between 1958 and 1965. He ventured that this exponential trend could probably be maintained for at least another ten years. Since then, others have dubbed this rule “Moore’s Law”, and its doubling time is now usually quoted as 18 to 24 months.
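To see what a fixed doubling time implies over Moore’s ten-year horizon, a short sketch helps. It simply evaluates 2^(years / doubling time); the function name `growth_factor` is made up for the example, and the two doubling times compared (12 and 18 months) are illustrative inputs rather than a claim about any particular chip data.

```python
def growth_factor(years: float, doubling_time_years: float) -> float:
    """Factor by which a quantity grows if it doubles every doubling_time_years."""
    return 2 ** (years / doubling_time_years)


# A ten-year horizon (e.g. 1965 to 1975) under two different doubling times
for doubling in (1.0, 1.5):
    factor = growth_factor(10, doubling)
    print(f"doubling every {doubling} years -> x{factor:,.0f} in 10 years")
```

The result is very sensitive to the doubling time: stretching it from one year to eighteen months cuts the ten-year gain from about 1,000-fold to about 100-fold.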

How on earth was that achieved? Through good, solid engineering. For example, by making the entire electronic maze ever smaller, so that the distances the electrons had to travel were minimized and more and more transistors could fit on a chip; by optimizing the structure of the maze and even making it three-dimensional; by learning to switch the power on and off faster and faster, thus increasing the so-called “clock rate”; and by making multi-core chips to reduce heat problems.

It’s amazing how long Moore’s Law has held and, since the 1950s, the average performance of chips has grown more than 10,000 billion times.170 In 1996, the US military ran the world’s fastest super-computer, ASCI Red, which cost $55 million, used 800,000 watts and took up approximately 150 square metres (1,600 square feet). Ten years later, in 2006, it was taken out of service because you could achieve the same performance with, for instance, a Sony PlayStation 3, which cost in the region of $500, took up less space than a desktop computer and used 200 watts.

Moore’s observation was based on data going back to 1958, and his original forecast extended only to 1975. However, the American author, serial entrepreneur and computer scientist Ray Kurzweil has calculated that the law has actually been in operation for close to 200 years. As long as this law remains valid, the improvement in performance over any coming 18-24 months will be as great as that achieved in the preceding 200 years combined. However, we know that if the gates in a chip shrink below a width corresponding to approximately 50 hydrogen atoms, electrons can spontaneously jump (tunnel) across them, even when the gates are supposed to be in a shut position. Many experts expect that we will reach this limit around 2020-2025, but further efficiency can then be obtained by connecting many chips and computers into virtual super-computers, and also by introducing other materials and concepts such as quantum and optical computing (using electron spin to represent bits and light to move them).171
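The claim that one more doubling period can match two centuries of progress follows from a general property of doubling: the absolute gain from the next doubling equals the current level, which is exactly one more than the sum of all earlier gains. A minimal sketch, assuming performance starts at 1 unit and doubles once per period (the length of the period does not matter); `levels` is a name invented for the example.

```python
def levels(n_doublings: int) -> list:
    """Performance after 0, 1, ..., n doublings, starting from 1 unit."""
    return [2 ** k for k in range(n_doublings + 1)]


lv = levels(20)

# Gain achieved in each of the 20 earlier doubling periods
past_gains = [later - earlier for earlier, later in zip(lv, lv[1:])]

# Gain from the single next doubling: twice the current level minus the current level
next_gain = 2 * lv[-1] - lv[-1]

print("sum of all earlier gains:", sum(past_gains))  # 2**20 - 1
print("gain from next doubling: ", next_gain)        # 2**20
```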

Rules similar to Moore’s Law apply to most forms of information technology, and Kurzweil, for example, has documented similar laws concerning the prices of chips, annual sales of chips and the performance of super-computers. Oh yes, and also for DNA sequencing speeds, the falling price of DNA sequencing, the total amount of decoded genetic material, the resolution of three-dimensional brain scans and much, much more. It is also evident in the effectiveness of some software: for instance, the scientist Martin Grötschel found that computers’ ability to solve certain computational problems improved 43 million times between 1988 and 2003, even though computer hardware had only become 1,000 times faster during the same period. Better algorithms alone, in other words, accounted for a factor of roughly 43,000.172

In 2009, Kevin Kelly, founding executive editor of Wired Magazine, compiled a list of 22 different variations of Moore’s Law, in which he found historical doubling times ranging from nine months for optical network performance and price reductions, through 12 months for the price of camera pixels, to 36 months for computer clock rates.173 As Kelly noted, it is in the small things that you see these extreme doubling rates. Work at these scales requires much thought, but the results are far easier to exchange than physical products, and they rarely require much energy to replicate.

All of this means that Moore’s Law can be generalized - it applies to information technology in general. Information technologies tend to increase their performance exponentially, with typical doubling times of one to two years.
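Doubling times are easier to compare once converted into growth per year and per decade. A minimal sketch using the three doubling times from Kelly’s list as quoted above; the helper name `growth_over` and the dictionary labels are invented for the example.

```python
def growth_over(months_elapsed: float, doubling_months: float) -> float:
    """Growth factor after months_elapsed, given a doubling time in months."""
    return 2 ** (months_elapsed / doubling_months)


# Doubling times in months, as quoted from Kevin Kelly's list
doubling_times = {
    "optical networks": 9,
    "camera pixels": 12,
    "clock rates": 36,
}

for name, months in doubling_times.items():
    per_year = growth_over(12, months)
    per_decade = growth_over(120, months)
    print(f"{name}: x{per_year:.2f} per year, x{per_decade:,.0f} per decade")
```

Over a decade, a nine-month doubling time yields roughly a 10,000-fold improvement, while a 36-month one yields only about tenfold.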

That’s all very well, but it’s also very much history by now, and this raises an obvious question: for how long can such intense creativity continue? Aren’t we, for instance, closing in on some limits in the laws of physics? After all, when the gates in transistors cannot get any smaller, when the crystals that drive clock speeds cannot vibrate any faster, when speeds of rockets approach the speed of light, what then? There is also another concern: are we simply approaching the bottom of the well of possible ideas?

It is true that the limitations set by the laws of physics are real, but the resource view of innovation is nevertheless quite wrong, for a whole lot of reasons. First, history shows that not only has our innovation grown exponentially, but the doubling time of our knowledge has also become shorter; in other words, the growth process has been hyper-exponential since around 1450. According to some estimates, at the start of the 21st century our knowledge doubled roughly every eight to nine years, a far shorter doubling time than 100, 10,000 or 500,000 years earlier.174 Just look again at how it began:

1,500,000-400,000 BC: Control of fire

700,000 BC: Stone axe

500,000 BC: Tents of skin

400,000 BC: Wooden skewers

250,000 BC: Fine stone cutting

230,000 BC: Funerals

200,000 BC: Knives and rope

100,000 BC: Serrated blades and domestication of wolves

90,000 BC: The needle made of bone

70,000 BC: Art and clothing

60,000 BC: Herbal medicine

50,000 BC: Boats, flutes, bone spearheads

This is roughly exponential, but the doubling time was evidently not eight to nine years, so yes, it has become hyper-exponential.

But why does this happen? Much of the explanation lies in the logic of an expanding creative design space which, as we saw earlier, creates more opportunities for new combinations as it grows. As per our earlier example, if we double the number of existing products in a creative design space from two to four, the number of possible simple re-combinations rises from three to 14. The mechanics of a creative design space’s growth are inherently hyper-exponential.

However, there are other important explanations for the acceleration, and one is very simple: the growing population. The more people who live in a dynamic society, the more people there are to come up with ideas. Today, there are approximately 30,000 times as many people on the planet as there were at the end of the last Ice Age. The effect of having 30,000 times more people is much greater than a factor of 30,000, because today we are much better connected to one another than people were during the Stone Age, and the creative design space at our disposal is vastly bigger.

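Now, one might think that, similarly, ideas need longer to cascade and spread in a much larger population, but the effect is surprisingly small. Good ideas spread exponentially, the same way that viruses do, so every doubling of the population adds only one extra round of doubling to the spread. A population 1,000 times larger therefore needs only about ten extra rounds (since 2 raised to the 10th power is 1,024); starting from a community of about a thousand people, which itself takes about ten rounds to saturate, that means a good idea takes only around twice as long to spread widely. As people grew richer and therefore had more money to travel, communicate and study, the processes just became faster and faster.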
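The “1,000 times larger, only twice as long” argument rests on the logarithm hidden in any doubling process. A minimal sketch, assuming (as a deliberate simplification) that every informed person passes the idea to one new person per round, so the number reached doubles each round and it takes about log2(N) rounds to reach N people; the name `steps_to_reach` is invented for the example.

```python
import math


def steps_to_reach(population: int) -> int:
    """Doubling rounds needed for an idea starting with one person to reach everyone."""
    return math.ceil(math.log2(population))


small = 1_000
large = small * 1_000
print(steps_to_reach(small), steps_to_reach(large))
```

The ratio depends on the starting size: for a community of a thousand, the 1,000-fold larger population doubles the time (10 rounds versus 20), but starting from a million people the same factor only takes it from 20 to 30 rounds.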

The third reason is that emerging markets have switched to market economies. Until 1980, China, the Warsaw Pact countries and many other emerging markets had socialist governments, were largely static and had very little innovation, except perhaps within military and space programmes. Now, almost all of them have become market-driven and are far more creative; China and India alone can massively increase global creativity, as they are clearly ramping up their creativity levels very quickly.

In addition, there are more people who are educated, and, in particular, there are more females in higher education. We also conduct far more win-win transactions and exchanges of ideas than we used to, and we constantly invent new meta-ideas and meta-technologies that facilitate such transactions.

Urbanization plays a major role. An increasing percentage of the world’s population lives in cities, and an analysis from 2007 showed that innovation intensity increases super-linearly with city size.175 To be more specific, if a city’s size increases by 100%, its production will, on average, increase by 115%, while its resource efficiency also improves. The effect of concentrating people in cities is akin to developing a more compact architecture in a semi-conductor chip and, interestingly, the clock frequency in cities is higher than in towns or villages: studies have shown that people in cities walk faster on the street, probably because their lives offer more opportunities.176 And there is more: the denser the cities, the more patents are filed in proportion to the population.177
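Super-linear scaling of this kind is usually described by a power law, Y = c·N^β with β greater than 1, where N is city size and Y is production. A minimal sketch, assuming that power-law form, back-calculates the exponent implied by the figures in the text (production up 115% when size doubles) and the corresponding gain per inhabitant.

```python
import math

# Figures from the text: doubling city size raises production by 115%
size_ratio = 2.0
production_ratio = 2.15

# Exponent beta in the assumed power law Y = c * N**beta
beta = math.log(production_ratio) / math.log(size_ratio)

# Change in production per person when the city doubles in size
per_capita_gain = production_ratio / size_ratio - 1

print(f"implied scaling exponent beta = {beta:.3f}")
print(f"per-capita production rises by {per_capita_gain:.1%}")
```

Any exponent above 1 means each inhabitant becomes more productive as the city grows; on the text’s figures, a doubling raises output per person by about 7.5%.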

So there is an underlying hyper-exponential growth in our overall creativity, and while we do run into the limits of physics in specific technologies all the time (and also will with micro-processors and rocket speeds), something new in other areas keeps coming up, and the typical pattern is this: 1) A new core technology is invented from combinations of previous ones; 2) this creates its own creative design space, where applications pop up; 3) fashion trends enter the picture; 4) the technology or the derived application combines with other technologies to create another new core technology after which the process starts all over again.

Overall, the following looks like a good bet for some simple basic assumptions about creativity in the future:

If something is desirable and doesn’t violate the laws of physics, it will probably be invented and launched commercially. We do not know, in advance, how this will be achieved, but we know that, in a free market economy, people will not stop trying until they have succeeded.

It can happen, even if it sounds like magic. Many new technologies seem “magical” when we first encounter them, just as glass, mirrors, anaesthesia, aeroplanes, computers and television would have appeared incredible the first time they were encountered.

Perhaps the development is already well underway. The mere fact that someone thinks that a technology cannot be implemented probably means that there are others who are thinking about how it could be done.

It often starts later than you think but then grows bigger than you ever imagined. Those who are good at designing a new core technology often overestimate how fast they can get it launched, but underestimate the ultimate size of the market, because they do not envisage the creative design space that will evolve around it.

It is one thing to conclude that, in theory, we should keep inventing ever more amazing things, but quite another to imagine what they may be like. Let’s try for a moment.

We can start with (arguably) the weirdest material ever discovered: graphene. In 2010, Andre Geim and Konstantin Novoselov received the Nobel Prize in Physics for experiments with this odd material, which is a grid of carbon atoms only one atom thick. It is the best heat-conducting material ever discovered and also an excellent electrical conductor, which means that we may use it in semi-conductors. And more than that: it is so strong that if you balanced an elephant on a (very strong) pencil, the point of that pencil would not be able to penetrate this ultra-thin layer of graphene.

There are many other things going on in materials research, but let’s now look at computers. Somewhere between 2020 and 2030, the biggest computers will probably reach the same data capacity as the human brain (at least if they work together in clusters), and we will also have made software that enables them to be intuitive and creative. We gained an early sense of this in a “Jeopardy” competition in 2011. Normally, this television show features three exceptionally intelligent contestants competing to give fast, accurate answers to questions read aloud to them. In this particular event, however, one of the competitors was an IBM computer called Watson, which was the size of a bedroom and able to speak and understand speech. It won the competition and, with it, a million dollars. Watson was not allowed to connect to any external network, but it had stored 200 million pages of information in its memory, including all of Wikipedia, and it was able to read and understand roughly a million books per second. Two years later, IBM made a new version with three times the capacity that was only the size of a regular desktop computer. This version could read and understand three million books per second and was later put to use diagnosing cancer, while its services were also offered online over the web. However, one of the most interesting future applications may be the automated search for scientific hypotheses. In an intriguing experiment, IBM let Watson study 186,879 scientific papers written up until 2003, with the aim of identifying proteins that could trigger another protein, called p53, to curb cancer. Watson suggested a number of candidates and, of the top nine on its list, seven had indeed been found to have that effect after the cut-off date of Watson’s reading list. This strongly suggested that Watson can help predict which areas of research and development will be successful in the future, and it represents a new meta-technology called “automated hypothesis generation”.

Artificial intelligence has disappointed for decades, but many scientists now believe we are close to creative and intuitive computers. For example, in June 2014 a computer programme convinced 33% of a panel of judges at the University of Reading that it was a 13-year-old Ukrainian boy.178

Such progress is the result of faster chips, better programming and a better understanding of how human intuition and creativity work. The latter comes from a number of public or semi-public brain research and simulation projects, including the Swiss Blue Brain Project, the EU’s Human Brain Project, Paul Allen’s Allen Brain Atlas and, since 2013, the American BRAIN Initiative.

IBM’s Watson computer uses so-called “deep learning” technology, which is a form of artificial intelligence, and it is now offered as an open platform. One specific use is “Doctor Watson”, which assists doctors in diagnosing patients’ conditions. This shows that IBM is good at names, but also that human experts will soon be second-guessed by AI-based computer assistants. Perhaps the next will be Sherlock Holmes: a computer that can solve crime mysteries.

When computers begin to think independently and act creatively, they will not only exhibit great ability to answer our questions, they will even ask intelligent questions of each other.

Creative computers will also be able to write newspapers, news reports and books independently, make music and computer animations, design houses and write software for themselves and each other. Websites will be able to design themselves. You will be able to ask computers to explain a scientific problem, and they will instantly read all the science-based analysis on the topic and produce a meta report summarizing the best and most reliable information, all within seconds. Perhaps they will also take over a myriad of control functions for the service industry, such as reading licence plates to check if they match passing cars.

We may also use smarter computers to navigate regulatory mazes for us. Especially in the US, it has already become common for large law firms to use computers to sift through huge numbers of previous trials to find precedents for this and that, a task that would otherwise require legions of paralegals. In the future, we may increasingly be able to test the legality of planned activities by letting computers talk directly to one another. To have acted in “good faith” may then mean, legally, to have trusted a leading legal analysis program.

Computers will also be able to help with school and university tuition. If a pupil has lagged behind in maths or chemistry, the computer programmes may, for example, offer interactive tutoring based on a dialogue, where the computer really understands the student’s problems and addresses them well. For the same reason, it will be very effective to take a higher education course online via interactive computer instruction combined with video broadcasts of lectures at elite universities.

With such computers, we shall enter the robot age. Here, the first and one of the most important breakthroughs will be driverless vehicles. When these materialize at scale, a taxi might be something you call with a click on your smartphone. The phone will tell the taxi where you are and tell you where the taxi currently is. As is already possible in cities such as London through the Uber service, you will not call a specific taxi company, but simply a taxi. The software will then identify the closest taxi and tell you how its previous users have rated it. You will be able to use a filter so that you only use cars that have been rated, for instance, at least four stars out of five. In addition, the smartphone will, of course, also take care of payment for the trip, as it already does on Uber. Because taxis will then no longer require drivers, they will be far cheaper than they are today and, likewise, we will also have driverless ships, aircraft, tractors and other vehicles.
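The matching step described above (apply the rating filter, then pick the nearest car) can be sketched in a few lines. This is a hypothetical illustration, not how Uber or any real service works: the `Taxi` class, the `nearest_taxi` function and the sample fleet are invented for the example, and straight-line distance on a flat grid stands in for the road travel times a real dispatcher would use.

```python
import math
from dataclasses import dataclass


@dataclass
class Taxi:
    taxi_id: str
    x_km: float    # position on a simple flat city grid (hypothetical)
    y_km: float
    rating: float  # average rating from previous riders, 1-5 stars


def nearest_taxi(taxis, rider_x, rider_y, min_rating=4.0):
    """Return the closest taxi rated at least min_rating, or None if none qualifies."""
    eligible = [t for t in taxis if t.rating >= min_rating]
    if not eligible:
        return None
    # Straight-line distance from the rider to each eligible taxi
    return min(eligible, key=lambda t: math.hypot(t.x_km - rider_x, t.y_km - rider_y))


fleet = [
    Taxi("A", 0.5, 0.2, 3.6),  # closest, but below the four-star filter
    Taxi("B", 1.0, 1.5, 4.7),
    Taxi("C", 3.0, 0.0, 4.2),
]
choice = nearest_taxi(fleet, 0.0, 0.0)
print(choice.taxi_id)
```

Filtering before choosing the nearest car means a rider with a four-star threshold gets taxi B, even though the lower-rated taxi A is closer.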

In addition to driverless vehicles, we will have robots that have what is known as “telepresence”, which means that the owner can move them around by remote control while using their sensors to see and hear what goes on around them. An early example is the so-called “Double Robot”, which is essentially a remote-controlled iPad on a Segway. Such robots can represent an expert doctor visiting patients at a hospital, or help us monitor our homes while we are away, shop for us in retail shops, help in factories and with cleaning and cooking. There may also be telepresence robots in spacecraft, or they will be used to clean sewers, fight in wars and do other things that are impractical, dangerous, unpleasant or just physically remote.

Other robots will be autonomous and may, in certain cases, carry on by themselves with tasks that their owners first taught them via telepresence. In fact, parts of society may become so automated that robots could “live” on even if all people disappeared (do we have a disaster movie theme here?).

Some of these robots will be pre-programmed to solve specific tasks, while others will be adaptable and able to learn anything from experience. At the same time, there will be application (app) stores for robots, where you can download hundreds of thousands of software applications for them, such as the ability to monitor a carpark for thieves and vandals, clear weeds in the garden, wash dishes, serve cocktails, or do whatever else is required.

There will also be endless physical robotic gadgets, such as custom-built components for specific purposes. It will be possible to buy their designs or find them free online, so you can print them out at home or in a corner shop with a 3D printer. Eventually, robots will take over so many tasks that people will laugh at earlier predictions of labour shortages due to falling birth rates.

In the future we will have transparent mobile phones and smart glasses that can annotate anything in the world around us with explanations. If you look at a bottle of wine, you will immediately receive reviews and a narration about the manufacturer. Focus on some food, and you will receive nutritional content information and a calorie count. View a building, and you will be told its history. With smart glasses, you may see simulations of potential changes to a building, as you walk through it. You will see how it would appear with different wallpaper, mirrors or other features. Take a walk down a street in a big city and small arrows will point out where there are companies seeking labour, or where to buy milk. Listen to someone speaking a foreign language, and you will see subtitles in your own language.

There will also be an explosion in so-called M2M (machine-to-machine) communication, whereby all sorts of things will be online and talking to one another over the internet: the “internet of things”. In parallel, there will be ever more analytical use of “data exhaust”, the data generated as a by-product of everyday activities, which can be collected for statistical analysis. For instance, a study of how mobile phones connect to transmitters along highways will tell computers where there is traffic congestion, and studies of internet search terms can be highly efficient predictors of fluctuations in local house prices or in sales of specific cars. Impressive tools for such analysis, such as the R language and Amazon Web Services, are already available.

Additionally, you will have access to the Semantic Web which, instead of answering a question with links to relevant pages, simply gives you the direct answer, as IBM’s Watson already can. Try typing “How high is the Eiffel Tower?” into Google and see what happens: we are already moving towards the “magic mirror on the wall” from the fairy tale Snow White and the Seven Dwarfs.

In parallel with the IT revolution, we are now in the early stages of a revolution in biotechnology, which will also have huge impact.

Sequencing the first full human genome cost about $3 billion and took from 1990 to 2003. Ten years after that project ended, in 2013, the cost had dropped to about $1,000, and the time required had simultaneously dropped from 12 years (or, by 1990 estimates, many hundreds of years) to a single day; scientists are working on methods that might take as little as two hours or even 20 minutes (Ion Torrent sequencing is one example).
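The pace of that price drop can be expressed as a halving time. A rough sketch using only the figures in the text; note that the $3 billion covered the entire research project rather than a single sequencing run, so comparing it with a later per-genome price overstates the pace somewhat.

```python
import math

# Figures from the text
cost_start = 3e9  # US dollars, first human genome (project completed 2003)
cost_end = 1_000  # US dollars, 2013
years = 10        # 2003 -> 2013

# Number of times the cost halved, and the average time per halving
halvings = math.log2(cost_start / cost_end)
halving_time_months = years * 12 / halvings

print(f"{halvings:.1f} halvings, one roughly every {halving_time_months:.1f} months")
```

On these figures the cost halved about every five to six months, faster than the 18-24 month doubling usually quoted for chips.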

Genetic engineering and microbiology can, of course, be used for medical purposes. One of the main approaches is to do what the insulin industry does today: you insert a gene into a micro-organism, plant or animal, enabling it to secrete a desired compound such as a medicine or even aircraft fuel.

Virtually all medications cause side-effects in a small minority of users but, with low-cost genetic testing, it will become easier to determine in advance which drugs best serve a person. Medication can also come in the form of nanoparticles that can adhere only to specific cell types by latching on to a protein that only this cell type has on its surface. They will thereby act as “smart bombs” rather than the “carpet bombs” that almost all medicine resembles today. One of the goals is to replace standard chemotherapy with smart bombs that cause far less discomfort and collateral damage.

Another significant area is vaccine development. When new infectious diseases break out, such as the annual strains of influenza, we aim to develop an effective vaccine as soon as possible, but this can currently still take two to three months. With computer analysis and synthetic production processes, this may be reduced to 24 hours, a technique that could protect us against a future outbreak of a devastating infectious disease such as the Spanish flu of the last century or Ebola in this one.

In addition to flu, scientists hope in future to be able to vaccinate against malaria, asthma, food allergies, multiple sclerosis, leukaemia, arthritis, high blood pressure and even alcohol and drug abuse. Furthermore, one could produce injections of virus-containing plasmids, which are small, separate DNA molecules. These could compensate for individual DNA errors in a way similar to how your software downloads a “patch” from the internet.

One of the most interesting opportunities is this: you make a genetic analysis of a person to determine which forms of cancer they are at highest risk of developing in their lifetime. You then develop a vaccine against these forms of cancer, after which the person’s own body will immediately attack the cancer, should it ever appear. The only way you might ever know you had had cancer would be if a blood test revealed a spike in your cancer-specific antibodies.

Stem cell technology will also be revolutionary. These are cells that have not yet taken on any particular function in the body, so they can be used as building blocks almost anywhere. With these cells you can, in laboratories, grow copies of people’s skin, blood, blood vessels, heart valves, bones, noses, veins, and much more by using their own stem cells. First, you may make an ultrasound scan of the relevant body part, then you use a 3D printer, directed by a computer, to build a porous mould wherein the artificial part will be cultivated out of stem cells. Note, by the way, how this illustrates the effect of an expanding creative design space: we combine 1) genetic analysis, 2) ultrasound scanning, 3) computing and 4) 3D printing to create a new technology.

Other interesting areas include bio-engineering of modified yeasts, algae and bacteria to make new foods, pharmaceuticals, fibre materials and chemical agents; or to extract raw materials; or to combat pollution caused by oil spills at sea via oil-eating bacteria.

Once we reach and pass the middle of our aforementioned chessboard (from the anecdote about the emperor and the artist), we may have developed bio-computation software accurate enough that we can fairly easily computer-design simple new life forms with desired properties. This would require computer simulation of whole-cell behaviour, including the DNA, proteins, organelles and the totality of it all. Proteins, it should be said, are long and often extremely complex chains that fold themselves into three-dimensional, spaghetti-like shapes, where both their molecular structure and their 3D folding patterns determine function. Such programmes will simulate millions of biochemical combinations to find opportunities and explain problems, and one of the first out of the gate is the OpenWorm project, which, in 2014, raised money via the internet to simulate all 959 cells of the worm C. elegans.

In 2012, genetic engineer Craig Venter expressed his expectations for bio-computation in an interview in Wired Magazine:

“What’s needed is an automated way to discover what they do. And then we can actually make substitutions starting with the digital world and converting that into these analogue DNA molecules, then transplant them automatically and get cells out. It’s a matter of scoring the cells based on knowing what the input information is, to work out what that gene does, what impact it has. Do you get a living cell or not? I think we can make a robot that learns 10,000 times faster than a scientist can. And then all bets are off on the rate of new discovery.”179

A robot that learns 10,000 times faster? That may sound absurd, but when we consider that DNA sequencing speeds have improved by roughly that magnitude within 25 years, why should it not be feasible? And if we really do speed up biochemical and genetic discovery like that, we will have yet another phase shift in the development of our creative design space – our hyper-exponentiality gets even more hyper.
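The sequencing comparison can be made concrete with a little arithmetic. The sketch below assumes perfectly smooth compound growth (real progress came in uneven jumps) and uses hypothetical helper names; it converts “roughly 10,000-fold in 25 years” into an equivalent steady yearly multiplier and doubling time.

```python
import math


def implied_annual_growth(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def doubling_time(annual_factor: float) -> float:
    """Years needed to double at a constant yearly multiplier."""
    return math.log(2) / math.log(annual_factor)


# Assumption: the text's "roughly 10,000-fold within 25 years" for DNA
# sequencing, treated as perfectly smooth compound growth.
growth = implied_annual_growth(10_000, 25)
years_to_double = doubling_time(growth)

print(f"yearly multiplier: {growth:.3f}")             # 1.445
print(f"doubling time: {years_to_double:.2f} years")  # 1.88
```

In other words, a speed-up of that size corresponds to improving by roughly 45% a year, or doubling about every two years, sustained for a quarter of a century.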

Such prospects have led some to speak of a future “singularity” - a point in time beyond which development is so fast-paced that we can no longer make forecasts about the future. However, perhaps a better way of looking at it is that we have already passed through many such singularities, including the invention of trade, farming, the electronic computer and the internet.

These were all wild technologies, but it can get even wilder. Let’s go crazy for a moment and imagine really weird stuff that might happen. We can start by taking a deep breath and then asking ourselves: “What if we really begin to change our own genes?” As we have already seen, this is actually not as strange as it may sound, because we have already, inadvertently, done this ever since we started civilization. But the process can accelerate dramatically if we start doing it consciously.

The first steps towards genetic manipulation of people could be the cloning of beloved dogs and cats. In fact, this has already begun; in 2004, the company Genetic Savings & Clone cloned a cat for a woman in Texas, USA180 and, in 2007, three clones were made of a dead dog as part of the Missyplicity project.181 The next step could be to genetically engineer pets so they live longer. Scientists have actually already extended life-spans dramatically in some animals. One way of doing this is to extend the telomeres. These are non-coding stretches of DNA that sit at the ends of each chromosome. Each time a cell divides, a piece of the telomeres breaks off, until there is nothing left. After that, the cell will lose active DNA each time it divides, which creates trouble. Extending the telomeres would postpone this problem, possibly by a lot, and you can do it by modifying the DNA of the zygote (the very first cell of an organism). Experiments with mice have thus extended their life expectancy by 24%. Other successful attempts at life extension have, for example, incorporated genetic material from blueberries into an insect’s DNA to produce a steady flow of life-prolonging antioxidants that slow its ageing process.183

Genetic self-modification could involve people asking to have a number of eggs and sperm screened for about 4,000 different genetic diseases prior to in vitro fertilization (IVF) so they can choose those of the highest quality. At a later stage, people would be able to ask for screening of some of their embryos to select those with the most attractive properties. For instance, perfect pitch is the rare ability to identify or re-create a given musical note without the benefit of a reference tone. It turns out that the position of a single atom on an individual’s DNA string decides whether or not they have it.

The highest IQ measured to date was that of a Korean boy, Kim Ung-Yong, who could read German, Japanese, Korean and English when he was two years old. He began studying physics at university when he was aged three. Twelve years later, he completed a postgraduate degree in physics in the US, having previously worked at NASA. His IQ was measured at 210.

So what if people decide to modify their unborn children’s genes to make them as smart as Kim Ung-Yong, but perhaps also healthier, happier and more beautiful for good measure, or to enable them to live much longer than normal? Much of this is already starting to work at laboratory-level, but is there a potential market for it? “No!” many will cry, “We will never do any such thing. We don’t modify people artificially.”

But we already do. People are already having fillings in their teeth, contact lenses, artificial knees, hips and heart valves, heart transplants, plastic surgery, Botulinum toxin facial treatments, pacemakers, hearing aids, artificial corneas and liposuction. In 2007, it was possible, for the first time, to cultivate an artificial bladder and, in 2009, an artificial windpipe; since then, our ability to grow body parts has gone exponential. We already perform amniocentesis to screen for defective genes and, to date, we have created more than half a million test-tube babies - two technologies which sparked outrage when they were introduced, just like contraceptive pills. We have also, after initial difficulties and some accidents, learned how to cure some genetic diseases by infecting the patients with viruses containing corrected versions of the defective genes.184

If genetic manipulation of embryonic cells occurs, it may well start in Asia. In 1993, Darryl Macer, from the Eubios Ethics Institute in Japan, found that a large majority of Asian people are in favour of using genetic manipulation and genetic screening to improve children’s physical and mental abilities.185

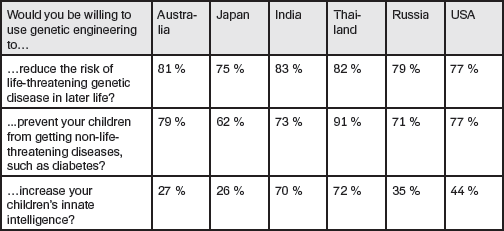

SHARE OF POPULATION IN DIFFERENT COUNTRIES THAT ARE IN FAVOUR OF USING GENETIC MANIPULATION OF UNBORN CHILDREN FOR VARIOUS PURPOSES.

Even a very simple strategy of screening ten embryos in each case and choosing the one with the best potential for high intelligence is estimated to be able to raise each generation’s average IQ by five points. Within a 100-year period, that would multiply the proportion of individuals with an IQ of 145 or more by a factor of 40. In mice, scientists have already managed to improve memory significantly by providing them with an extra copy of the gene NR2B.186
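A rough check on the factor of 40 is possible with the normal distribution. The sketch below rests on assumptions that are not in the source: IQ is normally distributed with mean 100 and standard deviation 15, a generation lasts 25 years (so a century holds four generations), every generation gains exactly five points on average, and the spread of scores does not change. The helper name is hypothetical.

```python
import math


def share_above(threshold: float, mean: float, sd: float = 15.0) -> float:
    """Fraction of a normal distribution N(mean, sd) lying above `threshold`."""
    z = (threshold - mean) / sd
    return 0.5 * math.erfc(z / math.sqrt(2))


# Assumptions (not from the source): IQ ~ N(100, 15), 25-year generations,
# a 5-point gain in the mean per generation, and an unchanged spread.
GAIN_PER_GENERATION = 5.0
GENERATIONS_PER_CENTURY = 100 / 25  # four generations
new_mean = 100 + GAIN_PER_GENERATION * GENERATIONS_PER_CENTURY  # 120.0

before = share_above(145, 100)      # roughly 0.135% of the population
after = share_above(145, new_mean)  # roughly 4.8% of the population
ratio = after / before

print(f"share with IQ 145+ before: {before:.3%}")
print(f"share with IQ 145+ after:  {after:.2%}")
print(f"multiplier over a century: {ratio:.0f}x")
```

Under these assumptions the multiplier comes out at about 35, the same order of magnitude as the factor of 40 quoted above. A slightly shorter generation length or a slightly larger gain per generation would push it towards 40 or beyond, because the far tail of a normal distribution is very sensitive to shifts in the mean.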

Could it be that the next epic power struggle of humanity will not be traditional wars, but brain wars? In early 2013, China’s leading gene research institute had approximately 4,000 employees and was beginning to decode and analyze the complete genomes of 1,000 people with extremely high intelligence to find out why they were so bright. One of the gene variants that has been found to matter is called KL-VS, and it is associated with an IQ increase of 6 points. In a random sample of 220 people, approximately 20% were shown to have this gene variant.187

We can now imagine that, one day, we hear about rich men without heirs who name a clone of themselves as the beneficiary in their will. Or we may hear of parents who lose a beloved child and have it revived (sort of) in the form of a clone. Or there may be people who recreate famous dead people or even Neanderthals. In fact, genetics professor George Church of Harvard Medical School has stated that he believes the latter could be done today for 30 million dollars. Incidentally, the recreation of an extinct species is called de-extinction, and this is definitely on its way, as we shall see later. It won’t be Jurassic Park, but expect to see extinct species return.

What about causing deliberate extinction? We have, so far, managed to eradicate smallpox and rinderpest and are close to eliminating polio, guinea worm and yaws as well, and this has been done with combinations of isolation, vaccines and environmental improvements. Next in line may be rubella, hookworm, measles, river blindness and Creutzfeldt–Jakob disease (whose variant form is linked to mad cow disease). While some of this may be achievable by traditional means, scientists have proposed using genetic engineering to eliminate some of the more troublesome diseases, such as malaria. For instance, biologist Olivia Judson has proposed introducing knockout genes to eliminate 30 mosquito species, as follows:

“…put itself into the middle of an essential gene and thereby render it useless, creating what geneticists call a ‘knockout’. If the knockout is recessive (with one copy of it you’re alive and well, but with two you’re dead), it could spread through, and then extinguish, a species in fewer than 20 generations.”189

The 30 species she had in mind constitute approximately 1% of the world’s 2,500 mosquito species, and their disappearance would eliminate dengue and yellow fever as well as malaria. It would take in the region of 10 years to eradicate these mosquitoes after the release of the gene. In 2014, scientists at Imperial College London announced that they had developed a genetic modification that makes malaria-carrying mosquitoes produce 95% male offspring, which means the population will crash over approximately six generations.190 An alternative approach, suggested by the Gates Foundation, is to make the mosquitoes carrying dengue fever immune to the viruses causing it, which may be done by infecting them with the bacterium Wolbachia.191

Each of us is entitled to our own opinions about all the possibilities described above, but the key point is that much, if not all, of it will probably happen, and some of it is already happening at laboratory-level.

If we take the helicopter view for a moment, we can say that there are two compelling, but different, ways of describing our technological development. The first is to see it as a tale of human creativity, and this is the more entertaining – at least you can include the great anecdotes.

The other is to see it as a self-organizing creative design space, which means that it follows a roughly pre-determined path. Why? Because each technology or combination of technologies will, almost inevitably, lead to the next, according to a simple logic of combinations in a creative design space.

An example: Ernest Rutherford developed ideas about atomic structure, and Max Planck developed quantum theory. It was therefore only a matter of time before someone (it was Niels Bohr) combined their thoughts into a single atomic theory. Or another example: someone invented the steam engine and others the stagecoach. Ergo, it was inevitable that someone else would combine the two into a car or a train, because it was an adjacent possibility at the edge of the creative design space.

When you think about it, it has to be like that – our scientific and technological development is like a self-organizing creative design space that must inevitably expand in a roughly pre-determined sequence. Therefore, the same products will often be invented at approximately the same time by different people, which is why patent offices often receive almost identical patent applications within a few months of each other or even on the same day. For example, Alexander Graham Bell filed a patent application for the telephone on February 14, 1876, exactly the same day that Elisha Gray filed a patent caveat for a telephone. In fact, Ian Morris explained in his book Why the West Rules – for Now that 15 out of humanity’s first 20 major inventions arose independently, and in the same sequence, in the West and the East, only about 2,000 years apart.

When people think we are approaching the end of innovation, and thus of growth, it may be because something basic in our psychology causes us to believe that development has reached its end point right here and now. Of course, we may know from history about the endless, and even accelerating, stream of inventions that came before us, but we can’t imagine how this should continue. We fail to see that it is a basic trait of inventions that we normally do not know about them in advance. Could people in, say, the year 1900 have imagined genetic manipulation, when DNA’s chemical components were only (and quite wrongly) proposed in 1919? Or could they have thought of nuclear power, when Niels Bohr’s atomic theory was first put forward only in 1913 and when Einstein, as late as 1932, stated that: “There is not the slightest indication that nuclear energy will ever be obtainable. It would mean that the atom would have to be shattered at will.”

Not only have we often been very reluctant to accept new technologies, we are also frequently very sceptical about how much more can be learned and discovered. In Roman times, in the first century AD, the eminent engineer Sextus Julius Frontinus said: “Inventions reached their limit long ago, and I see no hope for further development.” And so it has been ever since.

“What? You would make a ship sail against the wind and currents by lighting a bonfire under its deck? I ask you to excuse me. I do not have time to listen to such nonsense.”

So said Napoleon Bonaparte of Robert Fulton’s idea of a steamship in 1803. (That was not a year of good decisions for him: he also sold a third of what is now the US to the US government for 68 million francs, equivalent to less than $300 million in today’s money. He wanted the money to rule Haiti, which he found more promising.)

And what about this one: “Rail travel at high speed is not possible, because passengers, unable to breathe, would die of asphyxia.” This was said by Professor Dionysius Lardner in 1830.

“It’s an empty dream to imagine that the cars will take the place of railways for long trips.” This was the pronouncement, in 1913, by the American Railroad Congress (who else?). In 1837, medical professor Alfred Velpeau said: “The elimination of pain during surgery is a figment of the imagination. It is absurd to apply it today.” It was anaesthetics he had in mind.

One can go on and on. When, in 1859, Edwin L. Drake tried to recruit workers to drill oil, he had a very hard time finding someone willing to do it. “Drill for oil?” they asked. “You mean drill into the ground to try and find oil? You’re crazy.”

The aeroplane was obviously no exception to this rule. Today, there are more than half a million people flying in planes at any given time but, in 1903, The Times wrote that it was a waste of time to try to develop flying machines. This was poor timing, because just a few weeks later the Wright brothers managed to make a machine fly. When Boeing later introduced its 247, one of its engineers said: “There will never be a bigger plane built.” It carried 10 passengers. Ferdinand Foch of the École Supérieure de Guerre, for his part, said that “aircraft are interesting toys but of no military value.” That was before the Battle of Britain in 1940.

In 1920, the New York Times reported that it would be a physical impossibility for a rocket to work in the vacuum of space, as its exhaust would have nothing to push against. When, 49 years later, Americans were about to land on the Moon, the paper printed the following humorous correction: “It is now definitely established that a rocket can function in a vacuum as well as in an atmosphere. The New York Times regrets the error.”

Our lack of imagination does not only apply to what is technically possible, but also what has commercial potential, and the reason for this is that we do not foresee that each new core innovation, which in itself may seem rather useless, will stimulate its own creative design space of applications, which will later be followed by increasing fashion shifts. When David Sarnoff tried to encourage his colleagues to invest in the newly-invented radio, they replied: “The wireless music box has no imaginable commercial value. Who would pay for a message sent to no one in particular?” Yes, who indeed, if there are no applications for it?

The same scepticism is echoed in the earlier history of technology. In 1878, Erasmus Wilson from Oxford University said the following about electric power: “When the Paris Exhibition closes, electric light will close with it and no more will be heard of it.”

The lack of imagination seems particularly prevalent when people comment on their own field. In 1995, British Telecom futurologist Ian Pearson made a speech in which he predicted that IBM’s Deep Blue computer would, within a few years, beat reigning world champion Garry Kasparov at chess. After the lecture, a member of the audience came up to him and said that he was sure the Deep Blue chess programme could never beat Kasparov, as he himself had written that programme and knew its limitations. Just 18 months later, it happened. Astronomy has also been the subject of our scepticism. In 1888, astronomer Simon Newcomb stated that: “We are probably nearing the limit of all we can know about astronomy.”

However, some scientists and engineers do gradually learn from their experiences. When John von Neumann, co-inventor of the electronic computer, commented in 1949 on this invention’s future, he left the door ajar for future surprises:

“It would appear that we have reached the limits of what it is possible to achieve with computer technology, although one should be careful with such statements, as they tend to sound pretty silly in five years.”

Yes, you may say that, and the reason is that ideas are not finite resources, but rather parts of an expanding, self-organizing creative design space, which feeds on itself without end. Unless, of course, we decide to halt it, as the Ottomans and the Chinese did.