Chapter 5. Research

Challenge your assumptions; understand people and context.

Expert comments by:

Anke Helmbrecht | Geke van Dijk | Jürgen Tanghe | Maik Medzich | Mauricio Manhães | Phillippa Rose | Simon Clatworthy

5.1 The process of service design research

5.2 Methods of data collection


Move Beyond Assumptions

In service design, research is used to understand people, their motivations, and their behavior. Usually research is one of the first activities in a service design project, but it’s not uncommon for ideation, prototyping, or implementation to send a team back to research activities when new questions arise. Design research enables a team to:

→ Empathize with the people they design for and build up a genuine understanding of their practices and routines.

→ Immerse themselves in an unfamiliar area or subject and learn about the specific context they will be working in – which might be quite technical and specialized.

→ Step away from established routines and assumptions, looking at a certain topic with fresh eyes.

Mostly, researchers strive to find out how customers experience a specific physical or digital product, service, or brand (customer experience). Furthermore, research is used to study the experiences and behavior of different employees (employee experience) as well as other involved stakeholders. More generally, it can be used to reveal the ecosystem in which a certain theme, service, good, or product is embedded, including other players, places, artifacts, processes, platforms, and stakeholders, and to see how they are connected.

Comment


“We view the research phase as a way to understand the world in the same way that the customer or employee does. If you feel that you do this, then you have a platform that makes your ideas and concepts better and more relevant.”

— Simon Clatworthy

Research is crucial in service design, as it helps a design team to move beyond assumptions. It spans a continuum from simple research that inspires a design team to solid data that can support valid discoveries. Research can be divided into quantitative methods and qualitative methods. Both types are useful in service design. Quantitative research is often a good way to gain insights into the “what” and “how” of an experience, while qualitative research provides insights into the “why” – people’s motivations and needs. Research can also take various forms at different stages within a service design project. Initial research might identify user needs and discover experience gaps and other problems. Later, research can test prototypes, validate implemented solutions, and assist ideation (by systematically gathering existing ideas and avoiding reinventing the wheel). In all stages, research is used to inform decisions based on real data and insights, rather than on assumptions that may be biased. 1

Service design research is a structured process with planned iterations. It starts with a research topic or one or more research questions and often aims to derive insights. Design research is rooted in user-centered or human-centered design and usually includes ethnographic research methods. 2 When you start to explore this field, you might find that the methods and vocabulary used are often quite fuzzy or ill-defined – an issue for which academics and designers often criticize themselves. Also, this type of qualitative research can feel a little unsafe to people who are more accustomed to quantitative research in a business context, but a qualitative approach usually turns out to be really valuable. 3 Instead of looking for “the” truth, qualitative research can provide insights into “a” relevant truth. Insights from qualitative research are often more actionable than mere quantitative data, as they answer the “why” questions and bring up new perspectives and nuances. Communicating insights with quotes, photos, or videos of user realities can initiate change in organizations by creating a common understanding of the problem and motivating people to do things differently.

As this book is about doing, this chapter presents an actionable framework for service design research, based on common academic standards.

THE BASIC PROCESS OF SERVICE DESIGN RESEARCH

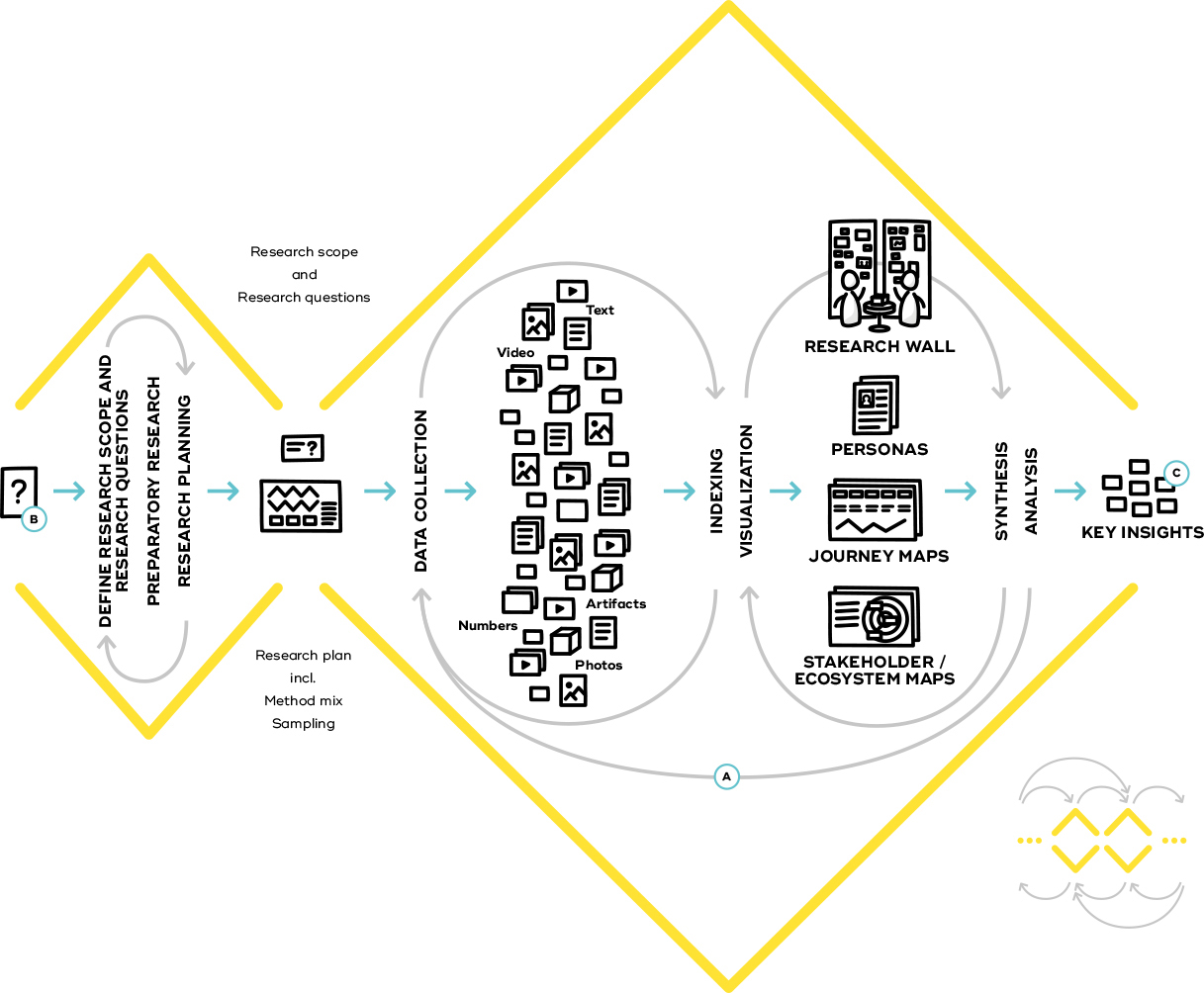

Figure 5-1. Research activities are embedded in an iterative sequence with other activities of ideation, prototyping, and implementation.

Iterations and research loops: Design research is an iterative process – a sequence of research loops within and between activities.

Starting point: Usually research starts with a brief from an internal or external client. Based on some preparatory research, you define research questions and start research planning.

Output: There are various potential outputs of design research, from informal inspirations to formal research reports.

The Process of Service Design Research

Research can be used at different stages within a design process. Design research can be used to find opportunity fields by identifying customer problems and needs; to research experience gaps in existing services or products (whether physical or digital); 4 to get inspiration from other domains; or to test and collect feedback on ideas, concepts, and prototypes.

Service design research can benefit from a clearly articulated research design that considers some of the aspects and main criticisms of ethnography. 5 Even though not every research process needs extensive planning, this framework might help you to achieve richer results with fewer resources. It doesn’t need to be followed step by step, but should serve as a collection of useful rules of thumb that can be applied to your research. This section describes each step in detail.

Research scope and research question

To define the scope of your research, it might help to consider which of the following options is applicable for the project.

Exploratory research vs. confirmatory research

→ Exploratory research sets out to learn more about a specific subject without the prior formulation of explicit assumptions. The objective is often to find answers to “Why” questions without a sound assumption of what might be the cause. You can also do exploratory research to get inspired by solutions (or problems) from different industries, regions, cultures, target groups, and so on.

→ Confirmatory research is intended to validate specific assumptions you have generated before you start research. The objective is often to find out if an assumption or hypothesis is supported by research findings. For example, assumption-based journey maps you created during a workshop might now need to be challenged with solid data on customer experience.

Research into existing services and physical or digital products vs. new ideas/concepts

→ Research that focuses on existing physical/digital products or services is mostly done in the existing situational context through fieldwork using ethnographic approaches. Customers, employees, and other stakeholders are observed or interviewed while they interact with the service or physical/digital product in question, or with one another, in real situations.

→ Research that could lead to new ideas or concepts uses similar ethnographic methods. However, as there are no existing physical/digital products or services to be researched, we often use prototypes or experiments to get results that are as close as possible to those we would get in the future situational context of the idea or concept.

Start by formulating a research question to make sure that your team (and your potential client) has a common research aim. An initial research question might derive from a (client) brief, from customer complaints, from a workshop, or somewhere else. Often you need to do some preparatory research before or while defining your research scope and phrasing research questions. 6

A research question could have various aims: perhaps to understand customer needs (“Why do people use selfie sticks?”), to find gaps in an existing customer experience (“Where do customers have problems or leave when they are in our shop?”), to confirm steps of an assumption-based journey map (“Which steps are missing in our journey map when our customers go through our software onboarding? And which ones do they skip?”), or to understand the ecosystem of a physical/digital product or service (“Which players are directly and indirectly involved in our procurement process?”).

When developing your research question, always remember to think ahead and ask yourself what you will do with the answers. You know what the next activity of your project will be, so you can test a question by asking “How will the answer to this question help us generate a range of insights and ideas to create new (or more) value?”

Research questions are often rather broad and vague in the beginning, but then narrow down to one or more specific questions throughout the iterative process. It is like finding a path through a jungle: you don’t know the way when you set out. You have a vague aim and move in that direction. Then, if you stumble over a creek, it’s best to follow it as it might take you somewhere interesting much faster.

Be aware that research questions often need to be refined over time due to the iterative and explorative character of design research. In general, you should avoid questions that could be answered with a simple “yes” or “no” – otherwise your research might come to an end very fast, and you won’t learn much. Research questions are often open-ended, sometimes followed by a “Why” question to gain more detailed insights. It usually helps to write down not just one question at the beginning, but 10 or 20, and then select one or more you like. 7 With a little practice, you will get better at developing questions. But you will always need to iterate and refine them.

Based on your progressive understanding of the subject matter, you’ll be able to gradually rephrase the question better, improve your process and methods of collecting data during your fieldwork, and also refine the focus of your documentation.

Research planning

When planning your research, you should think of research methods that are most likely to give you fruitful answers for your proposed research questions. On the other hand, your research must fit within certain business constraints, as you always have to consider how to best allocate time, money, and people within a project.

A good starting point is to take a look at what is already out there so you are “standing on the shoulders of giants.” Take a look at previous research and existing data on the subject matter. Ask the Market Research or Innovation department if they have any relevant data, get statistics that might be useful for your research, and get an overview of what has been done in this field by other departments in the organization. Besides existing research within the organization, also invest some time into classic desk research: use (academic) research platforms to look for scholarly papers published on that topic. In many cases, it is worth investing an hour or so in screening the research landscape until you have a good idea whether it is worth spending more time reading published research. If you find relevant research, it can save time and budget by letting you focus your own research on the parts that are really useful.

Each loop should include data collection with various methods as well as at least a simple form of data synthesis and analysis.

Besides conducting some preparatory research, your research planning will include decisions regarding research loops, sample selection, research context, sample size, and method selection. 8

Research loops

Always plan qualitative research as an iterative process with a sequence of research loops. Fruitful design research often starts with a broad focus and aims to narrow it as soon as possible. The first loops can be really short: something between one hour and one day.

Through iterative research loops, it is possible to be more confident that the research is effectively targeting the important questions.

Sample selection

Sampling is the definition of who you want to take part in your research. Who participates in your research and how you select them is a critical question for the reliability of any study. A skewed selection of research participants may distort your results – for example, when you ask only a specific age group, or only happy customers (the “sampling bias”). There are various strategies that aim either to get a representative dataset for large-scale quantitative research (probability sampling) or to get richer data from a specific group for in-depth qualitative research (non-probability sampling). Results based on quantitative probability samples can usually be generalized, but with data from qualitative non-probability samples that’s not the case. Mostly service design research uses qualitative research methods and selects participants with non-probability sampling techniques, like these:

Expert Tip


“Good practice is to regard the first one or two interviews as pilots to test out your script and materials. If all goes well you include the data in the research; if there are serious changes needed you may want to consider doing some extra pilot interviews.”

— Geke van Dijk

→ Convenience sampling: Find some people to participate in your research (the simplest, but also most biased sampling approach).

→ Self-selective sampling: Let participants decide to take part in your study without defining specific criteria or quotas – for example, through a link on a company website (also a very biased approach).

→ Snowball sampling: Find a few relevant people for your research purpose and ask them to recommend others (e.g., users of electric cars can get you in touch with other users from their network).

→ Quota sampling: Find out how a population is structured according to certain criteria, set a quota for how many participants you would like to have for each criterion (e.g., gender) and randomly select participants for each quota.

→ Extreme case sampling: Find very unusual participants to understand extreme positions (e.g., very early adopters of interesting technologies, or the opposite: people who are radically against or have never thought about using your service/product/technology).

→ Emergent sampling: Follow new leads during fieldwork as they unfold, to flexibly take advantage of new knowledge.

→ Maximum-variation sampling: Find participants with a wide range of variation on dimensions of interest with the aim to discover central themes or shared dimensions across a diverse sample (e.g., people who use a product in very different ways than it was intended).

→ Maximum-input sampling: Find participants with a comprehensive overview of an entire experience or system in order to get a maximum of input from the selected participants (e.g., people who have just followed a complete customer life cycle).
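The quota logic described above can be sketched in a few lines of Python. This is a minimal illustration, not a recruiting tool. The participant records, the `age_group` criterion, and the function name `quota_sample` are hypothetical. Following the description above, participants within each quota are picked at random.

```python
import random
from collections import defaultdict


def quota_sample(candidates, criterion, quotas, seed=None):
    """Randomly fill a quota for each group defined by `criterion`.

    candidates: list of dicts describing potential participants (hypothetical format)
    criterion:  key used to form the quota groups, e.g. "age_group"
    quotas:     mapping from group value to the number of participants wanted
    Returns the selected participants and a dict of unmet quotas.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for person in candidates:
        groups[person[criterion]].append(person)

    selected, shortfalls = [], {}
    for group, wanted in quotas.items():
        pool = groups.get(group, [])
        if len(pool) < wanted:
            # Not enough candidates: take everyone and report the gap
            shortfalls[group] = wanted - len(pool)
        selected.extend(rng.sample(pool, min(wanted, len(pool))))
    return selected, shortfalls


# Hypothetical pool of 30 screened candidates
candidates = [{"id": i, "age_group": "18-34" if i % 3 == 0 else "35+"} for i in range(30)]
participants, missing = quota_sample(candidates, "age_group", {"18-34": 4, "35+": 6, "65+": 2}, seed=1)
print(len(participants), missing)
```

The function reports unmet quotas instead of quietly filling them from other groups. This keeps a recruiting gap visible, which matters because a skewed selection is exactly the sampling bias described above.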

Probability sampling can also be useful for service design research, especially when you need larger sample sizes or even representative samples for large-scale quantitative research. Here are just a few examples of probability sampling techniques:

Comment


“When we were working with CarGlass in Belgium we discovered huge differences between the experience of changing a windshield during the day and at night. A well-designed process for the daytime was rather frightening for female customers during the night considering the dark and lonely location outside the city and the different emotional state the customers were in.”

— Jürgen Tanghe

→ Simple random sampling: Randomly pick participants from a sampling frame.

→ Systematic random sampling: Choose a sampling interval, such as 10, start with a randomly selected person within the first interval, and then pick every 10th person from a flow of people as your participants.

→ Stratified random sampling: Separate your sampling frame into groups based on specific criteria and use simple or systematic random sampling to select participants within these groups.

→ Cluster sampling: Create a list of clusters based on specific criteria and randomly choose some of these clusters, then randomly choose participants within the selected clusters.
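The four probability sampling techniques above can be expressed as small Python functions. This is a sketch for illustration only. The sampling frame is assumed to be a plain list of participant IDs, and the shop clusters are hypothetical. A seeded `random.Random` instance keeps each draw reproducible.

```python
import random


def simple_random(frame, n, rng):
    """Every unit in the sampling frame has the same chance of being picked."""
    return rng.sample(frame, n)


def systematic_random(frame, interval, rng):
    """Random start within the first interval, then every `interval`-th unit."""
    start = rng.randrange(interval)
    return frame[start::interval]


def stratified_random(frame, stratum_of, n_per_stratum, rng):
    """Split the frame into strata, then draw a simple random sample in each."""
    strata = {}
    for unit in frame:
        strata.setdefault(stratum_of(unit), []).append(unit)
    return {name: rng.sample(units, min(n_per_stratum, len(units)))
            for name, units in strata.items()}


def cluster_random(clusters, n_clusters, n_per_cluster, rng):
    """Randomly choose clusters, then randomly choose units within them."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {name: rng.sample(clusters[name], min(n_per_cluster, len(clusters[name])))
            for name in chosen}


rng = random.Random(42)
frame = list(range(100))  # hypothetical participant IDs
print(simple_random(frame, 5, rng))
print(systematic_random(frame, 10, rng))
print(stratified_random(frame, lambda pid: "even" if pid % 2 == 0 else "odd", 3, rng))
shops = {"shop_A": list(range(0, 20)), "shop_B": list(range(20, 40)), "shop_C": list(range(40, 60))}
print(cluster_random(shops, 2, 5, rng))
```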

Comment


“Theoretical saturation is important, but difficult to predict in advance. Usually it is based on prior experiences and rules of thumb in proposals, while keeping flexibility to extend the fieldwork when data and results indicate that saturation has not yet been reached.”

— Geke van Dijk

Most projects use a combination of different sampling techniques, for example systematic random sampling in combination with snowball sampling. Often you need to set certain selection criteria as screening questions (e.g., “Do you drive an electric car?”). Emergent sampling is something we should always do anyway in design – if you find something interesting, don’t ignore it. The aim of a sound sampling strategy is to avoid sampling bias, such as systematically excluding a certain group of people that should have been considered.

Research context

Besides the “who,” it is also crucial to define the context of your research: “when” and “where” to conduct the research. This might seem obvious – but think, for example, of the people you’d meet at a train station on weekdays in the morning (commuting to work/school?), weekdays around noon (lunch break?), weekdays in the afternoon (commuting from work/school?), or weekdays in the evening (leisure activities?). And compare this to the same times during the weekend. Also, seasons are important: consider how hard it would be to research customer experience at a ski resort during the summer season.

It often helps to engage participants in their natural surroundings or in a specific situational context of interest. Interviews are often done at people’s homes, because they are most at ease when at home. Observations or contextual interviews should be done at places where people often use the specific service or physical/digital product, as they can relate to their experience more easily in that environment and maybe even point out certain aspects of it that they like or dislike. Also consider the effect of external factors on the experience and behavior of people, such as weather, public holidays, major events, and so on.

Sample size

You might decide to fix the sample size (how many participants your research has) before data collection, or you might choose to stay flexible. This is mostly a question of the research objective and methods used, as well as the resources and time available.

In service design, sample size is usually determined with an ethnographic research approach based on the concept of theoretical saturation. In other words, you stop collecting data when new data no longer brings additional insights to the research questions.

In quantitative statistics, your sample size depends on whether the data need to be representative of a defined group of people (the population). In qualitative research – and particularly in ethnographically inspired design research – researchers instead look for recurring patterns. You do enough research – such as interviews or observation – to identify patterns. When more research only confirms the patterns you have already identified, you have reached theoretical saturation. This means doing more research would only confirm what you already know and will not bring any new knowledge. Just like a usability test that is designed to find the biggest bugs in software, service design research is used to find the biggest bugs (or opportunities) in a physical or digital product, a service, or any (customer) experience. Unlike quantitative statistics, service design is not especially interested in accurate percentages of exactly how many people struggle with one issue, but rather needs a ranking or just a shortlist of bugs to fix, a list of inspirations as a basis for ideation, or a hit list of features customers would like to have.
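Theoretical saturation remains a judgment call, but a simple stopping rule can make it more explicit. The Python sketch below assumes that each interview has already been coded into a set of themes. It uses a hypothetical `window` parameter: saturation is flagged once that many consecutive interviews add no new theme. The window size is an assumption for the team to agree on, not a standard value.

```python
def saturation_point(themes_per_interview, window=3):
    """Return the interview count at which `window` consecutive interviews
    have added no new themes, or None if that never happens."""
    seen = set()
    streak = 0  # consecutive interviews without a new theme
    for count, themes in enumerate(themes_per_interview, start=1):
        new_themes = set(themes) - seen
        seen |= new_themes
        streak = 0 if new_themes else streak + 1
        if streak >= window:
            return count
    return None


# Hypothetical coded interviews
interviews = [
    {"waiting time", "price"},
    {"waiting time", "staff"},
    {"parking"},
    {"price", "staff"},
    {"waiting time"},
    {"parking", "price"},
]
print(saturation_point(interviews, window=3))
print(saturation_point(interviews, window=4))
```

With `window=3`, the rule fires after the sixth interview. With `window=4`, it has not fired yet, which would argue for extending the fieldwork.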

Comment


“When we started with service design research in our company, not everyone on the team trusted the results from our in-home interviews and co-creative workshops with customers. However, a later quantitative survey proved exactly the same points. Team members now realize more and more the credibility of these methods.”

— Maik Medzich

When you set your sample size, it should be large enough to identify recurring patterns. In 1993, the usability researchers Nielsen and Landauer published an article revealing that usability tests with only 5 users found 85% of all usability problems, while at least 15 users were needed to find nearly all problems. Since service experiences are more complex, a good rule of thumb is to start with a small but culturally diverse set of participants. If you already see patterns emerging, use the next batch to confirm the patterns you spotted and see if you have reached theoretical saturation for these patterns. 9
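These figures follow from a simple model in Nielsen and Landauer's work. If each test participant uncovers a given problem with probability L, then n participants are expected to uncover a share 1 - (1 - L)^n of all problems. The sketch below assumes L = 0.31, the average Nielsen later reported for usability studies. For service experiences, the real value is unknown and likely different, so treat the output as an illustration of diminishing returns rather than a rule.

```python
def share_found(n_participants, detection_rate=0.31):
    """Expected share of problems found by n participants, assuming each
    participant independently finds any given problem with `detection_rate`."""
    return 1 - (1 - detection_rate) ** n_participants


for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} participants: {share_found(n):.1%}")
```

Under these assumptions, 5 participants find about 84% of the problems and 15 find about 99.6%. Each additional participant adds less than the one before.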

Data collection

There is a huge variety of research methods you can use to collect meaningful data in service design. We use quantitative methods like surveys (offline and online), any form of automated statistics (e.g., conversion rate analysis), and manually collected quantitative data (e.g., frequency of shop visitors through simple counting). However, we mostly use qualitative methods and particularly methods based on ethnography. Select and line up a sequence of research methods to collect and to visualize, synthesize, and analyze your research data. See this section and 5.1.4 for details.

Research methods

You should always consider a mix of methods, as each research method has its own inherent potential bias. “Actions speak louder than words” is a common saying, and indeed you often observe that people behave differently than they say they would. This can have different causes, like the “interviewer effect,” when the style and personality of the interviewer affects interviewees; the “Hawthorne effect,” when people modify their behavior simply because they are aware of being observed; or “confirmation bias,” when researchers tend to search for information that confirms their beliefs or assumptions and thereby ignore data that contradicts them. A good mix of research methods can help level out these potential biases.

Expert Tip


“A mantra we use is to see the customer (observation), hear the customer (dialogue), and be the customer (self-ethnography) – three complementary methods to understand customers or employees.”

— Simon Clatworthy

As a rule of thumb, for a good mix of research methods pick one method from each of the following categories: 11

→ Desk research, like preparatory research, secondary research

→ Self-ethnographic approaches, like autoethnography, online ethnography

→ Participant approaches, like participant observation, contextual interviews, in-depth interviews, focus groups

→ Non-participant approaches, like non-participant observation, mobile ethnography, cultural probes

→ Co-creative workshops, like co-creating personas, journey maps, and system maps

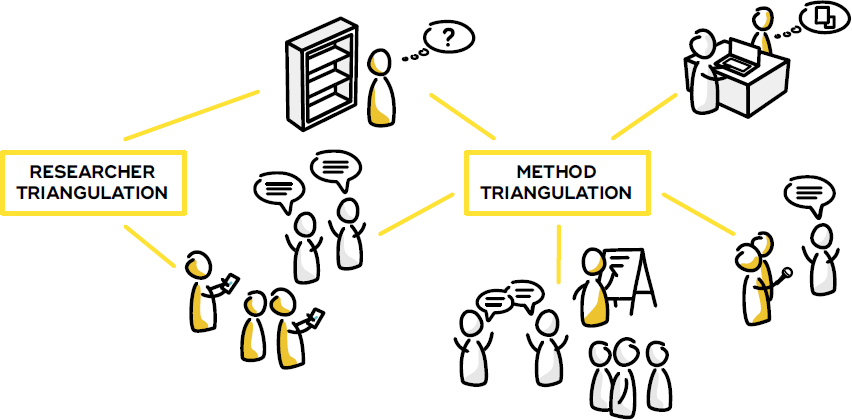

Method triangulation

When we are selecting the “right” research methods – ones that yield a lot of useful data – we always face the question of how many research methods we should use. Given a limited budget, researchers often need to decide whether they should put their budget into one method and do this rather well, or distribute their budget to use a variety of research methods. We always suggest the latter, based on the concept of method triangulation. 12 Triangulation is based on classic navigation and land surveying techniques. In simple terms, with triangulation you can estimate your own position by measuring the directions of at least two distinct landmarks. Based on basic principles of geometry, the more landmarks we measure, the more accurately the position can be calculated. Similarly, researchers can improve the accuracy and richness of their research by using different methods to collect data on the same phenomenon.

If different methods lead to the same outcome, you can be more confident about these findings. However, in design research, triangulation is not used as much to seek validation or verification of research results, but rather to ensure that insights are based on a rich and comprehensive dataset that is robust enough to provide a foundation for design decisions. In particular, when you do exploratory research to get inspiration for new ideas, richness of data and perspectives is key.
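One way to make the cross-check concrete is to record which methods support each finding. The Python sketch below assumes that findings have already been phrased as short, comparable statements. The method names and findings are hypothetical. Findings backed by at least two methods count as corroborated. The rest are kept rather than discarded, because in design research they may still be a valuable source of inspiration.

```python
from collections import defaultdict


def triangulate(findings_by_method, min_methods=2):
    """Split findings into those supported by at least `min_methods` methods
    and those that rely on a single source so far."""
    support = defaultdict(set)
    for method, findings in findings_by_method.items():
        for finding in findings:
            support[finding].add(method)
    corroborated = {f: sorted(m) for f, m in support.items() if len(m) >= min_methods}
    single_source = {f: sorted(m) for f, m in support.items() if len(m) < min_methods}
    return corroborated, single_source


findings = {
    "contextual interviews": {"long waiting times", "unclear signage"},
    "non-participant observation": {"long waiting times", "queue jumping"},
    "mobile ethnography": {"long waiting times", "unclear signage"},
}
corroborated, single_source = triangulate(findings)
print(corroborated)
print(single_source)
```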

In a research context the fundamental idea of method triangulation is to cross-check findings with different methods.

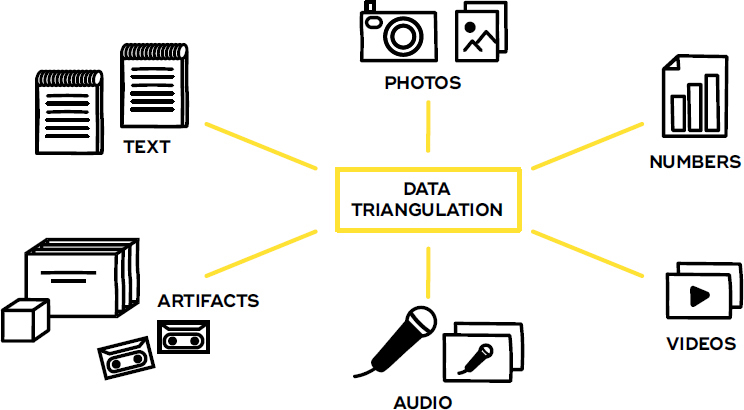

Data triangulation

Different research methods generate different types of data as output, such as text (e.g., field notes or interview transcripts), photos, videos, artifacts (e.g., tickets or info flyers), as well as statistics. Some methods can generate different or even multiple types of data, and researchers should plan what kind of data they need. Again, following the principle of triangulation, you should strive to create different types of data in your research process.

Data triangulation enables researchers to support findings with different underpinnings and makes the dataset richer and more comprehensive. A simple example explains one advantage of using different types of data. Imagine the situation of a contextual interview. If researchers only take field notes, they only write down what they regard as important. If they add photos of the situation, others can understand the situational context of the interview. If they make an audio recording of the interview and transcribe it afterwards, others have a chance to interpret the interview as well. If they take a video of the situation, others can also interpret body language and situational context. Different data types can help you get a richer dataset and reduce researcher subjectivity.

Another differentiation is the distinction between primary and secondary data. Primary data is data collected by a researcher for a specific purpose. Secondary data is data that has been collected by someone else for other purposes, but is being used by a researcher for a new purpose. If your organization will be conducting several service design projects, it makes sense to integrate a data or knowledge management system. The outcomes from primary research in one project can serve as secondary data for another project and thereby save time and money. Researchers can build on the outcomes of prior research (i.e., secondary data) and so might be able to use a given research budget for more focused primary research, “standing on the shoulders of giants.” 13

Figure 5-2. Use different types of triangulation to offset different forms of research bias:

→ Researcher triangulation to avoid prejudices and predispositions.

→ Method triangulation to get different perspectives on the same subject matter.

→ Data triangulation to get a richer and more comprehensive dataset.

Expert Tip


“Providing sufficient raw data speeds up the decision-making process and helps avoid endless discussions within your team and with superiors. Instead of [questions] like ‘Is this really like this?’ our team accept a fact when they see it with their own eyes – or even better, when they experience it themselves. They then move on and work on actionable solutions instead of pointless discussions.”

— Anke Helmbrecht

The differentiation between first- and second-order concepts (not the same as primary and secondary data) is an important factor which affects how other researchers can work with the data. Basically, first-order concepts are the “raw data” (e.g., the direct transcript of an interview), while second-order concepts include interpretations by researchers. In practice, first-order concepts might be any original evidence of research that researchers gain from observations or interviews – for example, interview transcripts, but also photos or videos. Second-order concepts might be summarized field notes or any other form of data filtered or biased by a researcher striving to identify patterns. 14 While both are useful, it is important to collect enough raw data (first-order concepts) that does not include interpretations by researchers, as only this raw data can be interpreted by other researchers at a later stage. Recorded data like photos or videos preserves to a large extent the raw content, which is often more convincing when presenting your results than any second-order concept. When you can do only field notes, distinguish between an accurate description of a situation studied and your personal interpretation, so that you can always go back and refine your interpretation.

Researcher triangulation

As a (design) researcher using ethnographic methods, it is important to step into someone else’s shoes. However, to be able to walk in the customer’s shoes, you first need to take off your own. All researchers have their individual background, knowledge, and prejudices. It’s almost impossible to get rid of this “researcher bias,” but it helps if you become aware of your own tendencies regarding your interpretations and conclusions (e.g., through peer reflection by other researchers regarding potential biases and predispositions).

Comment


“Jürgen Habermas described a […] way to apprehend reality in a way that may reduce research bias […]:

Use research tools to produce/collect numbers (dry quantitative data) about the intended context of research;

Cast the most accepted or the researchers’ preferred interpretation (what these numbers ‘obviously’ say);

Based on the same numbers, develop contrasting/alternative interpretations (what these numbers could also say);

Reflect upon the different interpretations in order to fine-tune the researcher’s perception.”

— Mauricio Manhães

Researchers should also be aware of their “researcher status” – the social position a researcher holds among the studied group. Depending on your status, participants will react differently to your questions. This is something to consider in your research planning. How do you communicate the research to participants? Which research aim do you communicate? What expectations do your participants get through this and which hidden intentions might arise as a consequence?

One way to tackle researcher bias is to include several researchers, both during data collection and during synthesis and analysis. This researcher triangulation can help to reduce the level of subjectivity in ethnographic research and keep the whole team at a consistent level of knowledge throughout the project. One way to increase the number of researchers involved is to use data collection methods that actively integrate participants as researchers (e.g., diary studies or mobile ethnography) or methods that put researchers in the roles of participants (e.g., service safari or autoethnography).

In general, you’ll have more informed conversations and increase buy-in from clients or management if your design process includes people from the client organization or management as well as other stakeholders. Invite them to participate in your fieldwork – even if they only have limited time. In most cases, contact with customers and other research participants will increase appreciation for research, and attention to customer needs.

Sometimes the downside can be that invited clients or managers use such opportunities to explain or even promote their offerings to interviewees, or to prioritize single users or responses. One way to support less experienced clients is to assign them a clear role, such as supporting interviewer or observer, while members of the key project team lead the fieldwork.

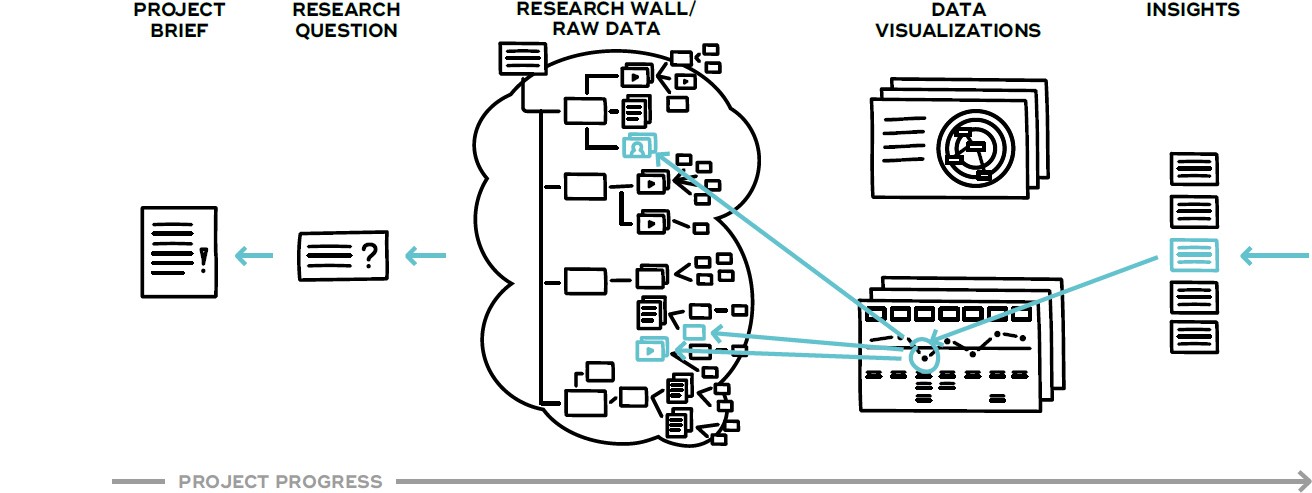

Indexing

During your research, it is important to index your data so that you can trace insights back to the data sources they are based on. A simple way of indexing could be to label data with a short index, such as “i6.17” for interview 6, line 17, or “v12.3:22” for video 12, at minute 3:22. This allows you to later base your design decisions not only on the insights you have generated but also on the underlying raw data. You might even be able to include the participants who reported a specific phenomenon in your prototyping of solutions to improve the original situation. 15
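To make the indexing idea concrete, here is a minimal Python sketch that turns labels in the two formats above back into references to the raw data. The label formats come from the examples in the text; the regular expressions, the `SourceRef` and `parse_index` names, and the sample insight are hypothetical and only illustrate one possible convention. A spreadsheet column holding the same labels would serve the same purpose.

```python
import re
from dataclasses import dataclass

# Hypothetical index formats, based on the examples in the text:
#   "i6.17"    -> interview 6, line 17
#   "v12.3:22" -> video 12, at minute 3:22
INTERVIEW = re.compile(r"^i(\d+)\.(\d+)$")
VIDEO = re.compile(r"^v(\d+)\.(\d+):([0-5]\d)$")


@dataclass(frozen=True)
class SourceRef:
    kind: str      # "interview" or "video"
    number: int    # which interview or video
    position: str  # human-readable location within the source


def parse_index(label: str) -> SourceRef:
    """Turn a short index label back into a reference to the raw data."""
    m = INTERVIEW.match(label)
    if m:
        return SourceRef("interview", int(m.group(1)), f"line {m.group(2)}")
    m = VIDEO.match(label)
    if m:
        return SourceRef("video", int(m.group(1)), f"minute {m.group(2)}:{m.group(3)}")
    raise ValueError(f"unrecognized index label: {label!r}")


# Hypothetical example: each insight keeps the labels of the data it is
# based on, so reviewers can trace it back to interviews and videos.
insights = {
    "Customers wait too long at check-in": ["i6.17", "v12.3:22"],
}

for insight, labels in insights.items():
    print(insight)
    for ref in map(parse_index, labels):
        print(f"  {ref.kind} {ref.number}, {ref.position}")
```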

Data visualization, synthesis, and analysis

There are many ways to synthesize and analyze data (also known as sensemaking) in design research. In general, we can see two major ways to do this: the academic way and the practitioner’s way. Although we’ll focus mostly on the practitioner’s approach, we can learn a lot from academic approaches.

Expert Tip


“It is often worth iterating within stages, as well as between techniques: coding data then sifting and filtering and then analyzing to draw out insights. For example, in diary studies we write up all comments in a spreadsheet, look for common patterns and highlight/color code, then dig deeper again on specific issues and reanalyze comments. The issues identified are then explored further in exit interviews.”

— Phillippa Rose

The academic way to work with qualitative data mostly involves codifying the data and then searching for patterns within it. This process is often referred to as content analysis – there are various methods and even software 16 to support researchers in doing this, but of course pen and paper will often also do the job. For example, a researcher could codify the data by going through transcribed interviews and tagging sentences with specific labels. In a second step, the researcher counts how often specific labels occur or, more commonly, lets a software tool do this automatically. 17 This process takes some time, but it is particularly useful when you have to cope with a huge amount of data, because it lets you split up a data set and work through it step by step. Such large data sets are typical when research is planned with a classic project management approach: one period of data collection followed by one period of data analysis.
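The counting step can be sketched in a few lines of Python, assuming the sentences have already been coded by hand. The sentences, labels, and index values below are invented for illustration, and the sketch is no substitute for dedicated content-analysis software; it only shows the principle of counting label occurrences while keeping each count traceable to its sources.

```python
from collections import Counter

# Hypothetical coded data: each transcribed sentence carries an index label
# (interview number and line) and the code labels a researcher assigned to it.
coded_sentences = [
    ("i1.04", "The app logged me out again.", ["frustration", "technical issue"]),
    ("i1.09", "Staff at the desk were really kind.", ["staff interaction"]),
    ("i2.12", "I never know how long I will wait.", ["frustration", "waiting time"]),
    ("i3.07", "The queue was the worst part.", ["waiting time"]),
]

# Second step of content analysis: count how often each label occurs.
label_counts = Counter(
    label for _, _, labels in coded_sentences for label in labels
)

# Keep the index labels per code so every count stays traceable to raw data.
sources_by_label: dict[str, list[str]] = {}
for index, _, labels in coded_sentences:
    for label in labels:
        sources_by_label.setdefault(label, []).append(index)

# List labels from most to least frequent, with their sources.
for label, count in label_counts.most_common():
    print(f"{label}: {count} ({', '.join(sources_by_label[label])})")
```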

However, if you follow an iterative research design with a rather visual synthesis and analysis process, you won’t be drowning in data as you will have multiple iterations of data collection, synthesis, and analysis.

Visualizing data

Visualizing data helps teams get an overview of the amount of information, brings structure into complex data, identifies patterns, and uncovers existing gaps in the data. It also deepens their understanding of a topic and develops empathy with the people who were the subject of the research. There are many ways to visually work with and present research, and what makes sense depends on your aim. The following list summarizes some common ways to visualize research data in service design, with a brief description of why each might be useful: 18

→ A research wall 19 to give you an easy overview of your data and your mix of research methods and data types; the wall may contain any of the other assets listed here.

→ Personas to exemplify different groups of people, such as customers or employees, and their individual characteristics, goals, and/or tasks.

→ (Customer) journey maps to visualize customer experiences happening over time.

→ System maps to show relationships between stakeholders and product-service ecosystems.

→ Key insights to highlight the biggest customer problems or potentials customers have regarding a certain physical/digital product or service.

→ Jobs to be done to emphasize the big picture of what customers strive to achieve.

→ User stories to ensure a common language with software developers.

→ Research reports to ensure a comprehensive research overview, often including many of the tools above.

It is important to consider the target audience for your visualization and ask yourself what exactly you want to share. Different audiences will have different needs – do they need pithy research insights or lots of raw data? Do you need something rather formal and self-explanatory so your outcomes can be used in different departments or organizations? Or are your outcomes only needed for your internal team? Any research outcome that you need to communicate beyond your own team needs more polish.

Peer review and co-creation

One simple way to increase the quality of your research is through peer review. Include other researchers, customers, employees, and stakeholders to benefit from multiple perspectives and reduce the risk of confirmation bias. If you want to include others at this stage, they must be able to understand your data and draw their own conclusions. So, aim to collect as much raw data (i.e., first-order concepts) as possible and compare your own interpretations with those of your peers. Make sure that insights are always trackable to your raw data (use indexing), so that reviewers can follow the translation from data to insight and confirm it.

As a rule of thumb, it is easier to include other people early on during the synthesis and analysis stage, so that you can cluster your data and generate categories using a co-creative approach instead of only inviting others to review your work. However, sometimes co-creative workshops are not possible; then, peer review is a way to limit subjectivity and researcher bias.

Codifying data

One major question when you’re codifying data 20 is this: where do the categories for these codes come from? Mostly, qualitative research is understood to follow an inductive approach in which researchers “immerse” themselves in the data and generate categories and insights from the data itself. On the other hand, sometimes researchers follow a deductive approach and start with a defined set of categories derived from literature or other research, but then eliminate or add categories through their data synthesis. Deductive qualitative analysis strives to test specific assumptions or concepts and therefore often categorizes data in previously defined categories. While design research to identify customer experience gaps or people’s needs is mostly inductive, research to test prototypes is often rather deductive.

Figure 5-3. Indexing your dataset allows you to trace back insights (and later even ideas and prototypes) to their underlying data.

Using research outcomes

The outcome of service design research usually serves as input for other service design activities, such as ideation or prototyping. In some cases, research output can even be used directly in implementation activities. For example, research can sometimes reveal simple usability improvements for a piece of software that can be described as user stories and directly implemented during software development. 21 The usual case, however, is that topics identified during the research process are visualized with personas, journey maps, system maps, key insights, jobs to be done, user stories, or research reports. These can be used to identify problems or opportunities in subsequent ideation activities. 22 If you already have some immediate ideas of how to solve discovered issues, you might also directly try to prototype these in prototyping activities. 23

Typically, during research activities, you explore in detail the existing problems of an experience, a process, or a system. You try to question what you see and challenge your assumptions. At least, this is what you should do. Unfortunately, one problem is that we as humans are trained to solve problems. When we see a problem, we immediately start thinking about potential solutions.

This is a problem, because these quick solutions probably do not address the root cause. To work on the root cause, you need to invest more effort in understanding the problem. You need to stay in the problem space longer, without jumping into the solution space too soon.

Sometimes it helps a design team if they clearly understand where they are. Are you in the problem space trying to understand someone’s problem in more detail and depth, exploring this from various perspectives and trying to dig deeper and discover the root cause of it? Or are you in the solution space striving to find ideas on how to solve a well-defined problem? You can make this clear with visual cues, such as a poster on the wall wherever your design team works, or by clearly articulating where you are in your meetings and workshops.

Of course, a design team will have many ideas during research activities and it would be a shame to lose them. If ideas come up during research, capture them on an idea wall 24 and then let go.

Or, you might want to see the idea as a hypothesis and rephrase it into its underlying assumptions – this feeds into your next research iteration as a new research question. Also, you might want to brief your team before the next steps of the design process, such as ideation activities that might follow your research.

Methods

Read more on methods and tools in our free online resources at:


www.tisdd.com

Methods of Data Collection

This section provides a wide selection of potential research methods to collect data in service design research. Many more methods exist, and often the same method has several inconsistent names. We can only give a very brief introduction for each method, but if you want to dig deeper, there is plenty of literature – and for some methods, even whole books – with detailed descriptions and examples. 25 The research methods are structured in five categories:

→ Desk research: Preparatory research, secondary research

→ Self-ethnographic approaches: Autoethnography, online ethnography

→ Participant approaches: Participant observation, contextual interviews, in-depth interviews, focus groups

→ Non-participant approaches: Non-participant observation, mobile ethnography, cultural probes

→ Co-creative workshops: Creating personas, journey mapping, system mapping

These categories are not an academic standard, and as there are many variations and names for each research method, the boundaries between the categories might be rather fluid. However, as a rule of thumb, we suggest using at least one method from each category to improve method triangulation.

“Prep” research often includes an online search for certain keywords, companies, and competitors as well as searching for scholarly research on specific topics.

When doing secondary research, keep notes and explore potentially interesting topics iteratively.

A smartphone and/or a simple notepad is often the best tool to document autoethnographic research.

Desk Research: Preparatory Research

Your own preparation before you start your actual research or fieldwork. 26

Preparatory (or simply “prep”) research often includes digging deeper into an industry, an organization, competitors, or similar products, and also the client’s perspective of what the research problem is, their context, perceptions, internal conflicts or interplays, and so on. Prep research is less about finding answers, and more about finding the right questions to ask in your research. Prep research can result in a summary of text snippets and/or a collection of photos, screenshots, or videos, perhaps visualized as a mood board.

Preparation: Often prep research starts with very wide research questions or topics, from soft topics such as “How does home feel?” to rather specific topics such as “Where else is this technology used?”

Use: Prep research can include conducting internal interviews; screening social media; listening to podcasts, online videos, or conference talks; and reading industry-specific scientific or special-interest publications along with newspapers or general-interest magazines.

Expected output: Text, statistics, photos, and videos, as well as mind maps, mood boards, and the like

Desk Research: Secondary Research

The collection, synthesis, and summary of existing research. 27

In contrast to primary research, secondary research (often also simply called “desk research”) uses only existing secondary data – information collected for other projects or purposes. The main idea is to check whether research regarding a topic already exists. This helps us to formulate a research question more precisely and identify promising methods of data collection, visualization, and synthesis. Desk research should always be the starting point of a research process, simply to avoid reinventing the wheel and to stand on the shoulders of giants when you start your primary research.

Preparation: Collect a list of potentially promising internal and/or external sources such as academic papers, white papers, and reports, as well as experts on your research topic.

Use: Search for qualitative and quantitative secondary data regarding your research topic using online search engines, scientific databases and journals, libraries, conferences, and expert talks and interviews.

Expected output: Text, statistics, mind maps and the like

Self-Ethnographic Approach: Autoethnography

Researchers explore a particular experience themselves and self-document this using field notes, audio recordings, videos, and photographs; 28 also called self-ethnography or self-documentation.

Besides “real” (i.e., rather academic) autoethnographic research where researchers immerse themselves for months within an organization, service design often applies shorter versions of this: team members explore a particular experience themselves in the real situational context, mostly as customers or as employees. Variants of this include mystery shopping, mystery working, service safaris, explorative service safaris, or diary studies.

Preparation: Autoethnography is often one of the first research methods undertaken as it helps researchers to interpret behaviors when they conduct interviews or observations. Decide when and where you will conduct your research.

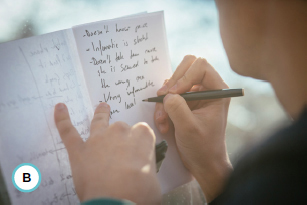

Use: Autoethnography can address one or more channels as well as actions with or without other people and/or machines. If you take field notes, write up first-level (“raw data”) and second-level concepts (“interpretations”) separately: for example, what you see and hear on the left page and what you interpret from this or how it feels on the right.

Expected output: Text (transcripts, field notes), audio recordings, photos, videos, artifacts

Self-Ethnographic Approach: Online ethnography

An approach to investigate how people interact with one another in online communities, 29 also known as virtual or cyber ethnography.

Online ethnography can be done as self-ethnographic research, non-participant ethnography, or participant ethnography – however, it always focuses on online experiences. It can look at many different aspects, such as social interactions within an online community or the differences between the self-perception of people when they are online and in real life.

Preparation: Based on your research question, decide which online communities might be suitable for your research. Decide how you will document your experiences; e.g., through screenshots or screencasts, system or journey maps, or simply field notes.

Use: Often online ethnographies include a mix of methods, such as observations, contextual interviews conducted online with screen sharing, or in-depth retrospective interviews with other community members.

Expected output: Text (quotes, transcripts, field notes), screenshots, recordings (screencasts or audio recordings)

Participant Approach: Participant observation

Researchers immerse themselves in the lives of research participants.

Participant observation is an umbrella term for a variety of methods, such as shadowing, a day in the life, or work-along. 30

Preparation: Based on your research question, select suitable interviewees and plan when and where you will conduct your research and how you will document it. How will you approach your participants? How will you start and end? How will you manage the “observer effect”? How much time will you plan for it?

Use: Observations might be at the participant’s workplace, in their home, or even following them throughout a process like a holiday trip. Use the situational context and ask participants to explain specific activities, artifacts, behavior, motivations, needs, pains, or gains. Sometimes contradictions between what people say and what people do can be very revealing if you mirror behavior back to participants. During participant observations it is important to observe not only what people are doing (by interpreting their body language and gestures) but also what they are not doing.

Expected output: Text (transcripts, field notes), audio recordings, photos, videos, artifacts

When researchers conduct participant observations, they often switch between more passively observing situations and actively asking questions to get a deeper understanding of user needs.

Contextual interviews help interviewees to articulate problems and needs because they are in the situational context and can simply show things right where they happen.

During contextual interviews or observations, take audio or video recordings to enable data triangulation, if possible.

Participant Approach: Contextual interview

Interviews conducted with customers, employees, or any other relevant stakeholders in a situational context relevant to the research question, 31 also known as contextual inquiry.

Contextual interviews are used to understand a certain group of people better (their needs, emotions, expectations, and environment – useful for personas), to reveal formal and informal networks and hidden agendas of specific actors (useful for system maps), or to understand particular experiences (useful for journey maps). Contextual interviews can be done, for example, with employees at their workplace or with customers during a specific moment of the customer experience.

Preparation: In contrast to studio interviews, contextual ones are conducted “in situational context,” so that researchers can observe the surroundings and interviewees can point to elements in the environment. Based on your research question, define who, when, and where you will interview and how you will document the situational context – including the interviewee’s mood, gestures, and body language.

Use: Ask your interviewees to demonstrate details of the concrete experience of interest. It is often easier for people to articulate their motivations and experience when they can refer to concrete examples.

Expected output: Text (transcripts, field notes), audio recordings, photos, videos, artifacts

Pay attention to your interviewees’ body language and gestures and write down interesting observations. This often leads to further questions.

Try to differentiate between concrete observations and your own interpretations (first-level/second-level concepts).

Participant Approach: In-depth interview

A qualitative research technique of conducting intensive individual interviews.

In-depth interviews are often conducted with relevant stakeholders or external experts to understand different perspectives on a specific subject. These interviews can help researchers learn more about particular expectations, experiences, products, services, goods, operations, processes, and concerns, and also about a person’s attitude, problems, needs, ideas, or environment.

Preparation: In-depth interviews are mostly done in a semistructured way to collect useful data. For example, interview guidelines can be based on an empathy map. 32 In-depth interviews are mostly done face to face, allowing researchers to observe body language and create a more intimate atmosphere. They can also be conducted online or by telephone.

Use: These interviews can be supported by co-creating boundary objects, such as scribbles or mind maps, or using personas, journey maps, system maps, or other useful templates. They can also include tasks like card sorting to understand user needs or storytelling supported by tangible touchpoint cards to visualize experiences.

Expected output: Text (transcripts, field notes), audio recordings, photos, videos, artifacts

Participant Approach: Focus groups

A classic qualitative interview research method in which a researcher invites a group of people and asks them questions on specific products, services, goods, concepts, problems, prototypes, advertisements, etc.

With a focus group, researchers strive to understand the perceptions, opinions, ideas, or attitudes toward a given topic. Although focus groups are often used in business, they have only limited applicability in service design. They typically lack the situational context and usually do not co-create boundary objects, such as personas or journey/system maps. This often leads to limited informative value as results depend solely on the moderated discussion and are biased by issues like observer effect, group think, social desirability bias, etc. 33

Preparation: Focus groups are mostly carried out in a rather informal setting, like a meeting room or a special room where researchers observe the situation through a one-way mirror.

Use: Researchers often ask only an initial question and then observe the group discussion and dynamics. Sometimes a researcher acts as a moderator guiding the group through a set of questions.

Expected output: Text (transcripts, notes), audio recordings, photos, videos

Non-Participant Approach: Non-participant observation

Researchers collect data by observing behavior without actively interacting with the participants.

In non-participant observation, researchers do not interact with research participants; they behave like a “fly on the wall.” Research subjects are often customers, employees, or other stakeholders, observed in situations that are relevant to the research question. Often, non-participant observation is used to level out researcher biases in other methods and to reveal differences between what people say and what they actually do.

Preparation: Plan who will do the research, when, where, and with whom. When researchers do covert non-participant observation, they sometimes pretend to be customers or passers-by, or even use one-way mirrors, to minimize the risk of the “observer effect.” 34

Use: During non-participant observations, it is important to observe not only what people are doing (for example by interpreting their body language and gestures), but also what people are not doing (perhaps ignoring instructions or refraining from asking for help or assistance).

Expected output: Text (field notes), photos, videos, audio recordings, sketches, artifacts, statistics (e.g., counting customers per hour)

Non-Participant Approach: Mobile ethnography

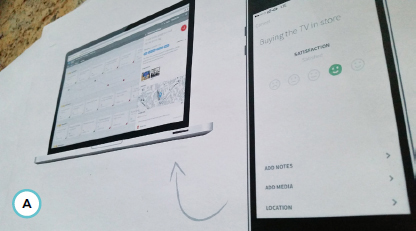

Multiple self-ethnographies aggregated in a guided research setting where data is collected with mobile devices such as smartphones. 35

A mobile ethnography research project can include anywhere from a handful to thousands of participants. Usually users, customers, or employees are included as participants, self-documenting their own experiences on their own smartphones with text, photos, videos, or quantitative evaluations, as well as date, time, and location. Researchers can review, synthesize, analyze, and export the collected data in real time.

Preparation: Plan to offer incentives for your participants (recruiting is often the hardest part!). In your invitation, provide clear and short instructions on how to join the project and how to document. Define questions for your participant profile so that you can cluster participants into groups matching your personas.

Use: Mobile ethnography works well for longer research over one or a few days. Once you have started your data collection, you can start to synthesize and analyze.

Expected output: Text, photos, videos, audio recordings, date and time information, geolocation data, statistics of participant profiles

Non-Participant Approach: Cultural probes

Selected research participants collect packages of information based on specific tasks given by researchers. 36

In cultural probes, research participants are asked to self-document certain experiences with field notes and photos, and/or to collect relevant artifacts. Cultural probes are often also done virtually using online diary platforms or mobile ethnography apps.

Preparation: Prepare and send a package to participants, which might include a set of instructions, a notebook, and a single-use camera. You might want to prepare a simple script for participants to follow, or instruct them to take photos of how they use specific products in various contexts.

Use: The aim of cultural probes is to gain unbiased data that has been collected by participants themselves in context, without having a researcher present. They help researchers to understand and overcome cultural boundaries and bring diverse perspectives into a design process. Cultural probes are often a mix of various approaches and may be combined with in-depth interviews to review the collected data retrospectively. They can include diaries kept over a day, a week, or even several years.

Expected output: Text (self-documented notes, diaries), photos, videos, audio recordings, artifacts

Participants use a mobile ethnography app on their smartphones to report on and evaluate their experiences step by step. Researchers see the data in real time and can start analyzing it immediately. 37

The content of a cultural probe (the observation package) to research flight travel experiences. 38

Even though age and gender are always an easy starting point for a persona, demographics might be quite misleading. Instead, think of factors that differentiate the groups you would like to represent with your personas.

Co-Creative Workshop: Co-creating personas

Using the know-how of a group of invited participants to create a set of personas.

The quality of results of a co-creative persona workshop depends on the research data you bring to the workshop and on how much participants know about the group of people you want to exemplify with personas – for example, a workshop with frontline employees is often quite useful to create personas of customers. For less biased results, avoid inviting only people with abstract knowledge of the subject matter. The results might look convincing, but often they are very biased.

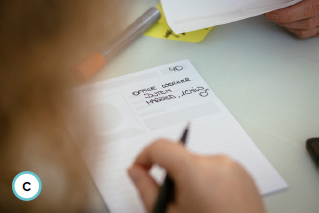

Preparation: Invite and incentivize your workshop participants and describe the aim of the workshop. Select participants with in-depth knowledge of the stakeholder group you are creating the persona for. Write a facilitation agenda to create a safe space during the workshop. 39

Use: These workshops often follow a structure similar to this: welcome and split into smaller groups, create initial personas, present and cluster, discuss and merge, visualize and validate, iterate.

Expected output: Drafts of personas (physical or digital), workshop photos, quotes of participants (audio or text), videos of workshop progress

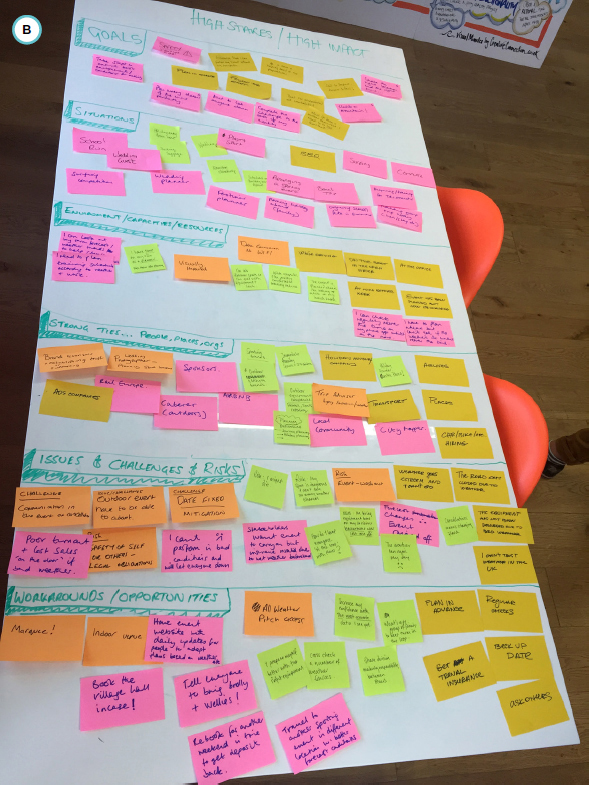

Co-Creative Workshop: Co-creating journey maps

Using the know-how of a group of invited participants to create one or more journey maps or service blueprints.

Invite participants who have solid knowledge about the experience you are mapping. If you want to create a journey map about customer experiences, this might be customers (yes, real ones!) and/or frontline employees. The outcomes of co-creative workshops are often assumption-based. The results might look convincing, but often they are biased. These outcomes should be understood as tools in development, as a common starting point to design the research process, or to evaluate and enhance collected data.

Preparation: Think about inviting workshop participants with either a shared perspective (such as customers of a particular target group) or from differing perspectives (such as customers of various target groups or customers and employees). Clearly communicate the scope of the journey map, such as a high-level journey map vs. a more detailed map of one specific situation within a high-level journey map.

Use: Define your main actor and journey scope, welcome and split into smaller groups, identify stages and steps, iterate and refine, add perspectives such as the emotional journey (optional), discuss and merge, iterate.

Expected output: Drafts of journey maps (physical or digital), workshop photos, quotes of participants (audio or text), videos of workshop progress

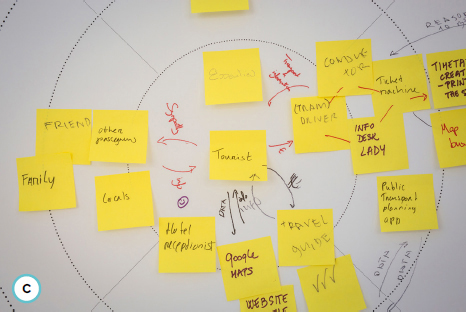

Co-Creative Workshop: Co-creating system maps

Using the know-how of a group of invited participants to create system maps. 40

For each system map, define a specific perspective and invite participants with sound knowledge of it. By deciding whom to invite and whom to leave out, you also decide which perspectives are included. Constantly challenge your assumptions with solid research. Over time, assumption-based maps should develop into research-based ones.

Preparation: In addition to the know-how of the workshop participants, a second important factor is the qualitative research you do beforehand and bring to the workshop, for example through a research wall.

Use: Setting a clear scope and situational context helps workshop participants to get on the same page. Often these workshops follow a structure like this: welcome and split into smaller groups, create initial stakeholder maps (1. list stakeholders, 2. prioritize stakeholders, 3. visualize stakeholders on map, 4. illustrate relationships between stakeholders), present and compare, discuss and merge, iterate and validate, test different scenarios within the ecosystem (optional).

Expected output: Drafts of system maps (physical or digital), workshop photos, quotes of participants (audio or text), videos of workshop progress

Methods of Data Visualization, Synthesis, and Analysis

This section introduces methods used in service design to visualize, synthesize, and analyze data collected as described in the previous section – sometimes this process is also called “sensemaking.” This is just a brief overview; there are many more approaches to visualize data, and plenty of appropriate ways to communicate the data and insights. Also, often the same method is known by several (perhaps inconsistently used) names. If you want to dig deeper, there is plenty of literature, and for some methods even whole books with detailed descriptions and examples.

Visualizations, such as a journey map, help participants to understand the context of each step and enable them to navigate more quickly.

Paper templates often help participants to get started and to take a task seriously. The more familiar they become with a tool, the less important templates are for them.

Value network maps can quickly become quite messy. Try to give a map a specific focus to keep an overview.

This section presents eight methods of data visualization and analysis:

→ Building a research wall

→ Creating personas

→ Mapping journeys

→ Mapping systems

→ Developing key insights

→ Generating jobs-to-be-done insights

→ Writing user stories

→ Compiling research reports

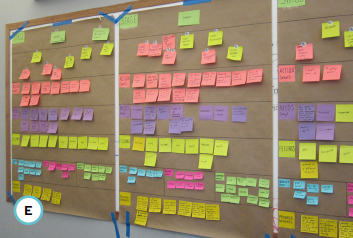

Building a research wall

Synthesizing and analyzing research data through a visual arrangement of research data on a wall. 41

You can imagine a research wall as a more complex version of how detectives structure their crime scene data in many thrillers (think of any CSI episode). You’ll find many types of data on these walls (quotes, photos, screenshots of websites or videos, statistics, artifacts, etc.). This enables you to identify patterns within your data, while also providing a place to share your research with others as it develops.

Expert Tip


“To take personas further, use a persona’s goals, issues, and unmet user needs to (1) stimulate scenarios and ideation sessions on iterations of an existing service or develop a new service, or (2) to guide recruitment in ethnographic studies, as a starting point to create journey maps or build service blueprints from.”

— Phillippa Rose

Preparation: Prepare a wall space or large cardboard sheets to hang up your research data. Also, think about who should join you to create a research wall.

Use: Hang the material on the wall and start synthesizing data by clustering it according to specific topics, like certain customer segments, common problems, steps along the journey map, etc. Name these clusters and look for connections between clusters as well as connections between single materials (be aware of a potential confirmation bias). The various patterns you identify can then be further explored with tools like personas, journey maps, system maps, key insights, and so on – all of which also become part of the research wall.

Comment

“We started to document all our core customer experiences with current-state journey maps based on quantitative and predominantly qualitative research. Now that we know where we are, we can make educated decisions on what exactly needs improvement and why.”

— Anke Helmbrecht

Expected output: A visual arrangement of research data

Creating personas

Creating a rich description of a specific fictional person as an archetype exemplifying a group of people, such as a group of customers, users, or employees. 42

Personas focus on particular types of customer motivations and behaviors, and help a team build empathy with a group of people in order to create solutions that address real problems. You can create them for existing market segments or to challenge an existing segmentation.

Preparation: Persona templates or empathy maps are sometimes helpful when creating personas. You often mix different approaches – for example, starting with assumption-based personas developed during a co-creative workshop with frontline staff, then enriching and backing these with research.

Use: Create approximately three to seven core personas representing your main market segments. Following the principle of “design for the average – test with extremes,” create many more “edge-of-the-curve” personas to test ideas and prototypes with people from more extreme ends of your user spectrum.

Expected output: Personas

Using foam boards as research walls helps a research team to keep research data (such as quotes, photos, screenshots, artifacts, etc.) with them when they have to move between rooms.

Structure your research wall by clustering and adding headings to the different sections.

Starting personas with demographics, like age, gender, nationality, job, and so on, carries the risk of stereotyping. Instead, try to build your personas from your research, perhaps starting with behavioral patterns you find within your data.

Mapping journeys

Visualizing specific experiences of a main actor, often exemplified by a persona, over time.

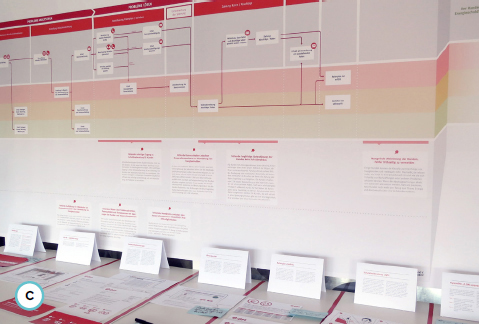

Journey maps can visualize 43 existing experiences (current-state journey maps) or planned experiences (future-state journey maps). The basic structure of a journey map consists of steps and stages defining the scale of the visualized experience, from a high-level journey map that shows an end-to-end experience to a detailed journey map showing only a few minutes.

Preparation: Even though assumption-based journey maps are relatively quick and easy to create, they can be very misleading. If you start with assumption-based journey maps, constantly challenge your assumptions. Over time, assumption-based journey maps should evolve into research-based ones that are firmly grounded in research data (beware of confirmation bias). 44

Use: Often a process to create a journey map looks like this: prepare and print out data, choose a main actor (persona), define scale and scope, create steps, iterate and refine, add lanes.

Expected output: Journey maps

A journey map visualizing two different scales of daily and weekly user activities. The map includes a sketched storyboard, an emotional journey, and user needs. 45

Journey mapping software helps you to quickly create professional journey maps with dispersed teams. 46

People outside your core team often find system maps hard to understand. Reduce them to the most important facts when you use them for communication.

Using templates or a specific structure helps to develop key insights, but constantly ask yourself if every aspect of your insight is specific and clear enough and if it is backed by sufficient research data.

Mapping systems

Visualizing the ecosystem around services and physical or digital products.

“System maps” is an umbrella term for different visualizations, such as stakeholder maps, value network maps, or ecosystem maps. All of these can be created from various perspectives. A system looks different from a customer’s perspective than from an internal business perspective. System maps have obvious relationships to other tools in service design, such as personas and journey maps. 47

Preparation: As system maps can become very messy, it is important to define a clear focus for a map. Don’t try to visualize every stakeholder you can think of on the same stakeholder map; it’s more useful to make various maps for different purposes. System maps are an excellent tool to synthesize research data, so it is useful to prepare research data beforehand. Remember that research is iterative, and it makes sense to use these maps to find gaps in your research, which you can investigate in later research iterations.

Use: Often, creating system maps looks like this: prepare and print out data, collect stakeholders, prioritize stakeholders, visualize stakeholders on the map, illustrate relationships between stakeholders (optional), find gaps and iterate.

Expected output: System maps

Developing key insights

Summarizing main findings in a concise and actionable format for communication within and across project teams. 48

Key insights are built on research and supported by raw data. They often include a situational context and an intended outcome, as well as a restriction, obstacle, or friction. First insights often emerge from patterns you notice while collecting data, building your research wall, or coding your data. If you don’t have enough data to critically reflect on an assumption, collect more data. Design research is iterative!

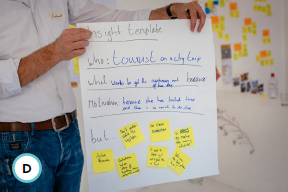

Preparation: There are many ways (and templates) to formulate insights, such as: … [actor] wants to … [action] because … [motivation], but … [tension]. It helps to write down initial ideas for insights at any stage of the research process and then critically reflect on them using your research data.
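For teams that keep their research data in a spreadsheet or small script, the insight template above can also be held as a simple data structure. The Python sketch below is only an illustration: the class name `KeyInsight`, the `evidence` list of data IDs, and the two-item threshold in `needs_more_data` are hypothetical choices, not part of any established tool. It fills the template and flags insights that are backed by too little data, mirroring the advice to reflect on insights critically using your research data.

```python
from dataclasses import dataclass, field


@dataclass
class KeyInsight:
    """A key insight phrased with the template
    '[actor] wants to [action] because [motivation], but [tension]'."""
    actor: str
    action: str
    motivation: str
    tension: str
    # Hypothetical IDs of raw research data (quotes, photos, notes) backing the insight
    evidence: list[str] = field(default_factory=list)

    def phrase(self) -> str:
        """Fill the template with this insight's parts."""
        return (f"{self.actor} wants to {self.action} "
                f"because {self.motivation}, but {self.tension}.")

    def needs_more_data(self, minimum: int = 2) -> bool:
        """Flag insights backed by fewer data points than an (assumed) minimum."""
        return len(self.evidence) < minimum


# Hypothetical example, not taken from a real project
insight = KeyInsight(
    actor="A commuter",
    action="buy a ticket in under a minute",
    motivation="her train is about to leave",
    tension="the ticket machine only accepts cash",
    evidence=["interview-03", "photo-12"],
)
print(insight.phrase())
print(insight.needs_more_data())  # False: two data points meet the assumed minimum
```

Any threshold for "enough data" is a team decision; the point is to keep each insight explicitly linked to the data it rests on.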

Use: Often, key insights are developed from initial assumptions, hypotheses, and intermediate insights (try to avoid confirmation bias). The process often goes like this: prepare and print out data; write initial insights; cluster, merge, and prioritize; link key insights to data; find gaps and iterate. Key insights should be carefully phrased, as they will serve as points of reference for the further design process.

Expected output: Several key insights

Generating jobs-to-be-done insights

Summarizing the bigger picture of what customers want to achieve when they use certain services or physical/digital products.

Jobs to be done (JTBD) is a specific way to formulate insights based on a framework by Clayton Christensen. 49 JTBD describes what a product helps the customer to achieve. It can be formulated for an entire physical/digital product or service (the main aim behind a journey map). Alternatively, formulate it for certain steps within a journey map by asking yourself what a customer or user wants to get done and adding your discoveries as an additional lane on a journey map. JTBD can help a team to break away from a current solution and discover new solutions based on what customers really want to achieve.

Preparation: Often, JTBD are developed based on this structure: When … [situation], I want to … [motivation or forces], so I can … [expected outcome]. To phrase JTBD, prepare and print out research data, personas, and journey maps.
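To make the structure and the "additional lane" idea concrete, here is a minimal Python sketch. It assumes a journey map is stored as an ordered list of steps, each with named lanes; the function names `phrase_jtbd` and `add_jtbd_lane` and the example steps are hypothetical. Steps without a JTBD statement get an empty entry, which marks a gap to investigate in the next research iteration.

```python
def phrase_jtbd(situation: str, motivation: str, outcome: str) -> str:
    """Fill the structure 'When [situation], I want to [motivation or forces],
    so I can [expected outcome].'"""
    return f"When {situation}, I want to {motivation}, so I can {outcome}."


def add_jtbd_lane(journey: list[dict], jobs: dict[str, str]) -> list[dict]:
    """Add a 'job to be done' lane to every step of a journey map.

    `jobs` maps step names to JTBD statements; steps without one get an
    empty entry, marking a gap. Modifies the steps in place.
    """
    for step in journey:
        step["lanes"]["job to be done"] = jobs.get(step["step"], "")
    return journey


# Hypothetical journey map: ordered steps, each with named lanes
journey = [
    {"step": "Receive bill", "lanes": {"emotion": "worried"}},
    {"step": "Contact supplier", "lanes": {"emotion": "anxious"}},
]
jobs = {
    "Receive bill": phrase_jtbd(
        "my bill arrives", "understand what I owe", "plan my monthly budget"
    ),
}
add_jtbd_lane(journey, jobs)
for step in journey:
    print(step["step"], "->", step["lanes"]["job to be done"] or "(gap)")
```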

Use: JTBD insights can be created iteratively together with data collection and can follow a process like this: write down initial JTBD insights; cluster, merge, and prioritize; link JTBD insights to data; find gaps and iterate.

Expected output: Jobs-to-be-done insights

Writing user stories

Summarizing what customers or users want to be able to do; used to bridge design research with defining requirements for software development. 50

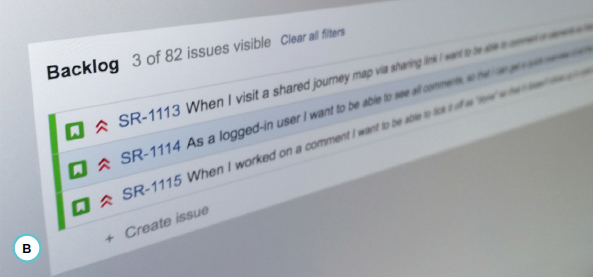

User stories are used in software development to define requirements from a user perspective, instead of more product-based requirements documents. User stories are often formulated like this: As a … [type of user/persona/role], I want … [action], so that … [outcome]. In service design, they are used to connect design research with actionable input for IT development to turn insights and/or ideas into working software. User stories can also be used beyond software development to define the requirements of any physical/digital product or service.

Preparation: Just as journey maps have different zoom levels, software requirements also have different scales. While user stories typically describe detailed requirements, a set of user stories can be combined into an “epic,” a longer, less detailed description of the big picture of what software can do. It’s important to define this scale level beforehand.

Use: User stories should be formulated without IT-specific language, using simple, concise words, so that everyone can understand them. Writing user stories often follows a process like this: write initial user stories, cluster user stories into epics, link user stories to data, find gaps and iterate.
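The process of clustering user stories into epics, linking them to data, and finding gaps can be sketched as a small data model. The Python example below is a hypothetical illustration, not the format of any particular backlog tool: `UserStory`, `Epic`, the `evidence` IDs, and the example stories are all assumptions. The `gaps` method lists stories not yet linked to research data, which are candidates for the next iteration.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    """A user story: 'As a [role], I want [action], so that [outcome].'"""
    role: str
    action: str
    outcome: str
    # Hypothetical IDs of the research data the story is linked to
    evidence: list[str] = field(default_factory=list)

    def phrase(self) -> str:
        return f"As a {self.role}, I want {self.action}, so that {self.outcome}."


@dataclass
class Epic:
    """A longer, less detailed requirement that groups related user stories."""
    title: str
    stories: list[UserStory] = field(default_factory=list)

    def gaps(self) -> list[UserStory]:
        """Stories not yet linked to research data: candidates for another iteration."""
        return [story for story in self.stories if not story.evidence]


# Hypothetical epic with three user stories
epic = Epic(
    title="Pay in installments",
    stories=[
        UserStory("customer", "to see my outstanding balance",
                  "I know how much I owe", ["interview-07"]),
        UserStory("customer", "to split a payment into installments",
                  "I can keep up with other expenses", ["interview-02", "survey-q4"]),
        UserStory("customer", "a reminder before an installment is due",
                  "I do not miss a payment"),
    ],
)
for story in epic.stories:
    print(story.phrase())
print("Gaps:", [story.action for story in epic.gaps()])
```

Note that the stories themselves stay in plain, non-technical language; only the surrounding structure is code.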

Expected output: User stories

Compiling research reports

Aggregating the research process, methods, research data, data visualizations, and insights. Reports are often a required deliverable.

Research reports can have many forms, from written reports to more visual collections of photos and videos. Depending on the project, a research report can serve various purposes, such as providing actionable guidelines to improve a physical/digital product or service, a “shock report” to get internal buy-in for a service design project, proof of work that justifies the budget spent on research, a compendium of research data that can be reused in other projects, and more.

Preparation: Have your research process and your research data, as well as different visualizations (personas, journey maps, system maps) and insights (key insights, JTBD, user stories), at hand. Think about who you could invite to peer-review your report.

Use: Write a first draft of your research report. Ask yourself: who was involved, which methods and tools did you use, and how many iterations did you do? Add a summary of your key findings and key visualizations, add raw data as evidence, and use indices to point to the larger body of data these findings are based on. Then invite other researchers or participants of the research to peer-review your report and iterate.

Expected output: Research reports

Jobs-to-be-done integrated as an additional lane in a journey map.

Example of a backlog in software development for an “epic” (i.e., a new feature) consisting of three user stories.

Cases

The following five case studies provide examples of how service design research is done in practice: how to apply ethnography to gain actionable insights in a service design project (“Case: Applying Ethnography To Gain Actionable Insights”), how to use both qualitative and quantitative research in service design projects (“Case: Using Qualitative and Quantitative Research in Service Design”), how to develop valuable personas and use these in a service design project (“Case: Developing and Using Valuable Personas”), how to illustrate research data with journey maps and use these in a service design project (“Case: Illustrating Research Data With Journey Maps”), and how to use current-state (as-is) and future-state (to-be) journey mapping in service design (“Case: Current-State (as-is) and Future-State (to-be) Journey Mapping”).

5.4.1 Case: Applying ethnography to gain actionable insights

Zahlhilfe program: An intersectoral cooperation to prevent electricity cutoffs

— Nina Weschenfelder, Senior Service Designer, minds & makers

— Michael Wend, Senior Customer Experience Manager, E.ON

5.4.2 Case: Using qualitative and quantitative research in service design

— Cat Drew, Senior Policy Designer, Policy Lab

— Laura Malan, Senior Consultant, Uscreates

5.4.3 Case: Developing and using valuable personas

— Phillippa Rose, Service Designer and Facilitator, current.works

5.4.4 Case: Illustrating research data with journey maps

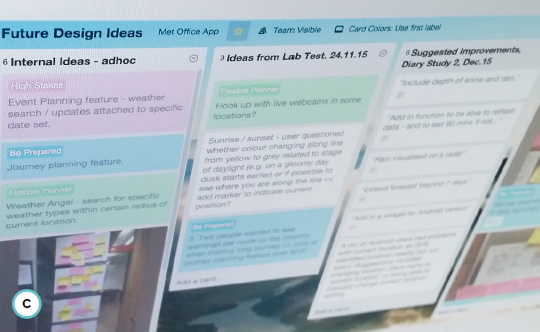

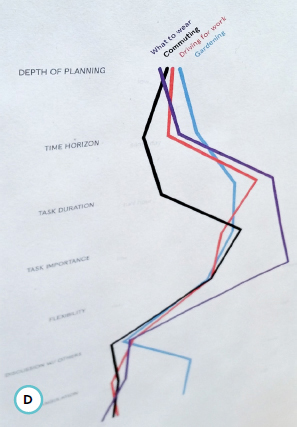

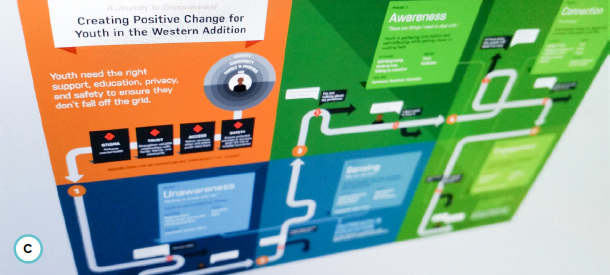

Promoting youth mental health: The impact of mapping the journey

— Jamin Hegeman, Design Director, Adaptive Path

5.4.5 Case: Current-state (as-is) and future-state (to-be) journey mapping

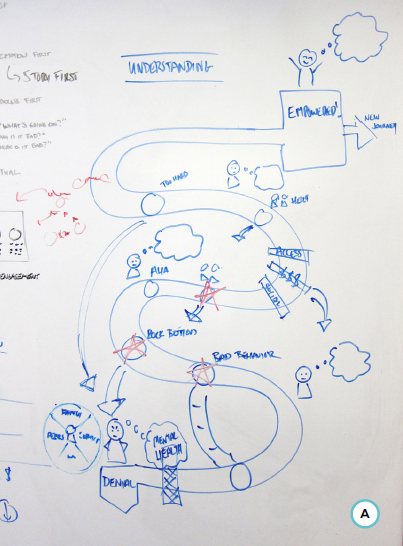

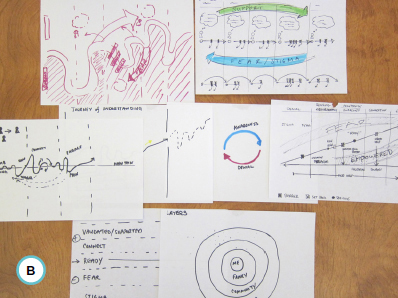

The bigger picture: Projects building up to more long-term and strategic value

— Geke van Dijk, Strategy Director, STBY

— Ozlem Dessauer-Siegers, Sr. Service Experience Design Lead, Vodafone

Case: Applying Ethnography To Gain Actionable Insights

Zahlhilfe program: An intersectoral cooperation to prevent electricity cutoffs

AUTHORS

Nina Weschenfelder, Senior Service Designer, minds & makers

Michael Wend, Senior Customer Experience Manager, E.ON

The challenge

Every year, the electricity supply is turned off in approximately 350,000 households in Germany 51 because the electricity bill cannot be paid. For those affected, the effects are dramatic and often lead to further social problems. For energy suppliers, cutting off the supply incurs costs, and it negatively affects the company’s image. Energy-related debts are often just one aspect of a complex debt issue and must therefore be addressed holistically. In view of this, there is no viable alternative to a cooperative effort involving institutions from the public, private, and social sectors.

Project objectives