Appendix C: Grid, Cluster, Utility, and Symmetric Multiprocessing Computing

Grids leverage underutilized CPU power from machines connected to the Internet (or a private network) to run various applications. Clusters are a formal collection of CPU resources (servers normally) connected to a private network for use in computing. At the most fundamental level, when two or more dedicated computers are used together to solve a problem, they are considered a cluster. Utility computing hides the complexity of resource (computers, networks, storage, etc.) management and provides what business wants: utilization on demand. Finally, symmetric multiprocessing (SMP) harnesses the power of N CPUs running in parallel. All of these techniques may be applied to A/V computational problems. The following sections review the four methods.

C.0 GRID COMPUTING

Grid computing is a distributed environment composed of many (up to millions of) heterogeneous computers (nodes), each carrying a small share of the computational load. The power of the Internet enables thousands of cooperating PCs to be connected in a mesh of impressive computational power. By some estimates, most desktop PCs are busy only 5 percent of the time, so why not put these underutilized resources to better use? Even some servers are idle for a portion of each day. A grid assembles and manages the unused storage and CPU power on networked machines to run applications. The node application runs in the background and does not interfere with the primary user applications on the machine.
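The idea of each node carrying a small share of the load can be sketched as a job split into independent work units whose results are combined at the end. The following is a minimal illustration only: the function names (`make_work_units`, `count_primes`, `run_grid_job`) and the prime-counting task are assumptions chosen for this sketch, and the units are processed one after another rather than shipped to real nodes.

```python
from typing import Iterator, Tuple


def make_work_units(start: int, stop: int, unit_size: int) -> Iterator[Tuple[int, int]]:
    """Split the half-open range [start, stop) into independent work units."""
    for lo in range(start, stop, unit_size):
        yield lo, min(lo + unit_size, stop)


def is_prime(n: int) -> bool:
    """Trial division; slow but sufficient for a small illustration."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def count_primes(unit: Tuple[int, int]) -> int:
    """The per-node task: count the primes inside one work unit."""
    lo, hi = unit
    return sum(1 for n in range(lo, hi) if is_prime(n))


def run_grid_job(start: int, stop: int, unit_size: int) -> int:
    """Hypothetical coordinator: hand out units, then combine partial results."""
    # A real grid would send each unit to a different node over the network;
    # here the units are simply processed in sequence.
    partial_counts = [count_primes(u) for u in make_work_units(start, stop, unit_size)]
    return sum(partial_counts)
```

Because no unit depends on another, the units could be processed in any order and on any mix of machines, which is the property that makes a problem a good fit for a grid.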

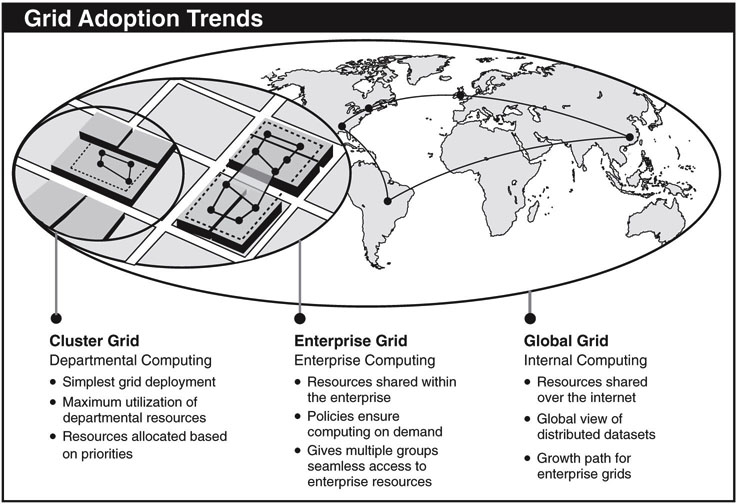

The potential of massively parallel CPU capacity is very attractive to a number of industries. In addition to pure scientific users, the biomedical, financial, and oil exploration industries, among others, are finding grids to be of value. If the computational solution is written with a grid in mind, it can run up to N times faster when, on average, N nodes are working in parallel. Figure C.1 shows trends ranging across local to enterprise to global grid computing. Grid.org (www.grid.org) reports that 2.5 million computers from 200 countries are now tied into one grid for the purposes of cancer research, searching for extraterrestrial life (SETI project at http://setiathome.ssl.berkeley.edu), and other noble causes. For local or enterprise grids, it is possible to define a QoS for the computing power. In a global sense, a guaranteed QoS is problematic, as most of the Internet-connected nodes are contributed by volunteers.
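The N-fold figure is a best case. A common way to estimate what happens when part of a job cannot be parallelized is Amdahl's law, a standard result that this appendix does not itself cite. The sketch below assumes a hypothetical function name, `amdahl_speedup`, and ignores network and scheduling overhead, which on a real grid would reduce the gain further.

```python
def amdahl_speedup(parallel_fraction: float, n_nodes: int) -> float:
    """Estimated speedup under Amdahl's law.

    parallel_fraction: share of the run time that can be spread across nodes (0..1).
    n_nodes: number of nodes working in parallel.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_nodes < 1:
        raise ValueError("n_nodes must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time regardless of node count;
    # only the parallel part shrinks by a factor of n_nodes.
    return 1.0 / (serial_fraction + parallel_fraction / n_nodes)
```

With a fully parallel job, `amdahl_speedup(1.0, 8)` gives 8.0, the ideal N-fold gain. If only 90 percent of the work parallelizes, ten nodes yield a speedup of roughly 5.3 rather than 10.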

FIGURE C.1 Grid computing environments. Concept: Sun.

The Open Grid Forum (OGF) is a community of users, developers, and vendors leading the global standardization effort for grid computing. See www.ogf.org for a great collection of white papers and other references.

C.1 GRID COMPUTING AND THE RIEMANN ZETA FUNCTION

One particularly interesting use of grid computing is related to finding the complex zeros of the Riemann zeta function. In 1859, Bernhard Riemann wrote a mathematical paper giving a formula for the number of primes less than N, work closely related to the so-called prime number theorem (PNT). In this paper he conjectured that all the nontrivial zeros of the zeta function have a real part of 1/2. This is the Riemann Conjecture, more widely known as the Riemann Hypothesis. Since then, mathematicians have attempted to prove it, but without success. It is one of the most difficult problems facing mathematicians today. There is a $1 million prize on offer if you can prove the conjecture (see www.claymath.org/millennium). Sebastian Wedeniwski of IBM used a grid configuration of ~11,600 nodes to find the first trillion complex zeros of the zeta function. All of the zeros found have a real part of 1/2. This is not a proof of the Riemann Conjecture, but it provides tantalizing data to the affirmative.
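For reference, the objects named above can be written compactly. These are the standard textbook statements, not formulas taken from this appendix; here \pi(N) denotes the number of primes not exceeding N and \rho denotes a zero of the zeta function.

```latex
% Zeta function (the series converges for Re(s) > 1 and is extended
% to the rest of the complex plane by analytic continuation)
\zeta(s) = \sum_{n=1}^{\infty} \frac{1}{n^{s}}, \qquad \mathrm{Re}(s) > 1

% Riemann Conjecture: every zero in the critical strip lies on the line Re(s) = 1/2
\zeta(\rho) = 0 \ \text{and} \ 0 < \mathrm{Re}(\rho) < 1
\;\Longrightarrow\; \mathrm{Re}(\rho) = \tfrac{1}{2}

% Prime number theorem
\pi(N) \sim \frac{N}{\ln N} \quad \text{as } N \to \infty
```

A grid search of this kind checks zeros one at a time along the line Re(s) = 1/2, which is why a finite count of verified zeros, however large, cannot settle the conjecture.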

To learn more about Riemann, the zeta function, complex zeros, the PNT, loads of interesting stories, and the quest to solve one of the greatest unsolved problems in mathematics, pick up a copy of the very entertaining book Prime Obsession by John Derbyshire. He treats the problem as a mystery and leads the reader through beautiful gardens of advanced, yet accessible, math to illuminate it.

C.2 CLUSTER¹ COMPUTING

A cluster is a common term meaning independent computers combined into a unified system through software and networking. Clusters are typically used for high-availability (HA) or high-performance computing (HPC) to provide greater computational power than a single computer can provide. The Beowulf project began in 1994 as an experiment to duplicate the power of a supercomputer with commodity hardware and software (Linux).

Today, Beowulf cluster technology is mature and used worldwide for serious computational needs. The commodity hardware can be any of a number of mass-market, standalone compute nodes. Configurations range from two networked computers sharing a file system to 1,024 nodes connected via a high-speed, low-latency network. For workloads that parallelize well, performance improves roughly in proportion to the number of machines added to the cluster.

Class I clusters are built entirely using commodity hardware and software using standard technology such as SCSI/ATA and Ethernet. They are typically less expensive than class II clusters, which may use specialized hardware to achieve higher performance. As Chapter 3B discussed, NAS clusters are very practical for A/V applications. Also, for high-end rendering and film effects work, a cluster is “without parallel.” Clusters are in daily use for computing the world’s most demanding scientific simulations.

C.3 UTILITY AND CLOUD COMPUTING

Next, there is utility computing. In theory, utility computing gives companies greater utilization of data center resources more cost effectively. It is based on flexible computing, clusters, grids, storage, and network capacity that react automatically to changes in computing needs. Utility computing can be localized (enterprise) or global (the Web). The data center of the future should be self-configuring, self-monitoring, self-healing, and invisible to end users.

In 2006, Amazon launched EC2, the Elastic Compute Cloud. This service sells “CPU cycles” over the Web. Users pay only for the CPU cycles and memory they actually consume. There is no recurring subscription fee. EC2 is being used by companies that have wide swings in computing needs but don’t want to own or lease physical hardware. Scale and reliability are key attributes of EC2. Amazon also offers the S3 networked storage service, as do other companies. See http://aws.amazon.com/ec2 for pricing and configuration.
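The appeal of pay-per-use for a company with uneven demand comes down to simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and any rates passed in are placeholders supplied by the reader, not actual EC2 prices (see the URL above for those).

```python
def on_demand_cost(hours_used: float, hourly_rate: float) -> float:
    """Cost of renting capacity only for the hours actually used."""
    return hours_used * hourly_rate


def break_even_hours(fixed_monthly_cost: float, hourly_rate: float) -> float:
    """Monthly usage above which owning or leasing becomes cheaper than renting.

    fixed_monthly_cost: what an owned or leased server costs per month
                        whether it is busy or idle (hypothetical input).
    hourly_rate: the on-demand price per hour (hypothetical input).
    """
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return fixed_monthly_cost / hourly_rate
```

With placeholder figures of 300 per month for an owned server and 0.50 per hour on demand, the break-even point is 600 hours per month; a workload that runs for fewer hours than that is cheaper to rent.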

3tera, Amazon, AT&T, BT, Google, Microsoft, and others have roadmaps to enable the “universal cloud computer.” Although not an authoritative fact, Google is rumored to have plans for ~2 million distributed servers with a combined storage capacity of ~5 million hard drives in 2009. Even if this estimate is high for 2009, it will likely be spot-on within a few years if Google continues to grow. It’s not difficult to imagine a global computer utility akin to the Edison electric grid for all to tap into on demand. Plug into it; use it without worries of where the resource came from or who manages its allocation and reliability.

The networked cloud computer would run cloudware (Software-as-a-Service, SaaS) to meet the needs of many business users and some home users. Bye-bye desktop or enterprise server applications, hello cloudware. Will it happen? Yes. It is happening in 2009, but the scale is relatively small. For example, Salesforce.com currently offers over 700 SaaS applications. The SaaS phenomenon is in its early stages, analysts say. The research firm ITC predicts that SaaS companies, which earned $3.6 billion in revenue in 2006, will earn $14.8 billion by 2011.

For the cloud infrastructure, 3tera offers AppLogic, the first grid operating system designed for Web applications and optimized for transactional and I/O intensive workloads. AppLogic makes it easy, according to 3tera, to move existing Web applications onto a cloud grid without modifications.

The end game is “cloud-everything”: computing, storage, networking, applications, provisioning, and management. This is our future, so watch for it.

C.4 SMP COMPUTING

The final method in our list to increase performance uses N tightly coupled CPUs. This is called symmetric multiprocessing (SMP). Commercial systems commonly support 4 or 8 CPUs, although 64 or more are possible. The programming model for an SMP system relies on dividing a large program into small threads spread out among the N CPUs. The CPUs share a common memory, so a computational speedup of N may occur under a best-case scenario. Linux and Windows support SMP, as do other operating systems.
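The thread-based model described above can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `parallel_sum_of_squares` and the task itself are hypothetical, and the standard CPython interpreter serializes pure-Python bytecode across threads, so this example shows the shape of the programming model (threads writing into memory they all share) rather than a real N-fold speedup.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Optional, Sequence


def parallel_sum_of_squares(values: Sequence[int], n_workers: Optional[int] = None) -> int:
    """Divide one computation among threads that share a common result list."""
    n_workers = n_workers or os.cpu_count() or 1
    # Never start more workers than there are items (and always at least one).
    n_workers = max(1, min(n_workers, len(values) or 1))

    # Shared memory: every thread sees this same list and fills in its own slot.
    partial = [0] * n_workers

    def worker(index: int) -> None:
        # Worker i handles every n_workers-th element, starting at position i.
        partial[index] = sum(v * v for v in values[index::n_workers])

    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Consuming the map waits for all workers and surfaces any exception.
        list(pool.map(worker, range(n_workers)))

    return sum(partial)
```

Each worker writes only to its own slot, so no locking is needed; threads that updated a single shared total would have to synchronize their access to it.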

The most popular entry-level SMP systems use the x86 instruction set architecture and are based on Intel’s and AMD’s multi-CPU processors.

1 Some of the material in this section is paraphrased from the Beowulf Web site, www.beowulf.org.