CHAPTER 1

Networked Media in an IT Environment

CONTENTS

1.2 Motivation Toward Networked Media

1.2.1 Force #1: Network Infrastructure and Bandwidth

1.2.2 Force #2: CPU Compute Power

1.2.3 Force #3: Storage Density, Bandwidth, and Power

1.2.4 Force #4: IT Systems Manageability

1.2.5 Force #5: Software Architectures

1.2.6 Force #6: Interoperability

1.2.7 Force #7: User Application Functionality

1.2.8 Force #8: Reliability and Scalability

1.2.9 The Eight Forces: A Conclusion

1.3 Three Fundamental Methods of Moving A/V Data

1.4 Systemwide Timing Migration

1.5 Can “IT” Meet the Needs of A/V Workflows?

1.6 Advantages and Disadvantages of Methods

1.7 It’s A Wrap: Some Final Words

1.0 INTRODUCTION

Among his many great accomplishments, Sir Isaac Newton discovered three fundamental laws of physics. Law number one is often called the law of inertia and is stated as follows: “Every object in a state of uniform motion remains in that state unless an external force is applied to it.”

By analogy, this law may be applied to the recent state of A/V system technology. The traditional methods (state of uniform motion) of moving video [serial digital interface (SDI), composite …] and storing video (tape, VTRs) assets are accepted and comfortable to the engineering and production staff, fit existing workflows, and are proven to work. Some facility managers feel, “If it’s not broken don’t fix it.” Ah, but the second part of the law states “… unless an external force is applied to it.” So, what force is moving A/V systems today into a new direction—the direction of networked media? Well, it is the force of information technology (IT)1 and all that is associated with it. Is this a benign force? Will its muscle be beneficial for the broadcast and professional A/V production businesses? What are the advantages and trade-offs of this new direction? These issues and many more are investigated in the course of this book. First, what is networked media?

1.1 WHAT IS NETWORKED MEDIA?

The term network in the context of our discussions is limited to a system of digital interconnections that communicate, move, or transfer information. This primarily includes traditional IT-based LAN (Ethernet in all forms), WAN (Telco-provided links), and Fibre Channel network technologies. Some secondary linkages such as IEEE-1394, USB, and SCSI are used for very short haul connectivity. The secondary links have limited geographical reach and are not as fully routable and extensible as the primary links.

In contrast to traditional A/V equipment,2 networked media relies on technology and components supplied by IT equipment vendors to move, store, and manipulate A/V assets. With all respect to the stalwart SDI router, it is woefully lacking in terms of true networkability. Only by Herculean feats can SDI links be networked in similar ways to what Ethernet and Internet Protocol (IP) routing can offer.

The following fundamental methods and concepts are examples of networked media.

• Direct-to-storage media ingest, edit, playout, process, and so on

• 100 percent reliable file transfer methods

• A/V streaming over IT networks

• Media/data routing and distribution using Ethernet LAN connectivity, Fibre Channel, WAN, and other links with appropriate switching

• Networkable A/V components (media clients): ingest ports, edit stations, data servers, caches, playout ports, proxy stations, controllers, A/V process stations, and so on

• A/V-as-data archive; not traditional videotape archive

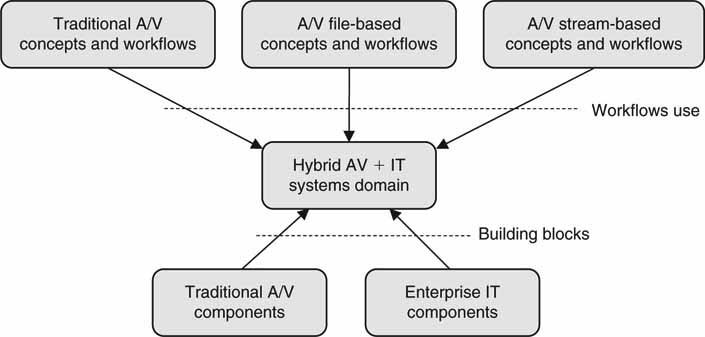

For the most part, file-based technology and workflows (so-called tapeless) use networked media techniques. So, file-based technology is implemented using elements of AV + IT systems and is contrasted to stream-based throughout this book. Also, the AV/IT systems domain is a superset of the file-based concepts domain. Figure 1.1 illustrates the relationships between the various actors in the AV/IT systems domain.

FIGURE 1.1 Professional video system components.

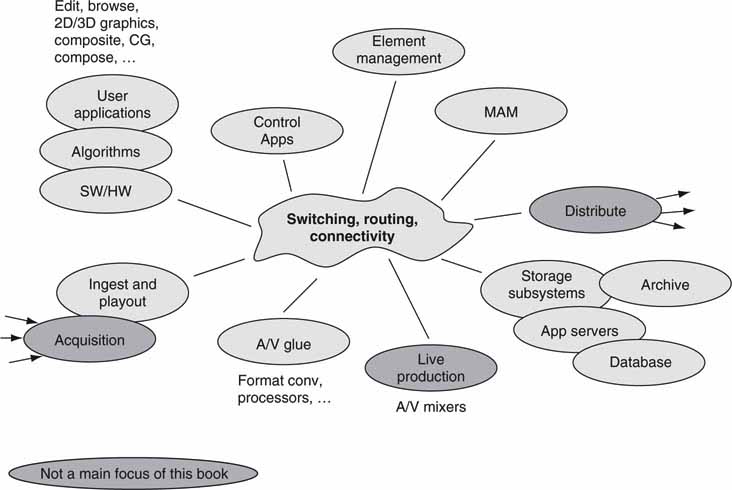

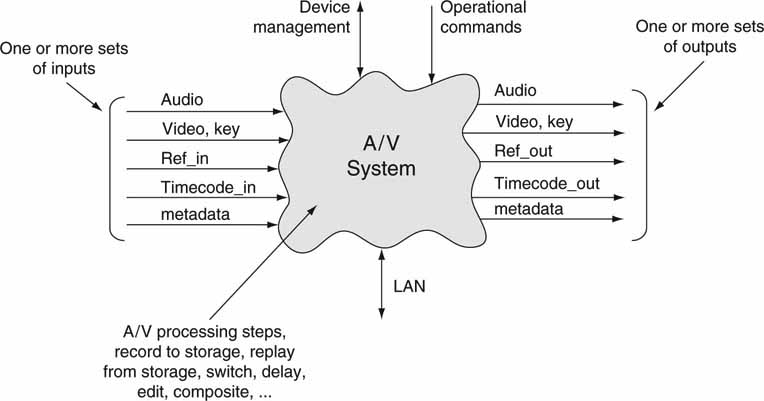

The world of networked media spans from a simple home video network to large broadcast and postproduction facilities. There are countless applications of the concepts in the list just given, and many are described in the course of the book. We will concentrate on the subset that is the realm of the professional/enterprise (and prosumer) media producer/creator. Figure 1.2 illustrates the domain of the general professional video system, whether digital or not.

FIGURE 1.2 Switching, routing, connectivity.

The components are connected via the routing domain to create an unlimited variety of systems to perform almost any desired workflow. Examples of these systems include the following:

1. Analog based (analog tape + A/V processing + analog connectivity)

2. Digitally based (digital tape + A/V processing + digital connectivity)

3. Networked based (data servers + A/V processing + networked connectivity)

4. Hybrid combinations of all the above

The distinction between digitally based and networked based may seem inconsequential, as networks are digital in nature. Think of it this way: all networks are digital, but not all digital interconnectivity is networkable. The ubiquitous SDI link is certainly digital, but it is not easily networkable. Over the course of discussions, our focus highlights item #3 as primary, with the others taking on supporting roles. Items #1 and #2 are defined for our discussions as “traditional A/V” compared to item #3, which is referred to as “AV/IT or IT-based AV” throughout this book.

Again, looking at Figure 1.1, most of the components may be combined in various ways to make up an IT-based professional video system. However, three elements have extended applications beyond our consideration. The world of media acquisition and distribution is enormous and will not be considered in all its glory. Also, media distribution methods using terrestrial RF broadcast, cable TV networks, the Web, and satellite are beyond our scope. Additionally, live (sporting events, news, etc.) production methods (field cameras, vision mixers) fall into a gray area in terms of the application of IT. However, most new field cameras don’t use videotape; instead, they use file-based optical disc or flash memory for storage. These offer nonlinear access and network ports.

1.2 MOTIVATION TOWARD NETWORKED MEDIA

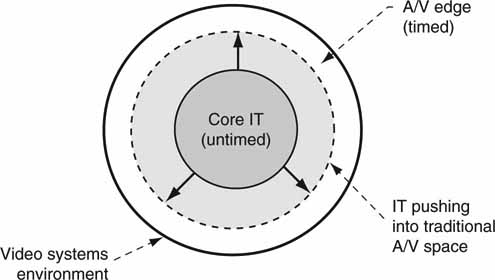

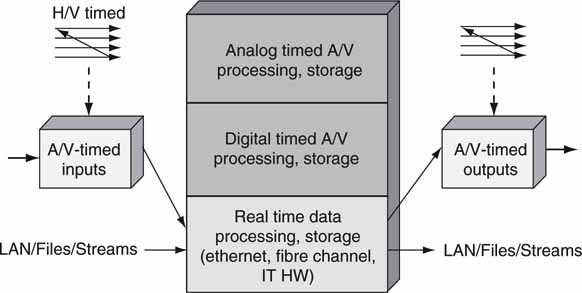

Over the past few years, there has been a gradual increase in new A/V products that steal pages from the playbook of IT methods. Figure 1.3 shows the changing nature of video systems. At the core are untimed, asynchronous IT networks, data servers, and storage subsystems. At the edges are traditional timed (in the horizontal and vertical raster-scanning sense) A/V circuits and links that interface to the core. The core is expanding rapidly and consuming many of the functionalities that were once performed solely by A/V-specific devices. This picture likely raises many questions in your mind. How can not-designed-for-video equipment replace carefully designed video gear? How far can this trend continue before all notion of timed video has disappeared? What is fueling the expansion? Will the trend reverse itself after poor experiences have accumulated? Our discussions will answer these questions.

FIGURE 1.3 The expansion of the IT universe into A/V space

There is no single motivational force responsible for the shift to IT media. There are at least two levels of motivational factors: business related and technology related. At the business level there is what may be called the prime directive. Simply put, owners and managers of video and broadcast facilities are demanding, “I want more and better but with less.” That is a tall order, but this directive is driving many purchasing decisions every day. More what? More compelling content, more distribution channels, more throughput. Better what? Better quality (HD, for example), more compelling imagery, better production value, better branding. Less what? Less capital spending, less ongoing operational cost, fewer maintenance headaches. All these combine to create value and the real business driver—more profit. Of course, there are many aspects to more/better/less, but let us focus our attention on the technical side of the operations. If we want to achieve more/better/less, the technology selection is key. The following sections examine this aspect.

Of course, there are issues with the transition to the AV/IT environment from the comfortable world of traditional A/V video. All is not peaches and cream. The so-called move to IT has lots of baggage. The following sections focus on the positive workflow-related benefits of the move to IT. However, in Chapter 10, several case studies examine real-world examples of those who took the bold step to create hybrid IT and A/V environments. In that chapter you will feel the pains and joys of the implementers on the bleeding edge. In that consideration we examine the cultural, organizational, operational, and technical implications of the move to IT.

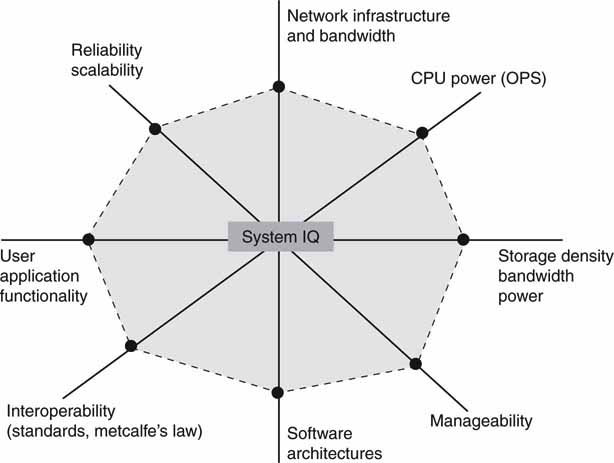

At least eight technical forces are combining to create a resulting vector that is moving media systems in the direction of IT. Let us call the area enclosed by the boundary contour of Figure 1.4 the system IQ. This metric is synthetic, but consider the area (bigger is better) as a measure of a system’s “goodness” to meet or exceed a user’s requirements. Each of the eight axes is labeled with one of the forces. Let us devote some time to each force and add insight into their individual significance. Also, for each force, a measure of workflow improvement due to the force is described. After all, without an improvement in cost savings, quality, production value, resource utilization, or process delay, a force would be rather feeble. Although the forces are numbered, this is not meant to imply a priority to their importance.

FIGURE 1.4 Eight forces enabling the new AV/IT infrastructure.

1.2.1 Force #1: Network Infrastructure and Bandwidth

The glue of any IT system is its routing and connectivity network. The faster and wider the interconnectivity, the more access any node has to another node. But of what benefit is this to a media producer? What are the workflow improvements? Networks break the barrier of geography and allow for distributed workflows that are impossible using legacy A/V equipment. For example, imagine a joint production project with collaborating editors in Tokyo, New York City, and London (or among different editors in a campus environment). Over a WAN they can share a common pool of A/V content, access the same archive, and creatively develop a project using a coordinated workflow management system. File transfer is also enabled by LANs and WANs. Does file transfer improve workflow efficiency? Consider the following steps for a typical videotape-based copy and transfer cycle:

1. Create a tape dub of material—delay and cost.

a. Check quality of dub—delay and cost.

b. Separately package any closed caption files, audio descriptive narration files (SAP channel), and ratings information.

2. Deliver to recipient using land-based courier—delay and cost.

3. Receive package, log it, and distribute to end user—delay mainly.

a. Integrate the closed caption and descriptive narration ready for playout.

4. Ingest into archive or video server system (and enter any metadata)—delay and cost.

a. QA ingested material—delay and cost.

5. Archive videotape—cost to manage and store it, format obsolescence worries.

THE PERFECT VIDEO SYSTEM

The late itinerant Hungarian mathematician Paul Erdos developed the idea of “The Book of Mathematical Proofs” written by God. In his spare time, God filled it with perfect mathematical proofs. For every imaginable mathematical problem or puzzle that one can posit, the book contains a correspondingly elegant and beautifully simple proof that cannot be improved upon. Erdos imagined that all the proofs developed by mere mortal mathematicians could only hope to equal those in the “Book.” We too can imagine a similar book filled with perfectly ideal video systems designed to match all the requirements of their users. Of the many architectural choices, of the many equipment preferences, and of the many design decisions, our book would contain a video system that could not be improved upon for a given set of user workflow requirements. True, such a book is a dream. However, many of the principles discussed in these chapters would make up the fabric and backbone of our book.

It is obvious that the steps are prone to error, are costly, and add delay. Let us look at the corresponding file transfer workflow:

1. Locate target file(s) to transfer.

2. Initiate and transfer file(s) to end station—minimum delay for transfer (seconds to hours, depending on desired transfer speed).

Additionally, file-associated metadata are included in the transfer, thereby eliminating another cause of error: manual metadata logging. The integrity of the transferred file is guaranteed to be 100 percent accurate.
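The "seconds to hours" range quoted in step 2 follows directly from file size and usable link rate. The short Python sketch below estimates that delay. The function name, the 50 GB example file, and the 80 percent link-efficiency default are illustrative assumptions, not figures from any standard or product.

```python
def transfer_time_seconds(file_size_gb: float, link_mbps: float,
                          efficiency: float = 0.8) -> float:
    """Estimate the time needed to move one file across a network link.

    file_size_gb -- file size in decimal gigabytes (1 GB = 8,000 megabits)
    link_mbps    -- nominal link rate in megabits per second
    efficiency   -- assumed usable fraction of the nominal rate (hypothetical)
    """
    if file_size_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, rate > 0, and 0 < efficiency <= 1")
    megabits = file_size_gb * 8_000
    return megabits / (link_mbps * efficiency)


if __name__ == "__main__":
    file_gb = 50  # hypothetical program file
    for label, rate in [("100 Mbps", 100), ("1 Gbps", 1_000), ("10 Gbps", 10_000)]:
        minutes = transfer_time_seconds(file_gb, rate) / 60
        print(f"{label:>8}: about {minutes:.1f} min")
```

Real transfers also depend on protocol overhead, storage speed at both ends, and WAN latency, so the result is best read as a lower bound on delay.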

What are the advantages? No QA process steps—or very short ones—delay cut from days to minutes and guaranteed delivery (not lost or stuck in shipment) to the end user. All in all, file transfer improves the workflow of making a copy and distribution of a program in meaningful ways. The walls of the traditional video facility are crumbling, and the new virtual facility is an anywhere-anytime operation. So what are the technology trends for LANs and WANs?

Not all that long ago, Ethernet seemed stuck indefinitely at 100 Mbps. Fortunately, there is a continual push toward higher network bandwidth and longer reach. Today it is not uncommon to see 10-Gbps Ethernet links and routers in high-end data centers.

Let us take a tangent for a moment and investigate the very high end of connectivity. Using wavelength division multiplexing on optical fiber, researchers at Alcatel-Lucent Bell Labs (4/07) have proven that a WDM optical system is capable of delivering ~50,000 Gbps of data on one strand of fiber. Using 320 different wavelengths, each carrying a 156.25-Gbps payload, they postulate that the astronomical rate of ~50 Tbps is achievable per strand of fiber (see Appendix F).

Let us assume that we have encoded an immense collection of MPEG movies and programs each at 5 Mbps. At this rate, one could transmit 10 million different programs simultaneously on one single fiber. Since most fiber cables carry 200 strands, one properly snaked cable could serve 2 billion homes, each accessing a unique program. Ah, so many channels, so few people. Amazing? Yes, but tomorrow promises even greater bandwidths. What is the point of this hyperbolic illustration? Video distribution and production workflows will be impacted greatly by these major advances in connectivity. Fasten your seat belt and hold on for a wild ride.
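The arithmetic behind this illustration can be checked in a few lines of Python. The constants are the figures quoted in the text; the function and variable names are chosen here purely for illustration.

```python
WAVELENGTHS_PER_STRAND = 320   # wavelengths per fiber strand, as quoted above
GBPS_PER_WAVELENGTH = 156.25   # payload carried by each wavelength
PROGRAM_RATE_MBPS = 5          # assumed rate of one MPEG program
STRANDS_PER_CABLE = 200        # strand count used in the illustration


def strand_capacity_gbps(wavelengths: int, gbps_each: float) -> float:
    """Total payload of one strand in Gbps."""
    return wavelengths * gbps_each


def programs_per_strand(capacity_gbps: float, program_mbps: float) -> int:
    """Whole programs that fit in one strand (1 Gbps = 1,000 Mbps)."""
    return int(capacity_gbps * 1_000 // program_mbps)


if __name__ == "__main__":
    capacity = strand_capacity_gbps(WAVELENGTHS_PER_STRAND, GBPS_PER_WAVELENGTH)
    per_strand = programs_per_strand(capacity, PROGRAM_RATE_MBPS)
    print(f"Per strand: {capacity / 1_000:.0f} Tbps, {per_strand:,} programs")
    print(f"Per cable:  {per_strand * STRANDS_PER_CABLE:,} programs")
```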

1.2.2 Force #2: CPU Compute Power

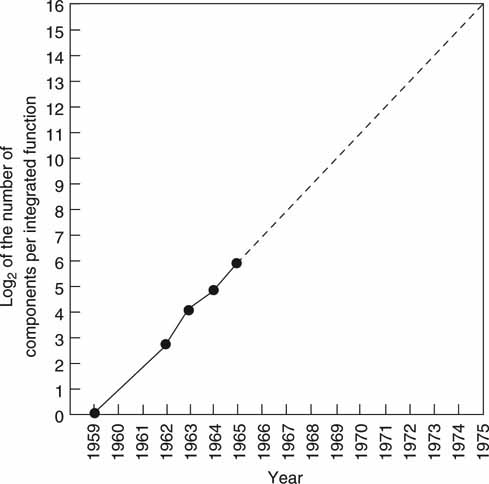

In a nutshell, it all follows from Moore’s law. Simply put, Gordon Moore from Intel stated that integrated circuit density doubles every 18 months. The law had been in effect since 1965 and will likely continue at least until 2015, according to Moore in statements made in 2005. Initially, the doubling occurred every 12 months, but it has slowed to a doubling every ~24 months due to CPU complexity. Figure 1.5 shows the famous diagram redrawn from Moore’s original paper (Cramming more components onto integrated circuits) (Moore 1965) and shows the doubling trend every 12 months. This diagram is the essence of Moore’s law. Early among Intel’s CPUs was the 8008 with 2,500 transistors. As a graduate student at UC Berkeley, the author wrote an 8008 program to control elevator operations. In 2008 the Dual-Core Intel Itanium 2 Processor had 1.72 billion transistors per die (2 CPUs). The Nvidia 9800 class, dual GPU, computes 1.1 trillion operations per second, bringing world-class 3D reality to the display. The Sony ZEGO technology platform is based on the Cell Engine chipset and is targeted at real-time HD video transforms and 2D/3D image rendering. Hence, the predictive power of Moore’s law.

FIGURE 1.5 Moore’s law: Graph from his original paper

Source: Electronics, Volume 38, Number 8, April 19, 1965
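As a sanity check on the doubling periods mentioned above, the two transistor counts quoted in the text (the 8008 and the 2008 Dual-Core Itanium 2) imply an average doubling period that can be computed directly. This is only a two-point sketch, not a fit to the full history, and the helper names are illustrative. The 1972 introduction year for the 8008 is the one given later in this section.

```python
import math


def doubling_period_months(count_start: float, count_end: float,
                           years: float) -> float:
    """Average months per doubling implied by two transistor counts."""
    if count_start <= 0 or count_end <= count_start or years <= 0:
        raise ValueError("need 0 < count_start < count_end and years > 0")
    doublings = math.log2(count_end / count_start)
    return years * 12 / doublings


def projected_count(count_start: float, years: float,
                    period_months: float) -> float:
    """Transistor count after `years`, doubling every `period_months`."""
    return count_start * 2 ** (years * 12 / period_months)


if __name__ == "__main__":
    # Figures quoted in the text: 2,500 transistors (8008, 1972) and
    # 1.72 billion transistors (Dual-Core Itanium 2, 2008).
    period = doubling_period_months(2_500, 1.72e9, 2008 - 1972)
    print(f"Implied doubling period: {period:.1f} months")
```

With these inputs the implied period comes out a little under two years, in line with the slower doubling rate described in the text.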

This law was not the first but the fifth paradigm to provide exponential growth of computing. Starting in 1900 with purely electromechanical systems, relays followed in the 1940s, then vacuum tubes, then transistors, and then integrated circuits. Since 1900, “computing power” has increased 10 trillion times. Our appetite for computing power is growing to consume all available power.

Demonstrating one of the paradigms of computation, while a Lowell High School student, the author designed and built an eight-line, relay-based, automatic telephone system for a San Francisco Science Fair. Figure 1.6 shows the final 60-relay design. Relay logic was relatively straightforward, and the sound of the relays completing a call was always a kick. For a teenager, transistors were way too quiet. The top of the unit is the power supply, the midsection has 40 of the 60 relays, and the lower section has two dial-activated rotary relays and two line-finder rotary relays. In the rear are the dial tone generator, batteries, and some additional relays. Not shown is a sound-proof box containing relays for generating the 20 Hz ringing voltage and various timing intervals.

FIGURE 1.6 Eight-line, relay-based, automatic telephone system.

From: May Kovalick.

Video processing needs a huge amount of computing power to perform realtime or “human fast” operations. Once left to the domain of purpose-built video circuits, CPUs are now performing three-dimensional (3D) effects, noise filtering, compositing, compressing video (a la MPEG), and completing other mathematically intensive operations in real time or faster. It is only getting easier to manipulate digital video, which has consigned traditional video circuits to a smaller and smaller part of the overall system.

Running CPUs in parallel, dual, and quad cores, for example, increases the total processing power available to applications. AMD has announced a 12-core processor chip targeted at servers, due out in 2010. The computing power of these systems is enormous, and performance can exceed a trillion operations per second (TOPS). There are more details on this subject in Appendix C.

On the memory front, the cost of one megabyte of DRAM has dropped precipitously from $5,000 in 1977 (Hennessy et al. 2003) to $0.02 in 2010e (Source: Objective Analysis) in constant dollars. This is a decrease by a factor of 250,000 in only 33 years. At least two video server manufacturers offer a RAM-based server while eschewing the disk drive completely. One worry is processor power consumption. The rule has been a doubling of processor power consumption every 36 months. This is an untenable progression, since the cooling requirements become very difficult to meet. For example, the Intel Pentium Processor XE draws 160 watts. Fortunately, the new multicore versions use less than half this power.
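The DRAM figures above can be turned into an equivalent yearly rate of decline. The sketch below assumes a constant compound rate over the whole period, which is a simplification, and uses illustrative function names.

```python
def decline_factor(start_cost: float, end_cost: float) -> float:
    """How many times cheaper the end cost is than the start cost."""
    return start_cost / end_cost


def annual_decline_rate(start_cost: float, end_cost: float,
                        years: float) -> float:
    """Constant yearly fractional price drop that links the two costs."""
    return 1 - (end_cost / start_cost) ** (1 / years)


if __name__ == "__main__":
    # Cost per megabyte quoted in the text: $5,000 (1977) and $0.02 (2010).
    factor = decline_factor(5_000, 0.02)
    rate = annual_decline_rate(5_000, 0.02, 2010 - 1977)
    print(f"Overall factor: {factor:,.0f}")
    print(f"Equivalent yearly price drop: {rate:.0%}")
```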

All in all, the price of a given level of CPU and memory performance keeps falling, to the benefit of media system designers and their users. Incidentally, CPU clock speed increased by a factor of 750 from the introduction of the 8008 in 1972 to the Pentium 4 in 2000.

So what is the workflow improvement? Fewer devices are needed to accomplish a given set of operations. The end-to-end processing chain has fewer links. Many A/V operations can be performed in real time using off-the-shelf commodity CPUs. There are, however, a few specialized processors that are optimized for certain tasks and application spaces.

In the area of specialized processors, the list includes

• Graphics processors (NVIDIA and AMD/ATI Technologies, for example)

• Embedded processors (Intel, Infineon, TI, and Motorola, for example)

• Media processors (TI, Analog Devices, and Philips, for example)

• Network processors (IBM, Intel, Xelerated, and a host of others) that clock in at 91.3

WHAT WILL YOU DO WITH A 2 BILLION TRANSISTOR CPU?

Is such a device overkill? Consider some CPU-based software A/V applications:

• Encode and decode MPEG HD video in real time

• Perform 3D effects in real time much like dedicated graphics processors do today

• Format conversions, transcoding in faster than real time

• Three-dimensional animation rendering

• Real-time image recognition

• Complex video processing

• Compressed domain processing

As processing power increases, there will be less dependence on special-purpose hardware to manipulate video. It is possible that video processing HW will become a relic of the past—time will tell.

The world’s most powerful computing platforms are documented at www.top500.org. The SX-9, from NEC, is capable of calculating 839 teraflops—or 839 trillion floating-point operations per second—and was considered the world’s most powerful computing platform in 2008.

Fast I/O is required to keep up with increasing CPU speeds. One of the leaders in this area is the PCI Express bus (PCIe or PCI-E). Not to be confused with PCI-X, this is an implementation of the PCI bus that uses existing PCI programming concepts and communications standards. However, it is based on serial connectivity, not parallel as with the original PCI bus. The basic “X1” link has a peak data bandwidth of 2 Gbps in each direction. The link is bidirectional, so the aggregate data transfer rate is 4 Gbps. Links may be bundled and are referred to as X1, X4, X8, and X16. An X16 bus structure supports 64 Gbps of throughput. All this is good news for A/V systems. The link uses 8B/10B encoding (see Appendix E).
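The link figures above can be reproduced with a small calculation. The sketch assumes the first-generation PCIe signaling rate of 2.5 gigatransfers per second per lane, a value not stated in the text. With 8B/10B encoding, 8 of every 10 transmitted bits carry data. Function names are illustrative.

```python
RAW_GTPS_PER_LANE = 2.5          # assumption: first-generation PCIe line rate
VALID_LANE_COUNTS = (1, 4, 8, 16)  # the X1, X4, X8, and X16 bundles in the text


def lane_payload_gbps(raw_gtps: float = RAW_GTPS_PER_LANE) -> float:
    """Data rate of one lane in one direction after 8B/10B overhead."""
    return raw_gtps * 8 / 10


def link_throughput_gbps(lanes: int, bidirectional: bool = True) -> float:
    """Peak data throughput of a bundled link."""
    if lanes not in VALID_LANE_COUNTS:
        raise ValueError(f"lanes must be one of {VALID_LANE_COUNTS}")
    per_direction = lanes * lane_payload_gbps()
    return per_direction * 2 if bidirectional else per_direction


if __name__ == "__main__":
    for lanes in VALID_LANE_COUNTS:
        print(f"X{lanes}: {link_throughput_gbps(lanes):.0f} Gbps aggregate")
```

These are peak figures; packet and protocol overhead reduce what an application actually sees.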

There is every reason to be optimistic about the future of the “CPU,” especially for A/V computing. But will it become the strong link in the computation chain of otherwise weak elements? Fortunately not, as the other forces grow in strength, too.

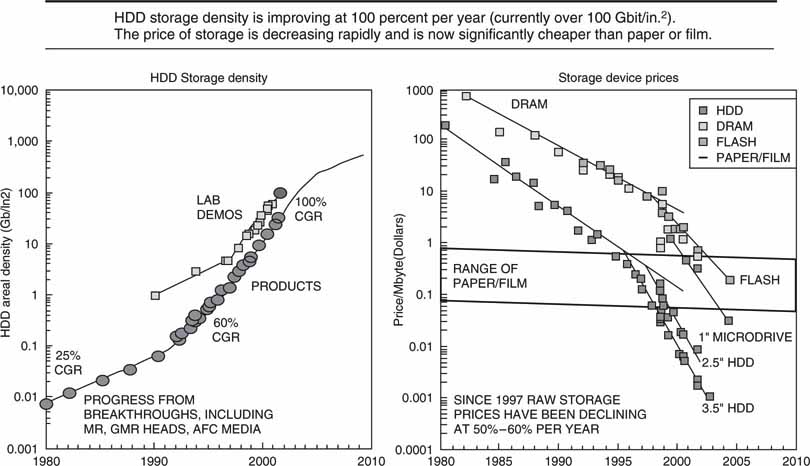

1.2.3 Force #3: Storage Density, Bandwidth, and Power

At 3 o’clock on Figure 1.4 is the dimension of storage density (cost/GB), storage bandwidth3 [cost/(Mbps)], and power consumed (W/GB). For all metrics, smaller is better. Unless you have been living in a cave for the past 20 years, it is obvious that disk drive capacity per unit has been climbing at an astronomical rate. Much of the technology that makes up drives and other storage media also follows Moore’s law, hence the capacity increase. The dimension of storage is a broad topic. The four main storage means are hard disk drives (HDD), optical disk, tape, and DRAM/Flash. The application spaces for these are as follows:

1. HDD—video servers, file/database servers, Web servers, PCs of all types, personal video recorders, embedded products (portable music players)

2. Optical disk—DVD (4.7 GB single sided), CD (700 MB), Blu-ray (up to 25 GB single sided, 50 GB double sided), and other lesser known devices. Some sample applications are

a. Consumer DVD—SD and HD

b. Sony XDCAM HD using a variation of the Blu-ray format for field acquisition at 50 GB per disc

c. Archive, backup

3. Tape—traditional videotape, archive, backup. Archive tape technology is discussed in Chapter 3A.

4. RAM/Flash—RAM is being used to replace disc and tape for select applications, including deep cache. The Panasonic P2 camera is a good example of a professional camera that has only removable Flash cards as the media store. In 2009, 32 GB modules are common and able to store 160 minutes of DV25 video. P2 cards have writing speeds of 640 Mbps, exceeding conventional Flash memory. Sony also offers a Flash-based camera, the XDCAM EX, using the SxS PRO cards. By 2010, 128 GB Flash cards will be in use.

5. SSD—The Solid State Disc is a device that mimics an HDD using identical I/O ports and form factor but with Flash memory replacing rotating platters for storage. There are numerous reasons to replace HDD with SSD in some applications. See for a review of the pros and cons.

The hard disk is having an immense impact on the evolution of IT systems. Consider the implications of Figure 1.7, reprinted from an article by IBM researchers (Morris et al. 2003).

FIGURE 1.7 Storage media performance trends.

Source: IBM

Storage density is currently over 250 Gbits/in.² on the surface of the rotating platter. This increased at a compound growth rate (CGR) of 60 percent per year in 2008 and enabled 2.5-inch form factor HDD units with capacities of 1 TB. This rate is expected to slow down modestly to about 40 percent per year in 2010. HDD prices have decreased by about 5 orders of magnitude (100,000:1) since 1980, whereas storage systems’ prices have decreased by about 2.5 orders of magnitude. The faster fall in HDD prices compared to system prices implies that HDDs account for a shrinking share of total storage system cost. Chapter 3A discusses storage systems in detail. Raw HDD prices have been falling 50–60 percent a year since 1997.

It is enlightening to forecast the future of HDD performance, keeping in mind that fortune telling is risky business. So using Figure 1.7 and extrapolating to 2010, we should expect to see HDD capacities of around 1.5 TB per 2.5-inch unit at a cost of $40 in constant dollars. Using the most advanced audio compression (64 Kbps), a single HDD could store 1 million tracks of music (3 min average length). Imagine the world’s collection of music on your personal computer or in your pocket. All this bodes well for professional video systems, too. Video/file servers with 10 TB of combined storage (91 days’ worth of continuously playing content at SD-compressed rates) will be routine. Even at HD compressed production rates of say 150 Mbps, one 1.5 TB HDD will store about 22 hr of material and at 19.3 Mbps (ATSC payload rate) will store about 173 hr. More storage for less is the trend, and it will likely continue. When integrated into a full-featured chassis with high-performance I/O, RAID protection and monitoring, the system price per GB will be higher in all cases.

Storage Rule of Thumb

Ten megabits per second compressed video consumes 4.5 GB/hr of storage. Use this convenient data point to scale to other rates.
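The rule of thumb scales linearly with bit rate, so it is easy to capture in a helper. The sketch below uses decimal units (1 GB = 8,000 megabits), and the function names are illustrative.

```python
def gb_per_hour(rate_mbps: float) -> float:
    """Storage consumed by one hour of video at the given bit rate."""
    if rate_mbps <= 0:
        raise ValueError("rate_mbps must be positive")
    megabits_per_hour = rate_mbps * 3_600
    return megabits_per_hour / 8 / 1_000  # megabits -> megabytes -> gigabytes


def hours_of_storage(capacity_gb: float, rate_mbps: float) -> float:
    """Hours of video that fit in the given capacity at the given bit rate."""
    return capacity_gb / gb_per_hour(rate_mbps)


if __name__ == "__main__":
    print(f"10 Mbps uses {gb_per_hour(10)} GB/hr")
    for rate in (19.3, 50, 150):  # example rates in Mbps
        hours = hours_of_storage(1_500, rate)
        print(f"1.5 TB at {rate} Mbps: about {hours:.0f} hr")
```

The result covers the video essence only. File wrappers, audio tracks, and RAID overhead add to the real requirement.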

The development to higher capacities has other side benefits too. Note the following trends:

• Internal HDD R/W sustained rates (best case, max) are currently at 1,000 Mbps (1TB drive) and are increasing at 40 percent per year for SAS class HDD units. The actual achieved I/O for normal transactional loads will be lower due to random head seek and latency delays.

• Power per GB for HDD units is dropping at 60 percent per year. In 2009 a 1 TB, R/W active drive consumes on the order of 0.01 W/GB. This is crucial in large data centers that have hundreds of TB of storage. Storage systems consume at least an order of magnitude more power per GB because of the overhead of the added components.

Are there any workflow improvements? Oh yes, and in spades. This force is single-handedly driving IT into broadcast and other professional A/V applications. Consider the case of the video server. In 1995, HP pioneered and introduced the world’s first mission-critical MPEG-based video server (the MediaStream Server) for the broadcast TV market. Initially, the product used individual 9 GB hard drives in the storage arrays. In 2009, storage arrays support 1TB drives. Now that is progress. Video servers enable hands-free, automated operations for many A/V applications.

1.2.3.1 SCSI Versus ATA Drives

Two different types of HDD have emerged: one is the so-called SCSI HDD and the other is the ATA (IDE) drive. In many ways the drives are similar. The SCSI drive is aimed at enterprise data centers where top-notch performance is required. The ATA drive is aimed at the PC market where less performance is acceptable. Because of the different target markets, the common perception is that SCSI drives are the right choice for high-end applications and ATA drives are for home use and light business. A comparative summary follows:

• ATA drives are about one-third the price of SCSI drives.

• SCSI drives have a top platter spin of 15,000 rpm, whereas ATA tops at 7200.

• ATA drives have a simpler and less flexible I/O interface than SCSI.

• ATA consumes less power.

• ATA drives sport 1TB capacities in 2009, more than SCSI drives.

• The reliability edge is given to SCSI due to more testing during the R&D cycle.

Because of the lower price of the ATA HDD, many video product manufacturers have found ways to use ATA drives in their RAID-based storage systems. The biggest deficit in the ATA drive is the R/W head access time, which is determined by the platter rotational speed. In the world of A/V storage, the faster SCSI platter rotation speed is not necessarily a big advantage. For the enterprise data center, the average HDD R/W transaction block size is 4–8 KB. However, for A/V data transactions, several MB is a normal R/W block size (video files are huge). There is a complete discussion of this topic in Chapter 3A.
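A rough calculation shows why rotation speed matters less for large A/V blocks. The sketch below compares average rotational latency (half a revolution) with the time to transfer one block. It deliberately ignores head seek time, assumes the 1,000 Mbps sustained rate quoted earlier in this section, and takes 4 MB as a stand-in for "several MB". All names are illustrative.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for the sector to arrive: half of one revolution."""
    return 0.5 * 60_000 / rpm


def block_transfer_ms(block_bytes: int, sustained_mbps: float) -> float:
    """Time to stream one block at the drive's sustained rate."""
    return block_bytes * 8 / (sustained_mbps * 1e6) * 1_000


def latency_share(rpm: float, block_bytes: int, sustained_mbps: float) -> float:
    """Fraction of the (latency + transfer) time spent waiting on rotation."""
    latency = avg_rotational_latency_ms(rpm)
    return latency / (latency + block_transfer_ms(block_bytes, sustained_mbps))


if __name__ == "__main__":
    rate = 1_000  # Mbps, sustained R/W rate assumed from the text
    blocks = {"8 KB (enterprise)": 8_000, "4 MB (A/V, assumed)": 4_000_000}
    for rpm in (15_000, 7_200):
        for label, size in blocks.items():
            share = latency_share(rpm, size, rate)
            print(f"{rpm:>6} rpm, {label}: {share:.0%} of time is rotational wait")
```

For small transactional blocks, nearly all the time is rotational wait, so the faster spindle helps a great deal. For multi-megabyte A/V blocks, transfer time dominates and the spindle-speed gap matters far less.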

The ATA is on the ascension for A/V systems. Working around the less than ideal specs of the ATA drive yields big cost savings. These drives are almost always bundled with RAID, which improves overall reliability. Look for the ATA drive to become the centerpiece for A/V storage systems. In addition, some drive manufacturers specialize in ATA (and SATA) drives and offer specs that compete very favorably with SCSI on most fronts. See Chapter 5 for more details on HDD reliability.

1.2.4 Force #4: IT Systems Manageability

Unmanaged equipment can quickly become the chaotic nightmare of searching for bad components and repairing them while trying to sustain service. Long ago, the IT community realized the necessity to actively manage the routers, switches, servers, LAN and WAN links, and even software applications that comprise an IT system. However, most legacy A/V-specific equipment has no standard way to report errors, warnings, or status messages. Ad hoc solutions from each vendor have been the norm compared to the standardized methods that the IT industry embraces.

Managed equipment yields savings with less downtime, faster diagnosis, and fewer staff members to manage thousands of system components. Entire industries have risen to provide embedded software for element status and error reporting, management protocol software, and, most importantly, monitoring stations to view and notify of the status of all system components. This includes the configuration and performance of the system under scrutiny. There are sufficient standards to create a vendor plug-and-play environment so users have their choice of products when creating a management environment. However, there will always be vendor-specific aspects of element management for which only they will provide management applications.

No one doubts that the IT management wave will be adopted by many A/V equipment manufacturers over the next few years. The IT momentum, coupled with the advantages of the approach, spells doom for unmanaged equipment. Of course, the A/V industry must standardize the A/V-specific aspects of element management. See for an extended discussion. Let us leave the topic at this juncture. Has this improved the workflow to produce or generate video programming? Well, only indirectly. With less downtime and more accessible resources, workflows will literally flow better.

1.2.5 Force #5: Software Architectures

There are two main forces in software systems today. They both have their adherents and detractors, and siding with one faction or the other can be a religious experience. It is obvious to almost anyone that Microsoft wields a mighty sword and that many professional application developers use their Windows OS and .NET software framework for design. The other camp is the Linux-based Open Source movement with backing by IBM, HP, and countless others who advocate open systems (see, for example, www.sourceforge.net, www.openoffice.org, www.linux.org).

Closely associated with this is the Java EE framework (and several very good development platforms) as an alternative to the Microsoft .NET programming environment. Java is now open sourced. The .NET and Java camps have built up a momentum of very credible solutions. Are there other alternatives? Yes, but they are niche players, and the sum total of their influence will be small. Next, a little background on the status of the OS market.

The lion’s share of the OS marketplace comes from two segments, namely enterprise servers (database servers, Web servers, file servers, and so on) and client based (desktop). After these two behemoths, many smaller segments follow, such as the embedded OS, mobile phones, and more. Market Share by Net Applications analysts estimated that Microsoft Windows controlled 92 percent of the desktop OS real estate in early 2008. Worldwide, Apple MacOS gets 7.6 percent, and the Linux desktop is at 1 percent.

For the worldwide server space in early 2008, Gartner stated that UNIX commands a 7 percent share, Microsoft Windows a 67 percent share, and Linux a 23 percent share. The server OS market is now a two horse race. In 2005 UNIX was in first place. As server OS virtualization gains ground, some expect that Linux will have an edge over Windows. Time will tell.

Does the selection of an OS and programming language development platform bring end user workflow improvements? Admittedly, this is a complex question. The advantages are first felt by the equipment manufacturers. How? Using either Java or .NET programming paradigms produces efficiencies in product development, product enhancements, and change management. If end users are given access to the code base or APIs for systems integration and enhancement purposes, then they will reap the advantages of these programming environments. Also, if the IT staff is trained and comfortable with these environments, then any needed upgrades or patches are more likely to be implemented without issue or anxiety.

Workflow improvements will come from the power of the software applications produced by either of these environments. Also, their flexibility (well-documented, open programming interfaces) will allow for software enhancements to be made to meet changing business needs. Software-based systems allow for great flexibility in creating and changing workflows. Older non-IT-based A/V systems can be rigid in their topology. IT frees us to create almost any A/V and data workflow imaginable. Many video facilities already have one or more programmers on staff to effect software changes when business needs dictate. Look forward to a big leap in customer-developed solutions that work in harmony with vendor-provided equipment. The topic of programming environments is discussed in Chapter 4.

1.2.6 Force #6: Interoperability

Writer John Donne once said, “No man is an island; every man is a piece of the continent.” Much has been written for and against his proclamation of the dependent need for others. For our discussion, we will side with the affirmative but apply the sentiment to islands of IT. Gone are the days of isolated islands of operations. Gone are the days of proprietary connectivity. Today, end users of video gear expect access to the Internet, email, compatible file formats, easy access to storage, and workflows that meet their needs for flexibility and production value. “Give me the universe of access and only then am I satisfied” is the mantra.

Does this mean that operational “islands” are a bad idea? By no means. Whether for security, reliability, control, application focus, or some other reason, equipment islands defined by their operational characteristics will be a design choice.

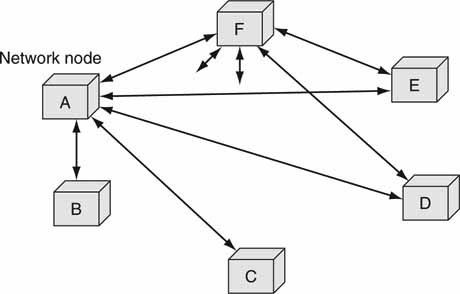

Robert Metcalfe, the inventor of Ethernet (Gilder 1993), once stated what is now known as Metcalfe’s law: “The value of a network of interconnected devices grows as the square of connected elements.” What did he mean? Well, consider an email system of two members. Likely, boring and limited. But a billion-member population is much more interesting and useful. So too with media interconnectivity. As the number of connected elements and users grows, the power of productivity grows as the square of the connected devices. Collaborative works, file sharing, common metadata, and media are all powerfully leveraged when networked. Networking also adds layers of software complexity, which must be managed by the IT staff.

So Metcalfe’s law is the response to the plea, “Please, I want more productivity.” Standards foster interconnectivity. SMPTE (Society of Motion Picture and Television Engineers), the EBU (European Broadcasting Union), ARIB (Japan), the IEEE (Ethernet, for example), the ITU/ISO/IEC (MPEG, for example), and W3C (Web standards HTML and XML, for example) develop the standards that make Metcalfe’s law a reality. There is more discussion on standards and user forums such as the Advanced Media Workflow Association (AMWA) in Chapter 2. Is there a demonstrable workflow improvement? Yes, in terms of nearly instant user/device access to A/V content, processors, access to metadata, and user collaboration.

You may wonder why the synergy of a system is a function of the square of the number of attached nodes. Consider that most communication paths are between nodal pairs in a network. For example, node A may request a file from node Z, which is only one possible choice for A. With $N$ nodes there are roughly $N^2$ combinations for 1:1 bidirectional communication; A can communicate with B or C or D, B can communicate with C or D, and so on until $N^2$ combinations are accumulated, hence Metcalfe’s law.

Figure 1.8 shows some of the pairwise combinations in a population of $N = 6$. For this case, there are $2 \times (5 + 4 + 3 + 2 + 1) = 30$ pairwise combinations (each bidirectional path is counted as two unidirectional paths, hence the factor of 2). However, 36 would be the value based on $6^2$. For $N = 25$, Metcalfe’s law predicts 625 when there are 600 paths in actuality. The actual number of pairwise communication paths is $N^2 - N$, so as $N$ becomes large the $-N$ term is a diminishingly small correction, as Metcalfe must have known.

FIGURE 1.8 Metcalfe’s law: Combinations of unidirectional pairwise communication paths tend toward $N^2$ as $N$ (number of nodes) becomes large.
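The counting argument behind Figure 1.8 can be checked with a few lines of Python. This is only a minimal sketch; the function names `unidirectional_paths` and `metcalfe_estimate` are illustrative choices and do not come from the text.

```python
def unidirectional_paths(n: int) -> int:
    """Count ordered (sender, receiver) pairs among n nodes: n * (n - 1)."""
    return n * (n - 1)


def metcalfe_estimate(n: int) -> int:
    """Metcalfe's approximation: the square of the node count."""
    return n * n


for n in (6, 25, 1000):
    actual = unidirectional_paths(n)
    estimate = metcalfe_estimate(n)
    # (estimate - actual) / estimate simplifies to 1 / n, so the
    # approximation error shrinks as the network grows.
    print(f"N={n}: actual={actual}, N^2={estimate}, relative error={1 / n:.3%}")
```

The relative gap between the estimate and the exact count is 1/N, which is why the correction matters for a six-node network but is negligible for a large one.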

1.2.7 Force #7: User Application Functionality

Application functionality is now largely defined and accessed via graphical user interfaces (GUIs) and APIs. Many of the hard surfaces of old have been replaced by more flexible soft interfaces. Oh sure, there is still a need for hard surface interfaces for applications such as live event production with camera switching, audio control, and video server control. Nonetheless, most user interfaces in a media production facility will be soft based, thereby allowing for change with a custom look and feel. A GUI as defined by a manufacturer may also be augmented by end user-chosen “helper” applications such as media management, browsing, and so on. Using drag-and-drop functionality, a helper application can provide data objects to the main user application. In the end, soft interfaces are the ultimate in flexibility and customization.

Another hot area of interest is Web services. In brief, a Web service can be any business or data processing function that is made available over a network. Web services are components that accomplish a well-defined purpose and make their interfaces available via standard protocols and data formats. These services combine the best aspects of component-based development and Web infrastructure. Web services provide an ideal means to expose business (and A/V process) functions as automated and reusable interfaces. Web services offer a uniform mechanism for enterprise resources and applications to interface with one another. The promise of “utility computing” comes alive with these services. Imagine A/V service operators (codecs, converters, renderers, effects, compositors, search engines, etc.) being sold as components that other components or user applications can access at will to do specific operations. Entire workflows may be composed of these services driven by a user application layer that controls the logic of execution. There are already standard methods and data structures to support these concepts. There is a deeper discussion of these ideas in Chapter 4.
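The idea of a workflow composed of services and driven by an application layer can be sketched as follows. This is an illustration under stated assumptions, not an actual Web services implementation: the service names (`transcode`, `make_proxy`, `index_metadata`), the `SERVICES` registry, and the asset dictionary are all hypothetical, and each service is a local function standing in for what would be a network call made over a standard protocol.

```python
from typing import Callable, Dict, List

# Hypothetical service signature: take an asset description, return an updated one.
Service = Callable[[dict], dict]


def transcode(asset: dict) -> dict:
    """Stand-in for a remote format-conversion service."""
    return {**asset, "format": "mpeg2"}


def make_proxy(asset: dict) -> dict:
    """Stand-in for a remote low-resolution proxy generator."""
    return {**asset, "proxy": asset["name"] + "_proxy"}


def index_metadata(asset: dict) -> dict:
    """Stand-in for a remote metadata indexing service."""
    return {**asset, "indexed": True}


# Hypothetical registry; a real system would discover services over the network.
SERVICES: Dict[str, Service] = {
    "transcode": transcode,
    "make_proxy": make_proxy,
    "index_metadata": index_metadata,
}


def run_workflow(asset: dict, steps: List[str]) -> dict:
    """Application layer: controls the order in which services execute."""
    for step in steps:
        asset = SERVICES[step](asset)
    return asset


result = run_workflow(
    {"name": "clip001", "format": "dv"},
    ["transcode", "make_proxy", "index_metadata"],
)
print(result)
```

Because the workflow is just an ordered list of service names, changing it means editing that list rather than rewiring equipment, which is the flexibility the paragraph above describes.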

1.2.8 Force #8: Reliability and Scalability

The world’s most mission-critical software systems run in an IT environment. Airline reservation systems, air traffic control, online banking, stock market transaction processing, and more depend on IT systems. There are four basic methods to improve a system’s reliability:

• Minimize the risk that a failure will occur.

• Detect malfunctions quickly.

• Keep repair time quick.

• Limit impact of the failure.

In Chapter 5 there is an extensive discussion of reliability, availability, and scalability. Also, enterprise and mission-critical systems often need to scale from small to medium to large during their lifetime. Due to the critical nature of their operations, live upgrading is often needed, so scalability is a crucial aspect of an IT system.
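One common way to see how the four methods interact is the standard steady-state availability relation, availability = MTBF / (MTBF + MTTR), where MTBF is mean time between failures and MTTR is mean time to repair. The relation is not stated in this chapter, and the numbers below are hypothetical, so treat this as a rough sketch rather than a design rule. Minimizing the risk of failure raises MTBF, while quick detection and quick repair both lower MTTR.

```python
HOURS_PER_YEAR = 8760.0


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability = MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def annual_downtime_hours(avail: float) -> float:
    """Expected hours of downtime per year for a given availability."""
    return (1.0 - avail) * HOURS_PER_YEAR


# Hypothetical component: one failure per 10,000 hours on average.
for mttr in (4.0, 1.0, 0.25):
    a = availability(10_000.0, mttr)
    print(f"MTTR={mttr} h: availability={a:.6f}, "
          f"downtime={annual_downtime_hours(a):.2f} h/year")
```

The fourth method, limiting the impact of a failure, is not captured by this formula; it concerns how much of the system is affected when a component does fail.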

Many video systems (broadcast TV stations, for example) also run mission-critical operations and share the same reliability and scalability requirements as banking and stock market transaction processing but with the added constraint of real-time response. IT-based A/V solutions may have all or some of the following characteristics:

• A/V glitch-free, no single point of failure (NSPOF) fault tolerance

• Real-time A/V access, processing, and distribution

• Off-site mirrors for disaster recovery

• Nearly instantaneous failover under automatic detection of a failed component

• Live upgrades to storage, clients, critical software components, and failed components

• Storage redundancy using RAID and other strategies

These characteristics have a very positive impact on workflow. Keeping systems alive and well keeps users happy. True fault-tolerant operations are practical and in use every day in facilities worldwide. As business and workflow requirements change, IT systems are able to keep pace by enabling changes of all critical components while in operation. All these aspects are addressed in more detail in Chapter 5. The bottom line is this: IT can meet the most critical needs of mission-critical video systems. Many systems offer better performance and reliability than purpose-built video equipment.

1.2.9 The Eight Forces: A Conclusion

The eight forces just described do indeed improve video system workflows by being more cost effective, reliable, higher performing, and flexible. The combined vector of all eight forces is moving video system design away from purpose-built, rigid, traditional A/V links toward an IT-based infrastructure. The remainder of this book delves deeper into each force and provides added information and insight. Several years ago, even the thought of building complex A/V systems with IT components seemed a joke. Today, the maturity of IT and its far-reaching capability grants it an honored place in video systems design. During the course of this book, several case studies will show impressive evidence of real-world systems with an IT backbone.

Despite the positive forces described, there exists a lot of fear, uncertainty, and doubt (FUD) surrounding AV/IT systems. Many of those well grounded in traditional A/V methods may find stumbling blocks at every step. The chapters that follow will provide convincing evidence that AV/IT can indeed meet the challenges of mission-critical small, medium, and large video system designs.

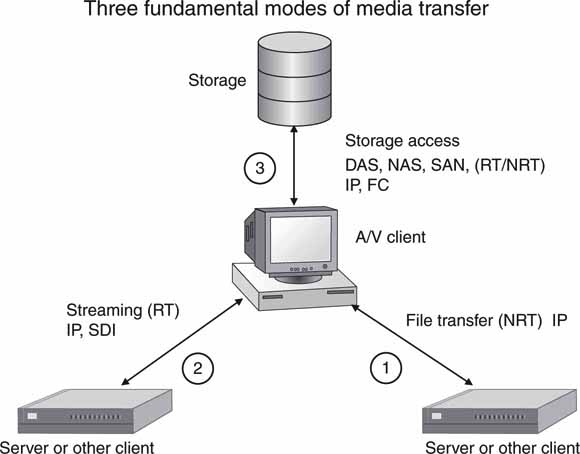

1.3 THREE FUNDAMENTAL METHODS OF MOVING A/V DATA

There are three chief methods of moving A/V assets between devices/domains using IT. In Figure 1.9 these means are shown connected to the central “A/V client.” This client represents any digital device that exchanges media with another device. These methods form the base concepts for file-based and so-called tapeless workflows. The means are

1. File transfer using LAN/WAN in NRT or pseudo RT

2. Streaming A/V using LAN/WAN

a. Included is A/V streaming using traditional links

3. Direct-to-Storage real-time (RT) or non-real-time (NRT) A/V data access

a. DAS, SAN, and NAS storage access

FIGURE 1.9 Three fundamental methods used to move A/V data.

Storage access, streaming A/V, and file transfer are all used in different ways to build video systems. The notion of an A/V stream is common in the Web delivery of media programming. In practice, any A/V data sent over a network or link in RT is a stream. For Figure 1.9, a client is some device that has a means to input/output A/V information over a link of some sort. Some systems depend exclusively on one method, whereas another may use a hybrid mix of all three. Each method has its strong and weak points, and selecting one over another requires a good knowledge of the workflows that need to be supported. Chapters 3A and 3B review storage access, and Chapter 2 reviews streaming and file transfer.

FILE-BASED AND TAPELESS WORKFLOWS: ARE THEY DIFFERENT?

The sense of tapeless, in A/V systems, implies videotapeless. In fact, many A/V systems use tape for archive, so they are not truly tapeless. Archive tape is alive and well, as discussed in Chapter 3A. On the other hand, file-based is more encompassing a term. File-based concepts transcend tape and are used to create networkable, IT-enabled, nonlinear-accessible, and malleable media workflows. The designation networked media covers file-based and IP streaming, so it defines a superset of IT-based media flows.

Two acronyms, RT and NRT, are used repeatedly throughout the book, so they deserve special mention. RT is used to represent an activity such as A/V streaming or storage access that occurs in the sense of video or audio real time. NRT is, as expected, an activity that is not RT but slower (1/10 real time, for example) or faster (5× real time, for example). NRT-based systems are less demanding in terms of quality of service (QoS). These two concepts are intrinsic to many of the themes in this book.
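The RT and NRT distinction reduces to simple arithmetic on the speed factor. The sketch below assumes a hypothetical 60-minute program and uses an illustrative function name, `transfer_minutes`; neither comes from the text.

```python
def transfer_minutes(program_minutes: float, speed_factor: float) -> float:
    """Wall-clock time to move a program at a given multiple of real time.

    speed_factor == 1.0 is RT; below 1.0 is slower-than-RT NRT;
    above 1.0 is faster-than-RT NRT.
    """
    if speed_factor <= 0:
        raise ValueError("speed_factor must be positive")
    return program_minutes / speed_factor


# Hypothetical 60-minute program moved at three different rates.
for label, factor in (("1/10 real time", 0.1), ("real time", 1.0), ("5x real time", 5.0)):
    print(f"{label}: {transfer_minutes(60.0, factor):.1f} minutes")
```

An NRT transfer only needs to finish before the material is required, which is why it places fewer QoS demands on the network than an RT stream that must keep pace with playback.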

In Figure 1.9, each of the three links represents one of the A/V mover techniques. For example, one client may exchange files with another client, or the central client may R/W to storage directly. The client in the center supports all three methods. The diagram represents the logical view of these concepts. The physical view may, in fact, consolidate the “links” into one or more actual links. For example, storage access, IP streaming, and file transfer can use a single LAN link. The flows are separated at higher levels by the application software running on the client.

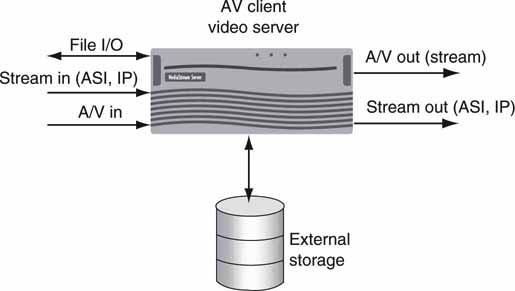

A practical example of the three-flow model is illustrated with the common video server (the A/V client) in Figure 1.10. The I/O demonstrates streaming using IP LAN, traditional A/V I/O, file transfer I/O, and storage access. The most basic video server supports only A/V I/O with internal storage, whereas a more complete model would support all three modes. All modes may be used simultaneously or separately, depending on the installed configuration. When evaluating a server, ask about all three modes to fully understand its capabilities.

FIGURE 1.10 Video server with support for files, streams, and storage access.

Of course, there are other ways to move A/V assets (tape, optical disk manual transport), but these three are the focus of our discussions. Throughout the book, these means are discussed and dissected to better appreciate the advantages/disadvantages of each method.

One of the characteristics that help define a video system is the notion of A/V timing. Some systems have hundreds (or thousands) of video links that need to be frame accurate, lip synced to audio, and aligned for switching between sources. The following section discusses the evolution from traditional A/V timing to that of a hybrid AV/IT system.

1.4 SYSTEMWIDE TIMING MIGRATION

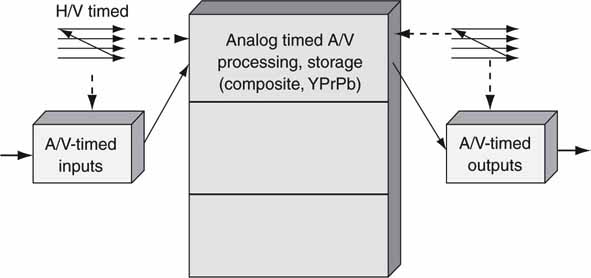

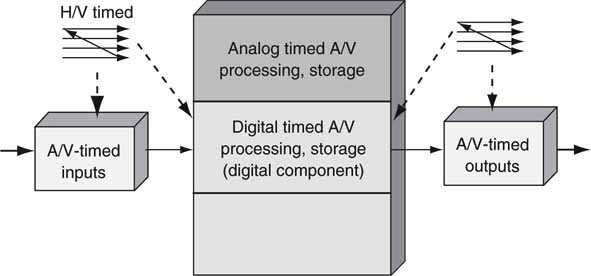

When IT and A/V are mentioned in the same breath, many seasoned technical professionals express signs of worry. After all, is video not a special data type because of the precise horizontal and vertical timing relationships? It turns out that the needed timing can be preserved and still rely on IT at the core of the system. Figures 1.11, 1.12, and 1.13 show the migration from an all analog system to an AV/IT one. In Figure 1.11 every step of video processing and I/O needs to preserve the H/V timing. In Figure 1.12 the timing is easier to preserve due to the all-digital nature of the processing. In Figure 1.13 the H/V timing is evident only at the edges for traditional I/O and display purposes. Figure 1.13 is the hybrid AV/IT model for much of the discussion in this book.

FIGURE 1.11 Traditional analog.

FIGURE 1.12 Traditional digital.

FIGURE 1.13 Hybrid AV + IT system.

As discussed earlier, streaming using IT links is practical for some applications. If Figure 1.13 has no traditional A/V I/O and only network links used for I/O, then the notion of H/V timing is lost at the edge of the system. In this case, there is a need for new timing methods that are IT based and frame accurate. In 2009, there are very few pure IT-only video systems. In a few years, pure IT-only systems may become popular; until then, let us set this special case aside, although it is revisited in Chapter 2. Next, let us see how well the AV/IT configuration fares compared to its older cousins.

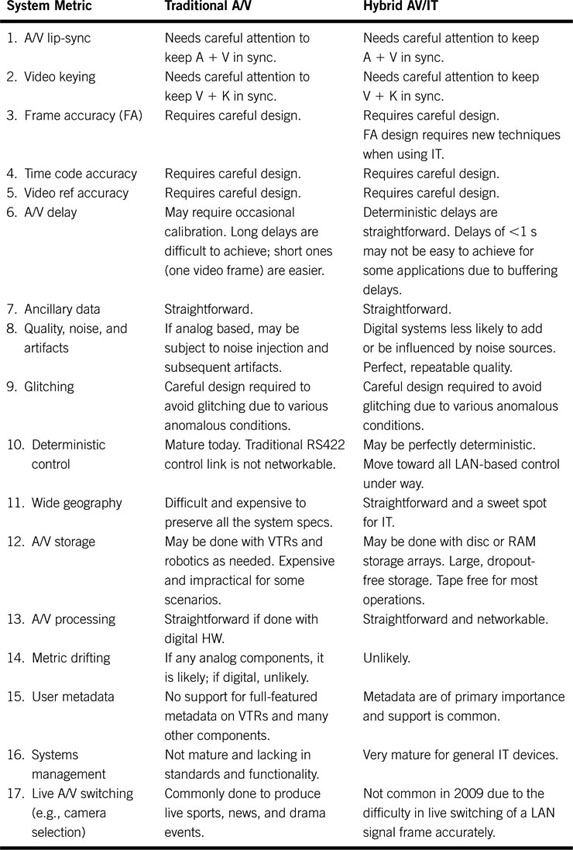

1.5 CAN “IT” MEET THE NEEDS OF A/V WORKFLOWS?

There are several important metrics when comparing traditional video to networked media system performance. These are the measures that the technical staff normally quantifies when calibrating or tuning a system. Figure 1.14 shows a simple A/V system where a perfectly aligned A/V signal set serves as an input along with a corresponding video reference signal and a time code (see Glossary) signal. The system may perform any process steps (delay, switch, process A/V, route, store, replay, etc.) to the input signals, including the control signals, and the output signals are always some function of the inputs. As a result, the outputs are referenced to the inputs in well-defined ways.

FIGURE 1.14 Traditional A/V system performance model.

Under this group of conditions, the output should be completely deterministic and measurable to a set of specifications. The question then becomes whether a system composed of the hybrid mix of AV/IT can work as well as or better than a traditional A/V-only system. Imagine the system in Figure 1.14 composed of a hybrid AV/IT mix and measured to a set of specs. Then convert the system to a traditional A/V-only system and make the same measurements. How much—if any—would the measurements differ? Ideally, the AV/IT system would equal or exceed all measured specs. Is this the case? Are there some “sweet spots” for either system and, if so, what are they? Let us find out. The chief metrics (check Glossary if needed) of interest to us are as follows:

1. A/V lip-sync. This measures the amount of time delay between the audio signal and the video signal on the system output. Any deviation from zero shows the classic lip-sync characteristic. The input A/V alignment has exactly zero deviation.

2. Video keying. If an input key signal is present, does the keying operate without artifacts? Is it always frame aligned to the video fill signal?

3. Frame accuracy. Is the video output frame accurate to the output video reference (or input reference if desired)?

4. Time code accuracy. Is the time code output perfectly correlated (if needed) to the time code input value? Is the output time code perfectly correlated to the output video signal?

5. Video reference accuracy. If required, is the output video reference perfectly correlated to a video reference?

6. A/V delay. Is the A/V delay (latency) through the system acceptable and constant? For some cases the acceptable delay should be less than a line of video (<62 µs), whereas for others it may need to be several hours or more.

7. Ancillary data. If auxiliary information is present in the input signals (VBI, HANC, VANC, AES User Data, etc.), are they preserved as desired?

8. Quality, noise, and artifacts. Is there any noise or other undesired A/V properties on the output signals? Are the output A/V quality and timing as desired?

9. Glitching. Are there any undesired interruptions (illegal A/V, dropouts, etc.) on the output signals? How well does the system perform when the input signals exhibit glitching or are disconnected?

10. Deterministic control. Are all system operations deterministic? Does every operational command (switch, route, play, record, etc.) consistently function as expected?

11. Wide geographic environments. Can systems be created cost effectively across large distances?

12. A/V storage. Can the input signals be recorded and replayed on command? What are the delays?

13. A/V processing. Can the input A/V signals be processed at will for any reasonable operation?

14. Metric drifting. Do any of the measured spec values drift over time?

15. User metadata. Are user metadata supported?

16. Systems management. How are A/V devices managed? What are methods for alarm reporting? How is the configuration determined and maintained?

17. Live A/V switching. Traditional A/V switching is very mature. Can an AV/IT system do as well for all functional requirements?

All these metrics are indicative of system performance. If any one is not compliant, the entire system may be unusable. A detailed analysis of each element is beyond our scope, but a summary overview is appropriate. Table 1.1 provides a high-level overview of what features the different systems can provide and describes their “sweet spots.” Note that traditional A/V systems can be, and often are, composed predominantly of digital components (or an analog/digital mix), but they lack the full-featured networking and infrastructure of IT-based solutions.
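The "any one failure disqualifies the system" rule can be expressed as a simple check of measurements against limits. Everything in this sketch is an assumption for illustration: the metric names, the limit values in `SPEC_LIMITS`, and the sample measurements are hypothetical and are not taken from Table 1.1 or from any standard.

```python
from typing import Dict, List

# Hypothetical limits: maximum allowed absolute deviation per metric.
SPEC_LIMITS: Dict[str, float] = {
    "lip_sync_ms": 20.0,            # audio-to-video offset
    "frame_offset_frames": 0.0,     # output versus reference
    "timecode_error_frames": 0.0,   # output time code versus video
    "dropouts_per_hour": 0.0,       # glitching
}


def failing_metrics(measured: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Return the names of metrics whose absolute value exceeds the limit."""
    return [name for name, limit in limits.items() if abs(measured[name]) > limit]


def system_compliant(measured: Dict[str, float], limits: Dict[str, float]) -> bool:
    """The system passes only if every single metric is within its limit."""
    return not failing_metrics(measured, limits)


# Hypothetical measurement set with one time code error.
measured = {
    "lip_sync_ms": -8.0,
    "frame_offset_frames": 0.0,
    "timecode_error_frames": 1.0,
    "dropouts_per_hour": 0.0,
}
print(failing_metrics(measured, SPEC_LIMITS))
print(system_compliant(measured, SPEC_LIMITS))
```

The same measurement set can be taken on a traditional A/V system and on a hybrid AV/IT system, which is the comparison this section sets up.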

Table 1.1 Summary of Traditional A/V Versus AV/IT Metrics

1.6 ADVANTAGES AND DISADVANTAGES OF METHODS

1.6.1 Trade-off Discussion

Table 1.1 lists several “sweet spots” for AV/IT, namely Nos. 6, 8, 10, 11, 12, 13, 15, and 16.

• No. 6. Long delays are ideally implemented with IT storage systems. Long delays may be needed for time delay applications, censorship, or other needs.

• No. 8. Digital systems may be designed to be noise and artifact free with the highest level of repeatable quality.

• No. 10. See later.

• No. 11. Systems can span a campus, city, country, or the world without affecting video quality. There are countless such systems in use today.

• No. 12. IT storage methods are accepted for video servers and are in use daily in media production and distribution facilities. For all but deep archives, this means the demise of the VTR (tape free at last) for most record/replay operations. Tape is still in common use for field camera acquisition, but even this is waning.

• No. 13. There are no geographic constraints on where the A/V processors may be located. Clusters of render farms, codecs, effects processors, format converters, and so on may be networked to form a virtual utility pool of A/V processing.

• No. 15. User-descriptive metadata importing, storage, indexing, querying, and exporting are considered a cornerstone feature of IT systems. Chapter 7 investigates metadata standards and utilization in more detail.

• No. 16. IT systems management has a major advantage over the ad hoc methods in current use today (see ).

Number 10 has the IT edge too for many applications. Traditional device control has relied on the sturdy RS422 serial link carrying device commands. Sure, it is trustworthy and reliable, but it is not networkable, is yet another link to manage and route, and has limited reach. LAN-based control is the natural replacement. There is one concern with LAN replacing RS422 serial linking: determinism. RS422 routing is normally a direct connection from controller to device under control, and its QoS is excellent and proven. As has been the case, a video server with 10 I/O ports has 10 RS422 control ports! This is messy and inefficient in terms of control logic, but it is proven. However, a LAN may be routed through a shared network with at least some small delay. A single LAN connection to a device may control all aspects of its operation with virtually no geographic constraints. In fact, it is not uncommon to control distributed devices from a central location using LAN and WAN connectivity. This cannot be done using a purely RS422 control and reporting system. There are several ways to deal with the networking delay and jitter, which are covered in detail in Chapter 7.

Number 17 in Table 1.1 is one area where traditional A/V methods are superior. This scenario assumes that event cameras have a LAN-like output that carries the live video signal. The signals are fed into a LAN-based switcher operated by a technical director where camera feeds are selected and processed for output. There are no accepted standards for frame-accurately aligning the isolated camera output signals over a LAN. Furthermore, there are no commercial LAN-based (for I/O) video switchers. Most professional cameras use a coax Triax link (or alternative fiber optic link for HD feeds) from camera to the production control room.

The missing link for live camera production using IT networking is time-synchronous Ethernet. Current Ethernet uses an asynchronous timing model. The IEEE 802.1 Audio/Video Bridging Task Group (LANs/MANs) is developing three specifications that will enable time-synchronized, excellent QoS, low latency A/V streaming services through 802 networks. These are referenced as 802.1AS, 802.1Qat, and 802.1Qav (www.ieee802.org/1/pages/avbridges.html). Once these standards are mature (sometime in 2009 likely), expect vendors to offer A/V devices with time-synchronous Ethernet ports. When this happens and it is embraced by our industry, traditional video links will start to wane. How long before the stalwart SDI link goes the way of composite video link for professional systems?

Note too that Ethernet switches (MAC layer 2) have less I/O jitter and delay compared to most (IP layer 3) routers. Ethernet frames (1,500B normally) may be switched with less delay than a 64 KB IP packet. So, for live event switching, layer 2 may be applied with better results than layer 3. However, IP routing offers more range and scale, so methods to support both layers 2 and 3 are being developed. See for more insights.
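A rough feel for the frame-size argument comes from serialization delay, the time needed to clock a data unit onto a link. The sketch below takes the text's two sizes at face value and rests on several assumptions not found in the original: a 1 Gb/s link, a store-and-forward device that buffers the whole unit before forwarding it, and no header overhead or queuing. In practice a 64 KB IP datagram would be carried as multiple frames on a standard Ethernet link, so the figures only indicate the scale of the difference.

```python
def serialization_delay_us(size_bytes: int, link_bps: float) -> float:
    """Microseconds needed to clock size_bytes onto a link of link_bps."""
    return size_bytes * 8 / link_bps * 1e6


LINK_BPS = 1e9                   # assumed 1 Gb/s link, not from the text
ETHERNET_FRAME_BYTES = 1500      # normal frame size cited in the text
IP_PACKET_BYTES = 64 * 1024      # 64 KB IP packet cited in the text

frame_us = serialization_delay_us(ETHERNET_FRAME_BYTES, LINK_BPS)
packet_us = serialization_delay_us(IP_PACKET_BYTES, LINK_BPS)

print(f"1,500-byte frame: {frame_us:.1f} us per store-and-forward hop")
print(f"64 KB packet:     {packet_us:.1f} us per store-and-forward hop")
print(f"ratio:            {packet_us / frame_us:.1f}x")
```

Under these assumptions, the per-hop delay scales directly with the size of the unit that must be buffered, which is consistent with the lower delay the text attributes to layer 2 switching.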

Some researchers have built test systems for live event production that are almost entirely LAN/WAN and IT based. The European Nuggets (Devlin et al. 2004) project developed a proof of concept live production IT-based system. In their demo system, camera control, streaming A/V over networks, metadata management, MXF, live proxies, and camera switching are all folded into one comprehensive demo. Their work is on the bleeding edge of using IT techniques for live production. The Nuggets effort is a good indicator of the promise of IT-based live production.

Another effort to leverage IT in postproduction is MUPPITS, or Multiple User Post-Production IT Services. This group brings together key players in the UK postproduction value-chain to investigate, develop, and demonstrate a new service-oriented approach to film and broadcast postproduction. See www.muppits.org.uk.

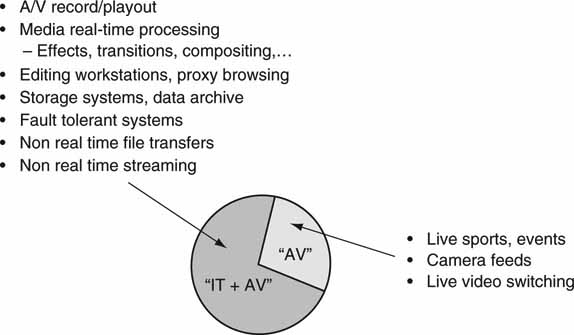

As technology matures, it seems likely that video systems for any set of user requirements can and will be implemented using IT methods. Admittedly, it may take many years for live event HD production to migrate to an all-IT environment. Despite the latency drawback, most other video system application requirements are easily achieved today with AV/IT configurations. See Figure 1.15 for the production sweet spots for traditional A/V versus AV/IT in 2009 and likely sometime beyond. Note too that the AV + IT portion of the pie is file based for virtually all operations. Even non-RT streaming has a file origination and end point in most cases.

FIGURE 1.15 Sweet spots for traditional and hybrid A/V systems.

For a traditional TV station, 90 percent of daily operations have little need for live stream switching. Using non-real-time file transfers to move A/V files can replace SDI in many cases. Sure, live news with field reporting requires video switching. In practice, most station operations can use a mix of AV + IT gear. However, ESPN’s HD Digital Center facility has 10 million A/V cross points in its router infrastructure. Their lifeblood is live events. In this case, because the need to switch streams under human control is great, traditional SDI is required in bulk. Still, the ESPN facility has its share of IT elements (Hobson and Szypulski 2004).

Traditional A/V has one added benefit not cited in Table 1.1: familiarity. The engineers, technicians, and staff responsible for the care and feeding of the media infrastructure may have many years of experience. Moving to an AV/IT infrastructure requires new skills and changes in thinking. Some staff may resist or find the change uncomfortable. Others will welcome the change and embrace it as progress and improvement. Change management is always a challenge. We now have some track record of facilities that made the switch. Some are broadcasters who made the switch to an IT infrastructure while on air, whereas others had the advantage of building a new “green field” facility where existing operations (if any) were not of concern. The challenges and rewards of building these new systems are reviewed in Chapter 10.

1.7 IT’S A WRAP: SOME FINAL WORDS

So can AV/IT meet the challenge of replacing (and improving) the traditional A/V infrastructure? For all but a few areas of operations, the answer is a resounding yes! There is every reason to believe that IT methods will eventually become the bedrock of all media operations. True, there will always be a few cases in which traditional A/V still has an edge, but IT has a momentum that is difficult to derail. Do not let a corner case become the driving decision not to consider IT. The words of the brilliant Charles Kettering (GM research chief) seem truer today than when he spoke them in 1929: “Advancing waves of other people’s progress sweep over unchanging man and wash him out.” Do not get washed out but seek to understand the waves of IT that are now crashing on the shores of traditional A/V.

Now that we have established the beneficial aspects behind the move to IT, let us move to expand on the concepts outlined in this chapter. The next chapter reviews the basics of networked media and file-based techniques as related to A/V systems. The chapters that follow it will provide yet more insights and explanations for the major themes in IT as related to A/V systems. As Winston Churchill once said, “Now this is not the end. It is not even the beginning of the end. But it is, perhaps, the end of the beginning.”

1 IT storage and networking concepts are used universally in business systems worldwide. See the Introduction for background on IT.

2 If you are not familiar with traditional A/V techniques, consider reviewing Chapter 11 for a general overview.

3 The terms bandwidth and data rate are equivalent in a colloquial sense.

REFERENCES

Chen, X., Transporting Compressed Digital Video, Kluwer Academic Publishers, (2002). Chapter 4.

Gilder, G., Metcalfe’s Law and Legacy, Forbes ASAP, (September 13, 1993).

Hennessy, J., and Patterson, D., Computer Architectures, 3rd edition, Morgan Kaufmann, (2003). Page 15.

Hobson, E., and Szypulski, T., The Design and Construction of ESPN’s HD Digital Center in Bristol, Conn. SMPTE: Technical Conference Pasadena. 2004.

Moore, G. Cramming more components onto integrated circuits. Electronics, 38 (8), April 19, 1965.

Morris, R. J. T., and Truskowski, B. J. The evolution of storage systems. IBM Systems Journal, 42 (2), July 2003.

Devlin, B., Heber, H., Lacotte, J. P., Ritter, U., van Rooy, J., Ruppel, W., and Viollet, J. P. Nuggets and MXF: Making the networked studio a reality. SMPTE Motion Imaging Journal, July/August 2004.