Chapter 9

Multimedia

WHAT’S IN THIS CHAPTER?

- Understanding multimedia concepts

- Working with audio

- Working with video

Today’s smartphone original equipment manufacturers (OEMs) and mobile operators (MOs) use multimedia functionality as their main selling point. Most smartphones have a good camera with 5- or 8-megapixel capabilities, an FM radio, a variety of sounds such as different ringtones, and video capture functions. Some smartphones even have a surround sound system and can be regarded as mobile theaters.

With users increasingly relying on mobile devices for entertainment, Windows Phone 7 (WP7) places a stronger emphasis on multimedia technology, including music, pictures, and video.

This chapter begins with an architectural overview of the common multimedia components offered by three OSs: iPhone OS (iOS), Google Android, and WP7. It then compares the features the three OSs share and how they differ. The latter part of the chapter focuses mainly on WP7 and describes its multimedia features in detail. The accompanying code illustrates how to use WP7’s multimedia APIs in your applications.

This section discusses the fundamentals of multimedia technologies on the three platforms and their similarities. Examples illustrate the differences in architecture, APIs, and some implementation details.

Multimedia Architectural Overview

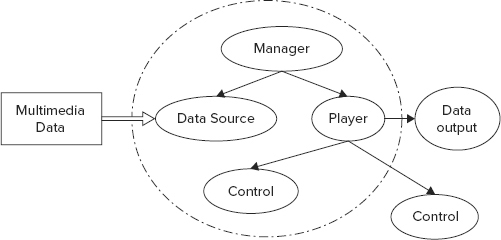

A modern multimedia framework on the major mobile platforms follows a paradigm similar to the one illustrated in Figure 9-1. This is a high-level architectural abstraction of the multimedia framework used on WP7, Android, and iOS. Each component in the figure may have a different name on a specific platform, but whatever it is called, you can find its conceptual mapping here. The components inside the dashed circle are usually encapsulated in a single class that handles the multimedia functions.

FIGURE 9-1: Conceptual multimedia framework architecture

There are basically four software components:

- Manager: The main coordinator for multimedia processing, it provides the factory used to create DataSource and Player objects.

- Data Source: This is the main input for receiving multimedia content, which could be a data file, an input stream, or another source.

- Player: It manages the multimedia content play actions like start, play, stop, pause, and resume, and controls the life cycle through its methods.

- Control: It enables seeking, skipping, forwarding, and rewinding functionalities, and controls the flow of multimedia contents.
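The following minimal plain-Java sketch makes the roles in Figure 9-1 concrete. The interfaces and classes (Manager, DataSource, Player, Control, SimplePlayer) are hypothetical names that mirror the figure; they are not any platform's real API. Following the dashed circle in the figure, the Control functions are folded into the player object.

```java
// Hypothetical types mirroring Figure 9-1; not any platform's real API.
interface DataSource {
    String location();                 // e.g. a file path or stream URL
}

interface Control {
    void seekTo(long positionMs);
    long position();
}

// As in the dashed circle of Figure 9-1, Control is folded into the player.
interface Player extends Control {
    void start();
    void pause();
    void stop();
    String state();
}

final class SimplePlayer implements Player {
    private final DataSource source;
    private String state = "Stopped";
    private long positionMs = 0;

    SimplePlayer(DataSource source) { this.source = source; }

    public void start() { state = "Playing"; }
    public void pause() { if (state.equals("Playing")) state = "Paused"; }
    public void stop()  { state = "Stopped"; positionMs = 0; }   // stopping rewinds
    public String state() { return state; }
    public void seekTo(long positionMs) { this.positionMs = Math.max(0, positionMs); }
    public long position() { return positionMs; }
    public DataSource source() { return source; }
}

// The Manager is the factory that creates DataSource and Player objects.
final class Manager {
    static DataSource createDataSource(String location) {
        return () -> location;
    }

    static Player createPlayer(DataSource source) {
        return new SimplePlayer(source);
    }
}
```

The application talks only to the Manager and the Player. Swapping a file-based DataSource for a stream-based one would not change the playback code.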

The conceptual architecture in Figure 9-1 applies mainly to audio and video media types. Images are another important media type, with a relatively simpler handling mechanism. All three platforms provide a view-based control or container for displaying images: on WP7 it is the Image control, on Android the ImageView widget, and on iOS the UIImageView class.

WP7 Multimedia

Let’s look at how the components shown in Figure 9-1 are implemented on WP7.

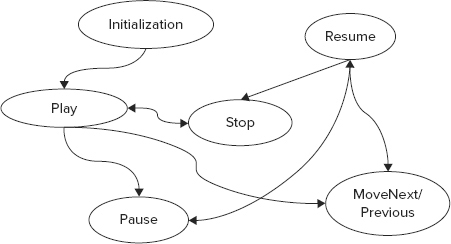

To implement the Player functions and add the media playback function into a WP7 application, you have two options. One is to use the Silverlight MediaElement class defined in the System.Windows.Controls namespace (http://msdn.microsoft.com/library/ms611595.aspx). The other option is to use the MediaPlayer class in the Microsoft.Xna.Framework.Media namespace (http://msdn.microsoft.com/library/dd254868.aspx). You’ll find an explanation of the WP7 sound and video playing details later in this chapter. Figure 9-2 shows the WP7 version of the Player object states.

FIGURE 9-2: Player object state model on WP7

The DataSource on WP7 is an abstraction layer for handling different types of codecs. It supplies the data and content for the player object to use, and it hides the details of data payload formats. On WP7, the DataSource role is played by the Source property of the MediaElement class or by the MediaLibrary class defined in the Microsoft.Xna.Framework.Media namespace (http://msdn.microsoft.com/library/microsoft.xna.framework.media.medialibrary.aspx).

On WP7, the Control functions shown in Figure 9-1 are actually included in the MediaElement and MediaPlayer classes, which not only expose methods and properties to play, pause, resume, and stop media, but also provide shuffle, repeat, play position, and visualization capabilities. For example, to control song playback, the MoveNext() and MovePrevious() methods move to the next or previous song in the queue and the IsMuted, IsRepeating, IsShuffled, and Volume properties are used to get and set playback options.
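The queue navigation described above can be modeled in a few lines. This hypothetical plain-Java sketch is not the XNA MediaPlayer API. PlayQueue, its wrap-around rule when repeating, and its seeded shuffle are all assumptions chosen for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Hypothetical song queue with MoveNext/MovePrevious-style navigation.
// The wrap-around and shuffle rules are assumptions, not XNA's documented behavior.
final class PlayQueue {
    private final List<String> songs;
    private List<Integer> order;      // indices into songs, in play order
    private int current = 0;          // position within order
    private boolean repeating = false;

    PlayQueue(List<String> songs) {
        this.songs = new ArrayList<>(songs);
        this.order = new ArrayList<>();
        for (int i = 0; i < songs.size(); i++) order.add(i);
    }

    void setRepeating(boolean repeating) { this.repeating = repeating; }

    // Rebuilds the play order (shuffled or not) while keeping the current song selected.
    void setShuffled(boolean shuffled, long seed) {
        String playing = currentSong();
        order = new ArrayList<>();
        for (int i = 0; i < songs.size(); i++) order.add(i);
        if (shuffled) Collections.shuffle(order, new Random(seed));
        current = order.indexOf(songs.indexOf(playing));
    }

    String currentSong() { return songs.get(order.get(current)); }

    void moveNext() {
        if (current < order.size() - 1) current++;
        else if (repeating) current = 0;               // wrap to the first song
    }

    void movePrevious() {
        if (current > 0) current--;
        else if (repeating) current = order.size() - 1; // wrap to the last song
    }
}
```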

On WP7, the Image control displays images in JPEG or PNG format. Because the JPEG decoder in WP7 is faster than the PNG decoder, you may want to use JPEG for opaque images; for transparent images, PNG is the only choice. When you include images in your WP7 application, as in some of the sample applications demonstrated previously, you can set their Build Action to either Resource or Content. With Resource, the images are embedded in the assembly (.dll file). The larger the application’s assembly, the slower the application launches. However, once the application has launched, embedded images show up more quickly than images built as Content. Weigh this trade-off against your application’s requirements when choosing the build action.
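The JPEG-versus-PNG rule above comes down to one question: does the image contain any transparent pixels? The following Java sketch performs that check, using java.awt.image.BufferedImage as a stand-in for decoded pixel data. It is not WP7 code, and the ImageFormatAdvisor class name is hypothetical.

```java
import java.awt.image.BufferedImage;

// Rule of thumb from the text: JPEG for opaque images, PNG when any pixel is transparent.
final class ImageFormatAdvisor {
    static boolean hasTransparency(BufferedImage img) {
        if (!img.getColorModel().hasAlpha()) return false;   // no alpha channel at all
        for (int y = 0; y < img.getHeight(); y++) {
            for (int x = 0; x < img.getWidth(); x++) {
                int alpha = (img.getRGB(x, y) >>> 24) & 0xFF; // top byte of ARGB
                if (alpha < 255) return true;
            }
        }
        return false;
    }

    static String suggestFormat(BufferedImage img) {
        return hasTransparency(img) ? "PNG" : "JPEG";
    }
}
```

An image with an alpha channel can still be fully opaque. That is why the sketch scans the pixels instead of only checking whether an alpha channel exists.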

iOS Multimedia

On iOS, you can use the Media Player framework to select and play audio and video. (AVAudioPlayer, from the AV Foundation framework, is another commonly used sound-playing object.) A commonly used media player in the Media Player framework is the MPMusicPlayerController class, which plays audio media items. Its methods include play(), pause(), beginSeekingForward(), beginSeekingBackward(), endSeeking(), skipToNextItem(), skipToBeginning(), skipToPreviousItem(), and stop().

If MPMusicPlayerController is instantiated as an iPodMusicPlayer object, the system's built-in iPod music player handles the audio playback functions. The user can then use the playback controls provided by the iPod application to control the iPod music player's state, such as repeat, shuffle, and the now-playing item. The following code obtains the iPod music player:

- (void)setMusicPlayState {
    MPMusicPlayerController *iPodController =
        [MPMusicPlayerController iPodMusicPlayer];
    // playPauseButton is assumed to be a UIButton outlet of this view controller
    playPauseButton.selected =
        (iPodController.playbackState == MPMusicPlaybackStatePlaying);
}

If MPMusicPlayerController is instantiated as an applicationMusicPlayer object, a new player is created as part of the application. It plays music locally within your application and does not act as the iPod music player, so the user’s interactions with this application player do not affect the system iPod player’s state. When the application moves to the background, the player stops if it was playing. The following code shows how to instantiate a normal application music player.

- (void)createMyMusicPlayer {
    // Keep this in an instance variable if you need the player beyond this method
    MPMusicPlayerController *myMusicPlayer =
        [MPMusicPlayerController applicationMusicPlayer];
}

iOS uses the UIImageView class to display one or more images, and it can also animate a series of images with a configurable duration and repeat count. To show a series of images, you set the object’s animationImages property to an array of one or more UIImage objects. Then you set the contentMode property (the display mode) to the desired display type (such as UIViewContentModeScaleAspectFit), and you set the animationDuration property to the number of seconds one pass through the series takes. Here is an example of a typical setup:

NSArray *myimagecollection = [NSArray arrayWithObjects:
    [UIImage imageNamed:@"IOS.png"], [UIImage imageNamed:@"Android.png"],
    [UIImage imageNamed:@"WP.png"], [UIImage imageNamed:@"WP7.png"], nil];
CGRect animatedframe = CGRectMake(0, 0, 600, 800);
UIImageView *myanimationView = [[UIImageView alloc] initWithFrame:animatedframe];
myanimationView.animationImages = myimagecollection;
myanimationView.contentMode = UIViewContentModeScaleAspectFit;
myanimationView.animationDuration = 6;

This code creates myimagecollection as an NSArray instance and myanimationView as a UIImageView instance. After you create the UIImageView object, you can set the repeat count and start the animation using the following code:

myanimationView.animationRepeatCount = 6;
[myanimationView startAnimating];

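When the UIImageView displays the PNG or JPEG image array, it plays the images as an animated slideshow. The view must be part of a visible view hierarchy (for example, added with [self.view addSubview:myanimationView]) before anything appears on screen. You can also call startAnimating from a touch handler so that the slideshow starts when the user touches the screen.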
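The following small plain-Java sketch shows what the UIImageView settings above mean in time. SlideshowTiming is hypothetical and is not UIKit code. It assumes that animationDuration is the time for one pass through all images, split evenly among them, and that animationRepeatCount is the number of passes. It models only positive repeat counts, because a repeat count of 0 (the default) repeats indefinitely.

```java
// Plain-Java arithmetic for the UIImageView slideshow settings (not UIKit code).
final class SlideshowTiming {
    static double secondsPerImage(int imageCount, double animationDuration) {
        return animationDuration / imageCount;
    }

    static double totalSeconds(double animationDuration, int repeatCount) {
        return animationDuration * repeatCount;
    }

    // Index of the image on screen at time t (seconds), or -1 once the animation has finished.
    static int imageIndexAt(double t, int imageCount, double animationDuration, int repeatCount) {
        if (t < 0 || t >= totalSeconds(animationDuration, repeatCount)) return -1;
        double intoCycle = t % animationDuration;                 // time within the current pass
        int index = (int) (intoCycle / secondsPerImage(imageCount, animationDuration));
        return Math.min(index, imageCount - 1);                   // guard against rounding at the edge
    }
}
```

With four images, animationDuration = 6, and animationRepeatCount = 6, each image is shown for 1.5 seconds and the slideshow runs for 36 seconds in total.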

Before iOS 4, you could play video only in full-screen mode. When working with iOS 4 you can embed videos in the view window as shown here:

- (void)playMovie:(NSURL *)myaddress {
    MPMoviePlayerController *media = [[MPMoviePlayerController alloc]
                                       initWithContentURL:myaddress];
    media.scalingMode = MPMovieScalingModeNone;
    media.controlStyle = MPMovieControlStyleEmbedded;   // controls suited to an embedded view
    // Embed the player's view in this view controller's view
    media.view.frame = self.view.bounds;
    [self.view addSubview:media.view];
    [[NSNotificationCenter defaultCenter] addObserver:self
        selector:@selector(mediacallback:)
        name:MPMoviePlayerPlaybackDidFinishNotification
        object:media];
    [media play];
}

- (void)mediacallback:(NSNotification *)mynotice {
    MPMoviePlayerController *media = [mynotice object];
    [[NSNotificationCenter defaultCenter] removeObserver:self
        name:MPMoviePlayerPlaybackDidFinishNotification
        object:media];
    [media.view removeFromSuperview];
    [media release];
}

This example shows how to use the MPMoviePlayerController class to manage the video player. Adding the player’s view as a subview embeds the video in the current view instead of playing it full screen. The code uses NSNotificationCenter to register for MPMoviePlayerPlaybackDidFinishNotification, so the system can tell the application when the movie is done playing. The mediacallback: method unregisters the observer, removes the player’s view, and releases the movie player object.

Android Multimedia

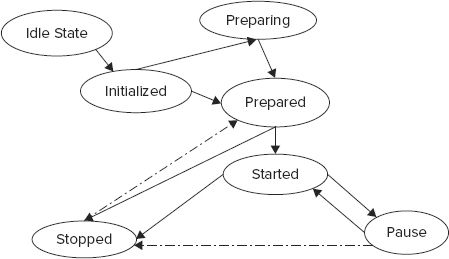

Android uses the MediaPlayer class (described at http://developer.android.com/reference/android/media/MediaPlayer.html) to control playback of audio and video files and streams. Figure 9-3 shows the media playback state diagram.

FIGURE 9-3: Android media player state diagram

When working with Android, the MediaPlayer object has the following states:

- Idle: A MediaPlayer object created with new, or one on which reset() has been called, is in the Idle state.

- Initialized: Calling setDataSource() moves the MediaPlayer object from the Idle state to the Initialized state.

- Preparing: This is a transient state. Calling prepareAsync() moves the MediaPlayer object into the Preparing state. The prepareAsync() method takes no parameters and returns immediately, so it does not block the calling thread. The thread can continue with other tasks while the player engine prepares, and the callback you registered (an OnPreparedListener) is notified when preparation finishes.

- Prepared: When the preparation started by prepareAsync() completes, the MediaPlayer object enters the Prepared state. Alternatively, calling the synchronous prepare() method places the object in the Prepared state. The prepare() method blocks the current thread until preparation finishes, so the next line of code runs only after the player is ready. This keeps the code logic in sequential order, at the cost of blocking the thread while the media is prepared.

- Started: Calling start() on a prepared player places the MediaPlayer object in the Started state. You can call isPlaying() to check whether the object is playing.

- Paused: Calling pause() places the MediaPlayer object in the Paused state. While the player is paused, you can reposition the current playback point (for example, with seekTo()). The transitions from Started to Paused and from Paused back to Started occur asynchronously in the player engine. Calling start() returns a paused MediaPlayer object to the Started state.

- Stopped: Calling stop() stops playback and moves a MediaPlayer object in the Started, Paused, or Prepared state into the Stopped state. To play again, you must call prepare() or prepareAsync() before calling start().
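The state rules above can be captured in a small model. The following sketch is plain Java, not android.media.MediaPlayer. PlayerStateModel is a hypothetical class that throws IllegalStateException for an out-of-order call. This is a simplification: the real player may instead move to an Error state, and it has additional states (End, Error, PlaybackCompleted) that this sketch omits.

```java
import java.util.EnumSet;
import java.util.Set;

// Plain-Java model of the MediaPlayer states listed above; not android.media.MediaPlayer.
final class PlayerStateModel {
    enum State { IDLE, INITIALIZED, PREPARING, PREPARED, STARTED, PAUSED, STOPPED }

    private State state = State.IDLE;   // a newly created player starts in Idle

    State state() { return state; }

    private void require(Set<State> allowed, String call) {
        if (!allowed.contains(state)) {
            throw new IllegalStateException(call + "() called in state " + state);
        }
    }

    void setDataSource() { require(EnumSet.of(State.IDLE), "setDataSource"); state = State.INITIALIZED; }
    void prepare()       { require(EnumSet.of(State.INITIALIZED, State.STOPPED), "prepare"); state = State.PREPARED; }
    void prepareAsync()  { require(EnumSet.of(State.INITIALIZED, State.STOPPED), "prepareAsync"); state = State.PREPARING; }
    // Stands in for the engine invoking the registered OnPreparedListener.
    void onPrepared()    { require(EnumSet.of(State.PREPARING), "onPrepared"); state = State.PREPARED; }
    void start()         { require(EnumSet.of(State.PREPARED, State.STARTED, State.PAUSED), "start"); state = State.STARTED; }
    void pause()         { require(EnumSet.of(State.STARTED, State.PAUSED), "pause"); state = State.PAUSED; }
    void stop()          { require(EnumSet.of(State.PREPARED, State.STARTED, State.PAUSED, State.STOPPED), "stop"); state = State.STOPPED; }
    void reset()         { state = State.IDLE; }
}
```

The model makes the Stopped rule visible: after stop(), start() fails until prepare() or prepareAsync() has run again.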

On Android, the MediaPlayer class assumes the roles of both the Manager and the Player in Figure 9-1. Its setDataSource() method gives the object access to the multimedia data source.

The Android SDK provides a set of audio and video APIs that developers can use to build rich multimedia functionality into an application. The android.media.MediaPlayer class can play audio files, video files, and media streams. Your application can use any of the formats listed in the “Supported Media Codecs” section of this chapter. These media files can be stored in an Android Package (.apk) file, on a Secure Digital (SD) card, or in a smartphone’s NAND flash or Embedded MultiMediaCard (EMMC) storage. The following code shows how to play an MP3 file stored in the .apk file as a raw resource. The static MediaPlayer.create() method returns a MediaPlayer object for that resource:

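MediaPlayer mySounder = MediaPlayer.create(this, R.raw.sound);
if (mySounder != null)
    mySounder.start(); // create() has already prepared the player

Because create() returns a player that has already been prepared, you can call start() right away. Calling prepare() on a player in the Prepared state throws an IllegalStateException. After you call stop(), however, you must call prepare() again before you can restart playback:

mySounder.stop();
mySounder.prepare(); // Stopped -> Prepared; declares IOException
mySounder.start();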

When the music files are stored on an SD card, in NAND flash, or in EMMC storage, you use the following code to obtain a MediaPlayer object for them:

MediaPlayer mySounder = new MediaPlayer();
mySounder.setDataSource("/AndroidSD/yourfun.mp3"); // declares IOException

Once the player has access to the file, mySounder can call prepare() and start() to set up and play the sound. Similarly, you can call pause() and stop() to pause and stop playback. The MediaPlayer object also supports event-driven notifications during playback. For instance, when the MediaPlayer object finishes playback, it fires an onCompletion event. You can release the MediaPlayer object and other related resources in the event handler as shown here:

@Override
public void onCompletion(MediaPlayer mySounder) {
    mySounder.release(); // free the player's resources once playback ends
}

mySounder.setOnCompletionListener(this);

Here the enclosing class (for example, your Activity) implements MediaPlayer.OnCompletionListener, which is why this is passed to setOnCompletionListener().

You can also use the android.widget.VideoView class, a wrapper around MediaPlayer, to play video on Android. This class can load videos from resources or content providers. It computes its measurements from the video so that it can be used in any layout manager, and it provides display options such as scaling and tinting. It can play the formats described in the “Supported Media Codecs” section of this chapter. To use it, add a VideoView element to the layout XML file:

<VideoView android:id="@+id/myViewer" android:layout_width="600px" android:layout_height="300px"/>

You implement video playback by calling the setVideoURI() method with the URI of the video file. You can also attach a playback controller by calling setMediaController(). Then call the start() method to begin playback as shown here:

VideoView myViewer = (VideoView) findViewById(R.id.myViewer);
myViewer.setVideoURI(Uri.parse("file:///androidSD/fun.3gp"));
myViewer.setMediaController(new MediaController(this));
myViewer.start();

If you want to pause or stop the video player, you can use the following code:

myViewer.pause();
myViewer.stopPlayback();

In addition, you can use the android.view.SurfaceView class to manage a drawing surface in a view hierarchy, and you can adjust the surface’s format and size as well. This class can place the surface at the proper position on the screen, which is something you can’t achieve with the VideoView component. To make the video player more flexible, you can integrate MediaPlayer with SurfaceView. Before using the SurfaceView component, you need to obtain its SurfaceHolder object by calling getHolder(), as shown here:

SurfaceView myViewer = (SurfaceView) findViewById(R.id.surfaceView);
SurfaceHolder myHolder = myViewer.getHolder();
myHolder.setFixedSize(200, 200);
// Needed for video on older Android versions; ignored on newer ones
myHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

If you don’t call setFixedSize(), the rest of the SurfaceView is black except for the default video area. When you call setFixedSize(), it fixes the surface to the size you specify. Whether you view this behavior as a bug or a feature, once setFixedSize() has been called, that fixed-size surface is scaled to fill the whole SurfaceView area.
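Because the surface is stretched to the SurfaceView’s area, a video whose aspect ratio differs from the view’s will look distorted. One common remedy, sketched here as an assumption rather than something this chapter prescribes, is to size the SurfaceView itself (through its layout parameters) to the largest rectangle that preserves the video’s aspect ratio. The AspectFit helper is hypothetical. Its inputs could come from MediaPlayer.getVideoWidth() and getVideoHeight() plus the container’s size; here they are plain ints.

```java
// Hypothetical helper: largest width x height inside the view that keeps the video's aspect ratio.
final class AspectFit {
    static int[] fit(int videoW, int videoH, int viewW, int viewH) {
        if (videoW <= 0 || videoH <= 0) return new int[] {viewW, viewH}; // video size not known yet
        // Compare aspect ratios by cross-multiplication to avoid floating point.
        if ((long) videoW * viewH > (long) viewW * videoH) {
            // Video is relatively wider: use the full width, letterbox top and bottom.
            return new int[] {viewW, (int) ((long) viewW * videoH / videoW)};
        } else {
            // Video is relatively taller (or equal): use the full height, pillarbox the sides.
            return new int[] {(int) ((long) viewH * videoW / videoH), viewH};
        }
    }
}
```

For example, a 320 × 240 video in a 600 × 300 area fits as 400 × 300.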

After the code completes this setup, you can use the MediaPlayer.setDisplay() method to put the video player in the SurfaceView area, as shown here:

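MediaPlayer myVideoPlayer = new MediaPlayer();
myVideoPlayer.setAudioStreamType(AudioManager.STREAM_MUSIC);
myVideoPlayer.setDisplay(myHolder);
myVideoPlayer.setDataSource("/AndroidSDCard/fun.3gp"); // absolute path; declares IOException
myVideoPlayer.prepare();
myVideoPlayer.start();

Calling setAudioStreamType() selects the audio stream (here, the music stream) that the player’s sound is routed to, which determines the volume control that applies. By calling setDataSource(), the myVideoPlayer object loads a video file stored at the location you specify. To pause and stop, myVideoPlayer can call the pause() and stop() methods, respectively. The surface must already exist when you call setDisplay(), so in practice this code typically runs in the surfaceCreated() method of a SurfaceHolder.Callback registered with myHolder.addCallback().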

Supported Media Codecs

Smartphone users care a lot about sound, voice, and video quality when they play multimedia such as video clips. Developers therefore need to pay attention to which media codecs the OS supports, because the choice affects the user experience in areas such as latency, color fidelity, and texture detail. For example, sports content needs a codec that encodes fast motion at high quality. Different codecs also affect the device itself differently, influencing power consumption, talk time, and more.

There are audio codecs and video codecs. Some media streams contain audio data, video data, and metadata that will be used for synchronization of audio and video.

iOS supports the following audio formats:

- Advanced Audio Coding (AAC)

- Protected AAC (From iTunes Store)

- High-Efficiency Advanced Audio Coding (HE-AAC)

- Moving Picture Experts Group (MPEG) Layer-3 Audio (MP3)

- MP3 Variable Bit Rate (VBR)

- Audible (formats 2, 3, and 4, Audible Enhanced Audio, Audible Enhanced Audiobook File (AAX), and AAX+)

- Apple Lossless

- Audio Interchange File Format (AIFF)

- WAV (wave)

iOS also supports the following video formats:

- H.264 video, up to 720p (1280 by 720 pixels), 30 frames per second, Main Profile level 3.1 with Advanced Audio Coding Low-Complexity (AAC-LC) audio up to 160 Kbps, 48kHz, stereo audio in .m4v, .mp4, and .mov file formats

- MPEG-4 video, up to 2.5 Mbps, 640 by 480 pixels, 30 frames per second, Simple Profile with AAC-LC audio up to 160 Kbps per channel, 48kHz, stereo audio in .m4v, .mp4, and .mov file formats

- Motion Joint Photographic Experts Group (M-JPEG) up to 35 Mbps, 1280 by 720 pixels, 30 frames per second, audio in μ-law, Pulse-Code Modulation (PCM) stereo audio in .avi file format

For additional details about the supported media format on iOS, please refer to http://www.apple.com/iphone/specs.html.

Android provides built-in support for the following audio formats:

- MP3

- AAC

- AMR

- AAC+

- PCM/WAV

- Musical Instrument Digital Interface (MIDI)

- Ogg Vorbis

The Android system also supports the following video formats:

- H.264 AVC

- H.263

- MPEG-4 SP

- VP8

For additional details about the supported media format on Android, please refer to http://developer.android.com/guide/appendix/media-formats.html.

WP7 offers broad support for audio and video codecs, as described at http://msdn.microsoft.com/library/ff462087.aspx.

WP7 supports the following audio formats:

- Low Complexity AAC (AAC-LC)

- Linear Pulse Code Modulation (LPCM)

- Microsoft Adaptive Differential Pulse Code Modulation (MS ADPCM)

- Interactive Multimedia Association ADPCM (IMA ADPCM)

- Version 1 of HE-AAC using spectral band replication (HE-AAC v1)

- Version 2 of HE-AAC, using both spectral band replication and parametric stereo to enhance the compression efficiency of stereo signals (HE-AAC v2)

- MP3

- MP3 Variable Bit Rate (VBR)

- Adaptive Multi-Rate Narrowband audio codec (AMR-NB)

- Windows Media Audio 10 Professional, Microsoft’s audio data compression technology (WMA 10 Professional)

- Version 9 of Windows Media Audio (WMA Standard v9)

WP7 supports the following video formats:

- MPEG-4

- Windows Media Video’s Video Codec Simple Profile (WMV VC-1 SP)

- Windows Media Video’s Video Codec Main Profile (WMV VC-1 MP)

- Windows Media Video’s Video Codec Advanced Profile (WMV VC-1 AP)

- MPEG-4 Part 2 Simple Profile

- MPEG-4 Part 2 Advanced Simple Profile

- MPEG-4 Part 10 (MPEG-4 AVC, H.264) Level 3.0 - Baseline Profile

- MPEG-4 Part 10 (MPEG-4 AVC, H.264) Level 3.0 - Main Profile

- MPEG-4 Part 10 (MPEG-4 AVC, H.264) Level 3.0 - High Profile

- H.263, a video compression standard designed as a low-bit-rate format for videoconferencing

All three of these platforms also support the JPEG, Portable Network Graphics (PNG), Graphics Interchange Format (GIF), Bitmap (BMP), and Tagged Image File Format (TIFF) picture codecs. On WP7, however, the Silverlight Image control itself displays only JPEG and PNG images.
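When you need one audio format that plays on all three platforms, you can treat the audio lists above as sets and intersect them. In this Java sketch, the codec names are normalized by hand: Android’s AAC+ and WP7’s HE-AAC v1/v2 both become HE-AAC; WAV, PCM/WAV, and LPCM all become PCM/WAV; AMR-NB becomes AMR; and the two WMA entries become WMA. That normalization is an assumption, and CodecSupport is a hypothetical class.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Audio formats from this section's lists, with names normalized by hand (an assumption).
final class CodecSupport {
    static final Map<String, Set<String>> AUDIO = Map.of(
        "iOS", Set.of("AAC", "Protected AAC", "HE-AAC", "MP3", "MP3 VBR", "Audible",
                      "Apple Lossless", "AIFF", "PCM/WAV"),
        "Android", Set.of("MP3", "AAC", "AMR", "HE-AAC", "PCM/WAV", "MIDI", "Ogg Vorbis"),
        "WP7", Set.of("AAC", "PCM/WAV", "MS ADPCM", "IMA ADPCM", "HE-AAC", "MP3", "MP3 VBR",
                      "AMR", "WMA"));

    // Formats listed for every requested platform.
    static Set<String> commonTo(List<String> platforms) {
        Set<String> result = null;
        for (String p : platforms) {
            Set<String> formats = AUDIO.get(p);
            if (formats == null) throw new IllegalArgumentException("unknown platform: " + p);
            if (result == null) result = new LinkedHashSet<>(formats);
            else result.retainAll(formats);   // keep only formats this platform also lists
        }
        return result == null ? Set.of() : result;
    }
}
```

With these sets, AAC, HE-AAC, MP3, and PCM/WAV come out as common to all three platforms. AMR is common only to Android and WP7.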

This section discusses how to play audio on WP7. You’ll discover how to build applications that offer integrated sound, photo, and graphics experiences.

WP7 audio development is based on two programming models: the Silverlight object model (System.Windows.Controls.MediaElement) and the XNA Framework model used for games (Microsoft.Xna.Framework.Audio.SoundEffect).

The two models suit different kinds of applications. For a web, business, or personal .NET application, Silverlight’s MediaElement object is the easier choice. For game sound effects, consider XNA’s SoundEffect object instead.

Let’s start with the MediaElement class. Note that the MediaElement object can also play video.

Playing Sounds Using MediaElement

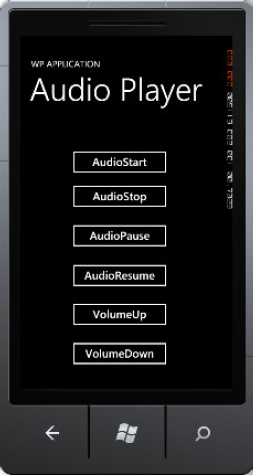

The simplest way to play audio on WP7 is to use the MediaElement object, a .NET Framework object that is part of the Silverlight object model. This control appears as a rectangle in the Visual Studio and Expression Blend designer windows. Let’s use a sample application to demonstrate how to play sounds with it on WP7. Figure 9-4 shows the UI of this sample application.

FIGURE 9-4: Sample application using MediaElement to play audio

The sample application WP7AudioPlayerDemo has a simple UI with six buttons to demonstrate the basic audio playback controls (start, stop, pause, and resume) and volume control (volume up and volume down). You can use the following steps to build this sample application.

1. Create a Windows Phone project and type WP7AudioPlayerDemo as the project name. For details of how to build a WP7 application, please refer to Chapter 2.

2. Drag the application’s UI components onto the UI designer canvas. The main UI components used in this sample application are Button controls and a MediaElement control. As shown in Figure 9-4, each button’s label indicates its function.

3. Create event handlers for action commands or events associated with each button. Listing 9-1 shows the core UI layout for the content panel and the event handler for each button.

LISTING 9-1: Core UI components, media element, and event handlers for the sample audio player, WP7AudioPlayerDemo\WP7AudioPlayerDemo\MainPage.xaml

<Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">
    <!-- StackPanel stacks the buttons; in a bare Grid they would overlap in one cell -->
    <StackPanel>
        <Button Content="AudioStart" Name="button1" Click="AudioStart_Click" IsEnabled="True"/>
        <Button Content="AudioStop" Name="button2" Click="AudioStop_Click" IsEnabled="True"/>
        <Button Content="AudioPause" Name="button3" Click="AudioPause_Click" IsEnabled="True"/>
        <Button Content="AudioResume" Name="button6" Click="AudioResume_Click" IsEnabled="True"/>
        <Button Content="VolumeUp" Name="button4" Click="VolumeUp_Click" IsEnabled="True"/>
        <Button Content="VolumeDown" Name="button5" Click="VolumeDown_Click" IsEnabled="True"/>
    </StackPanel>
</Grid>

<MediaElement x:Name="Audio1" AutoPlay="False"
              MediaOpened="Audio1_MediaOpened"/>

The MediaElement tag shown in Listing 9-1 has the AutoPlay attribute set to False, which means the application won’t play the media until the user takes some action. The MediaOpened attribute wires the MediaOpened event to the Audio1_MediaOpened() event handler, shown here, which starts playback once the media has been opened.

private void Audio1_MediaOpened(object sender, RoutedEventArgs e)

{

Audio1.Play();

}

Code snippet WP7AudioPlayerDemo\WP7AudioPlayerDemo\MainPage.xaml.cs
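With AutoPlay off, assigning a source only opens the media, so the sample starts playback from the MediaOpened handler, after the stream has been opened and its headers read. The following plain-Java model illustrates that ordering. MediaElementModel and its state names are hypothetical. Unlike Silverlight, which raises MediaOpened asynchronously, the model raises the event synchronously for simplicity.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical plain-Java model of the AutoPlay="False" + MediaOpened pattern (not Silverlight).
final class MediaElementModel {
    private final boolean autoPlay;
    private final List<Consumer<MediaElementModel>> mediaOpenedHandlers = new ArrayList<>();
    private String source;
    private String state = "Closed";

    MediaElementModel(boolean autoPlay) { this.autoPlay = autoPlay; }

    void onMediaOpened(Consumer<MediaElementModel> handler) { mediaOpenedHandlers.add(handler); }

    void setSource(String uri) {
        source = uri;
        state = "Stopped";                                    // opened, but not playing yet
        for (Consumer<MediaElementModel> h : mediaOpenedHandlers) h.accept(this); // raise MediaOpened
        if (autoPlay) play();
    }

    void play() { if (source != null) state = "Playing"; }

    String state() { return state; }
}
```

Without a MediaOpened handler (and with AutoPlay off), setting the source leaves the element opened but silent.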

Table 9-1 contains a summary of the main events for the MediaElement class.

TABLE 9-1: MediaElement Main Events

| EVENT | DEFINITION |
| --- | --- |
| BufferingProgressChanged | Occurs whenever the BufferingProgress property changes. Your code can handle this event to run its own logic. |
| CurrentStateChanged | Occurs whenever the CurrentState property changes. |
| DownloadProgressChanged | Occurs whenever the DownloadProgress property changes. |
| GotFocus | Occurs when a UIElement receives focus. |
| KeyDown/KeyUp | Occur when the user presses (KeyDown) or releases (KeyUp) a key while the UIElement has focus. |
| LayoutUpdated | Occurs when the layout of the Silverlight visual tree changes. |
| Loaded | Occurs when the FrameworkElement has been constructed and added to the object tree. |
| LostFocus | Occurs when a UIElement loses focus. |
| MediaEnded | Occurs when the MediaElement is no longer playing audio or video, for example when playback reaches the end of the media. |
| MediaFailed | Occurs when there is an error associated with the media source. |
| MediaOpened | Occurs after the media stream has been validated and opened and the file headers have been read. |
| SizeChanged | Occurs when either the ActualHeight or the ActualWidth property of a FrameworkElement changes value. |
| Unloaded | Occurs when the MediaElement is no longer connected to the main object tree. |

If the AutoPlay attribute shown in Listing 9-1 is set to True, the application plays the audio when the page loads. You can specify the audio source statically in the XAML file. If the audio source is a file (such as an .mp3 file or a .wav file) packaged within your application, you should add it to your project first. You can right-click the project entry in Solution Explorer and choose Add ⇒ Existing Item from the context menu. You’ll see the Add Existing Item dialog box where you can locate the audio file you want to add. After you select the item you want to add, click Add and the IDE will add it to Solution Explorer for you. After that, you should set the BuildAction property to Content. For example, the following code adds JackieSound.WAV to the MediaElement.

<MediaElement x:Name="Audio1" AutoPlay="False" Source="JackieSound.WAV"
              MediaOpened="Audio1_MediaOpened"/>

You don’t have to specify the media source statically in the XAML file; you can also set it dynamically in code. Compared with a static source, loading the media dynamically lets the application choose the file on the fly, for example after prompting the user with a dialog box or other UI. This approach is more flexible, at the possible cost of some performance.

private void AudioStart_Click(object sender, RoutedEventArgs e)

{

Audio1.Source = new Uri("JackieSound.WAV", UriKind.Relative);

Audio1.Position = new TimeSpan(0);

}

Code snippet WP7AudioPlayerDemo\WP7AudioPlayerDemo\MainPage.xaml.cs

Because setting the source will reset the audio’s position (the current progress through the audio’s playback time) to 00:00:00, if you want to locate a specific start point you can set the Position property after you set the source.

In addition to an audio file, the source can be an audio stream specified by an absolute or relative URI. An absolute URI is a complete reference to the resource, while a relative URI depends on a previously defined base URI. Here’s an example that uses an absolute URI as the source and sets the playback position to the beginning:

private void AudioStart_Click(object sender, RoutedEventArgs e)
{
    Audio1.Source = new Uri(
        "http://dc232.4shared.com/img/462949607/ed97b821/dlink__2Fdownload_2FiqCUthcU_3Ftsid_3D20101227-45361-f962eca/preview.mp3",
        UriKind.Absolute);
    Audio1.Position = new TimeSpan(0);
}

You’ve now seen several MediaElement properties; Table 9-2 summarizes the main ones.

TABLE 9-2: MediaElement Main Properties

| PROPERTY | DEFINITION |
| --- | --- |
| ActualHeight | Gets the rendered height of the FrameworkElement. This property is not applicable to audio playback. |
| ActualWidth | Gets the rendered width of the FrameworkElement. This property is not applicable to audio playback. |
| AutoPlay | When set to True, plays the media specified by the Source property automatically; otherwise, the media does not play until Play() is called. |
| Balance | Gets or sets the ratio of volume across stereo speakers. Some OEM phones have stereo speakers. |
| BufferingProgress | Indicates the current buffering progress, as a value from 0 to 1. When loading multimedia content, the player stores content in a buffer before playing it; the application can monitor this property to track how much content is buffered. |
| BufferingTime | Gets or sets the amount of time to buffer. |
| CacheMode | Indicates that rendered content should be cached when possible (for example, as a bitmap). Caching can improve rendering performance. |
| CanPause | Indicates whether the media can be paused when the Pause() method is called. If CanPause is False after the MediaOpened event is raised, the application can change how the Pause button is displayed. |
| CanSeek | Indicates whether the media can be repositioned by setting the Position property. It is False for live streaming media. The application can use it to determine which capabilities are available at a given time and show the proper UI. |
| CurrentState | Indicates the MediaElement status. This property affects the behavior of MediaElement methods such as Play() and Stop(). For example, when the player is in the Buffering state, the application can’t call Play() until the state changes to another state such as Playing or Paused. |
| DownloadProgress | Indicates how much of the remote content has been downloaded, as a value from 0 to 1. |
| IsMuted | Gets or sets whether the audio is muted. |
| Name | Gets or sets the MediaElement object’s name. |
| NaturalDuration | Gets the duration of the opened media file. For live media, it returns Automatic. |
| Position | Gets or sets the current playback position of the MediaElement content. |
| Source | Gets or sets the media source, usually as a URI. |
| Volume | Gets or sets the volume, from 0 to 1 (the default is 0.5). An application’s volume slider can use this property to control volume. |

When the application plays music, the user can increase or decrease the volume as needed. The VolumeUp_Click() and VolumeDown_Click() methods contain the code required to change the volume. The player uses the AudioStart_Click() and AudioStop_Click() methods to start or stop audio playback. Additionally, the player calls the AudioPause_Click() and AudioResume_Click() methods to pause or resume play. The code for supporting these functions is shown in Listing 9-2.

This example also reveals a limitation of WP7 media support: the pause function works for .mp3 files, but not for .wav files.

LISTING 9-2: Main implementations for the sample audio player, WP7AudioPlayerDemo\WP7AudioPlayerDemo\MainPage.xaml.cs

```csharp
using System;
using System.Windows;
using Microsoft.Phone.Controls;

namespace WindowsPhoneAudio1
{
    public partial class MainPage : PhoneApplicationPage
    {
        // Volume step and limits; MediaElement.Volume ranges from 0 to 1.
        double volumeChange = 0.1;
        double volumeMax = 1.0;
        double volumeMin = 0;

        // Playback position saved when the user pauses.
        TimeSpan timeSpan;

        public MainPage()
        {
            InitializeComponent();
        }

        // Audio1 is the MediaElement declared in MainPage.xaml.
        // Start playing as soon as the media has been opened.
        private void Audio1_MediaOpened(object sender, RoutedEventArgs e)
        {
            Audio1.Play();
        }

        private void AudioStart_Click(object sender, RoutedEventArgs e)
        {
            // Setting Source opens the media; MediaOpened then starts playback.
            Audio1.Source = new Uri("JackieSound.WAV", UriKind.Relative);
            Audio1.Position = TimeSpan.Zero;
        }

        private void AudioStop_Click(object sender, RoutedEventArgs e)
        {
            Audio1.Stop();
        }

        private void AudioPause_Click(object sender, RoutedEventArgs e)
        {
            // Remember where playback was paused.
            Audio1.Pause();
            timeSpan = Audio1.Position;
        }

        private void AudioResume_Click(object sender, RoutedEventArgs e)
        {
            // Restore the saved position before resuming.
            Audio1.Position = timeSpan;
            Audio1.Play();
        }

        private void VolumeUp_Click(object sender, RoutedEventArgs e)
        {
            // Clamp so the volume never goes above the maximum.
            Audio1.Volume = Math.Min(volumeMax, Audio1.Volume + volumeChange);
        }

        private void VolumeDown_Click(object sender, RoutedEventArgs e)
        {
            // Clamp so the volume never goes below the minimum.
            Audio1.Volume = Math.Max(volumeMin, Audio1.Volume - volumeChange);
        }
    }
}
```

AudioStart_Click() defines which media file to load and the start position; playback begins in Audio1_MediaOpened() once the media has been opened. When the Pause button is clicked, the AudioPause_Click() method is invoked. Similarly, when the Resume, Stop, Volume Up, and Volume Down buttons are clicked, the corresponding AudioResume_Click(), AudioStop_Click(), VolumeUp_Click(), and VolumeDown_Click() methods are called.
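Stepping the volume by a fixed 0.1 has two pitfalls: checking the limit before adding the step can overshoot the 0-to-1 range (0.95 + 0.1 = 1.05), and repeated floating-point additions drift (0.1 + 0.2 is not exactly 0.3 as a double). A small helper that clamps and rounds keeps the arithmetic predictable. VolumeControl and StepVolume are hypothetical names, written as plain C# so the logic can be checked outside the phone; in the page, the result would be assigned to Audio1.Volume.

```csharp
using System;

public static class VolumeControl
{
    // Limits matching the MediaElement.Volume range.
    public const double Min = 0.0;
    public const double Max = 1.0;

    // Add delta (positive or negative) to the current volume and clamp the result.
    public static double StepVolume(double current, double delta)
    {
        // Round to two decimals so repeated 0.1 steps don't accumulate drift.
        double next = Math.Round(current + delta, 2);
        return Math.Max(Min, Math.Min(Max, next));
    }
}
```

Starting from 0 and pressing Volume Up ten times lands exactly on 1.0, and further presses leave it there.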

Table 9-3 contains a summary of the methods you’ll use most often. You can find the complete listing of all the events, properties and methods at http://msdn.microsoft.com/library/system.windows.controls.mediaelement.aspx.

TABLE 9-3: MediaElement Main Methods

| METHOD | DEFINITION |

| Pause | Pauses the play action when the media is playing. Use the Play() method to resume play. |

| Play | Starts playing the media content according to the current state. If the media player is stopped, calling Play() starts the media from the beginning. If the media player is paused, calling Play() resumes playback from the paused position. |

| SetSource(MediaStreamSource) | Sets the media source from a MediaStreamSource object. MediaStreamSource is an abstract class, so you supply your own subclass that delivers the media data to the MediaElement. If you define the MediaElement source in the .xaml file and also set it in your C# code, the source set in the C# code wins. |

| SetSource(Stream) | Sets the media source from a Stream object. Unlike the Source property, which relies on a URI, this overload accepts a media stream that the application already has. For example, you can download the media with the WebClient APIs and pass the resulting stream to SetSource(). |

| Stop | Stops playing the media content and resets the media position to the beginning of the media stream. |
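The Play(), Pause(), and Stop() rules in Table 9-3 amount to a small state machine over the playback position. The sketch below models only those documented position rules. PlaybackModel is hypothetical and is not how MediaElement is implemented; its Advance() method simply stands in for the passage of time, so the semantics can be checked in isolation.

```csharp
using System;

public enum PlaybackState { Stopped, Playing, Paused }

// Hypothetical model of the position rules described in Table 9-3.
public class PlaybackModel
{
    public PlaybackState State { get; private set; } = PlaybackState.Stopped;
    public TimeSpan Position { get; private set; } = TimeSpan.Zero;

    // From Stopped, play from the beginning; from Paused, resume in place.
    public void Play()
    {
        if (State == PlaybackState.Stopped)
            Position = TimeSpan.Zero;
        State = PlaybackState.Playing;
    }

    // Pause keeps the current position.
    public void Pause()
    {
        if (State == PlaybackState.Playing)
            State = PlaybackState.Paused;
    }

    // Stop resets the position to the start of the stream.
    public void Stop()
    {
        State = PlaybackState.Stopped;
        Position = TimeSpan.Zero;
    }

    // Simulate time passing; the position only moves while playing.
    public void Advance(TimeSpan elapsed)
    {
        if (State == PlaybackState.Playing)
            Position += elapsed;
    }
}
```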

Playing Sounds Using SoundEffect

The SoundEffect class in the Microsoft.Xna.Framework.Audio namespace provides another way to play audio on WP7. Unlike MediaElement, SoundEffect is not a UI element and cannot be declared in XAML, so you create SoundEffect objects directly in code. Although the class is provided by the XNA assembly, you can also use it in a Silverlight application; in that case, the application must call FrameworkDispatcher.Update() regularly (for example, from a DispatcherTimer) for XNA audio to work.

SoundEffect supports only .wav files, but you can convert .mp3 and .mp4 files into the .wav format with one of the many available conversion tools, such as Audacity (http://audacity.sourceforge.net/). Keep in mind that a .wav file is much larger than the equivalent .mp3 or .mp4 file, because .mp3 and .mp4 are compressed formats. Therefore, if you aren't using the media purely for game purposes, consider playing it with the MediaElement object, as described in the "Playing Sounds Using MediaElement" section of this chapter, to avoid the cost of larger uncompressed files.
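To put numbers on the size difference: uncompressed PCM audio grows with sample rate, channel count, and bit depth, while a constant-bit-rate compressed stream such as MP3 grows only with its bit rate. The sketch below computes both. The CD-quality parameters (44,100 Hz, 16-bit, stereo) and the 128 kbps MP3 rate used in the usage note are illustrative assumptions, and the calculation ignores the small WAV header and MP3 framing overhead.

```csharp
using System;

public static class AudioSize
{
    // Raw PCM bytes: samples per second * channels * bytes per sample * seconds.
    public static long PcmBytes(int sampleRate, int channels, int bitsPerSample, int seconds)
    {
        return (long)sampleRate * channels * (bitsPerSample / 8) * seconds;
    }

    // Bytes of a constant-bit-rate compressed stream of the same length.
    public static long CbrBytes(int kilobitsPerSecond, int seconds)
    {
        return (long)kilobitsPerSecond * 1000 / 8 * seconds;
    }
}
```

One minute of CD-quality PCM, AudioSize.PcmBytes(44100, 2, 16, 60), is 10,584,000 bytes, while AudioSize.CbrBytes(128, 60) is 960,000 bytes, roughly eleven times smaller.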

Just as you added the audio source file into the application in the last sample project, you can add the .wav files to your application project for the SoundEffect object. Then you can use the Load() method of the ContentManager to load the sound effect. The Play() method of the SoundEffect object can be used to play the sound:

```csharp
using Microsoft.Xna.Framework.Audio;

// Inside a class derived from Microsoft.Xna.Framework.Game,
// where Content is the game's ContentManager.

// Declare the SoundEffect object
SoundEffect sound;

// Load the "busy" sound asset
sound = Content.Load<SoundEffect>("busy");

// Play the sound
sound.Play();
```

You can also open a stream to a .wav file by calling TitleContainer.OpenStream() with the name of the file, and then pass that stream to the SoundEffect.FromStream() method to create the SoundEffect object.
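SoundEffect.FromStream() is particular about its input. As we understand the XNA 4.0 documentation, the stream must hold a RIFF wave file with PCM data that is mono or stereo, 8 or 16 bits per sample, and sampled at 8,000 to 48,000 Hz. The following pre-flight check is a hypothetical helper (WavCheck is not part of XNA) that reads the header with standard .NET types and reports the first violated assumption, so a converted file can be tested before it is deployed. Treat the limits in the code as assumptions to verify against the official documentation.

```csharp
using System;
using System.IO;
using System.Text;

public static class WavCheck
{
    // Returns null if the header looks acceptable, otherwise a reason.
    // Assumed limits: PCM, 1 or 2 channels, 8 or 16 bits, 8000-48000 Hz.
    public static string Validate(Stream stream)
    {
        using (var reader = new BinaryReader(stream, Encoding.ASCII, leaveOpen: true))
        {
            if (ReadTag(reader) != "RIFF") return "not a RIFF file";
            reader.ReadUInt32();                      // overall RIFF size
            if (ReadTag(reader) != "WAVE") return "not a WAVE file";

            // Walk the chunks until the "fmt " chunk is found.
            while (reader.BaseStream.Position + 8 <= reader.BaseStream.Length)
            {
                string id = ReadTag(reader);
                uint size = reader.ReadUInt32();
                if (id != "fmt ")
                {
                    // Skip this chunk; chunk data is padded to an even length.
                    reader.BaseStream.Seek(size + (size & 1), SeekOrigin.Current);
                    continue;
                }

                ushort format = reader.ReadUInt16();  // 1 = PCM
                ushort channels = reader.ReadUInt16();
                uint sampleRate = reader.ReadUInt32();
                reader.ReadUInt32();                  // byte rate
                reader.ReadUInt16();                  // block align
                ushort bits = reader.ReadUInt16();

                if (format != 1) return "not PCM";
                if (channels < 1 || channels > 2) return "must be mono or stereo";
                if (bits != 8 && bits != 16) return "must be 8 or 16 bits";
                if (sampleRate < 8000 || sampleRate > 48000) return "sample rate out of range";
                return null;
            }
            return "no fmt chunk";
        }
    }

    // Read a four-character chunk identifier.
    static string ReadTag(BinaryReader reader)
    {
        return Encoding.ASCII.GetString(reader.ReadBytes(4));
    }
}
```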

Table 9-4 contains a summary of the main SoundEffect properties and methods. You can see the entire list at http://msdn.microsoft.com/library/dd282429.aspx.

TABLE 9-4: SoundEffect Main Properties and Methods

| PROPERTY | DEFINITION |

| Duration | Gets the media length. This is a read-only property. |

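| MasterVolume | Gets or sets the master volume, from 0 to 1, applied to all sound effects. This is a static property. |

| SpeedOfSound | Gets or sets the speed of sound used in the Doppler calculations for 3D audio, which lets you simulate different environments. Higher values decrease Doppler effects. This is a static property. |

| METHOD | DEFINITION |

| FromStream | Creates a SoundEffect object from a stream that contains wave data. This is a static method. |

| Play | Plays the sound effect and returns a Boolean value that indicates whether playback started. |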
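SpeedOfSound matters only for 3D positional audio, where it enters the Doppler calculation. The textbook Doppler relation for a stationary listener and a source approaching at speed v is f' = f · c / (c − v), where c is the speed of sound. The sketch below uses that textbook formula, not necessarily the exact computation XNA performs, to show why raising c shrinks the pitch shift. Speeds are in whatever units per second the game uses.

```csharp
using System;

public static class Doppler
{
    // Observed/emitted frequency ratio for a source moving toward a
    // stationary listener at sourceSpeed, given the speed of sound.
    public static double PitchRatio(double speedOfSound, double sourceSpeed)
    {
        if (sourceSpeed >= speedOfSound)
            throw new ArgumentOutOfRangeException(nameof(sourceSpeed),
                "The source must move slower than sound.");
        return speedOfSound / (speedOfSound - sourceSpeed);
    }
}
```

With a source moving at 30 units per second, a speed of sound of 343 gives a ratio of about 1.096, while doubling the speed of sound to 686 reduces it to about 1.046.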

Sound, Picture, and Graphics Integration

In many cases, especially for games, you need to integrate audio elements with other media elements such as pictures and graphics. It is useful to understand how you can bundle these media elements together in your applications.

In this section, a sample application, MediaPicker, shows the tight integration between sound, picture, and graphics elements: two pictures move around the XNA game canvas, and a sound plays when they collide. The main purpose of this sample is to demonstrate how to manipulate these media elements rather than how to develop a game, so the discussion focuses on handling the media elements. Figure 9-5 is a screenshot of this sample application. Because this is an XNA application, you can create the MediaPicker project by following the steps introduced in Chapter 8. The game loop is handled by the Media class, defined in Listing 9-3.

FIGURE 9-5: WP7 sound and picture play

As mentioned in Chapter 8, to run XNA graphics on the WP7 emulator, your computer's graphics card must support DirectX 10 or above, and its driver must use the WDDM 1.1 driver model or above. Otherwise, you can still compile the code but cannot test it on the emulator.

Note that none of the sample code in this chapter will run on an emulator that does not meet these driver requirements. However, you will be able to run the sample applications on a physical Windows Phone 7 device.

The XNA classes that handle pictures, graphics, and audio are in the Microsoft.Xna.Framework.Media, Microsoft.Xna.Framework.Graphics, and Microsoft.Xna.Framework.Audio namespaces. To access the media resources on a WP7 device, such as pictures, songs, and videos, you can use the MediaLibrary class in the Microsoft.Xna.Framework.Media namespace. For example, the Pictures property of the MediaLibrary class provides access to all the pictures in the Pictures Hub of your WP7 device. The Microsoft.Xna.Framework.Audio namespace provides the SoundEffect class described in the "Playing Sounds Using SoundEffect" section. Listing 9-3 shows the fields and constructor used in this sample application.

LISTING 9-3: Property definitions used in the game, MediaPicker\MediaPicker\Media.cs

```csharp
using System;
using System.IO;
using Microsoft.Xna.Framework;
using Microsoft.Xna.Framework.Audio;
using Microsoft.Xna.Framework.Graphics;
using Microsoft.Xna.Framework.Input;
using Microsoft.Xna.Framework.Media;

namespace MediaPicker
{
    /// <summary>
    /// SoundEffect and Picture Demo Game
    /// </summary>
    public class Media : Microsoft.Xna.Framework.Game
    {
        GraphicsDeviceManager mediaGraph;
        SpriteBatch mediaCanvas;
        MediaLibrary mediaLibrary = new MediaLibrary();

        // Textures holding the two pictures currently on screen.
        Texture2D mediabox1;
        Texture2D mediabox2;

        // Top-left positions and speeds (pixels per second) of the pictures.
        Vector2 mediaPosition1;
        Vector2 mediaPosition2;
        Vector2 mediaSpeed1 = new Vector2(50.0f, 50.0f);
        Vector2 mediaSpeed2 = new Vector2(100.0f, 100.0f);

        int media1Height;
        int media1Width;
        int media2Height;
        int media2Width;

        bool isPicture;
        SoundEffect sound;
        Random random;

        public Media()
        {
            mediaGraph = new GraphicsDeviceManager(this);
            Content.RootDirectory = "Content";
            random = new Random();

            // Frame rate is 30 fps by default for Windows Phone.
            TargetElapsedTime = TimeSpan.FromTicks(333333);
        }
    }
}
```

The mediaGraph object handles the configuration and management of the graphics device. The mediaCanvas object draws sprites on the screen. The mediaLibrary object provides access to the pictures stored on the device. In this sample application, two pictures at a time are displayed using two Texture2D objects (mediabox1 and mediabox2). The pictures are chosen at random and move around the screen, bouncing off its edges. The sound object plays a sound when the two pictures collide. The application uses the Initialize() and LoadContent() methods to initialize the game, as shown in Listing 9-4.

LISTING 9-4: Initialize the game, MediaPicker\MediaPicker\Media.cs

```csharp
protected override void Initialize()
{
    // Initializes any components; the base implementation also calls LoadContent().
    base.Initialize();
}

protected override void LoadContent()
{
    // Create a new SpriteBatch (mediaCanvas), which can be used to draw textures.
    mediaCanvas = new SpriteBatch(GraphicsDevice);

    // Pick two pictures from the media library.
    ShowMedia();

    // Load the sound played when the pictures collide.
    sound = Content.Load<SoundEffect>("busy");

    // Start the first picture in the top-left corner and the second
    // in the bottom-right corner, each using its own size.
    mediaPosition1.X = 0;
    mediaPosition1.Y = 0;
    mediaPosition2.X = mediaGraph.GraphicsDevice.Viewport.Width - mediabox2.Width;
    mediaPosition2.Y = mediaGraph.GraphicsDevice.Viewport.Height - mediabox2.Height;

    // Record the height and width of each picture.
    media1Height = mediabox1.Bounds.Height;
    media1Width = mediabox1.Bounds.Width;
    media2Height = mediabox2.Bounds.Height;
    media2Width = mediabox2.Bounds.Width;
}
```

Initialize() is the place to query required services and load non-graphic content. Here it simply calls base.Initialize(), which enumerates and initializes any components and then calls LoadContent(). LoadContent() is called once when the game starts and is where graphics-related content, such as the textures and the sound, is loaded.
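The constructor in Listing 9-3 sets TargetElapsedTime to 333,333 ticks. A TimeSpan tick is 100 nanoseconds, so that is about 33.3 ms per frame, or 30 frames per second. Because the game scales each speed by the elapsed seconds when it moves the pictures, the speeds are effectively in pixels per second. The arithmetic can be checked with plain .NET types; FrameTiming is a hypothetical helper, not part of the sample.

```csharp
using System;

public static class FrameTiming
{
    // Frames per second implied by a fixed time step.
    public static double FramesPerSecond(TimeSpan step)
    {
        return 1.0 / step.TotalSeconds;
    }

    // Distance covered in one step at a speed given in pixels per second.
    public static double PixelsPerFrame(double pixelsPerSecond, TimeSpan step)
    {
        return pixelsPerSecond * step.TotalSeconds;
    }
}
```

At 30 fps, mediaSpeed1 (50 pixels per second on each axis) moves the first picture about 1.67 pixels per frame along each axis.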

To prepare the pictures that will be displayed on the screen, the method ShowMedia() is defined as shown in Listing 9-5.

LISTING 9-5: Prepare the pictures to display on the screen, MediaPicker\MediaPicker\Media.cs

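```csharp
void ShowMedia()
{
    // All pictures in the device's Pictures Hub.
    PictureCollection picGroup = mediaLibrary.Pictures;

    // Two different pictures are needed; with fewer, the loop below
    // would never end (or indexing would fail).
    if (picGroup.Count < 2)
        throw new InvalidOperationException("At least two pictures are required.");

    // Choose two different pictures at random.
    int index1 = random.Next(picGroup.Count);
    int index2 = random.Next(picGroup.Count);
    while (index1 == index2)
    {
        index2 = random.Next(picGroup.Count);
    }

    // Release the textures of the previous pictures, if any.
    if (mediabox1 != null) mediabox1.Dispose();
    if (mediabox2 != null) mediabox2.Dispose();

    // Load each thumbnail into a texture and close its stream.
    using (Stream stream1 = picGroup[index1].GetThumbnail())
    {
        mediabox1 = Texture2D.FromStream(GraphicsDevice, stream1);
    }
    using (Stream stream2 = picGroup[index2].GetThumbnail())
    {
        mediabox2 = Texture2D.FromStream(GraphicsDevice, stream2);
    }
}
```

The picGroup variable accesses the device's picture collection through the Pictures property of the MediaLibrary class. The application randomly chooses two different pictures from the collection and loads their thumbnails into the textures that will be drawn on the screen. As mentioned in Chapter 8, the game logic is implemented in the Update() method, as shown in Listing 9-6.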
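The loop in ShowMedia() keeps drawing random indices until they differ, so it depends on the library holding at least two pictures and may take several draws. An alternative that always needs exactly two draws is to pick the second index from the remaining Count − 1 slots and skip past the first. RandomPick.PickTwoDistinct is a hypothetical helper sketching that approach; in the game it would be called with random and picGroup.Count.

```csharp
using System;

public static class RandomPick
{
    // Return two different indices in [0, count) using exactly two draws.
    public static (int, int) PickTwoDistinct(Random random, int count)
    {
        if (count < 2)
            throw new ArgumentException("Need at least two items.", nameof(count));
        int first = random.Next(count);
        // Draw from count - 1 slots, then shift past the first index,
        // so every other index is equally likely.
        int second = random.Next(count - 1);
        if (second >= first)
            second++;
        return (first, second);
    }
}
```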

LISTING 9-6: Update the media movement position in the game, MediaPicker\MediaPicker\Media.cs

```csharp
/// <summary>
/// Allows the MediaPicker game to run logic such as updating the world,
/// checking for collisions, gathering input, and playing audio.
/// </summary>
/// <param name="mediaRun">Provides a snapshot of timing values.</param>
protected override void Update(GameTime mediaRun)
{
    // Allow the game to exit when the Back button is pressed.
    if (GamePad.GetState(PlayerIndex.One).Buttons.Back == ButtonState.Pressed)
        this.Exit();

    // Move the media around; each picture is bounded by its own size.
    Updatemedia(mediaRun, mediabox1, ref mediaPosition1, ref mediaSpeed1);
    Updatemedia(mediaRun, mediabox2, ref mediaPosition2, ref mediaSpeed2);

    IsIntersect();

    base.Update(mediaRun);
}

/// <summary>
/// Update one picture's position and bounce it off the screen edges.
/// </summary>
void Updatemedia(GameTime mediaRun, Texture2D mediabox,
    ref Vector2 mediaPosition, ref Vector2 mediaSpeed)
{
    // Move the media by speed (pixels per second), scaled by elapsed time.
    mediaPosition += mediaSpeed * (float)mediaRun.ElapsedGameTime.TotalSeconds;

    // The position is the picture's top-left corner.
    int MaxX = mediaGraph.GraphicsDevice.Viewport.Width - mediabox.Width;
    int MinX = 0;
    int MaxY = mediaGraph.GraphicsDevice.Viewport.Height - mediabox.Height;
    int MinY = 0;

    // Check for bounce: reverse direction and clamp to the edge.
    if (mediaPosition.X > MaxX)
    {
        mediaSpeed.X *= -1;
        mediaPosition.X = MaxX;
    }
    else if (mediaPosition.X < MinX)
    {
        mediaSpeed.X *= -1;
        mediaPosition.X = MinX;
    }

    if (mediaPosition.Y > MaxY)
    {
        mediaSpeed.Y *= -1;
        mediaPosition.Y = MaxY;
    }
    else if (mediaPosition.Y < MinY)
    {
        mediaSpeed.Y *= -1;
        mediaPosition.Y = MinY;
    }
}

// True if the pictures overlapped during the previous frame.
bool wasIntersecting;

/// <summary>
/// See if the pictures are intersecting; when they first touch,
/// play the sound and swap in new pictures.
/// </summary>
void IsIntersect()
{
    // Positions are top-left corners, as passed to SpriteBatch.Draw(),
    // so each box spans from the position to the position plus the size.
    BoundingBox mb1 = new BoundingBox(
        new Vector3(mediaPosition1.X, mediaPosition1.Y, 0),
        new Vector3(mediaPosition1.X + mediabox1.Width,
                    mediaPosition1.Y + mediabox1.Height, 0));

    BoundingBox mb2 = new BoundingBox(
        new Vector3(mediaPosition2.X, mediaPosition2.Y, 0),
        new Vector3(mediaPosition2.X + mediabox2.Width,
                    mediaPosition2.Y + mediabox2.Height, 0));

    bool intersecting = mb1.Intersects(mb2);

    // React only when a collision begins; otherwise the sound would replay
    // and the pictures would change on every overlapping frame.
    if (intersecting && !wasIntersecting)
    {
        sound.Play();
        ShowMedia();
    }
    wasIntersecting = intersecting;
}
```

The main game loop keeps updating the pictures' position and speed by calling the Updatemedia() method. It also checks whether the pictures overlap by calling the IsIntersect() method. When the two pictures collide, a sound is played and two new pictures are selected at random. After all these updates have been processed, when it is time to redraw, the game calls the Draw() method, shown in Listing 9-7, to draw a new frame.
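Two details matter in a collision check like IsIntersect(). First, SpriteBatch.Draw() treats the position as the sprite's top-left corner, so a picture's box runs from its position to its position plus its size. Second, the check runs on every frame, so it should react only when an overlap begins rather than on every overlapping frame. The sketch below shows both in plain C#; Box and CollisionTrigger are hypothetical types standing in for XNA's own rectangle and bounding-box types.

```csharp
using System;

// Hypothetical axis-aligned box; X and Y are the top-left corner.
public struct Box
{
    public float X, Y, Width, Height;

    public Box(float x, float y, float width, float height)
    {
        X = x; Y = y; Width = width; Height = height;
    }

    // The boxes intersect when they overlap on both axes (touching counts).
    public bool Intersects(Box other)
    {
        return X <= other.X + other.Width && other.X <= X + Width
            && Y <= other.Y + other.Height && other.Y <= Y + Height;
    }
}

public class CollisionTrigger
{
    bool wasIntersecting;

    // Returns true only on the first frame of a new overlap.
    public bool Check(Box a, Box b)
    {
        bool intersecting = a.Intersects(b);
        bool started = intersecting && !wasIntersecting;
        wasIntersecting = intersecting;
        return started;
    }
}
```

Calling Check() once per Update() with the two pictures' boxes yields a single true when they first touch, then false until they separate and collide again.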

LISTING 9-7: Redraw the screen, MediaPicker\MediaPicker\Media.cs

```csharp
/// <summary>
/// This is called when the MediaPicker game should draw itself.
/// </summary>
/// <param name="mediaRun">Provides a snapshot of timing values.</param>
protected override void Draw(GameTime mediaRun)
{
    // Clear the screen to the background color.
    mediaGraph.GraphicsDevice.Clear(Color.Coral);

    // Draw the first picture with alpha blending and no tint.
    mediaCanvas.Begin(SpriteSortMode.BackToFront, BlendState.AlphaBlend);
    mediaCanvas.Draw(mediabox1, mediaPosition1, Color.White);
    mediaCanvas.End();

    // Draw the second picture opaque and tinted gray.
    mediaCanvas.Begin(SpriteSortMode.BackToFront, BlendState.Opaque);
    mediaCanvas.Draw(mediabox2, mediaPosition2, Color.Gray);
    mediaCanvas.End();

    base.Draw(mediaRun);
}
```

This sample game illustrates how to use the SoundEffect, PictureCollection, MediaLibrary, GraphicsDevice, and Texture2D objects together to build a simple game. By running it, you can see audio and picture integration on WP7. The next section discusses video playback on WP7.

There are two ways to play videos programmatically on WP7. The first is to use a launcher called MediaPlayerLauncher and let the system handle and control playback; it is used like the other launchers introduced in Chapter 3. The second is to use the MediaElement class introduced earlier in this chapter.

Playing Video Using MediaPlayerLauncher

The MediaPlayerLauncher class enables an application to launch the system media player to play the video at a given URI. Figure 9-6 shows the system media player launched to handle video playback. In this case, your application should follow the application life cycle principles and manage its own state, as discussed in Chapter 3. In addition, because the system media player handles playback, you cannot change the look-and-feel of its UI. Furthermore, the player's state transitions are driven entirely by user interaction and cannot be controlled programmatically.

FIGURE 9-6: Using the WP7 media player launcher

Listing 9-8 shows the code for how to use the MediaPlayerLauncher class, which is defined in the Microsoft.Phone.Tasks namespace.

LISTING 9-8: Use media player launcher to play video, WP7VideoPlayerDemo\WP7VideoPlayerDemo\MainPage.xaml.cs

```csharp
using System;
using System.Windows;
using Microsoft.Phone.Controls;
using Microsoft.Phone.Tasks;

public partial class MainPage : PhoneApplicationPage
{
    MediaPlayerLauncher Demolauncher;

    // Constructor
    public MainPage()
    {
        InitializeComponent();
        Demolauncher = new MediaPlayerLauncher();
    }

    private void MediaLaunchStart_Click(object sender, RoutedEventArgs e)
    {
        // Show all playback controls in the system media player.
        Demolauncher.Controls = MediaPlaybackControls.All;
        // The video ships in the application's installation directory.
        Demolauncher.Location = MediaLocationType.Install;
        Demolauncher.Media = new Uri("MVI_3086.wmv", UriKind.Relative);
        // Launch the system media player.
        Demolauncher.Show();
    }
}
```

In this example, you declare a MediaPlayerLauncher object, Demolauncher, and initialize it in the constructor. To play a video with the launcher, set the Controls, Location, and Media properties, and then call Show() to launch the system media player. The Controls property specifies which controls the system media player displays, as a bitwise combination of MediaPlaybackControls flags (for example, MediaPlaybackControls.Pause | MediaPlaybackControls.Stop). The Location property specifies where the media file is located and takes a value from the MediaLocationType enumeration: MediaLocationType.Install means the file is in the application's installation directory, and MediaLocationType.Data should be used if the file is in isolated storage or is streamed from the network. The Media property takes the URI of the media to play.
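Because the Controls property is a bitwise combination, selecting and testing controls follows the usual [Flags] enumeration pattern. MediaPlaybackControls lives in the phone SDK, so the sketch below demonstrates the same pattern with a hypothetical DemoControls enumeration; its member values are invented for illustration and are not the SDK's.

```csharp
using System;

// Hypothetical flags enumeration illustrating the MediaPlaybackControls idea;
// the numeric values are illustrative, not the SDK's.
[Flags]
public enum DemoControls
{
    None = 0,
    Pause = 1,
    Stop = 2,
    Seek = 4,
    FastForward = 8,
    Rewind = 16,
    All = Pause | Stop | Seek | FastForward | Rewind
}

public static class ControlFlags
{
    // True if every flag in 'required' is set in 'controls'.
    public static bool Has(DemoControls controls, DemoControls required)
    {
        return (controls & required) == required;
    }
}
```

Combining DemoControls.Pause | DemoControls.Stop yields a value that reports both flags and prints as "Pause, Stop", while a check for Seek returns false.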

Playing Video Using MediaElement

Using the MediaElement class to play video is similar to playing audio. Compared to using the MediaPlayerLauncher, the benefit of using the MediaElement is that you can embed the video player inside your application and have full control of the player state.

Like the sample application used for audio play, you can build a sample application to demonstrate how to use MediaElement to play videos inside your application. Figure 9-7 shows the UI for this sample application, WP7VideoPlayerDemo. You can use the following steps to build this sample application:

FIGURE 9-7: Sample application using MediaElement to play video

1. Create a Windows Phone project and type WP7VideoPlayerDemo as the project name. For details of how to build a WP7 application, refer to Chapter 2.

2. Drag the UI components for the application onto the UI designer canvas. The main UI components in this sample application are Button controls and a MediaElement control. As shown in Figure 9-7, each button's label describes its function.

3. Create event handlers for action commands or events associated with each button. Listing 9-9 shows the event handler for each button.

From Listing 9-9 you can see that the basic operations, such as starting, stopping, pausing, and resuming video playback and increasing and decreasing the volume, are the same as those used in the audio sample application.

LISTING 9-9: Using MediaElement to play video, WP7VideoPlayerDemo\WP7VideoPlayerDemo\MainPage.xaml.cs

```csharp
// Fields used below, declared in the MainPage class as in Listing 9-2.
double volumeChange = 0.1;
double volumeMax = 1.0;
double volumeMin = 0;
TimeSpan timeSpan;

private void VideoStart_Click(object sender, RoutedEventArgs e)
{
    // Video1 is the MediaElement declared in MainPage.xaml.
    Video1.Source = new Uri("MVI_3086.wmv", UriKind.Relative);
    Video1.Position = TimeSpan.Zero;
    Video1.Play();
}

private void VideoStop_Click(object sender, RoutedEventArgs e)
{
    Video1.Stop();
}

private void VideoPause_Click(object sender, RoutedEventArgs e)
{
    // Remember where playback was paused.
    Video1.Pause();
    timeSpan = Video1.Position;
}

private void VideoResume_Click(object sender, RoutedEventArgs e)
{
    // Restore the saved position before resuming.
    Video1.Position = timeSpan;
    Video1.Play();
}

private void VolumeUp_Click(object sender, RoutedEventArgs e)
{
    // Clamp so the volume never goes above the maximum.
    Video1.Volume = Math.Min(volumeMax, Video1.Volume + volumeChange);
}

private void VolumeDown_Click(object sender, RoutedEventArgs e)
{
    // Clamp so the volume never goes below the minimum.
    Video1.Volume = Math.Max(volumeMin, Video1.Volume - volumeChange);
}
```

Unlike audio playback, video needs a display surface, whose size you can specify with the Height and Width properties. If you don't set them, once you specify a video source the media displays at its natural size and the layout recalculates the size.
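When the MediaElement does have a fixed Height and Width, its Stretch property (Uniform by default) scales the video to fit while preserving its aspect ratio. The scale factor is the smaller of the two ratios between the available size and the natural size. This plain C# sketch computes the resulting display size; VideoLayout.FitUniform is a hypothetical helper, not part of the framework.

```csharp
using System;

public static class VideoLayout
{
    // Largest size with the video's aspect ratio that fits inside the box.
    public static (double Width, double Height) FitUniform(
        double naturalWidth, double naturalHeight, double boxWidth, double boxHeight)
    {
        double scale = Math.Min(boxWidth / naturalWidth, boxHeight / naturalHeight);
        return (naturalWidth * scale, naturalHeight * scale);
    }
}
```

For example, a 640 x 480 video in a 480 x 800 portrait area is limited by width: the scale is 0.75, giving a 480 x 360 picture with empty space above and below.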

Reusable Media Player Controls

From the sample application using the MediaElement class to play audio and video, you can see that the basic media handling operations are similar. This suggests that it may be a good idea to build a customized user control like that introduced in Chapter 4. Doing so will enable you to reuse your code for media handling and increase your productivity. In addition, you can always match your own media player control’s look-and-feel with different applications by making style changes — adjusting the height, width, font, or other customizable features.

Figure 9-8 shows an example of a reusable media player user control. This control includes start, stop, and pause/resume functions; fast forward and rewind (using a single position slider); a volume slider; and a display of the total play length. Because Chapter 3 has already shown how to create a user control, and this chapter has already shown how to implement the media handling functions, the control itself is not discussed in detail here. The complete source code of this sample media player can be found in the book's source code package, in WP7EnrichedMoviePlayerDemo.

FIGURE 9-8: A reusable media player user control
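Seeking with a slider in a control like this reduces to converting between a slider value and a playback position based on NaturalDuration, plus formatting the total length for display. A minimal sketch, assuming a slider that runs from 0 to a hypothetical sliderMax; SeekMath and its methods are illustrative names, not taken from the sample's source.

```csharp
using System;

public static class SeekMath
{
    // Slider value in [0, sliderMax] -> playback position.
    public static TimeSpan SliderToPosition(double value, double sliderMax, TimeSpan duration)
    {
        if (sliderMax <= 0) return TimeSpan.Zero;
        double fraction = Math.Max(0, Math.Min(1, value / sliderMax));
        return TimeSpan.FromTicks((long)(duration.Ticks * fraction));
    }

    // Playback position -> slider value in [0, sliderMax].
    public static double PositionToSlider(TimeSpan position, double sliderMax, TimeSpan duration)
    {
        if (duration <= TimeSpan.Zero) return 0;
        return sliderMax * position.Ticks / duration.Ticks;
    }

    // Format a length as m:ss for the total-length label.
    public static string FormatLength(TimeSpan length)
    {
        return string.Format("{0}:{1:00}", (int)length.TotalMinutes, length.Seconds);
    }
}
```

In the control, SliderToPosition() would feed MediaElement.Position when the user drags the slider, and PositionToSlider() would move the slider as playback advances.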

This chapter began with a discussion of the common characteristics of the multimedia architecture of WP7, iOS, and Android. All three platforms are built on a solid object-oriented design model, multimedia data flow, and the concepts of preserving state information and managing state transitions. The models and examples presented in this chapter demonstrate that WP7 is easier to use, has richer APIs, and provides better management of audio, pictures, and video. WP7 also leverages its Silverlight and XNA frameworks to provide diverse programming models.

This chapter has also explained how to use the System.Windows.Controls.MediaElement class and the XNA multimedia object model in the Microsoft.Xna.Framework.Media namespace to develop sound playback, picture display, and video playback code. The WP7 APIs are as easy to use as those provided by the iOS and Android development environments, if not easier.

The next chapter discusses the peripherals such as a microphone to record sound, a camera to take pictures, an accelerometer to play media files, and an FM radio sensor to listen to broadcasts.