XML Frameworks

Introduction

XML by itself is great, but better still is the assembly of XML tags into larger data structures, paired with a delivery mechanism to create larger solutions. The concept of an XML framework is an application of XML technology to solve a particular problem or set of problems. Generally these problems are more complex and larger than those addressed by some of the technologies described in the XML Component Architecture chapter of this book. Although defining one’s own language is something that is well within the reach of XML’s users, frameworks do not stop there, but go on to build a higher-level system that incorporates XML as a data interchange and specification language. The frameworks that are discussed in this chapter build on the main capabilities of XML to produce extremely beneficial systems. We will discuss the types of benefits that organizations see from the use of XML frameworks, along with the specifics of several example frameworks, including RosettaNET, ebXML, BizTalk/.NET, and Acord. At the end of the chapter, we will present an example of an XML framework in action as developed for the chemical industry, and close with a discussion of the common themes, services, and advantages that are found in XML frameworks’ design.

Framework Advantages

The types of systems that operate using XML frameworks existed before the use of XML was widespread, but they tended to be very brittle and industry specific. As XML has matured, it has encouraged broader thinking in terms of how metadata can be used in systems. The fact that a number of XML-based frameworks have popped up over the past few years while the non-XML systems are languishing says something about the ease of adoption and benefits associated with using XML.

How did these frameworks come about in the first place? As more organizations began to use XML and its utility grew, efforts were mounted to create a set of components that would allow business information to be sent over the Internet. By using networking and XML, organizations could make sure that everyone was able to participate in the growing electronic marketplace through a low-cost, reliable, and highly available technology that also offered continuing interoperability. The issue of ongoing interoperability is quite important; with many thousands of businesses and almost as many unique systems for storing and manipulating business information, it is important to know that any technology selected would not quickly morph and change into something that would require complicated reconciliation.

This type of outlook requires a certain level of forward thinking. As data-management sophistication has increased, so too has the interest in these frameworks. Adoption started with the largest organizations that could achieve the economies of scale necessary to make the systems worthwhile in their infancy. Initially, the development of some of these frameworks was not cheap or fast. The frameworks and the software that uses them are evolving, though, and the use of them is becoming more widespread since organizations no longer have to be huge to see the benefit. In some cases, organizations have adopted the frameworks not as a choice but out of absolute necessity; their competitors possessed such a strategic advantage by using the framework that they have had to adopt it in order to level the competitive playing field.

Since many of these frameworks attempt to accomplish many of the same goals, it is useful to first discuss the types of things these frameworks are trying to accomplish, and then follow that with a description of each of the frameworks in context. These frameworks have only come about as a result of organizations realizing the value of automated communication within and between industries. This section deals with characteristics of the frameworks, including standardized data formats, standardized process models, the connection of as many organizations as possible, the logical spoke-and-hub model used by the frameworks, security provisions, lowering the barrier to entry, and homogenized transactions.

Shared Vocabulary–A Standardized Data Format

The first quality that all of the frameworks have in common is a common or shared vocabulary (in data-management terms, this is a standardized data format). There are two ways of looking at the standard data formats. The first is by syntax, and the second is by semantics. For syntax, these frameworks use plain XML documents or snippets of documents that are exchanged back and forth between systems. Since XML is already widely implemented and software can easily be had that supports XML, no organization is shut out when XML is used. As an interchange language, it has all of the advantages discussed in earlier chapters.

The semantics aspect is also important and has long been a stumbling block for data managers—the XML documents that are being transferred back and forth are all compliant with a particular XML DTD or Schema. They all have the same set of valid elements, and meanings associated with those elements. This semantic similarity is absolutely crucial to the success of these frameworks. If the organization is able to figure out how the common semantic model maps to their interpretation of various data items, then there is no need to worry about additional data formats—systems only need know how to process the common XML format.

As an example, take the case of a major publishing house with many different partners that supply them with lists for customer solicitations. Every month, each vendor sends the publishing house a long list of customer names obtained through various means. Every month, the publishing house must undertake a substantial effort to manipulate the data from the form it was sent in by the vendor, to the form used in the publishing house’s internal systems. In effect, this means that the vendors never really change the data formats that they use, and the publishing house must have the ability to translate data formats from potentially many different vendors, every single month. On the other hand, if an XML framework had been used, the publishing house would be able to have one piece of software that knows how to do one thing only—to take a standard XML document in a predetermined form, and translate it into the language of the publishing house’s systems. Rather than translate multiple different data formats every month, the potential is to only have to worry about one data format, and automate that process as well.
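The "one piece of software that knows how to do one thing only" can be sketched in a few lines. The following is a minimal illustration, not part of any real framework: the element names (`customerList`, `customer`, `name`, `postalCode`), the `vendor` attribute, and the internal field names are all hypothetical stand-ins for whatever vocabulary the trading partners actually agree on.

```python
import xml.etree.ElementTree as ET

# Hypothetical shared vocabulary: these element and attribute names are
# illustrative assumptions, not taken from any real framework.
STANDARD_DOC = """\
<customerList vendor="ExampleVendor">
  <customer>
    <name>Ada Lovelace</name>
    <postalCode>20001</postalCode>
  </customer>
  <customer>
    <name>Alan Turing</name>
    <postalCode>94105</postalCode>
  </customer>
</customerList>
"""


def to_internal_records(xml_text):
    """Translate the agreed XML form into a (hypothetical) in-house layout."""
    root = ET.fromstring(xml_text)
    records = []
    for customer in root.findall("customer"):
        records.append({
            "source": root.get("vendor"),
            "full_name": customer.findtext("name"),
            "zip": customer.findtext("postalCode"),
        })
    return records


records = to_internal_records(STANDARD_DOC)
for record in records:
    print(record)
```

Because every vendor sends the same document form, this single translator replaces the per-vendor conversions; adding a new vendor requires no new code on the publishing house's side.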

Part of the standardization of data formats is putting together an actual specification of how everything will be done. These documents are sometimes referred to by name, such as formal Collaboration Protocol Agreements, or CPAs. Along with the specification of a CPA, many frameworks provide a shared repository for a number of data items that do not relate to any specific transaction, but rather relate to the structure of the overall network of trading partners. These shared repositories often include:

Information models and related data structures associated with message interchange

Making sure that the data is understandable by all of the involved parties is only part of the challenge, however. The next part is to make sure that the business processes the data applies to also make sense between partners.

Standardize Processes

The second concept that the frameworks employ is the standardization of processes. Each organization tends to have its own way of doing things. For example, the purchasing process is different in every organization with respect to who has to inspect which documents, what information those documents must contain, and how the process flows from point to point. The differences in processes make it almost impossible to automate aspects of business effectively, since the specifics of dealing with each organization differ so greatly.

Standardized processes do not necessarily require an organization to change the way it does things internally, but they do force the organization to change the way information is put into the process, and taken out of the back of the process. The actual process internally may remain a “black box” that the users do not have to modify, but the standardized portion has to do with how external entities feed things into the process and receive output from the process. The “black box” of the internal process is taken care of for the user. The standardized input and output must be understood by the user in order to make use of the processes. The benefits from standardized processes are profound; all trading partners can be treated alike, which reduces the amount of complexity involved with trading. One theme that is encountered over and over in the use of frameworks is the attempt at minimizing the complexity needed to communicate with everyone else that uses the framework. If a group of trading partners could be considered a network, the idea is to establish a common communication ground that everyone can use, rather than setting up a mess of individual point-to-point systems.

To illustrate this point, take an example from the chemical industry. Every company in the industry may have a slightly different process for selling a chemical, from the exchange of funds, to the shipping date and method, to the way that the warehouse where things are stored finds out about the actual sale. Still, there are commonalities between all of these processes, namely that money and goods are changing hands, and that there needs to be some way of coordinating delivery. Rather than using point-to-point systems that in some cases require traders to take into account the idiosyncrasies of their trading partner’s processes, the process is abstracted to what is necessary for everyone. The entire network of traders then uses that core process.

This example can be generalized a bit to give a basic definition of the functionality of frameworks—the way that trading is accomplished between partners is by the exercise of various processes. The purchase process has to be the same so that from the perspective of the buyer, it does not matter who the seller is—the process remains the same. Processes for querying inventory, returning goods, and requesting information must always be the same so that participants in the network can feel free to utilize the best trading partner without incurring additional overhead related to reconciling those processes.

Given standardization of data and processes, the framework starts to have some real value. Once the framework has been designed, the next step is to start to share it with other organizations to get the word out, and maximize the overall benefit of the design work that was done for everyone.

Connect as Many Organizations as Possible to Increase Value

One of the most interesting aspects of networks that form around the use of these frameworks is that the more organizations that are members in the network, the more value the overall network has. This is known as “the network effect.” As the network grows larger, it becomes more and more representative of the industry as a whole, and begins to comprise the electronic marketplace for goods and services in that industry. XML frameworks are akin to the early telephone system. The benefit of the telephone grew rapidly with the number of people who had one. If only 10 people had telephones, their utility was limited by whom the telephone could be used to contact, while widespread telephone use greatly increased its value as a common mode of communication.

To take advantage of the network effect, these frameworks were built to enable as many organizations as possible to use them. Again, if we look at a group of trading partners as a network, the benefits of 5 trading partners participating in the use and development of a framework might be worthwhile, but the participation of 10 makes the benefit substantial, due to the increasing number of data formats and processes that each individual member no longer needs to be able to reconcile.

Logical Hub-and-Spoke Model: Standards and Processes

The use of standardized data and processes forms a logical hub-and-spoke model for communication using a particular framework. Earlier, we discussed how the use of these standards reduces the number of connections that are necessary between organizations in order to get business done. Figure 6.1 shows the difference between these two communication models. When looking at the peer-to-peer model, we see that the number of connections is larger, and that the organizations must communicate strictly between one another. Communications that A sends to B could not be sent to C; this is because the process and data format differences between B and C would make messages bound for B unintelligible to C.

In the hub-and-spoke model, we see that all of the organizations can communicate through a central hub. In this case, this is not a physical device—it is not meant to stand for an actual piece of software or a server—it is representative of the common data and process format through which the communication must flow in order to reach the other end. In a previous chapter, we discussed that the peer-to-peer or point-to-point model requires the creation of (N * (N – 1)) / 2 agreements between trading partners, while the hub-and-spoke model requires only N agreements. Moreover, any agreement between three business partners can be adopted by more and more organizations as time goes on. In some cases, frameworks for industry trading may develop as an expansion of an agreement between a relatively small number of “seed” partners.

Looking at the hub-and-spoke model, logically it means that every single organization must create only one data link—from itself to the hub. If another 20 organizations later join the model, each of the original organizations does not have to do anything in order to be able to communicate with the newcomers, since all communications go through the logical hub of data and process standards. In the peer-to-peer model, if another organization “X” were to join the group, the number of new links that would potentially have to be built would be equal to the total number of trading partners in the arrangement. This type of architecture is doable for small numbers of partners, but quickly becomes impossible when any reasonable number of organizations is involved, just as it would be impossible to effectively use a telephone system if connection to the network required creating an individual connection to everyone else using the network.
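The difference between the two models is easy to see with a little arithmetic. The short sketch below simply evaluates the two formulas given above, (N * (N – 1)) / 2 for point-to-point and N for hub-and-spoke; the function names are our own and are used only for illustration.

```python
def point_to_point_agreements(n):
    """Agreements needed when every pair of partners connects directly."""
    return n * (n - 1) // 2


def hub_and_spoke_agreements(n):
    """Agreements needed when each partner connects only to the shared standard."""
    return n


def new_links_for_newcomer(n_existing, model):
    """Links that must be built when one more organization joins."""
    if model == "point-to-point":
        return n_existing  # one link to every existing partner
    if model == "hub-and-spoke":
        return 1           # one link to the logical hub
    raise ValueError(f"unknown model: {model}")


for n in (3, 10, 20, 100):
    print(n, point_to_point_agreements(n), hub_and_spoke_agreements(n))
```

For 20 partners the point-to-point model needs 190 agreements against 20 for the hub-and-spoke model, and the gap widens as the network grows.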

If an XML framework is going to be a preferred place to do business, it has to be reliable and trustworthy. Individual bank branches put a lot of work into making sure their customers feel safe putting their money in that location, and XML frameworks are no different, particularly when millions or billions of dollars in transactions have to flow through them.

Standardized Methods for Security and Scalability

Each of the frameworks in this chapter addresses standard ways of ensuring security and scalability of the system. These frameworks are built from the ground up to be scalable, owing to the previous point that the value of the total increases with the number of organizations that take part. Security is clearly also a very important consideration—provisions must be put in place that allay the concerns of trading partners, including threats such as data snooping, industrial espionage, corruption, misrepresentation, and so on. When it comes to security and scalability, the same approach is taken as with data formats and processes—standardization means that the designers of the framework only have to solve that problem once. All framework users then benefit from the solution, rather than each user having to come up with its own individual way of coping with these issues. Furthermore, the security model can be validated a single time, and strong evidence can be presented to the participating organizations that they are not exposing themselves to large amounts of risk by taking part in the group.

Frameworks Lower Barrier to Entry

One of the unexpected benefits of frameworks is that they tend to lower the barrier to entry for other organizations into particular networks. After all, anyone who can set up a system that can issue the appropriate standards-compliant documents and understand the result that comes back can take part in the trading community. When the frameworks were first being developed, they generally required large technology investments, but as they have grown, a number of other options have become available. Web-based systems exist that can automatically create and dispatch the XML documents sent using the framework, which allows smaller “Mom and Pop” operations to take part in the marketplace.

In other cases, organizations that purchase large ERP systems may require that their vendors and even customers use similar ERP systems in order to smooth the process of doing business. ERP requirements tend to greatly increase the barrier to entry since the investment needed to implement an ERP is substantial. By using XML, users of these frameworks have effectively minimized the technology investment required to use the framework, which will hopefully maximize the users of the framework and the value of it to all of the trading partners.

Commonly Available Transactions

One of the patterns commonly seen when working with these frameworks is the division of data exchange into various transactions between partners. A transaction might be a request for information about a particular product that is offered, an actual order for that product, or the exchange of administrative information. In most cases, though, the transaction is carried out with a sequence of events similar to those seen in Figure 6.2.

In Figure 6.2, we see that transactions can be broken down into a number of communications between the original requester of the information and the provider of the information.

1. First, the requester issues its initial request. This is a document whose format is dictated by the technical specification of whichever framework is in use. By virtue of the fact that both parties take part in the framework, the requester can be confident that the provider’s systems will understand this request.

2. The provider then sends back an acknowledgement and/or a signed receipt. This lets the requester know that the original request was received, and that it was received properly. In some cases for security, the acknowledgement may be signed or otherwise contain some information that allows the requester to be sure that the provider is who it claims to be. Each framework has different provisions for security, but this is one of the ways that those provisions frequently show up in the actual message exchange.

3. Next, the provider issues the response data to the requester. Some frameworks might roll this data up into the previous step and accomplish everything at one time, while others separate them into two distinct steps. In either case, the provider must respond to the request and provide an answer, whether that answer is, “Yes, you have now purchased 100 widgets at a cost of $0.99 per widget,” simple administrative information, or even an error.

4. Finally, the original requester sends an acknowledgement back to the provider. After this is accomplished, both parties can be confident that their messages were correctly received, and that the transaction has ended.

There are a few things to point out about this model. First, models of this type typically provide a reasonable amount of reliability. When each party must respond to the requests or information of the other, it becomes easier to guarantee that messages are passed properly. If a message were passed improperly, the acknowledgement would give the error away to the original sender of the document and allow for correction of errors. Second, sending acknowledgement messages allows for piggybacking of extra data that aids with issues such as security. Finally, communications models of this type can be easily formalized into a set of instructions that neither party should deviate from. Coming up with a solid set of rules for how communications should flow back and forth allows more robust implementations of actual software—there is never any question about what the next correct step would be in the process.
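To show how such a model can be formalized into a set of instructions that neither party deviates from, the four steps above can be written as a small state machine. This is only a sketch under our own assumptions: the step names and the `Transaction` class are hypothetical, and a real framework would also define message formats, timeouts, and error handling.

```python
from enum import Enum, auto


class Step(Enum):
    REQUEST = auto()      # step 1: requester issues the initial request
    RECEIPT_ACK = auto()  # step 2: provider acknowledges receipt
    RESPONSE = auto()     # step 3: provider issues the response data
    FINAL_ACK = auto()    # step 4: requester acknowledges the response


# The only legal sequence of (sender, step) pairs, in order.
EXPECTED = [
    ("requester", Step.REQUEST),
    ("provider", Step.RECEIPT_ACK),
    ("provider", Step.RESPONSE),
    ("requester", Step.FINAL_ACK),
]


class Transaction:
    """Tracks one exchange and rejects any message sent out of turn."""

    def __init__(self):
        self._position = 0

    @property
    def complete(self):
        return self._position == len(EXPECTED)

    def record(self, sender, step):
        if self.complete:
            raise ValueError("transaction already complete")
        expected = EXPECTED[self._position]
        if (sender, step) != expected:
            raise ValueError(f"expected {expected}, got {(sender, step)}")
        self._position += 1


txn = Transaction()
for sender, step in EXPECTED:
    txn.record(sender, step)
print("complete:", txn.complete)
```

Because the next legal message is always known, either party can detect a missing or out-of-order message immediately, which is the reliability property described above.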

Now that we have covered some of the ideas that are common between frameworks, it is time to take a look at specific frameworks, where they came from, and what they have to offer, starting with one of the first to come on the scene—RosettaNET.

RosettaNET

One of the oldest XML-based frameworks is RosettaNET. The framework takes its name from the Rosetta stone, used to decipher hieroglyphics for the first time in history. Given the meaning and importance of the original Rosetta stone, the name indicates from the start that the designers realized the complexities involved with their task, and the importance of providing a translation between organizations, just as the original Rosetta stone made it possible to translate hieroglyphics. The target of RosettaNET is to automate supply-chain transactions over the Internet for the members that take part in it. RosettaNET was created in 1998 by a variety of companies in the electronic component industry including Intel, Marshall Industries/AVNET, Hewlett-Packard, Ingram Micro, Inacom, Solectron, Netscape, Microsoft, IBM, and American Express. The reason for its creation was to standardize a number of common information exchanges such as pricing information, product descriptions, product identifiers, and so on, throughout the electronic component industry. Today, RosettaNET is a nonprofit consortium of companies, much like the W3C or OASIS, whose goal is to streamline the high-tech supply chain by eliminating redundancies and improving flow of critical information.

The core of RosettaNET is defined by the use of PIPs, or Partner Interface Processes. These act as standard XML-based dialogs that define processes and vocabulary interaction between various trading partners. Each PIP may consist of multiple transactions that specify activities, decisions, and roles for each trading partner. For example, PIP3A4 Manage Purchase Order consists of three possible transactions: send purchase order, update purchase order, and cancel purchase order. PIP3A4 specifies two entities: buyer and seller. The “buyer” performs the “create purchase order” activity that is understood by all parties as “creating purchase order once acknowledgement of acceptance received from seller.”

Each PIP contains a specification of a particular XML vocabulary, a list of which elements are valid in transmitted documents, and in what order they must be used. Also included with each PIP is a process choreography model, which covers how messages flow and in which order they should be sent and received. A simple choreography model for a purchasing transaction might be that the buyer initiates the transaction by sending a document requesting purchase; the seller sends a document back intended to confirm and verify the order. The buyer then responds again with payment information, and so on.
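One way to picture what a PIP bundles together is as a small data structure. The sketch below is our own illustration and does not reflect the actual RosettaNET specification format: the `Pip` class and its field names are hypothetical, the roles and transactions are those named above for PIP3A4, and the choreography is the simple purchasing example from the previous paragraph, attached to PIP3A4 here purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pip:
    """Hypothetical in-memory summary of a Partner Interface Process."""
    code: str
    name: str
    roles: tuple
    transactions: tuple
    choreography: tuple  # ordered (sender_role, document) pairs


PIP3A4 = Pip(
    code="3A4",
    name="Manage Purchase Order",
    roles=("buyer", "seller"),
    transactions=(
        "send purchase order",
        "update purchase order",
        "cancel purchase order",
    ),
    # Illustrative choreography only; document names are assumptions.
    choreography=(
        ("buyer", "purchase request"),
        ("seller", "order confirmation"),
        ("buyer", "payment information"),
    ),
)


def undeclared_senders(pip):
    """Return choreography senders that are not among the declared roles."""
    return [sender for sender, _ in pip.choreography if sender not in pip.roles]


print(PIP3A4.name, "->", undeclared_senders(PIP3A4))
```

A check like `undeclared_senders` hints at why the choreography is specified alongside the vocabulary: software can verify mechanically that every message in the flow is sent by a party the PIP actually defines.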

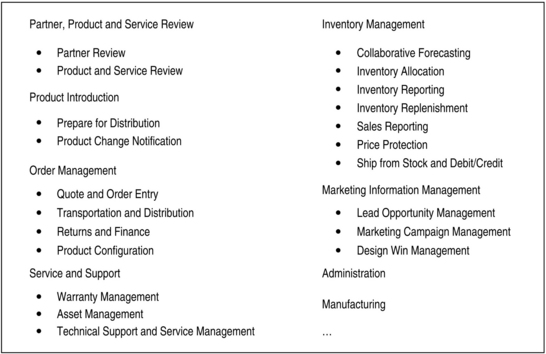

Figure 6.3 shows some example PIP clusters with sub-functions (segments) shown as bullet items. There are many processes that are covered by various PIPs in RosettaNET, and PIPs themselves are categorized into “clusters” that accomplish various tasks in specific areas. The clusters are supposed to represent various core business processes, and each cluster may contain many different PIPs.

RosettaNET also contains something called the RNIF, which stands for the RosettaNET Implementation Framework. The RNIF covers the way in which messages are actually sent back and forth. Information related to the specific transport protocols, security and scalability techniques, routing, and the way documents are actually aggregated and sent are all specified by the RNIF. The RNIF specification strives to be complete in its description of various behaviors, to leave as little as possible to chance and guessing on the part of the software creator who implements RosettaNET software.

The framework also provides two dictionaries: the RosettaNET technical dictionary, and the RosettaNET business dictionary. The business dictionary covers the various high-level business concepts that are used in RosettaNET, such as which entities are exchanged, and which business transactions members in the network may undertake. The technical dictionary, on the other hand, provides a lower-level description of the technical aspects of how some transactions are accomplished. With any good data structure, one usually finds an accompanying data dictionary that lays out exactly how things are put together. This documentation can aid individual organizations in deciphering the mapping between RosettaNET data items and their own systems, as well as provide them with documentation to view all of the different possibilities and capabilities of the framework as a whole.

Up until now, the partners involved in RosettaNET have been businesses in the information technology, electronics components, telecommunications, and semiconductor manufacturing industries. In many ways, RosettaNET has pulled these industries together, and created a number of interesting opportunities for all of the members. Participants in the network have found that RosettaNET allows them to inspect purchase orders from across their industry, and to perform data analysis that was not even possible before, since no common marketplace existed through which the data might flow. The existence of the network has also provided opportunities for new revenue streams—industry data can be aggregated and resold to third parties that might be interested in future interaction with the industry. Bulk discounting and larger sales are possible with automation, and partners have generally found that productivity has increased while the cost of business has decreased as a result of their use of the RosettaNET framework.

XML can be thought of as the blood and bones of the RosettaNET framework. The concepts that went into the architecture of the XML standards from the start allowed the RosettaNET architects to design a system for flexibility and interoperability. RosettaNET can be thought of as a success story of XML frameworks, and it actually represents one of the earlier efforts in this area. As new frameworks are developed in the future that have the benefit of past experience in terms of what has worked and what has not worked in RosettaNET, the advantages of these frameworks will only grow.

The next framework has a lot in common with RosettaNET, while providing more generic facilities for accomplishing trading relationships. While the desired outcome of RosettaNET might be similar to that of the next framework (ebXML), the way that it was accomplished is quite different.

ebXML

The stated goal of the ebXML project was “to provide an open XML-based infrastructure enabling the global use of electronic business information in an interoperable, secure and consistent manner by all parties.” Other descriptions include the term “single global marketplace,” “facilitating the ability of enterprise of any size in any geographical location to conduct business,” and “enabling modular, yet complete, electronic business framework that comprise the ‘rules of the road’ for conducting electronic business.”*

ebXML standards were created and supported in conjunction with OASIS and UN/CEFACT, and are credited as having created a single global electronic marketplace. Two of the architectural goals from the start were to leverage existing XML components wherever possible, and to provide a ready-made library of commonly used business objects. These architectural approaches are reminiscent of object-oriented software development. Using existing XML components wherever possible essentially amounts to using existing software rather than recreating it from scratch, and providing a library of commonly used business objects is another way of making sure that the output of the effort itself is as reusable as possible in the future. Reuse is also encouraged. The design of the framework ensures data communication interoperability, while the common semantic framework ensures commercial interoperability. Furthermore, publishing mechanisms allow enterprises to find each other, agree to establish business relationships, and conduct business. These are maintained via a formal Collaboration Protocol Agreement (CPA) and shared repositories for company profiles, business process models, and related message structures.

Like other frameworks, ebXML does specify XML documents that are sent back and forth between organizations to accomplish particular tasks. The actual full ebXML specification goes further than just defining a type of document that organizations can exchange. It is intended as an actual suite of different technologies that allow many types of functionality, including exchanging business information, conducting business relationships, and registering business processes. All of this is done in a common format that all organizations can easily understand. In addition, a large amount of ready-made software is already available for exchanging ebXML messages with other organizations. In some ways, this standard provides out-of-the-box solutions for some problems rather than just a data specification. The set of standards that make up ebXML is fairly far-reaching; while there are a number of other XML components that solve similar problems for specific industries, ebXML is one of the few technologies that provides the representational flexibility to deal with many different industries, despite their different terms and procedures. RosettaNET and other frameworks have grown quite a bit over time, but there may still be some artifacts left in these frameworks that identify them as having come from a particular industry or sector. ebXML, by contrast, was engineered from the start to be more general in its applicability.

The following are some of the metamodels included in ebXML:

Represent the “nouns and adjectives” of electronic business

Define “what” reusable components can be applied

Enable users to define data that is meaningful while also maintaining interoperability

Messaging services define services and protocols such as the following that enable data exchange:

Common protocols such as SMTP, HTTP, and FTP

Cryptographic protocols such as HTTPS/SSL

Digital signatures that can be applied to individual messages

Among its many components, ebXML specifies a business process representation schema, a message service specification detailing how ebXML messages move between organizations, and a registry services specification. In keeping with the requirements of an open platform, all relevant technical and procedural documents are available through the ebXML project web site. If ebXML has any downside, it might be the complexity of the interrelated standards. Unlike some of the other XML technologies, the entirety of ebXML is likely not something that could easily be understood in an afternoon, but then again, neither is the complex set of processes that it is trying to represent.

While the steps to implement ebXML are not quite as simple as those described by the ebXML literature, the general outline is useful to understand.

Implementer buys, builds, or configures application(s) capable of participating in selected business process

Several companies are currently pushing adoption of ebXML as a “lingua franca” for electronic business on the Internet, and its popularity has been growing steadily, enticing many organizations with the prospect of replacing several incompatible and brittle communications interfaces with one system that can handle interbusiness communication. It seems also that some convergence of other frameworks with ebXML might be on the horizon. RosettaNET has plans to include ebXML’s messaging services specification in the RosettaNET Implementation Framework (RNIF). ebXML represents a strong standard that many are interested in to promote communication between industries rather than just within industries. Later in the book, we will talk more about communication within and between industries, and how frameworks like ebXML, in conjunction with other standards, might make wide interindustry communication possible.

The next framework that we will discuss is quite a bit different from the first two, and represents one of the most recent major approaches to the topic.

Microsoft Offerings: BizTalk and .NET

BizTalk was Microsoft’s first XML framework offering. While attention is now focused on Microsoft’s .NET product suite, it is worth spending a few minutes on BizTalk to see how its architecture was organized to provide common business services.

BizTalk

BizTalk is intended to be a platform-independent solution that handles document transfer within and between organizations. Developed by Microsoft and transferred to BizTalk.org, the framework has five areas that are intended to aid in application integration:

Almost all frameworks handle some aspect of the document routing and transport function. Process automation is one of the core missions of an XML framework, and scalability and manageability services are also characteristics that most frameworks share in common. The data transformation and application integration pieces of the BizTalk functionality are what set this framework apart from the others. Many different plug-ins or adaptors are available for BizTalk that take the core framework and extend it by making data interchange with various ERPs, databases, and web services possible.

BizTalk was originally developed by Microsoft, and has since developed into two separate entities, depending on what is being discussed. Microsoft sells the BizTalk server, which is an actual software implementation of the ideas behind the framework, but there is also a BizTalk specification, similar to the RosettaNET RNIF, that lays out exactly how the heavily XML-dependent technology functions and accomplishes the tasks that it does.

The technology of BizTalk is structured around a server model. Some frameworks tend to be spoken of as a network that messages flow through, but BizTalk is usually referred to by its various BizTalk servers, through which messages flow—a slightly different focus. The server focuses on a number of areas, including:

Business-to-Business and E-Commerce. This includes trading-partner integration, supply-chain integration, order management, invoicing, shipping management, etc.

Automated Procurement. Some of the areas involved here are Maintenance Repair and Operations (MRO), pricing and purchasing, order tracking, and government procurement.

Business-to-Business Portals. This large and growing topic encompasses catalog management, content syndication, and post-sale customer management.

Business Process Integration and Exchange. This area usually deals with integration of E-Commerce, ERP, and legacy systems.

In Figure 6.7, an example is provided of the layered protocol model of BizTalk. This layering of protocols is based on the OSI model for networking. The process of layering protocols involves creating a particular protocol to do something very specific and small (for example, ensuring that data is correctly moved through a physical wire or existing logical network), and then creating new protocol layers above it with higher-level functionality, each building on the services provided by protocols at lower layers. Figure 6.7 shows a simplified version of this protocol layering at several levels: First, the application talks to the BizTalk server. Then, the server uses a data-communication layer to transfer data back and forth. The data-communication layer handles technical details such as whether SOAP via HTTP or MSMQ is used to transfer the messages. On the other end of the transaction, another data-communication layer receives and interprets the messages coming in over the network, and passes them up to the BizTalk server. Finally, the server makes the information sent and received accessible to the application at the highest level. In this way, the application does not need to bother with the details of how all of this works—from its perspective, it can speak to the BizTalk server and accomplish many things simply.

But how is the sending and receiving of that data accomplished? In Figure 6.8, we see the structure of a BizTalk message. These messages are exchanged between BizTalk servers to accomplish the tasks described earlier as capabilities of the server. Note that while this information could certainly be represented in a non-XML format, the way the message structure is laid out here even looks like a rudimentary XML document. The overall BizTalk message acts as the parent wrapper to two items—the transport envelope and the BizTalk document. The document in turn contains its own children—the BizTalk header, and the document body. This message structure is typical of many XML frameworks—the “Transport Envelope” and “BizTalk Header” portions of the document usually contain various types of metadata about the document and the process that it represents. This information might contain source and destination addresses, information about the specifics of the protocol used to send it, digital signatures or security information, and information about the types of additional data the body of the message contains.
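The nesting described above can be sketched in a few lines of Python. This is only an illustration of the wrapper/envelope/document/header/body shape; the element names (`Message`, `TransportEnvelope`, `Document`, `Header`, `Body`), the addresses, and the body fields are hypothetical and are not taken from the BizTalk specification.

```python
import xml.etree.ElementTree as ET


def build_message(source, destination, doc_type, body_fields):
    """Build a nested message: a transport envelope plus a document
    that contains its own header and body (all names are illustrative)."""
    message = ET.Element("Message")

    # Envelope: routing metadata about where the message is going.
    envelope = ET.SubElement(message, "TransportEnvelope")
    ET.SubElement(envelope, "From").text = source
    ET.SubElement(envelope, "To").text = destination

    # Document: a header describing the payload, then the payload itself.
    document = ET.SubElement(message, "Document")
    header = ET.SubElement(document, "Header")
    ET.SubElement(header, "DocumentType").text = doc_type
    body = ET.SubElement(document, "Body")
    for name, value in body_fields.items():
        ET.SubElement(body, name).text = str(value)
    return message


message = build_message(
    "urn:example:buyer",
    "urn:example:seller",
    "PurchaseOrder",
    {"Item": "solvent", "Quantity": 40},
)
print(ET.tostring(message, encoding="unicode"))
```

A receiving server could read the envelope and header to decide how to route and interpret the message without ever inspecting the body, which is the point of separating the metadata from the payload.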

Taking a look at the message structure only provides a low-level view of what is possible with the framework. Next, we will investigate the various tools that BizTalk provides to add higher-level functionality to the base technology described above.

Given the various tasks that BizTalk servers perform, a number of tools are needed to facilitate the process. These tools help users build business processes, and the corresponding BizTalk representations of those processes. The optional tools available with BizTalk include:

The Orchestration Designer. This tool provides a common visual interface to BizTalk, and aids in building integration layers for existing processes.

XML Editor. For the creation of individual XML documents, an editor is provided. It also contains some capabilities that help reconcile different types of schemas.

The Mapper. This tool helps in the process of mapping one data set onto another, and can generate XSLT programs that express the mapping operations that were performed.

The Messaging Manager. This helps automate the trading of profiles and agreements, using a GUI interface.

These various tools support the facets of the tasks that the BizTalk server performs, and they really show how XML can shine, from defining processes and organizations as XML-based documents, to transforming data from a source to a target specification. XML documents permeate the system, but more importantly, the architectural perspective that XML provides makes the system’s flexibility possible in the first place. Some of the power behind BizTalk has been biztalk.org whose value-added services (such as use of open-source code and automated schema submission and testing) are designed to facilitate growth toward a critical mass of information.

.NET

First a few words from Microsoft to describe .NET:

Microsoft® .NET is a set of Microsoft software technologies for connecting your world of information, people, systems, and devices. It enables an unprecedented level of software integration through the use of XML Web services: small, discrete, building-block applications that connect to each other—as well as to other, larger applications—via the Internet. .NET-connected software delivers what developers need to create XML Web services and stitch them together.

Another way to look at the overall .NET initiative is to examine three of its levels:

Everything needs to be a web service. This applies to both pieces of software and resources in the network like storage.

You need to be able to aggregate and integrate these web services in very simple and easy ways.

The system should provide a simple and compelling consumer or end-user experience.*

The most telling phrase in the above description is, “Everything needs to be a Web service.” Microsoft hopes to turn all applications including its own software into web services. Already you will notice how the properties tag reveals document-specific metadata tallying the number of minutes you have spent editing the document—this in preparation for charging for software rentals. One cynic describes the move as follows:

Once your spreadsheet talks XML, it can link across the Net into other spreadsheets and into server-based applications that offer even greater power. .NET puts little XML stub applications on your PC that do not actually do much until they are linked to the big XML servers Microsoft will be running over the Internet. You can do powerful things on tiny computers as long as you continue to pay rent to Microsoft. The effect of dot-NET is cooperative computing, but the real intent is to smooth Microsoft’s cash flow and make it more deterministic. .NET will move us from being owners to renters of software and will end, Microsoft hopes forever, the tyranny of having to introduce new versions of products just to get more revenue from users. Under .NET, we’ll pay over and over not just for the application parts that run on Microsoft computers, not ours, but we’ll also pay for data, itself, with Microsoft taking a cut, of course.*

.NET makes use of five additional tools. In Microsoft’s own language, they are:

Developer Tools: Make writing Web services as simple and as easy as possible using .NET Framework and the Microsoft Visual Studio toolset.

Microsoft Servers: Best, simplest, easiest, least expensive way to aggregate and deliver Web services

Foundation Services: Building a small set of services, like identity and notification and schematized storage, that will make it really simple for consumers or users to move from one service to another, from one application to another, or even from one environment to another

Devices: We’re building a lot of device software so that people can use a family of complementary devices…to make that experience as compelling and immersive as possible.

User Experiences: Building some very targeted end-user experiences that pull together the Web services, and that pull together and integrate a lot of functionality to deliver a very targeted experience. So we’re building: MSN for consumers, bCentral for small businesses, Office for knowledge workers, Visual Studio .NET for developers.

Some have correctly stated that the entirety of .NET is difficult to describe. The .NET initiative is a combination of a number of different elements whose relationships are as complicated as the elements themselves. Really, .NET is an initiative, not a single technology. The initiative contains a programming language (the new C# language), a virtual machine like the one used to run Java programs, a web services push, a system of allowing different programming languages to be used in conjunction with one another, a security model, a just-in-time compiler, and an object hierarchy, to name just a few. Intertwined through all of these technologies is a common thread of XML used to represent data, but it would not be accurate to say that .NET is XML or that XML is .NET. Rather, XML is used as a small but important part of a much larger initiative called .NET.

When people refer to XML and .NET, frequently what they are talking about is the extensive set of XML APIs (Application Programming Interfaces) that .NET provides for programming language access. The goal of these APIs is to make XML access as simple as possible, for two reasons: first, because XML is used in many areas of .NET, tools need to be provided to work with that XML; and second, simple access encourages the use of XML within applications for data-representation needs.

Both BizTalk and ebXML aim at providing capabilities with XML that are not specific to any particular industry. Next, we will take a look at an XML framework that was created and grown with a specific industry in mind. Industry-specific frameworks often contain a trade-off—this next framework, Acord, sacrifices a bit of general applicability that ebXML or BizTalk might provide, in exchange for a system with preexisting components that match industry-specific needs.

Industry-Specific Initiatives

The Acord group is a non-profit organization that works to develop and promote the use of standards in the insurance industry. Created in 1970, Acord first dealt with the development of a set of forms for information gathering by insurance companies. In the 1980s, it began focusing on data standardization, and it is currently working on a number of XML standards for various insurance lines of business. Specifically, Acord is working on standards related to property and casualty insurance, life insurance, and reinsurance. One of the reasons that Acord became involved in data standardization was to help prevent the development of many different, incompatible XML DTDs in different areas of the industry that would later have to be reconciled. Instead, Acord wishes to act as a central industry broker for these standards in a way that can build consensus among member companies. The interoperability of these industry-wide standards allows all involved parties to reap maximum benefit. Doing it right once at the industry level (instead of many times at the company level) reduces the total amount of effort needed to build the standards and allows all members to take part.

As with any good framework, Acord starts with a data model that details the metadata needed in order for effective communication to happen. Different insurance companies have different ways of storing and referring to various data items, but the central Acord data model relating to life insurance is the basis for later XML documents used to describe various data or phenomena in the insurance industry. The data model was created to allow interoperability, but was also built with an eye toward expandability. The creators of good frameworks always factor industry change into all of their decisions to prevent having to solve a similar problem again at a later date. Finally, usage of this data model allows organizations to achieve some type of consistency in representation, as well as reusability. Data models that become the de facto method of representing specific concepts can be reused in new systems so that as legacy systems are updated and replaced, the translation component can be eliminated as the new system is brought online to understand the data model natively.

Acord represents an effort by a large portion of the insurance industry to come together and develop standards to reduce costs and improve efficiency not only within companies, but across the entire industry. At the date of this writing, Acord has over 1,000 member insurance companies (including the majority of the top 25), 27,000 agents, and 60 software vendors. Acord has also recently been working with the Object Management Group (OMG), a body that helps to set data standards for a number of different industries. The work that Acord has done within the insurance industry is becoming the acknowledged and adopted standard for that industry.

Along with an XML format, Acord also specifies a series of forms that allow for the collection of standard data related to various types of insurance. Predictably, these forms are based on the Acord data models, and attempt to gather standard information that will be needed regardless of the type of policy or company with which a customer is doing business. At an industry level, Acord can take steps to make sure that these individual forms are compliant with various information-collection laws and regulations. They also ensure that information collected by an Acord form in one organization will be transferable to another organization that uses the same forms, rather than requiring a complicated data-reconciliation process.

Acord also has developed and maintains its own EDI standard, called AL3 (Automation Level 3). It tends to focus on communication between property and casualty insurance companies, the agents of those companies, and other industry trading partners. The system is currently being used for exchanging accounting, policy, and claims submission and processing information. The AL3 standard also delves into some standardization of business processes, as with other XML-based frameworks. The flow of data and the business processes that require it often go hand in hand, so it is not strange to see data standards next to business-process standards.

Envera

The Envera Hub is a good example of mature framework usage in the chemical industry. The industry achieved a strong start toward development of a common XML-based framework approach to information exchange. The Envera Hub was developed to be a business-to-business transaction clearinghouse with an in-depth menu of value-added services for its members. Implemented by a consortium of multinational chemical-industry players, the hub facilitated transaction exchange with other members. This provided an incentive to join the hub.

Envera developed a Shockwave presentation describing both the business case and the utility of the hub model. Unfortunately, the demo is no longer available. We will present some shots from the demonstration to illustrate the type of technological sophistication achieved by this XML framework.

Members were equity partners in the venture and all were supposed to realize cost savings and begin to learn more about their industry using the metadata collected by the hub. For example, the hub accumulated volumes of data on one of its consortium members’ product-shipping logistics. This included data on specific product orders, quantities, warehousing requirements, shipping routes, and schedules. Hub operators “mined” this data on its members’ behalf—for example, offering residual space in containers to the market as well as cargo vessels not filled to capacity, or warehouse space that is about to become vacant. Members also realized savings from reduced complexity in order fulfillment, lower working-capital requirements, and revenue boosts through re-engineered distribution channels. Figure 6.9 lists the various areas of the enterprise where savings were made possible.

Figure 6.10 shows just some of the ways that organizations might attempt to work together as partners. Organizations must become good at managing procedures in order to use them effectively. Figure 6.11 shows various types of cross-organization communication used to complete a single transaction. The hub replaces much of this variability both in terms of business processes and technical complexities.

Figure 6.11 Cross-organization communication used to complete a single transaction and replaced by the Envera Hub.

The Envera Hub “learns and/or is taught” Member A and Member B’s metadata. Once this is understood, the Hub can develop translation rules required to make Member A’s document understood by Member B’s system. Figure 6.12 shows how the XML translators work.

All of this is achieved via a commonly understood XML vocabulary, which enables members to reap online business-to-business efficiencies regardless of their IT sophistication. The hub permits XML-based transaction exchange across many different types of organizations involved in the chemical business. Using XML as the basis, the Envera group developed detailed understanding of the data structures and uses within the industry.

Figure 6.13 shows the use of the translators reformatting an XML purchase order-acknowledgement document. Best of all, the interfaces can be maintained by XML because the translation rules themselves are stored as XML documents. This means that maintenance can be performed using modern Database Management System, or “DBMS,” technologies instead of reading through custom programs of spaghetti code.
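The idea of keeping translation rules as data rather than as custom code can be illustrated with a minimal Python sketch. The rule format, the member names, and every element name below are assumptions made for illustration; they are not Envera’s actual rule vocabulary, and a production hub would more likely express such mappings in XSLT.

```python
import xml.etree.ElementTree as ET

# Hypothetical rule document: each rule renames one field of Member A's
# acknowledgement into the name that Member B's system expects.
RULES_XML = """
<translation from="MemberA" to="MemberB" root="OrderAck">
  <rule source="PONumber" target="PurchaseOrderId"/>
  <rule source="Qty" target="Quantity"/>
  <rule source="ShipDate" target="ExpectedShipDate"/>
</translation>
"""


def translate(document, rules):
    """Apply the rename rules to the direct children of `document`."""
    mapping = {r.get("source"): r.get("target") for r in rules.findall("rule")}
    result = ET.Element(rules.get("root"))
    for child in document:
        target = mapping.get(child.tag)
        if target is None:
            continue  # no rule for this field, so this sketch drops it
        ET.SubElement(result, target).text = child.text
    return result


rules = ET.fromstring(RULES_XML.strip())
member_a_doc = ET.fromstring(
    "<Ack><PONumber>PO-1001</PONumber><Qty>40</Qty>"
    "<ShipDate>2003-10-01</ShipDate></Ack>"
)
member_b_doc = translate(member_a_doc, rules)
print(ET.tostring(member_b_doc, encoding="unicode"))
```

Because the mapping lives in `RULES_XML` rather than in the `translate` function, supporting a new field means editing a document, not a program.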

Figure 6.14 illustrates a final significant ability of the hub, that it could link to other hubs and types of systems. Once Envera learned how to translate between any two systems (say a logistics and a cash-forecasting system), new systems of either type could be added with virtually no additional effort. By the same sort of leverage, once Envera was hooked to other hubs, the connectivity multiplier was enormous. New companies could often be added to the hub with little or no new development.
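A rough way to see this leverage is to count translators. The sketch below assumes, purely for illustration, that every system translates to and from one shared hub format; the source does not describe Envera’s internal design at this level of detail.

```python
def point_to_point_translators(n):
    """One-way translators needed when each of n systems maps
    directly to every other system."""
    return n * (n - 1)


def hub_translators(n):
    """One-way translators needed when each of n systems maps only
    to and from a shared hub format (an assumed design)."""
    return 2 * n


for n in (5, 20, 100):
    print(n, point_to_point_translators(n), hub_translators(n))
```

Under that assumption, adding one more system to the hub costs two translators regardless of how many members already exist, whereas a point-to-point arrangement requires two new translators for every existing member.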

The fact that the Envera hub has not received wider recognition has been due more to the dot-com bust than to any technical issues with the hub. As this book is being finished, in the fall of 2003, the Envera hub seems to be an underappreciated asset that comes as a bonus to a corporate merger.

Common Themes and Services

At this point, we have taken a look at a number of different frameworks. While the approach to solving problems is different from framework to framework, they do have quite a bit in common. The common themes of these XML frameworks include:

Integration of applications and business partners

Framework servers exchange using open W3C-standard XML

Framework document transformation is done in W3C-standard XSLT

Improved operating efficiencies

Strengthened customer relationships and service

Tighter supply-chain integration

Framework servers support multiple protocols and common services, including:

Electronic data interchange (EDI)

Extensible Markup Language (XML)

Hypertext Transfer Protocol (HTTP)

Multipurpose Internet Mail Extensions (MIME)

Simple Mail Transfer Protocol (SMTP)

Common XML-based framework advantages include:

Relatively less technical expertise required

Flexible and open architecture

Open to businesses of all sizes

Open binding architecture (allows any developer to build adaptors that allow their products to be accessed from the framework server)

Sends, receives, and queues messages with exactly-once semantics
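The last item deserves a brief illustration. One common way to approximate exactly-once processing is to let the sender retry freely and have the receiver ignore message IDs it has already handled. The sketch below shows that general technique only; the class and identifiers are hypothetical, and it is not a description of how any particular framework server implements its guarantee.

```python
class DeduplicatingReceiver:
    """Hands each message ID to the handler at most once, even if the
    same message is delivered again after a retry."""

    def __init__(self, handler):
        self._handler = handler
        self._seen = set()  # IDs already processed (in memory only here)

    def receive(self, message_id, payload):
        if message_id in self._seen:
            return False  # duplicate delivery: do not reprocess
        self._handler(payload)
        self._seen.add(message_id)
        return True


processed = []
receiver = DeduplicatingReceiver(processed.append)
results = [
    receiver.receive("msg-1", "order A"),
    receiver.receive("msg-1", "order A"),  # retry of the same message
    receiver.receive("msg-2", "order B"),
]
print(processed, results)
```

A real server would keep the set of seen IDs in durable storage so that the guarantee survives a restart; the in-memory set here keeps the sketch short.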

These themes are also shared by additional offerings beyond those discussed, such as the Sun ONE framework pictured in Figure 6.15.

Figure 6.15 Sun ONE XML-based framework. (From Sun ONE Architecture Guide: Delivering Services on Demand. Courtesy of Sun Microsystems Inc., www.sun.com.)

XML frameworks in general frequently attempt to take the emphasis off data structures and specific platforms by standardizing the data-interchange aspect of trading, and instead try to focus on the business processes behind the flow of information. After all, the reason why businesses have information systems in the first place is to facilitate their business processes. One of the most frequent problems with any type of technology is that the user gets distracted by the minutiae and details of the technology and does not spend the necessary time thinking through the business rules and logic behind what the technology is doing. By setting up automated systems for carrying on business transactions, these XML frameworks are trying to put as much focus on the business process as possible.

These frameworks all share the concept of the “metamodel,” a technical description of a business process. These models represent the “verbs” of electronic business, and provide a common way for specifying processes. For example, one organization might wish to purchase goods from another. The business process is represented at its core by the use of the verb “to purchase.” The information models, on the other hand, represent the “nouns” and “adjectives” of electronic business. They define what reusable components can be applied to various processes. Taking the example of purchasing, when a purchasing process is invoked, it must have some object on which to operate: the organization itself and the item that is being purchased represent nouns. Adjectives frequently modify the nouns to give a clearer picture of exactly which type of item is being described. The information models allow users to define data that is meaningful while maintaining interoperability. The interplay of these two ideas is illustrated in Figure 6.16 by taking a business request (purchasing a particular item) and showing how different parts of the request are understood and dealt with by an XML framework.
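The verb/noun/adjective split can be made concrete with a small Python sketch. The request vocabulary below (`request`, `party`, `item`, and their attributes) and the company names are invented for illustration and do not come from any of the frameworks discussed.

```python
import xml.etree.ElementTree as ET

# Hypothetical purchase request: the process attribute is the "verb",
# the parties and the item are "nouns", and the item's attributes are
# the "adjectives" that narrow down which item is meant.
REQUEST_XML = """
<request process="purchase">
  <party role="buyer">Acme Chemicals</party>
  <party role="seller">Example Supply Co.</party>
  <item color="blue" size="large">drum</item>
</request>
"""


def describe(request_xml):
    """Split a request into its verb, nouns, and adjectives."""
    root = ET.fromstring(request_xml.strip())
    item = root.find("item")
    return {
        "verb": root.get("process"),
        "nouns": [p.text for p in root.findall("party")] + [item.text],
        "adjectives": dict(item.attrib),
    }


parts = describe(REQUEST_XML)
print(parts)
```

A framework that understands the verb can select the right business process, while the nouns and adjectives supply the data that process operates on.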

Conclusion

The benefits of using the frameworks described in this chapter have become a compelling argument for organizations to move toward more extensive use of the frameworks and other components of XML as well. Interestingly, these frameworks seem to be methods of creating rallying points for entire industries—the industry comes together to create a set of data standards and a way of exchanging them in XML for the benefit of everyone involved. As competition within industries has intensified, in many situations competitors find themselves linked by supplier/purchaser relationships. Facilitating more efficient communication within industries increases efficiency and allows for even more vigorous competition. Even before XML became popular, the trend was toward increasing contact and involvement between organizations and industries. In many cases, the adoption of these XML frameworks is just a signal of the acceleration of that trend.

The frameworks presented in this chapter represent concrete examples of how XML is delivering on some of the things that were always promised of it. XML-language documents and the architectural directions that XML opened up for the information world are the bones of these frameworks. These bones are what make the difference between the one-off solutions for solving specific trading problems, and the general, flexible, and elegant solutions described in this chapter. It is probably a safe prediction to say that the value of XML frameworks will increase along with their overall use over the coming years.

Not everything about XML frameworks is absolutely perfect, though. Just like any technology, XML frameworks can in some situations raise as many questions as they answer. One of these questions is the way in which the frameworks will be integrated. As we have seen earlier, some frameworks are starting to collaborate, as is the case with RosettaNET and ebXML. XML frameworks are proliferating, and not all of them interoperate correctly with one another. Larger organizations that take part in a number of different industries may find themselves still facing the issue of maintaining multiple interfaces (representing various different frameworks) with different trading partners. Interoperability between frameworks, and something of a meta-framework that represents the metadata, business process standardization, and document-exchange methods that would tie frameworks together would be most valuable. Frameworks have shown the myriad benefits that are to be had when intraindustry communication can be automated and managed effectively. Reconciling those frameworks and bringing the same benefits to the larger community of industries may well represent another level of gains of a similar magnitude. Later in the book, methods for how these frameworks might be reconciled will be discussed along with the potential implications for the industries that take part.

*http://www.ebXML.org, accessed 10/2001.

*http://Microsoft.net/net/defined/default.asp, accessed 10/2001.

*Robert X. Cringely, “Data, Know Thyself” (http://www.pbs.org/cringely/pulpit/pulpit20010412.html), accessed 10/2001.