XML and DM Basics

Introduction

XML equips organizations with the tools to develop programmatic solutions to manage their data interchange environments using the same economies of scale associated with their DM environments. XML complements existing DM efforts nicely and enables the development of new and innovative DM techniques. Rather than looking at data management as a set of many problems, each consisting of a method of transporting, transforming, or otherwise evolving data from one point to alternate forms, practitioners can now look at the big picture of DM—the complete set of systems working together, and how data moves amongst them. This is jumping up one conceptual level—moving from thinking of the challenge in terms of its individual instances to thinking of it in terms of a class of similar challenges. It is an architectural pattern that is seen frequently as systems evolve. First, a solution is created for a specific problem. Next, a slightly different problem arises that requires another specific solution. As more and more conceptually similar problems arise that differ only in the details, eventually it makes sense from an architectural standpoint to develop a general solution that deals with an entire class of similar problems by focusing on their commonalities rather than their differences.

This first chapter begins by describing a present-day DM challenge. We then present the definitions of DATA and METADATA used throughout the book. The next section presents a brief overview of DM. This is followed by a justification for investing in metadata/DM. We acknowledge the challenge of XML’s hype, but we will provide a brief introduction and two short examples. These lead to an overview of the intersection of DM and XML. The chapter closes with a few XML caveats. We are presenting this information in order to help you make the case that investing in metadata is not only a good idea, but also necessary in order to realize the full benefit of XML and related IT investments.

The DM Challenge

An organization that we worked with once presented us with our most vexing DM challenge. When working with them to resolve a structural data quality situation, our team discovered it would be helpful to have access to a certain master list that was maintained on another machine located physically elsewhere in the organization. Better access to the master list would have sped up validation efforts we were performing as part of the corrective data quality engineering. In order to obtain a more timely copy—ours was a l-year-old extract refreshed annually—we requested access through the proper channels, crossing many desks without action. As the situation was elevated, one individual decided to address the problem by informally creating a means of accessing the master list data. Volunteering to take on the effort, the individual neglected to mention the effort to our team, and consequently never understood the team’s access requirements. Ten months after the initial request, the individual approached us with a solution created over two weeks. The solution allowed us to retrieve up to twelve records of master data at a time with a web browser, and access incorporated a substantial lag time.

After realizing that this solution was inadequate, our team managed to get the attention of developers who worked with the master list directly. They in turn offered their own solution—a utility that they described as capable of handling large volumes of data. This solution also proved inadequate. After one year, our requirements had not changed. We needed approximately four million records weekly to ensure that we were working with the most current data. These extracts were necessary since transactional access to live data was not available.

This was a reasonable and remarkably unchallenging technical request. The sticking point that made both of the offered solutions inappropriate was the way they were built. Because of the way the system was constructed, queries on particular items had to be done one at a time, and could not be done in bulk. This meant that when tools were built for us to access “large volumes of data,” those tools simply automated the process of issuing tens of thousands of individual requests. Not surprisingly, extracting the volumes of data that we needed would have put an untenable burden on the system.

While this organization had many technically brilliant individuals, they only used the tools that they knew. As a result, we were unable to gain access to the data that we required. Many aspects of this situation might have been helped by the judicious application of XML to their DM practices.

Over the coming pages, we will describe many of the lessons we have learned with respect to how XML can help data managers. Organizations have resources in the knowledge that resides in the heads of their workers as well as in their systems. The way systems are built can either take advantage of that knowledge, or render it impotent.

Whether you are a database manager, administrator, or an application developer, XML will profoundly impact the way in which you think about and practice data management. Understanding how to correctly manage and apply XML-based metadata will be the key to advancements in the field. XML represents a large and varied body of metadata-related technologies, each of which has individual applications and strengths. Understanding the XML conceptual architecture is central to understanding the applications and strengths of XML, which are presented later in this chapter.

Why are you reading this book? Chances are that you opened the cover because XML is impacting your organization already, or will be very shortly. If you are interested in this material, our experience shows us that one or more of the following is probably true:

![]() You are leading a group that is working with XML.

You are leading a group that is working with XML.

![]() Your new application will benefit from the ability to speak a common language with other platforms.

Your new application will benefit from the ability to speak a common language with other platforms.

![]() You are a technical analyst and need higher-level information on the XML component architecture.

You are a technical analyst and need higher-level information on the XML component architecture.

![]() You are in a business group tasked with ensuring return on existing or potential technology investment.

You are in a business group tasked with ensuring return on existing or potential technology investment.

![]() You are in IT planning and you need to understand how XML will impact various technology investment decisions.

You are in IT planning and you need to understand how XML will impact various technology investment decisions.

![]() You are a CIO and you want to ensure that the organization is able to take advantage of modern XML-based DM technologies.

You are a CIO and you want to ensure that the organization is able to take advantage of modern XML-based DM technologies.

As a group, you are busy professionals who share a concern for effective architecture-driven development practices. You are as likely to be a manager tasked with leading XML development, as you are to be a technical XML specialist. If you are the latter, you already know that the best and most up-to-date XML documentation is of course on the web precisely because of the web’s superior ability to publish and republish information from a central location. Because of the proliferation of excellent documentation on the technical aspects of XML, we will be focusing on the strategic implications for a variety of different roles within the organization.

Definitions

This section presents the definitions that we will use when referring to data and metadata.

Data and Information

Before defining DM, it is necessary to formally define data and metadata. These definitions may be understood with the assistance of Figure 1.1.

Figure 1.1 Deriving information from its two predecessors—facts and data. (Original description from Appleton, 1983..)

1. Each fact combines with one or more meanings. For example, the fact “$5.99” combines with the meaning “price.”

2. Each specific fact and meaning combination is referred to as a datum. For example, the fact “$5.99” is combined with the meaning “price” to form an understandable sentence: The item has a price of USD $5.99.

3. Information is one or more pieces of data that are returned in response to a specific request such as, How much does that used CD cost? The answer, or returned data, is USD $5.99. This request could be a simple acquisition of the data. But whether the data is pulled from a system or pushed to a user, a dataset is used to answer a specific question. This request or acquisition of the data is essentially the question being asked.

There are numerous other possible definitions and organizations of these terms relative to one another, usually specific to certain information disciplines, like applications development. The reason for using this set of definitions is that it acknowledges the need to pair context with an information system’s raw response. The fact “123 Pine Street” means nothing without the paired meaning “address.” A particular dataset is only useful within the context of a request. For example, what looks like a random assortment of house-for-sale listings becomes meaningful when we know that it was the result of a query requesting all houses within a particular price range in a particular area of town. This contextual model of data and information is important when we consider that one of the most common problems in information systems is that organizations have huge quantities of data that cannot be accessed properly or put into context. Facts without meanings are of limited value. The way facts are paired with meanings touches on the next term we will define—metadata.

Metadata

The concept of metadata is central to understanding the usefulness of XML. If an organization is to invest in XML and metadata, it is important to understand what it is, so that those investments can be exploited to their fullest potential.

Many people incorrectly believe that metadata is a special type or class of data that they already have. While organizations have it whether they have looked at it or not, metadata actually represents a use of existing facts rather than a type of data itself What does it mean to say that it is a use of facts? Metadata has to do with the structure and meaning of facts. In other words, metadata describes the use of those facts. Looking back at Figure 1.1, metadata acts as the meaning paired with the facts used to create data.

Take for example a standard hammer. This object can be looked at in two ways. On one hand, it is just an ordinary object, like an automobile, or even a highway bridge. While it is possible to look at it as an object just like any other, what makes the hammer powerful is the understanding of its use. A hammer is something that drives nails—that is how we understand the hammer! Metadata is the same. Rather than understanding it just as data (as some might claim that the hammer is just an object), we understand it in terms of its use—metadata is a categorization and explanation of facts. The implications of the actual meaning of metadata must be understood. In the coming discussion, we will outline why understanding of metadata is critical.

When metadata is treated like data, the benefits are no more or less than just having more data in an existing system. This is because looking at it simply as data causes it to be treated like any other data. When metadata is viewed as a use of data, it gains additional properties. For example, data sources can be conceptually “indexed” using the metadata, allowing anyone to determine which data is being captured by which system. Wouldn’t it be useful to know all of the places throughout a system where integer data was used within the context of uniquely identifying a customer? The benefits of data transparency throughout the organization are numerous—the application of metadata to create this transparency is one of the topics that we will address.

To explain why the understanding of a concept is important in the way it is used, let’s take another example. When the first personal computers were coming onto the market for households, most people thought of them as curious toys that were useful for things such as playing games, writing rudimentary programs, and possibly even keeping track of personal information. The evolution of personal computers is marked primarily by shifts in the way people think about them, leading to computers branching into new areas. Today, many people think of personal computers mainly as platforms to enable connectivity to the Internet, and subsequently as communication devices even though their conceptual operation has not changed since their earliest days. While the speed of computers may have increased by leaps and bounds, their underlying architecture, based on the original Von Neumann computer architecture,* has notchanged. The critical aspect that allowed computers to radically expand their utility to home users was a shift in the way people thought about them. Similarly, as practitioners shift their understanding of metadata toward looking at it as an application of data, so too can the benefits derived increase substantially.

Traditional Views of Metadata

The basic definition of the term “metadata” has been limited, and as a result, metadata has been underutilized both in research and in practice. Widely used “poor” metadata definitions and practices lead to higher systems costs. Most savings that compound from proper recognition of metadata are simply not articulated in practice. Incorporating the correct definition of metadata into our collective understanding will lead to more efficient systems development practices and more effective information technology investments. In short, widening the way that we think about metadata will inevitably widen its applicability and value.

Let us take a quick look at metadata’s most popular definitions, which are examples of definitions of types of data:

![]() Popularly: “data about data”*

Popularly: “data about data”*

![]() Newly: data resource data—“data that is useful to understand how data can be used as a resource” (Bracket, 2002)

Newly: data resource data—“data that is useful to understand how data can be used as a resource” (Bracket, 2002)

![]() Formally: the international standard, ISO 11179. The data that makes datasets more useable by users. †

Formally: the international standard, ISO 11179. The data that makes datasets more useable by users. †

![]() Intellectual Property: At one point in time, attempting to make the term proprietary, one organization/person copyrighted/patented the term as intellectual property.‡

Intellectual Property: At one point in time, attempting to make the term proprietary, one organization/person copyrighted/patented the term as intellectual property.‡

The main point to take away from this discussion of metadata is that how a concept is understood greatly impacts how useful it is in practice. The distinction presented between the two possible ways of understanding metadata may seem like a small point, but as we will see with examples throughout the book, its impact is profound. Next, we will present an overview of DM.

DM Overview

Between 1994 and 1995, research at the MITRE Corporation near Washington, DC, formalized a definition of DM as the interrelated coordination of six functions (Figure 1.2) in a way that has become a de facto standard. Each DM function will be described in the sections below. (The DM framework, figure, and definitions that follow are all derived from Parker [1995] and Aiken [In press].) The first three functions of DM provide direction to the latter three—responsible for implementation of a process designed with the specific aim of producing business value. Please note that we specifically differentiate this definition from the previous, narrower DM practices. The figure illustrates the effect of business direction-based input to DM, providing guidance to individual functions. Several functions also exchange specific feedback of the process information and these have also been noted on the figure—the feedback permits process fine-tuning. These functions provide the next level of granularity at which we have asked organizations to rate their DM maturity.

Data Program Coordination

The focus of the data program coordination function (Figure 1.3) is the management of data program data (or meta-meta-metadata). These are descriptive propositions or observations needed to establish, document, sustain, control, and improve enterprise data-oriented activities (e.g., vision, goals, policies, processes, standards, plans, budgets, metrics, training, feedback, and repositories and their contents). Every organization that creates and manipulates collections of data has a data program coordination function, whether it is called that or not. The effectiveness of the data program depends on whether or not it is actively managed, with well-understood responsibilities, procedures, processes, metrics, and two-way lines of communication, and has the ability to improve its practices based on feedback gathered proactively from business users, administrators, programmers, analysts, designers, data stewards, integrators, and coordinators. A mature data program is further characterized by its use of a system to plan for, allocate resources for, and track and manage data program-related activities in an accurate, repeatable fashion. Results from historical activities captured by the system are used to optimize data program management practices. Data program management characteristics include vision, goals, policies, organization, processes, plans, standards, measures, audits, guidance, and schedules.

Enterprise Data Integration

The focus of the Enterprise Data Integration function (Figure 1.4) is the management of data-development data or meta-metadata. These are descriptive facts, propositions, or observations used to develop and document the structures and interrelationships of data (e.g., data models, data- base designs, specifications, and data registries and their contents).

Enterprise Data Integration measures assess the extent to which an organization understands and manages collections of data and data architectures across functional boundaries and is a measure of the degree to which the data of an enterprise can be considered to be integrated. Maturity in this arena is measured in terms of proactive versus reactive management. Mature enterprise data integration practices include the proactive observation and study of data integration patterns, managed by an enterprise-wide entity consisting of IT personnel and business users, utilizing tools with Computer-Aided Software Engineering (CASE)-like functionality. This entity constantly anticipates and reevaluates the organization’s data integration needs, and manages their fulfillment. Enterprise data integration sub-functions include identification, definition, specification, sourcing, standardization, planning, measures, cost leverage, productivity, enterprise data engineering commitment, budgeting, developing metrics, guidance, policies, and standards.

Data Stewardship

The focus of the enterprise data integration function (Figure 1.5) is the management of data-stewardship data or metadata. These are descriptive facts about data, documenting their semantics and syntax, e.g., name, definition, format, length, and data dictionaries and their contents. Data stewardship’s primary objective is the management of the enterprise data assets in order to improve their accessibility, reusability, and quality. A data stewardship entity’s responsibilities include the capture, documentation, and dissemination of data naming standards, entity, and attribute definitions; business rules; and data retention criteria, data change management procedures, and data quality analysis. The data stewardship function is most effective, and therefore most mature, if managed at an enterprise level, with well-understood responsibilities and procedures, and the ability to improve its practices over time. Data stewardship sub-functions are more narrowly focused on identification definition, specification, sourcing, and standardization.

Data Development

The focus of the data program coordination function (Figure 1.6) is the management of business. These are business facts and their constructs used to accomplish enterprise business activities, e.g., data elements, records, files, databases, data warehouses, reports, displays, and their contents. As new data collections are created to satisfy a new line of business, or the needs or practices of an existing line of business necessitate change, at what organizational level are the challenges of database design managed? Enterprises with more mature data development practices are characterized by their ability to estimate the resources necessary for a given data development activity, their proactive discovery and correction of data quality problems, and by their use of tools with CASE-like functionality at an organization-wide level.

Data Support Operations

Data support operations are also focused on business data. Organizations with mature data support operations have established standards and procedures at the enterprise level for managing and optimizing the performance of their databases, managing changes to their data catalog, and managing the physical aspects of their data assets, including data backup and recovery, and disaster recovery.

Investing in Metadata/Data Management

Organizations that understand the true power of metadata and XML stand to gain competitive advantages in two areas. First, a significant reduction in the amount of resources spent transforming data from one format to another, both inside and outside the organization, is one of the benefits of XML as it is frequently used today. Second, using XMLbased metadata allows for the development of more flexible systems architectures.

Fundamentally, having architectural components exist as metadata permits them to be programmatically manipulated and reused by existing technologies. If you are still having trouble convincing your management to invest in XML, try the following argument: Ask them which version of the following two sets of data they would find easier to comprehend. In

Figure 1.7, the context and semantics of the data are unspecified and subject to misinterpretation.

In the second version, shown in Figure 1.8, XML tags have been added describing the structure and the definition of the concepts “shopping list,” and “flight status.” The XML version with its embedded metadata structure makes the data easier to understand—not only at the high level of “shopping list” or “flight status,” but also at lower levels, giving meaning to individual data points (“product price”) within the context of a larger data structure. This is critical to understanding the ability of organizations to use this powerful simplicity. XML labels should be designed to be human understandable, as opposed to labels defined for the convenience of computer processing—the implications resulting from the human ability to understand metadata structures at multiple levels are substantial. In Figure 1.8, we see another case of how metadata is a use of data. In the shopping list, we see the product “lettuce.” The word “lettuce” all by itself is simply a string, but the metadata “product” in this case shows the user of the document how that string variable is being used, not as a non sequitur or as a request for something on a burger, but as a string variable to identify a product within the context of a shopping list.

Typical Systems Evolution

To appreciate the situation that most organizations are in today with respect to their DM practices, it is important to understand how they evolved over time. It helps to have a solid idea of where organizations are coming from in order to understand the challenges of the present.

Historically, large organizations have had a number of individual systems run by various groups, each of which deals with a particular portion of the enterprise. For example, many organizations have systems that hold marketing data related to finding new business, manufacturing data related to production and potentially forecasting, research and development data, payroll data for employees, personnel data within human resources, and a number of other systems as illustrated in Figure 1.9.

At first, these systems were not connected because of the fact that they evolved in different ways at different paces. As organizations have learned of the numerous benefits of connecting these systems, the need to build interfaces between systems has grown quickly. For example, it is often very useful for the marketing department working with marketing data to have some type of access to manufacturing data, to ensure that customer promises are in line with manufacturing capacity. It is also critical to join payroll and personnel data so that if employees move or change names and notify human resources, their paychecks can be sent to the appropriate names and addresses.

XML lntegration

The solution to the issue of integrating many disparate data systems was frequently to develop one-time solutions to data interchange. The costs related to maintaining custom electronic data interchange (EDI) solutions were often underestimated, and organizations to this day are frequently plagued by inflexible, brittle software meant to perform these tasks. The essential problem with these systems as they have traditionally been designed is that the thinking that went into their architecture was essentially point to point. Developers of a link from System A to System B developed what was requested, but may not have taken into account the way the individual systems interacted with other systems that perhaps were not within the immediate scope of their data interchange project.

Today, many organizations have grown their data systems to quite an amazing size and level of complexity. This level has long since exceeded the level of complexity where it made sense to think of the relationships between systems in terms of point-to-point connections. While the point-to-point connections were initially cheaper to implement than considering “the big picture” of intercommunication between systems, they are now major barriers to future development. Not only are they typically limited in functionality, they also tend to require quite astounding ongoing investments in the way of routine maintenance, updating the software as the system evolves underneath it, and working around its architectural limitations. XML’s ability to increase the number of programmatic solutions that organizations are able to develop can only help this situation.

Data Integration/Exchange Challenges

The same word or term may have many different meanings depending on whom you ask. Take as an example the word “customer”—what does it mean? If a person from the accounting department is asked for a definition of customer, he or she might respond that a customer is an organization or individual that buys products or services. If a person in the service department was asked, the individual might respond that a customer is a client in the general public who bought a product and needs service on that product. Asking a third person in the sales department yields a third answer. In the sales department, a customer is a potential retailer of a product line.

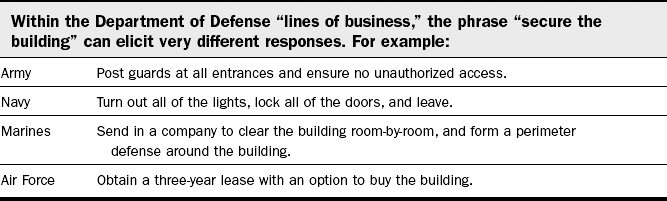

Definition confusion doesn’t stop at simple terms, but extends to phrases and concepts that would otherwise seem clear. For example, our colleague Burt Parker has made the following illustration numerous times (Table 1.1).

Every organization of any size will find a multitude of its own examples of phrases that have different meanings to different branches internally. Often it seems that the most solid concepts are the ones that most frequently cause problems of interpretation. The concept of “unique identifier” seems quite solid, but in fact the data that comprise a unique identifier even for the same concept may differ across various data systems.

What is needed is information that explains and details what is stored in a particular data store and what its actual meaning is. As we’ve just found out, it may not be enough to know that a particular data item contains information on a particular “customer.” Since the concept of customer can differ based on context, more information is needed about the surrounding data and business context in order to decipher seemingly simple terms.

Managing Joan Smith’s Metadata

Another challenge frequently encountered is that of data duplication, and the issue of data in some systems being “out of sync” with what should be complementary data in another system. To illustrate this point, the example of Joan Smith is provided. Joan Smith is a loyal and frequent customer of a particular organization. The organization would like to know more about Joan Smith in order to better serve her, but efforts at pulling together the information about her contained in different data stores have encountered a number of thorny problems. As illustrated in the SAS Institute’s articulation of a somewhat typical data engineering challenge, this company’s problem was that they did not have a good idea who its customers were. This translated directly into a lack of understanding of the company’s organizational DM practices and its metadata.

As an example, consider how a single customer, Ms. Joan E. Smith, appears multiple times in data stores for a number of reasons. As it turns out, “Joan Smith” is not really “Joan Smith” but a number of different individual data items whose metadata must be reconciled. In the customer data-base, Joan Smith is known as “Joan E. Smith.” The next instance occurred when the call center operator misheard or mistyped Ms. Smith’s name during a customer contact. The call center database only has record of calls placed to “Joanie Smitt.” The third instance occurred because Ms. Smith’s browser reported her identify as “J E Smith” when she visited the web site. The fourth identity was created because of data from a vendor list of prospective customers. The process to acquire this list did not require that existing customers be removed. It is reasonable to ask whether the goal of obtaining the most accurate and complete information is possible, given the poor quality of the data. Ms. Smith might even acquire a fourth identify as “Jon E. Smith” through some other system or business process. The authors of this book often even fill in forms with name variations in order to track who is selling information to whom. These problems of multiple records for a single individual lead to excessive maintenance costs if they are addressed at all, and create an inability to provide reliable information.

Figure 1.10 A very nice articulation of a common type of data-quality problem—not having a good idea who your customers really are. (Advertisement courtesy of SAS Industries, www.sas.com. Copyright © 2004 SAS Institute Inc. Cary, NC, USA. All rights reserved.)

In this example, the DM challenge is rooted in the metadata as the organization has no idea from where it gets its data. Understanding how XML has developed in the minds of management and even its core users is an important part of knowing what perceptions already surround XML and how the hype can be tempered with fact. This is presented in the next subsection.

XML Hype: Management by Magazine

Managers have been told a number of different things about XML and its impacts. It has been promised to us as the “silver bullet.” DM technology adoption is high and still rising, but our experience indicates that most organizations have not yet developed sufficient maturity to use XMLbased DM successfully. Unfortunately, some organizations have brought XML-related technologies in-house so that they can be seen as applying a new technology—not in response to a particular challenge to which XML is well suited. Organizations are being driven by magazine article pressure to “adopt XML.” Most organizations “adopt XML” by wrapping a few data items up in unstructured XML tags, most of which likely don’t fall into a larger architecture or pattern. Some organizations actually structure their XML elements into larger architectural components, and gains the advantages associated with utilizing those architectural components, as opposed to using individual data items.

This section closes with a set of descriptions of hype-related statistics surrounding XML adoption. The first points out that more than half of organizations do not use metadata cataloging tools, which strongly suggests that they may not be using or valuing their metadata as appropriate. Since metadata is such a crucial component of work with XML and just about any other data technology, it makes sense that those organizations that are not making investments in their metadata may not see the maximum benefit possible from those investments.

Repositories are often used within organizations to store metadata and other derived data assets. These repositories keep track of metadata so that it can be reused in other projects elsewhere in the organization—they help enable information reuse. In Figures 1.12 and 1.13, we see that barely more than 50% of repository builders take a structured approach to the development of their repository, and less than half plan to adopt repository technology in the future. Organizations have long known that an unstructured approach to other projects such as applications development can be costly, and repository development is no different.

Figure 1.12 Just over half of repository builders use a structured approach to repository development.

Figure 1.14 lists perceived XML adoption challenges. A commonly held perception has been that immature XML standards have been a barrier to its adoption. In truth, these days there are numerous mature and solid standards to work with, and while some standards are still being developed, the majority of what is needed for most organizations is ready for implementation and has been for some time. Toward the bottom of the figure, we see that organizations do not view the complexity of XML as a barrier to adoption. Later, we will see that this perception is correct and that XML does have an elegant simplicity to it that eases adoption. Finally, immature metadata management practices are not seen as a barrier to XML adoption. There are two sides to that issue. While it is true that an organization need not have mature metadata management capabilities to adopt XML, we would argue that these capabilities are crucial to getting the most out of any XML investment.

This section has dealt with what managers and users have been told about XML. But what is it that you really need to know about XML? Let us take a look at a few things that are important to keep in mind, from the perspective of those who developed XML and what they think is important to know about it.

Two Examples of XML in Context

XML is architected to be architected. Most of the XML technologies and standards that exist today are built on top of “lower layers” of XML—it is possible to stack XML on top of itself, building more robust structures by applying existing engineering principles. In this way, newly developed XML technologies can take advantage of the already existing technologies. This can be thought of like floors in a building—the foundation supports each layer of the building. If the foundation is built correctly, an architect can even build a skyscraper on top of it.

In this section, we will discuss two specific applications of XML within context. These are examples of building an application on top of the foundation that XML provides, and the benefits that come along with doing this. The purpose of these examples is to give you a taste for some of the possibilities that come with using XML-based data and metadata.

Internet Congestion and Application Efficiency

Internet congestion can be reduced using XML-based delivery architectures. This is accomplished by reducing the number of transfers required to complete a business transaction on the Internet. The current approach requires a given server—for example, a travel savings server—to respond to each inquiry with an entire web page. In typical scenarios, refining travel options often requires six or more queries for each trip. This allows the passenger to find the right seats, times, and flights for the trip. In many cases, extra server requests are generated by simple rearrangements of data that the client already has. For example, sometimes it may be preferable to sort flights by total price when budget is the concern, while other times the customer may want to see them sorted by departure times when scheduling is the greater concern. In this situation, another request is generated even though the data being returned will be identical, only ordered differently.

Large savings are possible if the efficiency of the server could be increased. The necessary task is to eliminate redundant work done by the server—the process of sending documents with identical data differing only in the order of the records. Using XML-based data management, an organization would serve up a copy of the most likely used data, wrapped in XML and accompanied by an XML-aware Java applet or equivalent. This approach permits the Java program to manipulate the XML on the client side. The idea can further be expanded with the use of related XML technologies that aid other aspects of the process, such as data presentation. Rather than sending the same redundant presentation data over and over to the client, the XML document the client receives may refer to another resource that describes how the data is to be presented. The result is that presentation data is fetched once, rather than each time any modification to the displayed data is requested. While the savings created from reducing the load from an average of six to two queries might not seem significant, its effect is amplified when there are 6,000 transactions per minute, effectively reducing the overall number of requests for that resource by two-thirds.

Some people might accurately point out that XML also has the potential to increase total traffic on the Internet. Once organizations find out the types of services and capabilities that are possible with XML, they may begin to consume these services more and more. In this sense, traffic may increase not because of a technical problem with XML, but because of increased overall demand for the services provided by XML and related technologies. This is strangely similar to situations that manufacturers find themselves in all the time—by reducing the individual price of an item (which can be equated with increasing the ease of use and power of an existing application reworked to take advantage of XML) the total demand for the item increases dramatically. In this sense, increased network traffic should be seen as a sign that the use of XML is succeeding—it should not be viewed simply as an increased cost.

Information Location

One of the most immediately promising aspects of using XML is exploiting its self-describing nature to aid in information location problems. Many organizational information tasks can be broken down into two systems communicating with one another. The key to this communication is in the answers to two questions—What does the system on the other end of the line actually store, and what does the data being stored mean? XML-based metadata is the answer to these questions that allows the communication to take place.

One common solution to the problem of locating data is to create a system in which metadata is published by various other systems. This metadata clearinghouse then acts as a central location that the larger community of applications can consult. Such a metadata repository can answer common systems questions such as, “Which systems store information on customers?” and “What are the acceptable values for this particular code?” This central XML repository eliminates the old point-to-point thinking along with the way data interchange is often done, and introduces a new concept of dialog between networks of related systems. Such systems exist today, and their users are already reaping the benefits. The architecture of most systems will eventually change from the semi-chaotic legacy base of today, teeming with individually maintained connections between systems, to one that values metadata. This will enable huge savings as both low-level devices and high-level systems become capable of information location and analysis as a direct result of their access to metadata.

Let us take an example that is often seen in many corporate environments. Due to new requirements, a new system must be built that generates reports and statistics from many different data sources. At a high level, the business wants to see reports that logically span several different information storage areas, for example, linking customers to shipping records, and so on. The old way of developing this type of system would have involved setting up links between the new reporting application and the many different data sources from which it pulled. That in turn would involve first figuring out which data was stored where, how it might be accessed, and how the data would be formatted once the application received it. Using XML’s information location abilities, if the systems from which the data were to be pulled would publish XML metadata descriptions of what the systems contain, things could be simplified. The new application would simply consult this “metadata catalog,” quickly discovering which data items were located in which systems. Again using an XML-based messaging method, it could connect directly to these systems and fetch the necessary information. Given that the data would be formatted in XML and the metadata would already be understood, the new application would not even have to put as much effort into reformatting data from whatever arcane format the other system’s platform dictated.

The change from the existing legacy systems to metadata-consuming systems has started slowly, but will likely accelerate. One major area of competition between companies is the level of efficiency at which they can operate with respect to their goods, services, and management of data. As many companies begin to adopt new practices, the benefits derived from these practices will be reaped in terms of efficiency, creating more competition and impetus for others to follow. Effective and efficient management of data is already a best business practice as well as a critical success factor for most organizations. Some organizations may choose better DM for its benefits. Others in the future will choose it because they are forced to—keeping up with the competition may require it! XML-based metadata management simply extends existing ideas about organizational data and metadata management by attempting to extend the gains already seen within this area.

The architectural aspects of XML have important and wide-ranging implications for how it is used as a component of DM approaches. The next section deals with how these core features of XML translate into changes in the role of data management.

XML & DM Interaction Overview

DM is the practice of organizing and using data resources to the maximal benefit of the organization. DM is understood in several different contexts, from a high-level strategic discipline to a very low-level technical practice. DM often encompasses efforts at standardizing naming and usage conventions for data, profiling and analyzing data in search of trends, storing and formatting it for use in numerous different contexts, and evaluating data quality. In short, one might say that DM is tasked with making sure the right people have the right data at the right time, and that it is factually correct, useful, relevant, and accessible.

XML is more than just a way to represent data. Just as we noted about metadata toward the beginning of the chapter, the way XML is understood will impact how it is used and what benefit is derived from it. One of the specific profound impacts of XML that is appearing is its effect on data management. XML will change DM in three major ways:

1. XML will allow the management of data that was previously unstructured. This will expand the definition of DM to include data sources that previously were not addressed by existing DM capabilities.

2. XML will expand data management’s role in applications development. As applications are increasingly developed in ways that

focus primarily on the data and how it is used in the application, XML and its connection to DM will grow in influence.

3. XML’s capabilities will influence the increasingly frequent DM task of preparing data for interchange, along with associated data quality issues.

As XML changes DM overall, organizations must be sure that their DM practices are mature so that they can take advantage of the benefits. Organizations have typically shown low- or entry-level metadata management practices both across time and industry. A number of different attempts have been made to effectively measure the status of DM within organizations—most recently, the authors have researched the maturity of organizational DM practices. Given the correct surveying tool, it is possible to assess how mature the practices of a particular organization are. When comparing this data against data of other companies within the same industry, an organization can gain knowledge about its relative strengths and weaknesses. This information is crucial for organizations to discover where they are lacking or where they might yet find additional efficiencies relative to their competition.

Aside from information concerning outside competition, a DM assessment can indicate which benefits of XML can be immediately realized. For organizations with mature DM practices, it is likely that some of the advantages XML brings to the table will be like a breath of fresh air, prompting data managers to exclaim, “I always wished there was something that could do that!” For organizations with less mature practices, some of XML’s advantages may not be within immediate reach.

Let’s take a look at the three XML and DM interactions previously stated and address them individually.

Management of Unstructured Data

Organizational data can be divided into two categories, structured and unstructured. Structured data is what most people think of when they think of organizational data to be managed. Unstructured data, on the other hand, is data stored in formats that is not as easily accessible or searchable as data one might find in a relational database. Examples of unstructured data would include Word documents, PowerPoint presentations, e-mails, memos, notes, and basically just about anything that would be found on the average worker’s PC as opposed to in a centralized “data store.” Another way of describing unstructured data is that it is usually intended for human consumption, where the read mechanism is a pair of eyes, and the write mechanism is a keyboard. Structured information, conversely, is usually intended for consumption by computer systems and intermediaries before it reaches humans. Some people prefer to describe structured and unstructured data as tabular and non-tabular data—tabular data could be viewed as data that would fit into a table, or a rectangular array, while non-tabular data tends to represent more complicated and less atomic information that has to be put in a different form in order to be utilized. This definition generally captures the right idea, but is slightly inaccurate since structured information need not necessarily be tabular.

When asked, most managers would initially respond that the bulk of their important data is stored in structured formats where it is most easily accessible. In fact, research into the overall quantity and location of data (Finkelstein, 1998) within organizations tends to point in quite the opposite direction—80% of data is stored in unstructured formats, while only 20% is stored in structured formats. This large skew is due in part to the massive amount of valuable information transmitted as unstructured e-mails between workers. The effect, though, is that organizations are applying DM ideas to only 20% of their data! Large quantities of data that are critical to everyday operation are floating around in a number of different formats on desktop PCs. Many people have experienced problems in the past with multiple versions of a document floating around, not being able to locate the right document in a timely manner, and problems surrounding possession and versioning control of documents that are collaborative efforts. Furthermore, when a worker leaves an organization, the information in his or her head is lost.

The concept of XML-based metadata brings a number of new possibilities to the arena of managing this unstructured information. Part of the problem with today’s unstructured information is that the amount of metadata related to the documents is insufficient, and because of this the search facilities that exist to locate documents tend to be somewhat lacking. For example, it is very difficult to query documents with questions like, “Show me all documents that contain annotations by Joan Smith between November 1 and November 20.” Similar queries inside of structured datasets are much more straightforward. When structure can be given to previously unstructured information and metadata associated with particular data items within documents, the number of ways that the documents can be used and reused increases dramatically.

Expanded DM Roles

Some of data management’s roles related to applications development will be expanded with the use of XML. For example,

![]() Greatly expanded volumes of data will require a fundamentally different approach than has been practiced in the past.

Greatly expanded volumes of data will require a fundamentally different approach than has been practiced in the past.

![]() DM can play a greater role in areas that have previously been off-limits, such as capacity management.

DM can play a greater role in areas that have previously been off-limits, such as capacity management.

![]() As an integration technology, DM permits more effective use of technologies focusing on the management of both data and metadata.

As an integration technology, DM permits more effective use of technologies focusing on the management of both data and metadata.

The amount of data being managed today far exceeds the amounts managed even a few years ago. As organizations start new data initiatives, including correlation of their data with external datasets, data mining, and business intelligence, the variety and quantity that must be managed have grown rapidly. DM technology itself must then change with the evolving requirements of the data itself if the organization is to keep pace and not lose ground by increasing its overall management burden.

People normally do not think of data managers as individuals who can help in more technical areas such as capacity management. But keeping in mind our earlier example dealing with the airline reservation system and the potential to reduce the total number of queries against the system, we see that data managers can make quite a splash. The way that data is delivered determines how much capacity will be needed in order to accomplish delivery. The way the data is structured and managed in turn determines the way in which it is delivered. In other words, by creating effective data structures at the outset, benefits can be obtained further “down the pipeline,” minimizing the resources necessary to disseminate the data to the appropriate parties. The structure of data is important. Shipping companies have long known how to stack boxes and crates of all sizes and shapes to maximize space efficiency inside trucks. DM may play a similar role to get the most out of existing technical capacity.

Technologies within the overall architectural family of XML bring a number of new methods to the table in terms of the way we think about data. Take for example a subsystem that consists of three distinct processes, each of which is intended to perform a particular transformation upon the inputted data. In order to get the data from one format to another, it has to first go through a three-step pipeline on the way to its destination. One of the core concepts of good architecture has always been simplicity; wherever there are three components that can be replaced by one component, it is frequently wise to do so. The trick is to have enough information so that you can determine which configuration is most appropriate for any given situation.

In the case of this collaborative data transformation system described, had the data at the first stage been expressed in terms of an XML structure, another XML technology known as XSLT (Extensible Stylesheet Language Transformations) could have been applied to transform the structure from its original form into its final form in one simple step. XSLT, a component that will be addressed in more detail in the component architecture chapter, essentially allows one form of XML document to be transformed into another. In the case of our example, eliminating the intermediate steps obviates the need to maintain three legacy systems and the associated code. It also simplifies the overall process, which makes it easier to document, understand, and manage. Finally, the XSLT transformations we allude to are also XML documents, and are subject to all of the advantages of any other XML document. This means that you can use XSLT to transform XML documents, or other XSLT structures, replacing volumes of spaghetti code and if/then statements with well-defined data structures that can perform common data manipulation tasks. These structures in turn can be applied to thousands of documents as easily as they are applied to one document.

Let us take a look at a solid example of how XSLT and other XML technologies can reduce the burden related to data transformation. Imagine a system that contains 15,000 XML documents, each of which relates to a particular employee within the organization. This data must be moved into another system for subsequent processing, but unfortunately the foreign system requires that the <employee> XML element be transcribed as <associate>. Using previous DM approaches, this potentially could have required modification of thousands of lines of legacy code (written by programmers who are no longer on staff) at an enormous cost of both time and money. Since XML is designed to operate on itself and to be “reflexive,” XSLT allows the trained analyst to quickly design a service that will do simple and even complex tag modifications. In other words, since XSLT allows data transformations to be expressed in terms of already well-understood XML documents, the transformation processes themselves can be managed the same as any other data. How many legacy systems out there allow data transformation business rules to be stored, modified, and managed like any other data structure? The answer is not many.

Preparation of Organizational Data for E-Business

Another way in which XML will greatly impact DM is that data will require better preparation. Anyone can put arbitrary XML tags around existing data, but in order to take advantage of the benefits of XML, the data has to be properly prepared so that it makes sense within its new XML “context.” Again we see the theme of XML requiring a shift in the way managers think about their data. It is worth mentioning that this particular impact of XML on DM is something that requires some work in order to be of benefit. Effective XML usage requires addressing data at a structural level as well as at the “tag” level. Most data management groups within organizations have not advanced past wrapping individual data items up in tags, and have not yet taken a serious look at the overall structure and how it fits into a larger organizational DM challenge.

Most of the chapter up until this point has been talking about the positive aspects of XML, and what it is good for. To avoid the pitfall of misapplication or over-application of technology that seems so common in information technology, it is important to temper enthusiasm with a bit of reality by discussing what XML is not, and where it should not be used.

What XML Is Not: XML Drawbacks and Limitations

Frequently, XML is sold as the “silver bullet” technology, when in fact it is not. While it is a vital addition to the tool chest of just about any organization, it is ultimately the judicious and appropriate application of the technology that solves problems rather than any “magic” that is attributed to the mere existence of it.

As with any data technology, effective use of XML requires fore-thought and planning. Architectural decisions that are made before the technology component of a project comes into play often determine the benefit that the organization will derive from the use of XML based technologies. Early adopters of XML, who were driven by the “management by magazine” phenomenon described earlier in the chapter, sometimes feel that the benefits of XML are limited. In these situations, the benefits of XML are in fact somewhat limited. In order to reap the full benefit of XML, metadata must be understood in terms of its proper definition—an application of data—and strategy must be put in place accordingly. When XML is used simply as a new data format and nothing else, it is not surprising that the gains associated with it are accordingly limited.

One of the concepts that will be addressed repeatedly throughout this book is that the abilities of XML should be used in conjunction with solid DM and engineering principles. Just as steel beams can be used to craft magnificent skyscrapers in the hands of a skilled architect, XML can do wonderful things when handled skillfully. If the architect does not spend sufficient time fleshing out the plans, however, nothing about the steel beam itself will prevent the building from falling.

There is more on what XML is and is not in Chapter 3. Please also see numerous entries under the CONTENT section at Fabian Pascal’s site at http://www.dbdebunk.com/.

Chapter Summary

The purpose of this chapter has basically been just to warm up for what is coming in the rest of the book. The basic definitions have been laid out, along with a discussion of what XML is, and what it is not. The points in this chapter are good to keep in mind throughout the rest of the book. The next chapter examines XML technologies as a way of building on the understanding gained in this chapter.

References

Aiken, P., Mattia, A., et al. (in press). Measuring data management’s maturity: An industry’s self-assessment. IEEE IT Computer.

Appleton, D. Law of the data jungle. Datamation. 1983;29:225–230.

Brackett, M.H., Data resource design and remodeling. Olympia, WA. 2002.

Finkelstein, C., Aiken, P.H. Building corporate portals using XML. New York: McGraw-Hill; 1998.

Parker, B., Chambless, L., et al. Data management capability maturity model. McLean, VA: MITRE; 1995.

*http://www.csupomona.edu/~hnriley/www/VonN.html

*ISO 11179

†http://www.iso.org/iso/en/CatalogueDetaiIPage.CatalogueDetail?CSNUMBER=31367&IC SI=35&ICS2=40&ICS3=.

‡The metadata company did this as a trademark: http://www.metadata.com/word.htm.