Chapter 11

Test Your Hypotheses

In every block of marble I see a statue as plain as though it stood before me, shaped and perfect in attitude and action. I have only to hew away the rough walls that imprison the lovely apparition to reveal it to the other eyes as mine see it.

—Michelangelo

This book has already presented you with lots of ideas to improve your marketing. As you’ve read through the chapters, you’ve probably recognized problems affecting your own company’s communications.

You may be tempted to make changes immediately without testing them. “Some of these things obviously need to change,” you’ll tell yourself. You may be right.

Or not.

If you take anything away from this book, I hope it’s an understanding of the importance of testing. The only way to know what really works is to gain experience from many tests. From seeing many results, you can discover human behavior patterns from similar test situations and continuously improve your conversion intuition.

This chapter is all about building a test plan and putting your good ideas into action. In Chapters 4 (“Create Hypotheses with the LIFT Model”) through 10 (“Optimize for Urgency”), you learned how to analyze your pages and create powerful hypotheses for testing. Now you need to put those ideas into a structure that you and your team can follow to run your tests.

Starting with a test plan can save you from being surprised by organizational or technical barriers or, worse, losing support after wasting time on directionless tests.

A test plan solidifies the goal for your test, outlines the structure of the experiment, locks down a valid control, and avoids common mistakes. In this chapter, you’ll learn how to build a test plan that you can follow to get dramatic conversion-rate and revenue lift.

Set Test Goals

The first decision in your test plan is to set the goal or goals for your test. The goals for each of your tests should match the overall conversion-rate optimization goals you identified and prioritized in Chapter 2, “What Is Conversion Optimization.” Individual tests may focus on one or more of your conversion-optimization goals, depending on where the test page is in your conversion funnel.

Types of Goals

You can set a wide variety of goals. The following lists of typical goals can help you brainstorm. Remember that your conversion-optimization goal should be as close to revenue as possible.

Lead-Generation Goals

If lead generation is your overall purpose, the goals might include any of the following specific user actions:

- Request a quote

- Request an in-person demo

- Request a phone call

- Request a situation analysis

- Request market information

- Book a meeting

- Ask a question

- Complete a contact form inquiry

- Download software

- Sign up for trial offer

- Request a printed brochure

- Request a catalog

- Download an online brochure

- Download a whitepaper

- Download an e-book

- Download a worksheet

- Download a case study

- Create an online quote

- View an overview video

- Take a quiz or poll

- Join a contest

- Fill out a needs-analysis questionnaire

- Use a needs-analysis wizard

- Use an interactive savings or ROI calculator

- Click to call

- Click to chat

- Make a phone call

- Register for a webinar

- Register for a conference

- Sign up for a newsletter

- Sign up for a blog subscription

- Sign up for an RSS feed subscription

E-Commerce Goals

For e-commerce, your goals might be based on any of these common metrics:

- E-commerce purchase conversion rate

- Average order value

- Return on ad spend

- Revenue per visitor

Or they might include any of the following user actions:

- Request a catalog

- Ask a question

- Click to call

- Click to chat

- Sign up for a newsletter

- Sign up for a blog subscription

- Sign up for an RSS feed subscription

- Add to cart

- Save to a wish list

- Sign up for auto-reordering

- Add accessories (up-sell)

Affiliate Marketing Goals

For affiliate marketing, your goals might include a specific revenue-per-visitor value, along with any of the following actions:

- Click through to an affiliate site

- Fill out a needs-analysis questionnaire

- Use a needs-analysis wizard

- Use an interactive savings or ROI calculator

- Create an online quote

- Sign up for a newsletter

- Sign up for a blog subscription

- Sign up for an RSS feed subscription

- Find a service provider

- Find savings in your area

Subscription Goals

Subscription goals might include any of the following actions and metrics:

- Sign up for a free trial subscription

- Upgrade subscription

- Paid subscription signups

- Average subscription signup value

There are many more goals you can track, depending on your business model. For each test, choose the one that drives the most revenue. Once you have identified the goals for your test, you’ll be ready to set up your conversion-optimization experiment and get testing.

Use Clickthroughs with Caution

One of the most common metrics used for judging marketing effectiveness is the clickthrough rate (CTR). Many people use it to measure their banner ads, paid search listings, and even internal promotions within their websites.

The problem is that when marketers use CTR as the primary success metric, it’s misleading. Measuring the effectiveness of your ads independently of the rest of your conversion funnel doesn’t tell you much about how well your ad performed. It only tells you that people click the ad. But they may have clicked the ad for many different reasons. Maybe they were just curious or confused by the ad. A click alone doesn’t measure your prospects’ intent to purchase.

Ben Louie at PlentyOfFish (www.pof.com) shared a humorous example that demonstrates how CTR measurement could lead to misleading results. He ran a banner-ad test that promoted Electronic Arts’s Need for Speed video game. The test included one of EA’s professionally designed banners with the EA logo, a concise value proposition, and a photo of one of the race cars from the game. We’ll call this the control ad. The other was, as Ben described it, “Some [bleep] ad I made in five minutes in Microsoft Paint,” which we’ll lovingly call the challenger.

Control

Challenger

Care to guess the result?

Based on the success measure of clickthrough rate, the challenger more than doubled the control. The control had a 0.049 percent CTR and the challenger got a 0.137 percent CTR. That’s a 180 percent CTR lift!
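If you want to check the lift arithmetic yourself, here is a minimal Python sketch. The function name `relative_lift` is my own illustration, not something from a testing tool; the two CTR figures are the ones from Ben’s test.

```python
def relative_lift(control_rate: float, challenger_rate: float) -> float:
    """Return the challenger's relative lift over the control as a fraction."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (challenger_rate - control_rate) / control_rate


# CTR figures (in percent) from the banner-ad test described above.
control_ctr = 0.049
challenger_ctr = 0.137

lift = relative_lift(control_ctr, challenger_ctr)
print(f"CTR lift: {lift:.0%}")  # prints "CTR lift: 180%"
```

The same calculation applies to any rate you compare between a control and a challenger, including the conversion and revenue metrics that matter more than CTR.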

But which ad generated higher sales and revenue? Maybe the challenger would also have produced more players and subscribers for the Need for Speed game. Or, maybe more of the challenger prospects clicked out of curiosity or looking for a laugh. Maybe they didn’t convert, and the control produced more revenue.

We don’t know. This test wasn’t tracked through to conversions or revenue.

Ben ran this test and publicized it in a somewhat tongue-in-cheek way, and he assures me that most of his tests are tracked through to more important conversion goals. But it’s a vivid example of the importance of choosing the right goal to track.

Measuring with CTR has done more harm than good for online advertisers. Whenever possible, your conversion-optimization goals should show purchase intent or, even better, represent real revenue.

Once you have decided on your most important conversion goal for your experiment, you have to translate that into a technical goal trigger that will be tracked by the testing tool.

Goal Triggers

The goal you track must be represented by a specific action the visitor takes on the website, like a button click or a visit to a page. Think about an action on the site that visitors can take only after they have completed the goal. Experiment goals can be calculations like average order value, but they still must be triggered by something simpler, such as the viewing of a purchase-confirmation page. In most cases, calculated goals should be secondary to direct revenue-generating conversion rates and are for more advanced test strategies.

In an e-commerce purchase, the goal trigger could be placed on the post-purchase thank-you or purchase-confirmation page. A quote request, contact form, or download will likely have a similar thank-you page.

In most testing tools, you have a technical action that triggers a conversion goal. The tool may give you a snippet of code called a goal script that triggers your conversion goal, or a setting within the tool interface that defines the user action. You want to trigger that goal at a point that can only occur after a visitor has completed the goal.

After requesting a travel guide from www.GoHawaii.com, for example, I was shown a thank-you page. If the Hawaii Tourism Authority had been running a test to increase their guide request conversion rates, they would identify this page as the conversion-goal trigger.
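To make the idea of a page-view goal trigger concrete, here is a rough Python sketch of the logic a testing tool might apply. Everything in it is hypothetical: the `GoalTracker` class, the thank-you path, and the visitor IDs are assumptions for illustration, not the API of any real testing tool.

```python
from collections import Counter


class GoalTracker:
    """Counts a conversion when a visitor views the goal (thank-you) page."""

    def __init__(self, goal_path: str) -> None:
        self.goal_path = goal_path
        self._converted: dict[str, str] = {}  # visitor_id -> variation

    def record_page_view(self, visitor_id: str, variation: str, path: str) -> bool:
        """Return True only when this page view triggers a new conversion."""
        if path != self.goal_path:
            return False  # not the goal page, so no conversion
        if visitor_id in self._converted:
            return False  # count each visitor at most once
        self._converted[visitor_id] = variation
        return True

    def conversions_by_variation(self) -> Counter:
        return Counter(self._converted.values())


# Hypothetical thank-you path and visitors, for illustration only.
tracker = GoalTracker(goal_path="/guide-request/thank-you")
tracker.record_page_view("visitor-1", "control", "/guide-request")
tracker.record_page_view("visitor-1", "control", "/guide-request/thank-you")
tracker.record_page_view("visitor-1", "control", "/guide-request/thank-you")  # page reload
tracker.record_page_view("visitor-2", "challenger", "/guide-request/thank-you")
print(tracker.conversions_by_variation())
```

Counting each visitor only once is a design choice in this sketch; it keeps a reloaded thank-you page from inflating the results.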

In some cases, you may want to recognize clicking a link as a goal rather than a post-action page view. For example, clicking a Like button or a click that leads to a page on a different website (or at least a different domain name) may require a click goal. Your developer can modify your technical goal setup so that it’s triggered when visitors click a link rather than when they visit a thank-you page.

If you have a testing tool that is installed site-wide (or not installed with JavaScript at all), you may be able to do your goal setting in the tool’s interface rather than modify the page code. Check with your testing tool vendor or conversion consultant for specific instructions for triggering conversion goals.

However your goals are set up technically, make sure you understand exactly how they’re triggered. Being certain about this technical setup will give you more confidence when analyzing the test results.

The One-Goal Goal

In Chapter 9, “Optimize for Distraction,” you learned that having too many options, messages, and goals distracts your prospects from understanding your primary message. Giving your prospects choice in how to respond may seem like a good thing to do. But the evidence is clear that more choice isn’t always better. Too many calls to action and goals reduce overall conversions.

When you’re setting your conversion goal, can you whittle the options down to just one? If you can, you’ll give yourself a much easier time in analyzing the results later. An unambiguous winning result is more fun than holding debates over relative goal value when a test is complete.

Maybe you have secondary goals that you also think are important to support your primary goal. That’s fine, but they don’t all have to be tracked as conversions for the sake of your optimization tests.

Remember what you learned in Chapter 2 about the difference between conversion-optimization goals and web-analytics goals: if your supporting goals and micro-conversions are truly supporting your main goal, they’ll be captured in the results of your test when the primary goal’s conversion rate is lifted. They don’t need to be tracked as test goals.

For example, WiderFunnel had a client whose primary goal on their retail catalog website was e-commerce sales. They also tracked “add to cart” and “time on site” as key performance indicators in their web-analytics tool. Initially, they wanted to track those supporting metrics as conversion-optimization goals within our tests as well.

The question we asked was, “How would the outcome change if one of these supporting goals outperformed for a challenger where the primary goal under-performed?” If a challenger variation were to double the add-to-cart conversion rate, but final sales were cut in half, that wouldn’t be considered a win, would it? The client agreed to track only sales for the tests, and we also tracked the micro-conversions as secondary goals outside of the testing tool. The client ended up with both a significant lift in sales and an understanding of how micro-conversion behavior was affected.

You shouldn’t include supporting goals for your conversion optimization. Whenever possible, focus your tests on the single primary goal for your test pages. If you do have more than one revenue-related end goal, you’ll need to set up multi-goal conversion tracking.

Multi-Goal Tracking

In many businesses, sales don’t tidily fit into a single conversion scenario. You may have several types of conversions on your website that are tied to revenue. You may even have offline sales channels to consider. Multi-goal tracking allows you to measure all the revenue-driving conversions and optimize for overall revenue.

For each of your goals, online and offline, you need to assign a value. You can use the relative goal values you set in Chapter 2 for this.

Phone Call Conversions

At WiderFunnel, we often set up multi-channel conversion tracking for companies that drive their online marketing to offline conversions. By tracking phone calls and online leads independently, you’ll find some challengers that attract your prospects to different channels and can maximize overall lead value.

Phone call conversions produce some of the most valuable leads for companies that use a lead-generation strategy. You may find, as many of WiderFunnel’s clients have, that your sales conversion rate and average order value are higher for inbound phone calls than for online form leads.

To track phone calls, you need to assign unique phone numbers for each test variation. Several companies provide tracking phone numbers, such as Ifbyphone (www.ifbyphone.com), Mongoose Metrics (www.mongoosemetrics.com), and ClickPath (www.clickpath.com). The unique numbers should be added to each page and updated throughout the website for each test participant.

Remember to also use a new unique phone number for the control page. If you don’t, you’ll inflate conversions for the control; that’s because some callers will have written the old number down, will have it cached in their browser from before the test began, or will be part of a test-exclusion segment.

In an ideal world, your phone call conversions would be counted only when sales occur. But, as you know, this isn’t an ideal world. This revenue tracking by test variation is often challenging for organizations whose inbound call center’s customer relationship management (CRM) system isn’t able to attach the phone number to the lead. If manual entry of phone numbers would be required in order to track revenue, don’t track it. In my experience, the inaccuracy of manual phone number entry is much greater than that of estimating phone lead value.

If you can’t track phone calls to revenue, you may want to count only phone calls over a certain call length as conversions. Then you can estimate the value of those leads based on your phone-call-to-sale conversion rate and average order value during the test period.
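Here is a minimal sketch of that estimate in Python. The function name, the 60-second threshold, and all of the numbers are assumptions I’ve made up for illustration; substitute the call length, call-to-sale rate, and average order value from your own business.

```python
def estimate_phone_lead_value(
    call_durations_sec: list[int],
    min_call_sec: int,
    call_to_sale_rate: float,
    average_order_value: float,
) -> tuple[int, float]:
    """Return (qualifying calls, estimated total lead value) for one variation."""
    # Count only calls longer than the minimum length as conversions.
    qualifying = sum(1 for d in call_durations_sec if d > min_call_sec)
    # Each qualifying call is worth its chance of becoming a sale
    # multiplied by the average order value.
    value_per_lead = call_to_sale_rate * average_order_value
    return qualifying, qualifying * value_per_lead


# Illustrative numbers only.
calls = [15, 95, 240, 30, 600, 120]  # call lengths in seconds
leads, value = estimate_phone_lead_value(
    calls, min_call_sec=60, call_to_sale_rate=0.25, average_order_value=400.0
)
print(leads, value)  # prints "4 400.0"
```

Run the same calculation for each variation’s tracking number, and you have an estimated phone lead value you can compare across the control and challengers.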

There are added complications to multi-channel conversion testing that you should be aware of. Your testing tool probably won’t have built-in integration with the phone-tracking providers. Online and offline conversions will need to be exported separately and combined in a spreadsheet. Then the various conversion points will need to be normalized, combined, and calculated for statistical significance. You may find it reassuring to bring in a consulting firm with experience in this type of multi-channel testing to help so you can trust the results.
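To give you a feel for the significance calculation, here is a sketch of one common approach, a two-proportion z-test, in Python. It’s my illustration rather than a prescribed method: it assumes you’ve already combined each variation’s online and phone conversions into a single count, that no visitor is counted twice, and that all conversions are treated as equal in value. The numbers are invented.

```python
import math


def two_proportion_z_test(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) comparing B against A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the assumption of no real difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented example: online form leads plus qualifying phone calls.
control_conversions = 80 + 40      # online + phone
challenger_conversions = 90 + 70   # online + phone
z, p = two_proportion_z_test(control_conversions, 5000, challenger_conversions, 5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A smaller p-value means the difference is less likely to be a fluke. If your goals carry different values, this simple count-based test won’t capture that, which is one reason experienced help can be worthwhile.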

Multiple Online Conversions

To track multiple online goals, your testing tool needs to allow you to track goals independently. Ideally, it should let you set goal values; but if it doesn’t, you can calculate the weighted values in a spreadsheet once the test is complete.
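The spreadsheet calculation is simple enough to sketch in a few lines of Python. The goal names, goal values, and counts below are hypothetical; use the relative goal values you set for your own business.

```python
def weighted_value_per_visitor(
    visitors: int,
    goal_counts: dict[str, int],
    goal_values: dict[str, float],
) -> float:
    """Return the total weighted goal value divided by visitors for one variation."""
    total_value = sum(count * goal_values[goal] for goal, count in goal_counts.items())
    return total_value / visitors


# Hypothetical relative goal values.
goal_values = {"quote_request": 50.0, "whitepaper_download": 5.0}

control = weighted_value_per_visitor(
    1000, {"quote_request": 20, "whitepaper_download": 100}, goal_values
)
challenger = weighted_value_per_visitor(
    1000, {"quote_request": 30, "whitepaper_download": 60}, goal_values
)
print(control, challenger)  # prints "1.5 1.8"
```

In this made-up example, the challenger produces fewer whitepaper downloads but more quote requests, and it wins on weighted value per visitor because quote requests are worth more.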

Be sure to set up revenue tracking for e-commerce sites as well. Increasing average order value can be just as effective as increasing the sales conversion rate, and you’ll want to be able to include that in your results analysis.

Keep It Simple, Smartypants

Even though I’ve just covered the ideal-world scenarios for tracking accurate conversions to revenue, I urge you to practice the KISS principle when getting started with goal setting. I don’t want you to fall into the trap we’ve helped many companies out of—being frozen by an over-concern for goal accuracy. They’re so concerned with precision that they stop everything until they’re 100 percent convinced that their test results would stand up to a doctoral thesis committee. Determining relative goal values down to the fifth decimal point isn’t necessary or productive.

Start with what you can get. If it takes you more than a week to determine your goal values, stop and simplify.

Remember, all tests have inherent inaccuracies, but any test is more accurate than running on gut feeling. A strange phenomenon happens when you introduce the concept of testing. Often, people become fixated on the numbers and statistical certainty and forget that any accuracy level is better than not testing.

In Chapter 12, “Analyze Your Test Results,” you’ll learn how to evaluate multiple weighted conversion goals, including revenue metrics.

Along with selecting your goals, you’ll need to determine the type of experiment to run. But first it will help to understand how to choose your test page.

Choose the Test Area

Test-page opportunities can take several forms and may not look like pages at all. What I call a test page may be a single page, a site-wide page template, a repeating section that appears on many pages, a microsite, or a series of pages (that is, a defined conversion funnel or split-path test). Each of these types of opportunities can be tested and has different characteristics to consider.

Templated Pages

Template-driven pages make up most website content pages. You’ll know the page you want to test is template-driven if it looks similar to other pages on your website. It may be updated through a content-management system (CMS) or e-commerce platform.

At WiderFunnel, we often test layouts at the page-template level to find the best layout and site-wide content. Testing template pages typically involves hypotheses related to eyeflow, layout clarity, and overall company value proposition. These types of tests tend not to be focused on testing the value proposition of individual products.

We love these types of tests and often see some of the most rewarding results with them, because they reach most of the website visitors at once. There are several reasons to consider testing site-wide page templates:

- Layout tests complete much more quickly because most visitors to the site see the template pages and are entered into the experiment.

- Visitors’ experience on the site is more consistent if all pages on the same template have the same layout variation.

- The learning from the aggregate templated pages is more applicable to the entire website than trying to apply learning from an individual page to all other similar pages.

- You can test more dramatic eyeflow and layout approaches than you can if you test individual pages within the website, because you don’t have to worry about inconsistency across the site if visitors see a different variation for each page.

- Testing your site templates lets you redesign your site using an evolutionary site redesign (ESR) approach, where the site design evolves based on tested changes that improve business results, as opposed to a traditional abrupt site-redesign project.

The downside of template tests is that it’s more difficult to vary individual product messaging. When your test includes dozens, hundreds, or even thousands of pages, you can’t create unique content variations for all of them.

Individual Static Test Pages

On the other hand, if you want to test content that is unique to individual high-traffic pages, you have a good reason to test on a single page rather than a template. For example, on a product or service page, you may want to find the features and images that work best, which can only be tested at the individual page level.

Single, static, stand-alone pages are the most straightforward test pages. These are usually marketing landing pages that sit outside of your website’s content-management system (CMS). The Hair Club case study in Chapter 9 is an example of a static campaign landing-page test.

When testing individual landing pages, you can test more specific content related to that product, service, or topic. Your test design is less constrained to fit into a corporate website or be integrated with dynamic content.

The downside of testing on a single landing page is that your traffic level will usually be lower than with a site-wide test. In that case, your landing page test will need to run longer than website tests to complete with statistical significance.

Individual Pages with Dynamic Content

A hybrid type of testable page can appear when your individual page includes dynamic content or has database interactions. Dynamic elements can include server-side form interactions, product and price updates from a database, social commenting sections, and dependencies on a CMS.

Individual pages with dynamic content may still give you the flexibility to test dramatically different design, layout, and content than you can within a website structure. The technical setup may be a little more complicated, and you’ll need a developer involved to connect the test sections with database content.

Site-Wide Section Tests

You can also test elements within your website that affect more than just a single template.

Stylesheet Test

Modern web design follows the principle of separating content from design. This structure allows you to create content without worrying about the design becoming outdated. The content is independent of the look and feel and, theoretically, can be easily updated as the design is updated in the future.

Cascading Style Sheets (CSS) is the web equivalent of the stylesheets you may be familiar with from Microsoft Word or other document publishing software. With CSS, the web developer identifies the types of content on the site and then defines the attributes of each of those types.

For example, each piece of body copy on the site is labeled as such. Then, when the designer decides that the body copy should change from 10px Verdana font to 12px Helvetica, only one CSS file needs to change to alter the look of every piece of body copy site-wide.

Doing a stylesheet swap test is an easy way to test design and layout elements throughout the site. You can change anything that is defined by your CSS file, from type size and headline treatment to column placement and page color.

Many websites are still not fully CSS-driven, however. If yours isn’t, a CSS test may not give you much flexibility. You’ll need to target specific site-wide areas to test the entire site experience.

Navigation and Header Test

Your site’s navigation structure is an important part of your information architecture. It can help or hinder your prospects’ ability to find what they’re looking for. Are the navigation labels clear? Do they use words that are relevant to your prospects? Rather than debate with your colleagues over semantics, You Should Test That!

Navigation and header areas are often overlooked opportunities for value-proposition positioning as well. You may find that assurance messages in the header remind prospects of your key messages when they need it. Those messages can improve your conversion rates, even if your visitors don’t click them often.

Persistent Call-to-Action Areas

Do you have consistent areas in your website template that include a call to action? It may be promoting quote requests, newsletter signups, whitepaper or e-book downloads, or social media connections. Many websites do, especially for sites that generate leads.

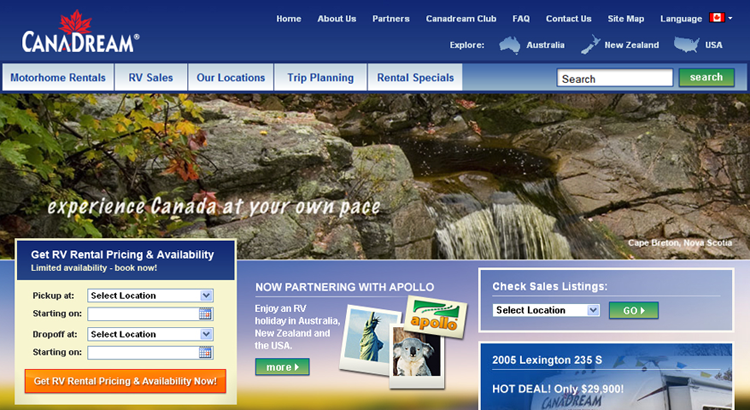

I’ve coined the term persistent call-to-action areas (PCTAs) for these. Testing PCTAs can be a powerful way to target nearly convinced prospects and move them to act. These site-wide sections can produce significant test results. The following case study shows a PCTA test that doubled conversions for one company.

- In-depth web analytics audit of the entire www.CanaDream.com website to understand and evaluate business goals and key performance indicators (KPIs), website metrics, visitor experience, and purchase flow

- Review of identified conversion issues and prioritization of pages to test

- Analysis of priority high-traffic pages using the LIFT Model to analyze pages along the six conversion dimensions:

- Create scientific marketing hypotheses

- Wireframing of all test variations

- Design and copywriting

- HTML/CSS build and quality assurance

- Technical installation instructions

- Test round launch

- Results monitoring, analysis, and recommendations

- Increased the prominence of the headline

- Aligned input fields to ease eyeflow

- Changed the shape of the disrupting angled background area

- Increased the prominence of the call-to-action button

- Changed the call-to-action button copy to match the form headline

Choose the Test Type

I’m often asked, “For conversion-rate optimization, should I run an A/B/n test or a multivariate test?” The short answer is, “Yes!”

Of course, I know the real question being asked is, “Which method should I emphasize?” There is an ongoing debate between proponents of multivariate (or multivariable or MVT) testing and A/B/n testing over which method gets better results.

Consider Traffic Volume

The monthly traffic volume on your test page will be a big part of the test type decision. Lower-traffic sites need to consider more carefully how many challengers are run in each test round.

I’m often asked how much traffic you need to run tests. Although the testing duration needed to achieve statistical significance is notoriously unpredictable, as a general rule of thumb you’ll need between 100 and 400 conversions per challenger. We’ve had tests complete with fewer, and some that have needed 10 times more, though.
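You can turn that rule of thumb into a rough duration estimate. The Python sketch below is only a planning aid under assumptions I’ve added: traffic is split evenly, every variation (including the control) needs the same number of conversions, and your baseline conversion rate holds during the test. The function name and numbers are illustrative.

```python
def estimate_test_duration_months(
    monthly_visitors: int,
    baseline_conversion_rate: float,
    num_variations: int,
    conversions_per_variation: int,
) -> float:
    """Return a rough number of months needed to collect the target conversions."""
    # Visitors each variation needs in order to reach the conversion target.
    visitors_per_variation = conversions_per_variation / baseline_conversion_rate
    total_visitors = visitors_per_variation * num_variations
    return total_visitors / monthly_visitors


# Illustrative page: 20,000 visitors a month, 2 percent conversion rate,
# and a control plus two challengers.
low = estimate_test_duration_months(20_000, 0.02, 3, 100)
high = estimate_test_duration_months(20_000, 0.02, 3, 400)
print(f"Roughly {low:.2f} to {high:.2f} months")
```

Treat the output as a range for planning, not a promise. Real tests finish when the results are statistically significant, which may be sooner or much later.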

Multivariate Testing

Some people, especially some software vendors, believe conversion-optimization testing and multivariate testing to be two ways of saying the same thing. In their view, the technology and statistics behind the tests provide the value, and therefore the more complex multivariate testing should deliver the best results. I disagree, based on WiderFunnel’s results: multivariate testing does play a role in your conversion-optimization strategy, but it shouldn’t play the leading part.

In Chapter 2, you saw how testing works by redirecting prospects to different challenger pages or swapping a single section for each variation. The difference between A/B/n testing and MVT is that MVT swaps content within multiple sections on the same page and compares all the possible combinations. With MVT, each variable is tested against each of the other variables.

In the case of a test setup with three sections and three variables in each, adding one more test variable in just one of the sections would increase the test combinations from 27 to 36:

3 × 3 × 4 = 36
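A short Python sketch shows how quickly the combinations multiply in a full-factorial setup. The function name and the variation labels are my own, chosen only for illustration.

```python
import itertools
import math


def count_combinations(variations_per_section: list[int]) -> int:
    """Return the number of full-factorial combinations for an MVT setup."""
    return math.prod(variations_per_section)


print(count_combinations([3, 3, 3]))  # prints 27
print(count_combinations([3, 3, 4]))  # prints 36

# Enumerating the combinations confirms the count.
sections = [
    ["headline A", "headline B", "headline C"],
    ["image A", "image B", "image C"],
    ["button A", "button B", "button C", "button D"],
]
all_combinations = list(itertools.product(*sections))
print(len(all_combinations))  # prints 36
```

Every combination needs its own share of traffic, so one extra variation in a single section adds nine more pages to fill with visitors.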

There are distinct advantages to MVT, namely the abilities to do the following:

- Easily isolate many small page elements and understand their individual effects on conversion rate

- Measure interaction effects between independent elements to find compound effects

- Follow a more conservative path of incremental conversion-rate improvement

- Facilitate interesting statistical analysis of interaction effects

But multivariate testing also has downsides:

- MVT usually requires many more variable combinations to be run than A/B/n.

- MVT requires more traffic to reach statistical significance than A/B/n.

- Major layout changes aren’t possible.

- All variations within each swapbox area must make sense together, which restricts the marketer’s freedom to try new positioning approaches.

- MVT either gives approximated results (if using Taguchi or a similar test design) or requires exponentially more traffic (if using full factorial) than A/B/n.

A/B/n Testing

A/B/n testing is much less dependent on advanced technology. It often doesn’t take full advantage of a testing tool’s capabilities and is therefore much less interesting for technology vendors. After all, there may not be a need for high licensing fees on testing tools if you can get the same results without spending much on the technology.

Advantages of A/B/n testing are as follows:

- The conversion strategist isn’t constrained by which areas of the page to vary.

- You can test more dramatic layout, design, page-consolidation, and value-proposition variations.

- Advanced analytics can be installed and evaluated for each variation (examples include click heatmaps, phone call tracking, analytics integration, and so on).

- Test rounds usually complete more quickly than with MVT.

- Often, you can achieve more dramatic conversion-rate lift results.

- Individual elements and interaction effects can still be isolated for learning.

Here are the disadvantages of A/B/n testing:

- The test rounds must be planned carefully if a goal is to measure interaction effects between isolated elements.

- Any Taguchi or design-of-experiment setups must be planned manually.

We take a software-agnostic approach at WiderFunnel, so we don’t need to promote software features unless they will produce better results. We find that we can get better results for our clients by not constraining ourselves to the features of a particular testing tool. This approach gives us the freedom to recommend the type of test plan that will get the best results.

My Recommendation: Emphasize A/B/n Testing

The comfort MVT gives is enticing. But I’m a businessperson, and I recognize the significant opportunity cost of running the prolonged tests that often are unavoidable with MVT.

At WiderFunnel, we run one multivariate test for every 8 to 10 A/B/n test rounds. In most cases, our first test rounds on a web page or conversion funnel start with A/B/n testing of the major elements (such as value-proposition emphasis, page layout, copy length, and eyeflow manipulation). In a third or fourth test round, we may want to investigate interaction effects using MVT, usually only on pages with more than 60,000–100,000 unique monthly visitors.

Alternative Path Tests

For defined conversion funnels, you may want to test different multi-page paths rather than single pages. This split-path (or alternative flow) testing is a type of A/B/n test where, instead of just altering a single page, you change multiple sequential pages. Examples include multi-step checkout paths, multi-page forms, and product recommendation wizards.

Alternative path testing allows you to maintain consistency of design elements throughout the path and change the number or sequence of steps. In a simple example, if you wanted to test the button color in the cart as part of a checkout-path test, you would change the buttons on all pages in the checkout to match. Testing the pages individually, by contrast, would produce inconsistency throughout the path that could decrease sales and outweigh any improvement from the change.

Once you’ve chosen your test target page and type of test, you’re ready to create your challenger variations.

Isolate for Insights

There are three overarching purposes for conversion optimization: lift, learning, and insights.

The first purpose, lift, is to gain conversion-rate and revenue increase. This is why most companies are attracted to testing. They’ve seen the case studies about the great test results other companies get, and they want those results, too! If I could give you a 10 percent, 20 percent, 50 percent or higher conversion-rate lift, you’d want that, right?

The second purpose, learning, is where you understand which changes have the biggest positive effect. This is the reason you plan tests with single variable isolations. Some people aren’t initially interested in this part. Many people think that a page is optimized after you’ve tested it once and seen a good conversion-rate lift. They don’t understand how important the ongoing process of learning and iterating is to achieving long-term results. Everything a company learns can be built on and leveraged in other areas.

The third purpose, insights, is where you plan your tests so that what you learn reveals something about your customers and prospects. These insights happen when you discover the “why” behind the results you observe. When you see a pattern that implies a motivational driver or usability principle, you can use it to create many more test hypotheses. We’ll talk about this more in Chapter 13, “Strategic Marketing Optimization.”

The learning and insights outcomes are dependent on test isolations. Isolations allow you to learn which changes are influencing the test results.

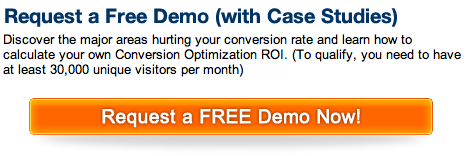

For example, on the WiderFunnel blog, we’ve tested using a call to action at the bottom of each blog post.

We could run a test on this section to isolate whether this is the best offer. One alternative could be to test changing the offer message to say “Request a Free Demo (with Case Studies).”

That test variable would isolate which of those two offer wording options is the most effective. It’s not a pure isolation, because there are several hypotheses within this test. The words “Request” and “Get” differ between the two options, for example, along with the “(with Case Studies)” add-on. Even so, it could still point toward a potential insight about the type of wording that works best for our future clients.

Or, we could try matching the headline color with the button by changing the headline to orange. That would be an example of a pure isolation of a single color variable. A headline color isolation like that isn’t likely to deliver a profound customer insight, though. Isolations that give you insights into the best messaging and user interfaces are more useful.

At WiderFunnel, we strategically build isolations into the tests that aim to generate customer insights. In the case study shown in Chapter 5, “Optimize Your Value Proposition,” for the Electronic Arts The Sims 3 team, for example, the isolations of offer positioning pointed to valuable insights about the types of offers that work for the game’s players. The Sims 3 team was able to use those insights in their other marketing activities.

How to Get Great Results

Deciding to test your marketing decisions is one small step toward achieving great conversion-optimization wins. The rest of the path is littered with obstacles and pitfalls. Here are six tips that will give you a better chance of achieving the results you need.

Test Boldly

It’s always fun to share in our clients’ excitement when they first start testing. We’ll spend a few weeks with them planning and creating their first test, and then, when we’re ready to launch, the anticipation is palpable! When we pull the trigger and launch the test, most of them immediately check the results report.

But there’s nothing there yet. Results take time.

Fortunately, we plan for tests to complete within two to three weeks whenever possible. Sometimes they take longer for low-traffic sites, but our goal is to run fast test iterations.

One of the most common complaints I hear from those who come to WiderFunnel after testing on their own is that their tests take too long to complete. They begin with that same excitement when launching a test, but it slowly turns to frustration as their tests seem to limp along endlessly.

One of the underlying problems with many tests is that they only vary minutiae on the page. Many people falsely believe that conversion optimization is used only to test small tweaks to landing pages. This leads to frustration as they test minor variations of words, colors, and button design and expect dramatic results. By testing insignificant challengers, you’ll be in for a long test that may never complete with statistical significance. The case studies throughout this book show examples of the types of bold test challengers that can produce significant conversion-rate improvements.
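To see why minor tweaks lead to long tests, it can help to estimate how many visitors each variation needs before a given lift becomes detectable. The sketch below is only an illustration, not the calculation any particular testing tool performs. It assumes a standard two-sided, two-proportion z-test approximation, and the function name, the 80 percent power setting, and the sample baseline rate are all hypothetical choices made for this example.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift,
                           confidence=0.95, power=0.80):
    """Approximate visitors needed in EACH variation to detect a lift.

    Uses the common two-proportion z-test approximation (an assumption
    of this sketch; your testing tool may calculate this differently).
    """
    p1 = baseline_rate                          # control conversion rate
    p2 = baseline_rate * (1 + relative_lift)    # challenger conversion rate
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical page converting at 3 percent:
small_tweak = visitors_per_variation(0.03, 0.05)   # hoping for a 5% lift
bold_change = visitors_per_variation(0.03, 0.30)   # hoping for a 30% lift
print(small_tweak, bold_change)
```

Under these assumptions, the required sample grows roughly with the inverse square of the lift you are trying to detect, so a challenger that can only plausibly move the needle a few percent needs many times more traffic than a bold one.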

So, as you analyze your pages, think about bold changes you could make. When you come up with something entirely different, You Should Test That!

Test Fewer Variations

Bloated tests are a common problem that causes tests to run too long. The time your tests need to be in market depends directly on the amount of traffic and conversions each challenger variation records. Because your traffic is split among all the variations, increasing the number of challengers from four to eight roughly doubles the time your test will take to complete.

It’s better to run two tests with four challengers each than one test with eight. Each test round will complete more quickly, and the second test can take into account what was learned from the first.
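The arithmetic behind that trade-off can be sketched in a few lines. This is a simplified model with assumptions of its own: traffic is split evenly across the control and every challenger, each variation needs the same number of visitors, and the function name and the sample figures are hypothetical.

```python
from math import ceil


def estimated_duration_days(visitors_per_variation, challengers,
                            daily_visitors):
    """Rough days needed for every variation to reach its sample size.

    Assumes an even traffic split across the control plus all
    challengers (a simplification for illustration).
    """
    variations = challengers + 1  # the control counts as a variation too
    return ceil(visitors_per_variation * variations / daily_visitors)


# Hypothetical page: 2,000 visitors a day, 10,000 visitors needed per variation.
print(estimated_duration_days(10_000, 4, 2_000))  # four challengers
print(estimated_duration_days(10_000, 8, 2_000))  # eight challengers
```

Because the control also takes a share of the traffic, this model puts the eight-challenger test at a little under twice the duration of the four-challenger test. The practical point is the same: every added challenger stretches the test.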

Minimizing the number of challengers you run isn’t as easy as it appears at first. It takes discipline to stick with your plan of testing only a certain number. There are so many good ideas to try!

Avoid Committee Testing

Committee-run testing is notorious for producing diluted ideas and bloated test plans. When everyone has to agree on all variations being tested, many of the novel ideas are weeded out because they don’t “make sense.” Often the most interesting insights come from winning challengers that were riskier or that few predicted would win.

Even if a group collaborates to generate suggestions, leadership is needed to test bold ideas.

Win with Confidence

One tempting solution to complete tests more quickly is to accept a lower confidence level. You may be comfortable accepting an 80 or 90 percent statistical confidence level or, like WiderFunnel, aim for a 95 percent confidence level with every test result.

Whatever you decide as a business, don’t be tempted to read too much into the early results of a test before it hits your confidence threshold. Small numbers can seem more important than they really are. They can easily be based on pure random chance and statistical clumping. Statistics are misleading when misread or interpreted too early. Understand what your testing tool’s reports mean before you interpret results.

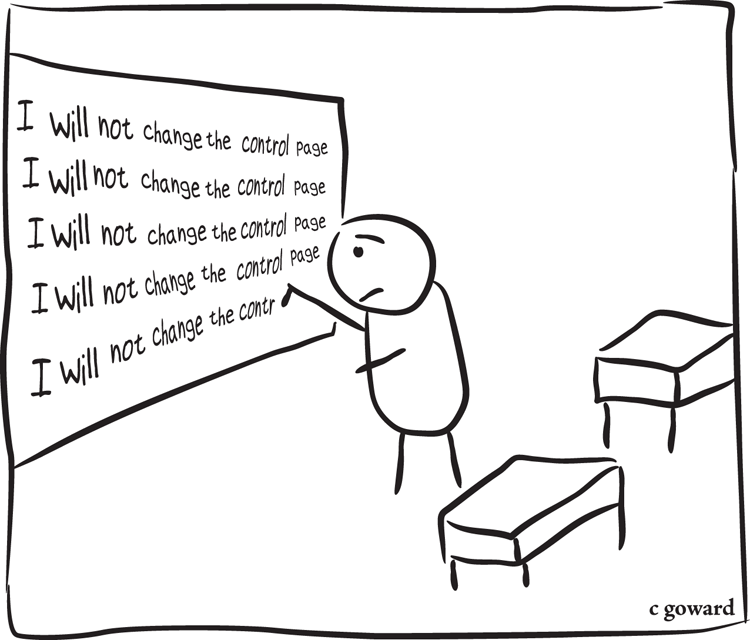
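A small calculation shows how misleading early numbers can be. The sketch below estimates the statistical confidence that a challenger beats the control using a one-sided, pooled two-proportion z-test. That method is an assumption chosen for illustration; testing tools differ in how they compute and report confidence, and the function name and visitor counts here are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def confidence_challenger_wins(control_visitors, control_conversions,
                               challenger_visitors, challenger_conversions):
    """One-sided confidence (0 to 1) that the challenger outperforms control.

    Pooled two-proportion z-test; an illustrative assumption, not
    necessarily the statistic your testing tool reports.
    """
    rate_control = control_conversions / control_visitors
    rate_challenger = challenger_conversions / challenger_visitors
    pooled = ((control_conversions + challenger_conversions)
              / (control_visitors + challenger_visitors))
    std_error = sqrt(pooled * (1 - pooled)
                     * (1 / control_visitors + 1 / challenger_visitors))
    if std_error == 0:
        return 0.5  # no conversions (or all conversions): no evidence either way
    z_score = (rate_challenger - rate_control) / std_error
    return NormalDist().cdf(z_score)


# Early in a test: the challenger has "doubled" conversions, 6 versus 3.
early = confidence_challenger_wins(100, 3, 100, 6)

# Later: a more modest 20 percent lift, but on far more traffic.
later = confidence_challenger_wins(10_000, 300, 10_000, 360)

print(f"early: {early:.1%}  later: {later:.1%}")
```

In this hypothetical example, the dramatic-looking early result falls short of a 95 percent threshold, while the smaller lift measured on much more traffic clears it.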

Maintain Your Control

Repeat after me: “I will not change my control page—not even a little bit—until the test is complete.”

This is a fundamental principle of controlled, scientific testing. Any change to the control or challenger variations during the test invalidates the test result. It becomes useless, pointless, worthless. (As you may be able to tell, I’m passionate about this point. It’s a common temptation for companies that are new to testing.)

Just don’t change it.

The exception to this rule is for dynamic pages or changing offers. If you’re testing on a template with changing content, the variable content on all challengers should change to match. That’s okay, as long as the components you’re testing remain consistent, which in this case is probably the layout template or value-proposition messaging.

Use Testing Expertise

Many people start their search for conversion optimization by looking for a tool first. This is a mistake of the “tail wagging the dog” variety. The tool should follow the strategy. Begin by bringing in the expertise you need to develop a strategy, and then find the tools that best support it.

Another common mistake people make when starting with testing is to use their testing software vendor to develop test plans. Software vendors generally provide excellent service for the technical aspects of setting up tests, but not the best testing strategy. Every company has its primary expertise area. Software developers are best at developing software, and services agencies provide the best strategy and test execution.

Depending on your situation, bringing in the expertise of a specialist conversion-optimization agency could be a good option for you. There are many reasons you may want to consider hiring a conversion-optimization agency. Here are a few that I often hear:

- A specialist conversion-optimization agency brings a proven process for developing powerful hypotheses that get results more quickly.

- The agency has trained and experienced team members with all the important skill sets you need: strategists, designers, copywriters, developers, and account managers.

- Dedicated resources at an agency can get tests running faster than doing it on your own or with constrained internal resources.

- You get access to the learning from tests on many other websites.

- You’ll avoid the common mistakes and get quick early wins to cement organizational support.

- The expert perspective from outside the company can help persuade the website’s stakeholders to test new ideas.

- You can be more confident that you’re interpreting the results of the tests properly and maximizing your marketing insights.

- An arm’s-length agency can give you unbiased advice about the right software to select.

The agency option isn’t for everyone, and I recommend you start with an ROI calculation to help with the decision. If those benefits would be helpful for you, I humbly suggest that you consider WiderFunnel as an option to help improve your conversion rates.

Continue reading to Chapter 12, “Analyze Your Test Results,” to learn how to glean insights from the results of your tests.