C H A P T E R 26

![]()

Developing for Performance

Developing for Performance in packetC

In developing data-plane network applications for modern high-speed networks, there exists a trade-off between the limits of available processing power and the requirements for increased analytics within applications. With packetC, this battle rages on and must remain at the heart of any application design. Network applications must ensure low latency and minimization of processing in every algorithm possible in order to maximize the quantity of transactions that may be evaluated. Evaluating an application not only for valid logic but also for efficiency must become core to every packetC development project.

In many cases, the computation cannot be avoided; however, the time or location where the processing needs to occur can often be changed. For example, portions of an algorithm can be computed in advance and used as a lookup table trading off real-time processor budget in return for an increased amount of non-real-time computation and memory storage. Furthermore, many network systems need to collect metadata about packets and perform analytics where the metadata collection may occur in real time, but the analytics occur in a separate computational system in parallel to processor of other real-time transactions. In each of these examples, the processing paradigm must be elevated to a system level issue.

This concept is not new and, actually, early computer systems were so constrained in their processing that lookup tables became one of the only ways that complex computations could be evaluated. In fact, many math books still include logarithm tables which are an equivalent form of the representation of processing trade-off for memory. Pages of tables are present with pre-computed logarithms for easy reference since computing them can be complex. This has been carried out for over a hundred years with sine, cosine and many other complex mathematical algorithms. Consider the difference in computing an arc-tangent of an integer without a floating point processor versus having a lookup table. This simple concept carried forward into a high performance packetC application can lead to substantial performance improvements.

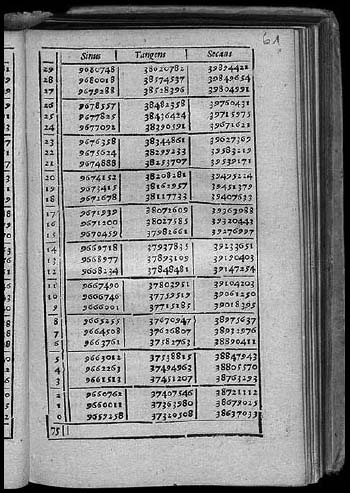

While each system that implements packetC will differ in the quantity of packet processing available and the number of methods for interacting with control-plane systems, the general methodology persists in prioritizing computing resources as the primary resource to protect, such that packets processed per second may be maximized. This leads to a general philosophy that memory storage is cheap and instructions are expensive. Furthermore, the adage that one should not do something in real time that can be done at some other time also persists. Throughout this section, several examples will be used to demonstrate these concepts. In a world of high-level scripting languages focusing on developer simplicity to describe a scenario being prioritized over performance, this may feel like a bit of a step back in time. Albeit an old principle (see trigonometric table in Figure 26-1 from Matthias Bernegger’s Manuale Mathematicum published in 1619), it is not one that is gone from the modern programmer worried about the increase in Internet data rates growing faster than Moore’s Law’s ability to keep up with ever-increasing complexity of processing expected to be applied against that data.

Figure 26-1. Sine, tangent, and secant lookup table.

Counting Bits Set

The following variations on the first example take a classic programming problem that has been used to demonstrate alternatives in performance tuning in several texts. The problem is simple: count the number of bits set to one within a 32-bit integer. While the concept is simple, the impacts on the processing cycles required to evaluate vary greatly based upon the solution path taken. The classic examples are evaluated and expanded with packetC in mind to pose some additional areas to think about when developing similarly complex processing problems.

Simplest Computation Scenario

In the first variation shown below, the simplest algorithm is used where each bit of the input value is evaluated through the use of a bitwise and. When a bit is set to 1, the counter is incremented. A couple of simple optimizations have been implemented where evaluation does not proceed through all 32 bits if the shifted value of the input value has upper bits all equal to zero. In addition, compound assignment operators are used to increment as well as shift should those have optimizations in a given implementation.

int countOnes (int inputValue)

{

int counter; // Used to count the number of bits set to one in inputValue

for (counter = 0; inputValue; inputValue >>= 1)

{

counter += (inputValue & 1);

}

return counter;

}

Unfortunately, the approach above will require one iteration per bit for values with a high-order bit set. This would lead to 32 instructions per integer in addition to function return overhead.

Improving the Computational Algorithm

While the previous implementation was quite straightforward and utilizes a simple iteration of shifts and additions, it required up to 32 operations for a evaluating a 32-bit value. The following example falls out of a number of variations derived from an original Rich Schroeppel algorithm that has been refined by several others over the years. This example uses 64-bit integers, longs in packetC, to evaluate the 32-bit input value in just 15 operations. The evaluation operates through a complex combination of bitwise and operations, multiplications and divides that result in two statements to calculate the number of bits in the lower 24 bits followed by a single statement evaluating the upper 8 bits.

int countOnes (long inVal)

{

long counter; // Used to count the number of bits set to one in inVal

counter = ((inVal & 0xfff) * 0x1001001001001L & 0x84210842108421L) % 0x1f;

counter += (((inVal & 0xfff000) >> 12) * 0x1001001001001L & 0x84210842108421L) % 0x1f;

counter += ((inVal >> 24) * 0x1001001001001L & 0x84210842108421L) % 0x1f;

return (int) counter;

}

The operations result in an improvement in the processing time through an algorithm1 worthy of a whole chapter on how it works and was discovered. Although this is a greatly improved algorithm, it still bases the evaluation upon computing with no real trade-offs for non-real-time processing.

_________________

1 Rich Schroeppel of MIT originally created a 9-bit version, similar to option 1; see the Programming Hacks section of Beeler, M., Gosper, R. W., and Schroeppel, R. HAKMEM. MIT AI Memo 239, Feb. 29, 1972. His method was the inspiration for the variants above, devised by Sean Anderson. Randal E. Bryant offered a couple of bug fixes on May 3, 2005. Bruce Dawson tweaked what had been a 12-bit version and made it suitable for 14 bits using the same number of operations on February 1, 2007.

Altering the Algorithm with Memory Use in Exchange of Processing

If one starts to think about the problem of counting the number of bits set to one in a 32-bit integer in real time, and this is a systems issues and not a programming contest, there are a few assumptions that can change. First, a computer can be used to run through whatever algorithm works comparing every possible integer value to generate a table that can be used in a single lookup. At 32 bits, however, while memory is cheap, the size of a table with 4 billion entries is a bit excessive to save a few clock cycles and leads to the following algorithm which is both lightweight at only 4 operations plus highlights several aspects of packetC important in optimization.

int countOnes (int inputValue)

{

union FourBytes

{

int bigInt;

byte eachByte[4];

} operand;

const int bitsSetTable[256] =

{

0, 1, 1, 2, 1, 2, 2, 3, 1, 2, 2, 3, 2, 3, 3, 4,

1, 2, 2, 3, 2, 3, 3, 4, 2, 3, 3, 4, 3, 4, 4, 5,

1, 2, 2, 3, 2, 3, 3, 4, 2, 3, 3, 4, 3, 4, 4, 5,

2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6,

1, 2, 2, 3, 2, 3, 3, 4, 2, 3, 3, 4, 3, 4, 4, 5,

2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6,

2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6,

3, 4, 4, 5, 4, 5, 5, 6, 4, 5, 5, 6, 5, 6, 6, 7,

1, 2, 2, 3, 2, 3, 3, 4, 2, 3, 3, 4, 3, 4, 4, 5,

2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6,

2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6,

3, 4, 4, 5, 4, 5, 5, 6, 4, 5, 5, 6, 5, 6, 6, 7,

2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6,

3, 4, 4, 5, 4, 5, 5, 6, 4, 5, 5, 6, 5, 6, 6, 7,

3, 4, 4, 5, 4, 5, 5, 6, 4, 5, 5, 6, 5, 6, 6, 7,

4, 5, 5, 6, 5, 6, 6, 7, 5, 6, 6, 7, 6, 7, 7, 8

};

operand = inputValue;

return ( bitsSetTable[operand.eachByte[0]] + bitsSetTable[operand.eachByte[1]] +

bitsSetTable[operand.eachByte[2]] + bitsSetTable[operand.eachByte[3]] );

}

The example above uses an array of 256 entries each containing the number of bits set to one in a byte whose value is equal to the index of the entry in the array. This table is small enough that it could be created by a simple script or even from evaluating bytes manually to include the initialization values into the application at compile time. A union is declared that maps an array of bytes onto the different byte positions within an integer such that each byte can be used as a table reference to the number of bits in that byte. By adding up the four byte values from the lookup table, the number of bits in a 32-bit integer is returned.

The key point of comparing the options above is to show how an algorithm that calls out for a repetitive sequence of analysis can quickly become overly complex when trying to improve processing efficiency. Overly complex code is not recommended as it becomes difficult to audit and problematic to support. Additionally, often by evaluating assumptions and trading real-time processing time for processing in advance not only can a more optimal solution be found, but also something that is much simpler than almost any other option.

If processing rates alone are the key measurement, further review will show that this could be improved even further by using 32k entries and using the upper and lower 16-bits as indices reducing this algorithm by two addition operations. The question becomes: at what point do trade-offs make sense and when don’t they?

Metadata Analysis

One of the most common processing scenarios present in packetC is the retrieval of metadata from packets to feed analysis algorithms that generate data for either third-party analysis or even as a feedback loop for future real-time decisions. Consider the previous example as a means of showing the value of leveraging non-real-time processing, namely computation of bits in a byte, to save real-time processing instructions. If the same approach is applied to metadata collection, a number of design lessons can be discerned. The primary objective in packetC is to look for scenarios where the packetC application collecting metadata can focus on collection and either eliminate or minimize analysis. A few examples of this scenario are broken out below to highlight potential design approaches.

Netflow Record Generation

A common program that appears in several variations is the flow tracking application. The data plane is very efficient at monitoring packets to record the unique flows as well as the number of packets and bytes comprising each flow. When a flow terminates or the application determines that it should be aged out of tracking, a record is produced which can be utilized by other programs to analyze or graph network flows. The recipient applications often expect the format of the flow record packet to be in a specific format, such as Netflow Version 5 or IPDR records.

While packetC makes it simple to code the construction of a UDP packet and each of the structured field contents due to its similarity to C, time should be taken to determine whether or not formatting should be done in the data plane. For performance it may be preferable to ship the raw data collected about the flow off to a control-plane processing application which is not IO-bound to perform the conversion into the formatted flow-record.

DDoS Trend Analysis

Distributed Denial of Service defense applications often monitor the incoming packet flows and through trend-analysis highlight flows of interest for further analysis or potential rate-limiting and blocking. Instead of performing the analysis within the data-plane application, consider exporting data through the control plane such that a separate application can perform the trend analysis. A control plane application may not have the long-term database memory constraints or the CPU-bound constraints that the data plane poses. Upon completion of trend analysis, simple commands can trigger events for the data plane to respond.

VoIP QoS Analysis

Similar to the flow example, consider formatting of call data records (CDRs) in the control plane. Furthermore, metrics of calls will often need to be parsed out such that records can be tracked by user, phone number, phone type, codec, or other key metrics. Instead of parsing SIP INVITE packets in the data plane, consider snagging the entire INVITE packet, which has this information buried in the textual payload for recording along with the results of monitoring the call flow. Key fields can be extracted at a later date without the data-plane performance hit for the sole benefit of the control-plane application.

Processing Minimization

Often applications need to perform housecleaning like clearing out aged tables or performing extended tabulations. Reducing these checks from every packet to a fraction of packets is critical. Focus on leveraging background threads doing garbage collection and control-oriented tasks instead of performing the evaluations on every packet. Furthermore, if calculations are being used for rough estimations, simplify algorithms to process more efficiently. Consider counters to powers of 2 such as 128 instead of 100. Divide by 8 instead of dividing by 10 and use SHR instead of a divide instruction. Small adjustments add up quickly. Even if a background task isn’t in the cards, consider doing some tasks only on even-numbered contexts. A simple approach like even-contexts checking for table management can reduce the processing overhead of the task by 50 percent.

Whatever You Do … Don’t Involve Other Contexts

Don’t stall the pipelines to synchronize analysis; rather snapshot and do work in the background. If an application collects a lot of metrics and from time to time needs to export them, be cautious in how the snapshot process works. One poor way is to set a lock during table export; this is bad because it stalls all other contexts from updating statistics. Instead consider approaches where a flag sets a primary or secondary table. When it is time to export the primary table, change the flag so that updates occur to the secondary table, export the primary table in one context. Upon completion, initialize the primary table such that it can be cutover to when it is time to export the secondary table.