Chapter 7

Multi- and Stereoscopic Matching, Depth and Disparity

7.1. Introduction

Three-dimensional (3D) reconstruction using stereo-correlation is the automatic extraction of data about the scene’s 3D structure from 2 to N images acquired simultaneously. In this context, in order to estimate depth within a scene, the 3D points are triangulated using their projections in the images taken from different viewpoints and the characteristics of the capture system. The problem therefore comes down to matching homologous pixels (i.e. projections of the same 3D point in the images). This search can rely on specific geometric constraints, including the epipolar constraint, which leaves a first-order indeterminacy by reducing the search space to a segment. The photometry of pixels from different images is then compared to match homologues, although anomalies (similar photometries or variations in brightness) may occur, requiring the use of more complex heuristics or information redundancy. Pixel matching, in this context, is known as stereoscopic matching.

We will first introduce the difficulties related to homologue searches as well as primitives and capture geometry. We will then examine the generic algorithms of two existing approaches with the most commonly used constraints and costs. Finally, we will concentrate on the occlusion problem by describing two approaches, the first being stereoscopic and the other being multiscopic.

7.2. Difficulties, primitives and stereoscopic matching

7.2.1. Difficulties

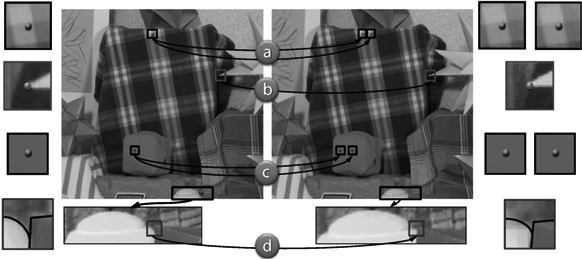

The quality of the 3D reconstruction is highly dependent on the quality of matching. Regardless of the number of points used to estimate depth and the matching method used, the same difficulties and limitations can affect the obtained results. Gales [GAL 11] highlights two categories of problems, as illustrated in Figure 7.1:

Figure 7.1. Difficulties in matching. a) Repetitive textures, b) discontinuity, c) homogeneous areas and d) occlusions

7.2.2. Primitives and density

As shown in [JON 92], two kinds of primitives can be used in stereoscopic matching: pixels for the first kind and features, also called points of interest (see Chapter 6), for the second. The pixel-based approach uses the whole set of pixels and provides dense results because it is possible to estimate as many matches (3D points) as there are pixels in the N images, using attributes such as lightness, color, gradient, etc. to identify them. These characteristics are subject to noise and necessarily provide only a small amount of information about the scene, thereby generating a number of false matches (decoys). In contrast, methods in the second category are feature based [JAW 02]: they use a partial set of pixels and focus on structured, more discriminative primitives to remove ambiguity and limit combinatorics during matching. However, the detection of these primitives guarantees neither consistency between images nor a particularly dense reconstruction for some images. There are two approaches to this type of conflict (decoy/inconsistency and dense/sparse): hybrid and multiscopic methods. The first combines the advantages of the two categories, for example, by matching and segmenting the image at the same time (see section 7.5.1), while the second is based on the redundancy of information created by adding images to the series (see section 7.5.2).

7.3. Simplified geometry and disparity

Chapters 3 and 4 introduced the geometry of a sensor and specifically the epipolar constraint. We will concentrate on multiscopic matching (N ≥ 2) where the capture system respects the shooting conditions of a parallel geometry, off-axis or not (see Figure 4.4). Since it includes both cases, we will focus on off-axis geometry, which we will term “parallel geometry” in the rest of this chapter. This configuration places matching within a simplified epipolar geometric framework, which ensures, among other things, that the co-epipolar lines are horizontal lines of the same rank in the N images. As such, two homologous pixels have the same y-coordinate, and the gap between a pixel in an image i and its homologue in the following image i + 1 is a simple horizontal translation, which limits the search area in the image i + 1 to a horizontal segment. In this chapter, we will systematically use this configuration. If the capture geometry does not match this configuration, the images can first be rectified by reprojecting the initial images using parallel geometry [HAR 03] (see Chapter 5).

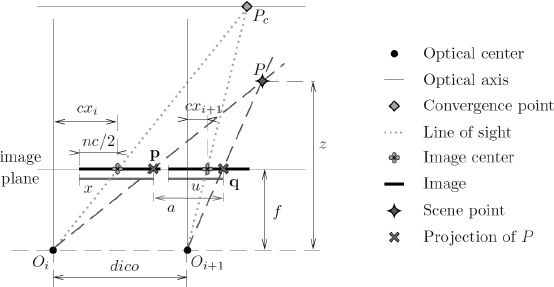

Figure 7.2. Relation between disparity and depth

In parallel geometry, a point P in the 3D scene is projected in the images numbered i and i + 1, if it is not occluded in either of them, at positions connected by the simplified epipolar geometry, respectively p = (x, y) and q = (u, y′ = y) (see Figure 7.2). The homologue (q, i + 1) of the pixel (p, i) in the following image can therefore be identified by the single difference between the abscissae u and x, defined as a “horizontal disparity” and denoted by δ, which will be called disparity in the rest of this chapter. For a pixel (p, i), the disparity δ (see Figure 7.2) gives the depth z of the point P that is projected onto it, according to the following:

where dico is the distance between the optical centers, f is the darkroom depth of the virtual sensors, and the off-axis difference (in pixels) between the two images accounts for the gaps between the center of each image and the point where the optical axis intersects it.
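The conversion from disparity to depth can be illustrated with a minimal Python sketch. It does not reproduce equation [7.1]: it assumes the common parallel-geometry relation z = f · dico / (disparity − off-axis difference), and the sign convention, the function name `depth_from_disparity` and the choice of expressing f in pixels are all assumptions made for illustration.

```python
def depth_from_disparity(delta, f, d_ico, off_axis=0.0):
    """Return the depth z associated with a horizontal disparity (in pixels).

    Assumed relation (not taken from the chapter):
        z = f * d_ico / (delta - off_axis)
    f        : darkroom depth of the virtual sensors, expressed in pixels
    d_ico    : distance between optical centers (z is returned in this unit)
    off_axis : off-axis difference between the two images, in pixels
    """
    effective = delta - off_axis
    if effective == 0:
        return float("inf")  # zero effective disparity: point at infinity
    return f * d_ico / effective
```

Under this assumption, depth is inversely proportional to the effective disparity, so a one-pixel disparity error matters far more for distant points than for near ones.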

In multiscopic stereovision, if the parallel geometry is regular (regular spacing dico between the optical centers and convergence of all lines of sight), the disparity δ of the pixel (p, i) connects the abscissae of the N potential projections of P, which are the possible homologues of (p, i). Indeed, applying Figure 7.2 and equation [7.1] to pairs of successive images shows that the disparity related to a depth z is the same for all pairs of images (i, i + 1). The position, in any other image, of the homologue of a pixel (p, i) assigned the disparity δ can therefore only be:

As such, in this simplified geometry, the depth estimation problem can be seen as a disparity estimation problem, formulated as the search for a multimap attributing to each pixel (p, i) its disparity δ, real or integer valued depending on whether real or integer disparities are needed. The next section will introduce the generic algorithm for matching methods more precisely, including a description of the constraints and the cost functions that can be used.
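Because the disparity is the same for every pair of successive images in a regular parallel geometry, a single disparity value fixes the candidate homologue in each of the N images. The short Python sketch below illustrates this; the direction of the shift (adding the disparity when moving to the next image) and the function name are assumptions, not the chapter's notation.

```python
def homologue_abscissae(x, i, delta, n_images):
    """Abscissa of the potential homologue of pixel (x, y) of image i in each image.

    Assumes a regular parallel geometry in which moving from image j to
    image j + 1 shifts the projection by `delta` pixels; the sign of the
    shift is a convention chosen for this sketch. The y-coordinate is unchanged.
    """
    return [x + (j - i) * delta for j in range(n_images)]
```

For instance, a pixel at x = 100 in image 1 with a disparity of 5 has its candidate homologues at abscissae 95, 100, 105 and 110 in a four-image series.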

7.4. A description of stereoscopic and multiscopic methods

7.4.1. Local and global matching algorithms

As discussed in section 7.2, applying the epipolar constraint alone is not sufficient to generate a good-quality disparity multimap. To remove the ambiguity due to decoys, heuristics must be used. Stereovision methods are characterized by the following criteria (see [SCH 02]):

– “primitives” to be matched (see section 7.2.2), and their “attributes”;

– the expression of the “cost of matching or energy” on a support made up of all primitives to be matched: N images, one image or a subimage;

– the “optimization method” used to find the solution with the minimum cost.

These latter elements depend on the method’s classification. Brown et al. [BRO 03] have distinguished local methods from global methods. Both seek to minimize the cost function: the former pixel by pixel and the latter over all pixels at once.

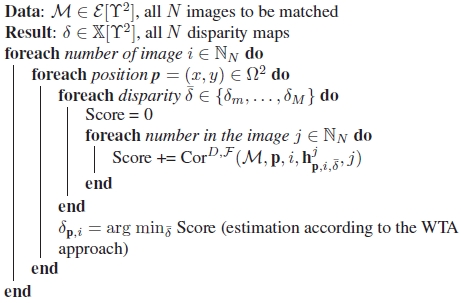

Algorithm 7.1. Local pixel matching, with the cost function defined in section 7.4.3.

In local approaches, the energy used is a “similarity measure”, also known as “correlation”, or, conversely, a “dissimilarity measure”. It evaluates the degree of photometric similarity/dissimilarity between two homologous pixels and their respective neighborhoods (see section 7.4.3), and the maximization/minimization strategy is “winner takes all” (WTA). Algorithm 7.1, a generic version of this approach, requires the choice of an energy function Cor, a neighborhood form and a distance D.
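The local strategy can be sketched in a few lines of pure Python. This is an illustrative sketch and not a transcription of algorithm 7.1: it assumes a binocular pair, a square neighborhood, the L1 distance (sum of absolute differences) as dissimilarity, and a homologue located at x − d in the second image. The function names `sad` and `wta_disparity` are hypothetical.

```python
def sad(left, right, x, y, d, half):
    """Sum of absolute differences (L1) between two square neighborhoods.

    Compares the window centered on (x, y) in `left` with the window
    centered on (x - d, y) in `right`; images are lists of rows of numbers.
    """
    total = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            total += abs(left[y + dy][x + dx] - right[y + dy][x - d + dx])
    return total


def wta_disparity(left, right, max_disp, half=1):
    """Winner takes all: keep, for each pixel, the disparity of minimal cost.

    Border pixels whose window does not fit in the image keep the value None.
    """
    height, width = len(left), len(left[0])
    disp = [[None] * width for _ in range(height)]
    for y in range(half, height - half):
        for x in range(half, width - half):
            best_cost, best_d = None, None
            for d in range(max_disp + 1):
                if x - d - half < 0:  # window would leave the right image
                    break
                cost = sad(left, right, x, y, d, half)
                if best_cost is None or cost < best_cost:
                    best_cost, best_d = cost, d
            disp[y][x] = best_d
    return disp
```

Each pixel is decided independently of its neighbors, which is what makes the approach local: nothing prevents two adjacent pixels from receiving very different disparities.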

In global approaches, the difficult aspects are the initialization of disparities (most of the time with a correlation-based approach), the choice of the stop conditions, the update of the disparity function and cost, which are dependent on the optimization method used. In algorithm 7.2, the conditions chosen are simple in order to facilitate its understanding. For further information on optimization methods, see [FEL 11].

Algorithm 7.2. Global pixel matching.

The reliability of a match is evaluated using an energy function. This function, to be minimized for a set of matches, integrates terms measuring dissimilarities in neighborhoods of homologous pixel Edis and violations of constraints on the estimated disparities Econt. Its general form is:

where a weighting coefficient sets the relative weight of the two terms Edis and Econt. The dissimilarity cost Edis, or data term, is often a sum of “local costs” over all matches, where each local cost measures the dissimilarity between two homologous primitives. The constraint cost Econt is used to choose between several potential homologues and to limit the combinatorics. This cost models the interactions between the pixels considered. It quantifies how well all matches respect the constraints used and corresponds to a sum of “neighborhood costs”.
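As an illustration of how the two terms combine, the following Python sketch evaluates the energy of a labelling of one scanline. It assumes the additive form Edis + lam · Econt, which is a common choice but is not confirmed by the text, and it uses an illustrative constraint term that penalizes disparity jumps between neighboring pixels. The names `global_energy`, `local_cost` and `lam` are hypothetical.

```python
def global_energy(disparities, local_cost, lam):
    """Energy of a scanline labelling, assuming E = Edis + lam * Econt.

    disparities : list with one disparity per pixel of the scanline
    local_cost  : function (x, d) -> dissimilarity of assigning d to pixel x
    lam         : weight of the constraint term
    """
    # Data term: sum of the local costs of all matches.
    e_dis = sum(local_cost(x, d) for x, d in enumerate(disparities))
    # Illustrative constraint term: sum of neighborhood costs, here the
    # absolute disparity difference between horizontally adjacent pixels.
    e_cont = sum(abs(a - b) for a, b in zip(disparities, disparities[1:]))
    return e_dis + lam * e_cont
```

A global method searches for the labelling that minimizes this quantity over all pixels at once, rather than minimizing `local_cost` pixel by pixel.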

In 2002, the Middlebury evaluation protocol was introduced [SCH 02]. Widely used, it compares matching methods and provides several data sets. At present, however, only stereoscopic methods, or multiscopic methods in convergent capture, are considered in this protocol.

7.4.2. Principal constraints

A constraint on a match derives from hypotheses about the geometry of capture, the geometry of the scene and the reflectance of objects’ surfaces. The geometry of the scene is described by different constraints and costs, which we will detail here and in the following section. For each of these constraints, two aspects need to be considered: its definition (the rule itself) and its purpose.

– Uniqueness constraint. Widely used in stereovision, it is defined by:

where two pixels in the image i cannot have the same homologue in the image j.

– Ordering constraint. Occasionally used in stereovision, it is defined by:

It indicates that the order of the pixels in the image i along the epipolar line y must be the same as that of their correspondents in the image j. However, the presence of a shortening effect (see section 7.2.1) violates these two constraints. Kostková and Šará have therefore proposed a variant in [KOS 03] called weak consistency.

– Symmetry constraint, or bidirectional verification, is written as:

It is respected for a pixel (p, i) when this pixel is itself the homologue of its homologous pixel (q, j). In addition, this constraint guarantees the uniqueness constraint.

These three constraints reduce the ambiguities induced by homogeneous areas, repetitive textures or changes in lighting between the different views (see section 7.2.1). However, none of the constraints examined here limit the effect of a lack of information because accounting for this difficulty is strongly dependent on the type of method used, as discussed in section 7.5.
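The uniqueness and ordering rules can be checked on a single scanline with a short Python sketch. It is a minimal illustration under stated assumptions: a binocular pair, integer disparities, a homologue located at x − d, and `None` for unmatched pixels. The function names are hypothetical.

```python
def homologues(disp_row):
    """Abscissa of the homologue of each pixel, assuming q = x - d."""
    return [None if d is None else x - d for x, d in enumerate(disp_row)]


def satisfies_uniqueness(disp_row):
    """True if no two pixels of the row share the same homologue."""
    targets = [q for q in homologues(disp_row) if q is not None]
    return len(targets) == len(set(targets))


def satisfies_ordering(disp_row):
    """True if matched pixels appear in the same left-to-right order in both images.

    Equal abscissae are tolerated here so that this test stays independent
    of the uniqueness test.
    """
    targets = [q for q in homologues(disp_row) if q is not None]
    return all(a <= b for a, b in zip(targets, targets[1:]))
```

A row that fails either test contains at least one suspicious match, although the tests alone do not say which of the conflicting matches is the wrong one.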

7.4.3. Energy costs

Energy costs also rely as heavily on photometric aspects as on geometric aspects based on disparities in neighboring pixels. In the literature, there are a large number of energy functions but only the most significant ones are presented: dissimilarity costs, smoothing costs and costs that explicitly take into account occlusion problems.

Local cost functions (denoted by the indices xxx) in a pixel (p, i) and for a predicted disparity value δ are as follows:

The global costs, for a given disparity multimap, are obtained by summing over all pixels the local cost of their disparity in the multimap, according to the following general formula:

7.4.3.1. Photometric dissimilarity cost

According to the hypothesis that all objects are matte and without any specular effect, a match must be penalized if the photometric or colorimetric components of homologous pixels involved are dissimilar within a given neighborhood, regardless of the color space chosen (generally RGB; Bleyer et al. [BLE 08] have studied the influence of this choice on the estimated disparities). The function of this cost Edis can generally be defined by:

In binocular stereovision, the sum on j in equation [7.9] is not carried out. As such, Cor measures the photometric differences in M between two pixels (p, i) and (q, j) by cumulating the distance D (generally L1 or L2 [CHA 11]) between their respective neighbors in the correlation form, such that:

Within the context of multi-ocular stereo (N > 2) where problems of changes in illumination can be accentuated by the cumulative distances between the capture systems, Niquin [NIQ 11] has restricted the calculation of dissimilarity costs to homologues of two successive images in the scene. The energy function, therefore, becomes:

7.4.3.2. Geometric and/or photometric smoothing costs

To limit the noise sensitivity of dissimilarity costs, a photometric and/or geometric smoothing is often added to the constraint term of equation [7.3]. Except for areas near depth discontinuities, smoothing is applied to the attributes (disparity, intensity and color) of the pixels in the smoothing form. Centered or not, composed of several configurations or adapted to the scene’s contents, this form has a significant influence on the quality of the smoothing [FUS 97]: when it is too small, noise sensitivity persists and, when it is too large, depth discontinuities tend to be smoothed out. A photometric smoothing ElissPhoto, a geometric smoothing ElissGeom or a combination of both ElissGeomPhoto can be written as follows:

In contrast to the previous costs, those with geometric constraints are based on the previously estimated disparities of the pixels in the neighborhood, and therefore require the disparity multimap to be available at the local level. As a result, their global formulation becomes:

These smoothing constraints therefore can take into account the depth discontinuity problems but remain ineffective for reducing errors caused by occlusion.

7.4.3.3. Geometric and/or photometric occlusion costs

Occlusions can be detected by using the constraints discussed in section 7.4.2, in post-processing with local approaches or directly in the constraint cost formulation. In the latter case, the dissimilarity cost is replaced by a high cost when the detection is positive. Some fast methods, such as [MIN 08], exploit colorimetric dissimilarities to indicate a potential occlusion. However, they are sensitive to changes in illumination, a weakness that can be reduced by also considering the disparities. One of the most common methods in binocular stereovision relies on “Left Right Checking” (LRC), where the difference in disparities between a pixel and its homologue, compared with an arbitrary threshold, indicates an occlusion. However, within the context of multiscopic stereovision, occlusions are rarely present in N – 1 images: a 3D point is generally visible in several images even if it is occluded in others. As a result, this method and its extensions [INC 05, JOD 06] are not applicable as they stand. A method based on this observation is introduced in section 7.5.2.
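The left-right check can be sketched as follows for one scanline of a binocular pair. This is a minimal Python illustration, assuming that the homologue of the left pixel x is the right pixel x − d and that both maps store non-negative integer disparities; the function name and the default threshold are hypothetical.

```python
def left_right_check(disp_left, disp_right, threshold=1):
    """Flag left-scanline pixels whose disparity is not confirmed by the right map.

    Assumes the homologue of left pixel x is right pixel q = x - d, and that
    disp_right[q] stores the disparity pointing back to the left image.
    Returns a list of booleans: True means "probably occluded or mismatched".
    """
    flags = []
    for x, d in enumerate(disp_left):
        q = x - d
        if q < 0 or q >= len(disp_right):
            flags.append(True)  # homologue falls outside the image
        else:
            flags.append(abs(d - disp_right[q]) > threshold)
    return flags
```

The threshold trades detection rate against false alarms: a value of 0 demands exact agreement, while larger values tolerate small estimation errors at the cost of missing narrow occlusions.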

7.5. Methods for explicitly accounting for occlusions

7.5.1. A local stereoscopic method – seeds propagation

Using a parallel configuration, a homologous pixel is found in a horizontal segment with a maximal width of δM (maximal disparity). Seed propagation, a binocular technique, reduces this search area and therefore the risk of selecting a wrong correspondent. It relies on the observation that two neighboring points on the same surface are generally projected as two neighboring pixels in each of the two images, which implies that two neighboring pixels have similar disparities. The search area can therefore be reduced as follows: the correspondent of a pixel that neighbors a previously matched pixel is searched for within the neighborhood of the homologue of that matched pixel. Propagation is iterative and requires the selection of an initial set of reliable matches, known as “seeds”. At each iteration, the newly calculated matches are added to the set of seeds and the process continues as long as new matches are found.

However, this assumption does not hold at depth discontinuities. A threshold on the correlation scores is therefore necessary to prevent propagation near depth discontinuities. The more tolerant the threshold, the denser the result but the higher the risk of propagating errors. An alternative is to carry out a prior segmentation of the reference image into homogeneous color regions, most commonly using the mean-shift technique [COM 02]. By hypothesis, each region corresponds to a single surface and does not present depth discontinuities. The propagation of seeds occurs within each region, thereby preventing propagation across depth discontinuities. Using this segmentation also has the advantage of accounting for occlusions. Indeed, a single region may contain occluded pixels and non-occluded pixels that correspond to the same surface. As a result, after propagation, it is possible to carry out a regularization stage, described later, in order to estimate disparities for occluded pixels from non-occluded pixels.

7.5.1.1. Calculating the initial seeds

There are two families of automatic seed selection methods:

– Matching feature points: these pixels are different from others (see Chapter 6), and matching them can be done fairly reliably. As such, the homologue of a feature point in the image 0 can be found within the set of feature points in the image 1. This technique first involves detecting the points of interest in the two images and then matching them. To do so, the dissimilarity measures and the geometric constraints presented in sections 7.4.3 and 7.4.2 can be used.

– Matching interests: the correspondents of the pixels in the image 0 are found in image 1 according to algorithm 7.1. Only the matches satisfying a series of strong constraints are then retained, as discussed in section 7.4.2.

It is not necessary to have a large number of seeds. However, in cases where scenes present depth discontinuities, there should be a “good” distribution with at least one correct initial seed per region of homogeneous depths to carry out propagation in these regions. In [GAL 11], a hybrid “completion-validation” approach is proposed to improve this distribution.

7.5.1.2. Propagation approaches

Depending on the means of using seeds within each iteration, there are two propagation approaches:

– A simultaneous approach: all seeds are considered simultaneously to find correspondents, meaning that each iteration can be carried out in parallel but at the risk of errors if wrong seeds exist.

– A sequential approach: a single seed is considered. It is selected according to a predefined criterion in order to propagate the “best” seed first. This approach, summarized in algorithm 7.3, limits the propagation of errors. The cost of dissimilarity (see section 7.4.3) between the neighborhoods of the seed’s correspondents is generally used as a selection criterion. However, this cost does not provide information about the seed’s reliability. In [GAL 12], the criterion used is the correspondence probability, calculated during the matching of each pixel. It is given by the dissimilarity cost between the two correspondents, divided by the sum of the costs of all other candidates. As such, the more a candidate differs from the others, the greater its probability of correspondence (and vice versa).
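The sequential approach can be sketched with a priority queue that always pops the seed with the lowest cost. The Python code below is an illustrative sketch, not a transcription of algorithm 7.3: it uses the dissimilarity cost as the selection criterion (rather than the correspondence probability of [GAL 12]), an 8-connected neighborhood, and a label map standing in for the prior segmentation. The `cost` callback, the `search` radius and all names are assumptions.

```python
import heapq

NEIGHBORS_8 = [(-1, -1), (0, -1), (1, -1), (-1, 0), (1, 0), (-1, 1), (0, 1), (1, 1)]


def propagate(seeds, cost, labels, max_cost, search=1):
    """Best-first (sequential) seed propagation restricted to segmented regions.

    seeds    : dict {(x, y): disparity} of reliable initial matches
    cost     : function ((x, y), d) -> dissimilarity cost (lower is better)
    labels   : 2D list of region labels from a prior segmentation
    max_cost : matches costlier than this threshold are not propagated
    search   : disparities tried around the seed's disparity (+/- search)
    """
    height, width = len(labels), len(labels[0])
    disparity = dict(seeds)
    heap = [(cost(p, d), p, d) for p, d in seeds.items()]
    heapq.heapify(heap)
    while heap:
        _, (x, y), d = heapq.heappop(heap)  # most reliable seed first
        for dx, dy in NEIGHBORS_8:
            n = (x + dx, y + dy)
            if not (0 <= n[0] < width and 0 <= n[1] < height):
                continue
            if n in disparity or labels[n[1]][n[0]] != labels[y][x]:
                continue  # already matched, or belongs to another region
            # Search only around the disparity of the seed being propagated.
            best = min((cost(n, c), c) for c in range(d - search, d + search + 1))
            if best[0] <= max_cost:
                disparity[n] = best[1]
                heapq.heappush(heap, (best[0], n, best[1]))
    return disparity
```

Because propagation never crosses a region boundary, a region without any seed stays unmatched, which is why the distribution of the initial seeds matters.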

Algorithm 7.3. Matching pixels using sequential propagation with prior segmentation (see section 7.5.1), where the neighborhood used is an 8-connected form

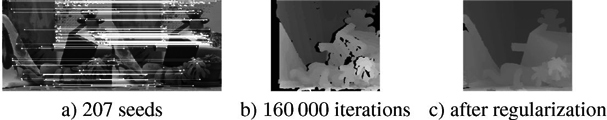

7.5.1.3. Adjustment by region-based voting scheme

The result of a local stereoscopic matching by propagation is only slightly dense, notably in the occluded areas (see section 7.2.1). Adjustment by the region-based voting scheme is therefore required to densify it (see Figure 7.3). This stage is used to estimate disparities among occluded pixels as well as to correct some errors. It is based on homogeneous color regions in the reference image (mean-shift). In a region, there are occluded and non-occluded pixels, presumed to correspond to the same surface. An approach using estimated disparities for each region has been proposed by Gales [GAL 11].
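A very simple form of region-based voting is sketched below in Python. It is not the method of [GAL 11]: it only assumes that each unmatched pixel receives the most frequent disparity among the matched pixels of its region, and it does not attempt to correct existing matches. The function name and the use of `None` for unmatched pixels are assumptions.

```python
from collections import Counter


def fill_by_region_vote(disparity, labels):
    """Assign to unmatched pixels the most frequent disparity of their region.

    disparity : 2D list, None where no match was found (e.g. occluded pixels)
    labels    : 2D list of region labels (e.g. from a color segmentation)
    Regions without any matched pixel are left unchanged.
    """
    votes = {}
    for row_d, row_l in zip(disparity, labels):
        for d, label in zip(row_d, row_l):
            if d is not None:
                votes.setdefault(label, Counter())[d] += 1
    winners = {label: c.most_common(1)[0][0] for label, c in votes.items()}
    return [
        [d if d is not None else winners.get(label) for d, label in zip(row_d, row_l)]
        for row_d, row_l in zip(disparity, labels)
    ]
```

A majority vote implicitly treats each region as a fronto-parallel plane; slanted surfaces would call for fitting a disparity plane per region instead.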

Figure 7.3. Initial set of seeds a) and disparity maps obtained from different iterations (b, d, e, f, g and h) during sequential propagation. The image c) shows the result obtained after regularization

7.5.2. A global multiscopic method

The approach explored in this section uses an original pixel matching formulation, specifically constructed around a multiscopic context and simplified epipolar geometry. It simultaneously calculates the N disparity maps while ensuring geometric consistency, managing occlusions and precisely identifying information redundancy. An in-depth study of this solution and different global and local methods can be found in [NIQ 11].

7.5.2.1. Multiscopic matching formulation

When searching for 3D points in a finite number of constant-depth planes, this formulation restricts its disparity search, for pixels of the N views, to a set of integer values. The density of the reconstruction is admittedly reduced, but a refinement of disparities can remedy this at a later stage. This choice is justified, at least for initializing maps, by the assurance that co-homologues are all pixels (and not subpixels) in the images, which clarifies redundancies and the consistency search. As such, potential 3D points are discrete in number and correspond to the intersections of the rays joining optical centers and pixel centers with these planes.

The idea proposed in [NIQ 11] is to gather all the co-homologous pixels with the disparity δ, representing the same 3D point, in an entity known as a match, which intrinsically codes the matching redundancies and partially ensures consistency. A match is identified by the position p0 = (x, y) of the pixel in the reference image (i = 0), a disparity δ and a boolean vector α where α[j] = 1 if the pixel of the image j is a projection of this 3D point. As such, it contains at most 1 pixel per image and 0 in some images when there is occlusion. Multiscopic matching therefore involves finding a set of matches that forms a consistent partition of all the pixels of the N images.
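The notion of match lends itself to a small data structure. The Python sketch below is one possible representation under stated assumptions: integer disparities, a shift of j times the disparity between the reference image and image j (the sign is a convention chosen here), and hypothetical names `Match`, `pixels` and `is_partition`. It only checks that the matches cover every pixel exactly once, not that the partition is geometrically consistent.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Match:
    """A set of co-homologous pixels representing one 3D point."""
    p0: Tuple[int, int]  # position (x, y) in the reference image (i = 0)
    delta: int           # integer disparity shared by all its pixels
    alpha: List[bool]    # alpha[j] is True if image j sees the 3D point

    def pixels(self):
        """Pixels (x, y, j) of the match, assuming a shift of j * delta in image j."""
        x, y = self.p0
        return [(x + j * self.delta, y, j) for j, seen in enumerate(self.alpha) if seen]


def is_partition(matches, width, height, n_images):
    """True if every pixel of the N images belongs to exactly one match."""
    covered = [px for m in matches for px in m.pixels()]
    expected = {(x, y, j) for j in range(n_images)
                for y in range(height) for x in range(width)}
    return len(covered) == len(expected) and set(covered) == expected
```

A match whose `alpha` vector contains a `False` entry contributes no pixel to the corresponding image, so the pixel located there must be claimed by another match at a different disparity.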

7.5.2.2. Energy function and geometric consistency constraint

By definition of a match, the uniqueness and symmetry constraints are ensured if the number of pixels composing it is N. A smaller number, however, indicates that the pixels in certain views (α[j] = 0) are not co-homologous with the others (α[j] = 1). The symmetry constraint is then no longer verified, which indicates the presence of either an occlusion or a geometric inconsistency in the partition, such as a distant object masking a near object. To prevent the creation of such partitions, a new constraint is integrated into the cost function: the “geometric consistency constraint” (see [NIQ 10]).

The aim of this multiscopic formulation is to explicitly use the notions of “match” and partition without calling into question existing stereocorrelation methods. For this reason, the energy function evaluating a partition can be reduced to a classic energy function in the form of equation [7.3], in order to use the existing constraints (see section 7.4.2). The first part integrates dissimilarity, occlusion and geometric costs at once using the energy Edoc:

with [7.16] ![]()

The cost Cdoc only evaluates the colorimetric dissimilarity Cor between the pixel (p, i) and its unproven homologue (q, i) if they have the same disparity (i.e. both belonging to the same match). Its form is:

where Kcoh is a sufficiently large constant representing the cost of a geometric inconsistency and Kocc is the cost of an occlusion.

7.5.2.3. Global selection and partition construction

In addition to the energy function, Niquin [NIQ 11] has also proposed the “Near-Far” selection method in order to find a visibility function at minimal cost. The principle is, after initializing the partition g with an initial set of matches, to consider the disparity planes from the largest disparity to the smallest, in order to find the 3D target points from the nearest to the farthest. Figures 7.4(a) and (b) illustrate this progression. At each studied disparity δ, the previous matches are reexamined so that they are retained or removed after evaluation. Removing a match involves redistributing its constituent pixels into other matches of disparity δ. To solve this binary choice problem, a graph cut method is used with a “min-cut/max-flow” algorithm where each arc is weighted using the energy function. Therefore, for each disparity δ studied, a graph is constructed and its minimum cut computed, creating a new partition that replaces g if its cost is lower than that of g. In contrast to other existing work, this formulation reduces the graph to one node per match instead of one node per pixel. In practice, the number of matches only rarely exceeds twice the number of pixels in a single image, regardless of the number of images N, thereby reducing the computation time. For a complete examination of the graph cut technique and for a detailed study of the cost of each arc, please refer to [BOY 99] and [NIQ 11].

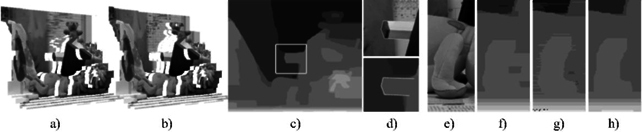

Figure 7.4. Different stages in reconstruction (a and b), the disparity map and occlusion zoom in with Kocc = 100 and Kliss = 20 (c and d), increasing from (e) with Kocc = 200 (f), Kliss = 0 (g) and Kliss = 40 (h)

7.5.2.4. Results

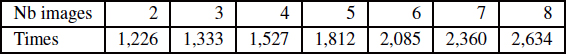

The cost Cdoc (see equation [7.17]) detects the edges of objects with precision (see Figure 7.4(d)) but penalizes, with Kocc, not only occlusions but also each change in disparity along an epipolar line. The value Kocc must therefore be small enough to avoid the type of problems illustrated in Figure 7.4(f), but large enough to maintain an effective colorimetric comparison. ![]() (see equation [7.3]) contains a smoothing constraint of which the influence of the coefficient Kliss reinforces (or not) the robustness of matches (see Figures 7.4(g) and (h). However, the reduction in the size of the graph to one node by matching means that the number of nodes in the graph (i.e., the complexity of the cutting) remains almost unchanged. As a result, the computation times obtained (see Table 7.1) increase linearly according to the number of images where the algorithms using one node per pixel have an exponential evolution time.


Table 7.1. Computation time (in ms) according to the number of images for the “Teddy” Middlebury scene (Intel Core i5-3470 @ 3.2 GHz, 8 GB RAM)

7.6. Conclusion

In the context of binocular and multiocular stereovision, capture in (un)centered parallel geometry allows the simplified epipolar constraint to be used to reduce the homologue search area. However, this constraint alone does not solve the pixel matching problem. Two families of approaches address it: hybrid methods, which combine the advantages of pixel and feature point matching, and multiscopic methods, which exploit information redundancy. Both rely on constraints and cost functions that include photometric as well as geometric or smoothing characteristics, applied either locally or globally. Among the known difficulties, this chapter has focused on occlusions by describing two approaches that account for them. The first is hybrid, local and stereoscopic, based on seed propagation (using previously established, reliable matches), whereas the second is global and multiscopic, ensuring geometric consistency while highlighting and exploiting information redundancy.

7.7. Bibliography

[BLE 08] BLEYER M., CHAMBON S., POPPE U. et al., “Evaluation of different methods for using colour information in global stereo matching approaches”, The Congress of the International Society for Photogrammetry and Remote Sensing, vol. XXXVII, Part B3a, Beijing, China, pp. 415–420, July 2008.

[BOY 99] BOYKOV Y., VEKSLER O., ZABIH R., “Fast approximate energy minimization via graph cuts”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, pp. 1222–1239, 1999.

[BRO 03] BROWN M., BURSCHKA D., HAGER G., “Advances in computational stereo”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 8, pp. 993–1008, August 2003.

[CHA 11] CHAMBON S., CROUZIL A., “Similarity measures for image matching despite occlusions in stereo vision”, Pattern Recognition, vol. 44, no. 9, pp. 2063–2075, September 2011.

[COM 02] COMANICIU D., MEER P., “Mean shift: a robust approach toward feature space analysis”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 24, no. 5, pp. 603–619, May 2002.

[FEL 11] FELZENSZWALB P.F., ZABIH R., “Dynamic programming and graph algorithms in computer vision”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 4, pp. 721–740, April 2011.

[FUS 97] FUSIELLO A., ROBERTO V., TRUCCO E., “Efficient stereo with multiple windowing”, IEEE Conference on Computer Vision and Pattern Recognition, San Juan, Puerto Rico, pp. 721–740, June 1997.

[GAL 11] GALES G., Mise en correspondance de pixels pour la stéréovision binoculaire par propagation d’appariements de points d’intérêt et sondage de régions, PhD Thesis, University of Toulouse, July 2011.

[GAL 12] GALES G., CHAMBON S., CROUZIL A. et al., “Reliability measure for propagation-based stereo matching”, International Workshop on Image Analysis for Multimedia Interactive Services, Dublin, Ireland, May 2012.

[HAR 03] HARTLEY R., ZISSERMAN A., Multiple View Geometry in Computer Vision, 2nd ed., Cambridge University Press, 2003.

[INC 05] INCE S., KONRAD J., “Geometry-based estimation of occlusions from video frame pairs”, IEEE International Conference on Acoustics, Speech, and Signal Processing, Philadelphia, PA, USA, vol. 2, pp. 933–936, March 2005.

[JAW 02] JAWAHAR C., NARAYANAN P., “Generalised correlation for multi-feature correspondence”, Pattern Recognition, vol. 35, no. 6, pp. 1303–1313, June 2002.

[JOD 06] JODOIN P.-M., ROSENBERGER C., MIGNOTTE M., “Detecting half-occlusion with a fast region-based fusion procedure”, British Machine Vision Conference, Edinburgh, United Kingdom, pp. 417–426, September 2006.

[JON 92] JONES D., MALIK J., “A computational framework for determining stereo correspondence from a set of linear spatial filters”, International Journal of Image and Vision Computing, vol. 10, no. 10, pp. 699–708, December 1992.

[KOS 03] KOSTKOVÁ J., ŠÁRA R., “Stratified dense matching for stereopsis in complex scenes”, British Machine Vision Conference, vol. 1, Norwich, United Kingdom, pp. 339–348, September 2003.

[MIN 08] MIN D., KIM D., SOHN K., “Virtual view rendering system for 3DTV”, 3DTV-Conference 2008: The True Vision - Capture, Transmission and Display of 3D Video (3DTV-CON), Istanbul, Turkey, pp. 249–252, May 2008.

[NIQ 10] NIQUIN C., PRÉVOST S., REMION Y., “An occlusion approach with consistency constraint for multiscopic depth extraction”, International Journal of Digital Multimedia Broadcasting, vol. 2010, 8 pages, 2010.

[NIQ 11] NIQUIN C., Reconstruction du relief et mixage réel virtuel par caméras relief multi-points de vues, PhD Thesis, University of Reims Champagne-Ardenne, March 2011.

[SCH 02] SCHARSTEIN D., SZELISKI R., “A taxonomy and evaluation of dense two-frame stereo correspondence algorithms”, International Journal of Computer Vision, vol. 47, no. 1, pp. 7–42, 2002.

1 In stereoscopy, the dedicated terms are “pair/pairing”, i.e. matching two things together. In multiscopy, the number of elements is not limited to two, so in that case we use the term “match/matching”, i.e. linking multiple similar elements.

2 http://vision.middlebury.edu/stereo.

3 The term “homologue” normally refers to pairwise symmetry but in a formal mathematical formulation when creating disparity maps, this property is not systematic. Depending on the constraints applied, it must be explicitly mentioned when this property is required.

4 Co-homologues are pixels in the N images that are homologous to one another, i.e. the projections of the same 3D point in the different images.