CHAPTER 5

LINEAR LEAST-SQUARES ESTIMATION: SOLUTION TECHNIQUES

This chapter discusses numerical methods for solving least-squares problems, sensitivity to numerical errors, and practical implementation issues. These topics necessarily involve discussions of matrix inversion methods (Gauss-Jordan elimination, Cholesky factorization), orthogonal transformations, and iterative refinement of solutions. The orthogonalization methods (Givens rotations, Householder transformations, and Gram-Schmidt orthogonalization) are used in the QR and Singular Value Decomposition (SVD) methods for computing least-squares solutions. The concepts of observability, numerical conditioning, and pseudo-inverses are also discussed. Examples demonstrate numerical accuracy and computational speed issues.

5.1 MATRIX NORMS, CONDITION NUMBER, OBSERVABILITY, AND THE PSEUDO-INVERSE

Prior to addressing least-squares solution methods, we review four matrix-vector properties that are useful when evaluating and comparing solutions.

5.1.1 Vector-Matrix Norms

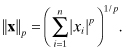

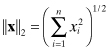

The first is property of interest is the norm of a vector or matrix. Norms such as root-sum-squared, maximum value, and average absolute value are often used when discussing scalar variables. Similar concepts apply to vectors. The Hölder p-norms for vectors are defined as

(5.1-1)

The most important of these p-norms are

(5.1-2)

![]()

(5.1-3)

(5.1-4)

![]()

The l2-norm ![]() x

x![]() 2, also called the Euclidian or root-sum-squared norm, is generally the most often used. To apply these concepts to matrices, the elements of matrix A could be treated as a vector. For an l2-like norm, this leads to the Frobenius norm

2, also called the Euclidian or root-sum-squared norm, is generally the most often used. To apply these concepts to matrices, the elements of matrix A could be treated as a vector. For an l2-like norm, this leads to the Frobenius norm

(5.1-5)

One can also compute induced matrix norms based on the ability of matrix A to modify the magnitude of a vector, that is,

where the norms for A, Ax, and x need not be the same, but usually are. The l1-norm is

![]()

where a:j is the j-th column of A, and the l∞-norm is

![]()

where ai: is the i-th row of A. Unfortunately it is more difficult to compute an l2-norm based on this definition than a Frobenius norm. It turns out that ![]() A

A![]() 2 is equal to the square root of the maximum eigenvalue of ATA—or equivalently the largest singular value of A—but it is not always convenient to compute the eigen decomposition or SVD.

2 is equal to the square root of the maximum eigenvalue of ATA—or equivalently the largest singular value of A—but it is not always convenient to compute the eigen decomposition or SVD.

Two properties of matrix norms will be of use in the next sections. From definition (eq. 5.1-6) it follows that

and this can be extended to products of matrices:

However, the l2 and Frobenius matrix norms are unchanged by orthogonal transformations, that is,

(5.1-9)

![]()

More information on matrix-vector norms may be found in Dennis and Schnabel (1983), Björck (1996), Lawson and Hanson (1974), Golub and van Loan (1996), and Stewart (1998).

5.1.2 Matrix Pseudo-Inverse

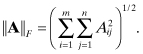

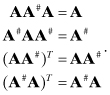

When matrix A is square and nonsingular, the equation Ax = b can be solved for x using matrix inversion (x = A−1b). When A is rectangular or singular, A does not have an inverse, but Penrose (1955) defined a pseudo-inverse A# uniquely determined by four properties:

(5.1-10)

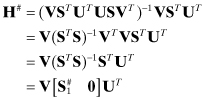

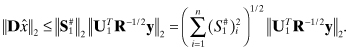

This Moore-Penrose pseudo-inverse is of interest in solving least-squares problems because the normal equation solution ![]() for y = Hx + r uses a pseudo-inverse; that is, H# = (HTH)−1HT is the pseudo-inverse of H. Notice that when H has more rows than columns (the normal overdetermined least-squares case), the pseudo-inverse (HTH)−1HT does not provide an exact solution—only the closest solution in a least-squares sense. This pseudo-inverse can be also written using the SVD of H

for y = Hx + r uses a pseudo-inverse; that is, H# = (HTH)−1HT is the pseudo-inverse of H. Notice that when H has more rows than columns (the normal overdetermined least-squares case), the pseudo-inverse (HTH)−1HT does not provide an exact solution—only the closest solution in a least-squares sense. This pseudo-inverse can be also written using the SVD of H

![]()

where U is an m × m orthogonal matrix, V is an n × n orthogonal matrix, and S = [S1 0]T is an m × n upper diagonal matrix of singular values (S1 is an n × n diagonal matrix). The pseudo-inverse is

(5.1-11)

where ![]() is the pseudo-inverse of S1. This equation shows that a pseudo-inverse can be computed even when HTH is singular (the underdetermined least-squares case). Singular values that are exactly zero are replaced with zeroes in the same locations when forming

is the pseudo-inverse of S1. This equation shows that a pseudo-inverse can be computed even when HTH is singular (the underdetermined least-squares case). Singular values that are exactly zero are replaced with zeroes in the same locations when forming ![]() . The term “pseudo-inverse” often implies an inverse computed for a rank deficient (rank < min(m,n)) H matrix rather than a full-rank inverse such as (HTH)−1HT. In this case the pseudo-inverse provides the minimal norm solution, as will be discussed in Section 5.8.

. The term “pseudo-inverse” often implies an inverse computed for a rank deficient (rank < min(m,n)) H matrix rather than a full-rank inverse such as (HTH)−1HT. In this case the pseudo-inverse provides the minimal norm solution, as will be discussed in Section 5.8.

5.1.3 Condition Number

Another useful matrix property describes the sensitivity of x in

to perturbations in A and b; that is, the magnitude of δx due to perturbations δA and δb in

(5.1-13)

![]()

Note that A can be a square matrix, but this is not a requirement. The following analysis of the problem is from Dennis and Schnabel (1983, p. 52), which is based on Wilkinson’s (1965) backward error analysis. First consider the effects of perturbation δb such that x + δx = A−1(b + δb) or

(5.1-14)

![]()

This equation applies for a square nonsingular A, but it also applies if A−1 is interpreted as a pseudo-inverse of a full-rank rectangular A. Using equation (5.1-7),

(5.1-15)

![]()

Equation (5.1-7) can also be used on equation (5.1-12) to obtain

(5.1-16)

![]()

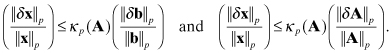

Merging the two equations yields

(5.1-17)

![]()

Similarly for perturbation δA, (A + δA)(x + δx) = Ab, or ignoring the δAδx term,

(5.1-18)

![]()

Using (5.1-8),

(5.1-19)

![]()

or

(5.1-20)

![]()

In both cases the relative sensitivity is bounded by ![]() A

A![]()

![]() A

A![]() −1, which is called a condition number of A and is denoted as

−1, which is called a condition number of A and is denoted as

(5.1-21)

![]()

when using the corresponding lp induced matrix norm for A. Again these equations still apply if A−1 is replaced with A# when A is rectangular and full rank (rank = min(m,n)). Notice that κp(A) is unaffected by scaling of A that multiplies the entire matrix; that is, κp(αA) = κp(A). However, κp(A) is affected by scaling that affects only parts of A.

The effects of numerical errors (due to computational round-off) on minimal norm solutions for x in Ax = b are analyzed using κp(A). It can be shown that most numerical solution techniques limit ![]() δA

δA![]() /

/![]() A

A![]() to a constant (c) times the computer numerical precision (ε), so that

to a constant (c) times the computer numerical precision (ε), so that

![]()

Notice that κp(A) = 1 when A = αI, and κp(A) increases as A approaches singularity.

Example 5.1: Matrix Condition Number for Rank-2 Matrix

First consider

![]()

for which κ1(A) = α/α = 1. We use the l1-norm for convenience. This is an example of a well-conditioned matrix. Now consider

![]()

for which

![]()

Assuming that |β| < 1 (which is a condition for A to be nonsingular), ![]() A

A![]() 1 = 1, and

1 = 1, and ![]() A−1

A−1![]() 1 = 1/(1 − β2), so that

1 = 1/(1 − β2), so that

![]()

Hence as |β| → 1, κ1(A) → ∞. For example, if β = 0.999, κ1(A) = 500.25. It is obvious that solution of x in equation x = A−1b will become increasingly sensitive to errors in either A or b as |β| → 1.

As a third example, consider

![]()

for which κ1(A) = β if |β| > 1. A is clearly nonsingular, but can still have a large condition number when β is large. This demonstrates a problem in treating condition numbers as a measure of “closeness to singularity” or “non-uniqueness.” For κp(A) to be used as a test for observability (a term defined in the next section), matrix A should be scaled so that the diagonal elements are equal. The effects of scaling are often overlooked when condition numbers are used for tests of observability. This issue will be explored further in Section 5.7.

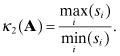

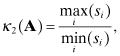

It is generally not convenient or accurate to compute condition numbers by inverting a matrix. Condition numbers can also be computed from the singular values of matrix A. Since A can be decomposed as A = USVT, where U and V are orthogonal, and A−1 = VS−1UT when A is square, then ![]() and

and ![]() where si are the singular values in S. Hence

where si are the singular values in S. Hence

(5.1-22)

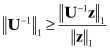

However, it is computationally expensive to evaluate the SVD, so simpler methods are often used. One such method assumes that the A matrix has been transformed to an upper triangular form using an orthogonal transformation; that is, TTA = U where TTT = I and U is upper triangular. (This QR transformation is discussed in Section 5.3.) Since T is orthogonal, ![]() A2

A2![]() =

= ![]() U

U![]() 2 and

2 and ![]() A

A![]() F =

F = ![]() U

U![]() F. Bierman’s Estimation Subroutine Library (ESL) subroutine RINCON.F—used for examples in this chapter—computes the condition number

F. Bierman’s Estimation Subroutine Library (ESL) subroutine RINCON.F—used for examples in this chapter—computes the condition number

(5.1-23)

![]()

using the Frobenius norm because RINCON explicitly inverts U. κF(U) may be larger than κ2(U), although it is usually quite close.

Computation of κ2(U) without using the SVD depends upon computation of the matrix l2-norm, which is difficult to compute. Thus alternatives involving the l1-norm are often used. For the l1-norm of n × n matrix A,

(5.1-24)

![]()

In practice it is normally found that κ1(U) ≈ κ1(A), as will be shown in a later example. An estimate of κ1(U) can be computed using a method developed by Cline, Moler, Stewart, and Wilkinson (1979), and is included in the LINPACK linear algebra library (Dongarra et al. 1979). First, ![]() (where u:j is column j of U) is computed. Then a bound on

(where u:j is column j of U) is computed. Then a bound on ![]() U−1

U−1![]() 1 is computed from the inequality

1 is computed from the inequality

for any nonzero z. The appropriate z is chosen by solving UTz = e, where e is a vector of ±1’s with the signs chosen to maximize |zi| for i = 1, 2,…, n in sequence. Then Uy = z is solved for y (i.e., y = U−1U−Te), and the estimated κ1(U) is evaluated as

(5.1-25)

![]()

As noted by Dennis and Schnabel (1983, p.55) and Björck (1996, p. 116), even though ![]() y

y![]() 1/

1/![]() z

z![]() 1 is only a lower bound on

1 is only a lower bound on ![]() U−1

U−1![]() 1, it is usually close. Another κ1(U) estimator developed by Hagar and Higham is found in LAPACK (Anderson et al. 1999). This algorithm does not require transforming A to upper triangular form. It only requires computation of products Ax and ATx where x is a vector. Björck (1996, p. 117) claims that the estimates produced by this algorithm are sharper than those of the LINPACK algorithm.

1, it is usually close. Another κ1(U) estimator developed by Hagar and Higham is found in LAPACK (Anderson et al. 1999). This algorithm does not require transforming A to upper triangular form. It only requires computation of products Ax and ATx where x is a vector. Björck (1996, p. 117) claims that the estimates produced by this algorithm are sharper than those of the LINPACK algorithm.

5.1.4 Observability

Previous chapters have generally assumed that the system y = Hx + r is observable, but this term has not been clearly defined. Theoretical observability is defined in several ways that are equivalent:

1. The rank of the m × n matrix H must be n.

2. H must have n nonzero singular values.

3. The rows of H must span the n-dimensional vector space such that any n-vector can be represented as

![]()

where hi: is row i of H.

4. The eigenvalues of HTH must be greater than zero.

5. The determinant of HTH must be greater than zero.

6. HTH must be positive definite; that is, xTHTHx > 0 for all nonzero x.

7. It must be possible to uniquely factor HTH as LLT (lower triangular) or UUT (upper triangular) where all diagonals of L or U are nonzero.

8.

![]() (where A = HTH) for i ≠ j is a necessary but not sufficient condition for observability.

(where A = HTH) for i ≠ j is a necessary but not sufficient condition for observability.

When the rank of H is less than n (or any of the equivalent conditions), the system is unobservable; that is, HTH of the normal equations is singular and a unique solution cannot be obtained. However, even when x is theoretically observable from a given set of measurements, numerical errors may cause tests for the conditions listed above to fail. Conversely, numerical errors may also allow tests for many of these conditions to pass even when the system is theoretically unobservable. Hence numerical observability may be different from theoretical observability. The matrix condition number is one metric used to determine numerical observability. Practical tests for observability are addressed in Section 5.7.

5.2 NORMAL EQUATION FORMATION AND SOLUTION

5.2.1 Computation of the Normal Equations

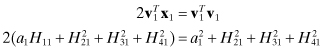

The normal equation for the weighted least-squares case of equation (4.2-3) is

(5.2-1)

![]()

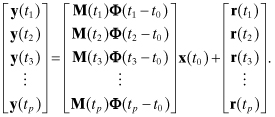

When the number of scalar measurements (m) in y is much larger than the number of unknowns (n) in x—which is usually the case—and explicit or implicit integration is required to compute H, it is generally most efficient to accumulate information in the normal equations individually for each measurement or set of measurements with a common time. That is, the measurement loop is the outer loop in forming b = HTR−1y and A = HTR−1H. For generality we assume that the state vector x is referenced to an epoch time t0, and that measurements y(ti) at other times ti for i = 1, 2, 3,…, p are modeled as the product of a measurement sensitivity matrix M(ti) and the state transition matrix from time t0 to ti; that is,

y(ti) may be a scalar or a vector, but the sum of the number of measurements for all p times is assumed to be m. If the independent variable in the model is not time but simply a measurement index, equation (5.2-2) still applies, but Φ(ti − t0) = I. In either case the total set of all measurements is represented as

(5.2-3)

The most commonly used software structure for solving the least-squares normal equation is:

where R(ti)−1/2 is an inverse “square-root” factor of the measurement noise covariance matrix R(ti) at time ti; that is, R(ti) = R(ti)1/2R(ti)T/2 and R(ti)−1/2 = (R(ti)1/2)−1. The notation b ⇐ b + c means that b + c replaces b in memory. In most cases R(ti) is assumed to be a diagonal matrix with diagonal elements in row i equal to ![]() . Thus formation of M(ti)Φ(ti − t0)R(ti)−1/2 just involves dividing each row of M(ti)Φ(ti − t0) by σi(ti). If R(ti) is not diagonal, R(ti)1/2 is usually a lower triangular Cholesky factor of R(ti).

. Thus formation of M(ti)Φ(ti − t0)R(ti)−1/2 just involves dividing each row of M(ti)Φ(ti − t0) by σi(ti). If R(ti) is not diagonal, R(ti)1/2 is usually a lower triangular Cholesky factor of R(ti).

Notice that the total number of floating point operations (flops) required to accumulate the n × n matrix A and n-element vector b in equation (5.2-4) is mn(n + 3)/2 if only the upper triangular partition of A is formed (as recommended). It will be seen later that alternate algorithms require more than mn(n + 3)/2 flops. It will also be seen that the general structure of equation (5.2-4) can be applied even when using these alternate algorithms.

An example demonstrates how the special structure of certain partitioned problems can suggest alternate solution approaches.

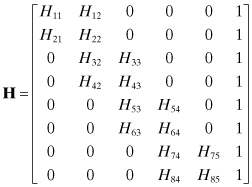

Example 5.2: Partitioned Orbit Determination

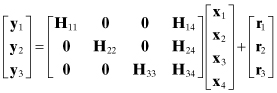

In some least-squares problems a subset of model states contribute to all measurements while other states influence only selected subsets of the measurements. For example, in orbit determination of a low-earth-orbiting satellite, ground station tracking biases only affect measurements from that ground station. For a case in which three ground stations track a satellite, the measurement sensitivity matrix H could have the structure

where

| y1 | includes all measurements from station #1 (for all passes), |

| y2 | includes all measurements from station #2, |

| y3 | includes all measurements from station #3, |

| x1, x2, x3 | are the measurement biases for ground stations 1, 2, and 3, respectively, |

| x4 | are the common states such as the satellite epoch orbit states and any other force model parameters, |

| Hij | is the measurement sensitivity matrix for measurement set i with respect to states j, |

| r1, r2, r3 | are the measurement noises for the three stations. |

Notice that this numbering scheme does not make any assumptions about measurement time order.

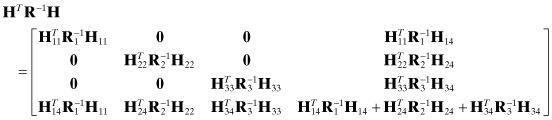

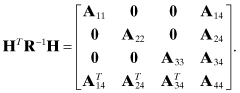

The information matrix for this problem is

and the information vector is

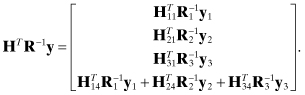

The zero partitions in HTR−1H allow the normal equation solution ![]() to be obtained in partitions without inverting the entire matrix. Specifically, for the case

to be obtained in partitions without inverting the entire matrix. Specifically, for the case

![]() can be solved for

can be solved for ![]() in partitions, starting with the common parameters. The partitioned solution is easily determined to be

in partitions, starting with the common parameters. The partitioned solution is easily determined to be

![]()

![]()

![]()

![]()

where

![]()

is the error covariance for ![]() . Likewise

. Likewise

![]()

![]()

![]()

are the error covariances for ![]() , respectively. Computation of the covariances between

, respectively. Computation of the covariances between ![]() , and

, and ![]() are left as an exercise for the reader. Hint: see the partitioned matrix solution in Appendix A.

are left as an exercise for the reader. Hint: see the partitioned matrix solution in Appendix A.

This example shows how it is possible to solve separately for subsets of states when some subsets only influence subsets of the measurements. This is a great advantage when the number of states and subsets is very large because it is only necessary to compute and store information arrays for the nonzero partitions. For example, when computing earth gravity field models, thousands of satellite arcs from multiple satellites are processed, and the orbit elements from each arc are solved for as separate states. This would not be practical without partitioning. For problems with a large number of states and ground stations (or equivalent “pass-dependent” parameters), the savings in computations and memory usage may be orders of magnitude.

Block partitioning of the measurement matrix also appears in other systems when the states are spatially distributed parameters and either the force model or measurement models have a limited spatial extent. Finite difference and finite element models of physical systems are another example of this structure.

Although the partitioned solution works best when using the normal equations, it is sometimes possible to exploit block partitioned structure when using some of the other least-squares solution techniques described below. An example appears in Section 5.3.

5.2.2 Cholesky Decomposition of the Normal Equations

In this section we address solution of the least-squares normal equations or equations that are equivalent to the normal equations. Consider the weighted least-squares normal equations of equation (4.2-3)

or for the Bayesian estimate,

Solution techniques for the two equations are identical because equation (5.2-6) can be viewed as equation (5.2-5) with the a priori estimate xa treated as a measurement. Hence we concentrate on equation (5.2-5). This can be solved by a number of methods. For example, Gauss-Jordan elimination can be used to transform (HTR−1H) to the identity matrix. This is done by computing both (HTR−1H)−1 and (HTR−1H)−1HTR−1y in one operation, or (HTR−1H)−1HTR−1y can be computed without inverting the matrix (Press et al. 2007, Section 2.1). It is important that pivoting be used in Gauss-Jordan elimination to maintain accuracy—particularly when HTR−1H is poorly conditioned. Another approach uses the LU decomposition to factor HTR−1H = LU where L is a lower triangular matrix and U is an upper triangular matrix. Then the L and U factors can be easily inverted to form P = (HTR−1H)−1 = U−1L−1. With partial pivoting, this has approximately the same accuracy as Gauss-Jordan elimination with full pivoting, but does not take advantage of the fact that HTR−1H is a positive definite symmetric matrix.

Cholesky decomposition of a positive definite symmetric matrix is similar to the LU decomposition but with the constraint that L = UT. That is,

(5.2-7)

![]()

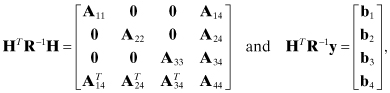

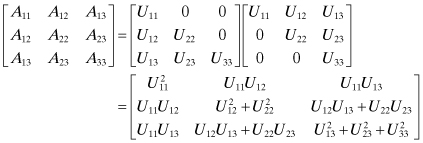

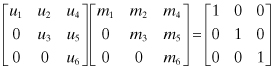

Similar factorizations can also be defined using UUT where U is upper triangular, or as UDUT where U is unit upper triangular (1’s on the diagonal) and D is a diagonal matrix of positive values. The UDUT factorization has the advantage that square roots are not required in the computation. However, we work with UTU because that is the usual definition of Cholesky factors. The factorization can be understood using a three-state example.

Example 5.3: Cholesky Factorization

Consider the Cholesky factorization of a 3 × 3 positive definite symmetric matrix A = UTU:

(5.2-8)

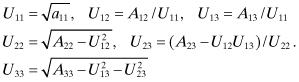

It is evident that the U elements can be computed starting from the upper left element:

(5.2-9)

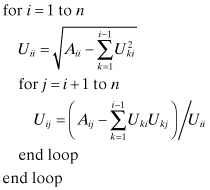

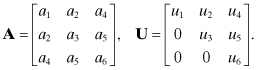

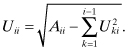

The general algorithm for a n × m matrix is

Note that the elements of U and the upper triangular portion of A can occupy the same storage locations because Aij is never referenced after setting Uij. The factorization can also be implemented in column order or as vector outer products rather than the row order indicated here (Björck 1996, Section 2.2.2).

The stability of the Cholesky factorization is due to the fact that the elements of U must be less than or equal to the diagonals of A. Note that

![]()

Hence numerical errors do not grow and the pivoting used with other matrix inversion methods is not necessary. However, Björck (1996, Section 2.7.4) shows that pivoting can be used to solve unobservable (singular) least-squares problems when HTR−1H is only positive semi-definite.

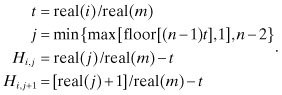

In coding Cholesky and other algorithms that work with symmetric or upper triangular matrices, it is customary to store these arrays as upper-triangular-by-column vectors such that

Use of vectors for array storage cuts the required memory approximately in half, which is beneficial when the dimension is large. For this nomenclature, the Cholesky algorithm becomes

(5.2-11)

where floor(x) is the largest integer less than or equal to x. Again the elements of U and A can occupy the same storage locations.

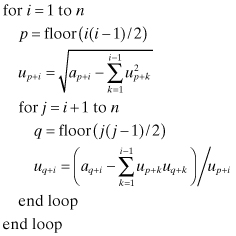

To invert U, the equation UM = I is solved for M where M = U−1 is an upper triangular matrix; that is,

which leads to

Again M and U may occupy the same storage. We leave it as an exercise for the reader to develop a general algorithm for computing M = U−1 and then forming A−1 = U−1U−T. This can also be implemented using the same storage as A. Hint: Subroutine SINV in software associated with this book and LAPACK routine DPOTF2 implement this algorithm, although the indexing can be somewhat confusing.

The number of flops required to Cholesky factor A = UTU is approximately n3/6, where a multiplication or division plus an addition or subtraction are counted as a single flop. n3/6 flops are also required to invert U, and the same to form A−1 = U−1U−T. Hence the total number of flops to invert A is about n3/2. This is half of the ∼n3 operations required to implement inversion based on LU decomposition or Gauss-Jordan elimination. However, since m is generally much greater than n, the flops required to invert HTR−1H are small compared with flops for computing HTR−1H and HTR−1y.

Use of the Cholesky factorization to invert HTR−1H has the potential advantage of greater accuracy compared with the LU or Gauss-Jordan elimination algorithms. However, the accuracy of the LU and Gauss-Jordan elimination can equal that of Cholesky factorization when full pivoting is used. Cholesky factorization is insensitive to scaling issues even without pivoting. That is, the states estimated using the least-squares method can be scaled arbitrarily with little effect on the numerical accuracy of the matrix inversion. This is demonstrated shortly in Example 5.4.

Example 5.4: Least Squares Using a Polynomial Model

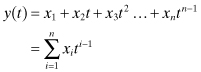

Use of the polynomial model

for least-squares data fitting results in a poorly conditioned solution when n values are not “small.” We evaluate the loss of accuracy for this polynomial model using 101 measurement samples separated in time by 0.01 s over the interval 0 to 1.0 s. For simplicity, the measurement noise variance is assumed to be 1.0, so R = I. The measurement matrix H for this case is

This structure, consisting of integer powers of variables, is called a Vandermonde matrix. Conditioning of Vandermonde matrices is very poor for even moderate values of n, and it is critical that calculations be performed in double precision. If possible, the problem should be restructured to use orthogonal basis functions such as Chebyshev polynomials. However, the polynomial problem offers a good test case for least-squares algorithms.

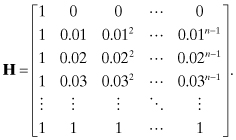

Notice that for the H matrix defined above,

HTH and HTy for this particular problem can be efficiently calculated as shown using sums of powers of ti. However, we use the method described in Section 5.2.1.

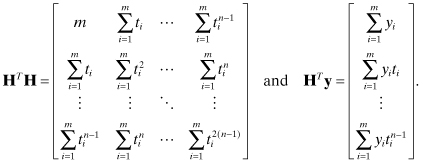

When evaluating accuracy of the solution, the quantity of most interest is the error in the estimated state:

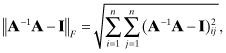

The first term should be zero and the second is the error due to measurement noise. The extent to which (HTH)−1(HTH) − I ≠ 0 is a measure of numerical errors in forming and inverting the normal equations. We use two metrics to evaluate accuracy of the polynomial solution. The first is the Frobenius norm,

where A = HTH. The second metric is the induced l1-norm

![]()

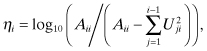

The base 10 logarithm of these metrics is computed as function of n when using double precision (53 bits mantissa) in IEEE T_floating format on a PC. We also tabulate expected precision, calculated as ![]() , computed during the Cholesky factorization of HTH.

, computed during the Cholesky factorization of HTH.

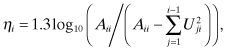

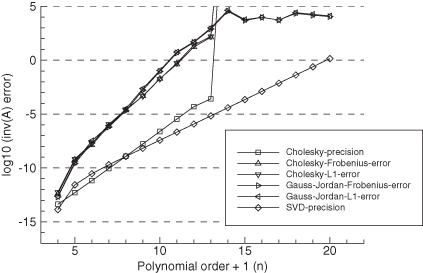

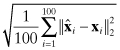

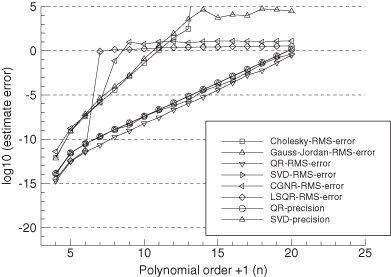

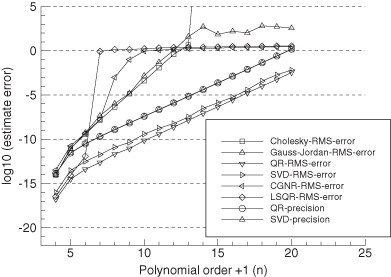

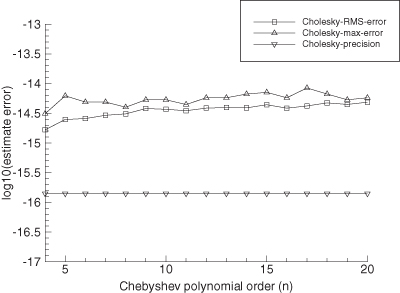

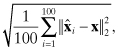

Figure 5.1 shows the results when using Cholesky factorization and Gauss-Jordan elimination with full pivoting to invert HTH. Figure 5.2 is a similar plot obtained when the first four columns of H are scaled by 100. Several conclusions can be drawn from these results:

1. Even with 101 measurements, no normal equation method will work for n > 13.

2. The Cholesky inversion is slightly more accurate than Gauss-Jordan elimination with full pivoting, but the difference is negligible. Gauss-Jordan elimination will continue to compute solutions for n > 13 even though all precision is lost.

3. The Cholesky precision calculation for number of digits lost,

tends to underestimate the actual loss of precision by a factor of about 10 for ηi < 8, and a factor of 104 as the matrix approaches singularity. In fact, the actual loss of precision seems to follow

although the reason for the factor of 1.3 is unknown. Nonetheless, ![]() is still a useful indicator of observability problems as it loosely tracks the loss of precision. Any solution where

is still a useful indicator of observability problems as it loosely tracks the loss of precision. Any solution where ![]() is greater than eight should be highly suspect.

is greater than eight should be highly suspect.

4. The “SVD precision” line in the figures is the expected precision, calculated as log10[1.1 × 10−16 × κ2(H)] from the singular values of H. Since formation of HTH doubles the condition number, the expected precision of the normal equations is log10(1.1 × 10−16) + 2 log10(κ2(H)), which has twice the slope of the “SVD precision” line. This roughly matches the actual “Cholesky” or “Gauss-Jordan” inversion accuracy up to the point where they lose all precision. Hence the precision predicted from the condition number of H is appropriate for the normal equation solution.

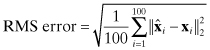

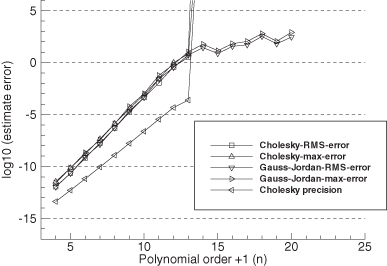

5. Scaling of the first four columns of the H matrix by 100 degrades the accuracy of the matrix inversion by the same factor. However, it has negligible effect on calculation of ![]() . This result is somewhat misleading because multiplying the columns of H by 100 implies that the corresponding elements of x must be divided by 100 if y is unchanged. Hence the fact that the errors increased by 100 in Figure 5.2 implies that the Cholesky factorization is relatively insensitive to scale changes.

. This result is somewhat misleading because multiplying the columns of H by 100 implies that the corresponding elements of x must be divided by 100 if y is unchanged. Hence the fact that the errors increased by 100 in Figure 5.2 implies that the Cholesky factorization is relatively insensitive to scale changes.

FIGURE 5.1: Least-squares normal equations inversion accuracy using polynomial model.

FIGURE 5.2: Least-squares normal equations inversion accuracy using polynomial model with H(:,1:4) multiplied by 100.

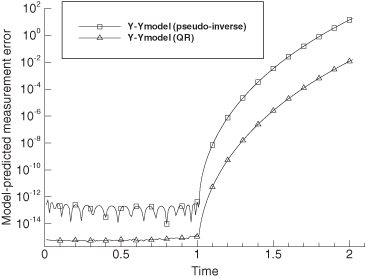

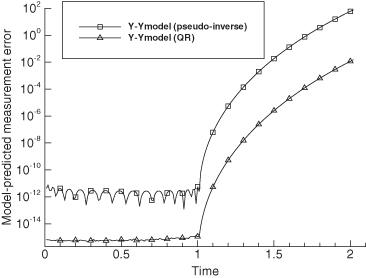

Monte Carlo evaluation is also used as an alternate check on numeric accuracy. One hundred independent samples of state vector x were randomly generated where each individual element of x was obtained from a Gaussian random number generator using a standard deviation of 1.0. Then y = Hx (with no measurement noise) was used to calculate ![]() . The two metrics used for the evaluation are averaged values of the l2- and l∞-norms:

. The two metrics used for the evaluation are averaged values of the l2- and l∞-norms:

and

![]()

where ![]() is the maximum absolute value of the elements in

is the maximum absolute value of the elements in ![]() over all samples of

over all samples of ![]() . Figures 5.3–5.4 show the results plotted on a log scale. The root-mean-squared (RMS) error is approximately equal to the Frobenius norm of A−1A − I in Figures 5.1 and 5.2 for the same conditions, and the maximum error is approximately equal to the l1-norm of A−1A − I. This suggests that numerical errors in (HTH)−1(HTH) are positively correlated rather than independent. Also notice that the RMS errors in

. Figures 5.3–5.4 show the results plotted on a log scale. The root-mean-squared (RMS) error is approximately equal to the Frobenius norm of A−1A − I in Figures 5.1 and 5.2 for the same conditions, and the maximum error is approximately equal to the l1-norm of A−1A − I. This suggests that numerical errors in (HTH)−1(HTH) are positively correlated rather than independent. Also notice that the RMS errors in ![]() are about 20 to 4000 times larger than

are about 20 to 4000 times larger than ![]() (labeled “Cholesky precision”). Again when columns of H are scaled by 100, the errors are about 100 times larger than when unscaled.

(labeled “Cholesky precision”). Again when columns of H are scaled by 100, the errors are about 100 times larger than when unscaled.

FIGURE 5.3: Monte Carlo evaluation of LS normal equations solution accuracy using polynomial model.

FIGURE 5.4: Monte Carlo evaluation of LS normal equations solution accuracy using polynomial model with H(:,1:4) multiplied by 100.

Another advantage of the Cholesky factorization is that it automatically provides information about numerical conditioning of HTR−1H. Notice the second line in equation (5.2-10):

When ![]() is very close in magnitude to Aii, the subtraction will result in a loss of precision. This happens when HTR−1H is nearly singular. The metric

is very close in magnitude to Aii, the subtraction will result in a loss of precision. This happens when HTR−1H is nearly singular. The metric

indicates the number of numerical digits lost when processing column i. The maximum ηi for i = 1 to n is somewhat correlated with log10(“condition number”) for matrix HTR−1H. Hence when the number of digits lost approaches half of the total floating point precision digits, the accuracy of the solution should be suspect. The following example demonstrates these concepts.

5.3 ORTHOGONAL TRANSFORMATIONS AND THE QR METHOD

As noted previously, solution of the normal equations is not the only method that can be used to compute least-squares estimates. Previous sections used the normal equations because they are easily understood and often used in practice. However, the normal equations are more sensitive to the effects of numerical round-off errors than alternative algorithms. This can be understood by expanding the information matrix using the SVD of matrix L−1H where R = LLT:

Notice that equation (5.3-1) is an eigen decomposition of HTR−1H since V is an orthogonal matrix and STS is the diagonal matrix of eigenvalues. Hence the condition number for HTR−1H is twice as large as that for L−1H since the singular values are squared in STS. Thus a least-squares solution technique that does not form HTR−1H would be expected to have approximately twice the accuracy of a solution that inverts (directly or indirectly) HTR−1H.

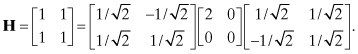

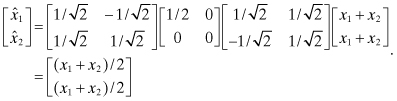

One solution method that avoids formation of the normal equations is based on the properties of orthogonal transformations. To use this QR method (originally developed by Francis [1961] for eigen decomposition), it is necessary that the measurement equation be expressed as y = Hx + r where E[rrT] = I. If E[rrT] = R ≠ I, first factor R as R = LLT and then multiply the measurement equation by L−1 as

![]()

so that E[L−1rrTL−T] = I. In most cases R is diagonal so this normalization step just involves dividing the rows of y and H by the measurement standard deviation for each measurement. Then the QR algorithm proceeds using L−1y as the measurement vector and L−1H as the measurement matrix. For simplicity in notation, we assume that the normalization has been applied before using the QR algorithm, and we drop the use of L−1 in the equations to follow. It should be noted that when measurement weighting results in a “stiff system” (one in which certain linear combinations of states are weighted much more heavily than others), additional pivoting operations are necessary in the QR algorithm to prevent possible numerical problems (Björck 1996, Section 2.7.3). Other approaches for including the weights directly in the QR algorithm are also possible (Björck 1996, p. 164). This topic is addressed again in Chapter 7 when discussing constraints.

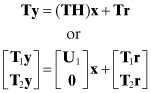

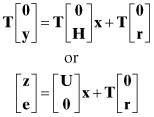

In the QR algorithm the m × n measurement matrix H is factored as H = QTR, or equivalently QH = R, where Q is an m × n orthogonal matrix and R is an upper triangular m × n matrix. Although Q and R are commonly used as the array symbols in the QR algorithm (for obvious reasons), this notation will be confusing in this book because we have already used Q for the discrete state noise covariance matrix and R for the measurement noise covariance. Hence we substitute array T for Q and U for R in the QR algorithm so that H is factored as H = TTU. (The U matrix represents an upper triangular matrix, not the orthogonal matrix of the SVD algorithm.) This implies that the measurement equation is multiplied by T = (TT)−1 (since T is orthogonal) such that TH = U; that is,

(5.3-2)

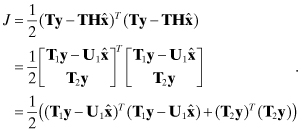

where ![]() , T1 is n × m, T2 is (m − n) × m, and U1 is n × n. The least-squares cost function is unchanged by this transformation because

, T1 is n × m, T2 is (m − n) × m, and U1 is n × n. The least-squares cost function is unchanged by this transformation because

(5.3-3)

This can also be written as

Since the second term in equation (5.3-4) is not a function of ![]() , the value of

, the value of ![]() that minimizes J is

that minimizes J is

where T1y is an n-vector. If H is of rank n, the upper triangular n × n matrix ![]() is easily computed by back-solving

is easily computed by back-solving ![]() . Notice that the cost function is J = (T2y)T(T2y)/2 for the

. Notice that the cost function is J = (T2y)T(T2y)/2 for the ![]() of equation (5.3-5).

of equation (5.3-5).

The error covariance matrix for ![]() is

is

(5.3-6)

![]()

Since T is orthogonal, it does not amplify numerical errors, and the condition number of U1 is the same as that of H. Furthermore, U1 is never squared in the solution, so the condition number does not increase as it does when forming the normal equations. Hence sensitivity to numerical errors is approximately one-half that of the normal equations.

The T matrix is computed using elementary Givens rotations, orthogonal Householder transformations, or Modified Gram-Schmidt (MGS) orthogonalization (see Lawson and Hanson 1974; Bierman 1977b; Dennis and Schnabel 1983; Björck 1996; Press et al. 2007). It will be seen in the next section that it is not necessary to explicitly calculate T.

5.3.1 Givens Rotations

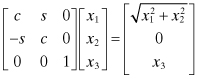

Givens rotations are defined as

![]()

where c2 + s2 = 1. The coefficients can be interpreted as cosine and sine functions of an angle, but trigonometric functions are not required. A Givens rotation can be applied to zero an element of a vector. For example

when ![]() and

and ![]() . Individual Givens rotations can be sequentially applied to zero all elements in a column of the H matrix below the diagonal where only the diagonal element is modified at each step. Then this sequence of operations is applied for remaining columns until TH = U where T represents the product of all individual rotations. Since the individual rotations do not modify the magnitude of the H columns, the transformation is numerically stable. Givens rotations are typically used to modify a few elements of a matrix or vector. While Givens rotations can be used to triangularize matrices, Householder transformations require one-half or fewer flops compared with “fast” versions of Givens rotations (Björck 1996, p. 56; Golub and Van Loan 1996, p. 218). However, Givens rotations offer more flexibility in zeroing specific sections within a matrix, and can be less sensitive to scaling problems (Björck 1996).

. Individual Givens rotations can be sequentially applied to zero all elements in a column of the H matrix below the diagonal where only the diagonal element is modified at each step. Then this sequence of operations is applied for remaining columns until TH = U where T represents the product of all individual rotations. Since the individual rotations do not modify the magnitude of the H columns, the transformation is numerically stable. Givens rotations are typically used to modify a few elements of a matrix or vector. While Givens rotations can be used to triangularize matrices, Householder transformations require one-half or fewer flops compared with “fast” versions of Givens rotations (Björck 1996, p. 56; Golub and Van Loan 1996, p. 218). However, Givens rotations offer more flexibility in zeroing specific sections within a matrix, and can be less sensitive to scaling problems (Björck 1996).

5.3.2 Householder Transformations

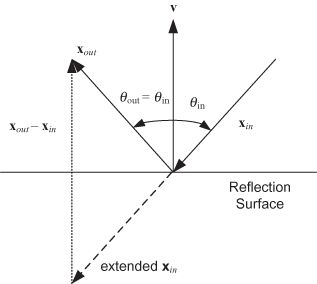

Householder transformations are called reflection operations because they behave like specular reflection of a light ray from a mirror (Fig. 5.5)

FIGURE 5.5: Specular reflection.

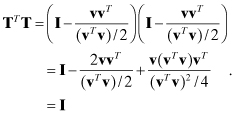

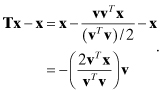

An elementary Householder reflection is defined as

(5.3-7)

![]()

where v is the vector normal to the hyperplane of reflection. It is easily shown that T is orthogonal:

The reflection property can be seen by differencing the output and input vectors,

(5.3-8)

The sign of all components of x in direction v are reversed by the transformation. A sequence of Householder transformations can be used to zero the lower triangle of the measurement H matrix as shown in the example below.

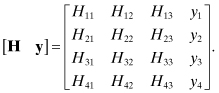

Example 5.5: Use of Householder Transformations to Triangularize an Overdetermined System of Equations

Assume that four measurements are used to solve for three states where the measurement equation is y = Hx + r. We wish to multiply the left- and right-hand side of that equation by an orthogonal transformation T that makes TH an upper triangular matrix. We start by appending the measurement vector y to H to form an augmented measurement matrix:

The first transformation should eliminate H21, H31, and H41. Since the Householder transformation produces output vector

![]()

(where x1 is defined as the first column of H), it is apparent that the last three elements of v1 should equal the last three elements of x1, with ![]() . Let v1 = [a1 H21 H31 H41]T with a1 to be defined such that

. Let v1 = [a1 H21 H31 H41]T with a1 to be defined such that

or

![]()

Thus

![]()

To minimize numerical cancellation, it is better to use the same sign (above) as the sign of H11; that is,

![]()

Thus the transformed first column of H equals

![]()

Notice that this transformation preserves the magnitude of the vector, which is a desirable property for numerical stability. The remaining columns of [H y] are also modified by the transformation using

![]()

where xj is sequentially defined as each remaining column of [H y]. Then the process is repeated to zero the two (modified) elements of the second column below the diagonal using

![]()

Finally the modified H43 is zeroed using

![]()

Notice that each subsequent transformation works with one fewer elements in vj than the preceding transformation (vj−1) since it only operates on H columns from the diagonal down. The three transformations (corresponding to the three columns) are applied as

![]()

to produce the upper triangular matrix U and transformed vector Ty. In implementing this algorithm it is only necessary to store the current vi vector at step i, not the transformation matrix T.

The total number of flops required to triangularize the m × (n + 1) matrix [H y] is approximately n2(m − n/3), which is about twice the flops required to form the normal equation. Hence there is a penalty in using the QR algorithm, but the benefit is greater accuracy.

Example 5.6: Upper Triangular Factors of Sparse Normal Equations

In Example 5.2, the information matrix from the normal equations was assumed to have the structure

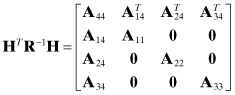

If one attempts to find an upper triangular matrix U such that UUT = HTR−1H, it is found that U will generally not contain zero partitions. However, if the state ordering is changed so that common parameters represented by x4 are moved to the top of the state vector, then the structure is changed to

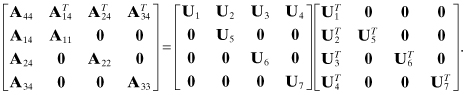

which can be factored as

Hence it is possible to develop a version of the QR algorithm that only operates with the nonzero partitions of U when processing measurements from a given ground station; for example, one need not include U6 and U7 when processing measurements from ground station #1 (corresponding to U5 on the diagonal). However, U1, U2, U3, U4 must be included when processing all measurements that are affected by the common parameters. The unused matrix partitions may be swapped in and out from disk to memory as needed. Implementation of this block partitioning using the QR method is more difficult than for the normal equations, but the savings can still be substantial.

5.3.3 Modified Gram-Schmidt (MGS) Orthogonalization

Another approach that can be used to triangularize a matrix is the Modified Gram-Schmidt (MGS) orthogonalization. In this method, the columns of H are sequentially transformed to a set of orthogonal columns. In the original Gram-Schmidt algorithm, the columns could gradually lose orthogonality due to numerical errors, with consequent loss of accuracy. The modification used in MGS first normalizes the columns at each step, with the result that the numerical errors are bounded. A row-oriented version of MGS for computing the QR decomposition

![]()

where H is m × n, U is n × n, y is m × 1, and z is n × 1 is implemented as follows:

Notice that T is not explicitly formed, but the wk vectors (k = 1 : n) contain the information required to generate T. Björck (1996, p. 65) shows that the MGS QR method applied to least-squares problems is equivalent to the Householder QR method for a slightly modified problem. Rather than use the Householder QR method to directly triangularize the [H y] matrix, it is applied to an augmented matrix with n rows of zeroes added at the top:

![]()

This corresponds to the modified measurement equation

![]()

Then a sequence of Householder transformations, denoted as T, is applied to obtain:

(5.3-9)

where T is an orthogonal (n + m) × (n + m) matrix,

![]()

and U = TH is an upper triangular n × n matrix. A recursive version of this algorithm (where the upper rows may already contain U and z arrays) is presented in Chapter 7 when discussing recursive least squares.

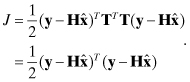

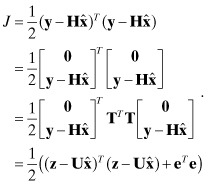

The least squares cost function is not modified by these changes, as shown by

(5.3-10)

Provided that the upper triangular n × n matrix U is of rank n, ![]() minimizes J with the result that J = eTe/2.

minimizes J with the result that J = eTe/2.

Throughout this book we use “Householder QR” to mean the standard Householder QR method, “MGS QR” to mean the MGS/augmented Householder method (since they are equivalent), and “QR” algorithm to refer to either.

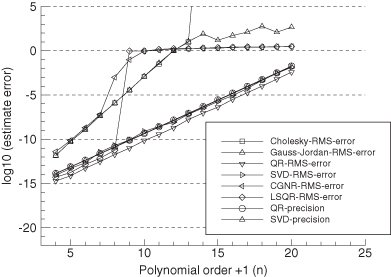

Golub and Van Loan (1996, p. 241) note that the MGS QR method has the same theoretical accuracy bounds as Householder QR, provided that it is implemented as an augmented system so that transformations also operate on y (as described above). However, several investigators have found that the MGS QR method is slightly more accurate than alternative orthogonalization methods (Björck 1996, p. 66), although the reason is not clear. Björck notes that the Householder QR method is sensitive to row permutations of H, whereas MGS is almost insensitive because it zeroes entire columns of H rather than just from the diagonal down. Björck suggests that when using the Householder QR method for weighted least-squares problems, the rows should be sorted in decreasing row norm before factorization, or column pivoting should be used. Unfortunately sorting in decreasing row norm order does not always solve the problem, as will be shown later.

Another benefit of the MGS QR approach is that measurements need not be processed all at once in a single batch, which is desirable (to avoid storing H) when n and m are large. That is, measurements may be processed in small batches (e.g., 100) where the nonzero U and z arrays from one batch are retained when processing the next batch. However, to implement this approach, additional terms must be included in the “row-oriented version of MGS” algorithm listed above. This recursive procedure is, in fact, the measurement update portion of Bierman’s (1977b) Square Root Information Filter (SRIF) that will be discussed in Chapter 10. Recursive updating of the Householder QR method can also be accomplished using Givens rank-1 updates (Björck 1996, p. 132). For more information on orthogonal transformations and the QR algorithm see Lawson and Hanson (1974), Björck (1996), Dennis and Schnabel (1983), Golub and Van Loan (1996), Bierman (1977b), and Press et al. (2007).

5.3.4 QR Numerical Accuracy

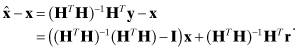

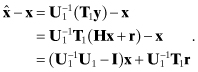

To evaluate the numerical accuracy of the QR algorithm, we again examine the error in the state estimate due to numerical errors only. Using equation (5.3-5),

However, ![]() is likely to underestimate the actual errors since it ignores errors in forming U1. Therefore, Monte Carlo evaluation of

is likely to underestimate the actual errors since it ignores errors in forming U1. Therefore, Monte Carlo evaluation of ![]() , where y = Hx, is deemed a more reliable indicator of numerical errors. Numerical results using the QR algorithm for our example polynomial problem are given later in Section 5.6.

, where y = Hx, is deemed a more reliable indicator of numerical errors. Numerical results using the QR algorithm for our example polynomial problem are given later in Section 5.6.

5.4 LEAST-SQUARES SOLUTION USING THE SVD

Another technique based on orthogonal transformations, the SVD, can also be used to solve the least-squares problem. As before, we start by computing the SVD of the measurement matrix: H = USVT where U is an m × m orthogonal (not upper triangular) matrix, V is an n × n orthogonal matrix, and S is an m × n upper diagonal matrix. When m > n (the overdetermined case), the SVD can be written as

![]()

where U1 is n × n, U2 is (m − n) × n, and S1 is n × n. U2 is the nullspace of H as it multiplies 0. Hence it is not needed to construct a least-squares solution and is often not computed by SVD algorithms. Golub and Van Loan (1996, p. 72) call SVD solutions without the U2 term a “thin SVD.”

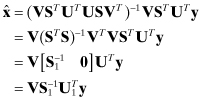

The least-squares solution can be obtained by substituting this replacement for H in the cost function and then setting the gradient to zero. However, it is easier to make the substitution in the normal equations ![]() to obtain:

to obtain:

(5.4-1)

where ![]() is the n × n diagonal matrix of inverse singular values and

is the n × n diagonal matrix of inverse singular values and ![]() is the transpose of the first n columns of U. The error in the least-squares estimate is

is the transpose of the first n columns of U. The error in the least-squares estimate is

![]()

Hence either the magnitude of elements in ![]() or the Monte Carlo statistics on

or the Monte Carlo statistics on ![]() can be used as a measure of numerical accuracy. Numerical results for our polynomial problem are given in Section 5.6.

can be used as a measure of numerical accuracy. Numerical results for our polynomial problem are given in Section 5.6.

The most successful SVD algorithms use Householder transformations to first convert H to a bi-diagonal form (nonzero elements are limited to the diagonal, and either immediately above or below the diagonal). Then iterative or divide-and-conquer techniques are used to converge on the singular values in S. Many methods are based on a routine written by Forsythe et al. (1977, chapter 9), which was based on the work of Golub and Reinsch (see Wilkinson and Reinsch 1971; Dongarra et al. 1979; Stoer and Bulirsch 1980). Since the solution is iterative, the total number of flops varies with the problem. It can be generally expected that the computational time required to obtain an SVD solution will be five or more times greater than the time required to solve the problem using the QR methods, although the ratio will be problem-dependent.

One popular reference (Press et al. 2007) states that the SVD is the method of choice for solving most least-squares problems. That advice may reflect the experience of the authors, but one suspects that their experience is limited to small problems. The arguments for using the SVD are

1. The SVD is numerically accurate and reliable.

2. The SVD automatically indicates when the system of equations is numerically unobservable, and it provides a mechanism to obtain a minimal norm solution in these cases.

3. The SVD provides information on linear combinations of states that are unobservable or weakly observable.

However, the first two arguments are not by themselves convincing (because QR methods also have these properties), and there are several reasons why use of the SVD should be avoided for problems where the number of measurement is large and much greater than the number of unknowns:

1. Multistep algorithms used to compute the SVD may result in an accumulation of numerical errors, and they can potentially be less accurate than the QR algorithm.

2.

In some real problems tens of thousands of measurements are required to obtain an accurate solution with thousands of unknown states. Hence storage of the H matrix may require gigabytes of memory, and computational time for the SVD may be an order of magnitude (or more) greater than for the QR algorithms. If observability is to be analyzed via the SVD, the QR algorithms should first be used to transform the measurement equations to the compact n-element form z = Ux + e. Then the SVD can be used on z and U with no loss of accuracy. This is the method recommended by Lawson and Hanson (1974, p. 290) when mn is large and m ![]() n. Results later in this chapter show that accuracy is enhanced and execution time is greatly reduced when using this procedure.

n. Results later in this chapter show that accuracy is enhanced and execution time is greatly reduced when using this procedure.

3. Most other solution techniques also provide information on conditioning of the solution. Minimal norm solutions in unobservable cases can be computed using other techniques.

4. Use of the SVD to obtain a minimal-norm solution in cases where the system of equations is unobservable implicitly applies a linear constraint on the estimated parameters. That is, the state estimate from the pseudo-inverse is a linear combination of the model states. If the only use of the least-squares model is to obtain smoothed or predicted values of the same quantities that are measured, this is usually acceptable. However, in many problems the states represent physical parameters, and estimation of those states is the goal. Other estimation applications attempt to predict quantities not directly measured, or predict to untested conditions. In these cases it is often not acceptable to compute a minimal-norm (pseudo-inverse) solution when the measurements alone are not sufficient to obtain a solution—this is demonstrated in a later example. Better approaches either obtain more measurements or use prior information.

5.5 ITERATIVE TECHNIQUES

Linear least-squares problems can also be solved using iterative techniques that generally fall into two categories: linear iteration and Krylov space. Iterative methods for solving nonlinear least-squares problems are discussed in Chapter 7. Before addressing iterative approaches, we first review sparse matrix storage methods because iterative methods are mostly used for large sparse systems.

5.5.1 Sparse Array Storage

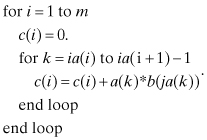

One commonly used sparse array storage method places all nonzero elements of sparse m × n matrix A in a real vector a of dimension p. The column index of elements in a are stored in integer vector ja, and another integer vector ia of dimension m + 1 stores the ja and a indices of the first element belonging to each A row. That is, elements in row i of matrix A will be found in elements ia(i) to ia(i + 1) − 1 of vectors ja and a, where ja(k) specifies the column index for a(k). Hence a matrix-vector product c = Ab can be implemented using this storage scheme as follows:

The transpose product c = ATb is implemented in a slightly different order:

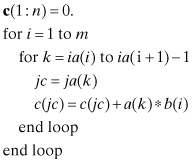

where we have used the MATLAB/Fortran 90 convention for specifying partitions of arrays and vectors; that is, c(1 : n) = c(i), 1 ≤ i ≤ n. Although both of the above matrix-vector multiplications involve some “hopping” in computer memory, compilers effectively optimize the above loops and execution can be efficient. Other sparse storage methods are possible (Björck 1996, p. 270; Anderson et al. 1999; Press et al. 2007, p. 71), and they may be more efficient for operations involving specific types of sparse arrays. Finally it should be noted that matrix-matrix products can be efficient even when sparsity is evaluated “on-the-fly.” For example, a general-purpose version of C = AB, where A is sparse, can be implemented without using sparse storage methods as follows:

where ε is a threshold for nonzero elements (e.g., 10−60 or even 0). Temporary n-element vectors d and ja are used to store the nonzero element values and column indices of each A row. This method becomes somewhat less efficient when B is a vector, but even when A is a full matrix the execution time may only increase slightly compared with a normal matrix product.

5.5.2 Linear Iteration

Iterative techniques can be used to refine an approximate solution of the equation Ax = b. The simplest method is linear iteration. Let ![]() be the exact solution to the normal equations (

be the exact solution to the normal equations (![]() ) and let

) and let ![]() be an approximate solution. Then assuming linearity,

be an approximate solution. Then assuming linearity,

(5.5-1)

![]()

which can be arranged to obtain

The quantity on the right should be evaluated before inverting HTH to obtain ![]() . Iterative refinement of this type is rarely used because accuracy is usually not much better than that of direct normal equation solutions. This statement also applies when HTH is Cholesky factored and the factors are back-solved to compute the solution to equation (5.5-2). The loss of precision in forming HTH is the limiting factor.

. Iterative refinement of this type is rarely used because accuracy is usually not much better than that of direct normal equation solutions. This statement also applies when HTH is Cholesky factored and the factors are back-solved to compute the solution to equation (5.5-2). The loss of precision in forming HTH is the limiting factor.

Other classical iteration techniques include Jacobi, Gauss-Seidel, successive overrelaxation (SOR), and Richardson’s first-order method (Kelley 1995; Björck 1996). These methods split the A matrix in Ax = b as A = A1 + A2 where A1 is a nonsingular matrix constructed so that A1x = (b − A2x) can be easily solved for x.

5.5.3 Least-Squares Solution for Large Sparse Problems Using Krylov Space Methods

Another category of iterative techniques is used to solve large sparse linear least-squares problems when it is not practical to store the entire information matrix HTH in computer memory. HTH generally fills in sparsity present in the original H matrix, and thus the storage requirements can be prohibitive. However, several Krylov space methods can solve the least-squares problem using H without forming HTH.

A Krylov subspace is defined as κk(A,x) = span{x,Ax,A2x,…,Ak−1x} where A is an n × n matrix and x is an n-element vector. Krylov space methods are iterative techniques used to either find the zeros of an affine function Ax − b = 0, or to locate the minimum of a multidimensional cost function J = (y − Hx)T(y − Hx). Krylov space algorithms fall into three classes. The first, conjugate gradient (CG) methods, assume that A is square and positive definite symmetric (Kelley 1995, Section 2.4; Björck 1996, Section 7.4). CG solvers work well with sparse array storage and are often used to solve finite-difference and finite-element problems. Unlike steepest descent methods for minimization problems, they do not take negative gradient steps. Rather, they attempt to take a sequence of steps that are orthogonal to previous steps within the Krylov subspace and in directions that minimize the cost function. As a worst case, CG algorithms should converge exactly when the number of iterations equals the number of unknown states, but if the conditioning is poor, this may not happen because of numerical errors. Furthermore, the convergence rate is unacceptably slow when the number of unknowns is thousands or hundreds of thousands. Conversely, CG algorithms can converge in tens of iterations if the conditioning is good. The bi-CG stabilized (Bi-CGSTAB) algorithm is one of the most successful of the CG methods applicable to general square matrices (see Van der Vorst 1992; Barrett et al. 1993, p.27; Kelley 1995, p. 50). Bi-CGSTAB can also handle square positive definite nonsymmetric A matrices, but convergence may be slower than for symmetric A.

The second class of Krylov space algorithms also takes a sequence of steps that are orthogonal to previous steps, but rather than recursively accumulating information “summarizing” past search directions—as done in CG solvers—these methods store past basis vectors. Because of this, storage requirements increase as iterations proceed. Implementations usually limit the storage to a fixed number of past vectors. Generalized minimum residual (GMRES) and ORTHOMIN are two of the most successful algorithms in this class (see Vinsome 1976; Kelley 1995; Barrett et al. 1993). ORTHOMIN and GMRES also work directly with overdetermined (nonsquare) H matrices to minimize a quadratic cost function.

This author found that the Bi-CGSTAB method converged faster than ORTHOMIN or GMRES when solving steady-state groundwater flow problems of the form Ax − b = 0. However, Bi-CGSTAB requires that matrix A be square, so it can only be used for least-squares problems when starting with the normal equations. This is undesirable because the condition number of HTH is twice that of H, and convergence of CG solvers is a function of the conditioning. Also the sparsity of HTH is generally much less than that of H, so storage and computations may greatly increase.

The third class of Krylov space methods was designed to work directly with least-squares problems. The most popular methods include CG on the normal equations to minimize the residual (CGNR; Barrett et al. 1993, p. 18; Kelley 1995, p. 25; Björck 1996, p. 288) and LSQR (Paige and Saunders 1982). CGNR is also called CGLS by Paige and Saunders. Even though these methods do not form HTH, they are still affected by the doubling of the condition number implied by forming HTH. CGNR and LSQR both use the measurement equations ![]() and HTv = 0, and iterate many times until the residuals are acceptably small. The LSQR algorithm attempts to minimize the effect of conditioning problems by solving the linearized least-squares problem using a Lanczos bi-diagonalization approach. LSQR is claimed to be more numerically stable than CGNR. Storage and computations are generally low, but for many flow-type problems, the performance of the LSQR algorithm without preconditioning appears to be almost identical to that of CG solutions on the normal equations. That is, the iterations tend to stall (do not reduce the residual error) after a few thousand iterations, and they can produce a solution that is unacceptable (Gibbs 1998).

and HTv = 0, and iterate many times until the residuals are acceptably small. The LSQR algorithm attempts to minimize the effect of conditioning problems by solving the linearized least-squares problem using a Lanczos bi-diagonalization approach. LSQR is claimed to be more numerically stable than CGNR. Storage and computations are generally low, but for many flow-type problems, the performance of the LSQR algorithm without preconditioning appears to be almost identical to that of CG solutions on the normal equations. That is, the iterations tend to stall (do not reduce the residual error) after a few thousand iterations, and they can produce a solution that is unacceptable (Gibbs 1998).

The convergence rate of Krylov space methods can be greatly improved if preconditioning is used to transform H or HTH to a matrix in which the eigenvalues are clustered close to 1. This can be implemented as either left or right preconditioning; for example, the root-finding problem Hx = y can be preconditioned as

(5.5-3)

![]()

where SL and SR are the left and right preconditioning matrices. Left preconditioning modifies scaling of the measurement residuals—which may be desirable in poorly scaled problems—while right preconditioning modifies scaling of the state x. Generally either left or right preconditioning is used, but not both. For the least-squares problem, direct preconditioning of HTH is not desirable because this destroys matrix symmetry. Rather, preconditioning is of the form

(5.5-4)

![]()

The goal is to find matrix SL or SR such that ![]() is close to the identity matrix. Preconditioning can either be applied as a first step where SLHSR is explicitly computed, or the multiplication can be included as part of the CG algorithm. Commonly used preconditioners include scaling by the inverse diagonals of HTH (the sum-of-squares of the columns of H), incomplete sparse Cholesky decomposition of the form LLT + E = HTH where E is small, incomplete LU decomposition for square H, or polynomial preconditioning (see Simon 1988; Barrett et al. 1993; Kelley 1995; Björck 1996). Diagonal scaling is generally not very effective and it can sometimes be difficult to compute incomplete Cholesky factors or polynomial preconditioners.

is close to the identity matrix. Preconditioning can either be applied as a first step where SLHSR is explicitly computed, or the multiplication can be included as part of the CG algorithm. Commonly used preconditioners include scaling by the inverse diagonals of HTH (the sum-of-squares of the columns of H), incomplete sparse Cholesky decomposition of the form LLT + E = HTH where E is small, incomplete LU decomposition for square H, or polynomial preconditioning (see Simon 1988; Barrett et al. 1993; Kelley 1995; Björck 1996). Diagonal scaling is generally not very effective and it can sometimes be difficult to compute incomplete Cholesky factors or polynomial preconditioners.

Another alternative to direct application of the CGNR method changes variables and inverts ![]() in partitions, where the partitions of H are implicitly inverted using other methods. This approach is somewhat similar to the red-black partitioning (Dongarra et al. 1991; Barrett et al. 1993, section 5.3) often used in iterative solution of sparse systems, and it can also be interpreted as a form of preconditioning. Numerical conditioning for the CG solver is improved because the dimension of the system to be solved is reduced. For flow and similar models resulting from finite-difference equations, those portions of the H matrix can be (implicitly) inverted efficiently using nonsymmetric preconditioned CG (PCG) solvers such as the Bi-CGSTAB method.

in partitions, where the partitions of H are implicitly inverted using other methods. This approach is somewhat similar to the red-black partitioning (Dongarra et al. 1991; Barrett et al. 1993, section 5.3) often used in iterative solution of sparse systems, and it can also be interpreted as a form of preconditioning. Numerical conditioning for the CG solver is improved because the dimension of the system to be solved is reduced. For flow and similar models resulting from finite-difference equations, those portions of the H matrix can be (implicitly) inverted efficiently using nonsymmetric preconditioned CG (PCG) solvers such as the Bi-CGSTAB method.

The CGNR algorithm applied to equation ![]() is given below. Initial values of the residual and scaled state are computed as

is given below. Initial values of the residual and scaled state are computed as

(5.5-5)

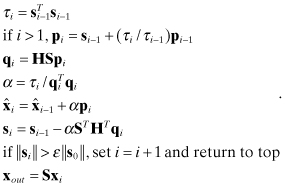

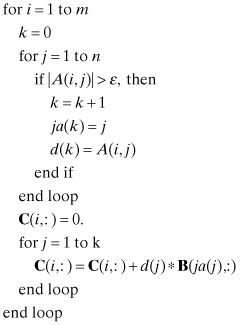

where S is a right preconditioning matrix. Then the CGNR iterations for i = 1, 2, … are

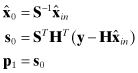

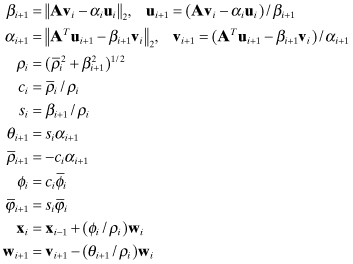

The LSQR algorithm (Paige and Saunders 1982) without preconditioning is initialized as

(5.5-7)

The LSQR iterations for i = 1, 2,… are

(5.5-8)

where ![]() is the residual vector norm

is the residual vector norm ![]() . Iterations stop if any of these tests are satisfied:

. Iterations stop if any of these tests are satisfied:

(5.5-9)

ATOL, BTOL, and CONLIM are user-specified tolerances and cond(A) is an estimate of the condition number of A as explained by Paige and Saunders (1982). To obtain a solution accurate to six digits, suggested values for ATOL and BTOL are 10−6, and the suggested CONLIM is ![]() where RELPR is the relative precision of the computer (e.g., 1.1 × 10−16 for double precision in IEEE T_floating). Preconditioning can be applied to LSQR or CGNR by redefining the A matrix.

where RELPR is the relative precision of the computer (e.g., 1.1 × 10−16 for double precision in IEEE T_floating). Preconditioning can be applied to LSQR or CGNR by redefining the A matrix.

The primary function of an iterative least-squares solver is to obtain a solution faster and with less storage than a direct method such as QR. If the estimate error covariance matrix or log likelihood function is required, one final iteration of a direct method must be used.

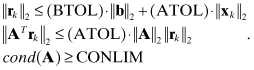

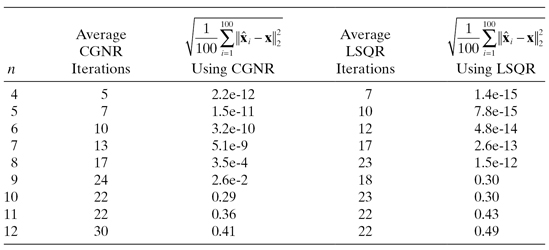

Example 5.7: Groundwater Flow Modeling Calibration Problem

Consider a flow modeling least-squares problem (Porter et al. 1997) consisting of three linearized matrix equations:

where

| δh | are perturbations in hydraulic “head” at each node (n total) of a three-dimensional (3-D) finite difference grid, |

| δk | are perturbations in hydraulic conductivity at each node of a 3-D finite difference grid, |

| δb | are perturbations in p flow common parameters such as boundary conditions, |

| Bh = ∂f/∂h and Bk = ∂f/∂k | are n × n linearizations of the steady-state flow equation f(h,k,b) = 0 where f is the net flow to the nodes, |

| Bb = ∂f/∂b | is a n × p linearization of the steady-state flow equation f(h,k,b) = 0, |

| C | is a “soft” n × n spatial continuity conductivity constraint implemented as a 3-D autoregressive model, |

| v | is the conductivity constraint error at each node, |

| Hh | is the m × n measurement partial matrix (Jacobian) for hydraulic head measurements (each measurement is a function of head at four surrounding nodes), |

| Hk | is the m × n measurement partial matrix for hydraulic conductivity measurements (each measurement is a function of conductivity at four surrounding nodes), |

| Hb | is the m × p measurement partial matrix for hydraulic conductivity measurements with respect to common parameters (usually Hb = 0), and |

| r | is random measurement noise. |

All the above arrays are very sparse because each node connects to six (or less) other nodes. Typically only a few hundred head and conductivity measurements are available for site models consisting of 104 to 106 nodes. Hence the spatial continuity “pseudo-measurement” conductivity constraint is required to obtain a solution.

Since the first (flow) equation is a hard constraint and the square Bh matrix should be nonsingular, ![]() can be substituted to obtain the reduced equations

can be substituted to obtain the reduced equations

![]()

Since square matrix C is formed using an autoregressive model applied to nearest neighbors, it should also be nonsingular. This suggests that C can be used as a right preconditioner to obtain the modified measurement equation

![]()

Notice that the CGNR operations in equation (5.5-6) involving H appear as either (HS)pi or (HS)Tqi—a matrix times a vector. Hence ![]() can be evaluated as follows:

can be evaluated as follows:

1. z = C−1pi is computed using the Bi-CGSTAB solver (use incomplete LU decomposition preconditioning with the same fill as the original matrix, and red-black partitioning),

2. ![]() is computed using the Bi-CGSTAB solver (again use incomplete LU decomposition preconditioning with the same fill as the original matrix, and red-black partitioning),

is computed using the Bi-CGSTAB solver (again use incomplete LU decomposition preconditioning with the same fill as the original matrix, and red-black partitioning),

3. a = Hkz − Hhs.

Likewise ![]() can be evaluated using a similar sequence of operations. The substitution procedure can also be used for terms involving the boundary condition parameters.

can be evaluated using a similar sequence of operations. The substitution procedure can also be used for terms involving the boundary condition parameters.

When using this procedure for the groundwater flow modeling calibration problem, CGNR convergence is usually achieved in less than 100 CGNR iterations even when using 200000 node models (Gibbs 1998). Although this problem is unusual in that the number of actual measurements (not pseudo-measurements) is much smaller than the number of unknowns, the solution techniques can be applied to other 2-D or 3-D problems.

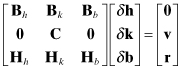

Example 5.8: Polynomial Problem Using CGNR and LSQR Solvers

The CGNR solver was also tested on the polynomial problem of Example 5.4. Right diagonal preconditioning was based on the inverse l2-norms of the columns of H, so the columns of HS were normalized to one. However, the results did not change significantly without preconditioning. Table 5.1 lists the results for CGNR and LSQR when using ε = 10−13 for CGNR and ATOL = BTOL = 10−6 for LSQR. For n < 8, the CGNR solver achieved accuracy comparable to Cholesky solutions of the normal equations, and the LSQR accuracy was almost as accurate as the QR algorithm for n < 9. This is not an entirely fair comparison because coding of the LSQR algorithm (LSQR 1982) was far more sophisticated than the elementary algorithm used for CGNR. For n ≥ 9, both the CGNR and LSQR solutions were unacceptable. (In interpreting the values of Table 5.1, recall that the x samples were generated with a standard deviation of 1.0) These results are compared with the other solution methods in the next section.

TABLE 5.1: CGNR and LSQR Accuracy on Least-Squares Polynomial Problem

Both algorithms may perform better when using alternate preconditioners. We again caution that results for simple problems should not be interpreted as general characteristics applicable to all large-scale problems.

5.6 COMPARISON OF METHODS

We now compare numerical results when using different least-squares methods on the polynomial problem of Example 5.4 and on another problem. Execution times are also discussed.

5.6.1 Solution Accuracy for Polynomial Problem

The following examples discuss solution accuracy for the basic polynomial model of Example 5.4 and minor variations.

Example 5.9: Polynomial Problem

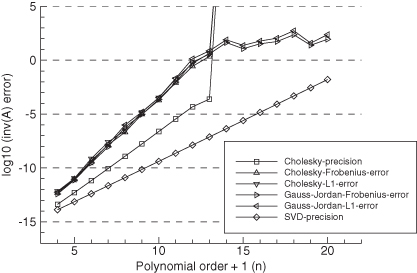

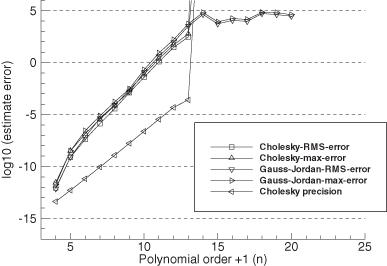

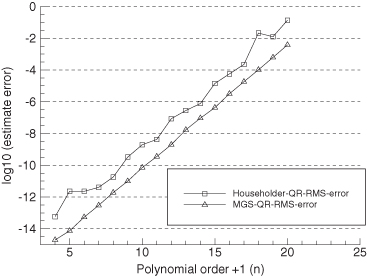

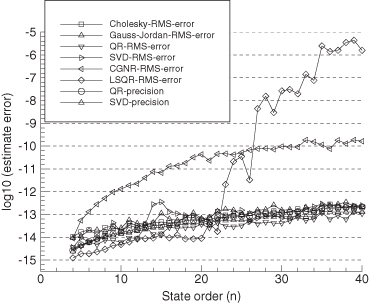

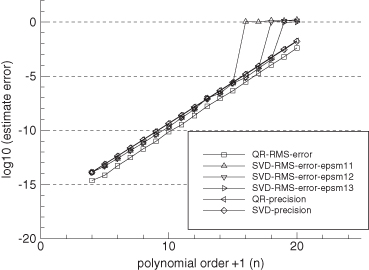

Figure 5.6 shows the 100-sample Monte Carlo RMS solution errors

for six different least-squares solution techniques versus polynomial order. As before, results are plotted on a log scale. Also shown are the accuracies obtained using condition numbers computed as the maximum ratio of singular values, and as κF(U) = ![]() U

U![]() F

F![]() U−1

U−1![]() F, which uses the Frobenius norm of the upper triangular U matrix produced by the QR method and its inverse (as discussed in Section 5.1.3). The plotted variables are labeled as follows:

F, which uses the Frobenius norm of the upper triangular U matrix produced by the QR method and its inverse (as discussed in Section 5.1.3). The plotted variables are labeled as follows:

1. Cholesky-RMS-error: the RMS error obtained when using the normal equations solved using Cholesky factorization.

2. Gauss-Jordan-RMS-error: the RMS error obtained when using the normal equations solved using Gauss-Jordan elimination.

3. QR-RMS-error: the RMS error obtained when using the QR method. Unless stated otherwise, the QR method is implemented using the MGS algorithm.

4. SVD-RMS-error: the RMS error obtained when using SVD. Unless stated otherwise, the SVD results are computed using the LAPACK DGESDD divide-and-conquer routine.

5. CGNR-RMS-error: the RMS error obtained when using CGNR method.

6. LSQR-RMS-error: the RMS error obtained when using LSQR method.

7. QR precision#: the accuracy computed as 1.1e-16 × condition number κF(U) = ![]() U

U![]() F

F![]() U−1

U−1![]() F.

F.

8. SVD precision: the accuracy computed as ![]()

FIGURE 5.6: Monte Carlo evaluation of LS solution accuracy using polynomial model.

The conclusions from Figure 5.6 are:

1. Errors of the normal equation method solved using Cholesky factorization and Gauss-Jordan method are approximately equal in magnitude up to the point at which all precision is lost (n = 13). These errors grow at twice the rate at which the condition numbers κ2(H) or κF(U) grow with polynomial order.

2. The condition number estimates κ2(H) and κF(U) closely agree for this problem. Although not shown in the plot, the LINPACK condition number estimate κ1(U) ≈ κ1(A) closely follows the other two estimates.

3. Errors of the SVD solution closely follow the accuracy computed using the condition numbers κ2(H) and κF(U). When using the LAPACK DGESVD (SVD using QR iteration) subroutine, the errors are similar to those of DGESDD, but those of the numerical recipes (Press et al. 2007) SVDCMP routine are approximately 5.5 (= log10 0.74) times larger.

4. Errors of the MGS QR method are a factor of approximately 3.5 (= log10 0.54) smaller than those of the LAPACK SVD methods. When the QR method is changed to use the Householder QR method, the errors are a factor of 12.6 (= log10 1.10) greater, or about 3.6 times greater than those of the LAPACK SVD methods.

5. When the MGS QR method is first used to transform [H y] to [U z], and then either the LAPACK or Numerical Recipes SVD routine is used on [U z], the estimation errors are comparable to those of the direct MGS QR method; that is, 3.5 times smaller than when the SVD is used directly on H. This is unexpected because the SVD methods first transform H to a nearly upper triangular matrix using Householder transformations.

6. Errors of the CGNR method at first closely follow those of the Cholesky and Gauss-Jordan methods up to n = 7, but then deteriorate rapidly. No indication of algorithm failure (such as reaching the maximum number of iterations) was given.