Let’s consider a classification problem (similar to the ones we have seen so far in the book). While doing all our work, we made a strong assumption without stating it explicitly: we assumed that all the observations are correctly labelled! We cannot say that with certainty. Labelling required manual intervention, and since humans are not perfect, some images are almost certainly classified incorrectly. This is an important revelation.

Consider the following scenario: we are supposed to reach 90% accuracy in a classification problem. We could keep trying to improve the accuracy, but when is it sensible to stop? If your labels are wrong in 10% of the cases, the model, however sophisticated, will never be able to generalize to new data with very high accuracy, since it learned the wrong classes for many images. We spend quite a lot of time checking, preparing, and normalizing the training data, but typically not much time checking the labels themselves. We also typically assume that all classes have similar characteristics. (We will discuss later in this chapter what that exactly means; for the moment an intuitive understanding will suffice.) What if the quality of the images (suppose our inputs are images) is worse for some classes than for others? What if the number of pixels with a gray value different from zero differs dramatically between classes? What if some images are completely blank? What happens in those cases? As you can imagine, we cannot check all the images manually to detect such issues. With millions of images, a manual analysis is simply not possible.

We need a new weapon in our arsenal to spot such cases and to tell how a model is doing. This new weapon is the focus of this chapter and is what is typically called “metric analysis.” People in this field very often refer to this array of methods as “error analysis.” However, this name is a bit confusing, especially for beginners: “error” can refer to too many things, such as bugs in Python code, errors in the methods or in the algorithms, errors in the choice of optimizers, and so on.

You will learn in this chapter how to obtain fundamental information about how your model is doing and how good your data is. You will do this by evaluating your optimizing metric on a set of different datasets that you can derive from your data.

Recall that we discussed, in the case of regression, how MSEtrain ≪ MSEdev indicates a regime of overfitting. Our metric is the MSE, and evaluating it on two datasets, training and dev, and comparing the two values tells us whether the model is overfitting. In this chapter we will expand this methodology to extract much more information from the data and the model.

Human-Level Performance and Bayes Error

In most of the datasets that we use for supervised learning, someone has labelled the observations. Take for example a dataset of classified images. If we ask people to classify all the images (imagine this being possible, regardless of the number of images), the accuracy obtained will never be 100%. Some images may be too blurry to be classified correctly, and people make mistakes. If, for example, 5% of the images cannot be classified correctly because of how blurry they are, we must expect the maximum accuracy people can reach to be at most 95%.

Let’s define the error as ϵ = 1 − accuracy. For example, if we reach an accuracy of 95%, we have ϵ = 1 − 0.95 = 0.05, or, expressed as a percentage, ϵ = 5%.

A useful concept to understand is human-level performance, which can be defined as “the lowest value for the error ϵ that can be reached by a person performing the classification task.” We will indicate it with ϵhlp.

Let’s look at a concrete example. Suppose we have a set of 100 images, and we ask three people to classify them. Imagine that they obtain 85%, 83%, and 84% accuracy. In this case, the human-level performance error will be ϵhlp = 1 − 0.85 = 15%, the error of the best of the three. Note that someone else may be much better at this task, so it’s always important to remember that the value of ϵhlp we get is an estimate and should only serve as a guideline.

Now let’s complicate things a bit. Suppose we are working on a problem in which doctors classify MRI scans into two classes: ones with signs of cancer and ones without. Now suppose we estimate ϵhlp from the results of untrained students (15%), doctors with a few years of experience (8%), experienced doctors (2%), and experienced groups of doctors (0.5%). What is ϵhlp in this case? You should always choose the lowest value you can get, for reasons we will discuss later.

We can now expand the definition of ϵhlp to a second definition:

Human-level performance is “the lowest value for the error ϵ that can be reached by people or groups of people performing the classification task.”

You do not need to decide which definition is right. Just use the one that gives you the lowest value of ϵhlp.
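The definitions of ϵ and ϵhlp above amount to two one-line computations. The following minimal Python sketch applies them to the numbers in the text; the function names are ours, chosen only for illustration.

```python
def error_from_accuracy(accuracy: float) -> float:
    """Error ϵ = 1 - accuracy, both expressed as fractions."""
    return 1.0 - accuracy


def human_level_error(errors: dict) -> float:
    """ϵhlp: the lowest error reached by any person or group of people."""
    return min(errors.values())


# Three people classifying the same 100 images (accuracies from the text)
accuracies = {"person A": 0.85, "person B": 0.83, "person C": 0.84}
eps_people = {name: error_from_accuracy(acc) for name, acc in accuracies.items()}
print(f"{human_level_error(eps_people):.2f}")  # 0.15

# MRI example: errors of the different groups of annotators (from the text)
mri_errors = {
    "untrained students": 0.15,
    "doctors with a few years of experience": 0.08,
    "experienced doctors": 0.02,
    "groups of experienced doctors": 0.005,
}
print(human_level_error(mri_errors))  # 0.005
```

Taking the minimum, rather than an average, is what the second definition asks for: the best result any person or group can reach.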

Now let’s talk a bit about why we must choose the lowest value we can get for ϵhlp. The lowest error that can be reached by any classifier is called the Bayes error, and it’s a very important quantity. We will indicate it with ϵBayes. Usually, ϵhlp is very close to ϵBayes, at least in tasks where humans excel, like image recognition. It is commonly said that the human-level performance error is a proxy for the Bayes error. Normally it’s impossible to evaluate ϵBayes, and therefore practitioners use ϵhlp, which is (relatively) easier to estimate, assuming the two are close. Since no one can classify with an error lower than ϵBayes, every estimate of ϵhlp lies above it, and the lowest one we can find is the closest to it.

Keep in mind that it makes sense to compare the two values, and to assume that ϵhlp is a proxy for ϵBayes, only if people (or groups of people) perform the classification in the same way as the classifier. For example, it’s okay if both use the same images to do the classification. But in the cancer example, if the doctors use additional scans and analyses to make a diagnosis, the comparison is no longer fair, and human-level performance is no longer a proxy for the Bayes error. Doctors, having more data at their disposal, will clearly be better than a model whose only input is the images.

ϵhlp and ϵBayes are close to each other only when the classifications by the humans and by the model are done the same way. So always be sure that is the case before assuming that human-level performance is a proxy for the Bayes error.

Typical values of accuracy that can be reached vs. amount of time invested. At the beginning, it is usually easy to get good accuracy with machine learning, often reaching ϵhlp (indicated by the line in the plot). After that point, progress tends to be very slow.

As long as your model is below human-level performance, you can use techniques such as the following to improve it:

- Get better labels from humans or groups, for example from groups of doctors in the case of medical data, as in our example.
- Get more labelled data from humans or groups.
- Do a good metric analysis to determine the best strategy for getting better results. You will learn how to do this in this chapter.

As soon as your algorithm gets better than human-level performance, you cannot rely on those techniques anymore. It’s important to get an idea of those numbers to decide what to do to get better results. In our MRI example, we could get better labels by relying on sources that are not related to humans, such as checking the diagnosis a few years after the MRI, when it’s clearer whether the patient developed cancer or not. In the case of image classification, you might decide to take thousands of images of specific classes yourself, so that you know their labels. This is not usually possible, but you must be aware that you can get labels by means other than asking humans to perform the same kind of task that your algorithm is performing.

Human-level performance is a good proxy for the Bayes error for tasks that humans excel at, such as image recognition. It can be very far from Bayes error for tasks that humans don't do well.

A Short Story About Human-Level Performance

It is instructive to know about the work that Andrej Karpathy did while trying to estimate human-level performance in a specific case. You can read the entire story in his blog post (a long post, but worth reading) [1]. What he did is extremely informative about human-level performance. Karpathy was involved in the ILSVRC contest (ImageNet Large Scale Visual Recognition Challenge) in 2014 [2]. The task was made up of 1.2 million images (training set) classified in 1000 categories, including objects like animals, abstract objects like a spiral, scenes, and many others. Results were evaluated on a dev dataset. GoogLeNet (a model developed by Google) reached an astounding 6.7% error. Karpathy asked himself: how do humans compare?

The question is a lot more complicated than it may seem at first. Since the images were all classified by humans, shouldn’t ϵhlp be 0%? Well, not really. The images were first obtained with a web search, then filtered and labelled by asking people binary questions (for example, is this a hook or not?).

The images were labelled, as Karpathy mentioned in his blog post, in a binary way. People were not asked to assign each image a class by choosing from the 1000 available, as the algorithms were doing. You may think that this is a technicality, but the difference in how the labelling occurs makes the correct evaluation of ϵhlp quite a complicated matter. So Karpathy set to work and developed a web interface that consisted of an image on the left and the 1000 classes with examples on the right. You can see an example of the interface in Figure 10-2.

The web interface developed by Karpathy. Not everyone finds it fun to look at 120 breeds of dogs to try to classify the dog on the left (by the way, it's a Tibetan Mastiff)

If you have a few hours to spare, try it. You will get a whole new appreciation of the difficulties of evaluating human-level performance. Defining and evaluating human-level performance is a very tricky task. It is important to understand that ϵhlp is dependent on how humans approach the classification task, and is dependent on the time invested, on the patience of the people, and on many factors that are difficult to quantify. The main reason for it being so important, apart from the philosophical aspect of knowing when a machine becomes better than humans, is that it is often taken as a proxy for the Bayes error.

Human-Level Performance on MNIST

A set of digits from the MNIST dataset that are almost impossible to recognize. Such examples are one of the reasons why ϵhlp cannot reach zero.

Bias

Now let’s start with metric analysis: a set of procedures that will give you information on how your model is doing—how good or bad your data is—by looking at your optimized metric evaluated on different datasets.


To start, we need to define a third error: the one evaluated on the training dataset, indicated with ϵtrain.

The first question we want to answer is whether our model is flexible or complex enough to reach human-level performance. In other words, we want to know whether our model has a high bias with respect to human-level performance.

Calculate the error of the model on the training dataset, ϵtrain, and then calculate ΔϵBias = |ϵtrain − ϵhlp|. If this number is not small (bigger than a few percent), then we are in the presence of bias (sometimes called avoidable bias). In other words, our model is too simple to capture the real subtleties of our data. In that case, you can try the following:

- Bigger networks (more layers or neurons)
- More complex architectures (Convolutional Neural Networks, for example)
- Train your model longer (for more epochs)
- Use better optimizers (like Adam)
- Do a better hyper-parameter search (we looked at it in detail in Chapter 7)

Now there is something else you need to understand. Knowing ϵhlp and reducing the bias to reach it are two very different things. Suppose you know ϵhlp for your problem. This does not mean that you need to reach it. It may well be that you are using the wrong architecture, but you may not have the skills required to develop a sufficiently sophisticated network. It may even be that the effort required to reach that error level is prohibitive (in terms of hardware or infrastructure). Always keep your problem’s requirements in mind, and try to understand what is good enough. For an application that recognizes cancer, you may want to invest as much as possible to reach the highest accuracy possible: you do not want to send someone home and discover later that they really do have cancer. On the other hand, if you build a system to recognize cats in web images, you may decide that an error higher than ϵhlp is completely acceptable.

Metric Analysis Diagram

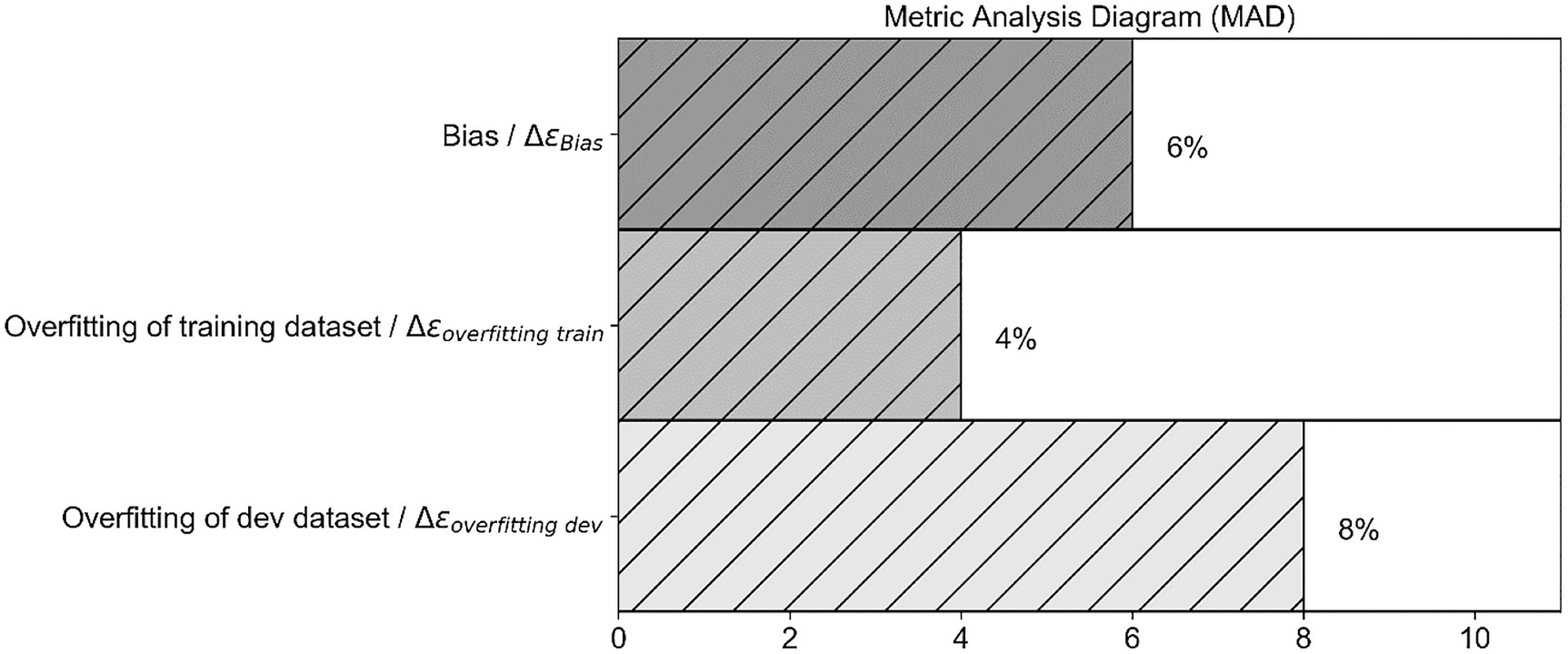

This chapter looks at different problems you will encounter when developing your models and how to spot them. We have looked at the first one: bias, sometimes also called avoidable bias, which can be spotted by calculating ΔϵBias = |ϵtrain − ϵhlp|. By the end of this chapter, you will have a few such quantities that you can calculate to spot problems.

The Metric Analysis Diagram (MAD) with just one of the quantities we encounter in this chapter: ΔϵBias

Training Set Overfitting

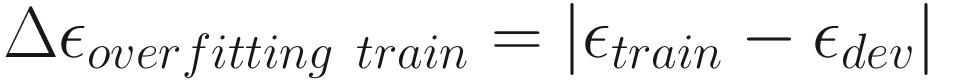

With the quantity Δϵoverfitting train = |ϵtrain − ϵdev|, we can say we are overfitting the training dataset if it is bigger than a few percent.

To summarize, these are the quantities and datasets we have seen so far:

- ϵtrain: the error of our classifier on the training dataset
- ϵhlp: human-level performance (as discussed in the previous sections)
- ϵdev: the error of our classifier on the dev dataset
- ΔϵBias = |ϵtrain − ϵhlp|: measures how much “bias” we have between the training dataset and human-level performance
- Δϵoverfitting train = |ϵtrain − ϵdev|: measures the amount of overfitting of the training dataset
- Training dataset: the dataset we use to train our model (you should know it by now)
- Dev dataset: a second dataset we use to check the overfitting on the training dataset
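The two quantities can be computed directly from three error values. This is a minimal sketch: the 2% threshold standing in for “bigger than a few percent” and the example errors are our own assumptions, not values from the text. Sorting the flagged problems by size mirrors the idea of deciding which one to address first.

```python
def mad_quantities(eps_hlp, eps_train, eps_dev):
    """The MAD quantities defined so far; all errors are fractions."""
    return {
        "bias": abs(eps_train - eps_hlp),
        "overfitting train": abs(eps_train - eps_dev),
    }


def flag_problems(quantities, threshold=0.02):
    """Names of the quantities above the threshold, most severe first.

    threshold=0.02 is an assumed reading of "bigger than a few percent".
    """
    flagged = [name for name, value in quantities.items() if value > threshold]
    return sorted(flagged, key=lambda name: quantities[name], reverse=True)


# Hypothetical errors, not taken from the text
q = mad_quantities(eps_hlp=0.01, eps_train=0.06, eps_dev=0.15)
print(flag_problems(q))  # ['overfitting train', 'bias']
```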

The MAD diagram for our two problems: bias and overfitting of training dataset

In Figure 10-5, you can see the relative severity of the problems we have, and you may decide which one to address first.

If you are overfitting the training dataset, you can try the following:

- Get more data for your training set
- Use regularization (see Chapter 5 for a complete discussion of the subject)
- Try data augmentation (for example, if you are working with images, you can try rotating them, shifting them, etc.)
- Try “simpler” network architectures

As usual, there are no fixed rules, and you must determine which techniques work best on your problem by testing them.

Test Set

Now let’s quickly mention another problem you may find. Let’s recall how you choose the best model in a machine learning project (this is not specific to deep learning, by the way). Suppose you are working on a classification problem. First you decide which optimizing metric you want; suppose you decide to use accuracy. Then you build an initial system, feed it training data, and check how it does on the dev dataset to see whether you are overfitting your training data. You will remember that in previous chapters we often talked about hyper-parameters: parameters that are not influenced by the learning process, such as the learning rate or the regularization parameter. We have seen many of them in the previous chapters.

Let’s say you are working with a specific neural network architecture; you need to search for the best values of the hyper-parameters to see how good your model can get. To do that, you train several models with different hyper-parameter values and check their performance on the dev dataset. What can happen is that your models work well on the dev dataset but do not generalize at all, since you selected the best values using the dev dataset: by choosing specific values of your hyper-parameters, you run the risk of overfitting the dev dataset. To see whether this is the case, you create a third dataset, called the test dataset, by cutting a portion of the observations from your starting dataset, and you use it to check the performance of your models. If |ϵdev − ϵtest| is bigger than a few percent, you are likely overfitting the dev dataset.

The MAD diagram for the three problems we might encounter: bias, overfitting the training data, and overfitting the dev data

Note that if you are not doing a hyper-parameter search, you will not need a test dataset: it is only useful when you are doing extensive searches. Otherwise, in most cases, it’s useless and takes away observations that you could use for training. What we have discussed so far assumes that your dev and test set observations have the same characteristics. If you use high-resolution smartphone images for the training and dev datasets, and low-resolution images from the web for your test dataset, you may see a big |ϵdev − ϵtest|, but that will probably be due to the differences in the images and not to an overfitting problem. Later in the chapter, we discuss what can happen when different sets come from different distributions (another way of saying that the observations have different characteristics).
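To see why selecting hyper-parameters on the dev dataset can make the dev error look better than it really is, here is a small NumPy simulation of our own (it is not from the text). Every “configuration” yields a model that guesses at random, so its true accuracy is 50%; picking the best one on the dev set still produces a dev accuracy well above 50%, while the test set reveals the truth. The set sizes and the number of configurations are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical binary labels for a dev set and a test set
y_dev = rng.integers(0, 2, size=100)
y_test = rng.integers(0, 2, size=1000)

# 200 "hyper-parameter configurations", each giving a model that guesses at random
n_configs = 200
dev_acc = np.empty(n_configs)
test_acc = np.empty(n_configs)
for k in range(n_configs):
    dev_acc[k] = np.mean(rng.integers(0, 2, size=y_dev.size) == y_dev)
    test_acc[k] = np.mean(rng.integers(0, 2, size=y_test.size) == y_test)

best = int(np.argmax(dev_acc))  # model selection on the dev set only
eps_dev, eps_test = 1 - dev_acc[best], 1 - test_acc[best]
print(f"best dev accuracy: {dev_acc[best]:.2f}")
print(f"its test accuracy: {test_acc[best]:.2f}")
print(f"|eps_dev - eps_test| = {abs(eps_dev - eps_test):.2f}")
```

The gap between the two errors comes entirely from the selection step, which is exactly what the test dataset is meant to expose.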

How to Split Your Dataset

Now let’s briefly discuss how to split the data.

What exactly does split mean? Well, as we discussed in the previous section, you will need a set of observations to make the model learn, which you call your training set. You will also need a set of observations for your dev set, and then a final set called the test set. Data is typically split so that 60% goes to the training set, 20% to the dev set, and 20% to the test set. The split is usually written in the form 60/20/20, where the first number (60) is the percentage of the entire dataset that is in the training set, the second (20) the percentage that is in the dev set, and the last (20) the percentage that is in the test set. You may find sentences like “We split our dataset 80/10/10” in books, blogs, or articles. This is what it means.

In deep learning you will often deal with big datasets. For example, if we have m = 10⁶ observations, we could use a split like 98/1/1. Keep in mind that 1% of 10⁶ is 10⁴, still a big number! Remember that the dev/test sets must be big enough to give high confidence in the performance of the model, but not unnecessarily big, since you want to save as many observations as possible for your training set.

When you have a big number of observations (such as 10⁶ or even more), you can split your dataset 98/1/1 or 90/5/5. Once your dev and test datasets reach a reasonable size (which depends on your problem), there is no need to make them any bigger. When deciding how to split your dataset, always keep in mind how big your dev/test sets must be.
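A split like 60/20/20 or 98/1/1 boils down to shuffling the observation indices and cutting them at the right points. A minimal NumPy sketch, where `split_indices` is a hypothetical helper and the seed is arbitrary:

```python
import numpy as np


def split_indices(m, fractions=(0.6, 0.2, 0.2), seed=42):
    """Shuffle the indices 0..m-1 and cut them into train, dev, and test parts."""
    if not np.isclose(sum(fractions), 1.0):
        raise ValueError("the fractions must sum to 1")
    idx = np.random.default_rng(seed).permutation(m)
    n_train = int(round(fractions[0] * m))
    n_dev = int(round(fractions[1] * m))
    # Whatever is left after train and dev goes to the test set
    return idx[:n_train], idx[n_train:n_train + n_dev], idx[n_train + n_dev:]


train, dev, test = split_indices(1000)  # 60/20/20
print(len(train), len(dev), len(test))  # 600 200 200

train, dev, test = split_indices(10**6, (0.98, 0.01, 0.01))  # 98/1/1
print(len(train), len(dev), len(test))  # 980000 10000 10000
```

Working with indices rather than copying the data lets you apply the same split to the inputs and to the labels.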

Compare the class distribution of each set with that of the entire dataset. If they are very close (not the same, but close enough), you can proceed without worries. If this is not the case, make sure you have a similar distribution in every dataset you’re going to use.
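One way to run this check is to compute the fraction of observations in each class for the whole dataset and for every split. The sketch below uses made-up labels for a three-class problem (the class probabilities are assumptions chosen to make it unbalanced) and a plain random 60/20/20 split:

```python
import numpy as np


def class_proportions(y, n_classes):
    """Fraction of the observations that fall in each class."""
    return np.bincount(y, minlength=n_classes) / len(y)


rng = np.random.default_rng(0)
# Made-up labels for an unbalanced three-class problem
y = rng.choice(3, size=10_000, p=[0.05, 0.65, 0.30])

idx = rng.permutation(len(y))  # plain random 60/20/20 split
splits = {"all": idx, "train": idx[:6000], "dev": idx[6000:8000], "test": idx[8000:]}

for name, ids in splits.items():
    print(f"{name:>5}: {np.round(class_proportions(y[ids], 3), 3)}")
```

With this many observations a random split already gives similar proportions; with small datasets or very rare classes the differences can be much larger.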

If the training, dev, and test datasets don’t have the same distribution, checking how the model is doing can become quite dangerous. Your model may also end up learning from an unbalanced class distribution.

We typically talk about an unbalanced class distribution in a dataset for a classification problem when one or more classes appear a different number of times than others. This becomes a problem in the learning process when the difference is significant. A few percent of difference is often not an issue.

If you have a dataset with three classes, for example, where you have 1000 observations in each class, then the dataset has a perfectly balanced class distribution, but if you have only 100 observations in class 1, 10,000 observations in class 2, and 5,000 in class 3, then this is an unbalanced class distribution. This is not a rare occurrence. Suppose you need to build a model that recognizes fraudulent credit card transactions. It is safe to assume that those transactions are a very small percentage of the entire number of transactions that you have at your disposal!

When splitting your dataset, you must pay great attention not only to the number of observations you have in each dataset, but also to which observations go into each dataset. Note that this problem is not specific to deep learning but is important generally in machine learning.

Details on how to deal with unbalanced datasets are beyond the scope of this book, but it is important to understand what consequences they can have. In the next section, you learn what can happen if you feed an unbalanced dataset to a neural network. At the end of that section, we give you a few hints on what to do in such a case.

Unbalanced Class Distribution: What Can Happen

Since we are talking about how to split our dataset to perform metric analysis, it’s important to grasp the concept of unbalanced class distribution and know how to deal with it. In deep learning, you will very often find yourself splitting datasets, and you should be aware of the problems you may encounter if you do it the wrong way. Let’s look at a concrete example of how badly things can go.

We will use the MNIST dataset [5], and we will do basic logistic regression with a single neuron. The MNIST database is a large database of handwritten digits that we can use to train our model. The MNIST database contains 70,000 images.

“The original black and white (bilevel) images from MNIST were normalized to fit in a 20x20 pixel box while preserving their aspect ratio. The resulting images contain gray levels as a result of the anti-aliasing technique used by the normalization algorithm. The images were centered in a 28x28 image by computing the center of mass of the pixels and translating the image so as to position this point at the center of the 28x28 field” [5].

The 36003rd digit in the dataset. It is easily recognizable as a 7

The y_train_unbalanced and y_test_unbalanced arrays will contain the new labels. Note that the dataset is now heavily unbalanced. Label 0 appears roughly 10% of the time, while label 1 appears 90% of the time.
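The text does not show how the new labels are built. Assuming, as the confusion matrix below suggests, that images of the digit 1 get label 0 and all other digits get label 1 (the MNIST training set contains exactly 6,742 images of the digit 1), a minimal NumPy sketch could look like this. `make_unbalanced_labels` is a hypothetical helper, and a small made-up array stands in for the real labels.

```python
import numpy as np


def make_unbalanced_labels(y, minority_digit=1):
    """Map one digit to label 0 and every other digit to label 1.

    minority_digit=1 is an assumption inferred from the confusion matrix,
    not something stated in the text.
    """
    return np.where(y == minority_digit, 0, 1)


# Small made-up example instead of the real MNIST labels
y_digits = np.array([1, 7, 3, 1, 0, 9, 2, 1, 5, 4])
y_unbalanced = make_unbalanced_labels(y_digits)
print(y_unbalanced.tolist())               # [0, 1, 1, 0, 1, 1, 1, 0, 1, 1]
print(np.bincount(y_unbalanced).tolist())  # [3, 7]
```

Applied to the real training and test labels, the same function would produce arrays like y_train_unbalanced and y_test_unbalanced.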

Confusion Matrix for the Model Described in the Text

| | Predicted class 0 | Predicted class 1 |
|---|---|---|
| Real class 0 | 0 | 6742 |
| Real class 1 | 0 | 53258 |

How should we read this table? Each row corresponds to a real class and each column to a predicted class. The “Predicted class 0” column shows, for each real class, how many observations our model predicts to be in class 0: the first 0 is the number of observations predicted in class 0 that really are in class 0, and the second 0 is the number of observations predicted in class 0 that are really in class 1. The “Predicted class 1” column works the same way for class 1.

It should be easy to see that our model effectively predicts all observations to be in class 1 (a total of 6742 + 53258 = 60,000, our entire training set). This gives an accuracy of 53258/60000 ≈ 0.888, as our Keras code told us, but not because our model is good: simply because it has classified every observation as class 1. We don’t need a neural network to reach this accuracy. What happens is that our model sees observations belonging to class 0 so rarely that they barely influence the learning, which is dominated by the observations in class 1.
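The numbers in the table are enough to reproduce the accuracy and to see what precision and recall reveal that accuracy hides. Here is a minimal pure-Python sketch, where `per_class_metrics` is our own helper and each class is treated in turn as the positive one:

```python
def per_class_metrics(cm):
    """Accuracy plus per-class recall and precision from cm[real][predicted]."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[c][c] for c in range(n)) / total
    recall = [cm[c][c] / sum(cm[c]) for c in range(n)]
    precision = []
    for c in range(n):
        predicted_as_c = sum(cm[r][c] for r in range(n))
        # Precision is undefined (nan) for a class the model never predicts
        precision.append(cm[c][c] / predicted_as_c if predicted_as_c else float("nan"))
    return accuracy, recall, precision


cm = [[0, 6742],   # real class 0
      [0, 53258]]  # real class 1
acc, rec, prec = per_class_metrics(cm)
print(f"accuracy: {acc:.4f}")  # accuracy: 0.8876
print("recall per class:", rec)  # recall per class: [0.0, 1.0]
print("precision per class:", prec)  # class 0 is never predicted, so its precision is nan
```

A recall of 0 for class 0 tells you immediately what the accuracy of 0.8876 hides: the model never finds a single observation of the minority class.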

What at first seemed a nice result turns out to be a really bad one. This is an example of how badly things can go if you don’t pay attention to the distribution of your classes. This applies not only when splitting your dataset, but in general whenever you approach a classification problem, regardless of the classifier you want to train (not only neural networks).

When splitting your dataset in complex problems, you need to pay close attention not only to the number of observations in your datasets, but also to which observations you choose and to the distribution of the classes.

Here are a few hints on what you can do with an unbalanced dataset:

- Change your metric: In our example, you may want to use something other than accuracy, since it can be misleading. You could use the confusion matrix, for example, or other metrics like precision, recall, or F1 (review them if you don’t know them, as they are very important). Another important way of checking how your model is doing, and one that I suggest you learn, is the ROC curve, which will help you tremendously.
- Work with an undersampled dataset: If you have, for example, 1000 observations in class 1 and 100 in class 2, you may create a new dataset with 100 random observations from class 1 and the 100 you have in class 2. The problem with this method is that you will have a lot less data to train your model.
- Work with an oversampled dataset: You may try the opposite. Take the 100 observations in class 2 and replicate them ten times to end up with 1000 observations in class 2 (a common variant draws observations from class 2 at random, with replacement, until the classes are balanced).
- Get more data in the class with fewer observations: This is not always possible. In the case of fraudulent credit card transactions, you cannot go around generating new data, unless you want to go to jail.
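The undersampling and oversampling strategies above can be sketched in a few lines of NumPy. This is an illustration under our own assumptions: the functions are hypothetical helpers, the random choice of observations uses a fixed seed, and the oversampling variant keeps every original observation and adds copies drawn at random with replacement, instead of replicating each observation exactly ten times.

```python
import numpy as np


def undersample(X, y, seed=0):
    """Keep, for every class, as many random observations as the rarest class has."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    keep = np.concatenate([
        rng.choice(np.flatnonzero(y == c), counts.min(), replace=False) for c in classes
    ])
    return X[keep], y[keep]


def oversample(X, y, seed=0):
    """Keep every observation and add random copies (with replacement) of the smaller classes."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    keep = []
    for c, n in zip(classes, counts):
        idx = np.flatnonzero(y == c)
        extra = rng.choice(idx, counts.max() - n, replace=True)
        keep.append(np.concatenate([idx, extra]))
    keep = np.concatenate(keep)
    return X[keep], y[keep]


# The example from the text: 1000 observations in class 1 and 100 in class 2
X = np.arange(1100).reshape(-1, 1)  # made-up one-feature observations
y = np.array([1] * 1000 + [2] * 100)

X_under, y_under = undersample(X, y)
X_over, y_over = oversample(X, y)
print(np.bincount(y_under).tolist())  # [0, 100, 100]
print(np.bincount(y_over).tolist())   # [0, 1000, 1000]
```

Resample only the training set: the dev and test sets should keep the real class distribution, or the metrics you compute on them will no longer reflect reality.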

Datasets with Different Distributions

Now I want to discuss another terminology issue, which will lead us to a common problem in the deep learning world. Very often you will hear sentences like “the sets come from different distributions.” This sentence is not always easy to understand. Take for example two datasets: one formed by images taken with a professional DSLR, and a second one formed by images taken with an old smartphone. In the deep learning world, we say that those two sets come from different distributions. But what does that mean? The two datasets differ for various reasons: image resolution, blurriness due to different lens quality, range of colors, how much is in focus, and possibly more. All those differences are what is usually meant by different “distributions.”

Let’s look at another example. We could consider two datasets: one made of images of white cats and one made of images of black cats. In this case too we talk about different distributions. This becomes a problem when you train a model on one set and want to apply it to the other. For example, if you train a model on a set of images of white cats, you probably will not do very well on the dataset of black cats, since your model has never seen black cats during training.

When talking about datasets coming from different distributions, we usually mean that the observations have different characteristics in the two datasets: black and white cats, high- and low-resolution images, speech recordings in Italian and in German, and so on.

Since data is precious, people often try to create the different datasets (train, dev, etc.) from different sources. For example, you may decide to train your model on a set made of images taken from the web and see how good it is on a set made of images you took with your smartphone. This may seem a nice idea to be able to use as much data as possible, but it may give you many headaches. Let’s see what happens in a real case so that you see the consequences of doing something like this.

Let’s use the MNIST dataset again, keeping only the digits 1 and 2. We have 12,700 observations in the training dataset and 2167 in the test dataset (which we will call the dev dataset from now on in this chapter).

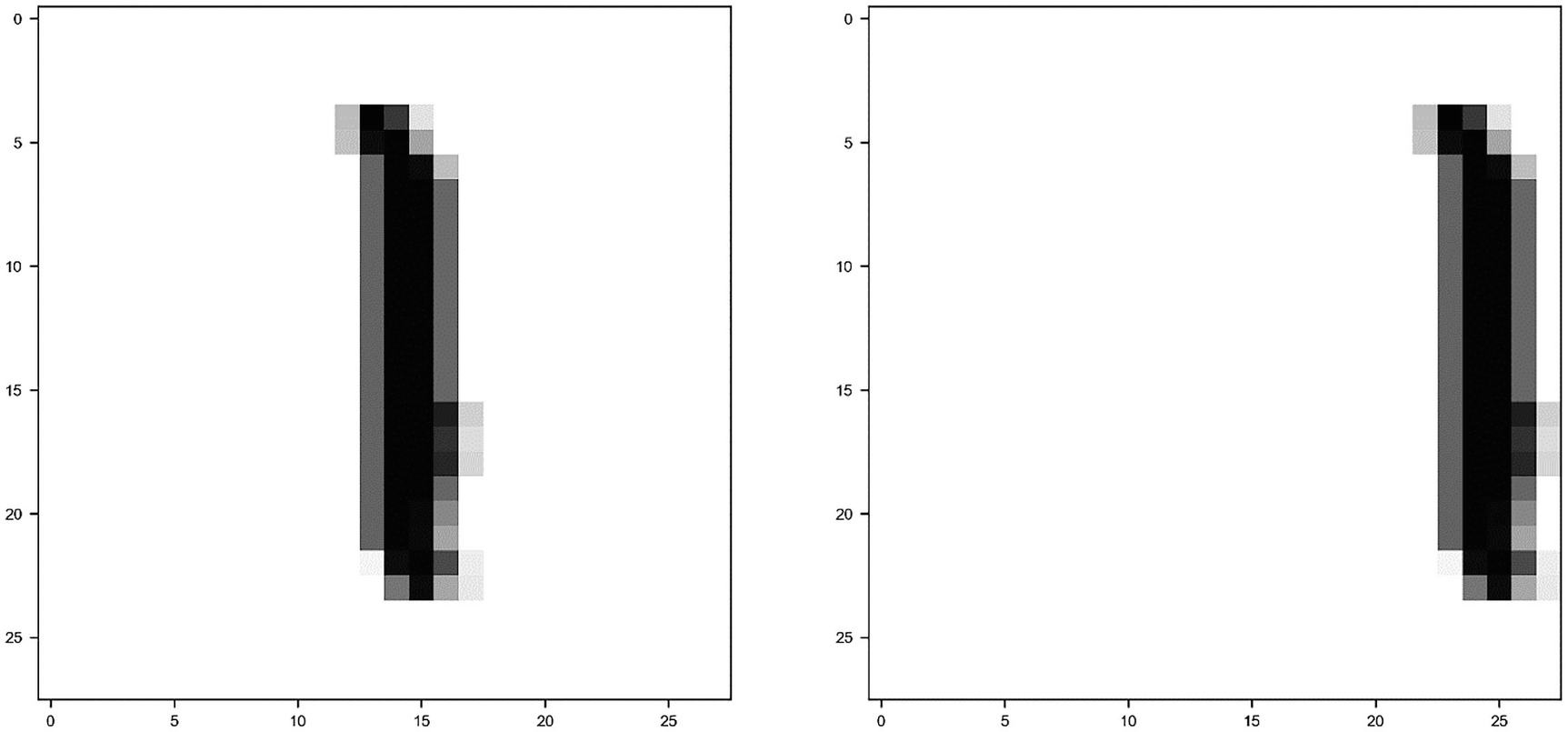

One random image from the dataset (on the left) and its shifted version (on the right)
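The text does not show how the shifted images were created. One minimal way to do it in NumPy, assuming a shift that moves the digit inside the 28x28 box and fills the uncovered pixels with zeros (the helper name and the shift of 8 pixels are our assumptions):

```python
import numpy as np


def shift_image(img, dx, dy):
    """Shift a 2D image by dx pixels to the right and dy pixels down.

    Negative values shift left/up. Pixels moved outside the box are dropped and
    uncovered pixels are set to zero (unlike np.roll, nothing wraps around).
    """
    h, w = img.shape
    out = np.zeros_like(img)
    if abs(dx) >= w or abs(dy) >= h:
        return out  # the whole image was pushed out of the box
    out[max(0, dy):h + min(0, dy), max(0, dx):w + min(0, dx)] = \
        img[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    return out


# A crude vertical stroke, vaguely like a "1", centered in a 28x28 box
img = np.zeros((28, 28), dtype=np.uint8)
img[6:22, 13:15] = 255
shifted = shift_image(img, dx=8, dy=0)  # hypothetical shift: 8 pixels to the right
print(np.argwhere(img)[:, 1].min(), np.argwhere(shifted)[:, 1].min())  # 13 21
```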

After training the model, we get the following accuracies:

- Training dataset: 97%
- Dev dataset: 98%
- Train-dev dataset (you will see later why it’s called this), the one with the shifted images: 54%. A very bad result.

What has happened is that the model learned from a dataset in which all the images are centered in the box, and therefore could not generalize to images that are shifted and not centered.

When you train a model on a dataset, you will usually get good results only on observations that are like the ones in the training set. But how can you find out whether you have such a problem? There is a relatively easy way: expanding the MAD diagram. Let’s see how to do it.

Define Δϵtrain−dev = |ϵtrain-dev − ϵdev|. If the train (and train-dev) and the dev sets come from the same distribution, we should expect Δϵtrain−dev ≈ 0. If they come from different distributions (the observations have different characteristics), we should expect Δϵtrain−dev to be big. In the MNIST example, the error is 2% on the dev set and 46% on the shifted set, so Δϵtrain−dev = |0.46 − 0.02| = 0.44, or 44%, which is a huge difference.

The procedure is as follows:

1. Split your training set in two: a bigger part that you will use for training, called the train set, and a smaller one called the “train-dev” set.
2. Train your model on the train set.
3. Evaluate your error ϵ on the three sets: train, dev, and train-dev.
4. Calculate the quantity Δϵtrain−dev. If it is big, this is strong evidence that the original training and dev sets come from different distributions.
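Using the accuracies of the MNIST example, the data mismatch quantity is a two-line computation. This sketch assumes Δϵtrain−dev = |ϵtrain-dev − ϵdev|, that is, the difference between the errors on the two sets being compared, with the shifted set playing the role of train-dev as in the text:

```python
def error(accuracy):
    """ϵ = 1 - accuracy."""
    return 1.0 - accuracy


def data_mismatch(eps_train_dev, eps_dev):
    """Δϵtrain−dev, assumed here to be |ϵtrain-dev − ϵdev|."""
    return abs(eps_train_dev - eps_dev)


# Accuracies of the MNIST example in the text
eps_train = error(0.97)
eps_dev = error(0.98)
eps_train_dev = error(0.54)  # the set with the shifted images

print(f"train: {eps_train:.2f}, dev: {eps_dev:.2f}, train-dev: {eps_train_dev:.2f}")
print(f"data mismatch: {data_mismatch(eps_train_dev, eps_dev):.2f}")  # data mismatch: 0.44
```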

An example of the MAD diagram with the data mismatch problem added

Looking at the example diagram, we can draw the following conclusions:

- The bias (between training and human-level performance) is quite small, so we are not that far from the best we can achieve (let’s assume that human-level performance is a proxy for the Bayes error). You could still try bigger networks, better optimizers, and so on.
- We are overfitting the training dataset, so we could try regularization or get more data.
- We have a strong data mismatch problem (sets coming from different distributions) between train and dev. You will see what you could do to solve this problem later in the chapter.
- We are also slightly overfitting the dev dataset during our hyper-parameter search.

Note that you don’t need to create the bar plot as we have done here. Technically, you just need the four numbers to draw the same conclusions.

Once you have your MAD diagram (or simply the numbers), interpreting it will give you hints about what you should try to get better results, for example a better accuracy.

To address a data mismatch, you have a few options:

- You can carry out a manual error analysis to understand the difference between the sets, and then decide what to do (you will see an example in the last section of this chapter). This is time-consuming and usually quite difficult, because even once you know what the difference is, it may be very hard to find a solution.
- You can try to make the training set more similar to your dev/test sets. For example, if you are working with images and the dev/test sets have a lower resolution, you may decide to lower the resolution of the images in the training set.

As usual, there are no fixed rules. Just be aware of the problem and think about the following: your model will learn the characteristics from your training data, so when it’s applied to completely different data, it won’t usually do well. Always get training data that reflects the data you want your model to work on, not vice versa.

k-fold Cross Validation

k-fold cross validation helps to answer two common questions:

- What do you do when your dataset is too small to split into a train set and a dev/test set?
- How do you get information on the variance of your metric?

The procedure works as follows:

1. Partition your complete dataset into k subsets of equal size, f1, f2, …, fk, also called folds. Normally the folds do not overlap, which means that each observation appears in one and only one fold.
2. For i going from 1 to k:
   - Train your model on all the folds except fi.
   - Evaluate your metric on the fold fi. The fold fi will be the dev set in iteration i.
3. Compute the average and the variance of your metric over the k results.

A typical value for k is 10, but that depends on the size of your dataset and on the characteristics of your problem.
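The three steps above can be sketched generically in NumPy. `cross_validate` and its `train_and_evaluate` callback are hypothetical names: the callback is assumed to train a fresh model on the given data and return the value of your metric on the dev fold. The usage example plugs in a trivial “model” that always predicts the majority class of its training data.

```python
import numpy as np


def k_fold_indices(m, k=10, seed=0):
    """Shuffle the indices 0..m-1 and partition them into k non-overlapping folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(m), k)


def cross_validate(X, y, train_and_evaluate, k=10, seed=0):
    """k-fold cross validation: returns the mean and the variance of the metric.

    train_and_evaluate(X_train, y_train, X_dev, y_dev) is a hypothetical callback
    that trains a fresh model and returns its metric on the dev fold.
    """
    folds = k_fold_indices(len(y), k, seed)
    scores = []
    for i in range(k):
        dev_idx = folds[i]  # fold i is the dev set in iteration i
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        scores.append(train_and_evaluate(X[train_idx], y[train_idx], X[dev_idx], y[dev_idx]))
    scores = np.array(scores)
    return scores.mean(), scores.var()


def majority_class_accuracy(X_train, y_train, X_dev, y_dev):
    """A trivial 'model' that always predicts the most common training label."""
    majority = np.bincount(y_train).argmax()
    return np.mean(y_dev == majority)


X = np.zeros((100, 1))  # made-up features (ignored by this trivial model)
y = np.array([0] * 30 + [1] * 70)
mean, var = cross_validate(X, y, majority_class_accuracy, k=10)
print(f"mean accuracy = {mean:.2f}, variance = {var:.4f}")
```

The variance across folds is the extra piece of information a single train/dev split cannot give you.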

Remember that the discussion about how to split a dataset also applies here.

When you are creating your folds, you must make sure that they reflect the structure of your original dataset. If your original dataset has ten classes, for example, you must make sure that each of your folds has all ten classes in the same proportions.
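A simple way to get folds with the same class proportions (often called stratified folds) is to deal the shuffled observations of each class into the folds one at a time. This is a minimal sketch of that idea, not the algorithm of any particular library; for real projects, sklearn offers `StratifiedKFold`.

```python
import numpy as np


def stratified_k_fold_indices(y, k=10, seed=0):
    """Split the indices of y into k folds that keep the class proportions of y.

    The shuffled indices of each class are dealt round-robin into the folds,
    continuing where the previous class stopped, so the fold sizes and the
    per-class counts in each fold differ by at most one.
    """
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(k)]
    position = 0
    for c in np.unique(y):
        for obs in rng.permutation(np.flatnonzero(y == c)):
            folds[position % k].append(int(obs))
            position += 1
    return [np.array(f, dtype=int) for f in folds]


y = np.array([0] * 30 + [1] * 70)  # made-up labels: 30% class 0, 70% class 1
for i, fold in enumerate(stratified_k_fold_indices(y, k=10)):
    print(i, np.bincount(y[fold], minlength=2).tolist())  # every fold: [3, 7]
```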

Although this may seem a very attractive technique for dealing with datasets of less than optimal size, it can be quite complex to implement. But, as you will see shortly, checking your metric on the different folds will give you important information about possible overfitting to your training dataset.

Let’s try this on a real dataset and see how to implement it. Note that you can implement k-fold cross validation easily in sklearn, but I will develop it from scratch to show you what is happening in the background. Everyone (well, almost) can copy code from the web to implement k-fold cross validation in sklearn, but not many understand how it works well enough to explain it and, therefore, to choose the right sklearn method or parameters.

As a dataset we will use the reduced MNIST dataset containing only digits 1 and 2. We will do a simple logistic regression with one neuron to make the code easy to understand and to let you concentrate on the cross-validation part and not on other implementation details that are not relevant here. The goal of this section is to show you how k-fold cross validation works and why it’s useful, not to implement it with the smallest amount of code possible.

As before, import the MNIST dataset. Remember that the dataset has 70,000 observations and is made of grayscale images, each 28x28 pixels in size. Then select only digits 1 and 2 and remap the labels so that digit 1 has label 0 and digit 2 has label 1.
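Assuming the MNIST images are already loaded into an array `X` of shape (n, 784) with digit labels `y` (for example with sklearn's `fetch_openml('mnist_784', version=1, as_frame=False)`, which typically returns the labels as strings), the selection and relabeling could look like the following sketch. The function name is hypothetical, and the tiny arrays at the end only stand in for the real data.

```python
import numpy as np


def select_ones_and_twos(X, y):
    """Keep only digits 1 and 2 and relabel them: digit 1 -> 0, digit 2 -> 1.

    X: array of shape (n, 784) with gray values; y: digit labels (ints or strings).
    """
    y = np.asarray(y).astype(int)          # labels may arrive as strings, e.g. "1"
    mask = (y == 1) | (y == 2)
    X_sel = X[mask]
    y_sel = (y[mask] == 2).astype(int)     # digit 1 -> 0, digit 2 -> 1
    return X_sel, y_sel


# Tiny stand-in for MNIST: five "images" with 4 pixels each
X_demo = np.arange(20).reshape(5, 4)
y_demo = np.array(["0", "1", "2", "1", "7"])
X_sel, y_sel = select_ones_and_twos(X_demo, y_demo)
print(y_sel)
```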

Now we need a small trick. To keep the code simple, we will consider only the training dataset and we will create folds starting from it.

You could normalize the data in one shot, but we normalize each fold separately to make it evident to the reader that we are dealing with folds.
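A minimal sketch of this step, assuming 8-bit gray values (0 to 255) as in MNIST and a random assignment of the training observations to k = 10 folds; `make_normalized_folds` is a hypothetical name. Because dividing by 255 uses a constant, normalizing fold by fold gives exactly the same numbers as normalizing in one shot; the loop simply keeps the folds visible.

```python
import numpy as np


def make_normalized_folds(X, y, k=10, seed=0):
    """Shuffle the data, split it into k folds, and scale each fold to [0, 1].

    Dividing by 255 assumes 8-bit gray values (0-255), as in MNIST.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(X))
    X_folds, y_folds = [], []
    for fold_idx in np.array_split(indices, k):
        X_folds.append(X[fold_idx] / 255.0)   # normalize fold by fold
        y_folds.append(y[fold_idx])
    return X_folds, y_folds


# Example with random 8-bit "images"
rng = np.random.default_rng(0)
X = rng.integers(0, 256, size=(1000, 784))
y = rng.integers(0, 2, size=1000)
X_folds, y_folds = make_normalized_folds(X, y)
print(len(X_folds), X_folds[0].shape, X_folds[0].max() <= 1.0)
```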

1. Loop over all the folds (in this case ten, numbered 1 to 10), with the variable i going from 0 to 9.

2. For each i, use fold i as the dev set, concatenate all the other folds, and use the result as the train set.

3. For each i, train the model.

4. For each i, evaluate the accuracy on the two datasets (train and dev) and save the values in the two lists train_acc and dev_acc.
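The loop above could be sketched as follows. The one-neuron logistic regression is written here in plain NumPy with full-batch gradient descent so that the example is self-contained; it is a stand-in, not necessarily how the chapter's model is built. The 600 epochs mirror the figure captions later in the chapter, while the learning rate of 0.5 is an arbitrary assumption. `X_folds` and `y_folds` are assumed to be lists of per-fold arrays (features scaled to [0, 1], labels 0 or 1), and the synthetic data at the end only make the sketch runnable.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_logistic(X, y, epochs=600, lr=0.5):
    """One-neuron logistic regression trained with full-batch gradient descent."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)            # predicted probabilities
        grad = p - y                      # gradient of the cross-entropy w.r.t. z
        w -= lr * (X.T @ grad) / len(y)
        b -= lr * grad.mean()
    return w, b


def accuracy(X, y, w, b):
    return np.mean((sigmoid(X @ w + b) >= 0.5) == y)


def cross_validate(X_folds, y_folds, epochs=600, lr=0.5):
    """Return the lists train_acc and dev_acc, one value per fold."""
    train_acc, dev_acc = [], []
    k = len(X_folds)
    for i in range(k):
        X_dev, y_dev = X_folds[i], y_folds[i]        # fold i is the dev set
        X_train = np.concatenate([X_folds[j] for j in range(k) if j != i])
        y_train = np.concatenate([y_folds[j] for j in range(k) if j != i])
        w, b = train_logistic(X_train, y_train, epochs, lr)   # fresh model per fold
        train_acc.append(accuracy(X_train, y_train, w, b))
        dev_acc.append(accuracy(X_dev, y_dev, w, b))
    return train_acc, dev_acc


# Example on synthetic, linearly separable data split into ten folds
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)
train_acc, dev_acc = cross_validate(np.array_split(X, 10), np.array_split(y, 10))
print(np.mean(train_acc), np.std(train_acc))
print(np.mean(dev_acc), np.std(dev_acc))
```

After the loop, `np.mean` and `np.std` applied to `train_acc` and `dev_acc` give the averages and standard deviations discussed below. Training a fresh model in every iteration matters: reusing weights from a previous fold would leak information from the current dev fold into training.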

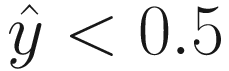

Distribution of the accuracy values for the train set (left plot) and for the dev set (right plot). Note that the two plots use the same scale on both axes

The image is quite instructive. You can see that the accuracy values for the training set are concentrated around the average, while the ones evaluated on the dev set are much more spread out! This shows that the model's performance on new data is much less stable than on the data it was trained on. The standard deviation for the training data is $3.5 \cdot 10^{-4}$ and for the dev set it is $3.5 \cdot 10^{-3}$, ten times bigger than the value on the train set. In this way you also get an estimate of the variance of your metric when applied to new data, and of how well the model generalizes.

If you are interested in learning how to do this quickly with sklearn, check out the official documentation for the KFold class [6]. When you are dealing with datasets with many classes (remember our discussion about how to split your sets?), you must pay attention and do what is called stratified sampling. Sklearn provides a class for that too: StratifiedKFold [7].
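For comparison, here is a minimal sketch with sklearn's `KFold` and `StratifiedKFold` from `sklearn.model_selection`. The data are random placeholders with deliberately unbalanced labels (80% class 0, 20% class 1), and `dev_fold_ratios` is a hypothetical helper that reports the fraction of class 1 in each dev fold. With stratification that fraction stays constant across folds, while plain `KFold` lets it vary.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold


def dev_fold_ratios(splitter, X, y):
    """Fraction of class-1 labels in each dev fold produced by a sklearn splitter."""
    return [float(y[dev_idx].mean()) for _, dev_idx in splitter.split(X, y)]


# Placeholder data: 100 observations, 80% class 0 and 20% class 1
rng = np.random.default_rng(0)
X = rng.random((100, 784))
y = np.array([0] * 80 + [1] * 20)

kf = KFold(n_splits=10, shuffle=True, random_state=42)
skf = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)

print("KFold:          ", np.round(dev_fold_ratios(kf, X, y), 2))
print("StratifiedKFold:", np.round(dev_fold_ratios(skf, X, y), 2))
```

Each call to `split` yields pairs of index arrays (train, dev), so a loop such as `for train_idx, dev_idx in skf.split(X, y): ...` can replace the hand-written fold construction.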

You can now easily find averages and standard deviations. For the training set, we have an average accuracy of 98.5% and a standard deviation of 0.035%, while for the dev set we have an average accuracy of 98.4% with a standard deviation of 0.35%. Now you can even provide an estimate of the variance of your metric. Pretty cool!

Manual Metric Analysis: An Example

An example from fold 0 for the digit 1. The image on the left and a bar plot of the gray values of the 784 pixels as they are seen from our model on the right. Remember that as inputs we have a one-dimensional array of the 784 gray values of the pixels

Four examples of the digit 1, reshaped as one-dimensional arrays. They all look the same: a number of bars roughly equally spaced

An example from fold 0 for the digit 2. The image on the left and a bar plot of the gray values of the 784 pixels as they are seen from our model. Remember that as observations we have a one-dimensional array of the 784 gray values of the pixels of the image

How different parts of the images look when they're reshaped as a one-dimensional array. Horizontal parts are labelled as (A) and (B), while the more vertical part is labelled as (C)

Four examples of the digit 2 reshaped as one-dimensional arrays. The wider clusters of bars can clearly be seen
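The equally spaced bars have a simple origin: reshaping a 28x28 image row by row places vertically adjacent pixels 28 positions apart, while horizontally adjacent pixels stay next to each other. The sketch below uses two hypothetical single-stroke images (not real MNIST digits) to show this.

```python
import numpy as np

# Two hypothetical 28x28 images with a single stroke each (not real MNIST digits)
vertical = np.zeros((28, 28))
vertical[4:24, 14] = 1.0          # vertical stroke, like an idealized digit 1

horizontal = np.zeros((28, 28))
horizontal[20, 6:22] = 1.0        # horizontal stroke, like the base of a digit 2

# Flatten row by row, which is how the 784 inputs are fed to the model
flat_vertical = vertical.reshape(784)
flat_horizontal = horizontal.reshape(784)

idx_v = np.flatnonzero(flat_vertical)
idx_h = np.flatnonzero(flat_horizontal)
print(np.diff(idx_v))   # gaps between nonzero pixels of the vertical stroke
print(np.diff(idx_h))   # gaps between nonzero pixels of the horizontal stroke
```

The vertical stroke produces isolated bars every 28 positions, as in the flattened 1s, while the horizontal stroke produces one wide cluster of adjacent bars, like the horizontal parts of the digit 2.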

As you can imagine, this is an easy pattern for an algorithm to spot, so it is to be expected that this model works so well. Even a human can tell the two digits apart without any effort, even when the images are reshaped. Such a detailed analysis would not be necessary in a real-life project, but it is really instructive to see what you can learn from your data. Understanding the characteristics of your data may help you design your model or understand why it is not working. Advanced architectures, like convolutional networks, will be able to learn exactly those two-dimensional features in a very efficient way.

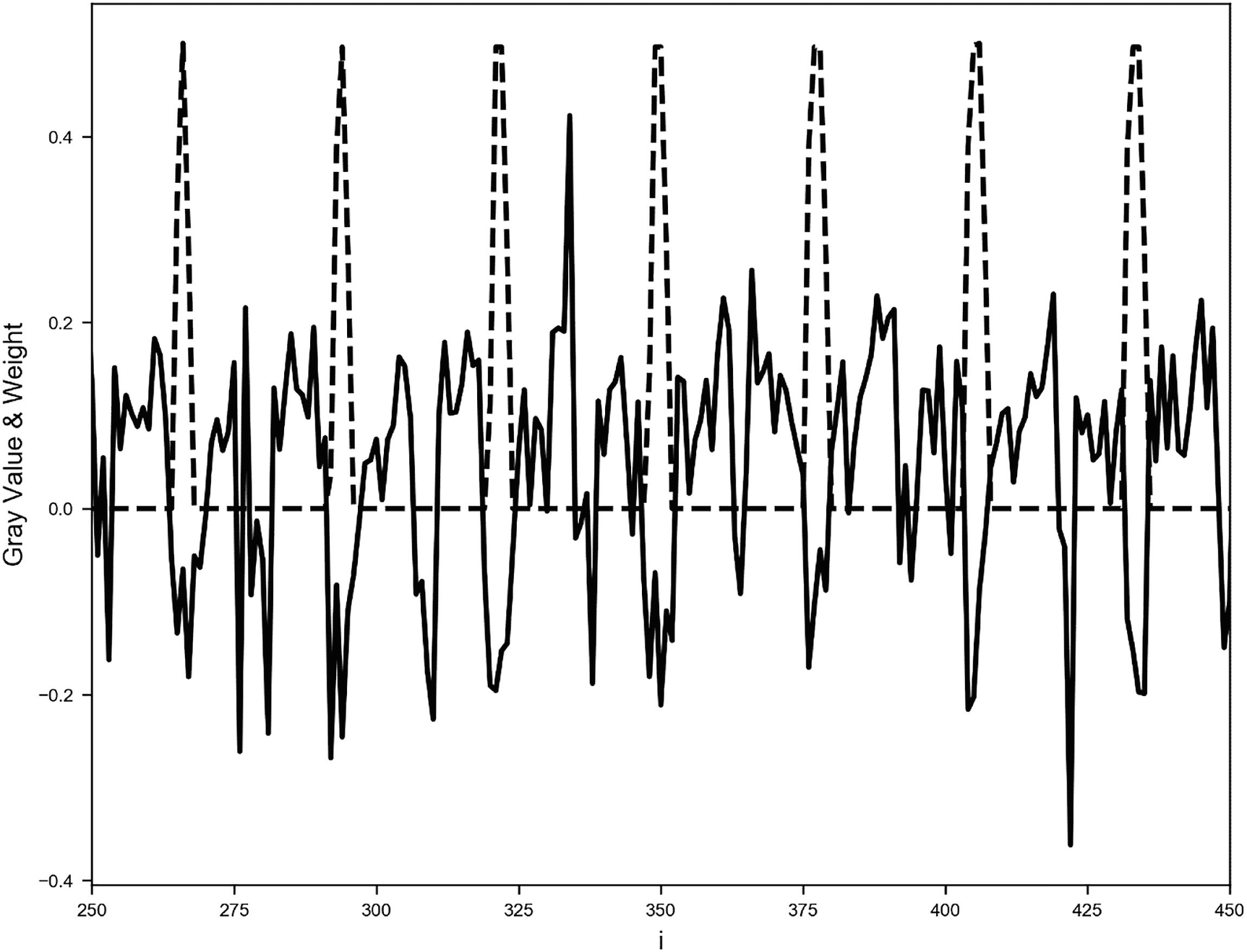

Here the output of our one-neuron model is $\sigma(z)$ with $z = \sum_{i=1}^{784} w_i x_i + b$, where σ is the sigmoid function, $x_i$ for $i = 1, \dots, 784$ are the gray values of the pixels of the image, $w_i$ for $i = 1, \dots, 784$ are the weights, and $b$ is the bias. Remember that when $\sigma(z) \geq 0.5$ we classify the image in class 1 (so digit 2), and if $\sigma(z) < 0.5$ we classify it in class 0 (so digit 1). If you remember our discussion of the sigmoid function in Chapter 2, you will recall that $\sigma(z) \geq 0.5$ when $z \geq 0$ and $\sigma(z) < 0.5$ when $z < 0$. That means that our network should learn the weights in such a way that for all the 1s we have $z < 0$ and for all the 2s we have $z \geq 0$. Let’s see if that is really the case.
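A quick numerical check of this reasoning, as a sketch with random, hypothetical weights and inputs rather than the trained network: deciding with $\sigma(z) \geq 0.5$ or with $z \geq 0$ gives the same class, so only the sign of $z$ matters.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def predict_with_sigmoid(x, w, b):
    """Class 1 (digit 2) if sigma(z) >= 0.5, otherwise class 0 (digit 1)."""
    return int(sigmoid(np.dot(w, x) + b) >= 0.5)


def predict_with_sign(x, w, b):
    """Same decision using only the sign of z = w . x + b."""
    return int(np.dot(w, x) + b >= 0)


# Random, hypothetical weights and inputs (not the trained network)
rng = np.random.default_rng(0)
w = rng.normal(size=784)
b = rng.normal()
images = rng.random((1000, 784))
agree = all(predict_with_sigmoid(x, w, b) == predict_with_sign(x, w, b) for x in images)
print(agree)
```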

For a digit 1, almost all the products $w_i x_i$ are negative or zero, so $z = \sum_{i=1}^{784} w_i x_i + b$ will be negative, and therefore σ(z) < 0.5 and the network will identify the image as a 1. Figure 10-16 is zoomed in to make this behavior more evident.

Plot for a digit 1 where you can find the weights wi (the solid lines) of our trained network after 600 epochs (and after reaching an accuracy of 98%) and the gray value of the pixel xi rescaled to have a maximum of 0.5 (the dashed lines)

Plot for a digit 2 where you can find the weights wi (solid lines) of our trained network after 600 epochs (and after reaching an accuracy of 98%) and the gray value of the pixel xi rescaled to have a maximum of 0.5 (dashed lines)

wi · xi for i = 1, …, 784 for a digit 1. You can see how almost all the values lie below zero. The thick line at zero is made of all the points i such that wi · xi = 0
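The decomposition shown in the last figure can be computed directly: each term $w_i x_i$ is the contribution of pixel $i$ to $z$. In the sketch below the weights are random placeholders, not the trained ones, and the image is a hypothetical vertical stroke; `pixel_contributions` is a hypothetical helper. It shows that every blank pixel ($x_i = 0$) contributes exactly zero regardless of its weight, which is why so many points sit on the line at zero.

```python
import numpy as np


def pixel_contributions(x, w, b):
    """Return the terms w_i * x_i and z = sum_i w_i * x_i + b."""
    contributions = w * x
    z = contributions.sum() + b
    return contributions, z


# Hypothetical weights (random placeholders, not the trained network)
rng = np.random.default_rng(0)
w = rng.normal(scale=0.1, size=784)
b = 0.0

# Hypothetical image: a vertical stroke of 20 pixels, flattened to 784 values
image = np.zeros((28, 28))
image[4:24, 14] = 1.0
x = image.reshape(784)

contributions, z = pixel_contributions(x, w, b)
n_zero = np.count_nonzero(contributions == 0)   # blank pixels contribute nothing
n_negative = np.count_nonzero(contributions < 0)
print(n_zero, n_negative, z)
```

With the trained weights instead of random ones, the nonzero contributions for a digit 1 would be the ones that push z below zero.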

As you can see, in very easy cases it is possible to understand how a network learns, and therefore it is much easier to debug strange behaviors. But don’t expect this to be possible when dealing with more complex cases. The analysis we did here would not be so easy, for example, if you tried to do the same with digits 3 and 8 instead of 1 and 2.

Exercises

Perform k-fold cross-validation using the built-in function of the sklearn library and compare the results.

Look at different performance metrics (for example sensitivity, specificity, ROC curve, etc.) and calculate them for the unbalanced class problem. Try to understand how these can help you in the evaluation of the models.

References

[1] https://goo.gl/iqCbC0, last accessed 09.11.2021.

[2] https://goo.gl/PCHWMJ, last accessed 09.11.2021.

[3] https://goo.gl/Rh8S6g, last accessed 09.11.2021.

[4] Ciresan, Dan, U. Meier, and J. Schmidhuber. “Multi-column deep neural networks for image classification.” 2012 IEEE Conference on Computer Vision and Pattern Recognition. IEEE Computer Society, 2012 (https://goo.gl/pEHZVB, last accessed 09.11.2021).

[5] http://yann.lecun.com/exdb/mnist/, last accessed 10.11.2021.

[6] https://goo.gl/Gq1Ce4, last accessed 03.12.2021.

[7] https://goo.gl/ZBKrdt, last accessed 03.12.2021.