8. Breaking God’s Code: The Discovery of Heredity, Genetics, and DNA

One fine day at the dawn of civilization, on the beautiful Greek island of Kos in the crystal-clear waters of the Aegean Sea, a young noblewoman slipped quietly through the back entrance of a stone and marble healing temple known as the Asklepieion and approached the world’s first and most famous physician. In desperate need of advice, she presented Hippocrates with an awkward problem. The woman had recently given birth to a baby boy, and though the infant was plump and healthy, Hippocrates needed only to glance from the fair-skinned mother to her swaddled infant to make his diagnosis: The baby’s dark skin suggested that she had had a passionate tryst in the recent past with an African trader. And if news of the infidelity got out, it would spread like wildfire, enraging her husband and inflaming scandalous gossip across the island.

But Hippocrates, as knowledgeable in the science of heredity and genetics as anyone could be in the fifth century BC, quickly proposed another explanation. True, some physical traits could be inherited from the father, but that did not take into account the concept of “maternal impression.” According to that view, babies could also acquire traits based on what their mothers looked at during pregnancy. And thus, Hippocrates assured her, the baby must have acquired its dark features during pregnancy, when the woman had gazed a bit too long at a portrait of an Ethiopian that happened to be hanging on her bedroom wall.

From guessing games to a genetic revolution

Since the earliest days of civilization until well after the Industrial Revolution, people from every walk of life struggled valiantly, if not foolishly, to decipher the secrets of heredity. Even today, we still marvel at the mystery of how traits are passed from generation to generation. Who has not looked at a child or sibling and tried to puzzle out what trait came from whom—the crooked smile, skin color, intelligence or its lack, the perfectionist or lazy nature? Who has not wondered why a child inherited this from the mother, that from the father, or how brothers or sisters can be so different?

And those are just the obvious questions. What about traits that disappear for a generation and reappear in a grandchild? Can parents pass traits that they “acquire” during their lives—a skill, knowledge, even an injury—to their children? What role does environment play? Why are some families haunted for generations by disease, while others are graced with robust health and breathtaking longevity? And perhaps most troubling: What inherited “time bombs” will influence how and when we die?

Up until the twentieth century, all of these mysteries could be summed up with two simple questions: Is heredity controlled by any rules? And how does it even happen?

Yet amazingly, despite no understanding of how or why traits are passed from generation to generation, humans have long manipulated the mystery. For thousands of years, in deserts, plains, forests, and valleys, early cultures cross-mated plant with plant and animal with animal to create more desirable traits, if not entirely new organisms. Rice, corn, sheep, cows, and horses grew bigger, stronger, hardier, tastier, healthier, friendlier, and more productive. A female horse and a male donkey produced a mule, which was both stronger than its mother and smarter than its father. With no understanding of how it worked, humans played with heredity and invented agriculture—an abundant and reliable source of food that led to the rise of civilization and transformation of the human race from a handful of nomads into a population of billions.

Only in the past 150 years—really just the past 60—have we begun to figure it out. Not all of it, but enough to decipher the basic laws, to pull apart and poke at the actual “stuff” of heredity and apply the new knowledge in ways that are now on the brink of revolutionizing virtually every branch of medicine. Yet perhaps more than any other breakthrough, it has been a 150-year explosion in slow motion because the discovery of heredity—how DNA, genes, and chromosomes enable traits to be passed from generation to generation—is in many ways still a work in progress.

Even after 1865, when the first milestone experiment revealed that heredity does operate by a set of rules, many more milestones were needed—from the discovery of genes and chromosomes in the early 1900s to the discovery of the structure of DNA in the 1950s, when scientists finally began to figure out how it all actually worked. It took one and a half centuries to not only understand how traits are passed from parent to child, but how a tiny egg cell with no traits can grow into a 100-trillion-cell adult with many traits.

And we are still only at the beginning. Although the discovery of genetics and DNA was a remarkable breakthrough, it also flung open a Pandora’s box of possibilities that boggle the mind—from identifying the genetic causes of diseases, to gene therapies that cure disease, to “personalized” medicine in which treatment is customized based on a person’s unique genetic profile. Not to mention the many related revolutions, including the use of DNA to solve crimes, reveal human ancestries, or perhaps one day endow children with the talents of our choice.

* * *

Long after the time of Hippocrates, physicians remained intrigued by the idea of maternal impression, as seen in three case reports from the nineteenth and early twentieth centuries:

• A woman who was six months pregnant became deeply frightened when, seeing a house burning in the distance, she feared it might be her own. It wasn’t, but for the rest of her pregnancy, the terrifying image of flames was “constantly before her eyes.” When she gave birth to a girl a few months later, the infant had a red mark on her forehead whose shape bore an uncanny resemblance to a flame.

• A pregnant woman became so distressed after seeing a child with an unrepaired cleft lip that she became convinced her own baby would be born with the same trait. Sure enough, eight months later her baby was born with a cleft lip. But that’s only half the story: A few months later, after news of the incident had spread and several pregnant women came to see the infant, three more babies were born with cleft lips.

• Another woman was six months pregnant when a neighborhood girl came to stay in her house because her mother had fallen ill. The woman often did housework with the girl, and thus frequently noticed the middle finger on the girl’s left hand, which was partly missing due to a laundry accident. The woman later gave birth to a boy who was normal in all ways—except for a missing middle finger on his left hand.

Shattering the myths of heredity: the curious lack of headless babies

Given how far science has come in the past 150 years, one can understand what our ancestors were up against in their attempts to explain how we inherit traits. For example, Hippocrates believed that during conception, a man and woman contributed “tiny particles” from every part of their bodies, and that the fusion of this material enabled parents to pass traits to their children. But Hippocrates’ theory—later called pangenesis—was soon rejected by the Greek philosopher Aristotle, in part because it didn’t explain how traits could skip a generation. Of course, Aristotle had his own peculiar ideas, believing that children received physical traits from the mother’s menstrual blood and their soul from the father’s sperm.

Lacking microscopes or other tools of science, it’s no surprise that heredity remained a mystery for more than two millennia. Well into the nineteenth century, most people believed, like Hippocrates, in the “doctrine of maternal impression,” the idea that the traits of an unborn child could be influenced by what a woman looked at during pregnancy, particularly shocking or frightening scenes. Hundreds of cases were reported in medical journals and books claiming that pregnant women who had been emotionally distressed by something they had witnessed—often a mutilation or disfigurement—later gave birth to a baby with a similar deformity. But doubts about maternal impression were already arising in the early 1800s. “If shocking sights could produce such effects,” asked Scottish medical writer William Buchan in 1809, “how many headless babies would have been born in France during Robespierre’s reign of terror?”

Still, many strange myths persisted up until the mid-1800s. For example, it was commonly rumored that men who had lost their limbs to cannon-fire later fathered babies without arms or legs. Another common misconception was that “acquired traits”—skills or knowledge that a person learned during his or her lifetime—could be passed on to a child. One author reported in the late 1830s that a Frenchman who had learned to speak English in a remarkably short time must have inherited his talent from an English-speaking grandmother he had never met.

As for which traits come from which parent, one nineteenth-century writer confidently explained that a child gets its “locomotive organs” from the father and its “internal or vital organs” from the mother.

This widely accepted view, it should be added, was based on the appearance of the mule.

The first stirrings of insight: microscopes help locate the stage

And so, as late as the mid-1800s, even as scientific advances were laying the groundwork for a revolution in many areas of medicine, heredity continued to be viewed as a fickle force of nature, with little agreement among scientists on where it occurred and certainly no understanding of how it happened.

The earliest stirrings of insight began to arise in the early 1800s, thanks in part to improvements to the microscope. Although it had been 200 years since Dutch lens grinders Hans and Zacharias Janssen made the first crude microscope, by the early 1800s technical improvements were finally enabling scientists to get a better look at the scene of contention: the cell. One major clue came in 1831 when Scottish scientist Robert Brown discovered that many cells contained a tiny, dark central structure, which he called the nucleus. While the central role the cell nucleus played in heredity would not be known for decades, at least Brown had found the stage.

Ten years later, British physician Martin Barry helped set that stage when he realized that fertilization occurred when the male’s sperm cell enters the female’s egg cell. That may sound painfully obvious today, but only a few decades earlier another common myth was that every unfertilized egg contained a tiny “preformed” person, and that the job of the sperm was to poke it to life. What’s more, up until the mid-1800s, most people didn’t realize that conception involved only one sperm and one egg. And without knowing that simple equation—1 egg + 1 sperm = 1 baby—it would be impossible to take even the first baby steps toward a true understanding of heredity.

Finally, in 1854, a man came along who was not only aware of that equation, but willing to bet a decade of his life on it to solve a mystery. And though his work may sound idyllic—working in the pastoral comfort of a backyard garden—in reality his experiments must have been numbingly tedious. Doing something no one had ever done, or perhaps dared think of doing, he grew tens of thousands of pea plants and painstakingly documented the traits of their little pea plant offspring for generation after generation. As he later wrote with some pride, “It requires indeed some courage to undertake a labor of such far reaching extent.”

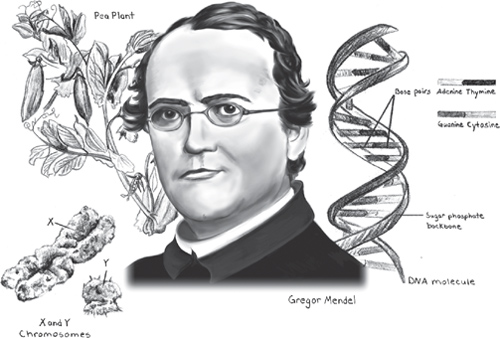

But by the time Gregor Mendel finished his work in 1865, he had answered a question humanity had been asking for thousands of years: Heredity is not random or fickle, but does indeed have rules. And the other fringe benefit—apart from a pantry perpetually stocked with fresh peas? He had founded the science of genetics.

Milestone #1 From peas to principles: Gregor Mendel discovers the rules of heredity

Born in 1822 to peasant farmers in a Moravian village (then a part of Austria), Gregor Mendel was either the most unlikely priest in the history of religion or the most unlikely researcher in the history of science, or perhaps both. There’s no question of his intelligence: He was an outstanding student as a youth, and one of his teachers recommended that he attend an Augustinian monastery in the nearby city of Brünn, a once-common way for the poor to enter a life of study. Yet by the time Mendel was ordained as a priest in 1847 at the age of 25, he seemed poorly suited for religion or academics. According to a report sent to the Bishop of Brünn, at the bedside of the ill and suffering, Mendel “was overcome with a paralyzing shyness, and he himself then became dangerously ill.”

Mendel fared no better a few years later when, having tried substitute teaching in local schools, he failed his examination for a teacher’s license. To remedy this unfortunate outcome, he was sent to the University of Vienna for two years, studied a broad variety of subjects, and in 1856 took the exam a second time.

Which he promptly failed again.

And so, with dubious credentials as a priest and scholar, Mendel returned to his quiet life at the monastery, perhaps resolved to spend the rest of his life as a humble monk and part-time teacher. Or perhaps not. Because while Mendel’s training failed to help him pass his teaching exams, his education—which had included fruit growing, plant anatomy and physiology, and experimental methods—seemed calculated for something of greater interest to him. As we now know, as early as 1854, two years before failing his second teacher’s exam, Mendel was already conducting experiments in the abbey garden, growing different varieties of pea plants, analyzing their traits, and planning for a far grander experiment that he would undertake in just two years.

Eureka: 20,000 traits add up to a simple ratio and two major laws

What was on Mendel’s mind when he began his famous pea plant experiment in 1856? For one thing, the idea did not strike him out of the blue. As in other areas of the world, cross-mating different breeds of plants and animals had long been of interest to farmers in the Moravian region as they tried to improve ornamental flowers, fruit trees, and the wool of their sheep. While Mendel’s experiments may have been partly motivated by a desire to help local growers, he was also clearly intrigued by larger questions about heredity. Yet if he tried to share his ideas with anyone, he must have left them scratching their heads because at the time, scientists didn’t think traits were something you could study at all. According to the views of development at the time, the traits of plants and animals blended from generation to generation as a continuous process; they were not something you could separate and study individually. Thus, the very design of Mendel’s experiment—comparing the traits of pea plants across multiple generations—was a queer notion, something no one had thought to do before. And—not coincidentally—a brilliant leap of insight.

But all Mendel was doing was asking the same question countless others had asked before him: Why did some traits—whether Grandpa’s shiny bald head or auntie’s lovely singing voice—disappear in one generation and reappear in another? Why do some traits come and go randomly, while others, as Mendel put it, reappear with “striking regularity”? To study this, Mendel needed an organism that had two key features: physical traits that could be easily seen and counted; and a fast reproductive cycle so new generations could be produced fairly quickly. As luck would have it, he found it in his own backyard: Pisum sativum, the common pea plant. As Mendel began cultivating the plants in the abbey garden in 1856, he focused on seven traits: flower color (purple or white), flower location (on the stem or tip), seed color (yellow or green), seed shape (round or wrinkled), pod color (green or yellow), pod shape (inflated or wrinkled), and stem height (tall or dwarf).

For the next eight years, Mendel grew thousands of plants, categorizing and counting their traits across countless generations of offspring. It was an enormous effort—in the final year alone, he grew as many as 2,500 second-generation plants and, overall, he documented more than 20,000 traits. Although he didn’t finish his analysis until 1864, intriguing evidence began to emerge from almost the very start.

To appreciate Mendel’s discovery, consider one of his simplest questions: Why was it that when you crossed a purple-flowered pea plant with a white-flowered plant, all of their offspring had purple flowers; and yet, when those purple-flowered offspring were crossed with each other, most of the offspring in the next generation had purple flowers, while a few had white flowers? In other words, where in that first generation of all purple-flowered plants were the “instructions” to make white flowers hiding? The same thing occurred with the other traits: If you crossed a plant with yellow peas with a plant with green peas, all of the first-generation plants had yellow peas; yet when those pea plants were crossed with each other, most second-generation offspring had yellow peas, while some had green peas. Where, in the first generation, were the instructions to make green peas hiding?

It was not until Mendel had painstakingly documented and categorized thousands of traits over many generations that the astonishing answer began to emerge. Among those second-generation offspring, the same curious ratio kept appearing over and over: 3 to 1. For every 3 purple-flowered plants, there was 1 white-flowered plant; for every 3 plants with yellow peas, 1 plant with green peas. For every 3 tall plants, 1 dwarf plant, and so on.

To Mendel, this was no statistical fluke, but suggested a meaningful principle, an underlying Law. Delving into how such patterns of inheritance could occur, Mendel began envisioning a mathematical and physical explanation for how hereditary traits could be passed from parent to offspring in this way. In a remarkable milestone insight, he deduced that heredity must involve the transference of some kind of “element” or factor from each parent to the child—what we now know to be genes.

And that was just the beginning. Based on nothing more than his analysis of pea plant traits, Mendel intuited some of the most important and fundamental laws of inheritance. For example, he correctly realized that for any given trait, the offspring must inherit two “elements” (genes), one from each parent, and that these elements could be dominant or recessive. Thus for any given trait, if an offspring inherited a dominant “element” from one parent and a recessive “element” from the other, the offspring would show the dominant trait, but also carry the hidden recessive element and thus potentially pass it on to the next generation. In the case of flower color, if an offspring had inherited a dominant purple gene from one parent and a recessive white gene from the other, it would have purple flowers but carry the recessive white-flower gene, which it could pass on to its offspring. This, finally, explained how traits could “skip” a generation.
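For readers who like to see the bookkeeping, the reasoning above can be sketched in a few lines of Python. This is only an illustration, not anything Mendel wrote: the labels "P" (dominant purple element) and "p" (recessive white element) and the function names are assumptions made up for the example. It lists every equally likely pairing of elements in the two crosses described earlier.

```python
from collections import Counter
from itertools import product

# Hypothetical labels: "P" = dominant purple element, "p" = recessive white element.
def cross(parent_a, parent_b):
    """Return every equally likely offspring genotype from two parents.

    Each parent passes on one of its two elements, so a cross between
    two parents has 2 x 2 = 4 equally likely outcomes.
    """
    return ["".join(sorted(a + b)) for a, b in product(parent_a, parent_b)]

def flower_color(genotype):
    """A single dominant element is enough to give purple flowers."""
    return "purple" if "P" in genotype else "white"

# First generation: pure purple (PP) crossed with pure white (pp).
first_generation = cross("PP", "pp")
first_colors = Counter(flower_color(g) for g in first_generation)

# Second generation: two first-generation hybrids (Pp) crossed with each other.
second_generation = cross("Pp", "Pp")
second_colors = Counter(flower_color(g) for g in second_generation)

print(first_colors)   # every first-generation plant is purple
print(second_colors)  # three purple outcomes for every white one
```

In this sketch the white-flower “instructions” never vanish in the first generation. Every hybrid is "Pp", so the recessive element is simply masked until two copies of it meet in a second-generation plant.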

Based on these and other findings, Mendel developed his two famous laws of how the “elements” of heredity are transferred from parent to offspring:

- The Law of Segregation: Although everyone has two copies of every gene (one from each parent), during the production of sex cells (sperm and eggs) the genes separate (segregate) so that each egg and sperm only has one copy. That way, when the egg and sperm join in conception, the fertilized egg again has two copies.

- The Law of Independent Assortment: All of the various traits (genes) a person inherits are transferred independently of each other; this is why, if you’re a pea plant, just because you inherit a gene for white flowers doesn’t mean you will also always have wrinkled seeds. It is this “independence” of gene “sorting” that we can thank for the huge variety of traits seen in the human race.
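The two laws can be tried out together in a short Python sketch. It is a simplified model under stated assumptions, not a description of Mendel’s own method: the labels P/p (purple or white flowers) and R/r (round or wrinkled seeds) and the function names are invented for the illustration, and both traits are assumed to follow simple dominance.

```python
from collections import Counter
from itertools import product

# Hypothetical labels: P/p = purple/white flowers, R/r = round/wrinkled seeds.
def gametes(parent):
    """Law of Segregation: each sex cell receives ONE copy of each gene.

    `parent` is a pair of gene pairs such as ("Pp", "Rr"). Under the Law of
    Independent Assortment, either flower-color copy can travel with either
    seed-shape copy, giving four equally likely kinds of sex cell.
    """
    flower_pair, seed_pair = parent
    return list(product(flower_pair, seed_pair))

def appearance(flower_genes, seed_genes):
    """Dominant copies (P, R) decide what the plant looks like."""
    flower = "purple" if "P" in flower_genes else "white"
    seed = "round" if "R" in seed_genes else "wrinkled"
    return flower, seed

hybrid = ("Pp", "Rr")
offspring = Counter()
# Fertilization: every sex cell from one hybrid can meet every one from the other.
for (f1, s1), (f2, s2) in product(gametes(hybrid), gametes(hybrid)):
    offspring[appearance(f1 + f2, s1 + s2)] += 1

for traits, count in sorted(offspring.items(), key=lambda item: -item[1]):
    print(traits, count)
```

Of the 16 equally likely pairings, 9 give purple flowers with round seeds, 3 purple with wrinkled, 3 white with round, and 1 white with wrinkled. Looking at either trait on its own, the familiar 3 to 1 ratio is still there (12 to 4).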

To appreciate the genius of Mendel’s accomplishment, it’s important to remember that at the time of his work, no one had yet observed any physical basis for heredity. There was no conception of DNA, genes, or chromosomes. With no knowledge of what the “elements” of heredity might be, Mendel discovered a new branch of science whose defining terms—gene and genetics—would not be coined until decades later.

A familiar theme: confident and unappreciated to his death

In 1865, after eight years of growing thousands of pea plants and analyzing their traits, Gregor Mendel presented his findings to the Brünn Natural Historical Society, and the following year, his classic paper, “Experiments in Plant Hybridization,” was published. It was one of the greatest milestones in the history of science or medicine and answered a question mankind had been asking for millennia.

And the reaction? A rousing yawn.

Indeed, for the next 34 years Mendel’s work was ignored, forgotten, or misunderstood. It wasn’t that he didn’t try: At one point, he sent his paper to Carl Nägeli, an influential botanist in Munich. However, Nägeli not only failed to appreciate Mendel’s work, but wrote back with perhaps one of the most galling criticisms in the annals of science. Having perused a paper based on nearly ten years of work and growing more than 10,000 plants, Nägeli wrote, “It appears to me that the experiments are not completed, but that they should really just begin...”

The problem, historians now believe, was that Mendel’s peers simply could not grasp the significance of his discovery. With their fixed views of development and the belief that hereditary traits could not be separated and analyzed, Mendel’s experiment fell on deaf ears. Although Mendel continued his scientific work for several years, he finally stopped around 1871, shortly after being named abbot of the Brünn monastery. When he died in 1884, he had no clue that he would one day be known as the founder of genetics.

Nevertheless, Mendel was convinced of the importance of his discovery. According to one abbot, months before his death Mendel had confidently said, “The time will come when the validity of the laws discovered by me will be recognized.” Mendel also reportedly told some monastery novices shortly before he died, “I am convinced that the entire world will recognize the results of these studies.”

When the world finally did recognize his work, more than three decades after its publication, scientists discovered something else Mendel didn’t know, but that put his work into final, satisfying perspective: His laws of inheritance applied not only to plants, but to animals and people.

But if the science of genetics had arrived, the question now was, where?

Milestone #2 Setting the stage: a deep dive into the secrets of the cell

Although the next major milestone began to emerge in the 1870s, about the same time Mendel was giving up on his experiments, scientists had been laying the foundation for centuries before then. In the 1660s, English physicist Robert Hooke became the first person to peer through a crude microscope at a slice of cork bark and discover what he referred to as tiny “boxes.” But it was not until the 1800s that a series of German scientists were able to look more closely at those boxes and finally discover where heredity is played out: the cell and its nucleus.

The first key advance occurred in 1838 and 1839 when improvements to the microscope enabled German scientists Matthias Schleiden and Theodor Schwann to identify cells as the structural and functional units of all living things. Then in 1855, dismissing the myth that cells appeared out of nowhere through spontaneous generation, German physician Rudolf Virchow announced his famous maxim, Omnis cellula e cellula (“Every cell comes from a pre-existing cell”). With that assertion, Virchow provided the next key clue to where heredity must happen: If every cell came from another cell, then the information needed to make each new cell, its hereditary information, must reside somewhere in the cell. Finally, in 1866, German biologist Ernst Haeckel came right out and said it: The transmission of hereditary traits involved something in the cell nucleus, the tiny structure that Robert Brown had discovered and named in 1831.

By the 1870s, scientists were diving deeper and deeper into the cell nucleus and discovering the mysterious activities that occurred whenever a cell was about to divide. In particular, between 1874 and 1891, German anatomist Walther Flemming provided detailed descriptions of these activities, which he called mitosis. Then in 1882, Flemming gave the first accurate descriptions of something peculiar that happened just before a cell was about to divide: long threadlike structures became visible in the nucleus and split into two copies. In 1888, as scientists speculated on the role those threads might play in heredity, German anatomist Wilhelm Waldeyer—one of the great “namers” in biology—came up with a term for them, and it stuck: chromosomes.

Milestone #3 The discovery—and dismissal—of DNA

As the nineteenth century drew to a close, the world, already busy ignoring the first great milestone in genetics, went on to dismiss another great milestone: the discovery of DNA. That’s right, the DNA, the substance from which genes, chromosomes, hereditary traits and, for that matter, the twenty-first century revolution in genetics are built. And, like the snubbing of Mendel and his laws of heredity, it was no short-term oversight: Not long after its discovery in 1869, DNA was essentially put aside for the next half century.

It all began when Swiss physician Friedrich Miescher, barely out of medical school, made a key career decision. Because his poor hearing, damaged by a childhood infection, made it difficult to understand his patients, he decided to abandon a career in clinical medicine. Joining a laboratory at the University of Tübingen, Germany, Miescher decided instead to look into Ernst Haeckel’s recent proposal that the secrets of heredity might be found in the cell nucleus. That might sound like a glamorous endeavor, but Miescher’s approach was decidedly not. Having identified the best type of cell for studying the nucleus, he began collecting dead white blood cells (otherwise known as pus) from surgical bandages freshly discarded by the nearby university hospital. Wisely, he rejected any that were so decomposed that they, well, stank.

Working with the least-offensive samples he could find, Miescher subjected the white blood cells to a variety of chemicals and techniques until he succeeded in separating the tiny nuclei from their surrounding cellular gunk. Then, after more tests and experiments, he was startled to discover that they were made of a previously unknown substance. Neither a protein nor fat, the substance was acidic and had a high proportion of phosphorus not seen in any other organic materials. With no idea of what it was, Miescher named the substance “nuclein”—what we now call DNA.

Miescher published his findings in 1871 and went on to spend many years studying nuclein, isolating it from other cells and tissues. But its true nature remained a mystery. Although convinced that nuclein was critical to cell function, Miescher ultimately rejected the idea that it played a role in heredity. Other scientists, however, were not so sure. In 1885, the Swiss anatomist Albert von Kölliker boldly claimed that nuclein must be the material basis of heredity. And in 1895, Edmund Beecher (E. B.) Wilson, author of the classic textbook The Cell in Development and Inheritance, agreed when he wrote:

“...and thus we reach the remarkable conclusion that inheritance may, perhaps, be effected by the physical transmission of a particular chemical compound from parent to offspring.”

And yet, poised on the brink of a world-changing discovery, science blinked: The world was simply not ready to embrace DNA as the biochemical “stuff” of heredity. Within a few years, nuclein was all but forgotten. Why did scientists give up on DNA for the next 50 years until 1944? Several factors played a role, but perhaps most important, DNA simply didn’t seem up to the task. As Wilson noted in The Cell in 1925, a strong reversal from his praise in 1895, the “uniform” ingredients of nuclein seemed too uninspired compared to the “inexhaustible” variety of proteins. How could DNA possibly account for the incredible variety of life?

While the answer to that question would not unfold until the 1940s, Miescher’s discovery had at least one major impact: It helped stimulate a wave of research that led to the re-discovery of a long-forgotten milestone. Not once, but three times.

Milestone #4 Born again: resurrection of a monastery priest and his science of heredity

Spring may be the season of renewal, but few events rivaled the rebirth in early 1900 when, after a 34-year hibernation, Gregor Mendel and his laws of heredity burst forth with a vengeance. Whether divine retribution for the lengthy oversight, or an inevitable outcome of the new scientific interest, in that year not one, but three scientists independently discovered the laws of heredity, and then realized that the laws had already been discovered decades earlier by a humble monastery priest.

Dutch botanist Hugo de Vries was the first to announce his discovery when his plant breeding experiments revealed the same 3 to 1 ratio seen by Mendel. Carl Correns, a German botanist, followed when his study of pea plants helped him rediscover the laws of segregation and independent assortment. And Austrian botanist Erich Tschermak published his discovery of segregation based on experiments in breeding peas begun in 1898. All three came upon Mendel’s paper after their studies, while searching the literature. As Tschermak remarked, “I read to my great surprise that Mendel had already carried out such experiments much more extensive than mine, had noted the same regularities, and had already given the explanation for the 3:1 segregation ratio.”

Although no serious disputes developed over who would take credit for the re-discovery, Tschermak later admitted to “a minor skirmish between myself and Correns at the Naturalists’ meeting in Meran in 1903.” But Tschermak added that all three botanists “were fully aware of the fact that [their] discovery of the laws of heredity in 1900 was far from the accomplishment it had been in Mendel’s time since it was made considerably easier by the work that appeared in the interval.”

As Mendel’s laws of inheritance were reborn into the twentieth century, more and more scientists began to focus on those mysterious “units” of heredity. Although no one knew exactly what they were, by 1902 American scientist Walter Sutton and German scientist Theodor Boveri had figured out that they were located on chromosomes and that chromosomes were found in pairs in the cell. Finally, in 1909, Danish biologist Wilhelm Johannsen came up with a name for those units: genes.

Milestone #5 The first genetic disease: kissin’ cousins, black pee, and a familiar ratio

The sight of black urine in a baby’s diaper would alarm any parent, but to British physician Archibald Garrod, it posed an interesting problem in metabolism. Garrod was not being insensitive. The condition was called alkaptonuria, and though its most striking feature involved urine turning black after exposure to air, it is generally not serious and occurs in as few as one in one million people worldwide. When Garrod began studying alkaptonuria in the late 1890s, he realized the disease was not caused by a bacterial infection, as once thought, but some kind of “inborn metabolic disorder” (that is, a metabolic disorder that one is born with). But only after studying the records of children affected by the disease—whose parents in almost every case were first cousins—did he uncover the clue that would profoundly change our understanding of heredity, genes, and disease.

When Garrod first published the preliminary results of his study in 1899, he knew no more about genes or inheritance than anyone else, which explains why he overlooked one of his own key findings: When the number of children without alkaptonuria was compared to the number with the disease, a familiar ratio appeared: 3 to 1. That’s right, the same ratio seen by Mendel in his second-generation pea plants (e.g., three purple-flowered plants to one white-flowered plant), with its implications for the transmission of hereditary traits and the role of “dominant” and “recessive” particles (genes). In Garrod’s study, the dominant trait was “normal urine” and the recessive trait “black urine,” with the same ratio turning up in second-generation children: For every 3 children with normal urine, 1 child had black urine (alkaptonuria). Although Garrod didn’t notice the ratio, it did not escape British naturalist William Bateson, who contacted Garrod upon hearing of the study. Garrod quickly agreed with Bateson that Mendel’s laws suggested a new twist he hadn’t considered: The disease appeared to be an inherited condition.
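For readers who like to see the arithmetic, the 3-to-1 ratio can be reproduced in a few lines of Python. This is only an illustrative sketch, not anything Garrod or Bateson wrote: it assumes both parents are unaffected carriers, and the labels "A" (normal urine, dominant) and "a" (black urine, recessive) are made-up names for the two hereditary particles.

```python
from collections import Counter
from itertools import product


def cross(parent1: str, parent2: str) -> Counter:
    """Count the equally likely offspring genotypes of a single-gene cross.

    Each parent is a two-letter string such as "Aa"; a child receives one
    letter (particle) from each parent.
    """
    return Counter("".join(sorted(pair)) for pair in product(parent1, parent2))


def trait(genotype: str) -> str:
    """A single dominant "A" is enough for normal urine (assumed labels)."""
    return "normal urine" if "A" in genotype else "black urine"


# Assumption: both parents are carriers ("Aa"), as first cousins might be.
genotypes = cross("Aa", "Aa")

traits = Counter()
for genotype, count in genotypes.items():
    traits[trait(genotype)] += count

print(dict(genotypes))  # genotype counts out of four equally likely outcomes
print(dict(traits))     # trait counts out of four equally likely outcomes
```

Three of the four equally likely combinations carry at least one dominant particle, which is where the familiar ratio comes from.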

In a 1902 update of his results, Garrod put it all together—symptoms, underlying metabolic disorder, and the role of genes and inheritance. He proposed that alkaptonuria was determined by two hereditary “particles” (genes), one from each parent, and that the defective gene was recessive. Equally important, he drew on his biochemical background to propose how the defective “gene” actually caused the disorder: It must somehow produce a defective enzyme which, failing to perform its normal metabolic function, resulted in the black urine. With this interpretation, Garrod achieved a major milestone. He was proposing what genes actually do: They produce proteins, such as enzymes. And when a gene messed up—that is, was defective—it could produce a defective protein, which could lead to disease.

Although Garrod went on to describe several other metabolic disorders caused by defective genes and enzymes—including albinism, the failure to produce colored pigment in the skin, hair, and eyes—it would be another half-century before other scientists would finally prove him correct and appreciate his milestone discoveries. Today, Garrod is heralded as the first person to show the link between genes and disease. From his work developed the modern concepts of genetic screening, recessive inheritance, and the risks of intrafamily marriage.

As for Bateson, perhaps inspired by Garrod’s findings, he complained in a 1905 letter that this new branch of science lacked a good name. “Such a word is badly wanted,” he wrote, “and if it were desirable to coin one, ‘genetics’ might do.”

* * *

In the early 1900s, despite a growing list of milestones, the new science was suffering an identity crisis, split as it was into two worlds. On the one hand, Mendel and his followers had established the laws of heredity but couldn’t pinpoint what the physical “elements” were or where they acted. On the other hand, Flemming and others had discovered tantalizing physical landmarks in the cell, but no one knew how they related to heredity. In 1902, the worlds shifted closer together when American scientist Walter Sutton not only suggested that the “units” of heredity were located on chromosomes, but that chromosomes were inherited as pairs (one from the mother and one from the father) and that they “may constitute the physical basis of Mendelian laws of heredity.” But it wasn’t until 1910 that an American scientist—surprising himself as much as anyone else—joined these two worlds with one theory of inheritance.

Milestone #6 Like beads on a necklace: the link between genes and chromosomes

In 1905, Thomas Hunt Morgan, a biologist at Columbia University, was not only skeptical that chromosomes played a role in heredity, but was sarcastic toward those at Columbia who supported the theory, complaining that the intellectual atmosphere was “saturated with chromosomic acid.” For one thing, Morgan found that the idea that chromosomes contained hereditary traits sounded too much like preformation, the once commonly believed myth that every egg contained an entire “preformed” person. But everything changed for Morgan around 1910, when he walked into the “fly room”—a room where he and his students had bred millions of fruit flies to study their genetic traits—and made a stunning discovery: One of his fruit flies had white eyes.

The rare white eyes were startling enough given that fruit flies normally had red eyes, but Morgan was even more surprised when he crossed the white-eyed male with a red-eyed female. The first finding was not so surprising. As expected, the first generation of flies all had red eyes, while the second generation showed the familiar 3 to 1 ratio (3 red-eyed flies for every 1 white-eyed fly). But what Morgan didn’t expect, and what overturned the foundation of his understanding of heredity, was an entirely new clue: All of the white-eyed offspring were male.

This new twist—the idea that a trait could be inherited by only one sex and not the other—had profound implications because of a discovery made several years earlier. In 1905, American biologists Nettie Stevens and E. B. Wilson had found that an individual’s sex was determined by two chromosomes, the so-called X and Y chromosomes. Females always had two X chromosomes, while males had one X and one Y. When Morgan saw that the white-eyed flies were always male, he realized that the gene for white eyes must somehow be linked to the sex chromosomes (specifically, as it turned out, the X chromosome, of which males carry only one copy). That forced him to make a conceptual leap he’d been resisting for years: Genes must be a part of the chromosome.
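Morgan’s two generations of flies can be traced with a short Python sketch. It is a simplified illustration rather than Morgan’s own method, and it rests on one assumption stated up front: the eye-color gene rides on the X chromosome, so the hypothetical labels "X+" (red-eye version), "Xw" (white-eye version), and "Y" (no eye-color gene) are invented here purely for bookkeeping.

```python
from collections import Counter
from itertools import product


def cross(mother, father):
    """List every equally likely pairing of one chromosome from each parent."""
    return list(product(mother, father))


def describe(fly):
    """Return (sex, eye color) for a fly given its two sex chromosomes."""
    sex = "male" if "Y" in fly else "female"
    # Assumption: red is dominant, so a single "X+" gives red eyes.
    eyes = "red" if "X+" in fly else "white"
    return sex, eyes


# First generation: red-eyed female crossed with the white-eyed male.
first_generation = Counter(describe(fly) for fly in cross(("X+", "X+"), ("Xw", "Y")))

# Second generation: a first-generation daughter crossed with a first-generation son.
second_generation = Counter(describe(fly) for fly in cross(("X+", "Xw"), ("X+", "Y")))

print(dict(first_generation))   # every fly has red eyes
print(dict(second_generation))  # three red-eyed flies per white-eyed fly
```

Under these assumptions the second generation shows the 3 to 1 ratio, and the only white-eyed flies are males, because a male has no second X chromosome to mask the white-eye version of the gene.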

A short time later, in 1911, one of Morgan’s undergraduate students, Alfred Sturtevant, achieved a related milestone when he realized that the genes might actually be located along the chromosome in a linear fashion. Then, after staying up most of the night, Sturtevant produced the world’s first gene map, placing five genes on a linear map and calculating the distances between them.

In 1915, Morgan and his students published a landmark book, The Mechanism of Mendelian Heredity, that finally made the connection official: Those two previously separate worlds, Mendel’s laws of heredity and the chromosomes and genes inside cells, were one and the same. When Morgan won the 1933 Nobel Prize in Physiology or Medicine for the discovery, the presenter noted that the theory that genes were lined up on the chromosome “like beads in a necklace” initially seemed like “fantastic speculation” and “was greeted with justified skepticism.” But when later studies proved him correct, Morgan’s findings were now seen as “fundamental and decisive for the investigation and understanding of the hereditary diseases of man.”

Milestone #7 A transformational truth: the rediscovery of DNA and its peculiar properties

By the late 1920s, many secrets of heredity had been revealed: The transmission of traits could be explained by Mendel’s laws, Mendel’s laws were linked to genes, and genes were linked to chromosomes. Didn’t that pretty much cover everything?

Not even close. Heredity was still a mystery because of two major problems. First, the prevailing view was that genes were composed of proteins—not DNA. And second, no one had a clue how genes, whatever they were, created hereditary traits. The final mysteries began to unfold in 1928 when Frederick Griffith, a British microbiologist, was working on a completely different problem: creating a vaccine against pneumonia. He failed in his effort to make the vaccine but was wildly successful in revealing the next key clue.

Griffith was studying a type of pneumonia-causing bacteria when he discovered something curious. One form of the bacteria, the deadly S type, had a smooth outer capsule, while the other, the harmless R type, had a rough outer surface. The S bacteria were lethal because they had a smooth capsule that enabled them to evade detection by the immune system. The R bacteria were harmless because, lacking the smooth outer capsule, they could be recognized and destroyed by the immune system. Then Griffith discovered something even stranger: If the deadly S bacteria were killed and mixed with harmless R bacteria, and both were injected into mice, the mice still died. After more experiments, Griffith realized that the previously harmless R bacteria had somehow “acquired” from the deadly S bacteria the ability to make the smooth protective capsule. To put it another way, even though the deadly S bacteria had been killed, something in them transformed the harmless R bacteria into the deadly S type.

What was that something and what did it have to do with heredity and genetics? Griffith would never know. In 1941, just a few years before the secret would be revealed, he was killed by a German bomb during an air raid on London.

* * *

When Griffith’s paper describing the “transformation” of harmless bacteria into a deadly form was published in 1928, Oswald Avery, a scientist at the Rockefeller Institute for Medical Research in New York, initially refused to believe the results. Why should he? Avery had been studying the bacteria described by Griffith for the past 15 years, including the protective outer capsule, and the notion that one type could “transform” into another was an affront to his research. But when Griffith’s results were confirmed, Avery became a believer and, by the mid-1930s, he and his associate Colin MacLeod had shown that the effect could be recreated in a Petri dish. Now the trick was to figure out exactly what was causing this transformation. By 1940, as Avery and MacLeod closed in on the answer, they were joined by a third researcher, Maclyn McCarty. But identifying the substance was no easy task. In 1943, as the team struggled to sort out the cell’s microscopic mess of proteins, lipids, carbohydrates, nuclein, and other substances, Avery complained to his brother, “Try to find in that complex mixture the active principle! Some job—full of heart aches and heart breaks.” Yet Avery couldn’t resist adding an intriguing teaser, “But at last perhaps we have it.”

Indeed they did. In February 1944, Avery, MacLeod, and McCarty published a paper announcing that they had identified the “transforming principle” through a simple—well, not so simple—process of elimination. After testing everything they could find in that complex cellular mixture, only one substance transformed the R bacteria into the S form. It was nuclein, the same substance first identified by Friedrich Miescher almost 75 years earlier, and which they now called deoxyribonucleic acid, or DNA. Today, the classic paper is recognized for providing the first proof that DNA was the molecule of heredity. “Who would have guessed it?” Avery asked his brother.

In fact, few guessed or believed it because the finding flew in the face of common sense. How could DNA—considered by many scientists to be a “stupid” molecule and chemically “boring” compared to proteins—account for the seemingly infinite variety of hereditary traits? Yet even as many resisted the idea, others were intrigued. Perhaps a closer look at DNA would answer that other long-unanswered question: How did heredity work?

One possible clue to the mystery had been uncovered a few years earlier, in 1941, when American geneticists George Beadle and Edward Tatum proposed a theory that not only were genes not composed of proteins, but perhaps they actually made proteins. In fact, their research verified something Archibald Garrod had proposed 40 years earlier with his work on the “black urine” disease: that what genes do is make enzymes (a type of protein). Or, as it came to be popularly stated, “One gene, one enzyme.”

But perhaps the most intriguing clue came in 1950. Scientists had known for years that DNA was composed of four “building block” molecules called bases: adenine, thymine, cytosine, and guanine. In fact, the repetitious ubiquity of those building blocks in DNA was a major reason why scientists thought DNA was too “stupid”—that is, too simplistic—to play a role in heredity. However, when Avery, MacLeod, and McCarty’s paper came out in 1944, Erwin Chargaff, a biochemist at Columbia University, saw “the beginning of a grammar of biology...the text of a new language, or rather...where to look for it.” Unafraid to take on that enigmatic book, Chargaff recalled, “I resolved to search for this text.”

In 1949, after applying pioneering laboratory skills to analyze the components of DNA, Chargaff uncovered an odd clue. While different organisms had different amounts of those four bases, all living things seemed to share one similarity: The amount of adenine (A) and thymine (T) in their DNA was always about the same, as was the amount of cytosine (C) and guanine (G). The meaning of this curious one-to-one relationship—A&T and C&G—was unclear yet profound in one important way. It liberated DNA from the old “tetranucleotide hypothesis,” which held that the four bases repeated monotonously, without variation, in all species. The discovery of this one-to-one pairing suggested a potential for greater creativity. Perhaps DNA was not so dumb after all. And though Chargaff didn’t recognize the importance of his finding, it led to the next milestone: the discovery of what heredity looked like—and how it worked.

Milestone #8 Like a child’s toy: the secrets of DNA and heredity finally revealed

In 1895, Wilhelm Roentgen stunned the world and revolutionized medicine with the world’s first X-ray—an eerie skeletal photograph of his wife’s hand. Fifty-five years later, an X-ray image once again stunned the world and triggered a revolution in medicine. True, the X-ray image of DNA was far less dramatic than a human hand, looking more like lines rippling out from a pebble dropped in a pond than the skeletal foundation of heredity. But once that odd pattern had been translated into the DNA double helix—the famous winding staircase structure that scientist Max Delbrück once compared to “a child’s toy that you could buy at the dime store”—the solution to an age-old mystery was at hand.

For James Watson, a young American scientist who would soon join Cavendish Laboratory in England, the excitement began to build in May 1951, while he was attending a conference in Naples. He was listening to a talk by Maurice Wilkins, a New Zealand-born British molecular biologist at King’s College in London, and was struck when Wilkins showed the audience an X-ray image of DNA. Although the pattern of fuzzy gray and black lines on the image was too crude to reveal the structure of DNA, much less its role in heredity, to Watson it provided tantalizing hints as to how the molecule might be arranged. Before long, it was proposed that DNA might be structured as a helix. But when Rosalind Franklin, another researcher at King’s College, produced sharper images indicating that DNA could exist in two different forms, a debate broke out about whether DNA was really a helix after all.

By that time, Watson and fellow researcher Francis Crick had been working on the problem for about two years. Using evidence gathered by other scientists, they made cardboard cutouts of the various DNA components and built models of how the molecule might be structured. Then, in early 1953, as competition was building to be the first to “solve” the structure, Watson happened to be visiting King’s College when Wilkins showed him an X-ray that Franklin had recently made—a striking image that showed clear helical features. Returning to Cavendish Laboratory with this new information, Watson and Crick reworked their models. A short time later, Crick had a flash of insight, and by the end of February 1953, all of the pieces fell into place: the DNA molecule was a double helix, a kind of endless spiral staircase. The phosphate molecules first identified by Friedrich Miescher in 1869 formed its two handrails, the so-called “backbone,” while the base pairs described by Chargaff—A&T and C&G—joined to form its “steps.”

When Crick and Watson published their findings in April 1953, their double helix model was a stunning achievement, not only because it described the structure of DNA, but because it suggested how DNA might work. For example, what exactly is a gene? The new model suggested a gene might be a specific sequence of base pairs within the double helix. And given that the DNA helix is so long—we now know there are about 3.1 billion base pairs in every cell—there were more than enough base pairs to account for the genes needed to make the raw materials of living things, including hereditary traits. The model also suggested how those A&T and C&G sequences might actually make proteins: If the helix were to unwind and split at the point where two bases joined, the exposed single bases could act as a template that the cell could use to make either new proteins or, in preparation for dividing, a new chromosome.
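The template idea is simple enough to sketch in Python. The example below is a deliberately stripped-down illustration, not a model of the real cellular machinery: the ten-letter strand is invented, and the sketch ignores details such as the direction in which each strand is read. It applies only the pairing rule described above (A with T, C with G) to rebuild a partner strand from an exposed one.

```python
from collections import Counter

# The pairing rule from the double helix model: A joins T, C joins G.
PAIR = {"A": "T", "T": "A", "C": "G", "G": "C"}


def partner_strand(template: str) -> str:
    """Build the strand whose bases pair, one by one, with the template."""
    return "".join(PAIR[base] for base in template)


# A made-up stretch of single-stranded DNA exposed when the helix unwinds.
template = "ATGCCGTAAC"
partner = partner_strand(template)

# Copying the copy recovers the original, which is the essence of a template.
recovered = partner_strand(partner)

# Counting bases across both strands shows Chargaff's one-to-one relationship.
base_counts = Counter(template + partner)

print(template)
print(partner)
print(dict(base_counts))
```

Because every A on one strand faces a T on the other, and every C faces a G, the two-stranded molecule always contains equal amounts of A and T and equal amounts of C and G, whatever sequence is chosen.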

Although Crick and Watson did not specify these details in their paper, they were well aware of the implications of their new model. “It has not escaped our notice that the [base] pairing we have postulated immediately suggests a possible copying mechanism for the genetic material.” In a later paper, they added “...it therefore seems likely that the precise sequence of bases is the code which carries the genetic information.” Interestingly, only a few months earlier Crick had been far less cautious in announcing the discovery, when he reportedly “winged” into a local pub and declared that he and Watson had discovered “the secret of life.”

Milestone #9 The great recount: human beings have how many chromosomes?

By the time Crick and Watson had revealed the structural details of DNA in 1953, the world had known for years how many chromosomes are found in human cells. First described in 1882 by Walther Flemming, chromosomes are the tiny paired structures that DNA twists, coils, and wraps itself into. During the next few decades, everyone had seen chromosomes. And though difficult to see and count given technological limits of the time, by the early 1920s geneticist Theophilus Painter was confident enough to boldly proclaim the number that would be universally accepted around the world: 48.

Wait—what?

In fact, it was not until 30 years later, in 1955, that Indonesian-born scientist Joe-Hin Tjio discovered that human cells actually have 46 chromosomes (arranged in 23 pairs). The finding—announced to a somewhat red-faced scientific community in 1956—was made possible by a technique that caused chromosomes to spread apart on a microscope slide, making them easier to count. In addition to nailing down the true number, the advance helped establish the role of cell genetics in medicine and led to subsequent discoveries that linked chromosomal abnormalities with specific diseases.

Milestone #10 Breaking the code: from letters and words to a literature of life

Crick and Watson may have discovered the secret to life in 1953, but one final mystery remained: How do cells use those base pair “steps” inside the DNA helix to actually build proteins? By the late 1950s, scientists had discovered some of the machinery involved—including how RNA molecules help “build” proteins by ferrying raw materials around inside the cell—but it was not until the early 1960s that they finally cracked the genetic code and determined the “language” by which DNA makes proteins.

In August 1961, American biochemist Marshall Nirenberg and his associate J. Heinrich Matthaei announced the discovery of the first “word” in the language of DNA. It consisted of only three letters, with each letter being one of the four bases arranged in a specific order and, in turn, coding for other molecules used to build proteins. And thus the genetic code was broken. By 1966, Nirenberg had identified more than 60 of the so-called “codons,” each representing a unique three-letter word. Each three-letter word was then used to build one of 20 “sentences,” the 20 major amino acids that form the building blocks of protein. And from those protein sentences sprang the story of life, the countless biological substances found in all living things—from enzymes and hormones, to tissues and organs, to the hereditary traits that make us each unique.
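The arithmetic behind those three-letter words is easy to check in Python. The sketch below simply lists every possible arrangement of the four bases taken three at a time. The small lookup table is a partial, illustrative sample of well-known codon assignments written in DNA letters, and the sample sequence is invented; neither is drawn from Nirenberg’s papers.

```python
from itertools import product

BASES = "ACGT"  # adenine, cytosine, guanine, thymine

# Every possible three-letter word: 4 choices for each of 3 positions.
codons = ["".join(letters) for letters in product(BASES, repeat=3)]

# A partial sample of the code, for illustration only (not the full table).
SAMPLE_CODE = {
    "ATG": "methionine",
    "TTT": "phenylalanine",
    "AAA": "lysine",
    "TGG": "tryptophan",
    "TAA": "stop",
}


def read_words(dna: str) -> list:
    """Read a DNA string three letters at a time using the sample table."""
    words = [dna[i:i + 3] for i in range(0, len(dna) - 2, 3)]
    return [SAMPLE_CODE.get(word, "(not in sample table)") for word in words]


print(len(codons))                     # number of possible three-letter words
print(read_words("ATGTTTAAATGGTAA"))   # an invented fifteen-letter sequence
```

With 64 possible words and only 20 major amino acids to specify, most amino acids can be spelled by more than one codon, and a few codons serve as punctuation that tells the cell where a protein ends.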

By the end of 1961, as news spread across the world that the “code of life” had been broken, public reaction spanned a predictable range of extremes. An article in the Chicago Sun-Times optimistically offered that with the new information, “science may deal with the aberrations of DNA arrangements that produce cancer, aging, and other weaknesses of the flesh.” Meanwhile, one Nobel Laureate in chemistry warned that the knowledge could be used for “creating new diseases [and] controlling minds.”

By then, of course, Nirenberg had heard it all. In 1962, he wrote to Francis Crick, noting dryly that the press “has been saying [my] work may result in (1) the cure of cancer and allied diseases (2) the cause of cancer and the end of mankind, and (3) a better knowledge of the molecular structure of God.” But Nirenberg shrugged it off with good humor. “Well, it’s all in a day’s work.”

* * *

And so finally, after thousands of years of speculation, misconception, and myth, the secret of heredity, genetics, and DNA had been revealed. In many ways, the breakthrough was more than anyone could have imagined or bargained for. With the “blueprint” laid out before us, the molecular details of our wiring exposed from the inside-out, humanity collectively changed its entire way of thinking—and worrying—about every aspect of ourselves and our lives. Hidden in the microscopic coils of our DNA was the explanation for everything: the admirable and not-so-admirable traits of ourselves and families, the origins of health and disease, perhaps even the structural basis of good, evil, God, and the cosmos.

Well, not quite. As we now know, DNA has turned out to be considerably more complicated than that—except, perhaps, for the worrying.

But what’s unquestionably true and what forever changed our way of thinking was the discovery that the genetic code is a universal language. And as we sort out the details of how genes influence hereditary traits, health, and disease, one underlying truth is that the same genetic machinery underlies all living things, unifying life in a way whose significance we may not comprehend for years to come.

Fifty years later: more milestones and more mysteries

Fifty years after the genetic code was broken, a funny thing happened on the way to one of medicine’s top ten breakthroughs: It never quite arrived. Since the early 1960s, new milestones have continued to appear like a perpetually breaking wave, each discovery reshaping the shoreline of an ongoing revolution. Consider just a few recent milestones:

• 1969: Isolation of the first individual gene (a segment of bacterial DNA that assists in sugar metabolism)

• 1973: Birth of genetic engineering (a segment of frog DNA is inserted into a bacterial cell, where it is replicated)

• 1984: Birth of genetic fingerprinting (use of DNA sequences for identification)

• 1986: First approved genetically engineered vaccine (hepatitis B)

• 1990: First use of gene therapy

• 1995: DNA of a single-cell organism decoded (the bacterium Haemophilus influenzae)

• 1998: DNA of a multicellular organism decoded (roundworm)

• 2000: DNA of a human decoded (a “working draft” that was subsequently completed in 2003)

When researchers at the Human Genome Project announced the decoding of the human genome in 2000, it helped initiate a new age of genetics that is now laying the groundwork for a widespread revolution in biology and medicine. Today, work from this project and from other researchers around the globe is opening new insights into human ancestry and evolution, uncovering new links between genes and disease, and leading to countless other advances that are now revolutionizing medical diagnosis and treatment.

Gregor Mendel, who in 1865 dared not even speculate on what the “elements” of heredity might be, would surely be amazed: We now know that humans have about 25,000 genes—far fewer than the 80,000 to 140,000 that some once believed—and that this number is comparable to that of some far simpler life forms, including the common laboratory mouse (25,000 genes), mustard weed (25,000 genes), and roundworm (19,000 genes). How could a mouse or weed have as many genes as a human being? Scientists believe that the complexity of an organism may arise not only from the number of genes, but also from the complex ways in which different parts of genes may interact. Another recent surprise is that genes make up only 2% of the human genome, with the remaining content probably playing structural and regulatory roles. And, of course, with new findings come new mysteries: We still don’t know what 50% of human genes actually do, and despite the celebrated diversity of humans, how is it that the DNA of all people is 99.9% identical?

It is now clear that the answer to such questions extends beyond the DNA we inherit to the larger world around us. Humans have long suspected that heredity alone cannot account for our unique traits or susceptibility to disease, and new discoveries are now shedding light on one of the greatest mysteries of all: How do genes and environment interact to make us who we are?

The secret life of snips and the promise of genetic testing

For most of us, it’s difficult to imagine 3.1 billion pairs of anything, much less the chemical bases that make up the DNA found in any given cell. So try instead to imagine 3.1 billion pairs of shoes extending into outer space. Now double that number so you’re picturing 6 billion individual shoes stretching into the cosmos. Six billion is the number of individual bases, which scientists call “nucleotides,” in the human genome. As inconsequential as any one nucleotide may seem, a change to just one nucleotide—called a single nucleotide polymorphism, or SNP (pronounced “snip”)—can significantly impact human traits and disease. If you doubt that one SNP among billions could have much importance, consider that sickle cell anemia arises from just one SNP, and that most forms of cystic fibrosis arise from the loss of three nucleotides. As of 2008, researchers had identified about 1.4 million SNPs in the human genome. What causes these minuscule alterations to our DNA? Key suspects include environmental toxins, viruses, radiation, and errors in DNA copying.

The good news is that current efforts to identify SNPs are not only helping uncover the causes of disease, but creating chromosome “landmarks” with a wide range of applications. In 2005, researchers completed the first phase of the International HapMap Project, in which they analyzed the DNA of people throughout the world and constructed a “map” of such landmarks based on 500,000 or more SNPs. This information is now revealing links between tiny genetic variations and specific diseases, which in turn is leading to new approaches to diagnosis (e.g., genetic testing) and treatment. For example, in the growing field of pharmacogenomics, doctors can use such information to make personalized treatment decisions based on a person’s genetic makeup. Recent examples include genetic tests that can identify forms of breast cancer that are susceptible to certain drugs or that can identify patients who may be susceptible to dangerous side effects when receiving the clot-preventing drug warfarin (Coumadin).

SNP findings are also providing insights into age-old questions such as how much we are influenced by genes and environment. In fact, it’s becoming increasingly clear that many common diseases—including diabetes, cancer, and heart disease—are probably caused by a complex interaction between both. In the relatively new field of epigenetics, scientists are looking at the netherworld where the two may meet—that is, how “external” factors such as exposure to environmental toxins may affect a person’s SNPs and thus susceptibility to disease.

Unfortunately, researchers are also learning that sorting out the role of SNPs and disease is a very complex business. The good news about the HapMap project is that researchers have already uncovered genetic variants associated with the risk of more than 40 diseases, including type 2 diabetes, Crohn’s disease, rheumatoid arthritis, elevated blood cholesterol, and multiple sclerosis. The bad news is that many diseases and traits are associated with so many SNPs that the meaning of any one variation is hard to gauge. According to one recent estimate, 80% of the variation in height in the population could theoretically be influenced by as many as 93,000 SNPs. As David B. Goldstein wrote in a 2009 issue of the New England Journal of Medicine (NEJM), if the risk for a disease involves many SNPs, with each contributing just a small effect, “then no guidance would be provided: In pointing at everything, genetics would point at nothing.”

While many of us are curious to try the latest genetic tests and learn about our risk for disease, Peter Kraft and David J. Hunter cautioned in the same issue of NEJM that, “We are still too early in the cycle of discovery for most tests... to provide stable estimates of genetic risk for many diseases.” However, they add that rapid progress is being made and that “the situation may be very different in just 2 or 3 years.” But as better tests become available, “Appropriate guidelines are urgently needed to help physicians advise patients...as to how to interpret, and when to act on, the results.”

“We’ll figure this out”: the promise of gene therapy

To some people, 1990 was the breakthrough year for genetics and medicine. In that year, W. French Anderson and his colleagues at the National Institutes of Health performed the first successful gene therapy in a four-year-old girl who had an immune deficiency disease caused by a defective gene that normally produces an enzyme called ADA. Her treatment involved an infusion of white blood cells with a corrected version of the ADA gene. But though the results were promising and spurred hundreds of similar clinical trials, a decade later it was clear that few gene therapy trials were actually working. The field suffered another setback in 1999, when 18-year-old Jesse Gelsinger received gene therapy for a non-life-threatening condition. Within days, the therapy itself killed Gelsinger, and the promise of gene therapy seemed to come to a crashing halt. But as one shaken doctor said at Gelsinger’s bedside at the time of his death, “Good bye, Jesse...We’ll figure this out.”

Ten years later, scientists are beginning to figure it out. While the challenges of gene therapy are many—two major issues are how to safely deliver repaired genes into the body and how to ensure that the patient’s body accepts and uses them—many believe that the technique will soon be used to treat many genetic diseases, including blood disorders, muscular dystrophy, and neurodegenerative disorders. Recent progress includes modest successes in treating hereditary blindness, HIV, and rheumatoid arthritis. And in 2009, researchers reported on a follow-up study in which 8 out of 10 patients who received gene therapy for the defective ADA gene had “excellent and persistent” responses. As Donald B. Kohn and Fabio Candotti wrote in a 2009 editorial in the NEJM, “The prospects for continuing advancement of gene therapy to wider applications remain strong” and may soon “fulfill the promise that gene therapy made two decades ago.”

In other words, the breakthrough has arrived and continues to arrive. With the double helix unwinding in so many directions—spinning off findings that impact so many areas of science, society, and medicine—we can be patient. Like Hippocrates’ indulgence of the woman who gazed too long at the portrait of an Ethiopian on her wall, like Mendel’s years of counting thousands of pea plant traits, and like the milestone work of countless researchers over the past 150 years—we can be patient. It’s a long road, but we’ve come a long way.

* * *

Up until the early 1800s, many scientists believed—like Hippocrates 2,200 years before them—that maternal impression was a reasonable explanation for how a mother might pass some traits on to her unborn child. After all, perhaps the shock of what a pregnant mother witnessed was somehow transferred to her fetus through small connections in the nervous system. But by the early 1900s, with advances in anatomy, physiology, and genetics providing other explanations, the theory of maternal impression was abandoned by most physicians.

Most, but not all...

• In the early 1900s, the son of a pregnant mother was violently knocked down by a cart. He was rushed to the hospital, where the mother could not restrain herself from a fearful glance as the physician stitched up her son’s bloodied scalp. Seven months later she gave birth to a baby girl with a curious trait: There was a bald area on her scalp in exactly the same region and size as where her brother had been wounded.

This story, along with 50 other reports of maternal impression, appeared in an article published in a 1992 issue of the Journal of Scientific Exploration. The author, Ian Stevenson, a physician at the University of Virginia School of Medicine, did not mention genetics, made no attempt to provide a scientific explanation, and noted, “I do not doubt that many women are frightened during a pregnancy without it having an ill effect on their babies.” Nevertheless, based on his analysis, Stevenson concluded, “In rare instances maternal impressions may indeed affect gestating babies and cause birth defects.”

In the brave new world of genes, nucleotides, and SNPs, it’s easy to dismiss such mysteries as playing no role in the inheritance of physical traits—no more than, say, DNA was thought to have for 75 years after its discovery.