9. Medicines for the Mind: The Discovery of Drugs for Madness, Sadness, and Fear

One cold evening in February 2008, a 39-year-old man dressed in a dark hat, trench coat, and sneakers ducked out of the freezing rain and into a suite of mental health offices several blocks east of Central Park in New York City. Toting two black suitcases, he climbed a short flight of steps, entered a waiting room, and—directed by the voice of God—prepared to rob psychiatrist Dr. Kent Shinbach. But the man was apparently in no hurry; told that Dr. Shinbach was busy, he put his luggage aside, sat down, and for the next 30 minutes made small talk with another patient.

Then something went terribly wrong. For no clear reason, the man suddenly got up and entered the nearby office of psychologist Kathryn Faughey. Armed with two knives and a meat cleaver, he erupted in a frenzied rage, viciously slashing and stabbing the 56-year-old therapist in the head, face, and chest, splattering blood across the walls and furniture. Hearing the screams, Dr. Shinbach rushed out of his office to help, only to find Dr. Faughey’s lifeless body on the blood-soaked carpet. Before the 70-year-old psychiatrist could escape, the man began attacking him, stabbing him in the face, head, and hands. The man finally pinned Dr. Shinbach against a wall with a chair, stole $90 from his wallet, and fled. Dr. Shinbach survived, but Dr. Faughey—who was described as a “good person” who “changed and saved people’s lives”—was pronounced dead at the scene.

It wasn’t until several days later that police arrested and charged David Tarloff with the murder and the bizarre details began to unfold. “Dad, they say I killed some lady,” Tarloff said in a phone call to his father at the time of his arrest. “What are they talking about?” As Tarloff’s dazed words suggested, what everyone would soon be talking about was not only the evidence pointing to his guilt, but his full-blown insanity. As The New York Times reported in subsequent weeks, Tarloff lived in nearby Queens and had been diagnosed with schizophrenia 17 years earlier, at the age of 22. The diagnosis was made by Dr. Shinbach, who no longer even remembered Tarloff or the diagnosis. According to Tarloff, his only motive the night of the murder had been to rob Dr. Shinbach. Dr. Faughey had somehow crossed his path, resulting in a random and tragically meaningless death. Why the sudden need for cash? Tarloff explained that he wanted to free his mother from a nursing home so they could “leave the country.”

Which is where things really get interesting.

Tarloff’s mother was in a nearby nursing home, but Tarloff’s interest had long since escalated from healthy concern to pathologic obsession, with “harassing” visits, frequent daily phone calls, and, most recently, an incident in which he was found lying in bed with her in the ICU. According to his father, Tarloff had a long history of mental health problems. In addition to bouts of depression, anxiety, and mania, he’d displayed such obsessive-compulsive behaviors as taking 15 or 20 showers a day and calling his father 20 or more times after an argument to say “I’m sorry” in just the right way. But perhaps most striking were the recent symptoms suggesting that Tarloff had completely lost touch with reality. Apart from the auditory hallucinations in which God encouraged him to rob Dr. Shinbach, Tarloff’s paranoia and mental confusion continued after his arrest, when he blurted out in a courtroom, “If a fireman comes in, the police come in here, the mayor calls, anyone sends a messenger, they are lying. The police are trying to kill me.”

* * *

Who is David Tarloff, and how did he get this way? By most accounts, Tarloff had been a well-adjusted youth with a relatively normal childhood. One Queens neighbor reported that Tarloff had always been “the ladies man, tall and thin...with tight jeans and always good looking.” His father agreed that Tarloff had been “handsome, smart, and happy” while growing up but that something changed as he entered young adulthood and went to college. When he returned, his father recalled, Tarloff was moody, depressed, and silent. He “saw things” and believed people were “against him.” Unable to hold a job, he dropped out of two more colleges. After his diagnosis of schizophrenia, and over the next 17 years, Tarloff had been committed to mental hospitals more than a dozen times and prescribed numerous antipsychotic drugs. Yet despite some petty shoplifting and occasionally bothering people for money, neighbors viewed him with more pity than fear. One local store clerk described Tarloff as “a sad figure whose stomach often hung out, with his pants cuffs dragging and his fly unzipped.”

But in the year leading up to the murder, Tarloff’s mental health seemed to worsen. Eight months earlier, he had threatened to kill everyone at the nursing home where his mother was kept. Two months after that, police were called to his home by reports that he was behaving violently. And two weeks before the murder, he attacked a nursing home security guard. Tarloff’s mental state was clearly deteriorating, and it continued to decline after the murder. A year later, while awaiting trial in a locked institution, he claimed that he was the Messiah and that DNA tests would prove him to be the son of a nearby inmate, who himself believed he was God. Doctors despaired that Tarloff “may never be sane enough to understand the charges against him.”

Mental illness today: the most disorderly of disorders

Madness, insanity, psychosis, hallucinations, paranoia, delusions, incoherency, mania, depression, anxiety, obsessions, compulsions, phobias...

Mental illness goes by many names, and it seems that David Tarloff had them all. But Tarloff was lucky in one way: After centuries of confused and conflicting attempts to understand mental disorders, physicians are now better than ever at sorting through the madness. But though most psychiatrists would agree with the specific diagnosis given to Tarloff—acute paranoid schizophrenia—such precision cannot mask two unsettling facts: The symptoms experienced by Tarloff also occur in many other mental disorders, and his treatment failure led to the brutal murder of an innocent person. Both facts highlight a nasty little secret about mental illness: We still don’t know exactly what it is, what causes it, how to diagnose and classify it, or the best way to treat it.

Oops.

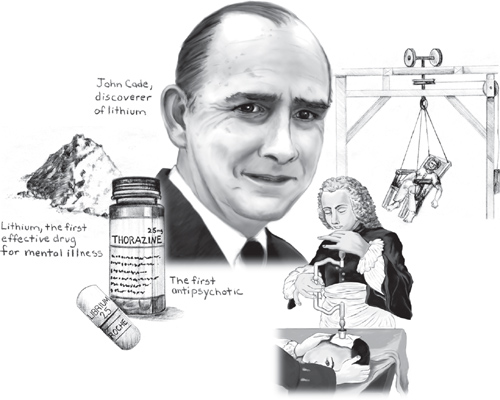

That’s not to discount the many advances that have been made over the centuries, which range from the realization in ancient times that mental illness is caused by natural factors rather than evil spirits, to the milestone insight in the late eighteenth century that mentally ill patients fared better when treated with kindness rather than cruelty, to one of the top ten breakthroughs in the history of medicine: The mid-twentieth-century discovery of the first effective drugs for madness, sadness, and fear—more commonly known as schizophrenia and manic-depression (madness), depression (sadness), and anxiety (fear).

Yet despite these advances, mental illnesses remain a unique and vexing challenge compared with most other human ailments. On the one hand, they can be as disabling as any “physical” disease; in addition to often lasting a lifetime, destroying the lives of individuals and families, and crippling careers, they can also be fatal, as in cases of suicide. On the other hand, while most diseases have a generally known cause and leave a trail of evidence—think of infections, cancer, or the damaged blood vessels underlying heart disease—mental disorders typically leave no physical trace. Lacking objective markers and a clear link between cause and effect, they cannot be diagnosed with laboratory tests and present no clear divisions between one condition and another. All of these factors stymie the search for treatments, which—no surprise—tend to work better when you actually know what you’re treating.

Because of such challenges, the “Bible” for diagnosing mental disorders in the United States—the American Psychiatric Association’s Diagnostic and Statistical Manual of Mental Disorders (DSM-IV)—provides guidelines based mainly on “descriptive” symptoms. But as seen with David Tarloff and many other patients, descriptive symptoms can be subjective, imprecise, and not exclusive to any one disorder. Even the DSM-IV—all 943 pages of it—states that “It must be admitted that no definition adequately specifies precise boundaries for the concept of a mental disorder.”

At least there is agreement on a general definition of mental illness and how debilitating it can be. According to the National Alliance on Mental Illness (NAMI), mental disorders are “medical conditions that disrupt a person’s thinking, feeling, mood, ability to relate to others, and daily functioning.” In addition, they often “diminish a person’s ability to cope with the ordinary demands of life,” affect people of all ages, races, religions, or incomes, and are “not caused by personal weakness.”

Recent studies have also provided eye-opening statistics as to how common and serious mental disorders are. The World Health Organization (WHO) recently found that about 450 million people worldwide suffer from various mental illnesses and that nearly 900,000 people commit suicide each year. Just as alarming, a 2008 WHO report found that based on a measure called “burden of disease”—defined as premature death combined with years lived with a disability—depression now ranks as the fourth most serious disease in the world. By 2030, it is projected to become the world’s second most serious burden of disease, after HIV/AIDS.

But perhaps the biggest barrier to understanding and treating mental illness is the sheer number of ways the human mind can go awry: The DSM-IV divides mental illness into as many as 2,665 categories. Although such fragmentation of human suffering can be helpful to researchers, a 2001 WHO report found that the most serious mental illnesses fall into just four categories that rank among the world’s top ten causes of disability: Schizophrenia, Bipolar Disorder (manic-depression), Depression, and Anxiety. The identification of these four disorders is intriguing because, as it turns out, one of the ten greatest breakthroughs in medicine was the discovery of drugs for these same conditions—antipsychotic, anti-manic, antidepressant, and anti-anxiety drugs. What’s more, physicians have been struggling to understand these disorders for thousands of years.

The many faces of madness: early attempts to understand mental illness

“He huddled up in his clothes and lay not knowing where he was. His wife put her hand on him and said, ‘No fever in your chest. It is sadness of the heart.’”

—Ancient Egyptian papyri, c. 1550 BC

Descriptions of the four major types of mental illness date back to the dawn of civilization. In addition to this account of depression, reports of schizophrenia-like madness can be found in many ancient documents, including Hindu Vedas from 1400 BC that describe individuals under the influence of “devils” who were nude, filthy, confused, and lacking self-control. References to manic-depression—periods of hyper-excited and grandiose behavior alternating with depression—can be found as early as the second century AD in the writings of Soranus of Ephesus, a Greek physician who practiced in Rome. And in the fourth century BC, Aristotle described the debilitating effects of anxiety, linking it to such physical symptoms as heart palpitations, paleness, diarrhea, and trembling.

The most durable theory of mental illness, dating back to the fourth century BC and influential up until the 1700s, was Hippocrates’ humoral theory, which stated that mental illness could occur when the body’s four “humors”—phlegm, yellow bile, black bile, and blood—became imbalanced. Thus, excess phlegm could lead to insanity; excess yellow bile could cause mania or rage; and excess black bile could result in depression. While Hippocrates was also the first to classify paranoia, psychosis, and phobias, later physicians devised their own categories. For example, in 1222 AD Indian physician Najabuddin Unhammad listed seven major types of mental illness, including not only madness and paranoia, but “delusion of love.”

But perhaps the most breathtakingly simple classification was seen in the Middle Ages and illustrated by the case of Emma de Beston. Emma lived in England at a time when mental illness was divided into just two categories: Idiots and Lunatics. The division wasn’t entirely crazy. Based on legal custom of the day, idiots were those born mentally incompetent and whose inherited profits went to the king; lunatics were those who had lost their wits during their lifetimes and whose profits stayed with the family. According to well-documented legal records of the time, on May 1, 1378, Emma’s mind was caught “by the snares of evil spirits,” as she suddenly began giving away a large part of her possessions. In 1383, at her family’s request, Emma was brought before an inquisition to have her mental state assessed. Her responses to their questions were revealing: She knew how many days were in the week but could not name them; how many men she had married (three) but could name only two; and she could not name her son. Based on her sudden mental deterioration, Emma was judged a lunatic—presumably to the satisfaction and profit of her family.

By the sixteenth and seventeenth centuries, with the growing influence of the scientific revolution, physicians began to take a harder look at mental illness. In 1602, Swiss physician Felix Platter published the first medical textbook to discuss mental disorders, noting that they could be explained by both Greek humoral theory and the work of the devil. Another key milestone came in 1621, when Robert Burton, a vicar and librarian in Oxford, England, published The Anatomy of Melancholy, a comprehensive text on depression that rejected supernatural causes and emphasized a humane view. Depression, wrote Burton, “is a disease so grievous, so common, I know not [how to] spend my time better than to prescribe how to prevent and cure a malady that so often crucifies the body and the mind.” Burton also provided vivid descriptions of depression, including: “It is a chronic disease having for its ordinary companions fear and sadness without any apparent occasion... [It makes one] dull, heavy, lazy, restless, unapt to go about any business.”

Although physicians struggled for the next two centuries to understand madness, one early milestone came in 1810, when English physician John Haslam published the first book to provide a clear description of a patient with schizophrenia. The patient, James Tilly Matthews, believed an “internal machine” was controlling his life and torturing him—an interesting delusion given that Matthews lived at the dawn of the Industrial Revolution. Haslam also summarized the confusion of many physicians when he wrote that madness is “a complex term for all its forms and varieties. To discover an infallible definition... I believe will be found impossible.” But physicians did not give up, and in 1838 French psychiatrist Jean Etienne Dominique Esquirol published the first modern treatise on mental disorders, in which he introduced the term “hallucinations” and devised a classification that included paranoia, obsessive-compulsive disease, and mania.

Meanwhile, during the 1800s, the term “anxiety” began to appear in the medical literature with increasing frequency. Until then, anxiety had often been viewed as a symptom of melancholy, madness, or physical illness. In fact, views on where anxiety fit into the spectrum of mental illness shifted many times over the next two centuries, from Sigmund Freud’s 1894 theory that “anxiety neurosis” was caused by a “deflection of sexual excitement,” to the twentieth-century realization that the “shell shock” suffered by soldiers in wartime was a serious mental disorder related to anxiety. Although the APA did not include “anxiety” in its manuals until 1942, today the DSM-IV lists it as a major disorder, with subcategories that include panic disorder, obsessive-compulsive disorder (OCD), posttraumatic stress disorder (PTSD), social phobia, and various specific phobias.

Although the profession of psychiatry was “born” in the late 1700s, madness remained a vexing problem throughout most of the nineteenth century. The problem was that the symptoms of insanity could be so varied—from angry fits of violence to the frozen postures and stone silence of catatonia; from bizarre delusions and hallucinations to manic tirades of hyper-talkativeness. But in the late 1890s, German psychiatrist Emil Kraepelin made a landmark discovery. After studying thousands of psychotic patients and documenting how their illness progressed over time, Kraepelin was able to sort “madness” into two major categories: 1) Manic-Depression, in which patients suffered periods of mania and depression but did not worsen over time; and 2) Schizophrenia, in which patients not only had hallucinations, delusions, and disordered thinking, but often developed their symptoms in young adulthood and did worsen over time. Although Kraepelin called the second category “dementia praecox,” the term schizophrenia was later adopted to reflect the “schisms” in a patient’s thoughts, emotions, and behavior.

Kraepelin’s discovery of the two new categories of madness was a major milestone that remains influential to this day and is reflected in the DSM-IV. In fact, David Tarloff is a textbook case of schizophrenia because his symptoms (hallucinations, paranoia, delusions, and incoherent speech) started in young adulthood and worsened over time. Kraepelin’s insight not only helped clear the foggy boundaries between two major mental disorders, but set the stage for the discovery of drugs to treat mental illness—a remarkable achievement given the alarming array of treatments used in the previous 2,500 years...

Bloodletting, purges, and beatings: early attempts to “master” madness

“Madmen are strong and robust... They can break cords and chains, break down doors or walls, easily overthrow many endeavoring to hold them. [They] are sooner and more certainly cured by punishments than medicines.”

—Thomas Willis, 1684

Treatments for mental illness have a long history of better serving the delusions of those administering them than the unfortunate patients receiving them. For example, while Hippocrates’ treatments undoubtedly made sense to those who believed in humoral theory, his prescriptions for eliminating excess bile and phlegm—bleeding, vomiting, and strong laxatives—were probably of little comfort to his patients. And while some ancient treatments involved gentler regimens, such as proper diet, music, and exercise, others were literally more frightening: In the thirteenth century AD, Indian physician Najabuddin Unhammad’s prescriptions for mental illness included scaring patients into sanity through the use of snakes, lions, elephants, and “men dressed as bandits.”

The Middle Ages also saw the rise of institutions to care for the mentally ill, but with decidedly mixed results. On the plus side, the religion of Islam taught that society should provide kindly care for the insane, and followers built hospitals and special sections for the mentally ill, including facilities in Baghdad (750 AD) and Cairo (873 AD). In contrast, perhaps the most famous and most notorious asylum in Europe was London’s Bethlem hospital, which began admitting insane patients around 1400. Over the next century, Bethlem became dominated by patients with severe mental illness, leading to its reputation as a “madhouse” and the popular term based on its name, “bedlam.” And that’s when the trouble really began.

Throughout the 1600s and 1700s, imprisonment and mistreatment of the insane in European asylums like Bethlem occurred with alarming frequency. Driven by stigma and fear, society began to view mentally ill patients as incurable wild beasts who had to be restrained with chains and tamed with regular beatings and cruel treatments. “Madmen,” wrote English physician Thomas Willis in a 1684 book, “are almost never tired... They bear cold, heat, fasting, strokes, and wounds without sensible hurt.” While Willis was probably referring to extreme cases of schizophrenia and mania, the public was intrigued—and amused—to watch from a distance: At one time, up to 100,000 people visited Bethlem yearly, happy to pay the one-penny admission fee to view the madmen and their “clamorous ravings, furious gusts of outrageous action, and amazing exertion of muscular force.”

Asylum directors, meanwhile, focused on “treatments” to keep their patients under control. While Willis contended that “bloodletting, vomits, or very strong purges are most often convenient,” others suggested that the best way to gain “complete mastery” over madmen was near-drowning. To that end, creative therapies included hidden trapdoors in corridors to drop unsuspecting lunatics into a “bath of surprise” and coffins with holes drilled into the cover, in which patients were enclosed and lowered into water. But perhaps the cruelest “therapy” of all was the rotating/swinging chair, as described by Joseph Mason Cox in 1806. Patients were strapped into a chair that hung from several chains and that an operator could simultaneously swing and/or revolve “with extraordinary precision.” Cox wrote that with a series of maneuvers—increasing the swing’s velocity, quick reversals, pauses, and sudden stopping—a skilled operator could trigger “an instant discharge of the stomach, bowels, and bladder, in quick succession.”

While the mistreatment of mentally ill patients continued throughout most of the eighteenth century, a key milestone occurred in the late 1700s when French physician Philippe Pinel began a movement he called the “moral treatment of insanity.” In 1793, Pinel became director of a men’s insane asylum at Bicêtre. Within a year, he had developed a new philosophy and approach to treating mental illness based on carefully observing and listening to patients, recording the history of their illness, and treating them “in a psychologically sensitive way.” In his famous 1794 Memoir on Madness, Pinel wrote that “One of the most fundamental principles of conduct one must adopt toward the insane is an intelligent mixture of affability and firmness.” Pinel also strongly opposed physical restraints unless absolutely necessary. In 1797, following a similar move by an associate at Bicêtre, Pinel famously unshackled patients at Salpêtrière, a public hospital for women. Today, Pinel is considered to be the father of psychiatry in France.

Unfortunately, although the moral treatment promoted by Pinel and others was influential throughout the 1800s, the model eventually failed as increasing numbers of patients became “warehoused” in large, crowded institutions. By the end of the nineteenth century, other trends had begun to dominate the field of mental illness, including a growing emphasis on the anatomy and physiology of the nervous system and new psychological approaches developed by Freud and his followers. But though Freud’s talk-based treatments were influential in the United States and were a vital precursor to modern psychotherapy, they eventually fell out of favor due to their ineffectiveness in serious mental illness and lack of a biological foundation.

And so by the early 1900s, after centuries of dismal failure, the world was ready for a new approach to treating mental illness. The first milestone finally arrived in the form of several “medical” treatments that ranged from the frightening to the bizarre. But at least they worked—sort of.

Milestone #1 Sickness, seizure, surgery, and shock: the first medical treatments for mental illness

Sickness. Insanity can have many causes, but probably one of the most humbling is syphilis, a sexually transmitted disease. Today syphilis is easily treated and cured with penicillin, but in the early 1900s it often progressed to its final stages, when it can attack the brain and nerves and cause, among other symptoms, insanity. In 1917, Austrian psychiatrist Julius Wagner-Jauregg decided to investigate a possible treatment for this one cause of mental illness based on an idea he’d been thinking about for 30 years: He would attempt to cure one devastating disease, syphilis, with another: malaria.

The idea wasn’t without precedent. Malaria produces fever, and physicians had long known that, for unknown reasons, mental illness sometimes improved after a severe fever. And so in 1917, Wagner-Jauregg injected nine patients with a mild, treatable form of malaria. The patients soon developed a fever, followed by a side effect that Wagner-Jauregg called “gratifying beyond expectation.” The mental disturbances in all nine patients improved, with three being “cured.” Malaria treatment was subsequently tested throughout the world, with physicians reporting cure rates as high as 50%, and Wagner-Jauregg won the Nobel Prize in 1927 for his discovery. Although his treatment addressed only one type of insanity, caused by an infection that is easily prevented today, it showed for the first time that mental illness could be medically treated.

Seizure. In 1927, Polish physician Manfred Sakel discovered that too much of a good thing—insulin—can be both bad and good. Normally, the body needs insulin to metabolize glucose; without enough of it, diabetes results. Sakel had noticed that when a morphine-addicted woman was accidentally given an overdose of insulin and fell into a coma, she later woke up in an improved mental state. Intrigued, he wondered if a similar “mistake” might help other patients with mental illness. Sure enough, when he gave insulin overdoses to patients with schizophrenia, they experienced coma and seizures, but also recovered with improved mental functioning. Sakel reported his technique in 1933, and it was soon being hailed as the first effective medical treatment for schizophrenia. Within a decade, “insulin shock” therapy had spread throughout the world, with reports that more than 60% of patients were helped by the treatment.

While Sakel was experimenting with insulin, others were pursuing a different but related idea. Physicians had observed that epilepsy was rare in patients with schizophrenia, but in those who did have epilepsy, their mental symptoms often improved after a seizure. This raised the question: Could schizophrenia be treated by purposely triggering seizures? In 1935, Hungarian physician Ladislaus von Meduna, who was experienced in both epilepsy and schizophrenia, induced seizures in 26 patients with schizophrenia by injecting them with a drug called metrazol (cardiazol). Although the effect was unnerving—patients experienced quick and violent convulsions—the benefit was impressive, with 10 of the 26 patients recovering. Subsequent studies found that up to 50% of patients with schizophrenia could be discharged after treatment and that some had “dramatic cures.” When Meduna reported his results in 1937, insulin therapy was well-known, leaving physicians with a choice: Metrazol was cheaper and faster but produced convulsions so violent that 42% of patients developed spinal fractures. In contrast, insulin was easier to control and less dangerous but took longer. But the debate would soon be moot, as both were replaced by treatments that were less risky and more effective.

Surgery. Surgery has been used for mental illness since ancient times, when trepanning, or cutting holes in the skull, was used to relieve pressure, release evil spirits, or perhaps both. But modern psychosurgery did not begin until 1935, when Portuguese physician Egas Moniz introduced the prefrontal leucotomy—the notorious lobotomy—in which a surgical instrument was inserted into the brain to sever the connections between the frontal lobes and other brain areas (later American versions of the procedure notoriously used an ice pick-like tool). The procedure seemed to work, and between 1935 and 1955 it was used in thousands of people and became a standard treatment for schizophrenia. Although Moniz won a Nobel Prize in 1949 for his technique, it eventually became clear that many patients were not helped and that its adverse effects included irreversible personality damage. After 1960, psychosurgery was modified to be less destructive, and today it is sometimes used for severe cases of mental illness.

Shock. In the late 1930s, Italian neurologist Ugo Cerletti was as impressed as anyone else when he heard that insulin and metrazol could improve the symptoms of schizophrenia. But given the risks, Cerletti thought he had a better idea. A specialist in epilepsy, he knew that electric shocks could cause convulsions, and so he joined with Italian psychiatrist Lucio Bini to develop a technique for delivering brief, controlled electric shocks. In 1938, after testing in animals, they tried their new electroconvulsive therapy, or ECT, on a delusional, incoherent man found wandering in the streets. The patient improved after just one treatment and recovered after a series of 11 treatments. Follow-up studies confirmed that ECT could improve schizophrenia, but physicians soon found it was even more effective for depression and bipolar disorder. Eventually, ECT replaced metrazol and insulin and became the preferred treatment around the world. Although ECT declined after the 1950s—due in part to concerns about its misuse—it was later refined and today is considered to be safe and effective for difficult-to-treat mental disorders.

And so by the 1940s, and for the first time in history, patients with serious mental disorders could be sickened, seized, severed, and shocked into feeling better. Not exactly confidence-inspiring, but enough of a milestone to encourage some researchers to believe they could find something better.

Milestone #2 Mastering mania: lithium takes on the “worst patient on the ward”

“He is very restless, does not sleep, is irrational, and talks from one idea to another... He is so lacking in attention that questions fail to interrupt his flight of ideas... He is dirty and destructive, noisy both day and night... He has evidently been a great nuisance at home and in the neighborhood for the last few days...”

—From the case report of “WB,” a patient with chronic mania

This report—taken from the medical records of a patient who was about to change the course of medical history—illustrates how serious and troublesome mania can be, not only for patients, but for anyone in their vicinity, inside or outside an institution. Although WB was in his 50s when these comments were written, the records of his mental illness date back decades, beginning just after he joined the Australian army in 1916 at the age of 21. Within a year, he would be hospitalized for “periods of permanent excitement” and discharged as medically unfit. Over the next few decades, he would be admitted to psychiatric hospitals multiple times with bouts of mania and depression, frustrating friends and family with behavior that ranged from “quiet and well behaved” to “mischievous, erratic, loquacious, and cunning.” But perhaps his most memorable episode came in 1931, when he left a mental hospital dressed only in pajamas, entered a movie theater, and began singing to the audience.

By 1948, WB was in his 50s and had been a patient at Bundoora Repatriation Hospital in Melbourne, Australia, for five years. Diagnosed with chronic mania, he was described by the staff as “restless, dirty, destructive, mischievous, interfering, and long-regarded as the most troublesome patient on the ward.” Small wonder that in March 1948, physician John Cade chose WB as his first patient to try a new drug for treating mania—despite his initial impression that the drug would have the opposite effect.

Cade had begun looking for a treatment based on the theory that mania was a state of intoxication caused by some substance circulating in the blood. Figuring that the toxic substance might be excreted in the urine, he collected samples from patients with mania and injected them into animals. Cade’s hunch seemed to be confirmed: The urine from patients with mania was more toxic than the urine from healthy people or those with other mental illnesses. He then began searching for the toxic substance in the urine and soon focused on uric acid, which appeared to make the toxin more potent. Because uric acid dissolves poorly in water, he tested it in a more soluble form, a salt called “lithium urate.” But to Cade’s surprise, rather than making the urine more toxic, the compound had the opposite effect. So he reversed his line of thinking: Perhaps lithium itself could protect against mania. To test this, he injected guinea pigs with a different lithium salt, lithium carbonate. When the animals responded with subdued behavior, Cade was sufficiently encouraged to try it out in people. After giving himself a dose to make sure it was safe, Cade administered the lithium to the most troublesome manic patient in the hospital...

And so on March 29, 1948, WB became the first patient in history to receive lithium for the treatment of mania. Almost immediately, WB began to “settle down,” and a few weeks later, Cade was astonished to report, “There has been a remarkable improvement... He now appears to be quite normal. A diffident, pleasant, energetic little man.” Two months after that, WB left the hospital for the first time in five years and was “soon working happily at his old job.”

In addition to WB, Cade also gave lithium to nine other patients with mania, six with schizophrenia, and three with depression, but the effects in the patients with mania were particularly dramatic. He reported his findings in the Medical Journal of Australia the following year but left further studies of lithium to other Australian researchers, who conducted pivotal trials in the 1950s. Although lithium was not approved by the U.S. Food and Drug Administration until 1970, studies since then have shown that lithium use has significantly lowered mortality and suicidal behavior. In addition, one study found that between 1970 and 1991, the use of lithium in the U.S. saved more than $170 billion in direct and indirect costs. Lithium is far from perfect (it has many side effects, some potentially serious), but it still plays an important role today in the treatment of mania and several other mental illnesses.

With equal doses of curiosity and luck, Cade discovered the first effective drug for mental illness. His discovery was also a landmark because, in showing that lithium is more effective in mania than in schizophrenia, he validated Emil Kraepelin’s theory that the two disorders are distinct. It was the birth of a new understanding of mental illness and the start of a “Golden Age” of psychopharmacology that would continue for the next ten years with the discovery of three more milestone treatments.

Milestone #3 Silencing psychosis: chlorpromazine transforms patients and psychiatry

If you happened to be strolling down the streets of Paris in the early 1950s and bumped into a middle-aged man named Giovanni, you probably would have known exactly what to do: Cross quickly to the other side. Giovanni was a manual worker who often expressed himself in alarming ways, including giving impassioned political speeches in cafes, picking fights with strangers, and, on at least one occasion, walking down the street with a flower pot on his head while proclaiming his love of liberty.

It’s no surprise that in 1952, while a psychiatric patient at the Sainte-Anne Hospital in Paris, Giovanni was selected by doctors to try out a new drug. When the results were reported later in the year, the psychiatric community reacted with shock and disbelief. But within a few years, the drug, called chlorpromazine and better known as Thorazine in the U.S., would be prescribed to tens of millions of patients around the world, transforming the treatment of mental illness.

Like many discoveries in medicine, the road to chlorpromazine was convoluted and unlikely, the result of a quest that initially had little to do with its final destination. Chlorpromazine was first synthesized in 1950 by scientists in France who were looking for a better antihistamine—not to cure allergic sniffles, but because they thought such drugs could help surgeons use lower doses of anesthesia and thus help patients better tolerate the trauma of surgery. In 1951, after initial studies suggested chlorpromazine might be a promising candidate, French anesthesiologist Henri-Marie Laborit administered it to his surgical patients at the Val-de-Grace hospital. Laborit was impressed and intrigued: Not only did the drug help patients feel better after their operations, it made them feel more relaxed and calm before the operation. Laborit speculated in an early article that there might be a “use for this compound in psychiatry.”

By January, 1952, Laborit had convinced his colleagues in the hospital’s neuropsychiatry department to try the drug in their psychotic patients. They agreed and found that giving chlorpromazine along with two other drugs quickly calmed down a patient with mania. But it was not until later that year that two other psychiatrists—Jean Delay and Pierre Deniker at the Sainte-Anne Hospital in Paris—tried giving chlorpromazine alone to Giovanni and 37 other patients. The results were dramatic: Within a day, Giovanni’s behavior changed from erratic and uncontrollable to calm; after nine days he was joking with the medical staff and able to maintain a normal conversation; after three weeks he appeared normal enough to be discharged. The other psychotic patients showed a similar benefit.

Despite its initial shock, the psychiatric community was quick to embrace the new treatment. By the end of 1952, chlorpromazine was commercially available in France, and the U.S. followed with Thorazine in 1954. By 1955, studies around the world were confirming the therapeutic effects of chlorpromazine. Almost universally, psychiatrists were amazed at its effects in patients with schizophrenia. Within days, previously unmanageable patients who had been aggressive, destructive, and confused were able to sit calmly with a clear mind, oriented to their surroundings, and talk rationally about their previous hallucinations and delirium. Clinicians reported that the atmosphere in mental hospitals changed almost literally overnight, as patients were not only freed from straitjackets, but from the institutions themselves.

By 1965, more than 50 million patients worldwide had received chlorpromazine, and the “deinstitutionalization” movement, for good or bad, was well underway. The impact was obvious in terms of shorter hospital stays and fewer admissions: A psychiatric hospital in Basel, Switzerland, reported that from 1950 to 1960 the average stay had decreased from 150 days to 95 days. In the United States, the number of patients admitted to psychiatric hospitals had increased in the first half of the twentieth century from 150,000 to 500,000; by 1975, the number had dropped to 200,000.

Although chlorpromazine was the most prescribed antipsychotic agent throughout the 1960s and 1970s, by 1990 more than 40 other antipsychotic drugs had been introduced worldwide. The push for new and better antipsychotics is understandable given the concerns about side effects. One study in the early 1960s found that nearly 40% of patients who took chlorpromazine or other antipsychotics experienced “extrapyramidal” side effects, a collection of serious symptoms that can include tremor, slurred speech, and involuntary muscle contractions. For this reason, researchers began to develop “second-generation” antipsychotics in the 1960s, which eventually led to the introduction of clozapine (Clozaril) in the U.S. in 1990. While clozapine and other second-generation agents pose their own risks, they’re less likely to cause extrapyramidal symptoms. Second-generation antipsychotics are also better at treating “negative” symptoms of schizophrenia (that is, social withdrawal, apathy, and “flattened” mood), though none are superior to chlorpromazine for treating “positive” symptoms, such as hallucinations, delusions, and disorganized speech.

Despite the dozens of antipsychotics available today, it’s now clear that these drugs don’t work in all patients, nor do they always address all of the symptoms of schizophrenia. Nevertheless, coming just a few years after the discovery of lithium, chlorpromazine was a major milestone. As the first effective drug for psychosis, it transformed the lives of millions of patients and helped reduce the stigma associated with mental illness. And so by the mid-1950s, drugs were now available for two major types of mental illness, bipolar disorder and schizophrenia. As for depression and anxiety, their moment in the sun was already on the horizon.

Milestone #4 Recovering the ability to laugh: the discovery of antidepressants

Most of us think we know something about depression—that painful sadness or “blues” that periodically haunts our lives and sets us back for a few hours or days.

Most of us would be wrong.

True clinical depression—one of the four most serious mental disorders and projected to be the second-most serious burden of disease in 2030—is not so much a setback as a tidal wave that overwhelms a person’s ability to live. When major depression gets a grip, it does not let go, draining energy, stealing interest in almost all activities, blowing apart sleep and appetite, blanketing thoughts in a fog, hounding a person with feelings of worthlessness and guilt, filling them with obsessions of suicide and death. As if all that weren’t enough, up until the 1950s, people with depression faced yet one more burden: the widespread view that the suffering was their own fault, a personality flaw that might be relieved with psychoanalysis, but certainly not drugs. But in the 1950s, the discovery of two drugs turned that view on its head. They were called “antidepressants”—which made a lot more sense than naming them after the conditions for which they were originally developed: tuberculosis and psychosis.

The story of antidepressants began with a failure. In the early 1950s, despite Selman Waksman’s recent milestone discovery of streptomycin—the first successful antibiotic for tuberculosis (see Chapter 7)—some patients were not helped by the new drug. In 1952, while searching for other anti-tuberculosis drugs, researchers found a promising new candidate called iproniazid. In fact, iproniazid was more than promising, as seen that year in dramatic reports from Sea View Hospital on Staten Island, New York. Doctors had given iproniazid to a group of patients who were dying from tuberculosis, despite being treated with streptomycin. To everyone’s shock, the new drug did more than improve their lung infections. As documented in magazine and news articles that caught the attention of the world, previously terminal patients were “reenergized” by iproniazid, with one famous photograph showing some “dancing in the halls.” But although many psychiatrists were impressed by iproniazid and considered giving it to their depressed patients, interest soon faded due to concerns about its side effects.

Although reports that iproniazid and another anti-tuberculosis drug (isoniazid) could relieve depression were sufficiently intriguing for American psychiatrist Max Lurie to coin the term “antidepressant” in 1952, it was not until a few years later that other researchers began taking a harder look at iproniazid. The landmark moment finally arrived in April 1957, when psychiatrist Nathan Kline reported at a meeting of the American Psychiatric Association that he’d given iproniazid to a small group of his depressed patients. The results were impressive: 70% had a substantial improvement in mood and other symptoms. When additional encouraging studies were presented at a symposium later that year, enthusiasm exploded. By 1958—despite still being marketed only for tuberculosis—iproniazid had been given to more than 400,000 patients with depression.

While researchers soon developed other drugs similar to iproniazid (broadly known as monoamine oxidase inhibitors, or MAOIs), all shared the same safety and side effect issues seen with iproniazid. But before long, thanks to the influence of chlorpromazine, they found an entirely new kind of antidepressant.

In 1954, Swiss psychiatrist Roland Kuhn, facing a tight budget at his hospital, asked Geigy Pharmaceuticals in Basle if they had any drugs he could try in his schizophrenic patients. Geigy sent Kuhn an experimental compound that was similar to chlorpromazine. But the drug, called G-22355, not only failed to help his psychotic patients but made some of them more agitated and disorganized. The study was dropped, but upon further review, a curious finding turned up: three patients with “depressive psychosis” had actually improved after the treatment. Suspecting that G-22355 might have an antidepressant effect, Kuhn gave it to 37 patients with depression. Within three weeks, most of their symptoms had cleared up.

As Kuhn later recounted, the effects of this new drug were dramatic: “The patients got up in the morning voluntarily, they spoke in louder voices, with great fluency, and their facial expressions became more lively... They once more sought to make contact with other people, they began to become happier and to recover their ability to laugh.”

The drug was named imipramine (marketed in the U.S. as Tofranil), and it became the first of a new class of antidepressants known as tricyclic antidepressants, or TCAs. After the introduction of imipramine, many other TCA drugs were developed in the 1960s. Lauded for their relative safety, TCAs soon became widely used, while MAOIs fell out of favor. But despite their popularity, TCAs had some safety concerns, including being potentially fatal if taken in overdose, and a long list of side effects.

The final stage in the discovery of antidepressants began in the 1960s with yet another new class of drugs called selective serotonin reuptake inhibitors, or SSRIs. With their more targeted effects on a specific group of neurons (those that release the neurotransmitter serotonin), SSRIs promised to be safer and have fewer side effects than MAOIs or TCAs. However, it wasn’t until 1974 that one particular SSRI was first mentioned in a scientific publication. Developed by Ray Fuller, David Wong, and others at Eli Lilly, it was called fluoxetine, and in 1987, it became the first approved SSRI in the U.S., with its now-famous name, Prozac. The introduction of Prozac, which was as effective as TCAs but safer and comparatively free of side effects, was the tipping point in the milestone discovery of antidepressants. By 1990, it was the most prescribed psychiatric drug in North America, and by 1994, it was outselling every drug in the world except Zantac. Since then, many other SSRIs and related drugs have been introduced and found to be effective in depression.

The discovery of iproniazid and imipramine in the 1950s was a major milestone for several reasons. In addition to being the first effective drugs for depression, they opened the way to a new biological understanding of mood disorders, prompting researchers to look at the microscopic level of where these drugs work and leading to new theories of how deficiencies or excesses of neurotransmitters in the brain may contribute to depression. At the same time, the new drugs literally transformed our understanding of what depression is and how it could be treated. Up until the late 1950s, most psychiatrists believed in the Freudian doctrine that depression was not so much a “biological” disorder as a psychological manifestation of internal personality conflicts and subconscious mental blocks that could only be resolved with psychotherapy. Many openly resisted the idea of drug treatments, believing they could only mask the underlying problem. The discovery of antidepressants forced psychiatrists to see depression as a biological disorder, treatable with drugs that modified some underlying chemical imbalance.

Today, despite many advances in neurobiology, our understanding of depression and antidepressant drugs remains incomplete: We still don’t know exactly how antidepressants work or why they don’t work at all in up to 25% of patients. What’s more, studies have shown that in some patients psychotherapy can be as effective as drugs, suggesting that the boundaries between biology and psychology are not clear-cut. Thus, most clinicians believe that the best approach to treating depression is a combination of antidepressant drugs and psychotherapy.

Apart from their impact on patients and psychiatry, the discovery of antidepressants in the 1950s had a profound impact on society. The fact that these drugs can dramatically improve the symptoms of depression—yet have little effect on people with normal mood states—helped society realize that clinical depression arises from biological vulnerabilities and not a moral failing or weakness in the patient. This has helped destigmatize depression, placing it among other “medical” diseases—and apart from the “blues” that we all experience from time to time.

Milestone #5 More than “mother’s little helper”: a safer and better way to treat anxiety

Anxiety is surely the least serious of the four major mental disorders: It goes away when the “crisis” is over, has simple symptoms compared to bipolar disorder or schizophrenia, and we’ve always had plenty of treatments, from barbiturates and other chemical concoctions, to the timeless remedies of alcohol and opium. In short, anxiety disorders are not really as serious as the other major forms of mental illness, are they?

They are. First, anxiety disorders are by far the most common mental disorders, affecting nearly 20% of American adults (versus about 2.5% for bipolar disorder, 1% for schizophrenia, and 7% for depression). Second, they can be as disabling as any mental disorder, with a complex of symptoms that can be mental (irrational and paralyzing fears), behavioral (avoidance and quirky compulsions), and physical (pounding heart, trembling, dizziness, dry mouth, and nausea). Third, anxiety disorders are as mysterious as any other mental disorder, from their persistence and resistance to treatment, to the fact that they can crop up in almost any other mental disorder, including, paradoxically, depression. And finally, prior to the 1950s, virtually all treatments for anxiety posed a risk of three disturbing side effects: dependence, addiction, and/or death.

The discovery of drugs for anxiety began in the late 1940s, when microbiologist Frank Berger was looking not for a drug to treat anxiety, but for a way to preserve penicillin. Berger was working in England at the time and had been impressed by the recent purification of penicillin by Florey and Chain (see Chapter 7). But a funny thing happened when he began looking at one potential new preservative called mephenesin. While testing its toxicity in laboratory animals, he noticed that it had a tranquilizing effect. Berger was intrigued, but the drug’s effects wore off too quickly. So, after moving to the U.S., he and his associates began tweaking the drug to make it last longer. In 1950, after synthesizing hundreds of compounds, they created a new drug that not only lasted longer, but was eight times more potent. They called it meprobamate, and Berger was optimistic about its therapeutic potential: It not only relieved anxiety, but relaxed muscles, induced mild euphoria, and provided “inner peace.”

Unfortunately, Berger’s bosses at Wallace Laboratories were less impressed. There was no existing market for anti-anxiety drugs at the time, and a poll of physicians found that they were not interested in prescribing such drugs. But things changed quickly after Berger put on his marketing cap and made a simple film. The film showed rhesus monkeys under three conditions: 1) their naturally hostile state; 2) knocked out by barbiturates; and 3) calm and awake while on meprobamate. The message was clear, and Berger soon garnered the support he needed. In 1955 meprobamate was introduced as Miltown (named after a small village in New Jersey where the production plant was located), and once word of its effects began to spread, it quickly changed the world.

And the world was more than ready. Although barbiturates were commonly used in the early 1950s, their risks of dependence and of death from overdose were well known. At the same time, recent social changes had prepared society to accept the idea of a drug for anxiety, from a growing trust in the pharmaceutical industry thanks to the recent discovery of penicillin and chlorpromazine, to the widespread angst about nuclear war, to the new work pressures brought on by the economic expansion following World War II. Although some claimed that meprobamate was promoted to exploit stressed housewives, leading to the cynical nickname “mother’s little helper,” Miltown was widely used by men and women around the world, including business people, doctors, and celebrities. By 1957, more than 35 million prescriptions had been sold in the U.S., and it became one of the top 10 best-selling drugs for years.

But while doctors initially insisted meprobamate was completely safe, reports soon began to emerge that it could be habit-forming and—though not as dangerous as barbiturates—potentially lethal in overdose. Soon, pharmaceutical companies were looking for safer drugs, and it didn’t take long. In 1957, Roche chemist Leo Sternbach was cleaning up his laboratory when an assistant came across an old compound that had never been fully tested. Sternbach figured it might be worth a second look, and once again random luck paid off. The drug not only had fewer side effects than meprobamate, but was far more potent. It was called chlordiazepoxide, and it became the first of a new class of anti-anxiety drugs known as benzodiazepines. It was soon marketed as Librium and would be followed in 1963 by diazepam (Valium) and many others, including alprazolam (Xanax). By the 1970s, benzodiazepines had mostly replaced meprobamate and began to play an increasingly important role in treating anxiety disorders.

Today, in addition to benzodiazepines, many other drugs have been found to be useful in treating anxiety disorders, including various antidepressants (MAOIs, TCAs, and SSRIs). While today’s benzodiazepines still have limitations—including the risk of dependence if taken long-term—they are considered far safer than the drugs used up until the 1950s, before Frank Berger began his quest for a way to preserve penicillin.

While some have criticized the widespread use of anti-anxiety agents, it would be foolish to dismiss the remarkable benefits these drugs have provided for millions of people whose lives would otherwise be crippled by serious anxiety disorders. What’s more, similar to the earlier milestone drugs for mental illness, their discovery opened new windows into the study of normal brain functions and the cellular and molecular changes that underlie various states of anxiety. This, in turn, has advanced our understanding of the biological underpinnings of the mind and thus helped destigmatize mental illness.

How four new treatments added up to one major breakthrough

The 1950s breakthrough discovery of drugs for madness, sadness, and fear was both a “Golden Age” of psychopharmacology and a transformative awakening of the human race. First and foremost, the new drugs helped rescue countless patients from immeasurable suffering and loss. To the shock of almost everyone, they helped patients regain their ability to think and act rationally, to laugh and talk again, to be freed from irrational and crippling fears. Patients could resume relationships and productive lives or simply stay alive by rejecting suicide. Today, the National Alliance on Mental Illness (NAMI) estimates that a combination of drugs and psychosocial therapy can significantly improve the symptoms and quality of life in 70% to 90% of patients with serious mental illness. With millions of lives saved by various drugs for mental illness, it’s no wonder that many historians rank their discovery as equal to that of antibiotics, vaccines, and the other top ten medical breakthroughs.

But almost as important as their impact on patients was the way in which these drugs transformed the prejudices and misconceptions long held by patients, families, doctors, and society. Prior to the 1950s, mental illness was often viewed as arising from internal psychological conflicts, somehow separate from the mushy biology of the brain and suspiciously linked to an individual’s personal failings. The discovery that specific drugs relieved specific symptoms implicated biochemical imbalances as the guilty party, shifting the blame from “slacker” patients to their “broken” brains.

Yet for all of the benefits of drugs for mental disorders, today most of the same mysteries remain, including what causes mental illness, why the same symptoms can appear in different conditions, why some drugs work for multiple disorders, and why they sometimes don’t work at all. What’s more, while pharmacologists continue their unending quest for better drugs and new explanations, one underlying truth seems unlikely to ever change: Drugs alone will never be sufficient...

The failures of success: a key lesson in the treatment of mental disorders

“There has been a remarkable improvement... He now appears to be quite normal. A diffident, pleasant, energetic little man.”

—John Cade, after the first use of lithium in WB, a patient with mania

You’ll recall this earlier comment from the remarkable story of WB, who after receiving lithium in 1948 for his mania became the first person to be successfully treated with a drug for mental illness. Unfortunately, that story was a lie, or at least it remains one until the rest of WB’s life is revealed. WB did have a dramatic recovery after his treatment with lithium, and he continued to do well for the next six months. But trouble began a short time later when, according to case notes, WB “stopped his lithium.” A few days later, his son-in-law wrote that WB had returned to his old ways, becoming excitable and argumentative after a trivial disagreement. Over the next two years, WB repeatedly stopped and restarted his lithium treatment, causing his behavior to zig-zag from “irritable, sleepless, and restless,” to “normal again,” to “noisy, dirty, mischievous, and destructive as ever.” Finally, two years after his milestone treatment, WB collapsed in a fit of seizures and coma. He died a few miserable days later, the cause of death listed as a combination of lithium toxicity, chronic mania, exhaustion, and malnutrition.

The full story of WB highlights why the breakthrough discovery of drugs for mental illness was both invaluable and inadequate. WB’s decline was not simply due to his failure to take his lithium, but also to problems with side effects, proper dosing, and even the self-satisfied symptoms of mania itself. All of these problems, in one form or another, are common in many mental disorders and a major reason why treatment can fail. As society learned during the deinstitutionalization movement of the 1960s and 1970s, drugs can produce startling improvements in mental functioning but are sometimes woeful failures in helping patients negotiate such mundane challenges as maintaining their treatment, finding a job, or locating a place to live.

Sadly, the same story continues today, sometimes to the detriment of both patients and innocent bystanders. When David Tarloff’s deterioration from schizophrenia led to the murder of psychologist Kathryn Faughey in 2008, it followed years of being on and off various anti-manic and antipsychotic medications (including lithium, Haldol, Zyprexa, and Seroquel) and being admitted to and released from more than a dozen mental institutions. As Tarloff’s brother lamented to reporters after David’s arrest, “My father and I and our mother tried our best to keep him in the facility he was hospitalized in over the many years of his illness, but they kept on releasing him. We kept asking them to keep him there. They didn’t...”

* * *

In 2008, the World Health Organization issued a report on mental health care, noting that of the “hundreds of millions of people” in the world with mental disorders, “only a small minority receive even the most basic treatment.” The report advised that this treatment gap could be best addressed by integrating mental health services into primary care, with a network of supporting services that include not only the drugs described here, but collaboration with various informal community care services. It is these community services—traditional healers, teachers, police, family, and others—that “can help prevent relapses among people who have been discharged from hospitals.”

The treatment of mental illness has come a long way since Hippocrates prescribed laxatives and emetics, since asylum directors attempted to “master” madness with chains and beatings, since doctors sickened their patients with malaria and seizures. The breakthrough discoveries of the first drugs for mental illness changed the world, but also laid bare some timeless truths. As the stories of David Tarloff and WB remind us, the safety net that can catch and support patients must be woven from many strands—medications, primary care and mental health practitioners, community, and family—the absence or failure of any one of which can lead to a long, hard fall.