This chapter examines how to use Microsoft Azure Streaming Analytics to create jobs that process incoming data streams from various sensors, perform data transformations and enrichment, and deliver output results in various data formats.

It has been said that the cloud represents a once-in-a-generation technology transformation. Certainly, one of the cornerstones of this key transformation is the ability to efficiently ingest, process, and report on massive amounts of data at scale.

The Lambda Architecture

In modern data analytics, a stream processing strategy known as the “Lambda” architecture has been proposed; it is widely attributed to Nathan Marz, the creator of Apache Storm.

The fundamental essence of the Lambda architecture is that it’s designed to ingest massive quantities of incoming data by taking advantage of batch and stream processing methodologies. Additional attributes of the Lambda architecture include the following:

Ability to process a vast array of workloads and scenarios.

High throughput characterized by low-latency reads with frequent writes and updates.

Retaining the incoming data in the original format. This is the notion of a “data lake”.

Modeling data transformations as a series of materialized stages from the original data.

Highly scalable, nearly linear, scale-out infrastructure to provide scale up/down.

Figure 5-1 depicts the Lambda architecture.

Figure 5-1. The Lambda architecture

By merging batch and stream processing in the same architecture, the result is an optimized data analytics engine that is capable of not just processing the data, but also of delivering the right data at the right time.
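
As a rough illustration of how batch and stream processing can be merged, the following minimal sketch keeps a batch view computed from the full event log and a speed view computed from recent events, and combines them at query time. The function names and the event shape are assumptions made for this example only; they are not part of any Azure API.

```python
from collections import Counter

def batch_view(master_dataset):
    """Batch layer: recompute totals from the full, immutable event log."""
    return Counter(event["sensor"] for event in master_dataset)

def speed_view(recent_events):
    """Speed layer: count only events that arrived after the last batch run."""
    return Counter(event["sensor"] for event in recent_events)

def serve(batch, speed):
    """Serving layer: merge both views so queries see up-to-date totals."""
    return dict(batch + speed)

# Hypothetical sample data: three historical events and two fresh ones.
master = [{"sensor": "a"}, {"sensor": "a"}, {"sensor": "b"}]
recent = [{"sensor": "b"}, {"sensor": "c"}]

merged = serve(batch_view(master), speed_view(recent))
print(merged)
```

In this sketch the batch view can be slow to rebuild without affecting freshness, because the speed view covers the gap until the next batch run.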

It should be noted that this new just-in-time (JIT) data that can now be surfaced with streaming analytics (via the Lambda architecture) can often become the source of many types of competitive advantages for a business or enterprise that knows how to exploit this type of information across a wide array of use cases.

The real magic comes in knowing how to recognize the hidden opportunities buried deep inside the data and from there take action to explore, develop, fail-fast, and finally succeed in evoking true transformational changes in a business or industry. This is basically the essence of this book and the guiding light for our own reference implementation.

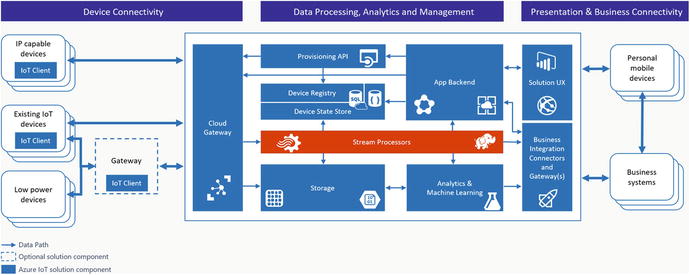

Microsoft has recognized the need for streaming analytics at scale as part of today’s modern Internet-of-Things (IoT) solutions and has incorporated streaming capabilities into several popular architectures, such as Cortana Analytics along with the IoT Suite offerings shown in Figure 5-2.

Figure 5-2. Azure IoT suite architecture

What Is Streaming Analytics?

One of the real advantages of a great streaming analytics engine is the ability to provide real-time analytics and outputs so that data becomes actionable with a minimum of delay.

To provide context for the challenges involved in developing a streaming analytics solution, the canonical example is counting the number of red cars in a parking lot versus counting the number of red cars that pass a point on a major freeway (assuming it’s not rush hour) in every 10-minute interval of a one-hour period (see Figure 5-3).

Figure 5-3. Challenges of streaming analytics

The essence of this scenario is “data in motion” versus “data at rest”. The key difference here is the element of “time” and the ability to capture and analyze periodic “slices” of data across potentially millions of events in order to detect patterns and anomalies in the massive amounts of streaming data.
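
The red-car scenario can be sketched in a few lines. Counting cars at rest is a single pass over a finite list, while counting cars in motion means emitting a result each time a time slice closes. This is a minimal sketch that assumes events arrive in time order as hypothetical `(minute, color)` pairs; intervals in which no car passes at all produce no output here.

```python
def red_cars_at_rest(parked_colors):
    """Data at rest: the full dataset is available, so count it once."""
    return parked_colors.count("red")

def red_cars_in_motion(stream, interval=10):
    """Data in motion: yield (interval_start, red_count) as each interval closes."""
    current_start, count = None, 0
    for minute, color in stream:
        start = minute // interval * interval
        if current_start is None:
            current_start = start
        if start != current_start:
            # A later interval has begun, so the previous one is complete.
            yield current_start, count
            current_start, count = start, 0
        if color == "red":
            count += 1
    if current_start is not None:
        yield current_start, count

parked = ["red", "blue", "red"]
passing = [(1, "red"), (4, "blue"), (9, "red"), (12, "red"), (35, "green"), (59, "red")]

at_rest = red_cars_at_rest(parked)
in_motion = list(red_cars_in_motion(passing))
print(at_rest, in_motion)
```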

Real-Time Analytics

Real-time analytics is all about the ability to process data coming from literally millions of connected devices or applications, with the inherent ability to ingest and process potentially millions of events per second. A key component of this scenario is integration with a highly-scalable publish/subscribe pattern. Another key requirement is for simplified processing capabilities on continuous streams of data that allow a solution to transform, augment, correlate, and perform temporal (time-based) operations.

Correlating streaming data with reference data is also a core requirement in many cases, as the incoming data often needs to be matched with a corresponding host record.
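
The idea of matching incoming events with a host record can be sketched as a dictionary lookup. The device identifiers, field names, and the `"unknown"` fallback below are assumptions chosen for illustration.

```python
# Hypothetical reference data: one host record per device.
reference = {
    "dev-1": {"site": "Plant A"},
    "dev-2": {"site": "Plant B"},
}

def enrich(events, reference):
    """Attach the matching host record's site to each streaming event."""
    for event in events:
        host = reference.get(event["device_id"], {})
        yield {**event, "site": host.get("site", "unknown")}

stream = [
    {"device_id": "dev-1", "temp": 71},
    {"device_id": "dev-9", "temp": 65},  # no matching host record
]

enriched = list(enrich(stream, reference))
print(enriched)
```

Whether unmatched events are kept, as here, or dropped is a design choice that depends on the solution.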

Streaming Implementations and Time-Series Analysis

Today’s modern businesses are demanding analytics in real-time to obtain a competitive advantage; they have moved beyond the “old school” method of hourly, daily, weekly, and monthly reporting cadences to now relying on data that is only seconds old.

To make streaming analytics and reporting more relevant, support for time-window calculations becomes imperative. To this end, a set of time-series windows is useful, such as the following:

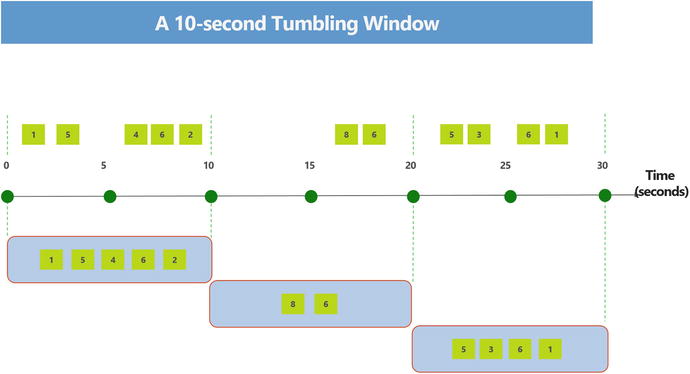

Tumbling Windows: A series of fixed-sized, non-overlapping and contiguous time intervals. The diagram in Figure 5-4 illustrates a stream with a series of events and how they are mapped into ten-second tumbling windows.

Figure 5-4. An example of tumbling windows in streaming analytics
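
The following is a minimal sketch of tumbling-window assignment, not the engine's actual implementation. It assumes events are `(timestamp_in_seconds, value)` pairs and that each window covers the half-open range from its start up to, but not including, its end; the boundary convention of a real engine may differ.

```python
from collections import defaultdict

def tumbling_windows(events, size):
    """Group events into fixed, contiguous, non-overlapping windows.

    Every event lands in exactly one window.
    """
    windows = defaultdict(list)
    for timestamp, value in events:
        start = (timestamp // size) * size
        windows[(start, start + size)].append(value)
    return dict(sorted(windows.items()))

events = [(1, "a"), (5, "b"), (12, "c"), (19, "d"), (27, "e")]
tumbling = tumbling_windows(events, size=10)
print(tumbling)
```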

Hopping Windows: Model scheduled overlapping windows. A hopping window specification consists of these parameters:

A time unit.

A window size, how long each window lasts

A hop size, how much each window moves forward relative to the previous one

<Optional> Offset size, an optional fourth parameter.

The illustration in Figure 5-5 shows a stream with a series of events. Each box represents a hopping window and the events that are counted as part of that window, assuming that the hop is 5, and the size is 10.

Figure 5-5. An example of hopping windows in streaming analytics
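
A hopping window with a size of 10 and a hop of 5 can be sketched as follows. This is a simplified illustration that assumes non-negative timestamps, windows that start at time 0, and no offset parameter; it skips windows that contain no events.

```python
def hopping_windows(events, size, hop):
    """Return {(start, end): [values]} for overlapping windows.

    A new window opens every `hop` time units and lasts `size` units,
    so one event can belong to several windows when hop < size.
    """
    if not events:
        return {}
    last = max(timestamp for timestamp, _ in events)
    windows = {}
    start = 0
    while start <= last:
        members = [v for t, v in events if start <= t < start + size]
        if members:
            windows[(start, start + size)] = members
        start += hop
    return windows

events = [(1, "a"), (7, "b"), (12, "c")]
hopping = hopping_windows(events, size=10, hop=5)
print(hopping)
```

Setting the hop equal to the size gives non-overlapping windows, which is the tumbling case.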

Sliding Windows: When using a sliding window, the system is asked to logically consider all possible windows of a given length and output events only for those points in time when the content of the window actually changes, in other words when an event enters or exits the window. Figure 5-6 illustrates a sliding window.

Figure 5-6. Sample sliding window in streaming analytics
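
One way to picture the sliding window is to compute a count only at the instants when the window content changes. The sketch below assumes the window at time T holds events with timestamps greater than T minus the size and up to and including T; that boundary choice and the function name are assumptions for illustration.

```python
def sliding_window_counts(timestamps, size):
    """Return [(time, count)] for each instant the window content changes.

    Content changes when an event enters (at its own timestamp) or
    exits (at its timestamp plus the window size).
    """
    change_points = sorted(set(timestamps) | {t + size for t in timestamps})
    results = []
    for now in change_points:
        count = sum(1 for t in timestamps if now - size < t <= now)
        results.append((now, count))
    return results

sliding = sliding_window_counts([1, 4, 12], size=10)
print(sliding)
```

Unlike tumbling and hopping windows, the output times here are driven by the events themselves rather than by a fixed schedule.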

Predicting Outcomes for Competitive Advantage

In addition to detecting patterns and anomalies in the data, another key element to running a business at Internet speed is the ability to shape new business outcomes by predicting what may happen in the future. This is exactly the problem domain for predictive analytics and Machine Learning, which can use historical data combined with modern data science algorithms to predict a future outcome.

One of the key methods to accelerating business and building a competitive advantage is the ability to automate, supplement, or accelerate key business decision-making processes through the use of predictive analytics and Machine Learning. In today’s business world, decisions are made every day, often without all the facts and data, so any additional insights can often result in a huge competitive advantage.

Across many industries and verticals, many bottlenecks can be found today where there is lack of actionable data. This is a key area where Azure Stream Analytics and Machine Learning can help reduce friction and accelerate results.

A recent feature of Azure Streaming Analytics is the ability to directly invoke Azure Machine Learning Web Services as streaming data is processed, in order to enrich the incoming data stream or predict outcomes that might, in turn, require triggering a notification or alert. This is a key capability, and we cover it in detail in Chapter 8.

Stream Processing: Implementation Options in Azure

When using Microsoft Azure, there are several choices available for implementing a Stream Processing layer, as illustrated in Figure 5-7 with an IoT architecture.

Figure 5-7. Stream processing layer in an IoT architecture

With Microsoft Azure, there are many options available for creating a stream processing layer. The choices range from using Open Source Software (OSS) packages on Linux Virtual Machines to leveraging fully managed Platform-as-a-Service, such as Azure HDInsight.

Here are the basic options for running a stream processing engine in Azure:

Streaming Options: Virtual Machines - (Infrastructure-as-a-Service):

Virtual machines (running Windows or Linux)

Open Source Software Distributions:

Hortonworks

Cloudera

Roll your own: Apache Storm/Spark, Apache Samza, Twitter Heron, Kafka Streams, Apache Flink, Apache Beam (data processing workflows), Apache Mesos (project myriad).

Note that these options will also work for on-premise stream processing applications.

Azure Managed Services (Platform-as-a-Service – PaaS) Options:

Azure HDInsight: Managed Spark / Storm

Essentially managed 100% compatible Hadoop in Azure.

HBase as a columnar NoSQL transactional database running on Azure blobs.

Apache Storm as a streaming service for near real-time processing.

Hadoop 2.4 support for 100x query gains on Hive queries.

Mahout support for Machine Learning and Hadoop.

Graphical user interface for hive queries.

Azure Streaming Analytics

Process real-time data in Azure using a simple SQL language.

Consumes millions of real-time events from IoT Hub or Event Hubs collected from devices, sensors, infrastructure, and applications.

Performs time-sensitive analysis using SQL-like language against multiple real-time streams and reference data.

Outputs to persistent stores, dashboards, or back to devices.

Choosing a Managed Streaming Analytics Engine in Azure

With the availability of Apache Storm and Spark Streaming capabilities on HDInsight, along with Azure Streaming Analytics, Microsoft has made available multiple options for both proprietary and open source technologies for implementing a streaming analytics solution.

Both Azure managed analytics platforms provide the benefits of a managed PaaS solution. However, a few distinct capabilities differentiate them and should be considered when determining your final streaming architecture.

Ultimately, the final choice will be narrowed down to a few key considerations, which are highlighted in Table 5-1.

Table 5-1. Comparison Between Azure Streaming Analytics and HDInsight

| Feature | Azure Stream Analytics | HDInsight Apache Storm |
|---|---|---|
| Input data formats | Supported input formats are Avro, JSON, and CSV. | Any format may be implemented via custom code. |
| SQL query language | An easy-to-use SQL language is available with the same syntax as ANSI SQL. | No, users must write code in Java or C#, or use Trident APIs. |
| Temporal operators | Windowed aggregates and temporal joins are supported out-of-the-box. | Temporal operators must be implemented via custom code. |
| Custom code extensibility | Available via JavaScript user-defined functions. | Yes, custom code can be written in C#, Java, or other supported languages on Storm. |
| Support for UDFs (User Defined Functions) | UDFs can be written in JavaScript and invoked as part of a real-time stream processing query. | UDFs can be written in C#, Java, or the language of your choice. |
| Pricing | Stream Analytics is priced by the number of streaming units required. A unit is a blend of compute, memory, and throughput. | For Apache Storm on HDInsight, the unit of purchase is cluster-based, and is charged based on the time the cluster is running, independent of jobs deployed. |
| Business continuity / high availability services with guaranteed SLAs | SLA of 99.9% uptime. Auto-recovery from failures. Recovery of stateful temporal operators is built-in. | SLA of 99.9% uptime of the Storm cluster. Apache Storm is a fault-tolerant streaming platform. It is the customer's responsibility to ensure their streaming jobs run uninterrupted. |
| Reference data | Reference data available from Azure Blobs with max size of 100MB of in-memory lookup cache. Refreshing of reference data is managed by the service. | No limits on data size. Connectors available for HBase, DocumentDB, SQL Server and Azure. Unsupported connectors may be implemented via custom code. Refreshing of reference data must be handled by custom code. |
| Integration with Machine Learning | Via configuration of published Azure Machine Learning models as functions during Azure Streaming Analytics job creation. | Available through Storm Bolts. |

Note

Refer to the following link for additional information regarding choosing a managed streaming analytics platform in Azure:

Choosing a streaming analytics platform: Apache Storm comparison to Azure Stream Analytics:

https://azure.microsoft.com/en-us/documentation/articles/stream-analytics-comparison-storm

HDInsight supports both Apache Storm and Apache Spark Streaming. Each of these frameworks provides streaming capabilities that may be worthy of further analysis, depending on your specific implementation requirements.

See the following link for additional information:

Apache Spark for Azure HDInsight: https://azure.microsoft.com/en-us/services/hdinsight/apache-spark/

Here is an additional comparison of the three managed streaming implementations (via HDI and ASA) that are possible on Microsoft Azure:

| Feature | Storm on HDI | Spark Streaming on HDI | Azure Streaming Analytics |
|---|---|---|---|
| Programming model | Java, C# | Scala, Python, Java | SQL query language |
| Delivery guarantee | At-least-once (exactly-once with Trident) | Exactly-once | At-least-once |
| State management | Yes | Yes | Yes |
| Processing model | Event at-a-time | Micro-batching | Real-time event processing |
| Scaling | Manual | Manual | Automatic |
| Open source | Yes | Yes | NA |

Streaming Technology Choice: Decision Considerations

When evaluating a managed streaming analytics platform in Azure, consider the following factors in order to make a better-informed decision:

Development team expertise and background

Expertise in writing SQL queries versus writing code

Required skill levels: Analyst versus a developer for queries

Development effort and velocity

Using OOB connectors versus using OSS components

Troubleshooting and diagnostics

Custom logging, Azure operation logs

Scalability, adjustability, and pricing

Pain Points with Other Streaming Solutions

Regardless of your choice of managed streaming analytics platforms in Azure, there are many advantages to choosing a platform that runs in Azure versus a one-off solution.

The implementation options with other streaming analytics engines can leave a lot to be desired when it comes to providing a holistic and easily managed solution. Key points to take into consideration when evaluating a streaming analytics platform for your solution include the following:

Depth and breadth: Levels of development skills required.

Completeness: Typically not end-to-end solutions.

Expertise: Need for special skills to set up and maintain.

Costs: Development, testing, and production environments and licensing.

Reference Implementation Choice: Azure Streaming Analytics

In our reference implementation and throughout the remaining chapters of this book, we will be using Azure Streaming Analytics to implement the reference solution. Azure Streaming Analytics is a fully managed cloud service for real-time analytics on streams of data using a SQL-like query language with built-in temporal semantics. It’s a perfect “fit” for the solution requirements.

Advantages of Azure Streaming Analytics

Choosing the right streaming analytics platform is a critical decision that will have major impacts on the overall performance, reliability, scalability, and overall operation of your solution.

For that reason, it is helpful to document the specific reasons for choosing Microsoft Azure Streaming Analytics and share these for future solution architecture considerations.

No Challenges with Deployment

Azure Streaming Analytics is a fully managed PaaS service in the cloud, so you can quickly configure and deploy from the Azure Portal or via PowerShell deployment scripts.

No hardware acquisition and maintenance

Bypasses requirements for deployment expertise

Up and running in a few clicks (and within minutes)

No software provisioning or maintenance

Easily expand your business globally

Mission Critical Reliability

Achieve mission-critical reliability and scale with Azure Streaming Analytics

Exactly once delivery guarantee up to the output adapter that writes the output events

State management for auto recovery

Guaranteed not to lose events or produce incorrect output

Preserves event order on per-device basis

Guaranteed 99.9% availability SLA
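
Because the exactly-once guarantee in the list above extends only up to the output adapter, a retry after a failure could deliver the same output event to the destination more than once. A common general-purpose mitigation, shown here as a sketch and not as the service's own mechanism, is to make writes idempotent by keying each row on a natural unique key. The `IdempotentSink` class and the choice of key fields are hypothetical.

```python
class IdempotentSink:
    """Stores each output row under a unique key, so replays overwrite
    the existing row instead of creating a duplicate."""

    def __init__(self):
        self.rows = {}

    def write(self, event):
        # Assumed natural key: one result per device per window.
        key = (event["device_id"], event["window_end"])
        self.rows[key] = event

sink = IdempotentSink()
batch = [
    {"device_id": "dev-1", "window_end": 10, "avg_temp": 70.5},
    {"device_id": "dev-2", "window_end": 10, "avg_temp": 64.0},
]

for event in batch:
    sink.write(event)

# Simulate a retry after a failure: the same batch is delivered again.
for event in batch:
    sink.write(event)

print(len(sink.rows))
```

With a relational destination, the same effect is usually achieved with a unique constraint or an upsert on the key columns.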

See the following link for additional details:

Event Delivery Guarantees (Azure Stream Analytics)

https://msdn.microsoft.com/en-us/library/azure/mt721300.aspx

How to achieve exactly-once delivery for SQL output:

Business Continuity

Stream Analytics processes data at a high throughput with predictable results and no data loss

Guaranteed uptime (three nines of availability)

Auto-recovery from failures

Built-in state management for fast recovery

No Challenges with Scale:

Scale to any volume of data while still achieving high throughput, low-latency, and guaranteed resiliency.

Elasticity of the cloud for scale up or scale down

Spin up any number of resources on demand

Scale from small to large when required

Distributed, scale-out architecture

Scale using slider in Azure Portal and not writing code

Low Startup Costs

Azure Stream Analytics lets you rapidly develop and deploy low-cost solutions to gain real-time insights from devices, sensors, infrastructure, and applications.

Provision and run streaming solutions for as low as $25/month

Pay only for the resources you use

Ability to incrementally add resources

Reduce costs when business needs change

Rapid Development

Reduce friction and complexity and use fewer lines of code when developing analytic functions for scale-out of distributed systems. Describe the desired transformation with SQL-based syntax, and Stream Analytics automatically distributes it for scale, performance, and resiliency.

Lowers the bar for creating stream processing solutions via a SQL-like language

Easily filter, project, aggregate, join streams, add static data with streaming data, and detect patterns or lack of patterns with a few lines of SQL

Built-in temporal semantics

Development and Debugging Experience Through Azure Portal

Queries in Azure Stream Analytics are expressed in a SQL-like query language. Operational logging messages can be used for debugging purposes, such as viewing job status, job progress, and failure messages, to track the progress of a job over time, from start, to processing, to output.

Manage out-of-order events and actions on late arriving events via configurations
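
To make the idea of handling out-of-order and late-arriving events concrete, here is a simplified sketch of one possible policy. Events are buffered until the newest timestamp seen is more than a tolerance ahead of them, then released in time order; an event older than the last released timestamp is either dropped or has its timestamp moved forward. The `reorder` function, its parameters, and the `"drop"` and `"adjust"` action names are assumptions for this example and do not describe the service's internals.

```python
import heapq

def reorder(events, tolerance, action="adjust"):
    """Emit (timestamp, value) pairs in time order, tolerating bounded disorder."""
    buffer, emitted = [], []
    last_emitted, newest = None, None
    for seq, (ts, value) in enumerate(events):
        if last_emitted is not None and ts < last_emitted:
            if action == "drop":
                continue  # too late to place in order: discard
            ts = last_emitted  # adjust: move the late event forward in time
        # seq breaks ties so that values are never compared by the heap.
        heapq.heappush(buffer, (ts, seq, value))
        newest = ts if newest is None else max(newest, ts)
        # Release events that are at least `tolerance` behind the newest one.
        while buffer and buffer[0][0] <= newest - tolerance:
            ts_out, _, value_out = heapq.heappop(buffer)
            emitted.append((ts_out, value_out))
            last_emitted = ts_out
    while buffer:  # end of input: flush whatever is still buffered
        ts_out, _, value_out = heapq.heappop(buffer)
        emitted.append((ts_out, value_out))
    return emitted

events = [(1, "a"), (3, "b"), (2, "c"), (10, "d"), (4, "e"), (2, "f")]
adjusted = reorder(events, tolerance=5, action="adjust")
dropped = reorder(events, tolerance=5, action="drop")
print(adjusted)
print(dropped)
```

A larger tolerance absorbs more disorder at the cost of added output latency, which is the trade-off such configuration settings expose.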

Scheduling and Monitoring Built-In

The Azure Management Portal and Azure Portal both surface key performance metrics that can be used to monitor and troubleshoot your query and job performance.

Built-in monitoring

View your system’s performance at a glance

Helps you find the cost-optimal way of deployment

Why Are Customers Using Azure Stream Analytics?

The previous section outlines some of the key advantages of utilizing Azure Streaming Analytics. By leveraging Microsoft Azure Streaming Analytics for quick infrastructure provisioning along with the low-maintenance aspects of running on a completely managed streaming analytics platform, you can avoid the usual complications listed next:

Monitoring and troubleshooting the solution.

Developing solutions and infrastructure that can scale at pace with business growth.

Developing solutions to manage resiliency, such as infrastructure failures and geo-redundancy.

Developing solutions to integrate with other components like Machine Learning, BI, etc.

Developing solution code for ingestion, temporal processing, and hot/cold egress operations.

Procuring infrastructure: avoid long hardware delays and provision in minutes.

Focus on building solutions, not on the solution infrastructure, and get the applications developed and deployed faster so you can truly work at Internet speed.

It should be noted that in addition to the many benefits outlined for individual Azure customers, Azure Streaming Analytics is also a core technology that makes up several other Microsoft Azure-based solution offerings, such as:

Azure IoT Suite: Microsoft provides Azure IoT Suite as part of its preconfigured IoT solutions built on the Azure platform and makes it easy to connect devices securely and ingest events at scale.

Cortana Intelligence Suite: A fully managed Big Data and advanced analytics suite to transform your data into intelligent action.

Key Vertical Scenarios to Explore for Azure Stream Analytics

There are many use cases for leveraging streaming analytics across industry verticals. Some of the more popular applications are listed here:

Financial Services:

Fraud detection

Asset tracking

Healthcare:

Patient monitoring

Government:

Surveillance and monitoring

Infrastructure, Energy, and Utilities:

Operations management in oil and gas

Smart buildings

Manufacturing:

Predictive maintenance

Remote monitoring

Retail:

Real-time customer engagement and marketing

Inventory optimization

Telco/IT:

IT Infrastructure and cellular network monitoring

Location-based awareness

Transportation and Logistics:

Container monitoring

Perishables shipment tracking

Our Solution: Leveraging Azure Streaming Analytics

In our reference solution, we use Azure Stream Analytics, which is a fully managed, cost effective, real-time, event processing engine that can help us unlock deep insights from our data. Azure Stream Analytics makes it easy to set up real-time analytic computations on data streaming from devices, sensors, web sites, social media, applications, infrastructure systems, and more. It’s perfect for our solution.

Before we begin the walk-through of our specific reference architecture implementation, we will explore the overall workflow of creating Streaming Analytics jobs in Microsoft Azure. Stream Analytics leverages years of Microsoft Research work in developing highly tuned streaming engines for time-sensitive processing, as well as SQL language integration for intuitive specifications of streaming jobs.

With a few clicks in the Azure Portal, you can author a Stream Analytics job specifying the three major components of an Azure Streaming Analytics solution. These three components are inputs, outputs, and SQL-like queries.

Streaming Analytics Jobs: INPUT definitions

The first task in setting up an Azure Streaming Analytics job is to define the inputs for the new streaming job. The input definitions are related to the source of the incoming streaming data.

Note that, at the time of this writing, there are only two data format types supported:

JSON: Streaming message payloads in the JavaScript-Object-Notation format.

CSV: Streaming data in the Comma-Separated-Value text format. Header rows are also supported (and recommended) to provide additional column naming functionality.
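
To show what the two payload formats look like to a consumer, the following sketch parses a newline-delimited JSON payload and a CSV payload with a header row, using only the Python standard library. The field names and sample values are made up for illustration, and the assumption of one JSON object per line is a simplification.

```python
import csv
import io
import json

def parse_json_lines(payload):
    """Parse one JSON object per line into a list of dictionaries."""
    return [json.loads(line) for line in payload.splitlines() if line.strip()]

def parse_csv(payload):
    """Parse CSV text; the header row supplies the column names."""
    return list(csv.DictReader(io.StringIO(payload)))

json_payload = '{"deviceId": "dev-1", "temp": 71.5}\n{"deviceId": "dev-2", "temp": 64.0}'
csv_payload = "deviceId,temp\ndev-1,71.5\ndev-2,64.0\n"

json_events = parse_json_lines(json_payload)
csv_events = parse_csv(csv_payload)
print(json_events[0], csv_events[0])
```

Note that JSON carries type information (the temperature is parsed as a number), whereas every CSV field arrives as text and must be converted by the consumer.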

When specifying inputs, there are two definition types, Data Streams and Reference Data:

Data Streams: A data stream is an unbounded series of events flowing over time. Stream Analytics jobs must include at least one data stream input to be consumed and transformed by the job. The supported data stream input sources include the following (at the time of this writing):

IoT Hub Streams:

Azure IoT Hub is a highly scalable publish-subscribe event ingestion platform optimized for IoT scenarios.

Used for device-to-cloud and cloud-to-device messaging streams.

Optimized to support millions of simultaneously connected devices.

Can be used to send inbound and outbound messages to IoT devices.

Event Hub Streams:

Enables inbound (only) event message streams for device-to-cloud scenarios.

Supports a more limited number of simultaneous connections.

Event Hubs enables you to specify the partition for each message sent for increased scalability.

Blob Storage: Used as an input source for ingesting bulk data as a stream. For scenarios with large amounts of unstructured data to store in the cloud, blob storage offers a cost-effective and scalable solution.
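
The Event Hubs item above mentions specifying a partition for each message. The general idea behind key-based partitioning can be sketched as hashing a partition key to a stable partition index, so that all events from one device land in the same partition and keep their relative order. The hash function used here is an assumption for illustration; the hashing a real service applies internally is not shown in this chapter.

```python
import hashlib

def partition_for(key, partition_count):
    """Map a partition key to a stable partition index in [0, partition_count)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % partition_count

# Hypothetical device identifiers used as partition keys.
devices = ["dev-1", "dev-2", "dev-3", "dev-1"]
assignments = [(device, partition_for(device, 4)) for device in devices]
print(assignments)
```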

Note See the following link for more information concerning the differences between Azure IoT Hubs and Event Hubs:

Comparison of IoT Hub and Event Hubs:

https://azure.microsoft.com/en-us/documentation/articles/iot-hub-compare-event-hubs

Reference Data: Stream Analytics supports a second type of auxiliary input called reference data. As opposed to data in motion, reference data is static or slowly changing.

It is typically used for performing lookups and correlations with other data streams to enrich a dataset.

At the time of this writing, Azure blob storage is the only supported input source for reference data. Reference data source blobs are limited to 100MB in size.

Streaming Analytics Jobs: OUTPUT Definitions

We have defined our input sources for a new Azure Streaming Analytics job, so the next step is to define the output formats for the job. In order to enable a variety of application patterns, Azure Stream Analytics has different options for storing output and viewing analysis results. This makes it easy to view job outputs and provides flexibility in the consumption and storage of the job output for data warehousing and other purposes.

Note that any output configured in the job must exist before the job is started and events start flowing. For example, if you use blob storage as an output, the job will not create a storage account automatically. It needs to be provisioned by the user before the Streaming Analytics job is started.

The Output formats for Azure Streaming Analytics include the following storage options (at the time of this writing):

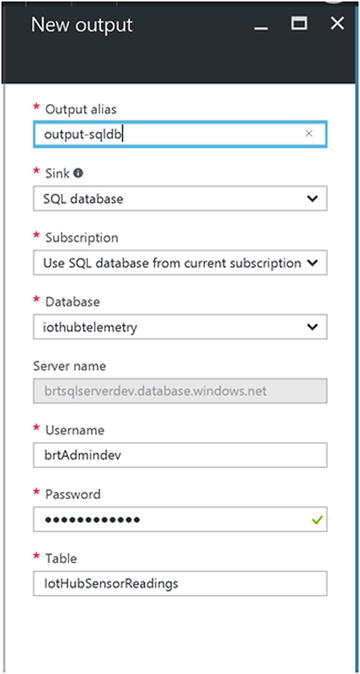

SQL Database: Azure SQL Database can be used as an output for data that is relational in nature or for applications that depend on content being hosted in a relational database. The one requirement for this Stream Analytics output option is that the destination be an existing table in an Azure SQL Database. Consequently, the table schema must exactly match the fields and their types being output from the streaming analytics job.

Azure SQL Data Warehouse: Note that an Azure SQL Data Warehouse can also be specified as an output via the SQL Database output option. This feature is in preview at the time of this writing.

Blob Storage: Blob storage offers a cost-effective and scalable solution for storing large amounts of unstructured data in the cloud.

Event Hubs: A highly scalable publish-subscribe event ingestion construct. Event Hubs are highly scalable and can handle ingestion of millions of events per second. Note that a key reason for using an Event Hub as an Output of a Stream Analytics job is so that the data can become the input of another streaming job. In this way, you can “chain” multiple streaming jobs together to complete an application scenario, such as providing real-time alerts and notifications.

Table Storage: A NoSQL key/attribute store that can be leveraged for structured data with minimal schema constraints. Table storage provides highly available, massively scalable storage, so that an application can automatically scale to meet user demand.

Service Bus Queue: Provides a First-In, First-Out (FIFO) message delivery to one or more consumers. Messages are then set up to be received and processed by the receivers in the date/time order in which they were added to the queue, and each message is received and processed by only one message consumer.

Service Bus Topic: While service bus queues provide a one-to-one communication pattern from sender to receiver, service bus topics provide a one-to-many form of communication where many applications can subscribe to a topic.

DocumentDB: A fully managed NoSQL document database service that offers queries and transactions over schema-free data, predictable and reliable performance, and rapid development.

Power BI: Can be used as an output for a Stream Analytics job to provide for rich visualizations for analytical results. This capability can be used for operational dashboards, report generation, and metric driven reporting.

Data Lake Store: This output option enables you to store data of any size, type and ingestion speed for operational and exploratory analytics. At this time, creation and configuration of Data Lake Store outputs is supported only in the Azure Classic Portal.
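
The SQL Database option above requires the destination table schema to match the fields and types produced by the job. A pre-flight check of that requirement might look like the following sketch. The `TABLE_SCHEMA` mapping, its column names, and the use of Python types as stand-ins for SQL column types are all assumptions for illustration.

```python
# Hypothetical destination table: column name -> expected Python type.
TABLE_SCHEMA = {"device_id": str, "window_end": int, "avg_temp": float}

def schema_mismatches(row, schema):
    """Return a sorted list of differences between an output row and the table."""
    problems = []
    for column in schema.keys() - row.keys():
        problems.append(f"missing column: {column}")
    for field in row.keys() - schema.keys():
        problems.append(f"unexpected field: {field}")
    for column in schema.keys() & row.keys():
        if not isinstance(row[column], schema[column]):
            problems.append(f"wrong type for {column}")
    return sorted(problems)

good_row = {"device_id": "dev-1", "window_end": 10, "avg_temp": 70.5}
bad_row = {"device_id": "dev-1", "window_end": "10", "extra": 1}

print(schema_mismatches(good_row, TABLE_SCHEMA))
print(schema_mismatches(bad_row, TABLE_SCHEMA))
```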

Planning Streaming Analytics Outputs

The output stage of the streaming analytics process requires some advance planning, as thought must be given to how the data will be delivered and consumed. This is where the real value of the modern Lambda architecture comes into play with the notion of “hot”, “warm”, and “cold” data paths.

Proper analysis and exploitation of these key reporting capabilities can mean the difference between creating a true competitive advantage and just creating a noisy data overload scenario. The fortunes of many businesses can rise and fall on the timeliness and accuracy of key operational data. Choose your outputs carefully.

Hot Path

The hot path is the processing pathway for urgent data, such as data sent from field devices to an IoT system. This data typically requires immediate analysis and is frequently used for raising alerts and other critical notifications. The “hot path” output options for Azure Streaming Analytics include:

Power BI: For real-time streaming integration along with rich, visual dashboards.

Event Hubs: For integrating with other Streaming Analytics jobs and outbound Notification hubs.

Service Bus Queues: For integration with other publisher/subscriber notification systems.

Service Bus Topics: For integration with one-to-many notification scenarios.

Warm Path

The warm path is the processing path for device data that is not urgent but typically has a limited lifetime before it becomes stale. This data should be considered to have an “expiration date” and consequently should be processed within a specified period of time.

This data can also be used to augment the results generated by hot path processing to provide additional context. Examples of warm path data include diagnostic information for performance analysis, troubleshooting, or A/B testing. The data may need to be held in storage that is relatively quick to access (and therefore possibly more expensive than that required for the cold path), but the storage capacity is likely to be much less, as this data has a limited life span and is unlikely to be retained for an extended period.

The “warm path” output option(s) for Azure Streaming Analytics include:

Azure SQL Database: For near-real-time, relational data queries

DocumentDB: For near-real-time, NoSQL data queries

Cold Path

The cold path is the processing pathway for data that is stored and processed later. For example, this data can be pulled from storage for processing at a later time in batch mode. The data can be held in relatively cheap, high-capacity storage due to its potentially high volume and historical nature. The data is commonly used to provide statistical information, to generate analytical reports, and for auditing purposes.

The “cold path” output option(s) for Azure Streaming Analytics include:

Blob Storage: For low cost, high-scale, generic data storage

Table Storage: For low cost, high-scale, key-value-pair data storage

Data Lake Store: For unlimited, low cost, high-scale, historical data storage platform with deep analytical processing capabilities.
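
The grouping of outputs into hot, warm, and cold paths described in this section can be captured as a simple lookup, with a helper that picks a path from two requirements. The mapping follows the lists in this section; the `choose_path` function and its latency and retention thresholds are purely hypothetical values for illustration, not guidance from the service.

```python
# Output options grouped by data path, as listed in this section.
OUTPUT_PATHS = {
    "Power BI": "hot",
    "Event Hubs": "hot",
    "Service Bus Queue": "hot",
    "Service Bus Topic": "hot",
    "Azure SQL Database": "warm",
    "DocumentDB": "warm",
    "Blob Storage": "cold",
    "Table Storage": "cold",
    "Data Lake Store": "cold",
}

def outputs_for(path):
    """Return the output options associated with a data path."""
    return sorted(name for name, p in OUTPUT_PATHS.items() if p == path)

def choose_path(max_latency_seconds, retention_days):
    """Pick a data path from requirements (thresholds are assumed examples)."""
    if max_latency_seconds <= 5:
        return "hot"
    if retention_days <= 30:
        return "warm"
    return "cold"

path = choose_path(max_latency_seconds=60, retention_days=7)
print(path, outputs_for(path))
```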

Power BI: For real-time streaming integration along with rich, visual dashboards.

Event Hubs: For integrating with other Streaming Analytics jobs and outbound Notification hubs.

Service Bus Queues: For integration with other publisher/subscriber notification systems.

Service Bus Topics: For integration with one-to-many notification scenarios.

Power BI for Real-Time Visualizations

Power BI can be used as an output for a Stream Analytics job to provide for a real-time, rich visualization experience of analysis results. This capability can be used for operational dashboards, dynamic report generation, and other forms of real-time, metric-driven reporting and analysis.

Note

See the following link for more information on specifying Streaming Analytics Outputs:

https://azure.microsoft.com/en-us/documentation/articles/stream-analytics-define-outputs

Streaming Analytics Jobs: Data Transformations via SQL Queries

After the Azure Streaming Analytics job input and output definitions have been created, the next task is creating the data transformations. This is where all the pieces start to come together and a complete solution can finally be configured based on the previously defined inputs and outputs.

Azure Stream Analytics offers a SQL-like query language for performing transformations and computations over incoming streams of event data. The Stream Analytics query language is a subset of standard Transact-SQL (T-SQL) syntax for performing simple and complex streaming analytics computations.

Azure Streaming Analytics SQL: Developer Friendly

Since many developers already have a good working knowledge of T-SQL, this feature makes Azure Streaming Analytics approachable and lets them become productive in a short amount of time.

This portion of the Streaming Analytics job setup is where the actual processing will occur and we will map, enrich, and transform the incoming streaming data input into one or more pre-defined streaming outputs. Note that it is possible in a single Streaming Analytics job to send processed data from a single input to multiple output destinations by chaining the SQL statements together in the job.

Azure Streaming Analytics (ASA): SQL Query Dialect Features

The SQL language in ASA is very similar to T-SQL, the SQL dialect used by Microsoft SQL Server and Azure SQL Database. Developers familiar with the SQL dialects of other relational engines, such as IBM DB2 and Oracle Database, should also find much of the syntax recognizable.

It should be noted that Azure Streaming Analytics (ASA) SQL also adds a set of functions that support advanced analytics capabilities such as “temporal” (date/time) operations, for example applying sliding, hopping, or tumbling time windows to the event stream in order to get time-boxed, summarized data directly from the incoming event stream.

All this is accomplished elegantly using familiar T-SQL statements. To assist you in further understanding the features of the ASA SQL Query language, the following is a short synopsis of the primary capabilities available with the Azure Streaming Analytics SQL Query language.

SQL Query Language

All data transformation jobs are written declaratively as a series of T-SQL-like query language statements.

There is no additional programming required and no code compilation required.

The SQL scripts are easy to author and deploy.

Supported Data Types

The following data types are supported in the ASA SQL language:

Bigint: Integers in the range -2^63 (-9,223,372,036,854,775,808) to 2^63-1 (9,223,372,036,854,775,807).

Float: Floating-point numbers in the range -1.79E+308 to -2.23E-308, 0, and 2.23E-308 to 1.79E+308.

Datetime: Defines a date that is combined with a time of day with fractional seconds that is based on a 24-hour clock and relative to UTC (time zone offset 0).

nvarchar(max): Text values made up of Unicode characters.

record: A set of name/value pairs. Values must be of a supported data type.

array: An ordered collection of values. Values must be of a supported data type.

Data Type Conversions

CAST

Data type conversions in the Stream Analytics query language are accomplished via the CAST function. This function converts an expression of one data type to another data type among the types supported by the Stream Analytics query language.

Proper care should be taken when using the CAST function over inconsistent data streams, as a failure will cause the streaming analytics job to stop if the conversion cannot be performed.

As an example of what not to do, the ASA SQL statement in Listing 5-1 will result in an Azure Streaming Analytics job failure.

Listing 5-1. An Example of the CAST Operator Usage That Will Result in a Job Failure in an ASA SQL Statement

CAST('Test-String' AS bigint)

TRY_CAST

To avoid a catastrophic job failure due to a data type conversion failure, it is highly recommended that the TRY_CAST SQL operation be used instead. It returns the value cast to the specified data type if the cast succeeds; otherwise, it returns null.

The SQL transformation job will gracefully continue no matter the result of the TRY_CAST call. Listing 5-2 illustrates the TRY_CAST call.

Listing 5-2. An Example of the TRY_CAST Operator in an ASA SQL Statement

SELECT TweetId, TweetTime
FROM Input
WHERE TRY_CAST(TweetTime AS datetime) IS NOT NULL
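To make the filtering behavior concrete, the following is a minimal Python sketch of what a TRY_CAST-style filter does to a stream that contains a malformed value. It is not ASA code; the helper name try_cast_datetime and the sample events are hypothetical and exist only for illustration.

```python
from datetime import datetime
from typing import Optional


def try_cast_datetime(value: object) -> Optional[datetime]:
    """Return a datetime if the value parses as ISO 8601, otherwise None."""
    try:
        return datetime.fromisoformat(str(value))
    except ValueError:
        # Mirrors TRY_CAST: a failed conversion yields null instead of an error.
        return None


# Hypothetical sample events; the second one carries a malformed timestamp.
events = [
    {"TweetId": 1, "TweetTime": "2016-05-01T10:00:00"},
    {"TweetId": 2, "TweetTime": "not-a-date"},
    {"TweetId": 3, "TweetTime": "2016-05-01T10:00:07"},
]

# Rough equivalent of: WHERE TRY_CAST(TweetTime AS datetime) IS NOT NULL
valid_events = [e for e in events if try_cast_datetime(e["TweetTime"]) is not None]
```

The malformed event is silently dropped and processing continues, which is the behavior that distinguishes TRY_CAST from CAST.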

Temporal Semantic Functionality

All ASA SQL operators are compatible with the temporal properties of event streams. Additional functionality is added to the ASA SQL language via new operators such as:

TumblingWindow

HoppingWindow

SlidingWindow

Built-In Operators and Functions

The ASA SQL language supports other key T-SQL constructs such as filters, projections, joins, windowed (temporal) aggregates, and text and date manipulation functionality.

Advanced event stream queries can be composed via these powerful query extensions.

User-Defined Functions: Azure Machine Learning Integration

The ASA SQL language now supports direct calls to Azure Machine Learning (ML) via user defined functions.

User-defined functions provide an extensible way for a streaming job to transform input data to output data using an externally defined function and accessed as part of the SQL query.

A Machine Learning function in stream analytics can be used like a regular function call in the stream analytics query language.

This functionality provides the ability to score individual events of streaming data by leveraging a Machine Learning model hosted in Azure and accessed via a web service call.

At the time of this writing, Azure Machine Learning Request-Response Service (RRS) is the only supported UDF framework and is currently in “preview” mode.

This capability allows you to easily build applications for scenarios such as real-time Twitter sentiment analytics, as illustrated in Listing 5-3. The Azure Machine Learning user-defined function named sentiment is easily incorporated into an ASA SQL query. This capability provides a powerful mechanism to leverage predictive analytics to enrich the incoming event stream data and turn it into actionable data.

Listing 5-3. Example of an Azure Machine Learning User-Defined Function

WITH subquery AS (
    SELECT text, sentiment(text) AS result
    FROM input
)
SELECT text, result.[Scored Labels]
INTO output
FROM subquery

Event Delivery Guarantees Provided in Azure Stream Analytics

The Azure Stream Analytics query language provides extensions to the T-SQL syntax to enable complex computations over incoming streams of events. With Azure Streaming Analytics, the following concepts related to event delivery are noteworthy to review:

Exactly once delivery

Duplicate records

Exactly Once Delivery

An “exactly once” delivery guarantee means all input events are processed exactly once by the streaming analytics system. In this way, the results are also guaranteed to be complete with no duplicate outputs. In terms of Azure Service Level Agreements (SLAs), Azure Stream Analytics guarantees exactly once delivery up to the output adapter that writes the output events.

Duplicate Records

When a Stream Analytics job is running, duplicate records may occasionally occur within the output data. These duplicate records are expected, because Azure Stream Analytics output adapters do not write the output events in a fully transactional manner.
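One common way to tolerate such duplicates is to make the downstream write idempotent, so that writing the same record twice has the same effect as writing it once. The Python sketch below illustrates the idea only; it assumes that each output record carries a natural key (here the hypothetical fields Topic and WindowEnd) and uses an in-memory dictionary to stand in for the output store.

```python
from typing import Dict, Tuple

# Hypothetical key fields that uniquely identify one output record.
KEY_FIELDS = ("Topic", "WindowEnd")


def write_idempotent(store: Dict[Tuple, dict], record: dict) -> None:
    """Insert or overwrite the record under its key, so a repeated write is harmless."""
    key = tuple(record[field] for field in KEY_FIELDS)
    store[key] = record


# Hypothetical output events; the second is a duplicate of the first.
output_events = [
    {"Topic": "azure", "WindowEnd": 10, "TotalTweets": 3},
    {"Topic": "azure", "WindowEnd": 10, "TotalTweets": 3},
    {"Topic": "iot", "WindowEnd": 10, "TotalTweets": 1},
]

store: Dict[Tuple, dict] = {}
for event in output_events:
    write_idempotent(store, event)
```

After all three writes, the store holds two records rather than three, because the duplicate overwrote its twin instead of being appended.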

See the following link for more information:

How to achieve exactly-once delivery for SQL output:

Time Management Functions

The Azure Stream Analytics SQL query language extends the T-SQL syntax to enable complex computations over streams of events. Stream Analytics provides language constructs to deal with the temporal aspects of the data. For example, it is possible to assign custom timestamps to the stream events, specify time windows for aggregations, and specify the allowed time difference between two streams of data for JOIN operations.

System.Timestamp: A system property that can be used to retrieve an event’s timestamp.

Time Skew Policies: Provides policies for out-of-order and late arrival events.

Aggregate Functions: Used to perform a calculation on a set of values from a time window and return a single value.

DATEDIFF: Allowed in the JOIN predicate and allows the specification of time boundaries for JOIN operations.

Date and Time Functions: Azure Stream Analytics provides a variety of date and time functions for use in creating time-sensitive streaming analytics queries.

TIMESTAMP BY: Allows specifying custom timestamp values.

The Importance of the TIMESTAMP BY Clause

In Azure Streaming Analytics, all incoming events have a well-defined timestamp. If a solution needs to use the application time, it can use the TIMESTAMP BY keyword to specify the column in the payload that should be used to timestamp every incoming event. That timestamp is then used for any temporal computation, such as windowing functions (Hopping, Tumbling, Sliding) and temporal JOINs.

Note that, as a best practice, it is recommended to use the TIMESTAMP BY clause rather than relying on “arrival time”, since the TIMESTAMP BY clause can be used on any column of type “datetime” and all ISO 8601 formats are supported. In comparison, the System.Timestamp value can only be used in the SELECT clause.

Listing 5-4 illustrates a TIMESTAMP BY example that uses the TweetTime column as the application time for all incoming events.

Listing 5-4. The TIMESTAMP BY Clause

SELECT TweetId, TweetTime
FROM TweetInput TIMESTAMP BY TweetTime

Azure Stream Analytics: Unified Programming Model

As we have seen in the previous sections covering the superset of features, functions, and capabilities that extend the Azure Streaming Analytics SQL dialect, the end result is an extremely powerful, yet easily approachable, SQL-based programming model that brings together event streams, reference data, and Machine Learning extensions to create a comprehensive solution.

Azure Stream Analytics: Examples of the SQL Programming Model

The Simplest Example

Listing 5-5 is a very simple example of a streaming SQL Query that will copy all the fields in the input named iothub-input into an output named blob-output.

Listing 5-5. The Simplest ASA SQL Query Possible

SELECT * INTO [blob-output] FROM [iothub-input]

In many cases, Azure Streaming Analytics SQL queries will be more complex and will usually incorporate various temporal semantics in order to surface the data related to sliding, hopping, or tumbling time windows from the event stream. As mentioned previously, this is where the power of Azure Streaming Analytics shines, as this is easy to accomplish via the functionality that Microsoft has added to the familiar T-SQL dialect.

To illustrate, the following temporal window examples will make the assumption that we are reading from an input stream of tweets from Twitter.

Tumbling Windows: A 10-Second Tumbling Window

Tumbling windows can be defined as a series of fixed-sized, non-overlapping, and contiguous time intervals taken from a data stream. The ASA SQL in Listing 5-6 seeks to answer the following question:

“Tell me the count of tweets per time zone every 10 seconds”

Listing 5-6. Sample Tumbling Window SQL Statement

SELECT TimeZone, COUNT(*) AS Count
FROM TwitterStream TIMESTAMP BY CreatedAt
GROUP BY TimeZone, TumblingWindow(second,10)
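The bucketing that a tumbling window performs can be sketched in a few lines of Python. This is an illustration of the concept rather than of the ASA engine: the events are hypothetical, timestamps are simplified to whole seconds since the start of the stream, and each window is treated as including its start and excluding its end, which may differ from the exact boundary rules ASA applies.

```python
from collections import Counter

WINDOW_SECONDS = 10

# Hypothetical events: (seconds since stream start, time zone).
events = [
    (1, "PST"), (4, "EST"), (9, "PST"),
    (12, "PST"), (15, "EST"), (19, "EST"),
]


def tumbling_counts(events, window_seconds):
    """Count events per (window start, time zone) for fixed, non-overlapping windows."""
    counts = Counter()
    for timestamp, time_zone in events:
        # Every timestamp falls into exactly one window.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, time_zone)] += 1
    return counts


counts = tumbling_counts(events, WINDOW_SECONDS)
```

Because the windows do not overlap, the per-window counts always add up to the total number of events.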

Hopping Windows: A 10-Second Hopping Window with a 5-Second “Hop”

Hopping windows are designed to model scheduled overlapping windows. The ASA SQL in Listing 5-7 seeks to answer the following question:

“Every 5 seconds give me the count of tweets over the last 10 seconds”

Listing 5-7. Sample Hopping Window SQL Statement

SELECT Topic, COUNT(*) AS TotalTweets
FROM TwitterStream TIMESTAMP BY CreatedAt
GROUP BY Topic, HoppingWindow(second, 10, 5)
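The overlap between hopping windows means that a single event can be counted more than once. The following Python sketch shows this under simplifying assumptions: the events are hypothetical, timestamps are whole seconds, windows start at multiples of the hop size, and each window includes its start and excludes its end (the exact boundary rules in ASA may differ).

```python
from collections import Counter

WINDOW_SECONDS = 10
HOP_SECONDS = 5

# Hypothetical events: (seconds since stream start, topic).
events = [(1, "azure"), (6, "azure"), (8, "iot"), (12, "azure")]


def hopping_counts(events, window_seconds, hop_seconds):
    """Count events per (window start, topic) for overlapping, scheduled windows."""
    counts = Counter()
    for timestamp, topic in events:
        # Start with the latest window that begins at or before the event...
        start = (timestamp // hop_seconds) * hop_seconds
        # ...and walk back through every earlier window that still covers it.
        while start > timestamp - window_seconds:
            counts[(start, topic)] += 1
            start -= hop_seconds
    return counts


counts = hopping_counts(events, WINDOW_SECONDS, HOP_SECONDS)
```

With a 10-second window and a 5-second hop, every event lands in exactly two windows, so the counts sum to twice the number of events.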

Sliding Windows: A 10-Second Sliding Window

With a sliding window, the system is asked to logically consider all possible windows of a given length and output events for cases when the content of the window actually changes, for example, when an event is detected that entered or exited the window. The ASA SQL in Listing 5-8 seeks to answer the following question:

“Give me the count of tweets for all topics which are tweeted more than 10 times in the last 10 seconds”

Listing 5-8. A Sample Sliding Window SQL Statement

SELECT Topic, COUNT(*)
FROM TwitterStream TIMESTAMP BY CreatedAt
GROUP BY Topic, SlidingWindow(second, 10)
HAVING COUNT(*) > 10
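A simplified Python sketch of the sliding window idea follows. It makes two assumptions that should be stated plainly: the events are hypothetical, and the window is re-evaluated only when an event arrives, whereas the description above also covers the moment an event leaves the window. Each evaluation looks back over the 10 seconds ending at the arriving event.

```python
WINDOW_SECONDS = 10
THRESHOLD = 10

# Hypothetical events: twelve "azure" tweets half a second apart, plus one "iot" tweet.
events = sorted([(i * 0.5, "azure") for i in range(12)] + [(3.0, "iot")])


def sliding_alerts(events, window_seconds, threshold):
    """Emit (time, topic, count) whenever a topic exceeds the threshold in the trailing window."""
    alerts = []
    for now, topic in events:
        # Count same-topic events inside the window that ends at this arrival.
        count = sum(
            1
            for timestamp, other_topic in events
            if other_topic == topic and now - window_seconds < timestamp <= now
        )
        if count > threshold:
            alerts.append((now, topic, count))
    return alerts


alerts = sliding_alerts(events, WINDOW_SECONDS, THRESHOLD)
```

Nothing is emitted for the first ten "azure" tweets; output begins only once the eleventh arrives and the count in the trailing window exceeds 10.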

Joining Multiple Streams

Similar to the standard T-SQL language, the JOIN clause in the Azure Stream Analytics query language is used to combine records from two or more input sources. However, JOIN clauses in Azure Stream Analytics SQL are temporal in nature. This means that each JOIN must provide limits on how far the matching rows can be separated in time. The ASA SQL in Listing 5-9 seeks to answer the following question:

“List all users and the topics on which they switched their sentiment within a minute”

Listing 5-9. JOINing Multiple Streams in ASA SQL

SELECT TS1.UserName, TS1.Topic
FROM TwitterStream TS1 TIMESTAMP BY CreatedAt
JOIN TwitterStream TS2 TIMESTAMP BY CreatedAt
ON TS1.UserName = TS2.UserName AND TS1.Topic = TS2.Topic
AND DateDiff(second, TS1, TS2) BETWEEN 1 AND 60
WHERE TS1.SentimentScore != TS2.SentimentScore
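The time-bounded matching performed by a temporal JOIN can be illustrated with a small Python sketch. The tweets below are hypothetical, CreatedAt is simplified to whole seconds, and the nested loop stands in for the self-join; a streaming engine would not compare every pair this way.

```python
# Hypothetical tweets with CreatedAt expressed as seconds since stream start.
tweets = [
    {"UserName": "ann", "Topic": "azure", "CreatedAt": 0, "SentimentScore": 4},
    {"UserName": "ann", "Topic": "azure", "CreatedAt": 30, "SentimentScore": 0},
    {"UserName": "bob", "Topic": "iot", "CreatedAt": 5, "SentimentScore": 4},
    {"UserName": "bob", "Topic": "iot", "CreatedAt": 200, "SentimentScore": 0},
]


def sentiment_switches(tweets, min_gap=1, max_gap=60):
    """Return (user, topic) pairs whose sentiment changed within the allowed time gap."""
    matches = []
    for first in tweets:
        for second in tweets:
            # Mirrors DATEDIFF(second, TS1, TS2): how long after 'first' did 'second' occur?
            gap = second["CreatedAt"] - first["CreatedAt"]
            if (
                first["UserName"] == second["UserName"]
                and first["Topic"] == second["Topic"]
                and min_gap <= gap <= max_gap
                and first["SentimentScore"] != second["SentimentScore"]
            ):
                matches.append((first["UserName"], first["Topic"]))
    return matches


switches = sentiment_switches(tweets)
```

Only the first user is reported: both users changed sentiment, but the second user's tweets are 195 seconds apart, which falls outside the 60-second bound of the join.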

Detecting the Absence of Events

This SQL query pattern can be extremely useful, as it provides the ability to determine whether a stream has no value that matches certain criteria. For example, Listing 5-10 is a sample ASA SQL query that seeks to provide a real-time answer to the following question:

“Show me if a topic is not tweeted for 10 seconds since it was last tweeted.”

Listing 5-10. Detecting the Absence of Data in ASA SQL

SELECT TS1.CreatedAt, TS1.Topic
FROM TwitterStream TS1 TIMESTAMP BY CreatedAt
LEFT OUTER JOIN TwitterStream TS2 TIMESTAMP BY CreatedAt
ON TS1.Topic = TS2.Topic
AND DATEDIFF(second, TS1, TS2) BETWEEN 1 AND 10
WHERE TS2.Topic IS NULL
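The logic of this left outer join can be sketched in Python as "keep each tweet that has no follow-up on the same topic within the next 10 seconds." The tweets are hypothetical and the list is treated as the complete stream; in a live stream, a result for a given tweet could only be produced once the 10 seconds had elapsed without a match.

```python
# Hypothetical tweets: (topic, seconds since stream start).
tweets = [("azure", 0), ("azure", 4), ("iot", 2), ("azure", 20)]


def topics_gone_quiet(tweets, min_gap=1, max_gap=10):
    """Return (created_at, topic) for tweets with no same-topic follow-up in the gap."""
    quiet = []
    for topic, created_at in tweets:
        # Look for a matching right-hand row, as the LEFT OUTER JOIN would.
        has_follow_up = any(
            other_topic == topic and min_gap <= other_time - created_at <= max_gap
            for other_topic, other_time in tweets
        )
        # Rough equivalent of: WHERE TS2.Topic IS NULL
        if not has_follow_up:
            quiet.append((created_at, topic))
    return quiet


quiet = topics_gone_quiet(tweets)
```

The first "azure" tweet is excluded because another one follows 4 seconds later; the remaining three tweets are each the last activity on their topic for at least 10 seconds.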

Note

The following link provides guidance for common Stream Analytics Usage Patterns:

Query examples for common Stream Analytics usage patterns:

The Reference Implementation

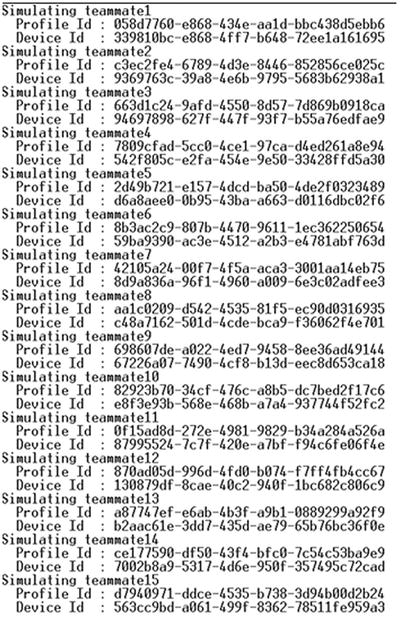

Now that we have covered all the basics related to the configuration and setup of Azure Streaming Analytics, it is time to walk through the actual configuration steps for our reference implementation solution. In this next section, we walk through the configuration of our streaming analytics job via the Azure Portal in order to implement various data pathways for our incoming IoT data streams. As part of the configuration, we will create and configure the following artifacts in Azure:

Azure Streaming Analytics Job.

Inputs: For our Azure Streaming Analytics Job. In this case, we will be using two inputs. The first one is for the incoming data stream from the IoT Hub, the second input is for reference data. In this example, we will read from a reference .CSV file in Azure blob storage to match a team member’s personal health information with their real-time health sensor information readings.

Functions: Consisting of references to Azure Machine Learning Web Services, which help enrich the data with predictive analytics. In this example, we will check to see if a team member is fatigued to the point of exhaustion.

Outputs: For output of results from the Azure Streaming Analytics Job into various storage formats: Hot, Warm, and Cold (from the Lambda architecture).

ASA SQL Query Language: Will combine all of the previous configurations to process the incoming data streams and send computed results to various output destinations.

Business Use Case Scenario

As you may recall, the use case scenario for our reference implementation involves monitoring workers’ health during strenuous activities. To that end, IoT sensors are being worn by the members of the various work teams, and their sensor readings are being transmitted to the Azure cloud via an IoT Hub configuration.

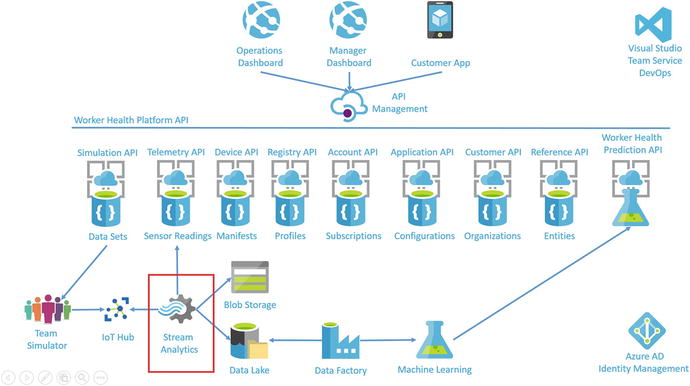

The next critical step in the process is where Azure Streaming Analytics is utilized to quickly and efficiently process the incoming data streams. Figure 5-8 denotes the use of Azure Streaming Analytics as the primary ingestion processing engine in the overall architecture.

Figure 5-8. Worker Health and Safety Reference solution

Summary

This chapter covered all of the fundamental capabilities of Azure Streaming Analytics. You learned how you can easily create streaming analytics jobs that allow you to leverage all of the positive attributes that a modern Lambda data architecture should possess, including “hot”, “warm”, and “cold” data pathways to deliver maximum business results.

You also examined the benefits of using a fully managed PaaS service like Azure Streaming Analytics, versus building your own virtualized environment in Azure using Linux virtual machine images and a combination of many open source tools and utilities.

Finally, you applied knowledge of Azure Streaming Analytics to the reference IoT Architecture and created two input definitions: one for the IoT Hub events and the second for reference data containing team members’ health-related information. Next, we created a FUNCTION definition to represent an Azure Machine Learning Web Service call that we used in our ASA SQL Query.

We then created three output definitions representing Hot, Warm, and Cold data paths using output definition parameters to update corresponding Azure data platforms: Power BI for “Hot”, Azure SQL Database for “Warm”, and Azure Blob storage for “Cold” storage.

As can easily be seen from this chapter, Azure Streaming Analytics can play a key role in the ingestion, organization, and orchestration of IoT sensor transactions. The environment makes it easy to get started yet is extremely powerful and flexible and can easily scale to handle millions of transactions per second. A powerful stream analytics engine is critical to success for the modern enterprise seeking to operate a business at Internet speed.