This chapter examines the use of Azure Data Factory and Azure Data Lake, including where, why, and how these technologies fit in the capabilities of a modern business running at Internet speed. It first covers the basic technical aspects and capabilities of Azure Data Factory and Azure Data Lake. Following that, the chapter implements three major pieces of functionality for the reference implementation:

Update reference data that we used for the Azure Stream Analytics job. As you may recall, we used the reference data in an ASA SQL JOIN query for gathering extended team member health data.

Re-train the Azure Machine Learning Model for predicting team member health and exhaustion levels. This data will be based on updated medical stress tests that are administered to team members on a periodic basis.

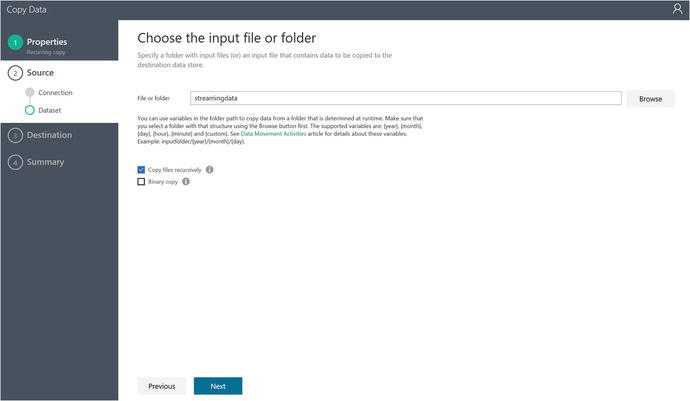

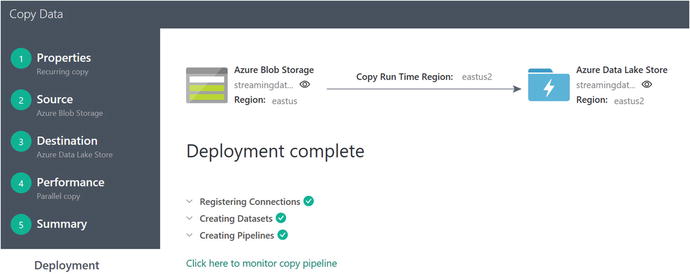

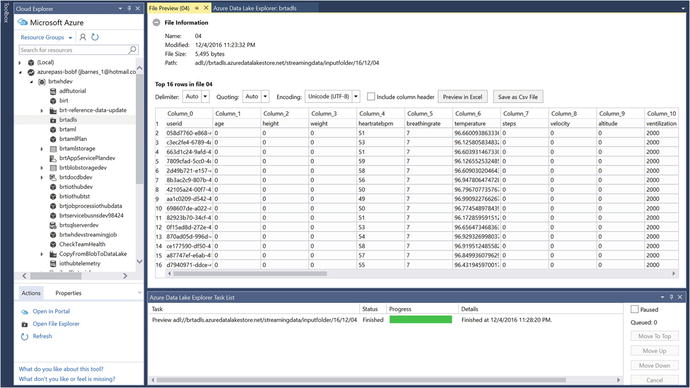

Move data from Azure blob storage to Azure Data Lake. This step prepares the reference implementation for Data Lake analytics, which is the topic of Chapter 7.

Azure Data Factory Overview

Azure Data Factory fulfills a critical need in any modern Big Data processing environment. It can be seen as the backbone of any data operation, because it provides the core capabilities required to perform enterprise data transformation functions. These capabilities include:

Data Ingestion and Preparation: From multiple sources; any combination of on-premise and cloud-based data sources.

Transformation and Analysis: Schedule, orchestrate, and manage the data transformation and analysis processes.

Publish and Consumption: Ability to transform raw data into finished data that is ready for consumption by BI tools or mobile applications.

Monitoring and Management: Visualize, monitor, and manage data movement and processing pipelines to quickly identify issues and take intelligent action. Alert capabilities to monitor overall data processing service health.

Efficient Resource Management: Saves you time and money by automating data transformation pipelines with on-demand cloud resources and management.

Azure Data Factory is a cloud-based data integration service that orchestrates and automates the movement and transformation of data. You can create data integration solutions using Azure Data Factory that can ingest data from various data stores (handles both on-premise and cloud-based), transform and process the data, and then publish the processing results to various output data stores.

The Azure Data Factory service is a fully managed cloud-based service that allows you to create data processing “pipelines” that can move and transform data. Data Factory has the capability to perform highly advanced and customizable ETL (Extract-Transform-Load) functions on the data as it moves through the various stages in a processing pipeline.

These data processing “pipelines” can then be run either on a specified schedule (such as hourly, daily, weekly, etc.) or on-demand to provide a rich batch processing capability for data movement and analytics at enterprise scale.

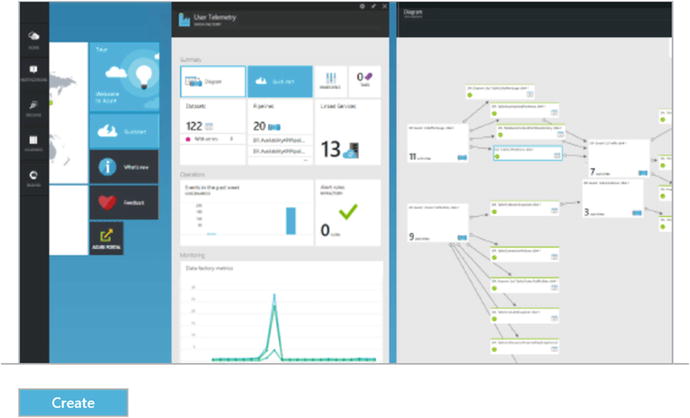

Azure Data Factory also provides rich visualizations to display the history, versions, and dependencies between your data pipelines, as well as monitor all your data pipelines from a single unified view. This allows you to easily detect and pinpoint any processing issues and set up appropriate monitoring alerts.

Figure 6-1 provides an illustration of the various data processing operations performed by Azure Data Factory, such as data ingestion, preparation, transformation, analysis, and finally publication. This data can be easily consumed by the key users of the data.

Figure 6-1. Azure Data Factory can ingest data from various data sources

Pipelines and Activities

In a normal Azure Data Factory solution, one or more data processing pipelines are typically utilized. A Data Factory pipeline is a logical grouping of activities. It’s used to group activities into a unit that together performs a single task.

Activities

Azure Data Factory activities define the actions to perform on your data. For example, you may use a copy activity to copy data from one data store to another. Similarly, you may use a Hive activity, which runs a Hive query on an Azure HDInsight cluster to transform or analyze your data. Data Factory supports two types of activities:

Data Movement Activities: This includes the copy activity, which copies data from a source data store to a sink data store. Data Factory supports the following data stores:

Azure:

Azure blob storage

Azure Data Lake Store

Azure SQL database

Azure SQL data warehouse

Azure table storage

Azure DocumentDB

Azure Search Index

Databases:

SQL Server*

Oracle*

MySQL*

DB2*

Teradata*

PostgreSQL*

Sybase*

Cassandra*

MongoDB*

Amazon Redshift

File Systems:

File System*

HDFS*

Amazon S3

FTP

Other Systems:

Salesforce

Generic ODBC*

Generic OData

Web Table (table from HTML)

GE Historian*

Note: Data stores denoted with a * can exist either on-premises or on an Azure Virtual Machine (IaaS). In both cases, you must install the Data Management Gateway on the on-premises machine or the Azure Virtual Machine.

Note

See the following link for more information on the Data Management Gateway.

Move data between on-premises sources and the cloud with Data Management Gateway: https://docs.microsoft.com/en-us/azure/data-factory/data-factory-move-data-between-onprem-and-cloud .

Data Transformation Activities: Azure Data Factory supports the following transformation activities that either can be added to pipelines individually or chained together with another activity.

| Data Transformation Activity | Compute Environment |
| --- | --- |
| Hive | HDInsight [Hadoop] |
| Pig | HDInsight [Hadoop] |
| MapReduce | HDInsight [Hadoop] |
| Hadoop Streaming | HDInsight [Hadoop] |
| Machine Learning activities | Azure VM |
| Stored Procedure | Azure SQL, Azure SQL Data Warehouse, or SQL Server in VM |
| Data Lake Analytics U-SQL | Azure Data Lake Analytics |
| .NET | HDInsight [Hadoop] or Azure Batch |

If you need to move data to or from a data store that the Azure Data Factory Copy Activity doesn’t support, or you need to transform data using custom logic, you can always create a custom .NET activity.

Note

For details on creating and using a custom activity, see the “Use custom activities in an Azure Data Factory pipeline” link at https://docs.microsoft.com/en-us/azure/data-factory/data-factory-use-custom-activities .

Linked Services

Linked services define the information needed for Azure Data Factory to connect to external data resources (for example: on-premises SQL Server, Azure Storage, and HDInsight running in Azure). Linked services are used for two primary purposes in Azure Data Factory:

To represent a data store: Such as an on-premise SQL Server, Oracle database, file share, or an Azure blob storage account.

To represent a compute resource: One that can host the execution of an activity. As an example, the HDInsight Hive activity runs on an HDInsight Hadoop cluster and can be used to perform data transformations.

Datasets

In the larger scheme of things, linked services link the data stores to an Azure Data Factory job. Datasets represent data structures within those data stores.

As an example, an “Azure SQL linked service” might provide connection information for an Azure SQL database. An Azure SQL dataset would then specify the specific table that would contain the data for Azure Data Factory to process.

Additionally, an “Azure storage linked service” would provide connection information for Azure Data Factory to be able to connect to an Azure Storage account. From there, an Azure blob dataset would specify the container for the blob and the folder in the Azure Storage account from which the pipeline should read the incoming data.

Pipelines

An Azure Data Factory pipeline is a grouping of logically related activities. A pipeline is used to group activities into a logical unit that performs a task.

Activities define the specific actions to perform on the data. Each pipeline activity can take zero or more datasets as an input and can produce one or more datasets as output.

For example, a copy activity can be used to copy data from one Azure data store to another data store. Alternatively, one could use an HDInsight Hive activity to run a Hive query on an Azure HDInsight cluster in order to transform the data.

Azure Data Factory provides a wide range of data ingestion, movement, and transformation activities. Developers also have the freedom to choose to create a custom .NET activity to run their own custom code in an Azure Data Factory pipeline.
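The input and output rule for activities described in this section can be modeled in a few lines. The following is a minimal sketch rather than Data Factory's own object model; the `Activity` class and the `is_valid` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Activity:
    """Hypothetical stand-in for a pipeline activity definition."""
    name: str
    type: str
    inputs: List[str] = field(default_factory=list)   # zero or more dataset names
    outputs: List[str] = field(default_factory=list)  # one or more dataset names


def is_valid(activity: Activity) -> bool:
    """An activity may have no inputs, but it must produce at least one dataset."""
    return len(activity.outputs) >= 1


copy = Activity("CopyBlobToSql", "Copy", inputs=["InputDataset"], outputs=["OutputDataset"])
print(is_valid(copy))
```

A pipeline can then be thought of as a named list of such activities, where the output dataset of one activity may serve as the input of the next.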

Scheduling and Execution

At this point, we have examined what Data Factory pipelines and activities are and how they are composed to create holistic data processing work streams in Azure Data Factory. We will now examine the scheduling and execution engine in Azure Data Factory.

It is important to note that an Azure Data Factory pipeline is active only between its start time and end time. Consequently, it is not executed before the start time or after the end time. If the pipeline is in the “paused” state, it will not get executed at all, no matter how the start and end times are set.

Note that it is not the pipeline itself that gets executed. Rather, it is the set of activities within the Data Factory pipeline that actually get executed, and they do so in the overall context of the pipeline.
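The start time, end time, and paused rules can be expressed as a single predicate. This is only a sketch of the rule as stated here: `is_pipeline_active` is a hypothetical helper, and treating both boundaries as inclusive is an assumption. The sample start and end values mirror those that appear in Listing 6-2.

```python
from datetime import datetime, timezone


def is_pipeline_active(now: datetime, start: datetime, end: datetime, is_paused: bool) -> bool:
    """Return True only when the pipeline is not paused and now lies within [start, end]."""
    if is_paused:
        return False  # a paused pipeline never runs, whatever its start and end times
    return start <= now <= end  # inclusive boundaries are an assumption of this sketch


start = datetime(2016, 11, 22, 15, 6, 22, tzinfo=timezone.utc)
end = datetime(2099, 12, 31, 5, 0, 0, tzinfo=timezone.utc)
now = datetime(2017, 1, 1, tzinfo=timezone.utc)

print(is_pipeline_active(now, start, end, is_paused=False))  # True
print(is_pipeline_active(now, start, end, is_paused=True))   # False
```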

The Azure Data Factory service allows you to create data pipelines that move and transform data, and then run those pipelines on a specified operational schedule (hourly, daily, weekly, etc.).

Data Factory also provides rich visualizations to display the history, version, and dependencies between data pipelines, and allows you to monitor all your data pipelines from a single unified view. This provides an easy management tool to help pinpoint issues and set up monitoring alerts.

Pipeline Copy Activity End-to-End Scenario

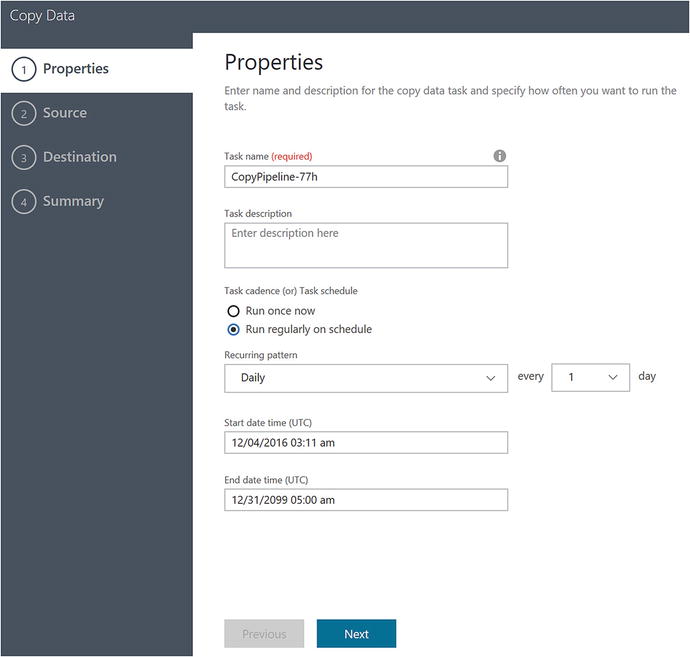

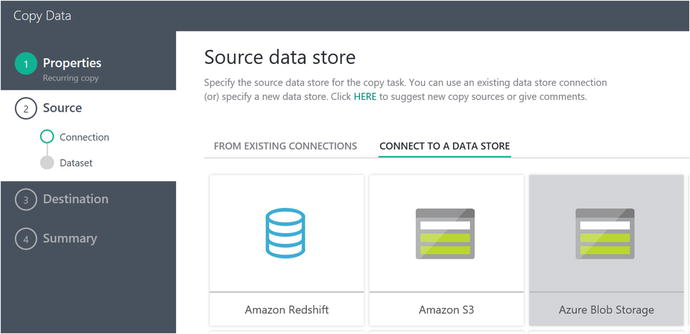

In this section, we examine a complete end-to-end example of creating an Azure Data Factory pipeline to copy data from Azure blob storage to an Azure SQL database. Along the way, we emphasize the major features and capabilities that you can exploit to make the most out of Azure Data Factory for your requirements.

Note

See this link for detailed steps to accomplish this Data Factory scenario:

Copy data from blob storage to SQL database using Data Factory: https://docs.microsoft.com/en-us/azure/data-factory/data-factory-copy-data-from-azure-blob-storage-to-sql-database

Scenario Prerequisites

Before you can create an Azure Data Factory pipeline or activities, you need the following:

Azure Subscription: If you don’t already have a subscription, you can start for free at https://azure.microsoft.com/en-us/free/?b=16.46 .

Azure Storage Account: You use the blob storage as a “source” data store in this scenario.

Azure SQL Database: You use an Azure SQL database as a “destination” data store in this scenario.

SQL Server Management Studio or Visual Studio: You use these tools to create a sample database and destination table, and to view the resultant data in the database table.

JSON Definition

If you have walked through the Azure Data Factory link to “Copy Data from Blob Storage to SQL Database Using Data Factory,” you may have noticed that there are a variety of tools that you can use to define an Azure Data Factory pipeline or activity:

Copy Wizard

Azure Portal

Visual Studio

PowerShell

Azure Resource Manager template

REST API

.NET API

No matter which tool is used to create the initial Azure Data Factory job, Azure Data Factory ultimately uses JavaScript Object Notation (JSON) to define and persist the definitions that you create via the tools.

JSON is a lightweight data-interchange format that makes it easy for humans to read and write as well as easy for machines to parse and generate. One distinct advantage of this approach is that the specific JSON configuration parameters can be finely tweaked and tuned for the scenario at hand in order to provide complete control over the configuration and run options for the Data Factory job.

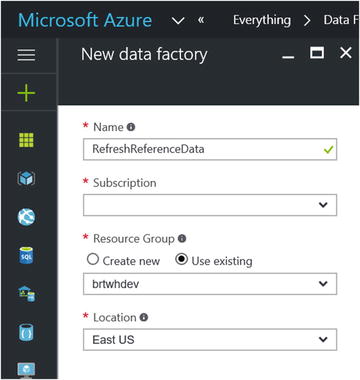

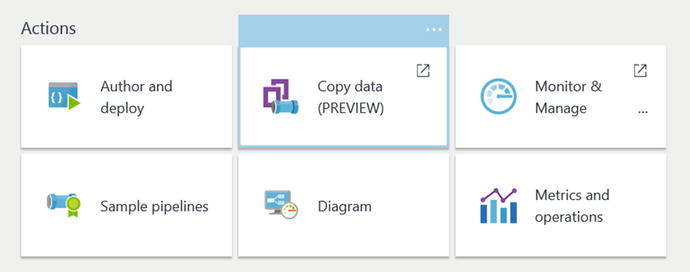

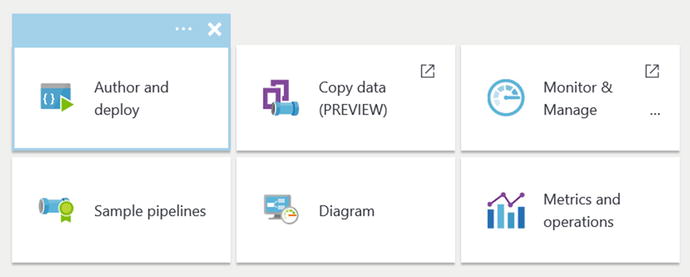

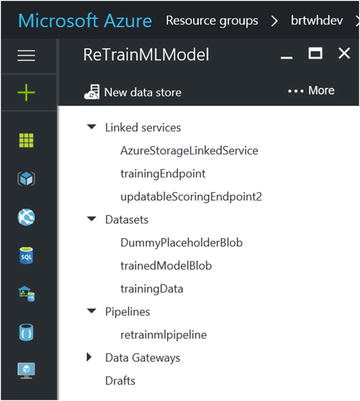

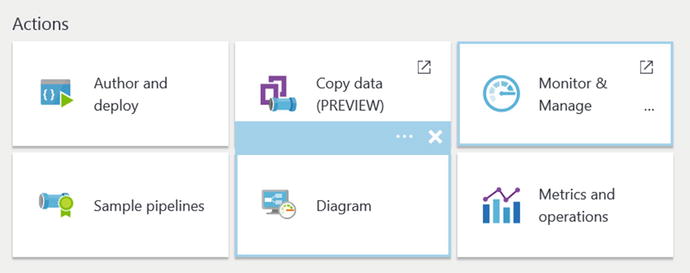

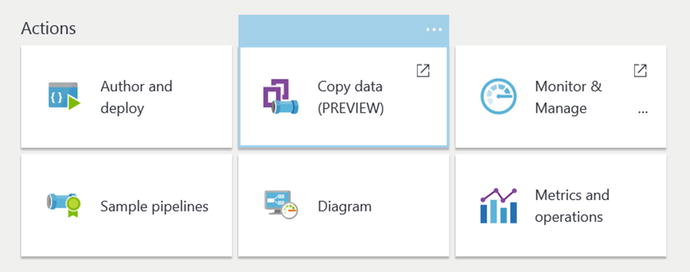

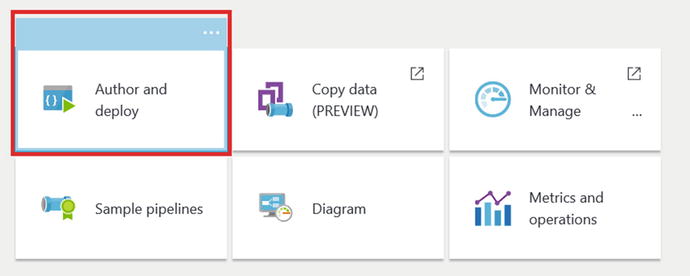

To get started, navigate (via the Azure Portal) to the Azure Data Factory job that you created by following the linked tutorial, and click on the Author and Deploy option, as shown in Figure 6-2.

Figure 6-2. Azure Portal Data Factory: Author and Deploy options

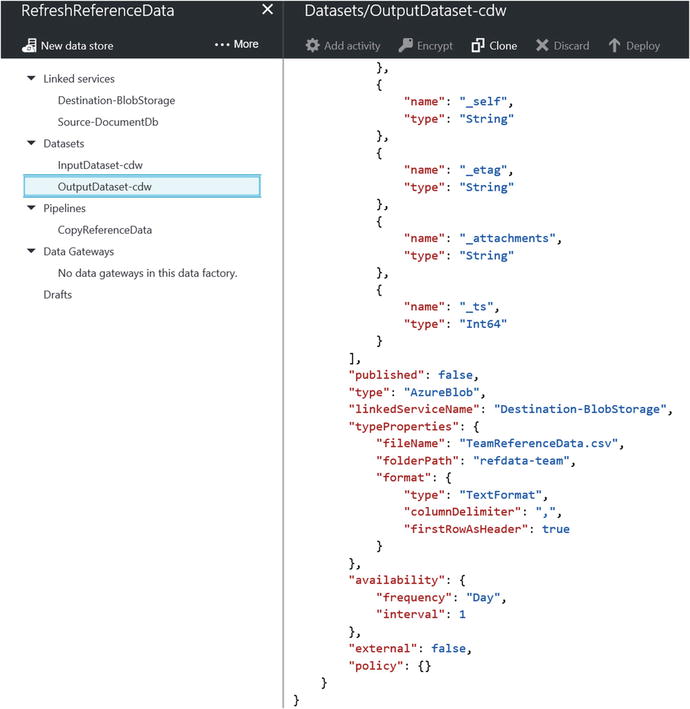

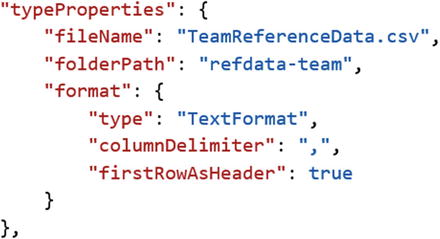

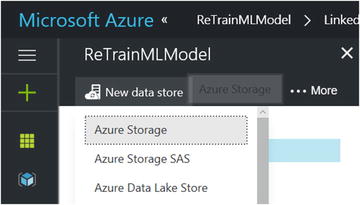

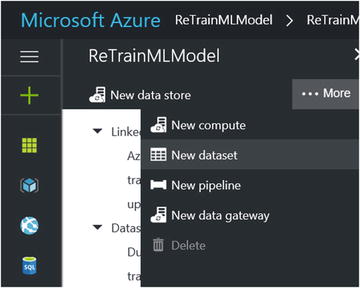

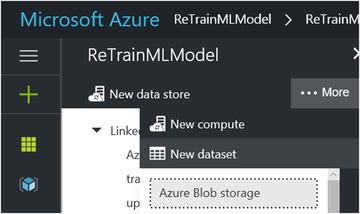

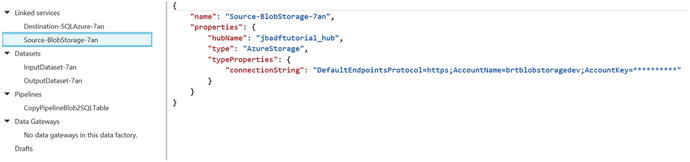

Once you have clicked on the Author and Deploy option, you will see a screen similar to the one in Figure 6-3, where you can navigate through each step of the Data Factory pipeline job that was created and see the corresponding JSON for each step of the process.

Figure 6-3. Data Factory: Author and Deploy JSON options

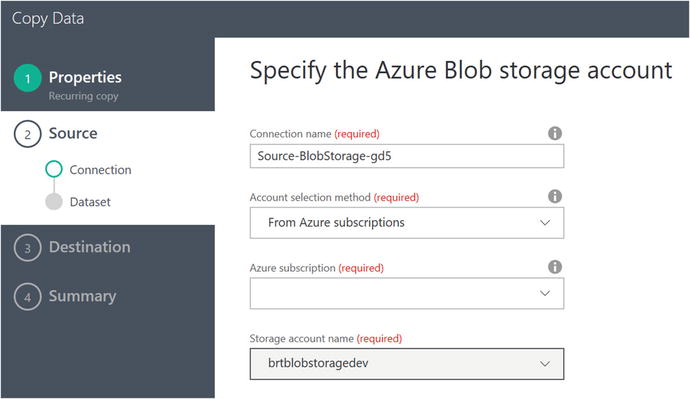

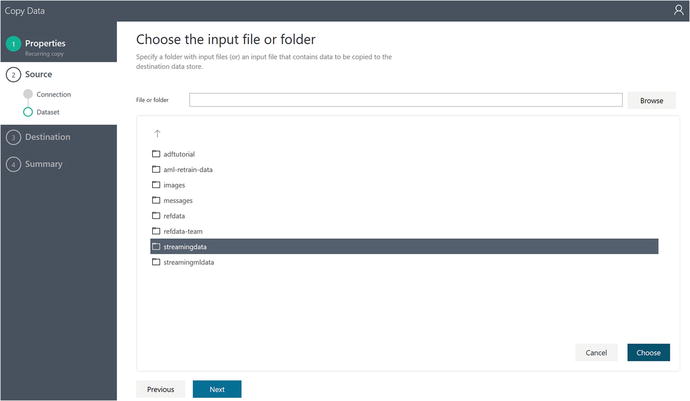

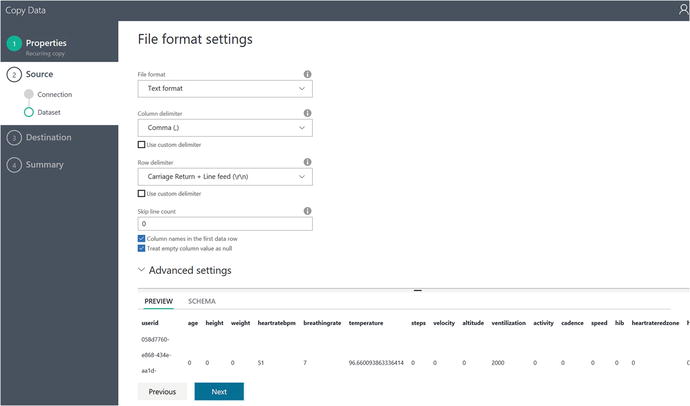

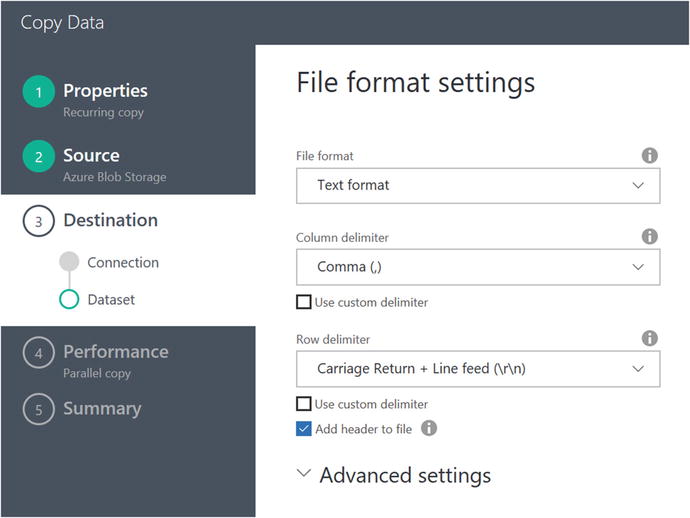

Let’s take a look at the JSON that was generated for the sample Copy Activity workflow from the linked tutorial. The starting point is to define the incoming and outgoing data sources for the job. In this case, we are using Azure blob storage for the input in the form of a file named EmpData.txt, which is a comma-separated value (CSV) formatted input file.

Note the two JSON code segments in Listing 6-1 that describe the Azure blob storage connection and the corresponding dataset definition for the input source.

Listing 6-1. JSON Description of INPUT Data Source for Copy Activity Definition

JSON - BLOB Storage Input Definition:

    {
      "name": "Source-BlobStorage-7an",
      "properties": {
        "hubName": "jbadftutorial_hub",
        "type": "AzureStorage",
        "typeProperties": {
          "connectionString": "DefaultEndpointsProtocol=https;AccountName=brtblobstoragedev;AccountKey=**********"
        }
      }
    }

JSON - Data Definition:

    {
      "name": "InputDataset-7an",
      "properties": {
        "structure": [
          {"name": "Column0", "type": "String"},
          {"name": "Column1", "type": "String"}
        ],
        "published": false,
        "type": "AzureBlob",
        "linkedServiceName": "Source-BlobStorage-7an",
        "typeProperties": {
          "fileName": "EmpData.txt",
          "folderPath": "adftutorial",
          "format": {"type": "TextFormat", "columnDelimiter": ","}
        },
        "availability": {"frequency": "Day", "interval": 1},
        "external": true,
        "policy": {}
      }
    }

Note in the two JSON code segments that these two definitions completely describe the data input source even down to the field definitions within the CSV text file in Azure blob storage. This interface in the Azure Portal also allows you to easily override the standard parameters by simply editing the JSON directly.

Listing 6-2 shows sample JSON output for a Data Factory Copy Operation.

Listing 6-2. Data Factory Copy Operation JSON Parameters

Data Factory - JSON Copy Pipeline Operations:

    {
      "name": "CopyPipelineBlob2SQLTable",
      "properties": {
        "description": "CopyPipelineBlob2SQLTable",
        "activities": [
          {
            "type": "Copy",
            "typeProperties": {
              "source": {"type": "BlobSource", "recursive": false},
              "sink": {"type": "SqlSink", "writeBatchSize": 0, "writeBatchTimeout": "00:00:00"},
              "translator": {
                "type": "TabularTranslator",
                "columnMappings": "Column0:FirstName,Column1:LastName"
              }
            },
            "inputs": [{"name": "InputDataset-7an"}],
            "outputs": [{"name": "OutputDataset-7an"}],
            "policy": {
              "timeout": "1.00:00:00",
              "concurrency": 1,
              "executionPriorityOrder": "NewestFirst",
              "style": "StartOfInterval",
              "retry": 3,
              "longRetry": 0,
              "longRetryInterval": "00:00:00"
            },
            "scheduler": {"frequency": "Day", "interval": 1},
            "name": "Blobpathadftutorial->dbo_emp"
          }
        ],
        "start": "2016-11-22T15:06:22.806Z",
        "end": "2099-12-31T05:00:00Z",
        "isPaused": false,
        "hubName": "jbadftutorial_hub",
        "pipelineMode": "Scheduled"
      }
    }

The JSON definition in Listing 6-2 allows you to have full control over the parameters, the mapping between the CSV file and the SQL table, and run behavior of this pipeline Copy job.
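To make the column mapping concrete, the sketch below applies a `columnMappings` string of the form used in Listing 6-2 to a few CSV rows. It illustrates the idea only and is not how the Copy Activity is implemented; `parse_column_mappings`, `map_rows`, and the sample file contents are hypothetical, and the sketch assumes the source columns are named `Column0`, `Column1`, and so on in file order.

```python
import csv
import io


def parse_column_mappings(mappings: str) -> dict:
    """Turn 'Column0:FirstName,Column1:LastName' into {'Column0': 'FirstName', ...}."""
    pairs = (item.split(":", 1) for item in mappings.split(","))
    return {source.strip(): sink.strip() for source, sink in pairs}


def map_rows(csv_text: str, mappings: str, delimiter: str = ","):
    """Yield one dict per CSV row, keyed by the sink (SQL table) column names."""
    mapping = parse_column_mappings(mappings)
    # Assumption: source columns are Column0..ColumnN-1, in the order they appear in the file.
    sink_columns = [mapping[f"Column{i}"] for i in range(len(mapping))]
    for row in csv.reader(io.StringIO(csv_text), delimiter=delimiter):
        if row:  # skip blank lines
            yield dict(zip(sink_columns, row))


sample = "John,Doe\nJane,Doe\n"  # hypothetical contents for EmpData.txt
rows = list(map_rows(sample, "Column0:FirstName,Column1:LastName"))
print(rows)
```

Each resulting dictionary corresponds to one row that would be written to the destination table, with the CSV fields renamed to the table's column names.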

Additionally, note that within the scheduler section of the activity JSON code sample, you can specify a recurring schedule for a pipeline activity. For example, you can schedule a Data Factory pipeline copy activity to run every hour by modifying the JSON as follows:

JSON Code Fragment - Scheduler:

    "scheduler": {
      "frequency": "Hour",
      "interval": 1
    },
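Because the definitions are plain JSON, the same change can also be scripted. The following sketch loads an abbreviated pipeline definition and rewrites the scheduler block; the `set_schedule` helper and the trimmed-down document are hypothetical and exist only for illustration.

```python
import json

# Abbreviated, hypothetical pipeline definition containing only the fields used here.
pipeline_json = """
{
  "name": "CopyPipelineBlob2SQLTable",
  "properties": {
    "activities": [
      {"type": "Copy", "scheduler": {"frequency": "Day", "interval": 1}}
    ]
  }
}
"""


def set_schedule(definition: dict, frequency: str, interval: int) -> dict:
    """Set the scheduler block on every activity in a pipeline definition."""
    for activity in definition["properties"]["activities"]:
        activity["scheduler"] = {"frequency": frequency, "interval": interval}
    return definition


pipeline = set_schedule(json.loads(pipeline_json), "Hour", 1)
print(json.dumps(pipeline, indent=2))
```

In practice, the `availability` section of the output dataset would likely need the same frequency and interval so that the dataset and the activity stay in step.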

Note

See the following link for a complete overview of the JSON options for a Data Factory pipeline Copy operation:

Move data to and from Azure blob using Azure Data Factory: https://docs.microsoft.com/en-us/azure/data-factory/data-factory-azure-blob-connector#azure-storage-linked-service .

As can be seen from the composable architecture that the JSON definitions provided, Azure Data Factory is an extremely powerful and flexible tool to help manage all the critical aspects of managing Big Data in the cloud. Aspects such as data ingestion (either on-premise or in Azure), preparation, transformation, movement, and scheduling are all required features for running an enterprise-grade data management platform.

Monitoring and Managing Data Factory Pipelines

The Azure Data Factory service provides a rich monitoring dashboard capability that helps to perform the following tasks:

Assess pipeline health data from end-to-end

Identify and fix any pipeline processing issues

Track the history and ancestry of your data

View relationships between data sources

View full historical accounting of job execution, system health, and job dependencies

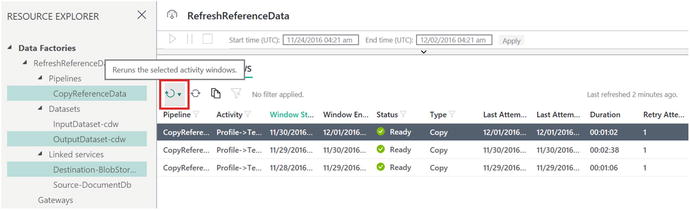

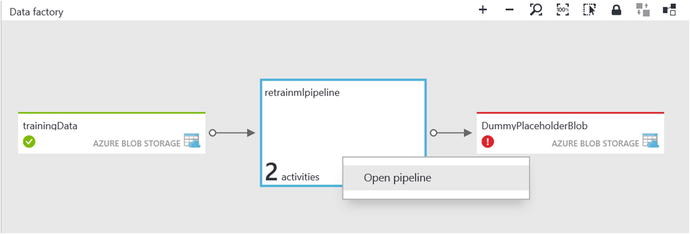

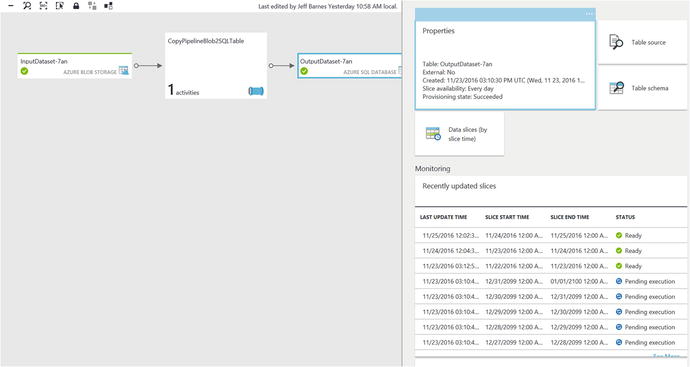

You can easily monitor the state of an Azure Data Factory pipeline job by navigating to your Data Factory job in the Azure Portal and then clicking on the Diagram option, as shown in Figure 6-4.

Figure 6-4. Azure Portal: Data Factory job, diagram view

Next, you will see a visual diagram of your Data Factory pipeline job, as shown in Figure 6-5. By clicking on either one of the Input or Output definitions, you can see the history and status of each “slice” of data that was created, along with what is scheduled to occur next.

Figure 6-5. Monitoring an Azure Data Factory job by viewing the output segment history

Note that the status for each pipeline activity in Azure Data Factory can cycle among many potential execution states, as follows:

Skip

Waiting

In-Progress

In-Progress (Validating)

Ready

Failed

Figure 6-6 represents the various states of execution that can occur when an Azure Data Factory pipeline job is active.

Figure 6-6. Data Factory pipeline job state transition flow

Azure Data Factory “slices” are the intervals in which the pipeline job is executed within the period defined by the start and end properties of the pipeline. For example, if you set the start and end times to span a single 24-hour day and set the frequency to one hour, the activity is executed 24 times. In this case, you will have 24 slices, all using the same data source.
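The slice arithmetic can be checked with a short calculation. This is a sketch under stated assumptions: `slice_windows` and the `FREQUENCY` table are hypothetical, only fixed-length units are covered, and a slice is counted only if it fits entirely between the start and end times.

```python
from datetime import datetime, timedelta

# Assumed mapping from frequency names to durations; only fixed-length units are covered.
FREQUENCY = {
    "Minute": timedelta(minutes=1),
    "Hour": timedelta(hours=1),
    "Day": timedelta(days=1),
}


def slice_windows(start: datetime, end: datetime, frequency: str, interval: int = 1):
    """Return the (slice_start, slice_end) windows that fit between start and end."""
    step = FREQUENCY[frequency] * interval
    windows = []
    cursor = start
    while cursor + step <= end:
        windows.append((cursor, cursor + step))
        cursor += step
    return windows


windows = slice_windows(datetime(2016, 11, 22), datetime(2016, 11, 23), "Hour")
print(len(windows))  # 24
```

With a frequency of `Day` over the same period, the function returns a single window, which corresponds to one slice per day.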

Normally, in Azure Data Factory, the data slices start in a Waiting state for pre-conditions to be met before executing. Then, the activity starts executing and the slice goes into the In-Progress state. The activity execution may succeed or fail. The slice is marked as Ready or Failed based on the result of the execution.

You can reset the slice to go back from Ready or Failed state to a Waiting state. You can also mark the slice state to Skip, which prevents the activity from executing and will not process the slice.
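The slice lifecycle described above behaves like a small state machine. The sketch below encodes only the transitions mentioned in the text and omits the In-Progress (Validating) state; the `TRANSITIONS` table and both helper functions are hypothetical, and allowing Skip from the Waiting, Ready, and Failed states is an assumption.

```python
# Allowed transitions, taken from the prose description of slice states.
# Treating "Skip" as reachable from Waiting, Ready, and Failed is an assumption of this sketch.
TRANSITIONS = {
    "Waiting": {"In-Progress", "Skip"},
    "In-Progress": {"Ready", "Failed"},
    "Ready": {"Waiting", "Skip"},
    "Failed": {"Waiting", "Skip"},
    "Skip": set(),
}


def can_transition(current: str, target: str) -> bool:
    """Return True when a slice may move from current to target."""
    return target in TRANSITIONS.get(current, set())


def run_slice(succeeds: bool) -> list:
    """Walk one slice through a normal execution and return the states visited."""
    path = ["Waiting", "In-Progress", "Ready" if succeeds else "Failed"]
    assert all(can_transition(a, b) for a, b in zip(path, path[1:]))
    return path


print(run_slice(succeeds=True))
print(can_transition("Failed", "Waiting"))  # resetting a failed slice
```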

Note

See this link for more information: “Monitor and Manage Azure Data Factory Pipelines”: https://docs.microsoft.com/en-us/azure/data-factory/data-factory-monitor-manage-pipelines .

Data Factory Activity and Performance Tuning

Another key set of factors to consider when choosing a cloud data analytics processing system is performance and scalability. Azure Data Factory provides a secure, reliable, high-performance data ingestion and transformation platform that can run at massive scale. Azure Data Factory can enable enterprise scenarios where multiple terabytes of data are moved and transformed across a rich variety of data stores, both on-premise and in Azure.

The Azure Data Factory copy activity offers a highly optimized data loading experience that is easy to install and configure. Within just a single pipeline copy activity, you can achieve load speeds similar to the following:

Load data into Azure SQL data warehouse at 1.2 GB per second.

Load data into Azure blob storage at 1.0 GB per second.

Load data into Azure Data Lake Store at 1.0 GB per second.
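To put these rates in perspective, the following back-of-the-envelope calculation estimates how long a 1 TB load would take at each quoted speed. It assumes the rate is sustained for the whole transfer and takes 1 TB as 1,000 GB; `transfer_seconds` is a hypothetical helper.

```python
def transfer_seconds(size_gb: float, rate_gb_per_s: float) -> float:
    """Seconds needed to move size_gb at a sustained rate of rate_gb_per_s."""
    if rate_gb_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_gb / rate_gb_per_s


RATES = {  # GB per second, as quoted in the list above
    "Azure SQL Data Warehouse": 1.2,
    "Azure blob storage": 1.0,
    "Azure Data Lake Store": 1.0,
}

for sink, rate in RATES.items():
    minutes = transfer_seconds(1000, rate) / 60  # 1 TB taken as 1,000 GB
    print(f"{sink}: {minutes:.1f} minutes")
```

Real transfers depend on the source, the network, and the file layout, so these figures are best read as lower bounds.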

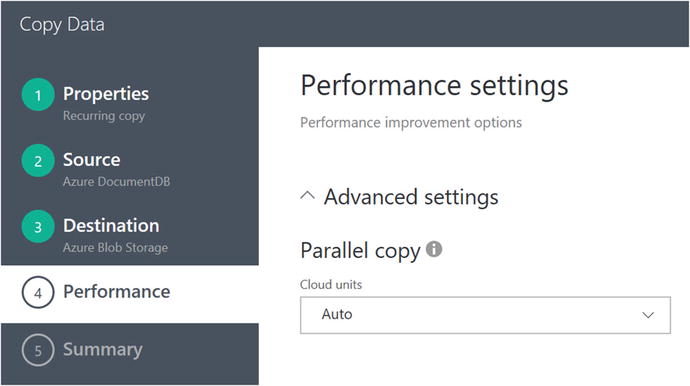

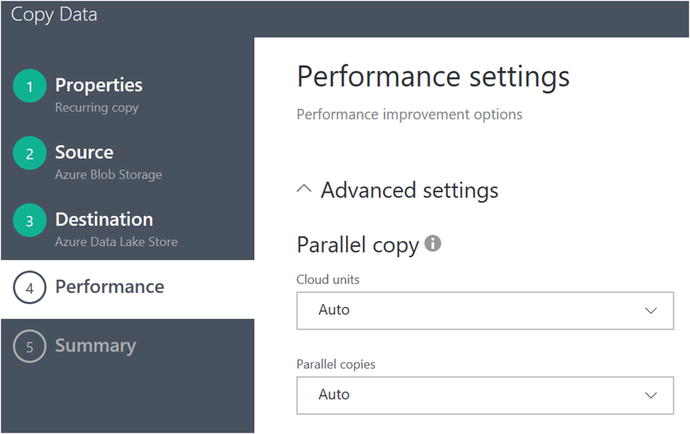

Parallel Copy

Azure Data Factory can also read data from a source or write data to a destination in parallel within a single Copy Activity run. This feature can have a dramatic impact on the throughput of a copy operation and can also reduce the time it takes to perform data transformation and movement functions.

You can use the JSON “parallelCopies” property, shown in Listing 6-3, to indicate the parallelism that you want the copy activity to use. You can think of this property as the maximum number of threads in the copy activity that can read from your source or write to your sink data stores in parallel.

Listing 6-3. JSON Snippet of Pipeline Copy Activity Showing the parallelCopies Property

JSON Pipeline Copy Activity - "parallelCopies" Property:

    "activities": [
      {
        "name": "Sample copy activity",
        "description": "",
        "type": "Copy",
        "inputs": [{"name": "InputDataset"}],
        "outputs": [{"name": "OutputDataset"}],
        "typeProperties": {
          "source": {"type": "BlobSource"},
          "sink": {"type": "AzureDataLakeStoreSink"},
          "parallelCopies": 8
        }
      }
    ]

For each copy activity run, Azure Data Factory determines the number of parallel copies to utilize to copy data from the source data store to the destination data store. The default number of parallel copies that are used is dependent on the type of data source and the data sink that is used.
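The "maximum number of threads" analogy can be illustrated with a bounded thread pool. This is a conceptual sketch only and says nothing about how Data Factory implements parallel copy internally; `copy_chunk` and `parallel_copy` are hypothetical, and the in-memory chunks stand in for real reads and writes.

```python
from concurrent.futures import ThreadPoolExecutor


def copy_chunk(chunk: bytes) -> int:
    """Stand-in for reading a chunk from the source and writing it to the sink."""
    return len(chunk)


def parallel_copy(chunks, parallel_copies: int) -> int:
    """Copy all chunks using at most parallel_copies worker threads; return bytes copied."""
    with ThreadPoolExecutor(max_workers=parallel_copies) as pool:
        return sum(pool.map(copy_chunk, chunks))


chunks = [b"x" * 1024 for _ in range(32)]  # hypothetical 32 KB payload in 1 KB chunks
print(parallel_copy(chunks, parallel_copies=8))  # 32768
```

Raising `parallel_copies` only helps while the source and sink can keep up, which is one reason the default differs by data store type.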

Cloud Data Movement Units (DMUs)

A cloud Data Movement Unit (DMU) is a Data Factory measurement that represents the relative power (a combination of CPU, memory, and network resource allocation) of a single unit in Azure Data Factory. A DMU can be used in a cloud-to-cloud copy operation, but not in a hybrid copy from an on-premise data store.

By default, Azure Data Factory uses a single cloud DMU to perform a single pipeline copy activity execution. To override the default, specify a value for the cloudDataMovementUnits property, as shown in the code segment in Listing 6-4.

Listing 6-4. Sample JSON snippet showing the cloudDataMovementUnits Property

Data Factory - JSON Property for "cloudDataMovementUnits":

    "activities": [
      {
        "name": "Sample copy activity",
        "description": "",
        "type": "Copy",
        "inputs": [{"name": "InputDataset"}],
        "outputs": [{"name": "OutputDataset"}],
        "typeProperties": {
          "source": {"type": "BlobSource"},
          "sink": {"type": "AzureDataLakeStoreSink"},
          "cloudDataMovementUnits": 4
        }
      }
    ]

Note that you can achieve higher throughput by using more DMUs than the default maximum, which is eight for a cloud-to-cloud copy activity run. As an example, you can copy data from Azure blob storage to Azure Data Lake Store at a rate of 1 gigabyte per second when the copy activity is configured to use 100 DMUs. To request more than the default maximum of eight DMUs for your subscription, you need to submit a support request via the Azure Portal.

Note

For more detailed information concerning performance and tuning for Azure Data Factory jobs, visit the “Copy Activity Performance and Tuning Guide” at https://docs.microsoft.com/en-us/azure/data-factory/data-factory-copy-activity-performance .

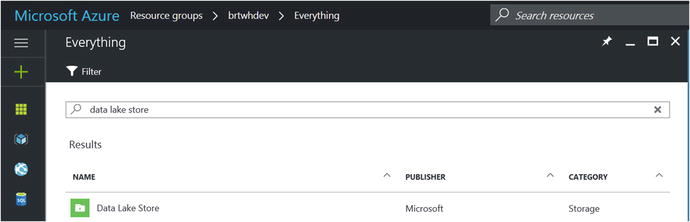

Azure Data Lake Store

Azure Data Lake Store is a hyper-scale repository and processing environment for today’s modern Big Data analytical workloads.

Azure Data Lake enables you to persist data of any size, data type, and ingestion speed, in a single location, for use in operational and data analytics research.

Hadoop Access

Azure Data Lake Store can be accessed from Hadoop and Azure HDInsight using the WebHDFS-compatible REST APIs. The hadoop-azure-datalake module provides support for integration with the Azure Data Lake Store. The JAR file is named azure-datalake-store.jar.

Note

For Hadoop Azure Data Lake Support, visit https://hadoop.apache.org/docs/r3.0.0-alpha1/hadoop-azure-datalake/index.html .
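As a rough illustration of what WebHDFS-compatible access looks like, the sketch below builds a request URL for a directory listing. The host pattern, the account name, and the folder path are assumptions made for this example, and `webhdfs_url` is a hypothetical helper; a real request would also need to carry an OAuth bearer token issued by Azure Active Directory.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(account: str, path: str, op: str, **params) -> str:
    """Build a WebHDFS-style request URL for a Data Lake Store account."""
    query = urlencode({"op": op, **params})
    # Assumed host pattern: <account>.azuredatalakestore.net
    return f"https://{account}.azuredatalakestore.net/webhdfs/v1/{quote(path.lstrip('/'))}?{query}"


# Hypothetical account name and folder path.
print(webhdfs_url("myadlsaccount", "/telemetry/2016/11", "LISTSTATUS"))
```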

Note that there is a distinction to be made around the meaning of the term Azure Data Lake. There are potentially two different meanings in Microsoft Azure. It is typically used to refer to a storage subsystem in Azure more commonly referred to as “Azure Data Lake Store” or “ADLS”.

The other variation of the term is “Azure Data Lake Analytics” or “ADLA,” which is an Azure-based analytics service where you can easily develop and run massively parallel data transformation and processing programs in a variety of languages such as U-SQL, R, Python, and .NET. Azure Data Lake Analytics is covered in detail in Chapter 7. For now, we will cover the basics of Azure Data Lake Store.

ADLS is specifically designed to enable analytics on the data stored in Azure Data Lake. The Data Lake storage subsystem is fine-tuned specifically for high performance for data analytics scenarios.

As a completely managed service offering from Microsoft, Azure Data Lake Store includes all the enterprise-grade capabilities one would expect from a cloud-based repository with massive scalability. The key “abilities” provided by Azure Data Lake Store include security, manageability, scalability, reliability, and availability. All of these characteristics are essential for real-world enterprise use cases.

With Azure Data Lake Store, you can now explore and harvest value from all your unstructured, semi-structured, and structured enterprise data by running massively parallel analytics over literally any amount of data. Azure Data Lake Store has no artificial constraints on the amount of data, number of files, or the size of individual files that can be stored. At the time of this writing, ADLS can store individual files that can be as large as petabytes in size, which is at least 200x larger than any other cloud storage service available today.

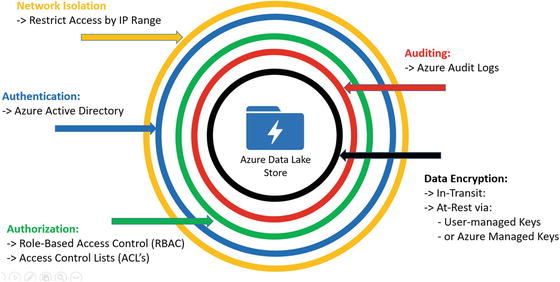

Security Layers

Azure Data Lake Store has security features that are “built-in” from the ground up. As can be seen in Figure 6-7, Azure Data Lake Store has a number of Azure security features and capabilities layered in to help provide the highest confidence in the security of the data, whether the data is at rest or in transit.

Figure 6-7. Layered security in Azure Data Lake Store

Figure 6-7 illustrates the various security layers involved in protecting your data in the Azure Data Lake Store. Here is a quick recap of these “built-in” security features:

Network Isolation: Azure Data Lake Store allows you to establish firewalls and define an IP address range for your trusted clients. With an IP address range, only clients that have an IP in the defined range can connect to Azure Data Lake Store.

Authentication: Azure Data Lake Store has Azure Active Directory (AAD) natively integrated to help manage users and group access and permissions. AAD also provides full lifecycle management for millions of identities, integration with on-premise Active Directory, single sign-on support, multi-factor authentication, and support for industry standard open authentication protocols such as OAuth.

Authorization: Azure Data Lake Store (ADLS) uses Role-Based Access Control (RBAC) for account management and POSIX-style Access Control Lists (ACLs) for managing access to the data files in the Data Lake store. Together, these capabilities provide fine-grained control over file access and permissions (at scale) for all data stored in an Azure Data Lake.

Auditing: Azure Data Lake Store provides rich auditing capabilities to help meet today’s modern security and regulatory compliance requirements. Auditing is turned on by default for all account management and data access activities. Audit logs from Azure Data Lake Store can be easily parsed because they are persisted in JSON format. Additionally, since JSON is an easy-to-consume format, you can use a wide variety of Business Intelligence (BI) tools to help analyze and report on ADLS activities.

Encryption: Azure Data Lake Store provides built-in encryption for both “at-rest” and “in-transit” scenarios. For data at rest, Azure administrators can specify whether to let Azure manage the Master Encryption Keys (MEKs) or to bring their own MEKs. In either case, the MEKs are stored and managed securely in Azure Key Vault, which can utilize FIPS 140-2 Level 2 validated HSMs (Hardware Security Modules). For data in transit, Azure Data Lake Store data is always encrypted by using the HTTPS (HTTP over Secure Sockets Layer) protocol.

Note that in Azure Data Lake Store, you can choose to have your data encrypted or have no encryption at all. If you choose encryption, all data is encrypted before it is persisted in the store. Conversely, ADLS decrypts the data before it is retrieved by the client. From a client perspective, the encryption is transparent and seamless. Consequently, there are no code changes required on the client side to view or encrypt/decrypt the data.
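Returning to the network isolation layer in the list above, the IP range rule amounts to a simple membership test. The sketch below shows the check using Python's standard `ipaddress` module; `ip_in_range` is a hypothetical helper, the addresses come from ranges reserved for documentation, and the actual firewall evaluation happens inside the service.

```python
import ipaddress


def ip_in_range(client_ip: str, range_start: str, range_end: str) -> bool:
    """Return True when client_ip lies within the inclusive start-end range."""
    ip = ipaddress.ip_address(client_ip)
    return ipaddress.ip_address(range_start) <= ip <= ipaddress.ip_address(range_end)


# Hypothetical trusted range using documentation-reserved addresses.
print(ip_in_range("203.0.113.25", "203.0.113.0", "203.0.113.63"))  # True
print(ip_in_range("198.51.100.7", "203.0.113.0", "203.0.113.63"))  # False
```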

ADLS Encryption Key Management

For encryption key management, Azure Data Lake Store provides two modes for managing your Master Encryption Keys (MEKs). These keys are required for encrypting and decrypting any data that is stored in the Azure Data Lake Store.

You can either let Data Lake Store manage the master encryption keys for you or choose to retain ownership of the MEKs using your Azure Key Vault account. You can specify the mode of key management while creating a new Azure Data Lake Store account.

Tip

Get started with Azure Data Lake Analytics using the Azure Portal: https://docs.microsoft.com/en-us/azure/data-lake-analytics/data-lake-analytics-get-started-portal .

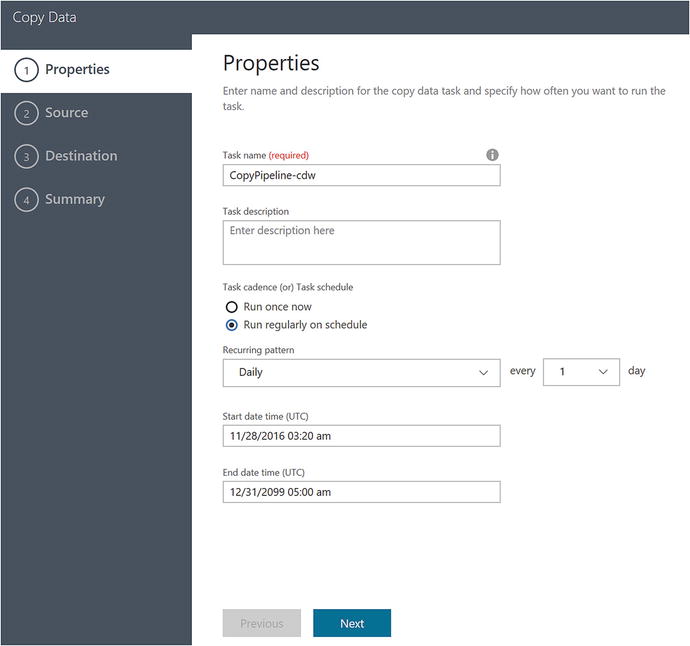

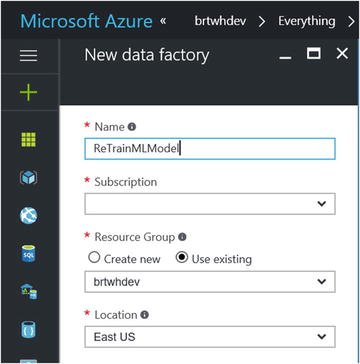

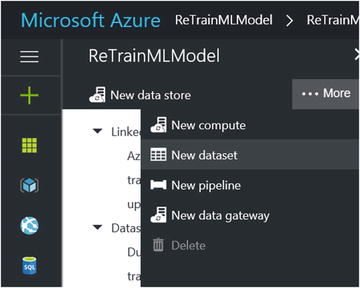

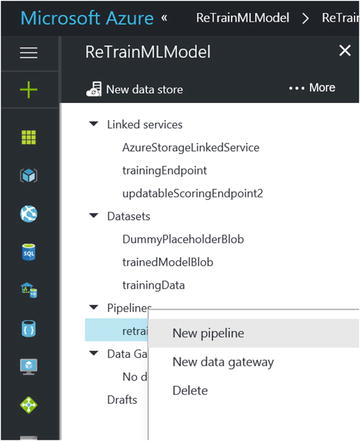

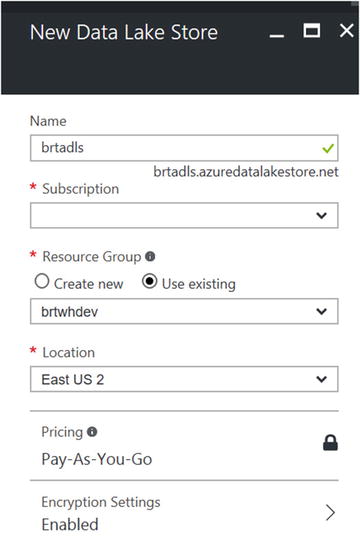

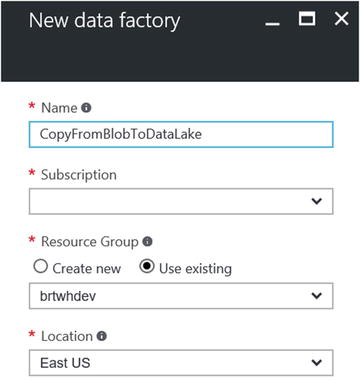

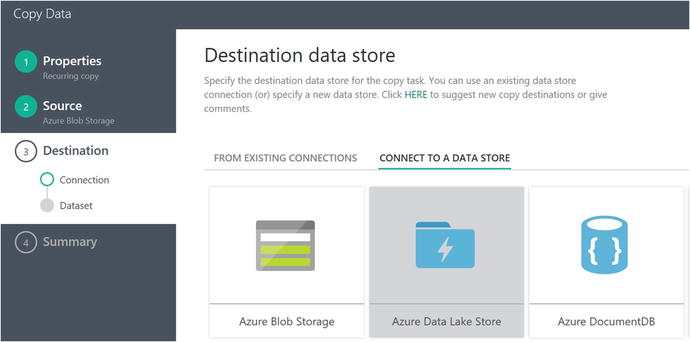

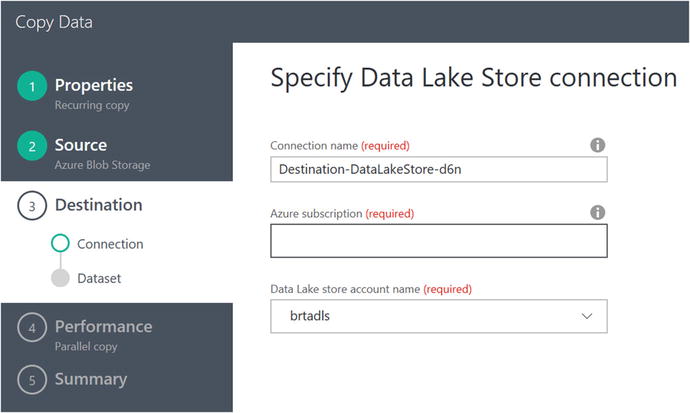

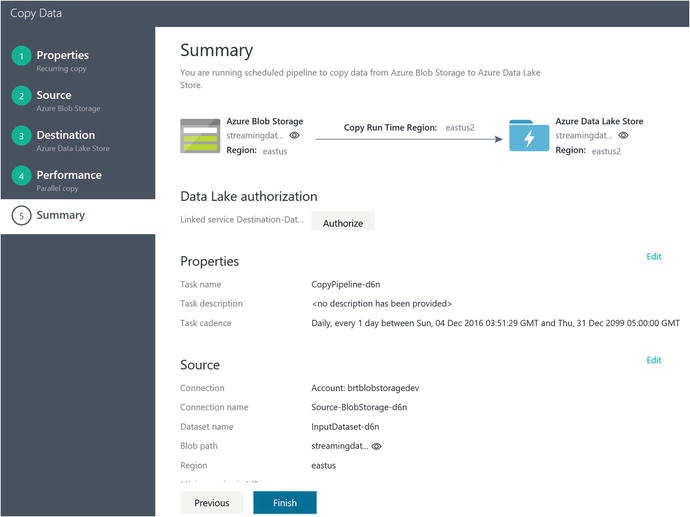

Implementing Data Factory and Data Lake Store in the Reference Implementation

Now that you have a solid background in the features and capabilities of Azure Data Factory and Azure Data Lake Store, you will put your knowledge to use by implementing a few more key pieces of the reference implementation in the remainder of this chapter. As a quick refresher, you will implement the following three pieces of functionality that are required for the reference implementation:

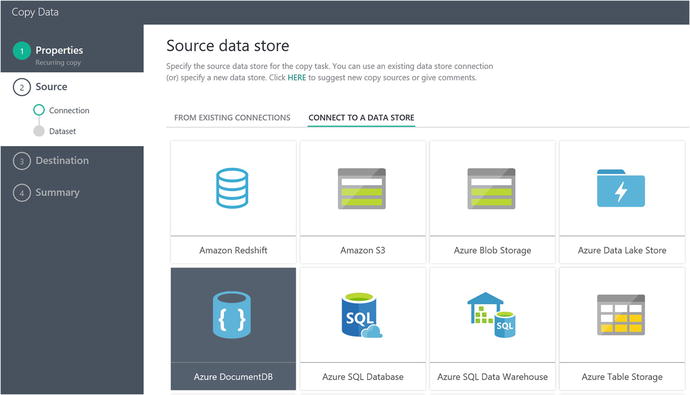

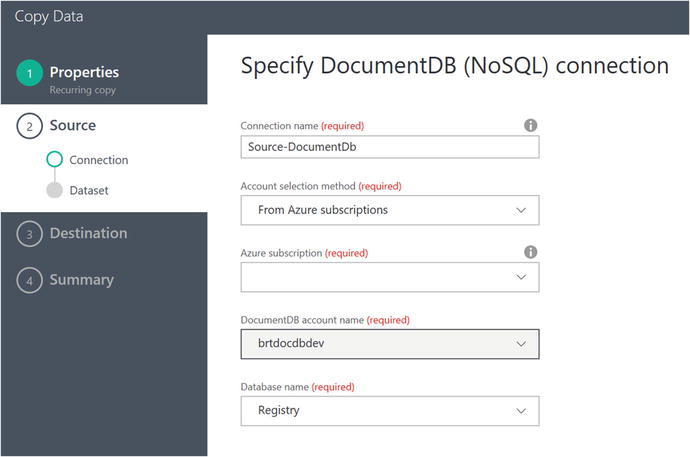

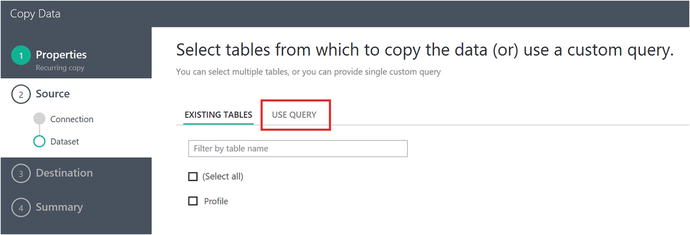

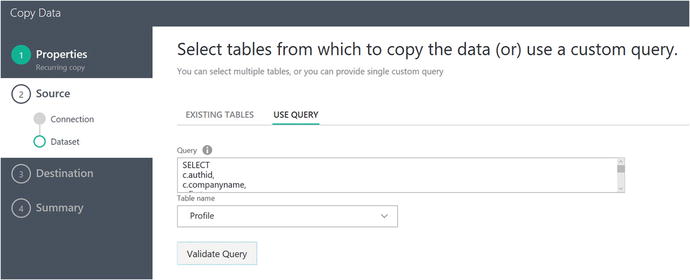

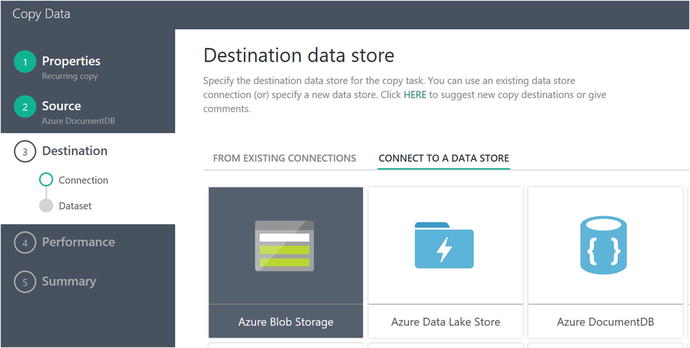

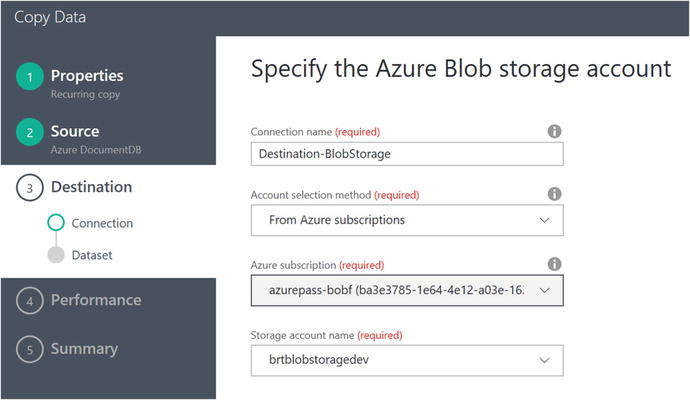

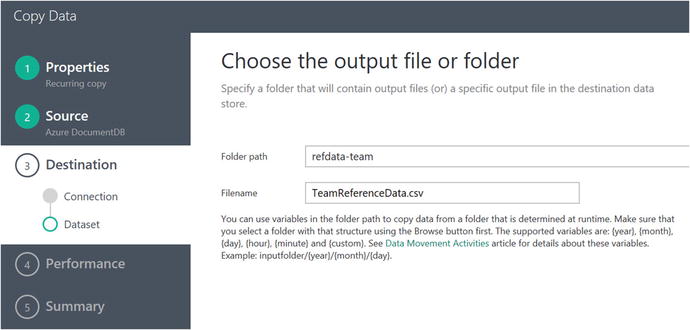

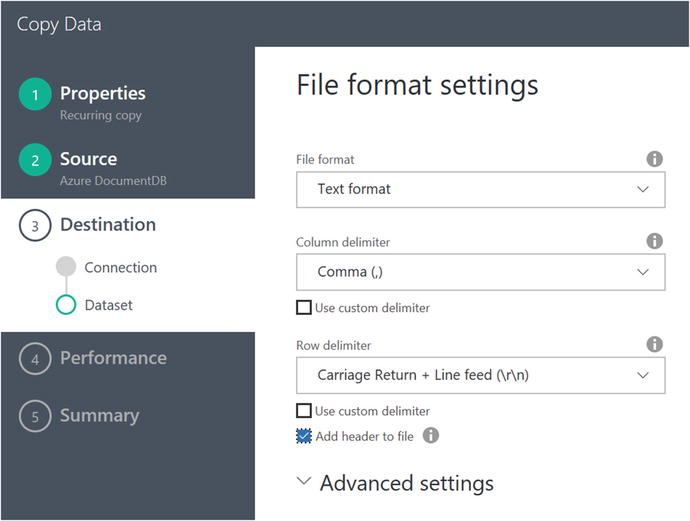

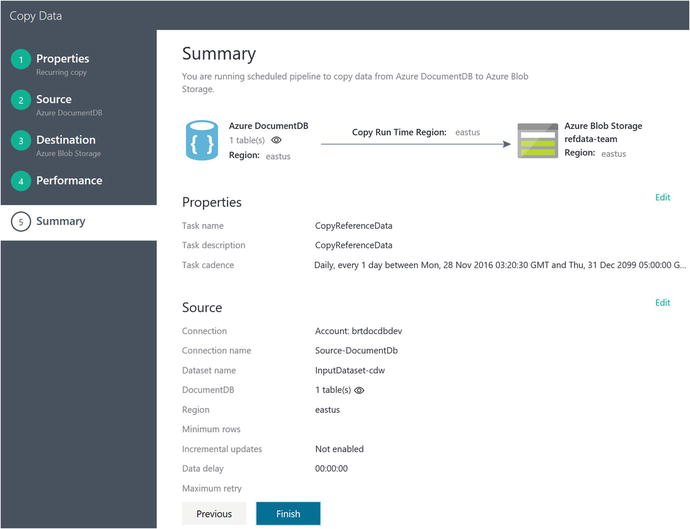

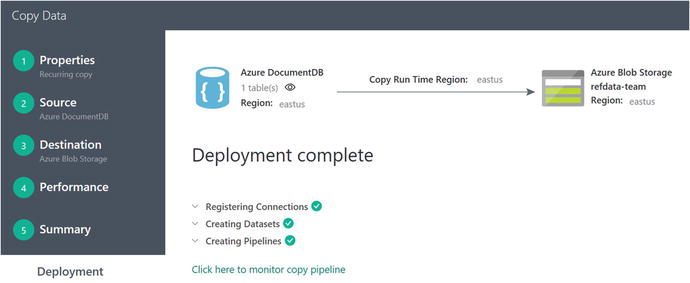

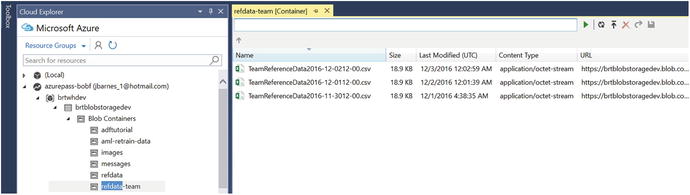

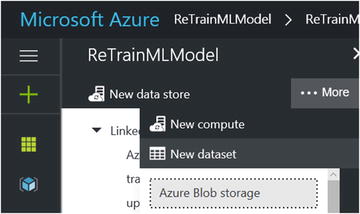

Update Reference data that you used for the Azure Stream Analytics job. You will use an Azure Data Factory copy job to copy team members’ profile data from Azure DocumentDB to a text-based CSV file in Azure Blob storage. As you may recall, you used this reference data in an ASA SQL JOIN query for gathering extended team member health data. We want to make sure that this reference data is updated periodically via a scheduled copy job.

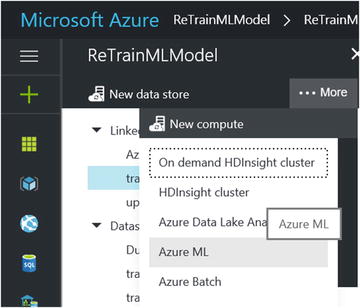

Re-train the Azure Machine Learning model for predicting team member health and exhaustion levels. We implemented a function in Chapter 5 (StreamAnalytics) to call an Azure Machine Learning Web Service. We want to update the predictive model that runs behind this service using updated medical stress data from tests that are administered to team members on a periodic basis.

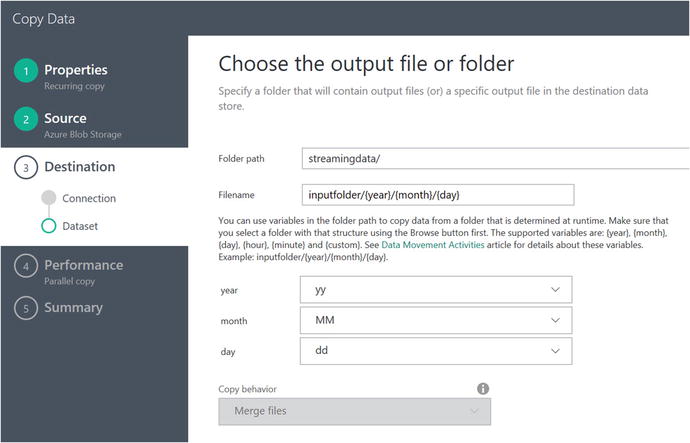

Move data from Azure blob storage to Azure Data Lake. This job will copy the data that originally came from the IoT Hub and was saved into Azure blob storage by the Azure Stream Analytics job in Chapter 5. We want to move this data from Azure blob Storage to Azure Data Lake Store.

Summary

This chapter provided a high-level overview of Azure Data Factory and Azure Data Lake Store. You implemented three Data Factory jobs to accomplish the corresponding use case scenarios for the reference implementation:

Update reference data

Re-train the Azure Machine Learning model

Move data from Azure blob storage to Azure Data Lake Store

It should now be very apparent that Azure Data Factory is the primary tool for accomplishing what is known in the Business Intelligence field as ETL (Extract, Transform, and Load) operations in Azure.

You also saw how Data Factory jobs can be edited using pure JSON to gain fine control over the execution aspects of a Data Factory job. You used parameters in Data Factory to create scheduled jobs to automate the data movement operations on a recurring weekly basis.

Data Lake Store is a robust and virtually limitless data store that we will explore more fully in the next chapter when we examine Data Lake analytics.