Chapter 1

Fundamentals of Cloud Computing

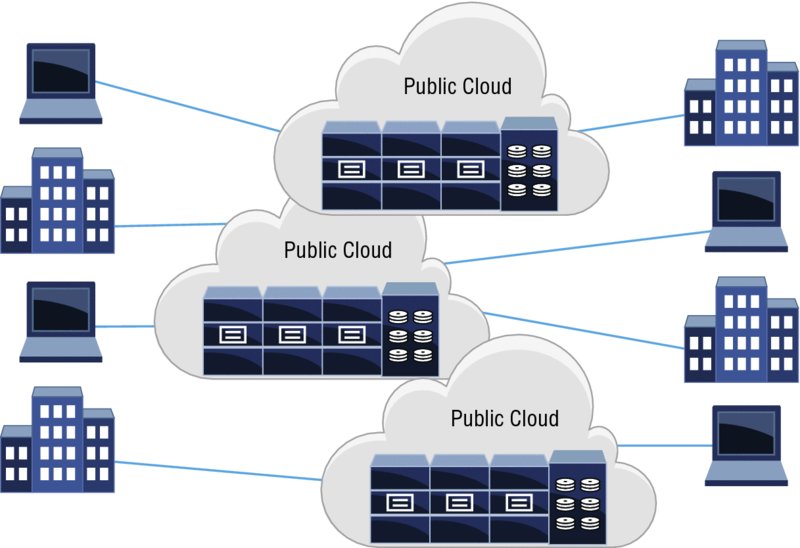

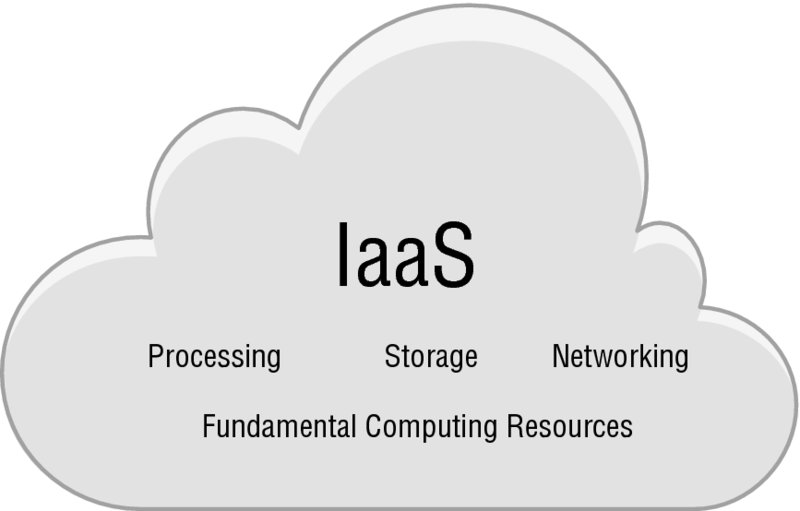

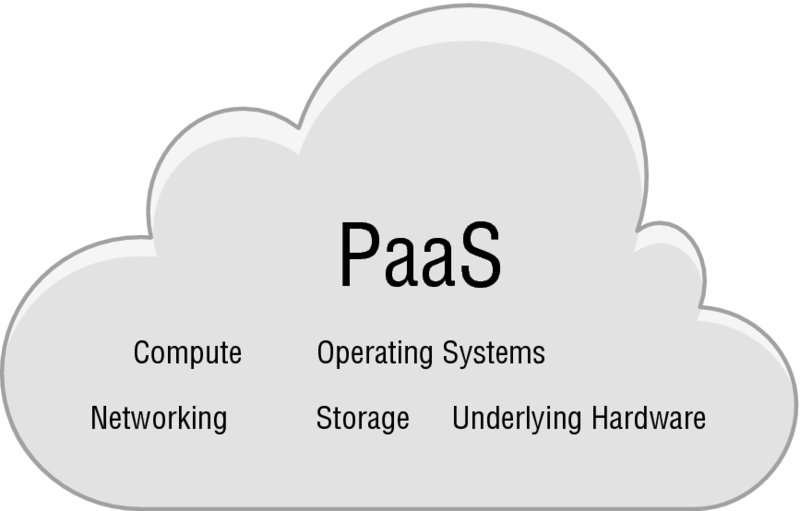

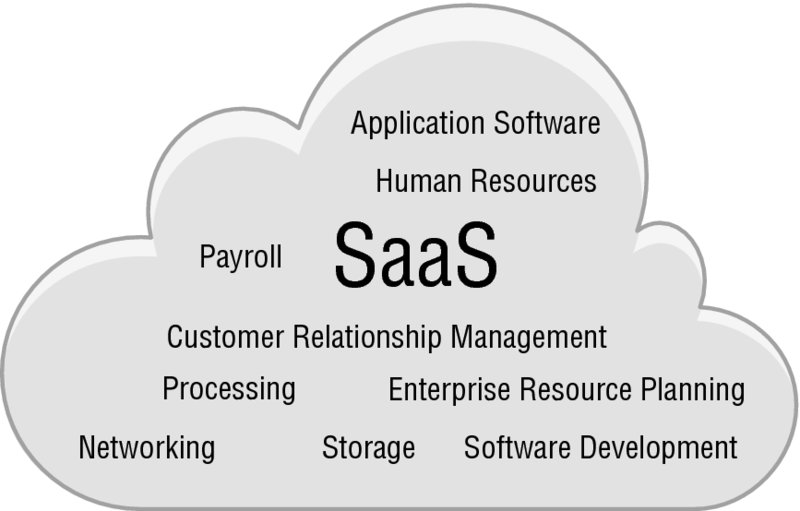

The following Understanding Cisco Cloud Fundamentals CLDFND (210-451) Exam Objectives are covered in this chapter:

✓ 1.0 Cloud characteristics and models

You will begin your journey toward the Cisco Certified Network Associate (CCNA) Cloud certification by looking at the big picture of this phenomenon called the cloud. You will be introduced to many definitions and concepts that will help you truly understand the finer details of cloud computing as you progress on your certification journey. This book investigates both the many generic aspects of the cloud and the details and technology pieces of the Cisco cloud service offerings.

Throughout this chapter and book, you will be comparing the old with the new, in other words, the traditional data center with the new cloud-based computing model. With cloud computing, you pay for only what you use, and you do not own the equipment, as is usually the case in the traditional world of corporate data centers. Cloud computing frees you from the day-to-day work of patching servers, swapping out failed power supplies and disk drives, and dealing with the myriad other issues that come with owning and maintaining your own equipment. The cloud allows you to scale your computing capacity up and down very quickly and to pay for only what you use, not for standby capacity. You can now deploy your applications closer to your customers, no matter where in the world they are located. Also, smaller companies can use advanced services that were once affordable only on the budgets of the largest corporations in the world.

Cloud computing also differs from the corporate IT model in that the time to spin up new services is greatly reduced. There are no large, up-front financial commitments either; you are renting capacity instead of purchasing it.
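To make the “pay for only what you use” point concrete, here is a minimal Python sketch comparing the two approaches for a business with a seasonal peak. The monthly demand figures are made up for illustration, and the sketch counts server-months rather than dollars, so it deliberately ignores any difference in unit price between owned and rented capacity.

```python
# Hypothetical monthly demand, in servers needed, for a retailer whose
# busy season falls at the end of the year (illustrative numbers only).
monthly_demand = [20, 20, 22, 22, 25, 25, 25, 25, 30, 40, 80, 100]


def owned_server_months(demand):
    """Traditional model: buy enough servers for the peak month and keep them all year."""
    return max(demand) * len(demand)


def rented_server_months(demand):
    """Utility model: rent only the servers each month actually needs."""
    return sum(demand)


def utilization(demand):
    """Fraction of the owned peak capacity that is actually used over the year."""
    return rented_server_months(demand) / owned_server_months(demand)


print(f"Owned:  {owned_server_months(monthly_demand)} server-months")
print(f"Rented: {rented_server_months(monthly_demand)} server-months")
print(f"Average utilization of owned capacity: {utilization(monthly_demand):.0%}")
```

With these hypothetical numbers, the owned model pays for 1,200 server-months while only 434 are actually needed, so the owned capacity averages roughly 36 percent utilization over the year.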
In the distant past, each and every factory or town that needed power was responsible for generating and distributing its own electricity. The same was true for many other services that we take for granted today, such as water, gas, and telephone service. The up-front costs, ongoing maintenance, and general headaches of operating a power plant to run your business are unthinkable today, but this was actually commonplace in the past. A manufacturing company, for example, would rather not concentrate on making the power to run its factory; it would rather focus on its own area of expertise and purchase electricity as needed from a utility that specializes in generating and distributing electrical power. However, at one time, that was not an option! There was also the matter of economies of scale: it is much cheaper per unit to generate power in a single large facility than in many small plants, each attached to an individual factory in every town.

The computing industry took a very different path before the cloud. Companies that needed computing resources to run their businesses effectively would purchase their own large computer systems. This came with a heavy up-front expense, not only for the computer systems and all of the needed peripheral equipment but also for the expensive rooms built to house these machines. Since computers can be temperamental, the data centers needed to be cooled, and the heat coming off the computers had to be removed from the room. Computers are also rather finicky about electricity, demanding a steady and stable power source, and lots of it! Then there was the staff of engineers (such as your two authors!) who meticulously designed, installed, and maintained these systems. There were programmers, and there were too many auxiliary products and subsystems to count!
Don’t worry, we are going to talk about a lot of these systems, but believe us, you need an extensive array of support products, software, and personnel to pull all of this off.

What happens when your business fluctuates, as in the case of a retailer that is busy during the holiday season and not so busy the rest of the year? Well, you will need to engineer and scale the data-processing capacity to meet the anticipated heaviest load (with some additional capacity just in case). This, of course, means that there is usually a whole lot of idle capacity the rest of the year. Yes, that’s right: that very expensive compute capacity, and all the trimmings such as the staff and support equipment in the corporate data center, just sits there unused the rest of the year, making your financial staff very upset.

It only gets worse. What happens when you need to add capacity as your company, and with it your data-processing needs, grow? As you will learn, with the progress of technology, and especially with virtualization and automation, the computing world has evolved greatly and can now be offered as a utility service just like electricity, gas, and water. Sure, we had to give it the fancy name of cloud computing, but it is still the process of running applications on a computer to support your business operations.

In the past, however, when new capacity was required, a team of engineers would need to determine the best approach to accomplish the company’s goals and the equipment and software that would be required. Then the equipment list would go out for bid, or quotes would need to be gathered. There would be time for the purchasing cycle to get the equipment ordered. After some time, it would be delivered to the loading dock, and the fun would really begin. The equipment would need to be unpacked, racked, cabled, and powered up, and then usually a fair amount of configuration would take place.
Then the application teams would install the software, and even more configuration would be needed. After much testing, the application would go live, and all would be good. However, this could take a very long time, and as that equipment aged, a new refresh cycle would begin all over again.

With the utility model of computing, or the cloud, there are no long design, purchasing, delivery, setup, and testing cycles. You can go online, order a compute instance, and have everything up and running in literally minutes. The financial staff is also very happy, as there are no capital expenditures or up-front costs. It is just like electricity: you pay for only what you use!

This new model of computing has largely been made possible by the widespread deployment of virtualization technologies. While the concept of taking one piece of computing hardware and running many virtual computers inside of it goes back decades to the days of mainframe computers, new virtualization software and cheap, powerful hardware have brought virtualization into the mainstream and made possible many of the amazing things you will explore in the cloud throughout this study guide. In the past, a physical server was often dedicated to each application; now, a powerful server built on commodity silicon can run hundreds of virtual machines. Many other data center functions, such as load balancers, firewalls, switches, and routers, have also been virtualized, and the more that is virtualized, the more cost-effective cloud services become. The management and automation systems developed around the virtualized data center also allow cloud providers to implement complete solutions quickly. It is often as simple as logging into a cloud dashboard with a common web browser and spinning up multiple servers, network connections, storage, load balancers, firewalls, and, of course, applications.
This level of automation enabled by virtualization is going to be a focus of the CCNA Cloud certification.

Is this the same old data center that has been moved to the other side of the Internet, or is it something new and completely different? Well, actually it is a little of each. What is new is that the time to bring new applications online has been greatly reduced, because the computing capacity already exists in the cloud data center and is available as an on-demand service. You can now simply order compute virtual machines and the associated storage and services just as you would any utility service. The cost models are also completely different: the up-front costs have largely been eliminated, and you pay as you go for only what you are using. Also, if you require additional capacity, even for a short amount of time, you can scale automatically in the cloud and then shut down the added servers when your computing workload decreases.

Because of the lower total cost of operations and short deployment times, the growth of cloud computing has been nothing short of phenomenal. Cloud companies that are only a few years old are now multibillion-dollar enterprises. Growth rates in this industry are in the double digits and seem to increase every year; the business model is just too compelling for almost any business to ignore. Accurate numbers on the size of the cloud market can be hard to find, because many companies do not break out their cloud revenue from their other lines of business in their financial reports. Also, no one seems to really agree on what cloud computing is in the first place. What is clear is that cloud computing is growing rapidly and that the growth is accelerating as more and more companies move their operations to the cloud. It is common to see estimates of cloud growth at 25 to 40 percent or even higher per year, continuing for many years into the future, with revenues well north of $100 billion per year.
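The elastic behavior described above, adding servers when the workload rises and shutting them down when it falls, can be sketched as a simple target-utilization rule. This is a hypothetical policy written in Python for illustration only; the function name, the 60 percent target, and the minimum and maximum server counts are assumptions, and each cloud provider exposes its own autoscaling settings.

```python
def desired_servers(current_load: int, capacity_per_server: int,
                    target_utilization_pct: int = 60,
                    min_servers: int = 2, max_servers: int = 20) -> int:
    """Hypothetical scaling rule: run enough servers to keep each one near the target utilization.

    current_load and capacity_per_server share one illustrative unit,
    such as requests per second.
    """
    # Usable capacity per server, scaled by 100 to stay in integer arithmetic.
    usable_per_server = capacity_per_server * target_utilization_pct
    # Integer ceiling division: round up so the load is always covered.
    needed = -(-(current_load * 100) // usable_per_server)
    # Keep a floor for redundancy and a ceiling for cost control.
    return max(min_servers, min(max_servers, needed))


# A day with a midday spike: capacity grows with demand and is released afterward.
for hour, load in [(6, 100), (12, 900), (18, 400), (23, 50)]:
    print(f"{hour:02d}:00  load={load:4d}  servers={desired_servers(load, 100)}")
```

With 100 units of capacity per server, this rule runs 15 servers at the noon peak of 900 and falls back to the 2-server floor late at night, so the extra capacity is paid for only while it is needed.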
In later chapters, you will investigate what is required to migrate your computing operations from the private data center to the cloud, as shown in Figure 1.1. Cloud migration may not be trivial, given the nature of many custom applications and systems that have evolved over many years or that run on platform-specific hardware and operating systems. However, as virtualization technology has exploded and as you gain more experience in the cloud, you will find many tools and utilities that make life much simpler (well, let’s just say less complicated) than migrations were in the past.

Figure 1.1 Migrations from the corporate data center to the cloud

You cannot simply take an application running in your corporate data center and file-transfer it to the cloud. Life is far from being that simple. Operating systems and applications running directly on a server, such as an e-mail server, will need to be converted to the virtual world and made compatible with the virtualization technologies used by the cloud providers. Migrating to the cloud is actually a very large business in itself, with many professional services teams working throughout the world every day to assist in the great migration to the cloud.

In the past, the networking professional with a Cisco Certified Network Associate certification could often be found in the network room or wiring closet, racking a switch or router and then configuring the VLANs and routing protocols required to make everything work. How is our world changing now that another company, the cloud company, owns the network and the computing systems? Should we all go look for another gig? Don’t worry, there will be plenty for all of us to do! As you will learn on your journey to becoming a Cisco Certified Network Associate Cloud professional, the way you do things will certainly change, but there will always be a demand for your expertise and knowledge.
The cloud engineer will still be doing configurations and working in consoles; you will just be doing different, but related, tasks. Your work will differ from the world of IOS commands that you might know and love, but that is a good thing. You will be doing higher-level work on cloud deployments and will be less concerned with the underlying hardware and systems, since those will be the responsibility of the cloud service providers. Also, the different groups in the data center, such as networking, storage, security, operating systems, and applications, which used to work in silos, will now be more integrated than ever before. You will be concerned not only with the network but with storage, security, and all the other systems that must work together in both corporate and cloud data centers.

As a student, you have much to learn to become CCNA Cloud certified. We will cover all the topics that the CCNA Cloud blueprint outlines, including those specific to Cisco’s intercloud and the family of products it uses, such as UCS, the Nexus and MDS products, and the software management products in the Cisco portfolio. You will also need to learn much more than networking: topics such as storage systems, operating systems, server hardware, and more will require your understanding and knowledge if you are to be a cloud engineer. The cloud marketplace is growing and changing at a breakneck pace, so you will need to keep learning about new offerings just to keep up. Cloud providers release new services weekly, and existing products are constantly being improved and expanded. The learning will never stop!

It is important to learn the cloud terms and structure that the CLDFND 210-451 exam covers, as they form a strong knowledge base. From these topics, you can build and expand your knowledge into more specific and detailed areas of cloud computing.
You are advised to fully understand the big picture of cloud computing and build on that as you gather knowledge and expertise.

In this section, you will step back and look at how the world of computing has evolved over the decades. This will give you some historical perspective and show you that what was once old can be new again! Once you have an understanding of the past, we will give you a quick look at where we are today and make a few predictions about where we are headed.

The history of computing is a rather large topic, and those of us who have been in the industry for many years can sometimes be nostalgic for the good ol’ days. At the same time, we can admit to being shocked when walking through the Smithsonian museum in Washington, DC, and seeing “historical” equipment that we used to work on! It is no exaggeration to say that technology, and more specifically the computing and networking industry, has evolved at a very fast pace. It is also true that the only constant is change and that change is happening faster than ever before.

Back in the dark ages of computing, computers were large, expensive, and complicated, and they had limited processing power by today’s standards. In fact, the smartphones we carry in our pockets today are more powerful than most of the room-sized computers of the past. The original architecture of computing was that of large, centralized systems accessed from remote terminals with limited intelligence and computing power. Over the years, the large mainframes gave way to multiple minicomputers, as shown in Figure 1.2. These were still housed in climate-controlled computer rooms, and users accessed their processing capabilities from dumb terminals consisting of only a keyboard and monitor.

Figure 1.2 Mainframe and minicomputers

When Intel-based servers entered the marketplace and local area networks made their first appearance, there was a fundamental shift in the industry.
The power of computing moved out of the “big glass rooms,” as computer rooms were called back then, and into the departmental areas of the corporations. This new client-server architecture was a form of distributed computing in which a central file server stored data and offered printing services to a large number of personal computers; the personal computers replaced the dumb terminals used in the mainframe and minicomputer world (see Figure 1.3). This was a huge industry change in which the end users of systems gained control over their computing requirements and operations.

Figure 1.3 Client-server computing

Client-server systems were not without their problems, however. Many islands of computing networks sprang up everywhere, and the many proprietary protocols in use meant that most of these systems were not compatible with each other; hence, there was no interoperability. Data was now scattered all over the company, with no central control of security or data integrity and no ability to manage the company’s valuable data. Networking these client-server systems across a wide area network was at best expensive and slow and at worst unattainable.

As the industry grew and evolved, it would frequently revert to what was old, cleaned up and presented as something new. One example is how the client-server systems were brought back into the data center, internetworked, and patched, with management and redundancy implemented; most importantly, the valuable data was collected and managed in a central area. Over time, the myriad proprietary, vendor-developed protocols shown in Figure 1.4 slowly became obsolete. This was a major development in the networking industry: TCP/IP (Transmission Control Protocol/Internet Protocol) became the standard communications protocol and allowed the many different computing systems to communicate using a common protocol.
Figure 1.4 Incompatible communications protocols

During the consolidation era, wide area networking evolved from slow dial-up lines and analog or subrate digital circuits, all leased from the local phone companies for private use, to an open public network, the Internet, with high-speed fiber-optic networks widely available. With the opening of the Internet to the world and the emergence of the World Wide Web, the world became interconnected, and the computing industry flourished, with the networking industry exploding right along with it. Once we became interconnected, innovation in applications opened up a whole new area of what computing can offer, all leading up to the cloud computing models of today.

Corporate data center models that provide compute and storage resources for the company are predominant today. These data centers can be either owned by the company or leased from a hosting facility, where many companies install equipment in secure data centers owned by a third party. As we discussed earlier in this chapter, traditional data centers are designed for peak workload conditions, with the servers unused or lightly used during times of low workload. Corporate data centers require a large up-front capital investment in building facilities, backup power, cooling systems, and redundant network connections, in addition to the racks of servers, network equipment, and storage systems. Also, because of business continuity needs, there are usually one or more backup data centers that can take over the workload should the primary data center go offline for any reason. In addition to the up-front costs, there are ongoing operational costs such as maintenance and support, power and cooling, a large staff of talented engineers to keep it all running, and usually a large number of programmers working on the applications that the company requires to operate its business.
There are many other costs, such as software licensing, that add to the expense of a company operating its own data centers.

This is not to say that modern corporate data centers do not use much of the same technology that cloud data centers use. They do. Server virtualization has been in use for many years, and large centralized storage arrays are found in private data centers just as they are in cloud facilities. With the current marketing hype surrounding cloud computing, many companies have rebranded their internal data centers as private clouds. This is valid, because they have exclusive use of the data center and implement automation and virtualization; many cloud technologies and processes are also found in corporate data centers.

Clearly the future of computing lies in the shared service model of the cloud, as the high growth rates of the large cloud providers show. The move to public clouds, shown in Figure 1.5, has been nothing less than a massive paradigm shift in the industry. The business case and financial models are too compelling to ignore. Cloud services can also be implemented very rapidly, and elastic computing technology lets them expand and contract on the fly. Companies can avoid the large capital expense of owning and operating their own data centers and focus on their core business while outsourcing their computing requirements to the cloud.

Figure 1.5 Cloud computing model

For smaller companies or startups, the low costs and advanced service offerings of the cloud companies are too attractive to pass up. Also, the time to market, or the time to get an operation up and running, is very short with cloud services, where advanced services can be implemented in hours or a few short days.
Technologies that were once available only to large corporations with massive information technology budgets are now available to the “little guys.”

We are just getting started with widespread cloud technologies and offerings. Judging by the recent and projected growth rates of the cloud service providers, the great migration to cloud computing has just begun, and we have a long way to go. The nature of the network engineer’s job is clearly going to change; how much, and in which direction, is open for debate. We feel that the silos separating engineering disciplines, such as networking, application support, storage, and the Linux and Windows teams, will blur together and that we must all become familiar with technologies outside of our core competencies. As you can see, what is old is now new again: the ability to take a piece of technology and virtualize it into many systems on the same hardware was the great enabler that got us to where we are today. Along the way, some amazing software was developed to implement and manage these new clouds. As you will see on your way to becoming CCNA Cloud certified, Cisco clearly intends to be a big player in this market and has developed a suite of cloud software and services to enable not only a single cloud but a group of clouds, the intercloud, to operate. This is a very exciting time for our industry!

What is cloud computing? Well, that can be a bit complicated and convoluted, given all of the marketing hype surrounding a technology area with such a high growth rate and the attention of the world. A cynic would note that everything from the past has been relabeled “cloud this and cloud that”: anything that was connected to the Internet and offered to the public now seems to be called a cloud service. The cloud marketplace is also very large and growing at a fast pace, and because of this rapid expansion, this new industry has many sections and subsections.
There are many different types of clouds and services offered. Applications and technologies that have been in place for many years now have cloud attached to their names, while at the same time new cloud service offerings seem to appear daily, with a constant stream of new product announcements.

Cloud computing is essentially outsourcing data center operations, applications, or a section of operations to a provider of computing resources, often called a cloud company or cloud service provider. The consumer of cloud services pays either a monthly charge or according to how much of the service is used. You will explore many different models and types of cloud computing in this book.

While there may never be agreement on a clear and concise definition, you can turn to the organization that keeps track of these things, the National Institute of Standards and Technology (NIST), as the authoritative source. NIST SP 800-145 is the main source of cloud computing definitions. NIST defines cloud computing as:

…a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. This cloud model is composed of five essential characteristics, three service models, and four deployment models.

That definition is to the point and accurate, with no spin and just the facts, which is something we as engineers really appreciate. Broadly, there seems to be agreement that the cloud is an on-demand, self-service computing service, and that is the most common definition. Of course, behind this definition is so much technology that it is going to make your head spin and sometimes overwhelm you, but that’s the business. In this book, you will investigate all of the models and characteristics and then dig into the technologies that make all of this work.
When you are done, you will be proud to call yourself CCNA Cloud certified!

There are many definitions of cloud computing. Some are accurate, and many are just spins on older, already existing offerings and technologies. You are advised to understand the big picture of cloud computing and then dig into the finer details, without getting caught up in the excitement surrounding cloud computing. It is our job to make it work, not to keep it polished for the whole world to see.

While the definition of the cloud can be very generic and broad, you can get granular with the different types of service models and deployment models. These will be explored in detail in the following two chapters.

Service models define basic cloud offerings by what the cloud provider supplies and what you are responsible for as the cloud customer. There are three main service categories defined (and many more that do not fall into these groups): Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

With Infrastructure as a Service, as shown in Figure 1.6, the cloud provider generally provides all the systems that make up a data center deployment, such as the server platform, storage, and network, but leaves the operating system and application responsibilities to the customer. This had been the predominant cloud service model until recently, and it gives the customer complete control of, and responsibility for, the operating system and applications.

Figure 1.6 Infrastructure as a Service

The Platform as a Service model, shown in Figure 1.7, moves one step up the stack: the cloud provider takes on operating system responsibilities. That is to say, the cloud company is responsible for loading and maintaining either a Microsoft Windows operating system variant or one of the many different releases of Linux.
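The split of responsibilities among the three service models named above, including Software as a Service, which is described shortly, can be captured in a small lookup. This Python sketch uses a simplified, assumed five-layer view (network, storage, server, operating system, application) drawn from this chapter’s descriptions; the layer list and names are illustrative, and real providers draw these boundaries in more detail.

```python
from enum import Enum


class Party(Enum):
    PROVIDER = "cloud provider"
    CUSTOMER = "customer"


# Simplified deployment layers, bottom to top (an assumed model for illustration).
LAYERS = ["network", "storage", "server", "operating system", "application"]

# Index of the first layer the customer manages; every layer below it belongs to the provider.
CUSTOMER_STARTS_AT = {
    "IaaS": LAYERS.index("operating system"),  # customer runs the OS and applications
    "PaaS": LAYERS.index("application"),       # provider also takes over the OS
    "SaaS": len(LAYERS),                       # provider also supplies the application
}


def responsibility(model: str) -> dict[str, Party]:
    """Map each layer to the party responsible for it under the given service model."""
    boundary = CUSTOMER_STARTS_AT[model]
    return {
        layer: Party.PROVIDER if i < boundary else Party.CUSTOMER
        for i, layer in enumerate(LAYERS)
    }


for model in ("IaaS", "PaaS", "SaaS"):
    customer_layers = [layer for layer, party in responsibility(model).items()
                       if party is Party.CUSTOMER]
    print(f"{model}: customer manages {', '.join(customer_layers) or 'none of these layers'}")
```

Each step up the stack simply moves the boundary one layer higher, which is why the three models can be expressed as a single table of cut-off points.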
With the Platform as a Service model, the cloud customer is responsible for the applications running in the cloud, and the provider takes care of all the underlying infrastructure and operating systems.

Figure 1.7 Platform as a Service

At the top of the stack is the Software as a Service model, as shown in Figure 1.8, which is just what it sounds like: the cloud company provides a complete package, including the application, which can be any of a wide variety of solutions, such as database, enterprise resource planning, or software development applications, or almost any mainstream application currently running in corporate data centers.

Figure 1.8 Software as a Service

Cloud deployment models address the larger topic of where the cloud is deployed and who is responsible for its operations. There are four main cloud deployment models: the public cloud, the private cloud, the community cloud, and finally the hybrid cloud, as illustrated in Figure 1.9. You will learn the details of these models in Chapter 3, but you will get an overview in this chapter.

Figure 1.9 Cloud deployment models

The public cloud, illustrated in Figure 1.10, is probably the most widely deployed and discussed. It is usually what we think of when we hear the term cloud being tossed around. This includes the cloud companies that make up the intercloud, in other words, the major players in the market, such as Amazon Web Services, Microsoft Azure, and Google Cloud Platform, along with many other companies. In the public cloud, a company offers cloud computing services to the public in the utility model covered earlier in the chapter.

Figure 1.10 Multiple organizations sharing a public cloud service

The private cloud consists predominantly of computing services that are privately owned and operated and are not open to the public. Corporate data centers fit into the private cloud definition.
Private clouds are owned by a single organization, such as a corporation or a third-party provider (see Figure 1.11). Private clouds can be either on-premises or off-site at a remote hosting facility with dedicated hardware for the private cloud.

Figure 1.11 A single organization accessing a private cloud

A community cloud, as shown in Figure 1.12, is designed around a community of interest and shared by companies with similar requirements. For example, companies in the healthcare or financial services markets have similar regulatory requirements that must be adhered to. A cloud offering can be designed around these requirements and offered to that specific marketplace or industry.

Figure 1.12 The community cloud based on common interests or requirements

Finally, there is the hybrid cloud model, which is the interconnection of the various cloud models defined earlier, as illustrated in Figure 1.13. If a corporation has its own private cloud but is also connected to the public cloud for additional services, that would constitute a hybrid cloud. A community cloud used for specific requirements and also connected to the public cloud for general processing needs would also be considered a hybrid cloud.

Figure 1.13 The hybrid is a combination of clouds.

As you will learn, Cisco’s intercloud technologies enable the hybrid cloud model. This will be investigated in detail in the second part of this book, which covers the Cisco Cloud Administration (CLDADM) 210-455 exam.

This section provides an overview of the modern data center and its business models, deployment models, and support services. It also discusses how to design a data center for the anticipated workload. Since the topic of data center design and operations could fill many books, we will not attempt to go into great detail, as it is not required for the Understanding Cisco Cloud Fundamentals exam 210-451.
However, it is important to understand the layout and basic design of the modern data center, which is what we will accomplish in this section.

Modern data centers share a common design structure, whether the data center is privately owned, a hosting facility, or owned by a cloud company. We will start by taking a closer look at their basic requirements. Data centers consume a rather large amount of electrical power and need access to high-speed communications networks. Since they may contain sensitive or critical data, both data security and physical security are requirements. From those fundamental needs, we will look at the various components of the data center, to give you a good understanding of all the pieces that come together to make a fully functioning modern data center.

It is a truism that data centers are real power sinks! The servers, storage systems, network gear, and supporting systems all require electricity to operate. Most data centers tend to be quite large, to obtain the economies of scale that make them more cost-effective. This means that being connected to a power grid with sufficient capacity and stability is critical. Plans must also be made for the eventuality of a power failure. What do you do then? A modern data center can generate its own power if the main power grid from the local utility fails. There may also be massive racks of batteries or other stored-power systems to power the data center during the gap between the moment the power grid fails and the moment the local power generation systems come online.

Data must move not only between the systems inside the data center, which is commonly called east-west traffic, but also to and from the outside world, which is referred to as north-south traffic. This requires the ability to connect to high-speed data networks.
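The east-west versus north-south distinction can be illustrated by classifying a flow according to whether both of its endpoints sit inside the data center. This Python sketch uses the standard library’s ipaddress module; the internal prefixes are hypothetical, and treating “inside the data center” as “inside these address ranges” is a simplifying assumption made for illustration.

```python
import ipaddress

# Hypothetical address ranges used inside one data center.
DATA_CENTER_PREFIXES = [
    ipaddress.ip_network("10.0.0.0/16"),
    ipaddress.ip_network("172.16.0.0/20"),
]


def is_internal(address: str) -> bool:
    """True if the address falls within one of the data center's prefixes."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in DATA_CENTER_PREFIXES)


def classify_flow(src: str, dst: str) -> str:
    """East-west if both endpoints are inside the data center; otherwise north-south."""
    if is_internal(src) and is_internal(dst):
        return "east-west"
    return "north-south"


print(classify_flow("10.0.1.20", "10.0.8.5"))     # east-west: web server to database server
print(classify_flow("203.0.113.7", "10.0.1.20"))  # north-south: Internet user to web server
```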
These networks can be owned and operated by the same company that owns the data center or, more likely, by a service provider that specializes in networking services. They tend to be almost exclusively fiber-optic, and it is preferable to have more than one entry point into the data center for the fiber to avoid a single point of failure. You will also notice that two or more service providers are usually connected, so that network connectivity is maintained if one provider’s network experiences problems and traffic must fail over to the backup provider.

With power and communications addressed, you will find that data centers are located in secure areas with security gates, cameras, and guards protecting the physical building and its grounds. Inside the data center you will find distribution systems for power and communications and an operations center that is tasked with monitoring and maintaining operations. The data center will have a raised floor of removable tiles, with cable trays underneath for the power and sometimes communications cables that interconnect the racks inside the computer room. There will be rows of equipment cabinets to house all of the servers, storage systems, switches, routers, load balancers, firewalls, monitoring systems, and a host of other hardware needed for operations. In addition, all of the electronics in the data center generate a lot of heat. This is not trivial and must be addressed with large cooling systems, or chillers, as we like to call them, that remove the heat from the data center to protect the electronics inside the enclosures. While no two data centers are exactly the same, these basic components make up the modern data centers found around the world.

Cloud-based business models can be distilled into four basic models that IT channel companies are adopting.
While there is, of course, a large variety of companies in the cloud business space, each with its own way of doing business, we will look at the more common models. The “build it” model is a natural progression in the IT industry: helping customers build a private cloud. There are also many companies that have built very large public cloud offerings. Providers in the “provide or provision” category can either resell or relabel a vendor’s cloud offering; they can also operate their own data center and sell homegrown cloud services. Many companies follow the “manage and support” model, which includes ongoing management and support of cloud-based services, such as remote monitoring and management and security offerings. With the “enabling or integration” model, providers work with customers in a professional services role to assist with the business and technical aspects of their startup and ongoing cloud operations.

Data center deployment models encompass subjects such as usable square footage, the density of server and storage systems, and cooling techniques. In the cloud it is common to use a regional deployment model and to group multiple data centers in each region into availability zones. A cloud service provider will offer regions in which customers can place their deployments. For example, there may be USA Central, Brazil, and China East regions from which you select where to deploy your cloud applications. Each region is segmented into multiple availability zones that allow for redundancy. An availability zone is usually a complete and separate data center in the same region. Should one zone fail, cloud providers can fail over to another availability zone for continuity and to avoid a loss of service.

Data center operations concern the workflow and processes conducted in the data center. This includes both compute and noncompute operations specific to the data center; the operations include all the processes needed to maintain the data center.
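To make the region and availability zone arrangement described above more concrete, here is a minimal Python sketch of zone failover. The class names, the zone names, and the simple “first healthy zone” policy are hypothetical illustrations, not any provider’s API; real failover also involves health checking and traffic redirection that this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class AvailabilityZone:
    """One physically separate data center inside a region (hypothetical model)."""
    name: str
    healthy: bool = True


@dataclass
class Region:
    """A provider region made up of several availability zones."""
    name: str
    zones: list = field(default_factory=list)

    def active_zone(self, preferred: str) -> AvailabilityZone:
        """Serve from the preferred zone while it is healthy; otherwise fail over
        to the first healthy zone in the same region."""
        for zone in self.zones:
            if zone.name == preferred and zone.healthy:
                return zone
        for zone in self.zones:
            if zone.healthy:
                return zone
        raise RuntimeError(f"no healthy availability zone left in {self.name}")


# Hypothetical region with two zones.
region = Region("usa-central", [AvailabilityZone("usa-central-1"),
                                AvailabilityZone("usa-central-2")])
print(region.active_zone("usa-central-1").name)  # usa-central-1
region.zones[0].healthy = False                   # simulate a zone outage
print(region.active_zone("usa-central-1").name)  # usa-central-2
```

Because the zones share a region, failing over keeps the workload geographically close to its users while still placing it in a separate data center.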
Infrastructure support includes the ongoing installation, cabling, maintenance, monitoring, patching, and updating of servers, storage, and networking systems. Security is an important component of operations, and there are both physical and logical security models; security operations include all of the processes, tools, and systems used to maintain both. Power and cooling operations ensure that sufficient power and cooling are reliably available in the data center. Of course, there will also be a management component that includes policy development, monitoring, and enforcement.

The data center must be scaled not only for the present but also for the anticipated workload. This can be tricky, as there are many unknowns. Server technology is constantly evolving, allowing you to do more processing in less rack space, and the silicon used in the physical devices has become more power efficient as well, enabling more processing per unit of power. When designing a data center, square footage, power, cooling, communications, and connections must all be considered.

In this section, we will compare and contrast what makes the cloud model different from the traditional corporate computing model. The traditional corporate data center is built with enough additional computing capacity purchased and available to handle times of peak workload. This is terribly inefficient, as it requires a significant up-front investment in technology that may rarely get used but must be available during the times it is required. Also, the lead time from concept to deployment in the data center can be months or even years. The difference with the cloud is that, in theory at least, the resources are always available and just waiting to be turned on at the click of an icon with your mouse. The cloud computing model, then, is that of a utility, as we have discussed.
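The inefficiency of provisioning for peak workload can be shown with a little arithmetic. The Python sketch below compares owning enough servers for the busiest hour with paying per server-hour actually used. The demand profile and the price of 1.0 per server-hour are made-up numbers, and the sketch assumes the same hourly price in both models, which real pricing seldom matches.

```python
def peak_provisioned_cost(hourly_demand, price_per_server_hour):
    """Traditional model: keep enough servers for the peak hour running every hour."""
    return max(hourly_demand) * len(hourly_demand) * price_per_server_hour


def pay_per_use_cost(hourly_demand, price_per_server_hour):
    """Utility model: pay only for the servers actually needed in each hour."""
    return sum(hourly_demand) * price_per_server_hour


# Hypothetical day: 2 servers for 20 quiet hours, 10 servers for a 4-hour peak.
demand = [2] * 20 + [10] * 4
print(peak_provisioned_cost(demand, 1.0))  # 240.0
print(pay_per_use_cost(demand, 1.0))       # 80.0
```

In this made-up profile, two-thirds of the peak-provisioned capacity is paid for but sits idle.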
You order only what you need and pay for only what you use. The cloud model has many advantages over traditional data centers in that there is a huge, and constantly growing, number of advanced offerings that would never have been possible for most small and medium-sized businesses, or in some cases even large ones, to implement. If you are a business located in the United States but have many customers in Asia, you may want to store your web content in Japan, for example, to provide faster response times in Asia. In a traditional data center model, this would probably not be practical. With such services, your cloud provider may have access points in many Asian countries that store your web content locally, giving fast response times because requests no longer have to travel all the way to the United States to be served by your web server, with the content then returned to Asia. Another advanced and costly capability that is out of the realm of possibility for most companies is availability zones; for example, if one of your cloud provider’s data centers goes down, your cloud services can automatically shift to another availability zone, and the people accessing your site may not even notice what happened. There are many other differences between the traditional data center model and the cloud as it is today and as it continues to evolve. We will explore these differences and contrast the advantages of cloud computing as we go along in this book.

In this section, you will learn about the common characteristics that make up a cloud computing operation. We will start with the core principle of the cloud model, which is on-demand computing, and then move on to the ability to automatically expand and contract by discussing the concept of elasticity.
We will look at several commonly deployed scaling techniques, including whether to scale up or scale out. The concept of resource pooling will be explored with a look at the various types of pools, such as CPUs, memory, storage, and networking. Metered services will be discussed, and you will learn that, with the ability to measure the consumed services, the cloud company can offer many different billing options. Finally, we will end this section by exploring the methods commonly used to access the cloud remotely.

On-demand cloud services allow the customer to access a self-service portal, usually with a web browser or application programming interface (API), and instantly create additional servers, storage, processing power, or any other services as required. If the computing workload increases, additional resources can be created and applied as needed. On-demand allows you to consume cloud services only as needed and scale back when they are no longer required. For example, if your e-commerce site is expecting an increased load during a sales promotion that lasts for several weeks, on-demand services can be used to provision additional resources for the website only during that time frame, and when the workload returns to normal, the resources can be removed or scaled back. You can also refer to on-demand self-service as a just-in-time service because it allows cloud services to be added as required and removed based on the workload. On-demand services are entirely controlled by the cloud customer, and with cloud automation systems, the customer’s request will be deployed automatically in a short amount of time.

Elasticity provides for on-demand provisioning of resources in near real time. The ability to add and remove, or to increase and decrease, computing resources is called elasticity. Cloud resources can be storage, CPUs, memory, and even servers.
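Elasticity driven by utilization thresholds can be sketched as a small control loop. In this hypothetical Python policy, an instance is added when average CPU utilization rises above an upper threshold (85 percent, the example threshold used later in this chapter) and removed when it falls below a lower threshold. The 30 percent lower bound, the instance limits, and the reading trace are assumptions chosen for illustration.

```python
def desired_instances(current, cpu_percent,
                      scale_out_at=85.0, scale_in_at=30.0,
                      minimum=1, maximum=10):
    """Return the instance count after one evaluation of a threshold policy."""
    if cpu_percent > scale_out_at and current < maximum:
        return current + 1   # above the upper threshold: add capacity
    if cpu_percent < scale_in_at and current > minimum:
        return current - 1   # below the lower threshold: release capacity
    return current           # inside the defined range: leave it alone


# Made-up trace of average CPU readings, one per evaluation interval.
readings = [40, 90, 92, 88, 60, 25, 20, 20]
count = 2
history = []
for cpu in readings:
    count = desired_instances(count, cpu)
    history.append(count)
print(history)  # [2, 3, 4, 5, 5, 4, 3, 2]
```

The gap between the two thresholds keeps the policy from adding and removing an instance on every small fluctuation. A real cloud automation system would also wait for new instances to come online and for the metric to reflect them before evaluating again; the fixed trace here ignores that feedback.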
When the cloud user’s deployment experiences a shortage of a resource, the cloud automation systems can automatically and dynamically add the needed resources. Elasticity is done “on the fly” as needed and is different from provisioning servers with added resources that may be required in the future. Elasticity allows cloud consumers to scale up automatically as their workload increases and then have the cloud automation systems remove the added resources after the workload subsides. With cloud management software, performance and utilization thresholds can be defined, and when these thresholds are reached, the cloud automation software can automatically add resources to keep the metrics within a defined range. When the workload subsides, the added resources will be automatically removed or scaled back. With elastic computing, there is no longer any need to deploy servers and storage systems designed to handle peak loads, systems that may otherwise sit idle during normal operations. Now you can size the cloud infrastructure for the normal load and automatically expand as needed when the occasion arises.

When additional computing capacity is needed in the cloud, the scaling-up approach is one in which larger and more powerful servers replace a smaller system, as shown in Figure 1.14. Instead of adding more servers, the scale-up model replaces the smaller system with a larger one.

Figure 1.14 Scaling up increases the capacity of a server.

You will learn throughout this book that there is a lot more to cloud capacity than just raw compute power. For example, a system may have more than enough CPU capacity but instead need additional storage read/write bandwidth if it is running a database that needs constant access with fast response times.
Another example of scaling up would be a web server that sends large graphics files to remote connections and constantly saturates its LAN bandwidth, creating the need for more network I/O bandwidth. Cloud service providers offer many options when selecting services to meet your requirements, and they offer multiple tiers of capacity to allow for growth. For example, there are many different virtual machine options available in the cloud, some offering scalable compute power and others designed for storage-intensive applications, graphics processing, or high network I/O.

Scaling out is the second, and arguably more common, approach to adding capacity in the cloud, as shown in Figure 1.15. The scale-out design incrementally adds resources to your existing cloud operations instead of replacing them with a larger and more powerful system.

Figure 1.15 Scaling out by adding additional servers to accommodate increased workloads

For example, if the cloud web servers are experiencing a heavy load and the CPU utilization exceeds a predefined threshold of, say, 85 percent, additional web servers can be added to the existing ones to share the workload. Multiple web servers can serve the same web domain by placing them behind load balancers that spread the connections across the servers.

There are many examples where elasticity can be a valuable cloud feature to implement to save money and maintain performance levels. It is desirable to design the cloud computing resources for your anticipated day-to-day needs and then add resources as required. A company that processes data for business intelligence will often have a large processing job that would take a very long time to run on its available servers, which would then sit idle until the process repeats.
In this case, many servers, sometimes in the hundreds, can be provisioned so that the job runs in a timely manner, and when it completes, the servers are removed and the company is no longer charged for their use. Many e-commerce retailers experience heavy traffic during the holiday season and then see their web traffic drop significantly for the rest of the year. It makes no sense to keep paying for holiday-season web capacity for the rest of the year. This is a prime use case for cloud elasticity. As you are probably noticing, you can either scale up or scale out, and the provisioning can be done manually through the web configuration portal or automatically using automation tools.

Resource pooling is the term used to describe how the cloud service provider allocates resources into a group, or pool, and then makes these pools available to a multitenant cloud environment. The pooled resources are then dynamically allocated and reallocated as demand requires. Resource pooling takes advantage of virtualization technologies to abstract what used to be physical systems into virtual allocations or pools. Resource pooling hides the physical hardware from the virtual machines and allows many tenants to share the available resources, as shown in Figure 1.16.

Figure 1.16 Resource pooling

A physical server may have 256 CPU cores and 1,024GB of RAM on the motherboard. The CPU and memory can be broken into pools, and these pools can be allocated to individual groups that are running virtual machines on the server. If a virtual machine needs more memory or CPU resources, with scaling and elasticity these can be drawn from the pool. Since processing power, memory, storage, and network bandwidth are finite resources, pooling allows them to be used more efficiently, because they are shared among multiple users who access them as required.
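Resource pooling on a single host can be modeled as a shared count of free capacity that VMs draw from and return to. The Python sketch below uses a server with 256 cores and 1TB (1,024GB) of RAM; the class name and VM names are hypothetical. It models strict partitioning only; hypervisors commonly allow some CPU oversubscription, which this sketch leaves out.

```python
class ResourcePool:
    """A finite pool of CPU cores and RAM shared by many tenants' VMs."""

    def __init__(self, cores: int, ram_gb: int) -> None:
        self.free_cores = cores
        self.free_ram_gb = ram_gb
        self.allocations: dict = {}  # VM name -> (cores, ram_gb)

    def allocate(self, vm: str, cores: int, ram_gb: int) -> bool:
        """Carve resources out of the pool for a VM; refuse if the pool is exhausted."""
        if vm in self.allocations or cores > self.free_cores or ram_gb > self.free_ram_gb:
            return False
        self.free_cores -= cores
        self.free_ram_gb -= ram_gb
        self.allocations[vm] = (cores, ram_gb)
        return True

    def release(self, vm: str) -> None:
        """Return a VM's resources to the pool so other tenants can use them."""
        cores, ram_gb = self.allocations.pop(vm)
        self.free_cores += cores
        self.free_ram_gb += ram_gb


pool = ResourcePool(cores=256, ram_gb=1024)
print(pool.allocate("tenant-a-web1", cores=64, ram_gb=256))  # True
print(pool.allocate("tenant-b-db1", cores=200, ram_gb=512))  # False: only 192 cores left
pool.release("tenant-a-web1")
print(pool.allocate("tenant-b-db1", cores=200, ram_gb=512))  # True
```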
A physical, nonvirtualized server running a single operating system uses the processing power of the CPUs installed on its motherboard directly. A virtualized server, by contrast, can have tens or even hundreds of virtual machines all operating on the same server at the same time. The virtualization software’s job is to allocate the physical, or hard, resources on the motherboard to the virtual machines running on it. This is the role of the hypervisor: it virtualizes the physical CPUs into virtual CPUs, and the VMs running on the hypervisor are allocated these virtual CPUs for processing. Pools of CPUs can be created using administrative controls, and these pools are consumed by the virtual machines. When a VM is created, resources are defined that determine how much CPU, RAM, storage, and LAN capacity it will consume. These allocations can be dynamically expanded up to the hard quota limits set by the cloud provider’s offerings.

Memory virtualization and its allocation to the virtual machines use the same concepts that we discussed in the previous section on virtualizing CPUs. There is a total and finite amount of RAM installed in a bare-metal, or physical, server. This RAM is virtualized by the hypervisor software and allocated to the virtual machines. As with the allocation of processing resources, you can assign a base amount of RAM per virtual machine and dynamically increase the memory available to the VM based on the needs and limits configured for it. When the VM’s operating system consumes all the available memory, it will begin to use storage in place of RAM. This swap file, as it is called, is undesirable because it results in poor performance.
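The base-plus-limit memory allocation described above, and the point at which a VM is pushed into its swap file, can be sketched as a short function. This is a simplified model that treats any demand beyond the allocated RAM as swap; real operating systems page memory in more nuanced ways. The function name and parameters are hypothetical.

```python
def memory_outcome(needed_gb, base_gb, limit_gb, pool_free_gb):
    """Return (RAM allocated to the VM in GB, GB of demand that spills to swap).

    The VM always keeps its base allocation. Growth beyond the base is capped
    both by the VM's configured limit and by the RAM still free in the host pool.
    """
    ceiling = min(limit_gb, base_gb + pool_free_gb)
    allocated = max(base_gb, min(needed_gb, ceiling))
    swapped = max(0, needed_gb - allocated)
    return allocated, swapped


print(memory_outcome(needed_gb=6, base_gb=4, limit_gb=16, pool_free_gb=64))   # (6, 0)
print(memory_outcome(needed_gb=24, base_gb=4, limit_gb=16, pool_free_gb=64))  # (16, 8)
print(memory_outcome(needed_gb=12, base_gb=4, limit_gb=16, pool_free_gb=2))   # (6, 6)
```

The second call hits the VM’s configured limit; the third is starved by the host’s pool even though the VM’s own limit still has room.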
When configuring a VM, it is important to consider that storage space must be allocated for the swap file and that the storage latency of the swap file will have a negative impact on the performance of the server.

We will cover storage systems in great detail in later chapters; however, we will explore storage allocations in the cloud here to gain a more complete understanding of basic cloud operations. Storage systems are usually separate from the physical servers, both in the cloud and in private data centers. They are large arrays of disks and controllers that the servers access over dedicated storage networks. As you can imagine, these storage arrays are massive and can store petabytes of data on each system, and there can be tens or even hundreds of them in a large data center. Each physical server usually has few disk drives installed for use by the VMs running on it, and cloud servers often contain no hard drives at all, accessing storage remotely. With these large storage systems external to the servers and connected over a storage area network (SAN), the design of the SAN and storage arrays is critical for server and application performance. For optimal performance, the systems must be engineered to avoid high read and write latency on the drives and contention on the storage network. To alleviate performance issues, cloud providers use enterprise-grade storage systems with high disk RPM rates, fast I/O, sufficient bandwidth to the storage controller, and SAN controller interfaces fast enough to handle the storage traffic load across the network. Cloud service providers offer a wide variety of storage options to meet the requirements of applications, ranging from fast permanent storage all the way to offline backup storage that can take hours or days to retrieve.
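The range of storage options, from fast permanent storage to offline backup, amounts to a trade-off between retrieval time and price. The Python sketch below picks the cheapest tier that still meets an application’s retrieval-time requirement. The tier names, latencies, and prices are invented for illustration and do not describe any particular provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageTier:
    name: str
    retrieval_seconds: float   # typical wait before data is available
    price_per_gb_month: float  # hypothetical price


# Hypothetical tiers, from fast permanent storage to offline backup storage.
TIERS = [
    StorageTier("fast-block", 0.001, 0.10),
    StorageTier("standard-object", 0.1, 0.02),
    StorageTier("offline-archive", 12 * 3600, 0.002),
]


def cheapest_tier(max_retrieval_seconds: float) -> StorageTier:
    """Pick the lowest-priced tier that still meets the retrieval-time requirement."""
    candidates = [t for t in TIERS if t.retrieval_seconds <= max_retrieval_seconds]
    if not candidates:
        raise ValueError("no storage tier is fast enough")
    return min(candidates, key=lambda t: t.price_per_gb_month)


print(cheapest_tier(0.01).name)       # fast-block
print(cheapest_tier(1).name)          # standard-object
print(cheapest_tier(2 * 86400).name)  # offline-archive
```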
Storage allocations can also be temporary, deleted when the virtual machine is powered off. By using a multitiered storage model, cloud providers offer storage services priced for the needs of their customers. One major advantage of designing the cloud storage arrays to be remote from the virtual machines they support is that the cloud management applications can move VMs from one hypervisor to another, either inside a cloud data center or even between data centers. The VM moves and continues to access its storage over the storage network. This can even be accomplished while the application continues to operate as it is being moved between physical servers. Centralized storage is an enabler of this technology and is useful for maintenance, cloud bursting, fault tolerance, and disaster recovery purposes. As with the CPUs and memory discussed earlier, storage systems are allocated into virtual pools, and the pools are then allocated for dynamic use by the virtual machines.

Networking services can be virtualized just as CPU cores and storage facilities are. Networking is virtualized in many different aspects, as the Understanding Cisco Cloud Fundamentals exam covers extensively. In this section, we will briefly discuss the virtualization of LAN network interface cards (NICs) and switches in servers. NICs and switchports are virtualized and then allocated to virtual machines. A typical server in the cloud data center is connected to an external physical LAN switch over multiple high-speed Ethernet LAN interfaces. These interfaces are grouped together, or aggregated, into channel groups for additional throughput and fault tolerance. The server’s LAN interfaces connect to a virtual switch running as a software application on the hypervisor. Each VM has one or more connections to this virtual switch using its virtual NIC (vNIC).
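A virtual switch is software that forwards Ethernet frames between the VMs’ vNICs and the server’s physical uplinks. The Python sketch below models a generic MAC-learning switch with hypothetical port names, where “uplink” stands in for the aggregated physical interfaces. It is a teaching model only: production virtual switches differ in detail (some know each vNIC’s MAC address from the hypervisor’s configuration rather than learning it from traffic), and VLANs and link-aggregation hashing are omitted.

```python
class VirtualSwitch:
    """Minimal learning switch connecting VMs' vNICs and a physical uplink."""

    def __init__(self, ports: list) -> None:
        self.ports = ports
        self.mac_table: dict = {}  # MAC address -> port it was learned on

    def receive(self, in_port: str, src_mac: str, dst_mac: str) -> list:
        """Learn the source MAC, then return the port(s) the frame is sent out of."""
        self.mac_table[src_mac] = in_port
        out = self.mac_table.get(dst_mac)
        if out is not None and out != in_port:
            return [out]  # known destination on another port: forward
        if out == in_port:
            return []     # destination is on the arrival port: filter the frame
        # Unknown (or broadcast) destination: flood to every other port.
        return [p for p in self.ports if p != in_port]


vswitch = VirtualSwitch(["vnic-vm1", "vnic-vm2", "uplink"])
print(vswitch.receive("vnic-vm1", "00:00:00:00:00:01", "00:00:00:00:00:02"))
# ['vnic-vm2', 'uplink']  (destination not yet learned, so the frame is flooded)
print(vswitch.receive("vnic-vm2", "00:00:00:00:00:02", "00:00:00:00:00:01"))
# ['vnic-vm1']  (vm1's MAC address was learned from the first frame)
```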
The LAN bandwidth and capacity are then allocated to each VM, as was done with the processing, memory, and storage discussed earlier. By using multiple physical NICs connected to multiple external switches, the network can be designed to be highly fault tolerant. However, since LAN bandwidth is a finite resource just like the other resources on the server, it is shared among all VMs running on the server.

A cloud provider will meter, or measure, the usage of cloud resources with its monitoring systems. Metering collects usage data, which is valuable for tracking system utilization for future growth, and the data allows us to know when to use elasticity to add or remove resources and to scale up or down in the day-to-day operation of the cloud. This metered service data also feeds billing and reporting systems that can be viewed in the cloud management portal, or dashboard, as it is commonly called. Metering is frequently used to measure resource usage for billing purposes. Examples are the amount of Internet bandwidth consumed, storage traffic over the SAN, gigabytes of storage consumed, the number of DNS queries received over a period of time, and the number of database queries executed. There are many examples and use cases for metering services in the cloud. Many cloud services can be purchased to run for a fixed amount of time; for example, batch jobs can be run overnight on metered systems, with VMs brought online to run these jobs at a certain time and shut down when the jobs are completed.

Connections to the cloud provider are generally made over the Internet. Since cloud data centers are remote from the users accessing the services, there are multiple methods used to connect to the cloud. The most common method is to use a secure, encrypted connection over the public Internet. Web browsers can use SSL/TLS connections over TCP port 443, which is commonly known as an HTTPS connection.
This allows a web browser to access the cloud services securely from any remote location that has an Internet connection. If the cloud customer needs to connect many users from a company office or its own data center, a VPN connection is commonly implemented from a router or firewall to the cloud, offering network-to-network connectivity over the encrypted VPN tunnel. For high-bandwidth requirements, a common solution is to connect through an interexchange provider that hosts direct high-speed network connections to the cloud. The customer can then install a high-speed connection from its facility to the interexchange provider; at the exchange data center, a direct interconnection between the cloud provider and the corporate network is made. The direct connect model also supports cloud options such as cloud bursting and hybrid cloud designs in which the corporate cloud and the public cloud provider’s data centers are directly connected.

The ability to take a software package and, by using segmentation, share it to serve multiple tenants or customers is called multitenancy. Multitenancy is a cost-effective approach because the costs of the application, its licenses, and its ongoing support agreements are shared among the multiple customers accessing the application hosted in the cloud. In a multitenant application deployment, a dedicated share of the application is allocated to each customer, and each customer’s data is kept private from the other companies accessing the same application. The multi-instance model is a similar approach, with the difference being that each consumer has exclusive access to its own instance of the application instead of one single application being shared.

In this introductory chapter, we explored the history and evolution of computing, from the early days of computing to where we are today and where the industry is headed in the future.
We then explored how cloud computing differs from the traditional models, with the evolution to the utility model found in cloud computing. Virtualization was introduced, and you learned about the role it plays in the cloud. You then explored the many definitions of cloud computing, cloud growth, how to migrate operations from traditional data centers to the cloud, and how the role of the CCNA Cloud engineer will fit into working with the cloud models. The basic cloud models of public, private, hybrid, and community were each introduced and discussed. We then introduced data center business, deployment, and operational models and discussed how to design a data center for the anticipated workload. This chapter concluded with a look at common cloud characteristics, such as on-demand self-service, elasticity, scaling, and pooling. These characteristics are important base concepts that will give you a foundation for discussions in future chapters. Keep the concepts covered in this chapter in mind; they provide a structure that you will build on as you progress on your journey to becoming a CCNA Cloud certified professional.

Understand the basic terms and concepts of cloud computing service models. Study the service models of IaaS, PaaS, and SaaS, and understand their differences and what each model includes and excludes.

Understand the basic concepts of virtualization. Know what virtualization is and how it is a key enabler of cloud computing. We will cover this in greater detail in later chapters.

Know the primary cloud deployment models. You will be expected to identify what public, private, community, and hybrid clouds are, the differences between them, and where each is best used.

Understand the concepts of resource pooling. Be able to identify the pooled resources in a virtualized cloud, which include CPU, memory, storage, and networking. This topic will be covered in greater detail in later chapters.
Identify elasticity and scaling terminology and concepts. Cloud elasticity is the ability to add and remove resources dynamically in the cloud. Closely related to elasticity is scaling, where you can scale up your compute resources by moving to a larger server or scale out by adding additional servers.

Fill in the blanks for the questions provided in the written lab. You can find the answers to the written labs in Appendix B.

Name the three NIST service models of cloud computing.

1. _____________
2. _____________
3. _____________

Name the four main cloud deployment models.

1. _____________
2. _____________
3. _____________
4. _____________

The following questions are designed to test your understanding of this chapter’s material. You can find the answers to the questions in Appendix A. For more information on how to obtain additional questions, please see this book’s Introduction.

1. What model of computing allows for on-demand access without the need to provide internal systems and purchase technology hardware?
2. When elastic cloud services are required, what model implements larger, more powerful systems in place of smaller virtual machines?
3. Hypervisors and virtual machines implement what networking technologies in software? (Choose two.)
4. What virtualization technology allocates pools of memory, CPUs, and storage to virtual machines?
5. What is an essential component of the cloud?
6. What is the ability to segment a software application to serve multiple tenants called?
7. A cloud data center must be designed with what in mind?
8. Memory pooling allows for the dynamic allocation of what resource?
9. Which of the following are considered valid cloud deployment models? (Choose three.)
10. The public cloud provides which of the following? (Choose three.)
11. Which terms are NIST-defined cloud service models? (Choose three.)
12. What three characteristics are common to the cloud? (Choose three.)
13. When additional cloud capacity is required, what model adds virtual machines?
14. Storage systems are interconnected to the virtual servers on the cloud using what communications technology?
15. Tipofthehat.com is an e-commerce company that hosts its applications in its private cloud. However, during the busy holiday season, because of increased workload, it utilizes external cloud computing capacity to meet demand. What cloud deployment model is Tipofthehat.com using?
16. What are critical facilities of a modern data center? (Choose all that apply.)
17. What is an example of a private dedicated connection to the cloud?
18. What technology was instrumental in the advent of cloud computing?
19. Which is not a valid cloud deployment model?
20. Cloud service providers offer high availability by using what two data center deployments? (Choose two.)

An Introduction to Cloud Computing

How Cloud Computing Is Different from Traditional Computing

Computing as a Utility

The Role of Virtualization

What Cloud Computing Offers That Is New

The Growth of Cloud Computing

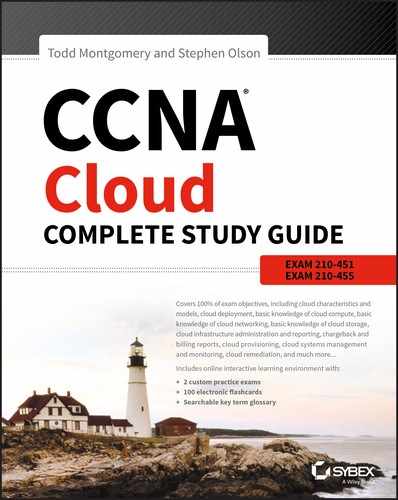

Migrating to the Cloud

A Look at How the CCNA Role Is Evolving

Preparing for Life in the Cloud

The Evolutionary History of Cloud Computing

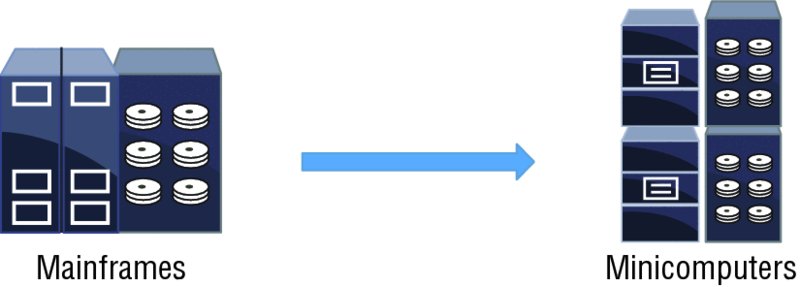

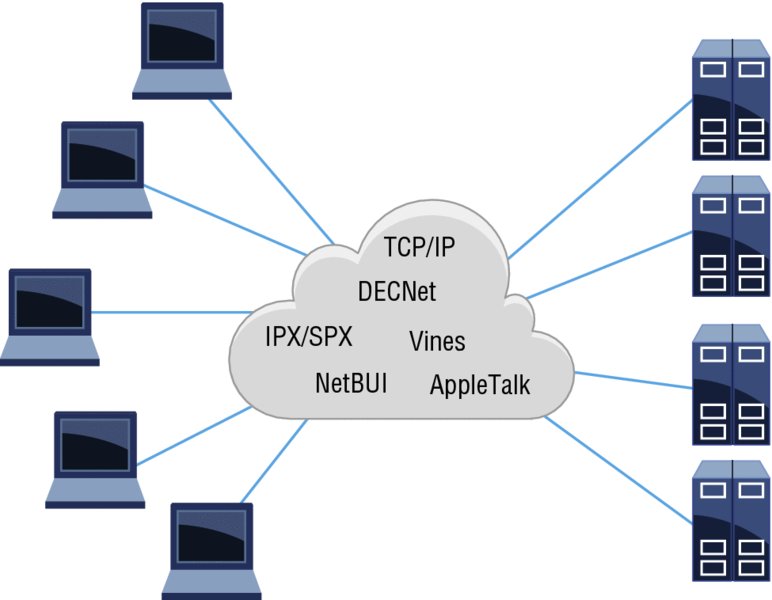

A Brief History of Computing

Computing in the Past

Computing in the Present Day

The Future of Computing

The Great Cloud Journey: How We Got Here

What Exactly Is Cloud Computing?

The NIST Definition of the Cloud

How Many Definitions Are There?

The Many Types of Clouds

Service Models

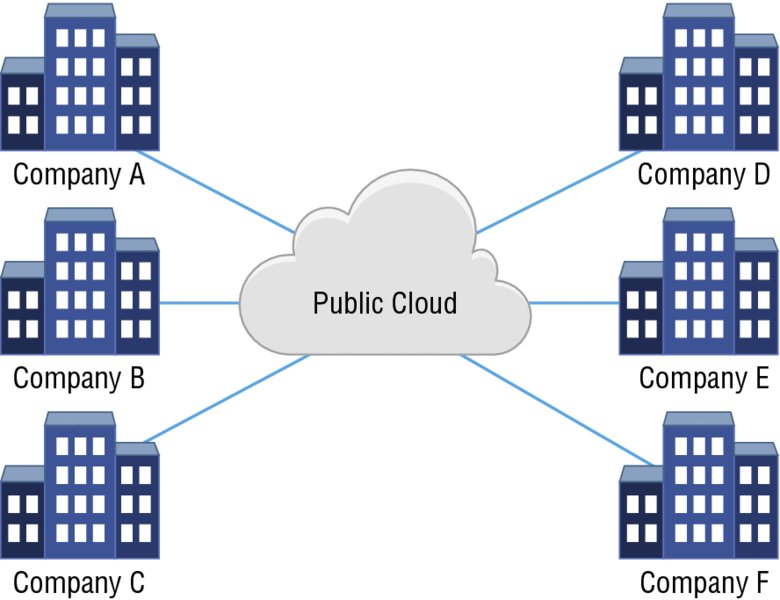

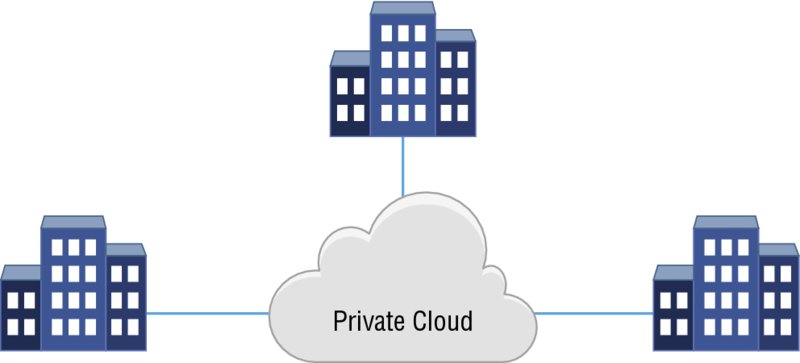

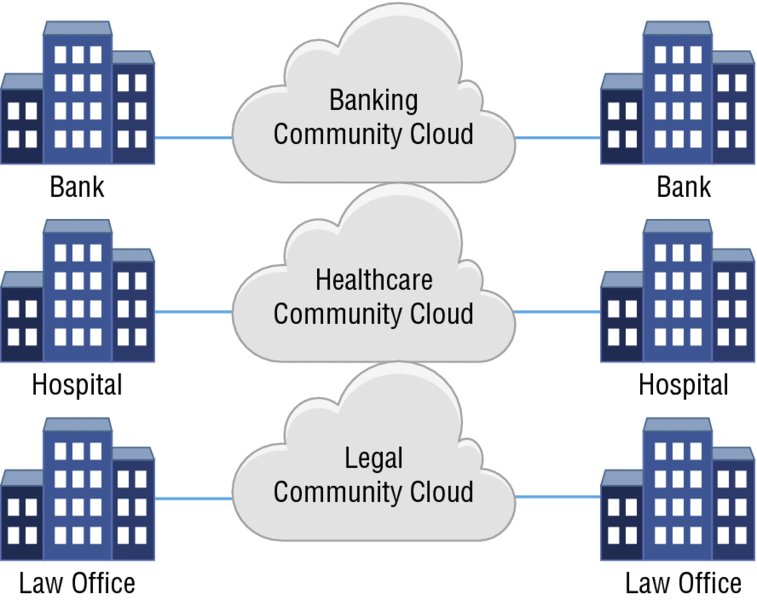

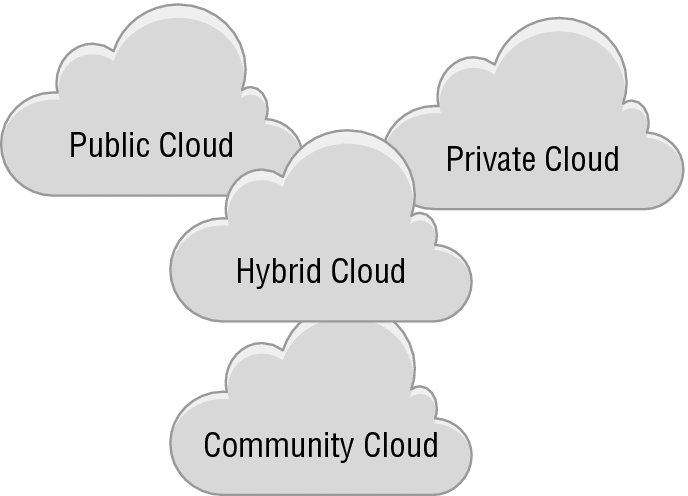

Deployment Models

Introducing the Data Center

The Modern Data Center

Business Models

Data Center Deployment Models

Data Center Operations

Designing the Data Center for the Anticipated Workload

The Difference Between the Data Center and Cloud Computing Models

Common Cloud Characteristics

On-Demand Self-Service

Elasticity

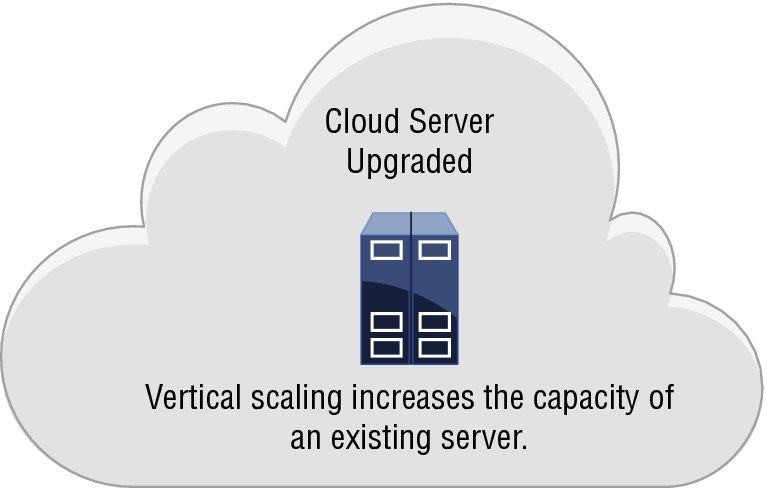

Scaling Up

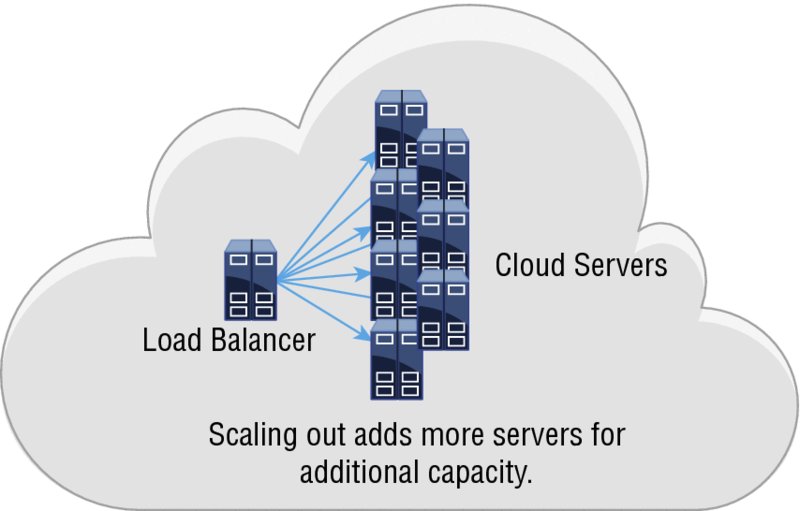

Scaling Out

Cloud Elasticity Use Cases

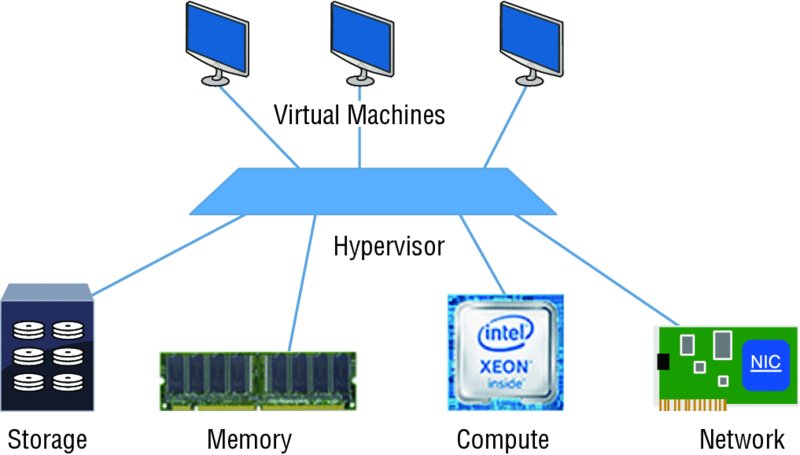

Resource Pooling

CPU

Memory

Storage

Networking

Metered Service

Cloud Access Options

Exploring the Cloud Multitenancy Model

Summary

Exam Essentials

Written Lab

Review Questions