Chapter Fifteen

Is This for Real?

In the previous chapter, you saw how you can wire relays together to make a 1-bit adder and then combine eight of them to add two bytes together. You even saw how those 8-bit adders could be cascaded to add even larger numbers, and you may have wondered, Is this really how computers add numbers?

Well, yes and no. One big difference is that computers today are no longer made from relays. But they were at one time.

In November 1937, a researcher at Bell Labs named George Stibitz (1904–1995) took home a couple of relays used in telephone switching circuits. On his kitchen table, he combined these relays with batteries, two lightbulbs, and two switches he made from strips of metal cut from tin cans. It was a 1-bit adder, just as you saw in the previous chapter. Stibitz later called it the “Model K” because he constructed it on his kitchen table.

The Model K adder was what would later be called a “proof of concept” that demonstrated that relays could perform arithmetic. Bell Labs authorized a project to continue this work, and by 1940, the Complex Number Computer was in operation. It consisted of somewhat over 400 relays and was dedicated to multiplying complex numbers, which are numbers consisting of both a real and an imaginary part. (Imaginary numbers are square roots of negative numbers and are useful in scientific and engineering applications.) Multiplying two complex numbers requires four separate multiplications and two additions. The Complex Number Computer could handle complex numbers with real and imaginary parts of up to eight decimal digits. It took about a minute to perform this multiplication.
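The count of four multiplications and two additions comes from expanding the product (a + bi)(c + di) into (ac − bd) + (ad + bc)i, with the subtraction counted as one of the additions. Here is a minimal Python sketch of that arithmetic. The function name is made up for illustration, and the code says nothing about how the relay machine itself was wired:

```python
def multiply_complex(a, b, c, d):
    """Multiply (a + bi) by (c + di); returns (real part, imaginary part)."""
    real = a * c - b * d   # two multiplications and one addition (of a negated term)
    imag = a * d + b * c   # two multiplications and one addition
    return real, imag

print(multiply_complex(3, 2, 1, 4))   # (3 + 2i)(1 + 4i) gives (-5, 14)
```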

This was not the first relay-based computer. Chronologically, the first one was constructed by Konrad Zuse (1910–1995), who as an engineering student in 1935 began building a machine in his parents’ apartment in Berlin. His first machine, called the Z1, didn’t use relays but simulated the function of relays entirely mechanically. His Z2 machine did use relays and could be programmed with holes punched in old 35mm movie film.

Meanwhile, around 1937, Harvard graduate student Howard Aiken (1900–1973) needed some way to perform lots of repetitive calculations. This led to a collaboration between Harvard and IBM that resulted in the Automatic Sequence Controlled Calculator (ASCC), eventually known as the Harvard Mark I, completed in 1943. In operation, the clicking of the relays in this machine produced a very distinctive sound that to one person sounded “like a roomful of ladies knitting.” The Mark II was the largest relay-based machine, using 13,000 relays. The Harvard Computation Laboratory, headed by Aiken, taught the first classes in computer science.

These relay-based computers—also called electromechanical computers because they combined electricity and mechanical devices—were the first working digital computers.

The word digital to describe these computers was coined by George Stibitz in 1942 to distinguish them from analog computers, which had been in common use for several decades.

One of the great analog computers was the Differential Analyzer constructed by MIT professor Vannevar Bush (1890–1974) and his students between 1927 and 1932. This machine used rotating disks, axles, and gears to solve differential equations, which are equations involving calculus. The solution to a differential equation is not a number but a function, and the Differential Analyzer would print a graph of this function on paper.

Analog computers can be traced back further into history with the Tide-Predicting Machine designed by physicist William Thomson (1824–1907), later known as Lord Kelvin. In the 1860s, Thomson conceived a way to analyze the rise and fall of tides, and to break down the patterns into a series of sine curves of various frequencies and amplitudes. In Thomson’s words, the object of his Tide-Predicting Machine was “to substitute brass for brain in the great mechanical labour of calculating the elementary constituents of the whole tidal rise and fall.” In other words, it used wheels, gears, and pulleys to add the component sine curves and print the result on a roll of paper, showing the rise and fall of tides in the future.

Both the Differential Analyzer and Tide-Predicting Machine were capable of printing graphs, but what’s interesting is that they did this without calculating the numbers that define the graph! This is a characteristic of analog computers.

At least as early as 1879, William Thomson knew the difference between analog and digital computers, but he used different terms. Instruments like his tide predictor he called “continuous calculating machines” to differentiate them from “purely arithmetical” machines such as “the grand but partially realized conceptions of calculating machines by Babbage.”

Thomson is referring to the famous work of English mathematician Charles Babbage (1791–1871). In retrospect, Babbage is historically anomalous in that he attempted to build a digital computer long before even analog computers were common!

At the time of Babbage (and for long afterward) a computer was a person who calculated numbers for hire. Tables of logarithms were frequently used to simplify multiplication, and tables of trigonometric functions were essential for nautical navigation and other purposes. If you wanted to publish a new set of mathematical tables, you would hire a bunch of computers, set them working, and then assemble the results. Errors could creep in at any stage of this process, of course, from the initial calculation to setting up the type to print the final pages.

Charles Babbage was a very meticulous person who experienced much distress upon encountering errors in mathematical tables. Beginning about 1820 he had an idea that he could build an engine that would construct these tables automatically, even to the point of setting up the type for printing.

Babbage’s first machine was called the Difference Engine, so named because it would perform a specific job related to the creation of mathematical tables. It was well known that constructing a table of logarithms doesn’t require calculating the logarithm for each and every value. Instead, logarithms could be calculated for select values, and then numbers in between could be calculated by interpolation, using what were called differences in relatively simple calculations.

Babbage designed his Difference Engine to calculate these differences. It used gears to represent decimal digits, and would have been capable of addition and subtraction. But despite some funding from the British government, it was never completed, and Babbage abandoned the Difference Engine in 1833.
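The appeal of differences is that a table can be extended using nothing but addition. That is easiest to see with a polynomial, whose differences eventually become constant. (Logarithms aren’t polynomials, but over short stretches they can be approximated by them.) The following Python sketch illustrates the general method under those assumptions. The function name and the example polynomial are arbitrary choices, and the code is not a model of Babbage’s mechanism:

```python
def tabulate_by_differences(initial_column, count):
    """Extend a table using only addition.

    initial_column holds a starting value followed by its first difference,
    second difference, and so on. For a polynomial, the last one is constant.
    """
    column = list(initial_column)
    values = []
    for _ in range(count):
        values.append(column[0])
        # Add each difference into the entry above it, working top to bottom.
        for i in range(len(column) - 1):
            column[i] += column[i + 1]
    return values

# f(x) = x*x + x + 41, starting at x = 0:
# f(0) = 41, first difference f(1) - f(0) = 2, constant second difference 2.
print(tabulate_by_differences([41, 2, 2], 6))   # [41, 43, 47, 53, 61, 71]
```

No multiplication appears anywhere in the loop, which is why a machine capable only of addition and subtraction would have been enough for the job.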

By that time, Babbage had an even better idea, for a machine that he called the Analytical Engine. Through repeated design and redesign (with a few small models and parts actually built), it consumed Babbage off and on until his death. The Analytical Engine is the closest thing to a digital computer that the 19th century has to offer. In Babbage’s design, it has a store (comparable to our concept of memory) and a mill, which performs the arithmetic. Multiplication could be handled by repeated addition, and division by repeated subtraction.
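Multiplication by repeated addition and division by repeated subtraction are simple enough to spell out. This Python sketch shows only the arithmetic idea for non-negative whole numbers. The function names are invented for illustration, and nothing here reflects the actual design of the mill:

```python
def multiply(a, b):
    """Multiply non-negative integers by adding a to a running total b times."""
    total = 0
    for _ in range(b):
        total += a
    return total

def divide(dividend, divisor):
    """Divide by counting subtractions; returns (quotient, remainder)."""
    if divisor <= 0:
        raise ValueError("divisor must be positive")
    quotient = 0
    while dividend >= divisor:
        dividend -= divisor
        quotient += 1
    return quotient, dividend

print(multiply(6, 7))   # 42
print(divide(45, 7))    # (6, 3)
```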

What’s most intriguing about the Analytical Engine is that it could be programmed using cards punched with holes. Babbage got this idea from the innovative automated looms developed by Joseph Marie Jacquard (1752–1834). The Jacquard loom (circa 1801) used cardboard sheets with punched holes to control the weaving of patterns in silk. Jacquard’s own tour de force was a self-portrait in black and white silk that required about 10,000 cards.

Babbage never left us with a comprehensive, coherent description of what he was trying to do with his Analytical Engine. He was much more eloquent when writing a mathematical justification of miracles or composing a diatribe condemning street musicians.

It was up to Augusta Ada Byron, Countess of Lovelace (1815–1852), to compensate for Babbage’s lapse. She was the only legitimate daughter of the poet Lord Byron but was steered into mathematics by her mother to counteract what was perceived as a dangerous poetical temperament that Ada might have inherited from her father. Lady Lovelace studied with logician Augustus de Morgan (who has previously made an appearance in Chapters 6 and 8 of this book) and was fascinated by Babbage’s machine.

When the opportunity came to translate an Italian article about the Analytical Engine, Ada Lovelace took on the job. Her translation was published in 1843, but she added a series of notes that expanded the article to three times its original length. One of these notes contained a sample set of instructions for Babbage’s machine, therefore positioning Lovelace not quite as the first computer programmer (that would be Babbage himself), but as the first person who published a computer program.

Those of us who have subsequently published tutorial computer programs in magazines and books can consider ourselves children of Ada.

To Ada Lovelace we owe perhaps the most poetical of descriptions of Babbage’s machine when she wrote “We may say that the Analytical Engine weaves algebraical patterns just as the Jacquard-loom weaves flowers and leaves.”

Lovelace also had a precociously visionary view of computing going beyond the mere calculation of numbers. Anything that could be expressed in numbers was a possible subject for the Analytical Engine:

Supposing, for instance, that the fundamental relations of pitched sounds in the science of harmony and of musical composition were susceptible of such expression and adaptations, the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent.

Considering that Babbage and Samuel Morse were almost exact contemporaries, and that Babbage also knew the work of George Boole, it’s unfortunate that he didn’t make the crucial connection between telegraph relays and mathematical logic. It was only in the 1930s that clever engineers began building computers from relays. The Harvard Mark I was the first computer to print mathematical tables, finally realizing Babbage’s dream over a hundred years later.

From the first digital computers in the 1930s to the present day, the entire history of computing can be summed up with three trends: smaller, faster, cheaper.

Relays are not the best devices for constructing a computer. Because relays are mechanical and work by bending pieces of metal, they can break after an extended workout. A relay can also fail because of a piece of dirt or stray paper stuck between the contacts. In one famous incident in 1947, a moth was extracted from a relay in the Harvard Mark II computer. Grace Murray Hopper (1906–1992), who had joined Aiken’s staff in 1944 and who would later become quite renowned in the field of computer programming languages, taped the moth to the computer logbook with the note “First actual case of bug being found.”

A possible replacement for the relay is the vacuum tube (called a “valve” by the British), which was developed by John Ambrose Fleming (1849–1945) and Lee de Forest (1873–1961) in connection with radio. By the 1940s, vacuum tubes had long been used to amplify telephone signals, and virtually every home had a console radio set filled with glowing tubes that amplified radio signals to make them audible. Vacuum tubes can also be wired—much like relays—into AND, OR, NAND, and NOR gates.

It doesn’t matter whether logic gates are built from relays or vacuum tubes. Logic gates can always be assembled into adders and other complex components.

Vacuum tubes had their own problems, though. They were expensive, required a lot of electricity, and generated a lot of heat. The bigger drawback was that they eventually burned out. This was a fact of life that people lived with. Those who owned tube radios were accustomed to replacing tubes periodically. The telephone system was designed with a lot of redundancy, so the loss of a tube now and then was no big deal. (No one expects the telephone system to work flawlessly anyway.) When a tube burns out in a computer, however, it might not be immediately detected. Moreover, a computer uses so many vacuum tubes that statistically they might be burning out every few minutes.

The big advantage of using vacuum tubes over relays was speed. At its very best, a relay only manages to switch in about a thousandth of a second, or 1 millisecond. A tube can switch in about a millionth of a second—one microsecond. Interestingly, the speed issue wasn’t a major consideration in early computer development, because overall computing speed was linked to the speed that the machine could read a program from a paper or film tape. As long as computers were built in this way, it didn’t matter how much faster vacuum tubes were than relays.

Beginning in the early 1940s, vacuum tubes began supplanting relays in new computers. By 1945, the transition was complete. While relay machines were known as electromechanical computers, vacuum tubes were the basis of the first electronic computers.

At the Moore School of Electrical Engineering (University of Pennsylvania), J. Presper Eckert (1919–1995) and John Mauchly (1907–1980) designed the ENIAC (Electronic Numerical Integrator and Computer). It used 18,000 vacuum tubes and was completed in late 1945. In sheer tonnage (about 30), the ENIAC was the largest computer that was ever (and probably will ever be) made. Eckert and Mauchly’s attempt to patent the computer was, however, thwarted by a competing claim by John V. Atanasoff (1903–1995), who earlier designed an electronic computer that never worked quite right.

The ENIAC attracted the interest of mathematician John von Neumann (1903–1957). Since 1930, the Hungarian-born von Neumann (whose last name is pronounced noy mahn) had been living in the United States. A flamboyant man who had a reputation for doing complex arithmetic in his head, von Neumann was a mathematics professor at the Princeton Institute for Advanced Study, and he did research in everything from quantum mechanics to the application of game theory to economics.

Von Neumann helped design the successor to the ENIAC, the EDVAC (Electronic Discrete Variable Automatic Computer). Particularly in the 1946 paper “Preliminary Discussion of the Logical Design of an Electronic Computing Instrument,” coauthored with Arthur W. Burks and Herman H. Goldstine, he described several features of a computer that made the EDVAC a considerable advance over the ENIAC. The ENIAC used decimal numbers, but the designers of the EDVAC felt that the computer should use binary numbers internally. The computer should also have as much memory as possible, and this memory should be used for storing both program code and data as the program was being executed. (Again, this wasn’t the case with the ENIAC. Programming the ENIAC was a matter of throwing switches and plugging in cables.) This design came to be known as the stored-program concept. These design decisions were such an important evolutionary step that today we speak of von Neumann architecture in computers.

In 1948, the Eckert-Mauchly Computer Corporation (later part of Remington Rand) began work on what would become the first commercially available computer—the Universal Automatic Computer, or UNIVAC. It was completed in 1951, and the first one was delivered to the Bureau of the Census. The UNIVAC made its prime-time network debut on CBS, when it was used to predict the results of the 1952 presidential election. Anchorman Walter Cronkite referred to it as an “electronic brain.” Also in 1952, IBM announced the company’s first commercial computer system, the 701.

And thus began a long history of corporate and governmental computing. However interesting that history might be, we’re going to pursue another historical track—a track that shrank the cost and size of computers and brought them into the home, and which began with an almost unnoticed electronics breakthrough in 1947.

Bell Telephone Laboratories came about when American Telephone and Telegraph officially separated their scientific and technical research divisions from the rest of their business, creating the subsidiary on January 1, 1925. The primary purpose of Bell Labs was to develop technologies for improving the telephone system. That mandate was fortunately vague enough to encompass all sorts of things, but one obvious perennial goal within the telephone system was the undistorted amplification of voice signals transmitted over wires.

A considerable amount of research and engineering went into improving vacuum tubes, but on December 16, 1947, two physicists at Bell Labs, John Bardeen (1908–1991) and Walter Brattain (1902–1987), wired a different type of amplifier. This new amplifier was constructed from a slab of germanium—an element known as a semiconductor—and a strip of gold foil. They demonstrated it to their boss, William Shockley (1910–1989), a week later. It was the first transistor, a device that some people have called the most important invention of the twentieth century.

The transistor didn’t come out of the blue. Eight years earlier, on December 29, 1939, Shockley had written in his notebook, “It has today occurred to me that an amplifier using semiconductors rather than vacuum is in principle possible.” And after that first transistor was demonstrated, many years followed in perfecting it. It wasn’t until 1956 that Shockley, Bardeen, and Brattain were awarded the Nobel Prize in physics “for their researches on semiconductors and their discovery of the transistor effect.”

Earlier in this book, I talked about conductors and insulators. Conductors are so called because they’re very conducive to the passage of electricity. Copper, silver, and gold are the best conductors, and it’s no coincidence that all three are found in the same column of the periodic table of the elements.

The elements germanium and silicon (as well as some compounds) are called semiconductors, not because they conduct half as well as conductors, but because their conductance can be manipulated in various ways. Semiconductors have four electrons in the outermost shell, which is half the maximum number that the outer shell can have. In a pure semiconductor, the atoms form very stable bonds with each other and have a crystalline structure similar to the diamond. Such materials aren’t good conductors.

But semiconductors can be doped, which means that they’re combined with certain impurities. One type of impurity adds extra electrons to those needed for the bond between the atoms. These are called N-type semiconductors (N for negative). Another type of impurity results in a P-type semiconductor.

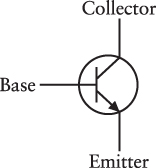

Semiconductors can be made into amplifiers by sandwiching a P-type semiconductor between two N-type semiconductors. This is known as an NPN transistor, and the three pieces are known as the collector, the base, and the emitter.

Here’s a schematic diagram of an NPN transistor:

A small voltage on the base can control a much larger voltage passing from the collector to the emitter. If there’s no voltage on the base, it effectively turns off the transistor.

Transistors are usually packaged in little metal cans about a quarter-inch in diameter with three wires poking out:

The transistor inaugurated solid-state electronics, which means that transistors don’t require vacuums and are built from solids, specifically semiconductors and most commonly silicon. Besides being much smaller than vacuum tubes, transistors require much less power, generate much less heat, and last longer. Carrying around a tube radio in your pocket was inconceivable. But a transistor radio could be powered by a small battery, and unlike tubes, it wouldn’t get hot. Carrying a transistor radio in your pocket became possible for some lucky people opening presents on Christmas morning in 1954. Those first pocket radios used transistors made by Texas Instruments, an important company of the semiconductor revolution.

However, the very first commercial applications of the transistor were hearing aids. In commemorating the heritage of Alexander Graham Bell in his lifelong work with deaf people, AT&T allowed hearing aid manufacturers to use transistor technology without paying any royalties.

The first transistor television debuted in 1960, and today tube appliances have almost disappeared. (Not entirely, however. Some audiophiles and electric guitarists continue to prefer the sound of tube amplifiers to their transistor counterparts.)

In 1956, Shockley left Bell Labs to form Shockley Semiconductor Laboratories. He moved to Palo Alto, California, where he had grown up. His was the first such company to locate in that area. In time, other semiconductor and computer companies set up business there, and the area south of San Francisco is now informally known as Silicon Valley.

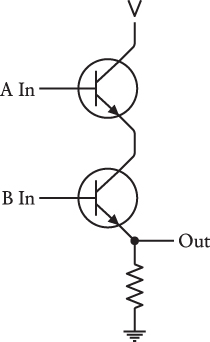

Vacuum tubes were originally developed for amplification, but they could also be used for switches in logic gates. The same goes for the transistor. Here’s a transistor-based AND gate structured much like the relay version:

Only when both the A input and the B input are voltages will both transistors conduct current and hence make the output a voltage. The resistor prevents a short circuit when this happens.

Wiring two transistors as you see here creates an OR gate. The collectors of both transistors are connected to the voltage supply and the emitters are connected:

Everything we learned about constructing logic gates and other components from relays is valid for transistors. Relays, tubes, and transistors were all initially developed primarily for purposes of amplification but can be connected in similar ways to make logic gates out of which computers can be built. The first transistor computers were built in 1956, and within a few years tubes had been abandoned for the design of new computers.
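If you treat each transistor as an idealized switch that conducts only when its base is 1, the two gates just described reduce to a series connection and a parallel connection. The following Python sketch rests on that assumption. It ignores resistors and real voltages, and the function names are invented for illustration:

```python
def transistor(base, collector):
    """Idealized switch: the collector signal reaches the emitter only when base is 1."""
    return collector if base else 0

def and_gate(a, b):
    # Series: the emitter of the first transistor feeds the collector of the second.
    return transistor(b, transistor(a, 1))

def or_gate(a, b):
    # Parallel: both collectors connect to the supply, and the emitters are joined.
    return transistor(a, 1) | transistor(b, 1)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b))
```

The loop prints the truth tables for both gates, and they match the ones you saw for the relay versions.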

Transistors certainly make computers more reliable, smaller, and less power hungry, but they don’t necessarily make computers any simpler to construct. The transistor lets you fit more logic gates in a smaller space, but you still have to worry about all the interconnections of these components. It’s just as difficult wiring transistors to make logic gates as it is wiring relays and vacuum tubes.

As you’ve already discovered, however, certain combinations of transistors show up repeatedly. Pairs of transistors are almost always wired as gates. Gates are often wired into adders, or into decoders or encoders such as you saw at the end of Chapter 10. In Chapter 17, you’ll see a crucially important configuration of logic gates called the flip-flop, which has the ability to store bits, and a counter that counts in binary numbers. Assembling these circuits would be much easier if the transistors were prewired in common configurations.

This idea seems to have been proposed first by British physicist Geoffrey Dummer (1909–2002) in a speech in May 1952. “I would like to take a peep into the future,” he said.

With the advent of the transistor and the work in semiconductors generally, it seems now possible to envisage electronic equipment in a solid block with no connecting wires. The block may consist of layers of insulating, conducting, rectifying and amplifying materials, the electrical functions being connected directly by cutting out areas of the various layers.

A working product, however, would have to wait a few years.

Without knowing about the Dummer prediction, in July 1958 it occurred to Jack Kilby (1923–2005) of Texas Instruments that multiple transistors as well as resistors and other electrical components could be made from a single piece of silicon. Six months later, in January 1959, basically the same idea occurred to Robert Noyce (1927–1990). Noyce had originally worked for Shockley Semiconductor Laboratories, but in 1957 he and seven other scientists had left and started Fairchild Semiconductor Corporation.

In the history of technology, simultaneous invention is more common than one might suspect. Although Kilby had invented the device six months before Noyce, and Texas Instruments had applied for a patent before Fairchild, Noyce was issued a patent first. Legal battles ensued, and only after a decade were they finally settled to everyone’s satisfaction. Although they never worked together, Kilby and Noyce are today regarded as the coinventors of the integrated circuit, or IC, commonly called the chip.

Integrated circuits are manufactured through a complex process that involves layering thin wafers of silicon that are precisely doped and etched in different areas to form microscopic components. Although it’s expensive to develop a new integrated circuit, they benefit from mass production—the more you make, the cheaper they become.

The actual silicon chip is thin and delicate, so it must be securely packaged, both to protect the chip and to provide some way for the components in the chip to be connected to other chips. Early integrated circuits were packaged in a couple of different ways, but the most common is the rectangular plastic dual inline package (or DIP), with 14, 16, or as many as 40 pins protruding from the side:

This is a 16-pin chip. If you hold the chip so the little indentation is at the left (as shown), the pins are numbered 1 through 16 beginning at the lower left and circling around the right side to end with pin 16 at the upper left. The pins on each side are exactly 1/10 inch apart.

Throughout the 1960s, the space program and the arms race fueled the early integrated circuits market. On the civilian side, the first commercial product that contained an integrated circuit was a hearing aid sold by Zenith in 1964. In 1971, Texas Instruments began selling the first pocket calculator, and Pulsar the first digital watch. (Obviously the IC in a digital watch is packaged much differently from the example just shown.) Many other products that incorporated integrated circuits in their design followed.

In 1965, Gordon E. Moore (then at Fairchild and later a cofounder of Intel Corporation) noticed that technology was improving in such a way that the number of transistors that could fit on a single chip had doubled every year since 1959. He predicted that this trend would continue. The actual trend was a little slower, so Moore’s law (as it was eventually called) was modified to predict a doubling of transistors on a chip every 18 months. This is still an astonishingly fast rate of progress and reveals why home computers always seem to become outdated in just a few short years. Moore’s law seems to have broken down in the second decade of the 21st century, but reality is still coming close to the prediction.
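To get a feel for what these doubling rates imply, it helps to run the numbers. This Python sketch is plain compound-growth arithmetic with a hypothetical function name. It projects the rule forward and uses no historical transistor counts:

```python
def projected_transistors(start_count, years, doubling_period_years=1.5):
    """Project a count that doubles once every doubling_period_years."""
    return start_count * 2 ** (years / doubling_period_years)

# Growth factor over 15 years at one doubling every 18 months: 2 ** 10.
print(projected_transistors(1, 15))        # 1024.0
# The same span at the original rate of one doubling per year: 2 ** 15.
print(projected_transistors(1, 15, 1.0))   # 32768.0
```

Slowing the doubling period from 12 months to 18 cuts the 15-year growth factor from about 32,000 to about 1,000, which is still an enormous change.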

Several different technologies were used to fabricate the components that make up integrated circuits. Each of these technologies is sometimes called a family of ICs. By the mid-1970s, two families were prevalent: TTL (pronounced tee tee ell) and CMOS (see moss).

TTL stands for transistor-transistor logic. These chips were preferred by those for whom speed was a primary consideration. CMOS (complementary metal-oxide-semiconductor) chips used less power and were more tolerant of variations in voltages, but they weren’t as fast as TTL.

If in the mid-1970s you were a digital design engineer (which meant that you designed larger circuits from ICs), a permanent fixture on your desk would be a 1¼-inch-thick book first published in 1973 by Texas Instruments called The TTL Data Book for Design Engineers. This is a complete reference to the 7400 (seventy-four hundred) series of TTL integrated circuits sold by Texas Instruments and several other companies, so called because each IC in this family is identified by a number beginning with the digits 74.

Every integrated circuit in the 7400 series consists of logic gates that are prewired in a particular configuration. Some chips provide simple prewired gates that you can use to create larger components; other chips provide common components.

The first IC in the 7400 series is number 7400 itself, which is described in the TTL Data Book as “Quadruple 2-Input Positive-NAND Gates.” What this means is that this particular integrated circuit contains four 2-input NAND gates. They’re called positive NAND gates because a 5-volt input (or thereabouts) corresponds to logical 1 and a zero voltage corresponds to 0. This is a 14-pin chip, and a little diagram in the data book shows how the pins correspond to the inputs and outputs:

This diagram is a top view of the chip (pins on the bottom) with the little indentation (shown earlier) at the left.

Pin 14 is labeled VCC and is equivalent to the V symbol that I’ve been using to indicate a voltage. Pin 7 is labeled GND for ground. Every integrated circuit that you use in a particular circuit must be connected to a common 5-volt power supply and a common ground. Each of the four NAND gates in the 7400 chip has two inputs and one output. They work independently of each other.
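Four independent NAND gates are enough to build other gates. One well-known arrangement wires exactly four of them into an exclusive OR, so a single chip of this kind could supply it. The Python sketch below models only the logic. The function names are invented, and pin assignments, voltages, and timing are left out entirely:

```python
def nand(a, b):
    """One 2-input positive-NAND gate: the output is 0 only when both inputs are 1."""
    return 0 if (a and b) else 1

def xor_from_four_nands(a, b):
    """Exclusive OR built from exactly four NAND gates."""
    n1 = nand(a, b)
    n2 = nand(a, n1)
    n3 = nand(b, n1)
    return nand(n2, n3)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_from_four_nands(a, b))
```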

One important fact to know about a particular integrated circuit is the propagation time—the time it takes for a change in the inputs to be reflected in the output.

Propagation times for chips are generally measured in nanoseconds, abbreviated nsec. A nanosecond is a very short period of time. One thousandth of a second is a millisecond. One millionth of a second is a microsecond. One billionth of a second is a nanosecond. The propagation time for the NAND gates in the 7400 chip is guaranteed to be less than 22 nanoseconds. That’s 0.000000022 seconds, or 22 billionths of a second.

If you can’t get the feel of a nanosecond, you’re not alone. But if you’re holding this book 1 foot away from your face, a nanosecond is the time it takes the light to travel from the page to your eyes.
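You can check that claim with a little arithmetic. The speed of light and the length of a foot are both defined constants, so the only assumption in this Python sketch is that the light travels through a vacuum rather than air, which makes very little difference:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458   # exact, by definition of the meter
METERS_PER_FOOT = 0.3048               # exact, by definition of the international foot

seconds_per_foot = METERS_PER_FOOT / SPEED_OF_LIGHT_M_PER_S
print(f"{seconds_per_foot * 1e9:.3f} nanoseconds per foot")   # 1.017 nanoseconds per foot
```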

Yet the nanosecond is what makes computers possible. Each step the computer takes is a very simple basic operation, and the only reason anything substantial gets done in a computer is that these operations occur very quickly. To quote Robert Noyce, “After you become reconciled to the nanosecond, computer operations are conceptually fairly simple.”

Let’s continue perusing the TTL Data Book for Design Engineers. You will see a lot of familiar little items in this book: The 7402 chip contains four 2-input NOR gates, the 7404 has six inverters, the 7408 has four 2-input AND gates, the 7432 has four 2-input OR gates, and the 7430 is an 8-input NAND gate:

The abbreviation NC means no connection.

Moving right along in the TTL Data Book, you’ll discover that the 7483 chip is a 4-bit binary full adder, 74151 is an 8-line-to-1-line data selector, and the 74154 is a 4-line-to-16-line decoder.

So now you know how I came up with all the various components I’ve been showing you in this book. I stole them from the TTL Data Book for Design Engineers.

One of the interesting chips you’ll encounter in that book is the 74182, called a look-ahead carry generator. This is intended to be used with another chip, the 74181, that performs addition and other arithmetic operations. As you saw when an 8-bit adder was built in Chapter 14, each bit of the binary adder depends on the carry from the previous bit. This is known as a ripple carry. The bigger the numbers you want to add, the slower you’ll get the result.

A look-ahead carry generator is designed to ameliorate this trend by providing circuitry specifically for calculating a carry bit in less time than the adder itself could. This special circuitry requires more logic gates, of course, but it speeds up the total addition time. Sometimes a circuit can be improved by redesigning it so that logic gates can be removed, but it is very often the case that a circuit can be speeded up by adding more logic gates to handle specific problems.
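The principle can be sketched in Python. In a ripple carry, each carry is computed from the one before it. In a look-ahead scheme, each carry is written out directly in terms of the original inputs, so in hardware all of them can be evaluated at once. The code below illustrates that idea only and is not the internal logic of the 74182. The function names, and the terms "generate" and "propagate" as used in the comments, are assumptions introduced for the example:

```python
def to_bits(value, width=4):
    """Bits of value, least significant first."""
    return [(value >> i) & 1 for i in range(width)]

def ripple_carries(a_bits, b_bits, carry_in=0):
    """Carry into each position, computed one position at a time."""
    carries = [carry_in]
    for a, b in zip(a_bits, b_bits):
        c = carries[-1]
        carries.append((a & b) | (c & (a ^ b)))
    return carries

def lookahead_carries(a_bits, b_bits, carry_in=0):
    """The same carries, each expressed directly in terms of the inputs."""
    g = [a & b for a, b in zip(a_bits, b_bits)]   # this position generates a carry
    p = [a ^ b for a, b in zip(a_bits, b_bits)]   # this position propagates a carry
    carries = [carry_in]
    for i in range(len(g)):
        # c[i+1] = g[i] | p[i]g[i-1] | p[i]p[i-1]g[i-2] | ... | p[i]...p[0]carry_in
        carry = 0
        for j in range(i, -1, -1):
            term = g[j]
            for k in range(j + 1, i + 1):
                term &= p[k]
            carry |= term
        term = carry_in
        for k in range(i + 1):
            term &= p[k]
        carries.append(carry | term)
    return carries

print(ripple_carries(to_bits(15), to_bits(1)))      # [0, 1, 1, 1, 1]
print(lookahead_carries(to_bits(15), to_bits(1)))   # [0, 1, 1, 1, 1]
```

The Python loops merely spell out the terms of each expression. In a circuit, every term would be its own AND gate feeding a shared OR gate, which is where the extra logic gates come from.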

Logic gates aren’t metaphors or imaginary objects. They are very real. Logic gates and adders were once built out of relays, and then relays were replaced by vacuum tubes, and vacuum tubes were replaced by transistors, and transistors were replaced by integrated circuits. But the underlying concepts remained exactly the same.