CHAPTER 5

Implementing Vulnerability Management Processes

In this chapter you will learn:

• The requirements for a vulnerability management process

• How to determine the frequency of vulnerability scans you need

• The types of vulnerabilities found in various systems

• Considerations when configuring tools for scanning

Of old, the expert in battle would first make himself invincible and then wait for his enemy to expose his vulnerability.

—Sun Tzu

Vulnerability Management Requirements

Like many other areas in life, vulnerability management involves a combination of things we want to do, things we should do, and things we have to do. Assuming you don’t need help with the first, we’ll focus our attention for this chapter on the latter two. First of all, we have to identify the requirements that we absolutely have to satisfy. Broadly speaking, these come from external authorities (for example, laws and regulations), internal authorities (for example, organizational policies and executive directives) and best practices. This last source may be a bit surprising to some, but keep in mind that we are required to display due diligence in our application of security principles to protecting our information systems. To do otherwise risks liability issues and even our very jobs.

Regulatory Environments

A regulatory environment is an environment in which an organization exists or operates that is controlled to a significant degree by laws, rules, or regulations put in place by government (federal, state, or local), industry groups, or other organizations. In a nutshell, it is what happens when you have to play by someone else’s rules, or else risk serious consequences. A common feature of regulatory environments is that they have enforcement groups and procedures to deal with noncompliance.

You, as a cybersecurity analyst, might have to take action in a number of ways to ensure compliance with one or more regulatory requirements. A sometimes-overlooked example is the type of contract that requires one of the parties to ensure certain conditions are met with regard to information systems security. It is not uncommon, particularly when dealing with the government, to be required to follow certain rules, such as preventing access by foreign nationals to certain information or ensuring everyone working on the contract is trained on proper information-handling procedures.

In this section, we discuss some of the most important regulatory requirements with which you should be familiar in the context of vulnerability management. The following three standards cover the range from those that are completely optional to those that are required by law.

ISO/IEC 27001 Standard

The International Organization for Standardization (ISO, despite the apparent discrepancy in the order of the initials) and the International Electrotechnical Commission (IEC) jointly maintain a number of standards, including 27001, which covers Information Security Management Systems (ISMS). ISO/IEC 27001 is arguably the most popular voluntary security standard in the world and covers every important aspect of developing and maintaining good information security. One of its provisions, covered in control number A.12.6.1, deals with vulnerability management.

This control, whose implementation is required for certification, essentially states that the organization has a documented process in place for timely identification and mitigation of known vulnerabilities. ISO/IEC 27001 certification, which is provided by an independent certification body, is performed in three stages. First, a desk-side audit verifies that the organization has documented a reasonable process for managing its vulnerabilities. The second stage is an implementation audit aimed at ensuring that the documented process is actually being carried out. Finally, surveillance audits confirm that the process continues to be followed and improved upon.

Payment Card Industry Data Security Standard

The Payment Card Industry Data Security Standard (PCI DSS) applies to any organization involved in processing credit card payments using cards branded by the five major issuers (Visa, MasterCard, American Express, Discover, and JCB). Each of these organizations had its own vendor security requirements, so they joined efforts to standardize these requirements across the industry, releasing the first version of the standard in late 2004 and forming the PCI Security Standards Council in 2006 to maintain it. The PCI DSS is periodically updated and, as of this writing, is in version 3.2.

Requirement 11 of the PCI DSS deals with the obligation to “regularly test security systems and processes,” and Section 11.2 describes the requirements for vulnerability scanning. Specifically, it states that the organization must perform two types of vulnerability scans every quarter: internal and external. The difference is that internal scans may be performed by qualified members of the organization, whereas external scans must be performed by approved scanning vendors (ASVs). It is important to know that the organization must be able to show that the personnel involved in the scanning have the required expertise to do so. Requirement 11 also states that both internal and external vulnerability scans must be performed whenever there are significant changes to the systems or processes.

Finally, PCI DSS requires that any “high-risk” vulnerabilities uncovered by either type of scan be resolved. After resolution, another scan is required to demonstrate that the risks have been properly mitigated.
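The scan triggers described in this section (quarterly, after significant changes, and after high-risk findings are resolved) can be sketched as a simple check. This is only an illustration under stated assumptions, not an official PCI tool: the function name pci_scan_due, the 90-day approximation of a quarter, and the sample dates are all hypothetical.

```python
from datetime import date, timedelta
from typing import List, Optional

QUARTER = timedelta(days=90)  # assumption: a quarter approximated as 90 days


def pci_scan_due(last_scan: date, today: date,
                 last_significant_change: Optional[date] = None,
                 resolved_high_risk: int = 0) -> List[str]:
    """Return the reasons (if any) that a new scan is needed."""
    reasons = []
    if today - last_scan > QUARTER:
        reasons.append("quarterly scan overdue")
    if last_significant_change and last_significant_change > last_scan:
        reasons.append("significant change since last scan")
    if resolved_high_risk > 0:
        reasons.append("rescan needed to confirm high-risk fixes")
    return reasons


# Hypothetical example: scanned in January, network changed in February.
reasons = pci_scan_due(date(2024, 1, 10), date(2024, 3, 1),
                       last_significant_change=date(2024, 2, 15))
print(reasons)
```

The same function applies to internal and external scans alike; only who performs the scan differs.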

Health Insurance Portability and Accountability Act

The Health Insurance Portability and Accountability Act (HIPAA) establishes penalties (ranging from $100 to $1.5 million) for covered entities that fail to safeguard protected health information (PHI). Though HIPAA does not explicitly call out a requirement to run vulnerability scans, Section 164.308(a)(1)(ii)(A) requires organizations to conduct an accurate and thorough assessment of the risks and vulnerabilities affecting electronic PHI and to implement security measures that are sufficient to reduce those risks to a reasonable level. Any organization that violates the provisions of this act, whether willfully or through negligence or even ignorance, faces steep civil penalties.

Corporate Security Policy

A corporate security policy is an overall general statement produced by senior management (or a selected policy board or committee) that dictates what role security plays within the organization. Security policies can be organizational, issue specific, or system specific. In an organizational security policy, management establishes how a security program will be set up, lays out the program’s goals, assigns responsibilities, shows the strategic and tactical value of security, and outlines how enforcement should be carried out. An issue-specific policy, also called a functional policy, addresses specific security issues that management feels need more detailed explanation and attention to make sure a comprehensive structure is built and all employees understand how they are to comply with these security issues. A system-specific policy presents the management’s decisions that are specific to the actual computers, networks, and applications.

Typically, organizations will have an issue-specific policy covering vulnerability management, but it is important to note that this policy is nested within the broader corporate security policy and may also be associated with system-specific policies. The point is that it is not enough to understand the vulnerability management policy (or develop one if it doesn’t exist) in a vacuum. We must understand the organizational security context within which this process takes place.

Data Classification

An important item of metadata that should be attached to all data is a classification level. This classification tag is important in determining the protective controls we apply to the information. The rationale behind assigning values to different types of data is that it enables a company to gauge the resources that should go toward protecting each type of data because not all of it has the same value to the company. There are no hard-and-fast rules on the classification levels an organization should use. Typical classification levels include the following:

• Private: Information whose improper disclosure could raise personal privacy issues

• Confidential: Data whose disclosure could cause grave damage to the organization

• Proprietary (or sensitive): Data whose disclosure could cause some damage to the organization, such as loss of competitiveness

• Public: Data whose release would have no adverse effect on the organization

Each classification should be unique and separate from the others and not have any overlapping effects. The classification process should also outline how information is controlled and handled throughout its life cycle (from creation to termination). The following list shows some criteria parameters an organization might use to determine the sensitivity of data:

• The level of damage that could be caused if the data were disclosed

• The level of damage that could be caused if the data were modified or corrupted

• Lost opportunity costs that could be incurred if the data is not available or is corrupted

• Legal, regulatory, or contractual responsibility to protect the data

• Effects the data has on security

• The age of data
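One way to turn criteria like these into a single, non-overlapping classification is to rate each criterion and let the worst rating decide the level. The sketch below is only an assumption about how that might look: the 0 to 3 rating scale, the criterion keys, and the thresholds are hypothetical, and only the four level names come from the list earlier in this section.

```python
# Hypothetical criterion keys, each rated 0 (none) to 3 (grave).
CRITERIA = ("disclosure_damage", "modification_damage",
            "lost_opportunity", "legal_obligation")


def classify(ratings: dict, personal_data: bool = False) -> str:
    """Return exactly one classification level for a data item."""
    worst = max(ratings.get(name, 0) for name in CRITERIA)
    if worst >= 3:
        return "Confidential"   # grave damage to the organization
    if personal_data:
        return "Private"        # personal privacy is at stake
    if worst >= 1:
        return "Proprietary"    # some damage is possible
    return "Public"             # no adverse effect


print(classify({"disclosure_damage": 3}))                 # Confidential
print(classify({"lost_opportunity": 1}))                  # Proprietary
print(classify({}, personal_data=True))                   # Private
print(classify({}))                                       # Public
```

Because the checks run in a fixed order and return on the first match, every item receives one and only one label.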

Asset Inventory

You cannot protect what you don’t know you have. Though inventorying assets is not what most of us would consider glamorous, it is nevertheless a critical aspect of managing vulnerabilities in your information systems. In fact, this aspect of security is so important that it is prominently featured at the top of the Center for Internet Security’s (CIS’s) Critical Security Controls (CSC). CSC #1 is the inventory of authorized and unauthorized devices, and CSC #2 deals with the software running on those devices.

Keep in mind, however, that an asset is anything of worth to an organization. Apart from hardware and software, this includes people, partners, equipment, facilities, reputation, and information. For the purposes of the CySA+ exam, we focus on hardware, software, and information. You should note that determining the value of an asset can be difficult and is oftentimes subjective. In the context of vulnerability management, the CySA+ exam will only require you to decide how you would deal with critical and noncritical assets.

Critical

A critical asset is anything that is absolutely essential to performing the primary functions of your organization. If you work at an online retailer, this set would include your web platforms, data servers, and financial systems, among others. It probably wouldn’t include the workstations used by your web developers or your printers. Critical assets, clearly, require a higher degree of attention when it comes to managing vulnerabilities. This attention can be expressed in a number of different ways, but you should focus on at least two: the thoroughness of each vulnerability scan and the frequency of each scan.

Noncritical

A noncritical asset, though valuable, is not required for the accomplishment of your main mission as an organization. You still need to include these assets in your vulnerability management plan, but given the limited resources with which we all have to deal, you would prioritize them lower than you would critical ones.
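The critical/noncritical distinction can be carried straight into a scan plan that varies thoroughness and frequency. The following is a minimal sketch; the Asset class, the profile names, and the 7-day and 30-day intervals are assumptions for illustration and would come from your own policy.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    critical: bool


# Assumed scan profiles keyed by criticality.
PROFILES = {
    True: {"scan": "thorough", "every_days": 7},
    False: {"scan": "basic", "every_days": 30},
}


def scan_plan(assets):
    """List critical assets first, each paired with its scan profile."""
    ordered = sorted(assets, key=lambda a: not a.critical)  # stable sort
    return [(a.name, PROFILES[a.critical]) for a in ordered]


inventory = [Asset("dev-workstation", False), Asset("web-platform", True),
             Asset("printer", False), Asset("financial-system", True)]
plan = scan_plan(inventory)
for name, profile in plan:
    print(name, profile["scan"], "every", profile["every_days"], "days")
```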

Common Vulnerabilities

Most threat actors don’t want to work any harder than they absolutely have to. Unless they are specifically targeting your organization, cutting off the usual means of exploitation is oftentimes sufficient for them to move on to lower-hanging fruit elsewhere. Fortunately, we know a lot about the mistakes that many organizations make in securing their systems because, sadly, we see the same issues time and again. Before we delve into common flaws on specific types of platforms, here are some that are applicable to most if not all systems:

• Missing patches/updates: A system could be missing patches or updates for numerous reasons. If the reason is legitimate (for example, an industrial control system that cannot be taken offline), then this vulnerability should be noted, tracked, and mitigated using an alternate control.

• Misconfigured firewall rules: Whether or not a device has its own firewall, the ability to reach it across the network should be restricted by firewalls or other means of segmentation. That restriction is oftentimes lacking.

• Weak passwords: Our personal favorite was an edge firewall that was deployed for an exercise by a highly skilled team of security operators. The team, however, failed to follow its own checklist and was so focused on hardening other devices that it forgot to change the default password on the edge firewall. Even when default passwords are changed, it is not uncommon for users to choose weak ones if they are allowed to.

Servers

Perhaps the most common vulnerability seen on servers stems from losing track of a server’s purpose on the network and allowing it to run unnecessary services and open ports. The default installation of many servers includes hundreds if not thousands of applications and services, most of which are not really needed for a server’s main purpose. If this extra software is not removed, disabled, or at the very least hardened and documented, it may be difficult to secure the server.
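Keeping track of a server's purpose can be as simple as documenting which ports each role is supposed to expose and comparing that with what a scan actually finds. The sketch below assumes a hypothetical ROLE_BASELINE table and hand-typed scan results; in practice the observed ports would come from your scanning tool.

```python
# Hypothetical documented baseline: ports each server role should expose.
ROLE_BASELINE = {
    "web": {22, 443},
    "dns": {22, 53},
}


def unexpected_ports(role: str, observed) -> list:
    """Return open ports that are not part of the role's documented baseline."""
    return sorted(set(observed) - ROLE_BASELINE[role])


# Assumed scan result for a web server left close to its default install.
extra = unexpected_ports("web", [22, 80, 443, 3306, 5900])
print(extra)
```

Each port in the result is a candidate for removal, disabling, or at the very least documentation.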

Another common vulnerability is the misconfiguration of services. Most products offer many more features than what are actually needed, but many of us simply ignore the “bonus” features and focus on configuring the critical ones. This can come back to haunt us if these bonus features allow attackers to easily gain a foothold by exploiting legitimate system features that we were not even aware of. The cure to this problem is to ensure we know the full capability set of anything we put on our networks, and disable anything we don’t need.

Endpoints

Endpoints are almost always end-user devices (mobile or otherwise). They are the most common entry point for attackers into our networks, and the most common vectors are e-mail attachments and web links. In addition to the common vulnerabilities discussed before (especially updates/patches), the most common problem with endpoints is lack of up-to-date malware protection. This, of course, is the minimum standard. We should really strive to have more advanced, centrally managed, host-based security systems.

Another common vulnerability at the endpoint is system misconfiguration or default configurations. Though most modern operating systems pay attention to security, they oftentimes err on the side of functionality. The pursuit of a great user experience can sometimes come at a high cost. To counter this vulnerability, you should have baseline configurations that can be verified periodically by your scanning tools. These configurations, in turn, are driven by your organizational risk management processes as well as any applicable regulatory requirements.
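Verifying a baseline amounts to comparing each expected setting against what is actually found on the endpoint. A minimal sketch follows; the setting names and values are hypothetical, and a real scanner would gather the actual configuration for you.

```python
def config_drift(baseline: dict, actual: dict) -> dict:
    """Return {setting: (expected, found)} for every deviation from baseline.

    A setting that is absent on the endpoint is reported with found=None.
    """
    return {key: (expected, actual.get(key))
            for key, expected in baseline.items()
            if actual.get(key) != expected}


# Hypothetical baseline and endpoint state.
BASELINE = {"firewall_enabled": True, "auto_updates": True,
            "guest_account": False, "screen_lock_minutes": 10}
endpoint = {"firewall_enabled": True, "auto_updates": False,
            "guest_account": False}

drift = config_drift(BASELINE, endpoint)
print(drift)
```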

Network Infrastructure

Perhaps the most commonly vulnerable network infrastructure components are the wireless access points (WAPs). Particularly in environments where employees can bring (and connect) their own devices, it is challenging to strike the right balance between security and functionality. It bears pointing out that the Wired Equivalent Privacy (WEP) protocol has been known to be insecure since at least 2004 and has no place in our networks. (Believe it or not, we still see it in smaller organizations.) For best results, use the Wi-Fi Protected Access 2 (WPA2) protocol.

Even if your WAPs are secured (both electronically and physically), anybody can connect a rogue WAP or any other device to your network unless you take steps to prevent this from happening. The IEEE 802.1X standard provides port-based Network Access Control (NAC) for both wired and wireless devices. With 802.1X, any client wishing to connect to the network must first authenticate itself. With that authentication, you can provide very granular access controls and even require the endpoint to satisfy requirements for patches/upgrades.

Virtual Infrastructure

Increasingly, virtualization is becoming pervasive in our systems. One of the biggest advantages of virtual computing is its efficiency. Many of our physical network devices spend a good part of their time sitting idle and thus underutilized, as shown for a Mac OS workstation in Figure 5-1. This is true even if other devices are over-utilized and becoming performance bottlenecks. By virtualizing the devices and placing them on the same shared hardware, we can balance loads and improve performance at a reduced cost.

Figure 5-1 Mac OS Performance Monitor application showing CPU usage

Apart from cost savings and improved responsiveness to provisioning requirements, this technology promises enhanced levels of security—assuming, of course, that we do things right. The catch is that by putting what used to be separate physical devices on the same host and controlling the system in software, we allow software flaws in the hypervisors to potentially permit attackers to jump from one virtual machine to the next.

Virtual Hosts

Common vulnerabilities in virtual hosts are no different from those we would expect to find in servers and endpoints, which were discussed in previous sections. The virtualization of hosts, however, brings some potential vulnerabilities of its own. Chief among these is the sprawl of virtual machines (VMs). Unlike their physical counterparts, VMs can easily multiply. We have seen plenty of organizations with hundreds or thousands of VMs in various states of use and/or disrepair that dot their landscape. This typically happens when requirements change and it is a lot easier to simply copy an existing VM than to start from scratch. Eventually, one or both VMs are forgotten, but not properly disposed of. While most of these are suspended or shut down, it is not uncommon to see poorly secured VMs running with nobody tracking them.
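Sprawl is easier to contain when every VM has a recorded owner and a last-used date that can be checked automatically. The following sketch assumes a hypothetical inventory format and a 90-day idle threshold; neither comes from any particular virtualization product.

```python
from datetime import date


def stale_vms(vms, today: date, max_idle_days: int = 90) -> list:
    """Flag VMs that have no owner or no recorded use within max_idle_days."""
    flagged = []
    for vm in vms:
        idle_days = (today - vm["last_used"]).days
        if vm.get("owner") is None or idle_days > max_idle_days:
            flagged.append(vm["name"])
    return flagged


# Hypothetical inventory, including a forgotten copy of an existing VM.
vms = [
    {"name": "build-01", "owner": "devops", "last_used": date(2024, 5, 20)},
    {"name": "build-01-copy", "owner": None, "last_used": date(2024, 5, 1)},
    {"name": "test-legacy", "owner": "qa", "last_used": date(2023, 11, 2)},
]
flagged = stale_vms(vms, today=date(2024, 6, 1))
print(flagged)
```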

VMs are supposed to be completely isolated from the operating system of the host in which they are running. One of the reasons this is important is that if a process in the VM were able to breach this isolation and interact directly with the host, that process would have access to any other VMs running on that host, likely with elevated privileges. The virtualization environment, or hypervisor, is responsible for enforcing this property but, like any other software, it is possible that exploitable flaws exist in it. In fact, the Common Vulnerabilities and Exposures (CVE) list includes several such vulnerabilities.

Virtual Networks

Virtual networks are commonly implemented in two ways: internally to a host using network virtualization software within a hypervisor, and externally through the use of protocols such as the Layer 2 Tunneling Protocol (L2TP). In this section, we address the common vulnerabilities found in internal virtual networks and defer discussion of the external virtual networks until a later section.

As mentioned before, a vulnerability in the hypervisor would allow an attacker to escape a VM. Once outside of the machine, the attacker could have access to the virtual networks implemented by the hypervisor. This could lead to eavesdropping, modification of network traffic, or denial of service. Still, at the time of this writing there are very few known actual threats to virtual networks apart from those already mentioned when we discussed common vulnerabilities in VMs.

Management Interface

Because virtual devices have no physical manifestation, there must be some mechanism by which we can do the virtual equivalent of plugging an Ethernet cable into the back of a server or adding memory to it. These, among many other functions, are performed by the virtualization tools’ management interfaces, which frequently allow remote access by administrators. The most common vulnerability in these interfaces is their misconfiguration. Even competent technical personnel can forget to harden or properly configure this critical control device if they do not strictly follow a security technical implementation guide.

Mobile Devices

There is a well-known adage that says that if I can gain physical access to your device, there is little you can do to secure it from me. This highlights the most common vulnerability of all mobile devices: theft. We have to assume that every mobile device will at some point be taken (permanently or temporarily) by someone who shouldn’t have access to it. What happens then?

Although weak passwords are common vulnerabilities to all parts of our information systems, the problem is even worse for mobile devices because these frequently use short numeric codes instead of passwords. Even if the device is configured to wipe itself after a set number of incorrect attempts (a practice that is far from universal), there are various documented ways of exhaustively trying all possible combinations until the right one is found.
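A little arithmetic shows why short numeric codes are so much weaker than passwords. The sketch below counts the possible combinations for each; the rate of one guess per second is purely an assumption, since real rates depend on the device and the attack method.

```python
def keyspace(symbols: int, length: int) -> int:
    """Number of possible codes of a given length over a given alphabet."""
    return symbols ** length


def hours_to_exhaust(space: int, guesses_per_second: float) -> float:
    """Worst-case time to try every combination, in hours."""
    return space / guesses_per_second / 3600


pin4 = keyspace(10, 4)        # 4-digit numeric code
pin6 = keyspace(10, 6)        # 6-digit numeric code
password8 = keyspace(62, 8)   # 8 characters drawn from a-z, A-Z, 0-9

print(pin4, pin6, password8)
print(round(hours_to_exhaust(pin4, 1.0), 2), "hours for a 4-digit code")
```

Under that assumed rate, a 4-digit code falls in under three hours, while the 8-character password has over 21 billion times as many combinations.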

Another common vulnerability of mobile devices is the app stores from which they load new software. Despite efforts by Google to reduce the risk by leveraging its Google Bouncer technology, the company’s store is still a source of numerous malicious apps. Compounding this problem is the fact that Android apps can be loaded from any store or even websites. Many users looking for a cool app and perhaps trying to avoid paying for it will resort to these shady sources. iOS users, though better protected by Apple’s ecosystem, are not immune either, particularly if they jailbreak their devices.

Finally, though lack of patches/updates is a common vulnerability to all devices, mobile ones (other than iOS) suffer from limitations on how and when they can be upgraded. These limitations are imposed by carriers. We have seen smartphones that are running three-year-old versions of Android and cannot be upgraded at all.

Interconnected Networks

In late 2013, the consumer retail giant Target educated the entire world on the dangers of interconnected networks. One of the largest data breaches in recent history was accomplished, not by attacking the retailer directly, but by using a heating, ventilation, and air conditioning (HVAC) vendor’s network as an entry point. The vendor had access to the networks at Target stores to monitor and manage HVAC systems, but the vulnerability induced by the interconnection was not fully considered by security personnel. In a world that grows increasingly interconnected and interdependent, we should all take stock of which of our partners might present our adversaries with a quick way into our systems.

Virtual Private Networks

Virtual private networks (VPNs) connect two or more devices that are physically part of separate networks, and allow them to exchange data as if they were connected to the same LAN. These virtual networks are encapsulated within the other networks in a manner that segregates the traffic in the VPN from that in the underlying network. This is accomplished using a variety of protocols, including Internet Protocol Security (IPSec), the Layer 2 Tunneling Protocol (L2TP, which is typically paired with IPSec for encryption), Transport Layer Security (TLS), and the Datagram Transport Layer Security (DTLS) used by many Cisco devices. These protocols and their implementations are, for the most part, fairly secure.

The main vulnerability in VPNs lies in what they potentially allow us to do: connect untrusted, unpatched, and perhaps even infected hosts to our networks. The first risk comes from which devices are allowed to connect. Some organizations require that VPN client software be installed only on organizationally owned, managed devices. If this is not the case and any user can connect any device, provided they have access credentials, then the risk of exposure increases significantly.

Another problem, which may be mitigated for official devices but not so much for personal ones, is the patch/update state of the device. If we do a great job at developing secure architectures but then let unpatched devices connect to them, we are providing adversaries a convenient way to render many of our controls moot. The best practice for mitigating this risk is to implement a Network Access Control (NAC) solution that actively checks the device for patches, updates, and any other required parameters before allowing it to join the network. Many NAC solutions allow administrators to place noncompliant devices in a “guest” network so they can download the necessary patches/updates and eventually be allowed in.
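The admission logic of such a posture check can be sketched in a few lines. This is not the API of any real NAC product; the field names, the numeric patch level, and the policy values are hypothetical and serve only to show how a noncompliant device ends up on the guest network.

```python
def nac_decision(device: dict, policy: dict):
    """Return (network, failures); any failed check sends the device to 'guest'."""
    failures = []
    if policy["require_managed"] and not device["managed"]:
        failures.append("device is not organization-managed")
    if device["patch_level"] < policy["min_patch_level"]:
        failures.append("patch level below minimum")
    if device["av_signature_age_days"] > policy["max_av_signature_age_days"]:
        failures.append("malware signatures out of date")
    network = "guest" if failures else "production"
    return network, failures


# Hypothetical policy and a laptop returning after months away.
POLICY = {"require_managed": True, "min_patch_level": 202405,
          "max_av_signature_age_days": 7}
laptop = {"managed": True, "patch_level": 202401, "av_signature_age_days": 2}

decision = nac_decision(laptop, POLICY)
print(decision)
```

Returning the list of failures alongside the decision lets the guest network tell the user exactly what to fix before trying again.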

Finally, with devices that have been “away” for a while and show back up on our networks via VPN, we have no way of knowing whether they are compromised. Even if they don’t spread malware or get used as pivot points for deeper penetration into our systems, any data these devices acquire would be subject to monitoring by unauthorized third parties. Similarly, any data originating in such a compromised host is inherently untrustworthy.

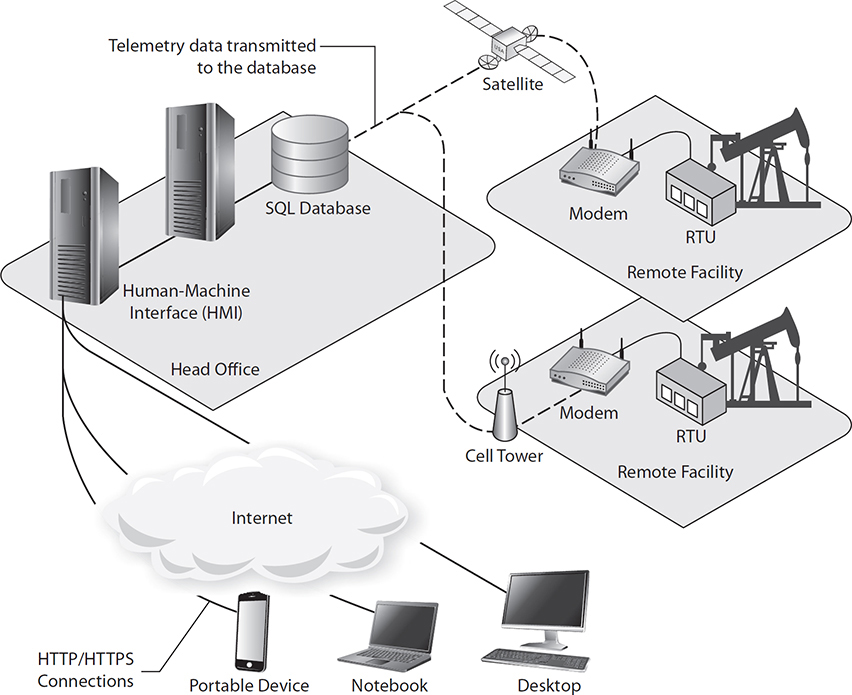

Industrial Control Systems

Industrial control systems (ICSs) are cyber-physical systems that allow specialized software to control the physical behaviors of some system. For example, ICSs are used in automated automobile assembly lines, building elevators, and even HVAC systems. A typical ICS architecture is shown in Figure 5-2. At the bottom layer (level 0), we find the actual physical devices such as sensors and actuators that control physical processes. These are connected to remote terminal units (RTUs) or programmable logic controllers (PLCs), which translate physical effects to binary data, and vice versa. These RTUs and PLCs at level 1 are, in turn, connected to database servers and Human-Machine Interface (HMI) controllers and terminals at level 2. These three lower levels of the architecture are known as the operational technology (OT) network. The OT network was traditionally isolated from the IT network that now comprises levels 3 and 4 of the architecture. For a variety of functional and business reasons, this gap between OT (levels 0 through 2) and IT (levels 3 and 4) is now frequently bridged, providing access to physical processes from anywhere on the Internet.

Figure 5-2 Simple industrial control system (ICS)

Much of the software that runs an ICS is burned into the firmware of devices such as PLCs, like the ones that run the uranium enrichment centrifuges targeted by Stuxnet. This is a source of vulnerabilities because updating this software cannot normally be done automatically or even centrally. The patching and updating, which is pretty infrequent to begin with, typically requires that the device be brought offline and manually updated by a qualified technician. Between the cost and effort involved and the effects of interrupting business processes, it should not come as a surprise to learn that many ICS components are never updated or patched. To make matters worse, vendors are notorious for not providing patches at all, even when vulnerabilities are discovered and made public. In its 2016 report on ICS security, FireEye described how 516 of the 1,552 known ICS vulnerabilities (roughly one-third) had no patch available.

Another common vulnerability in ICSs is passwords. Unlike previous mentions of this issue in this chapter, here the issue is not the users choosing weak passwords, but the manufacturer of the ICS device setting a trivial password in the firmware, documenting it so all users (and perhaps abusers) know what it is, and sometimes making it difficult if not impossible to change. In many documented cases, these passwords are stored in plain text. Manufacturers are getting better at this, but there are still many devices with unchangeable passwords controlling critical physical systems around the world.

SCADA Devices

Supervisory Control and Data Acquisition (SCADA) systems are a specific type of ICS that is characterized by covering large geographic regions. Whereas an ICS typically controls physical processes and devices in one building or a small campus, a SCADA system is used for pipelines and transmission lines covering hundreds or thousands of miles. SCADA is most commonly associated with energy (for example, petroleum or power) and utility (for example, water or sewer) applications. The general architecture of a SCADA system is depicted in Figure 5-3.

Figure 5-3 Typical architecture of a SCADA system

SCADA systems introduce two more types of common vulnerabilities in addition to those found in ICS. The first of these is induced by the long-distance communications links. For many years, most organizations using SCADA systems relied on the relative obscurity of the communications protocols and radio frequencies involved to provide a degree (or at least an illusion) of security. In what is one of the first cases of cyber attack against SCADA systems, an Australian man apparently seeking revenge in 2000 connected a rogue radio transceiver to an RTU and intentionally caused hundreds of thousands of gallons of sewage to spill into local parks and rivers. Though the wireless systems have mostly been modernized and hardened, they still present potential vulnerabilities.

The second weakness, particular to a SCADA system, is its reliance on isolated and unattended facilities. These remote stations provide attackers with an opportunity to gain physical access to system components. Though many of these stations are now protected by cameras and alarm systems, their remoteness makes responding significantly slower compared to most other information systems.

Frequency of Vulnerability Scans

With all these very particular vulnerabilities floating around, how often should we be checking for them? As you might have guessed, there is no one-size-fits-all answer to that question. The important issue to keep in mind is that the process is what matters. If you haphazardly do vulnerability scans at random intervals, you will have a much harder time answering the question of whether your vulnerability management is effective. If, on the other hand, you do the math up front and determine the frequencies and scopes of the various scans given your list of assumptions and requirements, you will have much more control over your security posture.

Risk Appetite

The risk appetite of an organization is the amount of risk that its senior executives are willing to assume. You will never be able to drive risk down to zero because there will always be a possibility that someone or something causes losses to your organization. What’s more, as you try to mitigate risks, you will rapidly approach a point of diminishing returns. When you start mitigating risks, you will go through a stage in which a great many risks can be reduced with some commonsense and inexpensive controls. After you start running out of such low-hanging fruit, the costs (for example, financial and opportunity) will start rapidly increasing. You will then reach a point where further mitigation is fairly expensive. How expensive is “too expensive” is dictated by your organization’s risk appetite.

When it comes to the frequency of vulnerability scans, it’s not as simple as doing more if your risk appetite is low, and vice versa. Risk assessment is a deliberate process that quantifies the likelihood of a threat being realized and the net effect it would have on the organization. Some threats, such as hurricanes and earthquakes, cannot be mitigated with vulnerability scans. Neither can the threat of social engineering attacks or insider threats. So the connection between risk appetite and the frequency of vulnerability scans requires that we dig into the risk management plan and see which specific risks require scans and then how often they should be done in order to reduce the residual risks to the agreed-upon levels.
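Digging into the risk management plan in this way can be pictured as filtering a risk register. The sketch below makes several assumptions: exposure is modeled as likelihood times impact (one common convention, not the only one), the register entries and dollar figures are invented, and the scan_addressable flag is a hypothetical field marking risks that scanning can actually help reduce.

```python
# Hypothetical risk register; likelihoods and impacts are made-up values.
RISKS = [
    {"name": "unpatched web server", "likelihood": 0.6,
     "impact": 200_000, "scan_addressable": True},
    {"name": "earthquake", "likelihood": 0.01,
     "impact": 5_000_000, "scan_addressable": False},
    {"name": "social engineering", "likelihood": 0.5,
     "impact": 150_000, "scan_addressable": False},
    {"name": "default credentials on switch", "likelihood": 0.3,
     "impact": 100_000, "scan_addressable": True},
    {"name": "outdated printer firmware", "likelihood": 0.1,
     "impact": 20_000, "scan_addressable": True},
]


def exposure(risk: dict) -> float:
    """Assumed model: expected loss = likelihood x impact."""
    return risk["likelihood"] * risk["impact"]


def scan_driven_risks(risks, appetite: float) -> list:
    """Names of scan-addressable risks above the appetite, largest first."""
    selected = [r for r in risks
                if r["scan_addressable"] and exposure(r) > appetite]
    selected.sort(key=exposure, reverse=True)
    return [r["name"] for r in selected]


priorities = scan_driven_risks(RISKS, appetite=25_000)
print(priorities)
```

Only the risks that survive both filters should drive scan frequency; the rest need other controls or fall within the accepted residual risk.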

Regulatory Requirements

If you thought the approach to determining the frequency of scans based on risk appetite was not very definitive, the opposite is true of regulatory requirements. Assuming you’ve identified all the applicable regulations, then the frequencies of the various scans will be given to you. For instance, requirement 11.2 of the PCI DSS requires vulnerability scans (at least) quarterly as well as after any significant change in the network. HIPAA, on the other hand, imposes no such frequency requirements. Still, in order to avoid potential problems, most experts agree that covered organizations should run vulnerability scans at least semiannually.

Technical Constraints

Vulnerability assessments require resources such as personnel, time, bandwidth, hardware, and software, many of which are likely limited in your organization. Of these, the top technical constraints on your ability to perform these tests are qualified personnel and technical capacity. Here, the term capacity is used to denote computational resources expressed in cycles of CPU time, bytes of primary and secondary memory, and bits per second (bps) of network connectivity. Because any scanning tool you choose to use will require a minimum amount of such capacity, you may be constrained in both the frequency and scope of your vulnerability scans.

If you have no idea how much capacity your favorite scans require, quantifying it should be one of your first steps. It is possible that in well-resourced organizations such requirements are negligible compared to the available capacity. In such an environment, it is possible to increase the frequency of scans to daily or even hourly for high-risk assets. It is likelier, however, that your scanning takes a noticeable toll on assets that are also required for your principal mission. In such cases, you want to carefully balance the mission and security requirements so that one doesn’t unduly detract from the other.
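A back-of-the-envelope calculation is often enough to start quantifying capacity. The sketch below assumes, purely for illustration, that hosts are scanned in fixed-size parallel batches and that every host takes about the same time. Real scanners schedule work differently, so treat the numbers as a first estimate to be replaced by measurements.

```python
import math


def estimated_scan_hours(host_count, seconds_per_host, parallel_hosts):
    """Rough wall-clock estimate when hosts are scanned in parallel batches."""
    if host_count < 0 or seconds_per_host < 0 or parallel_hosts < 1:
        raise ValueError("counts must be non-negative and parallelism at least 1")
    batches = math.ceil(host_count / parallel_hosts)
    return batches * seconds_per_host / 3600


def max_scans_per_day(scan_hours, window_hours_per_day):
    """How many complete scans fit in the hours you can spare each day."""
    if scan_hours <= 0:
        raise ValueError("scan_hours must be positive")
    return int(window_hours_per_day // scan_hours)
```

For example, 1,000 hosts at 180 seconds each, scanned 25 at a time, come to about 2 hours, so a 6-hour nightly window would accommodate at most three such scans.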

Workflow

Another consideration when determining how often you conduct vulnerability scanning is the established workflows of security and network operations within your organization. As mentioned in the preceding section, qualified personnel constitute a limited resource. Whenever you run a vulnerability scan, someone will have to review and perhaps analyze the results in order to determine what actions, if any, are required. This process is best incorporated into the workflows of your security and/or network operations center personnel.

A recurring theme in this chapter has been the need to standardize and enforce repeatable vulnerability management processes. Apart from well-written policies, the next best way to ensure this happens is by writing it into the daily workflows of security and IT personnel. If I work in a security operations center (SOC) and know that every Tuesday morning my duties include reviewing the vulnerability scans from the night before and creating tickets for any required remediation, then I’m much more likely to do this routinely. The organization, in turn, benefits from consistent vulnerability scans with well-documented outcomes, which, in turn, become enablers of effective risk management across the entire system.

Tool Configuration

Just as you must weigh a host of considerations when determining how often to conduct vulnerability scans, you also need to think about different but related issues when configuring your tools to perform these scans. Today’s tools typically have more power and options than most of us will ever need. Our information systems might also impose limitations or requirements on which of these features can or should be brought to bear.

Scanning Criteria

When configuring scanning tools, you have a host of different considerations, but here we focus on the main ones you will be expected to know for the CySA+ exam. The list is not exhaustive, however, and you should probably grow it with issues that are specific to your organization or sector.

Sensitivity Levels

Earlier in this chapter, we discussed the different classifications we should assign to our data and information, as well as the criticality levels of our other assets. We return now to these concepts as we think about configuring our tools to do their jobs while appropriately protecting our assets. When it comes to the information in our systems, we must take great care to ensure that the required protections remain in place at all times. For instance, if we are scanning an organization covered by HIPAA, we should ensure that nothing we do as part of our assessment in any way compromises protected health information (PHI). We have seen vulnerability assessments that include proofs such as sample documents obtained by exercising a security flaw. Obviously, this is not advisable in the scenario we’ve discussed.

Besides protecting the information, we also need to protect the systems on which it resides. Earlier we discussed critical and noncritical assets in the context of focusing attention on the critical ones. Now we’ll qualify that idea by saying that we should scan them in a way that ensures they remain available to the business or other processes that made them critical in the first place. If an organization processes thousands of dollars each second and our scanning slows that down by an order of magnitude, even for a few minutes, the effect could be a significant loss of revenue that might be difficult to explain to the board. Understanding the nature and sensitivity of these assets can help us identify tool configurations that minimize the risks to them, such as scheduling the scan during a specific window of time in which there is no trading.
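Restricting scans to an agreed window can be enforced with a simple guard before the scanner launches. This is a minimal sketch with hypothetical names. It treats the window as local wall-clock time and handles windows that cross midnight. It does not account for time zones or trading calendars.

```python
from datetime import datetime, time


def in_scan_window(now, start, end):
    """True if `now` falls in [start, end); supports windows crossing midnight."""
    current = now.time()
    if start <= end:
        return start <= current < end
    # Window wraps past midnight, for example 22:00 to 04:00.
    return current >= start or current < end
```

A scheduler could call `in_scan_window(datetime.now(), time(22, 0), time(4, 0))` and skip or defer the scan whenever it returns `False`.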

Vulnerability Feed

Unless you work in a governmental intelligence organization, odds are that your knowledge of vulnerabilities mostly comes from commercial or community feeds. These services have update cycles that range from hours to weeks and, though they tend to eventually converge on the vast majority of known vulnerabilities, one feed may publish a vulnerability significantly before another. If you are running hourly scans, then you would obviously benefit from the faster services and may be able to justify a higher cost. If, on the other hand, your scans are weekly, monthly, or even quarterly, the difference may not be as significant. As a rule of thumb, you want a vulnerability feed whose update cycle is about as frequent as your own scanning cycle.

If your vulnerability feed is not one with a fast update cycle, or if you want to ensure you are absolutely abreast of the latest discovered vulnerabilities, you can (and perhaps should) subscribe to alerts besides those of your provider. The National Vulnerability Database (NVD) maintained by the National Institute of Standards and Technology (NIST) provides two Rich Site Summary (RSS) feeds: one alerts you to any new vulnerability reported, and the other provides only those that have been analyzed. The advantage of the first feed is that you are on the bleeding edge of notifications. The advantage of the second is that it provides you with specific products that are affected as well as additional analysis. A number of other organizations provide similar feeds that you should probably explore as well.
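Once you subscribe to a feed, something has to pick out the entries you have not seen yet. The sketch below parses an inline sample rather than a live feed. The sample document, its placeholder identifiers, and the function name are all hypothetical, and we assume a plain, un-namespaced RSS 2.0 layout. A real feed may use XML namespaces or a different schema, in which case the element lookups would need adjusting.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample feed with placeholder identifiers (not real entries).
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Example vulnerability feed</title>
    <item>
      <title>CVE-0000-0001</title>
      <description>Placeholder entry for product-a</description>
    </item>
    <item>
      <title>CVE-0000-0002</title>
      <description>Placeholder entry for product-b</description>
    </item>
  </channel>
</rss>"""


def new_entries(feed_xml, already_seen):
    """Return (title, description) pairs for items not in `already_seen`."""
    root = ET.fromstring(feed_xml)
    entries = []
    for item in root.iter("item"):
        title = item.findtext("title", default="").strip()
        if title and title not in already_seen:
            description = item.findtext("description", default="").strip()
            entries.append((title, description))
    return entries
```

Keeping the set of already-seen identifiers between runs lets you alert only on additions, which is what matters when deciding whether something needs attention before the next scheduled scan.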

Assuming you have subscribed to one or more feeds (in addition to your scanning product’s feed), you will likely learn of vulnerabilities in between programmed scans. When this happens, you will have to consider whether to run an out-of-cycle scan that looks for that particular vulnerability or to wait until the next scheduled event to run the test. If the flaw is critical enough to warrant immediate action, you may have to pull from your service provider or, failing that, write your own plug-in to test the vulnerability. Obviously, this would require significant resources, so you should have a process by which to make decisions like these as part of your vulnerability management program.
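The decision process described above can be written down as an explicit rule so that it is applied consistently. This is only a sketch: the threshold of 9.0, the seven-day tolerance, and the three outcome labels are assumptions chosen for illustration, and your own program would set them according to its risk appetite.

```python
def scan_decision(cvss_score, days_until_next_scan, affects_our_assets,
                  urgent_score=9.0, max_wait_days=7):
    """Suggest whether a newly announced flaw justifies an out-of-cycle scan."""
    if not affects_our_assets:
        return "ignore"
    if cvss_score >= urgent_score and days_until_next_scan > max_wait_days:
        return "out-of-cycle"
    # Either the flaw is not urgent or the next scheduled scan is close enough.
    return "wait"
```

With these defaults, a 9.8-rated flaw announced 20 days before the next scan returns `"out-of-cycle"`, while the same flaw announced the day before a scheduled scan returns `"wait"`.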

Scope

Whether you are running a scheduled or special scan, you have to carefully define its scope and configure your tools appropriately. Though it would be simpler to scan everything at once at set intervals, the reality is that this is oftentimes not possible simply because of the load this places on critical nodes, if not the entire system. What may work better is to have a series of scans, each with a different scope and parameters.

Whether you are doing a global or targeted scan, your tools must know which nodes to test and which ones to leave alone. The set of devices that will be assessed constitutes the scope of the vulnerability scan. Deliberately scoping these events is important for a variety of reasons, but one of the most important ones is the need for credentials, which we discuss next.
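Scope is easiest to reason about when it is expressed as data: networks to include and hosts to leave alone. Here is a minimal sketch using Python's standard `ipaddress` module. The function name is ours, and the addresses in the usage note come from a range reserved for documentation.

```python
import ipaddress


def build_scope(include_cidrs, exclude_hosts):
    """Expand included networks into host addresses, minus excluded hosts."""
    excluded = {ipaddress.ip_address(host) for host in exclude_hosts}
    seen = set()
    targets = []
    for cidr in include_cidrs:
        network = ipaddress.ip_network(cidr, strict=False)
        for host in network.hosts():
            # Skip excluded hosts and avoid duplicates from overlapping ranges.
            if host in excluded or host in seen:
                continue
            seen.add(host)
            targets.append(str(host))
    return targets
```

For instance, `build_scope(["192.0.2.0/30"], ["192.0.2.2"])` returns `["192.0.2.1"]`. Most scanners accept target and exclusion lists directly, so a helper like this is mainly useful for reviewing a scope before it is loaded into the tool.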

Credentialed vs. Noncredentialed

A noncredentialed vulnerability scan evaluates the system from the perspective of an outsider, such as an attacker just beginning to interact with a target. This is a sort of black-box test in which the scanning tool doesn’t get any special information or access into the target. The advantage of this approach is that it tends to be quicker while still being fairly realistic. It may also be a bit more secure because there is no need for additional credentials on all tested devices. The disadvantage, of course, is that you will most likely not get full coverage of the target.

In order to really know all that is vulnerable in a host, you typically need to provide the tool with credentials so it can log in remotely and examine the inside as well as the outside. Credentialed scans will always be more thorough than noncredentialed ones, simply because of the additional information that login provides the tool. Whether or not this additional thoroughness is important to you is for you and your team to decide. An added benefit of credentialed scans is that they tend to reduce the amount of network traffic required to complete the assessment.

Server Based vs. Agent Based

Vulnerability scanners tend to fall into two classes of architectures: those that require a running process (agent) on every scanned device, and those that do not. The difference is illustrated in Figure 5-4. A server-based (or agentless) scanner consolidates all data and processes on one or a small number of scanning hosts, which depend on a fair amount of network bandwidth in order to run their scans. It has fewer components, which could make maintenance tasks easier and help with reliability. Additionally, it can detect and scan devices that are connected to the network, but do not have agents running on them (for example, new or rogue hosts).

Figure 5-4 Server-based and agent-based vulnerability scanner architectures

Agent-based scanners have agents that run on each protected host and report their results back to the central scanner. Because only the results are transmitted, the bandwidth required by this architectural approach is considerably less than a server-based solution. Also, because the agents run continuously on each host, mobile devices can still be scanned even when they are not connected to the corporate network.

Types of Data

Finally, as you configure your scanning tool, you must consider the information that should or must be included in the report, particularly when dealing with regulatory compliance scans. This information will drive the data that your scan must collect, which in turn affects the tool configuration. Keep in mind that each report (and there may be multiple ones as outputs of one scan) is intended for a specific audience. This affects both the information in it and the manner in which it is presented.

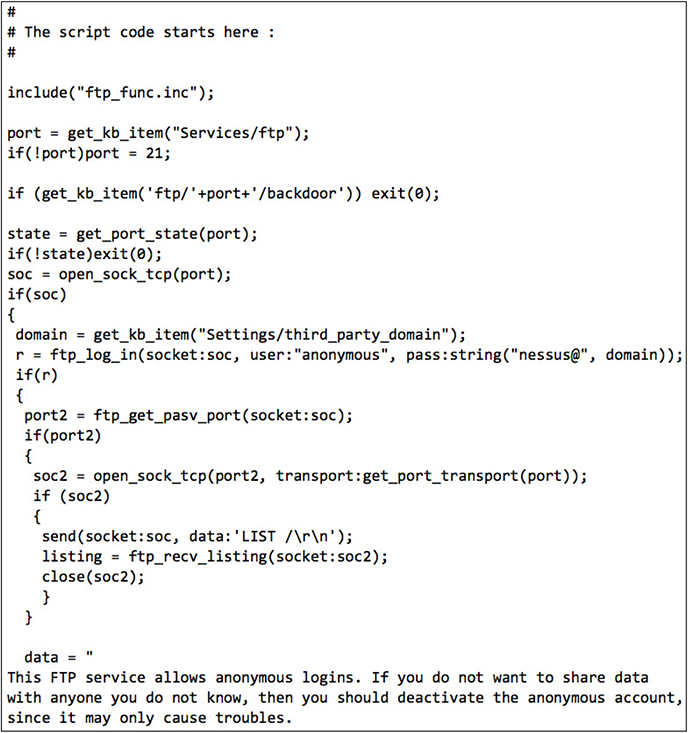

Tool Updates and Plug-Ins

Vulnerability scanning tools work by testing systems against lists of known vulnerabilities. These flaws are frequently being discovered by vendors and security researchers. It stands to reason that if you don’t keep your lists up to date, whatever tool you use will eventually fail to detect vulnerabilities that are known by others, especially your adversaries. This is why it is critical to keep your tool up to date.

A vulnerability scanner plug-in is a simple program that looks for the presence of one specific flaw. In Nessus, plug-ins are coded in the Nessus Attack Scripting Language (NASL), which is a very flexible language able to perform virtually any check imaginable. Figure 5-5 shows a portion of a NASL plug-in that tests for FTP servers that allow anonymous connections.

Figure 5-5 NASL script that tests for anonymous FTP logins
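To give a sense of what such a check involves, here is a rough Python analogue built on the standard `ftplib` module. It is not the NASL plug-in shown in the figure and is not a translation of it. The function name and the `ftp_factory` parameter (included so the logic can be exercised without a network) are our own. As with any scan, run it only against hosts you are authorized to test.

```python
import ftplib


def allows_anonymous_ftp(host, port=21, timeout=5.0, ftp_factory=ftplib.FTP):
    """Return True if anonymous login succeeds, False if refused, None if unknown."""
    ftp = ftp_factory()
    try:
        ftp.connect(host, port, timeout=timeout)
        ftp.login()  # With no arguments, ftplib logs in as "anonymous".
        return True
    except ftplib.error_perm:
        return False  # The server answered but rejected the anonymous login.
    except (OSError, EOFError, ftplib.Error):
        return None  # Unreachable host or unexpected reply: no conclusion.
    finally:
        try:
            ftp.quit()
        except Exception:
            ftp.close()
```

Distinguishing "refused" from "could not tell" matters in a report: a host that is merely unreachable should not be recorded as free of the flaw.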

SCAP

Question: how do you ensure that your vulnerability management process complies with all relevant regulatory and policy requirements regardless of which scanning tools you use? Each tool, after all, may use whatever standards (for example, rules) and reporting formats its developers desire. This lack of standardization led the National Institute of Standards and Technology (NIST) to team up with industry partners to develop the Security Content Automation Protocol (SCAP). SCAP is a protocol that uses specific standards for the assessment and reporting of vulnerabilities in the information systems of an organization. Currently in version 1.2, it incorporates about a dozen different components that standardize everything from an asset reporting format (ARF) to Common Vulnerabilities and Exposures (CVE) to the Common Vulnerability Scoring System (CVSS).
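One practical payoff of a shared scoring standard is that any tool's findings can be bucketed the same way. The sketch below assumes the CVSS version 3.x qualitative severity scale (None, Low, Medium, High, Critical). Earlier CVSS versions use different bands, so confirm which version your scanner reports before relying on it.

```python
def cvss_v3_severity(score):
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"
```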

At its core, SCAP leverages baselines developed by NIST and its partners that define minimum standards for vulnerability management. If, for instance, you want to ensure that your Windows 10 workstations are complying with the requirements of the Federal Information Security Management Act (FISMA), you would use the appropriate SCAP module that captures these requirements. You would then provide that module to a certified SCAP scanner (such as Nessus), and it would be able to report this compliance in a standard language. As you should be able to see, SCAP enables full automation of the vulnerability management process, particularly in regulatory environments.

Permissions and Access

Apart from the considerations in a credentialed scan discussed already, the scanning tool must have the correct permissions on whichever hosts it is running, as well as the necessary access across the network infrastructure. It is generally best to have a dedicated account for the scanning tool or, alternatively, to execute it within the context of the user responsible for running the scan. In either case, minimally privileged accounts should be used to minimize risks (that is, do not run the scanner as root unless you have no choice).
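A wrapper script can refuse to launch the scanner with more privilege than intended. This is a small sketch for Unix-like systems only. The function name is hypothetical, and on platforms without `os.geteuid` (such as Windows) it reports that it cannot tell rather than guessing.

```python
import os


def running_as_root(euid=None):
    """True if the effective user ID is 0, None if the platform cannot say."""
    if euid is None:
        geteuid = getattr(os, "geteuid", None)
        if geteuid is None:
            return None  # Not a Unix-like platform.
        euid = geteuid()
    return euid == 0
```

A launcher might log a warning or exit when this returns `True`, unless the scan profile explicitly documents why root is required.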

Network access is also an important configuration, not so much of the tool as of the infrastructure. Because the vulnerability scans are carefully planned beforehand, it should be possible to examine the network and determine what access control lists (ACLs), if any, need to be modified to allow the scanner to work. Similarly, network IDS and IPS may trigger on the scanning activity unless they have been configured to recognize it as legitimate. This may also be true for host-based security systems (HBSSs), which might attempt to mitigate the effects of the scan.

Finally, the tool is likely to include a reporting module for which the right permissions must be set. It is ironic that some organizations deploy vulnerability scanners but fail to properly secure the reporting interfaces. This allows users who should be unauthorized to access the reports at will. Although this may seem like a small risk, consider the consequences of adversaries being able to read your vulnerability reports. This ability would save them significant effort because they would then be able to focus on the targets you have already listed as vulnerable. As an added bonus, they would know exactly how to attack the hosts.
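If your tool writes reports to disk, one quick check is whether the files are accessible to accounts beyond the owner and group. The sketch below inspects POSIX permission bits only. The function names are ours, and it says nothing about web-based reporting interfaces, which need their own access controls.

```python
import os
import stat


def mode_is_overexposed(mode):
    """True if the permission bits grant any access to 'other' users."""
    return bool(mode & stat.S_IRWXO)


def report_is_overexposed(path):
    """Check a report file on disk using its current permission bits."""
    return mode_is_overexposed(os.stat(path).st_mode)
```

A report saved with mode `0o644` would be flagged, while one saved with `0o600` or `0o640` would not.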

Chapter Review

This chapter has focused on developing deliberate, repeatable vulnerability management processes that satisfy all the internal and external requirements. The goal is that you, as a cybersecurity analyst, will be able to ask the right questions and develop appropriate approaches to managing the vulnerabilities in your information systems. Vulnerabilities, of course, are not all created equal, so you have to consider the sensitivity of your information and the criticality of the systems on which it resides and is used. As mentioned repeatedly, you will never be able to eliminate every vulnerability and drive your risk to zero. What you can and should do is assess your risks and mitigate them to a degree that is compliant with applicable regulatory and legal requirements, and is consistent with the risk appetite of your executive leaders.

You can’t do this unless you take a holistic view of your organization’s operating environment and tailor your processes, actions, and tools to your particular requirements. Part of this involves understanding the common types of vulnerabilities associated with the various components of your infrastructure. You also need to understand the internal and external requirements for mitigating the risks of flaws. Finally, you need to consider the impact on your organization’s critical business processes, that is, the impact of both the vulnerabilities and the process of identifying and correcting them. After all, no organization exists for the purpose of running vulnerability scans on its systems. Rather, these assessments are required in order to support the real reasons for the existence of the organization.

Questions

1. The popular framework that aims to standardize automated vulnerability assessment, management, and compliance level is known as what?

A. CVSS

B. SCAP

C. CVE

D. PCAP

2. An information system that might require restricted access to, or special handling of, certain data as defined by a governing body is referred to as a what?

A. Compensating control

B. International Organization for Standardization (ISO)

C. Regulatory environment

D. Production system

3. Which of the following are parameters that organizations should not use to determine the classification of data?

A. The level of damage that could be caused if the data were disclosed

B. Legal, regulatory, or contractual responsibility to protect the data

C. The age of data

D. The types of controls that have been assigned to safeguard it

4. What is the term for the amount of risk an organization is willing to accept in pursuit of its business goals?

A. Risk appetite

B. Innovation threshold

C. Risk hunger

D. Risk ceiling

5. Insufficient storage, computing, or bandwidth required to remediate a vulnerability is considered what kind of constraint?

A. Organizational

B. Knowledge

C. Technical

D. Risk

6. Early systems of which type used security through obscurity, or the flawed reliance on unfamiliar communications protocols as a security practice?

A. PCI DSS

B. SCADA

C. SOC

D. PHI

7. What is a reason that patching and updating occur so infrequently with ICS and SCADA devices?

A. These devices control critical and costly systems that require constant uptime.

B. These devices are not connected to networks, so they do not need to be updated.

C. These devices do not use common operating systems, so they cannot be updated.

D. These devices control systems, such as HVAC, that do not need security updates.

8. All of the following are important considerations when deciding the frequency of vulnerability scans except which?

A. Security engineers’ willingness to assume risk

B. Senior executives’ willingness to assume risk

C. HIPAA compliance

D. Tool impact on business processes

Use the following scenario to answer Questions 9–12:

A local hospital has reached out to your security consulting company because it is worried about recent reports of ransomware on hospital networks across the country. The hospital wants to get a sense of what weaknesses exist on the network and get your guidance on the best security practices for its environment. The hospital has asked you to assist with its vulnerability management policy and provided you with some information about its network. The hospital provides a laptop to its staff and each device can be configured using a standard baseline. However, the hospital is not able to provide a smartphone to everyone and allows user-owned devices to connect to the network. Additionally, its staff is very mobile and relies on a VPN to reach back to the hospital network.

9. When reviewing the VPN logs, you confirm that about half of the devices that connect are user-owned devices. You suggest which of the following changes to policy?

A. None, the use of IPSec in VPNs provides strong encryption that prevents the spread of malware

B. Ask all staff members to upgrade the web browser on their mobile devices

C. Prohibit all UDP traffic on personal devices

D. Prohibit noncompany laptops and mobile devices from connecting to the VPN

10. What kind of vulnerability scanner architecture do you recommend be used in this environment?

A. Zero agent

B. Server based

C. Agent based

D. Network based

11. Which vulnerabilities would you expect to find mostly on the hospital’s laptops?

A. Misconfigurations in IEEE 802.1X

B. Fixed passwords stored in plaintext in the PLCs

C. Lack of VPN clients

D. Outdated malware signatures

12. Which of the following is not a reason you might prohibit user-owned devices from the network?

A. The regulatory environment might explicitly prohibit these kinds of devices.

B. Concerns about staff recruiting and retention.

C. There is no way to enforce who can have access to the device.

D. The organization has no control over what else is installed on the personal device.

Answers

1. B. The Security Content Automation Protocol (SCAP) is a method of using open standards, called components, to identify software flaws and configuration issues.

2. C. A regulatory environment is one in which the way an organization exists or operates is controlled by laws, rules, or regulations put in place by a formal body.

3. D. Although there are no fixed rules on the classification levels that an organization uses, some common parameters used to determine the sensitivity of data include the level of damage that could be caused if the data were disclosed; legal, regulatory, or contractual responsibility to protect the data; and the age of data. The classification should determine the controls used and not the other way around.

4. A. Risk appetite is a core consideration when determining your organization’s risk management policy and guidance, and will vary based on factors such as criticality of production systems, impact to public safety, and financial concerns.

5. C. Any limitation on the ability to perform a task on a system due to limitations of technology is a technical constraint and must have acceptable compensating controls in place.

6. B. Early Supervisory Control and Data Acquisition (SCADA) systems had the common vulnerability of relying heavily on obscure communications protocols for security. This practice only provides the illusion of security and may place the organization in worse danger.

7. A. The cost involved and potential negative effects of interrupting business and industrial processes often dissuade these device managers from updating and patching these systems.

8. A. An organization’s risk appetite, or amount of risk it is willing to take, is a legitimate consideration when determining the frequency of scans. However, only executive leadership can make that determination.

9. D. Allowing potentially untrusted, unpatched, and perhaps even infected hosts onto a network via a VPN is not ideal. Best practices dictate that VPN client software be installed only on organizationally owned and managed devices.

10. C. Because every laptop has the same software baseline, an agent-based vulnerability scanner is a sensible choice. Agent-based scanners have agents that run on each protected host and report their results back to the central scanner. The agents can also scan continuously on each host, even when not connected to the hospital network.

11. D. Malware signatures are notoriously problematic on endpoints, particularly when they are portable and not carefully managed. Although VPN client problems might be an issue, they would not be as significant a vulnerability as outdated malware signatures. IEEE 802.1X problems would be localized at the network access points and not on the endpoints. PLCs are found in ICS and SCADA systems and not normally in laptops.

12. B. Staff recruiting and retention are frequently quoted by business leaders as reasons to allow personal mobile devices on their corporate networks. Therefore, staffing concerns would typically not be a good rationale for prohibiting these devices.