CHAPTER 3

Responding to Network-Based Threats

In this chapter you will learn:

• Practices for network and host hardening

• Deception techniques for improving security

• Common types of access controls

• Trends in threat detection and endpoint protection

There can never be enough deception in war.

—Sun Tzu

What would you say if I told you that at Fort Knox, the entirety of the gold depository sits in a single large room located at the center of a conspicuous building with a neon sign that reads “Gold Here!”? One guard is posted at each of the 12 entrances, equipped with photographs of all known gold thieves in the area and a radio to communicate with the other guards internally. Sounds absurd, doesn’t it? This fictional scenario would be a dream for attackers, but this architecture is how many networks are currently configured: a porous border with sentries that rely on dated data, about only known threats, to make decisions about whom to let in. The only thing better for an attacker would be no security at all.

In reality, the U.S. Bullion Depository is an extremely hardened facility that resides within the boundaries of Fort Knox, an Army installation protected by tanks, armed police, patrolling helicopter gunships, and some of the world’s best-trained soldiers. Should an attacker even manage to get past the gates of Fort Knox itself, he would have to make his way through additional layers of security, avoiding razor wire and detection by security cameras, trip sensors, and military working dogs to reach the vault. This design incorporates some of the best practices of physical security that, when governed by strict operating policies, protect some of the nation’s most valuable resources. The best protected networks are administered in a similar way, incorporating an efficient use of technology while raising the cost for an attacker.

Network Segmentation

Network segmentation is the practice of separating various parts of the network into subordinate zones. Some of the goals of network segmentation are to thwart the adversary’s efforts, improve traffic management, and prevent spillover of sensitive data. Beginning at the physical layer of the network, segmentation can be implemented all the way up to the application layer. One common method of providing separation at the link layer of the network is the use of virtual local area networks (VLANs). Properly configured, a VLAN allows various hosts to be part of the same network even if they are not physically connected to the same network equipment. Alternatively, a single switch could support multiple VLANs, greatly improving design and management of the network. Segmentation can also occur at the application level, preventing applications and services from interacting with others that may run on the same hardware. Keep in mind that segmenting a network at one layer doesn’t carry over to the higher layers. In other words, simply implementing VLANs is not enough if you also desire to segment based on the application protocol.
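To make the idea of zones concrete, here is a minimal sketch of how a segmentation policy could be modeled. The zone names, address ranges, and allowed flows are all hypothetical and chosen only for illustration; a real network would enforce this on switches, routers, and firewalls rather than in a script.

```python
import ipaddress

# Hypothetical zones; the names and address ranges are illustrative only.
ZONES = {
    "users": ipaddress.ip_network("10.0.10.0/24"),
    "servers": ipaddress.ip_network("10.0.20.0/24"),
    "finance": ipaddress.ip_network("10.0.30.0/24"),
}

# Zone pairs (source, destination) allowed to talk across zone boundaries.
ALLOWED_FLOWS = {("users", "servers"), ("finance", "servers")}


def zone_of(address):
    """Return the name of the zone containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in ZONES.items():
        if ip in network:
            return name
    return None


def flow_permitted(src, dst):
    """Allow traffic inside a zone, or between explicitly allowed zones."""
    src_zone, dst_zone = zone_of(src), zone_of(dst)
    if src_zone is None or dst_zone is None:
        return False  # hosts outside every known zone are denied by default
    return src_zone == dst_zone or (src_zone, dst_zone) in ALLOWED_FLOWS
```

With this policy, a user workstation at 10.0.10.5 can reach a server at 10.0.20.9 but not a finance host at 10.0.30.9, which is the kind of spillover prevention segmentation is meant to provide.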

System Isolation

Even within the same subnetwork or VLAN, there might be systems that should only be communicating with certain other systems, and it becomes apparent that something is amiss should you see loads of traffic outside of the expected norms. One way to ensure that hosts in your network are only talking to the machines they’re supposed to is to enforce system isolation. This can be achieved by implementing policies on network devices in addition to your segmentation plan. System isolation can begin with physically separating special machines or groups of machines with an air gap, which is a physical separation of these systems from outside connections. There are clearly tradeoffs in that these machines will not be able to communicate with the rest of the world. However, if they only have one specific job that doesn’t require external connectivity, then it may make sense to separate them entirely. If a connection is required, it’s possible to use access control lists (ACLs) to enforce policy. Like a firewall, an ACL allows or denies certain access, and does so depending on a set of rules applicable to the layer it is operating on, usually at the network or file system level. Although the practice of system isolation takes a bit of forethought, the return on investment for the time spent to set it up is huge.

Jump Box

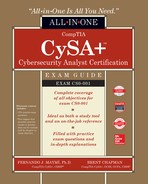

To facilitate outside connections to segmented parts of the network, administrators sometimes designate a specially configured machine called a jump box or jump server. As the name suggests, these computers serve as jumping-off points for external users to access protected parts of a network. The idea is to keep special users from logging into a particularly important host using the same workstation they use for everything else. If that daily-use workstation were to become compromised, it could be used by an attacker to reach the sensitive nodes. If, on the other hand, these users are required to use a specially hardened jump box for these remote connections, it would be much more difficult for the attacker to reach the crown jewels.

A great benefit of jump boxes is that they serve as a chokepoint for outside users wishing to gain access to a protected network. Accordingly, jump boxes often have high levels of activity logging enabled for auditing or forensic purposes. Figure 3-1 shows a very simple configuration of a jump box in a network environment. Note the placement of the jump box in relation to the firewall device on the network. Although it may improve overall security to designate a sole point of access to the network, it’s critical that the jump box is carefully monitored because a compromise of this server may allow access to the rest of the network. This means disabling any services or applications that are not necessary, using strict ACLs, keeping up to date with software patches, and using multifactor authentication where possible.

Figure 3-1 Network diagram of a simple jump box arrangement

Honeypots and Honeynets

Believe it or not, sometimes admins will design systems to attract attackers. Honeypots are a favorite tool for admins to learn more about the adversary’s goals by intentionally exposing a machine that appears to be a highly valuable, and sometimes unprotected, target. Although the honeypot may seem legitimate to the attacker, it is actually isolated from the normal network and has all its activity monitored and logged. This has several benefits from a defensive point of view. By convincing an attacker to focus his efforts against the honeypot machine, an administrator can gain insight into the attacker’s tactics, techniques, and procedures (TTPs). This can be used to predict behavior or aid in identifying the attacker via historical data. Furthermore, honeypots may delay an attacker or force him to exhaust his resources in fruitless tasks.

Honeypots have been in use for several decades, but they have been difficult or costly to deploy because this often meant dedicating actual hardware to face attackers, thus reducing what could be used for production purposes. Furthermore, in order to engage an attacker for any significant amount of time, the honeypot needs to look like a real (and ideally valuable) network node, which means putting some thought into what software and data to put on it. This all takes lots of time and isn’t practical for very large deployments. Virtualization has come to the rescue and addressed many of the challenges associated with administering these machines because the technology scales easily and rapidly.

With a honeynet, the idea is that if some is good, then more is better. A honeynet is an entire network designed to attract attackers. The benefits of its use are the same as with honeypots, but these networks are designed to look like real network environments, complete with real operating systems, applications, services, and associated network traffic. You can think of honeynets as a highly interactive set of honeypots, providing realistic feedback as the real network would. For both honeypots and honeynets, the services are not actually used in production, so there shouldn’t be any reason for legitimate interaction with the servers. You can therefore assume that any prolonged interaction with these services implies malicious intent. It follows that traffic from external hosts on the honeynet is the real deal and not as likely to be a false positive as in the real network. As with individual honeypots, all activity is monitored, recorded, and sometimes adjusted based on the desire of the administrators. Virtualization has also improved the performance of honeynets, allowing for varied network configurations on the same bare metal.

ACLs

An access control list (ACL) is a table of objects, which can be file or network resources, and the users who may access or modify them. You will see the term ACL referenced most often when describing file system or network access. Depending on the type of ACL, the object may be an individual user, a group, an IP address, or port. ACLs are powerful tools in securing the network, but they often require lots of upfront investment in setup. When using ACLs on network devices, for example, admins need to understand exactly how data flows through each device from endpoint to gateway. This will ensure that the appropriate ACLs are implemented at the right locations. Each type of ACL, whether network or file system, has its own specific requirements for best use.

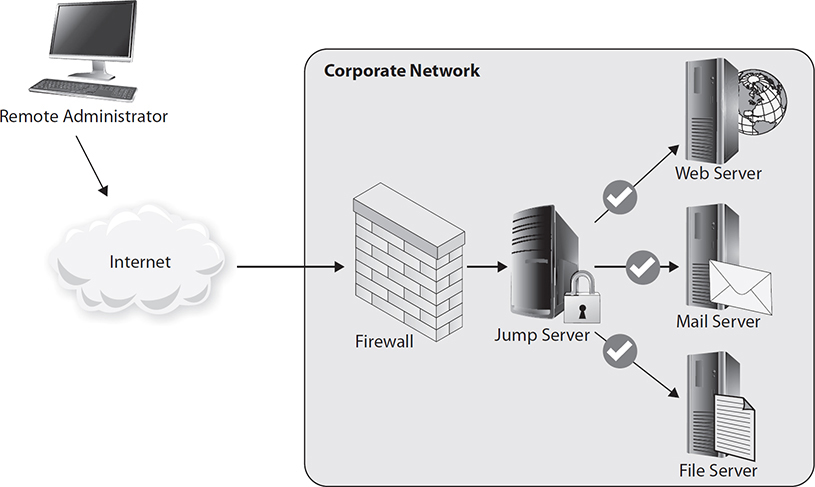

File System ACLs

In early computing environments, files and directories had owners who had complete control to read, write, or modify them. The owners could belong to groups, and they could all be granted the necessary permissions with respect to the file or directory. Anyone else would fall into an other category and could be assigned the appropriate permissions as well. These permissions provided a basic way to control access, but there was no way to assign different levels of access for different individual users. ACLs were developed to provide granular control over a file or directory. Note the difference in access to the bar.txt file in Figure 3-2. As the owner of the file, “root” has read and write access and has given read access to the user “nobody.”

Figure 3-2 Listing of a directory ACL in Linux

Network ACLs

Like file system ACLs, network ACLs provide the ability to selectively permit or deny access, usually for both inbound and outbound traffic. The access conditions depend on the type of device used. Switches, for example, are layer 2 devices and typically use the MAC address of the source and destination to decide whether to allow traffic, although many can also filter on IP address. Routers, as layer 3 and 4 devices, may use IP, network protocol, port, or another feature to decide. In virtual and cloud environments, ACLs can be implemented to filter traffic in a similar way.
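The following sketch shows one way a router-style ACL could be evaluated. It assumes two conventions that are common on real devices but are not stated above: rules are checked in order and the first match wins, and traffic that matches no rule is denied. The Rule fields and the sample rule set are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str            # "permit" or "deny"
    source: str            # source network in CIDR notation
    destination: str       # destination network in CIDR notation
    protocol: str          # "tcp", "udp", or "any"
    port: Optional[int]    # destination port, or None for any port


def evaluate(rules, src, dst, protocol, port):
    """Return the action of the first matching rule; deny if none match."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for rule in rules:
        if src_ip not in ipaddress.ip_network(rule.source):
            continue
        if dst_ip not in ipaddress.ip_network(rule.destination):
            continue
        if rule.protocol not in ("any", protocol):
            continue
        if rule.port is not None and rule.port != port:
            continue
        return rule.action
    return "deny"  # assumed implicit deny at the end of the list


# Hypothetical rule set for illustration.
RULES = [
    Rule("deny", "0.0.0.0/0", "0.0.0.0/0", "tcp", 23),            # no Telnet
    Rule("permit", "10.0.10.0/24", "10.0.20.0/24", "tcp", 443),   # users to servers
    Rule("permit", "10.0.10.0/24", "0.0.0.0/0", "udp", 53),       # DNS lookups
]
```

Because order matters under the first-match assumption, the broad Telnet deny is placed ahead of the more specific permits.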

Black Hole

When probing a network, an adversary relies a great deal on feedback provided by other hosts to get his bearings. It’s a bit like a submarine using sonar to find out where it is relative to the sea floor, obstacles, and perhaps an enemy craft. If a submarine receives no echo from the pings it sends out, it can assume that there are no obstacles present in that direction. Now imagine that it never receives a response to any of the pings it sends out because everything around it somehow has absorbed every bit of acoustic energy; it would have no way to determine where it is relative to anything else and would be floating blindly.

A black hole is a device that is configured to receive any and all packets with a specific source or destination address and not respond to them at all. Usually network protocols will indicate that there is a failure, but with black holes there’s no response at all because the packets are silently logged and dropped. The sender isn’t even aware of the delivery failure.
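As a rough illustration of that behavior, the sketch below models a forwarding decision in which matching packets are logged and then discarded without any reply. The black-holed range (a documentation prefix) and the function names are assumptions made for the example.

```python
import ipaddress

# Hypothetical black-holed range, taken from a documentation prefix.
BLACKHOLED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]

# Record of dropped packets, kept for the defender only.
drop_log = []


def is_blackholed(address):
    """Return True if the address falls inside any black-holed network."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in BLACKHOLED_NETWORKS)


def handle_packet(src, dst, payload):
    """Return the packet to forward, or None if it is silently dropped."""
    if is_blackholed(src) or is_blackholed(dst):
        drop_log.append((src, dst, len(payload)))
        return None  # no error or rejection message goes back to the sender
    return (src, dst, payload)
```

The key design point is the absence of feedback: the function never raises or returns an error to the sender, so a probing host learns nothing from the exchange.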

DNS Sinkhole

The DNS sinkhole is a technique like DNS spoofing in that it provides a response to a DNS query that does not resolve to the actual IP address. The difference, however, is that DNS sinkholes target the addresses for known malicious domains, such as those associated with a botnet, and return an IP address that does not resolve correctly or that is defined by the administrator. Here’s a scenario of how this helps with securing a network: Suppose you receive a notice that a website, www.malware.evil, is serving malicious content and you confirm that several machines in your network are attempting to contact that server. You have no way to determine which computers are infected until they attempt to resolve that hostname, but if they are able to resolve it they may be able to connect to the malicious site and download additional tools that will make remediation harder. If you create a DNS sinkhole to resolve www.malware.evil to your special server at address 10.10.10.50, you can easily check your logs to determine which machines are infected. Any host that attempts to contact 10.10.10.50 is likely to be infected because there would otherwise be no reason to connect there. All the while, the attackers will not be able to further the compromise because they won’t receive feedback from the affected hosts.
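The scenario above can be sketched in a few lines. This is a simplified model rather than a real DNS server: the UPSTREAM_RECORDS dictionary is a hypothetical stand-in for normal resolution, and only the hostname and sinkhole address come from the example in the text.

```python
SINKHOLE_IP = "10.10.10.50"
SINKHOLED_DOMAINS = {"www.malware.evil"}

# Hypothetical stand-in for normal upstream resolution.
UPSTREAM_RECORDS = {"www.example.com": "192.0.2.10"}


def resolve(client_ip, hostname, query_log):
    """Answer a query, redirecting known-bad names to the sinkhole."""
    name = hostname.lower().rstrip(".")
    if name in SINKHOLED_DOMAINS:
        query_log.append((client_ip, name))  # remember who asked
        return SINKHOLE_IP
    return UPSTREAM_RECORDS.get(name)


def suspected_infected(query_log):
    """Return the unique clients that tried to resolve a sinkholed name."""
    return sorted({client for client, _ in query_log})
```

Reviewing the output of suspected_infected gives you the list of machines to investigate, while the malicious server never hears from them.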

Endpoint Security

Focusing on security at the network level isn’t always sufficient to prepare for an attacker. While we aim to mitigate the great majority of threats at the network level, the tradeoff between usability and security emerges. We want network-based protection to be able to inspect traffic thoroughly, but not at the expense of network speed. Though keeping an eye on the network is important, it’s impossible to see everything and respond quickly. Additionally, the target of malicious code is often the data that resides on the hosts. It doesn’t make sense to only strengthen the foundation of the network if the rest is left without a similar level of protection. It’s therefore just as important to ensure that the hosts are fortified to withstand attacks and provide an easy way to give insight into what processes are running.

Detect and Block

Two general types of malware detection for endpoint solutions appear in this category. The first, signature-based detection, compares hashes of files on the local machine to a list of known malicious files. Should there be a match, the endpoint software can quarantine the file and alert the administrator of its presence. Modern signature-based detection software is also capable of identifying families of malicious code.
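A minimal sketch of hash-based matching follows. It works on in-memory byte strings instead of files on disk to stay self-contained, and the "known bad" entry is a made-up placeholder, not a real malware signature.

```python
import hashlib


def sha256_of(data):
    """Return the SHA-256 digest of a byte string as hexadecimal text."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical signature list built from a placeholder payload.
KNOWN_BAD_HASHES = {sha256_of(b"pretend this is a malicious payload")}


def scan(files):
    """Return the names of files whose hash matches a known-bad signature.

    `files` maps a file name to its contents as bytes.
    """
    return [name for name, data in files.items()
            if sha256_of(data) in KNOWN_BAD_HASHES]
```

Note that changing even a single byte of the payload produces a completely different hash, so an exact-match signature like this one will miss any altered copy.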

But what happens when the malicious file is new to the environment and therefore doesn’t have a signature? This might be where behavior-based malware detection can help. It monitors system processes for telltale signs of malware, which it then compares to known behaviors to generate a decision on the file. Behavior-based detection has become important because malware writers often use polymorphic (constantly changing) code, which makes it very difficult to detect using signature methods only.
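One simple way to picture a behavior-based decision is as a score built from observed actions. The behaviors, weights, and threshold below are invented for illustration; commercial products rely on far richer models than this sketch suggests.

```python
# Hypothetical behaviors and weights; not taken from any real product.
SUSPICIOUS_BEHAVIORS = {
    "modifies_startup_entries": 3,
    "disables_security_tool": 5,
    "encrypts_many_files": 5,
    "contacts_unknown_host": 2,
}

# Assumed score at or above which a process is treated as malicious.
THRESHOLD = 6


def score(observed):
    """Sum the weights of the distinct known behaviors that were observed."""
    return sum(SUSPICIOUS_BEHAVIORS.get(event, 0) for event in set(observed))


def verdict(observed):
    """Return 'malicious' when the score reaches the threshold."""
    return "malicious" if score(observed) >= THRESHOLD else "unknown"
```

Because the decision depends on what the code does rather than what it looks like, a polymorphic sample that changes its bytes but still disables security tools and contacts unknown hosts would be scored the same way each time.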

There are limitations with both methods. False positives, files incorrectly identified as malware, can cause a range of problems. At best, they can be a nuisance, but if the detection software quarantines critical system files, the operating system may be rendered unusable. Scale this up to several hundred or thousand endpoints, and it becomes catastrophic for productivity.

Sandbox

Creating malware takes a lot of effort, so writers will frequently test their code against the most popular detection software to make sure that it can’t be seen before releasing it to the wild. Endpoint solutions have had to evolve their functionality to cover the blind spots of traditional detection by using a technique called sandboxing. Endpoint sandboxes can take the form of virtual machines that run on the host to provide a realistic but restricted operating system environment. As the file is executed, the sandbox is monitored for unusual behavior or system changes, and only after the file is verified as being safe might it be allowed to run on the host machine. Historically, sandboxes were used by researchers to understand how malware was executing and evolving, but given the increase in local computing power and the advances in virtualization, sandboxes have become mainstream. Some malware writers have taken note of these trends and have started producing malware that can detect whether it’s operating in a sandbox using built-in logic. In these cases, if the malware detects the sandbox, it will remain dormant to evade detection and become active at some later point. This highlights the unending battle between malware creators and the professionals who defend our networks.

Cloud-Connected Protection

Like virtualization, the widespread use of cloud computing has allowed for significant advancements in malware detection. Many modern endpoint solutions use cloud computing to enhance protection by providing the foundation for rapid file reputation determination and behavioral analysis. Cloud-based security platforms use automatic sharing of threat detail across the network to minimize the overall risk of infection from known and unknown threats. Were this to be done manually, it would take much more time for analysts to prepare, share, and update each zone separately.

Group Policies

The majority of enterprise devices rely on a directory service, such as Active Directory, to give them access to shared resources such as storage volumes, printers, and contact lists. In a Windows-based environment, Active Directory is implemented through the domain controller, which provides authentication and authorization services in addition to resource provision for all connected users. Additionally, the Active Directory service allows for remote administration using policies. Using group policies, administrators can force user machines to a baseline of settings. Features such as password complexity, account lockout, registry keys, and file access can all be prescribed through the group policy mechanism. Security settings for both machines and user accounts can be set at the local, domain, or network level using group policies.

Device Hardening

The application of the practices in this chapter is part of an effort to make the adversary work harder to discover what our network looks like, or to make it more likely for his efforts to be discovered. This practice of hardening the network is not static and requires constant monitoring of network and local resources. It’s critical that we take a close look at what we can do at the endpoint so that we are not relying only on detection of an attack. Hardening the endpoint requires careful thought about the balance of security and usability. We cannot make the system so unusable that no work can get done. Thankfully, there are some simple rules to follow. The first is that resources should only be accessed by those who need them to perform their duties. Administrators also need to have measures in place to address the risk associated with a requirement if they are not able to address the requirement directly. Next is that hosts should be configured with only the applications and services necessary for the role. Does the machine need to be running a web server, for example? Using policies, these settings can be standardized and pushed to all hosts on a domain. And finally, updates should be applied early and often. In addition to providing functional improvements, many updates come with patches for recently discovered flaws.

Discretionary Access Control (DAC)

Looking back on our discussion of file system ACLs, we saw that simple permissions were the predominant form of access control for many years. This schema is called discretionary access control, or DAC. It’s discretionary in the sense that the content owner or administrator can pass privileges on to anyone else at their discretion. Those recipients could then access the media based on their identity or group membership. The validation of access occurs at the resource, meaning that when the user attempts to access the file, the operating system will consult the file’s list of privileges and verify that the user can perform the operation. You can see this in action in all major operating systems today: Windows, Linux, and macOS.
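The discretionary part of DAC can be sketched as a resource whose owner decides who else gets access. The Resource class and its method names are hypothetical, and the model ignores groups and administrators to keep the example short.

```python
class Resource:
    """A file-like object whose owner hands out permissions at will."""

    def __init__(self, name, owner):
        self.name = name
        self.owner = owner
        # The owner starts with full access to the resource.
        self.permissions = {owner: {"read", "write"}}

    def grant(self, granter, grantee, operation):
        """Let the owner, and only the owner, pass a privilege to someone."""
        if granter != self.owner:
            raise PermissionError(f"{granter} does not own {self.name}")
        self.permissions.setdefault(grantee, set()).add(operation)

    def check(self, user, operation):
        """Validate access at the resource, as the operating system would."""
        return operation in self.permissions.get(user, set())
```

For example, if alice owns report.txt and grants bob read access, bob can read the file but cannot write to it or pass access along to anyone else.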

Mandatory Access Control (MAC)

For environments that require additional levels of scrutiny for data access, such as those in military or intelligence organizations, the mandatory access control (MAC) model is a better option than DAC. As the name implies, MAC requires explicit authorization for a given user on a given object. The MAC model has additional labels for multilevel security—Unclassified, Confidential, Secret, and Top Secret—that are applied to both the subject and object. When a user attempts to access a file, a comparison is made between the security labels on the file, called the classification level, and the security level of the subject, called the clearance level. Only users who have these labels in their own profiles can access files at the equivalent level, and verifying this “need to know” is the main strength of MAC. Additionally, the administrator can restrict further propagation of the resource, even from the content creator.
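The label comparison can be sketched as follows. This example assumes a subject may read any object classified at or below its clearance, which is a common reading rule but only one possible policy, and it leaves out need-to-know compartments entirely.

```python
from enum import IntEnum


class Level(IntEnum):
    """Security labels ordered from least to most sensitive."""
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def can_read(clearance, classification):
    """Compare the subject's clearance with the object's classification.

    Assumes reads are allowed at or below the subject's clearance level.
    """
    return clearance >= classification
```

Unlike the discretionary model, nothing here lets the file’s creator override the outcome; the decision rests entirely on labels assigned by the administrator.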

Role-Based Access Control (RBAC)

What if you have a tremendous number of files that you don’t want to manage on a case-by-case basis? Role-based access control (RBAC) allows you to grant permissions based on a user’s role, or group. The focus for RBAC is at the role level, where the administrators define what the role can do. Users are only able to do what their roles allow them to do, so there isn’t the need for an explicit denial to a resource for a given role. Ideally, there are a small number of roles in a system, regardless of total number of users, so the management becomes much easier. You may wonder what the difference is between groups in the DAC model and RBAC. The difference is slight in that permissions in DAC can be given to both a user and group, so there exists the possibility that a user can be denied, but the group to which he belongs may have access. With RBAC, every permission is applied to the role, or group, and never directly to the user, so it removes the possibility of the loophole previously described.
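A minimal sketch of the role-based model follows. The role names, permissions, and users are hypothetical; the point is that permissions attach only to roles and users reach them only through role membership.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "analyst": {"read_logs"},
    "admin": {"read_logs", "change_firewall"},
}

# Hypothetical users and the roles they hold.
USER_ROLES = {
    "alice": {"analyst"},
    "bob": {"analyst", "admin"},
}


def is_authorized(user, permission):
    """Return True if any of the user's roles carries the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )
```

There is no per-user permission table to fall out of step with the roles, so changing what analysts may do means editing a single entry regardless of how many analysts exist.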

Compensating Controls

Compensating controls are any means for organizations to achieve the goals of a security requirement even if they were unable to meet the goals explicitly due to some legitimate external constraint or internal conflict. An example would be a small business that processes credit card payments on its online store and is therefore subject to the Payment Card Industry Data Security Standard (PCI DSS). This company uses the same network for both sensitive financial operations and external web access. Although best practices would dictate that physically separate infrastructures be used, with perhaps an air gap between the two, this may not be feasible because of cost. A compensating control would be to introduce a switch capable of VLAN management and to enforce ACLs at the switch and router level. This alternative solution would “meet the intent and rigor of the original stated requirement,” as described in PCI DSS.

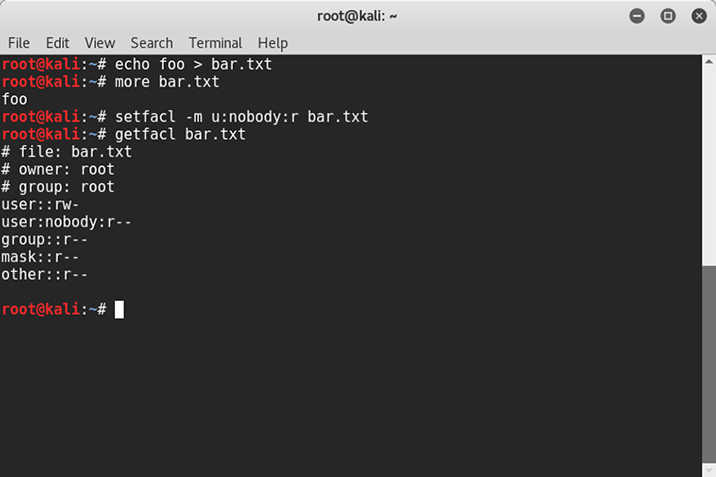

Blocking Unused Ports/Services

I’m always amazed at how many consumer products designed to be connected to a network leave unnecessary services running, sometimes with default credentials. Some of these services are shown in Figure 3-3. If you do not require a service, it should be disabled. Running unnecessary services not only means more avenues of approach for an adversary, it’s also more work to administer. Services that aren’t used are essentially wasted energy because the computers will still run the software, waiting for a connection that is not likely to happen by a legitimate user.

Figure 3-3 Listing of open ports on an unprotected Windows 8 machine
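A quick way to audit a host you administer is to compare the ports that answer against the ones the machine’s role requires. The sketch below assumes a hypothetical allowlist of required ports and uses a plain TCP connection attempt to test each candidate; it is not a substitute for a proper scanner.

```python
import socket

# Hypothetical ports this host is expected to expose for its role.
REQUIRED_PORTS = {22, 443}


def is_listening(host, port, timeout=0.5):
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


def unexpected_ports(host, candidates):
    """Return the candidate ports that are open but not required."""
    return sorted(
        port for port in candidates
        if port not in REQUIRED_PORTS and is_listening(host, port)
    )
```

Anything returned by unexpected_ports is a service to investigate and, if it is not needed, disable.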

Patching

Patching is a necessary evil for administrators. You don’t want to risk network outages due to newly introduced incompatibilities, but you also don’t need old software being exploited because of your reservations about patching. For many years, vendors tried to ease the stress of patching by regularly releasing their updates in fixed intervals. Major vendors such as Microsoft and Adobe got into a rhythm with issuing updates for their products. Admins could therefore make plans to test and push updates with some degree of certainty. Microsoft recently ended its practice of “Patch Tuesday,” however, in part due to this predictability. A downside of the practice emerged as attackers began reverse engineering the fixes as soon as they were released to determine the previously unknown vulnerabilities. Attackers knew that many administrators wouldn’t be able to patch all their machines before they figured out the vulnerability, and thus the moniker “Exploit Wednesday” emerged. Although humorous, it was a major drawback that convinced the company to instead focus on improving its automatic update features.

Network Access Control

In an effort to enforce security standards for endpoints beyond baselining and group policies, engineers developed the concept of Network Access Control (NAC). NAC provides deeper visibility into endpoints and allows policy enforcement checks before the device is allowed to connect to the network. NAC ties in features such as RBAC, verification of endpoint malware protection, and version checks to address a wide swath of security requirements. Some NAC solutions offer transparent remediation for noncompliant devices. In principle, this solution reduces the need for user intervention while streamlining security and management operations for administrators.

There are, however, a few concerns about NAC, both for user privacy and network performance. Some of NAC’s features, particularly the version checking and remediation, can require enormous resources. Imagine several hundred noncompliant nodes joining the network simultaneously and all getting their versions of Adobe Flash, Java, and Internet Explorer updated all at once. This often means the network takes a hit; plus, the users might not be able to use their machines while the updates are applied. Furthermore, NAC normally requires some type of agent to verify the status of the endpoint’s software and system configuration. This collected data can have major implications on user data privacy should it be misused.

As with all other solutions, we must take into consideration the implications to both the network and the user when developing policy for deployment of NAC solutions. The IEEE 802.1X standard was the de facto NAC standard for many years. While it supported some restrictions based on network policy, its utility diminished as networks became more complex. Furthermore, 802.1X solutions often delivered a binary decision on network access: either permit or deny. The increasing number of networks transitioning to support “bring your own device” (BYOD) required more flexibility in NAC solutions. Modern NAC solutions support several frameworks, each with its own restrictions, to ensure endpoint compliance when attempting to join the enterprise network. Administrators may choose from a variety of responses that modern NAC solutions provide in the case of a violation. Based on the severity of the incident, they may completely block the device’s access, quarantine it, generate an alert, or attempt to remediate the endpoint. We’ll look at some of the most commonly used solutions next.

Time Based

Does your guest network need to be active at 3 A.M.? If not, then a time-based network solution might be for you. Time-based solutions can provide network access for fixed intervals or recurring timeframes, and enforce time limits for guest access. Some more advanced devices can even assign different time policies for different groups.
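A recurring time window is easy to express in code. The sketch below is a simplified check under the assumption that a policy is a daily start and end time; the function name is hypothetical, and it handles windows that cross midnight.

```python
from datetime import time


def access_allowed(now, start, end):
    """Return True if `now` falls inside the daily window [start, end).

    A window whose start is later than its end is treated as spanning
    midnight, for example 22:00 to 06:00.
    """
    if start <= end:
        return start <= now < end
    return now >= start or now < end
```

With a guest window of 08:00 to 18:00, a connection attempt at 3 A.M. is refused, while an overnight maintenance window of 22:00 to 06:00 would accept it.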

Rule Based

NAC solutions will query the host to verify operating system version, the version of security software, the presence of prohibited data or applications, or any other criteria as defined by the list of rules. These rules may also include hardware configurations, such as the presence of unauthorized storage devices. Often they can share this information back into the network to inform changes to other devices. Additionally, many NAC solutions are also capable of operating in a passive mode, running only as a monitor and reporting violations when they occur.
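A rule-based posture check can be sketched as a list of tests applied to what the endpoint reports, followed by a response decision. Every policy value, field name, and the mapping from violations to responses below is hypothetical and would be set by the administrator in a real deployment.

```python
def parse_version(text):
    """Turn a dotted version string such as '2.5.0' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical policy values for illustration.
POLICY = {
    "min_os_version": "2.5.0",
    "min_agent_version": "7.1",
    "prohibited_apps": {"p2p-client"},
}


def violations(endpoint):
    """Return the list of rules the reported endpoint state breaks."""
    found = []
    if parse_version(endpoint["os_version"]) < parse_version(POLICY["min_os_version"]):
        found.append("os_outdated")
    if parse_version(endpoint["agent_version"]) < parse_version(POLICY["min_agent_version"]):
        found.append("security_agent_outdated")
    if POLICY["prohibited_apps"] & set(endpoint["installed_apps"]):
        found.append("prohibited_app")
    if endpoint.get("unauthorized_storage", False):
        found.append("unauthorized_storage")
    return found


def decide(found):
    """Map violations to a response; the severity mapping is an assumption."""
    if not found:
        return "allow"
    if "prohibited_app" in found or "unauthorized_storage" in found:
        return "block"
    return "quarantine"  # outdated software can be remediated in isolation
```

Running in a passive mode would amount to calling violations and logging the result without acting on the output of decide.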

Role Based

In smaller networks, limiting the interaction between nodes manually is a manageable exercise, but as the network size increases, this becomes exponentially more difficult due in part to the variety of endpoint configurations that may exist. Using RBAC, NAC solutions can assist in limiting the interaction between nodes to prevent unauthorized data disclosure, either accidental or intentional. As discussed, RBAC provides users with a set of authorized actions necessary to fulfill their role in the organization. NAC may reference the existing RBAC policies using whatever directory services are in use across the network and enforce them accordingly. This helps with data loss prevention (DLP) because the process of locating sensitive information across various parts of the network becomes much faster. NAC therefore can serve as a DLP solution, even when data has left the network, because it can either verify the presence of host-based DLP tools or conduct the verification itself using RBAC integration.

Location Based

Along with the surge in BYOD in networks, an increasing number of employees are working away from the network infrastructure, relying on virtual private network (VPN) software or cloud services to gain access to company resources. NAC can consider device location when making its decision on access, providing the two main benefits of identity verification and more accurate asset tracking.

Chapter Review

Proactively preparing against intrusion is a critical step in ensuring that your network is ready to respond to the unlikely event of a successful attack. By appropriately identifying critical assets on the network and segmenting them into subordinate zones, you can limit damage by using sensible network architecture. By smartly managing permissions and access control, you can be sure that only those parties who need to access data, even from within, can do so. Your policy should be so comprehensive as to include complementary or even redundant controls. In cases where certain technical means are not possible due to budget or other restrictions, these alternate controls should be put into place to address the affected areas. While you put attention into making sure the whole network is healthy and resilient, it’s also important to include endpoint and server hardening in the calculation. Turning off unnecessary services and keeping systems and software up to date are vital tasks in improving their security baseline.

What’s more, prevention is not enough as a part of your security strategy. Your security team must assess the network and understand where deception tools can add value. As a strategy used for years in war, deception was employed to make an adversary spend time and resources in ultimately futile efforts, thus providing an advantage to the deceiver. Modern deception techniques for cybersecurity supplement the tools actively monitoring for known attack patterns. They aim to lure attackers away from production resources using network decoys and to observe them to gain insight into their latest tactics, techniques, and procedures.

Enterprises are increasingly using mobile, cloud, and virtualization technologies to improve products and offer employees increases in speed and access to their resources; therefore, the definition of the perimeter changes daily. As the perimeter becomes more porous and blurred, adversaries have taken advantage and used the same access to gain illegal entry into systems. We must take steps to adjust to this new paradigm and prepare our systems to address these and future changes. The solutions discussed in the chapter provide the technical means to prepare for and respond to network-based threats.

Our discipline of information security requires a strong understanding of the benefits and limitations of technology, but it also relies on the skillful balance between security and usability. The more we understand the goals of our information systems and the risk associated with each piece of technology, the better off we will be pursuing that balance.

Questions

1. Which of the following is the correct term for a network device designed to deflect attempts to compromise the security of an information system?

A. ACL

B. VLAN

C. Jump box

D. Honeypot

2. Network Access Control (NAC) can be implemented using all of the following parameters except which one?

A. Domain

B. Time

C. Role

D. Location

3. You are reviewing the access control list (ACL) rules on your edge router. Which of the following ports should normally not be allowed outbound through the device?

A. UDP 53

B. TCP 23

C. TCP 80

D. TCP 25

4. What is the likeliest use for a sinkhole?

A. To protect legitimate traffic from eavesdropping

B. To prevent malware from contacting command-and-control systems

C. To provide ICMP messages to the traffic source

D. To direct suspicious traffic toward production systems

5. Which of the following is not a technique normally used to segregate network traffic in order to thwart the efforts of threat actors?

A. Micro-segmentation

B. Virtual LANs

C. Jump server

D. NetFlow

6. Which of the following terms is used for an access control mechanism whose employment is deliberately optional?

A. DAC

B. MAC

C. RBAC

D. EBAC

Refer to the following illustration for Questions 7–9:

7. Where would be the best location for a honeypot?

A. Circle 2

B. Circle 4

C. Either circle 2 or 5

D. None of the above

8. Which would be the best location at which to use a sandbox?

A. Any of the five circled locations

B. Circle 3

C. Circles 4 and 5

D. Circles 1 and 2

9. Where would you expect to find access control lists being used?

A. Circles 1 and 2

B. Circle 3

C. All the above

D. None of the above

Use the following scenario to answer Questions 10–11:

Your industry sector is facing a wave of intrusions by an overseas crime syndicate. Their approach is to persuade end users to click a link that will exploit their browsers or, failing that, will prompt them to download and install an “update” to their Flash Player. Once they compromise a host, they establish contact with the command-and-control (C2) system using DNS to resolve the ever-changing IP addresses of the C2 nodes. You are part of your sector’s Information Sharing and Analysis Center (ISAC), which gives you updated access to the list of domain names. Your company’s culture is very progressive, so you cannot take draconian measures to secure your systems, lest you incur the wrath of your young CEO.

10. You realize that your first priority should be preventing the infection from occurring at all. Which of the following approaches would best allow you to protect the user workstations?

A. Using VLANs to segment your network

B. Deploying a honeypot

C. Using sandboxing to provide transparent endpoint security

D. Implementing MAC so users cannot install software downloaded from the Internet

11. How can you best prevent compromised hosts from connecting to their C2 nodes?

A. Force all your network devices to resolve names using only your own DNS server.

B. Deploy a honeypot to attract traffic from the C2 nodes.

C. Implement a DNS sinkhole using the domain names provided by the ISAC.

D. Resolve the domain names provided by the ISAC and implement an ACL on your firewall that prevents connections to those IP addresses.

12. You start getting reports of successful intrusions in your network. Which technique lets you contain the damage until you can remediate the infected hosts?

A. Instruct the users to refrain from using their web browser.

B. Add the infected host to its own isolated VLAN.

C. Deploy a jump box.

D. Install a sandbox on the affected host.

Answers

1. D. Honeypots are fake systems developed to lure threat actors to them, effectively deflecting their attacks. Once the actors start interacting with the honeypot, it may slow them down and allow defenders to either study their techniques or otherwise prevent them from attacking real systems.

2. A. Network Access Control (NAC) uses time, rules, roles, or location to determine whether a device should be allowed on the network. The domain to which the device claims to belong is not used as a parameter to make the access decision.

3. B. TCP port 23 is assigned to telnet, which is an inherently insecure protocol for logging in to remote devices. Even if you allow telnet within your organization, you would almost certainly want to encapsulate it in a secure connection. The other three ports are almost always allowed outbound through a firewall, because DNS (UDP 53), HTTP (TCP 80), and SMTP (TCP 25) are typically required by every organization.

4. B. Sinkholes are most commonly used to divert traffic away from production systems without notifying the source (that is, without sending ICMP messages to it). They do not provide protection from eavesdropping and, quite the opposite, would facilitate analysis of the packets by network defenders. A very common application of sinkholes is to prevent malware from using DNS to resolve the names of command-and-control nodes.

5. D. NetFlow is a system designed to provide statistics on network traffic. Micro-segmentation, virtual LANs, and jump servers (or jump boxes) all provide ways to isolate or segregate network traffic.

6. A. The discretionary access control (DAC) model requires no enforcement by design. The other listed approaches are either mandatory (MAC) or agnostic to enforcement (RBAC and EBAC).

7. B. Honeypots, sinkholes, and black holes should all be deployed as far from production systems as possible. Therefore, the unlabeled server on the DMZ would be the best option.

8. D. Sandboxes are typically used at endpoints when executing code that is not known to be benign. Circles 1 and 2 are end-user workstations, which is where we would normally deploy sandboxes because the users are prone to run code from unknown sources. Because anything running on a router or server should be carefully vetted and approved beforehand, circles 3 and 5 are not where we would normally expect to deploy sandboxes. Circle 4 might be a possible location if it were designated solely for that purpose, but it was bundled with the data server at circle 5, which makes it less than ideal.

9. C. Access control lists (ACLs) can be found almost anywhere on a network. Endpoints use them to control which users can read, modify, or execute files, while routers can also use them to control the flow of packets across their interfaces.

10. C. Using sandboxes helps protect the endpoints with minimal impact to the users. It would be ideal to prevent them from installing malware, but the organizational culture in the scenario makes that infeasible (for now).

11. C. More often than not, malware comes loaded with hostnames and not IP addresses for its C2 nodes. The reason is that a hostname can be mapped to multiple IP addresses over time, making the job of blocking them harder. The DNS sinkhole will resolve all hostnames in a given list of domains to a dead end that simply logs the attempts and alerts the cybersecurity analyst to the infected host. Blocking IPs will not work as well, because those addresses will probably change often.

12. B. An extreme form of network segmentation can be used to keep infected hosts connected to the network but unable to communicate with anyone but forensic or remediation devices.
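
The DNS sinkhole behavior described in answer 11 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production resolver: the class name DnsSinkhole, the dead-end address 10.0.0.53, the domain evil-c2.example, and the upstream callback are all hypothetical stand-ins for whatever your DNS server and ISAC feed actually provide. The sketch shows only the decision logic: a query for a listed domain (or any subdomain of it) is answered with the dead-end address and logged along with the requesting host, while every other query is passed to the normal resolver.

```python
from typing import Callable, List, Set, Tuple

# Hypothetical internal dead-end address; nothing useful listens here.
SINKHOLE_IP = "10.0.0.53"


def normalize(name: str) -> str:
    """Lowercase a DNS name and drop any trailing root dot."""
    return name.strip().rstrip(".").lower()


class DnsSinkhole:
    """Toy model of the sinkhole decision, not a real DNS server."""

    def __init__(self, blocked_domains: Set[str], sinkhole_ip: str = SINKHOLE_IP):
        self.blocked_domains = {normalize(d) for d in blocked_domains}
        self.sinkhole_ip = sinkhole_ip
        # Each hit records (client address, queried name) for the analyst.
        self.hits: List[Tuple[str, str]] = []

    def is_blocked(self, qname: str) -> bool:
        """True if the name or any of its parent domains is on the list."""
        labels = normalize(qname).split(".")
        return any(
            ".".join(labels[i:]) in self.blocked_domains
            for i in range(len(labels))
        )

    def resolve(self, client_ip: str, qname: str,
                upstream: Callable[[str], str]) -> str:
        """Answer listed names with the dead end; forward everything else."""
        if self.is_blocked(qname):
            self.hits.append((client_ip, normalize(qname)))
            return self.sinkhole_ip
        return upstream(qname)


def example_upstream(qname: str) -> str:
    """Stand-in for the real resolver; always returns a documentation address."""
    return "192.0.2.10"


sinkhole = DnsSinkhole({"evil-c2.example"})
blocked_answer = sinkhole.resolve("192.168.1.20", "node7.evil-c2.example.", example_upstream)
normal_answer = sinkhole.resolve("192.168.1.21", "www.example.com", example_upstream)
```

Because the match is made on the domain name rather than on an address, the list stays effective even as the C2 operators rotate IP addresses, and the recorded hits identify which internal hosts are likely infected.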