CHAPTER 13

Putting in Compensating Controls

In this chapter you will learn:

• Best practices for security analytics using automated methods

• Techniques for basic manual analysis

• Applying the concept of “defense in depth” across the network

• Processes to continually improve your security operations

Needle in a haystack’s easy. Just bring a magnet.

—Keith R.A. DeCandido

Security Data Analytics

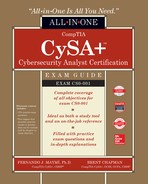

Modern corporate networks are incredibly diverse environments, and some generate gigabytes of logging and event data per day. Scripting techniques and early monitoring utilities are quickly approaching the end of their usefulness because the variety and volume of data now exceed what they were designed to handle. Managing information about your network environment requires a sound strategy and tactical tools for refining data into information, then into knowledge, and finally into actionable wisdom. Figure 13-1 shows the relationship between what your tools provide at the tactical level and your goal of actionable intelligence. Data and information sources on your network are at least as numerous as the devices on the network. Log data comes from network routers and switches, firewalls, vulnerability scanners, IPS/IDS, unified threat management (UTM) systems, and mobile device management (MDM) providers. Additionally, each node may provide its own structured or unstructured data from services it provides. It’s the goal of security data analytics to see through the noise of all this network data to produce an accurate picture of the network activity, from which we make decisions in the best interest of our organizations.

Figure 13-1 Relationship among the various levels of data, information, knowledge, and wisdom

Data Aggregation and Correlation

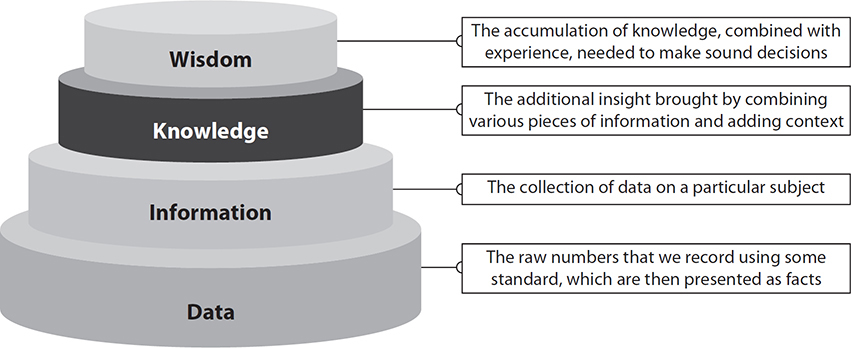

The process of collecting the correct data to inform business decisions can lead to frustration, particularly if the sources are heterogeneous. After all, data ought to be a benefit rather than an impediment to your security team. To understand why data organization is so critical to security operations, we must remember that no single source of data is going to provide what’s necessary to understand an incident. When detectives investigate a crime, for example, they take input from all manner of sources to get the most complete picture possible. The video, eyewitness accounts, and forensics that they collect all play a part in the analysis of the physical event. But before a detective can begin analysis on what happened, the clues must be collected, tagged, ordered, and displayed in a way that is useful for analysis. This practice, called data aggregation, will allow your team to easily compare similar data types, regardless of source. The first step in this process usually involves a log manager collecting and normalizing data from sources across the network. With the data consolidated and stored, it can then be placed on a timeline for easy search and review. Figure 13-2 shows a security information and event management (SIEM) dashboard that displays the security events collected over a fixed period of time. This particular SIEM is based on Elasticsearch, Logstash, and Kibana, collectively called the ELK stack. The ELK stack is a popular solution for security analysts who need large-volume data collection, a log parsing engine, and search functions. From the total number of raw logs (over 3000 in this case), the ELK stack generates a customizable interface with sorted data and provides color-coded charts for each type.

Figure 13-2 SIEM dashboard showing aggregated event data from various network sources

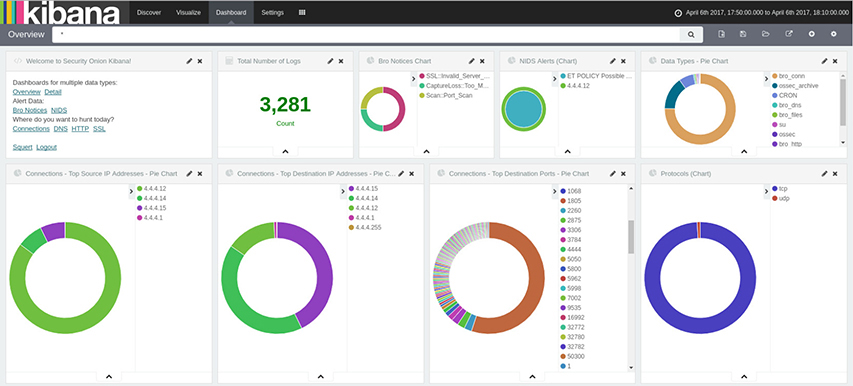

From these charts, we can see the most commonly used protocols and most talkative clients at a glance. Unusual activity is also very easy to identify. Take a look at the “Top Destination Ports” chart shown in Figure 13-3. Given a timeframe of only a few minutes, is there any good reason why one client attempts to contact another over so many ports? Without diving deeply into the raw data, you can see that there is almost certainly scanning activity occurring here.

Figure 13-3 SIEM dashboard chart showing all destination ports for the traffic data collected

Many SIEM solutions offer the ability to craft correlation rules to derive more meaningful information from observed patterns across different sources. For example, if you observe traffic to UDP or TCP port 53 that is not directed to an approved DNS server, this might be evidence of a rogue DNS server present in your network. You are taking observations from two or more sources to inform a decision about which activities to investigate further.
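The rogue DNS example can be sketched in a few lines of Python. This is a minimal illustration of the logic, not the rule syntax of any particular SIEM. The flow-record fields (src, dst, dport), the APPROVED_DNS set, and every address in it are assumptions made for the example.

```python
# Hypothetical allow-list of sanctioned DNS resolvers (assumed values).
APPROVED_DNS = {"10.0.0.53", "10.0.1.53"}

def find_rogue_dns(flows, approved=APPROVED_DNS):
    """Return flows to port 53 whose destination is not an approved resolver."""
    return [f for f in flows if f["dport"] == 53 and f["dst"] not in approved]

# Hypothetical, already-normalized flow records from a log manager.
flows = [
    {"src": "10.0.5.20", "dst": "10.0.0.53", "proto": "udp", "dport": 53},
    {"src": "10.0.5.21", "dst": "10.0.7.99", "proto": "udp", "dport": 53},
    {"src": "10.0.5.22", "dst": "10.0.7.99", "proto": "tcp", "dport": 443},
]

suspects = find_rogue_dns(flows)
for f in suspects:
    print(f"Investigate: {f['src']} queried unapproved DNS server {f['dst']}")
```

The rule combines two sources of knowledge: the observed traffic and the list of approved servers. Neither is conclusive on its own.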

Trend Analysis

Like the vulnerability scanners discussed in Chapter 6, many security analytics tools provide built-in trend analysis functionality. Determining how the network changes over time is important in assessing whether countermeasures and compensating controls are effective. Many SIEMs can display source data in a time-series, which is a method of plotting data points in time order. Indexing these points in a successive manner makes it much easier to detect anomalies because you can roughly compare any single point to all other values. With a sufficient baseline, it’s easy to spot new events and unusual download activity.
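To make the idea of comparing a point against a baseline concrete, here is a minimal sketch that flags values far from the baseline mean. The standard-deviation test, the threshold of 3, and the sample download volumes are assumptions chosen for illustration. A production SIEM will typically use more robust methods.

```python
from statistics import mean, stdev

def find_anomalies(series, baseline_len, threshold=3.0):
    """Flag points after the baseline that lie more than `threshold`
    standard deviations away from the baseline mean."""
    baseline = series[:baseline_len]
    mu, sigma = mean(baseline), stdev(baseline)
    anomalies = []
    for i, value in enumerate(series[baseline_len:], start=baseline_len):
        if sigma > 0 and abs(value - mu) / sigma > threshold:
            anomalies.append((i, value))
    return anomalies

# Hypothetical hourly download volume in MB; the final value is a spike.
hourly_mb = [120, 115, 130, 125, 118, 122, 127, 119, 124, 900]
anomalies = find_anomalies(hourly_mb, baseline_len=9)
print(anomalies)
```

The longer and more representative the baseline, the fewer false alarms a check like this is likely to raise.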

We introduced trend analysis in Chapter 2, and discussed how trends could be internal, temporal, or spatial (among others). Back then, we were focused on its use in the context of threat management. Here, we apply it in determining the right controls within our architectures to mitigate those threats. The goal in both cases, however, remains unchanged: we want to answer the question, “Given what we’ve been seeing in the past, what should we expect to see in the future?” When we talk about trend analysis, we are typically interested in predictive analytics.

Historical Analysis

Whereas trend analysis tends to be forward-looking, historical analysis focuses on the past. It can help answer a number of questions, including “have we seen this before?” and “what is normal behavior for this host?” This kind of analysis provides a reference point (or a line or a curve) against which we can compare other data points.

Historical data analysis is the practice of observing network behavior over a given period. The goal is to refine the network baseline by implementing changes based on observed trends. Through detailed examination of an attacker’s past behavior, analysts can gain perspective on the tactics, techniques, and procedures (TTPs) of an attacker to inform decisions about defensive measures. The information obtained over the course of the process may prove useful in developing a viable defense plan, improving network efficiency, and actively thwarting adversarial behavior. Although it’s useful to have a large body of data from which to build a predictive model, there is one inherent weakness to this method: the unpredictability of humans. Models are not a certainty because it’s impossible to predict the future. Using information gathered on past performance means making a large assumption that the behavior will continue in a similar way moving forward. Security analysts therefore must consider present context when using historical data to forecast attacker behavior. A simple and obvious example is a threat actor who uses a certain technique to great success until a countermeasure is developed. Up until that point, the model was highly accurate, but with the hole now discovered and patched, the actor is likely to move on to something new, making your model less useful.

Manual Review

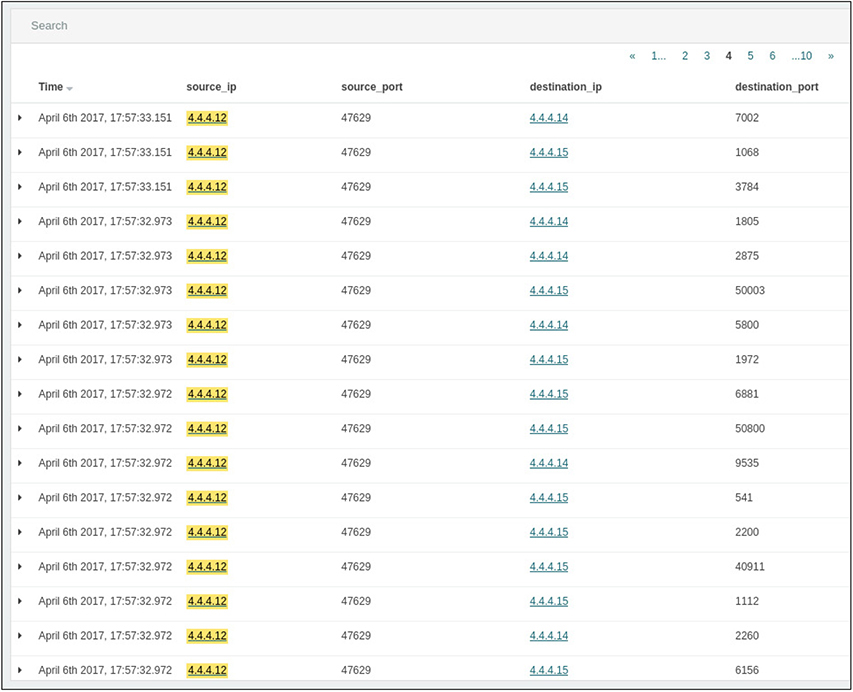

Although it’s tempting to believe that machines can do it all, at the end of the day a security team’s success will be defined by how well its human analysts can piece together the story of an incident. Automated security data analytics might take care of the bulk noise, but the real money is made by the analysts. Let’s look back at our previous examples of network scanning to explore how an analyst might quickly piece together what happened during a suspected incident. Figure 13-4 gives a detailed list of the discrete data points used for the previous graph. We can see that in under a second, the device located at IP address 4.4.4.12 sent numerous probes to two devices on various ports, indicative of a network scan.

Figure 13-4 SIEM list view of all traffic originating from a single host during a network scan
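The pattern an analyst spots by eye in this list, one source touching many ports on the same destination, can also be checked programmatically. The sketch below assumes events have already been parsed into dictionaries with src, dst, and dport keys. The threshold of 10 distinct ports, the synthetic events, and the 4.4.4.20 address are illustrative assumptions, not data taken from the figure.

```python
from collections import defaultdict

def detect_port_scans(events, min_ports=10):
    """Group events by (source, destination) and report the pairs where
    the source touched at least `min_ports` distinct destination ports."""
    ports_seen = defaultdict(set)
    for e in events:
        ports_seen[(e["src"], e["dst"])].add(e["dport"])
    return {pair: len(ports) for pair, ports in ports_seen.items()
            if len(ports) >= min_ports}

# Synthetic events: one host probes ports 1-50, another only browses.
events = [{"src": "4.4.4.12", "dst": "4.4.4.15", "dport": p}
          for p in range(1, 51)]
events += [{"src": "4.4.4.20", "dst": "4.4.4.15", "dport": 80}] * 5

scans = detect_port_scans(events)
print(scans)
```

A real rule would also bound the time window, since the same port count spread over a week means something very different from the same count in under a second.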

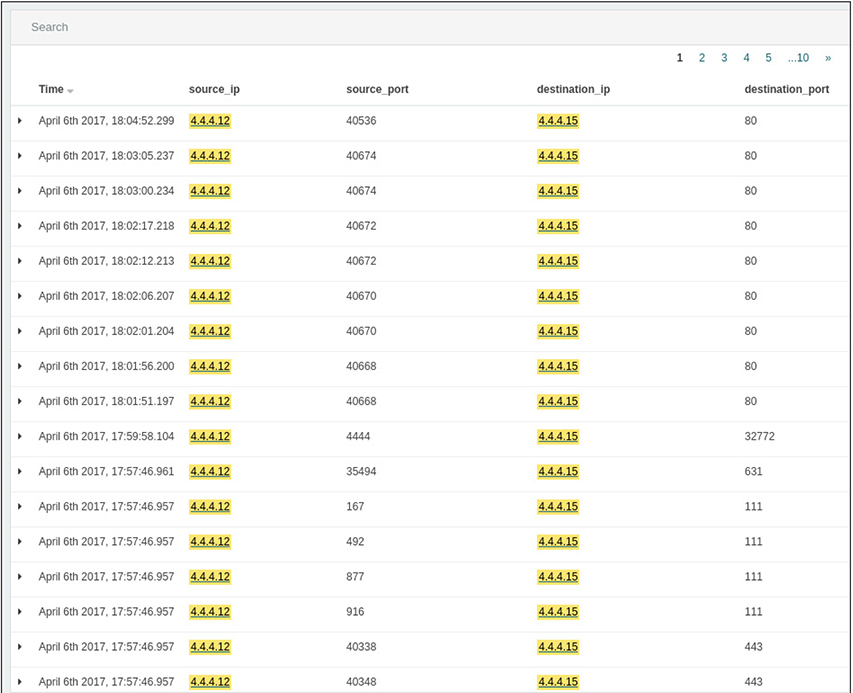

In addition to source, destination, and port information, each exchange is assigned a unique identifier in this system. After the scan is complete a few minutes later, we can see that the device located at 4.4.4.12 establishes several connections over port 80 to a device with the 4.4.4.15 IP address, as shown in Figure 13-5. It’s probably safe to assume that it’s standard HTTP traffic, but it would be great if we were able to take a look. It’s not unheard of for attackers to use well-known ports to hide their traffic.

Figure 13-5 Listing of HTTP exchange between 4.4.4.12 and 4.4.4.15 after scan completion

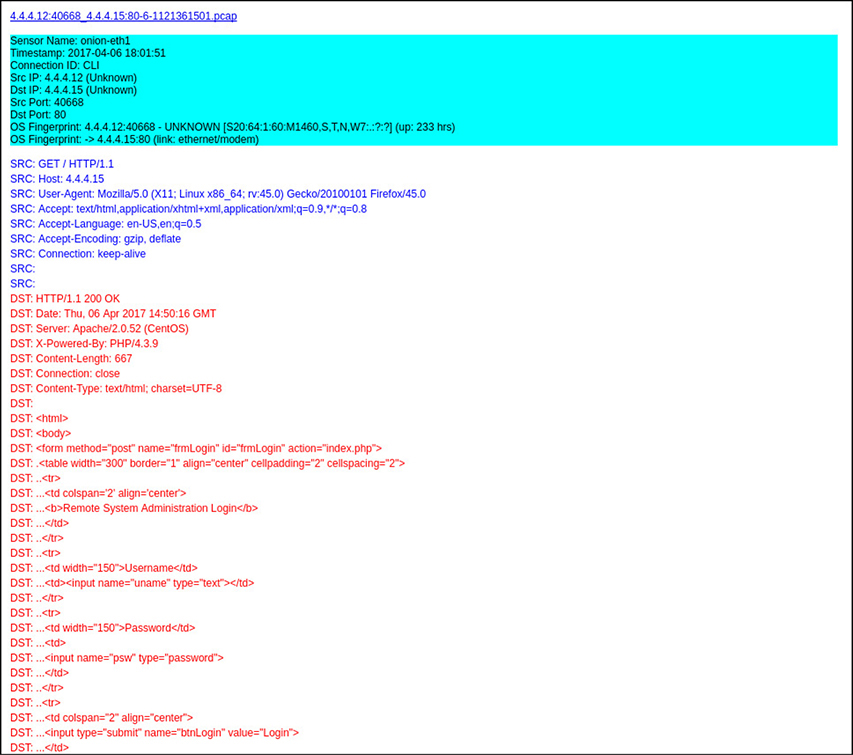

This SIEM allows us to get more information about what happened during that time by linking directly to the packet capture of the exchange. The capture of the first exchange in that series shows a successful request of an HTML page. As we review the details in Figure 13-6, it appears to be the login page for an administrative portal.

Figure 13-6 Packet capture details of first HTTP exchange between 4.4.4.12 and 4.4.4.15

Looking at the very next capture in Figure 13-7, we see evidence of a login bypass using SQL injection. The attacker entered Administrator' or 1=1 # as the username, indicated by the text in the uname field. When a user enters a username and password, a SQL query is created based on the input from the user. In this injection, the username is populated with a string that, when placed in the SQL query, forms an alternate SQL statement that the server will execute. This gets interpreted by the SQL server as follows:

SELECT * FROM users WHERE name='Administrator' or 1=1 #' and password='boguspassword'

Figure 13-7 Packet capture details of a second HTTP exchange between 4.4.4.12 and 4.4.4.15 showing evidence of a SQL injection

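In databases such as MySQL, the # character starts a comment, so everything after it, including the password check, is ignored. The remaining condition contains 1=1, which is always true, so the query returns a row without the real password ever being verified, and the server grants the user access. You can see the note “Welcome to the Basic Administrative Web Console” in the same figure, showing that the attacker has gained access.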
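The sketch below reproduces the bypass against an in-memory SQLite database and shows one common control, a parameterized query. It is an illustration under stated assumptions, not a reconstruction of the application in the capture. The table layout, the stored password, and the function names are hypothetical. SQLite uses -- rather than # to begin a comment, so the payload is adjusted accordingly.

```python
import sqlite3

def build_db():
    """Create a throwaway in-memory database with one hypothetical account."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('Administrator', 's3cret')")
    return conn

def login_vulnerable(conn, uname, password):
    # User input is concatenated directly into the SQL text (unsafe).
    query = ("SELECT * FROM users WHERE name='" + uname +
             "' and password='" + password + "'")
    return conn.execute(query).fetchone() is not None

def login_parameterized(conn, uname, password):
    # Placeholders keep user input as data, never as SQL syntax.
    query = "SELECT * FROM users WHERE name=? AND password=?"
    return conn.execute(query, (uname, password)).fetchone() is not None

conn = build_db()
payload = "Administrator' or 1=1 --"  # SQLite comment marker instead of #

bypassed = login_vulnerable(conn, payload, "boguspassword")
blocked = login_parameterized(conn, payload, "boguspassword")
print("vulnerable login succeeded:", bypassed)
print("parameterized login succeeded:", blocked)
```

With the parameterized version, the whole payload is compared against the name column as a literal string, so no row matches.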

Note that just because the attacker now has access to a protected area of the web server, this doesn’t mean he has full access to the network. Nevertheless, this behavior is clearly malicious, and it’s a lead that should be followed to the end. In the following subsections, we discuss how the approach to manual review we just presented using an SIEM and packet captures can be extended to other sources of information.

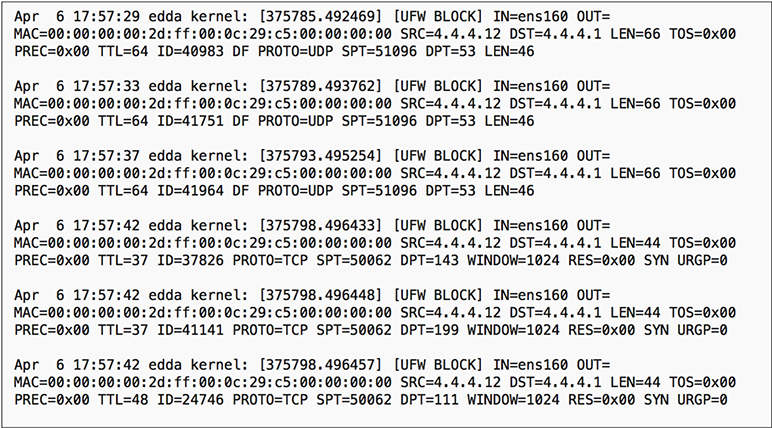

Firewall Log

Firewalls have served as the primary perimeter defense mechanism for networks large and small for many decades. Before the era of next-generation security appliances and advanced endpoint protection, firewall logs were often the primary source for information about malicious activity on the network. Figure 13-8 is a snippet of the logging data from the Uncomplicated Firewall (ufw), the default iptables firewall configuration tool for the Ubuntu operating system. Note the series of block actions against 4.4.4.12.

Figure 13-8 Selection of entries from a Linux firewall log indicating a series of blocked traffic

We can see in each entry a listing of pertinent details about the action, including time, source IP, and port number. When we compare this data with the information provided in Figure 13-3, we can see how the visual presentation might appeal more to an analyst, particularly when dealing with very large volumes of traffic.
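When no dashboard is available, a short script can pull the same details out of raw firewall entries. The sketch below assumes the key=value layout that ufw writes through the kernel log. The sample lines are abbreviated and invented for illustration (hostname, timestamp, and ports are hypothetical), so adjust the patterns to match your own logs.

```python
import re
from collections import Counter

ACTION_RE = re.compile(r"\[UFW (?P<action>[A-Z ]+)\]")
FIELD_RE = re.compile(r"(\w+)=(\S*)")

def parse_ufw_line(line):
    """Return a dict of KEY=value fields plus ACTION, or None if the
    line does not look like a ufw entry."""
    match = ACTION_RE.search(line)
    if match is None:
        return None
    fields = dict(FIELD_RE.findall(line))
    fields["ACTION"] = match.group("action")
    return fields

# Abbreviated, illustrative log lines (not copied from Figure 13-8).
TEMPLATE = ("Feb  4 23:33:37 web01 kernel: [ 3529.289825] [UFW BLOCK] "
            "IN=eth0 OUT= SRC=4.4.4.12 DST=4.4.4.15 LEN=44 TTL=64 "
            "PROTO=TCP SPT=51234 DPT={port}")
log_lines = [TEMPLATE.format(port=p) for p in (22, 23, 80)]

parsed = [p for p in map(parse_ufw_line, log_lines) if p]
blocked = Counter(p["SRC"] for p in parsed if p["ACTION"] == "BLOCK")
print(blocked.most_common(5))
```

Counting blocked entries per source address gives a rough text-only equivalent of the "most talkative clients" chart.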

Syslog

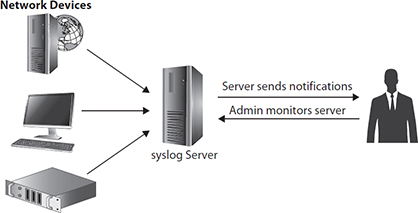

Syslog is a messaging protocol developed at the University of California, Berkeley, to standardize system event reporting. Syslog has become a standard reporting system used by operating systems and includes alerts related to security, applications, and the OS. The local syslog process in UNIX and Linux environments, called syslogd, collects messages generated by the device and stores them locally on the file system. This includes embedded systems found in routers, switches, and firewalls, which use variants and derivatives of the UNIX system. There is, however, no preinstalled syslog agent in the Windows environment. Syslog is a great way to consolidate logging data from a single machine, but the log files can also be sent to a centralized server for aggregation and analysis. Figure 13-9 shows the typical structure of the syslog hierarchy.

Figure 13-9 Typical hierarchy for syslog messaging

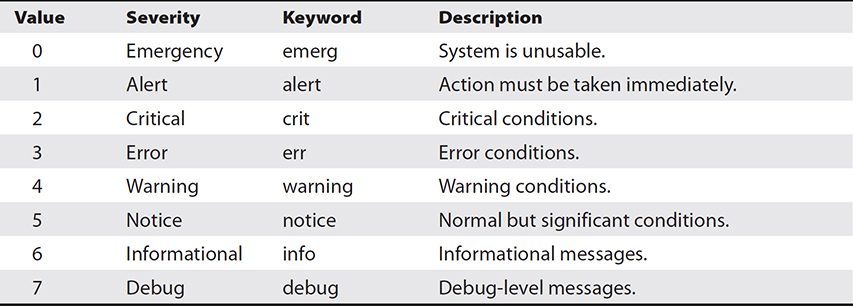

The syslog server will gather syslog data sent over UDP port 514 (or TCP port 514, in the case that message delivery needs to be guaranteed). Analysis of aggregated syslog data is critical for security auditing because the activities that an attacker will conduct on a system are bound to be reported by the syslog utility. These clues can be used to reconstruct the scene and perform remedial actions on the system. Each syslog message includes a facility code and severity level. The facility code gives information about the originating source of the message, whereas the severity code indicates the level of severity associated with the message. Table 13-1 is a list of the severity codes as defined by RFC 5424.

Table 13-1 Syslog Severity Codes, Keywords, and Descriptions

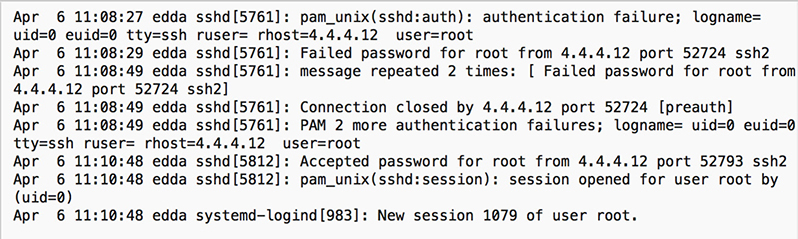

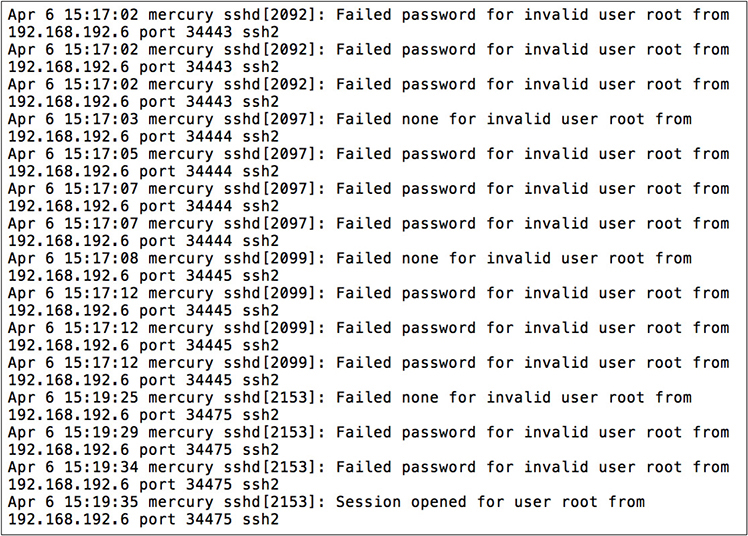
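RFC 5424 carries the facility and severity together in a single priority value at the start of each message, calculated as the facility code multiplied by 8 plus the severity code. The following sketch decodes that value. The function name and the sample message are illustrative assumptions.

```python
# Severity keywords indexed by numerical code, per RFC 5424.
SEVERITIES = ["Emergency", "Alert", "Critical", "Error",
              "Warning", "Notice", "Informational", "Debug"]

def decode_pri(message):
    """Return (facility, severity, keyword) from a message that starts
    with a <PRI> field, where PRI = facility * 8 + severity."""
    if not message.startswith("<"):
        raise ValueError("missing PRI field")
    end = message.index(">")
    pri = int(message[1:end])
    facility, severity = divmod(pri, 8)
    return facility, severity, SEVERITIES[severity]

# Illustrative message: PRI 34 is facility 4 (security/authorization)
# with severity 2 (Critical).
sample = "<34>1 2024-01-01T00:00:00Z host01 su - - - 'su root' failed"
decoded = decode_pri(sample)
print(decoded)
```

Filtering aggregated syslog data on the decoded severity is a quick way to surface the most urgent messages first.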

Authentication Logs

Auditing and analysis of login events is critical for a successful incident investigation. All modern operating systems have a way to log successful and unsuccessful attempts. Figure 13-10 shows the contents of the auth.log file indicating all enabled logging activity on a Linux server. Although it might be tempting to focus on the failed attempts, you should also pay attention to the successful logins, especially in relation to those failed attempts. The chance that the person logging in from 4.4.4.12 is an administrator who made a mistake the first couple of times is reasonable. However, when you combine this information with the knowledge that this device has just recently performed a suspicious network scan, the likelihood that this is an innocent mistake goes way down.

Figure 13-10 Snapshot of the auth.log entry in a Linux system
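The relationship between failed and successful logins can be checked with a short script. This sketch assumes OpenSSH-style messages of the form "Failed password for ... from ..." and "Accepted ... for ... from ...". The sample lines, hostnames, usernames, and the threshold of two failures are invented for illustration.

```python
import re
from collections import defaultdict

FAILED_RE = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+)")
ACCEPTED_RE = re.compile(r"Accepted \S+ for (\S+) from (\S+)")

def success_after_failures(lines, min_failures=2):
    """Return (ip, user, prior_failures) for each successful login that
    follows at least `min_failures` failed attempts from the same IP."""
    failures = defaultdict(int)
    hits = []
    for line in lines:
        m = FAILED_RE.search(line)
        if m:
            failures[m.group(2)] += 1
            continue
        m = ACCEPTED_RE.search(line)
        if m and failures[m.group(2)] >= min_failures:
            hits.append((m.group(2), m.group(1), failures[m.group(2)]))
    return hits

# Illustrative auth.log lines (not copied from Figure 13-10).
auth_lines = [
    "Mar  1 10:00:01 srv sshd[1001]: Failed password for root from 4.4.4.12 port 40000 ssh2",
    "Mar  1 10:00:05 srv sshd[1001]: Failed password for root from 4.4.4.12 port 40000 ssh2",
    "Mar  1 10:00:09 srv sshd[1001]: Accepted password for root from 4.4.4.12 port 40000 ssh2",
    "Mar  1 10:05:00 srv sshd[1002]: Accepted publickey for alice from 4.4.4.30 port 40100 ssh2",
]

hits = success_after_failures(auth_lines)
print(hits)
```

A hit is only a lead. As with the example above, it becomes far more interesting when the same address also appears in other sources, such as the scan data.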

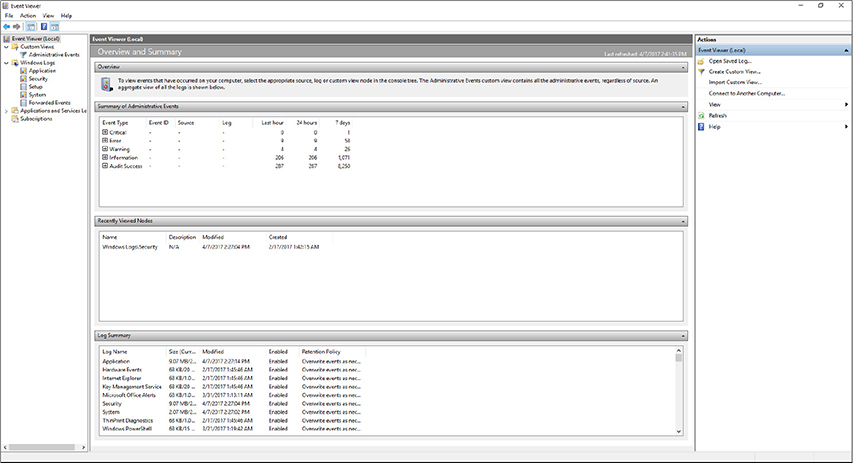

Event Logs

Event logs are similar to syslogs in the detail they provide about a system and connected components. Windows allows administrators to view all of a system’s event logs with a utility called the Event Viewer. This feature makes it much easier to browse through the thousands of entries related to system activity, as shown in Figure 13-11. It’s an essential tool for understanding the behavior of complex systems like Windows—and particularly important for servers, which aren’t designed to always provide feedback through the user interface.

Figure 13-11 The Event Viewer main screen in Windows 10

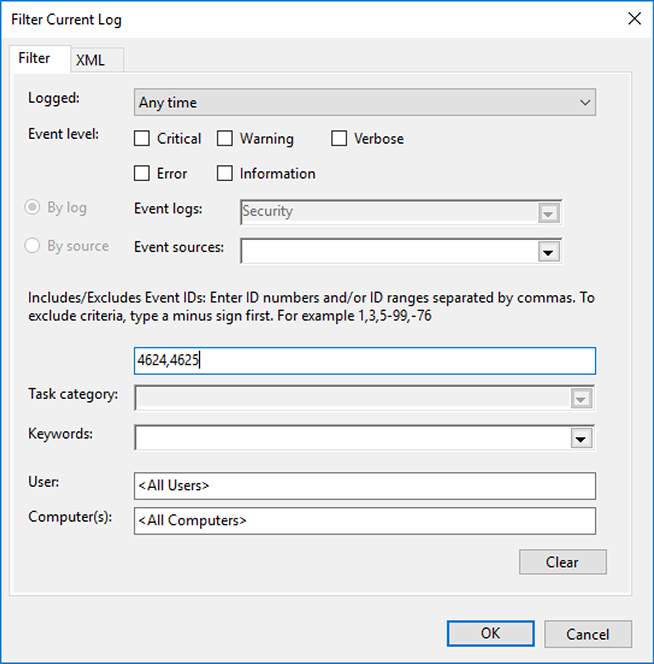

In recent Windows operating systems, successful login events have an event ID of 4624, whereas login failure events are given an ID of 4625 with error codes to specify the exact reason for the failure. In the Windows 10 Event Viewer, you can specify exactly which types of event you’d like to get more detail on using the Filter Current Log option in the side panel. The resulting dialog is shown in Figure 13-12.

Figure 13-12 The Event Viewer prompt for filtering log information
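Filters like this can also be expressed as an XML query, which is convenient when the same filter must be reused across many systems. The helper below is a sketch that builds such a query for a list of event IDs. The function name is hypothetical, and the query layout is our assumption about the structured-query format that Event Viewer accepts, so verify it against your Windows version before relying on it.

```python
def build_event_filter(event_ids, log_name="Security"):
    """Build an XML query string selecting the given event IDs
    from the named log."""
    id_clause = " or ".join(f"EventID={int(i)}" for i in event_ids)
    return (
        f'<QueryList><Query Id="0" Path="{log_name}">'
        f'<Select Path="{log_name}">*[System[({id_clause})]]</Select>'
        f'</Query></QueryList>'
    )

# Successful (4624) and failed (4625) logon events.
query = build_event_filter([4624, 4625])
print(query)
```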

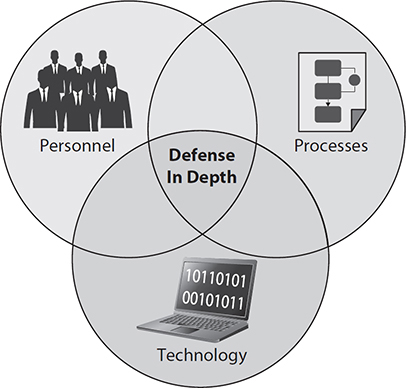

Defense in Depth

The concept of layering defense originated in the military as a way of forcing an enemy to expend resources in preparing for and conducting attacks. By varying the types of defensive systems used, and regularly changing how they’re implemented, practitioners can make it cost-ineffective for an adversary to sustain an offensive campaign. By spreading resources across locations, a defender can ensure that if one mechanism fails, additional hurdles stand between the attacker and their goal. The same concept of varying techniques as part of a rigorous defense plan can be applied to digital systems. For example, you might place firewalls at the perimeter of your network, enable ACLs on various devices, use network segmentation, and enforce group policies. If an attacker circumvents one obstacle, he would have to employ another, different technique against the other defensive measures. In information security, layering technical controls alone isn’t enough; as Figure 13-13 depicts, we must combine processes, people, and technology to achieve the original intent of defense in depth. Defense in depth, as a multifaceted approach for physical and network defense, does suffer from one significant flaw in that it’s used primarily as a tool of attrition. As a strategy, attrition warfare aims to wear an adversary down to the point of exhaustion so that they no longer possess the will to continue. However, how do we deal with an adversary with unlimited willpower? Given enough time, an enemy will likely discover methods to circumvent the security measures we place on the network. It’s important, therefore, that defense in depth never be employed in a static manner: it must constantly be reevaluated, updated, and used alongside other best practices.

Figure 13-13 Personnel, processes, and technology provide defense in depth

Personnel

Human capital is an organization’s most important asset. Despite advancements in automation, humans remain the center of a company’s operation. Dealing with the human dimension in an increasingly automated world is an enormous challenge. Humans make errors, have different motivations, and learn in different ways.

Training

Employee training is a challenging task for organizations because it is sometimes viewed as a superfluous expense with no immediate outcomes. As a result, organizations will sacrifice training budgets in favor of other efforts more clearly tied to the mission. Skimping on training is, however, a critical mistake because an organization whose workers have stale skillsets is less effective and more prone to error.

We’ll cover two aspects of security training in this section. The first is general security awareness training. The focus for this type of training is the typical employee with an average level of technical understanding. Training these types of users on proper network behavior and how to deal with suspicious activity will go a long way. They are, in a way, a large part of the network’s defense plan. If they are well trained in what to watch for, how to prevent incidents, and how to report them, a huge burden is lifted from the security team. The second type of training is that for the security staff. Because so much of the knowledge about the domain is being created in real time, security analysts will take part in daily on-the-job training. However, it may not always be sufficient to address the variety of challenges that they are likely to encounter. If an organization is not prepared to train its analysts to face these challenges, either through in-house instruction or external training, it may quickly run into organizational and legal problems. Imagine a situation in which management doesn’t fund a security team’s training on the latest type of ransomware. Where does the fault lie if the company is breached and all of its data encrypted? In this case, providing training would have resulted in displacement of a portion, if not all, of the risk associated with the malware.

Dual Control

By assigning the responsibility for tasks to teams of individuals, as opposed to a single person, an organization can reduce the chances for catastrophic mistakes or fraud. Dual control is a practice that requires the involvement of two or more parties to complete a task. A dramatic example of this in use outside of computer security is the missile launch process of some ships and submarines. Aboard some vessels, particularly those carrying nuclear warheads, launching missiles requires the involvement of two senior military officers with special keys inserted at physically separate locations. The goal, of course, is to avoid putting the awesome power of such destructive weapons in the hands of an individual. In this case, splitting the responsibility of executing the task will assist in preventing accidental launch because two individuals must act in concert. Furthermore, because the keys must be engaged in different areas, it is impossible for a single person with two keys to enable the system on his own.

An implementation of this principle in cybersecurity would be access control to a sensitive account that is protected by two-factor authentication using a password and a hardware token. Authorized users would each have a unique password only they know. To log in, however, they would have to enter their username and password and then call into an operations center for the code on a hardware token.
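In software, the same principle often appears as a requirement for two distinct approvers before a sensitive action can run. The class below is a minimal sketch of that idea. Its name, the user names, and the default of two approvals are assumptions for illustration and do not describe any particular product.

```python
class DualControlAction:
    """A sensitive action that needs approval from several distinct,
    authorized people before it may be executed."""

    def __init__(self, name, authorized, required=2):
        self.name = name
        self.authorized = set(authorized)
        self.required = required
        self.approvals = set()

    def approve(self, user):
        if user not in self.authorized:
            raise PermissionError(f"{user} may not approve {self.name}")
        # A set is used so that one person approving twice counts once.
        self.approvals.add(user)

    def can_execute(self):
        return len(self.approvals) >= self.required

action = DualControlAction("unlock-root-account", ["alice", "bob", "carol"])
action.approve("alice")
action.approve("alice")          # a repeat approval adds nothing
single_person = action.can_execute()
action.approve("bob")
two_people = action.can_execute()
print(single_person, two_people)
```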

Separation of Duties

Another effective method to limit a user’s ability to adversely affect sensitive processes is the practice of separation of duties. Also referred to as segregation of duties, this practice places the subordinate tasks for a critical function under dispersed control. This is like dual control, with the primary difference being that the parties are given completely different tasks that work together toward a greater goal. As it applies to security, separation of duties might be used to prevent any single individual from disrupting business-critical processes, accessing sensitive data, or making untested administrative changes across an organization. For example, your organization might break down the requirements to delete sensitive data into several steps: verify, execute, and approve. By giving each task to different people, you can be sure that no one person can perform the deletion task alone. Ideally, the parties involved in performing the task do not belong to the same group. By granting access to individuals who don’t work in the same group, the team can reduce the likelihood of conflicts of interest or, worse, collusion. Should an attacker compromise an account and attempt to use those credentials, a separation of duties policy would prevent access because only one condition would be met for access. Separation of duties also applies generally to the reporting structure of your team. Security team auditors, for example, should not report to members of the production team. Imagine the awkward position if, as a junior analyst, you must report problems with a production system to your boss, who manages these systems.
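A process definition can be checked for separation-of-duties problems before it is put into use. The sketch below takes the verify, execute, and approve steps from the example above and reports two kinds of violations: one person holding several steps, and several participants drawn from the same group. The function name, people, and group names are hypothetical.

```python
from collections import Counter

def separation_violations(assignments, group_of):
    """assignments maps step -> person; group_of maps person -> group.
    Return a list of human-readable problems (empty if none)."""
    problems = []
    per_person = Counter(assignments.values())
    for person, count in sorted(per_person.items()):
        if count > 1:
            problems.append(f"{person} holds {count} steps")
    per_group = Counter(group_of[p] for p in set(assignments.values()))
    for group, count in sorted(per_group.items()):
        if count > 1:
            problems.append(f"{count} participants belong to group {group}")
    return problems

group_of = {"ana": "storage", "ben": "security", "cho": "legal", "dev": "storage"}

good = {"verify": "ana", "execute": "ben", "approve": "cho"}
bad = {"verify": "ana", "execute": "ana", "approve": "dev"}

good_result = separation_violations(good, group_of)
bad_result = separation_violations(bad, group_of)
print(good_result)
print(bad_result)
```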

Third Parties and Consultants

Outside consultants are called to assist because of their special knowledge in an area. In practice, they often act in much the same way as regular employees, although they may not have been subject to the same level of vetting due to time constraints. They will often be tightly integrated with existing teams for effectiveness and efficiency. Since consultants will undoubtedly be exposed to confidential or proprietary company information, an organization must weigh the risks associated with this as part of the greater compensating controls strategy. This strategy must include a nondisclosure agreement (NDA), clearly defined policies for the use of outside equipment on the company network, and a comprehensive description of responsibilities and expectations for the contractor.

Cross-Training

An organization can choose to rotate employees assigned to certain jobs to expose them to a new environment and give them additional context for their role in the organization’s processes. Cross-training is not only a cost-effective way to provide training, but it’s also a way of ensuring that backup personnel are available should primary staff be unavailable. Cross-training has obvious benefits for technical training, but there are additional benefits outside of improving skill. It also helps in team development by providing an opportunity for team members to cover for one another, and it gives each member improved visibility over another’s role in the company.

Mandatory Vacation

Given the stress associated with dealing with sensitive data and processes on a day-to-day basis, it’s a good idea to direct team members to take vacations for at least a week at regular intervals. This serves two purposes related to security. First, by removing an individual from a position temporarily, it allows problems that may have been concealed to become apparent. Second, as workers remain in high-stress positions for extended periods, the chances for burnout and complacency increase. Thus, mandatory vacations help prevent the occurrence of mistakes and make the employee more resilient to social engineering attempts. For the sake of continuity, it’s good practice to align mandatory vacations with rotation of duties and cross-training.

Succession Planning

As much as companies would like to hold on to their best and brightest forever, it’s a reality that people move on at some point. Planning for this departure is a necessary part of any mature organization’s continuity process. In the military, succession planning is a well-understood and practiced concept. The inherent risks with service, along with the normal schedule of assignments, mean that units must think in the future tense while working to achieve present-day mission success. As with our armed forces, a succession plan in your organization means an orderly transition of responsibilities to a designated person. Ideally, this person is preselected and prepared for the new role so that the transition causes minimal disruption, but this isn’t always the case. You must also recognize the natural tension between succession planning and training. Organizations must strike a balance: they should take care of current employees with training, but not to the point of neglecting preparation for the future force. To prevent this, the organization and its subordinate teams ought to remain focused on the tasks necessary to achieve strategic goals. A practical step in succession planning includes the creation of a playbook, or continuity book, that can be passed on during the changeover process. This playbook should have a description and technical steps involved in team processes, and it should be written in such a way that a team member can pick it up and continue operations with minimal delay. In fact, an effective way to test out the utility of your succession plan is during a job rotation, or any of the aforementioned practices that involve taking a role from the primary employee.

Processes

Filling in the gap between employees in your organization and technology tools are the processes. This key part of the network security plan needs to be well thought out and include clear policies and procedures for all users. We covered some of these concepts in Chapter 11 when we discussed frameworks such as ISO/IEC 27000, COBIT, and ITIL, which ensure an organization follows best practices and is regularly reviewing and improving its security posture. Processes should be reviewed on a yearly basis at the very least, and need to mirror what the current trends are in technology. Moreover, these processes need to be built in such a way as to remain flexible to address evolving threats.

Continual Improvement

Even though processes are designed to be unambiguous, particularly regarding appropriate behavior on the network and consequences for policy violation, they should remain “living” things subject to improvement as necessary. It should not be surprising that our personnel and technology are changing regularly. The threats to our systems change even more quickly. This constantly changing environment requires that we continually examine our processes and look for opportunities for improvement. This managed optimization is the hallmark of mature organizations.

This process of improvement requires changes, and these must be carefully managed. Most organizations implement a change control process to ensure alterations to staffing, processes, or technology are well thought out. All changes to the security processes, whether technical, staff, or policy, should be reviewed and updated to reflect the current threat.

Scheduled Reviews

An organization should plan recurring reviews of its security strategy to keep pace with the threats it faces and to validate whether existing security policies are useful. As with software vendors, updates should be made to policy as necessary to maintain a strong security stance and remain aligned with any new business goals. In regulated environments, these reviews are typically required in order to remain in compliance. In all organizations, they require senior management focus and are absolutely essential to prevent erosion of the security posture.

Retirement of Processes

A natural part of improvement is the retirement of a process. Whether the process is no longer relevant, a new process has been developed, or the process no longer aligns with the organization’s business goals, there must be a formal mechanism to remove it. Retirement of processes is similar to the change control process described earlier. The process in question is reviewed by relevant stakeholders and company leadership, the adjustments made, and the replacement policy clearly communicated to the organization. It’s critical that those involved in the day-to-day execution of tasks within the processes don’t continue to use outdated versions.

Technology

Your organization’s network security plan cannot exist without technology. Although it is as important as people and processes, technology operates at a totally different speed. It’s best used to perform repetitive tasks for which humans are ill-suited, such as enforcing policies, monitoring traffic, alerting to violations, and preventing data from leaving the network. Technological solutions can also be used as compensating controls, minimizing risk at times of human error. But the supporting relationship between people, processes, and technology also goes the other way. As malware evolves to evade next-generation security devices, it is the people and processes supporting the technology that come together to prevent catastrophic damage to your organization.

Automated Reporting

Technology is often best applied to repetitive tasks that require a high degree of accuracy. After collection and analysis are rapidly performed using any of the security data analytics techniques described earlier in this chapter, the security devices can send notifications based on the specifications and preferences of the security team. Automated reporting features are found in most modern security products, including security appliances and security suites. You should spend some time determining exactly what you’d like to report because having constant pings from your security devices can lead to “alert fatigue.”
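One simple way to reduce alert fatigue is to suppress repeats of the same alert within a fixed window. The sketch below illustrates that idea only. The class name, the five-minute window, and the alert keys are assumptions, and most products expose this behavior through their own notification settings rather than through code.

```python
class AlertThrottle:
    """Suppress repeats of the same alert key inside a time window."""

    def __init__(self, window_seconds=300):
        self.window = window_seconds
        self.last_sent = {}

    def should_send(self, key, now):
        """Return True if an alert for `key` should go out at time `now`
        (in seconds), and remember the time when it does."""
        last = self.last_sent.get(key)
        if last is not None and now - last < self.window:
            return False
        self.last_sent[key] = now
        return True

throttle = AlertThrottle(window_seconds=300)
scan_key = ("port-scan", "4.4.4.12")

decisions = [
    throttle.should_send(scan_key, now=0),     # first alert goes out
    throttle.should_send(scan_key, now=60),    # repeat inside the window
    throttle.should_send(scan_key, now=301),   # window has elapsed
]
print(decisions)
```

Keying on both the alert type and the source keeps a noisy host from hiding a different alert about the same host.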

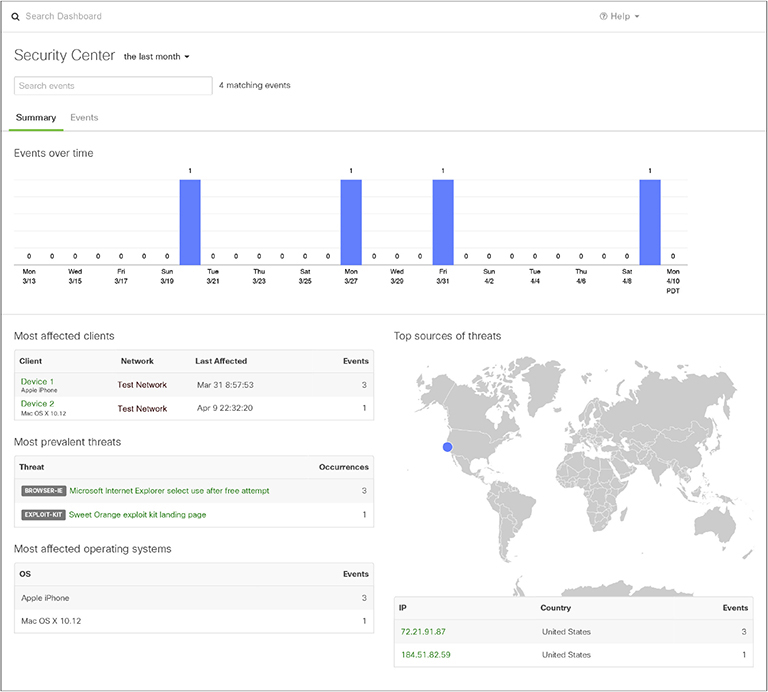

Security Appliances

Security appliances perform functions that traditionally were spread across multiple hardware devices. Nowadays, security appliances can act as firewalls, content filters, IDSs/IPSs, and load balancers. Since these devices provide several network and security functions, potentially replacing existing devices, their interfaces often provide seamless integration between functions with a central management console. Figure 13-14 is an example of an integrated dashboard provided by a security appliance vendor.

Figure 13-14 Sample dashboard of a security appliance

Note that it provides an overview of the organization’s traffic, anomalies, and usage, along with additional sections for details on client and application behavior. This appliance also provides a separate area for security-specific alerts and settings, as shown in Figure 13-15.

Figure 13-15 Overview of the security events for the organization’s network

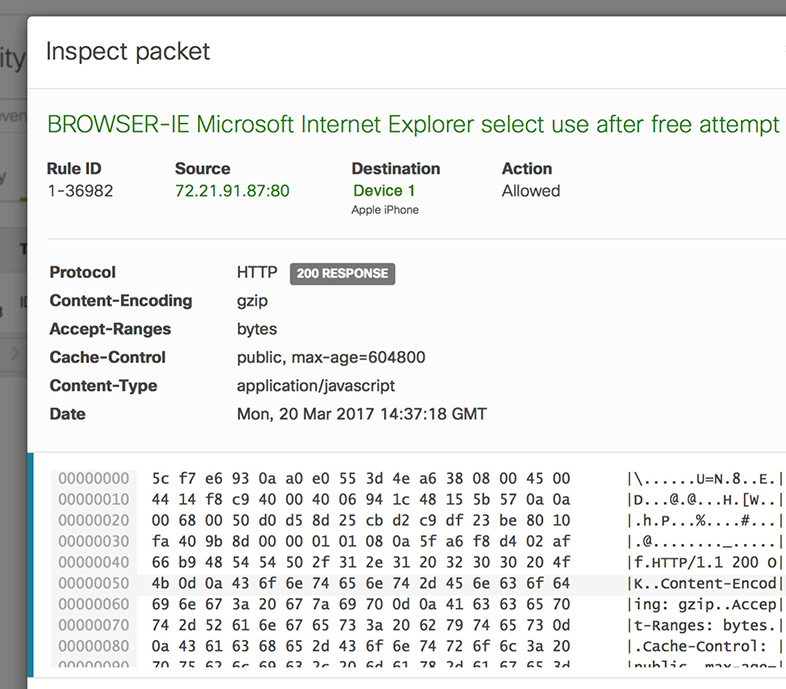

In addition to providing general information about the source, destination, and frequency associated with the event, the security appliance automatically creates a packet capture. Figure 13-16 shows the details provided using the packet capture functionality of this appliance.

Figure 13-16 Packet inspection screen provided by the security appliance

An analyst can review this information and decide whether to escalate the event or, in the case of a false positive, refine the rule. Additionally, any of the data found on these screens can be sent by e-mail on a schedule.

Security Suites

Security suites are a class of software that provides multiple security- and management-related functions. Most security suites include endpoint scanning and protection, mobile device management (MDM), and phishing detection. Also called multilayered security, security suites often rely on vast databases of threat data to deliver the most up-to-date detection and protection against network threats.

Outsourcing

Outsourcing presents several challenges that you must be familiar with as a security analyst. Because you are entrusting the security of your network to an outside party, there are several steps you should take to protect your company’s network and data:

• Access control Access to your network through the company’s interface should be tightly restricted and thoroughly inspected, because an outsider who gains access to the company might also gain access to you.

• Contractor vetting Both the company and its employees should be subject to rigorous standards for vetting. After all, you are potentially entrusting the fate of your organization to them. Background checks should be conducted and verified. Every effort should be made to ensure that the company’s internal processes are aligned to your own.

• Incident handling and reporting You should understand and agree on the best procedures for incident handling. Any legal responsibilities should be communicated clearly to the company, particularly if you operate in a regulatory environment.

Cryptography

Cryptographic principles underpin many security controls found on our networks. Cryptographic hash functions, for example, provide a way for us to maintain integrity. They provide a virtual tamper-evident seal for our data. Similarly, digital signatures give us a way to verify the source of a message, often using a public-key infrastructure (PKI). Perhaps the most well-known principle, encryption, provides confidentiality of our data. The use of encryption might be mandatory, depending on the domain your organization operates in. Regulatory requirements such as the Health Insurance Portability and Accountability Act (HIPAA), the Payment Card Industry Data Security Standard (PCI DSS), and the Sarbanes-Oxley (SOX) Act compel organizations to use encryption when dealing with sensitive data.
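To make the integrity "seal" concrete, here is a minimal sketch using SHA-256 from Python's standard `hashlib` module. The helper names `sha256_hex` and `is_unmodified` and the sample data are hypothetical. The sketch covers only integrity checking with a hash. It does not implement digital signatures or encryption, which require keys and, typically, a dedicated cryptography library.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, expected_hex: str) -> bool:
    """Check `data` against a previously recorded digest.

    hmac.compare_digest performs a constant-time comparison, which avoids
    leaking how many leading characters matched.
    """
    return hmac.compare_digest(sha256_hex(data), expected_hex)


# Hypothetical data: record a digest, then verify two later copies against it.
original = b"quarterly-report v1"
seal = sha256_hex(original)

tampered = b"quarterly-report v2"
original_ok = is_unmodified(original, seal)   # content matches the seal
tampered_ok = is_unmodified(tampered, seal)   # a one-character change breaks it
```

A hash by itself detects changes only if the recorded digest is stored or transmitted where an attacker cannot also replace it. That is one reason signatures and PKI are layered on top.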

Other Security Concepts

Your organization’s security policies need to be both robust enough to address known threats and agile enough to adjust to emerging dangers. Techniques that might be useful in thwarting an adversary’s actions will lose efficacy over time, or the threat may no longer be relevant. Incorporating a sensible network defense plan as part of a multilayered approach will significantly improve your defensive posture.

Network Design

Cloud services, Infrastructure as a Service (IaaS), SECaaS, and BYOD practices mean that the perimeter is no longer sufficient as your primary defense apparatus. In fact, much of the technical infrastructure might be hidden from you if your organization uses a subscription service. This means that the people and processes aspects of network security become critically important to your company’s ability to hold onto its data.

Network Segmentation

Segmentation not only improves the efficiency of your network by reducing the workload on routers and switches but also improves security by creating divisions along the lines of usage and access. For certain organizations, network segmentation helps them remain compliant with regulations regarding sensitive data.
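The idea of dividing the network along lines of usage and access can be sketched as a default-deny policy between named segments. The following Python sketch uses the standard `ipaddress` module. The segment names, address ranges, and allowed flows are hypothetical, and in practice this kind of policy is enforced by VLANs, ACLs, and firewalls rather than by a script.

```python
import ipaddress

# Hypothetical segments; names and ranges are illustrative only.
SEGMENTS = {
    "workstations": ipaddress.ip_network("10.10.0.0/16"),
    "servers": ipaddress.ip_network("10.30.0.0/16"),
    "cardholder-data": ipaddress.ip_network("10.20.0.0/24"),
}

# Allowed (source segment, destination segment) pairs; all others are denied.
ALLOWED_FLOWS = {
    ("workstations", "servers"),
    ("servers", "cardholder-data"),
}


def segment_of(address: str):
    """Return the name of the segment containing `address`, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_flow_allowed(src: str, dst: str) -> bool:
    """Default deny: permit traffic within a segment or along an allowed pair."""
    src_seg, dst_seg = segment_of(src), segment_of(dst)
    if src_seg is None or dst_seg is None:
        return False  # unknown hosts get no access
    return src_seg == dst_seg or (src_seg, dst_seg) in ALLOWED_FLOWS
```

Under these assumptions, a workstation at 10.10.5.7 can reach a server at 10.30.1.1 but cannot reach 10.20.0.15 directly. That kind of isolation is what regulations covering sensitive data typically look for.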

Chapter Review

As security analysts, we cannot control the people behind the attacks, how often they attack, or how they choose to go about it, but we can give our own team the tools necessary to stay safe during these events. Through a combination of people, processes, and technology, each depending on and supporting the others, we can offset the inherent and increasing risks in today’s environment. In the past, we focused primarily on technology as the enabler. However, this works about as well as a sports team that focuses solely on offense. Security requires a holistic approach, which includes managing our technology, understanding how it aligns with our business processes, and giving the people in our organization the tools to be successful. People, processes, and technology are all integral to dealing with risk.

Questions

Use the following scenario to answer Questions 1–3:

You notice a very high volume of traffic from a host in your network to an external one. You don’t notice any related malware alerts, and the remote host does not show up on your threat intelligence reports as having a suspected malicious IP address. The source host is a Windows workstation belonging to an employee who was involved in an altercation with a manager last week.

1. You are not sure if this is suspicious or not. How can you best determine whether this behavior is normal?

A. Manual review of syslog files

B. Historical analysis

C. Packet analysis

D. Heuristic analysis

2. You decide to do a manual review of log files. Which of the following data sources is least likely to be useful?

A. Firewall logs

B. Security event logs

C. Application event logs

D. Syslog logs

3. Which of the following personnel security practices might be helpful in determining whether the employee is an insider threat?

A. Security awareness training

B. Separation of duties

C. Mandatory vacation

D. Succession planning

4. Your organization requires that new user accounts be initiated by human resources staff and activated by IT operations staff. Neither group can perform the other’s role. No employee belongs to both groups, so nobody can create an account by themselves. Of which personnel security principle is this an example?

A. Dual control

B. Separation of duties

C. Succession

D. Cross-training

5. Your organization stores digital evidence under a two-lock rule in which anyone holding a key to the evidence room cannot also hold a key to an evidence locker. Each lead investigator is issued a locker with a key, but investigators can enter the room only if the evidence custodian unlocks the door to the evidence room. Of which personnel security principle is this an example?

A. Dual control

B. Separation of duties

C. Succession

D. Cross-training

6. Your organization has a process for regularly examining assets, threats, and controls and making changes to your staffing, processes, and/or technologies in order to optimize your security posture. What kind of process is this?

A. Succession planning

B. Trend analysis

C. Security as a service

D. Continual improvement

Refer to the following illustration and scenario for Questions 7–9:

You notice an unusual amount of traffic to a backup DNS server in your DMZ. You examine the log files and see the results illustrated here. All your internal addresses are in the 10.0.0.0/8 network, while your DMZ addresses are in the 172.16.0.0/12 network. The time is now 3:20 p.m. (local) on April 6th.

7. What does the log file indicate?

A. Use of a brute-force password cracker against an SSH service

B. Need for additional user training on remembering passwords

C. Manual password-guessing attack against an SSH service

D. Pivoting from an internal host to the SSH service

8. What would be your best immediate response to this incident?

A. Implement an ACL to block incoming traffic from 192.168.192.6.

B. Drop the connection at the perimeter router and begin forensic analysis of the server to determine the extent of the compromise.

C. Start full packet captures of all traffic between 192.168.192.6 and the server.

D. Drop the connection at the perimeter router and put the server in an isolation VLAN.

9. How could you improve your security processes to prevent this attack from working in the future?

A. Block traffic from 192.168.192.6.

B. Improve end-user password security training.

C. Implement automated log aggregation and reporting.

D. Disallow external connections to SSH services.

Use the following scenario to answer Questions 10–11:

You were hired as a security consultant for a mid-sized business struggling under the increasing costs of cyber attacks. The number of security incidents resulting from phishing attacks is trending upward, which is putting an increased load on the business’s understaffed security operations team. That team is no longer able to keep up with both incident responses and an abundance of processes, most of which are not being followed anyway. Personnel turnover in the security shop is becoming a real problem. The CEO wants to stop or reverse the infection trend and get security costs under control.

10. What approach would you recommend to quickly reduce the rate of compromises?

A. Trend analysis

B. Automated reporting

C. Security as a Service

D. Security awareness training

11. How would you address the challenge of an overworked security team? (Choose two.)

A. Cross-training the security staff

B. Outsourcing security functions

C. Retirement of processes

D. Mandatory vacations

E. Increasing salaries and/or bonuses

Answers

1. B. Historical analysis allows you to compare a new data point to previously captured ones. Syslog files and captured packets would be unlikely to tell whether the behavior is normal unless they contained evidence of compromise. Heuristic analysis could potentially be useful, but it is not as good an answer as historical analysis.

2. D. Windows systems are not normally configured to use syslog. All other log files would likely be present and might provide useful information.

3. C. If the employee is required to go on vacation and the unusual activity ceases, then the activity is likely tied to that employee. Because the replacement individual would have the exact same duties, the absence of such activity by the substitute might indicate malicious or at least suspicious behavior by the original employee.

4. B. Separation of duties is characterized by having multiple individuals perform different but complementary subtasks that, together, accomplish a sensitive task.

5. A. Dual control is characterized by requiring two people to perform similar tasks in order to gain access to a controlled asset.

6. D. Continual improvement is aimed at optimizing the organization in the face of ever-changing conditions. Trend analysis could be a source of data for this effort, but this would be an incomplete answer at best.

7. A. The speed at which successive attempts were made makes it unlikely that this incident was the result of a manual attack or a forgetful user. There is no evidence to indicate that pivoting, which is lateral movement inside a target network once an initial breach is made, has taken place yet, given that the connection was established less than a minute ago.

8. D. The immediate goal of the response should be to isolate the host suspected of being compromised. Blocking future attempts and learning what the attacker is up to are both prudent steps, but should be done only after the server is isolated.

9. D. The only given choice that would stop this attack in the future is to prevent external connections to SSH. Remote users who need such access should be required to connect over a VPN first, which would give them an internal IP address.

10. D. The issue seems to be that users are more often falling for phishing attacks, which points to a need for improved personnel training more so than any other approach.

11. B, C. Outsourcing some of the security operations can strike a balance between keeping some functions in-house and freeing up time for the security team. Additionally, the organization appears to have excessive processes that are not being followed, so retiring some of those would likely free up some more time. Cross-training might be helpful if the workload were uneven compared to the skillsets, but there is no mention of that being the case in the scenario.