Cyber-specifications

capturing user requirements for cyber-security investigations

Alex W. Stedmon; Dale Richards; Siraj A. Shaikh; John Huddlestone; Ruairidh Davison

Abstract

In order to capture important user requirements data, it is necessary to take a user-centered approach to understand security from a human factors perspective. Human Factors and Ergonomics are the disciplines that look to support user needs and requirements of products and processes through a detailed understanding of the user’s capabilities, limitations and expectations of those products or processes. A range of methods and approaches are available to assist with the collection of user requirements in sensitive domains and this chapter considers practical issues of their use for cyber-security. A framework of macro-ergonomic security threats is reinterpreted for the cyber domain and illustrated using issues of trust in order to raise awareness for cyber investigations.

Introduction

In many security domains, the “human in the system” is often a critical line of defense in identifying, preventing, and responding to any threats (Saikayasit et al., in print). Traditionally, these domains have been focused in the real world, ranging from mainstream public safety within crowded spaces and border controls, through to identifying suspicious behaviors, hostile reconnaissance and implementing counter-terrorism initiatives. In these instances, security is usually the specific responsibility of front-line personnel with defined roles and responsibilities operating to organizational protocols (Saikayasit et al., 2012; Stedmon et al., 2013).

From a systems perspective, the process of security depends upon the performance of those humans (e.g., users and stakeholders) in the wider security system. Personnel often work in complex and challenging environments where specific security breaches may be very rare, so they are tasked with recognizing small state changes within a massive workflow, yet security incidents could have rapid and catastrophic consequences (e.g., airline baggage handlers not identifying an improvised explosive device in a passenger’s luggage).

Furthermore, with the increasing presence of technology in security provision there is a reliance on utilizing complex distributed systems that assist the user, and in some instances are instrumental in facilitating decisions that are made by the user. However, one of the significant benefits of deploying dedicated security personnel is that they often provide operational flexibility and local/tacit knowledge of their working environment that automated systems simply do not possess (Hancock and Hart, 2002; Stedmon et al., 2013).

Through a unique understanding of their work contexts (and the ability to notice subtle patterns of behavior and underlying social norms in those environments) security personnel represent a key asset in identifying unusual behaviors or suspicious incidents that may pose a risk to public safety (Cooke and Winner, 2008). However, the same humans also present a potential systemic weakness if their requirements and limitations are not properly considered or fully understood in relation to other aspects of the total system in which they operate (Wilson et al., 2005).

From a similar perspective, cyber-security operates at a systemic level where users (e.g., the public), service providers (e.g., on-line social and business facilitators) and commercial or social outlets (e.g., specific banking, retail, social networks, and forums) come together in the virtual and shared interactions of cyber-space in order to process transactions. A key difference is that there are no formal policing agents present, no police or security guards that users can go to for assistance. Perhaps the closest analogy to any form of on-line policing would be moderators of social networks and forums but these are often volunteers with no formal training and ultimately no legal responsibility for cyber-security. Whilst there are requirements for secure transactions, especially when people are providing their personal and banking details to retail sites, social media sites are particularly vulnerable to identity theft through the information people might freely and/or unsuspectingly provide to third parties. There is no common law of cyber-space as in the real world and social norms are easily distorted and exploited, creating vulnerabilities for security threats.

Another factor in cyber-security is the potential temporal distortions that can occur with cyber-media. Historic postings or blogs might propagate future security threats in ways that real-world artifacts may not. For example, cyber-ripples may develop from historic posts and blogs and may have more resonance following a particular event. Cyber poses a paradoxical perspective for security. Threats can emerge rapidly and dynamically in response to immediate activities (e.g., the UK riots of 2011), while in other ways the data are historical and can lie dormant for long periods of time. However, those data still possess a presence that can be as hard hitting as an event that has only just happened. Understanding the ways in which users might draw significance from cyber-media is an important part of understanding cyber-influence.

From a cyber-security perspective, the traditional view of security poses a number of challenges:

• Who are the users (and where are they located at the time of their interaction)?

• Who is responsible for cyber-security?

• How do we identify user needs for cyber-security?

• What methods and tools might be available/appropriate for eliciting cyber-requirements?

• What might characterize suspicious behavior within cyber-interactions?

• What is the nature of the subject being observed (e.g., is it a behavior, a state, an action, and so on)?

Underlying these challenges are fundamental issues of security and user performance. Reducing the likelihood of human error in cyber-interactions is increasingly important. Human errors can not only hinder the correct use and operation of cyber technologies but also compromise the integrity of such systems. There is a need for formal methods that allow investigators to analyze and verify the correctness of interactive systems, particularly with regard to human interaction (Cerone and Shaikh, 2008). Only by considering these issues and understanding the underlying requirements that different users and stakeholders might have can a more integrated approach to cyber-security be developed from a user-centered perspective. Indeed, for cyber-investigations, these issues pose important questions to consider in exploring the basis upon which cyber interactions might be based and where future efforts to develop solutions might be best placed.

User Requirements and the Need for a User-Centered Approach?

When investigating user needs, a fundamental issue is the correct identification of user requirements that are then revisited in an iterative manner throughout the design process. In many cases, user requirements are not captured at the outset of a design process and they are sometimes only considered when user trials are developed to evaluate final concepts (often when it is too late to change things). This in itself is a major issue for developing successful products and processes that specifically meet user needs. A further consideration in relation to user requirements for cyber-security is in the forensic examination techniques more commonly employed in accident investigations to provide an insight into the capabilities and limitations of users at a particular point when an error occurred.

More usually when writing requirement specifications, different domains are identified and analyzed (Figure 5.1).

For example, if a smart closed circuit television (CCTV) system was being designed it would be necessary to consider:

• Inner domain—product being developed and users (e.g., CCTV, operators) including different levels of system requirements to product level requirements.

• Outer domain—the client it is intended to serve (e.g., who the CCTV operator reports to).

• Actors—human or other system components that interact with the system (e.g., an operator using smart software, or camera sensors that need to interact with the software, etc.).

• Data requirements—data models and thresholds.

• Functional requirements—process descriptors (task flows and interactions), input/outputs, messages, etc.
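As a minimal sketch, the domains listed above could be recorded in a simple data structure so that gaps in a specification are easy to spot. The class names, field names and the `missing_domains` helper are illustrative assumptions, not part of any published specification format; the CCTV values are taken from the example above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Actor:
    """A human or system component that interacts with the system."""
    name: str
    kind: str  # "human" or "system" (hypothetical labels)


@dataclass
class RequirementSpec:
    """Hypothetical container for the requirement domains listed above."""
    inner_domain: List[str]   # product being developed and its users
    outer_domain: List[str]   # the client the product is intended to serve
    actors: List[Actor] = field(default_factory=list)
    data_requirements: List[str] = field(default_factory=list)
    functional_requirements: List[str] = field(default_factory=list)

    def missing_domains(self) -> List[str]:
        """Return the names of domains that have no entries yet."""
        return [name for name, value in vars(self).items() if not value]


# Values drawn from the smart CCTV example; functional requirements
# are deliberately left empty to show how a gap is reported.
cctv_spec = RequirementSpec(
    inner_domain=["smart CCTV system", "CCTV operators"],
    outer_domain=["whoever the CCTV operator reports to"],
    actors=[
        Actor("operator using smart software", "human"),
        Actor("camera sensors", "system"),
    ],
    data_requirements=["data models", "thresholds"],
)

print(cctv_spec.missing_domains())
```

A structure of this kind only records what has been captured; it does not replace the elicitation work needed to populate each domain.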

This offers a useful way of conceptualizing requirements and understanding nonfunctional requirements such as quality assessment, robustness, system integrity (security), and resilience. However, a more detailed approach is often needed to map user requirements. User requirements are often bounded by specific “contexts of use” for investigations as this provides design boundaries as well as frames of reference for communicating issues back to end-users and other stakeholders. In order to achieve this, it is often necessary to prioritize potential solutions to ensure that expectations are managed appropriately (Lohse, 2011).

Seeking to understand the requirements of specific end-users and involving key stakeholders in the development of new systems or protocols is an essential part of any design process. In response to this, formal user requirements elicitation and participatory ergonomics have developed to support these areas of investigation, solution generation and ultimately solution ownership (Wilson, 1995). User requirements embody critical elements that end-users and stakeholders need and want from a product or process (Maiden, 2008). These requirements capture emergent behaviors and in order to achieve this some framework of understanding potential and plausible behavior is required (Cerone and Shaikh, 2008). Plausible behaviors encompass all possible behaviors that can occur. These are then mapped to system requirements that express how the interactive system should be designed, implemented, and used (Maiden, 2008). However, these two factors are not always balanced and solutions might emerge that are not fully exploited or used as intended. Participatory ergonomics approaches seek to incorporate end-users and wider stakeholders within work analysis, design processes and solution generation as their reactions, interactions, optimized use and acceptance of the solutions will ultimately dictate the effectiveness and success of the overall system performance (Wilson et al., 2005).

User-centered approaches have been applied in research areas as wide as healthcare, product design, human-computer interaction and, more recently, security and counter-terrorism (Saikayasit et al., 2012; Stedmon et al., 2013). A common aim is the effective capture of information from the user’s perspective so that system requirements can then be designed to support what the user needs within specified contexts of use (Wilson, 1995). Requirements elicitation is characterized by extensive communication activities between a wide range of people from different backgrounds and knowledge areas, including end-users, stakeholders, project owners or champions, mediators (often the role of the human factors experts) and developers (Coughlan and Macredie, 2002). This is an interactive and participatory process that should allow users to express their own local knowledge and for designers to display their understanding, to ensure a common design base (McNeese et al., 1995; Wilson, 1995). End-users are often experts in their specific work areas and possess deep levels of knowledge gained over time that is often difficult to communicate to others (Blandford and Rugg, 2002; Friedrich and van der Poll, 2007). Users often do not realize what information is valuable to investigations and the development of solutions or the extent to which their knowledge and expertise might inform and influence the way they work (Nuseibeh and Easterbrook, 2000).

Balancing Technological and Human Capabilities

Within cyber-security it can be extremely difficult to capture user requirements. Bearing in mind the earlier cyber-security issues, it is often a challenge to identify and reach out to the users that are of key interest for any investigation. For example, where user trust has been breached (e.g., through a social networking site, or some form of phishing attack) users may feel embarrassed, guilty for paying funds to a bogus provider, and may not want to draw attention to themselves. In many ways this can be similar for larger corporations who may be targeted by fraudsters using identity theft tactics to pose as legitimate clients. Whilst safeguards are in place to support the user-interaction and enhance the user-experience, it is important to make sure these meet the expectations of the users for which they are intended.

In real-world contexts, many aspects of security and threat identification in public spaces still rely upon the performance of frontline security personnel. However, this responsibility often rests on the shoulders of workers who are low paid, poorly motivated and lack higher levels of education and training (Hancock and Hart, 2002). Real-world security solutions attempt to embody complex, user-centered, socio-technical systems in which many different users interact at different organizational levels to deliver technology-focused security capabilities.

From a macro-ergonomics perspective it is possible to explore how the systemic factors contribute to the success of cyber-security initiatives and where gaps may exist. This approach takes a holistic view of security, by establishing the socio-technical entities that influence systemic performance in terms of integrity, credibility, and performance (Kleiner, 2006). Within this perspective it is also important to consider wider ethical issues of security research and in relation to cyber investigations, the balance between public safety and the need for security interventions (Iphofen, in print; Kavakli et al., 2005).

Aspects of privacy and confidentiality underpin many of the ethical challenges of user requirements elicitation, where investigators must ensure that:

• End-users and stakeholders are comfortable with the type of information they are sharing and how the information might be used.

• End-users are not required to breach any agreements and obligations with their employers or associated organizations.

In many ways these ethical concerns are governed by Codes of Conduct that are regulated by professional bodies such as the British Psychological Society (BPS) but it is important that investigators clearly identify the purpose of an investigation and set clear and legitimate boundaries for intended usage and communication of collected data.

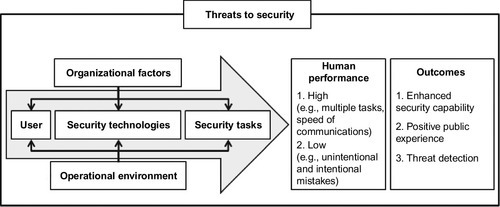

The macro-approach is in contrast to micro-ergonomics, which traditionally focuses on the interaction of a single user and their immediate technology use (Reiman and Väyrynen, 2011). This has often been a starting point for traditional human factors approaches; however, by understanding macro-level issues, the complexity of socio-technical factors can be translated into micro-level factors for more detailed analysis (Kleiner, 2006). For example, Kraemer et al. (2009) explored issues in security screening and inspection of cargo and passengers by taking a macro-ergonomics approach. A five-factor framework was proposed that contributes to the “stress load” of frontline security workers in order to assess and predict individual performance as part of the overall security system (Figure 5.2). This was achieved by identifying the interactions between: organizational factors (e.g., training, management support, and shift structure), user characteristics (i.e., the human operator’s cognitive skills, training), security technologies (e.g., the performance and usability of technologies used), and security tasks (e.g., task loading and the operational environment).

The central factor of the framework is the user (e.g., the frontline security operator, screener, inspector) who has specific skills within the security system. The security operator is able to use technologies and tools to perform a variety of security screening tasks that support the overall security capability. However, these are influenced by task and workload factors (e.g., overload/underload/task monotony/repetition). In addition, organizational factors (e.g., training, management support, culture and organizational structures) as well as the operational environment (e.g., noise, climate, and temperature) also contribute to the overall security capability. This approach helps identify the macro-ergonomic factors where the complexity of the task and resulting human performance within the security system may include errors (e.g., missed threat signals and false positives) or violations (e.g., compromised or adapted protocols in response to the dynamic demands of the operational environment) (Kraemer et al., 2009). This macro-ergonomic framework has been used to form a basis for understanding user requirements within counter-terrorism, focusing on the interacting factors and their influence on the overall performance of security systems including users and organizational processes (Saikayasit et al., 2012; Stedmon et al., 2013).

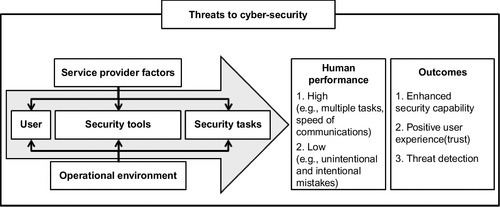

This framework provides a useful perspective on cyber-security and can be redrawn to embody a typical user and provide a basis for exploring user requirements (Figure 5.3).

In this way, cyber-security can be understood in terms of the user (e.g., adult or child) who has a range of skills but also presents vulnerabilities within the overall cyber-security system (e.g., a child may not know the exact identity of someone who befriends them in a chat-room; an adult may not realize the significance of imparting details about a relative to friends on a social networking site; a lonely person may be susceptible to other people’s approaches on the pretext of friendship). The user has a range of technologies and tools to perform a variety of tasks, some of which will be focused either explicitly or implicitly on their own security or the wider security of the network (e.g., login/password protocols, user identity checks) and, in a similar way to the security framework, performance is shaped by task and workload factors (e.g., overload/underload/task monotony/repetition). Within cyber-security, a key difference from the established security framework is that organizational factors are supplanted by service provider factors. In this way cyber policies may dictate specific security measures, but in terms of a formal security capability (policing the web in a similar way that security personnel police public spaces, supported by formal training, management support, culture and organizational structures) there is no such provision. Indeed, individual user training is at best very ad hoc and in most cases nonexistent. The operational environment is constrained only by the user having access to the web. The user is just as capable of performing their tasks sitting on a busy train (where others can view their interaction or video them inputting login/password data) as in the comfort and privacy of their own home.

A particularly interesting area of cyber-security is that of user trust. From a more traditional perspective, as with any form of technology or automated process there must be trust in the system, specific functionality of system components, communication within the system and a clear distinction of where authority lies in the system (Taylor and Selcon, 1990). Applying this to cyber-trust a range of issues present themselves:

• User acceptance of on-line transactions is balanced against the risks and estimated benefits.

• Trust is generated from the technology used for interactions (e.g., the perception of secure protocols against the vulnerability of open networks) and also in the credibility of the individuals or organizations that are part of the interaction process (Beldad et al., 2010).

• To develop on-line trust, the emphasis is on individuals and organizations to present themselves as trustworthy (Haas and Deseran, 1981). In order to achieve this, it is important to communicate trust in a way that users will identify with (e.g., reputation, performance, or even website appearance).

• Web-based interactions offer users multiple “first-time” experiences (e.g., buying products from different websites, or joining different chat-rooms). This suggests that people who lack experience with online transactions and with online organizations might have different levels of trust compared to those with more experience (Boyd, 2003).

• Security violations in human-computer interaction may be due to systematic causes such as cognitive overload, lack of security knowledge, and mismatches between the behavior of the computer system and the user’s mental model (Cerone and Shaikh, 2008).

• To some extent users will develop their own mental models of such interactions by which to gauge subsequent procedures. Understanding the constructs of these mental models and how they evolve is a key factor in understanding the expectations of users for new cyber-interactions.

These factors can be related back to the cyber-security framework in order to highlight key issues for user requirements investigations (Table 5.1).

Table 5.1

Using the Cyber-Security Framework to Map Issues of Cyber-Trust

Using the cyber-security framework to identify potential user requirements issues is an important part of developing cyber-specifications. However, in order to capture meaningful data it is also important to consider the range of methods that are available. The use of formal methods in verifying the correctness of interactive systems should also include analysis of human behavior in interacting with the interface and must take into account all relationships between users’ actions, users’ goals, and the environment (Cerone and Shaikh, 2008).

Conducting User Requirements Elicitation

As previously discussed, whilst methods exist for identifying and gathering user needs in the security domain, they are relatively underdeveloped. It is only in the last decade that security aspects of interactive systems have started to be systematically analyzed (Cerone and Shaikh, 2008); however, little research has been published on understanding the work of security personnel and systems, which leads to a lack of case studies or guidance on how methods can be adopted or have been used in different security settings (Hancock and Hart, 2002; Kraemer et al., 2009). As a result it is necessary to revisit the fundamental issues of conducting user requirements elicitation that can then be applied to security research.

User requirements elicitation presents several challenges to investigators, not least in recruiting representative end-users and other stakeholders upon which the whole process depends (Lawson and D’Cruz, 2011). Equally important, it is necessary to elicit and categorize/prioritize the relevant expertise and knowledge and communicate these forward to designers and policy makers, as well as back to the end-users and other stakeholders.

One of the first steps in conducting a user requirements elicitation is to understand that there can be different levels of end-users or stakeholders. Whilst the terms “end-user” and “stakeholder” are often confused, stakeholders are not always the end-users of a product or process, but have a particular investment or interest in the outcome and its effect on users or the wider community (Mitchell et al., 1997). The term “end-user” or “primary user” is commonly defined as someone who will make use of a particular product or process (Eason, 1987). In many cases, users and stakeholders will have different needs and often their goals or expectations of the product or process can be conflicting (Nuseibeh and Easterbrook, 2000). These distinctions and background information about users, stakeholders and specific contexts of use allow designers and system developers to arrive at informed outcomes (Maguire and Bevan, 2002).

Within the security domain and more specifically within cyber-security, a key challenge in the initial stages of user requirements elicitation is gaining access and selecting appropriate users and stakeholders. In “sensitive domains,” snowball or chain referral sampling are particularly successful methods of engaging with a target audience often fostered through cumulative referrals made by those who share knowledge or interact with others at an operational level or share specific interests for the investigation (Biernacki and Waldorf, 1981). This sampling method is useful where security agencies and organizations might be reluctant to share confidential and sensitive information with those they perceive to be “outsiders.” This method has been used in the areas of drug use and addiction research where information is limited and where the snowball approach can be initiated with a personal contact or through an informant (Biernacki and Waldorf, 1981). However, one of the problems with such a method of sampling is that the eligibility of participants can be difficult to verify as investigators rely on the referral process, and the sample includes only one subset of the relevant user population. More specifically within cyber-security, end-users may not know each other well enough to enable such approaches to gather momentum.
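The chain referral process described above can be sketched as a wave-by-wave traversal of a referral network. This is an illustration only: the function `snowball_sample`, the `max_waves` cut-off and the participant labels are hypothetical, and real recruitment depends on consent and willingness to refer rather than on a known network.

```python
from collections import deque
from typing import Dict, List


def snowball_sample(referrals: Dict[str, List[str]],
                    seeds: List[str],
                    max_waves: int) -> List[str]:
    """Recruit participants wave by wave, following referrals from the seeds."""
    recruited = list(seeds)
    seen = set(seeds)
    queue = deque((seed, 0) for seed in seeds)
    while queue:
        person, wave = queue.popleft()
        if wave == max_waves:
            continue  # do not follow referrals beyond the final wave
        for contact in referrals.get(person, []):
            if contact not in seen:
                seen.add(contact)
                recruited.append(contact)
                queue.append((contact, wave + 1))
    return recruited


# Hypothetical referral network: each participant lists who they can refer.
referrals = {
    "informant": ["analyst_a", "analyst_b"],
    "analyst_a": ["moderator_c"],
    "analyst_b": ["analyst_a"],
    "moderator_c": ["user_d"],
    "isolated_user": [],
}

sample = snowball_sample(referrals, seeds=["informant"], max_waves=2)
print(sample)
```

In this sketch `isolated_user` is never reached because nobody refers them, which mirrors the limitation noted above: the sample covers only the subset of the population connected to the initial contact.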

While user requirements elicitation tends to be conducted amongst a wide range of users and stakeholders, some of these domains are more restricted and challenging than others in terms of confidentiality, anonymity, and privacy. These sensitive domains can include those involving children, elderly or disabled users, healthcare systems, staff/patient environments, commerce, and other domains where information is often beyond public access (Gaver et al., 1999). In addition, some organizations restrict how much information employees can share with external parties with regard to their tasks, roles, strategies, technology use and future visions, in order to protect commercial or competitive standpoints. Within cyber-security, organizations are very sensitive about broadcasting any systemic vulnerabilities which may be perceived by the public as a lack of security awareness or exploited by competitors looking for marketplace leverage. Such domains add further complications to ultimately reporting findings to support the wider understanding of user needs in these domains (Crabtree et al., 2003; Lawson et al., 2009).

Capturing and Communicating User Requirements

There are a number of human factors methods such as questionnaires, surveys, interviews, focus groups, observations and ethnographic reviews, and formal task or link analyses that can be used as the foundations to user requirements elicitation (Crabtree et al., 2003; Preece et al., 2007). These methods provide different opportunities for interaction between the investigator and target audience, and hence provide different types and levels of data (Saikayasit et al., 2012). A range of complementary methods are often selected to enhance the detail of the issues explored. For example, interviews and focus groups might be employed to gain further insights or highlight problems that have been initially identified in questionnaires or surveys. In comparison to direct interaction between the investigator and participant (e.g., interviews) indirect methods (e.g., questionnaires) can reach a larger number of respondents and are cheaper to administer, but are not efficient for probing complicated issues or tacit knowledge (Sinclair, 2005).

Focus groups can also be used, where the interviewer acts as a group organizer, facilitator and prompter, to encourage discussion across several issues around pre-defined themes (Sinclair, 2005). However, focus groups can be costly and difficult to arrange depending on the degree of anonymity required by each of the participants. They are also notoriously “hit and miss” depending on the availability of participants for particular sessions. In addition, they need effective management so that all participants have an opportunity to contribute without specific individuals dominating the interaction or people being affected by peer pressure not to voice particular issues (Friedrich and van der Poll, 2007). As with many qualitative analyses, care also needs to be taken in how results are fed into the requirements capture. When using interactive methods, it is important that opportunities are provided for participants to express their knowledge spontaneously, rather than only responding to directed questions from the investigator. This is because there is a danger that direct questions are biased by preconceptions that may prevent investigators exploring issues they have not already identified. On this basis, investigators should assume the role of “learners” rather than “hypothesis testers” (McNeese et al., 1995).

Observational and ethnographic methods can also be used to allow investigators to gather insights into socio-technical factors such as the impact of gate-keepers, moderators or more formal mechanisms in cyber-security. However, observation and ethnographic reviews can be intrusive, especially in sensitive domains where privacy and confidentiality are crucial. In addition, the presence of observers can elicit behaviors that are not normal for the individual or group being viewed as they purposely follow formal procedures or act in a socially desirable manner (Crabtree et al., 2003; Stanton et al., 2005). Furthermore, this method provides a large amount of rich data, which can be time consuming to analyze. However, when used correctly, and when the investigator has a clear understanding of the domain being observed, this method can provide rich qualitative and quantitative real-world data (Sinclair, 2005).

Investigators often focus on the tasks that users perform in order to elicit tacit information or to understand the context of work (Nuseibeh and Easterbrook, 2000). Thus the use of task analysis methods to identify problems and the influence of user interaction on system performance is a major approach within human factors (Kirwan and Ainsworth, 1992). A task analysis is defined as a study of what the user/system operator is required to do, including physical activities and cognitive processes, in order to achieve a specified goal (Kirwan and Ainsworth, 1992). Scenarios are often used to illustrate or describe typical tasks or roles in a particular context (Sutcliffe, 1998). There are generally two types of scenarios: those that represent and capture aspects of real work settings so that investigators and users can communicate their understanding of tasks to aid the development process; and those used to portray how users might envisage using a future system that is being developed (Sutcliffe, 1998). In the latter case, investigators often develop “user personas” that represent how different classes of user might interact with the future system and/or how the system will fit into an intended context of use. This is sometimes communicated through story-board techniques, presented as scripts, link-diagrams or conceptual diagrams, to illustrate processes and decision points of interest.

Whilst various methods assist investigators in eliciting user requirements, it is important to communicate the findings back to relevant users and stakeholders. Several techniques exist in user experience and user-centered design to communicate the vision between investigators and users. These generally include scenario-based modeling (e.g., tabular text narratives, user personas, sketches and informal media) and concept mapping (e.g., scripts, sequences of events, link and task analyses) including actions and objects during the design stage of user requirements (Sutcliffe, 1998). Scenario-based modeling can be used to represent the tasks, roles, systems, and how they interact and influence task goals, as well as identify connections and dependencies between the user, system and the environment (Sutcliffe, 1998). Concept mapping is a technique that represents the objects, actions, events (or even emotions and feelings) so that both the investigators and users form a common understanding in order to identify gaps in knowledge (Freeman and Jessup, 2004; McNeese et al., 1995). The visual representations of connections between events and objects in a concept map or link analysis can help identify conflicting needs, create mutual understandings and enhance recall and memory of critical events (Freeman and Jessup, 2004). Use-cases can also be used to represent typical interactions, including profiles, interests, job descriptions and skills as part of the user requirements representation (Lanfranchi and Ireson, 2009). Scenarios with personas can be used to describe how users might behave in specific situations in order to provide a richer understanding of the context of use. Personas typically provide a profile of a specific user, stakeholder or role based on information from a number of sources (e.g., a typical child using a chat-room; a parent trying to govern the safety of their child’s on-line presence; a shopper; a person using a home-banking interface). What is then communicated is a composite of key features, synthesized within a single profile that can then be used as a single point of reference (e.g., Mary is an 8-year-old girl with no clear understanding of internet grooming techniques; Malcolm is a 60-year-old man with no awareness of phishing tactics). In some cases personas are given names and background information such as age, education, recent training courses attended and even generic images/photos to make them more realistic or representative of a typical user. In other cases, personas are used anonymously in order to communicate generic characteristics that may be applicable to a wider demographic.

User requirements elicitation with users working in sensitive domains also presents issues of personal anonymity and data confidentiality (Kavali et al., 2005). In order to safeguard these, anonymity and pseudonymity can be used to disguise individuals, roles and relationships between roles (Pfitzmann and Hansen, 2005). In this way, either the identifying features of participants are kept separate from the data, or fictitious personas are used to illustrate and integrate observations across a number of participants. If done correctly, these personas can then be used as an effective communication tool without compromising the trust that has been built during the elicitation process.
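As an illustration of the pseudonymity principle described above, the following minimal Python sketch replaces participant names and roles with stable pseudonyms before observations are shared. It is not a method taken from the cited sources: the record fields (`name`, `role`, `observation`), the function names and the choice of a keyed hash (HMAC-SHA256) are all assumptions made for the example, and the secret key is assumed to be held only by the investigator.

```python
import hashlib
import hmac


def pseudonym(identifier: str, secret_key: bytes, prefix: str) -> str:
    """Derive a stable pseudonym from an identifier using a keyed hash.

    The same identifier and key always give the same pseudonym, so
    observations from one participant can still be linked together,
    but the original identifier is not recoverable without the key.
    """
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return f"{prefix}-{digest.hexdigest()[:8]}"


def pseudonymize_records(records, secret_key: bytes):
    """Return copies of the records with names and roles replaced.

    Each record is assumed (hypothetically) to be a dict with the keys
    'name', 'role' and 'observation'. Free-text observations are passed
    through unchanged and would still need manual review for
    identifying details.
    """
    return [
        {
            "participant": pseudonym(r["name"], secret_key, "P"),
            "role": pseudonym(r["role"], secret_key, "R"),
            "observation": r["observation"],
        }
        for r in records
    ]


if __name__ == "__main__":
    # Hypothetical interview notes; the key would be stored securely in practice.
    key = b"investigator-held-secret"
    notes = [
        {"name": "Alice Smith", "role": "Analyst", "observation": "Checks logs daily."},
        {"name": "Bob Jones", "role": "Analyst", "observation": "Escalates by phone."},
    ]
    for row in pseudonymize_records(notes, key):
        print(row)
```

A keyed hash is used here rather than a plain hash so that someone who already knows a list of likely names cannot regenerate the pseudonyms without the key. Disguising relationships between roles, as the text also recommends, would need further steps that this sketch does not cover.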

Using a variety of human factors methods provides investigators with a clearer understanding of how cyber-security, as a process, can operate from the perspective of socio-technical systems. Without a range of methods to employ, and without selecting those most suitable for a specific inquiry, there is a danger that the best data will be missed. In addition, without using the tools for communicating the findings of user requirements activities, the overall process would be incomplete and end-users and other stakeholders would miss opportunities to learn about cyber-security and/or contribute further insights into their roles. Such approaches allow investigators to develop a much better understanding of the bigger picture, such as the context and wider systems, as well as a more detailed understanding of specific tasks and goals.

Conclusion

A user-centered approach is essential to understanding cyber-security from a human factors perspective. It is also important to understand the context of work and related factors contributing to the overall performance of a security system. The adaptation of the security framework goes some way toward focusing attention on these factors. However, while many formal and established methodologies are in use, it is essential that the practitioner considers the key contextual issues outlined in this chapter before simply choosing a particular methodology. Whilst various methods and tools can indeed be helpful in gaining insight into particular aspects of requirements elicitation for cyber-security, caution is needed, as no valid model for eliciting such data currently exists specifically for cyber-security. At the moment, investigations rely on the experience, understanding and skill of the investigator in deciding which approach is best to adopt in order to collect robust data that can then be fed back into the system process.

Acknowledgment

Aspects of the work presented in this chapter were supported by the Engineering and Physical Sciences Research Council (EPSRC) as part of the “Shades of Grey” project (EP/H02302X/1).