Chapter 7. Special Topics in Cyber Security Engineering

with Julia Allen, Warren Axelrod, Stephany Bellomo, and Jose Morales

In This Chapter

• 7.2 Security: Not Just a Technical Issue

• 7.3 Cyber Security Standards

• 7.4 Security Requirements Engineering for Acquisition

• 7.5 Operational Competencies (DevOps)

7.1 Introduction

Earlier chapters mention some special topics for readers who want to dig a little deeper in specific areas. Those topics are presented here:

• This chapter starts with a discussion of governance, recognizing that security is more than just a technical issue, and describes how organizations can set clear expectations for business conduct and then follow through to ensure that the organization fulfills those expectations.

• Then this chapter describes some findings on cyber security standards, an important area that is still evolving.

• Organizations that perform acquisition rather than development can emphasize security requirements by using Security Quality Requirements Engineering for Acquisition (A-SQUARE). All too often, cyber security is left to contractors, with little attention from acquisition organizations until systems are delivered or operational. We hope to see organizations address cyber security much earlier in the acquisition lifecycle, and security requirements are a good place to start.

• Next, this chapter discusses DevOps. In the field we are finally seeing recognition of the synergy of development and operations, after years of seeing them treated as disparate activities in a stovepiped project environment.

• Finally, this chapter discusses some recent research that explores how organizations can use malware analysis to identify overlooked security requirements that led to vulnerabilities in earlier systems. These overlooked requirements can then be incorporated into the security requirements for future systems.

Each of these topics is unique, and you may not be interested in all of them. We offer them as a deeper exploration in specific areas of cyber security engineering.

7.2 Security: Not Just a Technical Issue

7.2.1 Introduction

This section defines the scope of governance concern as it applies to security. It describes some of the top-level considerations and characteristics to use as indicators of a security-conscious culture and to determine whether an effective program is in place.

Security’s days as just a technical issue are over. Security is becoming a central concern for leaders at the highest levels of many organizations and governments, and it transcends national borders. Today’s organizations face constant high-impact security incidents that can disrupt operations and lead to disclosure of sensitive information. Customers are demanding greater security as evidence suggests that violations of personal privacy, the disclosure of personally identifiable information, and identity theft are on the rise. Business partners, suppliers, and vendors are requiring greater security from one another, particularly when providing mutual network and information access. Networked efforts to steal competitive intelligence and engage in extortion are becoming more prevalent. Security breaches and data disclosure increasingly arise from criminal behavior motivated by financial gain as well as state-sponsored actions motivated by national strategies.

Current and former employees and contractors who have or had authorized access to their organization’s systems and networks are familiar with internal policies, procedures, and technology and can exploit that knowledge to facilitate attacks and even collude with external attackers. Organizations must mitigate malicious insider acts such as sabotage, fraud, theft of confidential or proprietary information, and potential threats to our nation’s critical infrastructure. Recent CERT research documents cases of successful malicious insider incidents even during the software development lifecycle (see note 2).

2. Refer to the CERT Insider Threat website (www.cert.org/insider-threat/publications) for presentations and podcasts on this subject.

In the United States, managing cyber security risk is becoming a national imperative. In February 2013, the U.S. president issued an executive order (see note 3) to enhance the security of the nation’s critical infrastructure, resulting in the development of the National Institute of Standards and Technology (NIST) Cybersecurity Framework [NIST 2014]. According to the IT Governance Institute, “boards of directors will increasingly be expected to make information security an intrinsic part of governance, integrated with processes they already have in place to govern other critical organizational resources” [ITGI 2006]. The National Association of Corporate Directors (NACD) states that the cyber security battle is being waged on two levels: protecting a corporation’s most valuable assets and dealing with the implications and consequences of disclosure in response to legal and regulatory requirements [Warner 2014]. According to an article in NACD Magazine, “Cybersecurity is the responsibility of senior leaders who are responsible for creating an enterprise-wide culture of security” [Warner 2014]. At an international level, the Internet Governance Forum (IGF; see note 4) provides a venue for discussion of public policy issues, including security, as they relate to the Internet. Ultimately, directors and senior executives set the direction for how enterprise security, including software security, is perceived, prioritized, managed, and implemented. This is governance in action.

3. www.whitehouse.gov/the-press-office/2013/02/12/executive-order-improving-critical-infrastructure-cybersecurity

4. www.intgovforum.org/cms/home-36966

The Business Roundtable (an association of chief executive officers of leading U.S. companies) recommends the following in its report More Intelligent, More Effective Cybersecurity Protection [Business Roundtable 2013]:

As additional evidence of this growing trend, the Deloitte 2014 Board Practices Report: Perspective from the Boardroom states the following [Deloitte 2014]:

According to the Building Security In Maturity Model [McGraw 2015],

While growing evidence suggests that senior leaders are paying more attention to the risks and business implications associated with poor or inadequate security governance, a recent Carnegie Mellon University survey indicates that there is still a lot of room for improvement [Westby 2012]:

• Boards are still not undertaking key oversight activities related to cyber risks, such as reviewing budgets, security program assessments, and top-level policies; assigning roles and responsibilities for privacy and security; and receiving regular reports on breaches and IT risks.

• 57% of respondents are not analyzing the adequacy of cyber insurance coverage or undertaking key activities related to cyber risk management to help them manage reputational and financial risks associated with the theft of confidential and proprietary data and security breaches.

Governance and Security

Governance means setting clear expectations for business conduct and then following through to ensure that the organization fulfills those expectations. Governance action flows from the top of the organization to all of its business units and projects. Done right, governance augments an organization’s approach to nearly any business problem, including security. National and international regulations call for organizations—and their leaders—to demonstrate due care with respect to security. This is where governance can help.

Moreover, organizations are not the only entities that benefit from strengthening enterprise security (see note 5) through clear, consistent governance. Ultimately, entire nations benefit. “The national and economic security of the United States depends on the reliable functioning of critical infrastructure. Cybersecurity threats exploit the increased complexity and connectivity of critical infrastructure systems, placing the nation’s security, economy, and public safety and health at risk” [NIST 2014].

5. We use the terms organization and enterprise to convey the same meaning.

Definitions of Security Governance

The term governance applied to any subject can have a wide range of interpretations and definitions. For the purpose of this chapter, we define governing for enterprise security (see note 6) as follows [Allen 2005]:

• Directing and controlling an organization to establish and sustain a culture of security in the organization’s conduct (values, beliefs, principles, behaviors, capabilities, and actions)

• Treating adequate security as a non-negotiable requirement of being in business

6. As used here, security includes software security, information security, application security, cyber security, network security, and information assurance. It does not include disciplines typically considered within the domain of physical security such as facilities, executive protection, and criminal investigations.

The NIST Cybersecurity Framework defines information security governance as follows: “The policies, procedures, and processes to manage and monitor the organization’s regulatory, legal, risk, environmental, and operational requirements are understood and inform the management of cybersecurity risk” [NIST 2014].

In the context of security, governance incorporates a strong focus on risk management. Governance is an expression of responsible risk management, and effective risk management requires efficient governance. One way governance addresses risk is to specify a framework for decision making. It makes clear who is authorized to make decisions, what the decision-making rights are, and who is accountable for decisions. Consistency in decision making across an enterprise, a business unit, or a project boosts confidence and reduces risk.
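
The decision-making framework described above can be pictured as a small lookup structure. The following is only a minimal sketch under stated assumptions: the class `DecisionRight`, the function `may_decide`, and the example decisions and roles are all hypothetical illustrations, not part of any cited standard or framework.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class DecisionRight:
    """One entry in a hypothetical decision-making framework."""
    decision: str                      # what is being decided
    authorized_roles: FrozenSet[str]   # who is authorized to decide
    accountable_role: str              # who is accountable for the decision


def may_decide(framework: Dict[str, DecisionRight], decision: str, role: str) -> bool:
    """Return True only if the framework explicitly authorizes the role."""
    right = framework.get(decision)
    return right is not None and role in right.authorized_roles


# Illustrative entries only; real decisions and roles vary by organization.
FRAMEWORK = {
    right.decision: right
    for right in (
        DecisionRight("set security policy",
                      frozenset({"executive team"}), "board of directors"),
        DecisionRight("accept project risk",
                      frozenset({"business unit leader"}), "business unit leader"),
    )
}
```

In this sketch, a decision that is not listed is treated as unauthorized for everyone, which is one way to make gaps in the framework visible rather than silently permitted.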

Duty of Care

In the absence of some type of meaningful governance structure and way of managing and measuring enterprise security, the following questions naturally arise (and in all of them, organization can include an entire enterprise, a business or operating unit, a project, and all of the entities participating in a software supply chain):

• How can an organization know what its greatest security risk exposures are?

• How can an organization know if it is secure enough to do the following:

    • Detect and prevent security events that require business-continuity, crisis-management, and disaster-recovery actions?

    • Protect stakeholder interests and meet stakeholder expectations?

    • Comply with regulatory and legal requirements?

    • Develop, acquire, deploy, operate, and use application software and software-intensive systems?

    • Ensure enterprise viability?

ANSI has the following to say with respect to an organization’s fiduciary duty of care [ANSI 2008]:

As a result, director and officer oversight of information and cyber security (including software security) is embedded within the duty of care owed to enterprise shareholders and stakeholders. Leaders who hold equivalent roles in government, non-profit, and educational institutions must view their responsibilities similarly.

Leading by Example

Demonstrating duty of care with respect to security is a tall order, but leaders must be up to the challenge. Their behaviors and actions with respect to security influence the rest of the organization. When staff members see the board and executive team giving time and attention to security, they know that security is worth their own time and attention. In this way, a security-conscious culture can grow.

It seems clear that boards of directors, senior executives, business unit and operating unit leaders, and project managers all must play roles in making and reinforcing the business case for effective enterprise security. Trust, reputation, brand, stakeholder value, customer retention, and operational costs are all at stake if security governance and management are performed poorly. Organizations are much more competent in using security to mitigate risk if their leaders treat it as essential to the business and are aware of and knowledgeable about security issues.

Characteristics of Effective Security Governance and Management

One of the best indications that an organization is addressing security as a governance and management concern is a mutually reinforcing set of values, beliefs, principles, behaviors, capabilities, and actions that are consistent with security best practices and standards. These measures, which aid in building a security-conscious culture [Coles 2015], can be expressed as statements about the organization’s current behavior and condition (see note 7).

7. See also “Characteristics of Effective Security Governance” [Allen 2007] for a table of 11 characteristics that compares and contrasts an organization with effective governance practices and one where these practices are missing.

Leaders who are committed to dealing with security at a governance level can use the following list to determine the extent to which a security-conscious culture is present (or needs to be present) in their organizations:

• The organization manages security as an enterprise issue, horizontally, vertically, and cross-functionally throughout the organization and in its relationships with partners, vendors, and suppliers. Executive leaders understand their accountability and responsibility with respect to security for the organization, their stakeholders, the communities they serve including the Internet community, and the protection of critical national infrastructures and economic and national security interests.

• The organization treats security as a business requirement. The organization sees security as a cost of doing business and an investment rather than an expense or discretionary budget-line item. Leaders at the top of the organization set security policy with input from key stakeholders. Business units and staff are not allowed to decide unilaterally how much security they want. The organization provides adequate, sustained funding and allocates adequate security resources.

• The organization considers security as an integral part of normal strategic, capital, project, and operational planning cycles. Strategic and project plans include achievable, measurable security objectives and effective controls and metrics for implementing those objectives. Reviews and audits of plans identify security weaknesses and deficiencies as well as requirements for the continuity of operations. They measure progress against plans of action and milestones. Determining how much security is enough relates directly to how much risk exposure an organization can tolerate.

• The organization addresses security as part of any new project initiation, acquisition, or relationship and as part of ongoing project management. The organization addresses security requirements throughout all system/software development lifecycle phases, including acquisition, initiation, requirements engineering, system architecture and design, development, testing, release, operations/production, maintenance, and retirement.

• Managers across the organization understand how security serves as a business enabler (versus an inhibitor). They view security as one of their responsibilities and understand that their team’s performance with respect to security is measured as part of their overall performance.

• All personnel who have access to digital assets and enterprise networks understand their individual responsibilities with respect to protecting and preserving the organization’s security, including the systems and software that it uses and develops. Awareness, motivation, and compliance are the accepted, expected cultural norm. The organization consistently applies and reinforces security policy compliance through rewards, recognition, and consequences.

The relative importance of each of these statements depends on the organization’s culture and business context. Readers accustomed to using ISO/IEC 27001 [ISO/IEC 2013] will find similar topics addressed there at a higher level.

7.2.2 Two Examples of Security Governance

Payment Card Industry

The development, stewardship, and enforcement of the Payment Card Industry (PCI) Data Security Standard (DSS) [PCI Security Standards Council 2015] represent a demonstrable act of governance by the PCI over its members and merchants. This standard presents a comprehensive set of 12 requirements for enhancing payment account data security and “was developed to facilitate the broad adoption of consistent data security measures globally.” It “applies to all entities involved in payment card processing—including merchants, processors, acquirers, issuers, and service providers” [PCI Security Standards Council 2015].

An additional standard that is part of the PCI DSS standards suite is the Payment Application Data Security Standard (PA-DSS) [PCI Security Standards Council 2013]. PA-DSS specifically addresses software security. Its purpose is to help vendors of payment applications develop and deploy products that are more secure, that protect cardholder data, and that comply with the broader PCI standard. All 14 PA-DSS practice descriptions include detailed subpractices and testing procedures for verifying that the practice is in place. The PCI Security Standards Council maintains a list of validated payment applications that meet this standard. Payment card merchants can use this list to select applications that ensure better protection of cardholder data.

U.S. Energy Sector

In response to the U.S. 2013 executive order, the U.S. Department of Energy (DOE) developed the Electricity Subsector Cybersecurity Capability Maturity Model (ES-C2M2) to understand and improve the cyber security posture of the U.S. energy sector. The 10 domains that compose the model and the companion self-assessment method “provide a mechanism that helps organizations evaluate, prioritize, and improve cybersecurity capabilities” [DoE 2014a]. U.S. energy sector owners and operators are using the model to improve their ability to detect, respond to, and recover from cyber security incidents. As a result of the successful use of ES-C2M2, the DOE developed an equivalent model [DoE 2014b], with which the U.S. oil and natural gas subsector concurred, that is experiencing active adoption and use. The development, use, and stewardship of these models are strong examples of security governance for two national critical infrastructure sectors.

7.2.3 Conclusion

Most senior executives and managers understand governance and their responsibilities with respect to it. The intent here is to help leaders expand their perspectives to include security and to incorporate enterprise-wide security thinking into their own governance and management actions and those of their organizations. An organization’s ability to achieve and sustain adequate security starts with executive sponsorship and commitment. Standards such as ISO/IEC 27001 [ISO/IEC 2013] reinforce and supplement industry initiatives.

7.3 Cyber Security Standards

To be able to certify compliance with security standards, we clearly first need to have a set of generally accepted standards. Although there have been quite a number of attempts to achieve acceptable information security standards, no overarching set is being consistently followed.

Governments around the globe favor the Common Criteria for Information Technology Security Evaluation, also known as ISO/IEC 15408. The PCI DSS [PCI Security Standards Council 2015] and the PA-DSS [PCI Security Standards Council 2013] apply to those handling payment card information and the vendors of software that process this information, respectively.

A subset of software systems need to be certified according to the Common Criteria or PCI standards in order for their acquisition and/or use to be permitted. It is noteworthy that, in both these cases, the standard-setting authorities (i.e., various government agencies and payments processing companies) are relatively powerful in terms of purchasing power (governments) or scope of influence (PCI).

Other compliance or certification reviews—such as auditing against International Organization for Standardization (ISO) or International Electrotechnical Commission (IEC) standards in general as well as specific audit reviews in particular—usually target specific departments and processes within an organization, and the certifications mostly apply only to an examined process at a specific point in time (for example, SSAE 16 reviews, which replaced SAS 70 Type 1 and Type 2 reviews). Such reviews usually do not dig deeply into the particular technologies in operation, nor do they determine whether these technologies meet a certain quality level. However, certain types of technical audits examine program code, platforms, networks, and the like.

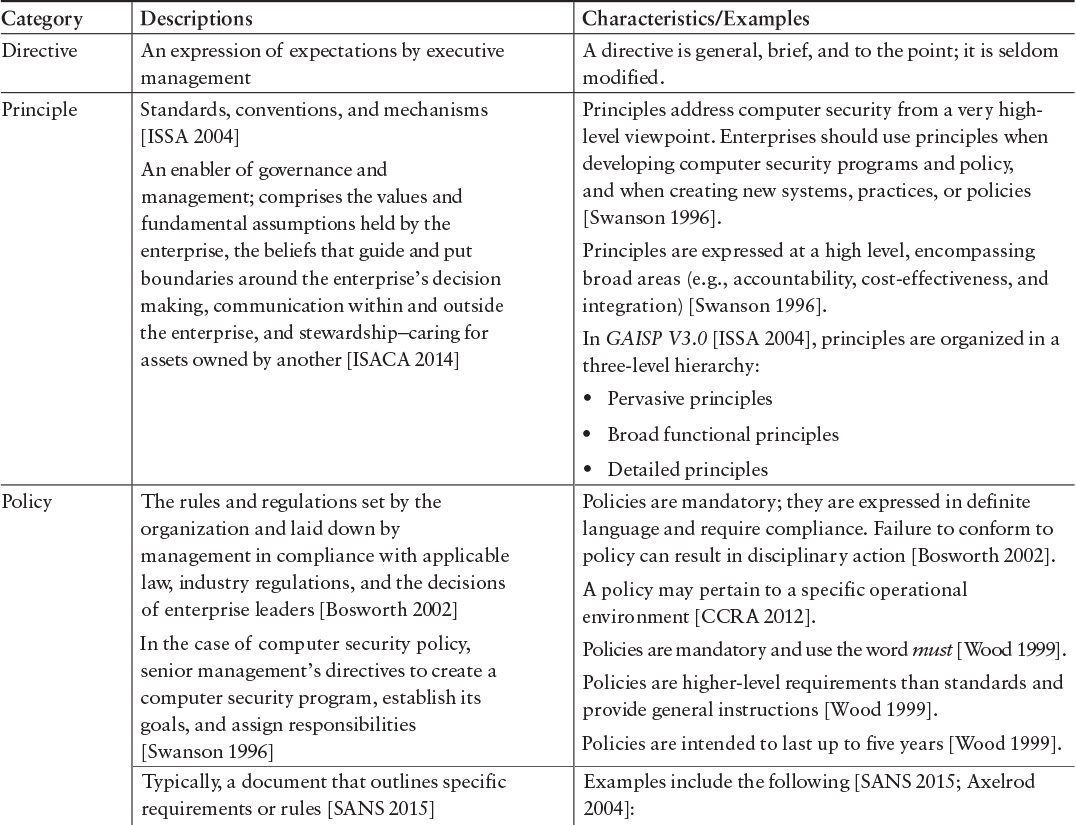

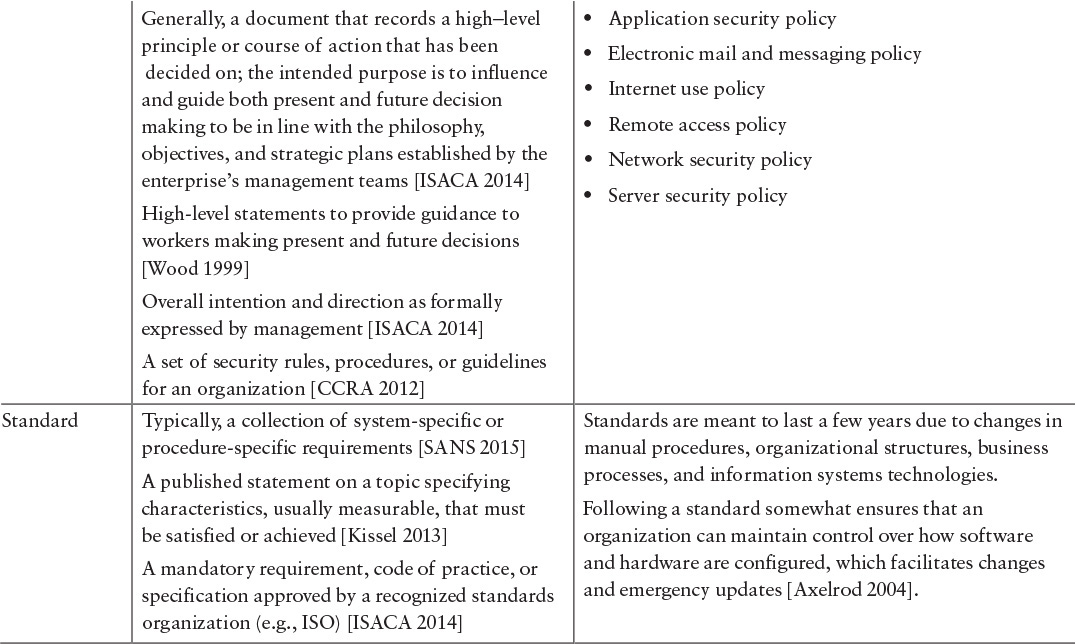

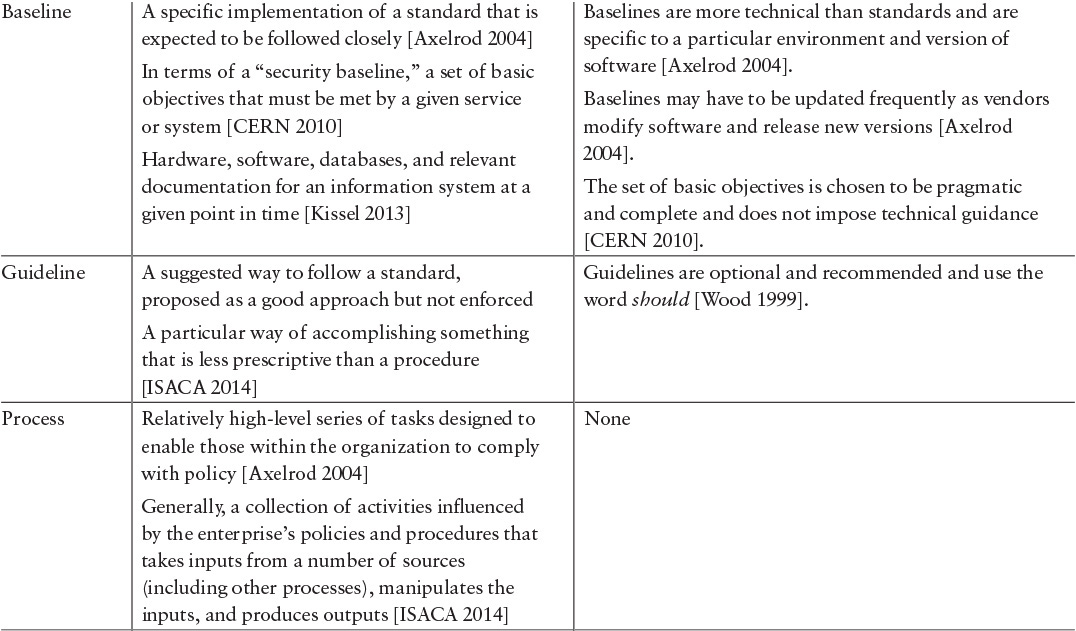

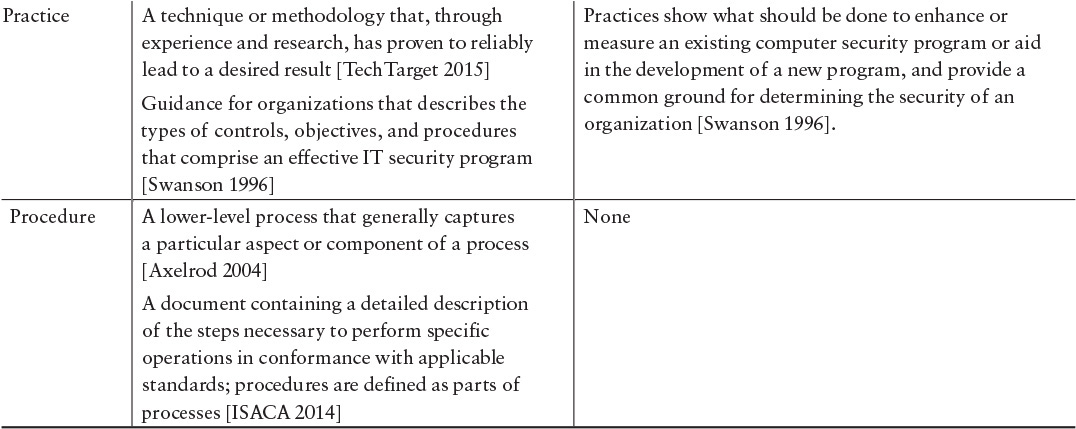

Some regulators require broader “policy and procedures” compliance reviews. For example, the National Association of Securities Dealers Regulations (NASDR) requires securities firms in the U.S. to perform and report on extensive reviews of back-office and IT policy and procedures to ensure that they actually exist, are fully documented, and meet certain governance requirements. This situation suggests that we must better understand what standards are and how they relate to directives, policy, guidelines, procedures, etc. Table 7.1 provides a comparison among these various categories.

Table 7.1 includes descriptions from a variety of sources. It is interesting to note that they are not all definitions, and though some descriptions are similar, they are not entirely consistent. Nonetheless, our intent is to show the range of descriptions, including where they are the same and where they differ. Inconsistencies might result in the misuse of definitions and terms, confusion about the meaning of terms, and consequent misdirection. These problems could account in large part for the inconsistent use of information security standards (see note 9).

9. A similar lack of focus and specificity exists in attempts to define policy standards and procedures for cyber warfare, which can have much graver outcomes. Nevertheless, the lack of cyber security standards, despite the proliferation of policies, is extremely costly in financial, economic, and social terms.

The main result of this disparity of descriptions is a hierarchy of rules, some of which are mandatory (“must”) and some of which are optional (“should”). These rules are set at different levels within an organization, usually apply to lower-level groups, and are enforced and audited by other parties.

We need accepted standards and a rich compendium of baselines in order to support universal measures of software cyber security assurance. When standards are not used, the fallback is usually to substitute common, essential, or “best” practices, with the argument that an organization cannot be faulted if it is as protective as or more protective than its peers in applying available tools and technologies (see note 10).

10. Donn Parker asserts that it is impossible to calculate cyber security risk, so metrics are meaningless [Parker 2009]. Steven Lipner claims that a metric for software quality does not exist [Lipner 2015]. Parker suggests implementing the best practices of peer organizations. Peter Tippett, who claims to have invented the first antivirus product, stated that there is no such thing as a “best practice” and instead uses the term “essential practice” to refer to a generally accepted approach [Tippett 2002].

This logic is somewhat questionable since it frequently leads to compliance with mediocrity: If one organization is vulnerable, then other organizations that applied the same level of security are vulnerable also. We see this phenomenon in action when similar organizations fall victim to similar successful attacks.

Experts have argued that software monocultures lead to less secure systems environments. We can consider this argument in the context of software cyber security assurance processes: When many organizations in the same industries use similar systems and observe similar security measures, those organizations become more vulnerable. Organizations could benefit from varying their approaches to cyber security assurance.

7.3.2 A More Optimistic View of Cyber Security Standards

We are starting to see the emergence of cyber security standards that provide more cause for optimism than the earlier work referred to in Section 7.3.1. For example, a recent interview (see note 11) highlighted the following cyber security standards:

11. www.forbes.com/sites/peterhigh/2015/12/07/a-conversation-with-the-most-influential-cybersecurity-guru-to-the-u-s-government/

• NIST SP 800-160: Systems Security Engineering: An Integrated Approach to Building Trustworthy Resilient Systems

• IEEE/ISO 15288: Systems and Software Engineering—System Life Cycle Processes

• The NIST TACIT approach for system security engineering: Threat, Assets, Complexity, Integration, Trustworthiness (see note 12)

12. Summarized in slide form at http://csrc.nist.gov/groups/SMA/fisma/documents/joint-conference_12-04-2013.pdf.

Another important document is NIST SP 800-53: Recommended Security Controls for Federal Information Systems and Organizations. This document has undergone several revisions in recent years and is being used extensively in government software systems acquisition and development. In addition, standards such as ISO/IEC 27001, ISO/IEC 27002, ISO/IEC 27034, and ISO/IEC 27036 all provide useful support, and NIST Special Publication 800-161 addresses supply chain risk.

7.4 Security Requirements Engineering for Acquisition

Although much work in security requirements engineering research has been aimed at in-house development, many organizations acquire software from other sources rather than develop it in-house. These organizations are faced with the same security concerns as organizations doing in-house development, but they usually have less control over the development process. Acquirers therefore need a way to assure themselves that security requirements are being addressed, regardless of the development process.

There have been some efforts related to acquisition of secure software. The Open Web Application Security Project (OWASP) group provides guidance for contract language that can be used in acquisition; the guidance includes a brief discussion of requirements [OWASP 2016]. An SEI method is available to assist in selecting COTS software [Comella-Dorda 2004]. The Common Criteria approach provides detailed guidance on how to evaluate a system for security [Common Criteria 2016]. In addition, there are security requirements engineering methods, such as SQUARE [Mead 2005], SREP [Mellado 2007], and Secure Tropos [Giorgini 2006]; some of these methods address the acquisition of secure software. The recent NIST Special Publication 800-53, Revision 4 provides guidance for selecting security controls [NIST 2013], and NIST Special Publication 800-161 addresses supply chain risk [NIST 2015].

We next examine various acquisition cases for security requirements engineering, using the SQUARE process model as a baseline.

7.4.1 SQUARE for New Development

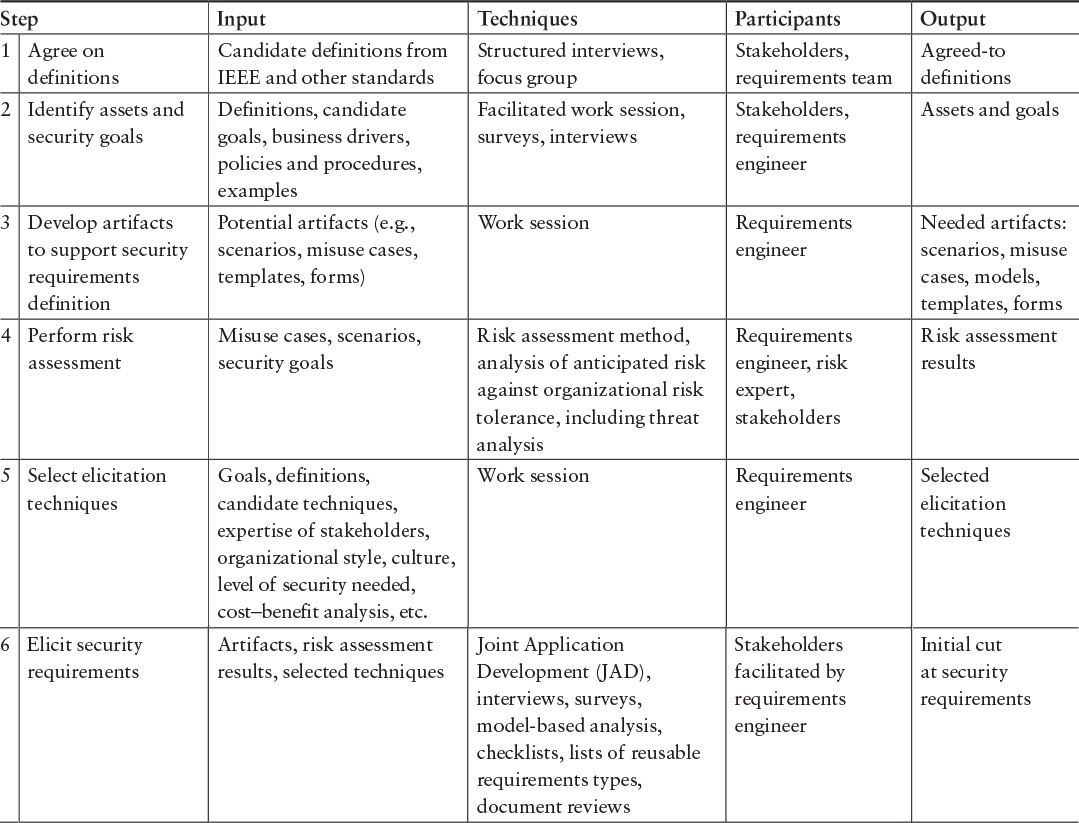

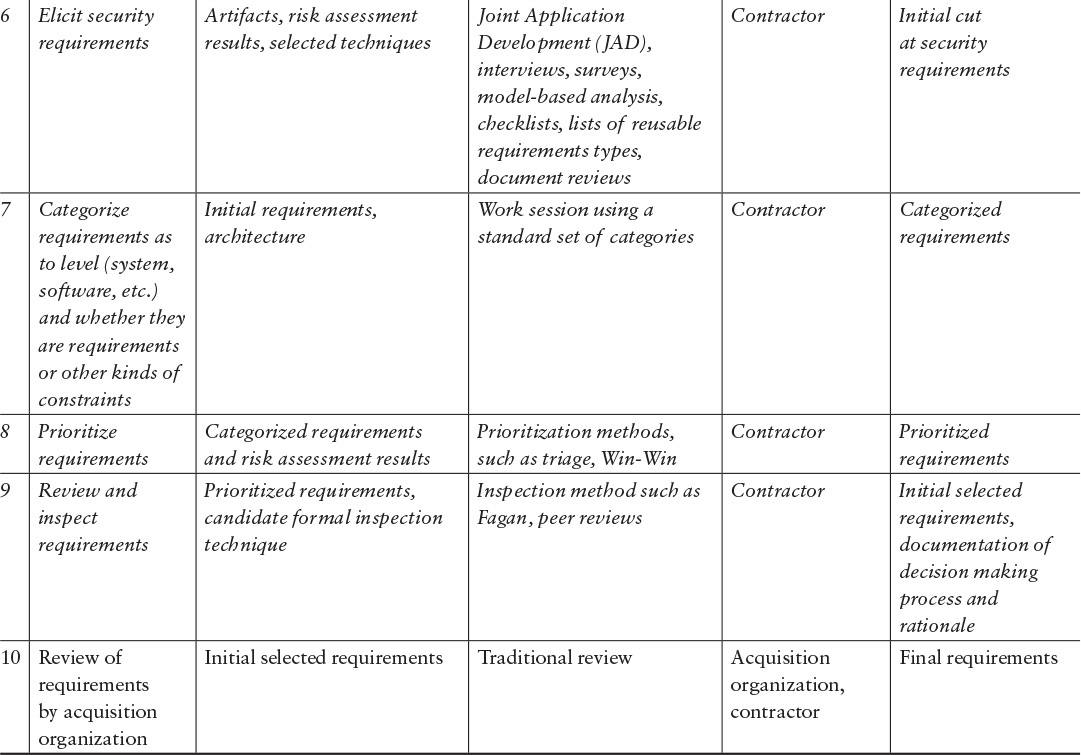

The SQUARE process for new development is shown in Table 7.2. This process has been documented [Mead 2005]; described in various books, papers, and websites [Allen 2008]; and used on a number of projects [Chung 2006]. This is the process that was used as the basis for SQUARE for Acquisition (A-SQUARE).

7.4.2 SQUARE for Acquisition

We next present various acquisition cases and the associated SQUARE adaptations.

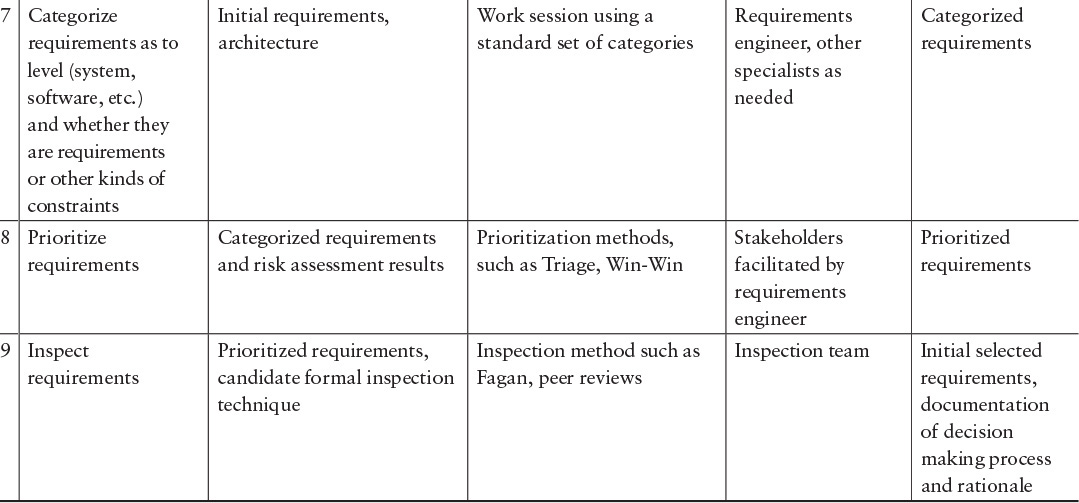

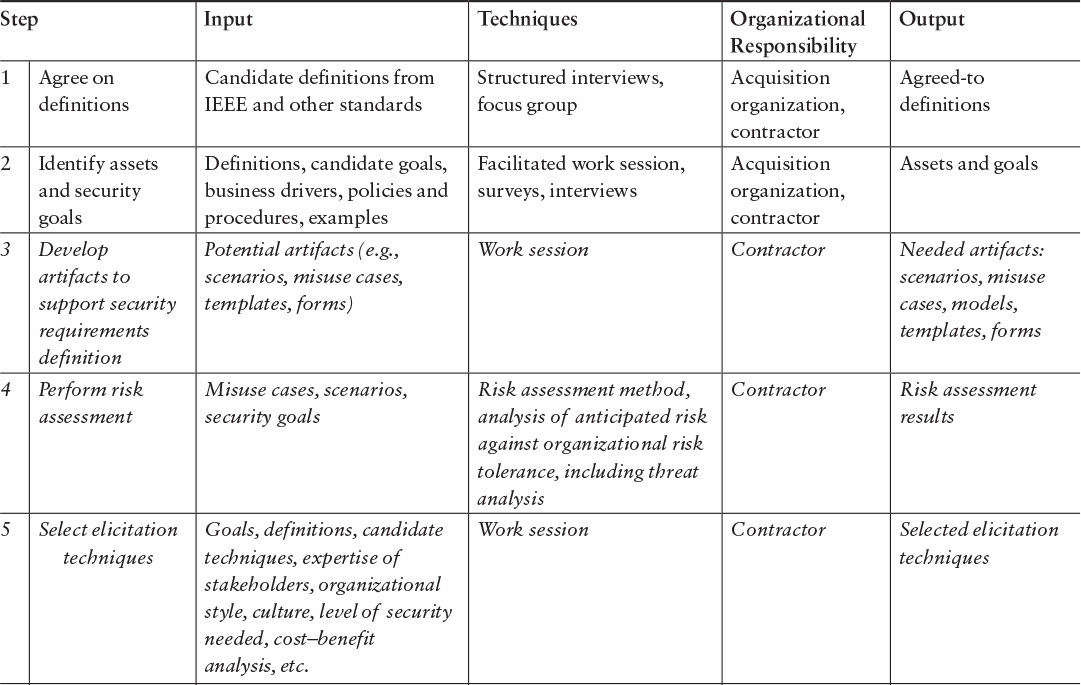

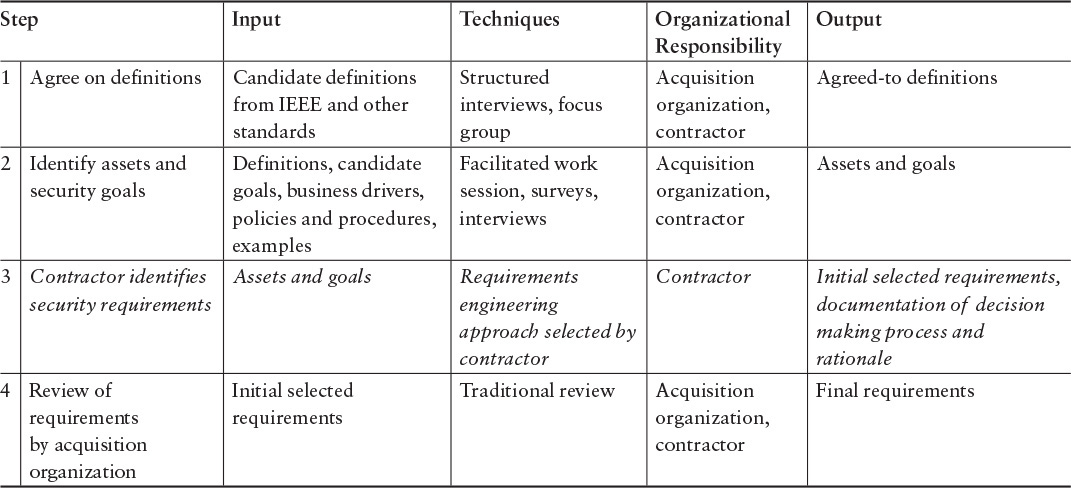

Case 1: The Acquisition Organization Has the Typical Client Role for Newly Developed Software

In this example, the contractor is responsible for identifying requirements. We use SQUARE as the underlying method, but the contractor could use another method to identify the security requirements. If SQUARE is used throughout, steps 3–9 (highlighted in italics in Table 7.3) are performed by the contractor. This case presumes that the contract award has been made, and the contractor is on board. The acquisition organization has the typical client role in this example. It’s important to note the client involvement in steps 1, 2, and 10. Also note that if the acquisition organization works side by side with the contractor, the separate review in step 10 can be eliminated, as the client inputs are considered in the earlier steps.

In the event that the contractor’s security requirements engineering process is unspecified, the resulting compressed process looks as shown in Table 7.4.
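
The division of labor in Case 1 can be sketched as a simple mapping from step number to responsible party. This is only an illustrative sketch: the function name `case1_step_owners` and the role labels are assumptions, and the step names from Table 7.3 are deliberately not reproduced; only the step numbers stated in the text are used.

```python
from typing import Dict

CLIENT = "acquisition organization (client) involved"
CONTRACTOR = "contractor"


def case1_step_owners(side_by_side: bool = False) -> Dict[int, str]:
    """Return who handles each numbered step in Case 1.

    Per the text: the client is involved in steps 1, 2, and 10, and the
    contractor performs steps 3-9. If the client works side by side with
    the contractor, the separate step-10 review is eliminated.
    """
    owners = {step: CONTRACTOR for step in range(3, 10)}  # steps 3 through 9
    owners[1] = CLIENT
    owners[2] = CLIENT
    if not side_by_side:
        owners[10] = CLIENT  # separate client review
    return dict(sorted(owners.items()))


if __name__ == "__main__":
    for step, owner in case1_step_owners().items():
        print(f"Step {step}: {owner}")
```

Calling `case1_step_owners(side_by_side=True)` returns the same mapping without step 10, reflecting the case in which client inputs are already considered in the earlier steps.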

Case 2: The Acquisition Organization Specifies the Requirements as Part of the RFP for Newly Developed Software

If an acquisition organization specifies requirements as part of an RFP, then the original SQUARE for development should be used (refer to Table 7.2). Note that relatively high-level security requirements may result from this exercise, since the acquisition organization may be developing the requirements in the absence of a broader system context. Also, the acquisition organization should avoid identifying requirements at a granularity that overly constrains the contractor.

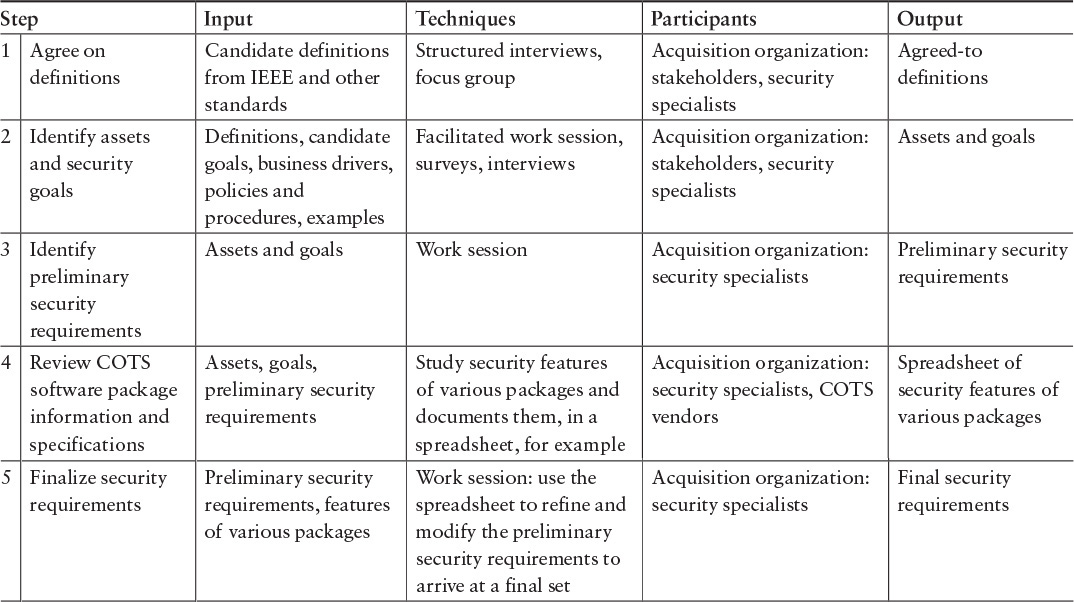

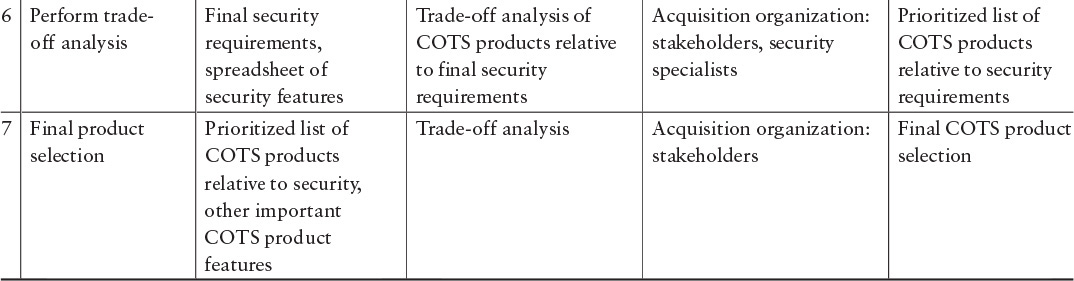

Case 3: Acquisition of COTS Software

In acquiring COTS software, an organization should develop a list of requirements for the software and compare those requirements with the software packages under consideration (see Table 7.5). The organization may need to prioritize security requirements together with other requirements [Comella-Dorda 2004]. Compromises and trade-offs may need to be made, and the organization may have to figure out how to satisfy some security requirements outside the software itself—for example, with system-level requirements, security policy, or physical security. The requirements themselves are likely to be high-level requirements that map to security goals rather than detailed requirements used in software development.

Note that in acquiring COTS software, organizations often do minimal trade-off analysis and may not consider security requirements at all, even when they do such trade-off analysis. The acquiring organization should consider “must have” versus “nice to have” security requirements. In addition, reviewing the security features of specific offerings may help the acquiring organization identify the security requirements that are important.
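
The comparison of requirements against candidate packages can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method documented in [Comella-Dorda 2004]: the `Requirement` class, the `compare_packages` function, and the example requirement and package names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Requirement:
    name: str
    must_have: bool  # False means "nice to have"


def compare_packages(
    requirements: List[Requirement],
    packages: Dict[str, Set[str]],
) -> Dict[str, Dict[str, List[str]]]:
    """For each package, list unmet must-haves and met nice-to-haves.

    `packages` maps a package name to the set of requirement names
    that the package is believed to satisfy.
    """
    results = {}
    for package, satisfied in packages.items():
        results[package] = {
            "missing_must_have": sorted(
                r.name for r in requirements
                if r.must_have and r.name not in satisfied
            ),
            "nice_to_have_met": sorted(
                r.name for r in requirements
                if not r.must_have and r.name in satisfied
            ),
        }
    return results


# Hypothetical high-level requirements and candidate packages.
REQUIREMENTS = [
    Requirement("encrypt data at rest", must_have=True),
    Requirement("role-based access control", must_have=True),
    Requirement("audit log export", must_have=False),
]
PACKAGES = {
    "Package A": {"encrypt data at rest", "role-based access control"},
    "Package B": {"encrypt data at rest", "audit log export"},
}
```

In this sketch, an unmet must-have does not automatically disqualify a package. It flags a requirement that would have to be satisfied outside the software itself, for example through system-level requirements, security policy, or physical security.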

7.4.3 Summary

The original SQUARE method has been documented extensively and has been used in a number of case studies and pilots. A number of robust associated tools exist, and academic course materials and workshops are also available. SQUARE for Acquisition (A-SQUARE), the set of alternative versions of SQUARE presented here for use in acquisition, is not quite as mature because it was developed more recently. It has been taught in university and government settings, and there is a prototype tool for it. Organizations that use SQUARE or A-SQUARE are better positioned to address security requirements early and to avoid the operational security flaws that result from overlooked security requirements.

It is important to note that A-SQUARE is just one approach for identifying security requirements during acquisition. We suggest exploring other existing methods before making a decision on which one to use.

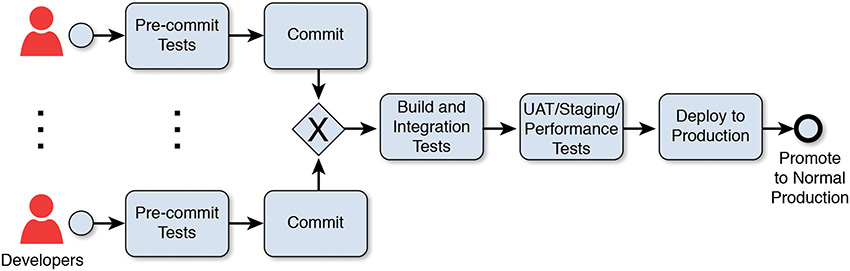

7.5 Operational Competencies (DevOps)

7.5.1 What Is DevOps?

DevOps is a synergistic convergence of emerging concerns that stem from two separate communities: the software development community and the operations community (release engineers and system administrators). Prior to the birth of the DevOps movement, both communities suffered from the inability to rapidly and reliably deploy software, and each felt the effects in different ways. From the software development perspective, Agile teams found progress on delivery of new features grinding to a halt as they entered the integration, certification, and deployment phases of the software lifecycle. From the operations perspective, the release engineers suffered from unstable production software and painful releases. Symptoms of dysfunction included a culture of finger pointing between development (Dev) and operations (Ops) teams, delays due to late discovery of security or resiliency issues, and error-prone/human-intensive release processes.

Problems such as these drove a handful of practitioners to talk openly about practices they apply on their own projects to address similar problems. This discussion ultimately led to the birth of the DevOps movement. In 2009, Flickr’s John Allspaw and Paul Hammond gave a cornerstone talk at Velocity, titled “10+ Deploys Per Day”14 that got the attention of the operations community. They described several practices for reducing deployment cycle time while maintaining a high degree of operational stability/resiliency. Also in 2009, Patrick Debois from Belgium and Andrew “Clay” Shafer from the United States coined the term DevOps, and Debois held the first DevOpsDays event in Ghent in October of that year. Today, DevOpsDays conferences are held in cities all over the world. The initial scope of the DevOps movement focused primarily on cloud-based information technology (IT) systems; however, the scope has since broadened. There is general agreement that the specific DevOps practices used on each project can and should be evaluated for suitability and tailored for each project context. We are seeing some DevOps practices applied to non-IT-based systems, including embedded and real-time safety-critical systems, such as avionics, automobiles, and weapons systems [Regan 2014].

14. www.youtube.com/watch?v=LdOe18KhtT4

DevOps is essentially a term for a group of concepts that catalyzed into a movement. At a high level, DevOps can be characterized by two major themes: (1) collaboration between development and operations staff and (2) a focus on improving operational work efficiency and effectiveness. We discuss these themes in the next sections.

Collaboration Between Development and Operations Staff

The focus of DevOps is to break down artificial walls between development and operations teams that have evolved due to organizational separation of these groups. We refer to this separation as “stovepiping.” The idea is to change the culture from one in which development teams “throw the software over the fence” to the operations teams to a more collaborative, integrated culture. Examples of suggested DevOps practices to break down these barriers from both sides of the fence include earlier involvement of release engineers in the software design and assignment of production support “pager duty” to the software developers after a new feature release to instill a sense of post-development ownership.

Focus on Improvement in Operational Work Efficiency and Effectiveness

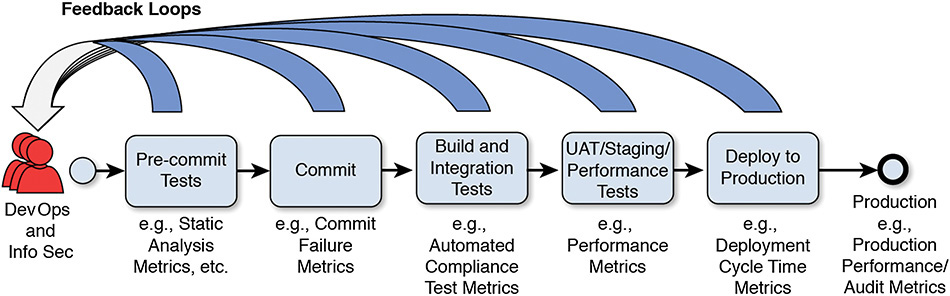

The trend toward improved efficiency is based on Lean principles [Nord 2012] and aims to improve the efficiency and effectiveness of the end-to-end deployment lifecycle. Lean objectives adopted by the DevOps community include reducing waste, removing bottlenecks, and speeding up feedback cycle time. This effort requires team members to have the ability to reason about the various stages features go through during a DevOps deployment cycle. The popular book by Humble and Farley, Continuous Delivery, introduces the concept of a deployment pipeline for this purpose (shown in Figure 7.1). The deployment pipeline provides a mental model for reasoning about where bottlenecks and inefficiencies occur as features make their way from development through testing and, finally, to production.

Figure 7.1 Example of a Deployment Pipeline [Bass 2015]

For many projects, software releases were high-risk events; because of this, many software projects bundled various features together, delaying delivery of completed functionality to users. Continuous delivery suggests a paradigm shift in which features/development changes are individually deployed after the software has passed a series of automated tests/pre-deployment checks. We revisit the deployment pipeline in the next section.

7.5.2 DevOps Practices That Contribute to Improving Software Assurance

The previous section provides background on the emergence of the DevOps movement and a brief discussion of related trends and concepts. In this section, we turn our attention to emerging DevOps practices that contribute to improving software assurance (which is defined in Chapter 1, “Cyber Security Engineering: Lifecycle Assurance of Systems and Software”). We focus primarily on software assurance as it relates to cyber security.

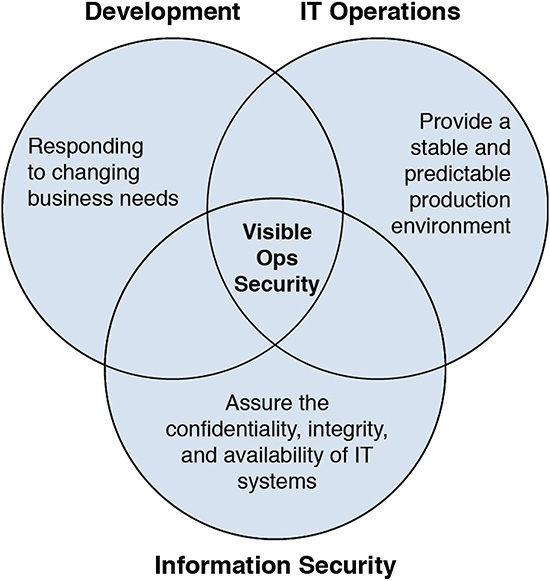

In the popular book Visible Ops Security, the authors describe competing objectives between development and operations groups that cause natural tension [Kim 2008]. Development teams are pressured to make changes faster to respond to business needs. On the other hand, operations teams are incentivized to reduce risk and minimize change because they are responsible for maintaining stable, secure, and reliable IT service. The idea behind Visible Ops Security is that integrating information security (InfoSec) staff on DevOps projects helps alleviate this conflict (see Figure 7.2).

Figure 7.2 Visible Ops Security Focuses on the Point Where IT Operations, Development, and Information Security Objectives Overlap [Kim 2008]

The process of integrating InfoSec into the DevOps project context is described in four phases in Visible Ops Security [Kim 2008]. In the next sections, we use these four phases to structure the discussion of security-related DevOps practices. The term DevOpsSec refers to extending the concept of DevOps by integrating InfoSec.

Phase 1: Integration of InfoSec Experts

The long-term goal of integrating InfoSec professionals into DevOps projects is to gain visibility into potential software assurance risks. In Phase 1, InfoSec experts gain situational awareness by analyzing processes supporting daily operations such as change management, access control, and incident handling procedures. This analysis requires establishing an ongoing and trusting relationship between InfoSec professionals and the rest of the project team (developer and operations staff) and balancing the concerns shown in Figure 7.2. In addition, the team must establish an integration strategy. Integration strategies may involve co-locating InfoSec personnel with the project team for the life of the project or having a cross-functional, matrixed integration. Regardless of the integration strategy, InfoSec teams should resist the urge to create a new stand-alone DevOpsSec team, which can result in a new stovepipe within the organization.

Phase 2: Business-Driven Risk Analysis

Phase 2 focuses first on understanding what matters most to the business (thus the business-driven part of the phase title). Once the team understands business priorities and key business processes, it identifies IT controls required to protect critical resources. The team can apply several practices in this phase to address security concerns; examples include threat modeling and analysis and DevOpsSec requirements and design analysis. We discuss these practices in the next sections.

Threat Modeling and Analysis

Risk analysis approaches, such as those described in the Resilience Management Model analysis method [Caralli 2011b], may be applied in this phase to identify critical resources. Critical resources may be people, processes, facilities, or system artifacts, such as databases, software processes, configuration files, etc. After identifying critical resources, the team applies a structured analysis method to identify ways to protect those assets. In the spirit of Lean, threat modeling and analysis activities should give special consideration to protecting resources related to the business processes that provide the highest value to the organization. (Value depends on the business objectives and may take many forms, such as monetary assets, user satisfaction, mission goal achievement, etc.)

DevOpsSec Requirements and Design Analysis

Because architectural design decisions can have broad implications, InfoSec should factor into early reviews of emerging requirements and architectural design decisions. Context-specific design choices, in the form of architecture design tactics, can have a significant impact on resiliency and/or security posture. For example, a recent research study of architecture tactics used on real software projects to enable deployability revealed the use of several security-related design tactics, including fault detection, failover, replication, and module encapsulation/localization tactics [Bellomo 2014]. InfoSec professionals can leverage feasibility prototypes—in addition to document-driven analysis—to experiment with options for high-risk architectural changes and to analyze potential runtime threat exposure for new design concepts.

Phase 3: Integration and Automation of Information Security Standards/Controls

In Phase 3, the objective is to improve the quality of releases by integrating and automating information security standards compliance checks into projects and builds. In the spirit of improving efficiency, teams should use automation to replace manual and/or error-prone tasks as much as possible. There are several opportunities for automation at each stage throughout the deployment pipeline. The earlier a deficiency is detected in the deployment pipeline, the less costly (and painful) it is to address. For example, a secure coding error found at build or check-in time can usually be fixed by the developer quickly with little impact to others, whereas that same coding change made in a later stage could impact other components using that code. Automated tests may be executed against a variety of software and environmental artifacts such as code files, runtime software components, configuration files, etc. Many types of tests can be automated; for our purposes, we focus on automated tests that teams can leverage for security analysis and detection purposes. Test artifacts become available at different stages in the deployment pipeline; therefore, we have organized the discussion of the following information security automation tests according to the stages shown in Figure 7.1.

Pre-Commit Tests

For code-level security conformance checking, teams can run static analysis tests against code or other artifacts prior to checking in code. (Figure 7.1 refers to checking in code as “committing code.”) Common static analysis approaches used in the DevOps context include code complexity analysis, secure coding standards conformance checking, IT/web secure coding best practices conformance checking, and code-level certification control/policy violations checking. The results of these tests can provide greater insight into the overall health of the system for risk analysis. In practice, some project teams also integrate static analysis tests into the continuous build and integration cycle.
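As a minimal sketch of what a pre-commit conformance check might look like, the following Python scans file contents against a small rule set and blocks the commit if anything is flagged. The two rules and the function names are our own illustrative assumptions; a real project would rely on an established static analysis tool and a vetted secure coding standard.

```python
import re

# Hypothetical rule set: each rule pairs a short name with a regex that
# flags a risky construct. These two rules are assumptions for illustration.
RULES = {
    "hardcoded-credential": re.compile(
        r"(password|secret)\s*=\s*['\"].+['\"]", re.IGNORECASE
    ),
    "dynamic-eval": re.compile(r"\beval\s*\("),
}


def scan_source(text):
    """Return a list of (line_number, rule_name) findings for one file's text."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((number, name))
    return findings


def precommit_ok(files):
    """files maps a path to its contents; the commit passes only if nothing is flagged."""
    return all(not scan_source(text) for text in files.values())
```

A version control hook could call `precommit_ok` on the staged files and reject the commit when it returns `False`, giving the developer feedback before the code reaches the shared repository.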

Build and Integration Tests

In addition to static analysis, an increasingly common DevOps best practice involves integrating security compliance tests into the continuous integration build cycle. These automated compliance tests run every time the developer checks in code or initiates a new build of the software. If a violation is detected, the build fails and the offending developer is notified immediately. Ideally, work does not continue until the build is fixed to avoid pushing the problem downstream.
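The build-failing behavior described above can be sketched as a simple gate. This is an illustration under stated assumptions, not the interface of any particular continuous integration product: the check names and the idea of representing each check as a zero-argument callable are hypothetical.

```python
def run_compliance_gate(checks):
    """Run every check and return (passed, failures).

    checks maps a check name to a zero-argument callable that returns
    True when the build conforms. Names and callables are hypothetical.
    """
    failures = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception as exc:  # a crashing check counts as a failure
            ok = False
            name = f"{name} (error: {exc})"
        if not ok:
            failures.append(name)
    return (not failures, failures)


def exit_code(passed):
    """Conventional process exit status: 0 lets the build proceed, 1 fails it."""
    return 0 if passed else 1
```

Returning the list of failed checks along with the pass/fail result lets the build system tell the developer exactly which compliance rule was violated.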

The following Twitter case study provides an example of an InfoSec team that successfully integrated a suite of security tests into the continuous build and integration lifecycle.

User Acceptance Testing/Staging/Performance Tests

User acceptance tests and performance tests can provide a variety of InfoSec insights. During user acceptance testing (UAT), InfoSec team members can observe live usage patterns to see if they reveal any new security concerns. Because the staging environment (ideally) reflects the configuration of the production environment, the InfoSec team can analyze the runtime configuration for vulnerabilities that are hard to detect in design documents (staging is a pre-production testing environment). Results of nonfunctional tests (e.g., resiliency, performance, or scalability tests) may provide new insights into how well the system responds or performs under strenuous circumstances. In addition, the DevOps community advocates complete—or as complete as possible—test coverage to maintain confidence as the system evolves.

Deploy to Production

Several DevOps practices improve assurance posture while code is in the process of being deployed. However, before we discuss these practices, let’s first visit some of the practices that should be applied before the deployment stage. For example, Infrastructure as Code (IaC) is a recommended practice. IaC refers to use of automated scripts and tooling for provisioning of infrastructure and environment setup. A benefit of the IaC approach to building infrastructure is that the InfoSec team can examine the automation scripts to ensure conformance to security controls rather than manually evaluating disparate individual environments for configuration violations. The practice of building infrastructure from scripts also helps enforce configuration parity across all environments (e.g., development, staging, production), which helps to minimize vulnerabilities introduced by manual configuration changes. Regular checks should be done to ensure that open source software patches are up to date and known vulnerabilities are addressed prior to release.
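To illustrate the configuration parity idea, the following sketch compares security-relevant settings across environments. It assumes the settings have already been extracted from the provisioning scripts into plain dictionaries; the environment names and setting keys used with it are hypothetical.

```python
def parity_violations(environments, security_keys):
    """Compare security-relevant settings across environments.

    environments maps an environment name to its settings dict, as might be
    loaded from provisioning scripts. Setting values are assumed to be simple
    hashable values (strings, numbers, booleans). Returns {key: {env: value}}
    for every key whose value is not identical in all environments.
    """
    violations = {}
    for key in security_keys:
        values = {env: settings.get(key) for env, settings in environments.items()}
        if len(set(values.values())) > 1:
            violations[key] = values
    return violations
```

An empty result means the examined settings match everywhere; any entry shows which environment has drifted and what value it holds.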

Once the pre-deployment environment conformance checks are clean and all tests have passed, it is time to deploy code into production. We highly recommend using automated scripts for releasing code into production. These automated scripts, and associated configuration files, are also useful artifacts for InfoSec analysis. They can be used by InfoSec team members to validate that the software deployment configuration conforms to the approved design document.

Phase 4: Continuous Monitoring and Improvement

Visible Ops Security primarily focuses on the need for the operations community to embrace continuous process improvement and monitoring. In the following sections we discuss those needs as well as two other dimensions, deployment pipeline metrics and system health and resiliency metrics, which have received a lot of attention in the DevOps community in recent years.

DevOpsSec and Process Improvement

Continuous monitoring and improvement is a fairly well-accepted practice in the software community; however, according to some practitioners, a move in this direction poses a challenge for the operations community. For an article in a 2015 IEEE Software special issue on release engineering, we held a roundtable interview with release engineers from Google, Facebook, and Mozilla. They explained that the shift toward organizational process improvement requires a mindset change for release engineers to move from a triage/checklist mentality (rewarded by managers for years) toward institutionalized processes [Adams 2015]. From an InfoSec perspective, this shift is useful and necessary. It is very difficult, if not impossible, for InfoSec personnel to determine whether operational processes are secure when they are executed in an ad hoc manner.

Use of Deployment Pipeline Metrics to Minimize Security Bottlenecks

Another key concept behind DevOps is the idea of monitoring end-to-end deployment pipeline cycle time and individual stage feedback cycle time. The development, operations, and security stakeholders (shown on the left side of Figure 7.3) gain insight about the quality at each stage of the deployment pipeline by monitoring feedback results. Some examples of metrics for each stage are shown in Figure 7.3. At the Pre-commit Tests stage, DevOpsSec stakeholders get feedback from static analysis or code-level secure coding results; at the Commit stage, stakeholders get feedback on commit failures; at the Build and Integration stage and at the Testing stages, stakeholders get feedback on automated compliance tests; at the Deploy to Production stage, stakeholders get feedback on deployment cycle time (or deployment failures and rollbacks); and at the Production stage, stakeholders get feedback on performance, outages, audit logs, etc. If any of these feedback loops break down or become bottlenecks that slow down deployment, teams must perform analysis to address the issue. In this way, DevOpsSec teams have targeted metrics and insight to continuously improve deployment cycle time, focusing attention where the real problems are.
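The idea of using stage feedback times to locate bottlenecks can be sketched in a few lines. We assume, for illustration only, that each pipeline run is recorded as a mapping from stage name to minutes spent; the stage names and the choice of the mean as the summary statistic are ours.

```python
from statistics import mean


def stage_cycle_times(runs):
    """runs is a list of dicts mapping stage name to minutes spent in that run.

    Returns the mean time per stage across all runs that include the stage.
    """
    stages = {}
    for run in runs:
        for stage, minutes in run.items():
            stages.setdefault(stage, []).append(minutes)
    return {stage: mean(values) for stage, values in stages.items()}


def bottleneck(runs):
    """Return the stage with the highest mean cycle time."""
    averages = stage_cycle_times(runs)
    return max(averages, key=averages.get)
```

A team might track these averages over time and investigate whenever a security-related stage becomes the slowest step in the pipeline.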

System Health and Resiliency Metrics

Because of the emphasis on improving workflow efficiency, a key DevOps practice area is monitoring and metrics. These capabilities benefit InfoSec professionals in two ways: (1) They provide additional data to improve “situational awareness” during early risk analysis and (2) once the software is in production, the metrics and logs produced through DevOps processes can be used for cyber-threat analysis. DevOps metrics are commonly used for purposes such as reducing end-to-end deployment cycle time and improving system performance/resiliency. The interest in metrics such as these has led to the emergence of tools and techniques to make operational metrics more visible and useful. A popular mechanism for improving data visibility, bolstered by the DevOps vendor community, is the DevOps dashboard. DevOps dashboards are tools that usually come configured with a set of canned metrics and a way to implement custom metrics. Most dashboards can also be programmed to send alerts to administrators when a threshold is exceeded; some dashboards also suggest threshold boundaries based on normal historic system usage.
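As one hedged illustration of how a threshold might be suggested from historic usage, the sketch below uses the mean plus a multiple of the standard deviation. This rule is our assumption; the dashboards discussed here may derive their suggestions in other ways.

```python
from statistics import mean, pstdev


def suggest_threshold(history, k=3.0):
    """Suggest an alert threshold as mean + k standard deviations of past samples.

    The mean-plus-k-sigma rule is an illustrative assumption, not a
    description of any specific dashboard product.
    """
    return mean(history) + k * pstdev(history)


def alerts(samples, threshold):
    """Return (index, value) for every sample that exceeds the threshold."""
    return [(i, value) for i, value in enumerate(samples) if value > threshold]
```

Raising `k` makes alerts rarer and lowers the chance of false alarms; lowering it makes the dashboard more sensitive to unusual readings.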

A key source of input for DevOps dashboards and alerts is log files (e.g., audit, error, and status logs). As with traditional system development, log data is typically routed to a common location, where it can be accessed for dashboard display and other analysis purposes. As with traditional projects, audit logs—particularly information about changing privileges or roles—continue to be useful artifacts for InfoSec analysis. These logs can also be used to support anomaly detection analysis, which involves monitoring for deviation from normal usage patterns. Operations staff use approaches such as anomaly detection analysis to monitor for issues ranging from software responsiveness to cyberattack. In fact, at DevOps DC in June 2015, there was much talk of work that focused on developing complex algorithms leveraging metrics generated from various DevOps tools to identify patterns of deviation and malicious behavior.
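The value of audit logs for tracking privilege or role changes can be shown with a small parsing sketch. The log line format below (fields such as `user=`, `action=role_change`, and `approved_by=`) is entirely hypothetical; real audit logs vary by system and would need their own parser.

```python
import re

# Hypothetical audit log line format, assumed for illustration only.
ROLE_CHANGE = re.compile(
    r"user=(?P<user>\S+) action=role_change old=(?P<old>\S+) new=(?P<new>\S+)"
    r"(?: approved_by=(?P<approver>\S+))?"
)


def unapproved_role_changes(lines):
    """Return (user, old_role, new_role) for role changes with no approver recorded."""
    findings = []
    for line in lines:
        match = ROLE_CHANGE.search(line)
        if match and match.group("approver") is None:
            findings.append(
                (match.group("user"), match.group("old"), match.group("new"))
            )
    return findings
```

Findings from a filter like this could feed a dashboard alert or be reviewed by InfoSec staff as part of routine monitoring.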

7.5.3 DevOpsSec Competencies

The previous section describes several DevOps practices that promote software assurance. This section describes several InfoSec competencies needed to support these DevOps practices. Traditional InfoSec competencies are still necessary to provide a strong foundation for supporting DevOps projects. However, due to the focus on automation and rapid feature delivery, some additional skills (or, in some cases, refreshing of skills) are required. In the sections that follow, we list examples of DevOpsSec-specific competencies; for consistency, we organize these skills around the four phases for integrating InfoSec into DevOps projects.

Phase 1: Integrating InfoSec Experts

People Skills

Successful DevOps InfoSec professionals must be capable of establishing and maintaining an ongoing trusting relationship between developers and operations groups. They must also be able to balance competing goals, as shown in Figure 7.2.

DevOpsSec Integration Strategy

It is not always easy to change the mindset of an organization that has lived with a stovepiped information security operating model for many years. Successful InfoSec professionals need to understand the organizational context, including opportunities and limitations, and must be able to formulate successful integration strategies. Depending on the situation, they may also need creativity and a positive attitude to devise and implement integration strategies that can work in their environment.

Security Analysis for Daily Operations

To obtain situational awareness with respect to daily operations, DevOps InfoSec professionals need skills to analyze processes and identify risks related to daily operational support. These skills and processes address topics such as security risks in current change management, access control, and incident handling procedures.

Phase 2: Business-Driven Risk and Security Process Analysis

Business-Aligned Threat Modeling

InfoSec professionals must have the skills to apply risk/threat-based analysis approaches (e.g., RMM) to identify and protect critical resources. This requirement is not new; however, there are several new challenges with respect to DevOps. Functionality may be delivered more frequently (e.g., multiple times per day), so InfoSec professionals must perform risk analysis faster and more efficiently than ever before. Constantly changing software means InfoSec professionals are working with a moving target. There is little tolerance for big, lengthy risk assessments, so risk analysis methods must be tailored to fit within this operating context. In addition, the deployment pipeline itself is a set of integrated tools that must be operationally resilient and protected from cyberattack. The virtualized environments on which many of the software systems developed by DevOps projects are deployed are increasingly software intensive (e.g., virtual machines, container technology). So, the scope of a risk assessment may need to extend beyond the software to be deployed. The deployment pipeline and the virtualized infrastructure the software is running on may need to be included in risk assessments.

DevOpsSec Requirements and Design Analysis

InfoSec professionals need skills to rapidly and competently review architectural designs for potential vulnerabilities in a fast-paced environment. Ideally, InfoSec professionals integrated with DevOps teams have technical skills to provide guidance to developers as they consider design options. For example, design tactics should be considered to promote security and resiliency.

Phase 3: Integration and Automation of Information Security Standards/Controls

Security Tool Automation

As described in the Twitter case study, successful DevOps InfoSec professionals have strong technical capabilities that allow them to develop, or at least interpret, results from security automation tools. Opportunities exist at all stages of the deployment pipeline for InfoSec professionals to help develop and/or set up automation tools. As shown in Figure 7.3, examples of security-related automation tools include build and integration tests; UAT, staging, and performance tests; pre-deployment tests; and post-deployment anomaly detection and monitoring.

Enforcing Environment Conformance

With the advent of virtual machine technology, infrastructure has migrated from being primarily hardware intensive to being largely software intensive. This change has enabled the creation of the infrastructure environment through automation (generally using scripts). DevOps InfoSec professionals should have sufficient technical skills and depth to understand the risks related to using these technologies as well as the benefits. A benefit of the move toward IaC and script-driven provisioning is that environment configuration and policy conformance checking can be more easily automated. This benefit is possible because conformance-checking tools can run the verification tests against the automated scripts. Leveraging capabilities such as these speeds up the certification process and reduces the risk of vulnerabilities related to environment inconsistency/nonconformance.

Patches and Open Source

At this writing, the majority of DevOps projects are still IT projects and typically use a significant amount of COTS (e.g., virtual machine software, containers, middleware, databases, and libraries) as well as open source components. Consequently, InfoSec professionals should be capable of verifying that all necessary security patches are applied and that known vulnerabilities in third-party software are mitigated.
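A patch verification step of this kind might be sketched as follows. We assume the minimum safe versions come from some advisory source the team already trusts, and that versions are dotted numeric strings; the component names in any usage are hypothetical.

```python
def parse_version(text):
    """Convert a dotted numeric version such as '1.0.10' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def unpatched_components(installed, minimum_safe):
    """Return (name, installed_version, required_version) for outdated components.

    installed maps a component name to its installed version.
    minimum_safe maps a component name to the lowest version with known
    vulnerabilities fixed (hypothetical advisory data supplied by the caller).
    Components without advisory data are not reported.
    """
    findings = []
    for name, version in sorted(installed.items()):
        required = minimum_safe.get(name)
        if required is not None and parse_version(version) < parse_version(required):
            findings.append((name, version, required))
    return findings
```

Comparing versions as tuples of integers avoids the common mistake of string comparison, under which "1.0.9" would incorrectly sort after "1.0.10".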

Phase 4: Continuous Monitoring and Improvement of Competencies

Process Institutionalization and Continuous Measurement/Monitoring

DevOps InfoSec professionals should have sufficient skills to evaluate process effectiveness and provide guidance and assistance when needed. They should also be capable of measuring process deviation and interpreting results.

Deployment Process Streamlining to Minimize Security Bottlenecks

InfoSec professionals must be able to analyze end-to-end deployment pipeline processes with an eye toward minimizing information security process-related bottlenecks. They must have the creativity to bring new options to the table for streamlining and improving efficiency of traditional information security tasks (e.g., automating manual tasks) as well as willingness to challenge the status quo. For this analysis, InfoSec professionals (shown on the left side of Figure 7.3) should understand what metrics are generated as a natural byproduct of the deployment lifecycle and be capable of proposing data-driven improvements.

DevOps Metrics for Security Analysis (e.g., Dashboards and Logs)

InfoSec professionals should be capable of using data from mining of dashboards and logs to gain a better sense of situational awareness with respect to the overall health and resiliency of the system. They should also understand what production metrics are available, or can be easily made available, for ongoing cyber-threat analysis. For example, audit logs are useful artifacts for monitoring privilege or role-escalation attacks.

7.6 Using Malware Analysis16

16. This section was written by Nancy Mead with Jose Morales and Greg Alice.

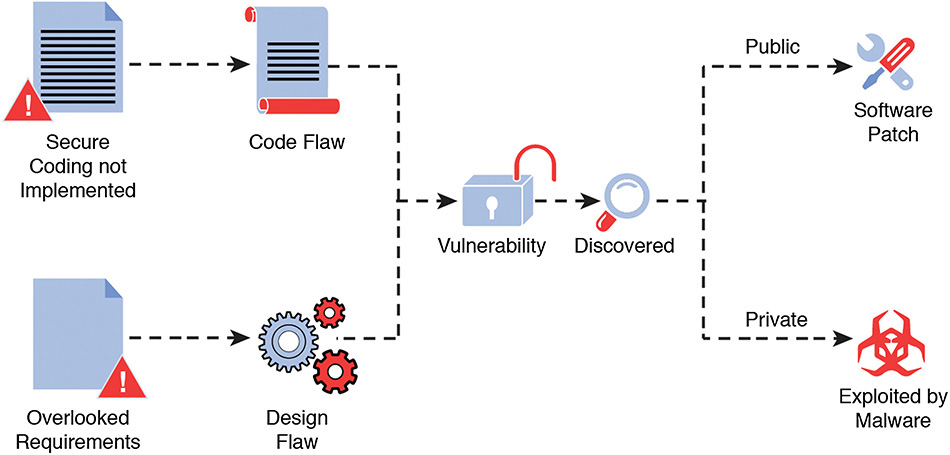

Hundreds of vulnerabilities are publicly disclosed each month [NIST 2016]. Exploitable vulnerabilities typically emerge from one of two types of core flaws: code flaws and design flaws. In the past, both types of flaws have facilitated several cyberattacks, a subset of which manifested globally.

We define a code flaw as a vulnerability in a code base that requires a highly technical, crafted exploit to compromise a system; examples are buffer overflows and command injections. Design flaws are weaknesses in a system that may not require a high level of technical skill to craft exploits to compromise the system. Examples include failure to validate certificates, non-authenticated access, automatic granting of root privileges to non-root accounts, lack of encryption, and weak single-factor authentication. A malware exploit is an attack on a system that takes advantage of a particular vulnerability.

A use case [Jacobson 1992] describes a scenario conducted by a legitimate user of the system. Use cases have corresponding requirements, including security requirements. A misuse case [Alexander 2003] describes use by an attacker and highlights a security risk to be mitigated. A misuse case describes a sequence of actions that can be performed by any person or entity to harm the system. Exploitation scenarios are often documented more formally as misuse cases. In terms of documentation, misuse cases can be documented in diagrams alongside use cases and/or in a text format similar to that of a use case.

Several approaches for incorporating security into the software development lifecycle (SDLC) have been documented. Most of these enhancements have focused on defining enforceable security policies in the requirements gathering phase and defining secure coding practices in the design phase. Although these practices are helpful, cyberattacks based on core flaws have persisted.

Major corporations, such as Microsoft, Adobe, Oracle, and Google, have made their security lifecycle practices public [Lipner 2005; Oracle 2014; Adobe 2014; Google 2012]. Collaborative efforts, such as the Software Assurance Forum for Excellence in Code (SAFECode) [Bitz 2008], have also documented recommended practices. These practices have become de facto standards for incorporating security into the SDLC.

These security approaches are limited by their reliance on security policies, such as access control, read/write permissions, and memory protection, as well as on standard secure code writing practices, such as bounded memory allocations and buffer overflow avoidance. These processes are helpful in developing secure software products, but—given the number of successful exploits that occur—they fall short. For example, techniques such as design reviews, risk analysis, and threat modeling typically do not incorporate lessons learned from the vast landscape of known successful cyberattacks and their associated malware.

The extensive and well-documented history of known cyberattacks can be used to enhance current SDLC models. More specifically, a known malware sample can be analyzed to determine whether it exploits a vulnerability. The vulnerability can be studied to determine whether it results from a code flaw or a design flaw.

For design flaws, we can attempt to determine the overlooked requirements that resulted in the vulnerability. We make this determination by documenting the misuse case corresponding to the exploit scenario and creating the corresponding use case. Such use cases represent overlooked security requirements that should be applied to future development and thereby avoid similar design flaws leading to exploitable vulnerabilities. This process of applying malware analysis to ultimately create new use cases and their corresponding security requirements can help enhance the security of future systems.
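One way to keep the link between an analyzed exploit and the requirement derived from it is to record both in a simple data structure. The sketch below is our own illustration; the class and field names are hypothetical, and the requirement statement itself is still written by an analyst.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MisuseCase:
    """An exploitation scenario documented from malware analysis."""
    actor: str
    steps: tuple
    flaw_type: str  # "design" or "code"


@dataclass(frozen=True)
class SecurityRequirement:
    """An overlooked requirement, traceable to the misuse case that revealed it."""
    statement: str
    derived_from: MisuseCase


def derive_requirement(misuse, statement):
    """Attach an analyst-written requirement to a design-flaw misuse case."""
    if misuse.flaw_type != "design":
        raise ValueError("this sketch maps only design flaws to overlooked requirements")
    return SecurityRequirement(statement=statement, derived_from=misuse)
```

Keeping the misuse case attached to the requirement preserves the rationale, so future projects can see which known attack motivated each requirement.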

7.6.1 Code and Design Flaw Vulnerabilities

Two types of flaws lead to exploitable vulnerabilities: code flaws and design flaws. A code flaw is a weakness in a code base that requires specifically crafted code-based exploits to compromise a system; examples are buffer overflows and command injections. More specifically, code flaws result from source code being written without the implementation of secure coding techniques.

Design flaws result from weaknesses that do not necessarily require code-based exploits to compromise the system. More specifically, a design flaw can result from overlooked security requirements. Examples are failure to validate certificates, non-authenticated access, root privileges granted to non-root accounts, lack of encryption, and weak single-factor authentication. Some design flaws can be exploited with minimal technical skill, leading to more probable system compromise. The process leading to a vulnerability exploit or remediation via software update is shown in Figure 7.4 [Mead 2014]. We focus on vulnerabilities resulting from a design flaw. In these cases, the overlooked requirements can be converted to a use case applicable to future SDLC cycles.

A large body of well-documented and studied cyberattacks is available to the public via multiple sources. In this section, we describe some publicly disclosed cases of exploited vulnerabilities that facilitated cyberattacks and arose from design flaws. For each case, we describe the vulnerability and the exploit used by malware. We also present the overlooked requirement(s) that led to the design flaw that created the vulnerability. By learning from these cases and analyzing associated malware, we can create use cases that can be included in SDLC models.

Case 1: D-Link Routers

In October 2013, a vulnerability was discovered that granted unauthenticated access to a backdoor of the administrative panel of several D-Link routers [Shywriter 2013; Craig 2013]. Each router runs as a web server, and a username and password are required for access. The router’s firmware was reverse engineered, and the web server’s authentication logic code revealed that a string comparison with “xmlset_roodkcableoj28840ybtide” granted access to the administration panel.

A user could be granted access by simply changing his or her web browser’s user-agent string to “xmlset_roodkcableoj28840ybtide.” Interestingly, the string in reverse partially reads “editby04882joelbackdoor.” It was later determined that this string was used to automatically authenticate configuration utilities stored within the router.

The utilities needed to reconfigure various settings automatically, which normally required a username and password (which could be changed by a user) to access the administration panel. The hardcoded string comparison was implemented to ensure that these utilities could access and reconfigure the router via the web server whenever needed, without requiring a username and password.

The internally stored configuration utilities should not have been required to access the administration panels via the router’s web server, which is typically used to grant access to external users. A non-web-server-based communication channel between internally stored proprietary configuration utilities and the router’s firmware could have avoided the specific exploit described here, although further analysis could have led to a more general solution.
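The flaw and one possible remedy can be sketched in a few lines of Python. This is an illustration only, not the router's firmware: the function names, the credential store, and the channel labels are hypothetical. The first function reproduces the reported logic, in which a magic user-agent string bypasses the credential check. The second assumes that the transport layer, not the request contents, identifies a designated internal channel.

```python
import hmac

# Hypothetical stand-ins; the real firmware is not Python and stores
# credentials differently.
BACKDOOR_AGENT = "xmlset_roodkcableoj28840ybtide"
CREDENTIALS = {"admin": "s3cret"}


def check_password(username, password):
    """Compare the supplied password with the stored one in constant time."""
    stored = CREDENTIALS.get(username)
    if stored is None:
        return False
    return hmac.compare_digest(stored.encode(), password.encode())


def flawed_is_authorized(user_agent, username, password):
    """Mirrors the reported flaw: a magic user-agent skips authentication."""
    if user_agent == BACKDOOR_AGENT:
        return True
    return check_password(username, password)


def channel_aware_is_authorized(channel, user_agent, username, password):
    """Only the designated internal channel may skip credentials.

    `channel` is assumed to be set by the transport layer (for example,
    "internal" for a local-only socket), never by request contents.
    """
    if channel == "internal":
        return True
    # Web requests always need valid credentials; the user-agent is ignored.
    return check_password(username, password)
```

With the second function, a web request that carries the backdoor string but no valid credentials is rejected, while the internal utilities keep working over their own channel.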

Case 2: Android Operating System

In 2014, Xing et al. [Xing 2014] discovered critical vulnerabilities in the Android operating system (OS) that allowed an unprivileged malicious application to acquire privileges and attributes without user awareness. The vulnerabilities were discovered in the Android Package Manager and were automatically exploited when the operating system was upgraded to a newer version.

A malicious application already installed in a lower version of Android OS would claim specific privileges and attributes that were available only in a higher version of the Android OS. When the OS was upgraded to the higher version, the claimed privileges and attributes were automatically granted to the application without user awareness.

The overlooked requirement in this case was to specify that during an upgrade of the Android OS, previously installed applications should not be granted privileges and attributes introduced in the higher OS without user authorization.
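A minimal sketch of this requirement follows. It does not model the Android Package Manager; the `App` class, the function names, and the permission sets are hypothetical. The idea shown is that permissions introduced by the new OS version are queued for a user decision rather than granted during the upgrade.

```python
from dataclasses import dataclass, field


@dataclass
class App:
    name: str
    requested: set  # permissions the app declared at install time
    granted: set = field(default_factory=set)
    pending: set = field(default_factory=set)


def upgrade_os(apps, old_permissions, new_permissions):
    """Re-evaluate app permissions after an OS upgrade.

    Permissions that exist only in the new OS version are never granted
    automatically; they are queued for explicit user authorization.
    """
    introduced = new_permissions - old_permissions
    for app in apps:
        app.pending |= app.requested & introduced
        # Keep only grants that remain valid and were not just introduced.
        app.granted = (app.granted & new_permissions) - introduced


def authorize(app, permission, user_approved):
    """Resolve one pending permission according to the user's decision."""
    if permission in app.pending:
        app.pending.discard(permission)
        if user_approved:
            app.granted.add(permission)
```

An application that claimed a not-yet-defined permission before the upgrade ends up with that permission in `pending`, and it reaches `granted` only if the user approves it.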

Case 3: Digital Certificates

In March 2013, analysts discovered that malware authors were creating legitimate companies for the sole purpose of acquiring verifiable digital certificates [Kitten 2013]. Because malware signed with these certificates carries a valid digital signature, it is authenticated and allowed to execute on a system.

When an executable file starts running on an operating system such as Windows, a check for a valid digital signature is performed. If the signature is invalid, the user receives a warning that advises him or her not to allow the program to execute on the system. By possessing a valid digital signature, malware can execute on a system without generating any warnings to the user.

Relying on a digital signature alone to allow execution of binaries on a system is no longer sufficient to avoid malware infection. The overlooked requirement in this case was to carry out multiple security checks, in addition to verifying the digital signature, before granting execution privilege to a file. Such checks include the following:

• Scanning the file for known malware

• Querying whether the file has ever been executed in the system before

• Checking whether the digital signature was seen previously in other legitimate files executed on this system
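The checks listed above can be combined into a single decision, sketched here under stated assumptions. The `ExecutionHistory` record, the function name, and the three outcomes ("allow", "confirm", "block") are hypothetical, and the malware scan is reduced to a lookup in a set of known-bad hashes. Treating a never-seen file or signer as grounds for user confirmation rather than outright blocking is a design choice of this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class ExecutionHistory:
    """Hypothetical per-system record of what has run before."""
    executed_hashes: set = field(default_factory=set)
    trusted_signers: set = field(default_factory=set)


def execution_decision(file_hash, signer, signature_valid,
                       known_malware, history):
    """Return (decision, reasons) for a file that is about to run."""
    # Hard failures: refuse outright.
    if not signature_valid:
        return "block", ["invalid digital signature"]
    if file_hash in known_malware:
        return "block", ["matches known malware"]

    # Soft signals: a valid signature alone is not treated as sufficient.
    reasons = []
    if file_hash not in history.executed_hashes:
        reasons.append("file has not been executed on this system before")
    if signer not in history.trusted_signers:
        reasons.append("signer not seen on previously executed files")
    return ("confirm" if reasons else "allow"), reasons
```

Under this sketch, a validly signed binary from a newly created company would still trigger a confirmation prompt, because neither the file nor its signer has any history on the system.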

Examining the Cases

In each of the cyberattack cases described here, the vulnerabilities could have been avoided had they been identified during requirements elicitation. Using risk analysis and/or good software engineering techniques, teams can identify all circumstances of use and craft appropriate responses for each case. These cases can also be generalized and applied as needed.

The following abstracts can lead to requirements statements:

• Case 1: Identify all possible communication channels—Designate valid communication channels and do not permit other communication channels to gain privileges.

• Case 2: Do not automatically transfer privileges during an upgrade—Request validation from the user that the application or additional user should be granted privileges.

• Case 3: Require multiple methods of validation on executable files—In addition, as a default, consider asking for user confirmation prior to running an executable file.

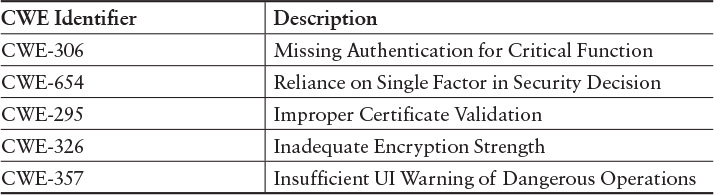

In addition to the specific cases of exploited vulnerabilities discussed above, there have been several other cyberattacks on software systems that used one or more exploited vulnerabilities resulting from either a code flaw or a design flaw. These vulnerabilities are defined in a hierarchical structure using the Common Weakness Enumeration (CWE) [Kitten 2013; MITRE 2014].

The CWE provides a common language to discuss, identify, and handle causes of software security vulnerabilities. These vulnerabilities can be found in source code, system design, or system architecture. An individual CWE represents a single vulnerability type. A subset of CWEs can be attributed to design flaws resulting from overlooked requirements; some of the design flaw CWEs pertinent to the cases above are listed in Table 7.6.

Table 7.6 Sampling of Design Flaw CWEs [Mead 2014]

The lessons learned from previous cyberattacks and the underlying CWEs can be used to better understand overlooked requirements and resulting security implications. Analyzed and publicly disclosed cyberattacks provide details about how attackers implemented an exploit on a specific vulnerability. CWEs provide a better understanding of security vulnerabilities underlying a cyberattack. Combining information from these two sources facilitates the creation and inclusion of use cases that capture the overlooked requirements that lead to design flaws.

7.6.2 Malware-Analysis–Driven Use Cases

Malware exploits vulnerabilities to compromise a system. Vulnerabilities are normally identified by analyzing a software system or a malware sample. When a vulnerability is identified in a software system, it is documented and remedied via a software update.

Vendors inform the public of vulnerabilities that are considered critical and that impact a large user base; the OpenSSL Heartbleed vulnerability is one example [Wikipedia 2014a]. A vulnerability is usually identified via malware analysis after the malware has entered the wild and compromised systems. Sometimes the exploited vulnerability discovered in the analyzed malware is a zero-day vulnerability.

Zero-day [Wikipedia 2014b] vulnerabilities are some of the biggest threats to cyber security today because they are discovered in private. They are typically kept private and exploited by malware for long periods of time. “Zero-days” afford malware authors time to craft exploits. Zero-days are not guaranteed to be detected by conventional security measures, making their threat even more serious.

One approach to avoid creating vulnerabilities is to implement secure lifecycle models. These models can be enhanced with the inclusion of use cases that are derived from previously discovered vulnerabilities that resulted from design flaws. Analyzing malware that exploits a vulnerability provides details of the vulnerability itself and, more importantly, provides details of the exploit implementation. The exploit details can offer additional insight into the vulnerability and the underlying design flaw.

The previous examples indicate that standard secure lifecycle practices may not be adequate to identify all avenues for potential attacks. Potential attacks must be addressed at requirements collection time (and at every subsequent phase of the lifecycle).

The selected case studies illustrate that malware analysis can reveal needed security requirements that may not be identified in the normal course of development, even when secure lifecycle practices are used. At present, malware discovery is often used to develop patches or address coding errors but not necessarily to inform future security requirements specification. We believe that failure to exercise this feedback loop is a serious flaw in security requirements engineering, which tends to start with a blank slate rather than use lessons learned from prior successful attacks. We recommend that secure lifecycle practices be modified to benefit from malware analysis.

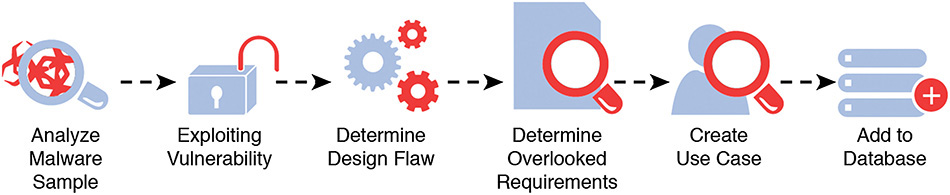

Examining techniques that are focused on early security development lifecycle activities can reveal how malware analysis might be applied. We recommend a process for creating malware-analysis–driven use cases that incorporates malware analysis into a feedback loop for security requirements engineering on future projects and not just into patch development for current systems [Mead 2014]. Such a process can be implemented using the following steps (also illustrated in Figure 7.5 [Mead 2014]):

1. A malicious code sample is analyzed both statically and dynamically.

2. The analysis reveals that the malware is exploiting a vulnerability that results from either a code flaw or a design flaw.

3. In the case of a design flaw, the exploitation scenario corresponds to a misuse case that should be described. The misuse is analyzed to determine the overlooked use case.

4. The overlooked use case corresponds to an overlooked security requirement.

5. The use case and corresponding requirements statement are added to a requirements database.

6. The requirements database is used in future software development projects.

Steps 1 and 2 include standard approaches to analyzing a malicious code sample. The specific analysis techniques used in steps 1 and 2 are beyond the scope of this chapter. In step 2, the analysis is used to determine whether the exploited vulnerability is the result of a code flaw or a design flaw. Typically, the source of the vulnerability can be determined through detailed analysis of the exploit code. Of course, these steps presume that the malware is detected, which in itself is a challenge.

Step 2 illustrates the advantage of malware analysis by leveraging the exploit code to determine the flaw type. Standard vulnerability discovery and analysis without malware analysis excludes exploit code and may make flaw type identification less straightforward.

Step 3 details how the exploit was carried out in the form of a misuse case, which provides the needed information to determine the overlooked use case that led to the design flaw.

In step 4, the overlooked use case is the basis for deciding what may have been the overlooked requirement(s) at the time the software system was created that led to the design flaw. These are the requirements that should have been included in the original SDLC of the software system, which would have prevented creation of the design flaw that led to the exploited vulnerability.

Steps 5 and 6 record the overlooked use case and corresponding requirement(s) for use in future SDLC cycles. This process is meant to enhance future SDLC cycles in a simplified manner by providing known overlooked requirements that led to exploited vulnerabilities. Including these requirements in future SDLC cycles helps teams avoid creating exploitable vulnerabilities and so makes the resulting software systems more secure.
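One way to picture steps 3 through 6 is as a small record type and two functions, sketched below. This is not the design of any existing tool; the class names, fields, and keyword lookup are hypothetical, and the requirements database is reduced to an in-memory list. Steps 1 and 2 are represented only by their outcome, an `ExploitReport` filled in by the analyst.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExploitReport:
    """Outcome of steps 1 and 2: what the analyst learned from the sample."""
    sample_id: str
    flaw_type: str    # "code" or "design"
    misuse_case: str  # how the exploit was carried out (step 3)


@dataclass(frozen=True)
class RequirementEntry:
    """Steps 3 to 5: the record stored for reuse on future projects."""
    sample_id: str
    misuse_case: str
    use_case: str
    requirement: str


def record_overlooked_requirement(report, use_case, requirement, database):
    """Add an entry for a design flaw; code flaws are not recorded here."""
    if report.flaw_type != "design":
        return None
    entry = RequirementEntry(report.sample_id, report.misuse_case,
                             use_case, requirement)
    database.append(entry)
    return entry


def find_requirements(database, keyword):
    """Step 6: look up stored requirements relevant to a new project."""
    keyword = keyword.lower()
    return [e.requirement for e in database
            if keyword in e.misuse_case.lower()
            or keyword in e.use_case.lower()]
```

A requirements engineer starting a new project could then query the list with a term such as "upgrade" and retrieve the requirement statements derived from earlier attacks.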

7.6.3 Current Status and Future Research

We recommend incorporating the feedback loop described in this chapter into the secure software development process as a standard practice. We have described the process steps to support such a feedback loop. Researchers have studied Security Quality Requirements Engineering (SQUARE) [Mead 2005] and proposed modifications to incorporate malware analysis. In one pilot study, researchers found that requirements developed to mitigate a successful prior attack on an existing system in the same domain were given higher priority by the customer of the new system under development.

As mentioned earlier in this chapter, an extended case study explored the proposed process of analyzing a malware sample. Using a sample of malware that steals data from Android mobile devices, we determined the exploitation scenario that was used by the malware exploit. Our investigation into the consequences of this malware exploit revealed a design flaw in a mobile application that could compromise user data. We studied the design flaw to determine the applicable misuse cases and used those misuse cases to ascertain the missing security requirements to be used on future mobile applications for the Android platform [Alice 2014; Mead 2015].

A prototype tool has been developed to support the process of enhancing exploit reports to include misuse cases, use cases for mitigation, and overlooked security requirements. These enhanced reports are then stored in a database that can be used by requirements engineers on future systems. The source code for the MORE tool (Malware Analysis Leading to Overlooked Security Requirements) is available for free download from www.cert.org/cybersecurity-engineering/research/security-requirements-elicitation.cfm.

Exploit kits [McGraw 2015] are often used by malware in a plug-and-play fashion to infect a system. An exploit kit is a piece of software that contains working exploits for several vulnerabilities. It is designed to run primarily on a server (an exploit server) to which victim machines are redirected after a user clicks a malicious link, either in a webpage or an email. The victim machine is scanned for vulnerabilities. If a vulnerability is identified, the exploit kit automatically executes any applicable exploit to compromise the machine and infect it with malware. In general, malware either has exploits built into its binary or relies on exploit kits to initially compromise a machine. The implications of exploit kits are another area of exploration that may provide code samples for use in the process.

An open question for consideration: Do specific types of malware exist that are likely to occur in specific kinds of critical systems, such as control systems? Analysis of historical and current malware incidents may help to identify exploits that target specific types of applications. Knowing these exploit types in advance could help requirements engineers identify standard misuse cases and the needed countermeasures for their specific application types.