Chapter 7

Cyber-Security Techniques in Distributed Systems, SLAs and other Cyber Regulations

Soumitra Ghosh, Anjana Mishra, Brojo Kishore Mishra

C. V. Raman College of Engineering, Bhubaneswar, Odisha, India


Abstract

This chapter focuses on cybersecurity techniques for parallel and distributed computing environments. Distributed systems are attractive because they offer increased performance, extensibility, increased availability, and resource sharing. However, requirements such as multi-user configuration, resource sharing, and communication between workstations have created a new set of problems with respect to the privacy, security, and protection of the system as well as of its users and data. New-age cybersecurity techniques to combat cybercrime and prevent data breaches are therefore the need of the hour. The chapter also focuses on the need for service level agreements (SLAs) between a service provider and a client, which cover aspects of the service such as quality, availability, and responsibilities. The lessons on cybersecurity from Clifford Stoll’s The Cuckoo’s Egg, as well as various amendments to curb fraud, data breaches, dishonesty, deceit and other such cybercrimes, are also discussed.

Keywords: Cybersecurity, cybercrime, threat, security, computing, computer security, risk, vulnerability, parallel, distributed

7.1 Introduction

Distributed computing is a more generic term than parallel computing, although the two terms are often used interchangeably. Unlike parallel computing, where concurrent execution of tasks is the working principle, distributed computing also deals with additional concerns such as consistency, partition tolerance and availability.

Systems such as Hadoop and Spark are examples of distributed computing systems that are highly robust and reliable in the sense that they can handle node and network failures. Such systems prevent data loss by replicating data over multiple nodes in the network. Both systems are also designed to perform parallel computing. Unlike traditional HPC programs built on MPI, these newer systems are able to continue with a massive calculation even if one of the computational nodes fails.

7.1.1 Primary Characteristics of a Distributed System

A network of autonomous computers connected by distribution middleware is known as a distributed system. A typical distributed system enables users to share different resources and capabilities while presenting them with a single, integrated, coherent network.

- Concurrency: Concurrency means that various nodes in a distributed setup can share resources at the same time.

- Transparency: Transparency means that the system is perceived as a single unit instead of an assembly of autonomous components. The aim of a distributed system designer is to hide the complexity of the system as much as possible. Forms of transparency include access, relocation, concurrency, failure, persistence, and resource transparency.

- Openness: The objective of openness is to design the network so that it can be easily configured and modified. Developers often need to add new features or to modify or replace existing features in a distributed node, which is much easier when interoperability is supported through standard protocols. Well-defined interfaces also smooth the process [1].

- Reliability: Distributed systems are capable of being more secure, more consistent, and more resistant to failures than a single system.

- Performance: A distributed system can also outperform a standalone system by a wide margin by enabling maximum utilization of resources.

- Scalability: A distributed system must be designed with the expectation that it may have to be extended, or scaled, to meet future requirements or increasing demand. A system may need to scale in terms of geography, size, or administration [1].

- Fault Tolerance: Fault tolerance and reliability are closely related. A fault-tolerant system is one that provides high reliability even in the toughest of scenarios, such as subsystem failures and network failures. Measures such as increasing the redundancy of data, preventing denial-of-service (DoS) attacks, and upgrading the resilience of the system help make a system fault-tolerant.

7.1.2 Major Challenges for Distributed Systems

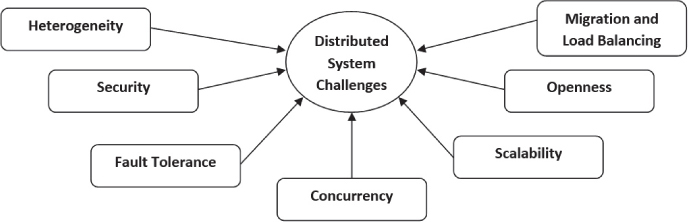

The challenges of a distributed system are shown in Figure 7.1.

Figure 7.1 Challenges of a distributed system.

Heterogeneity: Users access and run services and applications over the Internet across a varied collection of computers and networks. Hardware devices of different kinds (PCs, tablets, etc.) running various operating systems (Windows, iOS, etc.) may need to exchange information or communicate among themselves to serve a particular purpose. Programs written in different languages can communicate with one another only when these differences are dealt with. For this to take place, standards need to be agreed upon and adopted, as the Internet protocols are [3]. The term middleware applies to a software layer that provides a programming abstraction while masking the heterogeneity of the underlying networks, hardware, operating systems, and programming languages.

Program code that can be transferred from one computer to another and executed at the destination is referred to as mobile code. One example of mobile code is a Java applet.

Security: When public networks are used, security becomes a huge concern in a distributed environment. Security, which can be described in broad terms as confidentiality (protection against disclosure to unauthorized persons), integrity (protection against modification or corruption) and availability (protection against interference with the means of accessing resources), must be provided in distributed systems. Cryptographic techniques such as encryption can be used to address these concerns, but they are not infallible. Denial-of-service (DoS) attacks can still take place, in which a server or service is flooded with fake requests, usually by botnets (zombie computers). The possible threats are data leakage, integrity violation, denial of service, and illegitimate use.

Fault Tolerance and Handling: Fault tolerance becomes more complicated when unreliable components are an integral part of the distributed system. A distributed system must be able to tolerate faults and continue to function normally. Failures are unavoidable in any system; some subsystems may stop functioning while others go on running fine. We therefore need a means to detect failures (for example, by using checksums), mask failures (by retransmitting when an acknowledgement is not received), recover from failures (by rolling back to a previous safe state if a server crashes), and build redundancy (by replicating data to prevent data loss in case a particular system in the network crashes).
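The first two techniques, detecting failures with checksums and masking them by retransmission, can be illustrated with a minimal sketch. It assumes a CRC-32 checksum appended to each message and a simulated channel; the function names, the bit-flipping channel, and the retry limit are all hypothetical choices made for illustration, not part of any particular system.

```python
import random
import zlib
from typing import Optional, Tuple


def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 checksum so the receiver can detect corruption."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def check_frame(frame: bytes) -> Optional[bytes]:
    """Return the payload if the checksum matches, otherwise None."""
    payload, received = frame[:-4], int.from_bytes(frame[-4:], "big")
    return payload if zlib.crc32(payload) == received else None


def unreliable_channel(frame: bytes, rng: random.Random, error_rate: float) -> bytes:
    """Hypothetical channel that sometimes flips one bit of the frame."""
    if rng.random() < error_rate:
        corrupted = bytearray(frame)
        corrupted[rng.randrange(len(corrupted))] ^= 0x01
        return bytes(corrupted)
    return frame


def send_with_retry(payload: bytes, rng: random.Random,
                    error_rate: float = 0.5,
                    max_attempts: int = 10) -> Tuple[bytes, int]:
    """Mask failures: retransmit until a frame arrives intact or attempts run out."""
    frame = make_frame(payload)
    for attempt in range(1, max_attempts + 1):
        received = check_frame(unreliable_channel(frame, rng, error_rate))
        if received is not None:  # a real receiver would send an acknowledgement here
            return received, attempt
    raise TimeoutError("no intact frame after %d attempts" % max_attempts)
```

Calling `send_with_retry(b"hello", random.Random())` returns the payload together with the number of transmissions that were needed. Recovery by rollback and redundancy through replication are separate mechanisms that this sketch does not cover.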

Concurrency: Numerous clients requesting a shared resource at the same moment may lead to concurrency issues. This is critical because the outcome of such operations may depend on the order in which they complete, so synchronization is needed. Moreover, distributed systems do not have a global clock, which makes the need for synchronization even more evident for the proper functioning of all components in the network.

Scalability: Scalability issues arise when a system is not well equipped to handle a sudden increase in the number of resources, the number of users, or both. The architecture and algorithms must be able to cope efficiently with this situation. Scalability can be thought of as comprising three primary dimensions:

- Size: Size represents the number of users and resources to be processed. The difficulty that arises in this scenario is overloading.

- Geography: Geography represents the distance between users and resources. The difficulty that arises in this scenario is communication reliability.

- Administration: As a distributed system grows, a large number of nodes need to be controlled. The difficulty that arises in this scenario is administrative chaos [3].

Openness and Extensibility: Interfaces should be kept separate and made publicly available to facilitate trouble-free extension of existing components and the addition of new ones [2]. If well-defined interfaces for a system are available, it is easier for developers to add new features or replace components in the future. Openness issues become serious when already published content is suddenly withdrawn or reverted. Besides, more often than not there is no central authority in open distributed systems, as different systems may have their own administrators. Organizations like Facebook and Twitter, for example, allow developers to build their own software on top of their platforms through an API [2].

Migration and Load Balancing: Tasks must be sufficiently independent of users and applications that, when certain tasks need to move within the system, other users and applications are not affected. In addition, to get better performance out of the system, the load must be distributed among the available resources.

7.2 Identifying Cyber Requirements

Before venturing into the world of the Internet, a few things need to be very clear in our minds if we want to keep cyberbullies at a distance or prevent any breach of our personal data. Having answers to a few simple questions comes in handy before we start our journey in the cyber world.

- Who is authorized to access the information? (Addresses issues related to confidentiality.)

- Who is allowed to make changes to the information? (Addresses issues related to integrity.)

- When does the data need to be accessed? (Addresses issues related to availability) [4].

Confidentiality, integrity and availability, together known as the CIA triad, form a framework for providing data security in an organization. It is sometimes called the AIC triad to avoid confusion with the Central Intelligence Agency.

In this context, confidentiality ensures that information reaches only the person for whom the sender intended it. Integrity ensures that the message received is the original message sent by the sender and that it was not modified or altered during transmission. Availability is the assurance that the data is reliably accessible to authorized people when they need it.

There are other factors besides the CIA triad which have grown in importance in recent years, such as Possession or Control, Authenticity, and Non-Repudiation.

7.3 Popular Security Mechanisms in Distributed Systems

7.3.1 Secure Communication

Suitable measures are taken to provide a safe medium of communication between clients and servers so that the CIA factors are preserved. A secure channel provides a safe way of communication between a client and a server and prevents intrusion by a third party, so that confidentiality, integrity, and authentication are not compromised. Several protocols serve this purpose. Here we discuss a few protocols that provide authentication [5].

7.3.1.1 Authentication

Authentication and integrity are interdependent. Consider, for instance, a distributed system that supports authentication of the communicating parties but provides no mechanism for guaranteeing the integrity of the information, or, alternatively, a system that ensures data integrity but makes no provision for authentication. Neither is of much use, which is why authentication and integrity must go together. In many protocols, this combination works well. To guarantee the integrity of information exchanged after authentication has taken place, secret-key cryptography with session keys is commonly used. A session key is a shared secret key used to encrypt information for integrity and confidentiality. Such a key is used only while the established channel exists. When the channel is closed, the session key is discarded. Here we discuss authentication methods based on session keys [6, 7].

7.3.1.2 Shared Secret Key-Based Authentication

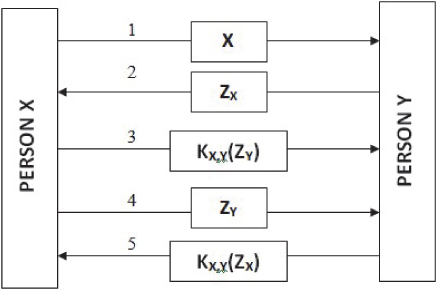

Figure 7.2 depicts an authentication protocol based on shared keys. If a person, say X, wishes to set up a communication channel with another person, say Y, X begins by sending a request message (message 1) to Y.

Figure 7.2 Shared secret key-based authentication.

Y sends a challenge ZY back to X in message 2. The challenge can be any random number. X encrypts the challenge with the secret key KX,Y, which it shares with Y, and sends the encrypted challenge to Y as message 3. When Y receives the response KX,Y(ZY) to its challenge, it decrypts the message with the shared key and checks whether it contains ZY. If it does, Y knows that X is on the other side, since no one else could have encrypted ZY with KX,Y. At this point Y has verified that it is talking to X, but X has not yet verified that it is talking to Y. X therefore sends a challenge ZX (message 4), which Y answers by returning KX,Y(ZX) (message 5). When X decrypts message 5 with KX,Y and finds ZX, it is assured that it is talking to Y. With this scheme, N(N - 1)/2 keys are required to manage N hosts.
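The five-message exchange can be sketched as follows. Because the Python standard library has no symmetric cipher, this sketch swaps "encrypt the challenge with KX,Y" for a keyed HMAC-SHA256 over the challenge, which proves knowledge of the shared key in the same way; that substitution, and the class and function names, are assumptions made for illustration.

```python
import hashlib
import hmac
import secrets


class Party:
    """One end of the channel; holds the pre-shared secret key KX,Y."""

    def __init__(self, name: str, shared_key: bytes):
        self.name = name
        self._key = shared_key

    def new_challenge(self) -> bytes:
        """Pick a fresh random challenge (ZX or ZY in the text)."""
        return secrets.token_bytes(16)

    def respond(self, challenge: bytes) -> bytes:
        """Prove knowledge of the key with a keyed MAC over the challenge."""
        return hmac.new(self._key, challenge, hashlib.sha256).digest()

    def verify(self, challenge: bytes, response: bytes) -> bool:
        """Check a peer's response using a constant-time comparison."""
        return hmac.compare_digest(self.respond(challenge), response)


def mutual_authentication(x: Party, y: Party) -> bool:
    # Message 1: X announces itself to Y (omitted here; it carries no secret).
    z_y = y.new_challenge()        # message 2: Y -> X, challenge ZY
    resp_x = x.respond(z_y)        # message 3: X -> Y, response to ZY
    if not y.verify(z_y, resp_x):
        return False               # Y could not authenticate X
    z_x = x.new_challenge()        # message 4: X -> Y, challenge ZX
    resp_y = y.respond(z_x)        # message 5: Y -> X, response to ZX
    return x.verify(z_x, resp_y)   # X authenticates Y
```

Two parties created with the same key authenticate each other, while a party holding a different key fails at message 3. A production protocol would also bind the identities and the direction of each message into the response to resist reflection attacks; the sketch leaves that out for brevity.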

7.3.1.3 Key Distribution Center-Based Authentication

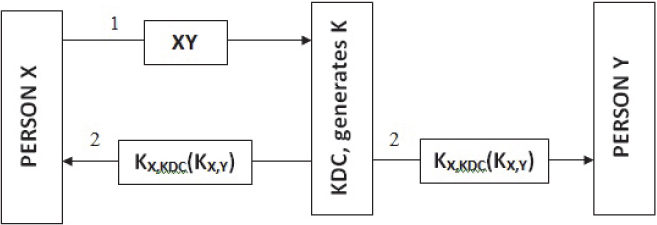

A key distribution center (KDC) is another technique that can be used for authentication. The KDC shares a secret key with each user, but no two users are required to share a key with each other. With a KDC, only N keys need to be managed.

Figure 7.3 shows how this authentication works. X expresses its interest in communicating with Y by sending a message to the KDC. The KDC returns a message to X containing a shared secret key KX,Y that X can use. In addition, the KDC sends KX,Y to Y, encrypted with the secret key KY,KDC that Y shares with the KDC. The Needham-Schroeder authentication protocol is based on this model [6].

Figure 7.3 Role of KDC in authentication.
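The difference in key-management effort between pairwise shared keys and a KDC can be checked with a few lines of arithmetic. The function names below are hypothetical; the formulas are the N(N - 1)/2 and N counts given in the text.

```python
def pairwise_keys(n_hosts: int) -> int:
    """Keys needed when every pair of hosts shares its own secret key."""
    return n_hosts * (n_hosts - 1) // 2


def kdc_keys(n_hosts: int) -> int:
    """Keys needed when each host shares exactly one key with the KDC."""
    return n_hosts


for n in (10, 100, 1000):
    print(n, pairwise_keys(n), kdc_keys(n))
# prints:
# 10 45 10
# 100 4950 100
# 1000 499500 1000
```

The pairwise count grows quadratically with the number of hosts, whereas the KDC count grows linearly, which is the main motivation for introducing a KDC.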

7.3.1.4 Public Key Encryption-Based Authentication

Figure 7.4 depicts the use of public key cryptography as an authentication protocol. X makes the first move by sending a challenge ZX to Y, encrypted with Y’s public key K+Y. Y must decrypt the message and return the challenge to X. Because Y is the only user who can decrypt this message, using the private key associated with the public key that X used, X is assured that it is communicating with Y. When Y receives the channel establishment request from X, it returns the decrypted challenge together with its own challenge ZY, which is used to authenticate X, and a newly generated session key KX,Y.

Figure 7.4 Public key encryption based on mutual authentication.

Y’s response to X’s challenge, its own challenge ZY, and the session key are placed in a message encrypted with the public key K+X belonging to X, shown as message 2 in the figure. Only X is able to decrypt this message, using the private key K-X associated with K+X. Finally, X returns its response to Y’s challenge using the session key KX,Y generated by Y. In doing so, X proves that it could decrypt message 2, and Y therefore knows that it is in fact talking to X [5].
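The three messages can be traced with a toy sketch. It assumes textbook RSA without padding, built from small, well-known Mersenne primes, and a simple XOR in place of real session-key encryption; none of this is secure, and all names are hypothetical. The only purpose is to show which key is applied at each step. The modular inverse via `pow(E, -1, phi)` requires Python 3.8 or later.

```python
import secrets
from dataclasses import dataclass

E = 65537  # public exponent shared by both toy key pairs


@dataclass
class KeyPair:
    n: int  # public modulus
    d: int  # private exponent

    @classmethod
    def from_primes(cls, p: int, q: int) -> "KeyPair":
        return cls(n=p * q, d=pow(E, -1, (p - 1) * (q - 1)))

    def encrypt(self, m: int) -> int:
        """Public operation: anyone who knows (n, E) can do this."""
        return pow(m, E, self.n)

    def decrypt(self, c: int) -> int:
        """Private operation: requires the private exponent d."""
        return pow(c, self.d, self.n)


# Toy keys from well-known Mersenne primes (NOT secure, illustration only).
KEY_X = KeyPair.from_primes(2**89 - 1, 2**107 - 1)
KEY_Y = KeyPair.from_primes(2**61 - 1, 2**31 - 1)


def authenticate() -> bool:
    # Message 1: X -> Y, challenge ZX encrypted with Y's public key.
    z_x = secrets.randbelow(2**64)
    msg1 = KEY_Y.encrypt(z_x)

    # Message 2: Y -> X, (ZX, ZY, KX,Y), each encrypted with X's public key.
    z_x_seen_by_y = KEY_Y.decrypt(msg1)
    z_y = secrets.randbelow(2**64)
    session_key = secrets.randbelow(2**64)
    msg2 = [KEY_X.encrypt(v) for v in (z_x_seen_by_y, z_y, session_key)]

    # X decrypts message 2 and checks that its own challenge came back.
    got_z_x, got_z_y, got_key = (KEY_X.decrypt(c) for c in msg2)
    if got_z_x != z_x:
        return False  # Y did not prove possession of its private key

    # Message 3: X -> Y, ZY returned under the session key (toy XOR "encryption").
    msg3 = got_z_y ^ got_key
    return (msg3 ^ session_key) == z_y  # Y authenticates X
```

Each side is authenticated by showing that it could decrypt something encrypted with its own public key: Y by returning ZX, and X by returning ZY under the session key it could only have obtained from message 2.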

7.3.2 Message Integrity and Confidentiality

Besides authentication, a secure channel must also guarantee confidentiality and integrity. Message integrity means that the message is not modified during transmission from the sender to the recipient. Confidentiality ensures that messages can be read only by the intended receiver and not by any eavesdropper. Message encryption helps us achieve confidentiality. Encryption can be carried out using a secret key shared with the recipient or using the recipient’s public key [8, 9].

7.3.2.1 Digital Signatures

A digital signature can be thought of as the digital counterpart of a handwritten signature or printed seal, one that offers better security than conventional signatures. A digital signature provides authentication and integrity for any message or electronic document. Asymmetric cryptography, also known as public key cryptography, forms the basis of digital signatures. Using a public key algorithm such as RSA, two keys can be generated, one private and one public. To create a digital signature, signing software, such as an email program, creates a one-way hash of the electronic data to be signed. The private key is then used to encrypt the hash.

The encrypted hash, along with other data such as the hashing algorithm, is the digital signature. The reason for encrypting the hash rather than the whole message or document is that a hash function converts an arbitrary input into a fixed-length hash value, which is usually much shorter. This saves time, because hashing is considerably faster than signing. For practical purposes, each piece of hashed data produces a unique code, and any change to the data results in a different value. This allows us to validate the integrity of the data by using the signer’s public key to decrypt the hash. If the decrypted hash matches a second hash computed over the same data, the data has not changed since it was signed. If the two hashes do not match, the data can be considered compromised. In other words, either the data has been altered in some way (integrity) or the signature was created with a private key that does not correspond to the public key presented by the signer (authentication).
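The hash-then-sign procedure described above can be sketched with textbook RSA. The key below is a toy built from two well-known Mersenne primes, there is no padding, and the hash is reduced modulo the small modulus so that it fits; these are all simplifying assumptions for illustration and would be unsafe in practice. The modular inverse via `pow(E, -1, phi)` requires Python 3.8 or later.

```python
import hashlib

# Toy RSA key from two well-known Mersenne primes (NOT secure, illustration only).
P, Q = 2**61 - 1, 2**31 - 1
N = P * Q                           # public modulus
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent


def digest(message: bytes) -> int:
    """One-way hash of the message, reduced to fit below the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Signer: 'encrypt' the hash with the private key."""
    return pow(digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Verifier: recover the hash with the public key and compare."""
    return pow(signature, E, N) == digest(message)
```

A signature produced by `sign(b"pay 10")` verifies against the same message and fails against an altered one such as `b"pay 1000"`. Real systems use a vetted cryptographic library with a standardized padding scheme instead of raw modular exponentiation.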

7.3.2.2 Session Keys

During the establishment of a secure channel, after the authentication phase has completed, the communicating parties generally use a unique shared session key to guarantee confidentiality. An alternative is to use the same keys for confidentiality as for setting up the secure channel. Suppose, however, that the key used to set up the session is also used to guarantee both the integrity and the confidentiality of messages. In this situation, whenever the key is compromised, an attacker can decrypt messages transmitted during old conversations, which is not at all acceptable. If, on the other hand, we use a session key, then in the event of a compromised key the attacker can intercept only a single session, and messages transmitted during other sessions stay confidential. The combination of long-lasting keys with session keys, which are cheaper and more temporary, is therefore usually a good choice for implementing a secure channel for data exchange.

7.3.3 Access Controls

In distributed systems, once a client and server have set up a secure channel between them, the client typically issues requests for some service from the server. Without proper access rights, such requests cannot be carried out. Revocation of access is a critical consideration in access control. It is essential that, having granted a party access to some resource, we are able to take that access back if and when required. Some well-known access control models are discussed below [10].

7.3.3.1 Access Control Matrix

An access control matrix is a table that expresses a subject’s access rights on a resource. These rights can be of the type read, write, and execute. Protection is enforced by a program called a reference monitor, which mediates management operations on objects such as creating, changing and deleting them. The reference monitor records which subject may do what and decides whether or not a subject is authorized to perform a specific operation. The access control matrix lists all processes and files in a system. Each row represents a process (subject) and each column represents a file (object) [8, 11]. Every matrix entry contains the access rights that a subject has for that object. In other words, each time a subject S requests the invocation of a method m of an object O, the reference monitor checks whether m is listed in M[S, O]. If m is not listed in M[S, O], the call fails. An access control list (ACL) is a list of access control entries (ACEs), where each ACE identifies a trustee and specifies the access rights allowed, denied, or audited for that trustee.
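The lookup performed by the reference monitor can be sketched with a nested dictionary standing in for the matrix M. The subjects, objects, and helper names are hypothetical, and the sparse representation (missing entries mean no rights) is a design assumption of this sketch.

```python
from typing import Dict, Set

# M[subject][object] = set of permitted operations; missing entries mean "no rights".
AccessMatrix = Dict[str, Dict[str, Set[str]]]

M: AccessMatrix = {
    "alice": {"report.txt": {"read", "write"}, "backup.sh": {"execute"}},
    "bob": {"report.txt": {"read"}},
}


def is_allowed(matrix: AccessMatrix, subject: str, obj: str, operation: str) -> bool:
    """Reference-monitor check: is `operation` listed in M[subject, obj]?"""
    return operation in matrix.get(subject, {}).get(obj, set())


def acl_for(matrix: AccessMatrix, obj: str) -> Dict[str, Set[str]]:
    """Column view of the matrix: the access control list attached to one object."""
    return {s: rights[obj] for s, rights in matrix.items() if obj in rights}


def revoke(matrix: AccessMatrix, subject: str, obj: str, operation: str) -> None:
    """Take back a previously granted right; a no-op if it was never granted."""
    matrix.get(subject, {}).get(obj, set()).discard(operation)
```

Here `is_allowed(M, "bob", "report.txt", "write")` is false because "write" is not listed in that entry, and `acl_for(M, "report.txt")` yields the per-object list of subjects and rights, which is how an ACL relates to a column of the matrix.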

7.3.3.2 Protection Domains

An access control list (ACL) helps implement an access control matrix efficiently by leaving out empty entries. However, an access control list or capability list can still become quite large if no further measures are taken. The protection domain technique reduces the size of access control lists. A protection domain is a set of (object, access rights) pairs. Each pair specifies exactly which operations are allowed to be carried out on a given object. Requests for operations are always issued within a domain. Therefore, whenever a subject requests an operation on an object, the reference monitor, the program that checks access rights, first looks up the protection domain associated with the request. Given the domain, the reference monitor can then check whether or not the request is allowed to be carried out. Instead of having the reference monitor do all the work, each subject can be allowed to carry a certificate stating which groups it belongs to. The subject then hands its certificate to the reference monitor each time it wants to, for example, read a web page from the Internet. The certificate is protected with a digital signature to guarantee its origin and its integrity [9].

7.3.3.3 Trusted Code

With the development of distributed systems in recent years has come the ability to migrate code between hosts. Such systems can be protected by a mechanism known as a sandbox, which runs programs downloaded from the Internet in isolation to prevent system failures or the exploitation of software vulnerabilities. If the program attempts an operation that is prohibited by the host, it is halted. A more flexible alternative to the sandbox is to set up a playground for code downloaded from the Internet.

A playground is a separate machine reserved exclusively for running mobile code. Resources local to the playground, such as files and network connections to external servers, are available to the applications that run in the playground. Resources local to other machines, however, are physically separated from the playground and cannot be reached by code downloaded from the Internet. Users of these other machines can access the playground by means of remote procedure calls. On the other hand, no mobile code is ever transferred to machines outside the playground. The distinction between a playground and a sandbox is shown in Figure 7.6.

Figure 7.6 Schematic of a sandbox and a playground.

7.3.3.4 Denial of Service

The purpose of access control is to allow legitimate users to have access to resources. Denial of service is an attack that stops legitimate users from getting access to resources. Since distributed systems are open in nature, protection against DoS is even more essential. It is particularly difficult to prevent or manage attacks launched from multiple sources at once, known as distributed denial-of-service (DDoS) attacks. Attackers usually prepare such an attack by installing malicious software on victims’ machines [12]. A firewall plays an important role here by restricting traffic into an internal network from the outside world, based on filters chosen to suit the needs of the organization [11].

7.4 Service Level Agreements

A service level agreement (SLA) is more about providing a basis for post-incident legal action than about improving the quality, availability and responsibilities of a service. It is a contract between a service supplier (internal or external) and the user that specifies the levels of service that a customer can expect from that supplier. Service level agreements are output-based, in that their purpose is specifically to define what the end user will receive [13].

7.4.1 Types of SLAs

Service level agreements are classified into the following types:

- Customer-Based SLA: A customer-based SLA is a contract covering all services that are used by an individual group of customers. An example is a service level agreement between an IT service provider and the finance department of a large company for services such as the finance system, procurement/purchase system, billing system, payroll system, etc.

- Service-Based SLA: A service-based SLA is not restricted to any specific customer group. Instead, it is a contract for all clients using the services provided by the service supplier. For example:

  - A mobile service provider provides a regular service to all its clients and offers certain maintenance as part of the offer at a standard charge.

  - An email system for the whole firm. Difficulties may arise with this type of SLA when different customers are provided with different levels of service, because of the lack of uniformity.

- Multilevel SLA: A multilevel SLA is divided into different levels to address different sets of customers for the same services within the same SLA. For example:

  - Corporate-level SLA: It covers all the common service level management (SLM) concerns relevant to every customer throughout the entire firm. These concerns are likely to be less volatile, so updates (SLA reviews) are needed less often.

  - Customer-level SLA: It covers all service level management concerns related to a particular customer group, regardless of the services being used.

  - Service-level SLA: It covers all service level management concerns related to the specific services, in relation to this specific customer group [15].

An SLA that describes service levels for an Internet service provider should cover:

- A description of the service being offered: Maintenance of areas such as network connectivity, domain name servers, and Dynamic Host Configuration Protocol (DHCP) servers.

- Reliability: The period during which the service is available.

- Responsiveness: The time within which a request will be answered.

- Procedure for reporting problems: How problems will be reported, who can be contacted, the procedure for escalation, and what other steps are taken to resolve the issue effectively.

- Monitoring and reporting of service level: Who will monitor performance, what data will be collected and how often, and how much access the customer is given to the performance data.

- Penalty for not meeting service commitments: May include credit or reimbursement to customers, or allowing the customer to terminate the relationship.

- Escape clauses or constraints: Circumstances under which the promised service levels no longer apply. An example is an exemption from uptime guarantees when a natural disaster destroys the ISP’s equipment.

The content of a service level agreement varies from one service provider to another, but it generally covers common topics such as the quality and volume of work (including precision and accuracy), responsiveness, efficiency and speed. The document serves as a common understanding of the responsibilities, services, guarantees, prioritized areas and warranties offered by the service supplier.

An SLA commonly uses technical definitions to quantify the service level, such as mean time between failures (MTBF) or mean time to recovery, response, or resolution (MTTR), and specifies a threshold value (average or minimum) for service level performance [13].
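As an illustration of how such metrics translate into a checkable threshold, the following sketch uses the standard steady-state availability formula, availability = MTBF / (MTBF + MTTR), with MTTR read as mean time to recovery. The function names, the 30-day reporting period, and the example figures are assumptions, not values taken from any particular SLA.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: fraction of time the service is up."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def meets_sla(mtbf_hours: float, mttr_hours: float, target: float) -> bool:
    """Compare measured availability with the threshold stated in the SLA."""
    return availability(mtbf_hours, mttr_hours) >= target


def allowed_downtime_hours(target: float, period_hours: float = 30 * 24) -> float:
    """Downtime budget implied by an availability target over one period."""
    return (1.0 - target) * period_hours
```

For example, a service that fails on average every 999 hours and takes 1 hour to recover has an availability of 0.999, and a 99.9% target over a 30-day period leaves a downtime budget of roughly 0.72 hours.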

7.4.2 Critical Areas for SLAs

The information security team and the information technology team must work hand-in-hand to develop the most important terms of service level agreements. SLAs must focus on those areas in which information technology groups have the most influence and control in order to have a significant effect on the information security program. The effect of such agreements is lessened if the information security teams fail to restrict the SLAs to only the most vital items. Analyzing the risks associated with an organization or assessing past events may point the way to the most vital components for a specific firm. In most cases, the following areas are proposed as important issues:

7.4.2.1 Examining the Network

Network scans are carried out by many information security teams to identify vulnerabilities of the network. Identifying the problems is just the starting point of the process. The second step is deciding how the problem should be handled. The third step is working out how the fix can be integrated into a process to avoid any recurrence in the future. To automate network scanning, firms use various commercial tools. One such tool is ISS Internet Scanner, which documents vulnerabilities grouped into the categories High Risk, Medium Risk and Low Risk. The risk levels defined in the Help Index of ISS Internet Scanner are as follows:

- High: Any vulnerability that allows an intruder to gain immediate access to a system, such as gaining superuser access and permissions or bypassing a firewall. Examples are a vulnerable Sendmail 8.6.5 version that permits an intruder to execute commands on mail servers, installations of BackOrifice or NetBus, and an Admin account without a password.

- Medium: Any vulnerability that provides sensitive information, degrades performance, or has a high potential of giving system access to an intruder. Examples are the identification of active modems on the network, zone transfers, and writable FTP directories.

- Low: Any vulnerability that provides sensitive information that could eventually lead to a compromise. Examples are floppy drives accessible to all users, IE performing limited SSL validation, and logon and logoff auditing not being enabled.

The information technology groups may look for the following types of recommendations in a report:

- Modifications in configuration

- Installing service packs

- Setting up patches

- Modifications in ACL (access control list)

- Registry edits

- Stopping services

These recommendations help the information technology groups by reducing the time needed to find solutions to the above problems, which leads to quicker implementation. As you would expect, the time allowed to resolve a deficiency is decided by the severity of the vulnerability. For example, high vulnerabilities must be resolved within 48 hours, medium vulnerabilities may be resolved within a week, and low vulnerabilities may be resolved within a month.
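The example remediation windows can be turned into a simple deadline check. This is a minimal sketch: the function and variable names are hypothetical, and "a month" is assumed to mean 30 days.

```python
from datetime import datetime, timedelta

# Windows taken from the example in the text; "a month" is assumed to be 30 days.
REMEDIATION_WINDOW = {
    "high": timedelta(hours=48),
    "medium": timedelta(days=7),
    "low": timedelta(days=30),
}


def due_date(severity: str, found_at: datetime) -> datetime:
    """Latest time by which a vulnerability of this severity must be resolved."""
    return found_at + REMEDIATION_WINDOW[severity.lower()]


def is_overdue(severity: str, found_at: datetime, now: datetime) -> bool:
    """True if the SLA window for this finding has already elapsed."""
    return now > due_date(severity, found_at)
```

A high-risk finding reported at 09:00 on 1 January would be due at 09:00 on 3 January, while a low-risk finding reported at the same time would be due on 31 January. A scan report could be run through `is_overdue` to list the findings that have breached the agreement.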

7.4.2.2 Forensics

Forensics plays a very important role in covering many investigations. Internet security teams are regularly asked at some point in an investigation what took place, when it occurred and/or whether it should have taken place or not. Log files are very vital with respect to carrying out a forensics inquiry without which the inquiry usually results in failure.

Therefore, SLAs with respect to forensics can consist of:

- How long logs are retained before being overwritten.

- Which logs are covered: proxy logs, firewall logs, server logs, syslogs, DHCP logs, and client logs.

- The threshold at which logs are moved to a central logging server, portable media, offsite information storage, etc.
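As a rough illustration, the three SLA points above (retention period, covered log sources, and archive threshold) could be captured in a small policy table. The sketch below is only an assumption about how such a policy might be encoded; the sources listed, the numeric values, and the names `LogPolicy`, `POLICIES`, and `next_action` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogPolicy:
    retention_days: int        # how long entries are kept before overwrite is allowed
    archive_threshold_mb: int  # local size at which logs move to central storage


# Hypothetical values for illustration; an actual SLA would define its own.
POLICIES = {
    "firewall": LogPolicy(retention_days=90, archive_threshold_mb=500),
    "proxy": LogPolicy(retention_days=30, archive_threshold_mb=200),
    "dhcp": LogPolicy(retention_days=30, archive_threshold_mb=100),
}


def next_action(source: str, oldest_entry_age_days: int, local_size_mb: int) -> str:
    """Decide what the policy allows for a log source in its current state."""
    policy = POLICIES[source]
    if local_size_mb >= policy.archive_threshold_mb:
        return "archive"        # move to the central logging server or offsite storage
    if oldest_entry_age_days > policy.retention_days:
        return "may_overwrite"  # retention period has elapsed
    return "keep"
```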

7.4.2.3 Managing Records/Documents

More often than not, an efficient IT organization has a properly managed information protection plan. In addition, the Internet technology groups should have suitable skills and good records of their processes, equipment, and assets, all of which must be kept up to date for an Internet security program to be carried out properly. A well-documented network topology is essential when conducting risk assessments, scanning the network, or responding to incidents or analytical requirements. An SLA related to network topology may, for example, require the respective Internet technology groups to update the network topology on a monthly basis.

Points that may be kept in mind while preparing SLAs related to document management are:

- Configuration changes

- Modifications in network infrastructure

- API changes

- System failure logs and the corresponding problem resolution

- Frequency of documentation review

- Detailed task descriptions

- Persons accountable for each document, including paper copies of service and maintenance contracts

7.4.2.4 Backing Up Data

Data backup is the basis of any strong Internet security program. Backups allow an organization to recover its data, either fully or from some known baseline or checkpoint, after an adverse event. Some fundamental issues to consider when preparing an SLA include:

- Conceptual backup documentation.

- Verification of the integrity of the data on the backup.

- Documentation of which servers or applications are backed up and on what schedule.

- Validation of restoration through regularly scheduled tests.

- Identification of an offsite storage location and the pickup and/or drop-off schedule.

- Identification of which backups contain confidential or proprietary data about the organization.

- Explanation of the labeling scheme used by the Internet technology groups to track backups.

- Backup-related documentation covering how to perform backups, restore data, etc.

- Anti-virus scanning before data is backed up.
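Two of the points above, verifying backup integrity and validating restoration with a scheduled test, lend themselves to a short sketch. The following Python example is one possible approach under stated assumptions: it uses SHA-256 checksums from the standard library, records a manifest when the backup is taken, and compares a test restore against it. The function names `sha256_of`, `build_manifest`, and `verify_restore` are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its checksum at backup time."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_restore(manifest: dict[str, str], restored_root: Path) -> list[str]:
    """Return the relative paths that are missing or differ after a test restore."""
    problems = []
    for rel, expected in manifest.items():
        candidate = restored_root / rel
        if not candidate.is_file() or sha256_of(candidate) != expected:
            problems.append(rel)
    return problems
```

An empty list from `verify_restore` indicates that every file recorded in the manifest was restored with identical content; any path it returns would warrant investigation before the backup is trusted.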

Establishing Internet security service level agreements between the Internet technology groups and the Internet security groups is essential to assure the organization that reasonable measures are actually being applied. The agreements give the Internet technology groups reasonable expectations and give the Internet security groups an authoritative standing, rather than leaving them last in line [14].

7.5 The Cuckoo’s Egg in the Context of IT Security

Although a book about the digital security events of 1986 is not in much demand today, its visionary ideas from some 30 years ago remain relevant. It may sound dated, yet even amid the fledgling information technology (IT) of 1986, systems were already expected to offer ongoing protection from malware and hacking. At the University of California’s Lawrence Berkeley Laboratory, the astronomer Clifford Stoll was given the job of tracking down a 75-cent accounting error in the usage records of a mainframe computer. It turned out that nine seconds of computer time had been stolen by a hacker exploiting vulnerabilities in a UNIX text editor.

A detailed account of the cyber-espionage battle that followed appears in the 1989 book The Cuckoo’s Egg: Tracking a Spy Through the Maze of Computer Espionage. It is a first-person account of Stoll’s hunt for the hacker who broke into the lab’s computer and of how he ran test programs to expose a hidden network of spies. This real-life international thriller gave many people their first exposure to digital security. It superbly blended Cold War antagonism, computing, and the privacy-versus-security debate. The book recounts Stoll’s struggle to get help from the U.S. and German governments in dealing with a real threat that no one wanted to take ownership of.

The egg in the title The Cuckoo’s Egg alludes to how the hacker compromised many of his victims. The significance lies in the fact that a cuckoo does not lay its eggs in its own nest. Rather, she waits for another bird’s nest to be left unattended. The mother cuckoo then sneaks in, lays her egg in the empty nest, and escapes, leaving her egg to be incubated by another mother. Like the cuckoo, Stoll’s hackers exploited a security vulnerability in the powerful and extensible GNU Emacs text editor that Berkeley had installed on most of its UNIX machines. As Stoll stated, “The survival of cuckoo chicks depends on the ignorance of other species” [16].

It is worth noting that the cuckoo’s egg in the book is a malicious program that an attacker uses to replace a legitimate program. More specifically, it was swapped for atrun, which is executed every five minutes, meaning that the attacker had to wait five minutes at most before the malicious code was executed. Stoll refers to this as the “hatching” of the cuckoo’s egg [17].

“I watched the cuckoo lay its egg: once again, he manipulated the files in my computer to make himself super-user. His same old trick: use the Gnu-Emacs move-mail to substitute his tainted program for the system’s atrun file. Five minutes later, shazam! He was system manager” [18].

The spy ring spent considerable effort trying to take over ordinary user accounts so that they could log in as those users and survey the system without raising an alarm. In one instance, after becoming a system administrator through the Emacs attack, one hacker opened the system’s password file. He still did not know the passwords of the users on the system, since they were encrypted. Rather than trying to crack them, he simply erased one: he picked a particular user and deleted that user’s password. When he later logged in as that user, the system granted access because no password guarded the account. Eventually, the hacker began downloading the entire password file to his home computer. Stoll later found that the hacker had carried out a clever new attack. He encrypted each word in the dictionary with the same algorithm that encrypted the passwords and compared the encrypted passwords in the downloaded password file with the encrypted dictionary words. Whenever he found a match, he could log in as a legitimate user. Brute-force dictionary attacks are standard today, but back then this was a new idea.
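The dictionary attack described above can be sketched briefly to show why dictionary-word passwords offer little protection once a password file leaks. This is an illustration under stated assumptions only: SHA-256 stands in for the one-way function (it is not the UNIX password scheme of 1986), and the usernames, passwords, word list, and the names `encrypt` and `dictionary_attack` are hypothetical.

```python
import hashlib


def encrypt(word: str) -> str:
    """Stand-in one-way function (SHA-256); an assumption, not the 1986 UNIX scheme."""
    return hashlib.sha256(word.encode("utf-8")).hexdigest()


def dictionary_attack(password_file: dict[str, str], dictionary: list[str]) -> dict[str, str]:
    """Return {user: recovered_password} for stored values that match a dictionary word."""
    # Encrypt every dictionary word once, then look each stored value up in the table.
    table = {encrypt(word): word for word in dictionary}
    return {
        user: table[stored]
        for user, stored in password_file.items()
        if stored in table
    }


# Hypothetical data for illustration only.
password_file = {"alice": encrypt("sunshine"), "bob": encrypt("x9!Qv#72kLp")}
dictionary = ["password", "sunshine", "dragon"]
print(dictionary_attack(password_file, dictionary))  # {'alice': 'sunshine'}
```

Only the account whose password appears in the word list is recovered. A password that is not a dictionary word does not match, which is why password policies discourage dictionary words and why modern systems add per-user salts and deliberately slow hashing.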

Stoll regularly ran into government bureaucrats and intelligence agents who were eager to take any information he had about his investigation but were unwilling to share anything they knew in return. There is also a second important issue. As Stoll wraps up the book, he concludes that after sliding down this Alice-in-Wonderland hole, he found that the political left and right share a dependence on computers. However, the right sees computer security as necessary to protect national secrets, whereas their “leftie” counterparts worry about an invasion of their privacy.

The Snowden case is only the latest in a series of debates about privacy versus security that the United States and other countries have had over the past twenty years. As Bruce Schneier points out, this is a false dichotomy: the debate is not about security versus privacy but about liberty versus control [18].

He and other distinguished researchers explain why this is not an either-or choice. He noted that one can have security and privacy at the same time, but one has to work for it. Stoll was arguably the first to raise the issue, in this book. He struggled with it then, just as we all do today.

The third significant issue is the cyber-espionage threat. The business world truly became aware of the issue with the Chinese government’s compromise of Google’s data toward the end of 2009. The U.S. military had been dealing with the Chinese cyber-espionage threat, known back then as Titan Rain, for a decade before that. Stoll claims, however, that his book describes the first public case in which spies used computers to conduct espionage, this time sponsored by the Russians. The events in The Cuckoo’s Egg began in August 1986, almost 15 years before Titan Rain, and some of the government figures Stoll mentions in the book hint that they believed other, nonpublic espionage activity had taken place even earlier. The point is that the cyber-espionage threat has been around for some 30 years and shows no sign of going away in the near future.

The fourth and last significant issue is not really a cyber issue at all, but rather an intelligence dilemma. Throughout the book, Stoll struggles with whether or not to publish his findings. He frames the issue this way: “If you describe how to make a pipe bomb, the next kid that finds some charcoal and saltpeter will become a terrorist. Yet if you suppress the information, people won’t know the danger” [16].

7.6 Searching and Seizing Computer-Related Evidence

7.6.1 Computerized Search Warrants

A search warrant may be issued to search a computer or electronic media if there is probable cause to believe that the media contains or is contraband, evidence of a crime, fruits of a crime, or an instrumentality of a crime. For more information, see Fed. R. Crim. P. 41(c).

Three critical issues concerning search warrants are addressed below: particularity, the reasonable time period for examining seized electronic devices or storage media, and the retention of seized data.

7.6.1.1 Particularity

Search warrants must particularly describe the place to be searched and the things to be seized. “When electronic storage media are to be searched because they store information that is evidence of a crime, the items to be seized under the warrant should usually focus on the content of the relevant files rather than the physical storage media” (Searching and Seizing Computers and Obtaining Electronic Evidence in Criminal Investigations, Computer Crime and Intellectual Property Section, Criminal Division, U.S. Department of Justice, Washington, D.C. (3rd ed. 2009) at 72).

One approach is to begin with an “all records” description; add limiting language stating the crime, the suspects, and the relevant time period, if applicable; include explicit examples of the records to be seized; and then indicate that the records may be seized in any form, whether electronic or non-electronic (Id. at 74-77).

In some jurisdictions, judges or magistrates may impose specific conditions on how the search is to be executed or require police to explain how they intend to limit the search before the warrant is granted.

7.6.1.2 Reasonable Time Period for Examining Seized Electronic Equipment

Courts have held that the Fourth Amendment requires the forensic examination of a computer or electronic equipment to be conducted within a reasonable time (United States v. Mutschelknaus, 564 F. Supp. 2d 1072, 1077 (D.N.D. 2008)).

A prolonged delay in obtaining a search warrant to search a seized electronic device can be held unreasonable under the Fourth Amendment. For instance, in U.S. v. Mitchell, 565 F.3d 1347, 1351 (11th Cir. 2009), a 21-day delay in obtaining a search warrant for the defendant’s computer was held to be unreasonable.

There may be compelling law enforcement reasons for delays, including waiting for a warrant to be obtained or waiting for the completion of more urgent active investigations that require forensic examiner resources. Similarly, a complicated forensic analysis, owing to the volume of records or the presence of encryption, may provide compelling reasons for delay.

7.6.1.3 Unreasonable Retention of Seized Data

In United States v. Ganias, 755 F.3d 125 (2d Cir. 2014), the United States Court of Appeals for the Second Circuit considered whether the Fourth Amendment permits officials executing a warrant for the seizure of particular data on a computer to seize and indefinitely retain every file on that computer for use in future criminal investigations. The court held that it does not. For instance, if police search and seize an electronic device for evidence of one crime, retain the files and, years later, search those files for evidence in a separate criminal investigation, that violates the Fourth Amendment, according to the Second Circuit’s decision in Ganias.

7.6.2 Searching and Seizing

As information and communication technologies have entered everyday life, computer-related crime has increased dramatically. Because computers or other data storage devices can provide the means of committing a crime or be a repository of electronic information that is evidence of a crime, the use of warrants to search for and seize such devices is of increasing importance. The search and seizure of electronic evidence is in most respects the same as any other search and seizure. For example, as with any other search and seizure, the search and seizure of computers or other electronic storage media must be conducted pursuant to a warrant, which is issued by a district court if there is probable cause to believe that they contain evidence of a crime [20].

Recognizing that criminals have not missed out on the computer revolution, the United States Department of Justice has published a manual devoted solely to the law of searching and seizing computers and electronic surveillance of the Internet. The manual was written to help law enforcement agencies across the country obtain electronic evidence in criminal investigations.

According to James K. Robinson, Assistant Attorney General of the Criminal Division, this manual was essential for law enforcement agents and prosecutors, as well as for any American who uses a computer. He further stated that electronic privacy is important to all of us, and that the department wants everyone to know what their rights are. The manual, entitled “Searching and Seizing Computers and Obtaining Electronic Evidence in Criminal Investigations,” was produced by the Criminal Division’s Computer Crime and Intellectual Property Section (CCIPS). The increase in computer-related crime requires prosecutors and law enforcement agents to understand how to obtain electronic evidence stored in computers. Electronic records, such as computer network logs, e-mails, word processing files, and “.jpg” picture files, increasingly provide the government with important evidence in criminal cases.

The manual provides federal law enforcement agents and prosecutors with systematic guidance that can help them understand the legal issues that arise when they seek electronic evidence in criminal investigations. The manual outlines how electronic surveillance laws apply to the Internet, as well as how the courts have applied the Fourth Amendment to computers.

This manual replaces Federal Guidelines for Searching and Seizing Computers (1994), as well as the 1997 and 1999 supplements. Although that earlier interagency effort achieved the goal of giving systematic guidance to every federal agent and attorney in the field of computer search and seizure, intervening changes in the law and the rapid development of the Internet since 1994 have created the need for fresh guidance. This manual is intended as an updated version of the recommended guidelines on searching and seizing computers, with guidance on the statutes that govern obtaining electronic evidence in cases involving computer networks and the Internet. The manual offers assistance, not authority. Its analysis and conclusions reflect current thinking on difficult areas of the law and do not represent the official position of the Department of Justice or other agencies. It has no regulatory effect.

The law governing electronic evidence in criminal investigations has two primary sources: the Fourth Amendment to the U.S. Constitution, and the statutory privacy laws codified at 18 U.S.C. 2510-22, 18 U.S.C. 2701-11, and 18 U.S.C. 3121-27. Although constitutional and statutory issues overlap in some cases, most situations present either a constitutional issue under the Fourth Amendment or a statutory issue under these three statutes. The organization of the manual reflects that division: Chapters 1 and 2 address the Fourth Amendment law of search and seizure, and Chapters 3 and 4 focus on the statutory issues, which arise mostly in cases involving computer networks and the Internet [19].

7.7 Conclusion

This chapter focused on various cybersecurity techniques meant for parallel as well as distributed computing environments. Distributed systems are attractive because they offer increased performance, extensibility, increased availability, and resource sharing. Requirements such as multiuser configuration, resource sharing, and some form of communication between workstations have created a new set of problems with respect to privacy, security, and protection of the system as well as of the user and data. New-age cybersecurity techniques to combat cybercrime and prevent data breaches are therefore the need of the hour. The chapter also focused on the need for service level agreements (SLAs) between a service provider and a client covering aspects of the service such as quality, availability, and responsibilities. The lessons on cybersecurity from Clifford Stoll’s The Cuckoo’s Egg, as well as various amendments to curb fraud, data breaches, dishonesty, deceit and other such cybercrimes, were also discussed.

References

1. Mishra, K. S., & Tripathi, A. K. (2014). Some issues, challenges and problems of distributed software system. International Journal of Computer Science and Information Technologies. Varanasi, India, 7(3).

2. Nadiminti, K., De Assunção, M. D., & Buyya, R. (2006). Distributed systems and recent innovations: Challenges and benefits. InfoNet Magazine, 16(3), 1-5.

3. http://www.ejbtutorial.com/distributed-systems/challenges-for-a-distributed-system

4. https://www.rsaconference.com/writable/presentations/file_upload/grc-f42.pdf

5. Shahabi, Reza Nayebi. “Security Techniques in Distributed Systems.” system 2: 3.

6. Liu, H., Luo, P., & Wang, D. (2008). A scalable authentication model based on public keys. Journal of Network and Computer Applications, 31(4), 375-386.

7. Wang, F., & Zhang, Y. (2008). A new provably secure authentication and key agreement mechanism for SIP using certificateless public-key cryptography. Computer Communications, 31(10), 2142-2149.

8. Malacaria, P., & Smeraldi, F. (2013). Thermodynamic aspects of confidentiality. Information and Computation, 226, 76-93.

9. Chandra, S., & Khan, R. A. (2010). Confidentiality checking an object-oriented class hierarchy. Network Security, 2010(3), 16-20.

10. Andress, J. (2014). The basics of information security: understanding the fundamentals of InfoSec in theory and practice. Syngress.

11. Prowell, S., Kraus, R., & Borkin, M. (2010). Seven deadliest network attacks. Elsevier.

12. Kumar, P. A. R., & Selvakumar, S. (2011). Distributed denial of service attack detection using an ensemble of neural classifier. Computer Communications, 34(11), 1328-1341.

13. https://www.paloaltonetworks.com/cyberpedia/what-is-a-service-level-agreement-sla

15. Kearney, K. T., & Torelli, F. (2011). The SLA model. In Service Level Agreements for Cloud Computing (pp. 43-67). Springer, New York, NY.

16. https://researchcenter.paloaltonetworks.com/2013/12/cybersecurity-canon-cuckoos-egg/

17. https://security.stackexchange.com/questions/16889/what-is-a-cuckoos-egg/16892

18. Stoll, C. (2005). The cuckoo’s egg: tracking a spy through the maze of computer espionage. Simon and Schuster.

19. https://www.govcon.com/doc/justice-department-releases-guide-to-searchin-0001

20. https://www.lexology.com/library/detail.aspx?g=13704bd6-f4e4-4157-b111-9c58ccfd9f7d