Chapter 9

Organization Assignment in Federated Cloud Environments based on Multi-Target Optimization of Security

Abhishek Kumar1, Palvadi Srinivas Kumar2, T.V.M. Sairam3

1 Computer Science Department, Aryabhatta College of Engineering & Research Center (ACERC Ajmer), Rajasthan, India

2 Department of Computer Science and Engineering, Sri Satya Sai University of Technology and Medical Sciences, Sehore, Madhya Pradesh, India

3 Department of Computing Science and Engineering, Vellore Institute of Technology, Chennai, India


Abstract

A cloud federation allows the interconnected cloud computing environments of different cloud service providers (CSPs) to share their resources and deliver more efficient service performance. However, each CSP provides a different level of security in terms of cost and performance. Instead of consuming the entire set of cloud services required to deploy an application from a single CSP, consumers could benefit from cloud federation by adaptively assigning their services to different CSPs while satisfying all of their services' security requirements. In this chapter, we model the service assignment problem in federated cloud environments as a security-based multi-objective optimization problem. The model enables consumers to consider a trade-off among three security factors (cost, performance, and risk) when assigning their services to CSPs. The cost and performance of the delivered security services are evaluated using a set of quantitative metrics that we propose. Next, we solve the problem with a preemptive optimization technique that follows the consumer's priorities. Simulations showed that the model helps reduce the rate of security and performance violations.

Keywords: Cloud computing, cloud service providers

9.1 Introduction

A cloud is a type of parallel and distributed system consisting of a collection of interconnected and virtualized computers that are dynamically provisioned and presented as one or more unified computing resources, based on service-level agreements established through negotiation between the service provider and consumers. Cloud computing opens a new chapter of efficient and economically viable business models and market opportunities. The demand for cloud computing is driven in part by requirements such as reducing costs, business agility, lowering capital expenditure, and removing the burden of provisioning. These services are organized as workflows, which are collections of tasks processed according to service requirements. In the cloud, services are delivered to many users, and different users have different QoS requirements, so scheduling services for diverse user requirements is difficult. A scheduling strategy should therefore be developed for multiple workflows with different QoS requirements. Mapping multiple workflows with different QoS requirements onto resources is NP-hard, and various algorithms have been proposed to schedule workflows under multiple QoS parameters. Many existing algorithms target particular QoS parameters; for example, they are cost-based, time-based, or consider both.
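To make the size of this search space concrete, a minimal brute-force sketch follows. It assumes a hypothetical instance (task lengths, service speeds, prices, and a makespan deadline) and a single illustrative objective, total cost under the deadline; it is not the MQMCE formulation. Because each of n tasks can go to any of m services, there are m^n candidate mappings. Enumerating them does not prove NP-hardness, but it shows why exact search is only practical for toy instances.

```python
from itertools import product

# Hypothetical instance: task lengths (e.g. millions of instructions),
# service speeds (same unit per second) and prices per second of use.
task_lengths = [40, 25, 60, 10]
service_speed = [10, 20]
service_price = [1.0, 3.0]
DEADLINE = 6.0  # assumed makespan limit, in seconds

def evaluate(mapping):
    """Return (total_cost, makespan); mapping[i] is the service index of task i."""
    busy = [0.0] * len(service_speed)
    cost = 0.0
    for length, s in zip(task_lengths, mapping):
        runtime = length / service_speed[s]
        busy[s] += runtime                  # tasks on the same service run one after another
        cost += runtime * service_price[s]
    return cost, max(busy)

def brute_force():
    """Exhaustively search all m**n mappings for the cheapest one that meets the deadline."""
    best = None
    for mapping in product(range(len(service_speed)), repeat=len(task_lengths)):
        cost, makespan = evaluate(mapping)
        if makespan <= DEADLINE and (best is None or cost < best[0]):
            best = (cost, mapping)
    return best

print(len(service_speed) ** len(task_lengths))  # 16 candidate mappings
print(brute_force())                            # (17.25, (1, 1, 0, 1))
```

With only 5 services and 20 tasks the count is already 5^20, about 9.5 × 10^13 mappings, which is why heuristic and evolutionary schedulers are used instead.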

If a single objective function includes more than two quality of service (QoS) parameters, the problem becomes even more difficult. This chapter focuses on multiple QoS parameters, such as cost and time, in addition to reliability. The proposed framework uses the MQMCE algorithm to satisfy multiple QoS parameters, reducing cost and time while increasing reliability and availability, within a single objective function. Multi-objective optimization is an area of multiple-criteria decision-making concerned with mathematical optimization problems in which more than one objective function must be optimized simultaneously. In multi-objective problems, the objectives usually conflict, and the aim is to optimize all of them at the same time. Many, or even most, real-world design problems actually have multiple objectives.
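Because the results later in this chapter are reported as non-dominated solutions, it may help to spell out Pareto dominance. The sketch below assumes every objective is minimized (a maximized quantity such as reliability can be entered as 1 - reliability); the candidate values are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, ...]

def dominates(a: Point, b: Point) -> bool:
    """a dominates b if it is no worse in every objective and strictly better in at least one.
    All objectives are minimized here (e.g. cost, time, 1 - reliability)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def non_dominated(points: List[Point]) -> List[Point]:
    """Keep the points that no other point dominates (the Pareto front)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical candidate schedules as (cost, time) pairs.
candidates = [(10, 5), (8, 7), (12, 4), (11, 6), (9, 9)]
print(non_dominated(candidates))  # [(10, 5), (8, 7), (12, 4)]
```

None of the three surviving schedules is better than another in both cost and time, which is exactly the conflict between objectives described above.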

There are two general approaches to multi-objective optimization. The first is to combine the individual objective functions into a single composite function, or to move all but one objective into the constraint set. In the former case, a single objective can be obtained with methods such as utility theory or the weighted-sum method; however, the difficulty lies in properly selecting the weights or utility functions that characterize the decision-maker's preferences. In practice, it can be very hard to select these weights precisely and accurately, even for someone familiar with the problem domain. Compounding this drawback, the objectives must be scaled relative to one another, and small perturbations in the weights can sometimes lead to quite different solutions. In the latter case, moving objectives into the constraint set requires a constraining value to be established for each of these former objectives, which can be rather arbitrary. In both cases, the optimization method returns a single solution rather than a set of solutions that can be examined for trade-offs. For this reason, decision-makers often prefer a set of good solutions that takes the multiple objectives into account.
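Both single-solution approaches can be sketched on three hypothetical candidate schedules. The weighted-sum method below min-max normalizes cost and time (the scaling step mentioned above) before combining them; the constraint method minimizes cost after turning time into an upper bound. The candidates, weights, and bounds are illustrative assumptions.

```python
# Hypothetical candidate schedules: name -> (cost, time); both are minimized.
candidates = {"A": (10.0, 50.0), "B": (14.0, 30.0), "C": (20.0, 22.0)}

def normalise(values):
    """Min-max scale to [0, 1] so objectives with different units can be summed."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v in values]

def weighted_sum_choice(w_cost):
    """Weighted-sum method: minimize w_cost * cost + (1 - w_cost) * time (normalized)."""
    names = list(candidates)
    costs = normalise([candidates[n][0] for n in names])
    times = normalise([candidates[n][1] for n in names])
    scores = {n: w_cost * c + (1 - w_cost) * t for n, c, t in zip(names, costs, times)}
    return min(scores, key=scores.get)

def epsilon_constraint_choice(max_time):
    """Constraint method: minimize cost after moving time into the constraint set."""
    feasible = {n: v for n, v in candidates.items() if v[1] <= max_time}
    return min(feasible, key=lambda n: feasible[n][0]) if feasible else None

for w in (0.30, 0.35, 0.70):
    print(w, weighted_sum_choice(w))  # C, then B, then A
print(epsilon_constraint_choice(35.0), epsilon_constraint_choice(25.0))  # B C
```

Moving the cost weight from 0.30 to 0.35 switches the chosen schedule from C to B, illustrating the sensitivity to weights; likewise, the time bound of 35 or 25 in the constraint method decides the answer, and each method still returns only one schedule.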

The second approach is to combine the two different modules into a single module. During this concatenation, the operations of the two nodes are performed, and depending on the operation, the task is executed on a particular server. A genetic algorithm (GA) for this real-world problem is flexible to use and simple to design within a user-friendly framework. The task is performed on the data of the individuals. In this implementation, customers first request resources; the values are then evaluated independently; and finally, the variables are optimized efficiently. The results are then reported and compare favorably with those of previous approaches.
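The chapter does not specify its GA encoding or operators. As an illustration only, the sketch below uses a common textbook design for assigning tasks to servers: an integer chromosome (one gene per task, holding a server index), binary tournament selection, one-point crossover, random-reset mutation, and elitism. The data, the weighted fitness, and all parameters are hypothetical.

```python
import random

# Hypothetical instance: task lengths, server speeds and per-second prices.
TASKS = [40, 25, 60, 10, 35, 50]
SPEED = [10, 20, 15]
PRICE = [1.0, 3.0, 2.0]
W_COST, W_TIME = 0.5, 0.5  # assumed weights for the aggregated fitness

def fitness(chrom):
    """Weighted cost plus makespan of an assignment (chrom[i] = server of task i); lower is better."""
    busy = [0.0] * len(SPEED)
    cost = 0.0
    for length, s in zip(TASKS, chrom):
        runtime = length / SPEED[s]
        busy[s] += runtime
        cost += runtime * PRICE[s]
    return W_COST * cost + W_TIME * max(busy)

def genetic_algorithm(pop_size=30, generations=60, p_mut=0.1, seed=1):
    rng = random.Random(seed)                   # seeded for reproducible runs
    n, m = len(TASKS), len(SPEED)
    pop = [[rng.randrange(m) for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness)
        nxt = pop[:2]                           # elitism: carry over the two best
        while len(nxt) < pop_size:
            p1 = min(rng.sample(pop, 2), key=fitness)  # binary tournament selection
            p2 = min(rng.sample(pop, 2), key=fitness)
            cut = rng.randrange(1, n)                  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # random-reset mutation: each gene gets a random server with probability p_mut
            child = [rng.randrange(m) if rng.random() < p_mut else g for g in child]
            nxt.append(child)
        pop = nxt
    best = min(pop, key=fitness)
    return best, fitness(best)

best, score = genetic_algorithm()
print(best, round(score, 3))
```

Because the fitness aggregates cost and makespan with fixed weights, this GA returns a single solution; a multi-objective variant such as the MOGA mentioned in the conclusion would instead keep a set of non-dominated individuals.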

9.2 Background Work Related to Domain

9.2.1 Basics on Cloud computing

The basic idea of cloud computing is to deliver computing resources as services over the cloud. Consumers are not required to invest in large computing infrastructure to run their business; rather, they can acquire cloud computing services based on their demands [8]. The underlying hardware is generally hosted in large data centers (DCs) that use sophisticated virtualization techniques to achieve high performance, elasticity, and availability. As mentioned above, the usability of cloud servers stems from this elasticity, and consumers do not need to pay for hosting their own servers. In this model, resources are provisioned and consumed on demand [13].

The choice of cloud servers depends on the budget invested in them, and they are offered under three service models: PaaS, SaaS, and IaaS [17]. Amazon provides multiple services for different cloud needs, such as EC2 and S3. EC2 offers high availability, with a stated availability of 99.99%. The availability of a server depends on the budget invested in it and on the provider's availability guarantees. Such servers are mostly used for private cloud services, that is, for specific organizations [1, 3-5].
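An availability percentage is easier to judge when converted into permitted downtime. The helper below is a simple illustration (its name is ours, and the exact SLA terms are defined by each provider, not here); it shows that 99.99% allows roughly 4.3 minutes of downtime per 30-day month, about 53 minutes per year, versus about 43 minutes per month at 99.9%.

```python
def allowed_downtime_minutes(availability_pct: float, period_hours: float) -> float:
    """Maximum downtime, in minutes, allowed by an availability target over a period."""
    return (1 - availability_pct / 100) * period_hours * 60

for target in (99.9, 99.99):
    month = allowed_downtime_minutes(target, 30 * 24)
    year = allowed_downtime_minutes(target, 365 * 24)
    print(f"{target}%: {month:.2f} min per 30-day month, {year:.1f} min per year")
```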

9.2.2 Clouds Which are Federated

In a standard cloud environment, the cloud service depends on a single cloud service provider (CSP), and its quality depends on that provider and the services it offers. In the single-cloud case, the entire application must be hosted in that one cloud. The CSP should provide good service, flexibility, ease of use, easy access, and availability at all times; together, these determine the quality of service (QoS) the CSP delivers. An application programming interface (API) is the basic requirement for accessing the service.
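In a federation, each service can instead be placed with whichever CSP best matches its security requirement, using a preemptive optimization ordered by the consumer's priorities, as outlined in the abstract. The chapter does not spell out that procedure, so the sketch below shows one possible lexicographic interpretation: discard offers below the required security level, then keep only the offers that are best (within a relative tolerance) on the highest-priority objective, then the next, and so on. The `Offer` fields, security levels, tolerance, and CSP names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Offer:
    csp: str
    security_level: int  # hypothetical scale: 1 (low) .. 5 (high)
    cost: float          # lower is better
    latency_ms: float    # performance proxy, lower is better
    risk: float          # estimated risk, lower is better

def preemptive_choice(offers, required_level, priorities, tolerance=0.0):
    """Lexicographic (preemptive) selection over non-negative objectives.
    Objectives in `priorities` are optimized strictly in order; `tolerance`
    keeps offers within (1 + tolerance) of the best value at each level."""
    pool = [o for o in offers if o.security_level >= required_level]
    for attr in priorities:
        if not pool:
            break
        best = min(getattr(o, attr) for o in pool)
        pool = [o for o in pool if getattr(o, attr) <= best * (1 + tolerance)]
    return pool[0] if pool else None

offers = [
    Offer("CSP-A", 4, 100.0, 40.0, 0.20),
    Offer("CSP-B", 5, 104.0, 25.0, 0.10),
    Offer("CSP-C", 3, 80.0, 30.0, 0.30),
    Offer("CSP-D", 4, 120.0, 20.0, 0.05),
]
print(preemptive_choice(offers, 4, ["cost", "risk", "latency_ms"], tolerance=0.05).csp)  # CSP-B
```

With a 5% cost tolerance, CSP-B survives the cost stage alongside CSP-A and then wins on risk; with zero tolerance CSP-A is chosen, and putting risk first selects CSP-D. The cheapest offer, CSP-C, is never considered because it fails the security requirement.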

9.2.3 Cloud Resource Management

Resource management defines the minimum set of resources needed to develop and maintain a server. It should be designed to maintain sufficient server capacity for however many requests may arrive [6-9, 11-15]. The approach to cloud resource management depends on the service model: SaaS, PaaS, or IaaS.

For the cloud servers we use, services, storage, and other resources can be scaled up or down according to our requirements. Auto-scaling services are offered by PaaS providers, for example, Google App Engine. Auto-scaling for IaaS is complicated because of the absence of, and the deficiencies in, the available standards. In distributed computing, whether in a single cloud or a federated one, variability is unpredictable and frequent, and centralized management and control may be unable to provide continuous services and useful guarantees. Therefore, centralized management cannot provide adequate solutions for cloud resource management policies (see Table 9.1).
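A common way to express auto-scaling is a threshold rule on average utilization. The function below is a generic sketch, not any provider's API; the thresholds and instance bounds are assumed values chosen for illustration.

```python
def scale_decision(instances, utilization, upper=0.75, lower=0.30,
                   min_instances=1, max_instances=10):
    """Threshold rule: add one instance when average utilization exceeds `upper`,
    remove one when it drops below `lower`, and stay within the given bounds."""
    if utilization > upper and instances < max_instances:
        return instances + 1
    if utilization < lower and instances > min_instances:
        return instances - 1
    return instances

instances = 2
for load in [0.80, 0.90, 0.50, 0.20, 0.10]:  # hypothetical utilization samples
    instances = scale_decision(instances, load)
    print(load, instances)  # instance count goes 3, 4, 4, 3, 2
```

The gap between the two thresholds keeps the rule from oscillating when utilization hovers around a single cut-off value.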

Table 9.1 Reasons for the federation of cloud.

| Reasons | Description |
| --- | --- |
| Sharing | A true notion of sharing between parties that have different organizational policies and different technical capabilities. |
| Fault tolerance | Services are replicated across different providers, so if one provider suffers an outage, the services placed on other providers can be activated as a failsafe. |
| Improved QoS | Latency and delays are minimized by serving a request from a geographically nearer provider, or response time is reduced by serving it from a more capable provider. |
| Cost efficiency | Consumers can switch between providers to find a cheaper one, for example, by using only spot instances from different providers. |
| Reducing Service Level Agreement (SLA) violations | When resources must be scaled out, a cloud service provider (CSP) can reduce its penalties by renting resources from other federation members. |
| Provider independence | The consumer is not dependent on a single provider. |
| Contract ending | If a contract with one provider is about to end, there is no risk of service interruption. |

9.3 Architectural-Based Cloud Security Implementation

There are two kinds of servers at the architectural level of cloud security:

- Storage servers, such as the Simple Storage Service (S3)

- Operational servers, also known as computational servers

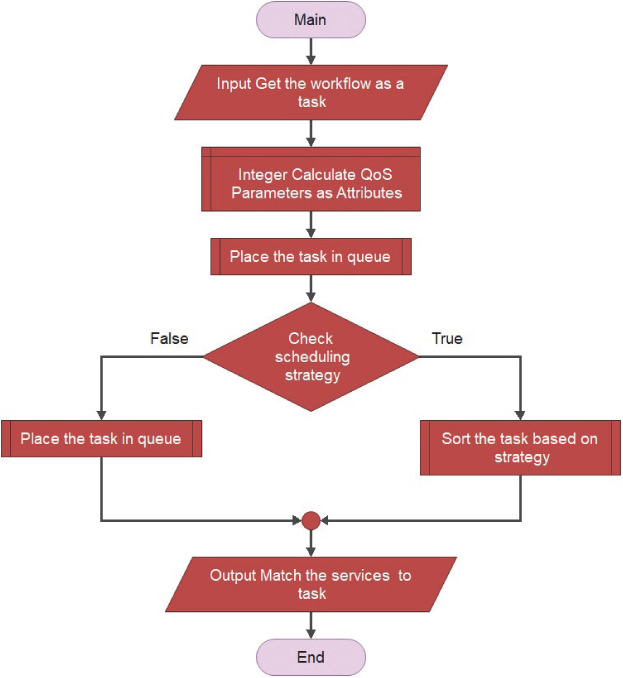

The storage server provides storage capacity and handles changes to the stored data. Figure 9.1 gives an overview of how MQMCE works.

Figure 9.1 Overview of MQMCE.

The sequence of communications from the client to the server is shown in Figure 9.2.

Figure 9.2 MQMCE scheduler process.

Finally, the process is divided into three stages, namely:

- Task Assignment.

- Task Scheduling.

- Task Execution.

Figure 9.2 shows the scheduling strategy; these three stages are necessary to meet the QoS requirements. Pre-processing is performed before a task is submitted. QoS specifies the minimum acceptable quality of service. Task execution describes the step-by-step procedure for performing a task on the framework. A workflow W(i) is represented by a 5-tuple:

$$W_i = \{\, T_{ij},\ TQ_i,\ CQ_i,\ Av_k,\ RLQ_i \,\} \qquad (9.1)$$

where,

- T(ij) is the number of constrained tasks of the workflow.

- TQ(i) is the time quota of the workflow.

- CQ(i) is the cost quota of the workflow.

- Av(k) is the overall availability of the services for the customer's requirements.

- RLQ(i) is the reliability quota of the application.
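One way to make the 5-tuple concrete is to encode it as a record and check a candidate placement against its quotas. The sketch below is illustrative: the class and field names are ours, Av(k) and RLQ(i) are treated as lower bounds, and the tasks are assumed to run as a chain, so times and costs add while availabilities and reliabilities multiply. The chapter does not state these aggregation rules.

```python
from dataclasses import dataclass
from math import prod
from typing import List

@dataclass
class Workflow:
    """One possible encoding of W(i) = {T(ij), TQ(i), CQ(i), Av(k), RLQ(i)}."""
    tasks: List[str]          # T(ij)
    time_quota: float         # TQ(i): upper bound on total time
    cost_quota: float         # CQ(i): upper bound on total cost
    min_availability: float   # Av(k): treated here as a lower bound
    reliability_quota: float  # RLQ(i): treated here as a lower bound

@dataclass
class Placement:
    """Estimated QoS of running one task on its chosen service (hypothetical values)."""
    time: float
    cost: float
    availability: float
    reliability: float

def meets_qos(wf: Workflow, placements: List[Placement]) -> bool:
    """Check a placement against the quotas, assuming the tasks form a chain."""
    if len(placements) != len(wf.tasks):
        raise ValueError("need exactly one placement per task")
    return (sum(p.time for p in placements) <= wf.time_quota
            and sum(p.cost for p in placements) <= wf.cost_quota
            and prod(p.availability for p in placements) >= wf.min_availability
            and prod(p.reliability for p in placements) >= wf.reliability_quota)

wf = Workflow(["t1", "t2"], time_quota=10, cost_quota=5,
              min_availability=0.99, reliability_quota=0.95)
plan = [Placement(4, 2.0, 0.999, 0.98), Placement(5, 2.5, 0.999, 0.99)]
print(meets_qos(wf, plan))  # True
```

Here the plan uses 9 of 10 time units and 4.5 of 5 cost units, with end-to-end reliability 0.98 × 0.99 = 0.9702; raising RLQ(i) to 0.98 would make the same plan infeasible.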

Let T be a set of tasks and S the set of available services. MQMCE plans the execution by matching each task with a suitable service so as to reduce turnaround time, reduce waiting time, improve response time, increase resource utilization, and reduce cost, all expressed in a single objective.
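Turnaround time and waiting time have standard definitions: turnaround is finish time minus arrival time, and waiting is turnaround minus run time. The helper below (its name and inputs are illustrative) computes both for tasks run in a given order on one service, and shows that the order alone changes the totals.

```python
def schedule_metrics(tasks):
    """For (arrival, run_time) tasks run in list order on one service
    (non-preemptive, first come first served), return (turnaround, waiting) per task."""
    clock = 0.0
    metrics = []
    for arrival, run in tasks:
        start = max(clock, arrival)  # the service may sit idle until the task arrives
        finish = start + run
        turnaround = finish - arrival
        metrics.append((turnaround, turnaround - run))
        clock = finish
    return metrics

# Hypothetical (arrival, run time) pairs; the two orders differ only in the last two tasks.
print(schedule_metrics([(0, 5), (1, 3), (2, 1)]))  # [(5.0, 0.0), (7.0, 4.0), (7.0, 6.0)]
print(schedule_metrics([(0, 5), (2, 1), (1, 3)]))  # [(5.0, 0.0), (4.0, 3.0), (8.0, 5.0)]
```

Running the short task earlier lowers total waiting time from 10 to 8 time units, which is the kind of gain a task-ordering policy aims for.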

(9.2)

(9.3)

- The number of services in the cloud is limited. Moreover, in general, the number of tasks waiting to be executed is larger than the number of services.

- Tasks belonging to the workflow with the least time surplus, cost surplus, and reliability surplus should be scheduled first, for the reason given above.

- The task with the smallest covariance should be scheduled first.

- Tasks are then executed in this order, subject to their reliability requirements, by the scheduling algorithm. In the MQMCE algorithm, two algorithms are used to map services to client requirements: task sorting and the scheduling strategy. The workflows are treated as tasks T and the resources as services S.

- Extract the information of every task; if a task among all the tasks is ready, denote that ready task as t.

- The procedure is repeated until all of the sorted tasks have been scheduled (Sort Task(S)).
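The sorting rules above can be sketched as follows. The chapter does not define the surpluses or the covariance precisely, so this sketch assumes that each task carries its workflow's time and cost surplus, that covariance is the population covariance between execution time and cost over the task's candidate services, and that ties are broken in that order; the reliability surplus is omitted for brevity. The greedy earliest-finish mapping step is likewise an illustrative stand-in for the scheduling algorithm, and all names and data are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class Task:
    name: str
    time_surplus: float                      # assumed: workflow time quota minus its estimated time
    cost_surplus: float                      # assumed: workflow cost quota minus its estimated cost
    options: Dict[str, Tuple[float, float]]  # candidate service -> (execution time, cost)

def covariance(task: Task) -> float:
    """Population covariance of execution time and cost across the task's candidate services."""
    pts = list(task.options.values())
    mt = sum(t for t, _ in pts) / len(pts)
    mc = sum(c for _, c in pts) / len(pts)
    return sum((t - mt) * (c - mc) for t, c in pts) / len(pts)

def sort_tasks(tasks: List[Task]) -> List[Task]:
    """Least time surplus first, then least cost surplus, then least covariance."""
    return sorted(tasks, key=lambda t: (t.time_surplus, t.cost_surplus, covariance(t)))

def schedule(tasks: List[Task]) -> List[Tuple[str, str]]:
    """Greedy mapping in sorted order: each task goes to the service on which it would
    finish earliest, given the work already assigned there (ties go to the cheaper one)."""
    free_at: Dict[str, float] = {}
    plan = []
    for task in sort_tasks(tasks):
        finish = {s: free_at.get(s, 0.0) + t for s, (t, _) in task.options.items()}
        service = min(finish, key=lambda s: (finish[s], task.options[s][1]))
        free_at[service] = finish[service]
        plan.append((task.name, service))
    return plan

tasks = [
    Task("t1", 5, 3, {"s1": (4, 2), "s2": (2, 5)}),
    Task("t2", 2, 4, {"s1": (3, 1), "s2": (1, 3)}),
    Task("t3", 5, 3, {"s1": (2, 2), "s2": (6, 1)}),
]
print([t.name for t in sort_tasks(tasks)])  # ['t2', 't1', 't3']
print(schedule(tasks))  # [('t2', 's2'), ('t1', 's2'), ('t3', 's1')]
```

Task t2 goes first because its workflow has the least time surplus; t1 and t3 tie on both surpluses, so the smaller covariance (-1.5 versus -1.0) places t1 ahead of t3.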

9.4 Expected Results of the Process

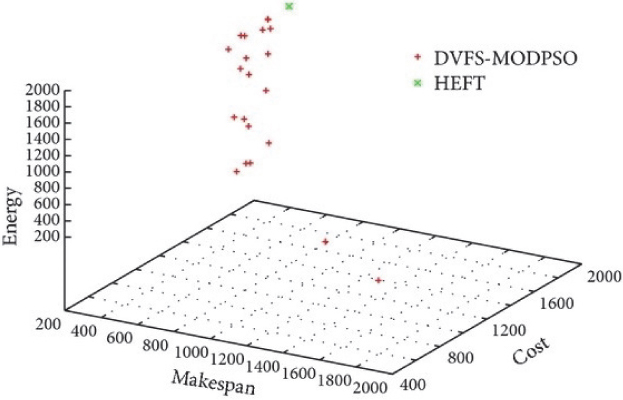

In our experiments, we limited the number of resources to 12, as we found that, for these kinds of workflow applications, some resources are not used at all. Figure 9.3 shows the resource loads when scheduling the synthetic workflow on 12 resources.

Figure 9.3 Obtained non-dominated solutions for the parallel workflow.

Moreover, even though the number of resources increased, the total cost and total energy consumption generally did not decrease, as shown in Figure 9.4.

Figure 9.4 Obtained non-dominated solutions for the hybrid workflow.

From this figure, we can see that the QoS metrics first decrease as the number of resources increases, until the number reaches 6. This can be explained by the fact that, as the number of resources increases, fewer tasks are executed on each resource; thus, tasks can extend their execution times, and the resources have more opportunity to scale down their voltages and frequencies, which can be very effective in reducing total energy consumption. After 6 resources, the QoS metrics start to rise, because execution times become dominated by inter-processor communication, which reduces the opportunities for scaling down the voltages and frequencies of resources. Therefore, the threshold number of resources that minimizes the QoS metrics can be determined.
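The energy argument above rests on dynamic voltage and frequency scaling (DVFS). Under the standard CMOS dynamic-power model P = C_eff · V² · f, a task of N cycles runs for N / f seconds, so its dynamic energy is C_eff · V² · N: lowering the voltage cuts energy quadratically even though the task takes longer. The sketch below applies that textbook model, which the chapter does not state explicitly; it ignores static (leakage) power, and the operating points and C_eff are illustrative values, not measurements.

```python
def dvfs_energy(cycles, voltage, freq_hz, c_eff=1e-9):
    """Estimate dynamic energy (J) and runtime (s) of a task under the CMOS model
    P = c_eff * V^2 * f. Runtime is cycles / f, so energy = c_eff * V^2 * cycles.
    Static (leakage) power is ignored; c_eff is an illustrative value."""
    runtime = cycles / freq_hz
    power = c_eff * voltage ** 2 * freq_hz
    return power * runtime, runtime

# Hypothetical operating points for one processor running a 2e9-cycle task.
e_high, t_high = dvfs_energy(2e9, voltage=1.2, freq_hz=2.0e9)
e_low, t_low = dvfs_energy(2e9, voltage=0.9, freq_hz=1.2e9)
print(f"high: {e_high:.2f} J in {t_high:.2f} s; low: {e_low:.2f} J in {t_low:.2f} s")
```

In this example the low operating point uses about 44% less dynamic energy but takes about two-thirds longer, so it pays off only when the schedule leaves enough slack, which is why communication-dominated schedules leave less room for savings.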

Figure 9.5 Obtained non-dominated solutions for the synthetic workflow.

9.5 Conclusion

Cloud technology has made it possible to provide anything as a service over the Internet. Meanwhile, different clients have different quality of service requirements, so a scheduling strategy should be developed for multiple workflows with different QoS requirements. The Multi-workflow QoS-Constrained Scheduling strategy achieves the client's various QoS targets, such as execution time and execution cost, while continuously scheduling the workflows. Existing algorithms focus only on cost, time, or both, but not on reliability and availability. The proposed algorithm satisfies multiple QoS parameters, namely time, cost, reliability, and availability, which allows scheduling to meet a client's particular QoS requirements in a single objective. In future work, a combination of MQMCE with MOGA will be used to improve these QoS metrics based on additional client requirements.

References

1. Yu, J., Buyya, R., & Tham, C. K. (2005, July). Cost-based scheduling of scientific workflow applications on utility grids. In e-Science and Grid Computing, 2005. First International Conference on (8 pp.). IEEE.

2. Salehi, M. A., Javadi, B., & Buyya, R. (2012). QoS and preemption aware scheduling in federated and virtualized Grid computing environments. Journal of Parallel and Distributed Computing, 72(2), 231-245.

3. Lin, C., & Lu, S. (2011, July). Scheduling scientific workflows elastically for cloud computing. In Cloud Computing (CLOUD), 2011 IEEE International Conference on (pp. 746-747). IEEE.

4. Liu, K., Jin, H., Chen, J., Liu, X., Yuan, D., & Yang, Y. (2010). A compromised-time-cost scheduling algorithm in SwinDeW-C for instance-intensive cost-constrained workflows on a cloud computing platform. The International Journal of High Performance Computing Applications, 24(4), 445-456.

5. Selvarani, S., & Sadhasivam, G. S. (2010, December). Improved cost-based algorithm for task scheduling in cloud computing. In Computational Intelligence and Computing Research (ICCIC), 2010 IEEE International Conference on (pp. 1-5). IEEE.

6. Xu, M., Cui, L., Wang, H., & Bi, Y. (2009, August). A multiple QoS constrained scheduling strategy of multiple workflows for cloud computing. In Parallel and Distributed Processing with Applications, 2009 IEEE International Symposium on (pp. 629-634). IEEE.

7. Parsa, S., & Entezari-Maleki, R. (2009). RASA: A new task scheduling algorithm in grid environment. World Applied Sciences Journal, 7(Special Issue of Computer & IT), 152-160.

8. Salehi, M. A., Javadi, B., & Buyya, R. (2012). QoS and preemption aware scheduling in federated and virtualized Grid computing environments. Journal of Parallel and Distributed Computing, 72(2), 231-245.

9. Buyya, R., Yeo, C. S., Venugopal, S., Broberg, J., & Brandic, I. (2009). Cloud computing and emerging IT platforms: Vision, hype, and reality for delivering computing as the 5th utility. Future Generation Computer Systems, 25(6), 599-616.

10. Zhao, H., & Sakellariou, R. (2006, April). Scheduling multiple DAGs onto heterogeneous systems. In Parallel and Distributed Processing Symposium, 2006. IPDPS 2006. 20th International (14 pp.). IEEE.

11. Yu, J., & Buyya, R. (2006, June). A budget constrained scheduling of workflow applications on utility grids using genetic algorithms. In Workflows in Support of Large-Scale Science, 2006. WORKS’06. Workshop on (pp. 1-10). IEEE.

12. Blythe, J., Jain, S., Deelman, E., Gil, Y., Vahi, K., Mandal, A., & Kennedy, K. (2005, May). Task scheduling strategies for workflow-based applications in grids. In Cluster Computing and the Grid, 2005. CCGrid 2005. IEEE International Symposium on (Vol. 2, pp. 759-767). IEEE.

13. Liu, K., Jin, H., Chen, J., Liu, X., Yuan, D., & Yang, Y. (2010). A compromised-time-cost scheduling algorithm in SwinDeW-C for instance-intensive cost-constrained workflows on a cloud computing platform. The International Journal of High Performance Computing Applications, 24(4), 445-456.

14. Salehi, M. A., Javadi, B., & Buyya, R. (2012). QoS and preemption aware scheduling in federated and virtualized Grid computing environments. Journal of Parallel and Distributed Computing, 72(2), 231-245.

15. Lin, C., & Lu, S. (2011, July). Scheduling scientific workflows elastically for cloud computing. In Cloud Computing (CLOUD), 2011 IEEE International Conference on (pp. 746-747). IEEE.

16. Liu, K., Jin, H., Chen, J., Liu, X., Yuan, D., & Yang, Y. (2010). A compromised-time-cost scheduling algorithm in SwinDeW-C for instance-intensive cost-constrained workflows on a cloud computing platform. The International Journal of High Performance Computing Applications, 24(4), 445-456.

17. Selvarani, S., & Sadhasivam, G. S. (2010, December). Improved cost-based algorithm for task scheduling in cloud computing. In Computational Intelligence and Computing Research (ICCIC), 2010 IEEE International Conference on (pp. 1-5). IEEE.

18. Xu, M., Cui, L., Wang, H., & Bi, Y. (2009, August). A multiple QoS constrained scheduling strategy of multiple workflows for cloud computing. In Parallel and Distributed Processing with Applications, 2009 IEEE International Symposium on (pp. 629-634). IEEE.

19. Mandal, A., Kennedy, K., Koelbel, C., Marin, G., Mellor-Crummey, J., Liu, B., & Johnsson, L. (2005, July). Scheduling strategies for mapping application workflows onto the grid. In HPDC (pp. 125-134). IEEE.

20. Yu, J., Buyya, R., & Tham, C. K. (2005, July). Cost-based scheduling of scientific workflow applications on utility grids. In e-Science and Grid Computing, 2005. First International Conference on (8 pp.). IEEE.