Our investigation of application design has led us to dependency injection in various forms and working with environments such as web applications, data persistence, filesystems, and GUIs. The techniques we've examined help us tie together the otherwise disparate parts into cohesive modules that are easy to test and maintain.

Such scenarios typically call for the use of utility libraries that facilitate better application design. When you work with persistence, you use an ORM tool like Hibernate. When you work with service clusters, it may be Oracle Coherence.

One of the major impediments to smooth integration between a dependency injector and a given library is that many of these libraries already provide a minimal form of IoC. In other words, they provide some means, usually half baked, to construct and wire objects with dependencies. Generally, these are specific to their areas of need, such as a pluggable connection manager in the case of a persistence engine or a strategy for listening to certain events in the case of a graphical user interface GUI.

Most of the time these solutions focus on only a very specific area and ignore broader requirements for testing and component encapsulation. These typically come with restrictions that make testing and integration difficult—and downright impossible in certain cases. In this chapter, we'll show how this lack of architectural foresight can lead to very poor extensibility solutions and how, with a little bit of thought, a library can be very flexible and easy to integrate with. We'll study popular frameworks that have made the wrong choice by either hiding too much of the component model or by creating their own.

We'll now do a short analysis of how frameworks create fragmentation by each creating its own partial dependency injection idiom.

Nearly every application these days does some form of DI. That is, it moves part of its object construction and wiring responsibilities off to configured library code. This can take many forms. In the Java Servlet framework, a very primitive form of this is evident with the registration of servlets and filters in web.xml, as shown in listing 10.1.

Example 10.1. web.xml is a precursor to solutions using DI

<?xml version="1.0" encoding="UTF-8"?>

<web-app version="2.4"

xmlns="http://java.sun.com/xml/ns/j2ee"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://java.sun.com/xml/ns/j2ee

http://java.sun.com/xml/ns/j2ee/web-app_2_4.xsd" >

<filter>

<filter-name>webFilter</filter-name>

<filter-class>

com.wideplay.example.RequestPrintingFilter

</filter-class>

</filter>

<filter-mapping>

<filter-name>webFilter</filter-name>

<url-pattern>/*</url-pattern>

</filter-mapping>

<servlet>

<servlet-name>helloServlet</servlet-name>

<servlet-class>

com.wideplay.example.servlets.HelloServlet

</servlet-class>

</servlet><servlet-mapping>

<servlet-name>helloServlet</servlet-name>

<url-pattern>/hi/*</url-pattern>

</servlet-mapping>

</web-app>In listing 10.1, we have a web.xml configuration file for the servlet container that configures a single web application with

The filter is a subclass of javax.servlet.Filter and performs the simple task of tracing each request by printing its contents. It's configured with the <filter> tag as follows:

<filter>

<filter-name>webFilter</filter-name>

<filter-class>com.wideplay.example.RequestPrintingFilter</filter-class>

</filter>This is reminiscent of Spring's <bean> tag, where a similar kind of configuration occurs. By analogy that might be:

<bean id="webFilter" class="com.wideplay.example.RequestPrintingFilter"/>

Similarly, there's a <servlet> tag that allows you to map a javax.servlet.http.HttpServlet to a string identifier and URL pattern. The essential difference between this solution and that offered by Spring, Guice, or any other dependency injector is that the servlet framework does not allow you to configure any dependencies for servlets or filters. This is a rather poor state of affairs, since we can virtually guarantee that any serious web application will have many dependencies.

This configuration is fairly restrictive on the structure of servlets (and filters):

A servlet must have a public, nullary (zero-argument) constructor.

A servlet class must itself be public and be a concrete type (rather than an interface or abstract class).

A servlet's methods cannot be intercepted for behavior modification (see chapter 8).

A servlet must effectively have singleton scope.

We can already see that this is getting to be an inexorable set of restrictions on the design of our application. If all servlets are singletons with nullary constructors, then testing them is very painful.[34] As a result of this and other deficiencies in the programming model, many alternative web frameworks have arisen on top of the servlet framework. These aim to simplify and alleviate the problems portended by the restrictiveness of the servlet programming model, not the least of which has to do with DI (see table 10.1 for a comparison of these solutions).

Table 10.1. Java web frameworks (based on servlet) and their solutions for DI<br></br>

Out-of-box solution | Website | ||

|---|---|---|---|

| [a] | |||

| [b] | |||

| [c] | |||

Custom factory, no DI | Partially through plug-in.[a] |

| |

Custom factory, no DI | Fully, depending on plug-in. |

| |

Extension integrates Spring through HiveMind lookups. |

| ||

Apache Tapestry 5 | TapestryIoC injector | Partially via extension.[b] |

|

JavaServer Faces 1.2 | Built-in, very limited DI[c] | Extension integrates variable lookups in Spring. |

|

Spring IoC | Pretty much tied to Spring. |

| |

Google Guice | Integrates Spring fully via Guice's Spring module. |

| |

[a] I say partially because Wicket's page objects cannot be constructed by DI libraries (and are therefore constructor injected), even with plug-ins. [b] Tapestry's Spring integration requires special annotations when looking up Spring beans. There is no official support for Guice. | |||

As you can see from table 10.1, there isn't a standard programming model for integrating dependency injectors with libraries based around servlets. Only Apache Struts2 and Google Sitebricks fully integrate Guice and Spring.

The situation doesn't get much better with other types of libraries either. The standard EJBs programming model provides its own form of dependency injection that supports direct field injection and basic interception via the javax.interceptor library. Direct field injection, as we discussed in chapter 3, is very difficult to test and mandates the presence of nullary constructors, which also means that controlling scope is not an option—all stateless session EJBs (service objects) exist for the duration of a method call. Stateful session EJBs are somewhat longer lived, as you saw in chapter 7. But these too are scoped outside your direct control and only marginally longer lived.

Why are we at such a sorry circumstance? Frameworks ought to simplify our lives, not complicate them. The reasons are numerous, but essentially it boils down to the fact that we are only recently learning the value of testability, loose-coupling, scope, and the other benefits ascribed to DI. Moreover, the awareness of how to go about designing with these principles in mind is still maturing. Guice is only a little over two years old itself. PicoContainer's and Spring's modern incarnations are even more recent than that.

That having been said, let's look at what future frameworks and libraries can do to avoid these traps and prevent this sort of unnecessary fragmentation.

Most of the critical problems with integration can be avoided if framework designers keep one fundamental principle in mind: testability. This means that every bit of client and service code that a framework interacts with ought to be easy to test. This naturally leads to broader concepts like loose coupling, scoping, and modular design. Library components should be easily replaced with mock counterparts. And pluggable functionality should not mandate unnecessary restrictions on user code (servlets requiring a public, nullary constructor, for example).

Replacement with mocks is at once the most essential and most overlooked feature in framework designs. While most frameworks are themselves rigorously tested, they often fail to consider that it is equally important that client code be conducive to testing. This means considering the design of client code as much as the design of APIs and of implementation logic. Frameworks designed with such a leaning often turn out to be easier to use and understand, since there are fewer points of integration, and these are naturally more succinct. Another significant problem is encapsulation—you saw this in the previous chapter when considering the design of your classes. The more public classes you expose, the more danger there is that clients will begin to extend and use them. If you want to make major changes to your framework's architecture or internal design, this process is difficult if your users are heavily tied to several parts of your framework. The Spring Framework's SpringMVC suffers from this. Every single class is public and nonfinal. This means users can bind to any of this code, and it can never change without breaking a lot of client applications.

Even many of Spring's modules extend and use other parts of the framework. This is why people find it very difficult to use Spring modules outside the Spring injector. Spring Security is a classic example of this—many of its components rely strongly on Spring's lifecycle system and on JavaBeans property editors, which make it difficult to port for use with any other dependency injection system or even to use by hand.

Forcing users to extend framework base classes for functionality is also problematic because it blurs the lines between a dependency and a parent class. Composition is a preferable option, because a delegate is easily replaced with a mock or stub for unit and integration testing. Parent class methods are harder to mock and therefore less conducive to testing.

These problems can be classified broadly into three categories:

Those that prevent testing or make it very difficult

Those that restrict functionality by subverting interception and scope

Those that make integration difficult or impossible (the most egregious)

None of these is particularly unavoidable or even necessary, especially if you carefully consider them early in the life of your framework.

Let's start by looking at an egregious instance, the rigid configuration anti-pattern, which makes both testing and integration difficult.

Most frameworks provide some form of customization of behavior. This is really a major part of their appeal—for example, Hibernate allows you to use a variety of databases, connection pools, transaction strategies, and so on, taking the basic behavior of the library to the breadth of various use cases. Often this is done via the use of external resource bundles such as .properties or XML files. Most of this configuration is generally about specifying a certain amount or type of something (max_connections=.. ; or, timeout=.. ; or, enable_logging=true; and so on). So this kind of configuration is appropriate.

But sometimes libraries also provide pluggable services. They allow you to customize behavior by writing small components, usually adhering to a library interface, and plugging them in. Sometimes they are called plug-ins, other times extensions. But essentially the idea is the same—they are a user-provided dependency of the framework. It is when these plug-ins are configured that things often go wrong in framework integration. Many frameworks will use the same configuration mechanism (XML file or resource bundle) to specify the plug-in. Generally this, too, takes the form of a string name/value pair such as

extensibility.plugin=com.example.MyPlugin

The property extensibility.plugin identifies which plug-in component is being set. On the right side is the name of the user-provided class. On the surface this looks like a clean approach. It is concise, easy to understand, and specifies the needed extension nicely. On closer inspection it reveals several problems that make it both difficult and unwieldy to test and integrate.

TYPE UNSAFE CONFIGURATION ANTI-PATTERN

The gravest of these is probably the disregard for type-safety. Since resource bundles are stored as raw strings, there's no way to verify that the information is present in its appropriate form. You could easily misspell or mistype the configuration parameter or even leave it out completely.

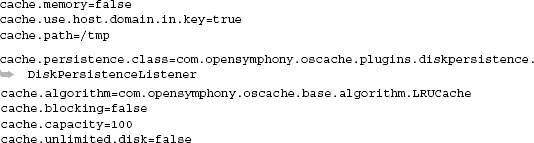

OSCache is a framework that provides real-time caching services for applications. OSCache stores its configuration in a resource bundle named oscache.properties. This is loaded by the cache controller when it is started up and then configured. A sample configuration file for OSCache is shown in listing 10.2.

In listing 10.2, we are shown a cache configuration that sets several routine options, such as whether or not to use a disk cache

cache.path=/tmp

and where to store the files for this disk cache, its capacity, interaction model, and so on:

cache.path=/tmp ... cache.blocking=false cache.capacity=100 cache.unlimited.disk=false

And then, there's one interesting line right in the middle that specifies the kind of caching service to use. In this case it's OSCache's own DiskPersistenceListener:

The problem with this is immediately obvious—we have no knowledge when coding this property of whether OSCache supports a DiskPersistenceListener. It isn't checked by the compiler. A simple but all-too-common misspelling goes undetected and leaves you with a runtime failure:

This is very similar to the issues with string identifiers (keys) we encountered in chapter 2. Furthermore, even if it is spelled correctly, things may not work as expected unless it implements the correct interface:

package com.example.oscache.plugins;

public class MyPersistenceListener {

...

}MyPersistenceListener is a custom plug-in I've written to persist cache entries in a storage medium of my choice. We can configure OSCache by specifying the property thusly:

cache.persistence.class=com.example.oscache.plugins.MyPersistenceListenerThis is incorrect because MyPersistenceListener doesn't implement PersistenceListener from OSCache. This error goes undetected until runtime, when the OSCache engine attempts to instantiate MyPersistenceListener and use it. The correct code would be

package com.example.oscache.plugins;

import com.opensymphony.oscache.base.persistence.PersistenceListener;public class MyPersistenceListener implements PersistenceListener {

...

}Now, you may be saying, this is only one location where things can go wrong and a bit of extra vigilance is all right. You could write an integration test that will detect the problem—a somewhat verbose but passable solution if used sparingly. But now consider what happens if you misspell the left-hand side of the same configuration property:

cache.persistance.class=com.example.oscache.plugins.MyPersistenceListener(Persistence is spelled with an e.)

In this case, not only is there no compile-time check, but any sanity check will completely miss the error! This is because property cache.persistence.class, if not explicitly set, will automatically use a default value. Everything appears to be fine, and the configuration throws no errors even in an integration test because you're freely allowed to set as many unknown properties as you like, with no regard to their relevance. Adding values for cache.persistence.class, jokers.friend.is.batman, and my.neck.hurts are all valid properties that a resource bundle won't complain about.

This is a bad state of affairs, since even extra vigilance can let you down. It also leads programmers to resort to things like copying and pasting known working configurations from previous projects or from tutorials.

UNWARRANTED CONSTRAINTS ANTI-PATTERN

This kind of name/value property mapping leads to strange restrictions on user code, particularly, the plug-in code we have been studying—our plug-in is specified using a fully qualified class name:

cache.persistence.class=com.example.oscache.plugins.MyPersistenceListenerThe implication here is that MyPersistenceListener must implement interface PersistenceListener (as we saw just earlier) but also that MyPersistenceListener must have a public nullary constructor that throws no checked exceptions:

package com.example.oscache.plugins;

import com.opensymphony.oscache.base.persistence;

public class MyPersistenceListener implements PersistenceListener {

public MyPersistenceListener() {

}

...

}This necessity arises from the fact that OSCache uses reflection to instantiate the MyPersistenceListener class. Reflective code is used to read and manipulate objects whose types are not immediately known. In our case, there's no source code in OSCache that's aware of MyPersistenceListener, yet it must be usable by the original OSCache code in order for plug-ins to work. Using reflection, OSCache is able to construct instances of MyPersistenceListener (or any class named in the property) and use them for persistence. Listing 10.3 shows an example of reflective code to create an instance of an unknown class.

Example 10.3. Creating an object of an unknown class via reflection

String className = config.getProperty("cache.persistence.class");

Class<?> listener;

try {

listener = Class.forName(className);

} catch (ClassNotFoundException e) {

throw new RuntimeException("failed to find specified class",

e);

}

Object instance;

try {

instance = listener.getConstructor().newInstance();

} catch (InstantiationException e) {

throw new RuntimeException("failed to instantiate listener",

e);

} catch (IllegalAccessException e) {

throw new RuntimeException("failed to instantiate listener",

e);

} catch (InvocationTargetException e) {

throw new RuntimeException("failed to instantiate listener",

e);

} catch (NoSuchMethodException e) {

throw new RuntimeException("failed to instantiate listener",

e);

}Let's break down this example. First, we need to obtain the name of the class from our configuration property:

String className = config.getProperty("cache.persistence.class");This is done using a hypothetical config object, which returns values by name from a resource bundle. The string value of our plug-in class is then converted to a java.lang.Class, which gives us reflective access to the underlying type:

Class<?> listener;

try {

listener = Class.forName(className);

} catch (ClassNotFoundException e) {

throw new RuntimeException("failed to find specified class",

e);

}Class.forName() is a method that tries to locate and load the class by its fully qualified name. If it is not found in the classpath of the application, an exception is thrown and we terminate abnormally:

try {

listener = Class.forName(className);

} catch (ClassNotFoundException e) {

throw new RuntimeException("failed to find specified class",

e);

}Once the class is successfully loaded, we can try to create an instance of it by obtaining its nullary constructor and calling the method newInstance():

Object instance;

try {

instance = listener.getConstructor().newInstance();

} catch (InstantiationException e) {

throw new RuntimeException("failed to instantiate listener ",

e);

} catch (IllegalAccessException e) {

throw new RuntimeException("failed to instantiate listener", e);

} catch (InvocationTargetException e) {

throw new RuntimeException("failed to instantiate listener", e);

} catch (NoSuchMethodException e) {

throw new RuntimeException("failed to instantiate listener",

e);

}There are four reasons why creating the instance may fail. And this is captured by the four catch clauses in the previous example:

InstantiationException—The specified class was really an interface or abstract class.IllegalAccessException—Access visibility from the current method is insufficient to call the relevant constructor.InvocationTargetException—The constructor threw an exception before it completed.NoSuchMethodException—There is no nullary constructor on the given class.

If none of these four cases occurs, the plug-in can be created and used properly by the framework. Looked at from another point of view, these four exceptions are four restrictions on the design of plug-ins for extending the framework. In other words, these are four restrictions placed on your code if you want to integrate or extend OSCache (or any other library that uses this extensibility idiom):

You must create and expose a concrete class with

publicvisibility.This class must also have a

publicconstructor.This class should not throw checked exceptions.

It must have a nullary (zero-argument) constructor, which is

public.

Apart from the restriction of not throwing checked exceptions, these seem to be fairly restrictive. As you saw in the chapter 9, in the section "Objects and design," it's quite desirable to hide implementation details in package-local or private access and expose only interfaces and abstract classes. As you've seen throughout this book, both immutability and testing suffer[35] when you're unable to set dependencies via constructor, since you can't declare them final and they're hard to swap out with mocks.

Without serious contortions like the use of statics and/or the singleton anti-pattern, we are left with a plug-in that can't benefit from DI. Not only does this spell bad weather for testing, but it also means we lose many other benefits such as scoping and interception.

CONSTRAINED LIFECYCLE ANTI-PATTERN

Without scoping or interception, your plug-in code loses much of the handy extra functionality provided by integration libraries like warp-persist and Spring. A persistence listener for cache entries can no longer take advantage of warp-persist's @Transactional interceptor, which intercedes in storage code and wraps tasks inside a database transaction. You're forced to write extra code to create and wrap data actions inside a managed database transaction. This similarly applies to other AOP concerns that we encountered in chapter 8, such as security and execution tracing.

Security, transaction, and logging code can no longer be controlled from one location (the interceptor) with configurable matchers and pointcuts. This leads to a fragmentation of crosscutting code, which adds to the maintenance overhead of your architecture.

Scoping is similarly plagued since the one created instance of your plug-in is automatically a singleton. Services that provide contextual database interactivity can no longer be used, since they are dependencies created and managed by an injector that's unavailable to plug-in code. Data actions we perform routinely, like opening a session to the database and storing or retrieving data around that session, cannot be performed directly. This also means that sessions cannot be scoped around individual HTTP requests.

These are all unwarranted constraints placed on user code by a rigid configuration system.

Similarly, a class of anti-patterns that I classify as black box design makes integration and testing extremely difficult. I examine some of these in the following section.

Another commonly seen symptom of poor design in integration or framework interoperability is the tendency toward black box systems. A black box system is something that completely hides how it works to the detriment of collaborators. This is not to be confused with the beneficial practice of encapsulation, which involves hiding implementation details to prevent accidental coupling.

Black box systems don't so much hide the specific logic of their behaviors as they hide the mechanism by which they operate. Think of this as a runtime analog rigid configuration anti-pattern. Black box anti-patterns allow you to test in very limited ways, and they prevent the use of certain design patterns and practices we've examined by the excessive use of static fields, or abstract base-class functionality.

FRAGILE BASE CLASS ANTI-PATTERN

Many programmers are taught to use inheritance as a means of reusing code. Why rewrite all this great code you've done before? This is a noble enough idea in principle. When applied using class inheritance, it can lead to odd and often confounding problems. The first of these problems is the fragile base class. When you create a subclass to share functionality, you're creating a tight coupling between the new functionality and the base class. The more times you do this, the more tight couplings there are to the base class.

If you're the world's first perfect programmer, this isn't an issue. You would write the perfect base class that never needed to change, even if requirements did, and it would contain all the possible code necessary for its subclasses' evolution. Never mind that it would be enormous and look horrible.

But if you're like everyone else, changes are coming. And they are likely to hurt a lot. Changing functionality in the base class necessitates changing the behavior of all classes that extend it. Furthermore, it's very difficult to always design a correctly extensible base class. Consider the simple case of a queue based on a array-backed list:

This is a pretty simple class. Queue uses ArrayList to represent items it stores. When an item is enqueued (added to the back of the list), it is inserted at index 0 in the ArrayList:

public void enqueue(I item) {

super.add(0, item);

end++;

}We increment the end index, so we know what the length of the queue is currently. Then when an item is removed from the queue, we decrement the end index and return it:

public I dequeue() {

if (end == −1) return null;

end—;

return super.get(end + 1);

}We need to return the item at (end + 1) because this is the last item in the list (pointed at by end, before we decremented it). So far, so good; we saved ourselves a lot of array insertion and retrieval code, but now look at what happens when we start using this class in unintended ways:

Queue<String> q = new Queue<String>();

q.enqueue("Muse");

q.enqueue("Pearl Jam");

q.clear();

q.enqueue("Nirvana");

System.out.println(q.dequeue());We expect this program to print out "Nirvana" and leave the queue in an empty state. But really it throws an IndexOutOfBoundsException. Why? It's the fragile base class problem—we've used a method directly from the base class, ArrayList, which clears every element in the queue. This seemed natural enough at the time. However, since Queue doesn't know about method clear(), except via its base class, it doesn't update index end when the list is emptied. When dequeue() is called, end points at index 2 since enqueue() has been called three times. In reality, end should be pointing at index 0 since there's only one item in the queue.

This has left the queue in a corrupt state, even though the underlying ArrayList is functioning as expected. How do we fix this? We could override method clear() and have it reset the end index:

public class Queue<I> extends ArrayList<I> {

private int end = −1;

public void enqueue(I item) {

super.add(0, item);

end++;

}

public I dequeue() {

if (end == −1) return null;

end—;

return super.get(end + 1);

}

@Override

public void clear() {

end = −1;

super.clear();

}

}That's certainly an option, but what about other methods that ArrayList provides? Should we override everything? If we do, we're certainly assured that the behavior will be correct, but then we've gained nothing from the inheritance. In fact, we've probably overridden a few methods that have no place in a Queue data structure anyway (such as get(int index), which fetches entries by index). We'd have been much better off making the ArrayList a dependency of Queue and using only those parts of it that we require, as shown in listing 10.4.

Example 10.4. Replacing inheritance with delegation in class Queue

public class Queue<I> {

private int end = −1;

private final ArrayList<I> list = new ArrayList<I>();

public void enqueue(I item) {

list.add(0, item);

end++;

}

public I dequeue() {

if (end == −1) return null;

end--;

return list.get(end + 1);

}

}Listing 10.4 shows how replacing inheritance with delegation helps solve the fragile base class problem with a much more resilient design pattern. Now we can easily add functionality to the Queue with no danger of unintentionally leaking ArrayList functionality:

public class Queue<I> {

private int end = −1;

private final ArrayList<I> list;

public Queue(ArrayList<I> list) {

this.list = list;

}

public void enqueue(I item) {

list.add(0, item);

end++;

}

public I dequeue() {

if (end == −1) return null;

end--;

return list.get(end + 1);

}

public void clear() {

end = −1;

list.clear();

}

}And now we can use it safely:

Queue<String> q = new Queue<String>(new ArrayList<String>());

q.enqueue("Muse");

q.enqueue("Pearl Jam");

q.clear();

q.enqueue("Nirvana");

System.out.println(q.dequeue());

assert null == q.dequeue();This code correctly prints out "Nirvana" and leaves the queue empty, and it's a more satisfying solution.

Several open source frameworks make liberal use of abstract base classes to share functionality with little or no regard for the abstract base class problem. Another problem with base classes for sharing code is that they cannot be replaced with a mock object. This makes testing them tricky, especially when you have more than one level of inheritance. The Apache CXF framework has some classes that sport four and five levels of inheritance. Isolating problems can be very difficult in such cases.

While these problems are pretty rough, they're not all insurmountable. Good design can overcome them and lead to the same amount of flexibility with no loss of testing or integration capability. In the following section we'll look at how programmatic configuration can make life easier.

The solution to rigid configuration and black box anti-patterns is surprisingly simple: programmatic configuration. Rather than shoehorn plug-in configuration via the rather lean path of resource bundles (.properties, XML, or flat text files), programmatic configuration takes the attitude that configuring plug-ins is the same as DI.

If you start to look at the plug-in as a dependency of the framework, then it becomes simple and natural to write integration between frameworks and injectors, between frameworks and tests, and indeed between various frameworks.

Bean Validation is the common name for an implementation of the JSR-303[36] specification. Bean Validation is an initiative of the Java Community Process to create a flexible standard for validating data model objects via declarative constraints.

Listing 10.5 shows a typical data model object with annotations representing the declarative constraints acted on by the JSR-303 runtime.

Example 10.5. A data model object representing a person

public class Person {

@Length(max=150)

private String name;@After("1900-01-01")

private Date bornOn;

@Email

private String email;

@Valid

private Address home;

public void setName(String name) {

this.name = name;

}

public Date getBornOn() {

return bornOn;

}

public void setBornOn(Date bornOn) {

this.bornOn = bornOn;

}

public String getEmail() {

return email;

}

public void setEmail(String email) {

this.email = email;

}

public Address getHome() {

return home;

}

public void setHome(Address home) {

this.home = home;

}

}

public class Address {

@NotNull

@Length(max=200)

private String line1;

@Length(max=200)

private String line2;

@Zip(message="Zipcode must be five digits exactly")

private String zipCode;

@NotNull

private String city;

@NotNull

private String country;

public String getLine1() {

return line1;

}

public void setLine1(String line1) {

this.line1 = line1;}

public String getLine2() {

return line2;

}

public void setLine2(String line2) {

this.line2 = line2;

}

public String getZipCode() {

return zipCode;

}

public void setZipCode(String zipCode) {

this.zipCode = zipCode;

}

public String getCity() {

return city;

}

public void setCity(String city) {

this.city = city;

}

public String getCountry() {

return country;

}

public void setCountry(String country) {

this.country = country;

}

}Listing 10.5 is of class Person, which represents a person in a hypothetical roster. Each of Person's fields is tagged with an annotation representing a constraint to be applied on instances of the object. Some have an optional message attribute, which can be used to customize the error message reported on validation failure:

@Zip(message="Zipcode must be five digits exactly")

private String zipCode;If all the constraints pass, then the instance is considered valid:

Person lincoln = new Person();

lincoln.setName("Abraham Lincoln");

lincoln.setBornOn(new Date());

lincoln.setEmail("[email protected]");

Address address = new Address();

address.setLine1("1600 Pennsylvania Ave");

address.setZipCode("51245");

address.setCity("Washington, D.C.");

address.setCountry("USA");

lincoln.setHome(address);

List<InvalidValue> errors = validator.validate(lincoln);

System.out.println(String.format("There were %d error(s)", errors.size()));On running this code, you'll see an output of how many errors there were (there were none). Each of the constraints on the code is a custom annotation that we've made up for the purposes of this demonstration. The constraints to which these annotations are attached are determined by the annotation declaration itself. Listing 10.6 illustrates some of the annotations we've used in listing 10.5's example of Person and Address data classes.

Example 10.6. Custom annotations that act as constraint-plug-in "configurators"

import java.lang.reflect.ElementType

import java.lang.reflect.RetentionPolicy

@Target(ElementType.FIELD)

@Retention(RetentionPolicy.RUNTIME)

@ConstraintValidator(ZipConstraint.class)

public @interface Zip {

String message() default "Zip invalid";

}

@Target(ElementType.FIELD)

@Retention(RetentionPolicy.RUNTIME)

@ConstraintValidator(LengthConstraint.class)

public @interface Length {

int min();

int max();

}

@Target(ElementType.FIELD)

@Retention(RetentionPolicy.RUNTIME)

@ConstraintValidator(NotNullConstraint.class)

public @interface NotNull {

String message() default "Cannot be null";

}The immediate thing that is apparent from listing 10.6 is that each of these annotations refers to a real validator. In the case of @Zip, it refers to class ZipConstraint:

@Target(ElementType.FIELD)

@Retention(RetentionPolicy.RUNTIME)

@ConstraintValidator(ZipConstraint.class)

public @interface Zip {

String message() default "Zip invalid";

}Similarly, @Length, which validates that fields have a minimum and maximum length, is bound to the LengthConstraint class:

@Target(ElementType.FIELD)

@Retention(RetentionPolicy.RUNTIME)

@ConstraintValidator(LengthConstraint.class)

public @interface Length {

int min();

int max();

}Essentially, this is JSR-303's plug-in configuration mechanism. You use the class name in a meta-annotation, to specify which validator plug-in it should use. This is a type-safe meta-annotation that prevents you from registering anything but a subtype of java.beans.validation.Constraint, which the validation framework expects.

This is simple and yet quite powerful. We mitigate the problems of misspelling the class name and property name and of specifying the wrong plug-in type all in one stroke. Furthermore, JSR-303 doesn't mandate the use of reflection to create instances of user plug-ins. Unlike the recalcitrants we encountered earlier in this chapter, JSR-303 doesn't have a rigid configuration mechanism and so places no restrictions on your plug-in code.

This is achieved by providing the runtime with a user-written ConstraintFactory. This is a simple interface that JSR-303 runtimes use to obtain plug-in instances from your code:

/**

* This class manages the creation of constraint validators.

*/

public interface ConstraintFactory {

/**

* Instantiate a Constraint.

*

* @return Returns a new Constraint instance

* The ConstraintFactory is <b>not</b> responsible for calling

Constraint#initialize

*/

<T extends Constraint> T getInstance(Class<T> constraintClass);

}To take advantage of a dependency injector, you simply register an instance of ConstraintFactory that obtains Constraints from the injector. Here's a very simple Guice-based implementation that creates an injector and uses it to produce constraints when called on by the JSR-303 runtime:

public class GuiceConstraintFactory implements ConstraintFactory {

private final Injector injector = Guice.createInjector(new MyModule());

public <T extends Constraint> T getInstance(Class<T> constraintKey) {

return injector.getInstance(constraintKey);

}

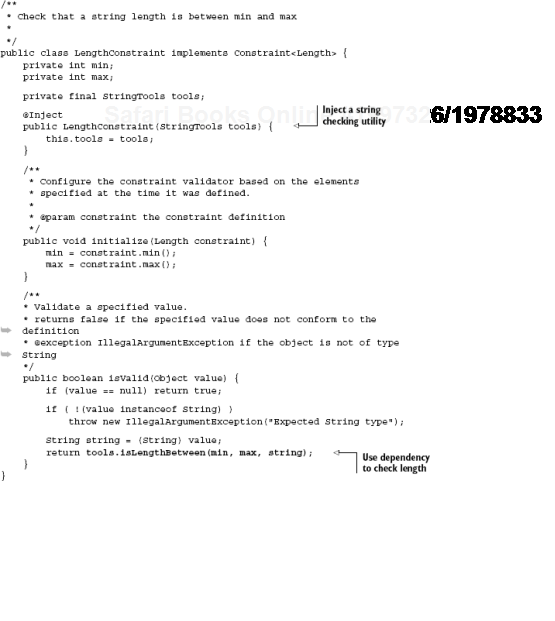

}This allows you to create constraint plug-ins with their own dependencies and lifecycle, and they can be capable of interception if needed. Let's take a look at an example of the LengthConstraint, which was tied to the @Length annotation, ensuring a maximum length for strings in listing 10.7.

In listing 10.7, LengthConstraint is dependency injected with StringTools, a reusable utility that does the dirty work of checking string length for us. We're able to take advantage of Guice's constructor injection because of the flexibility that JSR-303's ConstraintFactory affords us:

public class LengthConstraint implements Constraint<Length> {

private int min;

private int max;

private final StringTools tools;

@Inject

public LengthConstraint(StringTools tools) {

this.tools = tools;

}

...

}LengthConstraint dutifully ensures that it's working with a String before passing this on to its dependency to do the hard yards. LengthConstraint is itself configured using an interface method initialize() that provides it with the relevant annotation instance:

/**

* Configure the constraint validator based on the elements

* specified at the time it was defined.

*

* @param constraint the constraint definition

*/

public void initialize(Length constraint) {

min = constraint.min();

max = constraint.max();

}Recall that we set this value in the Person class for field name to be between 0 and 150 (see listing 10.5 for the complete Person class):

public class Person {

@Length(max=150)

private String name;

...

}Now, every time the validator runs over an instance of Person,

Person lincoln = new Person();

lincoln.setName("Abraham Lincoln");

...

Address address = new Address();

...

lincoln.setHome(address);

List<InvalidValue> errors = validator.validate(lincoln);the LengthConstraint plug-in we wrote will be run. Being flexible in providing us an extension point with the ConstraintFactory and the @ConstraintValidator meta-annotation, JSR-303 rids itself of all the nasty perils of rigid configuration internals. It encourages user code that's easy to test, read, and maintain. And it allows for maximum reuse of service dependencies without any of the encumbering ill effects of static singletons or black box systems.

JSR-303 is an excellent lesson for framework designers who are looking to make their libraries easy to integrate and elegant to work with.

In this chapter we looked at the burgeoning problem of integration with third-party frameworks and libraries. Most of the work in software engineering today is done via the use of powerful third-party frameworks, many of which are designed and provided by open source communities such as the Apache Software Foundation or OpenSymphony.

Many companies (like Google and ThoughtWorks) are releasing commercially developed libraries as open source for the good of the community at large.

Leveraging these frameworks within an application is an often tedious and difficult task. They're generally designed with a specific type of usage in mind, and integrating them is often a matter of some complexity. The better-designed frameworks provide simple, flexible, and type-safe configuration options that allow you to interact with the framework as you would with any one of your services. Extending such frameworks is typically done via the use of pluggable user code that conforms to a standard interface shipped along with the library. Frameworks that sport rigid configuration such as via the use of resource bundles (.properties, XML, or flat text files) cause problems because they place undue restrictions on the classes written for plug-in extensions. Because they are simple strings in a text file, these class names are also prone to misspelling and improper typing and are forced to be publicly accessible.

Moreover, since they're instantiated using reflection, these plug-in classes must have at least one public constructor that accepts no arguments (a nullary constructor). This immediately places unwarranted constraints on your code—you cannot declare fields final in your plug-in class. Not only does this have grave consequences for visibility, but it also makes your classes hard to test and swap out with mocked dependencies. The restriction on who creates your plug-in class also means that your code cannot take advantage of any of the other benefits of dependency injection. Lifecycle, scoping, and AOP interception must all be given up. This is particularly egregious in a scenario where your plug-in uses the same kind of services that your application does, for instance, a database-backed persistence system. Transactions, database connectivity, and security must all be managed independently from the application by your plug-in code.

The alternative is to share services via the use of static state and the singleton anti-pattern. This has negative consequences for testing, as you saw in chapter 5.

A well-designed framework, on the other hand, is cognizant of the testability mantra and allows you to configure plug-ins programmatically; that is, within the application itself. JSR-303 is a validation framework that allows you to create constraint plug-ins that are tied to declarative constraints placed on data model objects. These plug-ins are applied universally based on annotation metadata and help specify the validity of a data object. JSR-303's constraint plug-ins are created and configured via the use of a custom ConstraintFactory interface. ConstraintFactory is a plug-in factory that you provide, which creates instances of the actual constraint plug-ins for JSR-303's runtime.

Programmatic configuration means that your plug-in configuration code is type-safe, contract-safe, and well-defined within the bounds of the framework's usage parameters. It also means that you can control the creation and wiring of your own code, giving it dependencies, scoping, and rigorous testability. This makes it open to all the great benefits of dependency injection that you've so come to love!

In the next chapter you'll apply many of the patterns you've learned up to this point and create a fully functional, running demo application.

[34] See the problems with testing singletons described in chapter 1 and more comprehensively in chapter 5.

[35] For a thorough examination of this, see chapter 4's investigation of testability and chapter 5's section "The singleton anti-pattern."

[36] Find out more about JSR-303 at http://jcp.org/en/jsr/detail?id=303.