15

Cloud Communication: Different Security Measures and Cryptographic Protocols for Secure Cloud Computing

Anjana Sangwan

Swami Keshvanand Institute of Technology, Gramothan and Management, Jaipur, India

Abstract

In this chapter, cloud communication and the various platforms used for it are introduced. The security measures provided by the cloud are also discussed, along with some well-known protocols for data encryption that establish secure communication in the cloud. Some of these protocols, such as Secure Shell (SSH), Internet Protocol Security (IPSec), Kerberos, Wired Equivalent Privacy (WEP), and Wi-Fi Protected Access (WPA), are outlined, together with how they have been used effectively for secure communication in the cloud.

Keywords: Cloud communication, platform, security, encryption, communication, protocol

15.1 Introduction

Cloud communications is the mix of multiple communication modalities, such as voice, email, chat, and video, integrated to reduce or eliminate communication lag. Cloud communications is fundamentally internet-based communication. The technology has taken the world to a new level and has turned it into a global village: the Internet has shrunk distances to the point that people in different countries can now see and talk with each other through cloud communications.

It originated with the introduction of Voice over Internet Protocol (VoIP). One branch of cloud communication is cloud telephony, which refers specifically to voice communications.

Storage, applications, and switching are managed and hosted by a third party through the cloud. Cloud services are a broader concept than cloud communication: these services act as the primary data center for enterprises, and cloud communications is one of the services offered by cloud service providers. Cloud communications therefore provides a variety of communication resources, from servers and storage to enterprise applications such as data security, email backup and data recovery, and voice, all delivered over the Internet. The cloud provides a hosting environment that is elastic, scalable, secure, and readily available.

Cloud communications providers [1] deliver communication services through servers that they own and maintain. Users access these services through the cloud and pay only for the services they use. The service providers also offer diverse communication resources, from storage and servers to enterprise applications such as email, data recovery, backup, voice, and security. The environment provided by the cloud is readily available, adaptable, flexible, responsive, and secure.

Enterprise applications and communication services covered by cloud communications include private branch exchange (PBX), call center, text messaging, SIP trunking, voice broadcast, call tracking software, contact center telephony, interactive voice response (IVR), and fax services. Together these services meet the different communication needs of an enterprise, including intra- and inter-branch communication, customer relations, inter-department memos, call forwarding, conferencing, tracking services, and operations centers.

Cloud communications is central for enterprises because it is the hub for all communications, which are managed, hosted, and maintained by third-party service providers. The enterprise pays fees for the services provided to it.

15.2 Need for Cloud Communication

Many organizations today agree that the cloud is not only a powerful, flexible, and reliable platform for office productivity applications, but also an equally compelling platform for business communications solutions, including phone, fax, voice, short message service (SMS) text, and video conferencing. Add the ability to integrate business communications solutions directly into Office 365, Salesforce, ServiceNow, and other cloud-based Software as a Service (SaaS) solutions, and the business benefits are enormous. However, there is a gap between the capabilities of communications systems that support human and machine conversation and the demands of modern commerce as enabled by cloud technology. Bridging this gap offers a substantial business opportunity. The following points show why many organizations are moving to the cloud [2]:

- Cost—Expected monthly costs. This may seem like old news, but many companies do not realize just how much they can save by moving their communications to the cloud. With a phone system delivered over the Internet, businesses are billed on an “as needed” basis, paying only for what they use. That makes cloud-based communication systems highly cost-effective for small businesses, eliminating the need to pay for the installation and maintenance of a traditional phone system.

- Management—Outsource IT support to the provider. Managing an on-premise solution can be very expensive. Because of the complexity of today’s communications systems, it can often take an entire IT department to manage one. Cloud-based communications can help lighten the burden by reducing maintenance, IT workload, and some of the more costly internal infrastructure, including servers and storage systems.

- Scalability—Scale up or down based on users. Anyone who has moved or expanded an on-premise phone system knows just how difficult it can be. Whether a business is growing, moving, or downsizing, the cloud provides the flexibility and scalability the business needs now and in the future. With cloud-based systems, businesses can access and add new features without any new hardware requirements.

- Vendor management—One vendor for everything. With cloud communications, a vendor manages communication systems off-site, and IT departments are freed up to focus on other high-priority issues.

- Technology—Quick updates. With cloud communications, service is outsourced, and upgrades are delivered through automatic software updates. This allows organizations to stay focused on their business and leave the upgrades to the cloud communications vendor.

- Quality of service—Maximize uptime and coverage. For many businesses, uptime is crucial. To keep things running, they rely on the ability to scale, to leverage remote work teams, or to serve customers from anywhere. For these businesses, cloud communications maximizes uptime and coverage through multiple, remotely hosted data centers, helping them avoid costly interruptions and downtime.

- Affordable Redundancy—Leverage shared resources. With an on-premise communication system, hardware and software geographic redundancy can be challenging to deliver. But when multiple businesses share resources in a cloud environment, they get access to a level of redundancy that would be too expensive to procure with an on-premises solution.

- Disaster recovery—Business continuity made easy. Businesses are using the cloud to protect themselves from the effects of disasters. With cloud communications, they can get up and running quickly after a disaster, or in some cases, continue running the entire time. Some reroute calls to remote locations and cell phones. Others rely on remote access to voicemail or use cloud-based auto attendants to continue taking calls and providing information. It is a hard-to-resist combination of reliability, resiliency, and redundancy.

- Simplicity—Easy to use interface. With a cloud-based interface, it is easy for employees to talk, chat, collaborate, and connect anytime through a single platform.

- Mobility—Feature-rich mobile integration. Many businesses need to keep their teams connected and communicating efficiently even when they are miles, states, or even countries apart. With cloud-based unified communications, remote workers have access to the full feature set from their mobile devices anywhere they go, just as if they were sitting at their desks. It opens up a whole new world of productivity and possibilities.

15.3 Application

“Cloud computing” is taking hold: 69% of all Internet users have either stored data online or used a web-based software application.

Customers who use webmail services, store data online, or use software programs such as word processing applications hosted on the web are making use of “cloud computing,” an emerging architecture in which data and applications are stored in cyberspace so that authorized users can access them through any web-connected device. Cloud telephony services were predominantly used for business processes, such as advertising, e-commerce, human resources, and payments processing.

With the advancement of remote computing technology, the once-clear lines between cloud and web applications have blurred. The term “cloud application” has gained great cachet, sometimes leading vendors of any application with an online component to brand it as a cloud application.

Both cloud and web applications access data residing on remote storage, and both use server processing power that may be located on premises or in a distant data center [3].

A key difference between cloud and web applications is architecture. A web application or web-based application must have an unbroken internet connection to function. Conversely, a cloud application or cloud-based application performs processing tasks on a local computer or workstation. An internet connection is required primarily for downloading or uploading data.

A web application is unusable if the remote server is inaccessible. If the remote server becomes unavailable in a cloud application, the software installed on the local user device can still operate, although it cannot upload and download data until service at the remote server is restored.
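The architectural difference described above can be sketched as a small model. It assumes the text's premise that a cloud application processes data locally and needs the network only to upload; `RemoteStore` and `CloudStyleEditor` are hypothetical names standing in for a provider's storage service and a local client, not any real product's API.

```python
from collections import deque


class RemoteUnavailable(Exception):
    """Raised by the hypothetical remote store when it cannot be reached."""


class RemoteStore:
    """Stand-in for a provider's storage service (hypothetical)."""

    def __init__(self) -> None:
        self.online = True
        self.files: dict[str, str] = {}

    def upload(self, name: str, content: str) -> None:
        if not self.online:
            raise RemoteUnavailable(name)
        self.files[name] = content


class CloudStyleEditor:
    """Edits locally; uploads are queued while the remote server is down."""

    def __init__(self, remote: RemoteStore) -> None:
        self.remote = remote
        self.local: dict[str, str] = {}
        self.pending: deque[str] = deque()

    def save(self, name: str, content: str) -> None:
        self.local[name] = content          # local work always succeeds
        self.pending.append(name)
        self.sync()

    def sync(self) -> None:
        """Push queued files; stop quietly if the server is still unreachable."""
        while self.pending:
            name = self.pending[0]
            try:
                self.remote.upload(name, self.local[name])
            except RemoteUnavailable:
                return                      # keep working offline, retry later
            self.pending.popleft()
```

A web application, by contrast, would fail at the `save` step itself, because its processing happens on the unreachable server.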

The difference between cloud and web applications can be illustrated with two common productivity tools, email and word processing. Gmail, for example, is a web application that requires only a browser and internet connection. Through the browser, it is possible to open, write, and organize messages using search and sort capabilities. All processing logic occurs on the servers of the service provider (Google, in this example) via either the internet’s HTTP or HTTPS protocols.

A CRM application accessed through a browser under a fee-based software as a service (SaaS) arrangement is a web application. Online banking and daily crossword puzzles are also considered web applications that do not install software locally.

15.4 Cloud Communication Platform

A cloud communication platform provides information technology services and products to users on demand. It delivers all data and voice communication services through a third party outside the organization, and it is accessed over the public Internet infrastructure.

CPaaS stands for Communications Platform as a Service. A CPaaS is a cloud-based platform that enables developers to add real-time communications features (voice, video, and messaging) in their own applications without needing to build backend infrastructure and interfaces.

Traditionally, real-time communications (RTC) have taken place in applications built specifically for these functions. For example, you might use your native mobile phone app to dial your bank, but have you ever wondered why you cannot video chat a representative right in your banking app?

These dedicated RTC applications (the traditional phone, Skype, FaceTime, WhatsApp, etc.) have been the paradigm for a long time because it is costly to build and operate a communications stack, from the real-time network infrastructure to interfaces for common programming languages.

CPaaS offers a complete development framework for building real-time communications features without having to build your own. This typically includes software tools, standards-based application programming interfaces (APIs), sample code, and pre-built applications. CPaaS providers also provide support and product documentation to help developers throughout the development process. Some companies also offer software development kits (SDKs) and libraries for building applications on different desktop and mobile platforms [4].

Communications platform as a service (CPaaS) is a cloud-based delivery model that allows organizations to add real-time communication capabilities such as voice, video, and messaging to business applications by deploying application programming interfaces (APIs). The communication capabilities delivered by APIs include Short Message Service, Multimedia Messaging Service, telephonic communication, and video.
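To make the API-based model concrete, here is a minimal sketch of how an application might ask a CPaaS to send an SMS over HTTPS using Python's standard library. The endpoint URL, the JSON field names, and the Basic-authentication scheme are hypothetical placeholders, not any particular provider's API; each real provider documents its own. The function only builds the request, so it can be inspected without network access.

```python
import base64
import json
import urllib.request

# Hypothetical CPaaS endpoint; real providers each define their own URL and fields.
API_URL = "https://api.example-cpaas.com/v1/messages"


def build_sms_request(account_id: str, api_token: str,
                      to: str, sender: str, body: str) -> urllib.request.Request:
    """Build (but do not send) an HTTPS request asking the CPaaS to send an SMS."""
    payload = json.dumps({"to": to, "from": sender, "body": body}).encode("utf-8")
    # Assumed auth scheme: HTTP Basic with account ID and API token.
    credentials = base64.b64encode(f"{account_id}:{api_token}".encode()).decode("ascii")
    return urllib.request.Request(
        API_URL,
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/json",
        },
    )
```

Sending the request would be `urllib.request.urlopen(req)`, which raises `urllib.error.HTTPError` for error responses. The point of the CPaaS model is that this small call replaces building and operating the telephony stack behind it.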

15.5 Security Measures Provided by the Cloud

Cloud communication providers offer security provisions, such as data encryption and authentication protocols, to help protect communication in the cloud.

Some factors that ensure cloud security:

- ➢ Cloud Protection is Not a Network Protection

A common misconception is that cloud service providers deliver complete security for cloud communications. While this may be true for the applications residing in the cloud, it does not cover the network used, call flows, media, or endpoints that are not in the cloud. When implementing cloud communications, a company must determine what is secured by the service provider and what must be secured on the business end.

- ➢ Real-Time Security for Your Network

Most companies deploy firewalls to protect their data; however, firewalls do not provide real-time protection for communications traffic. Session Initiation Protocol (SIP), the IP-based signaling protocol used for VoIP communication, operates in real time, setting up the voice and video sessions that pass between the cloud and the network. Leaving SIP communications unsecured increases the risk of real-time VoIP attacks, such as Denial of Service (DoS) and eavesdropping. While a firewall protects data flows, it is not adequate for protecting VoIP communication, because you may have to turn off firewall features to get voice and video communications to work, thus opening your network to potential attacks [5].

Adding a session border controller (SBC) to the servers that connect to the cloud will increase cloud communication security at the network end. An SBC is a SIP firewall that protects and encrypts real-time communication by:

- ➢ Protecting Against Denial of Service Attacks

DoS attacks overwhelm the network with unwanted traffic in an attempt to find weaknesses in the VoIP system. An SBC protects your network by separating VoIP traffic from malicious activity and shielding it from the degradation in quality that frequently occurs during a DoS attack.

- ➢ Encryption

SBCs use secure real-time encryption, making communication unreadable to eavesdroppers.

- ➢ IP Traffic Management

An SBC can regulate voice traffic on a network by limiting the number of sessions that may take place at the same time. This is similar to DoS protection, and it helps ensure Quality of Service.

- ➢ Toll Fraud Protection

Many attackers break into a VoIP system only to make toll calls at the victim’s expense, but an SBC can deny secondary dial tones and prevent this type of attack.
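The IP traffic management function in this list amounts to admission control: count active sessions and refuse new ones beyond a cap. A minimal sketch, assuming a hypothetical `SessionLimiter` class keyed by SIP call ID; a real SBC applies such limits per trunk, per source, and per destination, alongside the other protections listed.

```python
import threading


class SessionLimiter:
    """Admit at most max_sessions concurrent calls (hypothetical SBC-style policy)."""

    def __init__(self, max_sessions: int) -> None:
        self.max_sessions = max_sessions
        self.active: set[str] = set()
        self._lock = threading.Lock()       # calls may arrive on several threads

    def admit(self, call_id: str) -> bool:
        """Return True if the call may start, False if it should be rejected."""
        with self._lock:
            if call_id in self.active:
                return True                 # already admitted (e.g. a retransmission)
            if len(self.active) >= self.max_sessions:
                return False                # over capacity: reject, e.g. with SIP 503
            self.active.add(call_id)
            return True

    def release(self, call_id: str) -> None:
        """Free the slot when the call ends (e.g. on SIP BYE)."""
        with self._lock:
            self.active.discard(call_id)
```

Because a flood of call attempts cannot push the session count past the cap, the same mechanism limits the damage of a signaling-level DoS attack.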

15.6 Achieving Security With Cloud Communications

When using a cloud communications service provider, you should establish a security plan and determine your responsibilities versus the provider’s responsibilities. Also, use and regularly update antivirus and anti-malware software locally, and keep your softphones and other endpoints updated. Finally, adding SBCs at all sites that connect to the cloud will not only protect the SIP call flow but also help ensure that high-quality voice and video are delivered.

It is important to recognize that secure transmission is only one component of IP-based communications security. While your cloud communications partner can provide secure transmission, you must also protect your endpoints and network to achieve complete security.

Cloud communications is changing the way businesses communicate. Its uses range from cloud PBX, as a more adaptable backup to legacy on-premise PBX systems, to true unified communications that integrate channels such as voice, fax, conferencing, SMS, and team messaging into one cloud-based service, which gave birth to Unified Communications-as-a-Service (UCaaS).

With all these improvements in cloud communications, Gartner predicted that unified communications spending would grow at a 3.1% CAGR through 2020. There is considerable optimism about the rise of the cloud in business communications.

One possible concern is, of course, security. It is not that enterprises fail to recognize the benefits of moving to the cloud; it is that the idea of having conversations and other sensitive data pass through the public Internet is unsettling, and if you own a business, big or small, it should concern you as well [6].

If you plan to move your communications to the cloud, realize that you are placing an enormous amount of trust in your UCaaS provider, because it will be responsible for safeguarding your data as it passes from your end through its data centers and the public Internet. So before choosing a provider, be sure that you are well-versed in cloud security and multi-layered defense.

Here are some of the things your next UCaaS provider should give you details about when it comes to cloud security:

- Safe and secure data center. The UCaaS provider should have a physically secure location with redundant power and reliable disaster recovery procedures. Ideally, it has multiple data centers in different locations to maximize redundancy, so that when one data center goes down, another can take over. Security and reliability should also be backed by independent certifications.

- Encrypted data including voice. All data that passes through the provider’s network should be encrypted in transit and at rest. This, of course, includes voice data. Voice traffic should be encrypted to prevent eavesdropping or any form of hacking during the flow of any data.

- User access control and account administration. Providers should also give you a way to control user access, including defining user roles and permissions. The company or its administrators should also be able to downgrade and revoke this access in certain situations, such as when a user leaves the company. The vendor should also enforce a strong password policy and, if possible, two-factor authentication. A nice plus would be a single sign-on feature to prevent log-in fatigue among employees and users.

- Fraud prevention measures. The UCaaS provider should have protections against fraud risks such as toll fraud and credential theft. Continuous monitoring for anomalies and other fraud indicators should be a must, and the provider should promote anti-fraud best practices to all its clients. None of this is meant to dissuade anyone from moving to cloud communications. It is meant to make companies aware that, while there are many benefits, moving to the cloud has its own security risks, and their provider of choice should not only be aware of these risks but also implement countermeasures to protect its subscribers.
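Two-factor authentication, recommended in the access-control item above, is commonly implemented with time-based one-time passwords (TOTP, RFC 6238), the scheme used by typical authenticator apps. The sketch below uses only Python's standard library; the function names are illustrative, and the shared secret is assumed to have been provisioned when the user enrolled.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                          # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP of the current time step."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify_totp(key: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept a code from the current step or +/- `window` steps (clock drift)."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(key, now + i * step, step, digits), submitted)
        for i in range(-window, window + 1)
    )
```

Authenticator apps usually exchange the secret as a Base32 string, which `base64.b32decode` converts into the key bytes. Using `hmac.compare_digest` for the comparison avoids leaking timing information about how many digits matched.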

15.7 Cryptographic Protocols for Secure Cloud Computing

Cryptography in the cloud employs encryption techniques to protect data that will be used or stored in the cloud. It allows users to conveniently and securely access shared cloud services, as any data hosted by cloud providers is protected with encryption. Cryptography in the cloud protects sensitive data without delaying information exchange.

Cryptography in the cloud allows for securing critical data beyond your corporate IT environment, where that data is no longer under your control. Cryptography expert Ralph Spencer Poore explains that “information in motion and information at rest are best protected by cryptographic security measures. In the cloud, we don’t have the luxury of having actual, physical control over the storage of information, so the only way we can ensure that the information is protected is for it to be stored cryptographically, with us maintaining control of the cryptographic key.”

The application of cryptography in cloud computing addresses four basic security requirements: non-repudiation, authentication, integrity, and confidentiality. Cloud computing provides what industry experts call a distributed computing environment consisting of a series of heterogeneous components, including firmware, networking, software, and hardware [7].

The benefits of cloud computing are being realized by more companies and organizations every day. Cloud computing gives clients a virtual computing infrastructure on which they can store data and run applications. But, cloud computing has introduced security challenges because cloud operators store and handle client data outside of the reach of clients’ existing security measures. Various companies are designing cryptographic protocols tailored to cloud computing in an attempt to effectively balance security and performance.

Most cloud computing infrastructures do not provide security against untrusted cloud operators, which poses a challenge for companies and organizations that need to store sensitive, confidential information such as medical records, financial records, or high-impact business data. As cloud computing continues to grow in popularity, there are many cloud computing companies and researchers who are pursuing cloud cryptography projects in order to address the business demands and challenges relating to cloud security and data protection.

There are various approaches to extending cryptography to cloud data. Many companies choose to encrypt data prior to uploading it to the cloud altogether. This approach is beneficial because data is encrypted before it leaves the company’s environment, and data can only be decrypted by authorized parties that have access to the appropriate decryption keys. Other cloud services are capable of encrypting data upon receipt, ensuring that any data they are storing or transmitting is protected by encryption by default. Some cloud services may not offer encryption capabilities, but at the very least should use encrypted connections such as HTTPS or SSL to ensure that data is secured in transit.
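The encrypt-before-upload approach works because the key never leaves the client. Confidentiality requires a cipher such as AES-GCM, which Python's standard library does not provide, so the sketch below illustrates the other half of the same idea with the standard `hmac` module. The client tags each object with a key it keeps locally and checks the tag after download, which detects any modification by the provider or an attacker. The `seal`/`unseal` names and the tag-prefix layout are assumptions for illustration.

```python
import hashlib
import hmac

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag


def seal(key: bytes, data: bytes) -> bytes:
    """Prefix data with an HMAC-SHA256 tag computed on the client before upload."""
    tag = hmac.new(key, data, hashlib.sha256).digest()
    return tag + data


def unseal(key: bytes, blob: bytes) -> bytes:
    """Verify the tag after download; raise if the stored object was altered."""
    tag, data = blob[:TAG_LEN], blob[TAG_LEN:]
    expected = hmac.new(key, data, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("integrity check failed: object was modified")
    return data
```

The key would be generated locally, for example with `os.urandom(32)`, and never sent to the provider. This provides integrity and authentication, but not non-repudiation, which needs asymmetric digital signatures.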

15.8 Transport Layer Security (TLS)

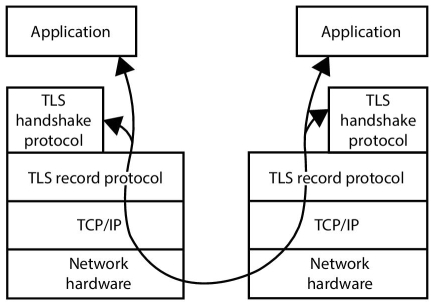

Transport Layer Security (TLS), as used in cloud computing, is a protocol that allows client-server applications, including those running in virtualized environments, to communicate securely across a network, protecting against eavesdropping, tampering, and message forgery.
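With Python's standard `ssl` module, a TLS client that verifies the server certificate and hostname can be set up as follows. This is a minimal sketch; the TLS 1.2 floor is an assumed policy choice rather than a requirement stated in the text.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """TLS client settings: verify certificate and hostname, allow TLS 1.2+."""
    ctx = ssl.create_default_context()           # loads the system CA certificates
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2 # refuse older protocol versions
    return ctx


def fetch_tls_version(host: str, port: int = 443) -> str:
    """Open a TLS connection and report the negotiated protocol version."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        # server_hostname enables SNI and hostname checking against the certificate
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()
```

Calling `fetch_tls_version("example.com")` requires network access and returns a version string such as `"TLSv1.3"`. If the certificate does not validate, `wrap_socket` raises `ssl.SSLCertVerificationError` instead of silently continuing.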

We briefly discuss the following protocols:

- Secure Shell Protocol

The SSH protocol (also referred to as Secure Shell) is a method for secure remote login from one computer to another. It provides several alternative options for strong authentication, and it protects the security and integrity of communications with strong encryption. It is a secure alternative to unprotected login protocols (such as telnet and rlogin) and insecure file transfer methods (such as FTP).

- Typical Uses of the SSH Protocol

The protocol is used in corporate networks for:

- providing secure access for users and automated processes

- interactive and automated file transfers

- issuing remote commands

- managing network infrastructure and other mission-critical system components.

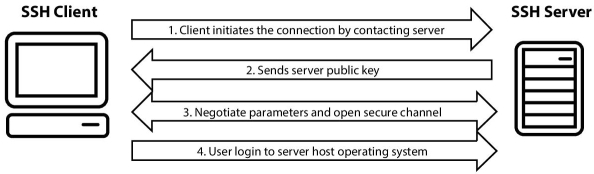

- How Does the SSH Protocol Work

The protocol works in the client-server model, which means that the connection is established by the SSH client connecting to the SSH server. The SSH client drives the connection setup process and uses public key cryptography to verify the identity of the SSH server. After the setup phase, the SSH protocol uses symmetric encryption and hashing algorithms to ensure the privacy and integrity of the data exchanged between the client and server.

The accompanying figure presents a simplified setup flow of a secure shell connection.
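The client's check of the server's identity is commonly done on a trust-on-first-use basis. The first time a client connects, it records the server's host key (OpenSSH keeps these in `~/.ssh/known_hosts`), and on later connections it refuses to continue if the key has changed. The sketch below models only that bookkeeping with a hypothetical `KnownHosts` class; it does not implement the SSH handshake or signature verification. Recording unknown keys automatically resembles OpenSSH's `StrictHostKeyChecking accept-new` setting, whereas OpenSSH's default is to ask the user.

```python
class KnownHosts:
    """Hypothetical trust-on-first-use store mapping host names to host keys."""

    def __init__(self) -> None:
        self.keys: dict[str, bytes] = {}

    def check(self, host: str, host_key: bytes) -> str:
        """Return "new" or "match"; raise if the server presents a different key."""
        stored = self.keys.get(host)
        if stored is None:
            self.keys[host] = host_key      # first contact: remember the key
            return "new"
        if stored == host_key:
            return "match"                  # same server identity as before
        # A changed key may mean the server was reinstalled, or that someone is
        # intercepting the connection (man-in-the-middle), so refuse to continue.
        raise ConnectionError(f"host key for {host} has changed")
```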

- Strong Authentication With SSH Keys

There are several options that can be used for user authentication. The most common ones are passwords and public key authentication.

The public key authentication method is primarily used for automation and sometimes by system administrators for single sign-on. It has turned out to be far more widely used than originally anticipated. The idea is to have a cryptographic key pair (a public key and a private key), configure the public key on a server to authorize access, and grant access to the server to anyone who has a copy of the private key. The keys used for authentication are called SSH keys. Public key authentication is also used with smartcards, such as the CAC and PIV cards used by the US government.

The main use of key-based authentication is to set up secure automation. Automated secure shell file transfers are used to seamlessly integrate applications and also for automated systems and configuration management [8].

Large organizations often have far more SSH keys than they realize, and managing SSH keys has become very important. SSH keys grant access just as user names and passwords do, so they require similar provisioning and termination processes.

In some customer environments, several million SSH keys have been found authorizing access to production servers, with 90% of the keys actually unused and representing access that was provisioned but never terminated. Ensuring proper protocols, processes, and review for SSH usage is therefore critical for proper identity and access management; traditional identity management projects have overlooked as much as 90% of all credentials by ignoring SSH keys.
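Acting on this problem starts with an inventory of which authorized keys are actually used. A minimal sketch, assuming simple `authorized_keys` lines of the form `type base64-key comment` (lines with leading options are skipped, so a real audit needs a fuller parser) and a set of fingerprints already collected from authentication logs, since sshd's "Accepted publickey" log messages typically include one. The fingerprint format matches the `SHA256:` form printed by recent OpenSSH `ssh-keygen -l`; the function names are hypothetical.

```python
import base64
import hashlib

KEY_TYPE_PREFIXES = ("ssh-", "ecdsa-", "sk-")


def fingerprint(b64_blob: str) -> str:
    """OpenSSH-style SHA256 fingerprint: base64 of SHA-256, padding stripped."""
    digest = hashlib.sha256(base64.b64decode(b64_blob)).digest()
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def parse_authorized_keys(text: str) -> dict[str, str]:
    """Map fingerprint -> comment for each simple key line."""
    keys = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(maxsplit=2)
        if len(fields) < 2 or not fields[0].startswith(KEY_TYPE_PREFIXES):
            continue                  # options prefix or malformed: needs a fuller parser
        comment = fields[2] if len(fields) == 3 else ""
        keys[fingerprint(fields[1])] = comment
    return keys


def unused_keys(authorized: dict[str, str], used: set[str]) -> dict[str, str]:
    """Keys that are authorized but never appeared in the authentication logs."""
    return {fp: c for fp, c in authorized.items() if fp not in used}
```

The result is a list of candidate keys for review and removal, which is the "termination" step the text notes is usually missing.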

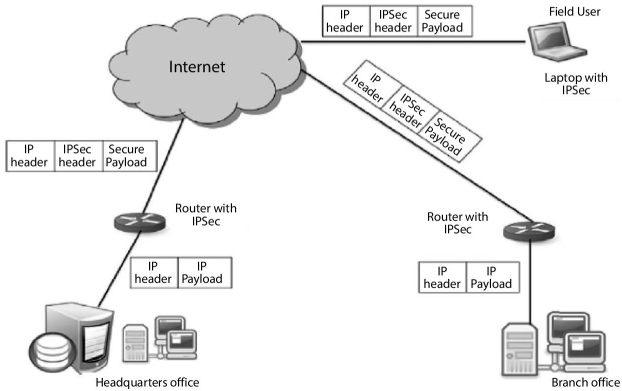

15.9 Internet Protocol Security (IPSec)

IPsec is designed for use with both current versions of the Internet Protocol, IPv4 and IPv6. When a system is using IPsec, IPsec protocol headers are included with the IP header, where they appear as IP header extensions.

The most important protocols considered a part of IPsec include:

- The IP Authentication Header (AH), specified in RFC 4302, defines an optional packet header to be used to guarantee connectionless integrity and data origin authentication for IP packets, and to protect against replays.

- The IP Encapsulating Security Payload (ESP), specified in RFC 4303, defines an optional packet header that can be used to provide confidentiality through encryption of the packet, as well as integrity protection, data origin authentication, access control and optional protection against replays or traffic analysis.

- Internet Key Exchange (IKE), defined in RFC 7296, “Internet Key Exchange Protocol Version 2 (IKEv2),” is a protocol defined to allow hosts to specify which services are to be incorporated in packets, which cryptographic algorithms will be used to provide those services, and a system for sharing the keys used with those cryptographic algorithms.

- Previously defined on its own, the Internet Security Association and Key Management Protocol (ISAKMP) is now specified as part of the IKE protocol specification. ISAKMP defines how Security Associations (SAs) are set up and used to define direct connections between two hosts that are using IPsec. Each SA defines a connection, in one direction, from one host to another; a pair of hosts would be defined by two SAs. The SA includes all relevant attributes of the connection, including the cryptographic algorithm being used, the IPsec mode being used, encryption key and any other parameters related to the transmission of data over the connection.
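The one-SA-per-direction rule above can be represented with a small data structure. This sketch uses a hypothetical `SecurityAssociation` dataclass whose fields are a simplified subset of what an implementation stores; the random keys stand in for keying material that IKE would actually negotiate.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class SecurityAssociation:
    """One-directional IPsec SA: traffic from src to dst under these parameters."""
    spi: int            # Security Parameter Index carried in each AH/ESP packet
    src: str
    dst: str
    protocol: str       # "ESP" or "AH"
    mode: str           # "tunnel" or "transport"
    cipher: str         # illustrative label only, e.g. "AES-GCM-256"
    key: bytes


def sa_pair(a: str, b: str,
            cipher: str = "AES-GCM-256") -> tuple[SecurityAssociation, SecurityAssociation]:
    """Two hosts need two SAs, one per direction, each with its own SPI and key."""
    def new_sa(src: str, dst: str) -> SecurityAssociation:
        spi = int.from_bytes(os.urandom(4), "big") | 0x100   # SPIs 0-255 are reserved
        key = os.urandom(32)                                 # placeholder for IKE keys
        return SecurityAssociation(spi, src, dst, "ESP", "tunnel", cipher, key)
    return new_sa(a, b), new_sa(b, a)
```

Keeping separate keys per direction means that compromising or exhausting one direction's SA does not affect traffic flowing the other way.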

Numerous other protocols and algorithms use or are used by IPsec, including encryption and digital signature algorithms, and most related protocols are described in RFC 6071, “IP Security (IPsec) and Internet Key Exchange (IKE) Document Roadmap.”

15.9.1 How IPsec Works

The first step in the process of using IPsec occurs when a host determines that a packet should be transmitted using IPsec. This may be done by checking the IP address of the source or destination against policy configurations to determine whether the traffic should be considered “interesting” for IPsec purposes. Interesting traffic triggers the security policy for the packets, which means that the system sending the packet applies the appropriate encryption and/or authentication to the packet. When an incoming packet is determined to be “interesting,” the host verifies that the inbound packet has been encrypted and/or authenticated properly.
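Deciding whether traffic is "interesting" is a lookup against policy selectors. The sketch below assumes hypothetical `PolicyRule` and `classify` names and matches only on source and destination networks. The three actions mirror the PROTECT, BYPASS, and DISCARD actions of the IPsec architecture (RFC 4301); real selectors can also match protocols and ports. Unmatched traffic is discarded here as a conservative default.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class PolicyRule:
    """Hypothetical selector: traffic between these networks gets this action."""
    src_net: str
    dst_net: str
    action: str          # "protect" (apply IPsec), "bypass", or "discard"


def classify(rules: list[PolicyRule], src: str, dst: str) -> str:
    """Return the action of the first matching rule (rule order matters)."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for rule in rules:
        if s in ipaddress.ip_network(rule.src_net) and d in ipaddress.ip_network(rule.dst_net):
            return rule.action
    return "discard"     # conservative default for traffic no rule covers
```

For example, a rule protecting traffic from `10.1.0.0/16` to `10.2.0.0/16`, placed before a broader bypass rule, makes only branch-to-branch traffic "interesting".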

The second step in the IPsec process, called IKE Phase 1, allows the two hosts using IPsec to negotiate the policy sets they will use for the secured circuit, authenticate themselves to each other, and initiate a secure channel between them.

IKE Phase 1 sets up an initial secure channel between hosts using IPsec; that secure channel is then used to securely negotiate the way the IPsec circuit will encrypt and/or authenticate data sent across the IPsec circuit.

There are two options during IKE Phase 1: Main mode or Aggressive mode. Main mode provides greater security because it sets up a secure tunnel for exchanging session algorithms and keys, while Aggressive mode allows some of the session configuration data to be passed as plaintext but enables hosts to establish an IPsec circuit more quickly [9].

Under Main mode, the host initiating the session sends one or more proposals for the session, indicating the encryption and authentication algorithms it prefers to use, as well as other aspects of the connection; the responding host continues the negotiation until the two hosts agree and establish an IKE Security Association (SA), which defines the IPsec circuit.

When Aggressive mode is in use, the host initiating the circuit specifies the IKE security association data unilaterally and in the clear, with the responding host responding by authenticating the session.

The third step in setting up an IPsec circuit is IKE Phase 2, which is conducted over the secure channel set up in IKE Phase 1. In this phase, the two hosts negotiate and initiate the security association for the IPsec circuit that carries actual network data: they negotiate the cryptographic algorithms to use on the session and agree on the secret keying material to be used with those algorithms. Nonces, randomly selected numbers used only once to provide session authentication and replay protection, are exchanged in this phase. The hosts may also negotiate to enforce perfect forward secrecy on the exchange in this phase.
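Agreeing on keying material in this phase means both hosts derive the same keys from shared secret state plus the freshly exchanged nonces. The Main/Aggressive mode terminology above comes from the original IKE (IKEv1); this sketch instead follows IKEv2 (RFC 7296, cited earlier), where Child SA keys are KEYMAT = prf+(SK_d, Ni | Nr) and prf+ chains PRF outputs. HMAC-SHA256 is assumed as the PRF (one of several negotiable choices), and `SK_d` is taken as given rather than derived from the Phase 1 exchange. With perfect forward secrecy, a fresh Diffie-Hellman secret is prepended to the nonces, which this sketch omits.

```python
import hashlib
import hmac


def prf(key: bytes, data: bytes) -> bytes:
    """IKEv2 PRF, instantiated here as HMAC-SHA256 (assumed choice)."""
    return hmac.new(key, data, hashlib.sha256).digest()


def prf_plus(key: bytes, seed: bytes, length: int) -> bytes:
    """prf+ (RFC 7296, 2.13): T1 = prf(K, S|0x01), Tn = prf(K, Tn-1|S|n)."""
    out, block, counter = b"", b"", 1
    while len(out) < length:
        if counter > 255:
            raise ValueError("prf+ cannot produce that much keying material")
        block = prf(key, block + seed + bytes([counter]))
        out += block
        counter += 1
    return out[:length]


def child_sa_keymat(sk_d: bytes, nonce_i: bytes, nonce_r: bytes, length: int = 64) -> bytes:
    """KEYMAT = prf+(SK_d, Ni | Nr): fresh nonces yield fresh Child SA keys."""
    return prf_plus(sk_d, nonce_i + nonce_r, length)
```

Both hosts run the same computation on the same inputs, so no key is ever sent across the network, and new nonces for each Child SA guarantee that its keys differ from earlier ones.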

Step four of the IPsec connection is the actual exchange of data across the newly created IPsec encrypted tunnel. From this point, packets are encrypted and decrypted by the two endpoints using the IPsec SAs set up in the previous three steps.

The last step is termination of the IPsec tunnel, usually when the communication between the hosts is complete, when the session times out, or when a previously specified number of bytes has been passed through the IPsec tunnel. When the IPsec tunnel is terminated, the hosts discard keys used over that security association.
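The termination conditions above (completion, timeout, or a byte count) map onto SA lifetimes. A minimal sketch with a hypothetical `SaLifetime` class; real implementations typically rekey at a soft limit before a hard limit is reached, which is omitted here. Python cannot reliably wipe bytes from memory, so dropping the key reference only illustrates the discard step.

```python
import time
from typing import Optional


class SaLifetime:
    """Tracks byte and time limits after which an SA is torn down."""

    def __init__(self, key: bytes, max_bytes: int, max_seconds: float,
                 now: Optional[float] = None) -> None:
        start = time.monotonic() if now is None else now
        self.key: Optional[bytes] = key
        self.max_bytes = max_bytes
        self.expires_at = start + max_seconds
        self.bytes_sent = 0

    def record(self, nbytes: int, now: Optional[float] = None) -> bool:
        """Return True if the packet may use this SA; tear the SA down otherwise."""
        now = time.monotonic() if now is None else now
        if self.key is None:
            return False                     # already terminated
        if self.bytes_sent + nbytes > self.max_bytes or now >= self.expires_at:
            self.terminate()                 # byte limit or timeout reached
            return False
        self.bytes_sent += nbytes
        return True

    def terminate(self) -> None:
        """Called on expiry or when communication is complete: discard the key."""
        self.key = None                      # illustrative only, not a secure wipe
```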

15.10 Kerberos

Kerberos is a computer network authentication protocol that works on the basis of tickets to allow nodes communicating over a non-secure network to prove their identity to one another in a secure manner. The protocol was named after the character Kerberos (or Cerberus) from Greek mythology, the ferocious three-headed guard dog of Hades. Its designers aimed it primarily at a client–server model, and it provides mutual authentication: both the user and the server verify each other’s identity. Kerberos protocol messages are protected against eavesdropping and replay attacks.

Kerberos builds on symmetric-key cryptography; that is, the same key is used to encrypt and decrypt messages. Microsoft’s implementation of the Kerberos protocol can also make limited use of asymmetric encryption: a private/public key pair can be used to encrypt or decrypt initial authentication messages from a network client or a network service.

- ➢ What Is Kerberos Authentication?

The Kerberos version 5 authentication protocol provides a mechanism for authentication—and mutual authentication—between a client and a server, or between one server and another server.

Windows Server 2003 implements the Kerberos V5 protocol as a security support provider (SSP), which can be accessed through the Security Support Provider Interface (SSPI). In addition, Windows Server 2003 implements extensions to the protocol that permit initial authentication by using public key certificates on smart cards.

The Kerberos Key Distribution Center (KDC) uses the domain’s Active Directory directory service database as its security account database. Active Directory is required for default NTLM and Kerberos implementations.

The Kerberos V5 protocol assumes that initial transactions between clients and servers take place on an open network in which packets transmitted along the network can be monitored and modified at will. The assumed environment, in other words, is very much like today’s Internet, where an attacker can easily pose as either a client or a server and can readily eavesdrop on or tamper with communications between legitimate clients and servers.

- Kerberos Authentication Benefits

The Kerberos V5 protocol is more secure, more flexible, and more efficient than NTLM. The benefits gained by using Kerberos authentication are:

- Delegated authentication

Windows services impersonate a client when accessing resources on the client’s behalf. In many cases, a service can complete its work for the client by accessing resources on the local computer. Both NTLM and the Kerberos V5 protocol provide the information that a service needs to impersonate its client locally. However, some distributed applications are designed so that a front-end service must impersonate clients when connecting to back-end services on other computers. The Kerberos V5 protocol introduces a proxy mechanism that enables a service to impersonate its client when connecting to other services. No equivalent is available with NTLM.

- Interoperability

Microsoft’s implementation of the Kerberos V5 protocol is based on standards-track specifications submitted to the Internet Engineering Task Force (IETF). As a result, the implementation of the Kerberos V5 protocol in Windows Server 2003 lays a foundation for interoperability with other networks in which the Kerberos V5 protocol is used for authentication.

- More efficient authentication to servers

With NTLM authentication, an application server must connect to a domain controller in order to authenticate each client. With the Kerberos V5 authentication protocol, on the other hand, the server is not required to go to a domain controller. Instead, the server can authenticate the client by examining credentials presented by the client. Clients can obtain credentials for a particular server once and then reuse those credentials throughout a network logon session. Renewable session tickets replace pass-through authentication. For more information about what renewable session tickets are and how they work, please see “How the Kerberos Version 5 Authentication Protocol Works.”
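The server can skip the domain controller because the ticket is sealed with a key that the KDC shares with that service. The toy sketch below shows only that property: it protects a ticket with HMAC-SHA256 under a hypothetical service key and checks an expiry time. Real Kerberos tickets are encrypted (which also keeps the embedded session key secret) and ASN.1-encoded, so this is not the actual ticket format, and the function names are illustrative.

```python
import hashlib
import hmac
import json
import time

# Hypothetical long-term key shared only by the KDC and one service.
SERVICE_KEY = b"demo-service-long-term-key"


def kdc_issue_ticket(service_key: bytes, client: str, lifetime: int = 600) -> dict:
    """KDC side: bind the client's name and an expiry time under the service key."""
    body = json.dumps({"client": client, "expires": int(time.time()) + lifetime},
                      sort_keys=True)
    tag = hmac.new(service_key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def server_validate(service_key: bytes, ticket: dict) -> str:
    """Service side: validate with its own key only; no call to the domain controller."""
    expected = hmac.new(service_key, ticket["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, ticket["tag"]):
        raise PermissionError("ticket was not issued for this service or was altered")
    claims = json.loads(ticket["body"])
    if claims["expires"] < time.time():
        raise PermissionError("ticket has expired")
    return claims["client"]


ticket = kdc_issue_ticket(SERVICE_KEY, "alice")  # obtained once per logon session
print(server_validate(SERVICE_KEY, ticket))       # alice
print(server_validate(SERVICE_KEY, ticket))       # reused: alice, still no KDC call
```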

- Mutual authentication

By using the Kerberos protocol, a party at either end of a network connection can verify that the party on the other end is the entity it claims to be. Although NTLM enables servers to verify the identities of their clients, NTLM does not enable clients to verify a server’s identity, nor does NTLM enable one server to verify the identity of another. NTLM authentication was designed for a network environment in which servers were assumed to be genuine. The Kerberos V5 protocol makes no such assumption.
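Mutual authentication can be sketched as two proofs over the same timestamp, each bound to a session key that only the genuine client and the genuine service (the one able to read the ticket) hold. In Kerberos V5 the server's proof is an encrypted reply echoing the client's authenticator timestamp; the HMAC-SHA256 tags and the `AP-REQ`/`AP-REP` labels here are stand-ins chosen for illustration, not the wire format.

```python
import hashlib
import hmac
import secrets
import time
from typing import Tuple


def tag(key: bytes, label: bytes, timestamp: bytes) -> bytes:
    """Keyed proof over a direction label and the client's timestamp."""
    return hmac.new(key, label + b"|" + timestamp, hashlib.sha256).digest()


def client_request(session_key: bytes) -> Tuple[bytes, bytes]:
    """Client: send a timestamp plus proof that it holds the session key."""
    timestamp = repr(time.time()).encode()
    return timestamp, tag(session_key, b"AP-REQ", timestamp)


def server_reply(session_key: bytes, timestamp: bytes, proof: bytes) -> bytes:
    """Server: verify the client, then prove itself over the same timestamp."""
    if not hmac.compare_digest(proof, tag(session_key, b"AP-REQ", timestamp)):
        raise PermissionError("client could not prove knowledge of the session key")
    return tag(session_key, b"AP-REP", timestamp)


def client_verify_server(session_key: bytes, timestamp: bytes, reply: bytes) -> bool:
    """Client: only a holder of the session key, i.e. the genuine service, can produce this."""
    return hmac.compare_digest(reply, tag(session_key, b"AP-REP", timestamp))


session_key = secrets.token_bytes(32)  # in Kerberos, delivered inside the ticket
ts, proof = client_request(session_key)
reply = server_reply(session_key, ts, proof)
print(client_verify_server(session_key, ts, reply))  # True
```

The distinct direction labels keep an attacker from simply reflecting the client's own proof back as a fake server reply.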


- Kerberos V5 Protocol Standards

The Kerberos authentication protocol originated at MIT more than a decade ago, where it was developed by engineers working on Project Athena. The first public release was the Kerberos version 4 authentication protocol. After broad industry review of that protocol, the protocol’s authors developed and released the Kerberos version 5 authentication protocol.

The Kerberos V5 protocol is now on a standards track with the IETF. The implementation of the protocol in Windows Server 2003 closely follows the specification defined in Internet RFC 1510. In addition, the mechanism and format for passing security tokens in Kerberos messages follows the specification defined in Internet RFC 1964. The Kerberos V5 protocol specifies mechanisms to:

- Authenticate user identity. When a user wants to gain access to a server, the server needs to verify the user’s identity. Consider a situation in which the user claims to be, for example, [email protected]. Because access to resources is based on identity and associated permissions, the server must be sure the user really has the identity it claims.

- Securely package the user’s name. The user’s name—that is, the User Principal Name (UPN): [email protected], for example—and the user’s credentials are packaged in a data structure called a ticket.

- Securely deliver user credentials. After the ticket is encrypted, messages are used to transport user credentials along the network.

- ➢ Kerberos Authentication Dependencies

This section reviews dependencies and summarizes how each dependency relates to Kerberos authentication.

- Operating System

Kerberos authentication relies on client functionality that is built in to the Windows Server 2003 operating system, the Microsoft Windows XP operating system, and the Windows 2000 operating system. If a client, domain controller, or target server is running an earlier operating system, it cannot natively use Kerberos authentication.

- TCP/IP Network Connectivity

For Kerberos authentication to occur, TCP/IP network connectivity must exist between the client, the domain controller, and the target server.

- Domain Name System

The client uses the fully qualified domain name (FQDN) to access the domain controller. DNS must be functioning for the client to obtain the FQDN.

For best results, do not use Hosts files with DNS. For more information about DNS, see “DNS Technical Reference.”

- Active Directory Domain

Kerberos authentication is not supported in earlier operating systems, such as the Microsoft Windows NT 4.0 operating system. You must be using user and computer accounts in the Active Directory directory service to use Kerberos authentication. Local accounts and Windows NT domain accounts cannot be used for Kerberos authentication.

- Time Service

For Kerberos authentication to function correctly, all domains and forests in a network should use the same time source so that the time on all network computers is synchronized. An Active Directory domain controller acts as an authoritative source of time for its domain, which guarantees that an entire domain has the same time. For more information, see “Windows Time Service Technical Reference.”
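The dependency on synchronized clocks comes from how a server judges an authenticator's freshness: it accepts only timestamps within a small tolerance of its own clock and remembers recently seen ones to block replays. The sketch assumes a 300-second tolerance (five minutes is a common default, but the value is configurable) and a simple in-memory replay cache; the class name is hypothetical.

```python
import time
from typing import Optional, Set, Tuple

MAX_SKEW_SECONDS = 300.0  # assumed tolerance; commonly five minutes, but configurable


class AuthenticatorChecker:
    """Hypothetical freshness check: clock-skew window plus a replay cache."""

    def __init__(self, max_skew: float = MAX_SKEW_SECONDS) -> None:
        self.max_skew = max_skew
        self.seen: Set[Tuple[str, float]] = set()

    def accept(self, client: str, timestamp: float, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        # Drop cached entries outside the window; a replay of them fails the skew test anyway.
        self.seen = {(c, t) for (c, t) in self.seen if abs(now - t) <= self.max_skew}
        if abs(now - timestamp) > self.max_skew:
            return False  # clocks too far apart, or a stale authenticator
        if (client, timestamp) in self.seen:
            return False  # identical authenticator seen before: replay
        self.seen.add((client, timestamp))
        return True


checker = AuthenticatorChecker()
print(checker.accept("alice", time.time()))  # True
```

A client whose clock drifts beyond the tolerance is rejected even with valid credentials, which is why every computer in the domain should share one time source.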

- Service Principal Names

Service principal names (SPNs) are unique identifiers for services running on servers. Every service that uses Kerberos authentication needs to have an SPN set for it so that clients can identify the service on the network. If an SPN is not set for a service, clients have no way of locating that service. Without correctly set SPNs, Kerberos authentication is not possible. For more information about user-to-user authentication, see “How the Kerberos Version 5 Authentication Protocol Works,” and search for “The User-to-User Authentication Process.”


- ➢ Overview/Main Points

Weaknesses/Limitations (version 4 and 5)

- Biggest weakness: the assumption of a secure time system, and the clock synchronization it requires. Could be fixed by a challenge-response protocol during the authentication handshake.

- Password guessing: no authentication is required to request a ticket, hence an attacker can gather the equivalent of /etc/passwd by requesting many tickets. Could be fixed by a Diffie-Hellman key exchange.

- Chosen plaintext: in CBC mode, a prefix of a ciphertext is the encryption of a prefix of the plaintext, so an attacker can cut messages apart and use just part of a message. (Is this true for PCBC?) This may not work in Kerberos IV, since the data block begins with a length byte and a string, which would defeat the prefix attack.

- Limitation: Not a host-to-host protocol. (Kerberos 5 is user-to-user; Kerberos 4 is only user-to-server).
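The password-guessing weakness can be made concrete with a toy model: if anyone can obtain a reply protected only by a key derived from the user's password, an attacker can test candidate passwords offline, without contacting the KDC again. PBKDF2-HMAC-SHA256 stands in for Kerberos's string-to-key function and an HMAC tag stands in for the encrypted reply; both, and all names here, are illustrative assumptions.

```python
import hashlib
import hmac
from typing import Iterable, Optional, Tuple


def string_to_key(password: str, salt: str) -> bytes:
    """Stand-in for Kerberos string-to-key (low iteration count keeps the demo fast)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt.encode(), 1000)


def toy_as_reply(password: str, principal: str, body: bytes) -> Tuple[bytes, bytes]:
    """Toy KDC reply: handed to anyone who asks, protected only by the user's key."""
    key = string_to_key(password, principal)
    return body, hmac.new(key, body, hashlib.sha256).digest()


def offline_guess(reply: Tuple[bytes, bytes], principal: str,
                  candidates: Iterable[str]) -> Optional[str]:
    """Attacker side: every guess is checked locally, with no further network traffic."""
    body, tag = reply
    for guess in candidates:
        key = string_to_key(guess, principal)
        if hmac.compare_digest(hmac.new(key, body, hashlib.sha256).digest(), tag):
            return guess
    return None


reply = toy_as_reply("sunshine", "alice@EXAMPLE.COM", b"ticket-granting ticket")
print(offline_guess(reply, "alice@EXAMPLE.COM", ["123456", "password", "sunshine"]))  # sunshine
```

Strong passwords raise the cost of each guess, and not handing out tickets without some minimal authentication removes the free supply of material to test against.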

Weaknesses in proposed version 5 additions

- Inter-realm authentication is allowed by forwarding, but no way to derive the complete “chain of trust”, nor any way to do “authentication routing” within the hierarchy of authentication servers.

- ENC_TKT_IN_SKEY in Kerberos 5 allows a trivial cut-and-paste attack that defeats mutual authentication.

- The Kerberos 5 CRC-32 checksum is not collision-proof (as MD4 was then thought to be).

- K5 still uses timestamps to authenticate KRB_SAFE and KRB_PRIV messages; it should use sequence numbers.
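Why CRC-32 offers no protection against deliberate tampering can be shown in a few lines. CRC-32 is affine over XOR, so for messages of equal length crc32(a ⊕ b ⊕ c) = crc32(a) ⊕ crc32(b) ⊕ crc32(c). An attacker who alters a message can therefore compute the matching checksum from public values alone, with no key. The messages below are made up; Python's `zlib.crc32` is the standard CRC-32.

```python
import zlib


def xor(*chunks: bytes) -> bytes:
    """Byte-wise XOR of equal-length byte strings."""
    out = bytearray(len(chunks[0]))
    for chunk in chunks:
        for i, b in enumerate(chunk):
            out[i] ^= b
    return bytes(out)


original = b"PAY 100 TO ALICE"
forged = b"PAY 900 TO MALLO"  # same length as the original
zero = bytes(len(original))
delta = xor(original, forged)  # the change the attacker wants to make

# Odd number of equal-length terms: the CRC's constant terms cancel out correctly,
# so the forged checksum follows from the original checksum and the known delta.
predicted = zlib.crc32(original) ^ zlib.crc32(delta) ^ zlib.crc32(zero)
assert predicted == zlib.crc32(forged)
```

The same linearity is what later allowed bit-flipping attacks on WEP, whose integrity check value is also a CRC-32.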

Recommendations

- Add challenge/response as alternative to time-based authentication

- Use a standard encoding for credentials (ASN.1) to avoid message ambiguity; this avoids having to re-analyze each change for redundancy in the binary encodings of various messages as a possible attack point (like the prefix attack above)

- Explicitly allow for handheld authenticators that answer a challenge using Kc, rather than just using Kc to decrypt a ticket.

- Multi-session keys should be used to negotiate true one-time session keys

- Support for special hardware (e.g., keystone)

- Don’t distribute tickets without some minimal authentication

- No point in including IP address in credentials, since network is assumed to be evil
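The first recommendation (challenge/response instead of time-based authentication) removes the dependence on synchronized clocks: the verifier issues a fresh random nonce, and the client proves knowledge of its key by returning a keyed function of that nonce. A minimal sketch, assuming a pre-shared key and HMAC-SHA256 as the keyed function; the class and function names are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Set


class ChallengeVerifier:
    """Hypothetical server side of a nonce-based challenge/response check."""

    def __init__(self, shared_key: bytes) -> None:
        self.key = shared_key
        self.outstanding: Set[bytes] = set()

    def challenge(self) -> bytes:
        """Issue a fresh random nonce and remember it until it is answered."""
        nonce = secrets.token_bytes(16)
        self.outstanding.add(nonce)
        return nonce

    def verify(self, nonce: bytes, response: bytes) -> bool:
        """Accept each nonce at most once; no clock is consulted."""
        if nonce not in self.outstanding:
            return False  # never issued, or already used: possible replay
        self.outstanding.discard(nonce)
        expected = hmac.new(self.key, nonce, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)


def respond(shared_key: bytes, nonce: bytes) -> bytes:
    """Client side: prove knowledge of the key (e.g. Kc) without sending it."""
    return hmac.new(shared_key, nonce, hashlib.sha256).digest()


key = secrets.token_bytes(32)
verifier = ChallengeVerifier(key)
nonce = verifier.challenge()
print(verifier.verify(nonce, respond(key, nonce)))  # True
print(verifier.verify(nonce, respond(key, nonce)))  # False: nonce already consumed
```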

- Relevance

Kerberos IV is widely disseminated, so even the limitations/weaknesses fixed in version 5 are worth addressing. It is also important to note that, just as for software correctness, any nontrivial change to the system results in a whole new system whose security properties must be re-derived “from first principles”, or risk introducing security holes.

- Flaws

Some of the constraints in Kerberos IV stem from its initial assumptions in the MIT Athena environment, and some of the formal techniques for security verification that led to the discovery of certain attacks were not widely known when it was designed. The attacks described on Kerberos 5 suggest that a thorough verification using formal methods would be wise.


15.11 Wired Equivalent Privacy (WEP)

- WEP is a security protocol for Wi-Fi networks. Since wireless networks transmit data over radio waves, it is easy to intercept data or “eavesdrop” on wireless data transmissions. The goal of WEP is to make wireless networks as secure as wired networks, such as those connected by Ethernet cables.

- The wired equivalent privacy protocol adds security to a wireless network by encrypting the data. If the data is intercepted, it will be unrecognizable to the system that intercepted it, since it is encrypted. However, authorized systems on the network will be able to decrypt the data because they all share the same encryption key. Systems on a WEP-secured network can typically be authorized by entering a network password.

15.11.1 Authentication

Two methods of authentication can be used with WEP: Open System authentication and Shared Key authentication.

In Open System authentication, the WLAN client need not provide its credentials to the Access Point during authentication. Any client can authenticate with the Access Point and then attempt to associate. In effect, no authentication occurs. Subsequently, WEP keys can be used for encrypting data frames. At this point, the client must have the correct keys.

In Shared Key authentication, the WEP key is used for authentication in a four-step challenge-response handshake:

- The client sends an authentication request to the Access Point.

- The Access Point replies with a clear-text challenge.

- The client encrypts the challenge-text using the configured WEP key and sends it back in another authentication request.

- The Access Point decrypts the response. If this matches the challenge text, the Access Point sends back a positive reply.

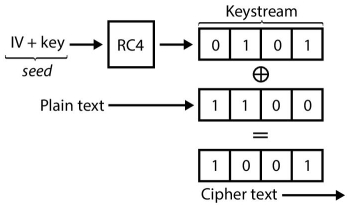

After the authentication and association, the pre-shared WEP key is also used for encrypting the data frames using RC4.
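The per-frame encryption can be sketched as follows. Each frame's RC4 seed is a 24-bit initialization vector (IV), sent in the clear, followed by the shared WEP key; a CRC-32 integrity check value (ICV) is appended to the plaintext before it is XORed with the keystream. This is a simplified, study-only sketch: the 802.11 header and key-ID byte are omitted, RC4 is written out only to keep the example self-contained, and the function names are illustrative.

```python
import os
import struct
import zlib
from typing import Optional


def rc4_keystream(key: bytes, length: int) -> bytes:
    """RC4: key-scheduling algorithm followed by the pseudo-random generation algorithm."""
    s = list(range(256))
    j = 0
    for i in range(256):
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    out = bytearray()
    i = j = 0
    for _ in range(length):
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        out.append(s[(s[i] + s[j]) % 256])
    return bytes(out)


def wep_encrypt(key: bytes, plaintext: bytes, iv: Optional[bytes] = None) -> bytes:
    """Return IV || RC4(IV || key) XOR (plaintext || ICV)."""
    iv = os.urandom(3) if iv is None else iv
    icv = struct.pack("<I", zlib.crc32(plaintext))  # little-endian CRC-32
    stream = rc4_keystream(iv + key, len(plaintext) + 4)
    return iv + bytes(p ^ k for p, k in zip(plaintext + icv, stream))


def wep_decrypt(key: bytes, frame: bytes) -> bytes:
    """Rebuild the keystream from the cleartext IV, decrypt, and check the ICV."""
    iv, body = frame[:3], frame[3:]
    stream = rc4_keystream(iv + key, len(body))
    data = bytes(c ^ k for c, k in zip(body, stream))
    plaintext, icv = data[:-4], data[-4:]
    if struct.unpack("<I", icv)[0] != zlib.crc32(plaintext):
        raise ValueError("ICV mismatch")
    return plaintext


key = bytes.fromhex("0102030405")  # 40-bit key ("64-bit WEP" once the 24-bit IV is added)
frame = wep_encrypt(key, b"hello, access point")
print(wep_decrypt(key, frame))      # b'hello, access point'
```

Because the IV travels in the clear and has only 24 bits, the same RC4 keystream recurs whenever an IV repeats.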

At first glance, it might seem as though Shared Key authentication is more secure than Open System authentication, since the latter offers no real authentication. However, it is quite the reverse. It is possible to derive the keystream used for the handshake by capturing the challenge frames in Shared Key authentication. Therefore, data can be more easily intercepted and decrypted with Shared Key authentication than with Open System authentication. If privacy is a primary concern, it is more advisable to use Open System authentication for WEP authentication, rather than Shared Key authentication; however, this also means that any WLAN client can connect to the AP. (Both authentication mechanisms are weak; Shared Key WEP is deprecated in favor of WPA/WPA2.)
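The keystream leak in Shared Key authentication follows directly from XOR: the challenge crosses the air in the clear and comes back encrypted, so XORing the two yields the RC4 keystream for the IV the client used. The simulation below, with a random 104-bit key and the 128-byte challenge, shows an eavesdropper reusing that keystream (and the same IV) to answer a fresh challenge without ever learning the key. It is a simplified, study-only model: the surrounding management-frame fields are omitted and the helper names are illustrative.

```python
import os
import struct
import zlib


def rc4_keystream(key: bytes, length: int) -> bytes:
    """Plain RC4 (KSA + PRGA), included so the example is self-contained."""
    s, j = list(range(256)), 0
    for i in range(256):
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    out, i, j = bytearray(), 0, 0
    for _ in range(length):
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        out.append(s[(s[i] + s[j]) % 256])
    return bytes(out)


def with_icv(data: bytes) -> bytes:
    return data + struct.pack("<I", zlib.crc32(data))


def wep_seal(key: bytes, iv: bytes, data: bytes) -> bytes:
    """Legitimate client: IV || RC4(IV || key) XOR (data || ICV)."""
    plain = with_icv(data)
    return iv + bytes(a ^ b for a, b in zip(plain, rc4_keystream(iv + key, len(plain))))


def ap_check(key: bytes, challenge: bytes, response: bytes) -> bool:
    """Access point: decrypt the response and compare it with the challenge it sent."""
    iv, body = response[:3], response[3:]
    plain = bytes(a ^ b for a, b in zip(body, rc4_keystream(iv + key, len(body))))
    return plain == with_icv(challenge)


key = os.urandom(13)          # 104-bit WEP key, unknown to the attacker
challenge1 = os.urandom(128)  # sent by the AP in the clear
response1 = wep_seal(key, os.urandom(3), challenge1)

# Eavesdropper: known plaintext XOR ciphertext = keystream for this IV.
iv = response1[:3]
keystream = bytes(a ^ b for a, b in zip(response1[3:], with_icv(challenge1)))

# Later, answer a fresh challenge using only the recovered keystream and IV.
challenge2 = os.urandom(128)
forged = iv + bytes(a ^ b for a, b in zip(with_icv(challenge2), keystream))
print(ap_check(key, challenge2, forged))  # True
```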

15.12 WiFi Protected Access (WPA)

- WPA is a security protocol designed to create secure wireless (Wi-Fi) networks. It is similar to the WEP protocol but offers improvements in the way it handles security keys and the way users are authorized.

- For an encrypted data transfer to work, the systems at both ends of the transfer must use the same encryption/decryption key. While WEP provides each authorized system with the same key, WPA uses the Temporal Key Integrity Protocol (TKIP), which dynamically changes the key that the systems use. This prevents intruders from creating their own encryption key to match the one used by the secure network.

- WPA also implements the Extensible Authentication Protocol (EAP) for authorizing users. Instead of authorizing computers based solely on their MAC address, WPA can use several other methods to verify each computer’s identity. This makes it more difficult for unauthorized systems to gain access to the wireless network.

Wi-Fi Protected Access (WPA) is a security standard for users of computing devices equipped with wireless internet connections. WPA was developed by the Wi-Fi Alliance to provide more sophisticated data encryption and better user authentication than Wired Equivalent Privacy (WEP), the original Wi-Fi security standard. WPA was based on a draft of the IEEE 802.11i standard (ratified in 2004) and was designed to be backward-compatible with WEP to encourage quick, easy adoption. Network security professionals were able to support WPA on many WEP-based devices with a simple firmware update.

WPA has separate modes for enterprise users and for personal use. The enterprise mode, WPA-EAP, uses more stringent 802.1X authentication with the Extensible Authentication Protocol (EAP) and requires a central authentication server, such as RADIUS, to authenticate each user. The personal mode, WPA-PSK, uses preshared keys for simpler implementation and management among consumers and small offices. WPA’s encryption method is the Temporal Key Integrity Protocol (TKIP), which includes a per-packet key mixing function, a message integrity check, an extended initialization vector, and a re-keying mechanism.

Software updates that allow both server and client computers to implement WPA became available during 2003. Access points can operate in mixed WEP/WPA mode to support both WEP and WPA clients. However, mixed mode effectively provides only WEP-level security for all users. Home users of access points that use only WPA can operate in a special home mode in which the user need only enter a password to connect to the access point. The password triggers authentication and TKIP encryption.
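In the password-based home mode, the passphrase is not used directly as a key. WPA/WPA2-Personal stretches it into the 256-bit pairwise master key with PBKDF2-HMAC-SHA1, using the network's SSID as the salt and 4096 iterations. The sketch reproduces only that step (the four-way handshake and TKIP key mixing that follow are out of scope); the function name and the sample passphrase and SSID are illustrative.

```python
import hashlib


def wpa_psk(passphrase: str, ssid: str) -> bytes:
    """Derive the 256-bit pairwise master key for WPA/WPA2-Personal."""
    if not 8 <= len(passphrase) <= 63:
        raise ValueError("WPA passphrases must be 8 to 63 characters")
    return hashlib.pbkdf2_hmac("sha1", passphrase.encode(), ssid.encode(), 4096, 32)


pmk = wpa_psk("correct horse battery staple", "HomeNetwork")
print(len(pmk) * 8)  # 256
```

Because the SSID acts as the salt, the same passphrase yields different keys on differently named networks, but anyone who captures a handshake can still test candidate passphrases offline, which is why weak passphrases remain dangerous.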

15.13 Wi-Fi Protected Access II and the Most Current Security Protocols

WPA2 superseded WPA in 2004. WPA2 uses the Counter Mode with Cipher Block Chaining Message Authentication Code Protocol (CCMP), which is based on the mandatory Advanced Encryption Standard (AES) algorithm. CCMP provides message authenticity and integrity verification and is much more secure and reliable than the original TKIP protocol used by WPA.

WPA2 still has vulnerabilities; chief among them is unauthorized access to the enterprise wireless network through the Wi-Fi Protected Setup (WPS) feature of certain access points. Such an attack can take the intruder several hours of concerted effort with state-of-the-art computer technology, but the threat of system compromise should not be discounted. It is recommended that WPS be disabled on WPA2 access points to discourage such threats.
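Part of the reason a WPS attack takes hours rather than years is arithmetic. An 8-digit WPS PIN ends in a checksum digit and, as has been publicly documented, access points confirm the two halves of the PIN separately, so an attacker searches at most 10^4 first halves and then 10^3 second halves. The checksum function below follows the commonly published algorithm; the function names are illustrative.

```python
def wps_checksum(first_seven: int) -> int:
    """Checksum digit for the first seven digits of a WPS PIN (weights 3 and 1)."""
    accum = 0
    while first_seven:
        accum += 3 * (first_seven % 10)
        first_seven //= 10
        accum += first_seven % 10
        first_seven //= 10
    return (10 - accum % 10) % 10


def is_valid_pin(pin: str) -> bool:
    """An 8-digit PIN is well formed when its last digit matches the checksum."""
    return len(pin) == 8 and pin.isdigit() and wps_checksum(int(pin[:7])) == int(pin[7])


full_search = 10 ** 7             # seven free digits if the PIN were checked as a whole
split_search = 10 ** 4 + 10 ** 3  # first half, then the 3 free digits of the second half
print(is_valid_pin("12345670"), full_search, split_search)  # True 10000000 11000
```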

15.13.1 Wi-Fi Protected Access

Though these threats have traditionally, and almost exclusively, been directed at business wireless systems, even home wireless systems can be exposed by weak passwords or passphrases that make it easier for an attacker to compromise those systems. Privileged accounts (such as administrator accounts) should always be protected by stronger, longer passwords, and all passwords should be changed frequently.

15.13.2 Difference between WEP, WPA: Wi-Fi Security Through the Ages

Since the late 1990s, Wi-Fi security protocols have undergone multiple upgrades, with outright deprecation of older protocols and significant revision of newer ones. A stroll through the history of Wi-Fi security highlights both what is available right now and why you should avoid older standards.

15.14 Wired Equivalent Privacy (WEP)

Wired Equivalent Privacy (WEP) is the most widely used Wi-Fi security protocol in the world. This is a function of age, backwards compatibility, and the fact that it appears first in the protocol selection menus in many router control panels.

WEP was ratified as a Wi-Fi security standard in September of 1999. The first versions of WEP weren’t particularly strong, even for the time they were released, because U.S. restrictions on the export of various cryptographic technologies led manufacturers to restrict their devices to only 64-bit encryption. When the restrictions were lifted, the key size was increased to 128-bit. Despite the introduction of 256-bit WEP, 128-bit remains one of the most common implementations.

Despite revisions to the protocol and an increased key size, numerous security flaws were discovered in the WEP standard over time. As computing power increased, it became easier and easier to exploit those flaws. As early as 2001, proof-of-concept exploits were floating around, and by 2005, the FBI gave a public demonstration (in an effort to boost awareness of WEP’s flaws) in which agents cracked WEP passwords in minutes using freely available software.
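One reason faster hardware and busier networks hurt WEP is its 24-bit IV: only 2^24 (about 16.8 million) keystreams exist per key, and any two frames sharing an IV are encrypted with the same keystream. The birthday bound shows how quickly repeats appear when IVs are chosen at random. The function name is illustrative; devices that use a counter instead of random IVs still wrap around after 2^24 frames.

```python
import math


def iv_collision_probability(frames: int, iv_bits: int = 24) -> float:
    """Probability that at least two of `frames` randomly chosen IVs are equal."""
    space = 2 ** iv_bits
    if frames > space:
        return 1.0  # pigeonhole: a repeat is certain
    # P(all distinct) = product over i < frames of (1 - i / space), summed in log space
    log_all_distinct = sum(math.log1p(-i / space) for i in range(frames))
    return 1.0 - math.exp(log_all_distinct)


for n in (1_000, 5_000, 12_000):
    print(n, round(iv_collision_probability(n), 3))
```

A repeat becomes roughly a coin flip after about 4,800 frames, a few seconds of traffic on a busy network.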

Despite various enhancements, workarounds, and other attempts to shore up the WEP system, it remains highly vulnerable. Systems that rely on WEP should be upgraded or, if security upgrades are not an option, replaced. The Wi-Fi Alliance officially retired WEP in 2004.

15.15 Wi-Fi Protected Access (WPA)

Wi-Fi Protected Access (WPA) was the Wi-Fi Alliance’s direct response to, and replacement for, the increasingly apparent vulnerabilities of the WEP standard. WPA was formally adopted in 2003, a year before WEP was officially retired. The most common WPA configuration is WPA-PSK (Pre-Shared Key). The keys used by WPA are 256-bit, a significant increase over the 64-bit and 128-bit keys used in the WEP system.

Some of the significant changes implemented with WPA included message integrity checks (to determine whether an attacker had captured or altered packets passed between the access point and client) and the Temporal Key Integrity Protocol (TKIP). TKIP employs a per-packet key system that was radically more secure than the fixed-key system used by WEP. The TKIP encryption standard was later superseded by the Advanced Encryption Standard (AES).

Although WPA was a powerful improvement over WEP, the ghost of WEP haunted WPA. TKIP, a core component of WPA, was designed to be easily rolled out via firmware upgrades onto existing WEP-enabled devices. As such, it had to reuse certain elements of the WEP system which, ultimately, were also exploited.

WPA, like its predecessor WEP, has been shown via both proof-of-concept and applied public demonstrations to be vulnerable to intrusion. Interestingly, the process by which WPA is usually breached is not a direct attack on the WPA protocol (although such attacks have been successfully demonstrated), but by attacks on a supplementary system that was rolled out with WPA—Wi-Fi Protected Setup (WPS)—which was designed to make it easy to link devices to modern access points.

15.16 Conclusions

Secure cloud communication remains a leading challenge because of the huge number of attacks made on the cloud. In addition, the volume of resources in the cloud and the variety of functions it carries out mean that, eventually, hackers and others penetrate the system and work out how its various security functions operate. Even so, the security protocols described in this chapter play a meaningful role in protecting the cloud.

References

- 1. Armbrust, M., Fox, A., Griffith, R., Joseph, A.D., Katz, R.H., Konwinski, A., Lee, G., Patterson, D.A., Rabkin, A., Stoica, I., Zaharia, M., Above the clouds: A Berkeley view of cloud computing, Berkeley Tech. Rep. UCB-EECS-2009-28, 2009.

- 2. Cloud Services—Cloud Computing Solutions, Accenture, Accenture White paper. Retrieved on 2014-01-29.

- 3. www.sersc.org/journals/IJSIA/vol10_no2_2016

- 4. https://beacontelecom.com

- 5. Bala Chandar, R., Kavitha, M.S., Seenivasan, K., A proficient model for high end security in cloud computing. Int. J. Emerg. Res. Manag. Technol., 5, 10, 697–702, 2014.

- 6. https://technet.microsoft.com/pt-pt/library.

- 7. http://www.netgear.com/docs/refdocs/Wireless/wirelessBasics.htm.

- 8. http://www.wi-fiplanet.com/tutorials/article.php.

- 9. http://www.computerbits.com/archive/2003/0200/hotspotsecurity.html.

Note

- Email: [email protected]