
CHAPTER TEN

E-DIAGNOSIS SUPPORT SYSTEMS

An E-DSS for Lower Back Pain

Lin Lin, Paul Jen-Hwa Hu, Olivia R. Liu Sheng, Joseph Tan

A. Overview of Lower Back Pain

III. Designing an E-Diagnosis Support System for Lower Back Pain: Key Challenging Characteristics

A. Diagnosis Involving Uncertainty

B. Clinical Decision Making Involving Multiple Diagnoses

C. Completeness of a Clinical Diagnosis Support System

IV. A Web-Based e-Diagnosis Support System for Lower Back Pain

B. Knowledge Acquisition and Representation

V. A Framework for Evaluation of an e-Diagnosis Support System

A. Knowledge Base Verification

C. Evaluation of Clinical Efficacy

VIII. Appendix A: Formal Proof of a Consensus Model—Based Inference Algorithm

IX. Appendix B: Formal Description of the Modified Turing Test

XI. Teleradiology Case: Present and Future

Learning Objectives

- Learn the SOAP process for lower back pain diagnosis

- Recognize the challenges in designing an e-DSS for lower back pain

- Understand the systems architecture, knowledge representation, and knowledge inference mechanisms for e-DSS design for lower back pain

- Identify the components and functions of an e-DSS evaluation framework in an e-health system and environment

- Identify some future directions of e-DSS research

Introduction

Over the years, the use of decision support systems (DSS) in clinical health care has received varying levels of attention from information systems researchers and practitioners (Berner and Ball, 1998; Buchanan and Shortliffe, 1975; Heckerman, Horvitz, and Nathwani, 1992; Leaper, Horrocks, Staniland, and deDombal, 1972; Vaughn and others, 1998, 1999; Waddell, 1987). Of particular interest is diagnosis decision making, a fundamental aspect of clinical health services. From a clinical information management perspective, diagnosis refers to a classification process in which a service provider assigns a clinical case to one or more prespecified illness or disease categories (using, for example, ICD-10 or mix-group classification), based on the patient's symptoms and information collected for the case (Suojanen, Andreassen, and Olesen, 2001). Obviously, an incorrect or inadequate diagnosis can adversely affect subsequent patient treatment or management plans. In many cases, a clinical diagnosis decision requires complex and highly specialized knowledge that may not be readily available or accessible. In this context, the value of an electronic diagnosis support system (e-DSS) that is designed to encompass pertinent diagnostic knowledge and to make that knowledge available to care providers and patients is significant.

This chapter discusses the development, implementation, and evaluation of an e-DSS for clinicians to help them address or resolve problems with lower back pain (LBP). LBP prevents many adults from being able to work or live a normal lifestyle (Waddell, 1987). Diagnosis of LBP problems requires understanding a complex anatomical and physiological structure, factoring in several diverse considerations, and juggling with nonstandardized terminology (Danek, n.d.). Jackson, Llewelyn-Phillips, and Klaber-Moffett (1996) report that only about 15 percent of LBP patients receive accurate diagnoses with some degree of certainty. Because clinicians' acquisition of complex diagnostic knowledge is mostly experiential and entails an intensive and time-consuming process, the use of an e-DSS to support a clinician's diagnosis is both beneficial and justifiable. The primary focus of this discussion is e-DSS design challenges, architecture, implementation, and evaluation. Aside from highlighting the challenging characteristics of LBP diagnosis, we describe how multiple methods are employed to acquire the targeted knowledge from two highly experienced experts who practice in different clinics and geographic regions. Following this, evaluation of the e-DSS—including its verification, validation, clinical evaluation designs, and important results—is documented. Finally, the chapter raises a series of questions that still need to be asked or answered in establishing future directions for this domain.

The e-DSS presented here is Web-based. Architecturally, the system consists of a knowledge base, an inference engine, a case repository, and two interfaces for convenient system access and knowledge update. The case repository uses a star schema–based data warehouse designed to support clinicians' ability to reference and analyze previous clinical cases. To ensure the system's validity and utility, evaluations of the e-DSS include knowledge base verification, system validation, and clinical efficacy assessment.

Overview of Lower Back Pain

Anatomically, the term lower back refers to a complex structure of vertebrae, disks, spinal cord, and nerves. LBP is a physiological and medical condition often accompanied by or resulting from common colds and other illnesses. According to Waddell (1987), four out of five adults will experience significant LBP in their lifetime. Diagnosis of LBP problems is challenging. The underlying pathology of a persistent and oppressive LBP problem is not always easily identified (Nachemson, 1985). Often, clinicians have to evaluate physical, social, behavioral, and environmental issues. In addition, LBP patients often exhibit other symptoms commonly noted in individuals who are free of LBP (Bounds, Lloyd, Mathew, and Waddell, 1988).

LBP patients are treated by different groups of clinicians, including physical therapists, orthopedists, general practitioners, chiropractors, and pain specialists (for example, physiatrists). These care providers differ subtly, if not noticeably, in their training and in their approaches to pain epidemiology, diagnosis decision making, therapeutic protocol and selection, and overall patient management plans. Of these specialists, physical therapists probably are the most sought-after care providers for LBP problems; therefore, the e-DSS was developed with physical therapy as the main treatment modality.

The SOAP Process

SOAP (subjective testing, objective testing, assessment, planning) is a common service process used by physical therapists to manage LBP patients; it consists of four phases (see Figure 10.1).

FIGURE 10.1. SOAP: A COMMON SERVICE PROCESS FOR MANAGING PATIENTS WITH LOWER BACK PAIN

Phase 1, Subjective testing, entails qualitative tests to obtain relevant patient history and clinical information. This phase includes a question-and-answer session using mostly programmed question items to identify the nature of the pain and its probable causes. Based on the patient's responses, the therapist collects information such as pain description, location, activation, severity, and frequency, together with important patient or case symptoms. The therapist also acquires information on the patient's assessment of and concerns about the pain in relation to his or her clinical history. By documenting and analyzing such information, the therapist then generates one or multiple preliminary diagnoses. (For convenience, we will use the plural term diagnoses throughout this chapter, with the understanding that some LBP patients may have a simple single diagnosis.)

Phase 2, Objective testing, involves physical examinations to confirm or refine the preliminary diagnoses. This testing usually proceeds in an iterative fashion and thus may require additional subjective question-and-answer sessions, especially when the objective results do not support the preliminary diagnoses. Phases 1 and 2 are knowledge-intensive, and their effectiveness demands considerable experience on the part of the therapist.

In Phase 3, Assessment, the therapist confirms his or her diagnoses and proceeds with an evaluation of pain epidemiology and severity and plausible therapeutic protocols.

Phase 4, the final phase, is Planning for patient management, in which the therapist designs, selects, and administers therapeutic treatments. The attending therapist continually monitors and evaluates the patient's response and revises the diagnosis and treatment plan accordingly. This process terminates when the therapist observes satisfactory patient response and recovery.

Essential in the SOAP process are the care provider's preliminary diagnoses, which are generated during subjective testing and which have significant effects on subsequent patient assessment, therapeutic protocol selection, and overall treatment plan. Obviously, inadequate preliminary diagnoses are likely to result in unnecessary testing and an ineffective treatment plan, thus diminishing service quality and adversely affecting the patient's well-being.

Designing an E-Diagnosis Support System for Lower Back Pain: Key Challenging Characteristics

From a clinical decision-making perspective, diagnosis of LBP has a number of challenging characteristics that have a significant impact on designing an effective e-DSS product:

- Effective LBP diagnosis requires both highly specialized knowledge and wide-ranging considerations on the part of the therapist.

- A therapist may develop multiple preliminary diagnoses partially because of incomplete patient or case information.

- Experienced therapists often find it difficult to articulate their underlying diagnostic knowledge for the benefit of newer therapists.

- Accessible diagnostic knowledge tends to be limited to sources in close geographic proximity or personal networks; timely sharing or reuse of large-scale knowledge is rare.

- Coupled with the stringent time constraints common to clinicians, all of the impediments on this list are likely to result in a reduced or suboptimal level of service.

The clinical significance of LBP and limited support for diagnostic knowledge sharing and reuse beyond geographical constraints make a knowledge-based e-DSS approach desirable and justifiable, though not easy. Yet an adequately designed and implemented e-DSS for LBP could facilitate and enhance clinicians' acquiring, sharing, and using the specialized knowledge that is indispensable in the effective care and management of LBP patients.

In this context, an e-DSS that incorporates pertinent LBP diagnostic knowledge and demonstrates sufficient validity and efficacy through rigorous evaluation would be of great value. Supported by an adequately designed e-DSS, clinicians would become increasingly effective and efficient in collecting and interpreting patient and case information and making appropriate diagnosis decisions, ultimately leading to improved service quality and patient outcomes. Three major challenges of e-DSS design are diagnosis involving uncertainty, clinical decision making involving multiple diagnoses, and completeness of an e-DSS.

Diagnosis Involving Uncertainty

Uncertainty, which is common to many clinical diagnostic tasks, represents a fundamental challenge to e-DSS design. Several methods have been proposed to represent or reason about uncertainty. For example, researchers have used a certainty factor scheme to represent or model the confidence level of a system-recommended solution or diagnosis (Buchanan and Shortliffe, 1975). The exact certainty value associated with a particular rule is usually determined by the presence of specific evidence suggested by experts or the extant literature. The overall degree of belief for a particular diagnosis is then calculated, using a probabilistic summation that combines all relevant evidence (Buchanan and Shortliffe, 1975). Bayesian theory, based on Bayes' Theorem and probabilistic chain rules, has also been applied to represent and reason about uncertainty. Typically, the underlying causal relationships among the evidence and diagnostic variables are modeled using a directed acyclic graph in which each node incorporates the respective prior and posterior probabilistic distributions (Przytula and Thompson, 2000). A set theory concept known as belief function has also been employed (Dempster, 1967; Shafer, 1976). A belief function models the interaction of independent criteria. Despite the differences in their representation or modeling specifics, all of these methods would typically require substantial numerical probability estimates by LBP experts.
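The probabilistic summation used in a certainty factor scheme can be illustrated with a minimal sketch. It assumes the MYCIN-style combination for two pieces of confirming evidence whose certainty factors lie between 0 and 1, namely CF = CF1 + CF2 × (1 − CF1). The function names and the sample values are illustrative assumptions and are not taken from any system discussed in this chapter.

```python
from functools import reduce


def combine_cf(cf1: float, cf2: float) -> float:
    """Combine two confirming certainty factors, each assumed to lie in [0, 1]."""
    if not (0.0 <= cf1 <= 1.0 and 0.0 <= cf2 <= 1.0):
        raise ValueError("this sketch handles only confirming evidence in [0, 1]")
    return cf1 + cf2 * (1.0 - cf1)


def overall_belief(cfs):
    """Fold the pairwise combination over all evidence for one diagnosis."""
    return reduce(combine_cf, cfs, 0.0)


# Two hypothetical rules supporting the same diagnosis.
print(round(overall_belief([0.6, 0.4]), 2))  # prints 0.76
```

Each additional piece of confirming evidence raises the combined belief but never pushes it past 1, and the order in which evidence is combined does not matter. Every rule, however, needs its own numerical estimate, which is the burden on experts that the remainder of this section addresses.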

Numerical probability estimates from LBP experts can be difficult to obtain and prone to bias, especially when there is a lack of adequate anchors or calibration (Kahneman, Slovic, and Tversky, 1982). The likelihood of unsystematic or inconsistent assessments tends to increase with the sheer volume and complexity of knowledge. Previous work has examined different methods for eliciting such probability estimates, ranging from probability scales for predicting assessments to risk-laden gambling (van DerGaag and others, 2002). However, none of these methods can be directly applied to LBP diagnosis, for the following reasons: First, therapists are not experienced in numerical probability assessment, making the use of a probability scale to articulate, visualize, and interpret conditional probabilities difficult and unlikely to be effective. Second, from a human reasoning perspective, the probabilistic combination of individual rules in LBP diagnosis differs from that common in diagnostic tasks examined by previous e-DSS projects. For instance, with LBP, the presence or absence of a particular piece of evidence can completely rule out one or more diagnoses, whereas in other situations, this is more likely to merely affect respective degrees of belief. To address this “exclusive negation or elimination” characteristic, the proposed LBP project uses a certainty factor scheme that is based on an intuitive and easy-to-use uncertainty representation and reasoning framework, which could reduce the cost of soliciting probability assessments from LBP experts. Hence, the suggested approach is based on accumulated experiential knowledge or predetermined verbal probability estimation (van DerGaag and others, 2002) that is intuitive and capable of facilitating domain experts' estimates significantly, compared with methods based on Bayesian theory or belief function. The performance of the resulting system is still robust and does not require (and is not highly sensitive to) precise estimates for each individual rule. In this respect, the suggested approach is computationally unique and differs from previous use of a certainty factor or its modifications (Cabrero-Canosa and others, 2003).

Clinical Decision Making Involving Multiple Diagnoses

The support of simultaneous multiple decision outcomes has been identified as a challenge to DSS development (Suojanen, Andreassen, and Olesen, 2001). Earlier work—for example, Pathfinder (Heckerman, Horvitz, and Nathwani, 1992)—bypassed this challenge by assuming that a patient at any given point in time can suffer from only one disease from a prespecified, mutually exclusive set. Accordingly, each disease can be modeled as a single state of a discrete stochastic variable. Other case studies—such as MUNIN (Suojanen, Andreassen, and Olesen, 2001)—modeled each disease as a stochastic variable and therefore were able to accommodate simultaneous multiple diagnoses.

Because the LBP project employs a rule-based approach, representing diagnosis with a single stochastic variable, as in the approaches described so far, is inadequate. We addressed the multiple-diagnosis requirement by examining the confidence level of each individual diagnosis independently; that is, the diagnoses are allowed to compete on the basis of certainty. Theoretically, rule-based systems using a certainty factor scheme support such competition among individual rules. However, a review of previous research cases in the literature found no explicit efforts to address reasoning about multiple decision outcomes. In fact, evaluation of previous cases focused predominantly on accuracy-based metrics (metrics that use accuracy as the main scoring criterion), which are insufficient for assessing the performance of a system designed to support multiple diagnoses simultaneously. This is another limitation of previous cases.

Completeness of a Clinical Decision Support System

Judged by scope and clinical readiness, most systems reported in previous research cases are of limited completeness, particularly with regard to system evaluation (Shortliffe and Davis, 1975; Smith, Nugent, and McClean, 2003). A review of these cases revealed a focus on modeling, algorithm development, or computational performance enhancement. While interesting, the resulting systems were a long way from clinical deployment and use. In addition, previous system evaluations were mostly ad hoc and focused predominantly on output performance assessment, that is, evaluation of primarily the accuracy of the predicted diagnoses, and thus incomplete with respect to fundamental dimensions of system evaluation. Engelrecht, Rector, and Moser (1995) suggest that evaluation of a DSS include verification, validation, and impact assessments at individual and organizational levels. Shortliffe and Davis (1975) advocate evaluating a system's clinical efficacy using real-world cases and settings. Chau and Hu (2002) reported that health care professionals tend to exhibit a tool-oriented view of technologies and are likely to accept a system only when it offers considerable utility in patient care and management. Thus, evaluation of an e-DSS must include assessment on fundamental dimensions, using clinical cases; in this particular case, evaluation should include knowledge base verification, system validation, and clinical efficacy.

A Web-Based e-Diagnosis Support System for Lower Back Pain

An intelligent, Web-based e-DSS was designed, implemented, and evaluated to support clinicians' diagnosis of LBP problems. Key objectives of this design included verifiable knowledge, validated system utility and clinical efficacy, and user-friendly interfaces for knowledge access and update. The Web is a prevailing platform that allows round-the-clock access to the e-DSS, without geographic constraints (Bharati and Chaudhury, in press); in addition to assisting clinicians' diagnoses, this system will also support self-service by patients to access information from the database.

Compared with most previous developmental projects, our e-DSS is more complete and clinic-ready, due to careful attention given to key issues in problem analysis, uncertainty modeling and reasoning, system design, implementation, and evaluation. Drawing on Engelrecht, Rector, and Moser's (1995) and Shortliffe and Davis's (1975) analysis, the e-DSS evaluation presented here includes knowledge base verification, system validation (using a modified Turing test), and a clinical efficacy assessment using 180 cases collected from real-world clinical settings.

E-DSS System Architecture

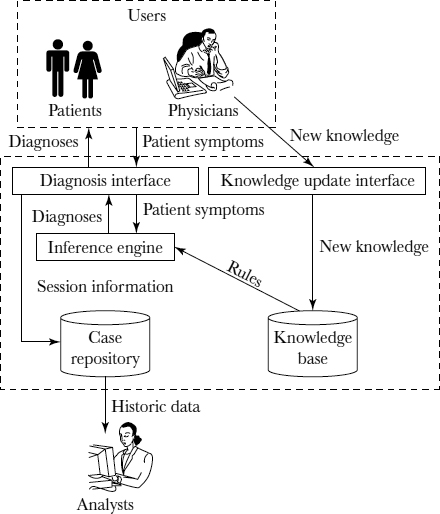

As shown in Figure 10.2, the e-DSS system architecture consists of a knowledge base, an inference engine, two Web-based interfaces for knowledge access and update, and a case repository.

The knowledge base consists of a total of 140 rules and uses a modified certainty factor scheme specifically designed to relieve burdensome probability estimation requirements previously placed on domain experts. The rules were extracted from two highly experienced therapists and stored in a relational database that was developed using MySQL. Simultaneous multiple diagnoses are supported by an inference engine enabled by an algorithm built on a consensus model for combining individual rules, which will be explained in more detail later in the chapter. The inference algorithm also captures the exclusive negation or elimination property of LBP diagnosis. The inference engine was implemented using ANSI standard Java 2. Based on the factual data and clinical evidence provided by a clinician or patient and the subsequent activation of the relevant rules in the knowledge base, the e-DSS generates one or multiple diagnoses of varying certainty values. The diagnoses of the highest certainty value or the ones with certainty values exceeding a prespecified threshold are then recommended to the user.

FIGURE 10.2. ARCHITECTURE OF A WEB-BASED DIAGNOSIS SUPPORT SYSTEM

The e-DSS includes two user interfaces. The graphic design of the diagnosis interface allows a clinician or patient to describe and visualize relevant symptoms using nonmedical terminology. Guided by the sequenced question items, the user provides information on symptoms or pain assessments. Upon completion, the user submits his or her responses to the inference engine, which, in turn, generates preliminary diagnoses. A clinician can override the system recommendation by submitting his or her own diagnoses. In addition, a clinician can activate the explanatory panel to review the system's decision-making process. The entire diagnostic session, including the final diagnoses reached, is stored in the case repository for future training or data mining purposes.

The e-DSS also has a second interface, the knowledge update interface, which allows clinicians to update the knowledge base. Using a graphics tool, a clinician can add, remove, or modify an existing rule, in terms of its left-hand or right-hand side or both. Allowing clinicians to update the knowledge base is important because the diagnostic knowledge or heuristics may evolve dynamically over time. This e-DSS therefore allows individual clinicians to update the knowledge base without needing to become familiar with the knowledge representation schema of the system in any detail. Both interfaces were implemented using JavaServer Pages and Java servlets technology.

The case repository was implemented as a data warehouse. A star schema was employed, with the fact table providing the session information and dimensions representing key factors that included patient demographics and symptoms. By slicing and dicing the data, clinicians can gain insights into existing diagnostic knowledge or discover new information—for example, significant indicators suggesting a particular diagnosis, diagnostic patterns specific to a clinician or a group of clinicians, or relationships between particular demographic variables and diseases. Implemented in Oracle, this repository also serves as a data cleansing and preparation mechanism for data mining.
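The star schema idea can be sketched in a few lines. The example below is only an illustration under stated assumptions: it uses Python's built-in sqlite3 module as a stand-in for the Oracle warehouse, all table names, column names, and rows are hypothetical, and it simplifies a session to a single symptom per fact row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# One fact table (diagnostic sessions) surrounded by two dimension tables.
conn.executescript("""
CREATE TABLE dim_patient (patient_id INTEGER PRIMARY KEY, age_group TEXT, gender TEXT);
CREATE TABLE dim_symptom (symptom_id INTEGER PRIMARY KEY, description TEXT);
CREATE TABLE fact_session (
    session_id      INTEGER PRIMARY KEY,
    patient_id      INTEGER REFERENCES dim_patient(patient_id),
    symptom_id      INTEGER REFERENCES dim_symptom(symptom_id),
    final_diagnosis TEXT
);
""")

# Made-up rows, for illustration only.
conn.executemany("INSERT INTO dim_patient VALUES (?, ?, ?)",
                 [(1, "30-40", "F"), (2, "50-60", "M")])
conn.executemany("INSERT INTO dim_symptom VALUES (?, ?)",
                 [(1, "pain radiating to leg"), (2, "morning stiffness")])
conn.executemany("INSERT INTO fact_session VALUES (?, ?, ?, ?)",
                 [(1, 1, 1, "Acute disk-related pain"),
                  (2, 1, 2, "Acute disk-related pain"),
                  (3, 2, 2, "Hip joint-related pain")])

# "Slice" the sessions by one demographic dimension and by diagnosis.
rows = conn.execute("""
    SELECT p.age_group, f.final_diagnosis, COUNT(*) AS sessions
    FROM fact_session AS f
    JOIN dim_patient AS p ON p.patient_id = f.patient_id
    GROUP BY p.age_group, f.final_diagnosis
    ORDER BY p.age_group, f.final_diagnosis
""").fetchall()

for row in rows:
    print(row)
```

Grouping on a different dimension column, such as gender or symptom description, gives another slice of the same sessions without changing the fact table.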

Knowledge Acquisition and Representation

Domain knowledge was solicited from two highly experienced therapists. One is a European therapist and the other practices in the United States. Each one has more than fifteen years of clinical experience in diagnosing and treating LBP problems. Using multiple methods that included unstructured and structured interviews, field observations, and scenario-based case reasoning and analysis, three knowledge engineers worked closely with both experts for six months. Fourteen common diagnoses, or decision outcomes, were identified, as shown in Table 10.1.

Also identified were fifteen questions that are critical to therapists' diagnosis of LBP problems. Table 10.2 summarizes these question items, which can be broadly characterized as involving patient demographics, current symptoms, clinical history, and follow-up items specific to gender or pain description. Jointly, the experts singled out 152 legitimate responses to these question items. On average, a question has approximately ten conceivable responses.

TABLE 10.1. DECISION OUTCOMES INCLUDED IN DIAGNOSIS SUPPORT SYSTEM

| Diagnosis Category | Description |
| --- | --- |
| 1 | Acute disk-related pain |
| 2 | Acute disk-related pain with radiculopathy or myelopathy |
| 3 | Chronic disk-related pain (degenerative disk disease or instability) |
| 4 | Chronic disk-related pain with radiculopathy or myelopathy |
| 5 | Stenosis, osteoporosis, or compression fracture |
| 6 | Acute facet joint–related pain |
| 7 | Chronic facet joint–related pain (degenerative joint disease) |
| 8 | Neuropathy of a peripheral nerve, such as the sciatic or cluneal |
| 9 | Sacroiliac joint–related pain and other pelvic ring–related conditions |
| 10 | Hip joint–related pain |
| 11 | Miscellaneous musculoskeletal pathologies, including muscle, tendon, or ligament strains or sprains, bursitis, compartment syndromes, fractures, and kissing spine |
| 12 | Nonmusculoskeletal internal pathologies, including neoplasms |
| 13 | Gynecological |
| 14 | Inappropriate illness behavior |

Analysis of therapists' diagnosis of LBP problems suggested several challenging characteristics. First, therapists usually make diagnostic decisions by combining evidence or information acquired in the question-and-answer session in some additive fashion. Hence, a diagnosis supported by more evidence (for example, positive responses to multiple questions) is seen as more likely than one supported by less evidence. This suggests independent contributions of the respective pieces of evidence toward recommending a particular diagnosis, without considerable interactions among them. Hence, an additive method for combining relevant evidence can represent and address the uncertainty associated with individual rules.

Moreover, therapists often find it difficult to estimate uncertainty in diagnosis. Unlike the case of MYCIN, in which those assisting in its development were able to estimate certainty with impressive precision (a certainty factor of 0.525) (Buchanan and Shortliffe, 1985), the domain experts for LBP found it difficult to assess or estimate the numerical probability of individual rules, in spite of the different methods that the knowledge engineers had attempted to apply. Previous human probability judgment research suggests that individuals might feel more comfortable rendering verbal probability expressions (for example, “maybe” or “possibly”) than numerical probability estimates (van DerGaag and others, 2002). To physical therapists, certainty values may be more natural, meaningful, and comprehensible when expressed by using a verbal probability scale than when expressed numerically. Based on results of the knowledge elicitation process, certainty was thus defined at three verbal levels: “impossible,” “neutral,” and “likely.” Here is a sample rule:

If observation shows patient age is between 30 and 40

Then recommend diagnosis “Chronic Disc Degenerative” with certainty level of “likely”

TABLE 10.2. QUESTION ITEMS INCLUDED IN DIAGNOSIS SUPPORT SYSTEM

As well, a therapist often excludes a particular diagnosis on observing evidence that suggests its impossibility, regardless of other evidence supporting that diagnosis. In regard to decision modeling, this characteristic is unique and has not been examined previously. An intuitive schema for addressing this uncertainty characteristic was therefore advanced, based on the verbal probability estimation method just described. Structurally, knowledge in the format of an “if-then” production rule was implemented—that is, “if response, then diagnosis,” with a certainty level based on the underlying verbal probability estimation.
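The “if response, then diagnosis” structure lends itself to a compact data representation. The following sketch is only one possible encoding: the class and field names are hypothetical, the age range is stored as a simple response label, and nothing here reflects the actual relational schema used to store the rules.

```python
from dataclasses import dataclass
from enum import Enum


class Certainty(Enum):
    """The three verbal certainty levels elicited from the experts."""
    IMPOSSIBLE = "impossible"
    NEUTRAL = "neutral"
    LIKELY = "likely"


@dataclass(frozen=True)
class Rule:
    question: str         # left-hand side: the question item
    response: str         # left-hand side: the response that triggers the rule
    diagnosis: str        # right-hand side: the diagnosis affected
    certainty: Certainty  # verbal certainty level attached to the rule

    def fires(self, answers: dict) -> bool:
        """Return True when the user's answer matches this rule's response."""
        return answers.get(self.question) == self.response


# The sample rule from the text, with the age range encoded as a label.
sample = Rule("age", "30-40", "Chronic Disc Degenerative", Certainty.LIKELY)

print(sample.fires({"age": "30-40"}), sample.certainty.value)  # prints: True likely
```

Because each rule carries a verbal level rather than a number, an expert who adds or revises a rule only has to choose among three labels.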

Knowledge Inference

The knowledge inference in the e-DSS was based on a voting scheme in which a rule with a probability of “likely” generates one vote toward the diagnosis or diagnoses it recommends. A rule with a probability of “neutral” has no effect on the voting, whereas a rule with an “impossible” verbal probability invalidates all votes that its recommended diagnoses receive from other rules. In other words, an exclusive negation or elimination effect completely removes diagnoses from consideration. According to this scheme, the votes received by each diagnosis are summed; those receiving the most votes or exceeding a prespecified threshold are then recommended to the user.

Theoretically, this scheme is based on consensus-based modeling and differs from the probabilistic summation approach used in previous research cases (Buchanan and Shortliffe, 1985). The central principle of a consensus-based model is the theoretical adequacy of a consensus position established by the voting of multiple experts or participants. We represent the LBP diagnosis using a consensus model primarily because each response independently affects the likelihood of a diagnosis instead of being simply an additive factor, as in the probabilistic summation approach. For details about the consensus model and a formal mathematical proof of our inference algorithm, please refer to Appendix A.

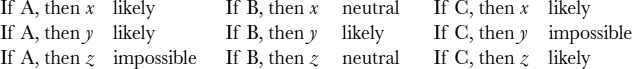

As an illustration, consider a patient with three symptoms, A, B, and C. Let's assume that there are three possible diagnoses, x, y, and z. (The actual number of possible diagnoses is often much larger.) We have the following set of rules:

When all evidence is combined, diagnosis x receives two votes. Although diagnosis y also receives two votes, symptom C makes it an impossible choice. Similarly, symptom A makes diagnosis z an unlikely outcome. Therefore, in this case, diagnosis x wins out.
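The voting scheme in this illustration can be sketched as follows. The rule set in the code is a hypothetical one, constructed only so that it reproduces the outcome described in the text, and it assumes that symptom A eliminates diagnosis z outright. The function name and the tuple layout are likewise assumptions, and this is not the Java implementation of the inference engine.

```python
from collections import Counter

# Hypothetical rules: (symptom, diagnosis, verbal certainty level).
RULES = [
    ("A", "x", "likely"), ("A", "y", "likely"), ("A", "z", "impossible"),
    ("B", "x", "likely"), ("B", "y", "likely"),
    ("C", "y", "impossible"), ("C", "z", "likely"),
]


def infer(rules, observed, threshold=None):
    """Tally votes for each diagnosis, then drop any diagnosis ruled out."""
    votes = Counter()
    eliminated = set()
    for symptom, diagnosis, certainty in rules:
        if symptom not in observed:
            continue
        if certainty == "likely":
            votes[diagnosis] += 1      # one vote toward the diagnosis
        elif certainty == "impossible":
            eliminated.add(diagnosis)  # exclusive negation or elimination
        # "neutral" rules have no effect on the voting
    surviving = {d: v for d, v in votes.items() if d not in eliminated}
    if not surviving:
        return []
    if threshold is None:
        best = max(surviving.values())
        return sorted(d for d, v in surviving.items() if v == best)
    return sorted(d for d, v in surviving.items() if v > threshold)


print(infer(RULES, {"A", "B", "C"}))  # prints ['x']
```

Elimination is applied after the votes are tallied, so a single “impossible” rule overrides any number of “likely” votes, regardless of the order in which the rules are evaluated.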

Two important prerequisites have to be met for the above algorithm to work. First, each of the rules must have a high level of credibility (the rule should have higher than a 50 percent chance of being true). Second, the prior probabilities of the diagnoses should be similar, if not the same; the accuracy of the algorithm depends on this assumption. That is, all diagnoses should have similar incidence rates in the general population. Although this is unlikely in the real world, the algorithm can easily be modified to adapt to more complicated scenarios in which each diagnosis has a different incidence rate and the rates are known. This further permits complex simulation of the system to be performed, allowing us to study the behavior of complex system variations and generate theoretical principles for related scenarios.

A Framework for Evaluation of an e-Diagnosis Support System

As previously noted, the e-DSS evaluations were designed to include knowledge base verification, system validation, and clinical efficacy and to use real-world test cases. Five therapists, all clinically active and with at least ten years of experience in LBP diagnosis and treatment, participated. Three of them practice in a nationwide pain clinic in the United States; the other two practice in Europe. The inclusion of therapists who practice in different regions extends the generality of the evaluation results.

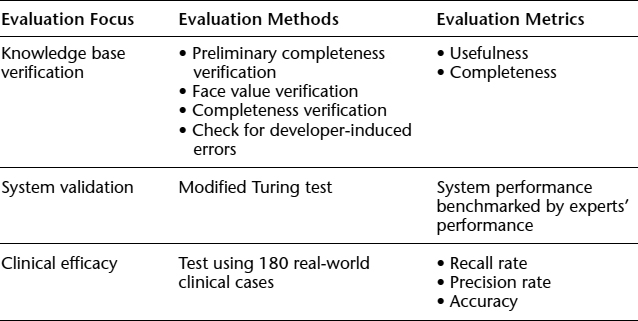

Table 10.3 summarizes the evaluation framework, showing the method of evaluation and metrics for each area of evaluation. The design and key analysis results of each evaluation are discussed in the sections that follow.

TABLE 10.3. EVALUATION FRAMEWORK FOR AN E-DIAGNOSIS SUPPORT SYSTEM

Knowledge Base Verification

Verification refers to ensuring a system's compliance with its defined requirements and specifications (Kak and others, 1990). For knowledge base verification, the focus was on validating each stored rule, including its left- and right-hand sides and their pairing or mapping using the relevant medical foundation. The criteria included consistency and completeness. More simply, the aim was to detect and correct any syntactic or semantic errors that would adversely affect the knowledge base's consistency or completeness.

Using a questionnaire, each therapist verified the usefulness and completeness of the rules by examining each rule's left-hand side (that is, patient responses) and right-hand side (that is, diagnoses). Based on the collective assessment results, the knowledge base was verified as satisfactorily complete, containing the decision variables and diagnoses essential to the physical therapists' clinical services. The evaluators made few suggestions to include an additional diagnosis, remove an existing diagnosis, or allow additional responses to a decision variable. As a group, the therapists discussed the few suggestions face to face and recommended against any changes.

The therapists were then asked individually to verify each rule at face value, with particular emphasis on the mapping or pairing of its left- and right-hand sides. When examining a rule, a therapist reported his or her assessment of its left- and right-hand side pairing scores, together with a confidence level of the assessment. Once individual assessments were completed, the therapists reviewed the assessments collaboratively in panel meetings. A revised certainty level was suggested for 8 of the 140 rules stored in the knowledge base, using the confidence value suggested by the group. Overall, the face-value verification was satisfactory, suggesting that the system embraces verified or verifiable knowledge for LBP diagnosis.

The completeness of the e-DSS knowledge base was also assessed by examining whether the system was able to reach some diagnosis for all clinical cases tested. All of the 180 real-world clinical cases received at least one diagnosis recommendation from the e-DSS. While not exhaustive, our test cases were sizable in number and exhibited considerable diversity.

In addition, potential developer-induced errors in the rules implemented in the back-end database were examined. Judged by our knowledge representation scheme and the integrity constraints enforced by the relational data model, the knowledge base appeared to have no serious syntactic or semantic problems stemming from system development errors.

System Validation

Validation verifies that the system performs at a level that is acceptable to domain experts or users (Biswas, Abramczyk, and Oliff, 1987; Kak et al., 1990). The e-DSS was validated through a modified Turing test that involved all five therapists and twenty clinical cases. Commonly used to validate knowledge-based systems, a Turing test (Walczak, Pofahl, and Scorpio, 2003) can generate artifacts of a system, whereby the system's intelligence or performance is assessed. In spite of the concerns raised by some researchers, a Turing test is generally considered to be a valid method and particularly effective for evaluating a system's performance with respect to domain experts' expectations (Gordon and Shortliffe, 1985). Accordingly, a modified Turing test was designed and used to validate the e-DSS, with a particular focus on system efficacy.

The validation approach used twenty previously diagnosed clinical cases. Several considerations were crucial to case selection:

- A minimum of one case for each diagnosis supported by the e-DSS was included for validation.

- The selection aimed at preserving the case distribution in clinical settings; in particular, the diagnoses of test cases had to exhibit a distribution comparable to that in the underlying case population. Accordingly, based on a sample population of 180 clinical cases, the diagnosis distribution was analyzed and 20 test cases were selected. This fairly large sample population was randomly collected from a national clinic so that it would resemble, to some degree, the underlying diagnosis space.

- The choice of how many test cases to include was based on a trade-off between data requirements for the intended analysis and the risk of overwhelming the five participating therapists.
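The first two selection criteria can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that each case is reduced to a single primary diagnosis label; the function name `select_test_cases` and the quota rule are hypothetical and are not the procedure the authors used. Each diagnosis first receives one slot, and the remaining slots go to the diagnoses that are furthest below their proportional share of the sample population.

```python
import random
from collections import defaultdict


def select_test_cases(cases, k, seed=0):
    """Pick k case ids: one per diagnosis first, the rest by proportional share.

    `cases` is a list of (case_id, primary_diagnosis) pairs. This structure is
    a simplifying assumption; real cases may carry several diagnoses.
    """
    by_dx = defaultdict(list)
    for case_id, dx in cases:
        by_dx[dx].append(case_id)
    if not len(by_dx) <= k <= len(cases):
        raise ValueError("k must lie between the number of diagnoses and the number of cases")

    # Criterion 1: at least one test case for every supported diagnosis.
    quota = {dx: 1 for dx in by_dx}
    total = len(cases)
    # Criterion 2: hand out the remaining slots so the test set tracks the
    # diagnosis distribution of the sample population as closely as possible.
    for _ in range(k - len(by_dx)):
        open_dx = [dx for dx in by_dx if quota[dx] < len(by_dx[dx])]
        best = max(open_dx, key=lambda dx: k * len(by_dx[dx]) / total - quota[dx])
        quota[best] += 1

    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    selected = []
    for dx, ids in by_dx.items():
        selected.extend(rng.sample(ids, quota[dx]))
    return selected, quota
```

The third criterion, the trade-off between analytic needs and evaluator workload, is a judgment call that fixes `k` before the function is called.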

First, using the modified Turing test procedures described next, each expert's competence was estimated. It should be noted that not all experts would be equally competent for a particular case; similarly, an expert would probably not be equally competent across all clinical cases. Hence, the unit of analysis was an expert's diagnosis of a test case.

The modified Turing test used consisted of four phases. In the case-solving phase, each expert (along with the e-DSS) diagnosed each case assigned. In the second phase, the identity of the expert who rendered each diagnosis was removed. This allowed the other experts to conduct a blind evaluation of each diagnosis recorded for each case. In the third phase, each expert rated each diagnosis. Using a rating r and a certainty factor c, each expert gave an explicit assessment of each presented diagnosis, together with his or her self-reported confidence in the assessment made. Each r and its certainty factor c(r) were measured by using a seven-point Likert scale, with 1 being “not certain or confident at all” and 7 being “very confident or certain.” Thus, the experts assessed one another as well as the e-DSS. In the final phase, the e-DSS was evaluated by producing a validity measure for each test case. This validity measure was calculated as the average rating of the system's diagnoses by the human experts, taking into account each expert's competence and subjective certainty assessment. The global validity of the system was then rated by averaging its validity score over the test cases under examination. In short, if the system “fools” the experts into thinking that it is a real expert (indicated by comparing the validity score it receives with those received by real experts), we can conclude that the system behaves like an expert, hence validating its performance. For mathematical details about the Turing test, please refer to Appendix B.

Evaluation of Clinical Efficacy

The e-DSS's clinical efficacy (that is, its utility in relation to domain experts' expectations) was further evaluated, using 180 real-world cases randomly collected from a nationwide pain clinic in the United States. Two highly experienced therapists examined each case before its inclusion in the clinical efficacy evaluation. Only one of the two therapists had actively participated in the development of the e-DSS.

Three measurements were used for this evaluation:

- Recall rate: the portion of an expert's diagnoses that are correctly recommended by the e-DSS. As defined here, with a focus on false negatives, recall rate measures the e-DSS's power.

- Precision rate: a system's efficiency, mainly anchored in false positives. In this case study, precision rate is defined as the portion of the diagnoses recommended by the e-DSS that are actually made by an expert.

- Accuracy: a measurement based on primary diagnosis. Results from previous field observations and interviews with numerous therapists suggested that a therapist often first pursued the primary diagnosis—that is, the one in which he or she was most confident. Hence, primary diagnosis is relatively more important clinically than other diagnoses. A 100 percent accuracy is therefore assigned when the primary diagnosis of an expert is included in the system's recommendation, and 0 percent otherwise.
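The three measurements can be computed per case as in the following minimal Python sketch. The function name `evaluate_case` and the use of sets of diagnosis category numbers are illustrative assumptions, not part of the e-DSS itself.

```python
def evaluate_case(expert_dx, primary_dx, system_dx):
    """Return (recall, precision, accuracy) for one test case.

    expert_dx  -- diagnoses in the experts' consolidated answer for the case
    primary_dx -- the expert's primary (most confident) diagnosis
    system_dx  -- diagnoses recommended by the system
    """
    expert, system = set(expert_dx), set(system_dx)
    hits = expert & system
    # Recall: share of the expert's diagnoses that the system also recommended.
    recall = len(hits) / len(expert) if expert else 0.0
    # Precision: share of the system's recommendations that the expert made.
    precision = len(hits) / len(system) if system else 0.0
    # Accuracy: all-or-nothing on the primary diagnosis.
    accuracy = 1.0 if primary_dx in system else 0.0
    return recall, precision, accuracy


def average_scores(results):
    """Average each measurement over a list of per-case result tuples."""
    n = len(results)
    return tuple(sum(r[i] for r in results) / n for i in range(3))
```

For example, if the expert's diagnoses are categories 3 and 4 with 3 as primary, and the system recommends 3, 7, and 9, the case scores 0.5 in recall, one third in precision, and 1.0 in accuracy.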

Each therapist individually reviewed the diagnoses documented for each test case and rendered his or her own diagnoses if they differed from those documented. Together, the experts reviewed, discussed, and consolidated their individual assessments and diagnoses to produce a “gold standard” diagnosis for each test case. The resulting diagnoses were then used as the “correct diagnoses,” which in turn were used to evaluate the e-DSS's clinical efficacy. The overall performance of the e-DSS, as shown in Table 10.4, is similar to that of the five experts.

TABLE 10.4. COMPARATIVE ANALYSIS OF PERFORMANCE: E-DIAGNOSIS SUPPORT SYSTEM VERSUS HUMAN EXPERTS

Table 10.5 shows that the e-DSS averaged 75.82 percent in recall, 64.56 percent in precision, and 73.08 percent in accuracy. Overall, the measures suggested that the e-DSS exhibited reasonably satisfactory efficacy, particularly when compared with the disappointingly low likelihood of LBP patients' receiving adequate diagnoses (Jackson, Llewelyn-Phillips, and Klaber-Moffett, 1996).

The analysis also revealed a number of interesting observations. For example, the test set included one case for acute facet joint–related pain (diagnosis category 6) and one case for gynecological pain (diagnosis category 13), reflecting the infrequent occurrence of such cases in clinical settings. That both cases were diagnostically challenging was suggested by the system's poor performance (0.00) in each category. This may indicate a need to further evaluate and enhance our diagnostic knowledge of these LBP problems.

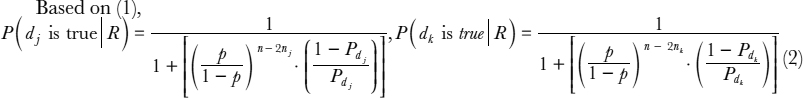

The system's performance in the acute disease categories (for example, categories 1 and 2) also suggested a need for further evaluation of the assumption that all LBP diagnosis categories have comparable or identical clinical occurrence rates. Without categories 1 and 2, our system averaged 82.67 percent in recall, 69.33 percent in precision, and 77.33 percent in accuracy. Based on equation (2) in Appendix A, our algorithm has a tendency to favor diagnoses with high prior probabilities. That is, a diagnosis with high prior probability has a higher posterior probability for being true, even if it receives the same number of votes as other diagnoses.

TABLE 10.5. SUMMARY OF CLINICAL EFFICACY EVALUATION RESULTS FOR E-DIAGNOSIS SUPPORT SYSTEM

A comparative analysis of the system's performance on chronic (for example, categories 3, 4, and 7) and acute (for example, categories 1 and 2) LBP problems suggests that chronic problems might have had a higher prior probability than acute ones, thus biasing our system. Findings from an interview with a senior therapist supported this speculation. The differential performance might also be partially attributed to potential biases in expert selection; for example, our selected therapists were more experienced in diagnosing chronic LBP problems than acute LBP problems. This suggests that the prior probability (p) might not be constant across different diagnosis categories. A refined knowledge representation and reasoning approach is needed to examine these concerns further.

Conclusion

Lower back pain is a common physiological and medical condition among adults, often depriving them of the ability to pursue routine activities and normal lifestyles. Recognizing the scarcity of key diagnostic knowledge and the frequency with which patients do not receive adequate care for LBP problems, we report on the development and clinical evaluation of an e-diagnosis support system that supports clinicians in effective LBP diagnosis.

Previous work on diagnostic support systems has uncovered important challenges. For example, different methods have been proposed for modeling, representing, or processing clinical reasoning under uncertainty, including applied machine learning algorithms (Bounds, Lloyd, Mathew, and Waddell, 1988; Graham and Espinosa, 1989; Vaughn and others, 1999), certainty factors (Buchanan and Shortliffe, 1975, 1985), Bayesian theory (Andersen, Olesen, Jensen, and Jensen, 1989; Przytula and Thompson, 2000), belief functions (Dempster, 1967; Shafer, 1976), and fuzzy logic (Zadeh, 1983). By and large, these methods require significant probability estimations by domain experts and thus are likely to create bottlenecks in an already time-consuming and tedious knowledge acquisition process. Another major challenge to designing and implementing diagnosis support systems in clinical health care (Suojanen, Andreassen, and Olesen, 2001) is the fact that clinical diagnosis may simultaneously involve multiple decision outcomes. While most prior cases addressed tasks involving single decision outcomes (Bounds, Lloyd, Mathew, and Waddell, 1988; Graham and Espinosa, 1989; Vaughn and others, 1999), the LBP problems presented here involve simultaneous multiple preliminary diagnoses. Findings from interviews with therapists and observations of their practice suggested that a therapist is likely to generate one to three preliminary diagnoses when examining an LBP patient. As noted, these preliminary diagnoses vary in certainty in ways that require proper representation and reasoning. A final challenge is that a clinically viable system needs thorough evaluation in order to be widely accepted and used by targeted clinicians.

Motivated by the need for a system-based approach to support clinicians' diagnoses of LBP problems, we report on a Web-based e-DSS that addresses the challenging characteristics of LBP diagnosis. The e-DSS uses an intuitive and easy-to-understand framework for representing and reasoning about the uncertainty embedded in simultaneous multiple decision outcomes. Evaluations of the e-DSS addressed knowledge base verification, system validation, and clinical efficacy. As noted, analysis of these evaluations using multiple performance metrics revealed that the e-DSS does embrace important and verifiable diagnostic knowledge, provides a performance level similar to that of domain experts, and exhibits adequate clinical efficacy.

Despite the initial success reported for this system, several questions have emerged that require continued attention and serious investigation. First, how can the work presented here be extended to enhance our understanding of other similarly rich and potentially difficult e-health cases? Examples include the electronic diagnosis, prevention, and treatment management of other ailments of a physical, cognitive, mental, psychological, or chronic nature, such as carpal tunnel syndrome, arthritis, multiple sclerosis, migraines, and depression.

Second, how might the intelligence of the e-DSS be enhanced? For example, can the system be designed to provide reasoning capability, such as the ability to identify the reasons behind the observed differential e-DSS effectiveness across LBP diagnosis categories? By understanding the source of inconsistent performance, we can further explore the assumption adopted (that is, identical prior probability of all diagnosis categories) and at the same time shed light on plausible boundaries of the proposed framework for representing and reasoning about uncertainty. A refined framework may then be analyzed and developed.

Finally, knowledge replenishment may be another area worth exploring. Although it allows the knowledge base to be updated by individual clinicians, the current e-DSS offers limited support for systematic knowledge replenishment. Such support is essential because clinicians' diagnostic knowledge is likely to evolve dynamically over time. In light of this, an automated machine learning or data mining approach may be able to adapt to important changes in knowledge instead of experts' having to repeat the tedious, expensive, and time-consuming knowledge engineering process. Such automated approaches would allow desirable integration or fusion of knowledge extracted or discovered through different methods, improving the quality of the knowledge base. For instance, the knowledge revealed by such data mining techniques could be used as anchors (data used as a starting point from which human experts generate the required estimates and projections) to complement the knowledge of human experts. Hence, the use of a Bayesian network to support knowledge integration is an appealing possibility. An investigation of the use of a Bayesian network to combine the diagnostic knowledge from different sources has been initiated, using the existing rule base, inference engine, case repository, and knowledge update module to support a hybrid DSS (Lin, Sheng, Hu, and Pirtle, 2002).

Chapter Questions

- Would you argue that the SOAP scheme recommended for lower back pain diagnosis could be used for other types of chronic ailments? If so, give instances and discuss how this scheme would assist in guiding the therapists. How can e-technology be applied in automating such a scheme?

- What are key features in the design of the e-DSS for LBP as discussed by the authors? Can you think of any other factors that may be important to consider? If you were asked to participate in the e-DSS design, what other concerns would you feel should be addressed?

- What is the significance of evaluation of the e-DSS? What type of feedback is important, and how do you think the feedback assists in the evaluation? Please discuss.

- In this chapter, we learned that knowledge acquisition and representation play vital roles in the design of a Web-based e-DSS for LBP. How do these considerations differ in the design of traditional health care systems?

- Imagine that this e-DSS for lower back pain is available to an occupational therapist in your workplace. How might people who may have LBP problems benefit from such a system? Please discuss its socioeconomic value.

Appendix A: Formal Proof of a Consensus Model–Based Inference Algorithm

Using Bayes' theory, we formally prove the consensus model–based inference algorithm presented in this chapter:

Assume n independent observations O1, …, On such that, for each observation Oi, there exists a rule ri in the format “if Oi, then x” with a verbal probability p(ri) ∈ {impossible, neutral, likely}.

Let pi be the probability that rule ri is correct (1 ≤ i ≤ n) and Px be the prior probability that diagnosis x is true.

Also, consider the following additional assumptions:

- If p(ri) = impossible, P(x is true | R) = 0, where R is any set of observations that contains Oi. That is, the presence or absence of certain evidence can rule out a diagnosis completely.

- pi = pj = p for all 1 ≤ i, j ≤ n; that is, the prior probability for each rule being correct is identical. This assumption is reasonable, in part because of the comprehensive and nondiscriminant nature of therapists' practices in diagnosing and treating patients with various LBP problems. In this context, the decision rules extracted from their diagnostic knowledge should have a comparable baseline of correctness.

Assumption 1 implies that x cannot be true when there exists any observation on the left-hand side of a rule with a certainty level of “impossible.” Hence, we consider only cases associated with rules with certainty levels of “neutral” or “likely.” Assume there are m rules with a certainty level of “likely” (m ≤ n) and all other rules are “neutral.” According to Bayes' Theorem, we then can derive the following proposition:

Proposition 1

When p > 0.5, P(x is true | m likely rules) is monotonically increasing in m; that is, the more “likely” rules there are, the higher the likelihood that x is true.

As shown, the Bayesian probability under discussion monotonically increases in m when p > 0.5.

Given the domain experts' extensive clinical experience, the assumption that p > 0.5 is reasonable. For single-diagnosis problems, our voting scheme suggests an increasing likelihood of accepting a diagnosis when it receives more “likely” votes.
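One Bayesian form that is consistent with Proposition 1 can be written out as follows. It is a sketch under added assumptions, not a reproduction of the chapter's own equations: each “likely” rule is treated as an independent vote that occurs with probability p when x is true and with probability 1 − p when x is false, and “neutral” rules are treated as carrying no evidence.

```latex
\[
P(x \mid m) \;=\; \frac{P_x\, p^{m}}{P_x\, p^{m} + (1 - P_x)\,(1 - p)^{m}},
\qquad
\frac{P(x \mid m)}{1 - P(x \mid m)} \;=\; \frac{P_x}{1 - P_x}\left(\frac{p}{1 - p}\right)^{m}.
\]
```

Under these assumptions, the posterior odds are the prior odds multiplied by (p / (1 − p)) once per “likely” vote. When p > 0.5 this factor exceeds 1, so the posterior grows with m; the same expression also shows that a larger prior Px yields a larger posterior for the same number of votes.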

To extend this illustrative example to multidiagnosis scenarios, assume that there are l diagnoses d1, …, dl. For each observation Oi, there are l rules rij (1 ≤ i ≤ n, 1 ≤ j ≤ l) in the format “if Oi, then dj” with a verbal probability p(rij) ∈ {impossible, neutral, likely}.

Let pij be the probability that rij is correct, and Pdj be the prior probability that diagnosis dj is correct. Including the two assumptions from the previous illustration and focusing likewise on scenarios involving no rules with a certainty level of “impossible,” we arrive at the following proposition:

Proposition 2

∀ j, k, 1 ≤ j, k ≤ l, for an observation set R, if the following are true:

- p > 0.5

- Pdj = Pdk

- Based on R, dj receives nj votes and dk receives nk votes, where nj > nk,

then P(dj is true |R) > P(dk is true |R)—that is, based on the same set of observations, the diagnoses that receive more votes are more likely to be true.

Proof:

Since ∀ j, k, 1 ≤ j, k ≤ l, Pdj = Pdk, in light of the monotonic characteristic of the function, proposition 2 is proved.

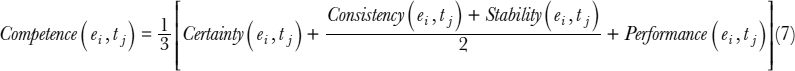
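The behavior described by the two propositions can be checked numerically with a short Python sketch. The posterior used here is an assumed stand-in for the chapter's formula (each “likely” rule is an independent vote that is correct with probability p, and “neutral” rules are ignored); the function names and the default p = 0.7 are hypothetical.

```python
def posterior(prior, p, likely_votes):
    """Posterior that a diagnosis is true after `likely_votes` independent votes.

    Assumed model: each vote is seen with probability p if the diagnosis is
    true and with probability 1 - p if it is false.
    """
    support = prior * p ** likely_votes
    against = (1.0 - prior) * (1.0 - p) ** likely_votes
    return support / (support + against)


def rank_diagnoses(votes, priors, p=0.7, ruled_out=()):
    """Rank diagnoses by posterior; an 'impossible' rule forces the score to 0."""
    scores = {}
    for dx, m in votes.items():
        scores[dx] = 0.0 if dx in ruled_out else posterior(priors[dx], p, m)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

With equal priors, a diagnosis with three votes outranks one with two, as Proposition 2 states. With equal votes, the diagnosis with the larger prior ranks higher, which is the bias toward high-prior diagnoses discussed in the clinical efficacy evaluation.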

Appendix B: Formal Description of the Modified Turing Test

For n experts ei (1 ≤ i ≤ n) and m test cases tj (1 ≤ j ≤ m) on the panel, let Solij represent the solution by expert i for case j. The e-DSS was denoted as expert n + 1 (en+1). The modified Turing test that was used consisted of four phases. In the case-solving phase, each expert (including the e-DSS) diagnosed each of the m cases assigned, resulting in a total of m · (n + 1) diagnoses. In the second phase, the identity of the expert who rendered each diagnosis was removed. This allowed the other experts to conduct a blind evaluation of each diagnosis recorded for each case. In the third phase, each expert rated each diagnosis presented. Using a rating r and a certainty factor c for each rating, each expert gave an explicit assessment of each presented diagnosis, together with his or her self-reported confidence in the assessment made. As a result, an m · (n + 1) rank matrix was produced for each expert ei, in which the entry rijk, also written ri(Solkj), is the rating by ei of the diagnosis Solkj rendered by expert ek for case tj. Each r and its certainty factor c(r) were measured by using a seven-point Likert scale, with 1 being “not certain or confident at all” and 7 being “very confident or certain.” The e-DSS was evaluated in the final phase of the modified Turing test, which produced a validity measure Vsys(tj) for each test case. This validity measure was calculated as the average rating of the system's diagnoses by the human experts, taking into account each expert's competence and subjective certainty assessment. The global validity of the system Vsys was then rated by averaging its validity score over the test cases under examination.

Essentially, sources of individual competence estimation and the specific measurements used in the e-DSS evaluation were as follows:

- Certainty: an expert's certainty while rating other experts' diagnoses. In particular, the certainty of an expert i on case j is denoted Certainty(ei, tj), which is calculated as the average of his or her certainty ratings (c(r)) on all other experts' diagnoses of case j (including the e-DSS's diagnosis):

- Consistency: an expert's confidence in making a diagnosis, signified by the rating an expert specifies for his or her own diagnosis:

- Stability: the certainty of an expert's rating of his or her own solution:

The performance of each expert (including the e-DSS) on a given case is thus measured by the average rating received from other experts (including the e-DSS) weighted by their respective certainty.

Fundamental to our competence estimation are (a) intentional self-reflection based on certainty, (b) unintentional self-reflection based on consistency and stability, and (c) external competence based on performance. If equal weights are assigned for these different sources for competence estimation, then we have the following equation:

Using the metrics presented, the human experts' average rating of the e-DSS's diagnoses on a specific case can then be calculated, weighted by their respective estimated competence and certainty level specified, as:

Thus, the overall e-DSS validity can be estimated by averaging across the validity score for each case.
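The measures described in this appendix can be written out as follows. This is a reconstruction from the prose definitions alone and should be read as a sketch: the equal weighting of the four measures is one reading of “equal weights,” and the sums over raters are assumed to run over the n human experts only.

```latex
\begin{align*}
\mathrm{Certainty}(e_i, t_j) &= \frac{1}{n} \sum_{\substack{k=1 \\ k \neq i}}^{n+1} c\bigl(r_i(Sol_{kj})\bigr) \\
\mathrm{Consistency}(e_i, t_j) &= r_i(Sol_{ij}) \\
\mathrm{Stability}(e_i, t_j) &= c\bigl(r_i(Sol_{ij})\bigr) \\
\mathrm{Performance}(e_i, t_j) &=
  \frac{\sum_{k \neq i} c\bigl(r_k(Sol_{ij})\bigr)\, r_k(Sol_{ij})}
       {\sum_{k \neq i} c\bigl(r_k(Sol_{ij})\bigr)} \\
\mathrm{Competence}(e_i, t_j) &= \tfrac{1}{4}\bigl[\mathrm{Certainty} + \mathrm{Consistency}
  + \mathrm{Stability} + \mathrm{Performance}\bigr](e_i, t_j) \\
V_{sys}(t_j) &=
  \frac{\sum_{i=1}^{n} \mathrm{Competence}(e_i, t_j)\, c\bigl(r_i(Sol_{n+1,j})\bigr)\, r_i(Sol_{n+1,j})}
       {\sum_{i=1}^{n} \mathrm{Competence}(e_i, t_j)\, c\bigl(r_i(Sol_{n+1,j})\bigr)} \\
V_{sys} &= \frac{1}{m} \sum_{j=1}^{m} V_{sys}(t_j)
\end{align*}
```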

In fact, comparative scores rather than actual values were used for rating and confidence, to ease interpretation of these measurements. Also, all of these rating or confidence measurements were normalized—that is, divided by 7 because of the seven-point Likert scale used. As a result, Vsys is inclusively bounded between 0 and 1.

Consistent with Knauf, Gonzalez, and Abel (2002), it is further argued that a single Vsys cannot accurately reflect the system's performance. Use of a single measurement is inadequate for comparing a system's performance with that of human experts. Indeed, deliberation of a system's capability requires careful interpretation and comparison of the system's performance by human experts.

To take human experts' performance into consideration, we modified Knauf, Gonzalez, and Abel's approach by introducing Vexp, which is defined as follows:

Vexp(tj)i reflects expert i's performance on case j as evaluated by his or her peers. Expert i's overall performance can then be calculated as follows:
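A minimal Python sketch of the scoring scheme in this appendix is given below. It is an illustration built from the prose definitions, with several assumptions: ratings are stored in a dictionary keyed by (rater, solver, case), only the n human experts act as raters, the four competence measures are weighted equally, and at least two human experts take part. All function names are hypothetical.

```python
from statistics import mean

SCALE = 7.0  # seven-point Likert scale used for both rating and certainty


def weighted_rating(ratings, solver, case, raters, weights):
    """Mean rating of one solution, weighted by rater weight and certainty."""
    num = den = 0.0
    for i in raters:
        r, c = ratings[(i, solver, case)]  # (rating, certainty), each 1..7
        num += weights[i] * c * r
        den += weights[i] * c
    return num / den / SCALE  # normalized by the scale maximum


def competence(ratings, i, case, n):
    """Equal-weight mean of certainty, consistency, stability, and performance."""
    others = [k for k in range(n + 1) if k != i]  # solvers; index n is the e-DSS
    peers = [k for k in range(n) if k != i]       # human raters other than i
    certainty = mean(ratings[(i, k, case)][1] for k in others) / SCALE
    consistency = ratings[(i, i, case)][0] / SCALE  # rating of own solution
    stability = ratings[(i, i, case)][1] / SCALE    # certainty in that rating
    performance = weighted_rating(ratings, i, case, peers, {k: 1.0 for k in peers})
    return mean([certainty, consistency, stability, performance])


def validity(ratings, solver, n, m):
    """Average per-case validity of `solver` as judged by the other human experts."""
    per_case = []
    for j in range(m):
        raters = [i for i in range(n) if i != solver]
        weights = {i: competence(ratings, i, j, n) for i in raters}
        per_case.append(weighted_rating(ratings, solver, j, raters, weights))
    return mean(per_case)
```

Calling `validity(ratings, n, n, m)` gives the system score Vsys, and `validity(ratings, i, n, m)` gives the corresponding score for human expert i, so the two can be compared on the same scale.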

References

Andersen, S. K., Olesen, K. G., Jensen, F. V., & Jensen, F. (1990). HUGIN: A shell for building Bayesian belief universes for expert systems. In Proceedings of the Eleventh International Joint Conference on Artificial Intelligence (pp. 332–337). San Francisco: Morgan Kaufmann.

Berner, E. S., & Ball, M. J. (1998). Clinical decision support systems: Theory and practice. New York: Springer-Verlag, 1999.

Bharati, P., & Chaudhury, A. (in press). An empirical investigation of decision-making satisfaction in Web-based decision support systems. Decision Support Systems.

Biswas, G., Abramczyk, R., & Oliff, M. (1987). OASES: An expert system for operations analysis—The system for cause analysis. IEEE Transactions on Systems, Man, and Cybernetics, 17(2), 133–145.

Bounds, D. G., Lloyd, P. J., Mathew, B., & Waddell, G. (1988). A multilayer perceptron network for the diagnosis of low back pain. In Proceedings of IEEE International Conference on Neural Networks (Vol. 2, pp. 481–489). San Diego, CA.

Buchanan, B. G., & Shortliffe, E. H. (1975). A model of inexact reasoning in medicine. Mathematical Biosciences, 23, 351–379.

Buchanan, B. G., & Shortliffe, E. H. (1985). Rule-based expert systems: The MYCIN experiments of the Stanford Heuristic Programming Project. Reading, MA: Addison-Wesley.

Cabrero-Canosa, M., Castro-Pereiro, M., Graña-Ramos, M., Hernandez-Pereira, E., Moret-Bonillo, V., Martin-Egaña, M., & Verea-Hernando, H. (2003). An intelligent system for the detection and interpretation of sleep apneas. Expert Systems with Applications, 24(4), 335–477.

Chau, P., & Hu, P.J. (2002). Examining a model for information technology acceptance by individual professionals: An exploratory study. Journal of Management Information Systems, 18(4), 191–229.

Danek, M. S. (n.d.). Causes of low back pain. Retrieved from http://www.back.com/causes.html

Dempster, A. P. (1967). Upper and lower probabilities induced by a multi-valued mapping. Annals of Mathematical Statistics, 38, 325–339.

Engelrecht, R., Rector, A., & Moser, W. (1995). Assessment and evaluation of information technologies. Amsterdam, Netherlands: IOS Press.

Gordon, J., & Shortliffe, E. (1985). A method for managing evidential reasoning in a hierarchical hypothesis space. Artificial Intelligence, 26, 323–357.

Graham, J. H., & Espinosa, A. (1989). Computer assisted analysis of electromyographic data in diagnosis of low back pain. IEEE International Conference on Systems, Man and Cybernetics, 3, 1118–1123.

Heckerman, D. E., Horvitz, E. J., & Nathwani, B. N. (1992). Toward normative expert systems: Part I: The Pathfinder Project. Methods of Information in Medicine, 31, 90–105.

Jackson, D., Llewelyn-Phillips, H., & Klaber-Moffett, J. (1996). Categorization of low back pain patients using an evidence-based approach. Musculoskeletal Management, 2, 39–46.

Kahneman, D., Slovic, P., & Tversky, A. (1982). Judgment under uncertainty: Heuristics and biases. Cambridge, England: Cambridge University Press.

Kak, A. C., Andress, K. M., and others. (1990). Hierarchical evidence accumulation in the PSEIKI system and experiments in model-driven mobile robot navigation. In Uncertainty in Artificial Intelligence (pp. 353–370). New York: Elsevier/North-Holland.

Knauf, R., Gonzalez, A. J., & Abel, T. (2002). A framework for validation of rule-based systems. IEEE Transactions on Systems, Man and Cybernetics, 32(3, Part B), 281–295.

Leaper, D. J., Horrocks, J. C., Staniland, J. R., & deDombal, F. T. (1972). Computer assisted diagnosis of abdominal pain using estimates provided by clinicians. British Medical Journal, 4, 350–354.

Lin, L., Sheng, O.R.L., Hu, P., & Pirtle, M. (2002). Adaptive medical knowledge management: An integrated rule-based and Bayesian network approach. In Proceedings of the Tenth Annual Workshop on Information Technologies and Systems.

Nachemson, A. L. (1985). Advances in low-back pain. Clinical Orthopedics and Related Research, 200, 266–278.

Przytula, K. W., & Thompson, D. (2000). Construction of Bayesian networks for diagnostics. IEEE Aerospace Conference Proceedings, 5, 193–200.

Shafer, G. (1976). A mathematical theory of evidence. Princeton, NJ: Princeton University Press.

Shortliffe, E. H., & Davis, R. (1975). Some considerations for the implementation of knowledge-based expert systems. SIGART Newsletter, 55, 9–12.

Smith, A. E., Nugent, C. D., & McClean, S. I. (2003). Evaluation of inherent performance of intelligent medical decision support systems: Utilizing neural networks as an example. AI in Medicine, 27(1), 1–27.

Suojanen, M., Andreassen, S., & Olesen, K. G. (2001). A method for diagnosing multiple diseases in MUNIN. IEEE Transactions on Biomedical Engineering, 48(5), 522–532.

van der Gaag, L. C., Renooij, S., Witteman, C.L.M., Aleman, B.M.P., & Taal, B. G. (2002). Probabilities for a probabilistic network: A case study in oesophageal cancer. AI in Medicine, 25(2), 123–148.

Vaughn, M. L., Cavill, S. J., Taylor, S. J., Foy, M. A., & Fogg, A.J.B. (1998). Interpretation and knowledge discovery from a MLP network that performs low back pain classification. IEEE Colloquium on Knowledge Discovery and Data Mining, pp. 2/1-2/4.

Vaughn, M. L., Cavill, S. J., Taylor, S. J., Foy, M. A., & Fogg, A.J.B. (1999). Using direct explanations to validate a multi-layer perceptron network that classifies low back pain patients. In Proceedings of the 6th International Conference on Neural Information Processing (Vol. 2, pp. 692–699).

Waddell, G. (1987). A new clinical model for the treatment of low back pain. Spine, 12(7), 632–644.

Walczak, S., Pofahl, W. E., & Scorpio, R. J. (2003). A decision support tool for allocating hospital bed resources and determining required acuity of care. Decision Support Systems, 34(4), 445–456.

Zadeh, L. A. (1983). The role of fuzzy logic in the management of uncertainty in expert systems. Fuzzy Sets and Systems, 11, 199–227.

Teleradiology Case: Present and Future

Yao Y. Shieh, Mason Shieh

This case on teleradiology is included here because the technology often involves the use of e-DSS and e-diagnosis by teleradiologists. The case also expands the discussion of e-medicine and telemedicine, a central theme of the e-health domains explored throughout Part Three of this text.

Telemedicine can be defined in a broad sense as any medicine-related interaction between a user and a provider that is not done face-to-face. The provider can be either a human being or an intelligent entity such as a search engine, an interactive video demonstration program, or a rule-based inference engine. The communication channels do not have to be identical in both directions. For instance, the user can send image data to a provider via satellite, and the provider can respond with a diagnostic report by telephone. Among others, telemedicine covers the following important scenarios:

- E-consultation: A patient (or a generalist) taps into the expertise of a physician (or a specialist) located at a geographically distant site.

- On-line scheduling: An informed e-patient consults Web sites such as CSS Credentialing (www.tese.com) and Certified Doctor (www.certifieddoctor.com) for relevant information, leading to an on-line appointment with a prospective physician.

- Decision support: A patient uses software as an aid in choosing among treatment options. Examples of such software include an interactive video program provided by Duke University Medical Center or the Comprehensive Health Enhancement Support System produced by University of Wisconsin.

- Remote patient monitoring: Health status signals of patients with chronic illnesses are sent to a monitoring center for regular monitoring.

- E-learning: A physician takes on-line continuing medical education courses, or an e-consumer surfs the Internet for on-line health information.

Of the five scenarios, e-consultation receives the most attention and is therefore the focus of this case discussion.

Teleradiology: A Booming Niche in Telemedicine

Despite its great potential, telemedicine, by and large, has had minimal usage. In addition to the cost of technology and the lack of third-party reimbursement, limited usage of telemedicine has been attributed to cultural factors such as a lack of education in the use of telemedicine equipment and a lack of communication between physicians and videoconferencing engineers. However, one telemedicine niche, teleradiology, is an exception; it has enjoyed rapid proliferation in the past decade. Following two decades of research on the display and management of digital medical images, teleradiology is moving ahead of most other e-subspecialties.

Four elements separate teleradiology from other telemedicine services:

- Endorsement of the Digital Imaging and Communications in Medicine (DICOM) standard by the American College of Radiology ensures interoperability among products from different vendors. Open competition made possible by standardization has driven rapid advancement of technologies and provided large cost reductions.

- Teleradiology comes as a free by-product of picture archiving and communications systems (PACS). Many radiology departments have gradually abandoned film-based operation to embrace soft-copy reading because of PACS. As a result, a radiologist can read images anywhere, anytime, as long as he or she has access to a reading station that is connected to a PACS server. Consequently, there is no additional cost in supporting a teleradiology operation. In contrast, nonradiologic telemedicine is very different from routine, daily medical practice and therefore requires an additional investment for videoconferencing equipment as well as additional personnel support.

- Teleradiology is non-interactive by nature. Most telemedicine services require that the physician interact with the patient in real time. Thus, telemedicine demands extra effort to coordinate participants, which can be very frustrating and resource-consuming. In contrast, requests for teleradiology consults can be answered when it is convenient for radiologists.

- Teleradiology enjoys exceptional acceptance as far as reimbursement is concerned. Private insurance companies and government agencies such as Medicare and Medicaid have been reluctant to reimburse for general telemedicine services. In contrast, teleradiology has been fairly uniformly recognized by third parties (Thrall and Boland, 2002).

Teleradiology has come a long way from its initial status as a low-end system to supplement evening and weekend coverage. In the past few years, teleradiology has evolved into a core technology of virtual radiology departments in which radiologists can read images anytime, anywhere within an institution. With the rapid growth of the Internet, teleradiology faces the new challenge of being a potential core technology in a global e-health care infrastructure, readily accessible at an affordable cost to patients and health care providers.

Challenges that teleradiology faces as it progresses toward fulfilling its potential include the following:

- Digitization across the spectrum of modalities

- Evolution into a multisite, multispecialty teleradiology network

- Evolution into cross-disciplinary telemedicine

- Equitable teleradiology service for the underserved

- Telecommunication networks

- Sophisticated data compression

- Reimbursements to promote remote teleradiology

- Patient confidentiality and security

Each of these challenges is discussed in the following sections.

Digitization Across the Spectrum of Modalities

Mammography is the last remaining modality that needs to be converted into digital format to achieve total digitization of radiology. Although a small number of digital mammography products have received clearance from the Food and Drug Administration, digital mammography remains immature. Standard mammography provides higher resolution, which is important in detecting early breast cancer. In addition, digital mammography costs about four to five times more than standard mammography. It will take time for the technology to mature and the cost to drop so that digital mammography can be widely accepted.

Evolution into a Multisite, Multispecialty Teleradiology Network

Teleradiology was first developed in the 1980s in order to supplement evening and weekend coverage. It was meant for casual use that involved relatively small amounts of image and text data, with no long-term image archiving or automatic pre-fetch of previous studies for comparison. Since the advent of PACS, teleradiology operation has become routine within many hospitals, even to the extent of crossing organizational, regional, and national boundaries.

The radiology departments of Texas Tech University Health Sciences Center (TTUHSC) at Lubbock and El Paso are networked by permanent T-1 links to form a multispecialty radiology entity. The two campuses, which are 300 miles apart, benefit from complementing each other's subspecialties (X-ray, MRI, CT scanning, digital imaging) as well as from workload sharing. TTUHSC also provides routine teleradiological interpretation service to Lubbock Cancer Center. The ultimate goal is to achieve a global teleradiology network from which patients, radiologists, health care staff, and researchers in any part of the world can benefit.

Evolution into Cross-Disciplinary Telemedicine

Over the past decade, the technology of teleradiology has matured; it can now provide advanced functions such as work lists related to the patient's images to be analyzed, long-term archiving, and pre-fetching of earlier studies within the jurisdiction of the radiology department. In the modern information era, however, a radiologist also needs relevant clinical information generated outside the radiology department, such as laboratory test results, electrocardiography reports, pharmacy reports, and medical histories, in order to deliver optimal health care. Unfortunately, during the past few decades, clinical data have been fragmented and isolated in the health care departments where they are collected. Consequently, these data often are incompatible and cannot be exchanged efficiently.

Electronic health records (EHRs) enable effective data sharing between departments by creating a centralized repository of patient data. A patient's data, collected from multiple sources, are filtered and translated by an interface engine into a common format before they are deposited into the repository. With this technology, the patient's data can be accessed and viewed from any application program.
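The filter-and-translate role of an interface engine can be pictured with a minimal Python sketch. Everything in it is hypothetical and chosen only for illustration: the message layouts, the field names, the `CommonRecord` type, and the `Repository` class do not come from any real EHR product or from the HL7 standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CommonRecord:
    """Hypothetical common format shared by every department."""
    patient_id: str
    source: str
    kind: str
    value: str


def from_lab(msg: dict) -> CommonRecord:
    # Assumed laboratory layout: pid / test / result / units.
    return CommonRecord(msg["pid"], "laboratory", msg["test"],
                        f'{msg["result"]} {msg["units"]}')


def from_pharmacy(msg: dict) -> CommonRecord:
    # Assumed pharmacy layout: patient / drug.
    return CommonRecord(msg["patient"], "pharmacy", "prescription", msg["drug"])


# One translator per known source; messages from unknown sources are filtered out.
TRANSLATORS = {"laboratory": from_lab, "pharmacy": from_pharmacy}


class Repository:
    """Toy centralized repository keyed by patient identifier."""

    def __init__(self) -> None:
        self._records: dict[str, list[CommonRecord]] = {}

    def deposit(self, source: str, msg: dict) -> bool:
        translator = TRANSLATORS.get(source)
        if translator is None:
            return False  # filtered: no translator for this source
        record = translator(msg)
        self._records.setdefault(record.patient_id, []).append(record)
        return True

    def view(self, patient_id: str) -> list[CommonRecord]:
        # Any application can read the same translated records.
        return list(self._records.get(patient_id, []))


repo = Repository()
repo.deposit("laboratory", {"pid": "P1", "test": "glucose", "result": 5.4, "units": "mmol/L"})
repo.deposit("pharmacy", {"patient": "P1", "drug": "ibuprofen"})
for record in repo.view("P1"):
    print(record)
```

The point of the design is that each department keeps its own message layout, and only the translator for that department needs to know it.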

An even more ambitious concept called a virtual medical record (VMR) has also been proposed. A VMR would assemble a patient's medical record from various sources without drawing from a centralized repository, thus enabling collection of patient medical information that spans institutional, regional, and even national boundaries. However, VMRs require that all participating systems adopt a common methodology for linking various databases. This is the next step that TTUHSC is considering.

Recently, the Integrating the Healthcare Enterprise (IHE) initiative, sponsored by the Radiological Society of North America and the Healthcare Information and Management Systems Society, has been promoting the use of established standards such as DICOM and Health Level Seven (HL7) in order to support optimal patient care. Encouraged by their initial success in integrating hospital information systems (HIS), radiology information systems (RIS), and PACS in support of optimal radiology operations, IHE sponsors are now trying to extend this initiative to other medical fields.

Equitable Teleradiology Service for the Underserved

Since the late 1970s, the World Health Organization has promoted the concept of equitable primary health care: provision of essential health care, using practical and scientifically and socially sound methods and technologies, to those who need it, at an affordable price. Although teleradiology has flourished in the past few years, most of its growth has been confined to metropolitan areas. Little progress has been made in rural areas, where teleradiology service arguably is needed the most.

Radiology service in remote or rural areas often suffers from the problems of out-of-date radiology equipment, inadequately trained technologists, and lack of access to modern telecommunication infrastructures. Due to the low volume of radiographic examinations at any one site, it is difficult for a typical remote clinic to bear the financial burden of maintaining modern radiographic equipment. A more practical approach is sharing of costs by multiple clinics in the same general region.

Cost sharing can be done either with mobile modality equipment or with a hub-spokes topology. A mobile modality truck can travel from one town to another on a round-robin basis. The TTUHSC's mobile mammography service in the West Texas region provides a working example. Other popular mobile modalities include computed tomography, magnetic resonance, and positron emission tomography. Each clinic would contribute in proportion to the image volume it generates. A recent study showed that hospitals must have an annual volume of 1,500 to 2,000 magnetic resonance procedures to hold their cost per procedure to that of a mobile company (Fratt, 2002).
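The proportional contribution rule can be made concrete with a short Python sketch. The clinic names, the image volumes, and the annual cost figure are invented for illustration only; the source gives no such numbers.

```python
def cost_shares(total_cost: float, volumes: dict[str, int]) -> dict[str, float]:
    """Split total_cost among clinics in proportion to their image volumes."""
    total_volume = sum(volumes.values())
    if total_volume <= 0:
        raise ValueError("at least one clinic must generate images")
    return {clinic: total_cost * volume / total_volume
            for clinic, volume in volumes.items()}


# Hypothetical annual procedure counts for three clinics sharing one mobile unit.
volumes = {"Clinic A": 600, "Clinic B": 900, "Clinic C": 500}
shares = cost_shares(400_000.0, volumes)
for clinic, share in shares.items():
    print(f"{clinic}: {share:,.0f}")
```

In this made-up case the three clinics together reach 2,000 procedures a year, so Clinic B, with 45 percent of the volume, carries 45 percent of the cost.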

A hub-spokes topology stations modality equipment in a specific clinic, preferably one located in the geographic center of the region. This clinic is the hub, and the other clinics are the spokes. Instead of traveling a long distance to a metropolitan clinic, patients in the region travel a shorter distance to this hub to have radiological procedures done. Presumably, the hub clinic would contribute more financially than other clinics because of the special convenience it enjoys.

Between 1996 and 1998, the TTUHSC Radiology Department maintained an innovative teleradiology service in a triangular rural area in West Texas surrounding three rural hospitals, none of which had a resident radiologist. A radiologist recruited from that region hopped from one hospital to the next. While he was working at one hospital, images generated at the other two hospitals could be sent to him to read. This arrangement gave the radiologist the opportunity to perform special procedures, as well as to build good personal relationships with local referring physicians.

Traditionally, remote clinics have trouble retaining skilled technologists because the low revenues generated by these clinics often cannot support technologists' high salaries. Combining the volume of procedures from several clinics, however, can generate sufficient revenues to recruit and retain registered technologists. Continuing education to keep technologists current on procedures and technology can be accomplished by allowing technologists to take on-line courses instead of having to travel long distances for training.

Telecommunication Networks

Extensive high-bandwidth telecommunication networks have been built worldwide, but populations in remote areas still have limited or no access to this type of infrastructure. Broadband telecommunication services such as digital subscriber lines (DSL) and integrated services digital networks (ISDN) are available only in select urban areas. Generally speaking, remote areas are restricted to network service whose bandwidth is capped by regular telephone lines. Yet the populations in these areas, where factors such as the high cost of installing fiber-optic cable keep bandwidth low, are the ones who need teleradiology service the most. Thus, in teleradiology, the notion of remoteness has more to do with affordable access to advanced network infrastructure than with geographic distance. For instance, a patient in Los Angeles can tap into the expertise of the M. D. Anderson Cancer Center in Houston through teleradiology much more easily than a patient in rural West Texas can, even though the patient in Los Angeles is several times farther away. The adoption of teleradiology in remote and rural primary health care settings requires equitable access to high-bandwidth network infrastructure, and the lack of such access is a limiting factor in the growth of the field.
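A rough calculation shows how strongly link bandwidth shapes access. The Python sketch below estimates the time to send one image over a telephone-line modem and over a T-1 link. The image dimensions (2,048 by 2,048 pixels at 2 bytes per pixel) are an assumed example, the 56 kbit/s modem rate is a nominal figure, and protocol overhead is ignored, so the results are order-of-magnitude estimates only.

```python
T1_BPS = 1_544_000   # nominal T-1 line rate, bits per second
MODEM_BPS = 56_000   # nominal dial-up modem rate, bits per second


def study_size_bits(width: int, height: int, bytes_per_pixel: int, images: int = 1) -> int:
    """Uncompressed size of a study in bits."""
    return width * height * bytes_per_pixel * 8 * images


def transfer_seconds(size_bits: float, link_bps: float, compression_ratio: float = 1.0) -> float:
    """Idealized transfer time: compressed size divided by link rate."""
    if link_bps <= 0 or compression_ratio <= 0:
        raise ValueError("link rate and compression ratio must be positive")
    return size_bits / compression_ratio / link_bps


# Assumed example image; not a figure taken from the source.
bits = study_size_bits(2048, 2048, 2)
for name, bps in (("telephone modem", MODEM_BPS), ("T-1", T1_BPS)):
    print(f"{name}: {transfer_seconds(bits, bps) / 60:.1f} minutes")
```

Under these assumptions a single image takes roughly 20 minutes over the modem and well under a minute over the T-1 link, which is why remoteness is better measured in bandwidth than in miles.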

Satellite communication presents a viable alternative in remote areas where no wired infrastructure exists. According to experts' forecasts, the satellite communication business will expand very rapidly to provide faster and cheaper communication service in the near future (Lamminen, 1999). Wireless technology is becoming popular in delivering e-health care. Some teleradiology functions can be performed using wireless personal digital assistants (PDAs). Wireless telephones with interactive video capability are already available in Japan. Given the rapidly advancing technology, it is expected that patient medical data will be exchanged routinely through wireless handheld devices in the near future.

Sophisticated Data Compression

The transmission of a typical radiographic study incurs significant latency if there is no high-bandwidth communication channel between the originating site and the consulting site. Data compression techniques aimed at reducing transmission time can be classified into two categories: lossless and lossy. Lossless compression yields only a modest compression ratio and therefore is of limited help. Lossy compression can achieve a very high compression ratio, but at the risk of losing diagnostically significant detail. Essentially, lossy compression is a low-pass filter technique that preserves the gross features and eliminates high-frequency (fine) details.
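The difference between the two categories can be illustrated with a small Python sketch on a synthetic image. It is a teaching toy under stated assumptions, not a description of any codec used in clinical teleradiology: the lossless step uses DEFLATE from the standard `zlib` module, and the lossy step uses 2-by-2 block averaging as a crude stand-in for a low-pass filter. The image itself (a smooth ramp with slight noise and one bright single-pixel detail) is invented for the example.

```python
import random
import zlib

SIZE = 64
DETAIL = (21, 33)  # row, column of a one-pixel bright detail (hypothetical)


def make_image(seed: int = 0) -> list[list[int]]:
    """Synthetic 8-bit image: smooth ramp plus slight noise plus one fine detail."""
    rng = random.Random(seed)
    img = [[r + c + rng.randint(0, 3) for c in range(SIZE)] for r in range(SIZE)]
    img[DETAIL[0]][DETAIL[1]] = 255
    return img


def to_bytes(img: list[list[int]]) -> bytes:
    return bytes(p for row in img for p in row)


def block_average(img: list[list[int]], k: int = 2) -> list[list[int]]:
    """Crude low-pass filter: replace each k-by-k block with its mean."""
    n = len(img)
    return [[sum(img[r + i][c + j] for i in range(k) for j in range(k)) // (k * k)
             for c in range(0, n, k)]
            for r in range(0, n, k)]


def upsample(small: list[list[int]], k: int = 2) -> list[list[int]]:
    """Rebuild a full-size image by repeating each averaged value."""
    n = len(small) * k
    return [[small[r // k][c // k] for c in range(n)] for r in range(n)]


image = make_image()
raw = to_bytes(image)

# Lossless: every pixel is recovered exactly after decompression.
packed = zlib.compress(raw, 9)
lossless_ok = zlib.decompress(packed) == raw

# Lossy: four times fewer samples, but the fine detail is smeared out.
small = block_average(image)
restored = upsample(small)

print("lossless round trip exact:", lossless_ok)
print("lossless ratio:", round(len(raw) / len(packed), 2))
print("lossy sample ratio:", len(raw) / (len(small) * len(small[0])))
print("detail before/after lossy:", image[DETAIL[0]][DETAIL[1]], restored[DETAIL[0]][DETAIL[1]])
```

The gross ramp survives the averaging almost unchanged, while the single bright pixel, the kind of fine feature that may matter diagnostically, is strongly attenuated.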