System Design

It is a distinguishing mark of a very good name that the plant should offer its hand to the name and the name should grasp the plant by the hand…

Carolus Linnaeus

Preface to Critica Botanica (1737)

Thirty years ago, when people thought of embedded systems, they primarily thought of custom computing machines built from electronic (discrete and integrated) circuits. In other words, most of the value of the work was in the hardware and hardware design; software was just some glue used to hold the finished product together. Yet with miniaturization of hardware, increases in speed, and increases in volume, commodity hardware has become very inexpensive. So much so that only high-volume products can justify the cost of building elaborate custom hardware. Instead, in many situations, it is best to leverage fast, inexpensive commodity components to provide a semicustom computing platform. In these situations, the final solution relies heavily on software to provide the application-specific behavior. Consequently, software development costs now often dominate the total product development cost. This is true despite the fact that software is so easily reproduced and reused from project to project.

Enter Platform FPGA devices. The embedded systems designer has a blank slate and can implement custom computing hardware almost as easily as software. The trade-off (there is always a trade-off) is that while configuring an FPGA is easy, creating the initial hardware design (from gates up) is not. For this reason, we do not want to have to design every hardware project from the ground up. Rather we want to leverage existing hardware cores and design new cores for reuse. This means we have to know what cores are already available, how these cores are typically used, how they perform, and how to build custom hardware cores that will be (ideally) reusable.

Another consequence of the shift from hardware to software is that system designers also have to understand much more about system software than the typical applications programmer, especially when designing for Platform FPGAs. Rather than simply writing applications that execute in the safe confines of a virtual address space found in a desktop system, we need to understand enough about system software (device drivers and operating system internals) to bridge the application code to our custom hardware. It is also necessary to understand what happens before the operating system begins along with the idea of using one platform (a workstation) to develop code for another platform (the embedded system). With commodity hardware, much of this complexity could be hidden from the average embedded systems programmer because the hardware vendor provided the tools and low-level system software. Not so when the hardware and software are completely programmable.

Therefore the aim of this chapter is to describe system design on a Platform FPGA. First we must discuss the principles of system design. Specifically, we address the metrics of quality design and concepts such as abstraction, cohesion, and reuse. Of course — as with many of the chapters in the text — whole books could be (and have been) written about each of these subsections. Our goal here is to provide enough of an introduction to address Platform FPGA issues. With a better understanding of design principles, we next consider the hardware design aspects, including how to leverage existing resources (base systems, components, libraries, and applications). Finally, the chapter concludes with the software aspects of system design. This includes the concepts of cross-development tools, bootloaders, root filesystem, and operating systems for embedded systems.

After completing the white pages of this chapter, the reader will have an abstract understanding of several central ideas in embedded systems design. Specifically:

• the principles of system design, including how to assemble Platform FPGA systems to be used in embedded system designs,

• the general classes of hardware components available to a Platform FPGA designer (and how to create custom hardware),

• the software resources, conventions, and tools available to develop the software components of an embedded system.

The gray pages of this chapter build on this knowledge with specific, detailed examples of these concepts in practice.

3.1 Principles of System Design

To manage the complexity of designing large computing systems we use a number of concepts. Abstraction, classification, and generalization are used to give meaning to components of a design. Hierarchy, repetition, and rules for enumeration are used to add meaning to assembled components. In this way, humans can develop software programs with tens of millions of lines of code, manage billion-dollar budgets, and develop multimillion gate hardware designs. This section focuses on some of the principles that guide good system design. This is far from an exact science and at times it is very subjective. The best way to read this section is to simply internalize the concepts, observe how they are applied to simple examples, and then consciously make decisions when you are building your own designs. In practice, it is difficult to learn good design skills from a textbook. It comes from experience and learning from others. Our goal here is to try to accelerate that learning by setting the stage and providing a common vocabulary.

3.1.1 Design Quality

To start we ask, what is “good” design? What is “bad” design? In short, system designs can be judged by many criteria and these criteria fall into one of two broad classes. External criteria are characteristics of a design that an end user can observe. For example, a malfunctioning design is often directly observable by the user. If the person turns up the volume on a Digital Video Recorder (DVR) and the volume goes down, then the presence of the design flaw is obvious. However, there are many internal criteria that we also use to judge the quality of a design. These characteristics are inherent in the structure or organization of the design, but not necessarily directly observable by the user. For example, a user may not be able to observe the coding style used in their DVR but others (the manufacturer or a government procurement office) may be very interested in the quality of the design because it impacts the ability to fix and maintain the design. Clearly, some of these qualities can be measured quantitatively but many are very subjective.

A number of concepts and terms have been invented and reinvented in different domains and at different times. Hence, few of the terms that follow have universally accepted definitions. So while one author may use the term verification to casually mean that a system works with a set of test data, another author might call that validation (reserving the term verification for designs that have been rigorously proved to be correct).

The first set of terms is related to a system performing its intended function. The term correctness usually means the system has been (mathematically) shown to meet a formal specification. This can be very time-consuming for the developer but, in some cases, portions of the system must be formally verified (for example, if a mistake in the embedded system would put a human life at risk). Two other terms related to correctness are reliability and resilience. (In some domains, resilience is known as robustness.) The definition of reliability depends on whether it is applied to the hardware or software of the system. Reliable hardware usually means that the system behaves correctly in the presence of physical failures (such as memory corruption due to cosmic radiation). This is accomplished by introducing redundant hardware so that the error can be corrected “on the fly.” Reliable software usually means that the system behaves correctly even though the formal specification is incomplete. For example, a specification may inadvertently omit what to do if a disk drive fills to its capacity. A reliable implementation might stop recording even though the specification does not state it explicitly. Because most complete systems are too large to formally specify every behavior, a reliable system results from designers making correct assumptions throughout the design. The last term, resilience (or robustness), is closely related to reliability. However, whereas reliability focuses on detecting and correcting corruptions, resilience accepts the fact that errors and faults will occur and the design “works around” problems even if it means working in a degraded fashion. In terms of software, one can think of reliability as doing something reasonable even though it wasn’t specified. In contrast, resilience is doing something reasonable even though this should never have happened. Finally, there is dependability. This can be thought of as a spectrum: on one end is protection against natural phenomena and on the other is protection against malicious attacks. A dependable design shields the system from both. To help clarify the difference, consider the following three scenarios.

Correctness Example

As an example of correctness, consider medical devices. Embedded systems are used in numerous medical systems, and it is absolutely critical that the design of such a machine be free of human error. This is usually accomplished by incorporating additional safety interlocks and formally proving the correctness of software-controlled, dangerous components. With the many different interacting components, one would describe all valid states mathematically and then prove that for all possible inputs, the software will never enter an invalid state. (An invalid state being defined as a state that could harm the patient.)

Reliability Example

For most applications, formally describing all valid states can be enormously taxing (and itself error prone), so designers frequently fall back on informal specifications. Informal specifications often unintentionally omit direction for some situations. This can occur, for example, when a product gets used in a perfectly reasonable, but unexpected way. The specification might state that a camera needs to work with USB 1.0, 1.1, or 2.0. Assuming future versions of USB remain backwards compatible, a reliable design would not stop working if it were plugged into a version 3.0 USB hub. Likewise, if a Platform FPGA were intended to fly on a spacecraft, one would expect the system to be more vulnerable to cosmic radiation. A reliable (hardware) design might put Triple Modular Redundancy on critical system hardware and periodically check/update the configuration memory to detect corruption.
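
The voting step at the heart of Triple Modular Redundancy can be sketched in a few lines of C. This is only a software model, assuming three redundant copies of a 32-bit value; the names tmr_vote and tmr_example are hypothetical and do not come from any vendor library. On an FPGA the same majority function would be realized in logic rather than in software.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: bitwise majority vote over three redundant copies.
   Each output bit takes the value held by at least two of the inputs,
   so a corruption confined to a single copy is masked. */
uint32_t tmr_vote(uint32_t a, uint32_t b, uint32_t c)
{
    return (a & b) | (a & c) | (b & c);
}

/* Usage: the second copy has one flipped bit and is outvoted. */
void tmr_example(void)
{
    uint32_t good = 0xCAFEu;
    uint32_t bad  = good ^ 0x10u;   /* simulate a single-bit upset */
    assert(tmr_vote(good, bad, good) == good);
}
```

The vote masks a fault in one copy only; if two copies are corrupted in the same bit position, the wrong value wins, which is one reason the text pairs redundancy with periodic checking of the configuration memory.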

Resilience Example

Resilience and robustness are different from reliability. These become very important in an embedded system because the computing machines interact with the physical world and the physical world is not as orderly as simple discrete zeroes and ones. For example, many sensors change as they age. Actuators are often connected to mechanical machines that can wear out. A resilient design behaves correctly even when something that is not supposed to happen, happens. For example, a thermometer connected to an embedded system may be expected to be in an environment that will never exceed 100° Celsius. If, because the sensor has become uncalibrated, the sensor begins to report temperatures such as 101, 102, or 103, for example, then the system should behave sensibly. A reliable system would try to fix the result coming from the sensor; a resilient system might treat 103 the same as 100 and continue.
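
The thermometer scenario above might look like the following in C. This is a minimal sketch under stated assumptions: the 0 °C lower bound, the integer reading, and the names clamp_reading and resilient_temp_c are all illustrative and not part of the original example, which specifies only the 100 °C upper limit.

```c
#include <assert.h>

#define TEMP_MIN_C 0     /* assumed lower bound; the text gives only the upper one */
#define TEMP_MAX_C 100   /* "will never exceed 100 degrees Celsius" */

/* Limit a raw reading to the range [lo, hi]. */
int clamp_reading(int raw, int lo, int hi)
{
    assert(lo <= hi);    /* programming error if the range is inverted */
    if (raw > hi) return hi;
    if (raw < lo) return lo;
    return raw;
}

/* Resilient behavior: an out-of-range reading is treated as the nearest
   legal value so that the rest of the system can continue operating. */
int resilient_temp_c(int raw_c)
{
    return clamp_reading(raw_c, TEMP_MIN_C, TEMP_MAX_C);
}
```

With this sketch a reading of 103 is handled exactly like 100. A design that also wanted the reliability behavior described above would additionally flag the sensor for recalibration rather than silently saturating.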

In addition to these three system design characteristics, many other terms are used to judge the quality of a system design. For example, verifiability would be the degree to which parts of the system can be formally verified, i.e., proven correct. The term maintainability refers to the ability to fix problems that arose from unspecified behavior, whereas repairability refers to fixing situations where the behavior was specified but the implementation was incorrect. We tend to think of maintainability as the ability to adapt a product over its lifetime (version 1 followed by version 2 and so on). A repairable system design allows for easy bug fixes, especially once the product is in the field (upgrading a version 1 product to version 1.1). Along the lines of maintainability is the idea of evolvability; the subtle difference is whether the changes are due to new features (evolvability) or to changes in existing requirements (maintainability). Of course, portability (a system design that can move to new hardware or software platforms) and interoperability (a system design that works well with other devices) are important measures of design quality as well.

By themselves, these “-ability” terms do not have quantities associated with them. Nonetheless, being conscious of them during the development of an embedded system can be constructive. When the system developer needs to make a decision, these terms provide a common vocabulary to discuss, document, and teach design. For example, given two options, the system designer can record their reasoning — i.e., this option will be more portable and maintainable. This is critical in design reviews and helps teach less experienced designers why a decision was made. Lacking a written justification, a beginner might easily assume the decision was arbitrary. Or worse, without a concise way of describing decisions, the option not taken ends up not even being documented. The less experienced designer might not even realize a decision was made.

3.1.2 Modules and Interfaces

As suggested earlier, we are not going to be able to build very large systems by directly connecting millions of simple components. Rather, we use simple components to build small systems and use those systems to build bigger systems and so on. This is more commonly referred to as the bottom-up approach. We may also want to consider the design from a top level and work our way down, defining each subcomponent in more detail, which is referred to as the top-down approach. Of course, these approaches are widely used in both hardware and software designs. (If one were to substitute subroutine or function for component in the last few sentences, we could just as easily have been discussing the modularity of software designs.) Overall, these two approaches, or design philosophies, will be used throughout Platform FPGA design. The next few subsections will dwell on these in more detail.

First, we will use the general concept of a module to mean any self-contained collection of operations that has (1) a name, (2) an interface (to be defined next), and (3) some functional description. Note that a module could be hardware, software, or even something less concrete. However, for the moment, one can think of it as a subroutine in software or a VHDL component. We will expand on two key aspects, interface and functional description, next.

There are two meanings to the term interface: usually one is talking about a formal, syntactical description, but for system design we have to also consider a broader definition. The term formal interface is the module’s name and an enumeration of its operations, including, for each operation, its inputs (if any), outputs (one or more), and name. It may also include any generic compile-time parameters and type information for the inputs and outputs. The general interface includes the formal interface and any additional protocol or implied communication (through shared, global memory, for example).

Broadly speaking, the formal interface is something that can be inspected mechanically. So if two modules should interact, then their interfaces must be compatible and a compiler or some other automated process can check the modules’ interactions. However, the general interface is not so carefully codified. One may think of it as “how a module is intended to be used,” and this cannot, in general, be checked automatically.

To make these concepts clearer, consider a pseudorandom number generator. (For those not familiar with pseudorandom number generators, there are two main operations. The first “seeds” the sequence by setting the first number in the sequence. The second operation generates the next number in the sequence.) The formal interface might include two operations and a name, drand48:

void srand48(long int seedval);

double drand48(void);

The general interface includes the way to use these operations; for example, the function called srand48 is used in order to seed the pseudorandom number generator and drand48 produces a stream of random numbers. How to interact with the module is part of its general interface, but is not formally expressed in a way that, for example, a compiler could enforce.
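
The gap between the two kinds of interface can be seen in a short C sketch. The compiler checks the argument and return types of srand48 and drand48 (the formal interface), but nothing in the prototypes requires the seed call to come first (part of the general interface). The wrapper name draw_sequence is hypothetical, and the feature-test macro is an assumption about building on a POSIX system.

```c
#define _XOPEN_SOURCE 600   /* asks <stdlib.h> to declare srand48/drand48 on POSIX systems */
#include <assert.h>
#include <stdlib.h>

/* Fill out[0..n-1] with the sequence that follows a given seed.
   The call order below is a convention the caller must know;
   the prototypes alone do not express it. */
void draw_sequence(long seed, double *out, size_t n)
{
    assert(out != NULL);
    srand48(seed);               /* general interface: seed first ... */
    for (size_t i = 0; i < n; i++)
        out[i] = drand48();      /* ... then draw; each value lies in [0.0, 1.0) */
}
```

Calling draw_sequence twice with the same seed yields the same values, which is the behavior a user of the module relies on but which no type checker can confirm.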

Other cases of general interface occur when some of the inputs or outputs of a module are stored somewhere in shared memory. For instance, a direct memory access (DMA) module will have a formal interface that includes starting addresses and lengths, but its general interface also includes the fact that there are blocks of data stored in RAM that will be referenced. To be more explicit, the DMA engine transfers a block of data, but is ignorant of its internal format.

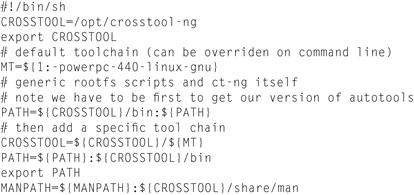

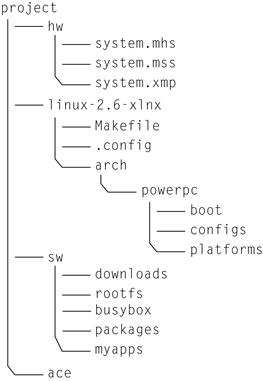

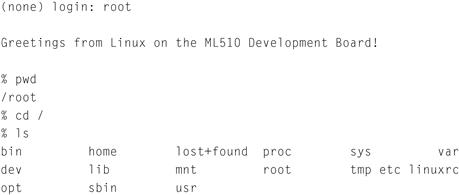

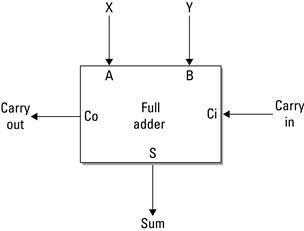

A module can also include a functional description. The description can be implicit, by which we mean the name is so universal that by convention we simply understand what the function is. For example, if a module is called a “Full Adder” (Figure 3.1), we do not need to say any more because the functionality of a full adder is well known.

Figure 3.1 Implicit module description of a full adder.

The functional description can be informal, which means its intended behavior is described in comments, exposition, or narrative. This is a very common way of describing a module: someone records the functionality in a manual page or some document or in the implementation as comments. For example, when describing the full adder in narrative we could state:

The full adder component will add three bits together, X, Y and a carry in bit. The addition will result in both sum and carry out bits.

The functional description can also be formal where the behavior is either described mathematically (in terms of sets and functions) or otherwise codified, such as a C subroutine or a behavioral description in VHDL, for example:

-- Assign the Sum output signal

S <= A xor B xor Ci;

-- Assign the Carry Out output signal

Co <= (A and B) or (A and Ci) or (B and Ci);

Graphically, a module is very simple to denote; it can be as simple as a box. If we want to be more specific, we can give a module a name, which, using a relatively new standard, is shown with a colon (:) followed by the name. In a design, we might want to distinguish between a module (a component in our toolbox) and an instance (a component being used in a design). Instances (formally defined later) are shown with the module name underlined. Finally, we might want to have multiple instances in our design. If there is no ambiguity, we simply show multiple (underlined) boxes with the same module name, as is seen in Figure 3.2(a). However, if we want to make the distinction clear or if we need to refer to specific instances, we can give them names, as illustrated in Figure 3.2(b). This is accomplished by putting a unique, identifying name to the left of the colon and module name.

Figure 3.2 Two instances of a module system: (a) the default instance format and (b) the id to give the instance a unique name.

Two more related terms are implementation and instance. An implementation (of a module) is some realization of the module’s intended functionality. Of course, a module may have more than one implementation (just like in VHDL where an entity may have more than one architecture). An instance is a use of an implementation. In software, there is generally a one-to-one relationship between an implementation and an instance because the same instance is reused in time. However, in hardware it is common to use multiple copies of an implementation; each copy is an instance. (The verb instantiate means “to make a copy of an implementation.”)

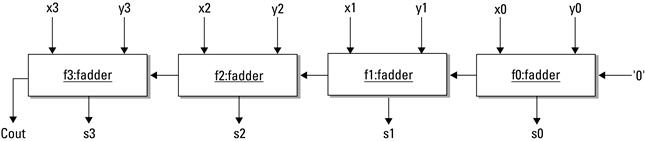

Graphically, we distinguish an instance from a simple module by underlining the name in the box. If we want to highlight the fact that there are multiple instances of the same object, we can name the instances by labeling the module with an instance name, colon, module name. For example, Figure 3.2 shows two instances of a module. Figure 3.3 is a simple example of four 1-bit full adders being instantiated to produce a 4-bit full adder.

Figure 3.3 Four formally defined modules to generate a 4-bit full adder from 1-bit full adders.

3.1.3 Abstraction and State

Now we are ready to define two major concepts in system design: abstraction and state. These concepts are applied to the modules of a system and are described here. The dictionary defines abstract, as an adjective, to mean “to do with or existing in thought rather than matter” (as in abstract art). The verb means to take out of, extract, or remove; in short, to summarize. An abstraction is the act or an artifact of abstracting or taking away; an abstract or visionary idea. Hence, a module is an abstraction of some functionality in a system. We will talk about wanting to make good abstractions (and we’ll discuss the mechanics shortly) but, first, let’s make sure we understand abstraction.

A module is a good abstraction if its interface and description provide some easily understood idea, but its implementation is significantly more complex. In other words, a good abstraction captures all of the salient features of an idea and cuts out all of the details unimportant to realizing the idea.

Our goal in creating abstractions is to overcome the fact that humans can keep only a relatively small number of things in short-term memory. Typically, psychologists will say that we manage about seven items at a time. Individuals will vary, but the idea of keeping hundreds of thousands of details on hand in short-term memory is not feasible. So what a good abstraction does is create an uncluttered, coherent picture of the module while shielding us from details that are not immediately relevant. A bad abstraction forces us to think not about the module as a single entity, but rather about how it is implemented. We will see that a good abstraction is also important in reuse.

A great example of how a good abstraction can serve us comes from a subway map. A map of the London subway was first published in 1908. The map was rich in detail: it showed the exact route that the trains take, it was drawn to scale, it showed when the train is above ground, and it even showed rivers and other features.

However, take the perspective of a rider. If I walk up to the map, what am I looking for? Most likely, I want to know what train I need to get on. I am trying to get from point A to point B, and I need to know quickly (before my train departs!) which train I need to hop on. So information such as whether the train travels above ground or below ground doesn’t matter to me. The number of turns a train takes or whether it travels east-west for a while is irrelevant to whether it gets me to point B.

In 1933, the London Underground map was changed. The new map created a bit of a stir because a number of people felt it was less informative. One might ask, what does it hurt to have extra information? The answer is subtle: by abstracting away much of the detail, the map could print the station names in a larger font. By not being true to the relative distances and physical locations of stations, the size of the map could be made smaller. Removing physical features, such as rivers, allowed the train lines to be drawn thicker. The result of these changes is a map that is much more readable. Functionality trumps literal correctness.

This is the goal of good abstraction: hide the details that do not serve a purpose. If the primary function is to be able to walk up to the map and make a fast decision about which train to get on, then readability is the most important feature. All of the extra information harms this function by distracting the user. So it is with reusable components.

Next, we want to consider another key concept: state. Hardware designers are very familiar with the idea of state because it is explicit in the design of sequential machines and we can point to the memory devices (flip-flops) and say, “that’s where the state is stored.” However, in system design, it is a little less concrete because a module’s state is stored in multiple places and in different kinds of memory devices (flip-flops, static RAM, register files, … even off-chip).

Formally, state is a condition of memory. We say something “has state” or is “stateful” if it has the ability to hold information over time. So an abacus and a blackboard both have state. A sine wave does not have state. (Note: although a sine wave is closely tied to the sense of time, it does not hold information over time.) In general, anything that is (strictly) functional does not have state. Most handheld calculators nowadays have state (they can keep a running total, for example). However, it is possible to build a calculator that does not have state.
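
The calculator contrast can be made concrete with a small C sketch. The names add, accumulator, acc_clear, and acc_add are hypothetical and chosen only for illustration. The first operation is strictly functional; the second holds a running total between calls, and that total is the module's state.

```c
#include <assert.h>
#include <stddef.h>

/* Stateless: the result depends only on the arguments,
   so the same inputs always give the same output. */
long add(long x, long y)
{
    return x + y;
}

/* Stateful: a running total is held between calls. */
typedef struct {
    long total;
} accumulator;

void acc_clear(accumulator *acc)
{
    assert(acc != NULL);
    acc->total = 0;
}

long acc_add(accumulator *acc, long x)
{
    assert(acc != NULL);
    acc->total += x;     /* this operation changes the state */
    return acc->total;
}
```

Calling add(5, 5) twice gives 10 both times, while calling acc_add with 5 twice on a cleared accumulator gives 5 and then 10. Identifying which operations change the state, as acc_add does here, is the conscious step the next paragraph asks of the designer.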

Our interest in state has to do with identifying the state in a module. In the mechanics of system design, we will need to separate functionalities into modules, and abstraction and state are going to be major factors in how we derive a module. Because state in a module is not as explicit, the designer needs to consciously identify the states a module can be in and what operations might change that state. In short, good abstraction and careful management of state will lead to good modules and improve the design.

3.1.4 Cohesion and Coupling

The concepts are abstraction and state; the measures are cohesion and coupling. Cohesion is a way of measuring abstraction. If the details inside of a module come together to implement an easily understood function, then the module is said to have cohesion.

Coupling is a measure of how modules of a system are related to one another. A system’s coupling is judged by the number of and types of dependencies between modules. Explicit dependence exists when one module communicates directly with another. For example, if the output signal of module A is the input to another module B, then we say that A and B depend on each other. In software, if A might invoke a function in module B, then we say A depends on B (but B does not necessarily depend on A). The rule for determining dependence is “if a change to module A requires a designer to inspect module B (to see if the change impacts B), then B depends on A.”

However, and this is where state comes into play, dependence is not always explicit. Two modules can be dependent in a number of ways. If two modules share state, then there is dependence. For example, if one module uses a large table in a Block RAM to keep track of some number of events and another module will occasionally inspect that table, the latter is dependent on the former. If someone wants to make a change to the format of the table, that change will impact the latter module. This is where explicitly identifying state becomes critical to the formation of modules within a system.

Dependence can crop up in even more subtle ways. Two modules may not have any shared state and may not explicitly communicate via signals or subroutine calls but are dependent. For example, they may be dependent because the system may rely on them completing their tasks at the same time. Hence, the system is coupled in time. Another more esoteric example might be in an embedded system that has sensors and actuators. Two modules may not communicate directly, but if one module is changing an actuator that another module senses, there may be a dependence. Dependence in itself is not bad. Indeed, some dependence is necessary between modules because, we assume, the modules are designed to work together to form the system. What we are interested in is the degree of coupling in the system — that is, the number and type of dependencies.

In general, explicit dependencies that arise from formal interfaces are the best forms of dependence, and a system composed of modules with a unidirectional sequence of dependencies will generally lead to good quality designs. However, if there are many implicit dependencies, circular dependencies (where one module A depends on module B and B depends on module A), or large numbers of dependencies, then chances are the design can be improved.

One way of reducing coupling in a system is through encapsulation. Encapsulation involves manipulating state and introducing formal interfaces. The idea is to move state into a module and make it exclusive (not shared). This is often called “information hiding”; the name sounds overly secretive, but it is a very effective technique. One consequence is that if one wants to change the module, then there is much more freedom to do things like change the format of the state without the risk of introducing a bug into another module. If the module has good abstraction, then information hiding also allows the module to be reimplemented in isolation. All that is necessary is to keep the interface the same.
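
As a software analogy to the event table discussed earlier, the following C sketch keeps the table private to one module and exposes it only through a formal interface. The table size and the names events_record, events_count, and events_reset are assumptions made for illustration. Because no other module can see the array, its format could later change (for example, to a packed or hashed layout) without inspecting any caller.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_EVENTS 8   /* assumed table size for this sketch */

/* Private state: 'static' at file scope hides the table from other
   translation units, so it cannot be shared by accident. */
static unsigned event_counts[NUM_EVENTS];

/* Formal interface: the only ways to change or observe the state. */
void events_record(size_t id)
{
    assert(id < NUM_EVENTS);
    event_counts[id]++;
}

unsigned events_count(size_t id)
{
    assert(id < NUM_EVENTS);
    return event_counts[id];
}

void events_reset(void)
{
    for (size_t i = 0; i < NUM_EVENTS; i++)
        event_counts[i] = 0;
}
```

A module that previously read the table directly would now call events_count, turning an implicit dependence on the table's format into an explicit dependence on a small interface.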

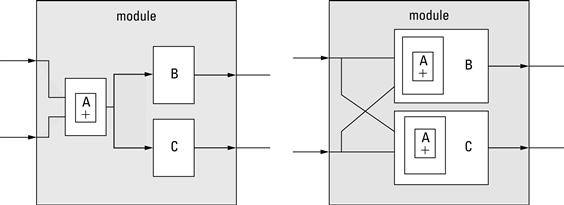

Coupling is the result of dependencies between modules. Some coupling is inevitable. What kind of system is composed of modules that do not interact at all? The goal here is to avoid coupling when it is unnecessary. A number of techniques will manipulate the degree of coupling. For example, consider the two (very simple) designs in Figure 3.4.

Figure 3.4 (a) Original design and (b) modified design with lower coupling.

In the first design of Figure 3.4(a), the two inputs to the module, x and y, are summed together in submodule A and the results are passed to the submodules B and C. In the second design, the summation is duplicated inside each of the submodules. In the first design, we had two dependencies — submodule B depends on submodule A and submodule C depends on submodule A. In the second design there are no submodule dependencies, so we have clearly reduced the coupling in the design.

What is the advantage? It may be hard to see the advantage in this example because submodule A is so simple and is unlikely to change. However, suppose submodule A was originally designed to work only on unsigned numbers. Over time, it was determined that submodule C also needed to be able to work with signed numbers. So a designer that is looking at modifying submodule C would necessarily have to change both C and A. However, if one changes A, then one must consider the effect on submodule B. Perhaps B will work fine with the change to A. However, the point is that systems with a high degree of coupling have this cascading effect where a simple change cannot be made in isolation. Rather, coupling forces the designer to consider the whole module and understand everything in order to ensure that a change does not break something.

There are two disadvantages to this change. First, one might argue that we have traded an explicit measure of quality (design size) for a subjective improvement in another measure of quality (maintainability). However, this change does not necessarily increase the design size! In this case, it is entirely possible that submodule A’s functionality will simply merge into the CLBs already allocated by the submodules B and C. Hence, it is possible that there is no net increase in CLBs allocated. Of course, this is not always true and one has to weigh the costs: would the extra CLBs require a larger FPGA?

A second disadvantage to duplicating submodules in order to decrease coupling is that the designer now has to maintain the same component in two places. So if a bug is discovered in submodule A, then it is fixed once in the first design. However, in the alternative design, one has to fix the bug in both submodules B and C. (A word from the trenches: one person’s bug might be another person’s feature. So A may be performing in a way that doesn’t match its description and B requires that it be fixed; however, module C may actually depend on the incorrect behavior to work!)

Wrapping up this discussion, it should be clear that many factors go into applying the design principles described. As this example shows, there are a number of trade-offs for even very simple designs!

3.1.5 Designing for Reuse

In addition to improving design quality, another use of these design principles is to make reusable components. With the increasing complexity of designs, it is in our favor to construct designs with the intention of their reuse. To get started, we must first understand what is necessary to create and identify reusable designs. One indicator is high cohesion combined with low coupling, which leads to reusable design components. Note the hidden costs, though: the relative cost of reuse (RCR) and the relative cost of writing for reuse (RCWR).

Essentially, RCR says that you have to read the documentation and understand how to use the module before you can reuse it, and RCWR says that someone has to put extra effort into designing a module for others to reuse (Poulin et al., 1993). For example, when writing a C program to copy data, say 32 bytes of data, we could write our own for-loop to exactly copy the data. We could reuse this loop and possibly generalize it over time (copy words versus bytes, a variable size, or forward versus backward). In contrast, one could learn the “string.h” module in the standard C library. This module provides a rich set of data movement operations. These include operations such as strcpy, strncpy, memcpy, and memmove. The trade-off is the time it takes to learn how to use a routine such as memcpy versus the time it takes to create your own copying function. In some cases, it could be easier to write your own than to learn a potentially complex component. This would suggest that the RCR of the module is fairly high, which discourages reuse. The other cost associated with reuse is RCWR, which is the cost of making your custom-created component fully reusable.

One way of managing RCWR is to take an incremental approach: design a specific component for the current design. If it is needed again, copy-and-generalize it. Over several designs, add the generality needed to make it a reusable component.

In VHDL, this can be done by introducing generics into the design. Keep in mind, however, that one point of building custom computing machines is to take advantage of specialization! So simply adding generality without leaving the option of generating application-specific versions through generics is counterproductive.

Refactoring is the task of looking at an existing design and rearranging the groupings and hierarchy without changing its functionality. Figure 3.4 illustrates refactoring. Often, it is done to make reusable components. The common use of refactoring is to improve some of the implicit and explicit quality measures mentioned in subsection 3.1.1.

One final word about testing. The value of reusable components is clear. But, of course, there is the danger that components might be refactored and accidentally change their functionality. Regression testing is used to prevent this. It usually is automated and might be simulation driven (à la test benches) or it may be a set of systems that wraps around the component and exercises its functionality. (Multiple systems are needed because one wants to also test all of the generics that are set at compile-time.)
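As a rough illustration of that last point, the sketch below regression-tests a refactored component against a trusted reference across several compile-time settings. It is a Python model standing in for an HDL test bench; the component (a saturating adder), the generic names `width` and `signed`, and all function names are hypothetical and chosen only for this example.

```python
from itertools import product

def reference_add(a, b, width, signed):
    """Trusted model: add, then clamp to the representable range."""
    lo = -(1 << (width - 1)) if signed else 0
    hi = (1 << (width - 1)) - 1 if signed else (1 << width) - 1
    return max(lo, min(hi, a + b))

def refactored_add(a, b, width, signed):
    """Rearranged version whose behavior must match the reference."""
    bits = width - 1 if signed else width
    hi = (1 << bits) - 1
    lo = -hi - 1 if signed else 0
    total = a + b
    if total > hi:
        return hi
    if total < lo:
        return lo
    return total

def regression_test(widths=(4, 8), signs=(False, True)):
    """Exercise every generic setting with every legal operand pair."""
    failures = []
    for width, signed in product(widths, signs):
        lo = -(1 << (width - 1)) if signed else 0
        hi = (1 << (width - 1)) - 1 if signed else (1 << width) - 1
        for a, b in product(range(lo, hi + 1), repeat=2):
            expected = reference_add(a, b, width, signed)
            if refactored_add(a, b, width, signed) != expected:
                failures.append((width, signed, a, b))
    return failures
```

An empty list from `regression_test()` means the refactored version agrees with the reference for every combination of generics tried. Exhaustive operand sweeps are only practical for narrow widths, so a real test bench would likely sample wider configurations.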

3.2 Control Flow Graph

Throughout the text we use the idea of a software reference design. There are many ways to represent a system, from formal specifications to informal (but common) requirements, specification, and design documents to modeling languages such as UML. In addition to these representations, it is common for a designer to build a rapid prototype: a piece of software that functionally mimics the behavior of the whole system, even hardware modules that have not been implemented yet. We refer to this software prototype as the software reference design. The major drawback of a software reference design is the cost associated with creating it but, as a specification of the system, it has a number of advantages. The first is that it is generally a complete specification. (If there is any question about how the future system is to behave, one can observe how it behaves in the reference design.) Another advantage is that it is executable: a designer can gather valuable performance data from running the software reference design with specific input data sets. Finally, because the software reference design is computer readable, the specification can be analyzed by existing software tools.

Through the next several chapters we will assume that a software reference design exists. Here we show how computation in a software reference design can be represented mathematically. The next chapter uses this notation to help make decisions about what parts of the system should be implemented in hardware versus software.

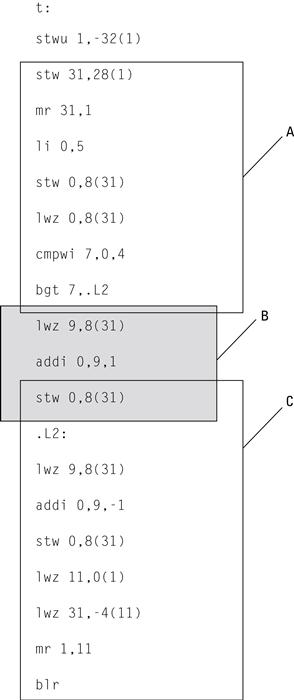

We do this by borrowing some concepts from compiler technology, primarily the control flow graph. The control flow graph summarizes all possible paths of a program from start to finish in a single, static data structure. Formally, a Control Flow Graph (CFG) is a graph G = (V, E) where the vertices (or nodes) V of the graph are basic blocks and the directed edges E indicate all possible paths that the program could take at run time. A basic block is a maximal sequence of sequential instructions with a Single Entry and Single Exit (SESE) point. Figure 3.5 illustrates this definition. The first group of instructions (A) is not a basic block because it is not maximal; the first instruction (store word with update) should be included. The second group of instructions (B) is a basic block. The last group (C) is not a basic block because there are two places to enter the block (at the store word instruction after the add immediate, or by branching to label .L2).

Figure 3.5 Groups of instructions; (A) and (C) are not basic blocks, (B) is a basic block.

An edge (b1, b2) in a control flow graph indicates that after executing basic block b1 the processor may immediately proceed to execute basic block b2. If the basic block ends with a conditional branch, there are two edges leaving the basic block. If it does not end with a branch or if the last instruction is an unconditional branch, there will be a single edge out. Two special vertices in the graph, called Entry and Exit, are always added to the set of basic blocks. They designate the initial starting and stopping points, respectively.
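The definitions above can be sketched as a short Python routine that finds basic blocks and edges in a linear list of instructions. This is a minimal sketch under stated assumptions: the tuple format and the opcode names (`b`, `bc`, `ret`) are invented for the example and do not correspond to a real instruction set, and the program is assumed to be non-empty with every branch target labeled.

```python
def build_cfg(program):
    """program: list of (label, opcode, target) tuples.

    Hypothetical opcodes: 'b' (unconditional branch), 'bc' (conditional
    branch), 'ret' (return); any other opcode falls through.
    Returns (blocks, edges): blocks is a list of (first, last) instruction
    indices and edges is a set of (source, destination) block names.
    """
    label_at = {lab: i for i, (lab, _, _) in enumerate(program) if lab}
    targets = {label_at[t] for _, op, t in program if op in ("b", "bc")}

    # A leader starts a basic block: the first instruction, any branch
    # target, and any instruction that follows a branch or return.
    leaders = {0} | targets
    for i, (_, op, _) in enumerate(program):
        if op in ("b", "bc", "ret") and i + 1 < len(program):
            leaders.add(i + 1)
    starts = sorted(leaders)
    blocks = [(s, e - 1) for s, e in zip(starts, starts[1:] + [len(program)])]
    block_of = {s: f"B{n}" for n, (s, _) in enumerate(blocks)}

    edges = {("Entry", block_of[0])}
    for n, (_, end) in enumerate(blocks):
        name = f"B{n}"
        _, op, target = program[end]
        following = block_of.get(end + 1, "Exit")
        if op == "ret":
            edges.add((name, "Exit"))
        elif op == "b":
            edges.add((name, block_of[label_at[target]]))
        elif op == "bc":
            # Conditional branch: taken edge plus fall-through edge.
            edges.add((name, block_of[label_at[target]]))
            edges.add((name, following))
        else:
            edges.add((name, following))
    return blocks, edges

# A small loop: initialize, repeat the body while a condition holds, return.
loop = [
    (None,  "li",  None),
    (".L2", "add", None),
    (None,  "cmp", None),
    (None,  "bc",  ".L2"),
    (None,  "ret", None),
]
blocks, edges = build_cfg(loop)
```

For this loop the routine finds three blocks; the middle block ends in a conditional branch and so has two outgoing edges, one back to itself and one to the block containing the return.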

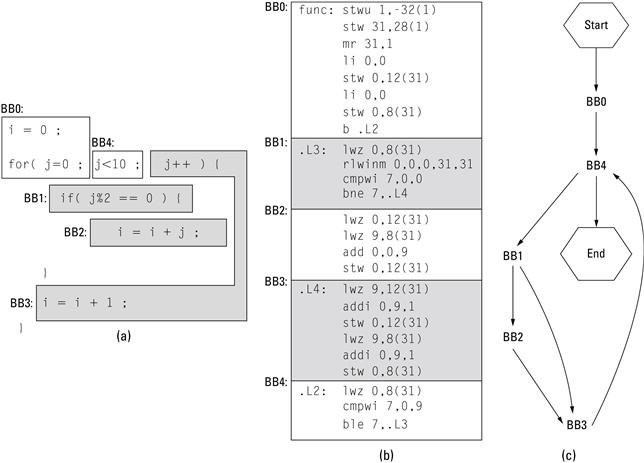

Informally, a basic block is a sequence of instructions that we know, by definition, will be executed as a unit. The edges in the CFG show all the potential sequences in which these units are executed. For example, Figure 3.6(a) identifies the basic blocks in a simple C subroutine. In Figure 3.6(b), the C program has been compiled to PowerPC assembler code and the basic blocks have been identified. Finally, the control flow graph is illustrated in Figure 3.6(c). Note that it is possible to identify the basic blocks in a C file if one knows how the compiler emits assembly code. Unless it is obvious, we use assembly code to illustrate basic blocks.

Figure 3.6 Basic blocks in (a) C source code (b) translated to assembly (c) a control flow graph.

Compiler researchers and developers use the control flow graph in a number of ways. Often, graph algorithms applied to the CFG will result in a set of properties that guide transformations (optimizations) designed to improve the performance of the program. When combined with data dependence (see Chapter 5) the CFG can be used to determine what parts of the program can be executed in parallel (and thus implemented spatially in hardware). For now, our immediate need is to visualize the software reference design. The next chapter uses the basic blocks as the atomic unit that can be partitioned between hardware and software.

3.3 Hardware Design

Thus far, the discussion of system design has been high level and general. Next we turn toward hardware design and, very specifically, the hardware components available to the Platform FPGA designer. We begin with a brief description of how these common architectural components evolved and then describe several of the broad classes of hardware modules available. This section ends with a general description of how the designer can expand their toolbox with custom hardware modules.

3.3.1 Origins of Platform FPGA Designs

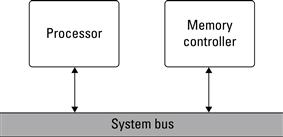

Simply put, designers rarely want to build an embedded system from scratch. To be productive, an embedded systems designer will typically begin with an existing architecture, remove the unneeded components, and then add cores to meet the project requirements. The processor-memory model, seen in Figure 3.7, which is the basic desktop PC architecture, has worked well as a starting point. To begin, we briefly review some key computer architecture components so we are able to understand and use them in our Platform FPGA designs.

Figure 3.7 The fundamental processor-memory model to be used as a base system in Platform FPGA designs.

The introduction of the IBM Personal Computer (PC) in 1981 had an enormous impact on the practice of building computing machines. The intent was to make a system that would appeal to consumers and hobbyists alike; therefore, low-level details of the system were readily available. This spurred third-party development of peripherals and (probably unintentionally) compatible machines from other manufacturers. As the speed of the microprocessor, volume of machines, and competition increased, the cost actually decreased. It became possible for manufacturers and vendors to try different computer architecture designs. Ultimately, the architecture that has evolved is what is common in today’s desktop computers.

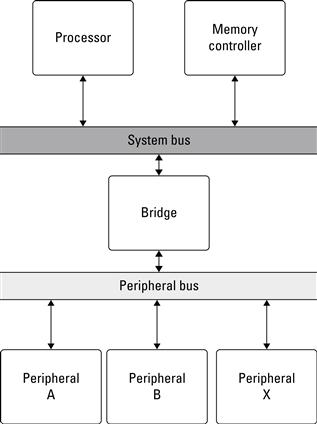

Later computers use a two-bus system where the processor and memory reside on a processor-specific system bus and the lower speed peripherals (serial ports, printers, video monitor) reside on a generic, standard peripheral bus. Figure 3.8 shows this arrangement, which allows the system components to evolve rapidly in terms of clock frequencies, voltages, and so on while maintaining compatibility with the third-party peripherals, which do not change as quickly.

Figure 3.8 The two-bus processor-memory model used to support parallel, independent high-speed and low-speed communication.

Embedded computing architectures have not changed much from this basic arrangement; in fact, this foundation has allowed designers to focus on improving the individual components. The bus model is arguably insufficient for certain application needs, but it serves the needs of general applications well. This organization makes a good starting point for our designs with Platform FPGAs. We can utilize the hard or soft processor core(s), on-chip memory and off-chip memory controllers, and peripherals to support system input and output to build a base system that resembles these computer organizations.

Platform FPGAs have adopted these two basic processor-memory models from the desktop computer architecture because they provide an established framework that can be built upon for custom designs. With the addition of existing components and cores, more complex systems can be constructed, often within a considerably shorter time frame than traditional embedded systems designs. In fact, in section 3.A you already assembled a simple Platform FPGA system when building the “hello world” FPGA example. Within this section we aim to go into more detail on what comprises a Platform FPGA base design (system). We use the basic processor-memory model of computer organization and expand on it to work up to a useful base system.

A valid question to ask at this point is “why create a generic base system when we are using FPGAs?” FPGAs by their very nature are programmable and application specific. In an ideal world where a designer’s time could be infinitely spent on the project, there were no deadlines, and money was no object, creating completely custom designs would make sense. Unfortunately, the ever-growing demand for first-to-market solutions requires designs to be up and running and brought to market quickly. FPGAs offer the additional advantage of field programmability, allowing a potentially less than ideal solution to be offered initially and then updated in later revisions.

3.3.2 Platform FPGA Components

Chapter 2 discussed the components that exist in an FPGA, such as logic cells, blocks, and on-chip memory. While these components are useful in all FPGA designs, they will be the building blocks for much larger systems. With the ideas of modularity, cohesion, and coupling of components and designs, we want to begin to build base systems that can be used and reused as a starting point for embedded systems design. We have already discussed the strengths of this approach and introduced the prevalent organization with the processor-memory model. Because each design is different, it is obvious that the design will require modifications, but the processor is a good place to start.

Processor

Generally speaking, the processor offers the designer control and a familiar design environment. Even if the final design will require little or no involvement from the processor, its use within the rapid prototyping or early development stages can help rapidly evolve the design. For us, the processor is an obvious starting point when describing and building a processor-memory model design. In a Platform FPGA, two types of processors can exist: hard and soft core processors. Chapter 2 discussed the hard processor cores in detail and even gave examples of the PowerPC 440 integrated in the FPGA fabric on the Xilinx Virtex 5 FX series FPGAs.

Other Platform FPGAs provide sufficient reconfigurable resources that a soft processor core can be implemented in the logic blocks and surrounding resources of the FPGA. Soft processors offer a great deal of flexibility, as they are by their very nature configurable. Unlike hard processors, where functions have been fixed since fabrication, incremental improvements to a soft processor (such as the more recent addition of the memory management unit to the Xilinx MicroBlaze processor) provide the designer with a more flexible design approach.

While this could quickly turn into a long discussion on the advantages and disadvantages of processors in hardware versus software, we focus instead on the processor’s capabilities. For instance, even the most basic processors, requiring a minimal amount of resources (e.g., the PicoBlaze), can operate in what is called stand-alone mode, offering only the most basic functionality (such as stdin/stdout). More advanced processors may include memory management units (MMU) to support full-featured operating systems (such as Linux). There are even processors that offer coherency with shared memory between multiple processors to create a multicore processor (similar to what exists in commodity desktop PCs).

Overall, knowing the processor’s role in the application can help dictate which processors can and cannot be used. For instance, the PicoBlaze is well suited for more complex state machines, but not for running Linux. Likewise, soft processors may offer wider flexibility when migrating from one FPGA device family to another (or even to a different FPGA vendor). Before choosing which processor will be the cornerstone of the design, consider the following questions:

• Does the FPGA include hard processors?

• Are there sufficient resources to implement a soft processor?

• What role will the processor play in your design?

• What type of software will be used on the processor?

• How much time will the processor be used versus hard cores?

Some of these questions may be easier to answer than others, but being able to address them before moving too far along the design process is important. Chapter 4 helps address questions regarding identifying suitable functions to be implemented in hardware versus software. This chapter is more concerned with construction of the platform and augmenting it to meet the design’s needs. If the FPGA does include one or more hard processor cores, the initial design might be best suited to use them rather than expend additional resources on a soft processor. If, however, future generations of the system will include different FPGAs, it might make more sense to use a soft processor core that can be moved between FPGAs with as little effort as possible. Vendors and developers of soft cores should be able to provide enough information for a designer to determine whether it is feasible to include the core in the chosen FPGA.

Memory

In order for the processor to do any useful work, memory must be included to store operations and data. Different computer organizations and memory hierarchies could be discussed at this point. Whether the processor follows the Von Neumann or Harvard architecture or contains levels 1, 2, and 3 cache is arguably too low level for embedded systems designers. Instead, we focus on the following questions:

• What type of memory is available?

• Is there on-chip and/or off-chip memory?

• How much on-chip/off-chip memory is available?

• Is the memory volatile or nonvolatile?

• How does the processor interface with the memory?

• How does the system interface with memory?

As mentioned in Chapter 2, modern FPGAs include varying amounts of on-chip memory (often referred to as block RAM). The uses of this memory are wide and varied based on the application. The memory can be included within a component, core, or as part of the base system. The location of the memory dictates its interface and accessibility. For example, suppose a custom core includes a FIFO built from on-chip memory. It may have a standard FIFO interface (enqueue/dequeue) that only the custom core can access, or it may be accessible to a processor as a way of loading data into the custom core to be operated on (such as a single precision floating point addition core). When designing systems with on-chip memory needs, it is important to identify how the memory will be used within the system.

In the event the design requires more memory than is available on-chip, off-chip memory is required. There are many different forms of off-chip memory and knowing which type to use is a difficult decision that goes beyond the scope of this chapter. However, interfacing with the particular memory is important to address now. A memory controller is required to handle memory transactions, requests for access to memory in order to read or write data from a specific address. The memory controller is a soft core that can be configured to meet the specific needs of the memory it is interfacing with. For example, each DDR2 DIMM has specific operational characteristics that require complex state machines to interface with it. Fortunately, for the designer, many of these memory controllers have already been designed with generic parameters to allow for quick integration with different memory manufacturers. Within FPGA designs it is possible to include processor-centric memory access, where the processor issues all requests on behalf of the system, or to include Direct Memory Access (DMA), where cores within the system can request memory directly.

For both on-chip and off-chip memory it is difficult to provide strict design rules, as they can be used in such a variety of ways. However, utilizing them efficiently is of critical importance because memory performance has improved more slowly than processor performance (Wulf & McKee, 1995), and having multiple sources contend for a single resource only exacerbates the problem. Chapter 6 covers how to tackle memory bandwidth management questions more efficiently. Of key importance is configuring the system to tightly integrate the components needing low-latency access to memory and separating them from components that may not access memory as frequently or at all.

Buses

Now that we have described the two main components in a processor-memory model design, we must start to address the various ways to connect them. The simplest approach (beyond a strict point-to-point interface) is to provide a bus. The processor(s) and memory controller(s) connect to the bus via a standard bus interface. The bus interface is specific to the particular bus, but at the simplest level consists of address, data, read/write requests, and acknowledgment signals. The bus also includes a bus arbiter, which controls access to the bus. When a core needs to communicate with another core on the bus, it issues its request for access to the bus. Based on the current state of the bus, the arbiter will either grant access or deny access, causing the core to reissue its request. A core that can request access to the bus is considered a bus master. Not all cores need to be bus masters; in fact, many custom cores are created as bus slaves, which only respond to bus transactions.
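The grant-or-deny behavior of an arbiter can be modeled in a few lines of Python. The sketch below assumes a fixed-priority policy and a cycle-by-cycle view of the bus; both are simplifications made for illustration, and the class name, method name, and master names are hypothetical rather than taken from any particular bus standard.

```python
class Arbiter:
    """Fixed-priority arbiter: earlier entries in `masters` win."""

    def __init__(self, masters):
        self.masters = list(masters)
        self.owner = None      # master currently holding the bus
        self.remaining = 0     # cycles left in the current transfer

    def step(self, requests):
        """Advance one cycle.

        `requests` maps a master name to the length (in cycles) of the
        transfer it wants. Returns the master granted this cycle, or
        None; denied masters are expected to reissue their request.
        """
        if self.owner is not None:
            self.remaining -= 1
            if self.remaining > 0:
                return None    # bus busy: every request is denied
            self.owner = None  # transfer finished, bus is idle again
        for master in self.masters:
            if master in requests:
                self.owner, self.remaining = master, requests[master]
                return master
        return None

arb = Arbiter(["cpu", "dma"])
pending = {"cpu": 2, "dma": 1}
grants = []
for cycle in range(4):
    winner = arb.step(pending)
    grants.append(winner)
    if winner is not None:
        del pending[winner]    # granted; the loser stays pending and retries
```

In this run the higher-priority master is granted first, the other master is denied while the two-cycle transfer is in flight, and its reissued request succeeds once the bus is idle. Other policies, such as round-robin, would change only the loop that picks the winner.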

A bus on an FPGA is implemented within the configurable logic, making it a soft core. For example, Xilinx uses IBM’s CoreConnect library of buses, arbiters, and bridges. More details regarding the CoreConnect library are presented in section 3.A. Some important design considerations need to be addressed when using buses.

• What cores will need to directly communicate?

• Do certain cores communicate more often than others?

• Do specific cores require a higher bandwidth between them?

As mentioned earlier, it is common to find a two-bus system in desktop computers. This is done to isolate the lower speed peripheral devices from higher speed devices (such as the processor and memory). In system design, it may be advantageous to put certain cores on one bus and others on a separate bus. By adding a bridge between the two buses, it is possible for cores to still communicate, although at the cost of additional latency.

System Bus

In multiple-bus designs, the bus with the highest bandwidth that connects the processor, memory controller, and remaining high-speed devices (such as a network controller) together is often referred to as the system bus. Xilinx uses IBM CoreConnect’s Processor Local Bus (PLB) as its equivalent system bus. When the number of cores needing access to the bus is relatively small, connecting all of the cores on a single bus is a logical, resource-efficient decision. In Platform FPGA designs, the system bus is the fundamental mechanism to communicate between the processor and a custom hardware core. As the number of hardware cores grows, a decision must be made as to how to most efficiently support these additional cores in combination with the already existing system. One solution is to introduce a second bus.

Peripheral Bus

A second bus may be added to separate the design into different domains. In some cases this is done for high-speed and low-speed designs. In others, it may be to provide a subset of the cores with a dedicated bandwidth for communication. In either event, addition of a second bus, often known as the peripheral bus, allows two arbiters to control communication across the two buses. With a single bus, if the processor was accessing data from the memory controller, any other cores needing to communicate would be required to wait for the memory transaction to complete. In a two-bus system, those cores could be migrated to the peripheral bus and allowed to communicate in parallel.
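A back-of-the-envelope model shows why moving cores to a second bus can help. The Python sketch below assumes that each bus serializes its own transfers, that the buses run fully in parallel, and that no traffic crosses a bridge; the core names and cycle counts are made up for illustration.

```python
def busy_cycles(transfers, bus_of):
    """Cycles until every bus has drained its transfers.

    transfers: list of (core, cycles) pairs.
    bus_of:    maps each core to the name of the bus it sits on.
    """
    per_bus = {}
    for core, cycles in transfers:
        bus = bus_of[core]
        per_bus[bus] = per_bus.get(bus, 0) + cycles
    return max(per_bus.values(), default=0)

transfers = [("cpu", 8), ("core_a", 3), ("core_b", 2)]

# Every core shares one bus, so all transfers are serialized.
single = busy_cycles(transfers, {"cpu": "system", "core_a": "system",
                                 "core_b": "system"})

# core_a and core_b are migrated to the peripheral bus.
split = busy_cycles(transfers, {"cpu": "system", "core_a": "peripheral",
                                "core_b": "peripheral"})
```

Under these assumptions the single-bus arrangement needs 13 cycles and the two-bus arrangement needs 8, because the peripheral-bus transfers overlap with the processor's memory access. Any request that must cross between the buses would add latency that this model ignores.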

Bridges

In some cases it is necessary for a core on the system bus to communicate with a core on the peripheral bus. This requires the addition of a bridge. A bridge is a special core that resides on both buses and propagates requests from one bus to another. A bridge functions by interfacing as a bus master on one bus and a bus slave on the other. The slave side responds to requests that must be passed along to the other bus. The master side issues those requests on behalf of the original sender. Sometimes only a single bridge is required; if cores on the peripheral bus will only respond to requests from the system bus, only a system-to-peripheral bridge is required. However, if cores on the peripheral bus need access to the system bus (say for access to the off-chip memory controller), then a second bridge, a peripheral-to-system bridge, is required. The common nomenclature for describing the interfaces of a bridge in terms of master side and slave side is as follows. The system-to-peripheral bridge means that the bridge is a slave on the system bus and a master on the peripheral bus. This may seem backward, but the reason is quite simple. In order to communicate from the system bus to cores on the peripheral bus, the bridge must respond to system bus requests (making it a slave on the system bus) and issue the request on the peripheral bus (making it a master on the peripheral bus).

Peripherals

Now that we have established a mechanism to connect cores together we should address some of the peripherals a system designer may add to a design. When we talk about peripheral cores, we usually are referring to hardware cores that interface to peripheral devices, such as printers, GPS devices, and LCD displays. Peripherals themselves are traditionally the components around the central processing unit. In our case, some peripherals (such as a video graphics adapter) may be entirely implemented in the FPGA, but often the peripheral is external to the FPGA and the hardware core provides the interface.

In Chapter 7, we dedicate the whole chapter to interfacing with the outside world. Here we simply mention common peripherals found in Platform FPGA designs.

A number of high-speed communication cores have been implemented as FPGA cores. There is a PCI Bridge and a PCI Arbiter — the former is needed to connect the FPGA’s system bus to an existing full-function PCI bus, whereas the latter includes the logic to create a full-function PCI bus. A variety of Ethernet cores are available for connecting the Platform FPGA to a (wired) Ethernet network. Likewise, a variety of USB cores provide support for different versions and capabilities. Many of the older (low-speed) communication cores have been implemented as well, including UARTs, I2C, and SPI.

As part of the principles of system design, building cores for reuse leads to the eventual accumulation of a library or repository of cores. These cores may provide functionality that many of the base systems need. For example, more and more designs are requiring Internet access, whether it be for debugging purposes or to update an embedded system’s database; having a previously tested core that can be integrated quickly into a design reduces design time significantly.

Building Base Systems

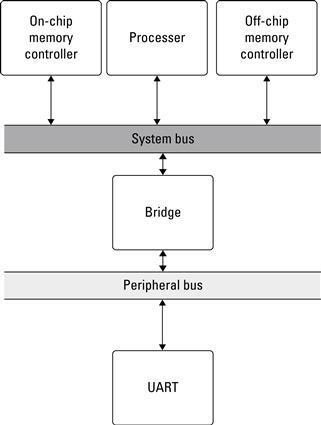

Enough talk; it is time to put these concepts together and build a simple base system, consisting of a processor, on-chip memory, off-chip memory, and a UART for serial communication with a host PC. We are being a little generic still in terms of the actual cores, but in section 3.A we will be more specific with respect to Xilinx FPGAs. Still, we have a processor, two types of memory, and a UART. We have yet to mention what would be used to connect these components together because that is a little more application specific. In some cases it makes sense to separate the high-speed and low-speed devices onto different buses.

In this example, there would be no immediate need for such a separation. Remember that this is the base system from which larger designs will be built. As a result we want to be flexible enough in our design to allow custom cores to be added without requiring significant changes to this base system. For that reason, we will include both a system bus and a peripheral bus. Figure 3.9 depicts this initial base system. Notice that with the addition of a second bus, we need to include a bridge. Because the UART is a slave on the peripheral bus, there is no requirement for adding a second bridge to allow it to master the system bus. If future designs require this, we can go back and add the peripheral-to-system bridge.

Figure 3.9 Block diagram of the base system consisting of a processor, on-chip and off-chip memory, and a UART.

While drawing boxes suffices for an academic approach, it is insufficient for practical implementations. We would not build this base system with schematic capture software because the number of signals and wires to connect would be enormous. Instead, we use hardware description languages. Using the bottom-up design approach, we could create a single HDL file and instantiate each of the components within it.

Not only do we need to connect all of the components’ input/output signals, we also need to connect the input/output signals that go off-chip. For example, the UART includes transmit and receive pins that are routed off-chip to an RS232 IC to provide serial communication between the FPGA and the outside world. This requires additional constraints to be set to notify the synthesis tool that a signal is to be routed to a specific pad and pin.

In practice, even this amount of work is inefficient. FPGA and software tool vendors provide GUIs or wizards to help automate this process. For the beginning designer, the tools are a great starting point because they help the designer identify key components and how they are connected. For the more experienced designer, the tools and wizards may prove to be less useful.

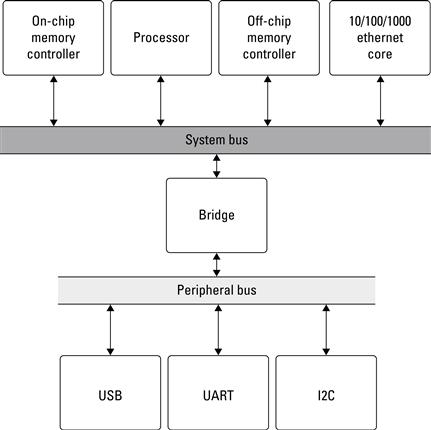

3.3.3 Adding to Platform FPGA Systems

Now that we have a base system, let’s go ahead and add some custom compute cores. Many embedded systems now include some form of network interface, whether it be wired or wireless. For demonstration purposes, we will add a TCP/IP 10/100/1000 Mbit Ethernet network core. The network core will provide us with access to the FPGA from anywhere on the globe via a Web interface. Adding this core to the base system can be as simple as adding the instance to the top-level HDL file and updating the pin constraints file. We will add the network core to the system bus because as data are transferred to and from the FPGA, we will use the off-chip memory as a temporary storage buffer. Figure 3.10 is the modified block diagram of this base system. In addition to networking, we have also connected a USB and I2C interface to the peripheral bus just to start to round out the theoretical design.

Figure 3.10 Block diagram of the base system with the additional cores: networking, USB, and I2C.

The benefit of using a bus (or two buses) within the design is that it allows the system to be modified (adding or removing cores) with ease. As long as the new core has the same bus interface, typically a standard such as the PLB that is published and widely available, a systems designer just needs to connect up the signals. In the event that the new core is a slave on the bus, the new core will need to be assigned a unique address range from the system’s address map. The address range is how other cores on the bus will communicate with the new core.

Address Space

From a hardware perspective, the “location” of data is straightforward and easily identifiable. Off-chip memory is physically located outside of the FPGA’s packaging, typically as a separate memory module or, more commonly, as a Dual In-line Memory Module (DIMM). From a design perspective, the memory is accessed through nothing more than its address space. Off-chip memory may be addressable from the address range 0x30000000–0x3FFFFFFF, for a total of 256 MB of addressable data. Globally addressable on-chip memory, located on the same bus as the off-chip memory controller, may have an address range 0xFFFF0000–0xFFFFFFFF, for a total of 64 KB of addressable data. In Platform FPGA designs it is possible to automatically generate these address ranges or to set specific address ranges.
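The sizes quoted above follow directly from the inclusive bounds of each range. A small Python sketch makes the arithmetic explicit; the helper names are ours, and the alignment check reflects a common convention (power-of-two sizes aligned to their own size) that is assumed here rather than stated in the text.

```python
def range_size(base, high):
    """Number of bytes in the inclusive address range [base, high]."""
    return high - base + 1

def is_naturally_aligned(base, high):
    """True if the size is a power of two and base is a multiple of it."""
    size = range_size(base, high)
    power_of_two = size > 0 and (size & (size - 1)) == 0
    return power_of_two and base % size == 0

off_chip = range_size(0x30000000, 0x3FFFFFFF)
on_chip = range_size(0xFFFF0000, 0xFFFFFFFF)

print(off_chip // 2**20, "MB")   # 256 MB
print(on_chip // 2**10, "KB")    # 64 KB
```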

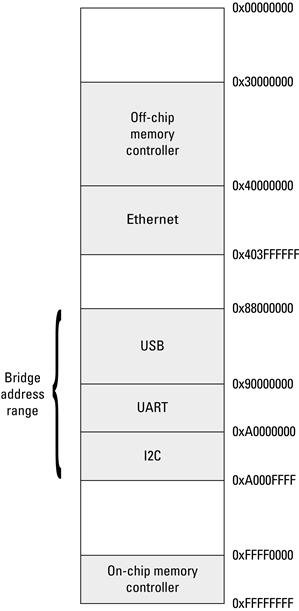

For on-chip memory that is only local to a compute core, the address range is user defined and commonly word addressable (instead of byte addressable). The address range is also important for any compute cores that must communicate with the processor. We mention this information here because up until now we have not interacted with a range of compute cores or memory. Figure 3.11 shows the address map for the two-bus base system mentioned previously. Each core that has an address range is at least a slave on the bus. The processor is a bus master only and therefore does not include an address range within the address map.

Figure 3.11 One possible address map for the theoretical two-bus system with networking, USB, I2C, and UART.

In the two-bus system, the bridge acts as a middleman, passing requests issued on the system bus to the peripheral bus. The bridge must be assigned an address range that spans all of the cores on the peripheral bus. If the bridge is given an incorrect address range, requests may never make it to the peripheral bus or to the destination hardware core.
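The effect of a bridge range that is too narrow can be illustrated with a small Python model. This is only a sketch under stated assumptions: the peripheral addresses below are hypothetical (they are not taken from Figure 3.11), and a real bridge may impose further constraints, such as alignment, that are not modeled here.

```python
from typing import Dict, Iterable, List, Tuple

AddressRange = Tuple[int, int]  # inclusive (base, high) addresses

# Hypothetical address ranges for the cores on the peripheral bus.
peripherals: Dict[str, AddressRange] = {
    "uart": (0x80000000, 0x8000FFFF),
    "usb": (0x80010000, 0x8001FFFF),
    "i2c": (0x80020000, 0x8002FFFF),
}


def spanning_window(ranges: Iterable[AddressRange]) -> AddressRange:
    """Smallest single range that covers every peripheral range."""
    ranges = list(ranges)
    return min(b for b, _ in ranges), max(h for _, h in ranges)


def forwards(window: AddressRange, address: int) -> bool:
    """The bridge only passes on requests that fall inside its own range."""
    return window[0] <= address <= window[1]


def unreachable(window: AddressRange,
                cores: Dict[str, AddressRange]) -> List[str]:
    """Cores whose range is not fully contained in the bridge range."""
    return [name for name, (base, high) in cores.items()
            if not (window[0] <= base and high <= window[1])]


good_window = spanning_window(peripherals.values())
bad_window = (0x80000000, 0x8001FFFF)  # too narrow: stops before the I2C core

print(unreachable(good_window, peripherals))  # []
print(unreachable(bad_window, peripherals))   # ['i2c']
```

With the narrow range, a request to any I2C address never leaves the system bus, which is exactly the failure described above.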

3.3.4 Assembling Custom Compute Cores

In Chapter 2, hardware description languages were introduced with a few examples to help the reader grasp some of the concepts. We also covered how to use existing tools and wizards to create components and custom core templates. Now it is time to cover how to design and assemble custom compute cores. While there is a large body of writing on how to design digital computing systems, both manually and automatically, we look at the process from a more systematic engineering approach. To start, we want to answer the age-old question of “why build custom compute cores?” Once answered we discuss design approaches, consider design rules and guidelines, look at how to test and debug hardware, and finally culminate with a functional custom core.

Why Build Custom Cores?

We begin with the most important question, “why build custom cores?” It is widely believed that hardware development is difficult, and because there are more software professionals in the workforce than there are hardware engineers, some might argue that it is better to use processors and write software. Furthermore, processors are inexpensive and cost-effective, and processor manufacturers put an enormous amount of design effort into a piece of hardware that will ship many millions of units over its lifetime.

Often, the immediate response to the question “why design hardware?” is “for performance” and by performance the speaker typically means “speed.” This is true; however, there are other compelling reasons to implement hardware as well. These include computational efficiency and predictability. We’ll look at each of these reasons in detail because it is important for a hardware designer to know when a hardware solution is and is not justified.

Advantage: Speed

Because custom hardware designs are often used to speed up applications, some designers will occasionally make the mistake of generalizing that “hardware is faster than software.” However, the idea that hardware is faster than software is a fallacy. In fact, if naively implemented, hardware is often slower than software. Moreover, it is true that any hardware design implemented in an FPGA will perform 5× to 10× slower (and consume more area on a chip) than the same circuit implemented directly in silicon (using the equivalent process technology). If the design we happen to implement is similar to a processor, then we gain nothing and lose much in speed (and area). So how does FPGA hardware outperform a processor?

Practically speaking, there are two main reasons why some FPGA designs have a performance advantage, plus a couple of minor ones. The first practical advantage is rooted in the execution model. The sequential computing model of the standard von Neumann processor creates an artificial barrier to performance by expressing the task as a set of serial operations. With hardware, the task’s inherent parallelism can be expressed directly. To compensate for their inherently serial operation, modern processors commit a significant portion of their hardware resources to extracting instruction-level parallelism to increase their speed, which we will revisit shortly when we discuss efficiency. Although less significant overall, another way that the execution model can impede performance is in instruction processing. The processor model has to commit resources to fetching, decoding, and dispatching instructions, functionality that a custom hardware design does not need because the operations are built into the circuit itself. For some applications, memory bandwidth limits the performance and part of the bandwidth is consumed by instructions being fetched from memory. In hardware designs, the instructions are implicitly part of the design.

The second practical reason FPGAs have been able to outperform standard processors has to do with specialization. In general-purpose processors, data paths and operation sizes are organized around general requirements. This typically means 32- or 64-bit buses and functional units. Thus, multiplying an integer by some constant c requires a full-sized multiplier in a processor. However, if additional information about the application is known, an FPGA-based implementation can be created with customized function units to meet the exact needs of the application. For example, a constant multiplier can be orders of magnitude faster than a general-purpose multiplier.
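To see why knowing the constant helps, note that multiplying by a fixed c can be decomposed into one shifted copy of the input per set bit of c, and in hardware a shift by a constant amount is just wiring. The Python sketch below models that decomposition; it is one common technique and not necessarily the one a given synthesis tool would choose, and the function names and the constant 10 are assumptions made for illustration.

```python
from typing import List


def shift_add_terms(c: int) -> List[int]:
    """Bit positions that are set in the non-negative constant c."""
    return [i for i in range(c.bit_length()) if (c >> i) & 1]


def multiply_by_constant(x: int, c: int) -> int:
    """Multiply x by c using only shifts and additions.

    Each set bit of c contributes one shifted copy of x, so a constant
    with k set bits needs k - 1 adders and no general multiplier.
    """
    return sum(x << shift for shift in shift_add_terms(c))


# c = 10 is binary 1010, so 10 * x == (x << 3) + (x << 1): a single adder.
print(shift_add_terms(10))          # [1, 3]
print(multiply_by_constant(7, 10))  # 70
```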

Although run-time reconfiguration is possible, it is currently not in widespread use. Nonetheless, FPGAs can use this technique to outperform a general-purpose processor by using information only available at run time to produce specialized circuits. For example, a circuit that computes Triple-DES (an encryption algorithm) can be several orders of magnitude faster if the key is known in advance. This particular example has been demonstrated elsewhere (Hennessy & Patterson, 2002). Unfortunately, building run-time reconfigurable designs is a challenging, time-consuming process. Until design tools and methodologies mature and become easier to use, it is unlikely that this important source of improved performance will become common. The final chapter discusses run-time reconfiguration in more detail.

Advantage: Efficiency

Suppose a hardware implementation of task A takes exactly the same amount of time to complete as the equivalent task executed on a processor. We can assume that the software implementation is easier to develop. Is there any reason to build a hardware implementation? The answer is yes when the hardware solution is more efficient. By efficiency, what we are concerned with is how to accomplish a fixed task with a variable amount of resources. By resources, we could be talking about area on a chip, the number of discrete chips, or the cost of the solution. While speed is a predominant reason to commit to a hardware design, efficiency is still a valid reason. A hardware design plus a processor is often more efficient than two processors.

For example, suppose we have a network interface that implements a standard protocol (such as TCP/IP over Ethernet). If we needed to augment an existing computer (that is already loaded to its capacity) to handle network events, then the two options might be adding another processor dedicated to network traffic or building a custom network interface that offloads the network tasks. If both approaches meet the minimum criteria, then we say the more efficient solution is the one with the lower cost. If the system is being deployed on a single chip, then the more efficient solution is the one that uses less area.

Advantage: Predictability

While efficiency is an important consideration, it is often the case that a processor is not being used to its full capacity. Thus, someone might argue that the processor can simply take on the new functionality by multitasking. Even if this does not overload the processor, there is another compelling reason to use a hardware implementation. This case arises in embedded systems where timing constraints are very important. When there are real-time demands on the system, scheduling becomes important. Hardware designs have the benefit of being very predictable, which in turn makes scheduling easier.

So, there are cases when it makes sense to move a task to hardware if it makes that task more predictable or if it makes scheduling tasks on the processor easier. For real-time systems, where the goal is to satisfy all of the constraints, predictability is often more valuable than simply making the system faster.

Disadvantages

Perhaps the biggest disadvantage to building hardware solutions is one already mentioned: the development effort required. Compared to the number of professionals who can code software, there is a small number of hardware designers. Moreover, most people will assert that designing hardware is more difficult than coding software. Certainly, for large designs, there are more details that one has to attend to. So, unless there is a compelling reason (in terms of the performance metrics from Chapter 1 or the advantages just mentioned), it may not be worth the extra effort. A second disadvantage is the loss of generality. It is simply the nature of our modern world that product requirements will evolve over time. The loss of generality has the potential to negatively impact the design over time.

In summary, Platform FPGAs offer speed, efficiency, and predictability advantages over software-only solutions — compelling advantages for many emerging embedded computing system projects. However, there is no universal answer to the question. As a Platform FPGA designer, part of your task includes determining when a simple microcontroller is appropriate.

Design Composition

In general, there are two ways of building digital computing machines. The first is the one that has been traditionally covered in most sophomore-level digital logic courses, which begins with logic gates. The second, which is sometimes covered in later courses, starts with higher level logic blocks from which complex systems are composed.

In the first approach, the designer begins with requirements that are translated into a specification (expressed in various forms such as Boolean expressions, truth tables, and finite state machines). From there, all of the various formal techniques are used to reduce the number of states, minimize the Boolean functions, and realize the machine with the fewest components. When we say “built from gates” this is the approach we are talking about.
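As a small illustration of this gate-level flow, the Python sketch below states a specification as a truth table and checks a minimized Boolean expression against it exhaustively. The three-input majority function is our own example, not one taken from the text, and the function names are hypothetical.

```python
from itertools import product


def majority_spec(a: int, b: int, c: int) -> bool:
    """Specification as a truth table: output is 1 for minterms 3, 5, 6, 7."""
    return (a << 2 | b << 1 | c) in {3, 5, 6, 7}


def majority_minimized(a: int, b: int, c: int) -> bool:
    """Minimized sum-of-products form: ab + bc + ac (three ANDs, one OR)."""
    return bool((a & b) | (b & c) | (a & c))


# Exhaustive comparison over all 2**3 input combinations.
equivalent = all(
    majority_spec(a, b, c) == majority_minimized(a, b, c)
    for a, b, c in product((0, 1), repeat=3)
)
print(equivalent)  # True
```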

In the second approach, the designer starts with logic blocks that have a predetermined functionality — such as decoders, n-to-1 multiplexers, and flip-flops. These components are selected by the designer and are arranged creatively to meet the requirements. Logic gates may be used but their need is diminished by the functionality provided by the other components. The second approach is what we will focus on for the remainder of this book. There are many reasons to use the first approach; however, for practical designs utilizing millions of gates, maintaining the design becomes a daunting task that is simplified by a more modular design approach.

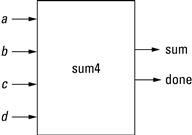

Generally speaking, there are three steps when designing modular custom cores. The first step is to identify the inputs and outputs of the core. In some designs the inputs and outputs are already set based on the functionality of the system. For example, in a bus-based system the inputs and outputs are initially fixed to at least the bus signals. Additional signals may be added based on the design (e.g., connecting an interrupt signal from the core to the processor). These signals may change through the design process, but establishing a solid interface to a top-level component will greatly assist not only in the component’s composition but also in the design of any components that eventually will use this core.

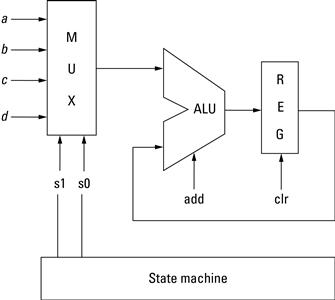

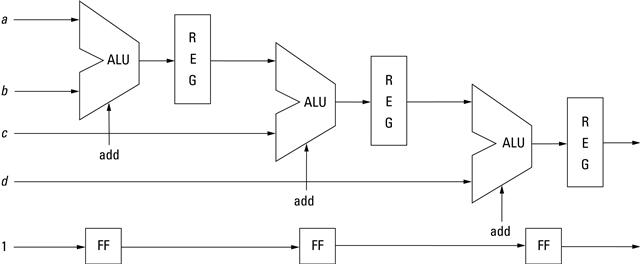

The second step is to identify the operations and compose a data path, usually a collection of multistage computations (i.e., a pipeline). Each component is designed with a particular function in mind. The exact operations needed may not be as clear during the beginning of the design phase, but determining the necessary low-level functionality (or subcomponents) allows for the construction of a data path. The data path represents the flow of data through the component. Once the flow has been established it becomes possible to construct a computation pipeline. A pipeline in hardware contributes to the performance and efficiency of the design. Capturing the stages of the pipeline may initially be difficult, but starting a design with the concept of supporting pipeline operations makes the process much more manageable.
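The benefit of organizing the data path as a pipeline can be seen in a small cycle-by-cycle model. The Python sketch below is a behavioral illustration only: the three stage functions are hypothetical, and each stage is assumed to complete in exactly one clock cycle.

```python
from typing import Callable, List, Optional

Stage = Callable[[int], int]


def run_pipeline(stages: List[Stage],
                 inputs: List[int]) -> List[Optional[int]]:
    """Clock a pipeline and record the output register after every cycle.

    There is one register after each stage; None marks a register that
    holds no valid data yet (a pipeline bubble).
    """
    regs: List[Optional[int]] = [None] * len(stages)
    outputs: List[Optional[int]] = []
    # Extra empty cycles at the end let the last inputs drain out.
    feed: List[Optional[int]] = list(inputs) + [None] * (len(stages) - 1)
    for item in feed:
        # Update from the last stage backward so that every stage consumes
        # the value its predecessor produced in the previous cycle.
        for i in range(len(stages) - 1, 0, -1):
            prev = regs[i - 1]
            regs[i] = None if prev is None else stages[i](prev)
        regs[0] = None if item is None else stages[0](item)
        outputs.append(regs[-1])
    return outputs


# Hypothetical three-stage data path: scale, offset, square.
stages: List[Stage] = [lambda v: v * 2, lambda v: v + 3, lambda v: v * v]
trace = run_pipeline(stages, [1, 2, 3, 4])
print(trace)  # [None, None, 25, 49, 81, 121]
```

The first result appears after three cycles (the pipeline latency), and after that one result completes every cycle: four inputs finish in six cycles rather than the twelve needed if each input had to pass through all three stages before the next could start.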

The third step is to develop a controlling circuit that sequences the operations, usually a finite state machine. We often think of hardware in terms of parallelism, that is, independent operations that can be executed at the same time. Parallelism is one of the keys to achieving speedup over processor-based designs. However, many designs still require computations in some sequential flow. Consider a simple equation:

$$f = x \times y + 4 \times z$$

It is possible to build hardware to multiply x*y in parallel with the multiplication of 4*z, but the addition of the two results must wait until both multiplications have completed. A finite state machine can be used to control the computation by first performing the two independent (parallel) multiplications and then performing the addition.
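The sequencing just described can be sketched as a three-state machine. The Python model below is a behavioral illustration, not a hardware description: the state names are ours, and each state is assumed to take one clock cycle, which a real multiplier may not achieve.

```python
from enum import Enum, auto
from typing import Optional, Tuple


class State(Enum):
    MULTIPLY = auto()  # both products are computed in the same cycle
    ADD = auto()       # the sum waits for the two products
    DONE = auto()


def compute(x: int, y: int, z: int) -> Tuple[int, int]:
    """Evaluate x*y + 4*z under FSM control; return (result, cycles used)."""
    state = State.MULTIPLY
    product_xy: Optional[int] = None
    product_4z: Optional[int] = None
    result = 0
    cycles = 0
    while state is not State.DONE:
        cycles += 1
        if state is State.MULTIPLY:
            # Independent operations: in hardware these are two multipliers
            # working side by side during the same clock cycle.
            product_xy = x * y
            product_4z = 4 * z
            state = State.ADD
        elif state is State.ADD:
            # Dependent operation: it needs both products from the prior state.
            result = product_xy + product_4z
            state = State.DONE
    return result, cycles


print(compute(2, 3, 5))  # (26, 2)
```

Two cycles are needed rather than three because the multiplications share a state; the addition cannot be folded into that state because it depends on their results.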

Bottom-Up and Top-Down Design

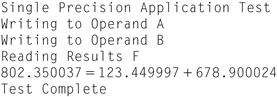

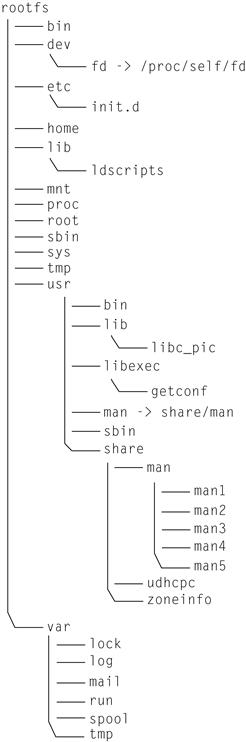

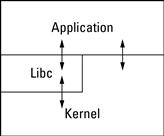

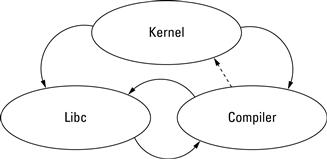

Earlier we mentioned two design approaches, bottom up and top down. In many FPGA designs, the bottom-up approach is used when assembling systems, as described previously. This same approach can be used for assembling custom compute cores. For example, when using the structural HDL method, each component is built by instantiating subcomponents. Before the top-level design can be completed, each of the subcomponents must be designed and tested. In this approach, modularity and designing for reuse are very important.
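The bottom-up flow can be mimicked in software by building and testing each level before composing the next. The Python sketch below stands in for structural HDL, which is what an actual design would use; the adder hierarchy is our own example, with each function playing the role of a component that instantiates the components beneath it.

```python
from itertools import product
from typing import List, Tuple


def half_adder(a: int, b: int) -> Tuple[int, int]:
    """Lowest-level component: returns (sum, carry)."""
    return a ^ b, a & b


def full_adder(a: int, b: int, cin: int) -> Tuple[int, int]:
    """Built from two half adders and an OR gate: returns (sum, carry out)."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, cin)
    return s2, c1 | c2


def ripple_adder(a_bits: List[int], b_bits: List[int]) -> Tuple[List[int], int]:
    """Top level: a chain of full adders; bit lists are least significant first."""
    carry = 0
    sum_bits = []
    for a, b in zip(a_bits, b_bits):
        s, carry = full_adder(a, b, carry)
        sum_bits.append(s)
    return sum_bits, carry


def to_bits(value: int, width: int) -> List[int]:
    """Helper for testing: integer to a list of bits, least significant first."""
    return [(value >> i) & 1 for i in range(width)]


# Test each level before relying on it in the level above.
assert all(half_adder(a, b) == ((a + b) & 1, (a + b) >> 1)
           for a, b in product((0, 1), repeat=2))
assert all(full_adder(a, b, c) == ((a + b + c) & 1, (a + b + c) >> 1)
           for a, b, c in product((0, 1), repeat=3))

print(ripple_adder(to_bits(5, 4), to_bits(6, 4)))  # ([1, 1, 0, 1], 0)
```

Because every subcomponent is verified on its own, a failure at the top level can be attributed to the composition rather than to the parts, and the lower-level components remain reusable in other designs.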