Managing Bandwidth

Computation Is Cheap, Bandwidth Is Everything

RCS Lab Mantra

Perhaps the most fundamental issue in building an FPGA computing system is managing the flow of data through the system. The computational resources in Platform FPGAs are enormous and, as we saw in the previous chapter, specific algorithms often have plentiful parallelism, especially when the computation is specialized and relatively small. In this case it is very easy to instantiate a large number of those function units on a single chip. However, unless we consider the rates at which the various function units consume and produce data, any potential performance gains are very likely to be lost because the function units sit idle, waiting to receive their inputs or transmit their results. Performance here includes both rate of computation and power, because an idle function unit still uses static power. There is also a correctness issue for some real-time systems: data often arrive from an instrument at a fixed rate, and failing to process them in time is frequently considered a fault.

In this chapter the learning objectives are related to examining bandwidth issues in a custom computing system.

• Starting out, Section 6.1 looks at the problem of balancing bandwidth, that is, to maximize throughput in a spatial design with the minimum amount of resources.

• Then, considering the target is a Platform FPGA, we spend time discussing the various methods used to access memory, both on-chip and off-chip, along with managing bandwidth when dealing with streaming data from instruments off-chip.

• Finally, we close this chapter with a discussion of portability and scaling with respect to a system’s performance in Section 6.3. As semiconductor technology advances, system designers are provided more configurable resources to take advantage of. The issue now is not one of “how do I add more function units?” but rather “how do I scale the bandwidth of the interconnection network?”

6.1 Balancing Bandwidth

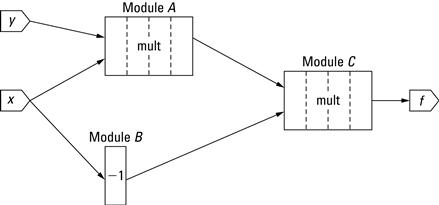

Let’s begin with a motivating example. Suppose we have a simple computation, ![](), that we need to apply repeatedly to a large number of sequential inputs. A simple spatial design of locally communicating function units is shown in Figure 6.1.

Figure 6.1 A simple network used to compute ![]().

In order to maximize the frequency of the design, it is likely that the multipliers chosen for this design will be pipelined, and for this example it is assumed that these multipliers will have four levels of pipelining. We will also assume that the ![]() unit completes in a single-cycle operation.

Now let’s analyze the timing in this example. At ![](), the inputs ![]() and ![]() are present at the inputs of modules ![]() and ![](). At ![](), ![]() has been computed and the result is present at the input of module ![](). However, the product ![]() is still being computed, so we have to stall the computation. A stall occurs in two cases: (1) whenever a computation unit has some, but not all, of its inputs or (2) when it does not have the ability to store its output, that is, the unit is prevented from proceeding because it is waiting on one or more inputs or waiting to store a result. Thus, module ![]() is stalled as well because it has to hold its result until module ![]() uses it. These units continue to stall until ![](), at which point module ![]() produces its product, which then allows module ![]() to consume both data and, for exactly one cycle, all three units can proceed in parallel. (Module ![]() is operating on the first two ![]() and ![]() values while modules ![]() and ![]() begin operating on the next two ![]() and ![]() values.)

Clearly, this pattern is going to repeat and modules ![]() and

and ![]() will stall again until

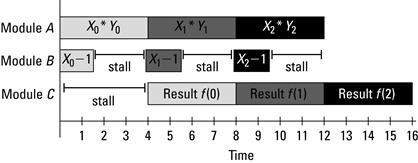

will stall again until ![]() . This is shown graphically in Figure 6.2. So if we have

. This is shown graphically in Figure 6.2. So if we have ![]() number of

number of ![]() and

and ![]() inputs, the network will take

inputs, the network will take ![]() cycles to compute all of the results. This is undesirable because one-quarter of our module cycles are spent waiting for data!1

cycles to compute all of the results. This is undesirable because one-quarter of our module cycles are spent waiting for data!1

Figure 6.2 Illustration of the stalls example.

Even though FPGA resources have been allocated to these units, they are not contributing to the solution when they are idle. Moreover, they are continuously consuming static power — even if the transistors are not changing values. Finally, the throughput (one result every four cycles) is not much better than a time-multiplexed solution and probably slower than a general-purpose processor (which will have a faster clock frequency). What we aim for is a throughput of one result every cycle.

Readers may already recognize the solution to this problem. By adding a four-stage FIFO or buffer into the network, we can make this computation produce a new result every cycle after ![](). There is more than one place to insert these buffers, but Figure 6.3 shows three buffers following the results of module ![](). The introduction of buffers so that a network of computations does not stall is known as pipeline balancing. Chapter 5 discussed pipelining, both in terms of between compute cores and within individual computations. Implicit is the problem of how to best balance the pipeline. This problem is further complicated when dealing with larger systems that span multiple compute cores operating at different frequencies. Through the rest of this chapter we aim to address these problems with respect to Platform FPGA designs.

Figure 6.3 High-throughput network to compute ![]().

6.1.1 Kahn Process Network

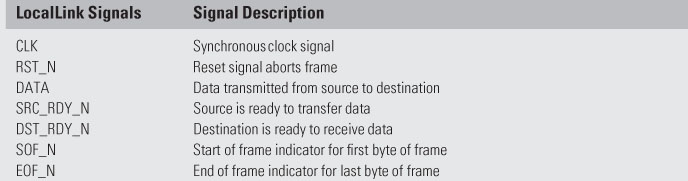

In the previous stalls example, we built our network of computational components in a style known as a Kahn Process Network (KPN) (Kahn, 1974). In a KPN, one or more processes are communicating through FIFOs, with blocking reads and nonblocking writes. By this we mean it is assumed that the system is designed to support writing into a FIFO without the FIFO becoming full and losing data. Graphically, a KPN resembles a conventional streaming architecture where the source writes to the destination, as seen in Figure 6.4. Here, each node (circle) represents a process and each edge (![](), ![](), ![]()) between the nodes is a unidirectional communication channel.

Figure 6.4 Kahn process network diagram of a simple single precision floating-point system.

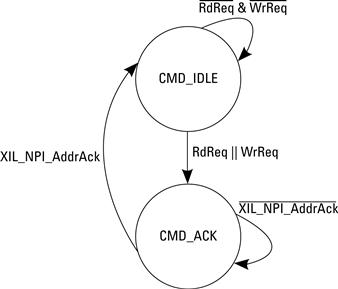

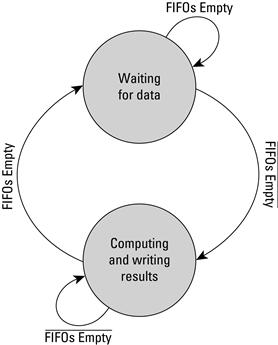

The network, which is represented as a directed graph, is also called a data-flow graph. As part of the example, we mentioned that the operation only begins when all of its inputs are available and there is a place for it to write its output. This requirement is called the data-flow firing rule. A short finite state machine, as seen in Figure 6.5, can be implemented to support the data-flow firing rule.

Figure 6.5 Wait/compute states of Kahn process network finite state machine.

We already implemented a Kahn process network in Section 3.A when we built the single precision floating-point adder. In that example, before processing data (performing the addition), we had to wait not only until both operands had arrived in their FIFOs, but also until the result FIFO was ready to receive the sum and the adder was ready for new inputs. Had we not checked the status of the result FIFO, the system could have lost data: the adder would have consumed operands from the input FIFOs without being able to store the results.
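The firing rule can also be modeled in software. The following is a minimal C sketch, not the Section 3.A hardware; the `fifo_t` type, its depth, and all function names are hypothetical and chosen only for illustration. The adder fires only when both operand FIFOs hold data and the result FIFO has room; otherwise it remains in the wait state and consumes nothing.

```c
#include <stdbool.h>
#include <stddef.h>

#define FIFO_DEPTH 4  /* assumed depth, for illustration only */

/* Hypothetical fixed-depth FIFO implemented as a ring buffer. */
typedef struct {
    float  data[FIFO_DEPTH];
    size_t head;   /* index of the oldest element */
    size_t count;  /* number of elements stored   */
} fifo_t;

bool fifo_empty(const fifo_t *f) { return f->count == 0; }
bool fifo_full(const fifo_t *f)  { return f->count == FIFO_DEPTH; }

/* Push returns false (and stores nothing) when the FIFO is full. */
bool fifo_push(fifo_t *f, float v)
{
    if (fifo_full(f)) return false;
    f->data[(f->head + f->count) % FIFO_DEPTH] = v;
    f->count++;
    return true;
}

/* Pop returns false (and leaves *v untouched) when the FIFO is empty. */
bool fifo_pop(fifo_t *f, float *v)
{
    if (fifo_empty(f)) return false;
    *v = f->data[f->head];
    f->head = (f->head + 1) % FIFO_DEPTH;
    f->count--;
    return true;
}

/* Data-flow firing rule: all inputs present and room for the output. */
bool can_fire(const fifo_t *a, const fifo_t *b, const fifo_t *out)
{
    return !fifo_empty(a) && !fifo_empty(b) && !fifo_full(out);
}

/* One step of the wait/compute machine; returns true if the adder fired. */
bool adder_step(fifo_t *a, fifo_t *b, fifo_t *out)
{
    float x, y;
    if (!can_fire(a, b, out)) return false;  /* wait state: consume nothing */
    fifo_pop(a, &x);
    fifo_pop(b, &y);
    fifo_push(out, x + y);                   /* compute state */
    return true;
}
```

Because `can_fire` is checked before either operand is popped, a full result FIFO leaves both input FIFOs untouched, which is the data-loss case the status check guards against.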

An additional feature found in our single precision addition system is the ability to check the status of each FIFO, whether they were full or empty, in parallel to the FIFO read and write operations. This is in contrast to a conventional software implementation where the status of the FIFO would first need to be read prior to any operations occurring. As a result, in a software implementation, these sequential operations can add extra latency to the system. Through the explicit parallelism with FPGAs we can begin to see and understand the effects that something as simple as independent FIFO operations have on the efficiency of the system.

While the Kahn process network supports the unidirectional flow of data from a source to a final destination, it does not offer any mechanism to provide feedback between compute processes. The requirement of “unbounded” FIFOs between processes makes for challenging designs in systems where FIFOs are a fixed resource that cannot be reallocated. With FPGAs, once Block RAM has been allocated as a FIFO and the design is synthesized and mapped to the device, that memory is fixed to that process. Instead, we will see that Platform FPGA designs can quickly incorporate the Kahn process network with fixed on-chip memory resources by including feedback between the compute cores. This approach is discussed within both Section 6.A and Chapter 7.

6.1.2 Synchronous Design

In a systolic data-flow implementation, every operation shares the same clock and data are injected into the network every clock cycle. If we can determine at design time that all data will arrive at the inputs at the right time (as was done in the second implementation of the network in the example), then we can avoid checking the data-flow firing rules and we get maximum throughput. Moreover, the FIFOs used in the example can be replaced with a smaller chain of buffers. This is called a synchronous design.

6.1.3 Asynchronous Design

In contrast, the asynchronous design strategy uses simple finite state machines to read inputs, drive the computation, and write the outputs. This requires additional resources, but has the benefit that there is no global clock, so the design can be partitioned across different clock domains. If the buffers are added as in the second implementation example, then it too has the benefit of getting maximum throughput.

In both synchronous and asynchronous cases, we have assumed that each operation takes a fixed number of clock cycles. However, the model can be extended to include variable-latency operations by designing for the maximum latency, which always gives maximum throughput. The asynchronous design can instead be designed for the most frequent latency, which usually gives maximum throughput.

The key to both of these design strategies is that FIFOs have the correct minimum depth. However, we do not want to waste resources, especially memory resources that are relatively scarce on an FPGA device. So the key to this problem is to find the minimum number of buffers needed to maximize throughput. The next section investigates how to best utilize the bandwidth of the FPGA in terms of on-chip communication, off-chip memory, and streaming instrument inputs.
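For a single join point, the minimum buffering follows directly from the path latencies: each input path needs enough delay stages to match the slowest path into the same unit. The following is a minimal C sketch of that calculation, assuming fixed latencies measured in clock cycles. The function names are hypothetical, and the sketch covers only one join, not a general data-flow graph.

```c
#include <stddef.h>

/* Largest latency (in cycles) among the paths feeding one unit. */
unsigned max_latency(const unsigned *latency, size_t n_paths)
{
    unsigned max = 0;
    for (size_t i = 0; i < n_paths; i++)
        if (latency[i] > max) max = latency[i];
    return max;
}

/*
 * For each input path, compute how many buffer stages must be added so
 * that every operand reaches the consuming unit in the same cycle.
 * Returns the total number of buffer stages added across all paths.
 */
unsigned balance_join(const unsigned *latency, unsigned *buffers, size_t n_paths)
{
    unsigned slowest = max_latency(latency, n_paths);
    unsigned total = 0;
    for (size_t i = 0; i < n_paths; i++) {
        buffers[i] = slowest - latency[i];  /* delay needed to match slowest path */
        total += buffers[i];
    }
    return total;
}
```

With latencies of one cycle and four cycles, as for the single-cycle unit and the four-stage multiplier in the stalls example, the sketch assigns three buffer stages to the faster path and none to the multiplier path.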

6.2 Platform FPGA Bandwidth Techniques

As with the partitioning problem in Chapter 4, the analytical solution is most useful as a general guide to solving the problem rather than an automatic tool. Unfortunately, just as before, practical issues complicate the clean mathematical analysis. This section considers two places where these techniques can be applied and then considers some practical issues. First, we will look at integrating designs with on-chip and off-chip memory. A variety of memory types and interfaces exist and understanding when each is applicable is important to Platform FPGA designs.

Then, we will consider data streaming into the FPGA from some instrument. For practical embedded systems designs the “instrument” may be a sensor (such as temperature or accelerometer), some digitally converted signal (say from an antenna or radar), or even low-speed devices such as keyboards and mice. In some cases the instrument may need to use off-chip memory as a buffer or as intermediate storage, so while these two sections are presented individually, designs may require that the two functionalities be combined together. Clearly, we cannot discuss every type of instrument; for brevity we focus our attention on high-speed devices, which cause tighter constraints in FPGA designs.

6.2.1 On-Chip and Off-Chip Memory

Most embedded systems will require some amount of on-chip and/or off-chip memory (RAM) because many modern systems are data-intensive. This often means that the designer has to pay some attention to the memory subsystem. As mentioned earlier, the FPGA fabric does not include the embedded caches found in modern processors.2 As a result, the designer must be aware of the system’s memory requirements in order to implement a suitable interface to memory and avoid creating a memory bottleneck.

Memory requirements differ between software and hardware designs. In software there is less of an emphasis on where data are stored so long as they are quickly available to the processor when they are needed. When writing an application, the programmer does not typically specify in which type of memory (disk, Flash, RAM, registers) data should reside. Instead, a more conventional memory hierarchy, depicted in Figure 6.6, is used. This is the typical hierarchy covered in computer organization textbooks. The ![]() axis refers to capacity and the ![]() axis refers to access time. Nonvolatile storage such as hard drives provides greater capacity, but at the cost of performance; access times are typically in the millisecond range. Volatile storage such as off-chip memory provides less capacity than disk, but typically with access times at least an order of magnitude faster. Cache and registers occupy the peak, providing small but fast storage. We mention this because, unlike programmers targeting general-purpose processors, who are able to exploit caches and the system’s memory hierarchy, Platform FPGA designers must either construct custom caches or rely upon a modified memory hierarchy.

Figure 6.6 Traditional memory hierarchy comparing storage capacity to access times.

From a custom compute core’s perspective, we modify the drawing of the memory hierarchy for Platform FPGAs by removing the cache and replacing it with user-controlled local and remote on-chip memory (storage), as shown in Figure 6.7. On-chip memory is considered local storage when the memory resides within a custom compute core, maybe as Block RAM. Remote storage is still on-chip memory, except that it does not reside within the compute core. An example of this is on-chip memory that is connected to a bus, accessible to any core through a bus transaction. There is a fine line between local and remote storage. In fact, the locality is relative to the accessing core. If compute core A needed to read data from compute core B’s local memory, we would say that A’s remote memory is B’s local memory.

Figure 6.7 FPGA compute core’s memory hierarchy with local/remote storage in place of cache.

Figure 6.8 shows the various memory locations from a compute core’s perspective. Here, the register’s values are valid to the compute core immediately for read or write access. Local storage access is short, within a few clock cycles, whereas remote storage is longer, within a few tens of clock cycles. In the example the compute core would need to traverse the bus in order to retrieve remote storage data. Finally, we show the off-chip memory controller to provide access to off-chip memory. Access times to off-chip memory range in the tens to hundreds of clock cycles since the request travels off-chip.

Figure 6.8 Various memory locations with respect to an FPGA’s compute core with access times increasing from registers, to local storage, to remote storage, and finally to off-chip memory.

While physically there is a difference between cache and on-chip local/remote storage, the concept of moving frequently used data closer to the computation unit remains the same. The difference is the controlling mechanism. For caches, sophisticated controllers support various organizations and replacement policies, such as direct-mapped or set-associative placement and least recently used replacement. In Platform FPGA designs we are left to implement our own controller. This may seem like a lot of additional work, but keep in mind that many custom compute cores do not follow conventional memory access patterns or protocols, so creating custom controllers may actually be necessary to achieve higher computation rates.

Unlike most software programmers, hardware designers must be aware of the physical location of data to be processed. We must know whether data are in registers ready to be computed upon, in on-chip memory requiring reads through a standard interface such as a Block RAM, or in off-chip memory requiring additional logic (and time) to retrieve data. In each of these cases, the mechanism to access data may differ. Consider reading data from a register; data are always able to be accessed (and modified), typically within a single clock cycle. The designer references the register by name rather than by address. In contrast, consider how to access data from an on-chip memory, such as Block RAM. When using on-chip memories, we must include a set of handshaking signals. These signals may consist of address, data in, data out, read enable, and write enable. In this case, the memory is located within the compute core. This allows for fast access from the compute core, but can limit access by other compute cores. This is similar to a nonshared level 1 cache in terms of accessibility. Read and write access times are typically within one to two clock cycles.

The Block RAM can also be connected to the bus to provide more compute cores access to the memory. This comes at a cost of traversing the bus. The memory is still on-chip, but the interface to access the memory changes to a bus request. We mention this case because it more closely models one implementation for interfacing with off-chip memory, namely through a bus. However, for off-chip memory, instead of a simple bus interface to translate bus requests into Block RAM requests, additional logic is required to correctly signal the physical off-chip memory component.

Off-Chip Memory Controllers

The additional logic is in the form of a memory controller. In Platform FPGA designs, memory controllers reside on-chip as a soft core that interfaces between off-chip memory and the compute cores. The memory controller is responsible for turning memory requests into signals sent off-chip. This frees the designer from adding that signaling complexity to every compute core needing access to off-chip memory and, when multiple cores need off-chip access, from having to multiplex between their requests.

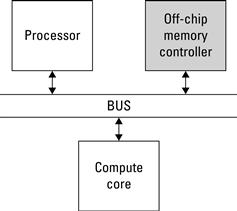

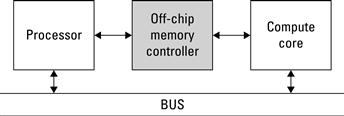

Because the memory controller is a soft core, it is possible to construct different interfaces to both off-chip memory and on-chip compute cores. For example, in Figure 6.9, the memory controller is connected directly to the processor. Alternatively, the memory controller could be connected to a shared bus to allow other cores to access off-chip memory, as seen in Figure 6.10. Similarly, some memory controllers can provide direct access to more than one component; Figure 6.11 shows both the processor and the compute core interfacing to the memory controller directly.

Figure 6.9 The processor is connected directly to the memory controller.

Figure 6.10 The memory controller is connected to a shared bus to support requests by any core on the bus.

Figure 6.11 The processor and compute core are connected directly to the memory controller.

A conventional system may use a bus-based memory controller to provide the greatest flexibility in the design, allowing any master on the bus to read or write to off-chip memory. In Platform FPGA designs this type of access may suffice for many components, but providing a custom compute core with the ability to access memory directly can yield significant performance advantages that are otherwise unrealizable in traditional microprocessor designs. We discuss how memory accesses differ with these physical implementations shortly.

Memory Types

Up until now we have ignored the type of off-chip memory we have chosen to interface with. When purchasing development boards, vendors often populate the boards with one or more types of memory. These may include DDR SDRAM, DDR2 SDRAM, SRAM, Flash memory, EEPROM, etc. Those less familiar with these memories may be wondering how they differ. For example, SDRAM and SRAM differ in terms of latency, capacity, and, maybe most noticeably, cost (SRAM costs are significantly greater than SDRAM). SDRAM and Flash memory differ in terms of volatile versus nonvolatile (long-term) storage and in access time. With such a wide variety of memory, we must familiarize ourselves with the different memory controllers. This task may seem daunting, and for designers who are required to build a memory controller from scratch, it can be. However, in many cases, memory controllers have already been designed that can be instantiated from a repository/library of soft cores. This is one of the strongest benefits of designing with Platform FPGAs: using commodity off-the-shelf components (in our case hardware cores) whenever possible in a design.

When starting a design, it is common to begin with a development board that may contain various necessary and unnecessary peripherals. Vendors typically supplement these development boards with a repository of IP cores, which allows systems to be assembled rapidly. Within these repositories exist different memory controllers that can be instantiated within the design to provide access to the development board’s off-chip memory. Ultimately, a design will move away from the development board to a custom design, which may mean a different type (or capacity) of memory.

Fortunately, with some adjustments and modifications to the generics of the memory controller hardware core, different memory can be used [for example, using error correcting code (ECC) logic if the SDRAM DIMM supports it or not]. The goal of this section is not to teach you how to build a memory controller from scratch, but to understand how the different memory controller interfaces can affect a design. Specifically, we are interested in the memory access associated with these different interfaces. This is a shift from traditional processors where all of the computation is done by the processor, so one type of memory access may be all that is necessary. In Platform FPGA designs we can incorporate many different memory access types to support lower resource utilization, lower latency, or higher bandwidth memory requirements when needed. Moreover, we can provide some compute cores with high bandwidth access to memory while limiting memory access to other cores.

Memory Access

How the memory controllers are connected and used can have a dramatic effect on the system, even if a highly efficient memory controller and type of memory is used. It is up to the designer to understand how to connect the memory controller in order to meet the memory bandwidth needs. In Platform FPGA terms, the important trade-off is resource utilization.

Programmable I/O

For starters, the processor can handle transfers between memory and compute cores. With programmable I/O the processor performs requests on behalf of the compute core. This approach uses a limited amount of resources. The requirement is that each compute core be located on the same bus as the processor. The processor can read data from memory and write it to the compute core, or the processor can read data from the compute core and write it to memory. In this situation, the processor plays the central communication role. As a result, the processor may end up performing less computation while performing these memory transactions on behalf of the compute cores.

The effect is less noticeable as the amount of computation performed in the FPGA fabric increases, reducing the amount of computation being performed by the processor. In practice, there are a variety of ways to pass data among the processor, memory, and compute core. From the processor’s perspective, both memory and the compute core are slaves on the system bus with a fixed address range.

A software approach, such as C/C++, could involve pointers where the processor would access data stored in off-chip memory and then pass it to the compute core. We can also use pointers to provide array-indexed access to off-chip memory. Figure 6.9 depicts the system design used to connect the processor, memory, and compute core to allow data to be transferred from off-chip memory to a custom compute core.

Listing 6.1 provides a simple example of a stand-alone C application to read data from off-chip memory and transfer it to a compute core. We assume that the address space of the off-chip memory controller begins at address 0x10000000 and that the compute core begins at address 0x40000000. The functionality of the compute core is irrelevant for this example; just assume that writing data to its base address is how the processor interfaces with the compute core. Accessing memory as an array, mem_ptr[i], reduces the complexity associated with pointers. In this case, because both pointers were defined as type int (and we assume the processor is a 32-bit processor), incrementing the pointer results in reading from and writing to the next 32-bit data word.
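A minimal sketch of such a transfer loop, assuming the two base addresses given above, might look like the following. It is not the original listing: the names `MEM_BASE`, `CORE_BASE`, and `pio_copy` are hypothetical, and the `volatile` qualifier is our own addition so that a compiler does not optimize away accesses to memory-mapped hardware.

```c
#include <stddef.h>

/* Base addresses assumed in the text; only meaningful on the target system. */
#define MEM_BASE  0x10000000u  /* off-chip memory controller */
#define CORE_BASE 0x40000000u  /* custom compute core        */

/*
 * Programmed I/O: the processor reads each word from memory and writes
 * it to the compute core. With int pointers on a 32-bit processor, index
 * i addresses the i-th 32-bit word from each base address.
 */
void pio_copy(volatile const int *mem_ptr, volatile int *core_ptr, size_t n_words)
{
    for (size_t i = 0; i < n_words; i++)
        core_ptr[i] = mem_ptr[i];
}
```

On the target, a call might take the form `pio_copy((volatile const int *)MEM_BASE, (volatile int *)CORE_BASE, 256);`, where the length of 256 words is arbitrary. On a host machine the same function can be exercised with ordinary arrays, since those addresses are not mapped there.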

DMA Controller

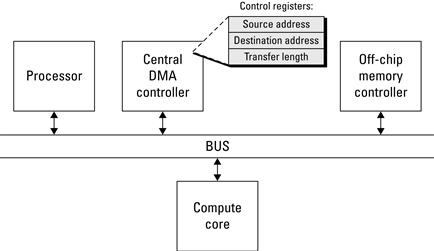

As a simple solution to the memory transfer problem, the processor doing the work may be acceptable; however, it is certainly not efficient. The time the processor spends reading and writing data is time that could be spent performing other computations. Most modern processors circumvent this problem by introducing Direct Memory Access (DMA) to perform memory transactions in place of the processor. There are variations on the implementation, but the basic idea is that the processor issues a request to the DMA controller, which then performs the memory transaction. This frees the processor to perform other computations while the DMA controller is operating. This works by allowing the DMA controller to act as both a bus slave and a bus master. As a slave, the DMA controller responds to requests from the processor (or any other bus master) to set up a memory transaction. Then, as a master, the DMA controller arbitrates for the bus and communicates with the memory controller to complete the memory transaction. The order of communication depends on the direction of the transfer (memory read versus memory write), so it is possible to issue a read from a compute core and write data to off-chip memory. To set up the transaction, the DMA controller needs at least the following information: source address, destination address, and transfer length. The source address is where data should be read from, the destination address is where data are to be written to, and the transfer length is the number of bytes in the transfer. Figure 6.12 is a block diagram representation of a simple system, including a processor, DMA controller, memory controller, and compute core.

Figure 6.12 Central DMA controller to offload memory transactions from the processor.
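The three pieces of setup information can be pictured as a small register block that the processor programs while the DMA controller is acting as a slave. The layout below is a hypothetical sketch, not the register map of any particular vendor's DMA core; the field names, the `DMA_START` bit, and `dma_setup` are assumptions made for illustration.

```c
#include <stdint.h>

/* Hypothetical register block of a simple DMA controller. */
typedef struct {
    volatile uint32_t src_addr;  /* where data are read from      */
    volatile uint32_t dst_addr;  /* where data are written to     */
    volatile uint32_t length;    /* transfer length in bytes      */
    volatile uint32_t control;   /* bit 0 starts the transfer     */
} dma_regs_t;

#define DMA_START 0x1u  /* assumed "go" bit in the control register */

/*
 * Slave-side setup performed by the processor. After the start bit is
 * written, the controller would act as a bus master and move the data
 * while the processor continues with other work.
 */
void dma_setup(dma_regs_t *dma, uint32_t src, uint32_t dst, uint32_t n_bytes)
{
    dma->src_addr = src;
    dma->dst_addr = dst;
    dma->length   = n_bytes;
    dma->control  = DMA_START;  /* written last, once the others are valid */
}
```

Writing the control register last is a common convention in this kind of interface, so that the controller never starts with a partially programmed transfer; a real core may impose its own ordering or status checks.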

Bus-Based DMA Interface

While the DMA controller may add an improvement in performance by reducing the overall involvement by the processor, there are still drawbacks. In the programmable I/O implementation the processor’s compute capacity is degraded when it performs the memory transactions on behalf of the compute core. Recalling the example (Listing 6.1) where during the for-loop the processor is acting as a middleman between memory and the compute core, we can free the processor from much of this responsibility by adding a DMA controller to perform the bulk of the work for each memory transaction. This approach is an improvement, but the processor is still involved and data must still be passed between the DMA controller and the compute core.

We can improve this further by giving each compute core the ability to issue transactions to memory independently. This is still considered DMA, but instead of a centralized DMA controller performing transactions, each compute core can independently issue its own read or write transactions to the memory controller. With a bus connecting the compute cores and memory controller, the arbiter for the bus becomes the communication controller, managing requests for bus access to issue memory transactions. The memory controller still only sees a single transaction at a time (note that some memory controllers can handle more than a single transaction at a time).

To support DMA within a custom compute core, additional logic must be added to the core’s interface. Typically, a core can be viewed as a bus slave, responding to requests from other cores on the bus. The example of programmable I/O where the processor writes data to the compute core is an example of this interface. A slave device cannot issue requests on the bus; in order to do so we must make the device a bus master. It is possible for a core to be a slave, a master, or both a slave and a master. Practically speaking, however, a core is usually either only a bus slave or both a bus slave and a master. The processor is one of the few bus master-only cores.

That being said, for DMA support we only need a bus master, but we will include a bus slave to allow the processor and other compute cores to still communicate with the core. Because the bus interface depends on the actual bus used in the design, the specifics of the finite state machine needed to incorporate a bus master into a custom compute core will be covered in Section 6.A. From a hardware core’s perspective, a master transaction involves asserting the request to the bus arbiter, waiting for the arbiter to grant bus access, and waiting for the request to complete.

We mention bus master information because the memory controller is viewed as a slave on the bus. Once the memory controller responds to the bus transaction, the next core can issue its memory request across the bus. This approach relieves the processor of performing memory transactions on behalf of compute cores and can improve performance by allowing a transaction to traverse the bus only once (previously, the processor would fetch data and then pass data to the compute core, requiring two trips across the bus). Requests to the memory controller are still performed sequentially by the bus, but any core that can be granted access to the bus can issue requests.

Direct Connect DMA Interface

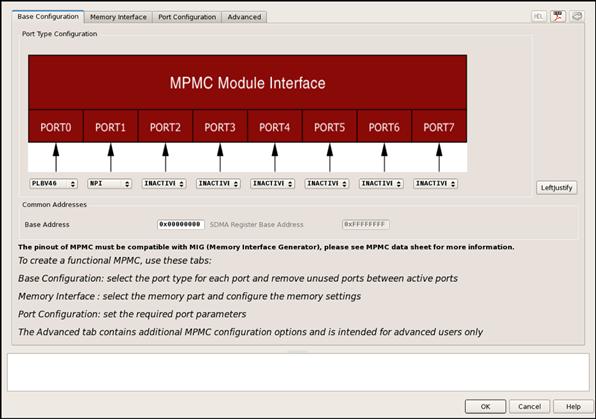

In some situations it may not be necessary for every core to access off-chip memory. While we could create bus masters and bus slaves accordingly, a larger question should be addressed, namely, why not directly connect compute cores to the memory controller? This approach avoids contention for the bus, especially from other cores that need the bus but do not need access to the memory controller. Overall, this results in lower latency and higher bandwidth transactions to memory. A direct connect to the memory controller does require additional resources, both for the compute core and the memory controller. Some vendors supply memory controllers with multiple access ports (Xilinx offers a multiport memory controller, MPMC). Section 6.A presents one custom direct connect interface for the Xilinx MPMC known as the Native Port Interface.

By circumventing the bus, memory transactions no longer need to arbitrate for the bus, which reduces latency. In designs that require low latency, this may be the only way to meet the timing requirements. In some designs, only a few compute cores may need direct access to memory, and under these conditions it is feasible to directly connect the cores to the memory controller. As the number of cores needing access to memory increases, using a bus-based DMA interface is more appropriate, as each direct connect DMA interface requires resources that would normally be shared by a bus implementation.

One mechanism used to hide latency to off-chip memory is double buffering. Double buffering refers to requests that are made while the previous request is still in transit. This allows the memory controller to begin responding to the second request while the first request finishes. During read requests from memory, using double buffering can result in a best-case effective zero latency access for all requests after the first request.
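The benefit of double buffering can be estimated with a simple timing model. The sketch below is only illustrative: it assumes every request has the same latency and transfer time and that the next request is issued as soon as data for the current one begins to arrive. The function and parameter names are hypothetical and do not correspond to any vendor interface.

```python
def total_cycles(num_requests, latency, transfer, double_buffered):
    """Estimate cycles to complete a sequence of identical read requests.

    latency:  cycles from issuing a request until its first datum arrives
    transfer: cycles spent moving the data for one request
    """
    if num_requests == 0:
        return 0
    if not double_buffered:
        # Each request waits out its full latency before any data moves.
        return num_requests * (latency + transfer)
    # The next request is in flight while the current data is transferred,
    # so only the part of the latency that exceeds the transfer time
    # remains visible after the first request.
    exposed = max(0, latency - transfer)
    return latency + transfer + (num_requests - 1) * (exposed + transfer)


serial = total_cycles(8, latency=20, transfer=32, double_buffered=False)
overlapped = total_cycles(8, latency=20, transfer=32, double_buffered=True)
print(serial, overlapped)  # 416 276
```

In this assumed case the latency is shorter than the transfer time, so every request after the first sees an effective latency of zero, which is the best case described above.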

Memory Bandwidth

When implementing designs we must be aware of the memory requirements before building the system. One important consideration mentioned earlier in this chapter is bandwidth. Bandwidth can be calculated based on the operating frequency and data width. For example, a bus operating at 100 MHz over 64-bit data words offers:

$$100\ \text{MHz} \times 64\ \text{bits} = 6400\ \text{Mbit/s} = 800\ \text{MB/s}$$

Calculating the bandwidth requirements of a compute core can help identify what type of memory controller and interconnect is suitable for the design.
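As a minimal sketch of this calculation, the helper below multiplies operating frequency by data width, assuming one word is transferred per clock cycle. The function name and the example core parameters are illustrative assumptions.

```python
def bandwidth_bytes_per_sec(freq_hz, width_bits):
    """Peak bandwidth of an interface that moves one word per clock cycle."""
    return freq_hz * width_bits / 8


# A 100 MHz, 64-bit bus.
bus = bandwidth_bytes_per_sec(100_000_000, 64)

# A hypothetical compute core consuming one 32-bit word per cycle at 50 MHz.
core = bandwidth_bytes_per_sec(50_000_000, 32)

print(f"bus:  {bus / 1e6:.0f} MB/s")
print(f"core: {core / 1e6:.0f} MB/s")
print("bus can feed the core:", core <= bus)
```

Comparing the two figures in this way gives a first indication of whether a given interconnect can supply a core before latency and contention are taken into account.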

In addition to raw bandwidth, we must consider setup times and latency. When a compute core initiates a transfer, depending on the interconnect, the request may need to wait to be granted access to master the bus, wait for the memory controller to complete its current transaction, or wait for data to be returned. To improve this latency would require a more directly connected interface to memory and/or operating the memory controller at a higher frequency, allowing it to perform more operations in the same time period.

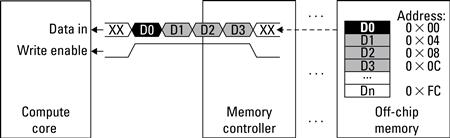

Alternatively, a core may issue burst transfers. A burst transfer allows the compute core to issue a single request to the memory controller to read or write multiple sequential data words, as depicted in Figure 6.13. This reduces the number of total memory transactions needed by allowing a single transaction to span more than a single data word and can save a significant amount of time when transferring large amounts of data. In a burst transfer the latency for the first datum is still the same, but each datum after the first arrives in a pipelined fashion, one datum per clock cycle. For example, a burst transfer of four 32-bit sequential data words would take the initial latency time for the first word, denoted here as $t_{\text{latency}}$, plus three clock cycles for the remaining three words:

$$t_{\text{burst}} = t_{\text{latency}} + 3\ \text{clock cycles}$$

Figure 6.13 Burst transfer of four 32-bit sequential data words from off-chip memory to a compute core.

There are, unfortunately, two limitations to burst transfers. The first is data must be in a contiguous memory region. A burst transfer consists of a base address and a burst length. Data are transferred starting from the base address up to the burst length. The second is that the length may be limited by an upper bound, requiring multiple burst transactions for such large request lengths. As of this writing, Xilinx imposes a 16-word upper bound on memory requests across a bus and through a direct connect to off-chip memory.
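Both limitations can be illustrated with a short sketch that splits a contiguous region into bursts no longer than an upper bound and estimates the transfer time. The 16-word limit is taken from the text; the function names, the base address, and the 10-cycle first-word latency are assumptions chosen for illustration.

```python
MAX_BURST_WORDS = 16  # upper bound quoted in the text; device specific


def split_into_bursts(base_addr, num_words, word_bytes=4,
                      max_burst=MAX_BURST_WORDS):
    """Return (address, length) pairs covering a contiguous region."""
    bursts = []
    addr, remaining = base_addr, num_words
    while remaining > 0:
        length = min(remaining, max_burst)
        bursts.append((addr, length))
        addr += length * word_bytes
        remaining -= length
    return bursts


def transfer_cycles(bursts, first_word_latency):
    """Each burst pays the initial latency once, then one cycle per extra word."""
    return sum(first_word_latency + (length - 1) for _, length in bursts)


bursts = split_into_bursts(0x1000, 40)   # 40 words of 32 bits each
burst_time = transfer_cycles(bursts, first_word_latency=10)
single_time = transfer_cycles([(0, 1)] * 40, first_word_latency=10)
print(bursts)
print(burst_time, single_time)  # 67 400
```

Under these assumptions, 40 words need three bursts (16, 16, and 8 words) and 67 cycles, compared with 400 cycles when every word is requested individually.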

To summarize, what we are trying to emphasize is that unlike traditional microprocessors, Platform FPGAs provide the flexibility to solve complex bandwidth needs. The flexibility sometimes comes at a price, often in terms of resources or programmability. Understanding when and how to choose the right combination is a responsibility the system designer must not take lightly.

6.2.2 Streaming Instrument Data

So far we have focused on interfacing with on/off-chip memory, but many embedded systems designers face situations where the system must incorporate an application-specific instrument. An exotic example is a system with a number of science instruments flying on a satellite circling the Earth. These instruments are usually “dumb” in that they incorporate very little control and often have very little storage. Once turned on, they begin generating data at a fixed rate. It is up to the computing system to process this data and transmit it to the receiving station within a specific time frame (in this case, when the satellite is over the receiving station on Earth). Unlike the off-chip memory problem where the consequence of a poor memory hierarchy is bad performance, the consequence of not being ready to accept data from an instrument is lost data. Data can be lost in two ways: if the device cannot process data arriving from the instruments fast enough or if the device cannot transmit the computed results back (to Earth, in this example) within the requisite amount of time.

There are plenty of less exotic examples as well. Embedded video cameras and other embedded systems sensors have similar characteristics. Data arrive at some fixed rate and must be processed, otherwise they may be lost. For commercial components, failure to do so results in loss of sales and unsatisfied customers.

These examples essentially break down to bandwidth issues: can the FPGA perform the necessary computation in the specified time period and, if not, how can we buffer data so as to not lose it? This is the focus of this section, how to support high bandwidth instruments. Chapter 7 explores a variety of interconnects that can be used to interface with external devices. In this chapter it is all about bandwidth, how to plan for it, and how to use it. We also are interested in using on-chip and off-chip memory intelligently for short-term storage when the need arises to buffer data.

Many instruments have a fixed sample rate, say, one sample every 10 milliseconds, and the device must operate on each new sample as it arrives. The designer can calculate the exact amount of time allotted to processing data and work to design a compute core accordingly. As the sample rate increases, the amount of time to process data decreases unless the designer prepares the system to be pipelined as described in the previous chapter.

Traditionally, we have considered pipelines as a mechanism to achieve higher overall throughput in general-purpose computers. Here we use them not only to achieve high throughput, but to alleviate the tight timing constraints that may be placed on a designer with a high sampling rate. The requirement on pipelining is that the sampling rate (arrival rate of new data) not exceed the rate at which the slowest stage in the pipeline can accept new data; in other words, the time between samples must be at least as long as the delay of the slowest stage. Even so, this requirement can be circumvented if each stage is pipelined as well; however, at this point it becomes a granularity debate as to what is a stage in the pipeline. With the use of pipelines we can view the flow of data from the instrument through each compute core and finally to its destination as a stream. FPGAs can efficiently handle multiple streams in parallel at very high frequencies, which is what has attracted so many designers away from microprocessors.
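The pipelining requirement can be checked with a few lines of code. This is a sketch under simplifying assumptions: stage delays are fixed, each stage hands off its result as soon as it completes, and the stage delays listed are hypothetical values.

```python
def pipeline_keeps_up(sample_period_ns, stage_delays_ns):
    """True if a new sample can enter the pipeline every sample period."""
    return max(stage_delays_ns) <= sample_period_ns


def pipeline_latency_ns(stage_delays_ns):
    """Time for one sample to traverse every stage in sequence."""
    return sum(stage_delays_ns)


stages = [40, 100, 60]                   # hypothetical stage delays in ns
print(pipeline_keeps_up(100, stages))    # slowest stage is 100 ns
print(pipeline_keeps_up(80, stages))     # the 100 ns stage falls behind
print(pipeline_latency_ns(stages))       # total latency exceeds one period
```

Note that the total latency (200 ns here) may exceed the sample period without losing data; only the slowest stage limits the sustainable arrival rate.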

When working with more than a single instrument or input, we must now pay attention to the additional complexities. At the beginning of this chapter we spoke about balancing bandwidth with the use of buffers. By knowing the input rate for the instruments, we can calculate the size and depth of the buffers needed to balance the computation. In addition to using on-chip memories as buffers, these memories can be used to cross clock domains.
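As one illustration of sizing a buffer from known rates, the sketch below estimates the FIFO depth needed to absorb a single burst of input. It assumes the instrument delivers a burst at a constant rate, the consumer drains at a lower constant rate starting when the burst begins, and the gap before the next burst is long enough to empty the FIFO. The function name and rates are hypothetical.

```python
def fifo_depth(burst_words, in_rate, out_rate):
    """Minimum FIFO depth (in words) to absorb one burst without loss.

    in_rate and out_rate are integer rates in words per second.
    """
    if out_rate >= in_rate:
        return 1  # consumer keeps pace; a single stage suffices
    # Words that accumulate while the burst is arriving.
    backlog = burst_words * (in_rate - out_rate)
    return -(-backlog // in_rate)  # ceiling division


depth = fifo_depth(1024, in_rate=100_000_000, out_rate=75_000_000)
print(depth)  # 256
```

Here a quarter of the 1024-word burst accumulates because the consumer runs at three quarters of the arrival rate, so the buffer must hold at least 256 words.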

One challenging aspect of embedded systems design is dealing with different clock domains. This problem may be further complicated when including instruments with different operating frequencies. Using on-chip memory as FIFOs, certain vendors support dual port access to the memory, which enables different read and write clocks (and, in some cases, data widths). Using these buffers with different read and write clocks, a designer can solve two problems with one component, buffering and clock domain crossing. With Platform FPGA designs, this also results in the use of fewer resources than implementing both a buffer and clock logic individually. Here we are able to take advantage of the physical construct of the FPGA and the on-chip memory (i.e., Block RAM), which have both capabilities built into the FPGA fabric.

Where real issues begin to arise is when a compute unit cannot process data fast enough and on-chip memory does not provide sufficient storage space. In this event, it is necessary to use off-chip memory as a larger intermediate buffer. Fortunately, we can incorporate the information presented in the previous section to help solve this problem. By calculating the bandwidth requirement we can determine which memory controller and interconnect are appropriate.

Let’s consider three cases where an off-chip memory buffer can be useful. These three cases are actually general enough to be applicable for large on-chip memory storage as well, but we present them here for consistency’s sake. A compute core requiring memory as a buffer may need to:

Another unique capability FPGAs provide over commodity processors is the ability to gather data without introducing any instrumentation effect in the computation. Let’s say we want to construct a histogram of data arriving from an off-chip source, as seen in Figure 6.14. We can build a second component to perform a histogram calculation based on the input to be processed by the compute core. Unlike a microprocessor design where the histogram computation would require part of the processor’s time (slowing down the computation), a parallel histogram hardware core can perform its computation without disturbing the system. In this example, we may also be able to achieve a higher precision in our histogram, as we could sample the input at a higher frequency than a processor, which may not be able to sample and compute quickly enough before the next input arrives.

Figure 6.14 Streaming I/O compute core plus parallel data acquisition (histogram) core.

Inserting data probes into a system can provide a significant amount of insight into the operation and functionality of the design. Often, this information is collected during the research and development stages of the design; however, in some applications these data may prove to be a valuable resource for debugging or improving future designs. These probes can be created to monitor a variety of components, with each running independently. Depending on the functionality, these probes may require additional resources, such as on-chip or access to off-chip memory, and so must be planned for accordingly.

FPGAs have already been shown to be useful in a variety of applications with one or more instruments, but often the FPGA’s role is as some glue logic to aggregate all of the instrument signals for the processor. Instead, we emphasize that using the FPGA to do more computation can be of great benefit. To support computation, it may be necessary to introduce buffers, which may be small on-chip memories or large off-chip RAMs. With the application dictating the buffering needs, the designer must carefully consider the system’s bandwidth in order to obtain significant gains in performance. These gains are not necessarily over a conventional processor; in fact, the gains may be finer resolution in the computation, more power-efficient designs, or the collection of run-time data that will spawn new and even better designs.

6.2.3 Practical Issues

Perhaps the biggest issue for FPGA-based implementations is the cost to instantiate a FIFO or a buffer. In a custom CMOS implementation, the number of transistors used to instantiate a buffer differs from the number used for a FIFO. For FPGAs, they are often just different configurations of the same resource. Second, the number of transistors in CMOS is directly proportional to the number of stages in the buffer. The cost model for FPGAs is much more complicated. If the buffer has one stage, the tools will probably instantiate a flip-flop. If there are between two and 16, most likely the tools will use shift registers (SRL16), which essentially cost a function generator and no data storage. For a deeper buffer, the tools might instantiate a few SRL16s but, more likely, would configure an on-chip memory as either distributed RAM or Block RAM. A consequence of this is that the placement of the buffers matters a lot in an FPGA system. A design that uses many of the BRAM resources might want to distribute many small buffers throughout the network rather than use one BRAM near the sink. Of course, the analytical formulation just presented does not account for these subtle cost issues.
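The depth thresholds described above can be summarized as a rough decision function. This is only a sketch of the rule of thumb in the text; what the synthesis tools actually infer also depends on data width, coding style, tool settings, and the target device.

```python
def likely_buffer_resource(depth):
    """Rough guess at the FPGA resource inferred for a buffer of this depth."""
    if depth < 1:
        raise ValueError("depth must be at least 1")
    if depth == 1:
        return "flip-flop"
    if depth <= 16:
        return "shift register"
    return "distributed RAM or Block RAM"


for d in (1, 8, 16, 17, 512):
    print(d, likely_buffer_resource(d))
```

The step changes at depths of 2 and 17 are what make the FPGA cost model nonlinear, in contrast to the proportional transistor count of a CMOS buffer.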

An associated issue to allocating and placing these memories is how to connect them while still meeting the specified timing requirements. The reliance on advancements in place-and-route technology has aided the designer in meeting more complex design requirements, but there are often timing constraints that the tools cannot resolve. The designer must identify where the longest delay occurs in the design and either introduce a buffer (maybe with the addition of a flip-flop) or, if possible, hand place and route a portion of the design. Hand routing designs is a more advanced topic than we wish to explore within this chapter and because our focus thus far has been on bandwidth, we should consider the constraint from the perspective of solving a bandwidth issue. The introduction of a buffer may require a change to the other buffers in the system in order to compensate for the added delay.

6.3 Scalable Designs

Consider a designer who spends a significant amount of time designing a hardware core to be used in a Platform FPGA-based embedded systems design. The designer has carefully partitioned the design and has identified and implemented spatial parallelism based on a specific FPGA device, such as the Xilinx FX130T FPGA. In many embedded systems, once the design has satisfied its requirements and the product is shipped, the designer may switch to maintenance mode to support any unforeseen bugs in the system. This follows the product development life cycle from Chapter 1.

We now want to shift our focus away from simple maintenance and instead turn our attention to scalability. With each new generation of FPGA devices the amount of available resources increases. When going from one generation to the next, the number of logic blocks, logic elements, and block memories might double. New resources, such as single/double precision floating-point units may appear. And, the number of available general-purpose I/O pins may increase. While these advancements provide the designer with more capacity, capability, and flexibility, it often raises an important question: “How do we modify our design to take advantage of these additional resources?” Simply increasing the number of compute cores may have adverse effects on the system unless careful consideration is made.

6.3.1 Scalability Constraints

At the same time we want to consider how to create a design to be as scalable as possible and to utilize the current FPGA’s resources most efficiently. Consider a designer who builds a compute core that utilizes 20% of the FPGA resources. If the design allows for multiple instances of that compute core to be included, we could conceivably instantiate five cores and use 100% of the resources. These calculations are rather straightforward, requiring little math in the computation.

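$$N_{\text{cores}} = \left\lfloor \frac{\text{total FPGA resources}}{\text{resources per compute core}} \right\rfloor = \left\lfloor \frac{100\%}{20\%} \right\rfloor = 5$$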

Practically speaking, this simple formula helps set the upper bound on the actual number of compute cores obtainable on the device. This does not tell the whole story, as we must further analyze the compute core to determine if the system will be saturated past a certain number of cores. In essence we want to find the sweet spot between maximum resource utilization and performance. Arguably, one of the most important scalability constraints is bandwidth. Can we sustain the necessary bandwidth (whether to/from a central processor, between each compute core, or to/from memory) as we increase the number of cores? Simply put, these three components, the processor, bus, and memory, are at the heart of Platform FPGA design. To see any significant performance gains when scaling the design, we must consider the impact of their bandwidth on the system.

Processor’s Perspective

Let’s start by looking at scalability from the processor’s perspective. As we add more compute cores we may be also adding more work for the processor, such as managing each additional core. Of course this analysis is application specific, but the overarching goal of scalable designs is to achieve greater performance, which may be in terms of computation, power consumption, or any number of metrics. To do this with Platform FPGAs we want to offload the application’s compute-intensive sections that would normally run sequentially on the processor and instead run them in parallel in hardware. Any resulting responsibilities the processor is left with to control these additional hardware cores is a necessary trade-off for the potential parallelism and performance gains.

We consider the “bandwidth” from the processor’s perspective as the amount of “attention” the processor is able to provide a compute core. This vague description is intended to focus the discussion less on data being transferred between the processor and compute core and more specifically on the time the processor is able to interact with the core. We could also consider this to be time spent by the processor to perform some sort of “control” of the hardware core rather than time spent performing its own computations.

To draw on an analogy, imagine a parent feeding a child. The parent feeds the child one spoon at a time until the food is gone and the job is done. The spoon represents the fixed bandwidth between the processor (the parent) and the compute core (the child). The total attention needed is how long it takes to finish the task. Now if we add a second child, the parent is still able to feed each child one spoonful at a time, but the total time to feed both children increases or each child receives only half of the parent’s attention. In scalable designs we want to identify the amount of attention a compute core needs and scale the design to not exceed the processor’s capacity.

$$\sum_{i=1}^{N} (\text{attention needed by core } i) \le \text{attention the processor can provide}$$

Compute Core’s Perspective

Next, let’s consider scalability from the compute core’s perspective. The compute core may need to communicate with other cores, a situation similar to the processor case we just covered. However, unlike the processor, which can only communicate with one core at a time, each compute core can issue requests to any other compute core, placing a larger constraint on the interconnecting resource, which we referred to earlier as a bus. As we add more compute cores to this bus, we run the risk of saturating that shared resource. The bus has a fixed upper bound on its bandwidth, which is the operating frequency multiplied by the data width:

$$BW_{\text{bus}} = \text{frequency}_{\text{bus}} \times \text{data width}_{\text{bus}}$$

To calculate the effect of adding additional compute cores onto the bus, we need to take into account what each core’s bus bandwidth needs are. To do this we can follow the same formula for the bus bandwidths, but include the core’s bus utilization as well.

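$$BW_{\text{core}} = \text{frequency}_{\text{core}} \times \text{data width}_{\text{bus}} \times \text{utilization}_{\text{core}}$$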

Here we assume that the core can operate at a different frequency than the bus, but must interface with the bus at a fixed data width. The reason is while a core could issue requests at half of the frequency of the bus, each request requires the entire data width of the bus. (Note: it is possible for more sophisticated buses to circumvent this restriction, and we leave those specific implementation details to our readers.) To calculate the required bus bandwidth we can sum up each core’s bus bandwidth. The bus saturates when the required bus bandwidth is greater than the available bus bandwidth.
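The saturation test can be sketched as follows, assuming each core's bus bandwidth is its operating frequency times the bus data width times the fraction of time it uses the bus. The function names and the example frequencies and utilizations are illustrative assumptions.

```python
def bus_bandwidth(freq_hz, width_bits):
    """Available bus bandwidth in bits per second."""
    return freq_hz * width_bits


def core_bandwidth(core_freq_hz, bus_width_bits, utilization):
    """Bus bandwidth one core requires; utilization is a fraction in [0, 1]."""
    return core_freq_hz * bus_width_bits * utilization


def is_saturated(bus_freq_hz, bus_width_bits, cores):
    """cores is a list of (core_freq_hz, utilization) pairs."""
    required = sum(core_bandwidth(f, bus_width_bits, u) for f, u in cores)
    return required > bus_bandwidth(bus_freq_hz, bus_width_bits)


BUS_FREQ_HZ, BUS_WIDTH_BITS = 100_000_000, 64
core = (50_000_000, 0.25)   # hypothetical 50 MHz core using the bus 25% of the time

print(is_saturated(BUS_FREQ_HZ, BUS_WIDTH_BITS, [core] * 8))   # False
print(is_saturated(BUS_FREQ_HZ, BUS_WIDTH_BITS, [core] * 9))   # True
```

With these numbers the bus supports eight such cores, so a device with room for more than eight would be limited by bus bandwidth rather than by logic resources.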

We can also consider alternative interconnects, such as a crossbar switch, to connect the cores. A crossbar switch allows more than one core to communicate with another core in parallel. This increases the overall bandwidth of the interconnect at the cost of additional resources. The additional resources stem from allowing multiple connections to be made in parallel. You could think of this almost as adding additional buses between all of the cores so that if one is busy, the core could use a different bus. Figure 6.15 shows a four-port crossbar switch with bidirectional channels. Each output port is connected to a four-input multiplexer, for a total of four four-input multiplexers. The multiplexers’ select lines are driven by some external controller that decides which input ports are connected to which output ports.

Figure 6.15 A four-port crossbar switch and its internal representation based on multiplexers.
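A behavioral model makes the multiplexer structure concrete. The sketch below is a software illustration only, not a hardware description: the class and method names are hypothetical, and the select values stand in for the select lines driven by the external controller.

```python
class Crossbar:
    """N-port crossbar built from one N-input multiplexer per output port."""

    def __init__(self, ports):
        self.ports = ports
        # select[i] is the input port routed to output i (None = unconnected)
        self.select = [None] * ports

    def connect(self, in_port, out_port):
        """Set the select lines of out_port's multiplexer to in_port."""
        if not (0 <= in_port < self.ports and 0 <= out_port < self.ports):
            raise ValueError("port out of range")
        self.select[out_port] = in_port

    def route(self, inputs):
        """Return the value seen on each output port for the given inputs."""
        return [None if s is None else inputs[s] for s in self.select]

    def mux_inputs_total(self):
        """Total multiplexer inputs: N multiplexers with N inputs each."""
        return self.ports * self.ports


xbar = Crossbar(4)
xbar.connect(0, 2)   # port 0 talks to port 2 ...
xbar.connect(1, 3)   # ... while port 1 talks to port 3 in parallel
routed = xbar.route(["a", "b", "c", "d"])
print(routed)
print(xbar.mux_inputs_total(), Crossbar(8).mux_inputs_total())
```

Both connections are active at the same time, which a single shared bus cannot provide. The total count of multiplexer inputs grows from 16 to 64 when the switch grows from four ports to eight, which hints at how quickly the resource cost rises.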

Now when we consider scalability, we do so in terms of the size of the interconnect as well as bandwidth. As we increase the size, we increase the number of cores that can connect and communicate in parallel. If we double the number of connections from four to eight, we are doubling the number of cores that can communicate in parallel, from four to eight. This results in a $2\times$ increase in total bandwidth. Of course, this also doubles the number of multiplexers, and each multiplexer grows from four inputs to eight, which the designer must take into account when scaling the system.

Memory Perspective

Finally, we consider the more classical bandwidth constraint of memory. This includes both on-chip and off-chip memory. We are able to sustain a higher on-chip bandwidth with Platform FPGAs, as we can distribute the memory throughout the system and allow parallel access to the memory. With off-chip memory we have a fixed resource, such as the memory controller and I/O pins connecting the physical memory module. To calculate the available memory bandwidth we consider the operating frequency and data width of the memory controller. We are limited to the memory controller because even if the memory module is capable of faster access or higher bandwidth, the bottleneck is at the memory controller where data are then distributed across the FPGA.

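$$BW_{\text{memory}} = \text{frequency}_{\text{memory controller}} \times \text{data width}_{\text{memory controller}}$$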

Furthermore, we must consider how the memory controller is connected to each component. For scalable designs, a bus allows for the memory controller to be shared more equally between each of the compute cores. While this solution to the scalability problem may appear to be simple on the surface, a bottleneck can be created if the bus bandwidth is less than the memory bandwidth.

The type of memory also plays an important role in the bandwidth problem. A designer needs to consider how the type of memory will affect the system and understand where the potential bottlenecks can occur. As the saying goes, “the chain is as strong as its weakest link,” and the slowest component in the system is the weakest link. When purchasing fast SRAM, the performance can be quickly negated by a slow memory controller, slow system bus, or slow compute core. Reducing the number of intermediate steps between memory and the compute core can be beneficial, but at the cost of limiting access to the rest of the system.
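The weakest-link argument amounts to taking the minimum bandwidth along the path from memory to the compute core. The sketch below uses hypothetical bandwidth figures, in bytes per second, purely to illustrate the idea.

```python
def effective_bandwidth(stage_bandwidths):
    """Return (name, bandwidth) of the slowest component along a data path."""
    name = min(stage_bandwidths, key=stage_bandwidths.get)
    return name, stage_bandwidths[name]


# Hypothetical figures in bytes per second.
path = {
    "memory module": 3_200_000_000,
    "memory controller": 1_600_000_000,
    "system bus": 800_000_000,
    "compute core": 1_200_000_000,
}

bottleneck, sustained = effective_bandwidth(path)
print(bottleneck, sustained)
```

In this assumed system the bus limits the sustained rate, so a faster memory module would bring no benefit until the bus is widened, clocked faster, or bypassed with a direct connection.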

6.3.2 Scalability Solutions

Now that we have identified some of the scalability constraints, we want to investigate potential solutions. In some cases these solutions are very specific to only a subset of applications, whereas in other cases these solutions may increase the resource utilization beyond an acceptable level. Our goal is not to solve every problem, but to get readers thinking of novel solutions by being exposed to some of the simpler solutions.

Overcoming Processor Limitations

The processor’s computation and control trade-off is where the processor will either perform computation at the expense of idle hardware cores or perform hardware core control at the expense of computation. In the first few chapters of this book we have been less hardware accelerator focused and more processor-memory focused. That is, use the FPGA as a platform to reduce the number of chips needed for embedded systems design, but still have a more traditional processor-centric compute model. Moreover, in this model the processor is performing a majority of the computation, sampling inputs and calculating results. In the latter half of this book we are shying away from a processor-centric compute model by considering the processor to act more as a controller of computation performed within the FPGA fabric. We started with partitioning an application and assembling custom compute cores to run in hardware. Then, to increase performance we looked at spatial parallelism of hardware designs.

By moving more of the computation into hardware, we can free up more time for the processor to spend controlling the application flow rather than performing computation. To help alleviate some of the processor’s burdens, we use direct memory access to allow hardware cores to retrieve memory. For applications with instruments streaming data into the FPGA, we can perform as much computation within hardware before interrupting the processor. We use these interrupts instead of polling to reduce bus traffic, letting the hardware core tell the processor when its computation is complete, rather than having the processor check its status continuously.

When considering how to improve the performance of a Platform FPGA-based system from the processor’s perspective, we want to:

Overcoming Bus Bandwidth Limitations

To improve the bus bandwidth the common approaches are to improve the two contributing factors to the bandwidth calculation, that is, the operating frequency and data width of the bus. By doubling the frequency or the data width, the bus bandwidth is doubled. Running the bus at a higher frequency forces each core connecting to the bus to interface at that same frequency (it is possible using clock domain crossing logic to have the bus interface operate at one frequency and the core operate at a different frequency). Likewise, increasing the data width increases the required resources to implement the wider bus.

Alternatively, to satisfy the bus bandwidth requirement, it may be possible to partition the design into multiple buses. This depends on the communication patterns of the cores on the buses; however, it can be a viable solution to the bus bandwidth limitation. It is also possible to bridge each of the buses together to provide access to cores on other buses at the cost of additional latency through the bridges.

By partitioning the buses, what we are actually trying to accomplish is segregating cores that need to communicate with each other on a single bus. These cores may not need to communicate with every other core in the system, so by moving them onto a separate bus, we can improve the total bus bandwidth across each bus.

In a scalable design where the same hardware core is replicated numerous times, the separate bus approach may not be suitable. This is especially true if the core being replicated only communicates with the processor and off-chip memory. In these cases, it may be possible to replicate the logic within a hardware core and share a single interface to the bus. The result is a larger single hardware core but potentially more efficient bus utilization, as the hardware core itself will need to prioritize and arbitrate requests rather than the bus arbiter. Requests within the hardware core can also be grouped together to form a more efficient burst transaction.

Using burst transfers whenever possible can result in an improvement in the utilization of the bus by transmitting more data over the course of fewer requests. Because the bus arbiter will see fewer requests being issued by each of the cores, the arbiter is able to spend less time arbitrating over the requests and spend more time granting access to the bus. The hardware cores will also benefit by receiving (or sending) data at a higher bandwidth.

Therefore, a quick recap on the proposed solutions to improve bus bandwidth limitations includes:

Overcoming Memory Bandwidth Limitation

This chapter has already covered many ways to overcome the memory bandwidth limitation. Initially, this chapter focused on bandwidth in general, which is a core concern for any design. The shift to off-chip memory bandwidth is due to the processor-memory model and contention for memory bandwidth with the increasing number of custom hardware cores. A bus provides a simple mechanism to access the shared resource, off-chip memory. Unfortunately, the bus may be inadvertently degrading performance by sharing on-chip communication — say between two hardware cores — with off-chip memory requests. When the bus is granted for on-chip communication, the memory controller may sit idle, waiting for the next request.

Giving priority to memory requests can alleviate some of these idle times by allowing the request to be issued to the memory controller. Then while the request is being fulfilled, the bus can be used for on-chip communication, until data are ready to be transmitted back to the hardware core. Of course, balancing the requests can be challenging and the result could have a negative impact on on-chip communication.

A more ideal solution may be to separate on-chip communication from off-chip memory requests. At the expense of additional logic, a hardware core can be responding to an on-chip request through one interface (such as a bus) while issuing an off-chip memory request through a separate interface. If a core has a direct connection to the memory controller, then the memory bandwidth is not restricted to the bus bandwidth, and requests do not need to wait for another core’s transaction to complete before being issued.

Finally, by using double buffering we can hide the latency of subsequent requests by overlapping requests with the transmission of data. We already mentioned this approach earlier, but it is important to emphasize the role double buffering can play in improving memory utilization.
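The benefit of double buffering can be estimated with a small timing model. This is a sketch under stated assumptions: every block takes the same `fetch` time to arrive from memory and the same `compute` time to process, exactly two buffers are available, and the time units and values are hypothetical.

```python
def time_single_buffer(blocks, fetch, compute):
    """One buffer: each block is fetched, then processed, with no overlap."""
    return blocks * (fetch + compute)


def time_double_buffer(blocks, fetch, compute):
    """Two buffers: fetching block i+1 overlaps with processing block i.

    The first fetch and the last compute cannot be hidden; every step
    in between costs the slower of the two activities.
    """
    if blocks == 0:
        return 0
    return fetch + (blocks - 1) * max(fetch, compute) + compute


if __name__ == "__main__":
    # Hypothetical values in clock cycles.
    print(time_single_buffer(8, fetch=100, compute=120))
    print(time_double_buffer(8, fetch=100, compute=120))
```

For eight blocks with an assumed fetch time of 100 cycles and compute time of 120 cycles, the single-buffer design needs 1760 cycles and the double-buffered design needs 1060. Once the pipeline is full, the memory latency is hidden behind computation whenever `fetch` is no larger than `compute`.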

In summary, how memory is accessed can have a significant effect on the performance of the system. When designing with bandwidth in mind to achieve high performance efficiently we must:

Chapter in Review

This chapter’s focus has been on bandwidth, a critical consideration when designing systems for Platform FPGAs. Beginning with the problem of balancing bandwidth, we investigated methods and techniques such as the Kahn Process Network to maximize performance with a minimum amount of resources. This was followed by a thorough discussion of on-chip and off-chip memory, including interfaces and controllers, the differences in memory access, and performance. With embedded systems, streaming instruments play a large role in the final product; therefore, we also spent time discussing various methods to efficiently support complex on-chip communication bandwidth requirements. Finally, we discussed designs from a portability and scalability perspective. Our interests are motivated by the ever-advancing semiconductor technology in hopes of reusing existing hardware components and scaling systems to yield greater functionality on future FPGA devices.

In the gray pages that follow, we further investigate these bandwidth questions with respect to the Xilinx Virtex 5 FPGA. We consider bandwidth in terms of on-chip memory access from FIFOs and BRAMs along with off-chip memory access. Included in these gray pages are examples to help readers implement the various memory interfaces in their own design. The final demonstration covers the Xilinx Native Port Interface (NPI) integration into a custom compute core to provide efficient, high bandwidth transfers to and from off-chip memory.

Exercises

P6.1. Considering Figure 6.1, if the amount of time to perform a multiplication were reduced by a factor of 2, draw the corresponding system, including any necessary buffers to maintain a pipeline balance.

P6.2. Draw the interface between two components where one is producing data and the second is consuming data. Be sure to follow the Kahn Process Network model.

P6.3. Describe the similarities and differences between synchronous and asynchronous design.

P6.4. Draw the interface for a FIFO. Compare this interface to that of a BRAM.

P6.5. Write the VHDL that will instantiate a BRAM and function like a FIFO. Include in this design a mechanism to report to the user the number of elements currently stored in the FIFO.

P6.6. Write an application that will use the Central DMA controller to read 512 bytes of data from DDR2 and write them to a hardware core. Assume data are stored at 0x0123000 and must be written to 0xB001000.

P6.7. Compare the performance of a system that is operating in CDMA mode with that of a system running as a bus master that is able to access memory on its own.

P6.8. Implement a Native Port Interface design that will support direct memory access reads and writes to the MPMC without the aid of the processor.

References

1. Kahn G. The semantics of a simple language for parallel programming. In: Rosenfeld JL, ed. Information Processing ’74 (pp 471–475). Amsterdam: North Holland; 1974.

2. Xilinx, Inc. LocalLink interface specification (SP006) v2.0 San Jose, CA: Xilinx, Inc. 2005.

3. Xilinx, Inc. Answer record 24912 — 11.1 EDK, MPMC v5.00.a — How do I create an NPI Core and connect it to MPMC in EDK? 2009a; http://www.xilinx.com/ last accessed June 2010.

4. Xilinx, Inc. Block memory generator data sheet (DS512) v3.3 San Jose, CA: Xilinx, Inc. 2009b.

5. Xilinx, Inc. Distributed memory generator (DS322) v4.3 San Jose, CA: Xilinx, Inc. 2009c.

6. Xilinx, Inc. FIFO generator data sheet (DS317) v5.3 San Jose, CA: Xilinx, Inc. 2009d.

7. Xilinx, Inc. Multi-port memory controller (MPMC) v5.04.a San Jose, CA: Xilinx, Inc. 2009e.

8. Xilinx, Inc. XPS central DMA controller (DS579) v2.01b San Jose, CA: Xilinx, Inc. 2009f.