Introducing a collection of standardized service endpoints into an established IT enterprise will almost always result in a need to manage the marriage of service-orientation with legacy encapsulation. This chapter provides a set of patterns dedicated to addressing common challenges with service encapsulation of legacy systems and environments.

Legacy Wrapper (441) establishes the fundamental concept of wrapping proprietary legacy APIs with a standardized service contract, while Multi-Channel Endpoint (451) builds on this concept to introduce a service that decouples legacy systems from delivery channel-specific programs. File Gateway (457) further provides bridging logic for services that need to encapsulate and interact with legacy systems that produce flat files.

Legacy systems must often be encapsulated by services established by proprietary component APIs or Web service adapter products. The resulting technical interface is frequently fixed and non-customizable. Because the contract is pre-determined by the product vendor or constrained by legacy component APIs, it is not compliant with contract design standards applied to a given service inventory.

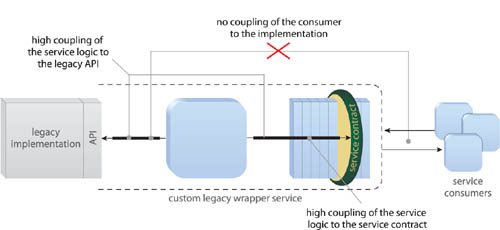

Furthermore, the nature of API and Web service adapter contracts is often such that they contain embedded, implementation-specific (and sometimes technology-specific) details. This imposes the corresponding forms of implementation and technology coupling upon all service consumers (Figure 15.1).

Although a legacy system API or a Web service adapter will expose an official, generic entry point into legacy system logic, it is often inadvisable to classify such an endpoint as an official member of a service inventory. Instead, it can be safer to view legacy APIs and Web service adapters as extensions of the legacy environment that provide just another proprietary interface available for service encapsulation.

This perspective allows for the creation of a standardized legacy wrapper service that expresses legacy function in a standardized manner. The result is a design that enables the full abstraction of proprietary legacy characteristics (Figure 15.2), which provides the freedom of evolving or replacing the legacy system with minimal impact on existing service consumers.

The application of this pattern is typically associated with the introduction of a new service contract. However, when wrapping only the parts of a legacy resource that fall within a pre-defined service boundary, this pattern may result in just the addition of a new capability to an existing service contract.

Either way, the wrapper service (or capability) will typically contain logic that performs transformation between its standardized contract and the native legacy interface. Often, this form of transformation is accomplished by eliminating and encapsulating technical information, as follows:

• Eliminating Technical Information – Legacy input and output data often contain highly proprietary characteristics, such as message correlation IDs, error codes, and audit information. Many of these details can be removed from the wrapper contract through additional internal transformation. For example, error codes can be translated to SOAP faults, and message correlation IDs can be generated within the wrapper service implementation. In the latter case, consumers can communicate with the wrapper service over a blocking communication protocol such as HTTP and hence do not need to know about correlation IDs.

• Encapsulating Technical Information – When service consumers still need to pass on legacy-specific data (such as audit-related information), the message exchanged by the legacy wrapper contract can be designed to partition standardized business data and proprietary legacy data into body and header sections, respectively. In this case, both the legacy wrapper service and its consumer will need to carry out additional processing to assemble and extract data from the header and body sections of incoming and outgoing messages.
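The first of these two approaches can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `FakeLegacyApi`, its `call` signature, the `GETBAL` operation, the error codes, and the fault names are all hypothetical stand-ins for whatever the real legacy API exposes, and `ServiceFault` merely stands in for a SOAP fault.

```python
import uuid


class ServiceFault(Exception):
    """Standardized fault exposed by the wrapper contract (stand-in for a SOAP fault)."""

    def __init__(self, code: str, reason: str):
        super().__init__(f"{code}: {reason}")
        self.code = code
        self.reason = reason


# Hypothetical mapping from proprietary legacy error codes to standardized faults.
LEGACY_ERROR_MAP = {
    "E042": ("Client.AccountNotFound", "The requested account does not exist."),
    "E900": ("Server.LegacyUnavailable", "The backend system is unavailable."),
}


class FakeLegacyApi:
    """Stand-in for a proprietary legacy API; a real one would be vendor-specific."""

    def __init__(self):
        self.seen_correlation_ids = []

    def call(self, correlation_id: str, operation: str, payload: dict) -> dict:
        self.seen_correlation_ids.append(correlation_id)
        if payload.get("acct") == "0000":
            return {"corr": correlation_id, "rc": "E042", "data": None}
        return {"corr": correlation_id, "rc": "OK", "data": {"BAL": "125.50"}}


class AccountWrapperService:
    """Wrapper capability whose contract contains no legacy-specific details."""

    def __init__(self, legacy_api):
        self._legacy = legacy_api

    def get_balance(self, account_id: str) -> dict:
        # The correlation ID is generated here, so consumers never see it.
        correlation_id = uuid.uuid4().hex
        reply = self._legacy.call(correlation_id, "GETBAL", {"acct": account_id})

        # Proprietary return codes are translated into standardized faults.
        if reply["rc"] != "OK":
            code, reason = LEGACY_ERROR_MAP.get(
                reply["rc"], ("Server.Unknown", "Unexpected legacy error.")
            )
            raise ServiceFault(code, reason)

        # Only standardized business data crosses the wrapper contract.
        return {"accountId": account_id, "balance": float(reply["data"]["BAL"])}
```

A consumer calls `AccountWrapperService(FakeLegacyApi()).get_balance("1234")` and receives only the account identifier and balance; the correlation ID and return code stay inside the wrapper.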

The former approach usually results in a utility service whereas the latter option will tend to add the wrapper logic as an extension of a business service. It can be beneficial to establish a sole utility wrapper service for a legacy system so that all required transformation logic is centralized within that service’s underlying logic. If capabilities from multiple services each access the native APIs or Web service adapter interfaces, the necessary transformation logic will need to be distributed (decentralized). If a point in time arrives where the legacy system is replaced with newer technology, it will impact multiple services.

Note

Some ERP environments allow for the customization of local APIs but still insist on auto-generating Web service contracts. When building services as Web services, these types of environments may still warrant encapsulation via a separate, standardized Web service. However, be sure to first explore any API customization features. Sometimes it is possible to customize a native ERP object or API to such an extent that actual contract design standards can be applied. If the API is standardized, then the auto-generated Web service contract also may be standardized because it will likely mirror the API.

Adding a new wrapper layer introduces performance overhead associated with the additional service invocation and data transformation requirements.

Also, expecting a legacy wrapper contract alone to fully shield consumers from the effects of underlying legacy systems being changed or replaced can be unrealistic. When new systems or resources are introduced into a service architecture, the overall behavior of the service may be impacted, even when the contract remains the same. In this case, it may be necessary to supplement the service logic with additional functions that compensate for any negative effects these behavioral changes may have on consumers. Service Façade (333) is often used for this purpose.

Legacy Wrapper makes legacy resources accessible on an inter-service basis. It can therefore be part of any service capability that requires legacy functionality. Entity and utility services are the most common candidates because they tend to encapsulate logic that represents either fixed business-centric boundaries or technology resources. Therefore, this pattern is often applied in conjunction with Entity Abstraction (175) and Utility Abstraction (168).

Patterns that often introduce proprietary products or out-of-the-box services, such as Rules Centralization (216), also may end up having to rely on Legacy Wrapper to make their services part of a federated service inventory. Service Data Replication (350) can be combined with this pattern to provide access to replicated proprietary repositories, and File Gateway (457) may be applied to supplement the wrapper contract with specialized internal legacy encapsulation logic.

Because of the broker-related responsibilities that a legacy wrapper service will generally need to assume, it is further expected that it will require the application of Data Format Transformation (681) or Data Model Transformation (671), and possibly Protocol Bridging (687).

Legacy Wrapper is therefore frequently applied together with Enterprise Service Bus (704) in order to leverage the broker capabilities natively provided by ESB platforms.

Some solutions need to exchange information with data sources or other systems via different delivery channels. For example, a banking solution may need to receive the same account data from a desktop client, a self-service Internet browser, a call center, a kiosk, an ATM, or even a mobile device. Each of these delivery channels will have its own communications and data representation requirements.

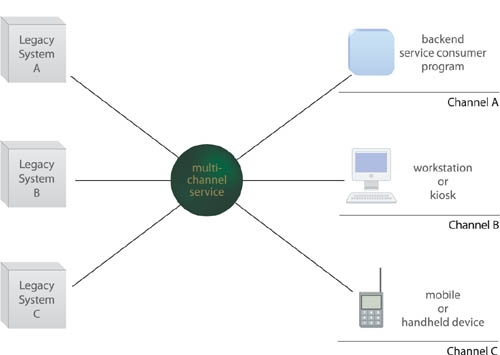

Traditional legacy systems were commonly customized to support specific channels. When new channels needed to be supported, new systems were developed, and/or existing systems were integrated. As a result, legacy multi-channel environments led to multiple silos and duplicated islands of data and functionality (Figure 15.5).

This, in turn, led to inconsistency in how systems delivered the same functionality across different implementations and further helped proliferate disparate data sources and tightly coupled consumer-to-system connections.

A service is introduced to decouple both modern solutions and legacy systems from multiple channels. The architecture for this multi-channel service is designed so that it exposes a single, standardized contract to the channel providers, while containing all of the necessary transformation, workflow, and routing logic to ensure that data received via different channels is processed and relayed to the appropriate backend systems (Figure 15.6).

The abstraction achieved by this service insulates legacy systems and other services from the complexities and disparity of multi-channel data exchange and further insulates the channel providers from forming negative types of coupling.

A multi-channel service essentially encapsulates process logic associated with common tasks. Its primary responsibility is to ensure that these tasks get carried out consistently, regardless of where (or which channel) input data comes from. Designing multi-channel services requires careful analysis of the data offered over the supported channels to ensure that the single service contract can serve the (often disparate) requirements of different channel-based consumers.
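The shape of such a service can be sketched as follows. Everything named here is an assumption made for illustration: the `DepositRequest` contract, the rule that account IDs beginning with "S" belong to a savings system, and the two backend payload layouts are hypothetical. The point is only that every channel submits the same standardized request, while validation, routing, and backend-specific transformation stay inside the service.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class DepositRequest:
    """Single standardized contract shared by all channels (hypothetical)."""

    channel: str       # e.g. "atm", "mobile", "kiosk"
    account_id: str
    amount: float


class MultiChannelDepositService:
    def __init__(self, backends: Dict[str, Callable[[dict], str]]):
        # Maps a backend name to a callable that accepts that backend's format.
        self._backends = backends

    def deposit(self, request: DepositRequest) -> str:
        # Common task logic: applied identically regardless of channel.
        if request.amount <= 0:
            raise ValueError("amount must be positive")

        # Routing plus transformation into each backend's own format.
        if request.account_id.startswith("S"):  # hypothetical routing rule
            payload = {
                "ACCT": request.account_id,
                "AMT_CENTS": round(request.amount * 100),
            }
            return self._backends["savings"](payload)

        payload = {"account": request.account_id, "amount": request.amount}
        return self._backends["checking"](payload)
```

Because the channel is just a field on the request, adding a new delivery channel in this sketch does not require any change to the backend systems.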

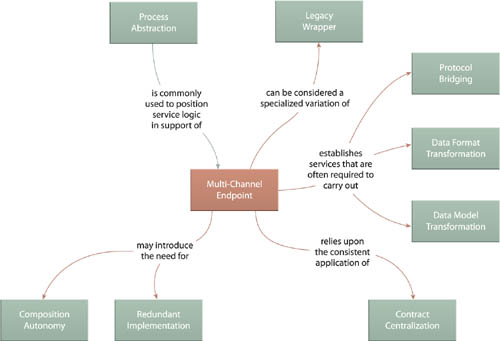

This pattern is based on many of the same concepts as Legacy Wrapper (441), except that it is specific to supporting multiple delivery channels. Multi-Channel Endpoint is therefore commonly applied with industry-standard technologies, such as those that relate to Web services and REST services, so as to support as wide a range of potential channels as possible.

When building these services as Web services, SOAP headers can be used to establish a separation of channel-specific information in order to avoid convoluted or unreasonably bloated contract content. Additionally, the legacy channel-specific systems themselves may need to be augmented to work with the multi-channel service. This change may especially be significant when this pattern is combined with Orchestration (701), in which case legacy systems may find themselves as part of larger orchestrated business processes.
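As a rough illustration of this separation, the sketch below builds a SOAP 1.1 envelope with Python's standard `xml.etree.ElementTree` module. The SOAP envelope namespace is the standard one; the `urn:example:...` namespaces and the `ChannelContext`, `Channel`, `DeviceId`, `GetBalanceRequest`, and `AccountId` element names are hypothetical and would be defined by the service inventory's own schemas.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
CH_NS = "urn:example:channel"    # hypothetical namespace for channel metadata
BIZ_NS = "urn:example:accounts"  # hypothetical namespace for business data


def build_envelope(channel: str, device_id: str, account_id: str) -> str:
    """Place channel-specific data in the header and business data in the body."""
    ET.register_namespace("soap", SOAP_NS)
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")

    header = ET.SubElement(envelope, f"{{{SOAP_NS}}}Header")
    context = ET.SubElement(header, f"{{{CH_NS}}}ChannelContext")
    ET.SubElement(context, f"{{{CH_NS}}}Channel").text = channel
    ET.SubElement(context, f"{{{CH_NS}}}DeviceId").text = device_id

    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    request = ET.SubElement(body, f"{{{BIZ_NS}}}GetBalanceRequest")
    ET.SubElement(request, f"{{{BIZ_NS}}}AccountId").text = account_id

    return ET.tostring(envelope, encoding="unicode")


def read_channel(xml_text: str) -> str:
    """Extract the channel name from the header section only."""
    root = ET.fromstring(xml_text)
    path = f"{{{SOAP_NS}}}Header/{{{CH_NS}}}ChannelContext/{{{CH_NS}}}Channel"
    return root.find(path).text


def read_account_id(xml_text: str) -> str:
    """Extract the business payload from the body section only."""
    root = ET.fromstring(xml_text)
    path = f"{{{SOAP_NS}}}Body/{{{BIZ_NS}}}GetBalanceRequest/{{{BIZ_NS}}}AccountId"
    return root.find(path).text
```

With this layout, the body schema stays the same for every channel, and only the header content varies.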

Multi-channel services often warrant classification as a distinct service model that is a combination of task and utility service. They are similar to task services in that they encapsulate logic specific to business processes, but unlike task services, their reuse potential is higher because the business processes in question are common across multiple channels.

Because they are often required to provide complex broker, workflow, and routing logic, it is further typical for multi-channel services to be hosted on ESB and orchestration platforms. Some ESB products even provide legacy system adapters with built-in multi-channel support.

Even though this pattern helps decouple traditionally tightly-bound clients and systems, it can introduce the need for services that themselves need to integrate and become tightly coupled to various backend legacy environments and resources.

Due to the highly processing-intensive nature of some multi-channel services, this pattern can lead to the need for various infrastructure upgrades, including orchestration engines and message brokers. Depending on the amount of concurrent data exchanged, multi-channel services can easily become performance bottlenecks due to the quantity of logic they are generally required to centralize.

In many ways, Legacy Wrapper (441) acts as a root pattern for Multi-Channel Endpoint in that it establishes the concept of abstracting and decoupling legacy resources. As shown in Figure 15.7, Multi-Channel Endpoint also relates to the Service Broker (707) patterns, such as Protocol Bridging (687), Data Format Transformation (681), and Data Model Transformation (671).

Figure 15.7 Because of its similarity to Inventory Endpoint (260), Multi-Channel Endpoint relates to several of the same patterns.

Composition Autonomy (616) and Redundant Implementation (345) can be further applied to help scale and support multi-channel services in high volume environments. Service Façade (333) is also useful for abstracting routing, workflow, and broker logic within the internal service architecture.

Note

Multi-Channel Endpoint can be viewed as an extension of the Multi-Channel Access strategy described in Understanding SOA with Web Services (Newcomer, Lomow). Whereas Multi-Channel Access emphasizes the reusability aspect of services across channels, Multi-Channel Endpoint focuses on centralized brokering and workflow abstraction aspects critical to the implementation of channel-independent services.

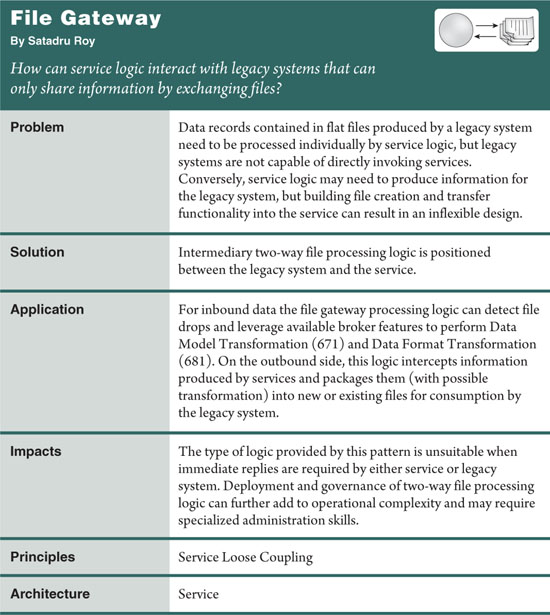

Quite often, service-enabled applications need to process information that is produced only periodically by legacy systems as flat files that can contain a large number of data records separated by delimiters. Any application that needs to access the data in these files will usually be required to iterate through each record to perform necessary calculations.

However, such applications also have to take on the additional responsibility of polling directories to check when the files are actually created and released by the legacy system. Once detected, these files then may need to be parsed, moved (or removed), renamed, transformed, and archived before processing can be considered successful. This has traditionally resulted in overly complex and inflexible data access architectures that revolve around file drop polling and file format-related processing.

The limitations of this architecture further compound when this form of processing needs to be encapsulated by a service. Legacy systems are designed with the assumption that other legacy consumers are accessing the flat files they produce and therefore have no concept of a service, let alone a means of invoking services (as depicted in Figure 15.8).

Service-friendly gateway logic is introduced, capable of handling file detection and processing and subsequent communication with services. This allows services to consume flat files as legacy consumer systems would. It further introduces the opportunity to optimize this logic to improve upon any existing limitations in the legacy environment (Figure 15.9).

The gateway logic introduced by this pattern is most commonly wrapped in a separate utility component positioned independently from business services and is therefore capable of acting as an intermediary between legacy systems and services requiring access to the legacy data. However, the option also exists to limit this logic to individual service architectures.

This file processing logic will typically be comprised of routines that carry out the following tasks:

• File drop polling with configurable polling cycles.

• File and delimiter parsing and data retrieval with configurable parameters that determine how many records should be read and processed at one time.

• File data transformation, as per Data Format Transformation (681) and possibly Data Model Transformation (671).

• Service invocation and file data transfer to services.

• File cleanup, renaming, and/or archiving.
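The tasks listed above can be sketched as a small polling routine. This is a minimal illustration, not a production gateway: the `*.dat` file pattern, the `|` delimiter, the two-column record layout, and the `send_batch` callable (standing in for the service invocation) are all assumptions. It also assumes a file is complete once it appears in the drop directory, which a real gateway would need to verify.

```python
import csv
import shutil
import time
from pathlib import Path
from typing import Callable, Iterator, List


def read_batches(path: Path, delimiter: str, batch_size: int) -> Iterator[List[List[str]]]:
    """Parse a delimited file and yield rows in groups of at most batch_size."""
    with path.open(newline="") as handle:
        batch = []
        for row in csv.reader(handle, delimiter=delimiter):
            batch.append(row)
            if len(batch) == batch_size:
                yield batch
                batch = []
        if batch:
            yield batch


def to_message(row: List[str]) -> dict:
    """Transform one legacy row into a service message (hypothetical layout)."""
    return {"accountId": row[0], "amount": float(row[1])}


def poll_once(drop_dir: Path, archive_dir: Path, send_batch: Callable[[List[dict]], None],
              batch_size: int = 100, delimiter: str = "|", pattern: str = "*.dat") -> int:
    """Run one polling pass; return the number of files processed."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    processed = 0
    for path in sorted(drop_dir.glob(pattern)):
        for batch in read_batches(path, delimiter, batch_size):
            send_batch([to_message(row) for row in batch])  # service invocation
        shutil.move(str(path), str(archive_dir / path.name))  # cleanup and archiving
        processed += 1
    return processed


def run(drop_dir: Path, archive_dir: Path, send_batch: Callable[[List[dict]], None],
        poll_seconds: float = 30.0, batch_size: int = 100) -> None:
    """Poll indefinitely with a configurable polling cycle."""
    while True:
        poll_once(drop_dir, archive_dir, send_batch, batch_size=batch_size)
        time.sleep(poll_seconds)
```

Keeping `poll_once` separate from the endless loop in `run` makes a single pass easy to test and lets a scheduler drive the polling instead, if one is available.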

Note that making the polling frequency and the number of records processed at a time configurable allows the file processing logic to control the volume of messages sent to a service over a period of time. If a given service takes longer than usual to process a batch of records, these two settings can be adjusted to allow the service to catch up. Similarly, the rate can be increased if the service is processing incoming messages more quickly than they are generated.
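The relationship between the two settings can be made concrete with a little arithmetic. Assuming one batch is sent per polling cycle, the ceiling on throughput is the batch size divided by the polling interval. The adjustment rule in `tune_batch_size` below is a hypothetical heuristic chosen only for illustration (halve when the service is slower than one cycle, double when it needs less than half a cycle); it is not prescribed by the pattern.

```python
def max_records_per_second(batch_size: int, poll_seconds: float) -> float:
    """Upper bound on throughput when one batch is sent per polling cycle."""
    return batch_size / poll_seconds


def tune_batch_size(batch_size: int, service_seconds: float, poll_seconds: float,
                    minimum: int = 1, maximum: int = 1000) -> int:
    """Hypothetical heuristic for adjusting the batch size between cycles."""
    if service_seconds > poll_seconds:
        # The service is falling behind: send fewer records per cycle.
        return max(minimum, batch_size // 2)
    if service_seconds < poll_seconds / 2:
        # The service has spare capacity: send more records per cycle.
        return min(maximum, batch_size * 2)
    return batch_size
```

For example, 100 records every 20 seconds caps the flow at 5 records per second; if the service then needs 30 seconds for a batch, this rule drops the next batch to 50 records.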

When services need to share data with legacy systems only capable of receiving flat files, this logic can act as the recipient and broker of messages responsible for transforming them into the legacy format. For example, for the first batch of incoming data the file gateway component can write records out to a flat file with special character-based delimiters separating each data record from another. Subsequent incoming data could then be appended to this file until a pre-specified file size limit is reached, after which it creates a new file and repeats this process.
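The outbound direction described here can be sketched as a writer that appends records to a flat file and starts a new file once a size limit would be exceeded. The class name, file naming scheme, `|` field delimiter, and newline record separator are assumptions for illustration; the sketch also does not escape delimiter characters that occur inside field values, which a real legacy format would have to address.

```python
from pathlib import Path
from typing import Iterable, Sequence


class RollingFlatFileWriter:
    """Append delimited records to a file, rolling over at a size limit."""

    def __init__(self, out_dir, prefix: str = "outbound",
                 max_bytes: int = 1_000_000, delimiter: str = "|"):
        self._dir = Path(out_dir)
        self._dir.mkdir(parents=True, exist_ok=True)
        self._prefix = prefix
        self._max_bytes = max_bytes
        self._delimiter = delimiter
        self._index = 1

    def _current_path(self) -> Path:
        return self._dir / f"{self._prefix}-{self._index:04d}.dat"

    def write(self, records: Iterable[Sequence]) -> None:
        for record in records:
            line = self._delimiter.join(str(field) for field in record) + "\n"
            path = self._current_path()
            # Start a new file if appending this record would exceed the limit.
            if path.exists() and path.stat().st_size + len(line.encode("utf-8")) > self._max_bytes:
                self._index += 1
                path = self._current_path()
            with path.open("a", encoding="utf-8", newline="") as handle:
                handle.write(line)
```

Each call to `write` can carry one incoming batch, so subsequent batches are appended to the current file until the limit is reached.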

Most of the impacts of this pattern are related to the impacts of file transfer in general. Because the process of transferring files is inherently asynchronous, it naturally introduces (often significant) latency to just about any data exchange scenario.

It can further be challenging to position file gateway components as reusable utility services due to the frequent need to configure the parameters of file transfer and processing for each service-to-legacy system file transfer.

Careful planning for management, administration, and monitoring is also required to maintain expectations related to message processing volumes and performance.

As explained previously, File Gateway commonly introduces utility services that depend on Data Format Transformation (681) and possibly Data Model Transformation (671) to perform the conversion logic necessary for flat file data to be packaged and sent to services.

When viewing file transfer itself as a communications protocol, this pattern can be viewed as a variation of Protocol Bridging (687) as it essentially overcomes disparity in legacy and service communication platforms.

The application of Legacy Wrapper (441) may require File Gateway when a legacy system producing flat files needs to be wrapped by a standardized service contract. Service Agent (543), Service Callback (566), and Event-Driven Messaging (599) can be further applied to establish more sophisticated processing extensions around the file gateway logic.

Figure 15.10 File Gateway establishes relationships with all of the transformation patterns associated with Service Broker (707).

File Gateway is also commonly applied together with Enterprise Service Bus (704) whereby the file gateway component is deployed within the bus platform and is designed to leverage its inherent broker and routing features.